Abstract

The synergy between artificial intelligence (AI) and hyperspectral imaging (HSI) holds tremendous potential across a wide array of fields. By leveraging AI, the processing and interpretation of the vast and complex data generated by HSI are significantly enhanced, allowing for more accurate, efficient, and insightful analysis. This powerful combination has the potential to revolutionize key areas such as agriculture, environmental monitoring, and medical diagnostics by providing precise, real-time insights that were previously unattainable. In agriculture, for instance, AI-driven HSI can enable more precise crop monitoring and disease detection, optimizing yields and reducing waste. In environmental monitoring, this technology can track changes in ecosystems with unprecedented detail, aiding in conservation efforts and disaster response. In medical diagnostics, AI-HSI could enable earlier and more accurate disease detection, improving patient outcomes. As AI algorithms advance, their integration with HSI is expected to drive innovations and enhance decision-making across various sectors. The continued development of these technologies is likely to open new frontiers in scientific research and practical applications, providing more powerful and accessible tools for a wider range of users.

1. Introduction

Initially developed for remote sensing in the mid-1980s [1], hyperspectral imaging (HSI) has, over the past twenty years, expanded into numerous research fields, notably agriculture and food science, to gather complementary data on both the visual and chemical attributes of study subjects [2,3,4]. This method combines conventional imaging with spectroscopic techniques such as infrared, Raman, and fluorescence spectroscopy, creating a 3D data structure called a hypercube. The hypercube integrates 2D digital images (X × Y) with a third dimension of spectral data (λ) [5]. Thus, a hyperspectral image consists of a stack of spatial image planes, each linked to a specific wavelength band and depicting pixel-level information at that wavelength. Consequently, each pixel in an image is associated with a spectrum that reflects the light absorption, reflection, emission, and/or scattering properties of the spatial region it represents. This spectrum acts as a distinctive fingerprint, enabling the identification of the pixel’s composition [6]. It should be noted, however, that the spectrum of each pixel can be influenced by neighboring pixels because of optical, instrumental, and background effects.
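The hypercube described above can be illustrated with a small NumPy array. This is a minimal sketch under stated assumptions: the cube dimensions (4 × 5 pixels, 6 bands), the random values, and all variable names are hypothetical and chosen only to show how a band plane and a pixel spectrum are read from the same structure.

```python
import numpy as np

# Hypothetical hypercube: 4 x 5 spatial pixels (X, Y) and 6 wavelength bands (lambda).
rng = np.random.default_rng(0)
cube = rng.random((4, 5, 6))

# One spatial image plane: all pixels at a single wavelength band.
band_plane = cube[:, :, 2]        # shape (4, 5)

# One pixel spectrum: all bands at a single spatial location.
pixel_spectrum = cube[1, 3, :]    # shape (6,)

# Flattened (pixels x bands) matrix, a common input layout for ML models.
spectra_matrix = cube.reshape(-1, cube.shape[2])   # shape (20, 6)
```

In this layout, the row of `spectra_matrix` for pixel (1, 3) is the same vector as `pixel_spectrum`, so spatial and spectral views are two slicings of one array.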

The primary challenges with using HSI for the quality assessment of fruits, vegetables, and mushrooms are related to data availability and the reliability of existing models and results, particularly regarding their practical applicability [7,8,9]. Although numerous studies have been conducted on a wide range of cases [10,11,12], they generally lack a practical focus. Only a few studies have conducted true test set validations using data from different years or orchards rather than solely splitting a single dataset. To develop consistent prediction models and enhance knowledge transfer from researchers to horticultural practices, research should prioritize repeatability and the translation of research parameters (such as chlorophyll content, firmness, and SSC) into relevant industrial parameters. Additionally, effective non-destructive evaluation of quality parameters with HSI in the field, during storage and handling, and on the shelf can lead to the best possible use of production inputs, reduced food waste, and the assurance of safe, high-quality food [13,14].

The integration of artificial intelligence (AI) with HSI is crucial because it significantly enhances the ability to analyze and interpret complex hyperspectral (HS) data, which encompass hundreds of spectral bands [15]. AI algorithms, mainly machine learning (ML) and deep learning (DL) methods, can effectively manage the high dimensionality and vast volume of HSI data, enabling precise feature extraction and categorization [16,17]. The advantage of AI in hyperspectral image analysis lies in its ability to handle large datasets, reduce noise, perform real-time analysis, and improve the precision of tasks such as object detection, anomaly identification, and environmental monitoring. This makes AI essential for applications like agriculture, remote sensing, and medical diagnostics, where hyperspectral data are vital.

As AI technologies continue to evolve, their integration with HSI is expected to become more sophisticated and ubiquitous. These advancements will enable real-time processing capabilities, facilitating immediate decision-making in critical applications like environmental monitoring [18], disaster management, and healthcare diagnostics. Moreover, the combination of AI with emerging technologies such as edge computing and the Internet of Things (IoT) will democratize access to HSI, allowing for its deployment in remote and resource-limited settings. As AI-HSI becomes more accessible, it will foster innovations in personalized medicine, precision agriculture, and intelligent transportation systems. Additionally, the growing emphasis on sustainability and environmental conservation will spur the development of AI algorithms tailored to monitor and mitigate climate change impacts. Overall, the synergy between AI and HSI promises to revolutionize various industries, driving efficiency, accuracy, and transformative outcomes in ways previously unimaginable [19].

In this comprehensive review, we delve into the pivotal role of AI in revolutionizing HSI across a diverse array of applications. AI’s integration with HSI has not only streamlined data analysis but also unlocked new frontiers in fields such as agriculture, environmental monitoring, medical diagnostics, and materials science [20]. By harnessing AI’s capabilities in processing vast and intricate spectral data, researchers and practitioners can achieve unprecedented levels of precision in feature extraction, categorization, and interpretation. However, this transformative synergy is not without its challenges. The complexity and high dimensionality of HS data pose significant computational hurdles, demanding advanced algorithms and robust computing infrastructure. Moreover, the scarcity of labeled HS datasets complicates the training of AI models, necessitating innovative approaches in data augmentation and semi-supervised learning.

Looking ahead, the prospects for AI-enhanced HSI are promising [21]. Continued advancements in AI algorithms, coupled with improvements in hardware capabilities such as GPU acceleration and cloud computing, are set to overcome current limitations [22]. Standardization efforts and collaborative initiatives will further facilitate the seamless integration and interoperability of AI and HSI technologies, paving the way for broader adoption and impactful applications in precision agriculture, environmental sustainability, personalized medicine, and beyond [23,24].

2. Working Mechanism of HSI and Challenges

HSI operates by capturing and processing information from across the electromagnetic (EM) spectrum, extending beyond the capabilities of traditional imaging, which typically captures data in just a few broad wavelength bands [25,26,27]. The process begins with illumination, where a light source irradiates the target scene. The interaction of light with the materials in the scene (through reflection, absorption, and scattering) varies according to the unique spectral properties of each material. HS sensors then capture the reflected light across numerous narrow spectral bands. These sensors can use different methods for data acquisition, including point scanning (whiskbroom), which records the full spectrum of one spatial point at a time; line scanning (push broom), where the sensor or scene moves so that the spatial dimensions are captured one line at a time; spectral scanning, which records the full spatial image one wavelength band at a time; snapshot imaging, which captures the full HS data cube in a single exposure; and spatiospectral scanning, which combines aspects of both spatial and spectral scanning.

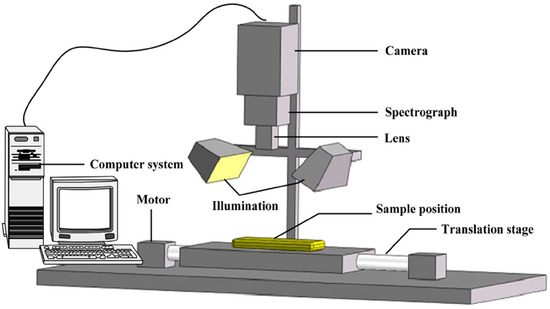

The light collected by the sensor is dispersed into its constituent wavelengths using optical elements like prisms or diffraction gratings [28,29]. This dispersion process allows the system to separate light into fine spectral bands. The dispersed light is then detected using an array of sensors, typically charge-coupled devices or complementary metal-oxide-semiconductor sensors, where each sensor pixel captures light intensity at a specific wavelength, generating a detailed spectrum for each spatial point in the scene. The captured raw data form an HS data cube, with two spatial dimensions and one spectral dimension, containing rich spectral information for each pixel. The main components of the HSI system are shown in Figure 1 [30].

Figure 1.

Schematic of the main components of the HSI system [30].

Following data acquisition, calibration is performed to correct for sensor response, lighting conditions, and atmospheric effects, ensuring the accuracy of the spectral information. Advanced data processing techniques are applied to the HS data cube, enabling spectral unmixing to decompose mixed pixels, categorization to identify and categorize materials based on their spectral signatures, feature extraction to pinpoint specific spectral characteristics, and dimensionality reduction to simplify the data while retaining essential information [31].
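The text does not specify a calibration formula. As one hedged illustration, a widely used flat-field correction converts raw intensities to relative reflectance using a white reference and a dark reference, R = (raw − dark) / (white − dark). The function name `calibrate_reflectance`, the `eps` guard, and the toy values below are assumptions made for this sketch.

```python
import numpy as np

def calibrate_reflectance(raw, white, dark, eps=1e-9):
    """Flat-field correction applied per pixel and band: (raw - dark) / (white - dark)."""
    raw = np.asarray(raw, dtype=float)
    white = np.asarray(white, dtype=float)
    dark = np.asarray(dark, dtype=float)
    # Guard against division by zero where white and dark references coincide.
    denom = np.maximum(white - dark, eps)
    return (raw - dark) / denom

# Toy 2 x 2 x 3 cubes (assumed values): dark current 10, white reference 110, sample 60.
dark = np.full((2, 2, 3), 10.0)
white = np.full((2, 2, 3), 110.0)
raw = np.full((2, 2, 3), 60.0)
reflectance = calibrate_reflectance(raw, white, dark)   # 0.5 everywhere
```

Atmospheric correction, which the text also mentions, is not covered by this sketch.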

HSI presents several challenges due to its high data dimensionality and complexity. Each hyperspectral image contains hundreds of spectral bands for every pixel, resulting in massive datasets that require significant computational resources and storage. This large volume of data can lead to issues such as data redundancy, where many spectral bands carry overlapping information, complicating efficient analysis. Additionally, hyperspectral data are often noisy and sensitive to environmental conditions, requiring sophisticated preprocessing techniques like noise reduction, atmospheric correction, and calibration.

AI can help address the challenges of HSI analysis owing to its ability to process large, complex datasets and detect intricate patterns that are not easily discernible by traditional methods [15]. AI, particularly through ML and DL techniques, excels at dimensionality reduction, feature extraction, and classification, making it well-suited for hyperspectral data analysis [32]. These methods can automatically learn from data, identify relevant spectral features, and improve the accuracy of tasks such as object detection, anomaly detection, and material classification [33]. AI models, especially DL architectures like convolutional Neural Networks (CNNs), are capable of learning hierarchical features from raw hyperspectral data, which reduces the need for manual feature engineering.

The advantages of AI in this field include its scalability, adaptability, and efficiency in handling large, complex, and non-linear data. AI algorithms can be trained on vast amounts of hyperspectral data, continually improving their performance as more data become available [34]. AI also provides faster processing times, enabling real-time or near-real-time analysis, which is critical in areas like remote sensing, agriculture, and environmental monitoring [23,35]. Additionally, AI can generalize well across different datasets, making it robust in handling varying conditions and noise in HSI.

3. Types of HSI

Different types of HSI systems and techniques are used depending on the application, data collection method, and the specific region of the spectrum being targeted. HS cameras can acquire detailed information, which is then transformed into a three-dimensional data cube through the employment of one of five primary methods.

- (a) Whiskbroom (point scanning): This method captures a single pixel at a time, gradually building the image as the camera scans across the sample [36]. Each pixel includes all of its spectral information, resulting in very high spectral resolution. However, the image acquisition process is time-consuming, making it less suitable for applications requiring rapid data collection [37]. Despite this limitation, whiskbroom scanning is valued for its precision in capturing detailed spectral data.

- (b) Push-broom (line scanning): Push-broom technology measures continuous spectra one line of pixels at a time, making it widely used in industrial quality control and process monitoring [38,39]. Its main limitation is the high light loss caused by the entrance slit of the spectrometer, which can reduce the overall efficiency of light capture. Nonetheless, push-broom scanning is favored in many applications for its balance between speed and spectral resolution, offering a practical solution for real-time monitoring and analysis.

- (c) Fourier Transform (FT) spectroscopy: An alternative for measuring continuous spectra, FT spectroscopy combines a monochrome imaging sensor with an interferometer, providing higher light throughput compared with push-broom systems [40]. This method enhances efficiency and accuracy in spectral data collection, making it well suited to applications requiring high sensitivity and precision [41]. Additionally, FT spectroscopy can effectively handle a wide range of wavelengths, further broadening its applicability in various scientific and industrial fields.

- (d) Spectral scanning: This technique gathers the entire spatial information associated with one wavelength at a time [42]. While each individual image is acquired relatively quickly, the overall procedure is considerably slower because the wavelength must be changed between images. Nevertheless, spectral scanning is a powerful tool for applications that require high spatial resolution at specific wavelengths. Its ability to precisely isolate and capture data for individual wavelengths makes it particularly useful in fields such as fluorescence microscopy, where detailed spectral information is crucial. Additionally, spectral scanning can be optimized to focus on particular regions of interest, enhancing the efficiency of data collection in targeted studies [43].

- (e) HS snapshot cameras: These cameras capture HS video, making them well suited to imaging moving objects [44]. The method is rapid and effective, although it typically provides restricted spectral and spatial resolutions in comparison to alternative techniques [45]. Nonetheless, snapshot cameras are crucial in applications requiring real-time HS imaging.
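The difference between the scanning strategies listed above can be sketched by counting how many exposures each needs to fill the same data cube. This is an idealized illustration, not a model of any real instrument: the scene size (8 × 10 pixels, 16 bands) and all function names are hypothetical, optical losses and resolution trade-offs are ignored, and FT spectroscopy is omitted because its interferometric acquisition does not map onto a simple slice of the cube.

```python
import numpy as np

def whiskbroom(scene):
    """Point scanning: one full spectrum (one pixel) per exposure."""
    x, y, _ = scene.shape
    cube = np.empty_like(scene)
    exposures = 0
    for i in range(x):
        for j in range(y):
            cube[i, j, :] = scene[i, j, :]
            exposures += 1
    return cube, exposures

def pushbroom(scene):
    """Line scanning: one line of pixels, with all bands, per exposure."""
    cube = np.empty_like(scene)
    for i in range(scene.shape[0]):
        cube[i, :, :] = scene[i, :, :]
    return cube, scene.shape[0]

def spectral_scan(scene):
    """Spectral scanning: one full spatial image at a single band per exposure."""
    cube = np.empty_like(scene)
    for k in range(scene.shape[2]):
        cube[:, :, k] = scene[:, :, k]
    return cube, scene.shape[2]

def snapshot(scene):
    """Snapshot imaging: the whole cube in a single exposure."""
    return scene.copy(), 1

# Hypothetical scene: 8 x 10 pixels, 16 bands.
scene = np.random.default_rng(0).random((8, 10, 16))
exposure_counts = {
    f.__name__: f(scene)[1]
    for f in (whiskbroom, pushbroom, spectral_scan, snapshot)
}
# exposure_counts == {'whiskbroom': 80, 'pushbroom': 8, 'spectral_scan': 16, 'snapshot': 1}
```

All four functions return the same cube; only the number of sequential acquisitions differs, which is the source of the speed differences described in the list.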

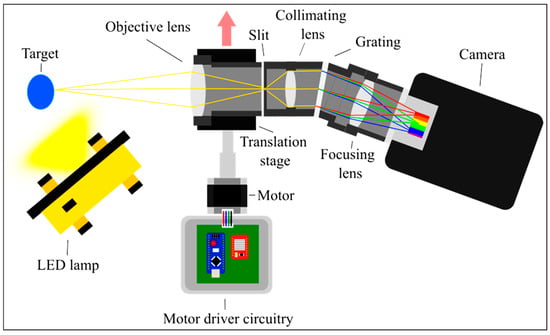

High-resolution HSI is becoming crucial for accurately detecting spectral variations in complex, spatially detailed targets [46,47]. However, despite these advantages, current high-resolution setups are often extremely costly, which limits their accessibility and user base. These restrictions can have broader consequences, reducing opportunities for data collection and, thus, our understanding of various environments [48]. Stuart et al. introduced a cost-effective alternative to the existing equipment (Figure 2) [3]. This instrument delivered HS datasets capable of resolving spectral variations in millimeter-scale targets, which many affordable HSI options currently cannot achieve. The authors provided detailed instrument metrology and demonstrated its effectiveness in a mineralogy-based environmental monitoring application, presenting it as an advance in the realm of affordable HSI. Table 1 compares whiskbroom (point scanning), push-broom (line scanning), Fourier Transform (FT) spectroscopy, spectral scanning, and HS snapshot cameras.

Figure 2.

The illustration demonstrates the passage of axial and marginal rays through the optical system of the low-cost, high-resolution HS imager. The blue, green, and red lines represent example wavelength rays after diffraction [3].

Table 1.

Characteristics of scanning methods used in HSI.

4. Synergy between AI and HSI

AI algorithms are particularly well-suited for HSI because they can efficiently handle and analyze the vast and complex data that these systems generate. Hyperspectral images consist of hundreds of narrow spectral bands, providing detailed information across a wide range of wavelengths. This high dimensionality offers rich data but also presents challenges such as redundancy [49], noise [50], and the “curse of dimensionality,” which can overwhelm traditional analysis techniques [51]. AI algorithms, particularly ML and DL models, are adept at extracting relevant features, identifying patterns, and performing tasks like classification, detection, and anomaly identification from these large datasets. They excel at leveraging the spectral and spatial relationships in hyperspectral data, which can be difficult for traditional statistical methods to capture. Furthermore, AI models can be trained to recognize subtle differences in spectral signatures that are critical for applications such as remote sensing [52], agriculture [53], medical diagnostics [54], and environmental monitoring [55]. Their ability to learn from data makes them highly adaptable to different HSI tasks, even in cases where annotated data are limited or the problem is non-linear, thus enhancing the overall efficiency and effectiveness of HSI systems [56].

Supervised learning algorithms use labeled data to train models that can classify or analyze new hyperspectral images. Common algorithms include Support Vector Machines (SVMs) [57], which are popular for classification tasks due to their ability to handle high-dimensional data and work with smaller training datasets. Random Forests (RFs) [58] is another widely used method known for its robustness and ability to handle noisy data. The K-Nearest Neighbors (KNNs) algorithm is also employed for classification, particularly based on the proximity of pixel spectra to labeled training data [59]. In addition, Neural Networks (NNs), such as Multilayer Perceptrons (MLPs) [60], are used for pixel-level classification tasks [61].
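As a hedged illustration of the proximity-based classification described above, the following sketch assigns each query spectrum the majority label of its k nearest labeled spectra under Euclidean distance. The function name `knn_classify`, the three-band toy spectra, and the class labels are hypothetical; practical pipelines would typically use an established ML library and many more bands.

```python
import numpy as np

def knn_classify(train_spectra, train_labels, query_spectra, k=3):
    """Label each query spectrum by majority vote among its k nearest training spectra."""
    train_spectra = np.asarray(train_spectra, dtype=float)
    query_spectra = np.asarray(query_spectra, dtype=float)
    train_labels = np.asarray(train_labels)
    # Pairwise Euclidean distances, shape (n_query, n_train).
    diffs = query_spectra[:, None, :] - train_spectra[None, :, :]
    dists = np.linalg.norm(diffs, axis=2)
    # Indices of the k closest training spectra for each query.
    nearest = np.argsort(dists, axis=1)[:, :k]
    predictions = []
    for neighbor_labels in train_labels[nearest]:
        values, counts = np.unique(neighbor_labels, return_counts=True)
        predictions.append(values[np.argmax(counts)])
    return np.array(predictions)

# Toy labeled spectra with 3 bands (assumed values): class 0 peaks in the last band,
# class 1 in the first two bands.
train = np.array([
    [0.10, 0.10, 0.80], [0.20, 0.10, 0.70], [0.15, 0.12, 0.75],
    [0.80, 0.70, 0.10], [0.70, 0.80, 0.20], [0.75, 0.72, 0.15],
])
labels = np.array([0, 0, 0, 1, 1, 1])
queries = np.array([[0.12, 0.10, 0.78], [0.78, 0.75, 0.12]])
predicted = knn_classify(train, labels, queries, k=3)   # array([0, 1])
```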

In contrast, unsupervised learning algorithms, such as k-means clustering [62], hierarchical clustering [63], and principal component analysis (PCA) [64], are employed to find hidden patterns or groupings in data without predefined labels [65]. Neural Networks (NNs), particularly DL architectures like convolutional Neural Networks (CNNs) [66,67,68] and recurrent Neural Networks (RNNs) [69], have transformed fields like image and speech recognition due to their capability to learn complex, hierarchical representations of data. Reinforcement learning algorithms enable AI systems to learn optimal actions through trial and error, guided by rewards and penalties, making them suitable for dynamic and interactive environments, such as game-playing or robotics [70]. Independent Component Analysis (ICA) is applied for blind source separation and is useful in unmixing hyperspectral data into independent spectral components [71]. Additionally, autoencoders, a type of NN, are employed for unsupervised feature extraction and dimensionality reduction [72].
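To make the dimensionality-reduction role of PCA concrete, the sketch below projects a (pixels × bands) matrix onto its leading principal components using a singular value decomposition. The synthetic data (200 pixels, 50 bands, two latent factors plus small noise) and the function name `pca_reduce` are assumptions for illustration only.

```python
import numpy as np

def pca_reduce(spectra, n_components):
    """Project mean-centered spectra onto the leading principal components."""
    spectra = np.asarray(spectra, dtype=float)
    centered = spectra - spectra.mean(axis=0)
    # Rows of vt are the principal directions in band space.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    scores = centered @ components.T
    explained = singular_values**2 / np.sum(singular_values**2)
    return scores, components, explained[:n_components]

# Synthetic spectra (assumed): 200 pixels, 50 highly redundant bands driven by 2 factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 50))
spectra = latent @ mixing + 0.01 * rng.normal(size=(200, 50))

scores, components, explained = pca_reduce(spectra, n_components=2)
```

Because the 50 synthetic bands are driven by only two factors, the two retained components carry nearly all of the variance, which mirrors the band redundancy typical of hyperspectral data.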

Sigger et al. introduced a novel method called DiffSpectralNet, which integrates diffusion processes with transformer-based techniques for enhanced hyperspectral image classification [73]. The diffusion component effectively captures a broad range of spectral–spatial features, contributing to more accurate and diverse feature extraction. This approach utilized an unsupervised learning framework built on the diffusion model to identify both high-level and low-level spectral–spatial features. Intermediate hierarchical features were then extracted from multiple timestamps using a pre-trained denoising U-Net. These features were subsequently fed into a supervised transformer-based classifier to perform HSI classification. Extensive experiments conducted on three publicly available datasets validated the robustness of the method. The results show that DiffSpectralNet significantly outperformed existing models, achieving state-of-the-art accuracy while demonstrating consistent stability and reliability across various class types within each dataset [73].

DL techniques employ deeper artificial Neural Networks (ANNs) with more neurons, additional layers, and more complex connections between layers [74]. ANNs are computational models inspired by the human brain’s architecture and functioning. They consist of interconnected nodes or “neurons” organized in input, hidden, and output layers. These networks are designed to recognize patterns, make decisions, and learn from data by adjusting the weights of connections based on the errors of their predictions. ANNs are widely used in various applications, from image and speech recognition to natural language processing and autonomous systems, thanks to their ability to model complex relationships and improve performance through training. The primary benefit of these deeper networks is their capability to learn features automatically, eliminating the need for manual feature engineering, as is often required in traditional ML techniques [75]. This capability enables DL systems to solve more complex problems, offering greater flexibility and the ability to capture more variability in data, which is particularly beneficial for horticultural applications [76,77]. Generative Adversarial Networks (GANs) play a role in data augmentation, anomaly detection, and improving the resolution of hyperspectral images [78].

In a fully connected Neural Network (FCNN), each neuron in one layer is linked to all neurons in the next layer, which leads to an overwhelming number of connections for multidimensional input data [79]. This extensive connectivity can cause a dramatic increase in computational complexity and memory requirements [80]. Furthermore, information in such data is characteristically distributed across several dimensions, so processing the input as a flattened vector is suboptimal. When a neuron contains specific information, its surroundings are likely to hold relevant spatial information as well. Accordingly, the neurons in a convolutional layer are connected through local receptive fields that extend through the full depth of the input data rather than through a flattened, fully connected arrangement.
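A short arithmetic sketch shows the scale of the difference. The sizes used here are hypothetical (a 32 × 32 pixel patch with 200 bands, 64 hidden units or filters, and a 3 × 3 kernel spanning the full spectral depth), and bias terms are ignored.

```python
def dense_weights(height, width, bands, hidden_units):
    """Weights in a fully connected layer: every input value feeds every hidden unit."""
    return height * width * bands * hidden_units

def conv_weights(kernel, bands, filters):
    """Weights in a 2D convolutional layer: one kernel x kernel x bands filter per output map."""
    return kernel * kernel * bands * filters

# Hypothetical hyperspectral patch: 32 x 32 pixels, 200 bands.
dense = dense_weights(height=32, width=32, bands=200, hidden_units=64)   # 13,107,200
conv = conv_weights(kernel=3, bands=200, filters=64)                     # 115,200
ratio = dense / conv   # roughly 114 times more weights in the dense layer
```

The convolutional layer reuses the same small filter at every spatial position, so its weight count does not grow with the patch size.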

A CNN is typically composed of multiple convolutional layers, each of which is tailored to extract specific types of features from the data [81]. The layers generate diverse representations of the input data, ranging from low-level, detailed depictions in the initial layers to more abstract forms in the deeper layers of the network [82,83]. The initial layers might detect basic features such as edges and textures, while the deeper layers recognize more complex structures like shapes and objects. This hierarchical feature extraction allows CNNs to effectively handle spatial hierarchies in data, making them highly suitable for tasks such as image and video recognition, where spatial patterns are crucial.

Additionally, CNNs often incorporate pooling layers, which reduce the dimensionality of the data and help in achieving translational invariance, further enhancing the model’s ability to generalize from training data. Techniques such as dropout and batch normalization are also commonly used in CNNs to prevent overfitting and improve training efficiency. This combination of localized connectivity, hierarchical feature extraction, and regularization techniques makes CNNs exceptionally powerful for processing and interpreting complex multidimensional data [84].

Spectral unmixing is the process of decomposing a mixed pixel into its constituent pure spectral components, known as endmembers. Non-Negative Matrix Factorization (NMF) is commonly used for spectral unmixing by approximating the hyperspectral data matrix as the product of two non-negative matrices, one representing the spectral signatures and the other representing the abundances [85,86]. Sparse coding techniques are also employed for spectral unmixing, where each pixel is represented as a sparse linear combination of a dictionary of spectral signatures [87].
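The factorization can be sketched with the classical multiplicative update rules of Lee and Seung for the Frobenius-norm objective, which is one of several NMF solvers and is not necessarily the variant used in the cited works. The function names, the synthetic two-endmember scene, and the iteration count are assumptions; the sketch also does not enforce the sum-to-one abundance constraint often used in unmixing.

```python
import numpy as np

def nmf_unmix(data, n_endmembers, n_iter=500, eps=1e-9, seed=0):
    """Approximate data (pixels x bands) as abundances @ endmembers, all non-negative."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    n_pixels, n_bands = data.shape
    abundances = rng.random((n_pixels, n_endmembers)) + eps
    endmembers = rng.random((n_endmembers, n_bands)) + eps
    for _ in range(n_iter):
        # Multiplicative updates keep both factors non-negative.
        endmembers *= (abundances.T @ data) / (abundances.T @ abundances @ endmembers + eps)
        abundances *= (data @ endmembers.T) / (abundances @ endmembers @ endmembers.T + eps)
    return abundances, endmembers

def relative_error(data, abundances, endmembers):
    """Frobenius-norm reconstruction error relative to the norm of the data."""
    return np.linalg.norm(data - abundances @ endmembers) / np.linalg.norm(data)

# Synthetic scene (assumed): 100 mixed pixels built from 2 endmember spectra over 30 bands.
rng = np.random.default_rng(1)
true_endmembers = rng.random((2, 30))
true_abundances = rng.dirichlet([1.0, 1.0], size=100)   # each row sums to 1
data = true_abundances @ true_endmembers

abundances, endmembers = nmf_unmix(data, n_endmembers=2)
error = relative_error(data, abundances, endmembers)
```

NMF solutions are not unique, so the recovered endmembers may be scaled or permuted versions of the true spectra.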

Hybrid models combine both spatial and spectral information to improve performance in classification, detection, and segmentation tasks. Spatial–spectral CNNs leverage this combination to enhance classification accuracy by processing spatial and spectral data together [88]. Graph Convolutional Networks (GCNs) have also emerged as useful tools for hyperspectral image classification and segmentation. GCNs treat pixels as nodes in a graph and apply convolutions on the graph to capture spatial relationships, which is particularly useful in hyperspectral data where neighboring pixels tend to be highly correlated [89].
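The idea of treating pixels as graph nodes can be illustrated with a single graph-convolution layer. The text does not specify a propagation rule, so this sketch assumes the commonly used symmetric normalization with self-loops, ReLU(D^(-1/2) (A + I) D^(-1/2) H W). The 2 × 2 pixel patch, its 4-neighbor adjacency, and the random features and weights are hypothetical.

```python
import numpy as np

def gcn_layer(adjacency, features, weights):
    """One graph-convolution layer with self-loops and symmetric degree normalization."""
    a_hat = adjacency + np.eye(adjacency.shape[0])      # add self-loops
    degree = a_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    norm_adj = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    # Aggregate neighbor features, apply the learned projection, then ReLU.
    return np.maximum(norm_adj @ features @ weights, 0.0)

# Hypothetical 2 x 2 pixel patch as a graph: nodes 0..3 with 4-neighbor connectivity.
adjacency = np.array([
    [0, 1, 1, 0],
    [1, 0, 0, 1],
    [1, 0, 0, 1],
    [0, 1, 1, 0],
], dtype=float)

rng = np.random.default_rng(0)
pixel_spectra = rng.random((4, 3))     # 3 bands per pixel (assumed)
weights = rng.normal(size=(3, 2))      # projects 3 bands to 2 hidden features
hidden = gcn_layer(adjacency, pixel_spectra, weights)   # shape (4, 2)
```

Each output row mixes a pixel's own spectrum with those of its neighbors, which is how the layer exploits the correlation between adjacent pixels.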

Additionally, natural language processing (NLP) algorithms, including transformers and sequence-to-sequence models, empower AI to understand and generate human language, driving advancements in machine translation, sentiment analysis, and conversational agents. Each of these algorithms plays a fundamental role in permitting AI systems to perform a wide range of intelligent tasks by learning from data, recognizing patterns, and making informed decisions. The characteristics of different algorithms used in the interaction between AI and HSI are presented in Table 2.

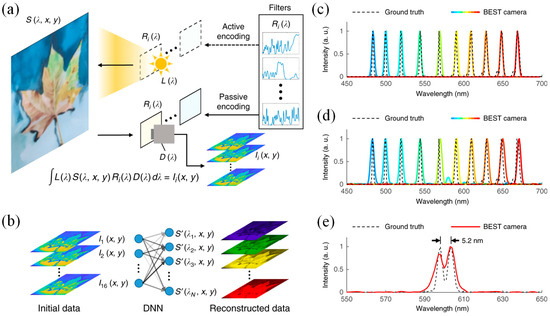

Spectral imaging, essential for applications requiring high-resolution spectral and spatial information, has seen various technical advancements and commercial availability. However, computational spectral cameras, while being compact, lightweight, and cost-effective, face limitations due to the trade-off between spatial and spectral resolutions, restricted data volume, and environmental noise. Zhang et al. developed a deeply learned broadband encoding stochastic HS camera [90]. A simplified representation of the device is shown in Figure 3a. By utilizing advanced AI for filter design and spectrum reconstruction, the authors achieved signal processing speeds 7000 to 11,000 times faster and improved noise tolerance by approximately 10 times. These advancements allowed for the accurate and dynamic reconstruction of the spectra of the entire field of view, surpassing the capabilities of traditional compact computational spectral cameras.

Figure 3.

(a) Simplified diagram: The camera operates in active mode (top) or passive mode (bottom) based on where the light spectrum was encoded. (b) Overview of the DNN-based spectral reconstruction: The monochrome camera’s initial data are processed by the DNN to generate the restored 3D HS data cube. (c,d) Spectral profiles of narrow-band laser beams: In (c), the DNN was trained with “precise” data, while in (d), it was trained with “general” data. (e) The spectral profile of two peaks at 598.0 nm and 603.2 nm, with the peak-to-peak distance shown in black [90].

To derive the precise spectrum from these random spectral filters with rich features, a DNN was employed for data processing (Figure 3b). This approach offered two significant improvements over traditional compressed sensing (CS) algorithms. First, the spectrum reconstruction speed was dramatically faster. This acceleration was due to the DNN’s use of matrix multiplication, which is particularly well-suited for parallel computing on a GPU. In contrast, the iterative CS algorithm solved an optimization problem for each pixel, so its reconstruction time increased as the number of pixels grew. The DNN’s reconstruction speed, however, remained almost independent of the pixel count. While both methods performed similarly for images with a few thousand pixels or fewer, the DNN proved to be significantly more efficient for higher-resolution images.

Figure 3c–e compares the reconstructed results from the device (solid line) with reference curves from a commercial spectrometer (dashed line). To ensure objective measurements, two DNNs with identical architectures were trained on two separate datasets. The first dataset comprised solely monochromatic spectrum data derived from a laser beam. In this “precise” mode, the output spectrum generated by the trained DNN was constrained by a narrowband envelope, resulting in an average center wavelength localization precision of 0.55 nm (Figure 3c). In the “general” mode, the training dataset was expanded to incorporate more wideband spectral data, and all boundary conditions were removed to accommodate arbitrary spectrum shapes. This mode achieved a comparable spectral localization precision with an average of 0.63 nm (Figure 3d) and provided the camera with the flexibility to meet diverse operational needs. The trained DNN in “general” mode offered a spectral resolution of approximately 5.2 nm (Figure 3e), which was sufficient for most practical HSI applications [90].

One key area where AI is applied is super-resolution, where AI-driven algorithms can enhance the spatial resolution of hyperspectral images [91,92]. Traditional HSI systems may capture high spectral resolution but often at a lower spatial resolution due to hardware limitations [15]. By using AI-based models such as CNNs and Generative Adversarial Networks (GANs), it is possible to upscale low-resolution images to finer spatial details [92]. These super-resolution techniques enable more precise mapping and detection, which are critical in fields such as remote sensing, medical imaging, and industrial quality control [93]. AI-driven super-resolution methods not only improve the visual clarity of hyperspectral data but also provide more detailed information that can be leveraged for further analysis [94].

Classification is another important application of AI in hyperspectral image analysis. Hyperspectral images consist of hundreds of spectral bands, providing a wealth of information that can be challenging to process using traditional methods [95]. ML and DL techniques, including Support Vector Machines (SVMs), Random Forests, and deep CNNs, can be employed to classify different materials or objects in hyperspectral images. These models learn to distinguish between various classes, such as different types of vegetation, minerals, or tissues, based on their spectral signatures [96]. AI-driven classification techniques are particularly powerful because they can handle the high dimensionality of hyperspectral data, making them well-suited for tasks such as land cover classification, mineral mapping, and medical diagnostics. By automating the classification process, AI enables faster, more accurate interpretation of hyperspectral images [95].

In the realm of denoising, AI algorithms play a crucial role in improving the quality of hyperspectral images by reducing noise while preserving essential details. Hyperspectral images often suffer from noise due to environmental factors, sensor limitations, or transmission errors. AI-based approaches, such as autoencoders and DL-based noise reduction techniques, can effectively filter out noise without sacrificing important spectral and spatial information. By learning complex noise patterns, these algorithms can remove noise across multiple spectral bands, resulting in cleaner and more reliable data. This is particularly important in fields like medical imaging and remote sensing, where the accuracy of the data is paramount.

AI also contributes significantly to the reconstruction of hyperspectral images. HSI systems may not always capture complete data due to sensor limitations or occlusions. AI-based reconstruction techniques, such as DNNs, can be used to fill in missing or corrupted parts of an image, ensuring a more complete and accurate representation. These methods are highly effective in recovering lost spectral and spatial details by learning the relationships between different bands and spatial features. In medical applications, for example, reconstructing hyperspectral images can improve the detection of subtle tissue abnormalities that may have been missed due to incomplete data.

Object detection is another area where AI excels. Unlike traditional images, where object detection is typically based on spatial features, hyperspectral images offer additional spectral information that can be leveraged for a more precise identification of objects [97]. DL techniques, such as region-based CNNs (R-CNNs) and YOLO (You Only Look Once), have been adapted to work with hyperspectral data to detect objects with a high degree of accuracy. These models are trained to identify spectral signatures that correspond to specific objects or materials, allowing for more refined detection in scenarios such as crop monitoring, mineral exploration, and environmental monitoring. The combination of spatial and spectral information provided by hyperspectral images makes AI-driven object detection a powerful tool for a variety of applications [97].
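
Before deep detectors are applied, the notion of matching a known spectral signature can be illustrated with the classical spectral angle mapper, which flags pixels whose spectrum points in nearly the same direction as a target spectrum. This is not an R-CNN or YOLO implementation; the target spectrum, the flat background, and the 0.1 rad threshold are assumptions chosen for the example.

```python
import numpy as np

def spectral_angle(cube, target):
    """Angle in radians between every pixel spectrum and a target spectrum."""
    dot = cube @ target
    norms = np.linalg.norm(cube, axis=-1) * np.linalg.norm(target)
    return np.arccos(np.clip(dot / norms, -1.0, 1.0))

bands = 10
target = np.linspace(0.1, 0.9, bands)   # hypothetical target signature
cube = np.full((3, 4, bands), 0.3)      # flat-spectrum background
cube[1, 2] = 0.5 * target               # target material under dimmer illumination

angles = spectral_angle(cube, target)
detections = np.argwhere(angles < 0.1)  # assumed detection threshold
print(detections)
```

The angle is insensitive to overall brightness, so the dimmer target pixel is still detected. Learned detectors add spatial context and robustness to spectral variability on top of this kind of signature matching.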

Lastly, the segmentation of hyperspectral images greatly benefits from AI techniques [98]. Segmentation involves partitioning an image into distinct regions or objects based on their spectral and spatial characteristics [99]. Traditional methods of segmentation often struggle with the high dimensionality and complexity of hyperspectral data. However, AI-driven methods, particularly deep learning architectures like fully convolutional networks (FCNs) and U-Nets, have been developed to handle these challenges effectively [100]. These models can accurately segment hyperspectral images into meaningful regions, such as different types of vegetation, water bodies, or urban areas, by analyzing both spatial and spectral features [101]. In medical imaging, segmentation is critical for tasks such as tumor detection, where AI models can distinguish between healthy and diseased tissues with high precision.

Table 2.

Characteristics of various algorithms used in the interaction between AI and HSI.

| Algorithm | Description | Strengths | Weaknesses | Applications |
|---|---|---|---|---|
| Convolutional Neural Networks (CNNs) [102,103] | DL models that use convolutional layers to capture spatial and spectral features. | High accuracy, ability to capture complex patterns, end-to-end learning. | Requires large datasets, computationally intensive. | Crop categorization, disease detection, yield prediction. |
| Support Vector Machines (SVMs) [104,105] | Supervised learning models that find the optimal hyperplane to classify data. | Effective in high-dimensional spaces, robust to overfitting. | Less effective with noisy data, requires careful parameter tuning. | Soil property categorization, crop health monitoring. |
| Random Forests (RF) [106,107] | Ensemble learning method that uses multiple decision trees for categorization and regression. | Handles large datasets, good at managing overfitting. | Can be less interpretable, may require extensive computation. | Crop type categorization, pest and disease identification. |
| Principal Component Analysis (PCA) [108,109] | Dimensionality reduction technique that transforms data into a set of orthogonal components. | Reduces computational load, highlights main spectral features. | May lose important information, not ideal for non-linear data. | Preprocessing for further analysis, noise reduction. |
| K-Nearest Neighbors (KNN) [110,111] | Simple algorithm that classifies a sample by the majority class among its K nearest neighbors. | Easy to implement, non-parametric. | Computationally expensive with large datasets, sensitive to irrelevant features. | Crop species classification, vegetation monitoring. |
| Artificial Neural Networks (ANNs) [99,112] | Computing systems inspired by biological neural networks, capable of pattern recognition. | Flexible, can model complex relationships. | Requires extensive training data, prone to overfitting. | Spectral unmixing, anomaly detection. |
| Recurrent Neural Networks (RNNs) [113] | NNs with loops, designed to recognize patterns in sequences of data. | Effective for temporal dependencies, can handle sequential data. | Can suffer from vanishing gradients, requires large memory. | Time-series crop monitoring, growth stage identification. |
| Graph Convolutional Networks (GCNs) [114,115] | NNs that operate on graph-structured data, capturing relationships in irregular data. | Can model complex dependencies, robust to varying data structures. | Complex implementation, requires extensive computational resources. | Crop disease spread modeling, soil nutrient mapping. |
| Sparse Representation [116,117] | Techniques that represent data as a sparse combination of basis functions. | Effective in capturing essential features, good for compressed sensing. | Requires careful selection of basis functions, computationally intensive. | HSI denoising, feature extraction. |
| Deep Residual Networks (ResNets) [118,119] | NNs that use skip connections to mitigate the vanishing gradient problem. | High accuracy, allows for very deep networks and improves training. | Requires significant computational power, complex architecture. | Crop health monitoring, detailed spectral analysis. |
| Generative Adversarial Networks (GANs) [120,121] | NNs that consist of a generator and a discriminator, used for data generation. | Can create high-quality synthetic data, effective for data augmentation. | Difficult to train, prone to instability. | Data augmentation, synthesis of HS images. |

5. Applications

AI-HSI is revolutionizing various fields by providing advanced analytical capabilities and detailed spectral information across numerous bands of the EM spectrum. AI excels across diverse fields like precision agriculture, environmental monitoring, mining and mineralogy, forensic science, medicine, and space operations due to its ability to process vast and complex datasets efficiently. In precision agriculture, AI helps optimize crop yields by analyzing soil health [122], weather patterns [123], and plant growth in real time [124]. For environmental monitoring, it automates the analysis of satellite and sensor data to track climate changes and ecosystems [125,126]. In mining and mineralogy, AI enhances resource identification and extraction processes with precision, reducing the environmental impact [127,128]. In forensic science, AI aids in pattern recognition and evidence analysis, speeding up investigations [129,130]. In the medical field, AI improves diagnostics, treatment planning, and personalized medicine through advanced data analysis and predictive models [131,132]. In space operations, AI supports mission planning, navigation, and autonomous spacecraft control, enabling faster decision-making in unpredictable environments [133,134]. Its versatility and precision make AI a critical tool for advancing efficiency and innovation across these sectors. In this section, a few key applications of AI-HSI are discussed.

- (A) Agriculture

Plant growth can be significantly impacted by factors such as disease, stress, and pest infestations, which may lead to reduced yields, diminished quality, or even a complete halt in growth. The visible and near-infrared (NIR) spectral region has proven effective in detecting specific issues, such as white tip disease and Orobanche cumana parasitism in sunflower plants [135]. Using hyperspectral data, logistic regression models can classify plant health and identify different stages of disease damage, even at pre-symptomatic stages [136]. Additionally, plant stress caused by heavy metal contamination, such as mercury (Hg), has been identified using the NIR spectral region [137].

In cases where diseased areas are small and surrounded by healthy tissue, such as charcoal rot disease in soybean stems, background removal and precise selection of regions of interest (ROIs) are crucial for improving data accuracy [138]. Vegetation indices are also applied in spectral domain studies to analyze the relationship between plant diseases and growth [139]. In recent advancements, 3D-CNN models have been trained on complete spectral bands, eliminating the need for labor-intensive feature selection. Handheld hyperspectral sensors, like the Specim IQ, have simplified data collection through line scan methods. Moreover, DCNNs trained on UAV-captured data have shown high accuracy in detecting diseases such as yellow rust in winter wheat [140]. While DL reduces some intermediate processing steps, many existing studies focus on training models for two primary purposes: (1) accurately detecting or classifying diseases and (2) minimizing preprocessing requirements.
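
A vegetation index of the kind mentioned above can be computed directly from a hypercube by picking the bands nearest to the wavelengths of interest. The sketch below uses the normalized difference vegetation index, NDVI = (NIR - Red) / (NIR + Red). The 670 nm and 800 nm band centers are common choices assumed here, and the two pixel spectra are invented for illustration.

```python
import numpy as np

def band_index(wavelengths, target_nm):
    """Index of the band whose center wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(wavelengths - target_nm)))

def ndvi(cube, wavelengths, red_nm=670.0, nir_nm=800.0):
    """Per-pixel NDVI from a hypercube with shape (rows, cols, bands)."""
    red = cube[..., band_index(wavelengths, red_nm)]
    nir = cube[..., band_index(wavelengths, nir_nm)]
    return (nir - red) / (nir + red + 1e-12)   # small constant avoids division by zero

# 400-1000 nm sampled every 10 nm, matching the range commonly used in agriculture
wavelengths = np.linspace(400.0, 1000.0, 61)
red_i = band_index(wavelengths, 670.0)
nir_i = band_index(wavelengths, 800.0)

cube = np.full((1, 2, 61), 0.1)
cube[0, 0, red_i], cube[0, 0, nir_i] = 0.05, 0.50   # hypothetical healthy leaf
cube[0, 1, red_i], cube[0, 1, nir_i] = 0.20, 0.30   # hypothetical stressed leaf

values = ndvi(cube, wavelengths)
print(values)
```

Healthy vegetation absorbs strongly in the red and reflects strongly in the NIR, so it yields a higher index than stressed tissue. An index of this kind reduces each spectrum to a single hand-chosen feature, whereas the 3D-CNN approaches described above learn features from the complete set of bands.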

Although real-time hyperspectral applications in plant disease and pest detection are limited, real-time defect detection combined with rapid actuation requires fewer spectral bands to meet processing and data transfer demands. The wavelength range of 400–1000 nm is commonly used in agricultural applications, but the ideal wavelength varies for different purposes [24]. DL offers the necessary speed for such applications. For example, variations of CNN models, such as fused 2D–3D CNNs, have achieved rapid analysis times, sorting 32 coffee beans per image in 0.03 s with the help of robotic arms [141].

Coffee beans are a vital agricultural commodity traded globally, and ensuring their quality is crucial before roasting. Screening for defective beans is a key process, with common defects including black, fermented, moldy, insect-damaged, shell, and broken beans. Among these, insect damage is the most prevalent. Traditionally, coffee bean sorting has been performed manually, which is labor-intensive and prone to errors caused by fatigue, leading to inconsistent quality. Chen et al. introduced a novel approach that integrates an NIR snapshot hyperspectral sensor with DL to develop a multimodal real-time coffee bean defect inspection algorithm (RT-CBDIA) for sorting defective green coffee beans [141]. Three types of CNNs were designed to enable real-time inspection: lean 2D-CNN, 3D-CNN, and a 2D–3D merged CNN. Principal component analysis was employed to select the most important spectral bands for the analysis.
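
One plausible way to use principal component analysis for band selection is to rank bands by their variance-weighted loadings on the leading components. The sketch below illustrates that idea on synthetic data; it is an assumption about how such a ranking can be done and is not taken from the RT-CBDIA study, whose exact selection procedure is not described here.

```python
import numpy as np

def rank_bands_by_pca(X, n_components=2):
    """Rank bands by variance-weighted squared loadings on the leading components."""
    Xc = X - X.mean(axis=0)
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    var = s[:n_components] ** 2
    # Score of band j: sum over components of (component variance) * (loading of band j)^2
    scores = (var[:, None] * Vt[:n_components] ** 2).sum(axis=0)
    return np.argsort(scores)[::-1], scores

# Synthetic spectra: 10 bands of low-level noise, with bands 3 and 7 carrying the variation
rng = np.random.default_rng(3)
X = rng.normal(0.0, 0.01, size=(200, 10))
X[:, 3] += rng.normal(0.0, 1.0, size=200)
X[:, 7] += rng.normal(0.0, 0.5, size=200)

order, scores = rank_bands_by_pca(X, n_components=2)
top_two = order[:2].tolist()
print(top_two)
```

Keeping only the top-ranked bands reduces the data volume per frame, which is what makes real-time inference and data transfer feasible.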

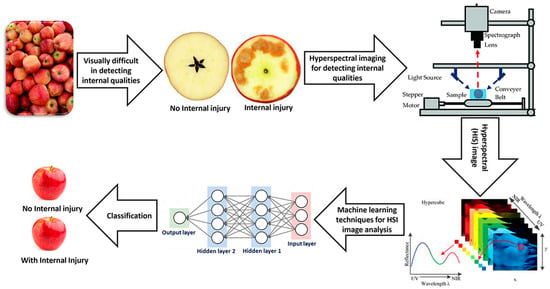

AI-HSI is revolutionizing agriculture by providing precise and detailed insights into crop health, soil conditions, and pest infestations (Figure 4) [142,143]. HSI captures a wide spectrum of light beyond what is visible to the human eye, allowing for the detection of subtle variations in plant and soil properties [144]. Neri et al. presented the development and implementation of a real-time AI-assisted push-broom HS system for crop categorization [145]. Coupling the push-broom HS technique with AI provided a high level of detail and precision in crop examination. The precision of the system in collecting spectral data was demonstrated through calibration and resolution analysis, with plant leaf categorization serving as the test case. The neural-network-based AI enabled continuous HS data analysis for up to 720 ground positions at 50 frames per second [145]. A modified spectral-spatial CNN with compensation for illumination variations was employed to classify high-resolution hyperspectral images of agricultural land in order to determine vegetation types [146].

Figure 4.

AI-HSI for analyzing food quality [99].

- (B) Environmental monitoring

In environmental monitoring, AI enhances the detection of pollutants and the assessment of natural resources [147,148,149]. An example includes the analysis of water quality and the detection of algae blooms. ML algorithms have been trained to recognize the spectral characteristics of algae in HSIs, offering an effective and non-invasive method to monitor water quality [150]. Another application field has been the assessment of air pollution. ML techniques have analyzed HSIs to detect the presence of atmospheric pollutants, aiding in monitoring and assessing air pollution levels and providing valuable data for environmental policies. ML has also played a crucial role in natural disaster management. Predictive models have been trained to assess the impact of fires, floods, and other natural disasters based on HS imagery. This has aided in predicting the evolution of a disaster and planning relief operations [151]. Furthermore, forest analysis has been another area where ML has made a difference. ML algorithms have monitored forest health and prevented fires, contributing to the protection of these vital ecosystems and mitigating the impact of climate change.

Chen et al. proposed a method to detect air pollution by applying an HSI algorithm for visible light, near-infrared, and far-infrared [152]. Hyperspectral information was assigned to images from monocular, NIR, and thermal imaging, and principal component analysis was performed on hyperspectral images taken at different times to obtain solar radiation intensity. The Beer–Lambert law and multivariate regression analysis were applied to calculate PM2.5 and PM10 concentrations over the period, which were then compared to the corresponding concentrations provided by the Taiwan Environmental Protection Agency to evaluate the method’s accuracy. The study demonstrated that the accuracy in the visible light band was higher than that in the near-infrared and far-infrared bands, and it proved to be the most convenient band for data acquisition. Consequently, mobile phone cameras could analyze PM2.5 and PM10 concentrations in real time using this algorithm by capturing images, enhancing the convenience and immediacy of air pollution detection.
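
The combination of the Beer–Lambert law with multivariate regression can be sketched as follows: measured intensities are converted to attenuation, tau = -ln(I / I0), and a linear model then maps the per-band attenuation to a particulate concentration. This is only an illustration of the two ingredients under stated assumptions, not the pipeline of [152]; the extinction coefficients, noise level, and concentrations are synthetic.

```python
import numpy as np

def attenuation(intensity, reference):
    """Beer-Lambert attenuation (optical depth): tau = -ln(I / I0)."""
    return -np.log(intensity / reference)

def fit_linear(features, target):
    """Multivariate linear regression with an intercept, solved by least squares."""
    A = np.column_stack([features, np.ones(len(features))])
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    return coef

def predict(features, coef):
    return np.column_stack([features, np.ones(len(features))]) @ coef

rng = np.random.default_rng(4)
k = np.array([0.004, 0.003, 0.002])    # hypothetical per-band extinction per ug/m^3
pm = rng.uniform(5.0, 80.0, size=50)   # synthetic reference PM concentrations (ug/m^3)
reference = 1.0                        # assumed clear-sky intensity I0

# Simulated measurements: exponential attenuation with small multiplicative noise
noise = np.exp(rng.normal(0.0, 0.01, size=(50, 3)))
intensity = reference * np.exp(-pm[:, None] * k) * noise

tau = attenuation(intensity, reference)
coef = fit_linear(tau[:40], pm[:40])   # calibrate on 40 samples
pred = predict(tau[40:], coef)         # evaluate on the remaining 10
rmse = float(np.sqrt(np.mean((pred - pm[40:]) ** 2)))
print(rmse)
```

In practice the reference station measurements play the role of `pm` during calibration, and the held-out error indicates how well the optical estimate tracks them.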

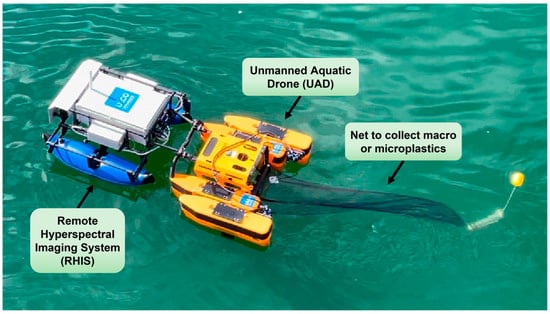

Alboody et al. introduced an innovative Remote Hyperspectral Imaging System integrated into an Unmanned Aquatic Drone (UAD) for sensing and identifying plastic in coastal and freshwater environments (Figure 5) [153]. The system operated in a near-field of view, with the HS camera positioned approximately 45 cm from the water surface. An HSI database was constructed with HS data cubes of various plastics and polymers commonly found as litter in aquatic environments. Using this benchmark database, an in situ spectral analysis characterized the HS reflectance of these items, pinpointing the absorption feature wavelengths for each plastic type. The Remote Hyperspectral Imaging System’s ability to automatically identify different plastic litter types was evaluated by applying several supervised ML approaches to a dataset of representative marine litter image patches. The evaluation showed that the system could classify plastic litter with an overall accuracy of nearly 90%. This research indicated that the Remote Hyperspectral Imaging System, when used alongside the UAD, is a promising method for detecting plastic waste in aquatic settings.

Figure 5.

Remote Hyperspectral Imaging System mounted on a UAD called the Jellyfishbot, which can be equipped with a net to gather macroplastics or microplastics from various water surfaces [153].

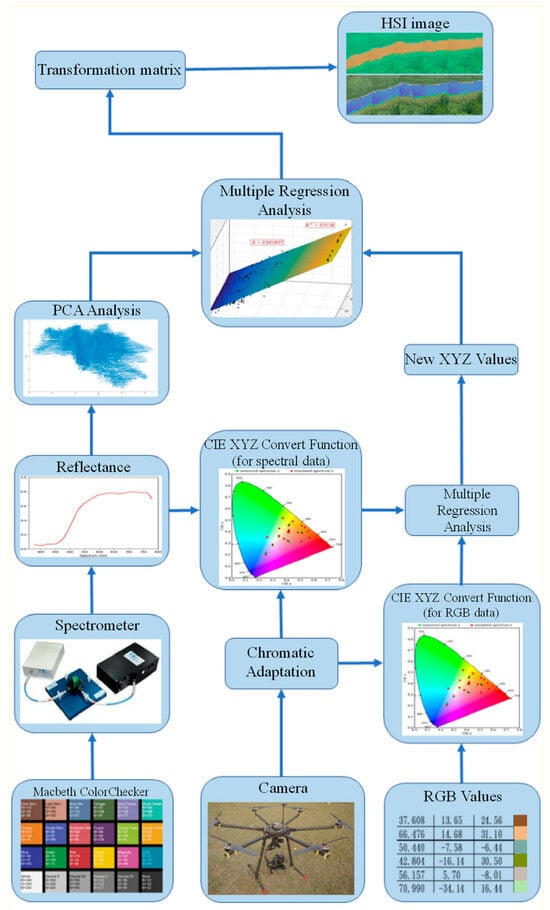

The conversion of red, green, and blue (RGB) images to hyperspectral images involves transforming the limited color information from traditional red, green, and blue channels into a richer spectral dataset that spans a broader range of wavelengths [154]. While RGB images capture only three spectral bands, HSI records hundreds of narrow spectral bands across the electromagnetic spectrum, providing detailed spectral signatures for each pixel [155]. This conversion process typically uses specialized algorithms, such as DL models or ML techniques, which extract spectral information from RGB data and estimate the missing wavelengths [156]. One approach involves leveraging databases of hyperspectral data to train these algorithms, allowing them to predict hyperspectral signatures from RGB images [157,158]. Principal Component Analysis (PCA), along with other dimensionality reduction techniques, can also aid in estimating hyperspectral bands by identifying key spectral patterns in RGB data [108]. The conversion significantly enhances image analysis, as it enables the detection of subtle material properties and environmental changes that are not visible in standard RGB imaging, making it useful in fields like remote sensing, agriculture, and medical imaging [159].
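
The simplest version of this idea is a linear map from RGB values to spectra, fitted by least squares on paired training data. The sketch below is a toy under strong assumptions: the Gaussian camera sensitivities and the three-function spectral basis are invented, and the spectra are constructed to lie in a three-dimensional subspace so that three channels suffice. Real spectra are richer, which is why the DL models cited above are used in practice.

```python
import numpy as np

wavelengths = np.linspace(400.0, 700.0, 31)   # 10 nm sampling over the visible range

def gaussian(center, width):
    """Gaussian curve over the wavelength axis."""
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)

# Hypothetical spectral basis and hypothetical R, G, B camera sensitivities
basis = np.stack([gaussian(c, 60.0) for c in (450.0, 550.0, 650.0)])    # (3, 31)
camera = np.stack([gaussian(c, 40.0) for c in (610.0, 540.0, 460.0)])   # (3, 31)

rng = np.random.default_rng(5)
coeffs = rng.uniform(0.1, 1.0, size=(200, 3))
spectra = coeffs @ basis     # (200, 31) synthetic reflectance spectra
rgb = spectra @ camera.T     # (200, 3) simulated camera responses

# Fit the RGB -> spectrum map on 150 training pairs, test on the remaining 50
W, *_ = np.linalg.lstsq(rgb[:150], spectra[:150], rcond=None)   # W has shape (3, 31)
pred = rgb[150:] @ W
max_err = float(np.abs(pred - spectra[150:]).max())
print(max_err)
```

The near-zero error here is a consequence of the idealized construction. With real data the mapping is underdetermined, and the quality of the estimate depends on how well the training database represents the scene.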

The study conducted by Leung et al. utilized spectral analysis to quantify water pollutants by analyzing images related to biological oxygen demand (BOD) [160]. A total of 2545 images depicting water quality pollution were generated due to the lack of a standardized method for water pollution detection. To address this, a novel snapshot HSI conversion algorithm was developed to perform spectral analysis on traditional RGB images, as shown in Figure 6. To demonstrate the effectiveness of the developed HSI algorithm, two distinct 3D-CNNs were employed to train two separate datasets. One dataset was based on the HSI conversion algorithm (HSI-3DCNN), while the other utilized a traditional RGB dataset (RGB-3DCNN). The images depicting water quality pollution were categorized into three groups—Good, Normal, and Severe—based on the severity of pollution. A comparison was conducted between the HSI and RGB models, focusing on precision, recall, F1-score, and accuracy metrics. The water pollution model’s accuracy was improved from 76% to 80% when the RGB-3DCNN was replaced by the HSI-3DCNN. These results suggested that the HSI conversion algorithm has the potential to significantly enhance the effectiveness of water pollution detection compared with the traditional RGB model [160].
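
For reference, the four metrics used in such comparisons can be computed from a confusion matrix as sketched below. The ten labels are made up for illustration and are unrelated to the results of [160]; class indices 0, 1, and 2 stand for Good, Normal, and Severe.

```python
import numpy as np

def classification_metrics(y_true, y_pred, n_classes):
    """Confusion matrix plus per-class precision, recall, F1, and overall accuracy."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1                                  # rows: true class, columns: predicted
    tp = np.diag(cm).astype(float)
    precision = tp / np.maximum(cm.sum(axis=0), 1)     # TP / all predicted as the class
    recall = tp / np.maximum(cm.sum(axis=1), 1)        # TP / all truly in the class
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)
    accuracy = tp.sum() / cm.sum()
    return cm, precision, recall, f1, accuracy

# Hypothetical labels: 0 = Good, 1 = Normal, 2 = Severe
y_true = [0, 0, 0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 2, 0]

cm, precision, recall, f1, accuracy = classification_metrics(y_true, y_pred, 3)
print(cm)
print(precision, recall, f1, accuracy)
```

Accuracy summarizes all classes in one number, whereas per-class precision and recall show which pollution levels are being confused, which matters when the classes are imbalanced.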

Figure 6.

VIS-HSI conversion algorithm [160].

- (C) Mining and mineralogy

Hyperspectral remote sensing has emerged as a crucial tool in mapping lithological variations and mineral alterations across a wide spectrum of ore mineralization settings [161]. This advanced imagery enables the detailed characterization and analysis of the Earth’s surface, offering extensive spectral and spatial data that prove invaluable during the early reconnaissance phases of mineral exploration. The use of hyperspectral data, gathered from both satellite and airborne platforms, has been particularly effective in overcoming common challenges associated with mineral exploration [162].

With the rapid growth in hyperspectral data acquisition from a variety of platforms, the scientific community has responded by developing advanced data processing techniques, many of which leverage AI. Moreover, recent research has highlighted the increasing integration of ML algorithms with traditional image-processing methods and geological surveys. This integration has underscored the growing importance of hyperspectral remote sensing in lithological mapping and mineral prospecting (HLM-MP) [163,164].

The global shift toward green energy has led to a significant perceived rise in the demand for raw materials. Although recycling rates are steadily improving, the discovery of new resources is essential to meet the growing supply needs [165]. Mapping mineral abundances in an environmentally responsible manner calls for minimally invasive and cost-efficient exploration techniques. Geological remote sensing provides an effective solution for mineral resource exploration, with satellite and airborne imaging commonly used for large-scale exploration. However, a crucial gap persists when it comes to mineral mapping at the finer outcrop scale. Addressing this gap is vital for more detailed and precise resource targeting.

Booysen et al. presented a novel approach that combines multiple sensors and multi-scale data acquisition to better understand the complex mineralogy related to lithium and tin mineralization in the Uis pegmatite complex, Namibia [166]. The method was trained using hand samples and ultimately used to generate a three-dimensional (3D) point cloud to map lithium mineralization within an open pit. This enabled the successful identification and mapping of lithium-bearing minerals, such as cookeite and montebrasite, at the outcrop scale. Validation of the approach was conducted using drill-core data, X-ray diffraction (XRD) analysis, and laser-induced breakdown spectroscopy (LIBS) measurements. This technique offered an efficient means of mapping complex terrains while enhancing the monitoring and optimization of ore extraction processes. Additionally, the method was adaptable for application to other minerals of importance to the mining industry [166].

AI-HSI revolutionizes mining and mineralogy by enabling the precise recognition and analysis of minerals in complex geological formations [167]. AI algorithms enhance HSI data, capturing a wide range of wavelengths reflected from mineral surfaces far beyond the visible spectrum. This detailed spectral information allows for accurate mineral recognition and mapping, even in heterogeneous and visually indistinguishable samples [168]. By harnessing the power of AI, HSI systems can analyze vast amounts of data from the EM spectrum, providing defense forces with unprecedented capabilities in surveillance and reconnaissance. These systems offer enhanced detection and recognition of targets, including those concealed by camouflage or environmental factors [169].

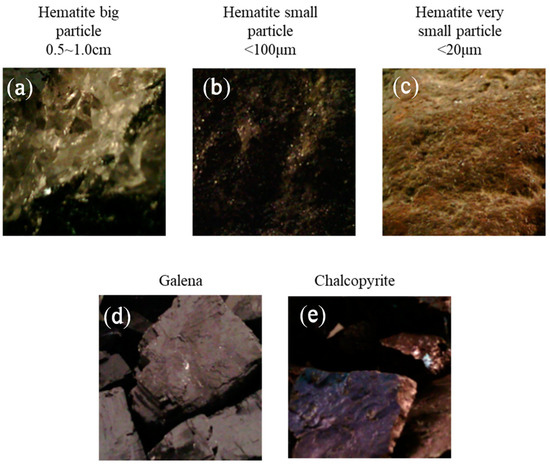

Mineral processing methods such as flotation are used in mining operations to separate ore into its constituent minerals. Prior knowledge of the types of minerals present in the ore enables these processes to be both more efficient and faster. Human vision distinguishes information in only three wavelength regions (red, green, and blue), whereas HSI can collect high-resolution spectral data from the visible region up to the NIR. DL techniques can extract and analyze features from HS data, identifying the unique spectral patterns of each mineral. Okada et al. proposed an automatic mineral identification system that combines HSI with DL to determine mineral types before the mineral processing stage [170]. This approach allowed for the rapid, non-destructive recognition of minerals in rocks. Figure 7a–e presents example RGB images of various minerals. The study investigated the feasibility of identifying these minerals using RGB images analyzed through DL, comparing the results with those obtained from HS data analysis. Experimental results demonstrated that applying DL to RGB images of minerals yielded an identification accuracy of approximately 30%. In contrast, analyzing HS data with DL achieved a significantly higher accuracy of over 90% [170].

Figure 7.

Five categories of minerals used for categorization: (a) hematite with large particles, (b) hematite with small particles, (c) hematite with very small particles, (d) galena, and (e) chalcopyrite [170].

- (D) Forensic science

Analyzing evidence presents significant challenges due to the complexity, diversity, and often unknown nature of materials found at crime scenes. Forensic science plays a crucial role in examining such evidence, requiring a careful selection of chemical tests, analytical methods, and processing techniques by forensic experts. Ideally, the evidence should be interpreted, analyzed, and evaluated within the original context of the crime scene. In this regard, HSI has proven to be an effective analytical tool, preserving the integrity of samples and objects for repeated and sequential analyses, as well as for re-examination in counter-proof tests [171].

AI is increasingly being integrated into various forensic disciplines, offering valuable support across numerous areas [172,173]. In forensic anthropology, AI has been applied to assist in determining the sex of individuals by using CNNs to analyze dysmorphic sexual traits in 3D skull reconstructions obtained from computed tomography (CT) scans. In forensic dentistry, AI has proven effective in predicting age and gender based on dental features, as well as in the analysis of bite marks [174]. ANNs have demonstrated up to 90% accuracy in age and gender predictions. In forensic pathology, AI is used to enhance the rapid identification of diatom species, which can be crucial in cases involving drowning. CNNs have been employed to classify diatoms in both water samples and human tissue, with an impressive accuracy rate of 95%. Meanwhile, in forensic genetics, AI is harnessed to process the vast amounts of data generated during forensic DNA analysis [175]. One notable application involved using an ANN for DNA profiling from high throughput sequencing data, achieving remarkable precision, with 99.9% accuracy [176].

Forgery involves the creation, alteration, or imitation of writings, objects, or documents and is classified as a white-collar crime. Investigating cases such as forged checks, wills, or altered documents often requires the analysis of the inks used [177]. HSI offers a powerful tool for detecting various materials, including different types of inks. By combining this technology with advanced classifiers, it is possible to accurately identify the specific inks used in a document. The study conducted by Rastogi et al. utilized the UWA Writing Ink Hyperspectral Images (WIHSI) database to conduct ink detection, applying three dimensionality reduction algorithms: Principal Component Analysis (PCA), Factor Analysis, and Independent Component Analysis (ICA). A comparative analysis was then performed, evaluating the results of these methods against existing techniques [178].

AI-HSI significantly enhances forensic science by providing detailed, non-destructive analysis of materials and scenes. HSI captures a wide spectrum of light beyond visible wavelengths, permitting the detection of subtle differences in materials that are imperceptible to the human eye. When integrated with AI, this technology can efficiently analyze and interpret complex spectral data, identifying substances such as drugs, explosives, and biological materials with high precision. It can also be used to detect traces of blood, gunshot residue, or other forensic evidence on various surfaces [179].

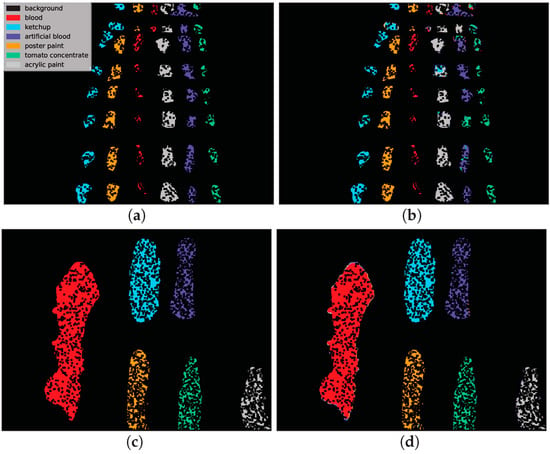

Traditionally used in remote sensing [180], HSI is now applied in non-invasive substance categorization, with significant potential in forensic science for classifying substances at crime scenes. Ksiazek et al. focused on blood stain categorization as a case study for evaluating HS data processing methods [181]. Experiments were conducted on a previously untested dataset comprising images of blood and blood-like substances (e.g., ketchup, tomato concentrate, artificial blood). To assess categorization performance, two experimental setups were prepared: Hyperspectral Transductive Classification (HTC), where training and test sets came from the same image, and Hyperspectral Inductive Classification (HIC), where the test set came from a different image. The latter setup presented a more realistic and challenging scenario for forensic investigators. Several DL architectures, including 1D, 2D, and 3D CNNs, an RNN, and a Multilayer Perceptron (MLP), were tested, and their performance was compared against that of a baseline SVM. Model evaluation used t-SNE and confusion matrix analysis to identify and address model undertraining [181].
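
The difference between the two setups comes down to how pixels are split into training and test sets, which can be sketched as follows. The image names, the 10% training fraction, and both helper functions are hypothetical and are not taken from [181].

```python
import numpy as np

def transductive_split(labels, train_fraction, rng):
    """HTC-style split: training and test pixels are drawn from the same image."""
    order = rng.permutation(labels.size)
    n_train = int(train_fraction * labels.size)
    train = np.zeros(labels.size, dtype=bool)
    train[order[:n_train]] = True
    train = train.reshape(labels.shape)
    return train, ~train          # boolean masks over the image grid

def inductive_split(image_ids, test_image):
    """HIC-style split: train on every other image, test on a held-out image."""
    train_ids = [i for i in image_ids if i != test_image]
    return train_ids, [test_image]

rng = np.random.default_rng(6)
labels = np.zeros((10, 10), dtype=int)    # placeholder ground-truth map of one image

train_mask, test_mask = transductive_split(labels, train_fraction=0.1, rng=rng)
train_ids, test_ids = inductive_split(["image_A", "image_B"], test_image="image_B")
print(train_mask.sum(), test_mask.sum(), train_ids, test_ids)
```

In the transductive case the model has already seen the acquisition conditions of the test pixels, whereas the inductive case requires generalization to a new image with potentially different lighting and background. This is consistent with the lower accuracies reported for HIC.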

Results indicated that in the HTC setup, all models, including the MLP and SVM, performed comparably, achieving an overall accuracy of 98–100% for simpler images and 74–94% for more difficult ones. Nevertheless, in the more challenging HIC setup, selected DL architectures demonstrated a noteworthy benefit, with the best overall accuracy ranging from 57% to 71%, improving on the baseline by up to 9 percentage points. A detailed analysis and discussion of the results for each architecture revealed that per-class errors varied significantly according to the model.

The best-performing models came from two diverse architecture families (3D CNN and RNN), suggesting that the optimal DNN design for HS data remains an open research area [181]. Furthermore, the study underscored the importance of selecting appropriate model architectures based on the specific features of the data and the categorization task. Future research may explore hybrid models or advanced ensembling techniques to further enhance categorization performance in forensic applications. This work contributes valuable insights into the application of DL techniques for HS data, highlighting both their potential and the challenges that remain.

Figure 8 illustrates the categorization maps for the various architectures within the context of the HTC scenario. The predictions for the HTC scenario are presented, with the 1D CNN model applied to the E(1) image and the 2D CNN model applied to the F(1) image. Due to the HTC scenario’s requirement to utilize only a subset of accessible pixels as the test set, the categorization maps exhibited areas of incomplete data. In the first row, misclassifications were identified within the artificial blood class, whereby some pixels were incorrectly designated as tomato concentrate and acrylic paint. The second row displayed more straightforward categorization, with errors predominantly occurring along substance borders, potentially due to the mixing of spectra [181].

Figure 8.

Two examples of classification maps from the HTC scenario using 1D CNN and 2D CNN architectures. Most classes were correctly labeled, resulting in high classification precision. (a) Reference data for image E(1). (b) Prediction by the 1D CNN. (c) Reference data for image F(1). (d) Prediction by the 2D CNN [181].

- (E) Medical field

In the medical field, AI-HSI contributes to non-invasive diagnostics and tissue analysis [182]. When light interacts with biological tissues, it undergoes complex processes such as scattering and absorption due to structural inhomogeneity and the presence of molecules like hemoglobin, melanin, and water. These interactions alter as diseases progress, affecting the tissue’s absorption, fluorescence, and scattering properties. HSI captures the reflected, fluorescent, and transmitted light from tissues, providing a wealth of quantitative data that can reveal pathological changes at the molecular level. This detailed spectral information enables clinicians to obtain valuable diagnostic insights, improving the accuracy of disease detection and treatment monitoring [183].

By automating complex data processing tasks, AI not only accelerates analysis but also improves accuracy, leading to more informed decision-making and innovative applications across diverse domains [184,185]. A recent multidisciplinary study including 39 patients examined the potential of retinal imaging methods for analyzing Alzheimer’s disease (AD) [186]. The study utilized a user-friendly HS snapshot camera featuring sixteen spectral bands ranging from 460 nm to 620 nm with a 10 nm bandwidth to quantify amyloid buildup. Additionally, optical coherence tomography (OCT) was used to evaluate the thickness of the retinal nerve fiber layer. Dedicated image pre-processing and ML helped discriminate between AD patients and healthy subjects. Combining the HS and OCT data produced the best results [186].

For the application of HSI in an in vivo setting, particularly during surgery, enhanced real-time, in vivo, and label-free capabilities are highly desirable. Currently, traditional imaging methods like CT and MRI are used to confirm tumor locations prior to resection surgeries. However, the exact locations, number, and sizes of tumors hidden within organs can remain uncertain [187]. During surgery, the surgeon’s expertise in visual diagnosis, eye-hand coordination, and tactile feedback plays a crucial role. This has led to a growing demand for intraoperative imaging tools. HSI has gained significant attention due to its in vivo, real-time, and label-free nature, making it particularly useful for perioperative applications and therapy evaluation. HSI has demonstrated the ability to differentiate between healthy and cancerous tissues in near-real time during surgical oncology. Combined with advanced classification algorithms, HSI can monitor the surgical environment, delineate tumors [188], guide surgery, identify critical adjacent structures, detect residual tumor cells, and ultimately assist surgeons in making precise decisions during surgery [189].

Ji et al. introduced an HS learning method for snapshot HSI [190]. This method incorporated sampled HS data from a small subarea into a learning algorithm to reconstruct the hypercube. HS learning was based on the concept that a photograph contains detailed spectral information beyond its visual appearance. By sampling a small amount of HS data, the method enabled spectrally informed learning to reconstruct a hypercube from an RGB image without needing full HS measurements. This method attained full spectroscopic resolution in the hypercube, comparable to that of high-resolution scientific spectrometers. Furthermore, HS learning enabled rapid dynamic imaging by utilizing the slow-motion video functionality of regular smartphones, which produces a sequence of multiple RGB images over time [190].

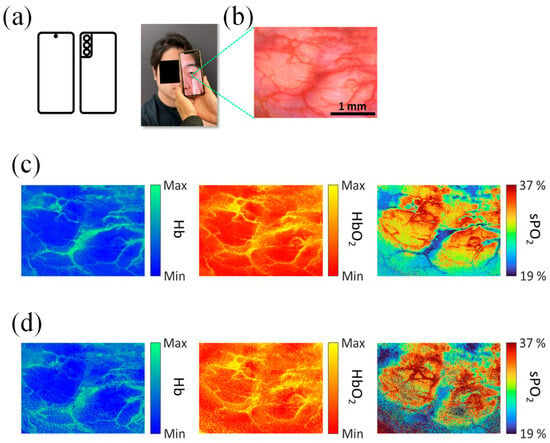

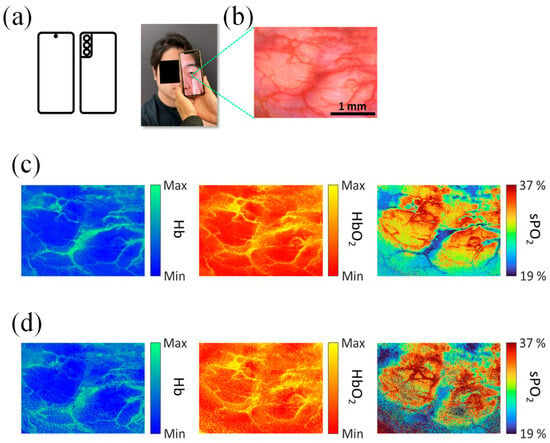

To provide further evidence of the capabilities of dynamic imaging of peripheral microcirculation at a specific video frame rate, Ji et al. recorded a video at 60 fps for 180 s (temporal resolution = 0.0167 s) [190]. The authors employed the inner eyelid (palpebral conjunctiva) as a model system for peripheral microcirculation in humans, visualizing spatiotemporal hemodynamic variations in the microvessels (Figure 9a,b). The inner eyelid is a highly vascularized and readily accessible peripheral tissue site, receiving blood from the ophthalmic artery, and thus represents a feasible sensing site for a range of diseases and disorders. Figure 9c,d illustrate the peripheral hemodynamic maps of hemoglobin (Hb), oxyhemoglobin (HbO2), and oxygen saturation (sPO2) during the resting state of a healthy adult. These maps demonstrate the capability of the HS learning method to provide detailed, high-resolution spectral information from dynamic video data, highlighting its potential for non-invasive medical diagnostics and monitoring. The ability to capture and analyze such fine-grained spectral data in real time opens new possibilities for applications in medical imaging, environmental monitoring, and beyond, where rapid and accurate spectral analysis is crucial [190].

The aforementioned maps were calculated using two distinct ML frameworks, namely statistical learning and DL. The sPO2 maps displayed in Figure 9 revealed a spatially intricate network of perfusion patterns within the inner eyelid, a detail that was not discernible in the original RGB image. The hemodynamic maps of HbO2 and Hb generated with statistical learning and with DL showed a high level of concordance, which supports the efficacy of the employed hemodynamic parameter extraction techniques [190].
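As a minimal sketch of how a saturation map relates to the two hemoglobin maps, the snippet below assumes the standard definition of oxygen saturation as the oxygenated fraction of total hemoglobin. The text above does not state which formula Ji et al. used, so this is an illustration rather than their pipeline; the function name, array names, and toy values are hypothetical.

```python
import numpy as np

def oxygen_saturation(hbo2, hb, eps=1e-12):
    """Per-pixel oxygen saturation: HbO2 / (HbO2 + Hb).

    hbo2, hb: arrays of the same shape holding oxy- and deoxyhemoglobin
    content in any consistent unit. Pixels whose total hemoglobin is
    not above eps are returned as NaN instead of dividing by zero.
    """
    hbo2 = np.asarray(hbo2, dtype=float)
    hb = np.asarray(hb, dtype=float)
    total = hbo2 + hb
    out = np.full(total.shape, np.nan)
    np.divide(hbo2, total, out=out, where=total > eps)
    return out

# Toy 2x2 maps (hypothetical values, not data from the study).
hbo2_map = np.array([[3.0, 1.0], [0.0, 2.0]])
hb_map = np.array([[1.0, 1.0], [0.0, 6.0]])
so2_map = oxygen_saturation(hbo2_map, hb_map)
```

Applied frame by frame to maps recovered from a video, the same function would yield a time series of saturation maps.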

The future of AI-HSI holds immense potential for groundbreaking advancements and applications [56]. For instance, the study conducted by Tsai et al. leveraged HSI and a DL diagnostic model to recognize the stage of esophageal cancer and pinpoint its locations [56]. The model employed a novel algorithm developed in that study, which simulated spectral data from images and integrated it with DL for the classification and analysis of esophageal cancer using a single-shot multibox detector (SSD)-based recognition system. To evaluate the prediction model, a dataset comprising 155 white-light endoscopic (WLI) images and 153 narrow-band endoscopic (NBI) images of esophageal cancer was utilized. The algorithm was efficient, taking just 19 s to predict the outcomes for the 308 test images. The precision of the test results using spectral data was 88% for WLI and 91% for NBI images. In comparison, the precision using RGB images was 83% for WLI and 86% for NBI. The study thus found that incorporating HSI improved the precision of both WLI and NBI predictions by 5 percentage points [56].
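The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers reported in the text; the variable names are illustrative, and the per-image time is a simple average that assumes the 19 s covers all 308 images.

```python
# Figures reported in the text for Tsai et al. [56].
n_wli, n_nbi = 155, 153
total_images = n_wli + n_nbi           # images in the test set
inference_time_s = 19.0                # total prediction time

# Average time per image, assuming the 19 s covers the full set.
time_per_image_s = inference_time_s / total_images

precision = {
    "WLI": {"spectral": 0.88, "rgb": 0.83},
    "NBI": {"spectral": 0.91, "rgb": 0.86},
}

# Absolute gain in percentage points and relative gain over the RGB baseline.
gain_points = {
    k: round((v["spectral"] - v["rgb"]) * 100) for k, v in precision.items()
}
relative_gain = {
    k: (v["spectral"] - v["rgb"]) / v["rgb"] for k, v in precision.items()
}
```

The absolute gain is the same for both modalities, while the relative gain differs slightly because the RGB baselines differ.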

Figure 9.

Illustrative peripheral hemodynamic maps of HbO2, Hb, and sPO2 from a healthy adult at rest. The inner eyelid is highly vascular and supplied by the ophthalmic artery, making it an accessible site for imaging [190]. (a) A healthy adult takes a photo with a smartphone while pulling down the inner eyelid. (b) High-resolution RGB image of the inner eyelid showing the field of view for HS learning-based imaging; microvessels are visible and unobscured by skin pigments. (c) Hemodynamic maps of the inner eyelid generated using statistical learning, based on tissue reflectance spectral modeling from the recovered hypercube. (d) Hemodynamic maps of the inner eyelid produced using DL; the DNN directly outputs HbO2 and Hb values from the RGB input [190].

- (F) Space operations

Since the late 1990s, spectroscopy, spectral analysis, and color photometry have been proposed for characterizing the composition of space objects [191,192]. Early works focused on observing spectra from known objects and linking them to material types, with some initial classification methods based on color indexes and spectral features. Color photometry was also suggested for differentiating objects but not for identifying surface materials or reconstructing attitude motion [193]. Meanwhile, hyperspectral technology and spectrometry have been applied in astronomy, such as asteroid classification. Additionally, light curve analysis has been used to reconstruct the attitude and shape of space objects [194]. More recently, ML has been introduced to classify objects based on light curves. HSI has also been explored for close-proximity navigation and target classification on Earth, with extensive use in Earth observation [194].

The rapid advancement of nanosatellite technologies has significantly expanded the availability of satellite-generated data and their applications. Satellite edge computing (SEC) has emerged as a promising solution for performing in-orbit processing of sensed data, reducing the need for extensive terrestrial-satellite communications and enabling mission-critical services. While most SEC research has focused on general computing tasks, Zhu et al. proposed a two-tier collaborative framework specifically tailored for HSI processing [195]. This approach involved the strategic selection of spectral bands from the collected HSI data, transmitting only the most relevant bands for further analysis. An in-depth data analysis was performed to uncover the intricate relationship between band selection and analytic performance. The band selection problem was then framed as a utility maximization challenge, balancing analytic accuracy, energy efficiency, and communication demands. To address this, a novel multi-agent reinforcement learning solution (MaHSI) was introduced, which adapts to the dynamic SEC environment. MaHSI models the complex interactions among spectral bands as collaborations among agents, reducing the exploration space and optimizing decision-making. Experimental results on real-world HSI datasets demonstrated that this method not only surpasses traditional band selection algorithms in accuracy and processing speed but also maximizes utility for satellite missions [195].
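To make the utility trade-off concrete, the following is a minimal sketch of band selection as utility maximization. It is not MaHSI: the multi-agent reinforcement learning policy is replaced here by a simple greedy heuristic. The utility terms are assumptions for illustration only: normalized band variance stands in for analytic value, correlation with already selected bands stands in for redundancy, and a hypothetical scalar `cost_per_band` stands in for energy and communication cost.

```python
import numpy as np

def greedy_band_selection(cube, cost_per_band, max_bands):
    """Greedily add bands while the marginal utility stays positive.

    cube: array of shape (rows, cols, bands); bands are assumed non-constant.
    cost_per_band: hypothetical penalty for transmitting one more band.
    Marginal utility of a band = normalized variance
        * (1 - max |correlation| with already selected bands) - cost.
    """
    pixels = cube.reshape(-1, cube.shape[-1]).astype(float)
    variance = pixels.var(axis=0)
    relevance = variance / variance.max()
    corr = np.abs(np.corrcoef(pixels, rowvar=False))
    selected = []
    while len(selected) < max_bands:
        best_band, best_gain = None, 0.0
        for b in range(pixels.shape[1]):
            if b in selected:
                continue
            redundancy = max((corr[b, s] for s in selected), default=0.0)
            gain = relevance[b] * (1.0 - redundancy) - cost_per_band
            if gain > best_gain:
                best_band, best_gain = b, gain
        if best_band is None:  # no remaining band is worth its cost
            break
        selected.append(best_band)
    return selected

# Synthetic 20x20 cube with 4 bands: band 1 nearly duplicates band 0,
# band 2 is independent, band 3 carries very little signal.
rng = np.random.default_rng(0)
base = 3.0 * rng.normal(size=400)
bands = np.stack([
    base,
    base + 0.01 * rng.normal(size=400),
    2.0 * rng.normal(size=400),
    0.1 * rng.normal(size=400),
], axis=1)
cube = bands.reshape(20, 20, 4)
selected = greedy_band_selection(cube, cost_per_band=0.1, max_bands=4)
```

On this toy cube the heuristic keeps only one of the two near-duplicate bands plus the independent one, which mirrors the idea of transmitting only the most relevant bands. A learned policy such as the one described above would replace the hand-written gain function.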

The study conducted by Vasile et al. explored the use of HSI and ML to analyze the surface composition of space objects and estimate their attitude motion [196]. It demonstrated how hyperspectral and multispectral analysis of absorbed, emitted, and reflected light can accurately identify surface materials. A high-fidelity simulation model was introduced and validated through laboratory experiments to test this approach. The paper explains the process of spectral unmixing to estimate the materials on the object’s surface and proposes an ML method to reconstruct attitude motion based on spectral time series.
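Spectral unmixing is commonly posed as a linear mixing problem: the observed spectrum is modeled as a non-negative combination of reference material spectra (endmembers). The sketch below illustrates that general idea with projected gradient descent on a least-squares objective. It is not the procedure of Vasile et al., whose details are not given here, and the endmember values are made up for the example.

```python
import numpy as np

def unmix_nonnegative(endmembers, spectrum, n_iter=2000):
    """Estimate abundances a >= 0 such that endmembers @ a ~ spectrum.

    endmembers: (bands, materials) matrix of reference spectra.
    spectrum: (bands,) observed mixed spectrum.
    Minimizes 0.5 * ||E a - y||^2 by gradient steps followed by
    projection onto the non-negative orthant.
    """
    E = np.asarray(endmembers, dtype=float)
    y = np.asarray(spectrum, dtype=float)
    # Step size 1/L, where L = sigma_max(E)^2 bounds the gradient's slope.
    step = 1.0 / np.linalg.norm(E, 2) ** 2
    a = np.zeros(E.shape[1])
    for _ in range(n_iter):
        grad = E.T @ (E @ a - y)
        a = np.maximum(a - step * grad, 0.0)
    return a

# Hypothetical reflectance of 3 materials in 5 bands (one column each).
E = np.array([
    [0.9, 0.1, 0.2],
    [0.8, 0.2, 0.3],
    [0.4, 0.6, 0.3],
    [0.2, 0.8, 0.5],
    [0.1, 0.7, 0.9],
])
a_true = np.array([0.5, 0.3, 0.2])   # surface fractions used to mix
y = E @ a_true                       # noise-free mixed spectrum
a_est = unmix_nonnegative(E, y)
```

With noise-free synthetic data the estimate recovers the mixing fractions. Real measurements would add noise and often a sum-to-one constraint on the abundances, both of which are omitted here.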

Integrating AI into space operations can significantly enhance the speed of response to different events. By processing massive raw HSIs directly on-board satellites, the images can be converted into valuable information quickly. This not only accelerates the transfer of images to the ground but also vastly improves the scalability of AI solutions across the globe [23]. Nevertheless, several challenges need to be addressed, including hardware and energy constraints, the efficiency of DL models, the availability of reference data, and the need to build trust in AI-based solutions. It is crucial to select AI applications that are unbiased, objective, and interpretable, as this choice affects all aspects of satellite design and operation, particularly for emerging missions. This problem has been addressed by introducing a quantifiable procedure for the empirical evaluation of candidate AI applications intended for on-board operation. To demonstrate the flexibility of the proposed procedure, it was used to assess AI applications for two fundamentally different missions: the Copernicus Hyperspectral Imaging Mission for the Environment [European Union/European Space Agency (ESA)] and the 6U Intuition-1 satellite. The standardized process is considered to have the potential to become an important tool for maximizing the results of Earth observation missions by selecting the most appropriate on-board AI applications from both scientific and industrial perspectives [23].

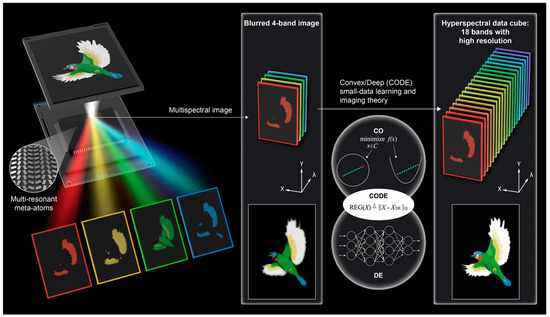

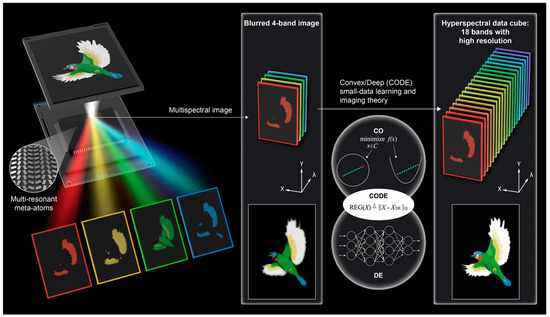

HSI is of great importance for material recognition; however, conventional systems are frequently bulky, impeding the development of compact solutions. While prior metasurfaces (MSs) have addressed volume issues, their complex fabrication processes and large footprints remain significant obstacles to their further development. Lin et al. introduced a compact snapshot HS imager that integrates meta-optics with the small-data convex/deep (CODE) DL theory (see Figure 10) [197]. The imager incorporates a single multi-wavelength MS chip operating in the visible range (500 nm to 650 nm), which significantly reduces the overall device size. To validate its performance, a 4-band multispectral imaging dataset was used as input. The CODE-driven imaging system was able to generate an 18-band HS data cube with high reliability, requiring only 18 training data points. The authors expected that the seamless integration of multi-resonant MSs with small-data learning theory would pave the way for low-profile, advanced instruments applicable in both fundamental scientific research and real-world scenarios [197].
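The idea of expanding a few measured channels into many spectral bands from a small training set can be illustrated with a toy example. The sketch below does not reproduce CODE: it swaps in plain ridge regression, a convex problem with a closed-form solution, and uses synthetic data generated by an exactly linear model. All names and values are hypothetical; only the sizes (4 input bands, 18 output bands, 18 training points) follow the text.

```python
import numpy as np

def fit_band_expander(x_train, y_train, ridge=1e-6):
    """Fit a linear map from few-band measurements to many-band spectra.

    x_train: (samples, 4) measured channels.
    y_train: (samples, 18) reference spectra for the same samples.
    Solves min_W ||X W - Y||^2 + ridge * ||W||^2 in closed form.
    """
    x = np.asarray(x_train, dtype=float)
    y = np.asarray(y_train, dtype=float)
    gram = x.T @ x + ridge * np.eye(x.shape[1])
    return np.linalg.solve(gram, x.T @ y)  # shape (4, 18)

rng = np.random.default_rng(0)
true_map = rng.uniform(0.0, 1.0, size=(4, 18))  # hypothetical ground truth
x_train = rng.uniform(0.0, 1.0, size=(18, 4))   # 18 training points
y_train = x_train @ true_map                    # synthetic 18-band targets

weights = fit_band_expander(x_train, y_train)
x_new = rng.uniform(0.0, 1.0, size=(1, 4))      # one unseen 4-band pixel
spectrum_18 = x_new @ weights                   # predicted 18-band spectrum
```

Because the synthetic data are exactly linear, the fit is nearly perfect here, which says nothing about real optics. In practice the mapping from 4 to 18 bands is ill-posed, which is why the approach described above combines convex optimization with DL priors.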

Figure 10.

The off-axis focusing meta-mirror, composed of multi-resonant meta-atoms, images a color object and separates it into four wavelength channels in free space. These four channels are used to create an 18-band HS data cube using convex optimization and DL, following CODE’s small-data learning and imaging theory [197].

6. Challenges and Future Prospectives

The integration of AI with HSI presents a series of significant challenges. Firstly, the sheer volume of data produced by HS sensors is enormous, often consisting of hundreds of spectral bands per pixel. This creates substantial storage and processing requirements, which can be a barrier to real-time or near-real-time analysis. The need for high computational power is a critical challenge, particularly when deploying AI models in resource-limited environments [198]. Another challenge lies in the complexity of HS data itself. HSIs capture detailed spectral information that can reveal subtle differences in materials that are not discernible in traditional imaging [199]. However, this complexity requires sophisticated algorithms for effective feature extraction and classification. Traditional ML techniques often struggle with the high dimensionality of HS data, leading to the “curse of dimensionality”, where the performance of models deteriorates as the number of dimensions increases [200].
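A back-of-the-envelope estimate shows why data volume becomes a bottleneck. The sketch below computes the raw size of a single hypercube; the sensor dimensions, band count, and 16-bit sample depth are hypothetical values chosen for illustration, not figures from a specific instrument.

```python
def hypercube_size_bytes(rows, cols, bands, bytes_per_sample=2):
    """Raw (uncompressed) size of one hypercube in bytes."""
    return rows * cols * bands * bytes_per_sample

# Hypothetical sensor: 1024 x 1024 pixels, 200 bands, 16-bit samples.
size_bytes = hypercube_size_bytes(1024, 1024, 200)
size_mib = size_bytes / 2**20

# The same scene as a 3-channel, 8-bit RGB image, for comparison.
rgb_bytes = hypercube_size_bytes(1024, 1024, 3, bytes_per_sample=1)
ratio = size_bytes / rgb_bytes
```

Under these assumptions a single cube occupies 400 MiB, more than a hundred times the size of the corresponding RGB image, so even modest frame rates imply a heavy load on storage, transmission, and computation.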

Furthermore, the lack of labeled HS data poses a significant challenge for training AI models [201]. Creating labeled datasets for HSIs is time-consuming and requires expertise in both the application domain and spectral analysis. This scarcity of annotated data limits the effectiveness of supervised learning techniques, necessitating the development of advanced semi-supervised or unsupervised learning methods. Finally, there is the issue of standardization and interoperability. HSI systems and AI platforms often come from various manufacturers and developers, leading to compatibility issues. The absence of standardized data formats and processing protocols hinders the seamless integration and sharing of data and models across different platforms and applications.

Despite these challenges, the synergy between AI and HSI holds immense potential for future advancements across various fields. One promising direction is the development of more efficient and scalable AI algorithms tailored for HS data. Advances in DL, particularly in CNNs and RNNs, are expected to play a vital role in overcoming the curse of dimensionality and improving the accuracy of HS data analysis [202,203]. Moreover, the evolution of hardware technology, such as graphics processing units (GPUs), specialized AI accelerators, and photonic neural networks (PNNs) [204,205], will facilitate the handling of large HS datasets, enabling faster and more efficient data processing. Cloud computing and edge computing solutions also present opportunities for deploying HSI and AI applications in real time, even in remote or resource-constrained environments.

The development of large-scale annotated HS datasets, along with improved data augmentation techniques, will enhance the training of AI models [206]. Crowdsourcing and citizen science initiatives could play a role in gathering and labeling HS data, expanding the available datasets and improving model robustness. Standardization efforts will be critical to the broader adoption of HSI and AI. Establishing common data formats, protocols, and best practices will facilitate interoperability and integration across different systems and applications. This will enable the creation of more versatile and reusable AI models, accelerating innovation and deployment.

In application domains such as agriculture, environmental monitoring, medical diagnostics, and material science, the integration of AI with HSI is poised to revolutionize practices [207]. For instance, in agriculture, AI-driven HS analysis can lead to more precise crop monitoring and disease detection, significantly enhancing yield and sustainability. In medical diagnostics, it can improve the accuracy of non-invasive diagnostic techniques, leading to earlier and more reliable detection of diseases.

7. Conclusions