Abstract

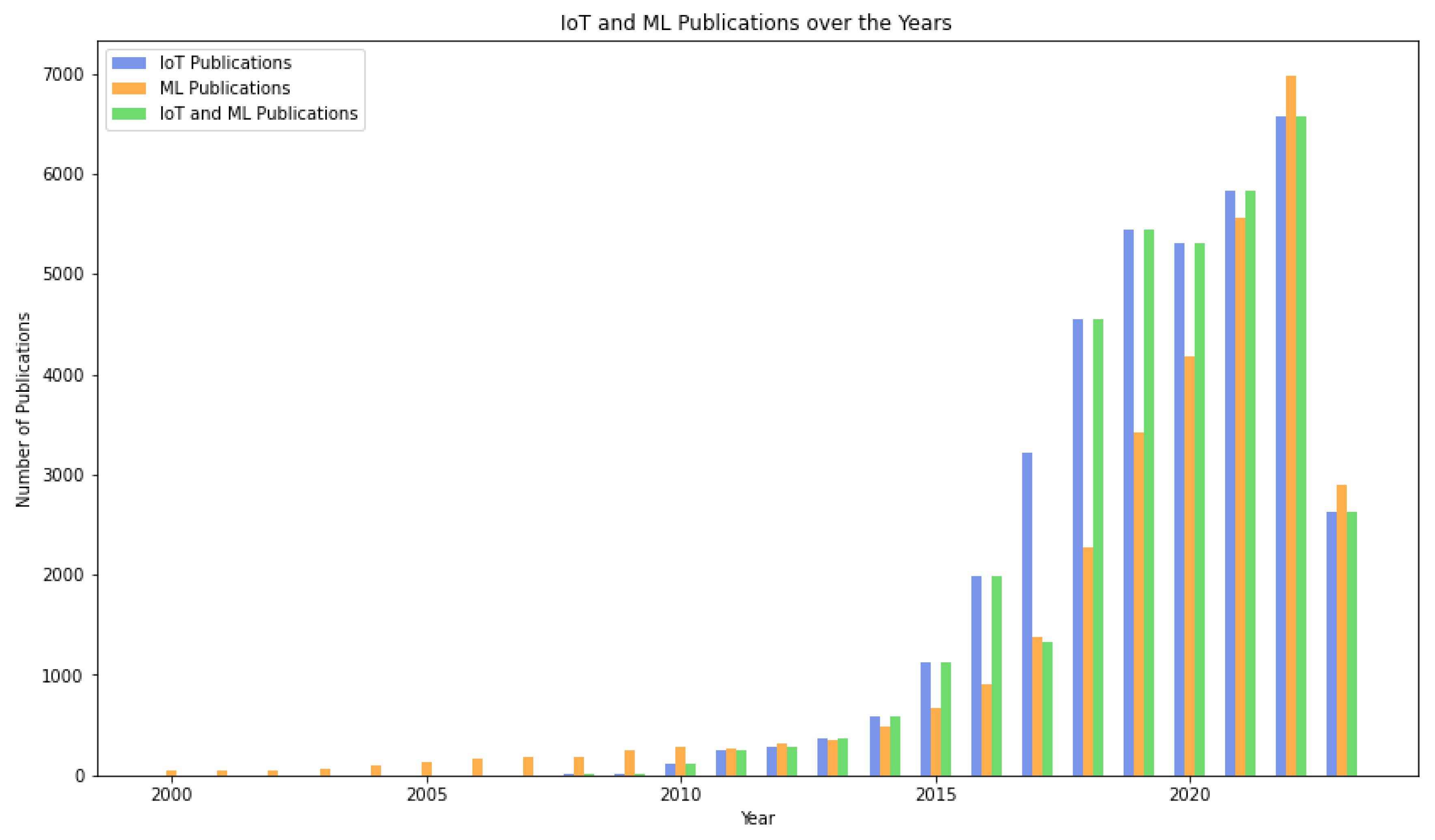

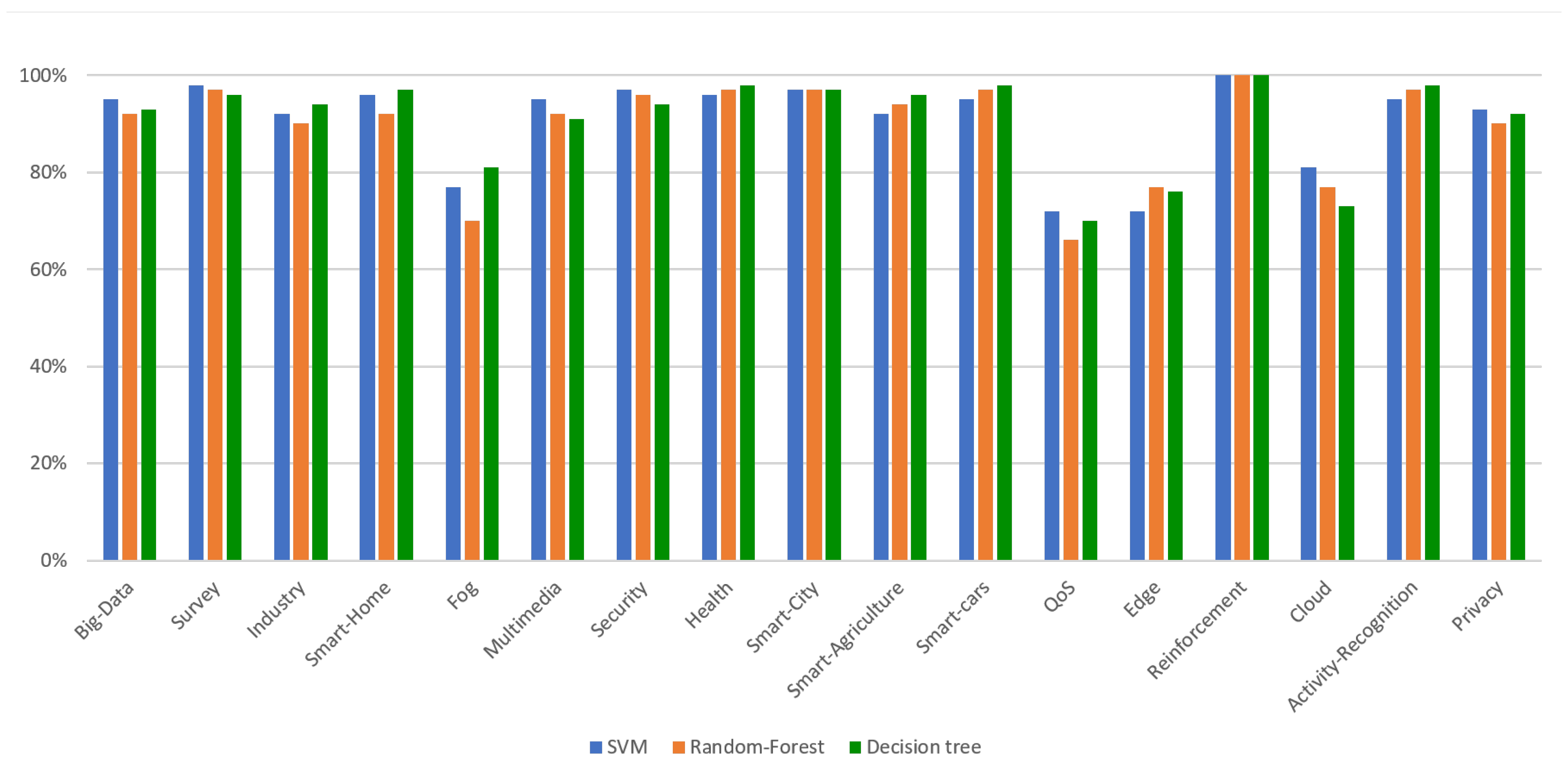

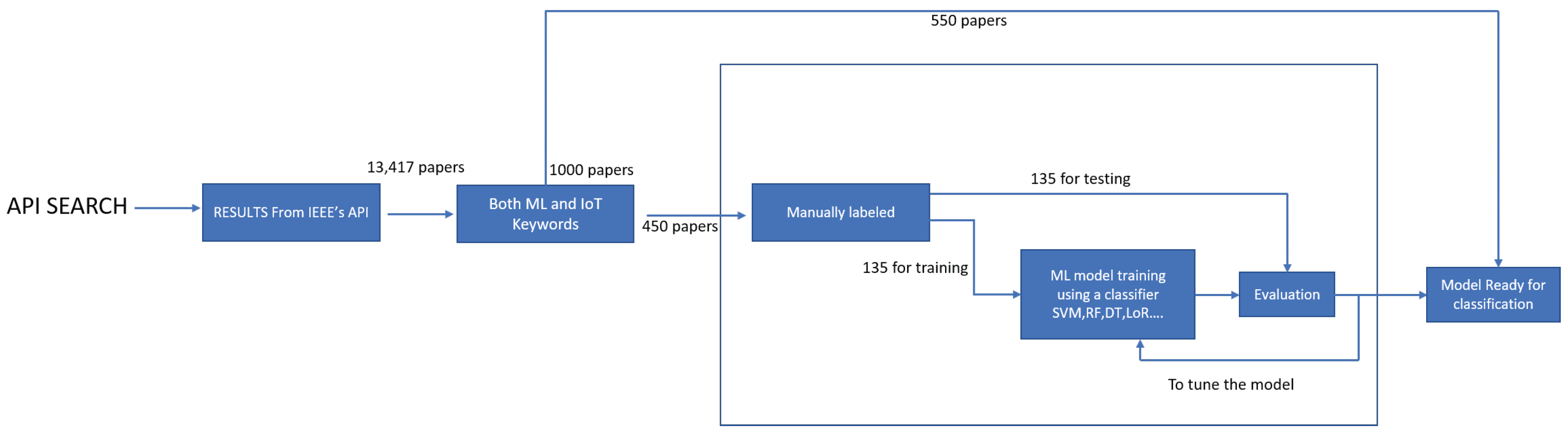

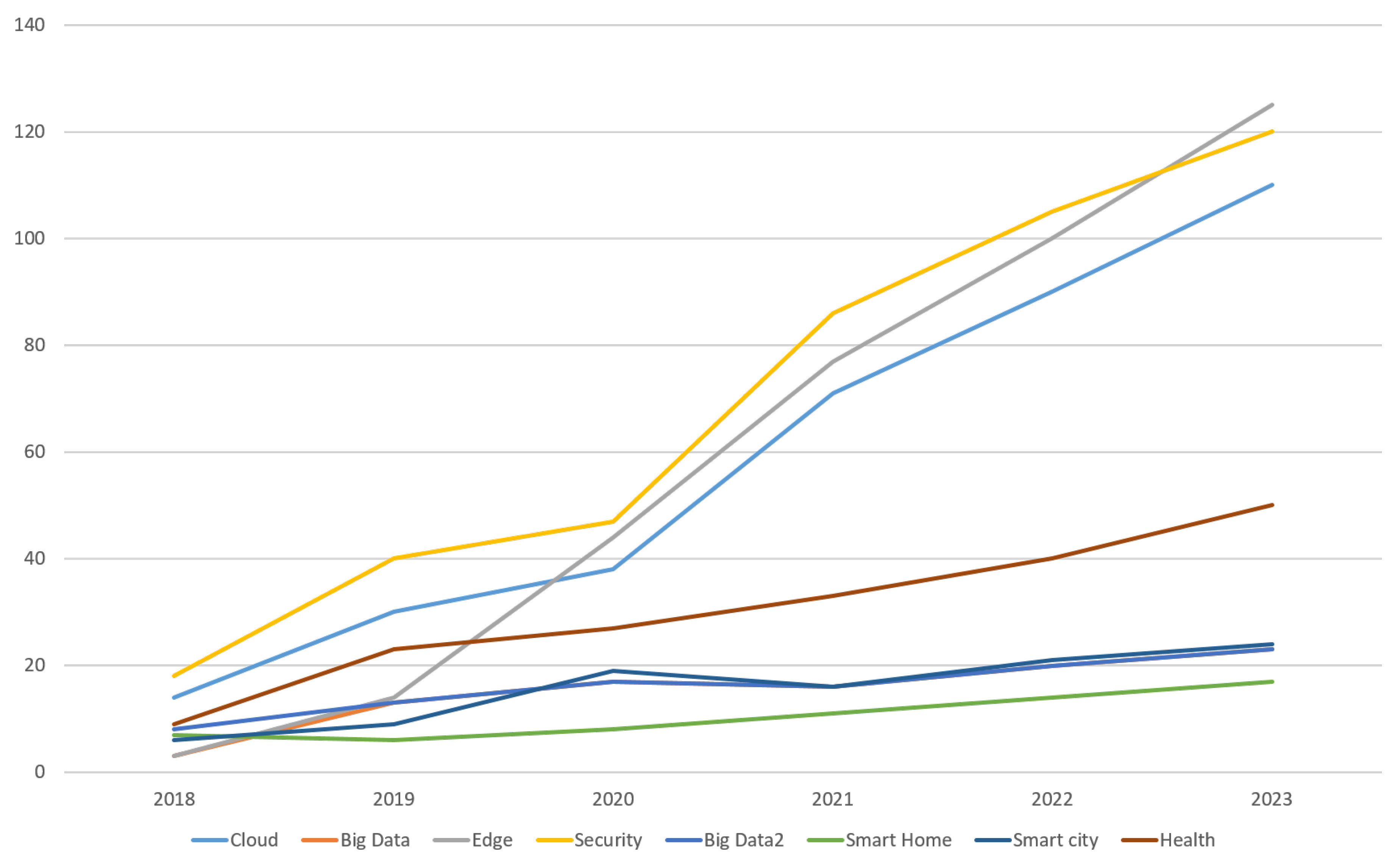

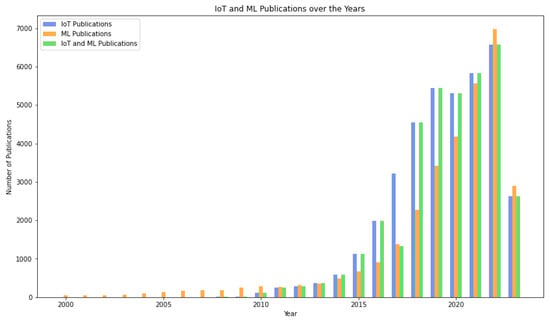

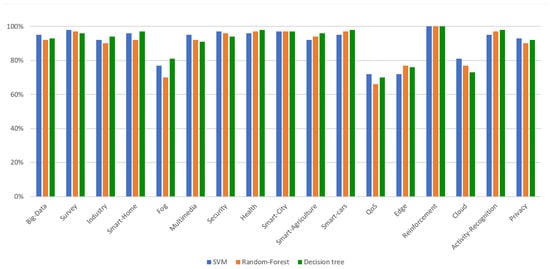

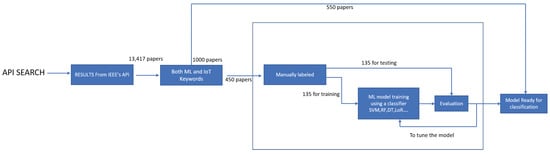

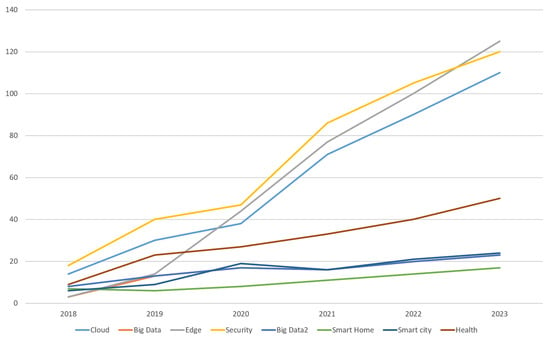

Internet of Things (IoT) devices often operate with limited resources while interacting with users and their environment, generating a wealth of data. Machine learning models interpret such sensor data, enabling accurate predictions and informed decisions. However, the sheer volume of data from billions of devices can overwhelm networks, making traditional cloud data processing inefficient for IoT applications. This paper presents a comprehensive survey of recent advances in models, architectures, hardware, and design requirements for deploying machine learning on low-resource devices at the edge and in cloud networks. Prominent IoT devices tailored to integrate edge intelligence include Raspberry Pi, NVIDIA’s Jetson, Arduino Nano 33 BLE Sense, STM32 Microcontrollers, SparkFun Edge, Google Coral Dev Board, and Beaglebone AI. These devices are boosted with custom AI frameworks, such as TensorFlow Lite, OpenEI, Core ML, Caffe2, and MXNet, to empower ML and DL tasks (e.g., object detection and gesture recognition). Both traditional machine learning (e.g., random forest, logistic regression) and deep learning methods (e.g., ResNet-50, YOLOv4, LSTM) are deployed on devices, distributed edge, and distributed cloud computing. Moreover, we analyzed 1000 recent publications on “ML in IoT” from IEEE Xplore using support vector machine, random forest, and decision tree classifiers to identify emerging topics and application domains. Hot topics included big data, cloud, edge, multimedia, security, privacy, QoS, and activity recognition, while critical domains included industry, healthcare, agriculture, transportation, smart homes and cities, and assisted living. 
The major challenges hindering the implementation of edge machine learning include encrypting sensitive user data for security and privacy on edge devices, efficiently managing resources of edge nodes through distributed learning architectures, and balancing the energy limitations of edge devices and the energy demands of machine learning.

1. Introduction

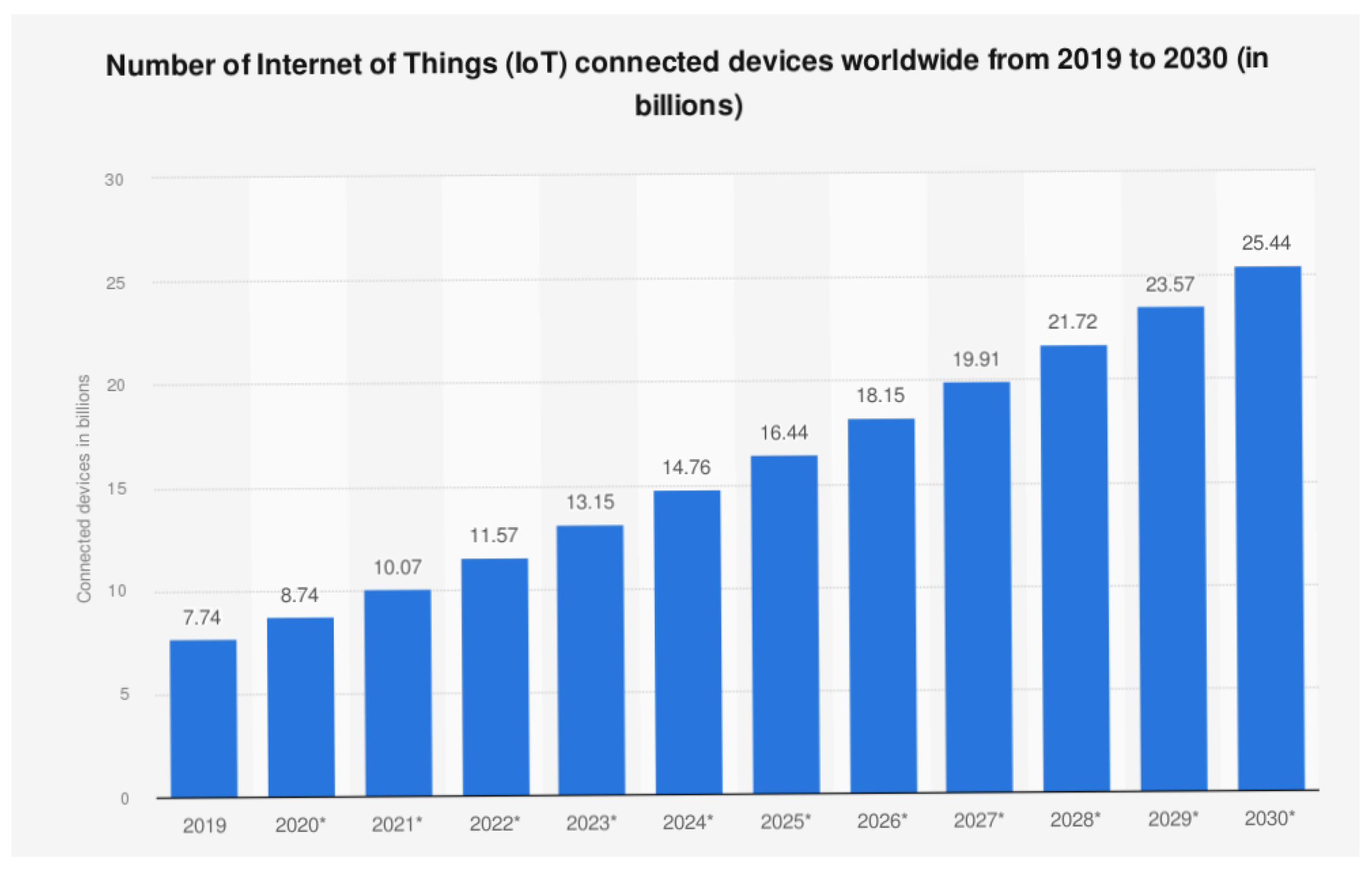

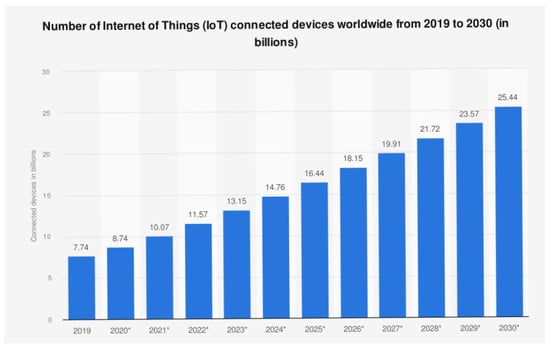

Over the past ten years, interest in wireless communication technology has grown, and the number of IoT devices has risen significantly. More than 26 billion devices are forecast to be connected to the Internet by 2030 [1], as depicted in Figure 1. These devices generate a large volume of data every second, yet they have limited computing power and small memory capacities, and they lack the self-sufficient intelligence to process raw data locally and make independent decisions. Resource-constrained IoT devices include sensors, smart fridges, and smart lights.

Figure 1.

The Growth of IoT Devices between 2019 and 2030.

Feeding data into a machine learning (ML) system is among the most effective methods for extracting information and making decisions from IoT devices. Unfortunately, the processing capacity of these devices hinders the deployment of ML algorithms. Traditionally, the solution has been to offload all data to a centralized cloud system for further processing. However, this approach often leads to high latency, bandwidth saturation, and user data privacy concerns, as the data must be transferred and stored in the cloud indefinitely. Moreover, with the constant development of the IoT industry and the massive amount of data collected by each device, offloading all data to the cloud is becoming increasingly challenging (Figure 1). To address these issues, various solutions have been proposed. One effective solution is to process data as close as possible to its source and only transmit essential data to remote servers for further processing. This approach, known as edge computing or edge intelligence, offers several advantages.

Edge intelligence (EI) proposes relocating data processing to the edge of the IoT network, which includes gateways, embedded devices, and edge servers located near where the data is collected rather than at a distant location. This is achieved by placing computing devices near the data-generating devices, spreading computation across the network’s layers. For example, the IoT gateway may preprocess the data before transmitting the intermediate results to the cloud, which completes the rest of the processing. Integrating ML capabilities into edge computing could also reduce data dimensionality: preprocessing executed at the edge node extracts the important information, and only that information is transmitted to the cloud for advanced analysis, decreasing bandwidth requirements and the workload of cloud systems. By processing data locally, the system not only saves bandwidth but also helps preserve privacy. A similar term is fog computing, which refers to an infrastructure where the cloud is brought closer to IoT devices. This system reduces delays and improves security by handling computations closer to the network’s edge.
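The gateway preprocessing described above can be illustrated with a minimal sketch. The sensor values, the window length, and the `summarize_window` helper are assumptions made for illustration only; the sketch simply compares the size of a raw payload with the size of a summary that an edge node might forward instead.

```python
import json
from statistics import mean

def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }

# Hypothetical temperature samples buffered by a gateway during one window.
raw_window = [round(21.0 + 0.01 * i, 2) for i in range(100)]

summary = summarize_window(raw_window)

# Compare the payload the gateway would send upstream in each case.
raw_bytes = len(json.dumps(raw_window).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))
print(f"raw payload: {raw_bytes} B, summary payload: {summary_bytes} B")
```

In a real deployment the summary would be chosen to match what the cloud-side analysis needs; simple statistics are used here only to keep the example short.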

The primary distinction between fog and edge computing comes down to where data processing occurs. Edge computing handles data directly on sensor-equipped devices or on nearby gateway devices. In the fog model, data processing occurs a bit farther from the edge, usually on devices linked through a local area network (LAN). Bringing the intelligence closer to the source can be implemented in edge or fog nodes. We delve deeper into this concept in Sections 3 and 4. Another solution is running ML algorithms on the end devices themselves (embedded intelligence), which is mostly referred to as on-device learning. This concept can introduce substantial improvements to the IoT infrastructure. Applying ML directly on the device enables real-time decision-making through data analysis, behavior personalized for each consumer, congestion prevention, and, above all, preservation of the confidentiality of the user’s data. Several research efforts have been made to successfully deploy ML on the IoT device (closer to the data source). These smart devices will be able to process sensed data, analyze the problem, and act directly. To achieve this, many devices have been developed with improved GPUs and CPUs capable of handling the complexity of machine learning and deep learning (DL).

Additionally, lighter versions of frameworks and platforms that can support on-device machine learning have been developed. Section 4 of this paper delves deeper into this topic. The final solution examined in this paper involves integrating computation across the layers of the IoT network. The deployment of complex ML algorithms on the device itself is still limited by computing and memory constraints. Therefore, it is recommended to distribute the data processing among IoT devices, gateways, fog, and cloud computing. For instance, the IoT device can preprocess the data using lightweight embedded models and only offload the necessary data to the cloud for additional processing. This concept focuses on deploying ML models across all layers of the network, leveraging the hierarchical structure of the IoT system. Each layer, from embedded devices to gateways to edge servers to cloud servers, is capable of performing a certain amount of computation, with the capabilities increasing as we move up the hierarchy. This distributed approach enables the efficient processing of data, from the edge to the cloud, by allocating tasks to the most suitable computational entity at each level. Model partitioning is another solution in deep learning, in which certain layers are processed on the device, while others are processed on an edge server or within the cloud. By exploiting the computational cycles of other edge devices, this approach may reduce latency. After the first layers of the deep neural network (DNN) model have been computed, the intermediate results are often considerably smaller than the raw input, which allows faster transmission to an edge server. We discuss this aspect further later in the paper.
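Model partitioning, as described above, can be sketched with a toy fully connected network. The layer sizes, the random weights, and the split point `SPLIT` are illustrative assumptions rather than values from any cited work; the sketch only shows that running the first layers on the device and the rest on a server gives the same result as running the whole model in one place, while fewer values cross the network.

```python
import random

def dense_relu(x, weights, bias):
    """One fully connected layer followed by a ReLU activation."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]

def make_layer(n_in, n_out, rng):
    """Create random weights and biases for a layer (illustrative only)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    bias = [rng.uniform(-0.1, 0.1) for _ in range(n_out)]
    return weights, bias

def run_layers(x, layers):
    """Apply a sequence of layers to the input vector."""
    for weights, bias in layers:
        x = dense_relu(x, weights, bias)
    return x

rng = random.Random(0)
sizes = [64, 16, 8, 4]  # hypothetical network: 64 inputs -> 16 -> 8 -> 4 outputs
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

SPLIT = 1  # layers[:SPLIT] run on the device, layers[SPLIT:] on the edge server

sensor_input = [rng.random() for _ in range(sizes[0])]

# Device side: compute the first layer(s) and ship the intermediate activations.
intermediate = run_layers(sensor_input, layers[:SPLIT])
# Server side: finish the forward pass from the received activations.
partitioned_output = run_layers(intermediate, layers[SPLIT:])

# Reference: the same model executed entirely in one place.
full_output = run_layers(sensor_input, layers)

print(f"values sent when offloading the raw input: {len(sensor_input)}")
print(f"values sent after splitting at layer {SPLIT}: {len(intermediate)}")
```

Choosing the split point in practice involves a trade-off between the device's compute budget and the size of the activations at each layer; this sketch fixes the split by hand.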

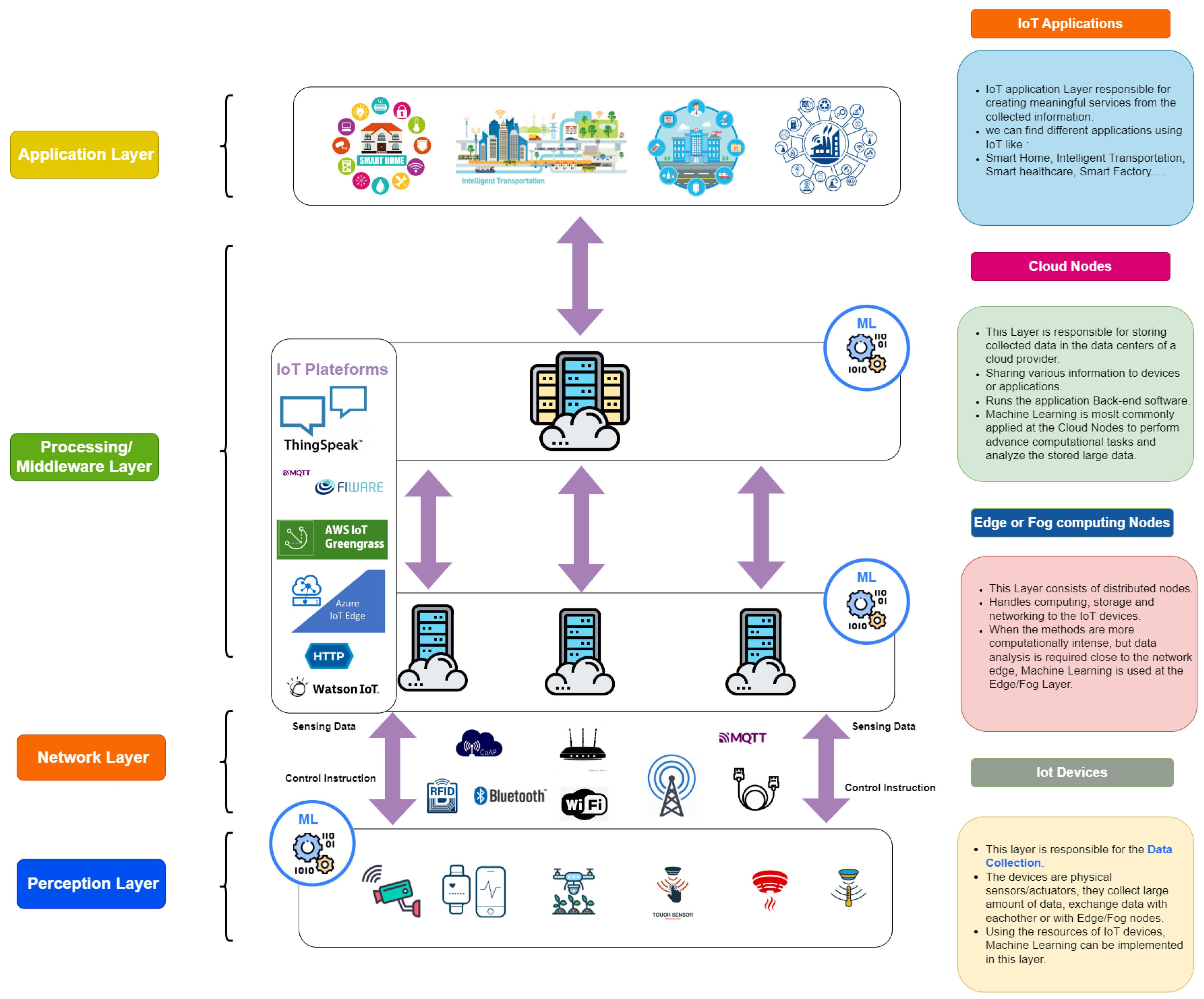

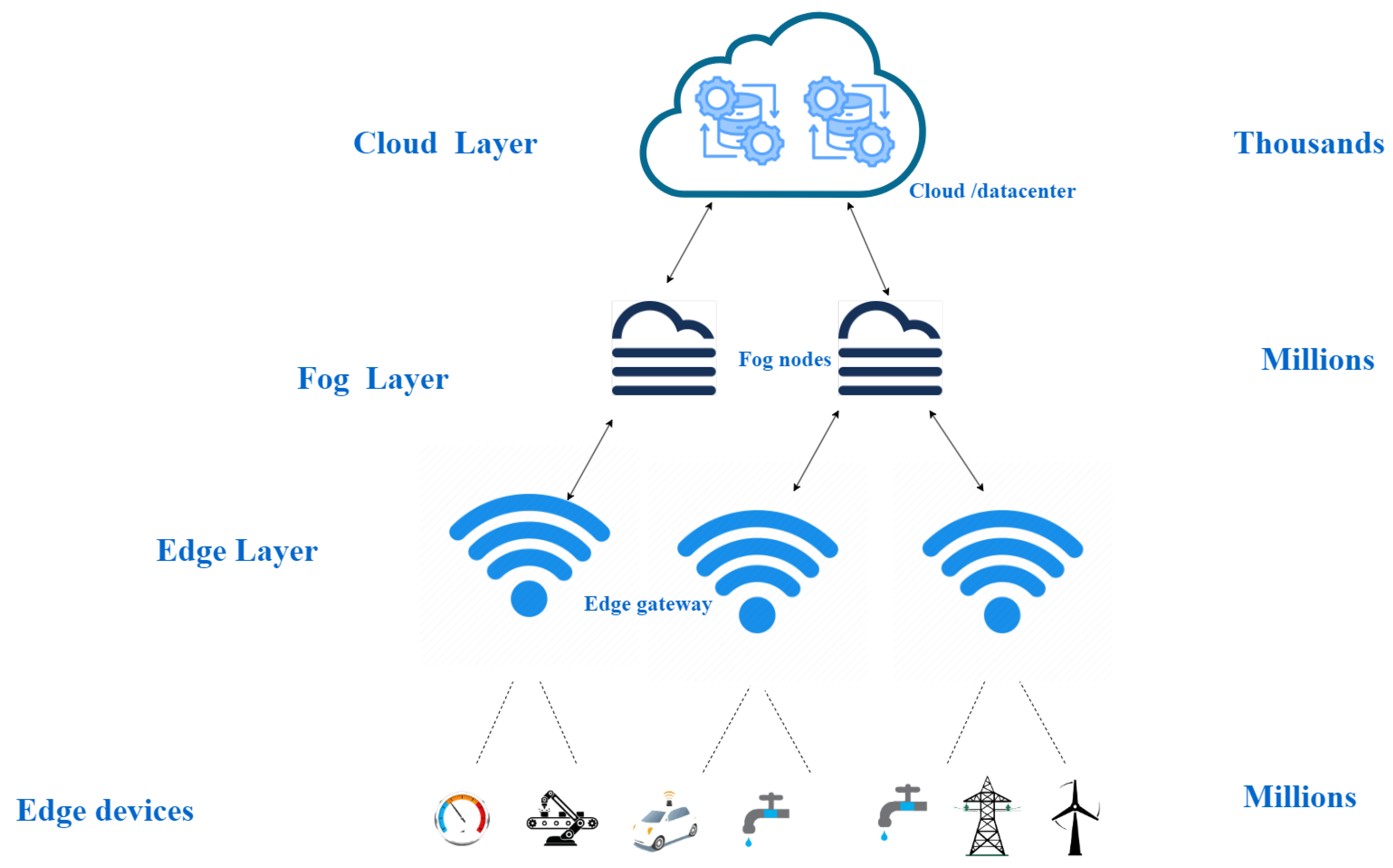

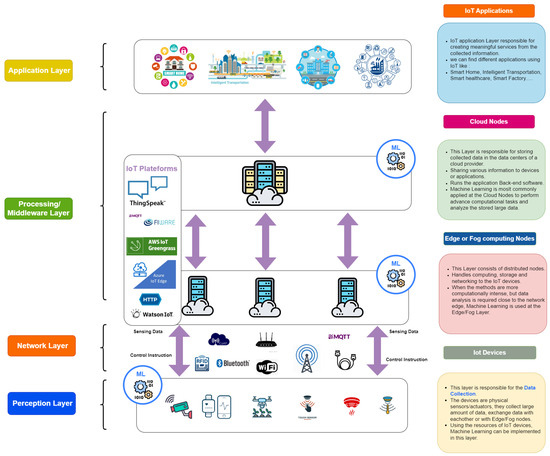

Although there is still much progress to be made in standardizing IoT architecture and technologies, Figure 2 identifies the major components of the architecture as commonly used across a variety of applications. The key components are divided into blocks in Figure 2. Each block represents one element, and arrows connect the blocks to show how the elements interact. Text blocks with bulleted lists give the essential parts of each component. The IoT architecture includes:

Figure 2.

Essential Components of an IoT Architecture.

- Perception or Sensing Layer: the perception layer includes the physical components, such as IoT devices, sensors, and actuators. It is in charge of identifying objects and gathering information from them. Different types of sensors are used depending on the application [2]. ML can be implemented in this layer, which we identify as embedded intelligence. It is further explained in the next section.

- Network Layer: the network or transmission layer is in charge of routing and transmitting the data collected from the physical objects to the upper layers. The communication can be wired or wireless. The communication protocols commonly used in IoT include Wi-Fi, Bluetooth, IEEE 802.15.4, Z-Wave, LTE-Advanced, RFID, Near Field Communication (NFC), and ultra-wideband (UWB).

- Processing Layer: the middleware or processing layer collects and processes large amounts of data from the network layer. It possesses the capability to administer and deliver a range of services to the underlying layers. Among the technologies used in this layer are databases, cloud computing, and big data processing modules. The computation load could be divided between the fog/edge and cloud servers.

- Application Layer: the application layer is in charge of providing users with services designed for specific applications. It defines a variety of IoT applications, like Smart Home, Smart City, and Smart Health.

Furthermore, Figure 2 illustrates the significance of machine learning within the IoT infrastructure. ML techniques are applicable at various points, including IoT nodes, edge or fog servers, and cloud servers, adapted to suit the requirements of the specific application.

The integration of artificial intelligence into edge computing applications has been explored by numerous review papers. Grzesik et al. [3] provide a comprehensive survey on the integration of machine learning and edge computing within edge devices. The authors examine the benefits and challenges of combining AI in these devices. However, they do not explore the opportunities of applying AI to different architectures of the IoT. Chang et al. [4] investigate the combination of IoT and AI through the use of both edge computing and cloud resources. They delve into seven distinct IoT application scenarios, concentrating on techniques that facilitate the optimal deployment of AI models. Nonetheless, they overlook AI techniques and the potential benefits and applications of AI in edge-based scenarios, and they do not explore the opportunities of distributing intelligence across the whole IoT network. In contrast to these reviews, our study examines the use of machine learning in various IoT fields, discusses the integration of intelligence into multiple layers of the IoT network, explores recent developments in this field and future work, and addresses related issues. Additionally, we employ a structured method based on ML classification techniques to analyze all publications on ML in IoT that are available via an API. The primary contributions of this paper are outlined as follows:

- We conduct a thorough investigation into the current state of the art regarding ML in the IoT. It involves categorizing ML approaches based on their deployment within the IoT architecture, their application domains, and the developed frameworks and hardware to facilitate ML integration.

- We deliver an in-depth analysis of diverse ML techniques, their characteristics, suitability for IoT, and innovative solutions to overcome associated challenges.

- We explore the opportunities and challenges of seamlessly integrating IoT devices with embedded intelligence while addressing the distribution of the computational load across the cloud, fog, and edge layers.

- We employ a machine learning approach to analyze publications concerning ML in the IoT in various applications, training precise classifiers to categorize publications based on key phrases found in their titles and abstracts. The insights provided enable other researchers to replicate the analysis with updated publications in the future.

- Based on a comprehensive review of IoT and machine learning literature, we highlight key challenges and promising research directions for optimizing machine learning on IoT systems, with a focus on edge computing as the primary paradigm.

The rest of this paper is structured as follows: Section 2 presents ML techniques, focusing on the requirements of ML algorithms in IoT. Section 3 reviews the fundamentals of edge computing. In Section 4, we explain the requirements of integrating ML in the IoT network, focusing on different possible architectures, including available frameworks and hardware to facilitate the deployment of ML models. We also review cutting-edge research on ML applications at the edge for various use cases, positioning them on the cloud-to-edge spectrum. In Section 5, we investigate the open challenges encountered in this field. Then, in Section 6, we analyze ML and IoT articles, classify, and label them using an ML approach. Finally, Section 7 outlines the future directions of machine learning at the edge, while Section 8 concludes this review.

2. Machine Learning Techniques

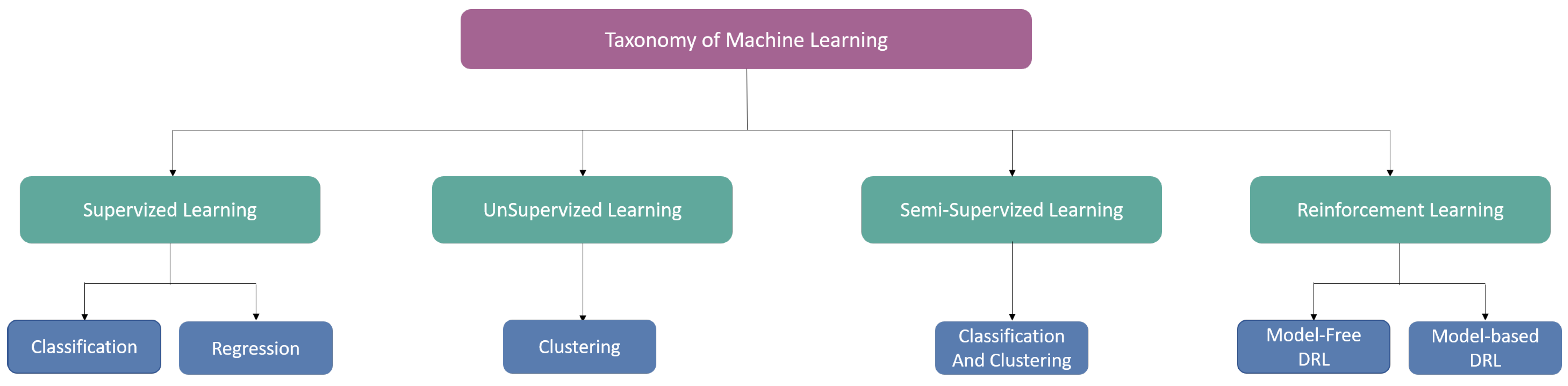

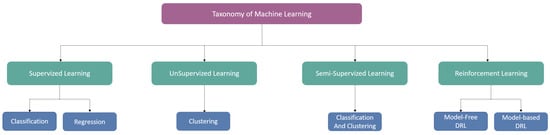

Machine learning (ML) is a subset of artificial intelligence (AI) focused on improving performance through automatic learning from experience. ML relies on training machines with large datasets to make better decisions. As defined by Mitchell, ML involves learning from experience E to improve performance measure P on tasks T [5]. ML algorithms can be classified into four major categories: supervised, unsupervised, semi-supervised, and reinforcement learning. Figure 3 demonstrates a detailed taxonomy of ML algorithms.

Figure 3.

Taxonomy of Machine Learning Algorithms.

2.1. Supervised Learning

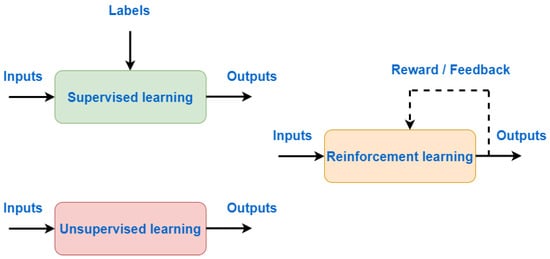

Supervised learning is one of the most widely used types of learning; the majority of ML algorithms are supervised. Supervised machine learning uses labeled datasets to train algorithms, so both the input and the expected output are known in advance. The goal is to train a predictive model on this data that can accurately anticipate responses when exposed to unseen data [6,7,8]. Supervised machine learning covers classification and regression techniques. Classification is used for estimating discrete responses, while regression is employed when dealing with continuous labels. Some of the most popular supervised machine learning algorithms are support vector machine (SVM) and random forest (RF), which are described in the following paragraphs. They are popular because they can be implemented and executed in resource-constrained environments and because they can be distributed across the cloud-to-things network.

SVM is a powerful but simple ML algorithm used in classification and regression [9]. It uses a hyperplane to separate data points into distinct classes, aiming to maximize the margin from the closest data points, referred to as support vectors. The margin is crucial, as a larger margin generally indicates better generalization to unseen data. When a new data point is introduced, SVM predicts its label based on its position relative to the hyperplane. While SVM inherently works with linearly separable data, it can handle nonlinear datasets by transforming them into higher-dimensional spaces. This transformation is achieved through the kernel trick, which aids in finding the optimal hyperplane without the need for explicit mapping to higher dimensions, thereby reducing computational complexity. Several solutions have been developed to deploy SVM in resource-constrained embedded environments. For example, the authors of [10] proposed a real-time tomato classification system using a model that combines convolutional neural networks (CNN) and SVM. The suggested model includes two parts: the EfficientNetB0 CNN model extracts features, while SVM handles the classification task. Notably, to optimize model inference on the embedded platform, they employed TensorRT and performed quantization to lower the model’s computational complexity while maintaining accuracy. The hybrid model was deployed on the embedded single-board NVIDIA Jetson TX1, achieving an average accuracy of 93.54% for classifying static tomato images. During real-time implementation, it achieved an average inference speed of 15.6 frames per second, showcasing its capability for rapid decision-making. While the paper demonstrates significant advancements in real-time tomato classification using a hybrid CNN-SVM model deployed on an embedded system, issues such as ensuring data privacy and edge security continue to raise significant concerns. Additionally, optimizing algorithms and hardware to minimize energy consumption is essential for prolonged operation in resource-constrained environments.
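The kernel trick mentioned above can be made concrete with a small numerical check. The sketch below assumes a degree-2 homogeneous polynomial kernel and two arbitrary 2-D points, neither of which comes from the cited studies; it shows that evaluating the kernel on the original inputs gives the same value as a dot product in the explicitly mapped, higher-dimensional space.

```python
import math

def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def poly_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel: k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2

def explicit_map(x):
    """Explicit 3-D feature map for 2-D inputs that corresponds to poly_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

# Two arbitrary example points (illustrative values).
x = (1.0, 2.0)
z = (3.0, -1.0)

via_kernel = poly_kernel(x, z)                          # computed in the 2-D input space
via_mapping = dot(explicit_map(x), explicit_map(z))     # computed in the 3-D feature space

print(via_kernel, via_mapping)
```

The kernel evaluation never builds the higher-dimensional vectors, which is where the computational saving comes from when the mapped space is large.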

RF is a simple yet effective algorithm. It tackles both regression and classification tasks by constructing a “forest” of decision trees through randomization, often employing the bagging algorithm [11,12]. In regression, RF averages the outputs of multiple trees, while in classification, it selects the class that receives the most votes. RF stands out for its accuracy, robustness, and capacity to handle large datasets with a reduced tendency to overfit. However, simply applying existing ML algorithms may not ensure optimal performance. Several studies have introduced solutions to address this challenge, such as FS-GAN, a federated self-supervised learning architecture proposed to facilitate automatic traffic analysis and synthesis across diverse datasets [13]. By leveraging federated learning, FS-GAN coordinates local model training processes across different datasets, enabling decentralized systems like edge computing networks to train models on multiple devices without transferring data to a central server. This approach addresses privacy concerns, minimizes communication overhead, and facilitates efficient model training in distributed environments.
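The aggregation step of a random forest described above can be sketched as follows. The per-tree predictions are hypothetical values standing in for the outputs of already trained trees, and `bootstrap_sample` illustrates only the resampling part of bagging, not tree training itself.

```python
import random
from collections import Counter
from statistics import mean

def bootstrap_sample(dataset, rng):
    """Draw a sample of the same size as the dataset, with replacement (bagging)."""
    return [rng.choice(dataset) for _ in dataset]

def forest_classify(tree_votes):
    """Return the class predicted by the largest number of trees."""
    return Counter(tree_votes).most_common(1)[0][0]

def forest_regress(tree_outputs):
    """Return the average of the per-tree numeric predictions."""
    return mean(tree_outputs)

# Each tree would be trained on its own bootstrap sample of the training data.
rng = random.Random(0)
training_rows = list(range(10))  # placeholder row identifiers
sample_for_one_tree = bootstrap_sample(training_rows, rng)

# Hypothetical predictions from five trained trees for a single input.
class_votes = ["fault", "normal", "fault", "fault", "normal"]
regression_outputs = [20.0, 22.0, 21.0, 19.0, 23.0]

predicted_class = forest_classify(class_votes)
predicted_value = forest_regress(regression_outputs)
print(predicted_class, predicted_value)
```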

2.2. Unsupervised Learning

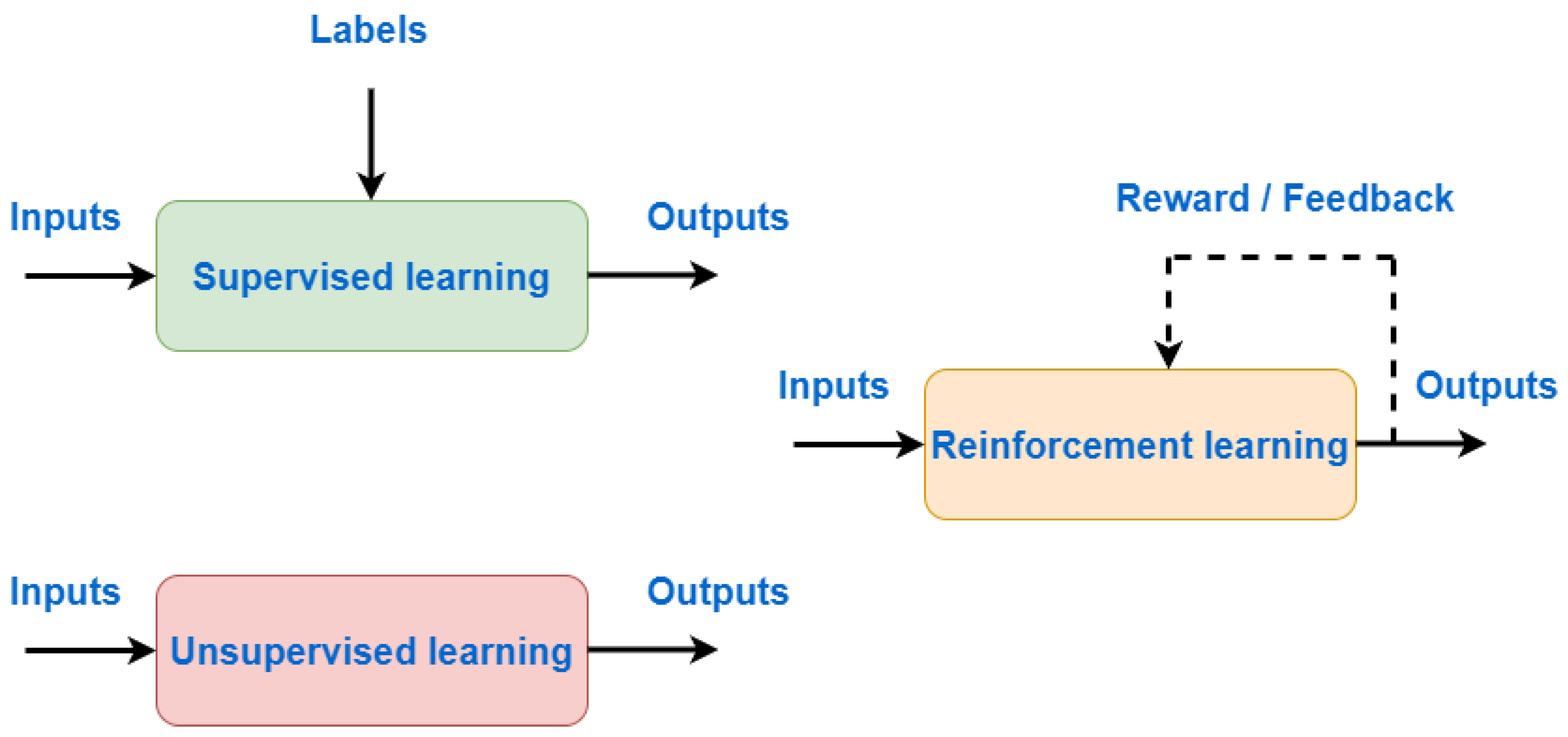

Unsupervised learning analyses datasets without predefined outputs, focusing only on the provided input. Its objective is to identify patterns and structures within unlabeled data. Unlike supervised learning, no outcomes are provided; the system observes only input features and aims to cluster data accordingly [14,15]. Clustering is among the most widely used unsupervised learning methods. It splits the data into groups according to similarity: data points with similar features are placed in the same group, while dissimilar points are placed in other groups. Clustering is mostly used in applications such as video-on-demand, marketing, and smart grids. Figure 4 illustrates the concepts of supervised, unsupervised, and reinforcement learning.

Figure 4.

Supervised/Unsupervised/Reinforcement Learning.
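As an illustration of the clustering idea in this subsection, the following minimal sketch implements k-means (Lloyd's algorithm), one common clustering method that the text does not name explicitly. The sample points and the choice of the first k points as initial centroids are assumptions made to keep the example deterministic.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def k_means(points, k, iterations=10):
    """Plain Lloyd's algorithm; the first k points serve as initial centroids."""
    centroids = [tuple(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments

# Hypothetical 2-D feature vectors forming two visible groups.
points = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (0.9, 1.1), (8.2, 7.9), (7.8, 8.1)]

centroids, assignments = k_means(points, k=2)
print(assignments)
print(centroids)
```

No labels are supplied at any point: the grouping emerges only from the distances between the input features.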

2.3. Semi-Supervised Learning

Semi-supervised learning uses both labeled and unlabeled data, optimizing efficiency and accuracy. Labeled data is typically scarce and costly to obtain, requiring skilled human annotators. In contrast, unlabeled data is easier to gather. Leveraging both types, semi-supervised learning offers a compelling solution for enhancing algorithms with less human effort while achieving higher accuracy [16,17]. These models enhance unsupervised learning by leveraging small amounts of labeled data, offering simplicity and cost-efficiency compared to supervised learning. Generative models, graph-based models [18], mixture models and expectation-maximization (EM), and semi-supervised support vector machines [16] are some of the algorithms used in semi-supervised learning.

2.4. Reinforcement Learning

Reinforcement learning relies on interaction with the environment, where machines learn through trial and error and improve their actions based on rewards or penalties [7]. Notably, Q-learning stands out as a prominent algorithm in this field [19,20]. Unlike other methods, reinforcement learning does not rely on a pre-collected dataset but rather on experience gathered through interaction. It is widely applied in autonomous systems such as race cars, robotic navigation, and AI gaming, forming a reward-based learning system. Various researchers have devised innovative solutions to deploy reinforcement-based models on the edge [21]. One such study introduces a distributed edge intelligence sharing scheme aimed at improving learning efficiency and service quality in edge intelligence systems. This scheme allows edge nodes to enhance learning performance by exchanging their intelligence, tackling challenges like repetitive model training and overfitting caused by limited data samples. The approach is formulated as a multi-agent Markov decision process, and the scheme employs an ensemble deep reinforcement learning algorithm to optimize intelligence exchange among edge nodes [22].
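The reward-driven learning loop behind Q-learning can be sketched on a toy problem. The five-state line environment, the `step` function, and the hyperparameters below are illustrative assumptions and are unrelated to the edge intelligence sharing scheme cited above; the sketch only shows the tabular Q-learning update applied to experience gathered by interaction.

```python
import random

N_STATES = 5            # states 0..4 on a line; state 4 is the goal
ACTIONS = (0, 1)        # 0 = move left, 1 = move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (assumed values)

def step(state, action):
    """Hypothetical toy environment: reward 1 only when the goal is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

rng = random.Random(0)
q_table = [[0.0, 0.0] for _ in range(N_STATES)]

for _ in range(500):
    state, done = 0, False
    while not done:
        # Q-learning is off-policy, so a purely random behaviour policy suffices here.
        action = rng.choice(ACTIONS)
        next_state, reward, done = step(state, action)
        best_next = 0.0 if done else max(q_table[next_state])
        # Temporal-difference update toward reward + discounted best future value.
        q_table[state][action] += ALPHA * (reward + GAMMA * best_next - q_table[state][action])
        state = next_state

# Greedy policy derived from the learned table for the non-terminal states.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

A practical agent would usually balance exploration and exploitation (for example with an epsilon-greedy policy) instead of acting purely at random.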

2.5. Deep Learning

Deep learning approaches are well-suited for handling vast quantities of data and computationally intensive tasks such as image identification, speech recognition, and speech synthesis. As the demand for computing power continues to rise, powerful GPUs are increasingly being used to execute deep learning tasks. Deep learning builds on artificial neural networks (ANNs) to create deep neural networks (DNNs). The term “deep” refers to the architecture of the network, which is characterized by numerous hidden layers [23]. Each deep learning model is made up of several layers. The input data is processed sequentially through the layers, with matrix multiplications performed at each layer. A layer’s output becomes the following layer’s input. The output of the final layer can be a feature representation or a classification result. When a model has multiple layers, it is called a deep neural network [24].

In deep learning, adding layers generally allows the network to extract more, and more abstract, features. Unlike traditional methods, deep learning techniques determine the features automatically, so no feature calculation or extraction is required before using the method. With the advancement of DL, multiple network structures have been developed. CNN is a special case of the DNN family in which the matrix multiplications include convolutional filter operations [25]. CNN classifiers are commonly used for image classification, computer vision, and video analysis. Recurrent neural networks (RNN) are DNNs designed specifically for time series prediction. They include loop connections within their layers to retain state information and facilitate predictions on sequential inputs, which makes them suitable for problems such as machine translation and speech recognition on audio data [26]. LSTM (long short-term memory) is a variant of RNN [27]. An LSTM unit comprises a cell, an input gate, an output gate, and a forget gate. These gates control the flow of information into and out of the cell, enabling the cell to maintain relevant information over time. The forget gate, in particular, determines which information is retained and which is discarded. Designing a good DNN model for a specific application is difficult due to the large number of hyperparameters involved. During the design process, decisions often involve compromises on system metrics. For instance, a DNN model with high accuracy typically needs greater memory capacity to store all the model parameters compared to a model with lower accuracy. The choice of success metric depends on the application domain where DL is implemented. Islam et al. developed a custom CNN model for detecting tomato diseases, achieving an impressive 99% accuracy. Their model outperformed other CNNs in terms of training time and computational cost. Additionally, they demonstrated the feasibility of running their model on drone devices. However, further analysis and experiments are required to effectively deploy their model on edge devices [28].
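The role of the LSTM gates described above can be illustrated with a single-unit cell operating on scalars. The parameter names and values below are hypothetical and chosen only to make the effect of the forget gate visible; real LSTM layers use weight matrices learned during training.

```python
import math

def sigmoid(v):
    """Logistic function used by the gates to produce values between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-v))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell with scalar input and state."""
    # Each gate sees the current input and the previous hidden state.
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate content
    c = f * c_prev + i * g   # forget part of the old cell state, add new content
    h = o * math.tanh(c)     # expose a gated view of the cell state
    return h, c

names = ("wf", "uf", "bf", "wi", "ui", "bi", "wo", "uo", "bo", "wg", "ug", "bg")
base = {name: 0.0 for name in names}

# Hypothetical settings: the input gate is closed in both cases (bi = -20),
# so only the forget gate decides what happens to the stored cell state.
keep_params = dict(base, bf=20.0, bi=-20.0)   # forget gate open: retain memory
drop_params = dict(base, bf=-20.0, bi=-20.0)  # forget gate closed: discard memory

_, c_keep = lstm_step(0.0, 0.0, 1.0, keep_params)
_, c_drop = lstm_step(0.0, 0.0, 1.0, drop_params)
print(c_keep, c_drop)
```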

3. Edge Computing for IoT

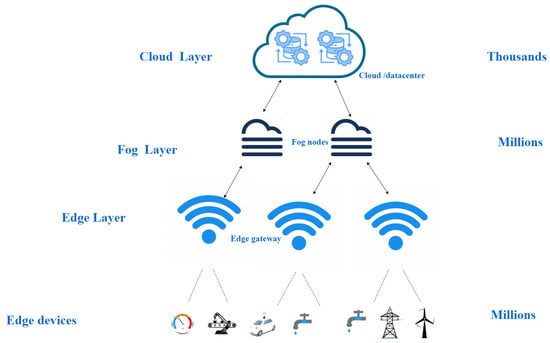

Current computing and storage paradigms include cloud, fog, and edge computing. They form another important part of the Internet of Things architecture: the computing and storage layer that handles large amounts of data. Integrating these paradigms with the IoT yields a reliable framework for data gathering, storage, computation, and analytics.

3.1. Cloud Computing

Cloud computing is a centralized framework that provides on-demand computing resources such as servers, networks, and storage [29]. To be usable, the data must be transmitted to data centers for additional processing and analysis, as illustrated in Figure 5. The cloud architecture is considered to have high latency and can congest the network because of the heavy load it must handle. Since IoT data volumes are high, relying on cloud computing alone is no longer a viable option. Cloud computing can be divided into several service models:

Figure 5.

Fog and Edge Computing Layers.

- Software as a Service (SaaS): Applications are hosted and managed by a service provider on remote computers in the cloud and are delivered to users via the Internet.

- Platform as a Service (PaaS): Offers a cloud environment with all the resources necessary to develop and build ready-to-use applications, without the expense and hassle of purchasing and managing hardware or software.

- Infrastructure as a Service (IaaS): Offers computing resources to businesses, from servers to storage and networks. It is a pay-as-you-go, Internet-based service model.

In the last few decades, the IoT has become increasingly prevalent, and the number of connected devices has grown exponentially. This has resulted in unprecedented volumes of data being generated by these devices.

3.2. Fog Computing

Fog computing is considered a shift from a centralized paradigm (cloud computing, CC) to a more decentralized system. It was introduced by Cisco in 2012 [30] and refers to a virtualized platform that offers computation, storage, and communication services between edge devices and standard cloud computing data centers. The platform is often, though not exclusively, located at the network’s edge. Fog architecture consists of fog nodes (FNs) that can be placed anywhere between the end devices and the cloud. An FN can be any of various devices, including switches, routers, servers, access points, IoT gateways, video surveillance cameras, or any other device equipped with processing capabilities, storage capacity, and network connectivity. This enables communication between the fog layer and end devices through a range of protocol layers and non-IP-based access technologies. The abstraction within the fog layer hides the complexity of the various FNs, offering a suite of services such as data processing, storage, resource allocation, and security. These functionalities are leveraged by a Service Orchestration Layer to efficiently distribute resources tailored to meet the distinct requirements of the end devices [31]. Integrating a fog layer into IoT-based systems to lower latency, conserve energy, and enhance real-time responsiveness has been the focus of various research studies. In the study by Singh et al. [32], a framework was introduced to track student stress and generate real-time alerts for stress forecasting. Data processing and cleaning were performed locally in the fog layer to minimize the workload in the cloud, followed by uploading the data to the cloud layer for additional processing. The authors used multinomial Naive Bayes techniques to estimate emotion scores and categorize stress events. Additionally, they employed bidirectional Long Short-Term Memory (BiLSTM) for speech texture analysis and the Visual Geometry Group network (VGG16) for facial expression analysis.

3.3. Edge Computing

Edge computing, which was introduced prior to cloud computing, has gained substantial momentum, especially with the rise of the IoT. It enables IoT data computation and analysis at the network’s edge, near the data source. Within IoT networks, the primary goal is to address critical needs such as security, privacy, low latency, and high bandwidth. Edge computing lowers the amount of data transmitted between nodes, resulting in cost savings and reduced network bandwidth consumption. Furthermore, edge computing serves as a complement to existing cloud computing platforms, enabling efficient data processing. Depending on the IoT application, direct data processing on IoT devices may be necessary, supplementing the centralized cloud infrastructure common in traditional setups. The concept of edge computing arises as processing capabilities are brought closer to end network elements. Edge computing and fog computing both involve processing data closer to where it is generated. Edge computing processes data directly on devices or within the local network, aiming to reduce latency and bandwidth usage by handling tasks locally before sending relevant information to centralized servers. Fog computing, on the other hand, extends this concept by deploying FNs at various points within the network, ranging from edge devices to traditional cloud data centers. This allows for a more distributed architecture, enabling efficient data processing and analysis across multiple network tiers. So, while edge computing is more localized, fog computing takes a broader approach, encompassing a wider range of network locations and resources. Edge intelligence extends the foundation of edge computing by directly integrating intelligent algorithms and models into edge devices. At the edge, it enables local data analysis, decision-making, and autonomous behavior. Edge intelligence enables real-time insights, reduced reliance on cloud resources, and improved autonomy in IoT environments by embedding machine learning and artificial intelligence capabilities on edge devices. It uses edge computing infrastructure to perform intelligent tasks and data processing, enhancing the overall capabilities of IoT networks. By bringing computational power and intelligence closer to the data source, the combination of edge computing and AI enables efficient and effective IoT deployments. It meets critical requirements like security, privacy, low latency, and high bandwidth while decreasing reliance on centralized cloud resources. This convergence enables smarter and more autonomous IoT systems, allowing devices at the network’s edge to perform advanced analytics, decision-making, and real-time responses.

4. Implementing Machine Learning at the Edge

ML at the edge requires several fundamental factors, including hardware, frameworks, model compression, and model selection. These elements have an impact on both the effectiveness and efficiency of edge-based AI applications. Hardware selection must strike a balance between computational power and energy efficiency while also taking into account specific use cases and resource constraints. In addition, model optimization techniques such as compression and selection are critical in adapting machine learning models for edge deployment.

4.1. IoT Hardware and Frameworks Employed in Edge Intelligence

The term IoT was coined in early 1999 by Kevin Ashton. It denotes an interconnection between physical-world objects, embedded intelligent devices, and the internet, which is how the expression Internet of Things came to be. The key concept of IoT is connecting our day-to-day devices (cellphones, cars, lamps, etc.) via the internet to facilitate our work [2]. The connected devices have multiple types of sensors attached to them, such as temperature and speed sensors, to monitor and capture information from the physical world. The data are then sent to the cloud to be viewed, analyzed, and saved. IoT plays a crucial role in measuring and exchanging data between the physical world and the virtual world. IoT devices must communicate with one another (the network or transmission layer in the 3-layer IoT architecture), and the communication can be short or long range. Wireless technologies like Bluetooth, ZigBee, and Wi-Fi are suitable for short-range communication, while mobile and wide-area networks like GSM, GPRS, 3G, 4G, LTE, WiMAX, LoRa, Sigfox, CAT M1, NB-IoT, and 5G are employed for long-range communication. Using an IoT platform such as Mainflux, Thingspeak, Thingsboard, DeviceHive, or Kaa to handle M2M communication, together with protocols including MQTT, AMQP, STOMP, CoAP, XMPP, and HTTP, has become common practice in IoT systems. However, with the rapid development of this new paradigm, our world is crowded with billions of smart IoT devices that generate a massive amount of data every day, which can congest the network. In the following section, we discuss the advances in IoT devices and frameworks that have made it possible to embed complex ML models in resource-constrained devices.

4.1.1. IoT Hardware in Edge Intelligence: Design and Selection

One of the main challenges of integrating intelligence into IoT devices has been the hardware’s limited resources and its limited ability to handle on-device machine learning inference or training. For this reason, several companies have developed dedicated hardware, weighing factors like accuracy, energy consumption, throughput, and cost. This section describes hardware designed to build, deploy, and support ML at the network edge. Achieving high performance with a machine learning model traditionally required powerful GPUs that can handle big data with low latency. This is no longer always the case, thanks to considerable progress in resource-constrained devices: small devices can now support ML and even deep learning models. Below, we introduce the devices most commonly used for deploying ML models at the edge.

The Raspberry Pi

Among the most commonly used devices for edge computing is the Raspberry Pi, a single-board computer designed by the Raspberry Pi Foundation [33]. It has been used to run machine learning inference without the need for additional hardware. The Raspberry Pi 3 Model B features a quad-core Cortex-A53 CPU at 1.2 GHz, a 400 MHz VideoCore IV GPU, and 1 GB of SDRAM. In comparison, the Raspberry Pi 4 Model B is an enhanced version, offering increased speed and power. It includes Gigabit Ethernet, built-in wireless networking, and Bluetooth capabilities. The Raspberry Pi 4 Model B has a quad-core Cortex-A72 CPU at 1.5 GHz and supports up to 8 GB of SDRAM.

NVIDIA’s Jetson

NVIDIA Jetson is a product line developed by NVIDIA and dedicated to edge computing. The platform’s high performance, low power consumption, and energy efficiency make it suitable for embedded applications and for deploying intelligence on the device. One of the popular Jetson products is the NVIDIA Jetson Nano Developer Kit, developed by NVIDIA Corporation in Santa Clara, CA, USA [34]. The Jetson Nano is the entry-level board of the NVIDIA Jetson family. It is suitable for running neural network computations thanks to its 128-core Maxwell GPU. It has four USB ports, a micro-USB port, an Ethernet port, two ribbon connectors, and a jack socket to provide additional power if needed. The Jetson Nano is compatible with all major DL frameworks, such as TensorFlow, PyTorch, Caffe, and MXNet. Another module from the Jetson family is the NVIDIA Jetson TX2 series. The Jetson TX2, the medium-sized board among the NVIDIA Jetson modules, is larger than the Jetson Nano and proves useful for computer vision and deep learning. It has a dual-core NVIDIA Denver 2 64-bit CPU and a quad-core Arm® Cortex®-A57 MPCore processor, and its 256-core NVIDIA Pascal™ GPU makes it well suited to running ML algorithms. The Jetson TX2 proved to be very efficient in real-time vehicle detection with high accuracy [35].

The NVIDIA Jetson AGX Xavier can function as a GPU workstation for edge AI applications with a power supply of as little as 30 W while delivering up to 32 TOPS of AI performance. The Jetson AGX Orin series is considered the most powerful of the Jetson modules, offering up to 275 TOPS and 8 times the performance of the previous generation for simultaneous AI inference. It is well suited to a variety of applications, including manufacturing, logistics, retail, and healthcare, due to its high-speed interface that accommodates multiple sensors.

Arduino Nano 33 BLE Sense

The Arduino Nano 33 BLE Sense is another AI-enabled development board [36] and one of the cheapest boards available on the market. It is simple and easy to use, and its extensive libraries and resources support TensorFlow Lite, making it possible to create an ML model and upload it using the Arduino IDE. Although it is limited to executing a single program at a time, it has a built-in microphone, an accelerometer, and a 9-axis inertial sensor, which make it excellent for wearable devices. The Arduino Nano 33 BLE Sense is used in various applications, such as speech recognition [37] and smart health [38].

STM32 Microcontrollers

STM32 [39] is a family of 32-bit microcontroller integrated circuits produced by STMicroelectronics. This family includes various models based on different ARM Cortex processor cores, such as the Cortex-M33F, Cortex-M7F, Cortex-M4F, Cortex-M3, Cortex-M0+, and Cortex-M0. Despite the differences in the specific Cortex cores used, all STM32 microcontrollers are built around the same 32-bit ARM processor architecture. Internally, each microcontroller has a processor core, static RAM, flash memory, a debugging interface, and various peripherals. To implement AI at the edge on the STM32, STMicroelectronics has developed specialized tooling for its devices, specifically the STM32Cube.AI toolkit. This toolkit allows pre-trained NNs to be integrated into STM32 ARM Cortex-M-based microcontrollers. It generates STM32-compatible C code from NN models provided in TensorFlow and Keras, as well as from models in the standard ONNX format. STM32Cube.AI also has a useful feature for executing large NNs: it can store weights and activation buffers in external flash memory or RAM.

SparkFun Edge

SparkFun Edge is the result of a collaboration between Google and Ambiq to develop a development board for real-time audio analysis [40]. It is used for voice and gesture recognition. A distinguishing feature of this board is the Apollo3 Blue microcontroller from Ambiq Micro, which features a 32-bit ARM Cortex-M4F CPU with direct memory access. It operates at a 48 MHz CPU clock speed (96 MHz with TurboSPOT™) with an extremely low power consumption of 6 µA/MHz. Despite its power efficiency, it can run TensorFlow Lite, offering 384 KB of SRAM and 1 MB of flash memory. This development board also features two microphones, a 3-axis accelerometer from STMicroelectronics, and a camera connector.
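As a back-of-the-envelope illustration of the power figure quoted above, the following sketch multiplies 6 µA/MHz by the clock frequency to estimate the active core current. Applying the same per-MHz figure at 96 MHz and the 225 mAh battery capacity are assumptions made purely for illustration; the estimate ignores sensors, radio, and sleep states, so real runtimes will differ.

```python
# Figures quoted in the text for the Apollo3 Blue microcontroller.
CURRENT_PER_MHZ_UA = 6.0   # microamps per MHz
BASE_CLOCK_MHZ = 48.0
TURBO_CLOCK_MHZ = 96.0     # assumption: same uA/MHz figure applies in turbo mode


def active_current_ua(clock_mhz, ua_per_mhz=CURRENT_PER_MHZ_UA):
    """Estimate active core current in microamps as (uA/MHz) * MHz."""
    return ua_per_mhz * clock_mhz


def battery_life_hours(capacity_mah, current_ua):
    """Idealized runtime in hours: capacity (converted to uAh) / current (uA)."""
    return capacity_mah * 1000.0 / current_ua


base_ua = active_current_ua(BASE_CLOCK_MHZ)    # 288.0 uA
turbo_ua = active_current_ua(TURBO_CLOCK_MHZ)  # 576.0 uA

# Hypothetical 225 mAh coin cell, core running continuously at 48 MHz.
print(f"48 MHz: {base_ua:.0f} uA, 96 MHz: {turbo_ua:.0f} uA")
print(f"Idealized runtime at 48 MHz: {battery_life_hours(225.0, base_ua):.2f} h")
```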

Google Coral Dev Board

The Coral Dev Board [41] is designed for fast on-device ML inference thanks to its integrated Google Edge TPU, an application-specific integrated circuit (ASIC). It is a single-board computer with wireless capabilities and a removable system-on-module. The operating system on this board is Mendel, a Debian Linux variant. It supports TensorFlow Lite for model inference, with Python and C++ as programming languages. This development board is primarily used for image classification and object detection, but it can be used for a variety of other tasks. Good documentation and online support make working with this board easier.

Beaglebone AI

The BeagleBone AI is a high-end single-board computer designed to help developers build ML and computer vision applications. Powered by a Texas Instruments AM5729 system-on-chip, it features a dual-core C66x digital signal processor (DSP) and four embedded vision engine (EVE) cores. This configuration simplifies the exploration of machine learning applications in everyday life thanks to an optimized Texas Instruments Deep Learning (TIDL) framework and a machine learning OpenCL API with pre-installed toolkits. The BeagleBone AI is compatible with popular machine learning frameworks, such as TensorFlow, Caffe, and MXNet. It is capable of executing tasks like image classification and object detection.

The selection of hardware platforms is crucial in determining the performance and capabilities of deployed solutions in edge computing and AI-enabled embedded systems. From widely accessible options like the Raspberry Pi, offering flexibility at a low cost, to specialized platforms like NVIDIA’s Jetson series, providing high-performance computing tailored for deep learning tasks, each hardware option presents distinct benefits and limitations. For instance, while platforms such as the Arduino Nano 33 BLE Sense offer simplicity and affordability, they may lack the computational power necessary for complex AI tasks. In contrast, solutions like the Google Coral Dev Board and BeagleBone AI excel in high-speed on-device ML computing but come at a higher cost. Understanding these trade-offs is essential for selecting the most suitable hardware platform based on the specific needs of the application at hand, whether it be real-time audio analysis, computer vision, or other IoT deployments.
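One part of this trade-off, whether a model fits in a device’s memory at all, can be sketched as a simple check. The memory figures below are the ones quoted in this section; the `Device` class, the 50% usable-memory fraction, and the example model sizes are hypothetical choices for illustration, not measurements.

```python
from dataclasses import dataclass

KB = 1024
MB = 1024 * KB
GB = 1024 * MB


@dataclass(frozen=True)
class Device:
    name: str
    ram_bytes: int
    flash_bytes: int = 0  # 0 means weights are loaded into RAM (Linux boards)


# Memory figures quoted in the text.
DEVICES = [
    Device("SparkFun Edge", ram_bytes=384 * KB, flash_bytes=1 * MB),
    Device("Raspberry Pi 3 Model B", ram_bytes=1 * GB),
    Device("Raspberry Pi 4 Model B (8 GB)", ram_bytes=8 * GB),
]


def weight_bytes(num_params, bits_per_weight):
    """Storage needed for the weights, rounded up to whole bytes."""
    return (num_params * bits_per_weight + 7) // 8


def fits(device, n_weight_bytes, n_activation_bytes, usable_fraction=0.5):
    """Assumption: only `usable_fraction` of memory is free for the model."""
    ram_budget = device.ram_bytes * usable_fraction
    if device.flash_bytes:
        # Microcontroller: weights stay in flash, activations live in SRAM.
        flash_budget = device.flash_bytes * usable_fraction
        return n_weight_bytes <= flash_budget and n_activation_bytes <= ram_budget
    # Single-board computer: weights and activations share RAM.
    return n_weight_bytes + n_activation_bytes <= ram_budget


def candidate_devices(num_params, bits_per_weight, activation_bytes):
    size = weight_bytes(num_params, bits_per_weight)
    return [d.name for d in DEVICES if fits(d, size, activation_bytes)]


# Hypothetical 250k-parameter model with 100 KB of activations.
print(candidate_devices(250_000, 8, 100 * KB))   # int8 weights
print(candidate_devices(250_000, 32, 100 * KB))  # float32 weights
```

Under these assumptions the 8-bit model fits on all three boards, while the 32-bit version no longer fits in the microcontroller’s flash budget, which is one reason compression matters on the smallest devices.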

4.1.2. Edge Intelligence Frameworks and Libraries

With the rapid growth of ML-based applications and the infeasibility of relying only on cloud-based processing, edge computing has gained momentum, leading to the deployment of most deep learning applications at the edge. As a result, new techniques to support DL models at the edge are required. This rising demand has prompted the creation of customized ML frameworks designed for edge computing architectures. These frameworks are critical in allowing the creation and deployment of lightweight models capable of operating effectively with limited resources. They also ensure efficient inference and learning processes in resource-constrained environments by optimizing model size, complexity, and computational requirements. This section classifies and explains the popular AI frameworks and libraries used in the reviewed research papers:

TensorFlow

TensorFlow stands out as one of the most commonly used open-source frameworks for creating, training, evaluating, and deploying machine learning models [42,43]. It was developed by the Google Brain team and released as open source in 2015. TensorFlow’s flexible architecture enables developers to use a single API for distributing computation across multiple CPUs and GPUs, whether on desktops, servers, or mobile devices. This adaptability makes it particularly well suited to distributed machine learning algorithms. TensorFlow supports a wide range of applications [44], with a focus on deep neural networks [45]. It runs on Windows, Linux, macOS, and even Android. Its main programming languages are Python, Java, and C.

TensorFlow Lite is a lightweight version of TensorFlow for resource-constrained devices. It enables on-device machine learning and allows ML to run almost anywhere with low latency. TensorFlow Lite is designed for on-device inference rather than training. It achieves reduced latency by compressing the pre-trained DNN model.

OpenEI

OpenEI is an open, lightweight framework for edge intelligence [46]. It provides AI computation and data sharing capabilities on low-power devices suitable for deployment at the edge. OpenEI is made up of three parts: a package manager for running real-time DL tasks and training models locally, a model selector for choosing the best models for various edge hardware, and a library with a RESTful API for data sharing. The objective of OpenEI is to ensure that, once it is deployed, any edge hardware will have intelligent capabilities.
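The idea behind a model selector can be illustrated with a minimal sketch: among models whose memory footprint and latency fit the target hardware, pick the most accurate one. This is not OpenEI’s actual API; the `ModelProfile` class, the `select_model` function, and all profile numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelProfile:
    name: str
    accuracy: float    # validation accuracy in [0, 1]
    memory_mb: float   # footprint on the target device
    latency_ms: float  # measured inference time on the target device


def select_model(
    profiles: Sequence[ModelProfile],
    memory_budget_mb: float,
    latency_budget_ms: float,
) -> Optional[ModelProfile]:
    """Return the most accurate model that satisfies both budgets, or None."""
    feasible = [
        p for p in profiles
        if p.memory_mb <= memory_budget_mb and p.latency_ms <= latency_budget_ms
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda p: p.accuracy)


# Hypothetical profiles for three variants of the same classifier.
PROFILES = [
    ModelProfile("cnn-large", accuracy=0.95, memory_mb=98.0, latency_ms=180.0),
    ModelProfile("cnn-medium", accuracy=0.92, memory_mb=14.0, latency_ms=40.0),
    ModelProfile("cnn-small", accuracy=0.88, memory_mb=4.0, latency_ms=12.0),
]

choice = select_model(PROFILES, memory_budget_mb=32.0, latency_budget_ms=50.0)
print(choice.name if choice else "no feasible model")
```

In practice the profiles would have to be measured on each hardware target, since the same model can have very different latency on a CPU, a GPU, and a dedicated accelerator.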

Core ML

Core ML is Apple’s ML framework. It enhances on-device performance by leveraging the CPU, GPU, and Neural Engine while minimizing memory usage and power consumption [47]. Executing the full model on the user’s device avoids the need for a network connection, safeguarding the privacy of the user’s data and keeping the app responsive. Core ML supports a large selection of ML algorithms, such as ensemble learning, SVM, ANN, and linear models. However, Core ML does not support unsupervised models [48].

Caffe

Caffe is a fast and flexible DL framework created by the Berkeley Vision and Learning Center (BVLC), with contributions from a community of developers on GitHub. Caffe powers research projects, large-scale manufacturing applications, and startup prototypes in vision, speech recognition, robotics, neuroscience, astronomy, and multimedia [49]. Caffe is a BSD-licensed C++ library that supports the training and deployment of CNNs and other DL models. It also integrates with Python/NumPy and MATLAB. Caffe2, a lightweight successor to Caffe, was designed to facilitate data processing with DL directly on end devices. Caffe2 incorporates numerous new computation patterns, such as distributed computation, mobile, and reduced-precision computation, expanding upon the capabilities of the original Caffe framework. With cross-platform libraries, Caffe2 facilitates deployment across multiple platforms, enabling developers to harness the computing power of GPUs in both cloud and edge environments [50]. It is worth noting that Caffe2 has since been integrated into PyTorch.

PyTorch

PyTorch is a popular open-source library for building DL models. It was developed by Facebook. It uses dynamic computation graphs and follows Python idioms [51]. PyTorch includes PyTorch Mobile, which supports the execution of ML models on edge devices, mainly iOS and Android [52]. Both PyTorch and Caffe2 have their own benefits: Caffe2 is geared toward industrial usage with a strong emphasis on mobile, whereas PyTorch is oriented toward research. In 2018, the Caffe2 and PyTorch projects came together to form PyTorch 1.0. This framework combines the user experience of the PyTorch frontend with the scaling, deployment, and embedding capabilities of the Caffe2 backend. Its simplicity and ease of use are what make PyTorch popular among deep learning researchers and data scientists.

Apache MXNet

MXNet [53] is a deep-learning library that is both effective and versatile. It was created by the University of Washington and Carnegie Mellon University to support CNN and LSTM networks. It works by combining symbolic expression with tensor computation to maximize efficiency and flexibility. It is built to run on a variety of platforms (cloud or edge) and can perform training and inference tasks. It also supports the Ubuntu Arch64 and Raspbian ARM-based operating systems, in addition to the Windows, Linux, and OSX operating systems. MXNet is a lightweight library that can be integrated into various host languages and operate within a distributed setup. Several other projects have been published by some of the industry’s top-leading tech giants to move ML functions from the cloud to the edge, like Azure IoT Edge from Microsoft and AWS IoT Greengrass from Amazon.

The need for implementing machine learning models at the edge continues to rise, which has encouraged the development of customized frameworks specifically designed for edge computing architectures. These frameworks are pivotal in facilitating the creation and deployment of lightweight models capable of operating effectively within resource-constrained environments. One of the most often used AI frameworks and libraries in modern literature is TensorFlow; it stands out for its flexibility and cross-platform compatibility. TensorFlow Lite, a lightweight version, specifically targets resource-constrained devices, ensuring low-latency on-device computing. OpenEI, an open lightweight framework, supports AI computations and data sharing capabilities on low-power devices deployed at the edge, aiming to integrate any edge hardware with intelligent capabilities post-deployment. Core ML, Apple’s proprietary framework, optimizes on-device performance while maintaining user data privacy and application responsiveness. Furthermore, Caffe and its lightweight successor, Caffe2, serve as fast and flexible deep learning frameworks suitable for various applications, from research projects to industrial prototypes. PyTorch, favored by deep learning researchers and data scientists, boasts dynamic computation and native support for edge deployment via PyTorch Mobile. Apache MXNet, with effectiveness and adaptability, is capable of supporting a wide range of platforms and tasks, from CNNs to LSTM networks, positioning itself as a lightweight library amenable to deployment in distributed environments. These frameworks, alongside cloud-to-edge migration tools like Azure IoT Edge and AWS IoT Greengrass, show how researchers and companies are working together to make edge devices smarter with AI, thereby revolutionizing the landscape of edge machine learning. A comparison of multiple frameworks and libraries for edge intelligence is presented in Table 1.

Table 1.

A Comparison of Frameworks and Libraries for Edge Intelligence.

4.2. Model Compression

Model compression reduces the size and computational demands of ML models while preserving as much of their performance as possible, which makes it possible to deploy models on tiny devices. Compression techniques enable resource-intensive DL models to run on resource-limited edge devices by reducing the model’s parameter count or by training DL models that are scaled down from their original size. Because edge devices have limited storage and processing power, compressing ML models is critical to ensuring efficient deployment. Several techniques are used in edge intelligence for model compression, such as pruning, quantization, knowledge distillation, and low-rank factorization [68]. The two main ways of lowering the model size while maintaining high accuracy are lower precision (fewer bits per weight), known as quantization, and fewer weights, known as pruning. Post-training quantization reduces memory needs and execution time while largely maintaining accuracy. With ML frameworks like TensorFlow and Keras, quantization can be applied either after training or during training. Furthermore, pruning algorithms remove redundant connections in neural networks, reducing computing demands and program memory. Combining quantization and pruning provides a comprehensive model compression solution that optimizes both size and performance for deployment on smaller devices [69]. Huang et al. [70] introduced DeepAdapter, a cloud-edge-device framework aimed at optimizing DL models for mobile web applications. Within DeepAdapter, a context-aware pruning algorithm is employed to reduce model size and computational demands while preserving performance. This adaptive approach replaces fixed network pruning methods, ensuring that pruned models are tuned to current resource constraints. Through the pruning process, redundant parameters or connections are selectively eliminated from the deep neural network models, resulting in a more streamlined model that is better suited for deployment on resource-constrained devices and ultimately improving efficiency and performance in mobile web environments. The authors of [71] propose an iterative algorithm that optimizes the partitioning and compression of a base DNN model. By dynamically adjusting strategies based on rewards, the approach maximizes performance while meeting resource constraints. Another study [72] introduces GNN-RL, a pruning method that combines graph neural networks (GNNs) and reinforcement learning for topology-aware compression, and confirms that pruned models achieve faster inference and reduced memory usage.
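To make the two basic techniques concrete, the following minimal sketch applies magnitude pruning followed by symmetric 8-bit quantization to a small list of weights. It is written in plain Python for illustration only and is not the method of any of the cited works; the function names and the example weights are hypothetical, and production frameworks apply these steps per layer with calibration and fine-tuning.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)
    # Indices of the k weights with the smallest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization: q = round(w / scale), q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


# Hypothetical weights of one small layer.
weights = [0.02, -1.27, 0.5, -0.01, 0.9, 0.03, -0.4, 0.0]

pruned = prune_by_magnitude(weights, sparsity=0.5)  # half the weights become 0
codes, scale = quantize_int8(pruned)                # 8 bits instead of 32 per weight
restored = dequantize(codes, scale)

print(pruned)
print(codes)
print(max(abs(a - b) for a, b in zip(pruned, restored)))  # quantization error
```

Storing 8-bit codes instead of 32-bit floats cuts weight storage by a factor of four, and the zeros introduced by pruning can be exploited further by sparse storage formats. The rounding error per weight is bounded by half the quantization step `scale`.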

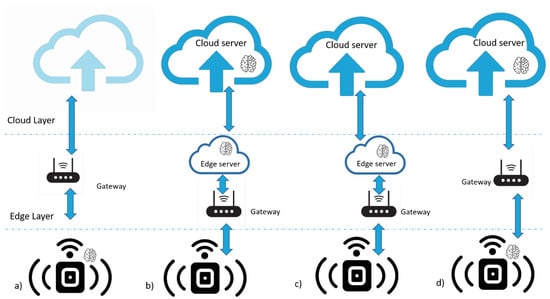

4.3. Architecture at the Edge (Where to Implement ML in IoT Architecture?)

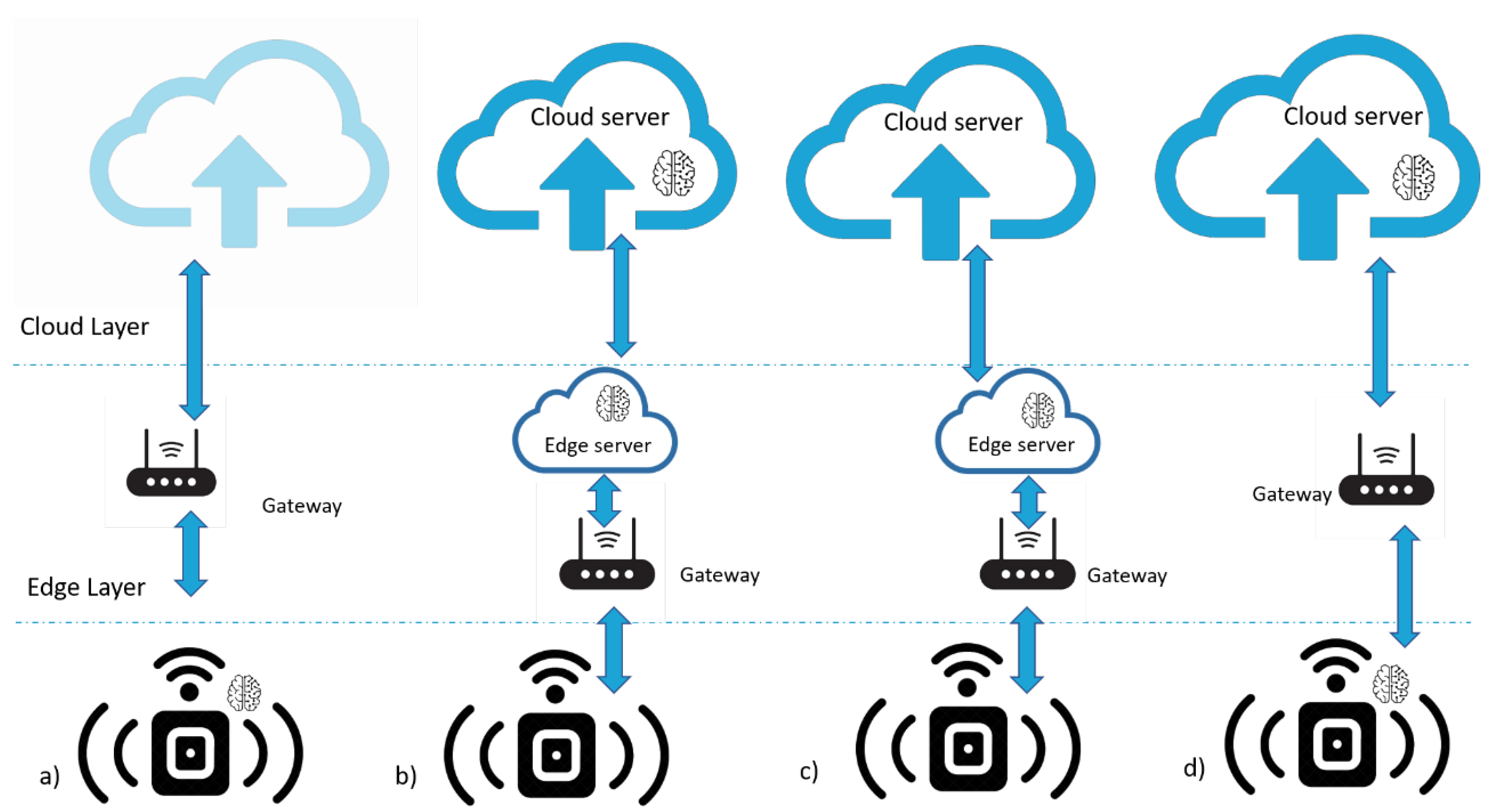

Deploying ML algorithms on IoT devices was long considered nearly impossible due to their limited computation power and small memories. The typical solution is to offload data processing to the cloud, but this leads to greater latency, privacy issues, and higher bandwidth consumption. To resolve these issues, many research studies have investigated distributing machine learning algorithms across four important architectures, as shown in Figure 6. Machine learning in IoT applications is used in a variety of fields; the most important applications can be organized as follows:

Figure 6.

(a) On-Device Computation, (b) Distributed Edge Server-based Architecture, (c) Edge Computing, and (d) Joint Computation between the Cloud and the Device.

- Smart Health: To enhance patient well-being, innovative devices have emerged. For instance, adhesive plasters equipped with wireless sensors can observe wound status and transmit data to a doctor remotely, eliminating the necessity for the doctor’s physical presence. Additionally, wearable devices and tiny implants can track and relay various health metrics such as heart rate, blood oxygen levels, blood sugar levels, and body temperature. Notably, there are sensors designed to forecast health events, like seizures. For instance, a wearable device mentioned in a study by Samie et al. [73] predicts epileptic seizures, alerting the patient beforehand.

- Smart Transportation: By leveraging in-vehicle sensors, mobile devices, and city-installed appliances, we can provide improved route recommendations, streamline parking space reservations, conserve street lighting, implement telematics for public transportation, prevent accidents [74], and enable autonomous driving.

- Smart Agriculture: Sensors and embedded devices are used for soil scanning and for controlling water, light, humidity, and temperature. Introducing intelligence and IoT devices helps farmers enhance crop quality and yield while optimizing human labor [75,76].

- Surveillance Systems: Smart cameras can collect video from multiple locations on the street. Smart security systems can identify suspects or prevent dangerous situations with real-time visual object recognition.

- Smart Home: Conventional household appliances, such as refrigerators, washing machines, and light bulbs have evolved by integrating internet connectivity, enabling communication between devices and authorized users. This connectivity enhances device management and monitoring while optimizing energy consumption rates. Moreover, the availability of smart home sensors introduces features such as smart locks and home assistants, further enhancing the functionality and convenience of modern homes.

- Smart Environment: Wireless sensors dispersed all over the city offer the ideal infrastructure for monitoring a wide range of environmental conditions. Enhanced weather stations can leverage barometers, humidity sensors, and ultrasonic wind sensors. Moreover, intelligent sensors can oversee the city’s air quality and water pollution levels [77].

There are numerous other ML-based IoT applications and various examples of how ML can be applied in these fields, which are explained in detail in other reviews. In this paper, we review and classify the integration of machine learning algorithms in IoT devices based on the location of the ML model in the architectures discussed above, which is, to the best of our knowledge, a new approach. Only papers written in English were taken into account. Furthermore, the reviewed papers were published between 2017 and 2024 to capture recent advancements in this field. The most advanced machine learning applications in IoT are categorized by application domain, input data type, machine learning techniques used, and where they fall on the cloud-to-things range.
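The question of where to place a model along the cloud-to-things range can be illustrated with a simple latency estimate: transfer time plus network round trip plus compute time for each tier. The sketch below is a deliberately simplified model with three tiers rather than the four architectures of Figure 6; the `Tier` class and all throughput, bandwidth, and round-trip numbers are hypothetical, and real placement decisions also weigh privacy, energy, and cost.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    ops_per_second: float        # sustained compute throughput
    link_bits_per_second: float  # math.inf when data never leaves the device
    round_trip_s: float          # network round-trip time to this tier


def latency_s(tier, model_ops, payload_bits):
    """End-to-end latency estimate: transfer + round trip + compute."""
    transfer = payload_bits / tier.link_bits_per_second
    compute = model_ops / tier.ops_per_second
    return transfer + tier.round_trip_s + compute


def best_tier(tiers, model_ops, payload_bits):
    """Pick the tier with the lowest estimated latency."""
    return min(tiers, key=lambda t: latency_s(t, model_ops, payload_bits))


# Hypothetical tiers; the numbers are placeholders, not measurements.
TIERS = [
    Tier("device", ops_per_second=1e9, link_bits_per_second=math.inf, round_trip_s=0.0),
    Tier("edge server", ops_per_second=2e10, link_bits_per_second=1e7, round_trip_s=0.005),
    Tier("cloud", ops_per_second=2e11, link_bits_per_second=1e7, round_trip_s=0.08),
]

# A heavier model (2e8 operations) on a 100 kB input (8e5 bits).
for tier in TIERS:
    print(tier.name, round(latency_s(tier, 2e8, 8e5), 4))
print("best:", best_tier(TIERS, 2e8, 8e5).name)
```

With these placeholder numbers the edge server wins for the heavier model, while a much lighter model (for example 1e7 operations) is fastest on the device itself because it avoids the transfer entirely.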

4.3.1. On-Device Intelligence

Historically, ML models were primarily deployed on robust devices such as computers, servers, and specialized hardware. However, recent advancements have enabled the implementation of ML training and inference on low-power chips. These advancements involve both innovative hardware designs and software frameworks that extend intelligence to the edge. On-device intelligence, also known as embedded intelligence, empowers devices to perform data processing, machine learning, and artificial intelligence tasks directly without relying on cloud or external server resources. This method provides numerous advantages, including lower latency, enhanced privacy and security, and improved performance in scenarios where network connectivity is limited or unreliable. Faleh et al. [78] demonstrate the feasibility of deploying CNN models on embedded devices. They used the Jetson Nano board to enable real-time mask recognition directly on the edge device. A lightweight CNN classifier, MobileNetV2, was trained on a central server and then deployed on the embedded Jetson Nano, which was linked to an alarm system to detect missing masks. According to their experimental results, the authors achieved an accuracy of over 99%. The authors of [79] presented a cost-effective intelligent irrigation system designed to forecast environmental factors using embedded intelligence. They conducted a comparative analysis across various frameworks, including the deployment of an LSTM/GRU-based model on a Raspberry Pi board. Their findings highlight the accuracy of LSTM and GRU in predicting environmental factors. Bansal et al. [74] introduced DeepBus, a pothole detection system leveraging IoT to identify surface irregularities on roads in real time. Road data are collected using a Raspberry Pi 3B+ equipped with an accelerometer and gyroscope. The data then undergo labelling, preprocessing to remove missing data, and outlier detection. 
The authors trained and evaluated various ML models, finding that the RF classifier reached an accuracy of 86.8% for pothole detection on the collected dataset. After these initial phases, the model is deployed on smartphones, where it continuously collects data from the phone’s sensors and detects potholes and bumps in real time. This live data on potholes is made accessible to all users via a real-time map to enable smart transportation. Drivers can receive warnings based on this information, and pothole locations can be shared with civic authorities for prompt repair of road damage. Collecting and sharing real-time road data with users and civic authorities raises privacy and security concerns, posing significant challenges in protecting sensitive information and ensuring compliance with data protection regulations in IoT-based systems. Samie et al. [73] proposed an efficient algorithm that predicts epileptic seizures on IoT devices with limited resources. Related work on epileptic seizure prediction typically applies SVM, random forest, and CNN classifiers. However, according to the authors, these algorithms are not suitable for implementation on small, low-power portable IoT devices due to their complex features and higher computational requirements. Therefore, the authors proposed a new seizure prediction model. The EEG data, which record electrical activity in the brain and can be measured with wearable or implantable sensors, are pre-processed (filtered and segmented) on the MSP432 IoT device. Then, features are extracted and selected to be passed to the logistic regression classifier, which is commonly used in seizure prediction due to its light computation and low memory footprint. After that, the remaining features, along with the logistic regression model output, are sent to the gateway (a smartphone also used by the patient), where eXtreme Gradient Boosting (XGBoost) performs classification and post-processing. 
While the proposed approach achieves high accuracy and efficiency on constrained devices, it simplifies features and reduces the size of data segments, which may limit the model’s effectiveness in real-world epileptic seizure detection scenarios. It is therefore important to balance the need for lightweight models on resource-constrained IoT devices against the complexity and robustness required to detect seizures accurately in diverse real-world scenarios. Various solutions have been developed to handle this challenge. For instance, the study in [80] used quantization to develop a lightweight CNN model capable of capturing complex EEG signal features while maintaining high performance in environments with limited computational resources. Transfer learning techniques have also emerged as a way to reduce the need for intensive feature engineering, resulting in a lighter model. The authors of [81] developed a system for detecting wheat rust. Using a CNN model based on transfer learning on an NVIDIA Jetson Nano, they achieved on-device inference. Their smart edge device comprises a camera for capturing crop images, a trained ResNet-50 for disease classification, and a touchscreen interface to display results to farmers. This system helps farmers monitor crop health, facilitating swift and accurate disease identification in real time. On-device intelligence holds the potential to significantly transform numerous fields by allowing real-time, resource-efficient data analysis and decision-making on IoT devices. By processing data locally, on-device intelligence reduces latency, enhances privacy and security, improves reliability in low-connectivity environments, optimizes resource utilization, and enables scalability and flexibility in IoT ecosystems.
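The device-side stage of a two-stage pipeline like the one described for seizure prediction can be sketched as follows: a logistic regression scores a small set of selected features on the device, and its output is forwarded to the gateway together with the remaining features. This is a minimal illustration and not the implementation from [73]; the function names, feature values, and weights are hypothetical, and the gateway classifier is omitted.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logistic_probability(features, weights, bias):
    """Logistic regression inference: sigmoid(bias + w . x)."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def device_stage(selected, remaining, weights, bias):
    """Run the lightweight model on-device and build the gateway payload."""
    return {
        "lr_output": logistic_probability(selected, weights, bias),
        "remaining_features": list(remaining),
    }


# Hypothetical features extracted from one EEG segment, with made-up weights.
payload = device_stage(
    selected=[0.8, 1.6, 0.1],
    remaining=[0.42, 3.1],
    weights=[1.2, -0.4, 2.0],
    bias=-0.5,
)
print(payload)
```

The appeal of this split is that the device only performs one dot product and one exponential per segment, while the heavier classifier runs on the gateway, which has more memory and compute.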

While numerous hardware and software solutions exist for edge ML, designing and deploying ML models on end devices remains a complex task. To deploy an AI model effectively on embedded devices, researchers and developers must carefully consider factors such as hardware selection. ML and DNN models demand significant computational resources due to intensive linear algebra operations and large-scale matrix/vector multiplications, rendering traditional processors inefficient. As a result, various novel specialized processing architectures and methodologies have emerged to address these challenges and achieve goals such as compact size, cost-effectiveness, reduced power consumption, low latency, and enhanced computational efficiency for edge devices. The optimal hardware for integration into edge devices encompasses a range of options tailored to specific applications. These include Application-Specific Integrated Circuits (ASICs), Field-Programmable Gate Arrays (FPGAs), processors based on Reduced Instruction Set Computing (RISC), and embedded Graphics Processing Units (GPUs) [82]. A notable advancement in IoT hardware is the emergence of ultra-low-power AI accelerators, executing ML and DL tasks directly on the chip or device through parallel computation. Strategies like model design and compression, as well as techniques to reduce inference time on end devices, are crucial for successful AI model deployment on embedded devices.
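The computational demand mentioned above can be made tangible by counting multiply-accumulate (MAC) operations per layer and dividing by a device’s throughput. The sketch below does this for a dense and a convolutional layer; the layer shapes and the 50% utilization factor are hypothetical, and the 32 TOPS figure is the peak value quoted earlier for the Jetson AGX Xavier, so the result is a rough lower bound rather than a measured latency.

```python
def dense_macs(n_in, n_out):
    """A fully connected layer performs one MAC per weight."""
    return n_in * n_out


def conv2d_macs(out_h, out_w, c_in, c_out, k_h, k_w):
    """Each output element needs c_in * k_h * k_w MACs."""
    return out_h * out_w * c_out * c_in * k_h * k_w


def estimated_latency_s(total_macs, tops, utilization=0.5):
    """Lower-bound estimate: 2 ops per MAC over the achievable throughput.

    `utilization` is an assumed fraction of peak TOPS actually reached.
    """
    ops = 2 * total_macs
    return ops / (tops * 1e12 * utilization)


# Hypothetical layer shapes: a 7x7 convolution producing a 112x112x64 output
# from an RGB image, followed later by a 2048 -> 1000 dense classifier.
conv = conv2d_macs(112, 112, c_in=3, c_out=64, k_h=7, k_w=7)
dense = dense_macs(2048, 1000)

print(conv, dense)
print(estimated_latency_s(conv + dense, tops=32.0))
```

Even this single convolution requires over a hundred million MACs, which explains why parallel accelerators such as embedded GPUs and ASICs are attractive for edge inference.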

4.3.2. Edge Intelligence

Having discussed the implementation of ML techniques on the device itself, we now turn to the case where processing and algorithm execution take place at the edge layer. Edge intelligence broadens device intelligence to a wider concept. While device intelligence relates to a device’s ability to process and analyze data locally, such as on a smartphone, tablet, or IoT device, edge AI refers to the ability to process and analyze data near its source, typically at the edge, instead of relying on centralized cloud-based services. Edge AI includes not only individual devices but also computing nodes or servers strategically located near data sources. The authors of [83] explore the use of edge computing in an intelligent aquaculture system. They implemented a heterogeneous architecture consisting of various devices, such as NVIDIA Jetson NX and Jetson Nano, to collect water sensor data and to perform real-time video-based fish detection using DL. Wardana et al. [77] introduced a DL model that integrates a one-dimensional CNN and an LSTM network to forecast short-term hourly PM2.5 concentrations at 12 distinct nodes, using a dataset by Zhang et al. [84]. The CNN-LSTM was then optimized with TensorFlow Lite to generate a lightweight model suitable for edge devices, such as the Raspberry Pi 3 Model B+ and Raspberry Pi 4 Model B boards. After comparing their proposed model to approximately 20 different deep learning methods, the authors concluded that their hybrid CNN-LSTM model outperforms the others. Proietti et al. [75] developed an edge intelligence approach to managing a greenhouse. The authors presented an LSTM encoder-decoder-based system that detects anomalies in plants, manages their growth, and controls equipment. 
The greenhouse setup included an Arduino MKR hosting all the sensors responsible for environmental data collection (pressure, humidity, air temperature, etc.), a Full-HD camera, and an Arduino Uno equipped with the actuators and an LED lamp that provides light for taking pictures. A Raspberry Pi 4 Model B and an NVIDIA Jetson Nano served as edge servers, connected to both boards to receive sensor data (from the MKR) and send control instructions (to the Uno). The images are processed with Easy Leaf Area (ELA) to calculate the leaf area. The authors fed the LSTM model with temperature, relative humidity, pressure, light intensity, UVA, and leaf area data after pre-processing and reshaping them into a format suitable for LSTM. After tuning and training the model on both the Jetson Nano and the Raspberry Pi, they obtained the best results with one LSTM layer and 64 LSTM units. Although the Jetson Nano may be faster than the Raspberry Pi, it was not able to complete the analysis of the different hyperparameter choices. Rumy et al. [76] presented an automatic rice leaf disease detection system that applies a lightweight ML model running entirely on edge computing. To build the image classification model, the authors experimented with several machine learning algorithms on pre-processed images of healthy and infected leaves and concluded that the supervised random forest is the most efficient. After training, they exported the image classification model to a Raspberry Pi edge device, which was used to distinguish healthy leaves from infected ones. They achieved an accuracy of 97.50%, and the method can reduce data transmission costs by about 86.66%, which in turn lowers latency. The authors of [85] used edge computing in a novel approach to online water quality monitoring and early warning.
They presented an advanced version of the backpropagation neural network (BPNN), enhanced by a new hybrid optimization approach that combines the cuckoo search algorithm and the Nelder-Mead simplex method. This novel method successfully adjusted the BPNN’s weight and deviation parameters in an effort to increase the system’s precision and dependability when assessing water quality. However, more improvements are needed to reach a better level of accuracy.
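The step of reshaping sensor readings into a format suitable for LSTM, mentioned for the greenhouse study above, typically means slicing a multivariate time series into fixed-length windows with the layout (samples, time steps, features). The sketch below illustrates this under stated assumptions: the window length, the two-feature readings, and the helper name `make_windows` are hypothetical and are not taken from the surveyed works.

```python
def make_windows(series, timesteps):
    """Slice a multivariate series into (input window, next reading) pairs."""
    inputs, targets = [], []
    for start in range(len(series) - timesteps):
        inputs.append(series[start:start + timesteps])  # `timesteps` consecutive readings
        targets.append(series[start + timesteps])       # the reading that follows them
    return inputs, targets


# Hypothetical readings: one [temperature, humidity] pair per time step.
readings = [[20.0 + t, 50.0 - t] for t in range(6)]
X, y = make_windows(readings, timesteps=3)
```

Here `X` is a nested list with the layout (samples, time steps, features), which is the three-dimensional input that common LSTM implementations expect, and `y` holds the value to be predicted for each window.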

The research papers presented in this section demonstrate the significance of combining machine learning models with edge computing to achieve efficient, real-time data processing in a variety of IoT applications. The studies show that hybrid deep learning models can be successfully implemented and optimized on the edge, and that they can outperform other methods. By harnessing edge-device intelligence, researchers have achieved accurate and resource-efficient solutions for forecasting environmental factors, managing greenhouse conditions, and detecting plant diseases, as well as for unmanned aerial vehicle (UAV) applications. For instance, Liu et al. [86] highlighted the importance of integrating edge computing into vehicular networks to enhance traffic safety and travel comfort. By incorporating Mobile Edge Computing (MEC) into vehicular networks, the study addressed the challenges and opportunities of shifting cloud resources closer to the edge of the network. The research provided an extensive review of advancements in Vehicular Edge Computing (VEC), covering areas such as task offloading, caching mechanisms, data sharing protocols, flexible network management strategies, and security and privacy considerations. Despite the significant progress made in VEC, several issues remain unresolved, including enhancing Quality of Service (QoS), improving scalability, and strengthening security and privacy measures. Addressing these challenges opens up avenues for further research and innovation in the field of VEC.

4.3.3. Edge-Cloud Joint Computation

In joint computation between the cloud and edge layers of a network, certain tasks are carried out locally at the edge, while others are offloaded to centralized cloud servers for more intensive processing or analysis. This strategy maximizes resource utilization by exploiting the advantages of both edge and cloud computing. For example, data preprocessing or initial analysis may occur at the edge for rapid response, while complex analytics or long-term storage take place in the cloud. Joint computation enhances system efficiency, scalability, and flexibility, making it useful for applications that require a balance between local processing and centralized resources, such as smart cities, intelligent transportation systems, or distributed surveillance networks. The authors of [87] introduced an architectural framework to connect smart monitoring robotic devices in healthcare facilities. Their framework consists of three layers: the IoT node layer, the edge layer, and the cloud layer. Data are collected and routed directly to the edge node, and from there offloaded to the cloud server for primary data processing. However, the data privacy and security of the framework need to be enhanced: without robust encryption and security measures in place, sensitive healthcare data transmitted from the edge node to the cloud server could be susceptible to unauthorized access or breaches. Li et al. [88] proposed an approach using deep learning in a video recognition IoT application with edge computing to ensure privacy and minimize network traffic and data processing load in the central cloud. Multiple wireless video cameras monitor the environment and identify objects. The wireless cameras record video at a resolution of 720p with a bitrate of 3000 kilobits per second.
The raw video data are offloaded via standard Wi-Fi connections to the edge layer (deployed in an IoT gateway) for pre-processing. The edge nodes then upload the reduced intermediate data to the cloud servers for further processing using CNN models. In this study, the CNN model is divided into two parts: the edge servers compute the lower layers and the cloud computes the higher layers. Nevertheless, the system's scalability may be limited by the capacity of edge nodes and cloud servers to handle increasing data volumes and computational tasks as the number of IoT devices and video streams grows. Sivaganesan et al. [89] developed a "semi self-learning farm management" approach based on edge computing rather than the cloud processing paradigm to improve speed and reduce security risks, costs, and latency. Wireless environmental sensors collect data on temperature, humidity, soil moisture, wind speed, and pressure, while a Tetracam-ADC Lite camera mounted on a drone monitors crop growth and pest attacks. These data are then transmitted to the edge layer via Wi-Fi Max for processing by the H2O deep learning model. H2O is trained on the ISTAT (Italian National Institute of Statistics) dataset and then predicts the right time to sow, reap, water, and fertilize the crops to avoid damage. All processed information from the edge layer is transmitted back to the farmer's portable device via Wi-Fi. Sajjad et al. [90] created a face recognition algorithm for police departments in a smart city. A mobile wireless camera attached to a police officer's uniform captures images, which are then transmitted to a Raspberry Pi 3 for facial recognition.
The Viola-Jones algorithm is employed for face detection in images, followed by the ORB algorithm (Oriented FAST and Rotated BRIEF), which extracts features from the faces; these features are offloaded to an SVM classifier in the cloud to identify people and potential criminals. Yet, the accuracy of facial recognition algorithms can be affected by various factors such as lighting conditions, image quality, and occlusions. Errors in face detection and feature extraction may lead to misidentification or false positives, which could have serious consequences in law enforcement applications. The authors of [91] proposed an online water-quality monitoring system for water distribution systems that uses both edge and cloud computing. After testing various scenarios, including edge computing alone and cloud computing alone, they introduced a hybrid edge-cloud framework. This hybrid model improved the accuracy of the classification models while maintaining low energy consumption. The research showcases the practical optimization and viability of integrating edge and cloud computing in water quality monitoring systems. However, there is potential for further reduction in power consumption.

The distributed edge-cloud computing paradigm has demonstrated its potential in a variety of IoT applications, delivering benefits such as enhanced privacy, reduced network traffic, and improved real-time processing capabilities. The studies discussed show that this architecture can be successfully implemented in video recognition, farm management, and smart city face recognition, effectively addressing specific challenges in each domain. This approach has the potential to transform a wide range of IoT applications by delivering more efficient, secure, and scalable solutions.
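The model partitioning described for the video recognition study [88], in which lower layers run at the edge and higher layers run in the cloud, can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: the three-layer network, its weights, the helper names, and the input values are all hypothetical, and the network transfer is only indicated by a comment.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def avg_pool(v, size=2):
    """Average non-overlapping groups of `size` values (halves the length for size=2)."""
    return [sum(v[i:i + size]) / size for i in range(0, len(v) - size + 1, size)]


def dense(v, weights, bias):
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]


# Hypothetical toy network: two "lower" feature-extraction layers and one "higher" layer.
LAYERS = [
    lambda v: avg_pool(relu(v)),
    lambda v: avg_pool(relu(v)),
    lambda v: dense(v, weights=[[1.0, -1.0], [0.5, 0.5]], bias=[0.0, 0.1]),
]


def run(layers, x):
    for layer in layers:
        x = layer(x)
    return x


def split_inference(layers, split_at, x):
    intermediate = run(layers[:split_at], x)       # would run on the edge node
    # In a real system, `intermediate` would be serialized and sent to the cloud here.
    output = run(layers[split_at:], intermediate)  # would run on the cloud server
    return intermediate, output


frame = [1.0, -2.0, 3.0, 0.5, -1.0, 4.0, 2.0, -0.5]  # stand-in for raw input data
intermediate, output = split_inference(LAYERS, split_at=2, x=frame)
```

In this sketch the intermediate result is smaller than the raw input (2 values instead of 8), which mirrors the motivation of uploading reduced intermediate data rather than raw video, while the final output is identical to running the whole network in one place.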

4.3.4. Cloud-Device Joint Computation

While on-device computing, edge intelligence, and edge-cloud computing each offer distinct advantages and address specific challenges, today's interconnected and data-driven world demands seamless integration and efficient use of computing resources more than ever. This is where cloud-device joint computing emerges as a compelling solution. By combining the localized processing power of devices with the vast computational capabilities of the cloud, joint computing bridges the gap between edge and cloud computing and unlocks several benefits. Several researchers have applied various techniques to make this approach feasible. The authors of [92] combined cloud and device intelligence in smart transportation. They built an autonomous vehicle system by collecting data from various sensors and implementing a motion control system on an STM32F10 microcontroller. They also developed an intelligent detection system in the same vehicle by deploying models on the embedded device EAIDK-310, which included a camera module. Additionally, they used cloud services for data transmission and information exchange, enabling interaction with the collected data. Mrozek et al. [93] designed a system called Whoops, which consists of two main elements. The first is an iPhone 8 mobile IoT device equipped with a triaxial accelerometer, a triaxial gyroscope, and a local ML model (built using the Core ML library for iOS devices) that can sense the surroundings, detect falls in older people, pre-process the data, notify the caregiver in case of danger, and then gather, analyse, and transmit the data for further processing. The second is a data center where the collected data are analysed and processed in order to monitor numerous elderly people.
After comparing four classifiers, namely RF, ANN, SVM, and Boosted Decision Tree (BDT), the authors found BDT to be the most suitable owing to its superior average accuracy, moderate precision, and minimal standard deviation; it was therefore chosen for both cloud and mobile processing. To validate these tests, the authors conducted a real-life experiment to confirm the accuracy of the classification. The experiment involved a 25-year-old participant equipped with a mobile device running the Whoops app. The participant performed a series of falls in different directions, along with various daily activities such as walking, squats, and navigating slopes. The falls were performed into a pool of sponges to reduce the risk of injury. The authors recorded just one false positive in seventeen fall trials. To address the issue of air pollution, the authors of [94] proposed an IoT-Cloud-based model for forecasting the Air Quality Index. IoT devices were used to collect and preprocess real-time air pollution data, including various meteorological parameters such as temperature, humidity, and wind speed. These data were then sent to the cloud environment for further processing and analysis using LSTM and eXtreme Gradient Boosting. Barnawi et al. [95] introduced an IoT-UAV-based scheme that detects, tracks, and reports COVID-19 cases using aerial thermal imaging. The authors used onboard thermal sensors to collect raw data (thermal images), captured in real time by thermal infrared cameras over large crowds or massive cities. The UAV performs preprocessing using the face recognition method to determine whether a person has an elevated temperature. The collected data are then transmitted to AI models (YOLO and DLiB) on a central server for temperature calculation, identification, and face mask detection.
Five machine learning classifiers (SVM, KNN, XGB, LR, and MLP) were used to evaluate the proposed method.
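For the fall detection scenario discussed above, the on-device pre-processing of triaxial accelerometer data can be illustrated with a short sketch. It is only an assumption about what such pre-processing might look like: the features (acceleration magnitude and simple window statistics), the function names, and the sample values in units of g are hypothetical and are not described in [93].

```python
import math
import statistics


def magnitude(sample):
    """Euclidean norm of one (ax, ay, az) accelerometer sample."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)


def window_features(samples):
    """Summary statistics of the acceleration magnitude over one window."""
    mags = [magnitude(s) for s in samples]
    return {
        "max": max(mags),
        "min": min(mags),
        "mean": statistics.fmean(mags),
        "std": statistics.pstdev(mags),
    }


# Hypothetical windows: a device at rest and a window containing a sharp spike.
still_window = [(0.0, 0.0, 1.0)] * 4
impact_window = [(0.0, 0.0, 1.0), (3.0, 4.0, 0.0), (0.0, 0.0, 1.0)]
still = window_features(still_window)
impact = window_features(impact_window)
```

Feature dictionaries of this kind could then be passed to a classifier running on the device or in the cloud; sending a handful of statistics instead of the raw sensor stream also reduces the amount of data that has to be transmitted.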

Even though cloud-device joint computation provides numerous benefits, it also comes with its own drawbacks. One significant drawback is increased latency due to the need to offload data between devices and the cloud for processing. This latency can impact real-time applications that require immediate decision-making. Additionally, joint computing may raise concerns about data privacy and security, as sensitive information must be transmitted over networks to cloud servers. Furthermore, reliance on cloud infrastructure introduces dependency on external services, making the system vulnerable to outages or disruptions in internet connectivity. A full comparison of multiple types of machine learning methods and their applications in IoT is presented in Table 2.

Table 2.

A Review of Machine Learning Algorithms and their Application in IoT Architectures.