Abstract

This conceptual article introduces Perceived Cognitive Assistance (PCA), a novel psychological construct capturing how interactive support from Large Language Models (LLMs) alters investors’ perception of their cognitive capacity to execute complex trading strategies. PCA formalizes a behavioral shift: LLM-empowered retail investors may move from intuitive heuristics to institutional-grade strategies, sometimes without adequate comprehension. This empowerment–distortion duality is the core of the theoretical contribution. To empirically validate this model, the article outlines a five-step research agenda including psychological diagnostics, trading behavior analysis, market efficiency tests, and a Behavioral Shift Index (BSI). One agenda component, a dual-agent simulation framework, enables causal benchmarking in post-LLM environments. This simulation includes two contributions: (1) the Virtual Trader, a cognitively degraded benchmark approximating bounded human reasoning, and (2) the Digital Persona, a psychologically emulated agent grounded in behaviorally plausible logic. These components offer methods for isolating the cognitive uplift produced by LLM assistance and for evaluating its behavioral implications under controlled conditions. This article contributes by specifying a testable link from established decision frameworks (Theory of Planned Behavior, Technology Acceptance Model, and Risk-as-Feelings) to two estimators: a moderated regression for individual decisions (Equation (1)) and a composite Behavioral Shift Index derived from trading logs (Equation (2)). We state directional, falsifiable predictions for the regression coefficients and for index dynamics, and we outline an identification and robustness plan (versioned, time-locked, and auditable) to be executed in the subsequent empirical phase. The result is a clear operational pathway from theory to measurement and testing, prior to empirical implementation.
No empirical results are reported here; the contribution is the operational, falsifiable architecture and its implementation plan, to be executed in a separate preregistered study.

1. Introduction

Over the past decade, institutional investors have increasingly relied on algorithmic and AI-enhanced tools to improve decision quality, execution speed, and portfolio optimization (Harris, 2024; Elly et al., 2025). In contrast, retail investors (RIs) have historically lacked access to such cognitive infrastructure. The recent diffusion of Large Language Models (LLMs)—including ChatGPT, Claude, and BloombergGPT—onto retail-facing platforms marks a potential inflection point in the cognitive capacity of non-professional market participants (Dong et al., 2024; Lopez-Lira & Tang, 2024).

Unlike earlier retail-oriented fintech tools such as robo-advisors or stock screeners, LLMs offer real-time, dialogic, and context-sensitive support. These systems can simulate strategies, explain complex derivatives, interpret macroeconomic signals, and respond to natural-language queries (Nie et al., 2024; J. Yang et al., 2025). This shift enables retail users to access and apply concepts that were previously limited to institutional domains, such as volatility arbitrage, delta-neutral positioning, or GARCH-based risk modeling (Kirtac & Germano, 2024; Valeyre & Aboura, 2024).

While this accessibility represents a major step toward democratizing financial intelligence, it also raises underexplored behavioral risks. LLMs blur the boundary between information access and interpretive authority (Z. Chen et al., 2025; Tatsat & Shater, 2025). They do not merely present data but shape how it is framed and understood, often producing compelling narratives that encourage confidence in users’ understanding and strategy formulation. Empirical studies already point to an “illusion of understanding” phenomenon, where perceived competence rises without corresponding gains in actual decision accuracy (Bahaj et al., 2025; F. Sun et al., 2025).

This inflation of perceived cognitive control may distort investor behavior, particularly under uncertainty (Jia et al., 2024). LLM-generated narratives can simplify complex tradeoffs, reduce the perceived risk of advanced strategies, and increase user trust, even when system outputs are heuristically generated or overfit (Boussioux, 2024; Jia et al., 2024). Such conditions may accelerate transitions from intuitive strategies, such as price momentum, to structurally complex volatility-based trades, such as straddles or iron condors, without adequate comprehension of nonlinear risks (Kirtac & Germano, 2024; Henning et al., 2025). In this study, we further propose that Perceived Cognitive Assistance (PCA) acts not merely as an internal cognitive mechanism but as a conditional moderator: PCA shapes the degree to which the availability of LLM cognitive support translates into actual adoption and effective execution of complex investment strategies.

Importantly, these psychological effects (inflated perceived control, that is, overconfidence; the intention–behavior divergence described by Risk-as-Feelings theory; and coordination effects induced by LLM-supported heuristics) can scale across retail cohorts as LLM assistants diffuse. Retail investors already account for a significant share of trading volume in instruments such as 0DTE (zero-days-to-expiration) options (Bandi et al., 2023; Xu, 2025). If many users adopt similar prompts or follow comparable LLM-generated trade logic, the result may be algorithmic coherence: an emergent behavioral alignment in which strategy convergence distorts price discovery, amplifies volatility, and contributes to reflexive feedback loops (Y. Peng, 2024; Y. Yang et al., 2025). Related dynamics have been documented for risk-premia crowding and critical transitions in complex financial systems, and have more recently been linked to LLM-mediated coordination effects (Y. Peng, 2024; Y. Yang et al., 2025). In such environments, even independently rational decisions may aggregate into destabilizing collective patterns, particularly when LLM-generated narratives are similar, fluently persuasive, and widely adopted (L. Chen et al., 2025; Y. Yang et al., 2025).

Yet academic attention has focused disproportionately on LLM productivity and output fidelity, while largely neglecting retail behavioral shifts. A retrieval-augmented review of over 120 papers published since 2023, performed by the authors in preparation for this research, shows a strong emphasis on AI-enhanced forecasting, coding, and analysis but a near absence of research into the psychological and strategic consequences for retail investors.

Relative to adjacent studies, our scope is investor-level and cognitive rather than macro-market or capability benchmarking. Forecasting and sentiment extraction studies primarily evaluate the predictive performance of LLMs; capability papers catalog model architectures and task scores; and macro co-movement work studies cross-asset linkages under stress or inflationary regimes, such as analyses of safe-haven properties and interactions between gold, technology equities, and cryptocurrencies during inflationary periods (Dimitriadis et al., 2025). Complementary evidence on cross-market connectedness shows time-varying spillovers: Kayani et al. (2024) employ quantile connectedness and a time-varying-parameter vector autoregression (TVP-VAR) to document asymmetric transmission between digital and traditional assets and renewable-energy prices, and Goodell et al. (2023) use TVP-VAR to map shock propagation across traditional assets, cryptocurrencies, and renewable energy through COVID-19 and the Russia–Ukraine war. By contrast, our contribution is micro-behavioral and operational: we theorize how LLM-scaffolded perceived control (PCA) shifts strategy selection at the investor level and we specify falsifiable estimators to test those shifts (Equation (1) moderated regression; Equation (2) BSI).

Central research question: How do large language models (LLMs) reshape retail investors’ strategy selection and risk posture through perceived cognitive assistance, and how can this be operationalized into testable investor-level predictions (Equation (1)) and a composite Behavioral Shift Index (BSI; Equation (2))?

Significance. This question matters because LLMs lower cognitive and operational frictions to higher-complexity tactics (for example, multi-leg options), potentially enabling informed adoption or, alternatively, inducing miscalibrated confidence. Distinguishing empowerment from distortion is essential for investor-protection policy, platform design, and for interpreting any market-level footprints of retail behavior within efficiency diagnostics.

This article explicitly adopts a conceptual stance, introducing two theoretical and methodological innovations to the behavioral finance literature. First, it proposes PCA as a distinctive psychological construct within Theory of Planned Behavior (TPB) frameworks, specifically capturing investor perceptions of cognitive facilitation provided by interactive LLMs. Second, acknowledging inherent methodological constraints, it advances a dual-agent simulation approach designed for causal benchmarking of LLM impacts on investor behavior. Together, these contributions prepare the ground for rigorous empirical evaluation on two distinct tracks: (i) investor-level tests of the mechanism using Equation (1) and Equation (2) (BSI), and (ii) a dual-agent counterfactual (Virtual Trader; Digital Persona) whose purpose is to evaluate whether the LLM-Augmented Trader (LAT) workflow generates alpha beyond randomness and to benchmark attribution in post-diffusion settings.

In brief, the contribution is architectural: Equation (1) captures TPB-based micro-causality with LLM × PCA moderation, while Equation (2) provides an observable, time-series diagnostic of realized behavior change. Implementation refers to the explicit operationalization of these estimators, directional sign tests linking theory to coefficients and index movements, and a preregistered, version-controlled plan for estimation and replication.

The rest of this article is structured as follows: Section 2 develops the behavioral and theoretical foundations. Section 3 presents model-implied results and the theoretical model. Section 4 offers a discussion and a future research agenda. Section 5 presents the conclusions.

1.1. The Distinctive Nature of LLMs vs. Previous Investment Technologies

LLMs represent a cognitive inflection point in the evolution of retail financial tools. Unlike prior systems such as robo-advisors or screeners—which operated through static interfaces and rule-based outputs—LLMs function as interactive reasoning agents capable of simulating real-time, context-aware dialog (Gao et al., 2024; Ferrag et al., 2025). This qualitative distinction marks a transition from passive access to co-constructed inference.

LLMs uniquely support four capabilities critical to retail investor cognition: (1) dialogic simulation of strategic reasoning (Costarelli et al., 2024; Shinn et al., 2024); (2) seamless integration of technical, macroeconomic, and derivative contexts within a single thread (Wu et al., 2023; Xue et al., 2024); (3) iterative strategy refinement based on reciprocal logic rather than filter selection (Yu et al., 2023; H. Yang et al., 2024); and (4) hypothesis generation that surfaces novel relationships often missed by heuristic-based tools (Wu et al., 2023; Xue et al., 2024).

These features shift the investor’s role from selector to co-creator. Rather than choosing among predefined outputs, users engage in cognitive scaffolding that reduces complexity barriers and narrows the gap between intent and execution (Gao et al., 2024; Ferrag et al., 2025). While prior platforms extended reach, LLMs actively shape perceived competence and behavioral control, warranting a new theoretical lens on investor cognition in AI-mediated environments.

1.2. Do Retail Investors Favor Lower-Risk, Intuitive Strategies over Complex, High-Risk Ones?

Retail investors (RIs) consistently exhibit preferences for intuitive, low-complexity strategies that minimize analytical burden and perceived risk. This behavior is shaped by cognitive limitations, lack of institutional-grade tools, and behavioral biases such as overconfidence and recency effects (Aqham et al., 2024; D. Singh et al., 2024). Such preferences are reinforced by platform design and social cues, which often emphasize trend-following signals or simplified narratives (Briere, 2023; Xue et al., 2024).

For example, simple momentum investing and dip-buying exemplify this pattern: both rely on extrapolating recent price trends and demand minimal technical knowledge (Du et al., 2025; Wheat & Eckerd, 2024). Empirical studies show that retail flows gravitate toward recent winners, particularly in lower-literacy or high-volatility contexts (Miguel & Su, 2019; Wheat & Eckerd, 2024). Dividend investing and ETF buy-and-hold strategies are similarly favored for their perceived safety, familiarity, and low monitoring costs (Graham & Kumar, 2006; Gempesaw et al., 2023).

By contrast, uptake of structurally complex strategies—such as multi-leg options, volatility overlays, or delta-neutral positions—remains limited among retail cohorts (Bryzgalova et al., 2023; Bogousslavsky & Muravyev, 2024). These instruments require comprehension of Greeks, nonlinear payoffs, and market-maker dynamics—factors that impose high cognitive barriers (Alsup, 2023; Naranjo et al., 2023). Even with increased access to derivatives post-2019, most retail activity is limited to directional calls and puts (Bryzgalova et al., 2023; Bogousslavsky & Muravyev, 2024).

This behavioral asymmetry forms the baseline condition for our investigation: whether LLMs reduce complexity perception and facilitate measurable transitions to higher-risk, sophistication-intensive strategies.

In this study, complexity is defined not as academic richness or institutional usage, but as the number of informational dimensions, conditional relationships, and decision nodes involved in strategy execution. This definition aligns with decision science literature, which conceptualizes complexity as the increase in required cognitive operations and branching conditionality under uncertainty (Tversky & Kahneman, 1986; Payne et al., 1993).
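To make this definition concrete, a strategy can be scored by counting the three elements named above. The sketch below is illustrative only: the class, its field names, the unweighted sum, and the example counts are our assumptions, not a validated instrument or calibrated data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StrategySpec:
    """Hypothetical execution profile of a trading strategy."""
    name: str
    info_dimensions: int    # distinct inputs to monitor (price, implied vol, time decay, ...)
    conditional_rules: int  # if-then adjustments required while the position is open
    decision_nodes: int     # discrete entry choices (legs, strikes, expiries, sizing)


def complexity_score(spec: StrategySpec) -> int:
    """Unweighted count of the three complexity dimensions (illustrative only)."""
    return spec.info_dimensions + spec.conditional_rules + spec.decision_nodes


# Illustrative counts; they are not calibrated or drawn from data.
momentum = StrategySpec("price momentum", info_dimensions=1, conditional_rules=1, decision_nodes=2)
iron_condor = StrategySpec("iron condor", info_dimensions=3, conditional_rules=3, decision_nodes=8)
```

An unweighted sum is the simplest choice; a preregistered study could instead declare weights per dimension before analysis.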

2. Materials and Methods

The materials for this study are the theoretical constructs and estimands (the quantities the model intends to estimate in the empirical phase) specified in the main text and appendices: the TPB-based moderated regression (Equation (1)), the Behavioral Shift Index (Equation (2)), the model-implied propositions in Section 3 and the five-step empirical agenda. Methods comprise the operational definitions for Equations (1) and (2), the estimation blueprint (unit of observation, controls, and robustness) to be applied in the subsequent empirical phase, the dual-agent benchmarking design for counterfactual attribution, and market-level diagnostics under the Efficient Market Hypothesis (EMH)/Adaptive Markets Hypothesis (AMH). Implementation proceeds under preregistered versioning, time-locked inputs, and auditable artifacts, as documented in the accompanying protocol.
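One way to make "time-locked inputs" and "auditable artifacts" checkable is to store a cryptographic digest of each frozen snapshot in the preregistration record; any later replay recomputes the digest and compares. The sketch below assumes a snapshot is a JSON-serializable dictionary; the field names and values are hypothetical placeholders, not the protocol's actual schema.

```python
import hashlib
import json


def snapshot_digest(snapshot: dict) -> str:
    """SHA-256 of a canonical JSON serialization (sorted keys, no extra whitespace)."""
    canonical = json.dumps(snapshot, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical snapshot fields mirroring "model epoch, tools, prompts, and market state".
snapshot = {
    "model_epoch": "model-2025-01",
    "tools": ["options_chain_lookup"],
    "prompt_template": "Evaluate a strategy for {ticker} as of {as_of}.",
    "market_state_as_of": "2025-01-31T16:00:00Z",
}
digest = snapshot_digest(snapshot)
```

Sorting keys makes the digest independent of dictionary insertion order, while any change to a frozen value (for example, a new model epoch) produces a different digest.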

Theoretical Model of LLM Impact on Retail: Our unit of analysis is the investor–period (for example, weekly observations), which motivates a moderated regression in Equation (1) to test whether exposure to LLM assistance and perceived cognitive assistance (PCA) jointly predict behavioral outcomes and whether the LLM × PCA interaction is positive as hypothesized; estimation uses linear or generalized models with repeated-observation robustness, as appropriate. Equation (2) complements this by aggregating period-over-period changes in multi-leg share, trading frequency, concentration, and volatility exposure into a Behavioral Shift Index (BSI) that provides a time-series diagnostic of realized behavior change. Because post-diffusion settings lack clean human controls, attribution is supported by two information-identical synthetic baselines, the Virtual Trader (bounded cognition) and the Digital Persona (behaviorally plausible benchmark), evaluated on time-locked inputs. All model specifications, inputs, and artifacts are preregistered and version-controlled, with snapshots that freeze model epoch, tools, prompts, and market state for replay and auditability.
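Whether the LLM-augmented workflow "generates alpha beyond randomness" relative to a synthetic baseline can be framed as a paired test on per-period returns computed from the same time-locked inputs. The sketch below uses a one-sided sign-flip permutation test, which is our choice for illustration rather than the protocol's stated method. It assumes per-period return differences are symmetric around zero under the null and ignores serial dependence; a block-permutation scheme would be needed if returns are autocorrelated. The function name and arguments are hypothetical.

```python
import random
from statistics import mean


def paired_sign_flip_test(lat_returns, baseline_returns, n_perm=10_000, seed=0):
    """One-sided paired sign-flip test of H1: mean(LAT - baseline) > 0.

    Returns (observed mean difference, permutation p-value).
    """
    if len(lat_returns) != len(baseline_returns) or not lat_returns:
        raise ValueError("series must be non-empty and aligned period by period")
    diffs = [a - b for a, b in zip(lat_returns, baseline_returns)]
    observed = mean(diffs)
    rng = random.Random(seed)  # fixed seed keeps the test replayable
    exceed = 0
    for _ in range(n_perm):
        # Under the null, each period's difference is equally likely to have either sign.
        flipped = mean(d if rng.random() < 0.5 else -d for d in diffs)
        if flipped >= observed:
            exceed += 1
    # Add-one correction keeps the p-value strictly positive.
    return observed, (exceed + 1) / (n_perm + 1)
```

The same function can be run twice, once against the Virtual Trader series and once against the Digital Persona series, so that attribution does not rest on a single baseline.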

2.1. Extending the Theory of Planned Behavior (TPB) for LLM-Augmented Contexts

This section investigates whether exposure to LLMs triggers a shift in retail investors’ behavior—from low-complexity strategies to cognitively demanding, risk-intensive structures. To model this behavioral migration, we extend the Theory of Planned Behavior (TPB), drawing on complementary psychological and technology adoption constructs.

Theory of Planned Behavior (TPB): A Starting Point

TPB posits that Attitude, Subjective Norms, and Perceived Behavioral Control (PBC) shape behavioral intention, which in turn predicts action (Ajzen, 1991; Armitage & Conner, 2001). In retail investing, these constructs map onto observable phenomena such as stated intentions to undertake financial actions and actual execution patterns (East, 1993; Xiao & Wu, 2006).

In principle, TPB is well suited to model behavioral transitions in retail investing. It allows us to trace a chain from LLM exposure → psychological construct shifts → changes in trading intention and execution. However, despite its utility, TPB exhibits critical limitations when applied to LLM-mediated investor behavior:

- Lack of system-oriented constructs: TPB does not account for technology-specific beliefs such as perceived usefulness or ease of use, which technology-acceptance models capture (Davis, 1989; Venkatesh & Davis, 2000). Those models help explain why some users develop trust in AI systems while others do not (Davis et al., 1989; Venkatesh et al., 2003).

- Neglect of affective mechanisms: TPB assumes rational intention formation (Ajzen, 1991; Sussman & Gifford, 2019). It does not incorporate emotional arousal, anticipatory anxiety, or vivid scenario framing (Sniehotta, 2009; Alhamad & Donyai, 2021)—factors that are central in LLM interactions, which often simulate high-stakes decision environments.

- No structured behavioral outputs: While TPB links intention to behavior conceptually, it provides no direct link to observable data such as portfolio changes, option usage, or trade frequency—key metrics in financial behavior modeling (East, 1993; Cucinelli et al., 2016).

To address these gaps, we propose four extensions: (1) a new Perceived Cognitive Assistance (PCA) sub-construct, (2) integration of the Technology Acceptance Model (TAM), (3) integration of Risk-as-Feelings Theory, and (4) the Behavioral Shift Index (BSI).

2.2. Perceived Cognitive Assistance (PCA): Extending Perceived Behavioral Control for AI-Augmented Decisions

Perceived Cognitive Assistance (PCA) is an individual’s subjective belief in their enhanced cognitive capability to execute cognitively demanding tasks, facilitated by intelligent, context-aware, and interactive support provided by LLMs, independent of their actual performance outcomes or proficiency with the LLM itself.

This definition deliberately separates three distinct elements: (1) the psychological state of perceived capability enhancement, (2) the availability of LLM support as an environmental condition, and (3) the actual performance outcomes that may or may not align with these perceptions. This separation is crucial for understanding how LLMs fundamentally alter users’ self-perceived behavioral boundaries in ways that differ from traditional technology adoption.

To isolate the moderating effect of PCA, it is conceptualized as an emergent, temporally lagged construct: initial LLM exposure fosters perceived cognitive assistance, which then moderates subsequent LLM engagement effects on complex strategy adoption. This temporal separation mitigates endogeneity concerns inherent in cross-sectional models. In this role, PCA determines the strength and effectiveness of cognitive scaffolding by LLMs in shaping investor behavioral outcomes (see Table 1).
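The temporal separation described above can be implemented by pairing current LLM engagement with PCA measured in an earlier period for the same investor. The sketch below assumes an integer period index, a one-period lag, and hypothetical field names (`investor`, `period`, `llm`, `pca`); the text does not fix the lag length, so it is a parameter here.

```python
def add_lagged_moderator(rows, lag=1):
    """Attach PCA from period t - lag (same investor) and the LLM x lagged-PCA product.

    rows: dicts with keys 'investor', 'period' (integer index), 'llm', 'pca'.
    Missing prior periods yield None rather than an imputed value.
    """
    pca_by_key = {(r["investor"], r["period"]): r["pca"] for r in rows}
    out = []
    for r in rows:
        new = dict(r)
        lagged = pca_by_key.get((r["investor"], r["period"] - lag))
        new["pca_lag"] = lagged
        new["llm_x_pca_lag"] = None if lagged is None else r["llm"] * lagged
        out.append(new)
    return out


# Hypothetical three-row panel.
panel = [
    {"investor": "A", "period": 1, "llm": 0.0, "pca": 3.0},
    {"investor": "A", "period": 2, "llm": 2.0, "pca": 5.0},
    {"investor": "B", "period": 2, "llm": 1.0, "pca": 4.0},
]
lagged = add_lagged_moderator(panel)
```

Looking up the lag by (investor, period) rather than by row position avoids silently pairing non-adjacent weeks when an investor has gaps in the log.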

Table 1.

Comparison of Traditional PBC and PCA as Moderating Construct.

PCA builds upon established cognitive science concepts, notably the notion that external tools can scaffold human reasoning (Hutchins, 1995) and that individuals monitor and calibrate their cognitive readiness through metacognitive awareness (Flavell, 1979). In AI-mediated decision contexts, these foundations converge within emerging human-AI collaboration research, which highlights how interactive systems reshape users’ perceived cognitive boundaries (D. Wang et al., 2020).

To establish discriminant validity, we differentiate PCA from four related but distinct constructs:

- (a) Technology Self-Efficacy. While technology self-efficacy captures confidence in using a technology effectively (Compeau & Higgins, 1995; Marakas et al., 1998), PCA specifically addresses the perceived enhancement of domain-specific capabilities through AI support. A trader might have high self-efficacy in using an LLM (knowing how to prompt it and interpret its outputs) while having low PCA (not believing it enhances their trading capabilities), or vice versa. PCA builds upon but is conceptually distinct from domain-specific self-efficacy (Bandura, 1997), which captures an individual’s belief in their ability to perform a task based on internal mastery or experience. In contrast, PCA reflects a perceived expansion of one’s capability boundaries specifically induced by external, AI-driven scaffolding, irrespective of genuine skill acquisition.

- (b) Cognitive Offloading. Cognitive offloading describes the delegation of memory or computation to external tools (e.g., calculators, to-do lists), but does not entail the internalized sense of behavioral readiness for novel or analytically intensive tasks (Risko & Gilbert, 2016; Gerlich, 2025). PCA differs fundamentally because it captures not just task delegation but the belief in expanded personal capability boundaries: the belief that one is cognitively able to engage in complex tasks because of real-time AI support, even in the absence of skill acquisition. Empirical support for this distinction is growing: both preliminary studies (A. K. Singh et al., 2023; Spatharioti et al., 2023) and recent peer-reviewed evidence (Steyvers et al., 2025) consistently show that interactions with LLMs or dialogic AI tools inflate users’ self-assessed competence, even when their actual decision accuracy remains unchanged. In particular, users exposed to AI-generated financial narratives rated themselves as more financially knowledgeable but failed to interpret basic derivative setups correctly (Jakesch et al., 2023; Spatharioti et al., 2023).

- (c) Trust in Automation. Trust in automation concerns the reliability, transparency, and dependability of the system (Parasuraman et al., 2000), rather than the user’s own felt competence in executing decisions under system assistance (J. D. Lee & See, 2004). PCA is orthogonal to trust: users might trust an LLM’s outputs while not feeling that it enhances their capabilities, or might feel empowered by an LLM despite harboring doubts about its reliability. This distinction is crucial for understanding the “illusion of understanding” phenomenon.

- (d) Perceived Usefulness. Perceived usefulness from the Technology Acceptance Model (TAM) reflects beliefs about system utility in task performance (Davis, 1989; Venkatesh & Davis, 2000), but it does not capture the user’s self-appraisal of increased capability to act (Davis, 1989; King & He, 2006). PCA specifically captures the user’s belief about their own enhanced capabilities, not just improved outcomes. An investor might find an LLM useful for gathering information while not feeling that it makes them a more capable trader.

Drawing on dual-process theory (Kahneman, 2011) and metacognitive research (Kruger & Dunning, 1999), we propose that PCA can manifest through two distinct pathways:

- Positive pathway: When supported by adequate understanding and factual confirmation of LLM effectiveness, PCA facilitates risk democratization with informed confidence.

- Negative pathway: When PCA outpaces actual comprehension and factual confirmation, it may lead to behavioral distortion.

This duality depends on factors such as actual vs. perceived understanding, the transparency of LLM outputs, availability of LLM effectiveness validations, and asymmetries in informational framing. To formalize this relationship, we specify the following regression model (see Appendix A):
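A specification consistent with the symbols defined below, in which β3 is the coefficient on the interaction term, can be written as follows. The intercept β0 and the labels β1, β2, β4, and β5 are assumed here for illustration rather than taken from Appendix A.

```latex
\begin{equation}
\begin{split}
B_i(t) = {} & \beta_0 + \beta_1\,\mathrm{LLM}_i(t) + \beta_2\,\mathrm{PCA}_i(t)
  + \beta_3\,\bigl[\mathrm{LLM}_i(t)\times\mathrm{PCA}_i(t)\bigr] \\
 & + \beta_4\,A_i(t) + \beta_5\,\mathrm{SN}_i(t)
  + \gamma^{\top}\mathrm{Controls}_i(t) + \varepsilon_i(t)
\end{split}
\tag{1}
\end{equation}
```

Under this form, the marginal effect of LLM engagement on behavior is β1 + β3·PCA_i(t), so a positive β3 means the LLM effect grows with perceived cognitive assistance.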

Symbols: $i$ indexes investors; $t$ indexes time periods; $B_i(t)$ = behavior measure (specified per test); $\mathrm{LLM}_i(t)$ = LLM engagement intensity; $\mathrm{PCA}_i(t)$ = perceived cognitive assistance; $A_i(t)$ = attitude toward complex strategies; $\mathrm{SN}_i(t)$ = subjective norms; $\mathrm{Controls}_i(t)$ = control covariates; $\gamma$ is the coefficient vector on Controls; $\varepsilon_i(t)$ = error.

In this regression model with moderation, PCA moderates the scaffolding effect of LLM capabilities. The interaction term (LLM × PCA) captures whether, and how strongly, perceived cognitive assistance strengthens the relationship between exposure to LLM capabilities and behavioral outcomes. A statistically significant, positive β3 would indicate PCA’s moderating role.

Expected patterns and when they may fail. We expect a positive link between LLM use and the uptake of more complex strategies, a positive link between perceived assistance and that same uptake, and a stronger combined effect when both are high. These expectations can fail in recognizable situations: when costs or frictions are high, when risk is unusually punitive, when tools or data are unreliable, or when the investor does not feel meaningfully supported. If the analysis does not show these positive patterns, or if they disappear once basic cost and risk checks are applied, the claim is not supported. In practice, Equation (1) will be estimated at the investor–period level (for example, weekly observations), using the pre-specified behavioral measures (option-trade frequency, the share of multi-leg strategies, and concentration across underlyings). Estimation will use standard linear models with errors robust to repeated observations per investor (or appropriate generalized models if the outcome is a count or rate).

Variable clarifications. $A_i(t)$ is the investor’s attitude toward using complex strategies; $\mathrm{SN}_i(t)$ captures perceived social influence (for example, peer or community approval); $\mathrm{PCA}_i(t)$ reflects the investor’s felt ability to handle complex tasks with help from an LLM assistant. Equation (2) then summarizes observed changes in these behaviors as a Behavioral Shift Index for within-investor and cohort comparisons; empirical estimation is reserved for the subsequent phase.
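The directional predictions can be read off estimated coefficients with two small helpers. The sketch assumes β1 denotes the coefficient on LLM engagement alone (only β3 is named in the text) and uses a one-sided normal approximation for the sign check; the coefficient values are placeholders for illustration, not estimates.

```python
def llm_marginal_effect(beta1: float, beta3: float, pca: float) -> float:
    """Slope of the behavior measure with respect to LLM engagement at a given PCA level."""
    return beta1 + beta3 * pca


def lower_bound_positive(estimate: float, std_error: float, z_crit: float = 1.645) -> bool:
    """One-sided check at about the 5% level: is estimate - z * SE above zero?"""
    return estimate - z_crit * std_error > 0


# Placeholder coefficients for illustration only; these are not estimates.
beta1, beta3 = 0.20, 0.10
low_pca, high_pca = 2.0, 6.0  # points on a 1-7 PCA scale
slopes = {pca: llm_marginal_effect(beta1, beta3, pca) for pca in (low_pca, high_pca)}
```

A steeper slope at high PCA than at low PCA is the "stronger combined effect" pattern. Note that a confidence interval for the slope itself also needs the covariance between the β1 and β3 estimates, which this sketch does not compute.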
For measurement, we propose preliminary scale items that capture PCA’s distinctive characteristics, rated on a 7-point Likert scale (for example: “When I have access to ChatGPT, I feel capable of executing trading strategies that would otherwise be beyond my abilities”; “AI assistance makes complex financial concepts manageable for me”).
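A multi-item PCA scale would typically be scored as the mean item rating per respondent and checked for internal consistency. The sketch below computes Cronbach's alpha with the standard formula; the ratings are hypothetical, and both example items are positively worded, so no reverse coding is applied.

```python
from statistics import mean, pvariance


def pca_scale_scores(responses):
    """Mean item score per respondent; each row holds one respondent's 1-7 ratings."""
    return [mean(row) for row in responses]


def cronbach_alpha(responses):
    """Internal consistency: k/(k-1) * (1 - sum of item variances / variance of totals)."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Population variances are used for items and totals alike, so the n/(n-1) factor cancels.
    item_variances = sum(pvariance(column) for column in zip(*responses))
    total_variance = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variances / total_variance)


# Hypothetical ratings on the two example items, one row per respondent.
ratings = [[6, 7], [3, 4], [5, 5], [2, 3]]
alpha = cronbach_alpha(ratings)
```

If every respondent gives identical totals, the total variance is zero and alpha is undefined; a real pipeline would report that case rather than divide by zero.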

Task complexity will be handled using pre-specified observable features of the trade decision (for example, the number of legs and conditional steps), with refinements to measurement deferred to the empirical phase.

While PCA is most likely to manifest in contexts involving complex, interpretively demanding tasks and interactive AI systems, its emergence is less probable—but not impossible—with simple, rule-based tasks or non-dialogic technologies. Future empirical work should specify these contextual moderators to delineate PCA’s operational boundaries.

2.3. Technology Acceptance Model (TAM): Explaining Variation in LLM Uptake and Reliance

While PCA explains how RIs may feel empowered to engage in more complex financial behavior due to LLM support, it does not explain why some investors develop this perception while others do not. To address this heterogeneity in technology engagement, we integrate constructs from the TAM (Davis et al., 1989; Venkatesh & Davis, 2000), which focuses on users’ perceptions of new technologies. TAM posits that two core beliefs—Perceived Usefulness (PU) and Perceived Ease of Use (PEOU)—predict the adoption, frequency, and persistence of technology usage. These constructs are particularly relevant to financial LLMs, which vary in interface design, response quality, and interpretability. These beliefs influence not only the initial adoption of LLMs but also how deeply and persistently investors integrate LLMs into their strategy formulation process. Empirical studies in behavioral fintech adoption show that PU and PEOU significantly predict usage of robo-advisors, mobile trading apps, and decision-support dashboards (Pavlou & Fygenson, 2006; Belanche et al., 2019). Similar logic applies to LLM engagement in financial contexts, though few studies have yet modeled this in relation to perceived behavioral control. TAM constructs moderate the formation of PCA and influence TPB dimensions—providing the upstream logic for adoption intensity and behavior shift. Operationally, we treat Perceived Usefulness (PU) and Perceived Ease of Use (PEOU) as upstream determinants of LLM engagement (uptake, intensity, reliance). In Equation (1) they enter through the engagement term and its interaction with PCA, and in Equation (2) their effects are expected to surface as directional shifts in ΔFrequency and ΔMultiLeg within the Behavioral Shift Index (BSI).
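Because PU and PEOU are expected to act through engagement intensity, that term needs an observable proxy. One possible proxy, assumed here for illustration and not specified in the text, is the share of an investor's trades in a period that were preceded by an LLM session within a fixed window. The function name, the 24-hour window, and the timestamps are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime, timedelta


def llm_assisted_share(trade_times, session_times, window=timedelta(hours=24)):
    """Share of trades preceded by an LLM session within `window` (None if no trades)."""
    if not trade_times:
        return None
    sessions = sorted(session_times)
    assisted = 0
    for t in trade_times:
        idx = bisect_right(sessions, t)  # sessions[:idx] occurred at or before t
        if idx and sessions[idx - 1] >= t - window:
            assisted += 1
    return assisted / len(trade_times)


# Hypothetical timestamps for one investor-week.
trades = [datetime(2025, 3, 3, 15, 0), datetime(2025, 3, 5, 15, 0)]
sessions = [datetime(2025, 3, 3, 9, 30)]
share = llm_assisted_share(trades, sessions)
```

Only the most recent session at or before each trade needs checking, which binary search finds directly; the window length is a design parameter that would be declared before analysis.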

2.4. Risk-as-Feelings Theory: Modeling Affective Divergence

Affective Finance (AFT) examines how affective states, discrete emotions, and emotion-laden narratives shape judgments and market behavior. It combines three mechanisms that are directly relevant to LLM-augmented retail trading: (i) fast, valenced “good/bad” signals that guide judgments under uncertainty (the affect heuristic), (ii) appraisal-specific effects whereby emotions such as fear or anger shift risk perception and choice in predictable directions, and (iii) narrative-driven conviction that enables action under radical uncertainty. Representative syntheses include the Annual Review survey on emotion and decision-making (Lerner et al., 2015), the affect-heuristic literature (Finucane et al., 2000; Slovic et al., 2007), appraisal-tendency results showing opposite risk effects for fear versus anger (Lerner & Keltner, 2001), and narrative accounts such as Conviction Narrative Theory and narrative economics that link story-driven conviction to market outcomes (Shiller, 2017; Johnson et al., 2023; Tuominen, 2023). Within this broader AFT program, we focus analytically on the Risk-as-Feelings (RaF) hypothesis: immediate feelings at the point of choice can diverge from cognitive risk evaluations and, when they do, feelings often dominate behavior (Loewenstein et al., 2001). RaF is the most appropriate AFT mechanism for our setting for three reasons: (1) Horizon fit—our interest is in short-horizon execution where in-the-moment affect is most behaviorally potent; (2) Identifiable predictions—RaF implies measurable intention–behavior gaps (e.g., plan vs. execution) that our design can target; and (3) Clean operationalization—RaF maps directly onto our observables: transient increases in volatility exposure and compositional shifts in strategy mix.

While TPB and TAM provide robust cognitive models of intention formation and technology adoption, they do not account for a critical behavioral dimension: emotionally driven decision divergence. Retail investing—especially under conditions of high volatility or uncertainty—is often shaped not by rational intent, but by visceral reactions such as fear, excitement, regret, or urgency. In this context, RaF (Loewenstein et al., 2001; Kobbeltved & Wolff, 2009) offers indispensable explanatory value. This theory proposes that emotions experienced at the moment of decision-making—not just cognitive evaluations of outcomes—can override planned behavior (Loewenstein et al., 2001; Kobbeltved & Wolff, 2009). The divergence between cognitive intention and behavioral execution is particularly pronounced in domains involving risk and delayed outcomes, such as financial trading (C. Peng, 2024). Even if TPB constructs (e.g., high PCA) predict strategy engagement, emotionally charged LLM narratives may derail or distort execution. This theory explains why users may hesitate—or leap—despite previously formed plans.

Consistent with this mechanism, we pre-specify two bias risks most directly linked to affective divergence: overconfidence (miscalibrated probability judgments and over-precision) and confirmation bias (selective exposure to belief-congruent evidence). Detection proceeds via investor-level metrics reported in Section 4.4 and is estimated for the LLM-Augmented Trader (LAT) against two information-identical baselines—the Virtual Trader (VT) and the Digital Persona (DP)—on time-locked inputs.
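As a minimal sketch of how the overconfidence risk could be quantified, assume that each LAT, VT, or DP decision carries a stated success probability and a realized 0/1 outcome. The block below computes a calibration gap (mean confidence minus hit rate) and a Brier score, then differences them against a baseline agent. The function names are hypothetical. These two statistics are common calibration measures and are not necessarily the metrics of Section 4.4. Over-precision and confirmation bias would need separate measures.

```python
from statistics import fmean

def calibration_metrics(confidences, outcomes):
    """Return (overconfidence_gap, brier_score) for one agent or investor.

    confidences: stated probabilities in [0, 1] that each decision succeeds.
    outcomes:    realized results coded 1 (success) or 0 (failure).
    """
    if not confidences or len(confidences) != len(outcomes):
        raise ValueError("need equally long, non-empty inputs")
    # Positive gap: stated confidence exceeds the realized hit rate.
    gap = fmean(confidences) - fmean(outcomes)
    # Brier score: mean squared error of the probability forecasts (lower is better).
    brier = fmean((c - o) ** 2 for c, o in zip(confidences, outcomes))
    return gap, brier

def bias_delta(agent, baseline):
    """Element-wise difference of metric tuples, e.g. LAT minus VT or LAT minus DP."""
    return tuple(a - b for a, b in zip(agent, baseline))
```

For example, `bias_delta(calibration_metrics(lat_conf, lat_hits), calibration_metrics(vt_conf, vt_hits))` yields a positive first element when the LLM-augmented trader is more overconfident than the Virtual Trader on the same time-locked inputs.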

In Equation (1), RaF motivates tests for execution-side divergence conditional on intentions (LLM engagement, PCA) and their interaction; episodes consistent with RaF should coincide with short-run deviations in behavior even when planned intentions are stable. In Equation (2), RaF predicts temporary spikes in ΔVolExposure and, at times, higher concentration within the Behavioral Shift Index (BSI), while the TAM path (Perceived Usefulness/Ease of Use) predicts sustained increases in ΔFrequency and ΔMultiLeg via higher LLM reliance. Together these AFT-RaF and TAM channels yield complementary, falsifiable sign patterns that the BSI can track over time.

2.5. Behavioral Shift Index (BSI): Empirical Operationalization

To connect psychological shifts with observable market behaviors, we develop the BSI—a composite, time-series-friendly measure that quantifies the extent to which LLM adoption alters investor strategy (see Appendix A):

Symbols: i indexes investors; t indexes time; wk ≥ 0 and ∑wk = 1; MultiLegi,t = share of option trades that are multi-leg; Frequencyi,t = option-trade count per period; Concentrationi,t = portfolio concentration across underlyings; VolExposurei,t = volatility exposure proxy (e.g., share of open positions with material vega or a designated vol intensity score).
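Read together with the computation described below in this section, the symbol list implies a weighted sum of standardized component changes. A minimal sketch of that form follows. It assumes that a tilde denotes a standardized period-over-period change (our notation) and that all four components, including concentration, enter with the listed non-negative weights. The text here does not fix either the standardization scheme or the sign convention for concentration, so Appendix A governs.

```latex
\mathrm{BSI}_{i,t} = \sum_{k=1}^{4} w_k \,\widetilde{\Delta X}_{k,i,t},
\qquad \Delta X_{k,i,t} = X_{k,i,t} - X_{k,i,t-1},
\qquad w_k \ge 0,\ \ \sum_{k=1}^{4} w_k = 1,
\\
X_{1} = \mathrm{MultiLeg},\quad X_{2} = \mathrm{Frequency},\quad X_{3} = \mathrm{Concentration},\quad X_{4} = \mathrm{VolExposure}.
```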

This index instantiates the theoretical lenses as observable deltas: TPB constructs (attitude, norms, perceived control via PCA) map to ΔFrequency and ΔMultiLeg; TAM operates upstream by shaping LLM engagement intensity; and Risk-as-Feelings predicts short-horizon divergence, anticipated as spikes in ΔVolExposure. Accordingly, the BSI serves as the outcome-side counterpart to Equation (1) when testing whether LLM exposure and PCA are associated with measurable shifts in trading behavior. The BSI thus enables time-series and cross-sectional comparisons across investor cohorts with varying levels of LLM usage, linking psychological frameworks to observable market data, a methodological advancement rarely implemented in TPB-based financial studies. To compute the BSI for an investor or a cohort, we take period-over-period changes from trading logs in (i) option-trade frequency, (ii) the share of multi-leg strategies, (iii) concentration across underlyings, and (iv) option risk exposure (for example, a higher share of trades that increase overall leverage). These components are standardized and combined with weights declared before analysis.

What the index should show in practice. If LLM assistance genuinely lowers the effort required to use complex tactics, the BSI should rise when LLM use and perceived assistance rise. Among its components, we expect (i) more multi-leg constructions, (ii) more overall attempts, and (iii) in short, emotionally charged windows, more use of volatility-linked positions. Concentration may drop at first as investors experiment across several tactic families, and then stabilize as learning consolidates. The direction of change in the BSI should be consistent with the positive sign patterns tested in Equation (1).

Falsification and limits. These expectations are easy to disconfirm. The claim is not supported if the index and its components do not move as expected following increases in LLM use. It is likewise not supported if any movement disappears once basic checks for cost, risk, liquidity, and execution quality are applied. We do not assert durable, repeatable excess returns at the market level; where temporary effects appear, they should fade as participants adapt. This defines what the propositions do and do not predict and provides a clear basis for later empirical checks.

In addition to these continuous proxies, we code each trade with a Strategy Structural Complexity (SSC) label on a four-level ordinal scale, C0–C3. SSC is used for descriptive distributions and robustness checks (e.g., the share of trades at C2–C3), while ΔMultiLeg and ΔVolExposure remain the primary BSI complexity proxies.
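The computation described above can be sketched in Python under two stated assumptions. First, component changes are standardized cross-sectionally, that is, across investors within a period. Second, the four components enter with the pre-declared non-negative weights. The text states only that components are standardized, so within-investor standardization over time would be an equally valid choice. The component keys and function names are hypothetical.

```python
from statistics import fmean, pstdev

COMPONENTS = ("MultiLeg", "Frequency", "Concentration", "VolExposure")

def period_deltas(prev, curr):
    """Period-over-period change X_t - X_{t-1} for each BSI component."""
    return {k: curr[k] - prev[k] for k in COMPONENTS}

def bsi_scores(deltas_by_investor, weights):
    """Standardize each component delta across investors, then take the weighted sum.

    deltas_by_investor: {investor_id: {component: delta}} for a single period t.
    weights: {component: w_k}, non-negative and summing to 1 (declared ex ante).
    """
    if any(w < 0 for w in weights.values()) or abs(sum(weights.values()) - 1) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    ids = list(deltas_by_investor)
    z = {i: {} for i in ids}
    for k in COMPONENTS:
        col = [deltas_by_investor[i][k] for i in ids]
        mu, sd = fmean(col), pstdev(col)
        for i, x in zip(ids, col):
            # A component with no cross-sectional variation contributes 0.
            z[i][k] = (x - mu) / sd if sd > 0 else 0.0
    return {i: sum(weights[k] * z[i][k] for k in COMPONENTS) for i in ids}
```

With equal weights (`0.25` per component), an investor whose changes exceed the cohort mean on every component receives a positive BSI, and one below the mean on every component receives a negative BSI.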

2.6. Proposed Diagnostic Framework for Detecting Behavioral Shifts: Integrating Efficient Market Hypothesis and Adaptive Market Hypothesis

While the TPB, PCA, Risk-as-Feelings Theory, and TAM offer robust psychological accounts of how LLMs alter individual-level intentions and behaviors, they do not address market consequences. Specifically, these frameworks are not designed to evaluate whether LLM-induced behavioral shifts among retail investors materialize in price formation, volatility dynamics, or the informational efficiency of markets. Assessing these consequences requires a market-oriented framework. Such a framework must identify deviations from random pricing, test for persistent performance anomalies, and distinguish behavioral distortions from adaptive learning. This diagnostic need is particularly acute in the context of technological discontinuities, where behavioral changes may be rapid, widespread, and nonlinear. In this study, we adopt a dual-framework approach grounded in financial market theory: the Efficient Market Hypothesis (EMH) (Fama, 1970; Abdullahi, 2021) and the Adaptive Markets Hypothesis (AMH) (A. W. Lo, 2004). These two perspectives serve complementary functions. The EMH provides a null hypothesis framework for testing whether retail behavior, augmented by LLMs, generates abnormal returns or price patterns inconsistent with informational efficiency. The AMH, in contrast, offers an interpretive model that explains such deviations not as irrationality but as the result of boundedly rational adaptation to changing cognitive and technological conditions.

This dual integration allows us to move beyond individual cognition and intention formation toward diagnosing whether the cumulative behavioral effects of LLM adoption (particularly changes in strategy complexity, timing, and coordination) leave observable traces in market structure. Our aim is not to model systemic behavior directly, but to assess whether LLM-induced retail activity contributes to detectable anomalies such as return persistence, volatility clustering, or adaptive convergence. In this sense, the EMH and AMH serve as complementary diagnostic tools: one identifies statistical deviations, and the other interprets them through boundedly rational adaptation.

2.6.1. EMH as Baseline Diagnostic Framework

The EMH serves as a null model against which persistent trading advantages or price anomalies linked to LLM use can be tested. The operational diagnostics we apply are summarized in Table 2. In its weak form, the EMH posits that historical price information is fully incorporated into current prices, rendering trend-following ineffective (Fama, 1970; Showalter & Gropp, 2019). The semi-strong form extends this logic to all publicly available information, precluding informational arbitrage (Diamond & Perkins, 2022).

Table 2.

EMH Operational Diagnostics.

With these tests in place, the EMH serves as a null hypothesis framework. If LLM-augmented trades do not yield statistically significant or repeatable performance gains, the evidence is consistent with informational efficiency. If anomalies are observed, however, they suggest a deviation from pure market rationality, which is precisely where the AMH becomes relevant. At the market level, the contribution is a dual-frame diagnostic: the EMH provides the null (no persistent alpha or anomalies), while the AMH interprets any transients as adaptive learning. This makes the micro-level predictions from Equation (1) and the BSI refutable against macro evidence.
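Table 2 lists the specific diagnostics. One standard weak-form check, offered here as an illustration and not necessarily one of the diagnostics in that table, is the variance ratio associated with Lo and MacKinlay. It compares the variance of q-period returns with q times the one-period variance. Under a random walk the ratio is near 1, values above 1 point to momentum, and values below 1 point to mean reversion. This sketch omits the bias corrections and heteroskedasticity-robust standard errors that a real test would require.

```python
from statistics import fmean

def variance_ratio(returns, q):
    """Simple (uncorrected) variance ratio VR(q) for one-period returns.

    VR(q) ~ 1 under weak-form efficiency (random walk);
    > 1 suggests positive autocorrelation, < 1 suggests mean reversion.
    """
    n = len(returns)
    if q < 2 or n < q + 1:
        raise ValueError("need q >= 2 and more than q observations")
    mu = fmean(returns)
    var1 = sum((r - mu) ** 2 for r in returns) / n
    if var1 == 0:
        raise ValueError("returns have zero variance")
    # Overlapping q-period return sums, centered on q times the mean.
    q_sums = [sum(returns[t:t + q]) for t in range(n - q + 1)]
    varq = sum((s - q * mu) ** 2 for s in q_sums) / len(q_sums)
    return varq / (q * var1)
```

A strictly alternating series such as `[1, -1] * 10` gives `VR(2) = 0.0`, the extreme of mean reversion.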

2.6.2. AMH as an Evolutionary Framework

The AMH reconceptualizes market efficiency as dynamic and context-dependent, shaped by cycles of agent adaptation, feedback effects, and technological innovation (A. W. Lo, 2004). Unlike the EMH, which assumes stable, rational processing of available information, the AMH accounts for cognitive limitations, path dependence, and environmentally conditioned learning in financial behavior (A. Lo, 2004). LLMs represent a cognitive inflection point in this evolutionary process. They collapse informational silos, simulate expert reasoning, and scaffold complex strategy development through interactive and multimodal feedback (Z. Wang et al., 2023; Bewersdorff et al., 2025). These behavioral changes may temporarily produce patterns inconsistent with traditional EMH formulations (e.g., clustered returns, volatility overshoots). The AMH, however, views such anomalies as part of an adaptive cycle, not as evidence of market irrationality. Market participants, once exposed to new tools or conditions, learn, adapt, and modify their behavior over time (Khuntia & Pattanayak, 2018; A. W. Lo, 2019). The AMH thus provides an interpretive lens for determining whether LLM-induced deviations are transient signals of adaptation or signs of persistent inefficiency. Table 3 outlines illustrative diagnostics grounded in AMH logic.

Table 3.

AMH Operational Diagnostics.

Ultimately, the AMH complements the EMH by interpreting observed deviations not as violations, but as learning dynamics. This dual-frame approach supports empirical efforts to disentangle short-term distortions from long-run cognitive evolution, providing a behavioral bridge between micro-level strategy shifts and macro-level financial adaptation.
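A common way to operationalize AMH-style time variation is to track return predictability across successive windows. Predictability that appears after a shock and then fades is read as adaptation, while predictability that persists is read as inefficiency. The sketch below illustrates this idea only and is not a reproduction of Table 3. The window length, step, decay tolerance, and function names are hypothetical choices, and the `decays` rule is deliberately crude.

```python
from statistics import fmean

def lag1_autocorr(x):
    """Sample first-order autocorrelation of a sequence."""
    mu = fmean(x)
    den = sum((v - mu) ** 2 for v in x)
    if den == 0:
        return 0.0
    num = sum((x[t] - mu) * (x[t - 1] - mu) for t in range(1, len(x)))
    return num / den

def rolling_autocorr(returns, window, step):
    """Lag-1 autocorrelation in successive (possibly overlapping) windows."""
    return [lag1_autocorr(returns[s:s + window])
            for s in range(0, len(returns) - window + 1, step)]

def decays(series, tol=0.05):
    """Crude adaptation check: the last window's |autocorr| is small and below the first's."""
    return abs(series[-1]) < tol and abs(series[-1]) < abs(series[0])
```

Under this reading, a series of window autocorrelations that starts high and ends near zero is consistent with adaptive convergence. A series that remains high would instead be flagged for the EMH-style persistence tests.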

3. Results

As a conceptual article, we report model-implied results rather than sample estimates. These model-implied results matter because they translate the theory into precise, falsifiable sign tests and platform-design checks to be executed in the preregistered empirical phase. The model predicts that exposure to LLM assistance and perceived cognitive assistance jointly increase adoption of structurally complex strategies; in Equation (1), this corresponds to positive main effects for LLM engagement and perceived control/assistance, and a positive interaction, with signs stated for later falsification. At the behavior level, Equation (2) (BSI) should rise with LLM exposure and be stronger when perceived assistance is high. At the market level, persistent alpha or systematic post-entry drift would challenge weak-form efficiency, whereas transient patterns are consistent with adaptive dynamics. These implications set out the testable contribution that later empirical work will adjudicate. In practice, this frames investor-protection and platform-design implications—especially where confidence may rise faster than competence—and links directly to the efficiency diagnostics specified in the manuscript for distinguishing adaptive transients from persistent anomalies.

Under the TAM path, higher perceived usefulness/ease should raise LLM uptake and reliance; in Equation (1) this implies positive coefficients on the LLM engagement term (and its interaction with PCA) and, in Equation (2), increases in ΔFrequency and the share of multi-leg strategies. Under the Affective Finance/Risk-as-Feelings path, short-horizon affective swings should manifest as transient increases in ΔVolExposure and, at times, higher concentration—indicating execution that departs from prior plan. PCA moderates these effects: when perceived capability is calibrated, we expect complexity uptake without destabilizing risk shifts; when PCA outpaces actual skill, we expect confidence-led complexity and volatility exposure that the BSI will flag. Taken together, Equation (1) yields falsifiable sign predictions on engagement and moderation, and the BSI provides a time-series diagnostic distinguishing capability-driven from affect-driven shifts.
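One way to operationalize the capability-versus-affect distinction is to ask whether a component's post-exposure movement persists or reverts. The sketch below labels a movement as none, transient, or sustained. Under the predictions above, a transient label on VolExposure fits the Risk-as-Feelings path, and a sustained label on Frequency or MultiLeg fits the TAM path. The window lengths, the k-standard-deviation threshold, and the function name are hypothetical.

```python
from statistics import fmean, pstdev

def classify_shift(series, event_idx, pre=6, post=6, k=2.0):
    """Label a post-event movement in one BSI component: 'none', 'transient', or 'sustained'.

    series: component levels over time (e.g., VolExposure or MultiLeg share).
    event_idx: index of the exposure event (e.g., onset of heavy LLM use).
    Hypothetical rule: a value above pre-mean + k * pre-sd counts as a shift;
    it is 'sustained' if the second half of the post window stays above that
    threshold on average, otherwise 'transient'.
    """
    if event_idx < pre or event_idx + post > len(series) or post < 2:
        raise ValueError("need `pre` observations before and `post` (>= 2) after the event")
    base = series[event_idx - pre:event_idx]
    after = series[event_idx:event_idx + post]
    thresh = fmean(base) + k * pstdev(base)
    if max(after) <= thresh:
        return "none"
    tail = after[len(after) // 2:]
    return "sustained" if fmean(tail) > thresh else "transient"
```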

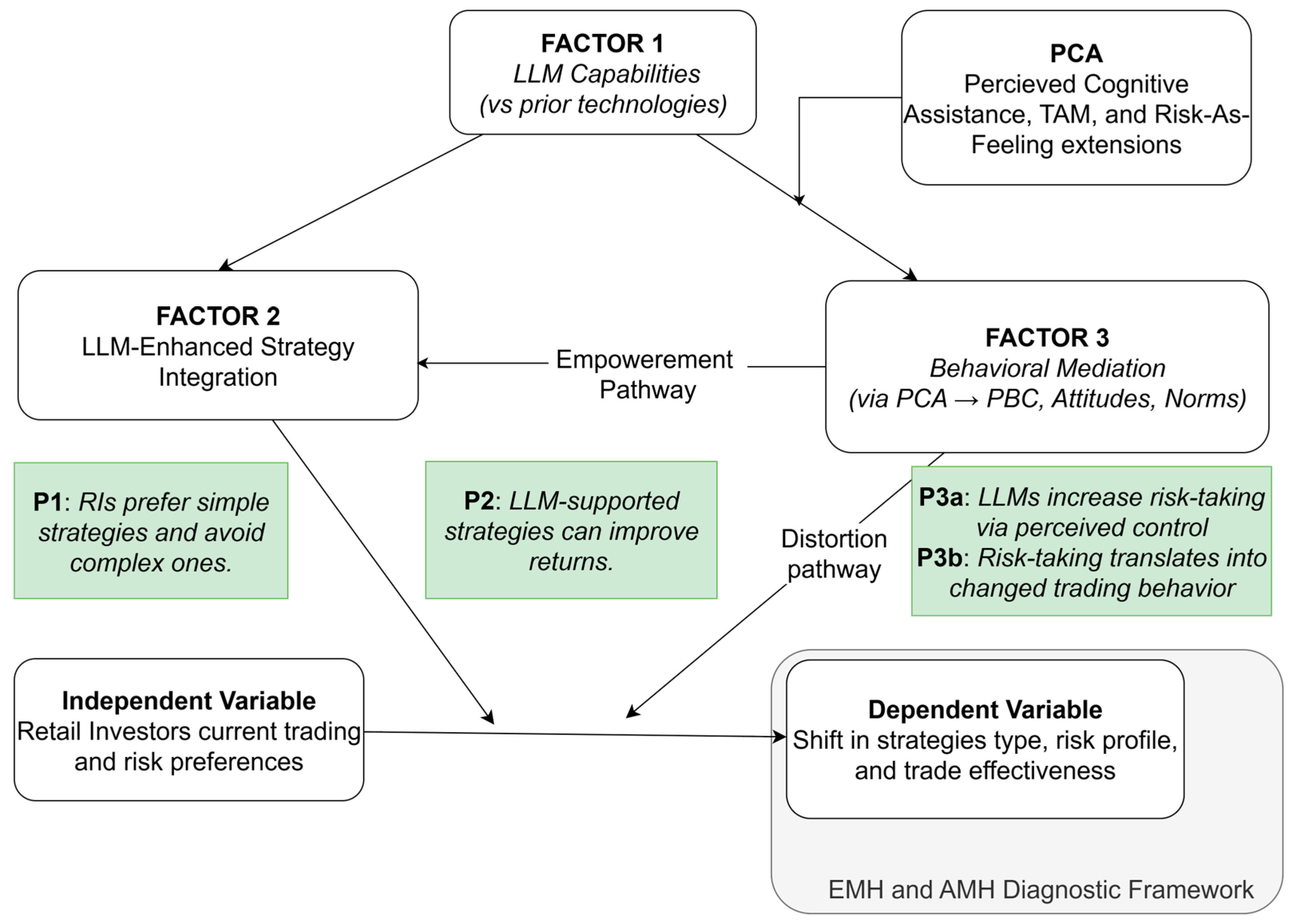

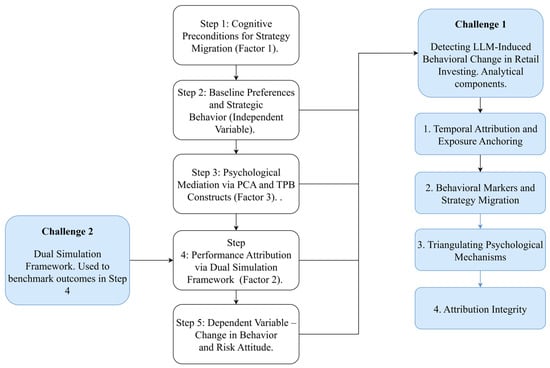

Theoretical Model—LLM Impact on Retail Investor Strategy Migration

The statements below answer the central research question by specifying falsifiable sign predictions in Equation (1), expected movements in the Behavioral Shift Index (Equation (2)), and market-level implications to be interpreted with the EMH/AMH diagnostics, with empirical estimation reserved for the preregistered program. This study advances a behavioral finance model that theorizes how LLMs facilitate strategic migration among retail investors—particularly the shift from heuristic-based, low-complexity strategies to structurally sophisticated approaches. Strategic complexity denotes the structural and procedural sophistication of a trade. Higher strategic complexity is reflected by moving beyond single-leg or simple two-leg directional setups toward multi-leg constructions, explicit volatility posture or time-staging (e.g., calendars/diagonals), and path-dependent or delta-neutral structures that require cross-Greek management. Empirically, we will label each executed or recommended trade with a four-level Strategy Structural Complexity (SSC) code (C0–C3) and use Equation (1) to test whether higher PCA is associated with a greater likelihood of selecting C2–C3 structures, conditional on controls. The model, depicted in Figure 1, is grounded in an extended TPB, enriched by the novel construct of PCA, and positioned within a dual diagnostic framework informed by the EMH and the AMH. Here, ‘implementation’ means operationalizing the model into estimable forms: an individual-level moderated regression (Equation (1)) and a cohort-level diagnostic index (Equation (2)), accompanied by explicit sign predictions and a preregistered estimation plan. In reduced form, we evaluate whether the BSI varies with LLM engagement (as shaped by Technology Acceptance Model constructs—Perceived Usefulness and Perceived Ease of Use) and PCA, including their interaction, conditional on controls.
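The SSC coding can be sketched as a small rule-based classifier. The mapping below is a hypothetical reading of the complexity gradient just described:

- C0: single-leg trades.
- C1: simple two-leg directional setups.
- C2: multi-leg, time-staged (calendar/diagonal), or explicitly volatility-oriented structures.
- C3: delta-neutral or path-dependent structures requiring cross-Greek management.

The boundaries between levels, the trade fields, and all names are assumptions, not the study's codebook.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class OptionTrade:
    n_legs: int               # number of option legs in the structure
    mixed_expiries: bool      # legs span more than one expiry (calendar/diagonal)
    targets_volatility: bool  # explicit long/short volatility posture (e.g., straddle)
    delta_neutral: bool       # managed as delta-neutral or path-dependent (cross-Greek)

def ssc_code(trade: OptionTrade) -> str:
    """Hypothetical mapping of one trade to the ordinal SSC scale C0-C3."""
    if trade.n_legs < 1:
        raise ValueError("a trade needs at least one leg")
    if trade.delta_neutral:
        return "C3"  # path-dependent or delta-neutral, cross-Greek management
    if trade.n_legs >= 3 or trade.mixed_expiries or trade.targets_volatility:
        return "C2"  # multi-leg, time-staged, or explicit volatility posture
    if trade.n_legs == 2:
        return "C1"  # simple two-leg directional setup (e.g., a vertical spread)
    return "C0"      # single-leg directional trade

def share_c2_c3(trades):
    """Share of trades coded C2 or C3, a robustness statistic alongside the BSI."""
    if not trades:
        raise ValueError("no trades to code")
    return sum(ssc_code(t) in ("C2", "C3") for t in trades) / len(trades)
```

The C2 and C3 share produced by `share_c2_c3` is the kind of outcome Equation (1) would relate to PCA when testing whether higher perceived assistance predicts selecting C2 and C3 structures.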

Figure 1.

Theoretical Model.

At the core of the model lies a foundational relationship between the Independent Variable (IDV)—retail investors’ current trading preferences and risk attitudes—and the Dependent Variable (DV), defined as observable changes in strategy type, risk profile, and trade effectiveness. Empirical research demonstrates that retail investors, in the absence of external cognitive augmentation, systematically favor intuitive, simple strategies that minimize analytical burden and perceived complexity. These include approaches such as price momentum, dividend investing, and passive ETF allocations, which are accessible without requiring advanced knowledge of market microstructure, derivatives, or volatility dynamics (Foltice & Langer, 2015; D’Hondt et al., 2023; Chui et al., 2022).

This behavioral asymmetry reflects the cognitive and procedural barriers that limit retail engagement with complex instruments, including multi-leg options, volatility overlays, and delta-neutral strategies. The direct IDV → DV pathway thus anticipates that retail investors, constrained by their baseline cognitive resources and risk preferences, will predominantly select low-complexity strategies and avoid structurally sophisticated approaches. This leads to predictable patterns in strategy composition, portfolio concentration, and risk exposure.

Proposition 1.

Retail investors, without cognitive augmentation, exhibit a systematic preference for simple, intuitive strategies and avoid structurally complex approaches.

The introduction of LLMs, however, modifies this direct relationship by introducing three interrelated mechanisms that mediate and moderate the pathway from baseline investor preferences to observable strategic behavior.

Factor 1: LLM Capabilities. LLMs provide a qualitatively distinct form of cognitive scaffolding compared to prior retail-facing tools. Unlike static information platforms or rule-based advisors, LLMs offer real-time, dialogic, and multimodal reasoning support. These affordances enable users to simulate trading scenarios, integrate technical and macroeconomic signals, and comprehend complex instruments that would otherwise exceed unaided understanding.

Factor 2: LLM-Enhanced Strategy Integration. The cognitive scaffolding provided by LLMs reduces interpretive and procedural complexity, facilitating operational adoption of advanced strategies. Retail investors, supported by LLMs, can incorporate volatility-linked instruments, multi-leg derivatives, or delta-neutral positions into their decision routines—often embedding these within familiar heuristic frameworks.

Proposition 2.

LLM-supported strategies enable retail investors to operationalize structurally complex approaches, improving accessibility and the potential for enhanced risk-adjusted returns.

Factor 3: Behavioral Mediation via PCA and Moderating Role of LLM Capabilities. The behavioral impact of LLMs on retail investor strategy selection is conceptualized as part of a moderated psychological process. This model introduces PCA as a novel subdimension of PBC, capturing the investor’s subjective belief that complex tasks become tractable with intelligent system support, even in the absence of true domain mastery. PCA functions as a first-stage moderator, shaping the strength of the relationship between investors’ baseline predispositions—such as risk appetite, cognitive orientation, and strategy familiarity—and their willingness to adopt structurally complex approaches. Under conditions of high PCA and accessible LLM support, investors perceive greater self-efficacy and reduced cognitive barriers, increasing the likelihood of engaging with complex strategies such as volatility overlays or multi-leg derivatives. Conversely, when PCA is low or LLM capabilities are absent or underutilized, the cognitive and procedural barriers that constrain strategic migration remain intact.

Proposition 3a.

PCA moderates the relationship between baseline investor predispositions and strategic behavior; higher PCA strengthens the likelihood of adopting complex, risk-intensive strategies.

Proposition 3b.

The moderating effect of PCA is itself conditioned by LLM capabilities; greater LLM accessibility and functionality amplify the influence of PCA on behavioral outcomes.

These mechanisms operate along two distinct pathways. The Empowerment Pathway reflects the democratizing potential of LLMs to enhance access to institutional-grade strategies, supporting performance gains and strategic sophistication. The Distortion Pathway, by contrast, highlights the risk of behavioral miscalibration, where inflated perceptions of competence outpace actual understanding—amplifying risk-taking, overconfidence, and suboptimal decision-making. These pathways are conditional, not universal. The shift toward complex strategies should be muted or absent when costs or frictions are high, when risk is unusually punitive, when tools or data are unreliable, or when the investor does not feel meaningfully supported by the system. In emotionally intense episodes, short-term surges in risk-taking can occur even if long-run preferences do not change; this is the distortion side of the model.

Our claims are refutable. In later tests, we expect to see more frequent attempts, greater use of multi-leg constructions, and—especially in short, emotional episodes—more use of volatility-linked positions when LLM use and perceived assistance are higher. If these patterns do not appear, or if any apparent effects vanish once basic cost, risk, and execution checks are applied, the model is not supported. At the market level, we do not claim durable, repeatable abnormal returns; where temporary patterns arise, they should fade as participants adapt.

In sum, the model offers a causally structured and empirically testable account of how LLMs reshape retail investor behavior—not only by altering cognitive capacity but by fundamentally restructuring the psychological conditions under which complex strategies are adopted or avoided. Through its integration of PCA, TPB extensions, and market-level diagnostics via the EMH and AMH, the model enables evaluation of whether LLM-driven strategic migration constitutes an adaptive evolution or introduces new forms of behavioral distortion in retail trading ecosystems.

4. Discussion and Future Research Agenda

The model’s contribution is to specify a falsifiable link from LLM exposure and perceived assistance to observable shifts in retail trading complexity, with explicit estimators (Equations (1) and (2)) and an identification plan suitable for post-LLM environments. We emphasize boundaries: perceived uplift may outpace competence; intention–behavior gaps can widen under affective pressure; and index-level diagnostics must separate transient adaptation from persistent anomalies. Interpreting individual-level findings against the EMH/AMH diagnostics offers a principled way to distinguish short-run distortions from longer-run learning. The empirical phase is deliberately deferred; the present contribution is the operational mapping from theory to tests under a preregistered, auditable workflow.

4.1. Empirical Validation of the Theoretical Model

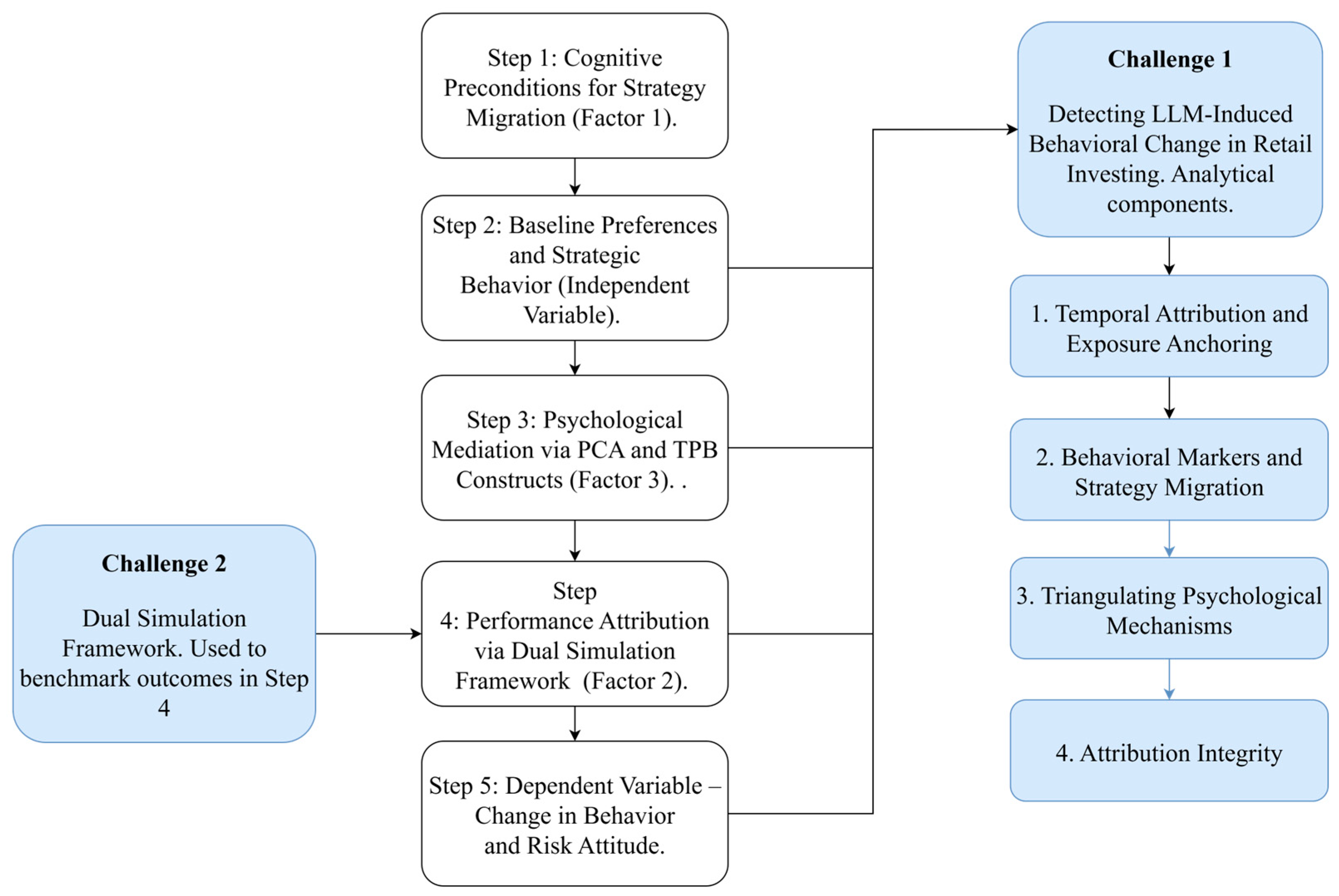

This study develops a behavioral finance model of how LLMs influence retail investor behavior. The model integrates constructs from the TPB, the TAM, Risk-as-Feelings, and PCA to explain strategy migration from intuitive, low-complexity approaches toward higher-complexity option strategies and volatility-linked instruments. The contribution of this article is conceptual-methodological: a fully operationalized, falsifiable architecture comprising an individual-level moderated regression (Equation (1)) and a Behavioral Shift Index (Equation (2)), with directional sign tests and an identification blueprint. Equation (1) will test whether exposure to LLM assistance and perceived cognitive assistance (PCA) predict the behavioral measures, and whether their interaction is positive as hypothesized. Equation (2) will provide a composite index for within-investor and cohort comparisons. In parallel, we will report investor-level bias-metric deltas (calibration, selective exposure, disposition) for LAT vs. VT/DP, so that any observed ‘confidence lift’ can be distinguished from genuine capability gains. Inference will be robust to repeated observations per investor, and we will use investor-specific and time effects to control for unobserved heterogeneity and common shocks. Timing checks and sensitivity analyses will probe identification. At the market level, results will be interpreted against the efficient- and adaptive-market diagnostics already specified in the manuscript. Empirical results are intentionally out of scope; the empirical program is specified as a preregistered agenda.

To translate the model’s causal logic into observable outcomes, we propose a five-step agenda (Figure 2) addressing two empirical challenges. The first is detection of LLM-induced behavioral change: whether exposure to LLMs is associated with measurable shifts in strategy complexity, risk-taking, and execution patterns. The second is causal benchmarking under post-LLM diffusion: a valid comparison of LLM-assisted trades with bounded-human counterfactuals when live control groups are infeasible. Our solution to the second challenge is a replicable dual-agent benchmark (Virtual Trader and Digital Persona) that isolates decision-maker effects. For falsifiability, we preregister directional signs for Equation (1): β1 > 0 (LLM main effect), β2 > 0 (PCA), and β3 > 0 (LLM × PCA). We also expect the BSI to increase with LLM exposure and to be amplified by PCA. Failure to observe these patterns would refute the model.
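Equation (1) is specified earlier in the manuscript. Assuming it takes the usual moderated form Y = β0 + β1·LLM + β2·PCA + β3·(LLM × PCA) + ε, the sketch below estimates the coefficients by ordinary least squares and applies the preregistered sign test. It is deliberately minimal. It omits the controls, investor and time fixed effects, and clustered standard errors that the text calls for, so it yields point estimates only. A production analysis would use a standard econometrics library with cluster-robust inference. All function names are hypothetical.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    X: list of rows (each row lists the regressors, with a leading 1 for the intercept).
    Solved by Gauss-Jordan elimination with partial pivoting; adequate for a few regressors.
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[piv][col]) < 1e-12:
            raise ValueError("regressors are collinear")
        A[col], A[piv] = A[piv], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(k):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    return [A[i][k] for i in range(k)]

def design_row(llm, pca):
    """Intercept, LLM engagement, PCA, and the LLM x PCA interaction."""
    return [1.0, llm, pca, llm * pca]

def signs_as_predicted(beta):
    """Preregistered directional test: beta1, beta2, and beta3 all strictly positive."""
    return all(b > 0 for b in beta[1:4])
```

In use, each investor-period contributes one `design_row(llm, pca)` and one outcome (for example, a C2 and C3 indicator in a linear probability specification, or the BSI). `signs_as_predicted(ols(rows, ys))` then returns whether the point estimates match the preregistered signs.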

Figure 2.

Five-step empirical research agenda.

The five-step agenda is structured to ensure logical alignment with the theoretical model. Step 1 tests the enabling role of LLM capabilities (Factor 1) in reducing cognitive barriers to strategic complexity. Step 2 validates the baseline condition (Independent Variable) of retail investors’ historical preference for intuitive, low-risk strategies. Step 3 examines psychological mediation (Factor 3) via PCA and TPB constructs. Step 4 operationalizes performance attribution and tests LLM-enabled strategy integration (Factor 2) using the Dual Simulation Framework. Finally, Step 5 captures the dependent variable—observable changes in behavior and risk attitude—while embedding the analytical components necessary to address the detection and attribution of LLM-induced behavioral change.

Step 1: Cognitive Preconditions for Strategy Migration (Factor 1). The first step assesses whether LLMs reduce cognitive barriers that have historically limited retail engagement with complex instruments. This investigation draws on thematic literature reviews in AI accessibility, human–computer interaction, and fintech adoption. Comparative case studies of LLM-based tools are used to evaluate whether LLMs facilitate real-time reasoning, knowledge democratization, and cognitive scaffolding in financial decision-making. This step tests the foundational role of LLM capabilities (Factor 1) as the initiating mechanism in behavioral migration.

Step 2: Baseline Preferences and Strategic Behavior (Independent Variable). The second step empirically validates the baseline condition central to the theoretical model: retail investors’ historical preference for intuitive, low-complexity strategies. Desk research and market analysis document how platform design, user interface affordances, and limited cognitive resources have nudged retail investors toward momentum trading, dividend capture, and other heuristic-based approaches. This step establishes the counterfactual from which LLM-induced behavioral change is evaluated.

Step 3: Psychological Mediation via PCA and TPB Constructs (Factor 3). Building on the model’s psychological foundations, the third step investigates how LLM exposure alters investor psychology. Survey instruments based on the TPB will be designed to measure changes in TPB constructs—augmented by the PCA construct, which captures AI-scaffolded self-efficacy. Optional modules, including expert interviews and field experiments, assess confidence calibration and perceived competence. This step operationalizes Factor 3, testing the mediating role of PCA in enabling behavioral migration.

Step 4: Performance Attribution via Dual-Agent Simulation (Factor 2). The fourth step addresses the second challenge. It tests whether LLM-assisted trades outperform human baselines using a dual-agent simulation framework comprising a VT and a DP (see Section 2.3, Appendix B). The VT applies empirically grounded cognitive degradations to model bounded rationality, representing a lower-bound human counterfactual. The DP simulates psychologically plausible, LLM-independent decision-making through structured prompting. This simulation framework operationalizes Factor 2 of the theoretical model by evaluating whether LLMs facilitate superior strategy integration and decision quality. Performance is benchmarked using Sharpe ratios, drawdown metrics, and trade consistency. Triangulated inference logic distinguishes true cognitive uplift from heuristic mimicry or distortion effects.
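Two of the benchmarking metrics named in Step 4 can be sketched with the Python standard library. The sketch assumes per-period simple returns for each agent on identical, time-locked inputs, a per-period risk-free rate, and 252 periods per year for annualization. Trade consistency is left out because the text does not define it. The `benchmark` helper is hypothetical.

```python
from math import sqrt
from statistics import fmean, stdev

def sharpe_ratio(returns, rf=0.0, periods_per_year=252):
    """Annualized Sharpe ratio from per-period returns and a per-period risk-free rate."""
    excess = [r - rf for r in returns]
    sd = stdev(excess)  # sample standard deviation; needs at least two returns
    if sd == 0:
        raise ValueError("zero volatility")
    return fmean(excess) / sd * sqrt(periods_per_year)

def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded equity curve, as a positive fraction."""
    equity, peak, mdd = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1 + r
        peak = max(peak, equity)
        mdd = max(mdd, 1 - equity / peak)
    return mdd

def benchmark(agents):
    """agents: {"LAT": [...], "VT": [...], "DP": [...]} -> per-agent metric dictionary."""
    return {name: {"sharpe": sharpe_ratio(r), "max_dd": max_drawdown(r)}
            for name, r in agents.items()}
```

For example, `max_drawdown([0.1, -0.5, 0.2])` is 0.5: the equity curve peaks at 1.1 and then falls to 0.55.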

Step 5: Dependent Variable—Change in Behavior and Risk Attitude. The final step captures the dependent variable: measurable shifts in retail investor behavior and risk orientation. Change is operationalized via the BSI, trading frequency, portfolio concentration, and volatility exposure. This step incorporates the full apparatus needed to address the first challenge, structured around four analytical components: (1) temporal attribution and exposure anchoring; (2) identification of behavioral markers and strategy migration; (3) triangulation of psychological mechanisms via PCA diagnostics; and (4) attribution integrity using matched-asset and macro-event controls. These processes ensure that observed behavioral changes are causally linked to LLM exposure rather than confounded by exogenous factors. Market-level diagnostics are incorporated to evaluate whether individual behavioral shifts aggregate into detectable market anomalies. EMH tests assess deviations from informational efficiency, while AMH diagnostics interpret such deviations as boundedly rational adaptation or reflexive feedback. Together, these frameworks support attribution across both micro (behavioral) and macro (market) levels, distinguishing cognitive uplift from collective distortion or convergence effects.

The empirical agenda integrates both quantitative and qualitative methodologies to ensure comprehensive validation of the proposed model. The initial steps employ established techniques including thematic literature reviews, comparative case studies, and desk research, widely recognized for their role in theory-driven behavioral investigation and technology evaluation (Webster & Watson, 2002; R. K. Yin, 2018). Survey instruments and field experiments grounded in the Theory of Planned Behavior (Ajzen, 1991) and validated through psychometric standards (DeVellis, 2016) capture psychological mediation and perceived cognitive assistance. The simulation-based performance attribution and market-level diagnostics build on rigorous agent-based and econometric methods, detailed in Appendix B and Appendix C. This mixed-method design reflects best practices in behavioral finance and information systems research, supporting both construct validity and empirical robustness across micro- and macro-level analyses.

Taken together, this five-step agenda provides a coherent, theoretically grounded, and empirically rigorous framework for validating the proposed model. By integrating psychological mediation, simulation-based benchmarking, and market diagnostics, the agenda enables causal inference in environments where traditional control groups are infeasible, advancing both theoretical understanding and methodological practice in LLM-augmented retail investing research.

4.2. Detecting LLM-Induced Behavioral Change in Retail Investing

This section operationalizes the causal pathway: LLM Exposure → Psychological Mediators (TPB, PCA) → Behavioral Shift (BSI) → Market Diagnostics (EMH/AMH). Appendix A and Appendix C provide full operational, statistical, and formulaic details; here we focus on theoretical anchoring and empirical linkage.

- Temporal Exposure Anchoring. The launches of ChatGPT (November 2022) and GPT-4 (March 2023) serve as exogenous structural breaks in time-series analyses of retail trading behavior. We follow event-study methodology refined for behavioral finance, aligning periods of LLM diffusion with shifts in investor activity. Diffusion intensity is proxied by LLM-related search and engagement metrics (e.g., Google Trends, Reddit, YouTube), a strategy grounded in the literature on technology adoption and investor attention (Kirtac & Germano, 2024).

- Strategy Shifts in Retail Trading (BSI Changes). Behavioral indicators, such as multi-leg options trading, increased turnover, portfolio concentration, and reduced holding durations, are used to capture shifts in investor strategies (Lopez-Lira & Tang, 2024). These serve as observable manifestations of perceived cognitive assistance (PCA) and overconfidence, consistent with prior work linking psychological distortions to risky retail trading (Glaser & Weber, 2007; Han et al., 2022). These metrics compose the Behavioral Shift Index (BSI), defined and mathematically specified in Appendix A.

- Psychological Mediation via TPB and PCA Calibration. To investigate psychological pathways directly, we implement TPB-based surveys measuring changes in TPB constructs following LLM exposure. TPB has robust empirical support across domains and informs our understanding of intention-to-behavior processes (Ajzen, 1991; Gimmelberg et al., 2025b). Qualitative interviews and confidence-calibration tasks will be used to detect PCA-induced miscalibration, where increased confidence does not correspond to improved trading outcomes.

- Attribution Control via Market Diagnostics (EMH/AMH). We apply matched-asset counterfactuals, comparing LLM-associated securities with similar but presumably unexposed ones. This enables difference-in-differences estimation that differences out common shocks, such as macroeconomic news, while controlling for asset-specific confounders such as earnings announcements and social sentiment (Tetlock, 2007). Behavioral change accompanied by corresponding price adjustment would be consistent with competitive equilibrium. In contrast, persistent behavioral change with negligible price adjustment may indicate AMH dynamics, whereas sustained inefficiencies could suggest deviations from the EMH.
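To make the matched-asset design concrete, the following is a minimal two-group, two-period difference-in-differences sketch in plain Python. It assumes a hypothetical panel of `(asset_id, day, treated, outcome)` rows, where `treated` flags LLM-associated securities and the post period begins at ChatGPT's public release on 30 November 2022. The estimate is the pre-to-post change for treated assets minus the same change for matched controls; covariates, fixed effects, and standard errors are deliberately omitted, so this illustrates the contrast rather than the estimator specified in the appendices.

```python
from datetime import date
from statistics import mean

# Assumed break date: public release of ChatGPT (30 November 2022).
CHATGPT_LAUNCH = date(2022, 11, 30)

def did_estimate(rows, break_date=CHATGPT_LAUNCH):
    """Two-group, two-period difference-in-differences.

    rows: iterable of (asset_id, day, treated, outcome), where `treated` marks
    LLM-associated securities and `outcome` is a behavioral metric such as turnover.
    Returns (post - pre) for treated assets minus (post - pre) for matched controls.
    """
    cells = {(t, post): [] for t in (True, False) for post in (True, False)}
    for _asset, day, treated, y in rows:
        cells[(bool(treated), day >= break_date)].append(y)
    if any(not values for values in cells.values()):
        raise ValueError("every treated/control x pre/post cell needs data")
    m = {key: mean(values) for key, values in cells.items()}
    treated_change = m[(True, True)] - m[(True, False)]
    control_change = m[(False, True)] - m[(False, False)]
    return treated_change - control_change

# Toy example: treated turnover rises by 0.6, the matched control by 0.2.
example_rows = [
    ("A", date(2022, 10, 3), True, 1.0),
    ("A", date(2023, 1, 9), True, 1.6),
    ("B", date(2022, 10, 3), False, 1.0),
    ("B", date(2023, 1, 9), False, 1.2),
]
print(round(did_estimate(example_rows), 6))  # 0.4
```

In a full design the same contrast would typically come from a regression with asset and time fixed effects and clustered standard errors, with the GPT-4 release (14 March 2023) available as a second break.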

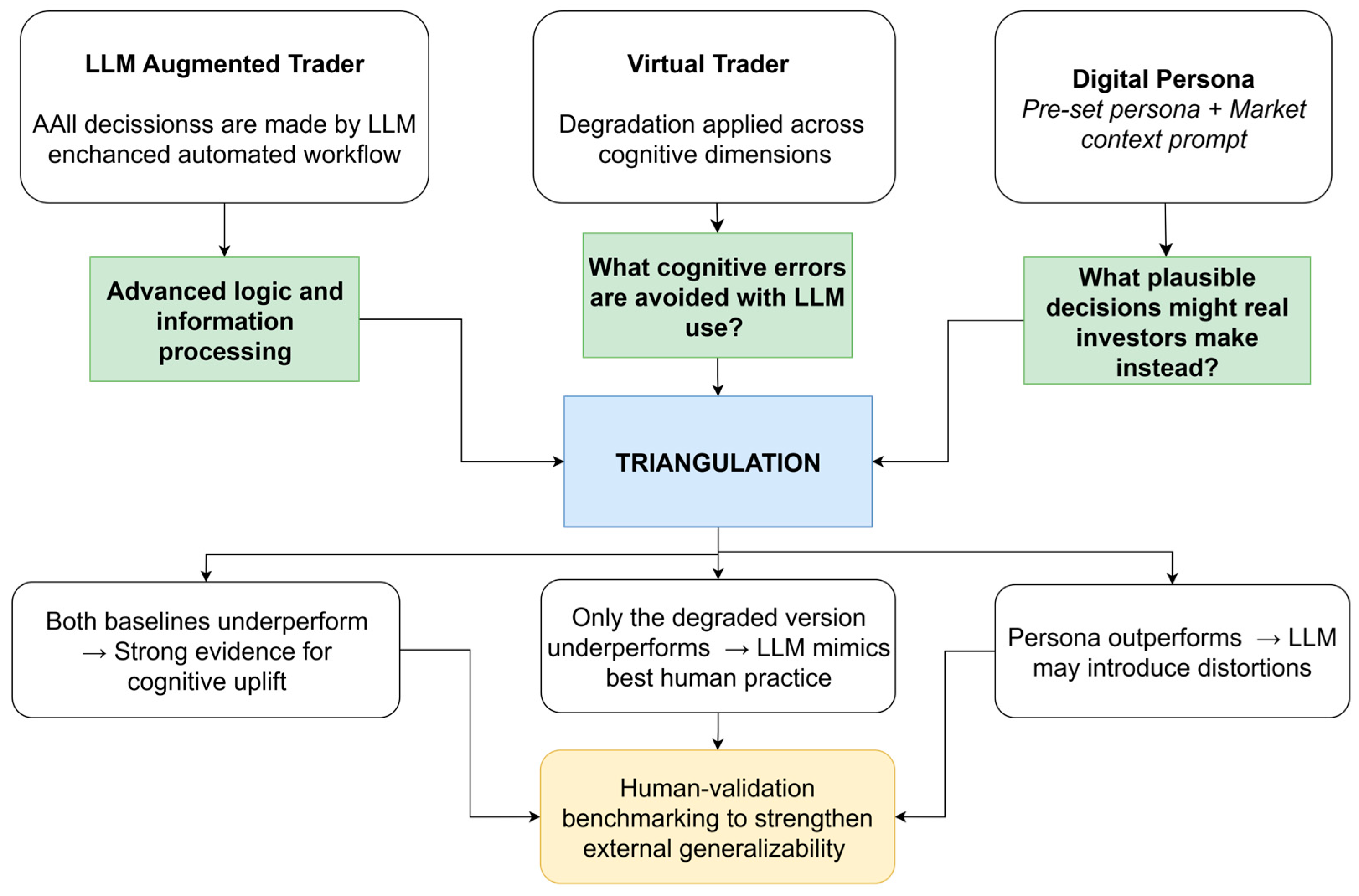

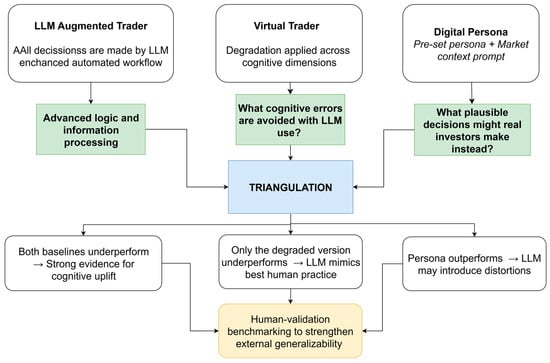
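The Behavioral Shift Index introduced in the strategy-shift item above is specified formally in Equation (2) and Appendix A. As a rough illustration only, one common way to build such a composite is to standardize each indicator against a pre-exposure baseline and average the signed z-scores, flipping the sign of holding duration so that higher values uniformly indicate a larger shift toward active, complex trading. The indicator names, equal weights, and z-score construction below are assumptions, not the paper's specification.

```python
from statistics import mean, stdev

# Hypothetical indicator set. +1: higher values signal a larger shift;
# -1: lower values do (shorter holding periods).
INDICATOR_SIGNS = {
    "multi_leg_share": +1,
    "turnover": +1,
    "concentration_hhi": +1,
    "holding_days": -1,
}

def bsi(current, baseline):
    """Equal-weighted mean of signed z-scores relative to a pre-exposure baseline.

    current:  dict indicator -> value in the evaluation window
    baseline: dict indicator -> list of pre-period values (>= 2 values, nonzero spread)
    """
    zs = []
    for name, sign in INDICATOR_SIGNS.items():
        ref = baseline[name]
        sd = stdev(ref)
        if sd == 0:
            raise ValueError(f"zero baseline variance for {name}")
        zs.append(sign * (current[name] - mean(ref)) / sd)
    return mean(zs)

# Toy example: every baseline series has mean 2.0 and standard deviation 1.0.
example_baseline = {name: [1.0, 2.0, 3.0] for name in INDICATOR_SIGNS}
example_current = {"multi_leg_share": 3.0, "turnover": 3.0,
                   "concentration_hhi": 2.0, "holding_days": 1.0}
print(bsi(example_current, example_baseline))  # 0.75
```

Equal weighting keeps the sketch transparent; a weighted or factor-based composite would change only the final averaging step.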

4.3. Dual Simulation Benchmarking: The Virtual Trader and Digital Persona Framework

In this section, we address a fundamental evaluation challenge: the absence of a valid human control group once LLMs are integrated into trading workflows. Such a control would require matched LLM-naive investors operating over prolonged periods under equivalent conditions, a setup that is logistically, ethically, and epistemologically untenable once cognitive exposure to LLMs has occurred. To overcome this, we propose a Dual-Agent Simulation Framework, depicted in Figure 3. Two complementary agents, the Virtual Trader (VT) and the Digital Persona (DP), serve as computational stand-ins for human decision-making baselines. The VT models lower-bound performance constrained by documented cognitive limitations, while the DP captures mid-range yet psychologically plausible investor behavior. Juxtaposing both against LLM-augmented outcomes enables epistemic triangulation, offering structured inference in lieu of unobtainable human controls. This framework provides an empirically grounded alternative for assessing LLM-augmented trading performance.

Figure 3. Triangulation and Inference Logic.

4.3.1. Virtual Trader: A Cognitively Degraded Counterfactual

The VT serves as a cognitively bounded counterfactual, designed to estimate the lower boundary of plausible human decision performance under real-world constraints. Rather than representing a sub-human or pathologically impaired agent, it models unaided retail investor cognition operating under empirically documented limitations: attentional fatigue, input omission, recency bias, volatility misestimation, and heuristic simplification. These degradation parameters do not simulate irrationality or failure; instead, they reflect well-established cognitive constraints observed in behavioral finance and decision science (H. A. Simon, 1955; Barberis & Thaler, 2002). By framing the VT as a lower bound not on absolute human potential but on unaided, real-time performance under cognitive load, the model clarifies what LLMs are expected to overcome. Importantly, this simulation benchmarks not against idealized expert behavior but against a psychologically grounded baseline reflecting the conditions under which most retail investors operate. This provides a meaningful performance comparator for testing whether LLM augmentation offers genuine cognitive uplift in strategy design, execution, and outcome.

The degradation process draws on the bounded rationality framework (H. A. Simon, 1955) and reflects cognitive asymmetries documented in experimental markets, such as misestimation of implied volatility, recency bias, and limited multimodal integration. The methodology enables performance attribution across Sharpe ratios, entry-exit timing, and drawdown metrics, and it is designed so that the only difference between LLM and VT trades is the presence or absence of cognitive augmentation.
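A minimal sketch of how modular, independently applied degradation operators might transform an LLM-produced decision record into a VT decision. The operators mirror three of the constraints listed above (input omission, volatility misestimation, heuristic simplification); the record fields, parameter values, and functional forms are hypothetical placeholders, and the calibrated specification is in Appendix A. A seeded random generator keeps replays reproducible, so performance differences can be attributed to the degradation rather than to sampling noise.

```python
import random
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Decision:
    inputs: tuple          # evidence items the LLM used
    iv_estimate: float     # implied-volatility estimate (annualized, decimal)
    strike_offset: float   # distance of the chosen strike from spot, in % of spot

@dataclass(frozen=True)
class DegradationParams:   # hypothetical values, for illustration only
    omission_prob: float = 0.2    # chance that each input is ignored
    iv_bias: float = -0.15        # proportional under-estimation of volatility
    rounding_step: float = 5.0    # heuristic: strikes snapped to coarse steps

# All operators share one signature so they can be chained; some ignore rng.
def omit_inputs(d, p, rng):
    kept = tuple(x for x in d.inputs if rng.random() >= p.omission_prob)
    return replace(d, inputs=kept)

def misestimate_vol(d, p, rng):
    return replace(d, iv_estimate=d.iv_estimate * (1 + p.iv_bias))

def simplify_strike(d, p, rng):
    snapped = round(d.strike_offset / p.rounding_step) * p.rounding_step
    return replace(d, strike_offset=snapped)

OPERATORS = (omit_inputs, misestimate_vol, simplify_strike)

def degrade(d, params=DegradationParams(), seed=0):
    """Apply each operator in sequence; no operator depends on another's state."""
    rng = random.Random(seed)
    for op in OPERATORS:
        d = op(d, params, rng)
    return d

llm_decision = Decision(inputs=("earnings", "iv_rank", "skew", "news"),
                        iv_estimate=0.40, strike_offset=7.0)
print(degrade(llm_decision))
```

Because each operator is a pure function of the record and its own parameter, any single constraint can be switched off to measure its marginal contribution to the LLM-VT performance gap.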

This approach embeds a key epistemological assumption: that LLMs represent an optimal or near-optimal strategic baseline. Recent research warns against such assumptions. LLMs exhibit hallucination, narrative overconfidence, and probabilistic miscalibration, especially in domains involving structured reasoning and stochastic inference (Ganguli et al., 2022a; L. Huang et al., 2025; Varshney et al., 2025). Treating the LLM as an infallible reference risks overestimating its advantage; this risk must be mitigated through validation protocols, including signal corroboration, consensus scoring, and rejection of low-confidence outputs.
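The validation protocols named above could be combined roughly as follows. This sketch assumes the LLM is queried several times for the same decision and returns a discrete signal together with a self-reported confidence in [0, 1]; the agreement and confidence thresholds are hypothetical, and the preregistered protocol may score consensus differently.

```python
from collections import Counter
from statistics import mean

def consensus_signal(samples, min_agreement=0.7, min_confidence=0.6):
    """Return the majority signal, or None if the sample set should be rejected.

    samples: list of (signal, confidence) pairs, e.g. ("long", 0.8).
    A signal is accepted only if its vote share and the mean confidence of the
    agreeing samples both clear the (hypothetical) thresholds.
    """
    if not samples:
        return None
    votes = Counter(signal for signal, _ in samples)
    top, count = votes.most_common(1)[0]
    agreement = count / len(samples)
    confidence = mean(c for signal, c in samples if signal == top)
    if agreement < min_agreement or confidence < min_confidence:
        return None
    return top

print(consensus_signal([("long", 0.8), ("long", 0.9), ("long", 0.7), ("flat", 0.6)]))  # long
print(consensus_signal([("long", 0.9), ("short", 0.9)]))  # None
```

Returning `None` rather than a default action makes rejected decisions explicit in the audit trail instead of silently converting them into trades.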

While the VT models cognitive constraints across validated dimensions, the current implementation treats these as modular and additive for purposes of tractability and simulation stability. This simplification facilitates calibration and attribution in the baseline framework. However, behavioral research highlights that such impairments often interact nonlinearly—e.g., fatigue amplifies attentional lapses, and emotional arousal skews volatility interpretation (Loewenstein et al., 2001; Kahneman, 2011). These limitations are acknowledged and addressed in Appendix A.3, which introduces a rule-based interdependency module as a forward-looking enhancement for simulating cascading cognitive effects under stress.
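To illustrate the difference between the additive baseline and an interdependency rule of the kind Appendix A.3 introduces, the sketch below lets fatigue either add a fixed increment to the attentional-lapse probability or scale the base lapse rate multiplicatively. The functional forms and coefficients are hypothetical; Appendix A.3 specifies the actual rule set.

```python
def lapse_probability(base_p, fatigue, amplification=1.5, additive_step=0.1, interact=True):
    """Probability of an attentional lapse given fatigue in [0, 1].

    interact=False: additive baseline; fatigue adds a fixed (hypothetical) increment.
    interact=True:  rule-based interaction; fatigue scales the base lapse rate, so
                    agents who are already lapse-prone deteriorate faster.
    The result is capped at 1.0 so it remains a valid probability.
    """
    if not (0.0 <= base_p <= 1.0 and 0.0 <= fatigue <= 1.0):
        raise ValueError("base_p and fatigue must lie in [0, 1]")
    if interact:
        p = base_p * (1.0 + amplification * fatigue)
    else:
        p = base_p + additive_step * fatigue
    return min(p, 1.0)

for base in (0.05, 0.2):
    print(base, lapse_probability(base, 1.0, interact=False), lapse_probability(base, 1.0))
```

Under the additive rule, full fatigue raises every agent's lapse rate by the same amount; under the interaction rule, the increase depends on the agent's baseline, which is the cascading pattern the interdependency module is meant to capture.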

4.3.2. Digital Persona: Human Plausibility via LLM Emulation

The Digital Persona (DP) provides a conceptually distinct counterfactual. Rather than degrading an LLM output, this approach builds behavioral emulation from the ground up. Each persona is constructed using structured prompts encoding psychological traits, demographic attributes, and trading history, instructing the LLM to “think like” a specific type of retail investor. This technique draws on agent-based simulation theory and recent advances in LLM-enabled computational social science (Gao et al., 2023; H. Jiang et al., 2024; Hartley et al., 2025).
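A minimal sketch of how a persona specification might be serialized into a structured prompt. The field names, trait scales, and prompt wording are illustrative assumptions; the study's actual persona templates are not reproduced here. Keeping the persona as typed data rather than free text allows each persona to be versioned and audited alongside the prompt/protocol version, and sorting the JSON keys keeps the rendered prompt byte-identical across runs.

```python
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class Persona:
    persona_id: str
    age_band: str                 # e.g. "35-44"
    financial_literacy: int       # hypothetical 1-5 scale
    risk_aversion: int            # hypothetical 1-5 scale
    emotional_reactivity: int     # hypothetical 1-5 scale
    big_five: dict                # trait -> score in [0, 1]
    platform_familiarity: str     # e.g. "mobile broker, 2 years"
    recent_trades: tuple = ()     # short textual trading history

def persona_prompt(p: Persona) -> str:
    """Render a persona as an instruction followed by a JSON profile block."""
    profile = json.dumps(asdict(p), sort_keys=True)
    return (
        "You are a retail investor. Reason and decide exactly as the person "
        "described by this profile would, including their limitations.\n"
        f"PROFILE: {profile}\n"
        "Answer with one action (buy, sell, hold) and a one-sentence rationale."
    )

example = Persona("P01", "35-44", 2, 4, 3,
                  {"neuroticism": 0.7, "openness": 0.4}, "mobile broker, 2 years",
                  ("bought TSLA calls after earnings",))
print(persona_prompt(example))
```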

DPs offer mid-range performance estimates rooted in plausibility rather than constraint. They integrate constructs such as financial literacy, emotional reactivity, risk aversion, and platform familiarity. Unlike the VT, they do not assume LLM optimality and are evaluated based on whether their responses align with typical human behaviors under given constraints. Recent empirical studies demonstrate that LLM personas can exhibit realistic investment behavior when designed using psychological trait encoding, such as the Big Five personality model (Borman et al., 2024; Hartley et al., 2025).

However, DPs face significant ecological limitations. They do not learn from error, lack reward-based feedback loops, and exhibit no temporal memory of past trades—traits that are central to real investor cognition (Lux & Zwinkels, 2018). Furthermore, LLM-generated personas are sensitive to temperature settings, prompt design, and output randomness, which raises concerns regarding behavioral coherence and reproducibility, especially in longitudinal simulations (Gao et al., 2023; H. Jiang et al., 2024).

To mitigate such risks, simulations will be run under constrained randomness parameters, with standard temperature controls and prompt regularization. Nonetheless, these limitations may undermine the authenticity of simulated investor behavior, particularly in high-stakes, feedback-sensitive domains such as trading, and they warrant further research.
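Whether such controls suffice can be checked directly. One simple diagnostic, sketched here under the assumption that each persona is replayed several times on the same decision points, is the mean pairwise agreement of its actions across replicates; low agreement would flag behavioral incoherence before any performance comparison is made. The metric is an illustrative choice, not a preregistered quantity.

```python
from itertools import combinations

def replicate_agreement(runs):
    """Mean pairwise share of identical actions across replicate runs.

    runs: list of equal-length action sequences, one per replicate,
          e.g. [["buy", "hold"], ["buy", "sell"]].
    Returns a value in [0, 1]; 1.0 means every replicate chose the same actions.
    """
    if len(runs) < 2:
        raise ValueError("need at least two replicates")
    n = len(runs[0])
    if n == 0 or any(len(r) != n for r in runs):
        raise ValueError("replicates must be non-empty and of equal length")
    pair_scores = [
        sum(a == b for a, b in zip(r1, r2)) / n
        for r1, r2 in combinations(runs, 2)
    ]
    return sum(pair_scores) / len(pair_scores)

runs = [
    ["buy", "hold", "sell", "hold"],
    ["buy", "hold", "sell", "hold"],
    ["buy", "sell", "sell", "hold"],
]
print(round(replicate_agreement(runs), 4))  # 0.8333
```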

4.3.3. Epistemic Triangulation and Inference Logic

This dual-agent design supports three structured inference scenarios:

- Both baselines underperform the LLM → Strong evidence for LLM-enabled cognitive uplift and strategic enhancement.

- Only the degraded Virtual Trader underperforms, while the Digital Persona performs comparably → Indicates that the LLM mimics best-practice human logic without necessarily surpassing it.

- Digital Persona outperforms the LLM → Suggests that LLMs may introduce distortions or risk-taking strategies inconsistent with typical investor behavior.
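The three scenarios can be written as a small decision rule over a common performance metric, for example a Sharpe ratio from information-identical replays. The tolerance band that separates "comparable" from "underperforms", and the label for patterns the three scenarios do not cover, are assumptions added for illustration; in practice the comparison would rest on confidence intervals rather than point estimates.

```python
def triangulate(llm, vt, dp, tol=0.05):
    """Map point estimates of a shared performance metric to the three scenarios.

    tol is a hypothetical band within which two agents count as comparable.
    """
    if dp > llm + tol:
        return "distortion: Digital Persona outperforms the LLM"
    if vt < llm - tol and dp < llm - tol:
        return "uplift: both baselines underperform the LLM"
    if vt < llm - tol and abs(dp - llm) <= tol:
        return "mimicry: only the Virtual Trader underperforms"
    return "inconclusive: pattern not covered by the three scenarios"

print(triangulate(llm=1.0, vt=0.5, dp=0.6))  # uplift
print(triangulate(llm=1.0, vt=0.5, dp=0.98))  # mimicry
```

Checking the distortion case first mirrors the text: DP outperformance signals a problem regardless of how the VT performs.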

This triangulation approach (see Figure 3) aligns with emerging standards in simulation-based causal attribution, particularly in environments where treatment exposure (LLM usage) alters the cognitive architecture irreversibly (Holland, 1986; Athey & Wager, 2019). It also reflects broader principles of counterfactual realism and hybrid benchmarking in AI–human comparison studies (Gui & Toubia, 2023; Anthis et al., 2025).

To address potential algorithmic myopia and improve generalizability, a human-validation stage is specified as part of the preregistered empirical agenda (see Appendix B). Its execution and results lie outside the scope of the present conceptual contribution; the preregistered protocol defines how it will be conducted (Gao et al., 2023; Q. Wang et al., 2025).

As specified in our preregistration protocol (Gimmelberg et al., 2025a), the LLM-Augmented Trader (LAT) operates as a capability-routed, multi-model system. Tasks are dispatched to the most suitable model according to a maintained capability matrix: complex tool orchestration and multi-step tool calling are routed to the top-performing GPT-5 family model; multimodal parsing that requires combined PDF-and-image handling is routed to the top-performing Claude Sonnet family model; and model families for which current evidence indicates weaker tool calling are not assigned those task types until the evidence changes. Each task invocation is recorded in a registry capturing provider, model family, model alias, release channel, client/library version, and prompt/protocol version. We distinguish two change classes: within-family upgrades (e.g., Claude Sonnet 4 to 4.5; a GPT-5 minor refresh) are treated as minor, whereas cross-family substitutions (e.g., moving a tool-orchestration task from Gemini to GPT-5) are treated as major. All changes are time-locked and posted to the Open Science Framework (OSF)² change log (Gimmelberg et al., 2025a).

Validation is structured in two layers. First, information-identical replays by the Virtual Trader (a bounded-rule comparator) and a Digital Persona (a behaviorally specified counterfactual) isolate decision-maker effects; second, a small practitioner vignette panel is specified to rate plausibility and ecological fit. Data validation accompanies both layers through time-stamped decision snapshots and vendor cross-checks (prices, implied volatility, open interest), with schema validation of machine-readable artifacts. This architecture accommodates rolling model improvements while preserving auditability and replication at the capability-class level; empirical execution belongs to the separate, preregistered study.
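The registry record and the minor/major change rule described above can be sketched as follows. The record fields follow the list in the text; the routing table, capability labels, and alias strings are hypothetical placeholders rather than the maintained capability matrix, and no provider API is called.

```python
from dataclasses import dataclass

# Hypothetical capability matrix: task class -> (provider, model family).
ROUTING = {
    "tool_orchestration": ("OpenAI", "GPT-5"),
    "multimodal_pdf_image": ("Anthropic", "Claude Sonnet"),
}

@dataclass(frozen=True)
class Invocation:
    task: str
    provider: str
    family: str
    alias: str             # provider's model alias (illustrative strings below)
    release_channel: str   # e.g. "stable"
    client_version: str
    protocol_version: str

def route(task):
    """Return the (provider, family) currently approved for a task type."""
    if task not in ROUTING:
        raise KeyError(f"no family is currently approved for task type {task!r}")
    return ROUTING[task]

def change_class(old: Invocation, new: Invocation) -> str:
    """'major' if the model family changed for a task type, 'minor' otherwise."""
    if old.task != new.task:
        raise ValueError("compare invocations of the same task type")
    if (old.provider, old.family) != (new.provider, new.family):
        return "major"
    return "minor"

before = Invocation("tool_orchestration", "Google", "Gemini", "alias-a", "stable", "1.0", "p1")
after = Invocation("tool_orchestration", *route("tool_orchestration"), "alias-b", "stable", "1.0", "p2")
print(change_class(before, after))  # major
```

Classifying changes by (provider, family) rather than by alias is what allows rolling within-family upgrades to be logged as minor while cross-family substitutions trigger a major version bump.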

Asset selection in this study is procedural and dynamic: at each decision timestamp, candidates are selected by a time-locked screener, so no fixed ex ante asset list exists. For replication, the realized asset set and the strategy instances corresponding to the reported runs will be archived as OSF artifacts (time-stamped screener exports and contemporaneous option-chain snapshots) at study launch and at each protocol version bump. Appendix B.1 provides the sampling rules, the strategy families, and the data-collection and quality-control procedures applied at decision time; the concrete trading strategy families it enumerates match the OSF preregistered workflow.
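A minimal sketch of how a decision-time snapshot might be cross-checked across vendors and then frozen for archiving. It assumes two vendor feeds keyed by option contract, flags contracts whose quoted field disagrees by more than a relative tolerance (or that are missing from either feed), and fingerprints the canonical JSON serialization with SHA-256 so that any later alteration of an archived export is detectable. The field names and the 1% tolerance are illustrative assumptions, not preregistered values.

```python
import hashlib
import json
from datetime import datetime, timezone

def cross_check(vendor_a, vendor_b, field, rel_tol=0.01):
    """Return contract keys whose `field` differs across vendors by more than rel_tol.

    vendor_a, vendor_b: dict contract_id -> dict of quote fields.
    Contracts missing from either feed are flagged as well.
    """
    flagged = []
    for key in sorted(set(vendor_a) | set(vendor_b)):
        a, b = vendor_a.get(key), vendor_b.get(key)
        if a is None or b is None:
            flagged.append(key)
            continue
        x, y = a[field], b[field]
        scale = max(abs(x), abs(y))
        if scale > 0 and abs(x - y) / scale > rel_tol:
            flagged.append(key)
    return flagged

def freeze_snapshot(snapshot, taken_at=None):
    """Attach a UTC timestamp and a SHA-256 fingerprint of the canonical JSON."""
    taken_at = taken_at or datetime.now(timezone.utc).isoformat()
    canonical = json.dumps(snapshot, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {"taken_at": taken_at, "sha256": digest, "data": snapshot}

feed_a = {"C1": {"iv": 0.30}, "C2": {"iv": 0.50}, "C3": {"iv": 0.20}}
feed_b = {"C1": {"iv": 0.301}, "C2": {"iv": 0.55}}
print(cross_check(feed_a, feed_b, "iv"))  # ['C2', 'C3']
```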

4.4. Behavioral Bias: Quantification and Controls

This study pre-specifies bias quantification and control logic before data collection.

Our amplification test is defined ex ante as pairwise differences in bias metrics between LAT and each baseline (LAT–VT; LAT–DP). We report effect sizes and uncertainty for (i) probability calibration/over-precision, (ii) confirmation/selective exposure in evidence slates, and (iii) the disposition effect; we interpret signs and magnitudes as amplification only if LAT is statistically worse than both baselines under identical information. Directionally, LLM scaffolding may reduce selective exposure by broadening evidence collection, yet it can increase over-precision through fluently narrated but overconfident rationales. If such contrasts fail to materialize once basic cost/risk controls are applied, we will conclude that there is no evidence of amplification.
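The pairwise contrasts can be illustrated with a paired percentile bootstrap. The sketch assumes per-decision bias scores, oriented so that higher means more biased, for LAT, VT, and DP on the same information-identical decisions, and it flags amplification only when the lower bound of the interval for both LAT–VT and LAT–DP lies above zero. The resampling scheme, interval level, and number of draws are illustrative assumptions; the preregistered inference procedure governs the actual study.

```python
import random
from statistics import mean

def paired_bootstrap_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Mean of (x - y) over paired observations and its percentile bootstrap CI."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and paired")
    diffs = [a - b for a, b in zip(x, y)]
    rng = random.Random(seed)
    n = len(diffs)
    boot = sorted(mean(rng.choices(diffs, k=n)) for _ in range(n_boot))
    lo = boot[int((alpha / 2) * n_boot)]
    hi = boot[int((1 - alpha / 2) * n_boot) - 1]
    return mean(diffs), (lo, hi)

def amplification(lat, vt, dp, **kw):
    """True only if LAT is reliably more biased than both baselines."""
    _, (lo_vt, _) = paired_bootstrap_ci(lat, vt, **kw)
    _, (lo_dp, _) = paired_bootstrap_ci(lat, dp, **kw)
    return lo_vt > 0 and lo_dp > 0

lat = [0.60, 0.70, 0.65, 0.72, 0.68, 0.70, 0.66, 0.69]
vt = [0.30, 0.35, 0.32, 0.31, 0.33, 0.34, 0.30, 0.36]
dp = [0.40, 0.42, 0.38, 0.41, 0.39, 0.43, 0.40, 0.41]
print(amplification(lat, vt, dp))  # True
```

Resampling decision-level differences, rather than each agent's scores separately, preserves the pairing created by information-identical replays.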

Controls are implemented via two replay agents that receive the same information as the LLM-Augmented Trader (LAT): a Virtual Trader (VT; bounded-rule comparator) and a Digital Persona (DP; behaviorally specified baseline). At this stage, these agents serve as the control conditions; the practitioner vignette panel rates plausibility only. We will quantify three bias families using established measures. First, the calibration error of decisions is evaluated with strictly proper, Brier-based scoring (and, where applicable, the coverage of stated intervals), following the forecast-verification literature on reliability and strictly proper scoring rules (Dawid, 1982; Gneiting & Raftery, 2007). Second, the disposition effect is measured with the standard Proportion of Gains Realized (PGR) minus Proportion of Losses Realized (PLR) specification, adapted to options via mark-to-market classification of gains and losses at decision time (Shefrin & Statman, 1985; Odean, 1998; Weber & Camerer, 1998). Third, selective exposure/confirmation is operationalized as the share of belief-congruent evidence in the decision’s recorded evidence slate, anchored in the selective-exposure meta-analysis and the finance-specific confirmation-bias literature (Nickerson, 1998; Hart et al., 2009; Pouget et al., 2017). We avoid ad hoc thresholds; any change with inferential impact will be version-bumped in the protocol and disclosed prior to execution. The experiment has not started; the preregistration is finalized. Operational rubrics, inputs, and worked audit examples for all bias metrics are provided in Appendix B and in the preregistered protocol (Gimmelberg et al., 2025a).
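The three bias families map onto short computations. This sketch implements the Brier score for probabilistic forecasts of binary outcomes (plus a simple mean-confidence-minus-hit-rate gap as a rough over-precision indicator), the Odean (1998) PGR minus PLR disposition measure from counts of realized and paper gains and losses, and the belief-congruent share of an evidence slate. The input formats (forecast-outcome pairs, pre-classified counts, and evidence items already tagged as congruent or not) are assumptions; the mark-to-market classification of option positions and the tagging rubric belong to Appendix B, not to this sketch.

```python
from statistics import mean

def brier_score(probs, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need equal-length, non-empty inputs")
    return mean((p - o) ** 2 for p, o in zip(probs, outcomes))

def confidence_gap(probs, outcomes):
    """Mean stated probability minus observed hit rate; positive suggests overconfidence."""
    return mean(probs) - mean(outcomes)

def disposition_effect(realized_gains, paper_gains, realized_losses, paper_losses):
    """Odean (1998): PGR - PLR, with PGR = RG / (RG + PG) and PLR = RL / (RL + PL).

    Counts must give nonzero denominators.
    """
    pgr = realized_gains / (realized_gains + paper_gains)
    plr = realized_losses / (realized_losses + paper_losses)
    return pgr - plr

def congruent_share(slate):
    """Share of evidence items tagged as belief-congruent, e.g. [True, False, True]."""
    if not slate:
        raise ValueError("empty evidence slate")
    return sum(bool(item) for item in slate) / len(slate)

print(round(brier_score([0.9, 0.2], [1, 0]), 6))      # 0.025
print(round(disposition_effect(30, 70, 10, 90), 6))    # 0.2
print(congruent_share([True, False, True, True]))      # 0.75
```

A positive PGR minus PLR indicates that gains are realized proportionally more often than losses, which is the disposition pattern; the sign convention is shared across LAT, VT, and DP so the pairwise differences are directly comparable.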

5. Conclusions