Fingerspelling and Its Role in Translanguaging

Abstract

1. Introduction

2. Fingerspelling

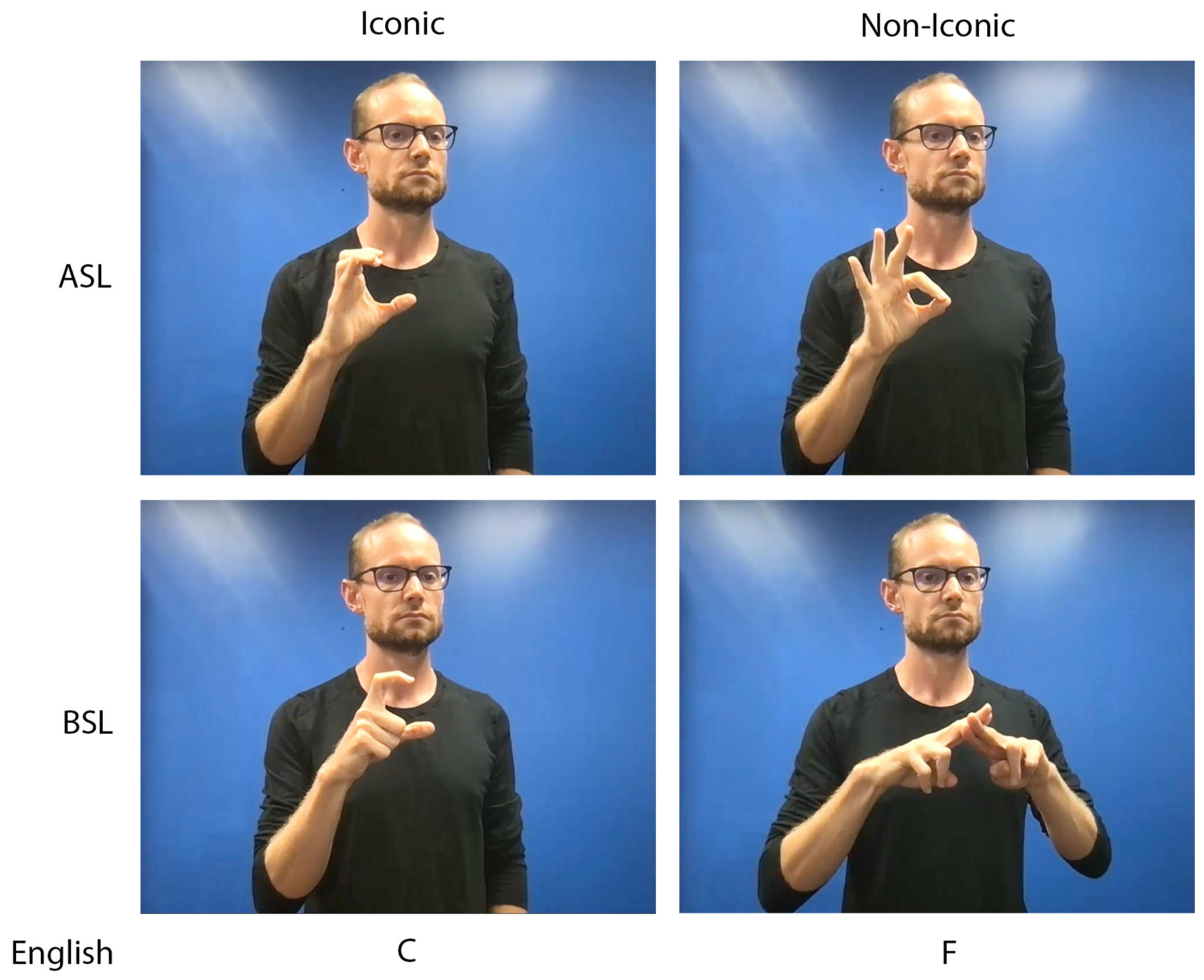

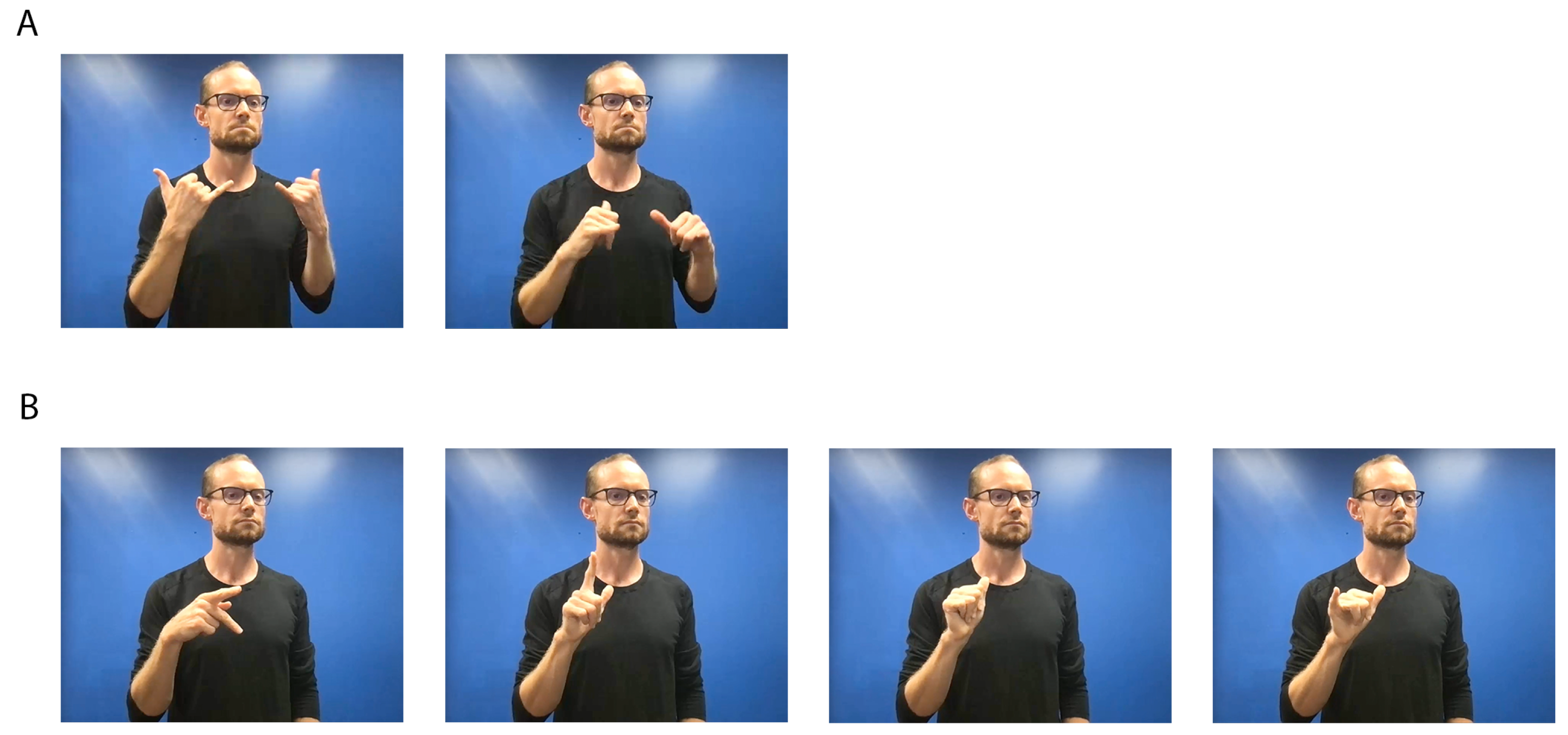

2.1. Linguistics of Fingerspelling

2.2. Fingerspelling Production

2.3. Fingerspelling Comprehension

2.4. Fingerspelling Acquisition

- Exploring handshapes, letters, and inventing fingerspelling (Ages <4)

- Exploring the direction of writing and fingerspelling (Ages 4–8)

- Practicing and memorizing names, words, and using fingerspelling as a memory aid (Ages 4–8)

- Segmenting fingerspelled words into individual fingerspelled letters and decoding written words into fingerspelled letters (Ages 4–8)

- Learning the relationships between letters (print and fingerspelled), words, signs, mouth movements, and voice (Ages 4–8)

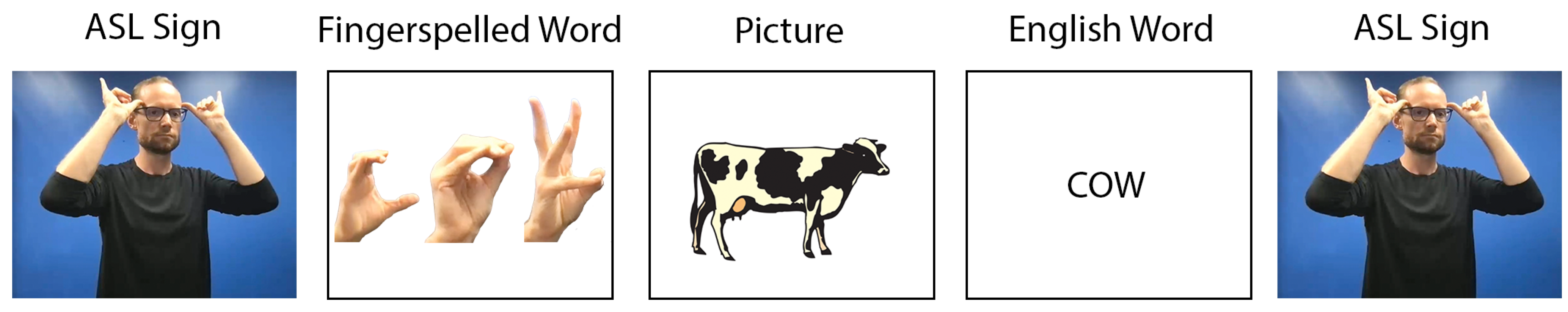

2.5. Fingerspelling and Reading

3. Fingerspelling and Translanguaging

3.1. Fingerspelling in an Integrated Linguistic System

3.2. Inside and Outside Perspectives on Fingerspelling

3.3. Translanguaging in the Classroom

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, B.; Secora, K. Fingerspelling and Its Role in Translanguaging. Languages 2022, 7, 278. https://doi.org/10.3390/languages7040278
