Measuring Emotion Recognition Through Language: The Development and Validation of an English Productive Emotion Vocabulary Size Test

Abstract

1. Introduction

2. Literature Review

2.1. Emotion Vocabulary in Language Learning

2.2. Challenges in Identifying Emotion Vocabulary

2.3. Existing Measures of Emotion Vocabulary

3. Methodology

3.1. Participants

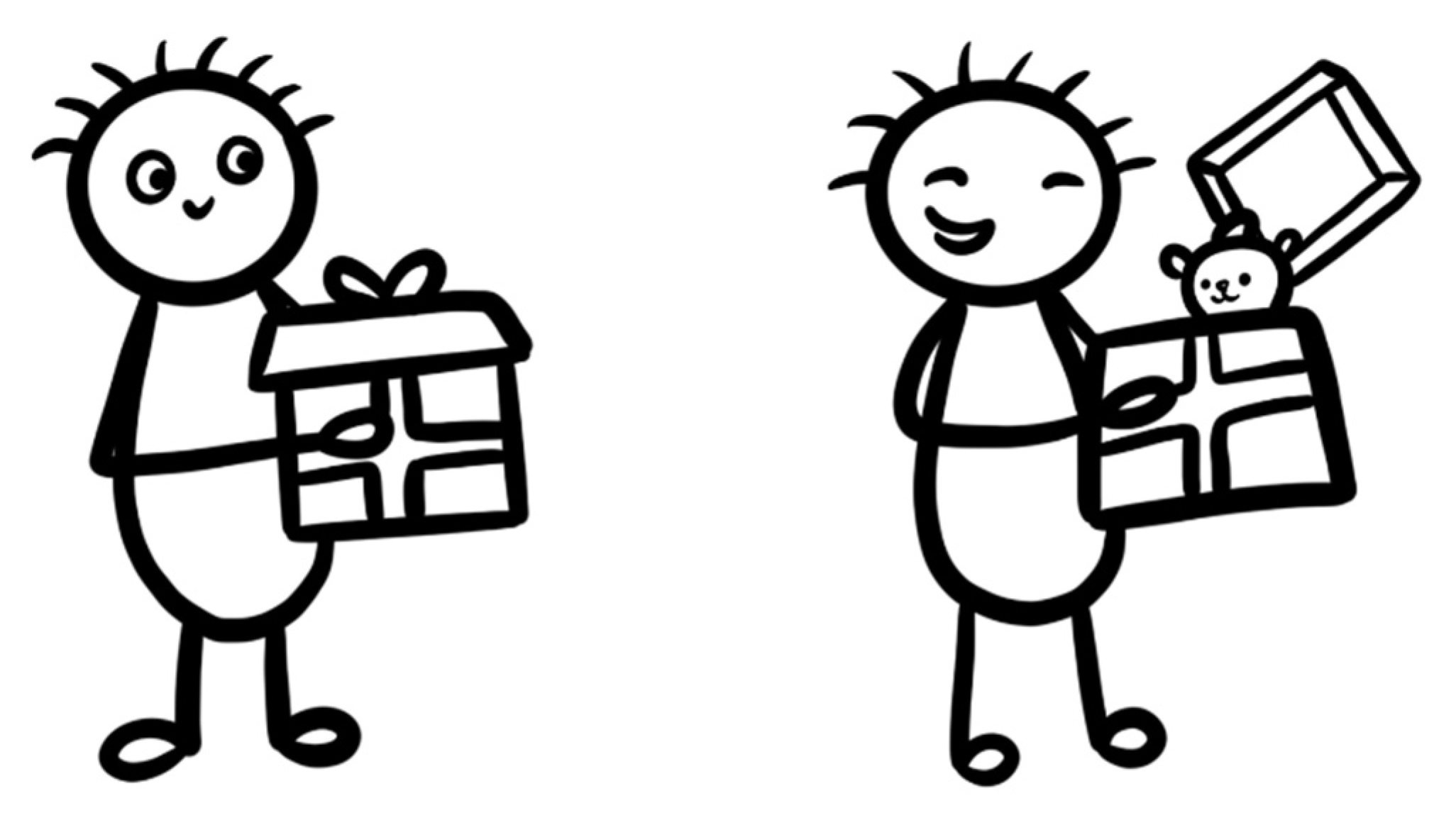

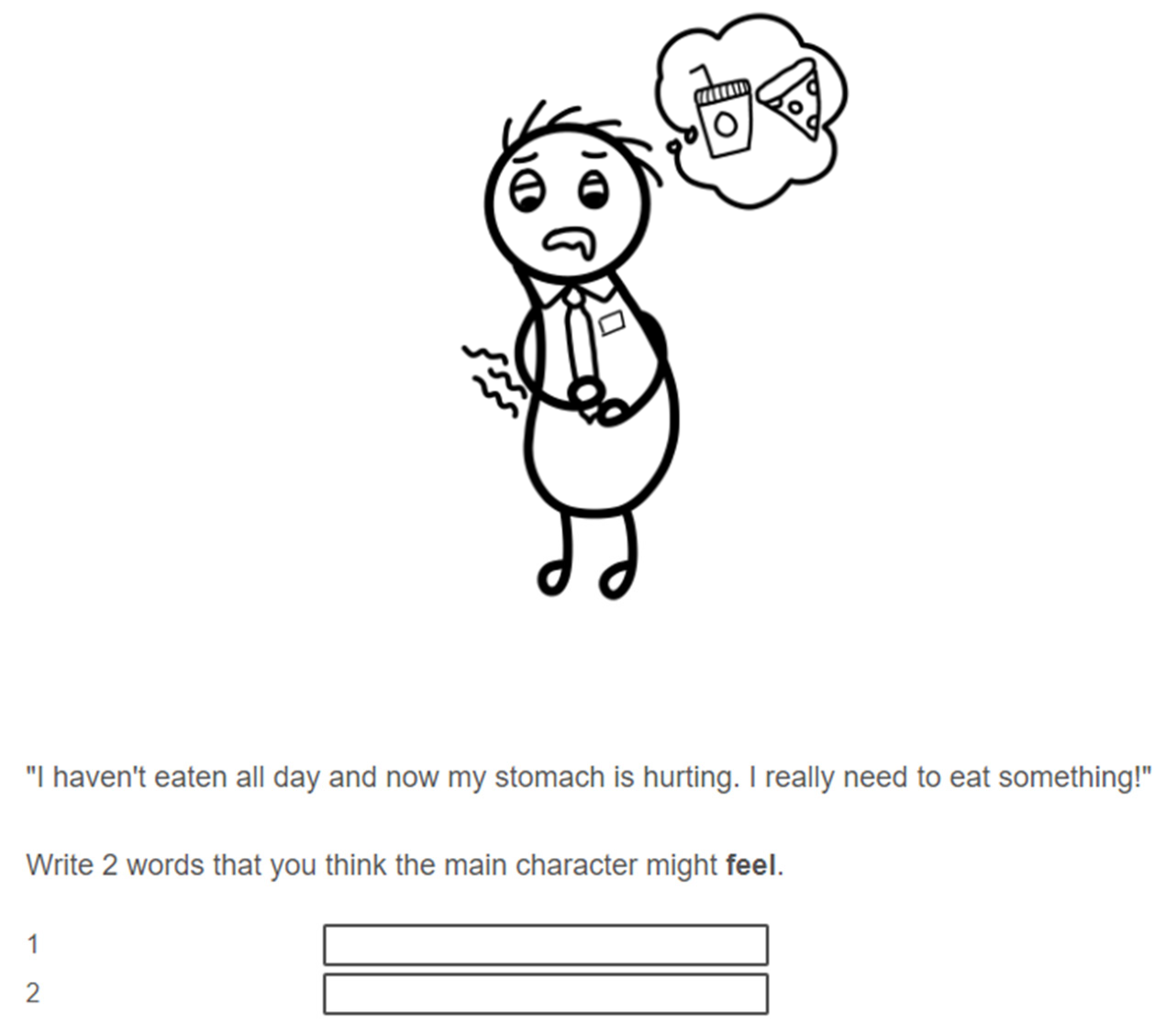

3.2. Designing the Productive Emotion Vocabulary Size Test (PEVST) (Appendix A)

- The emotion word was not explicitly mentioned in the context; instead, it was conveyed through the protagonist’s actions or thoughts so that the target emotion was not revealed to participants.

- Each character’s thoughts were presented in the first person to simulate emotional experiences that participants might have.

- Each character’s thoughts did not exceed two sentences, keeping the vignettes as brief as possible.
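Two of the design constraints above lend themselves to a mechanical check. The following is a minimal sketch in Python, assuming a deliberately rough notion of a sentence (a run of end punctuation) and simple prefix matching for the target word; the function name `check_vignette` and its messages are hypothetical and are not part of the PEVST materials. The first-person constraint is not checked.

```python
import re

def check_vignette(vignette, target_word, max_sentences=2):
    """Return a list of design-rule violations for one vignette (empty if none)."""
    problems = []
    # Rule: the target emotion word (or a form starting with it) must not appear.
    if re.search(r"\b" + re.escape(target_word.lower()), vignette.lower()):
        problems.append("target word appears in vignette")
    # Rule: at most `max_sentences` sentences, counted as runs of ., ! or ?
    sentence_count = len(re.findall(r"[.!?]+", vignette))
    if sentence_count > max_sentences:
        problems.append(f"more than {max_sentences} sentences")
    return problems

# One vignette from Appendix A and one hypothetical counter-example.
ok = check_vignette("I lost the competition.", "defeat")
bad = check_vignette("I feel defeated. It hurts. I quit.", "defeat")
```

Punctuation-based counting is only a heuristic: interjections such as "Oh?" are counted as separate sentences, so flagged items would still need a manual review.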

3.3. Supplementary Instruments

Lexical Test for Advanced Learners of English (LexTALE)

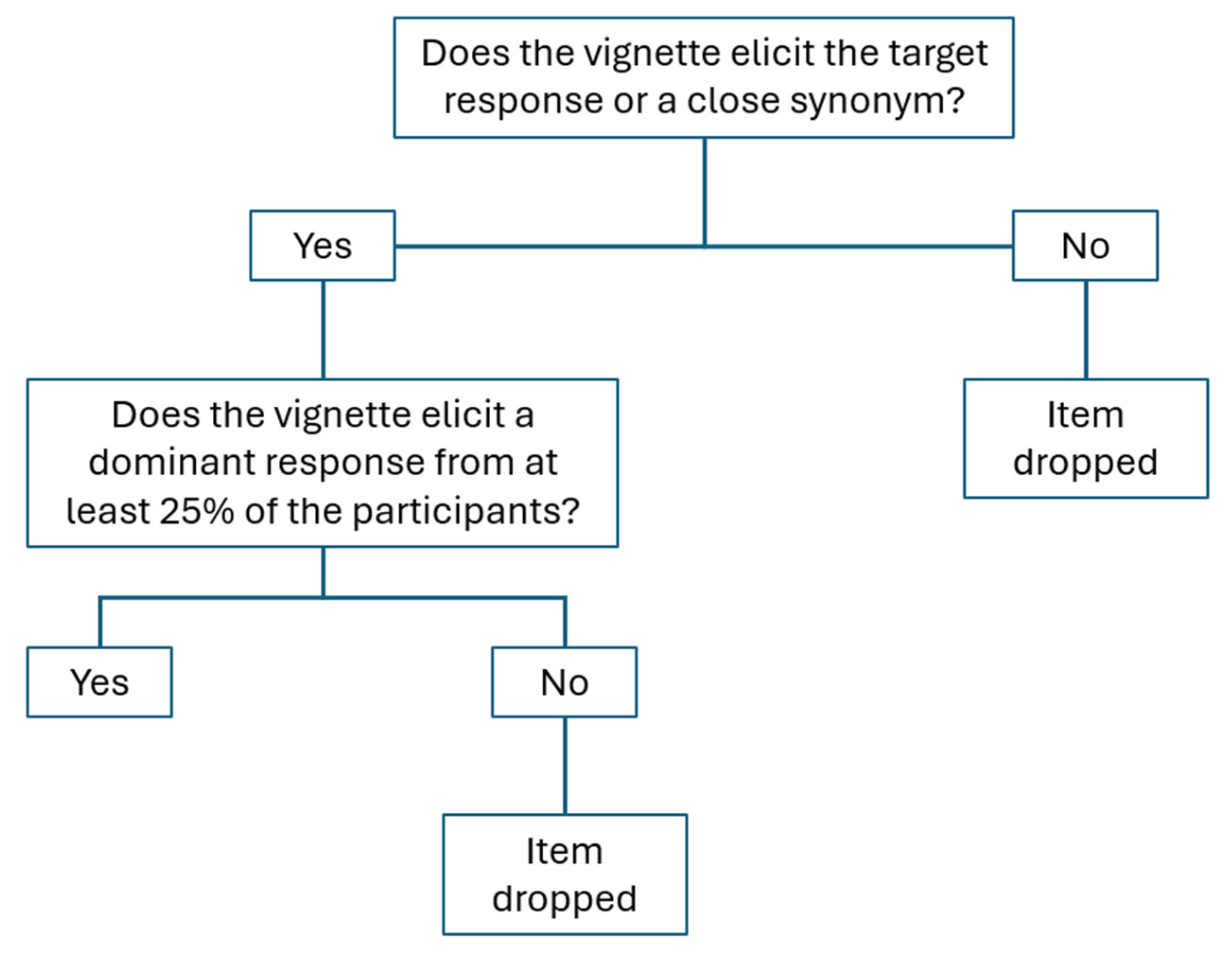

3.4. Data Analysis

3.4.1. Scoring the PEVST

3.4.2. Rasch Analysis

4. Results

4.1. By-Item Analysis

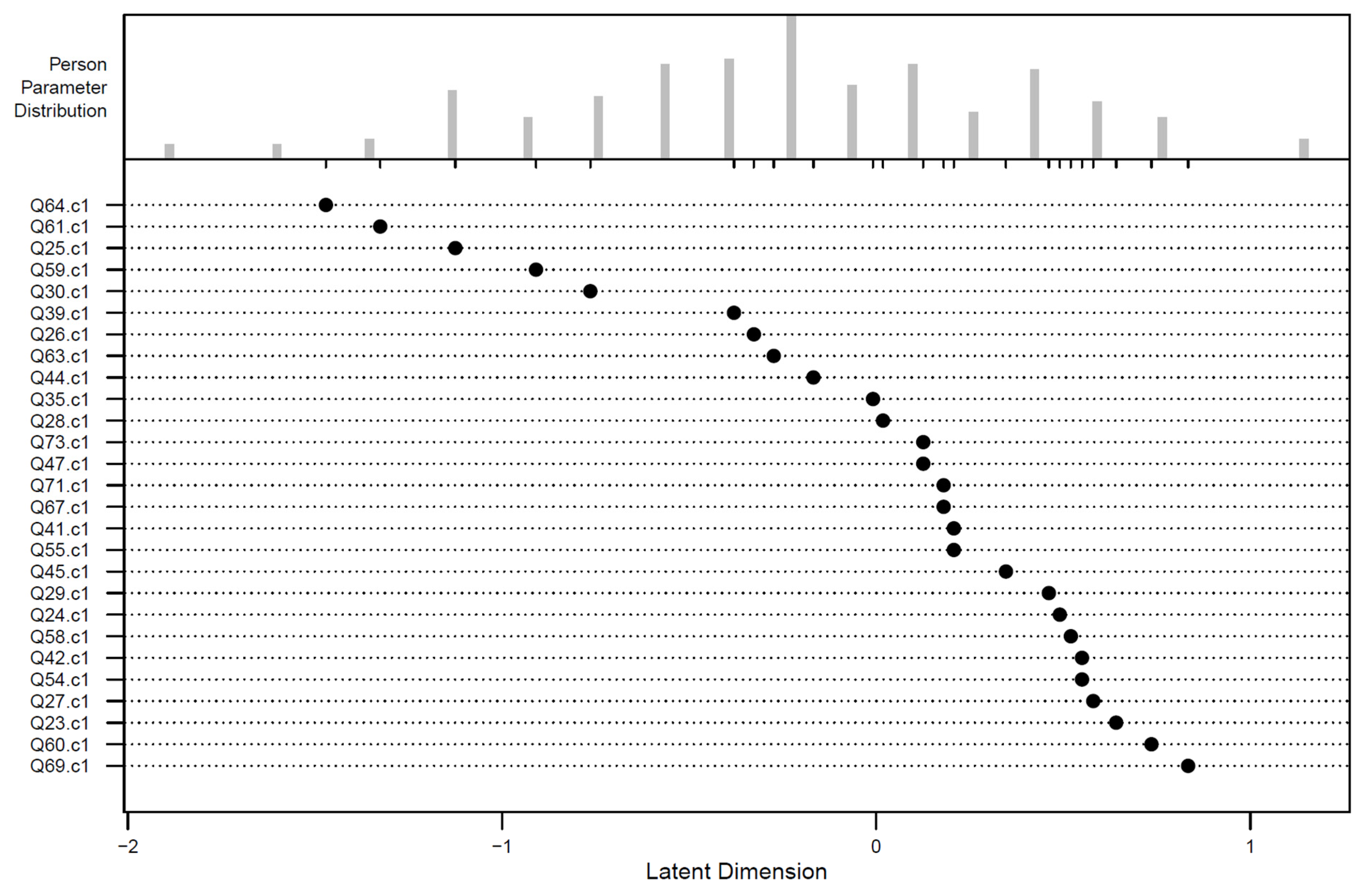

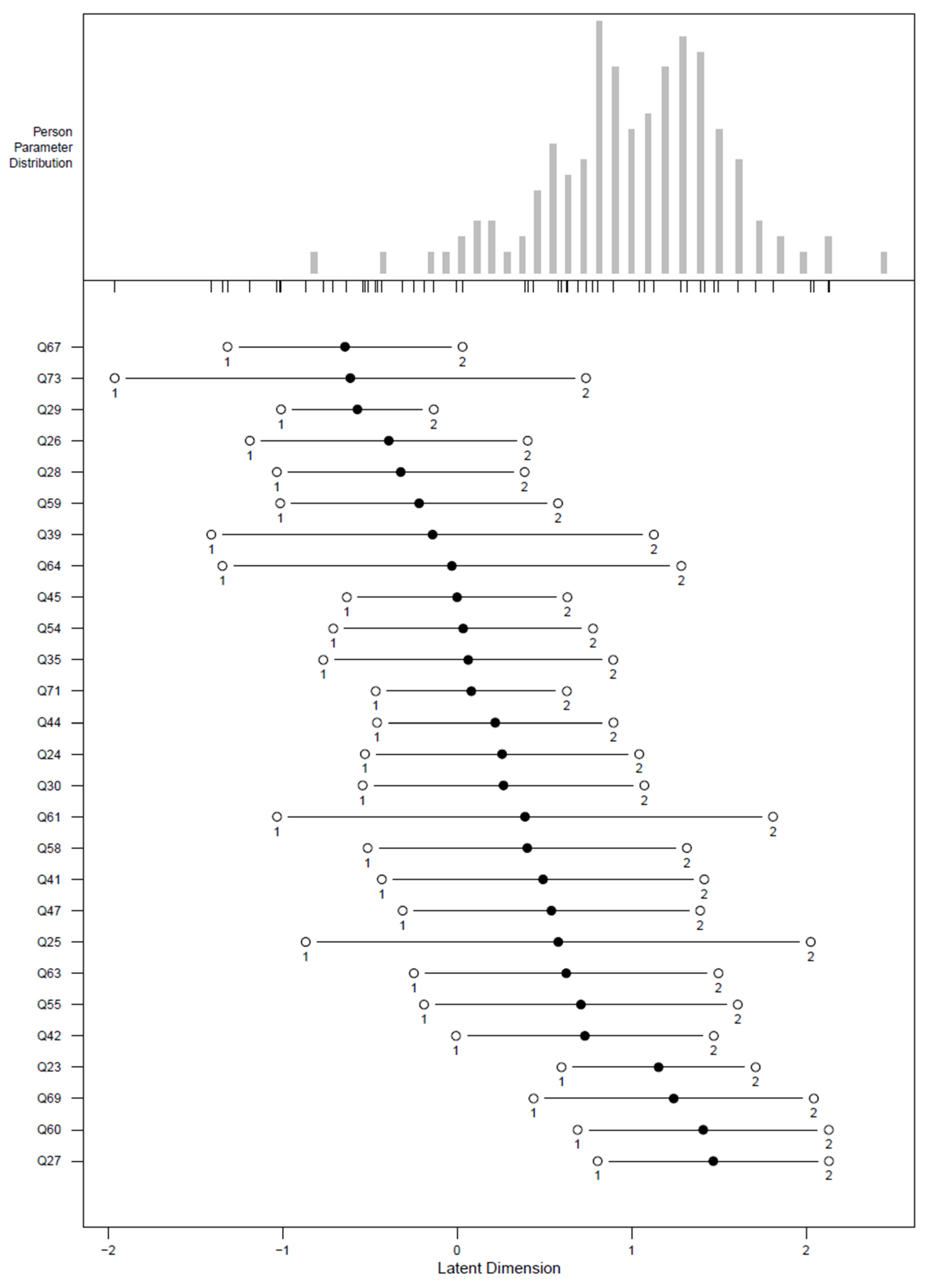

4.2. Rasch Analysis

4.2.1. Dichotomous Rasch Model

- Q26:

  - Infit and outfit t were out of range (beyond −2);

- Q71:

  - Negative discrimination value.
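For readers less familiar with the fit statistics flagged above, the following is a minimal sketch in Python of how infit and outfit mean squares are conventionally computed for a single item under the dichotomous Rasch model. The function names, ability values and responses are hypothetical illustrations, not the study's data or analysis code, and the standardisation of mean squares into the t values reported here is omitted.

```python
import math

def rasch_probability(theta, b):
    """Probability of a correct response for ability theta and item difficulty b (logits)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def item_fit_mean_squares(responses, thetas, b):
    """Return (infit, outfit) mean squares for one dichotomous item."""
    squared_residuals = []
    variances = []
    for x, theta in zip(responses, thetas):
        p = rasch_probability(theta, b)
        squared_residuals.append((x - p) ** 2)   # observed minus expected, squared
        variances.append(p * (1.0 - p))          # model variance of the response
    # Outfit: unweighted mean of squared standardised residuals.
    outfit = sum(r / v for r, v in zip(squared_residuals, variances)) / len(responses)
    # Infit: information-weighted mean square.
    infit = sum(squared_residuals) / sum(variances)
    return infit, outfit

# Hypothetical abilities (logits) and 0/1 responses to one item of difficulty 0.
thetas = [-1.5, -0.5, 0.0, 0.5, 1.5]
responses = [0, 0, 1, 1, 1]
infit, outfit = item_fit_mean_squares(responses, thetas, b=0.0)
```

Mean squares near 1 indicate about as much noise as the model expects. Values well below 1, which correspond to negative t values, indicate overfit: responses that are more predictable than the model expects.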

4.2.2. Polytomous Rasch Model

- Q30

  - Q30.c1: p < 0.05, z > +1.96;

  - Q30.c2: p = 0.065; not significant, but close to the threshold.

- Q41

  - Q41.c2: p < 0.05, z > +1.96;

  - Q41.c1: p = 0.054; not significant, but close to the threshold.
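The pairing of p < 0.05 with z > +1.96 in the list above follows from the standard normal distribution. As a minimal sketch in Python, assuming the z statistics are referred to a standard normal distribution with a two-tailed test (the section does not state this explicitly), the conversion can be written as follows; the function names are illustrative only.

```python
import math

def two_tailed_p(z):
    """Two-tailed p-value for a standard-normal z statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))

def exceeds_threshold(z, critical=1.96):
    """True when |z| lies beyond the conventional 5% critical value."""
    return abs(z) > critical

p_at_critical = two_tailed_p(1.96)  # very close to 0.05
```

Under this assumption, a category with p slightly above 0.05 has a z value just inside ±1.96, which is why such categories are described as close to the threshold.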

5. Discussion

Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Glossary

| L1/L2 | First language/second language. English L2 here refers to English learned as an additional language in ESL/EFL contexts; for multilinguals, it does not denote chronological order of acquisition, dominance, or proficiency. |

| ESL/EFL | English as a Second Language (ESL) refers to learners acquiring English in a country where English is the dominant or official language and is used naturally outside the classroom. English as a Foreign Language (EFL) refers to learners studying English in a non-English-speaking country, where English is typically used only in the classroom, so exposure is more limited and structured. |

| BNC/COCA | A combined word frequency list derived from the British National Corpus (BNC) and the Corpus of Contemporary American English (COCA). The combined BNC/COCA list provides frequency rankings that reflect usage in both British and American English. |

| SUBTLEX-UK | A word frequency database based on over 200 million words from British television and film subtitles. As subtitles tend to closely match everyday spoken language, SUBTLEX-UK provides frequency data that are often more representative of colloquial usage than those based on traditional written corpora. |

| Word families | A base word and its inflected or derived forms (e.g., teach, teaches, teacher, teaching). Word family counting assumes learners can recognise related forms once the base word is known. |

| High-frequency words | The most commonly used words in a language. They cover a large proportion of everyday texts and are essential for basic comprehension. |

| Mid-frequency words | Words that occur less frequently than high-frequency items but are still common in academic, literary, and general texts. |

| Low-frequency words | Rare words, often domain-specific, which appear infrequently and are usually acquired incidentally or through specialised reading. |

| Frequency bands | Groupings of words based on how often they occur in large language corpora: high-frequency, 1–3k; mid-frequency, 4–8k; low-frequency, 9k and above (Schmitt & Schmitt, 2014). |
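The band boundaries in the glossary can be expressed as a simple lookup. The following is a minimal sketch in Python, assuming frequency levels are written in the "5k" style used in the appendices; the function name `frequency_band` is hypothetical.

```python
def frequency_band(level):
    """Map a 1,000-word frequency level such as '5k' to 'high', 'mid' or 'low'."""
    k = int(level.strip().lower().rstrip("k"))
    if k < 1:
        raise ValueError("frequency level must be 1k or above")
    if k <= 3:        # 1-3k: high-frequency
        return "high"
    if k <= 8:        # 4-8k: mid-frequency
        return "mid"
    return "low"      # 9k and above: low-frequency

bands = [frequency_band(level) for level in ["1k", "5k", "9k", "15k"]]
```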

Appendix A

| Item | Target Emotion | Frequency | Valence | Arousal | Vignette |

|---|---|---|---|---|---|

| Q23 | dirty | 1k | 4.50 | 3.44 | “Oh no, I need a shower!” |

| Q24 | doubt | 1k | 3.28 | 4.33 | “Can I really figure this out?” |

| Q25 | lazy | 1k | 3.90 | 2.76 | “I should really get up and start taking care of these tasks but I don’t want to.” |

| Q26 | defeat | 3k | 3.74 | 4.14 | “I lost the competition.” |

| Q27 | obsess | 4k | 3.23 | 4.95 | “This movie is so good, I’m going to get their merchandise!” |

| Q28 | bewilder | 5k | 4.32 | 4.57 | “What is going on? Why is our boss wearing a unicorn outfit to work?” |

| Q29 | dismay | 5k | 3.10 | 2.85 | “I can’t believe my job application was rejected again.” |

| Q30 | dizzy | 5k | 3.36 | 4.95 | “Oh no, I need to lie down.” |

| Q31 | inferior | 5k | 3.43 | 4.55 | “Everyone else seems to be doing so much better than me.” |

| Q32 | daze | 7k | 4.14 | 4.50 | “I can’t think clearly” |

| Q33 | phony | 7k | 2.52 | 4.40 | “I really hope they don’t notice that my bag is fake” |

| Q34 | drowsy | 9k | 4.25 | 2.83 | “I need to pull over. I can’t keep driving like this” |

| Q35 | vindictive | 10k | 3.24 | 4.64 | “I am going to hurt you just like how you hurt me” |

| Q36 | luck | 1k | 6.73 | 4.57 | “I feel like I will win this!” |

| Q37 | support | 1k | 6.89 | 3.05 | “I have a safety net. I always have people that I can rely on.” |

| Q38 | secret | 2k | 5.33 | 4.14 | “I need to watch what I say. I do not want them to find out about my partner yet.” |

| Q39 | relieve | 3k | 7.25 | 3.90 | “Thank goodness! I thought it might be something serious.” |

| Q40 | sympathy | 3k | 6.67 | 3.29 | “Oh dear, I can’t imagine what it feels like to be cheated on.” |

| Q41 | nostalgia | 6k | 6.65 | 4.38 | “I miss those good old days.” |

| Q42 | trustworthy | 8k | 7.25 | 4.22 | “I’m glad my friend shares his secrets with me.” |

| Q43 | sociable | 9k | 6.43 | 4.35 | “Aww I love having all these people around and making new friends.” |

| Q44 | bashful | 13k | 5.55 | 4.36 | “Oh? Who is this? I’ve never met her before!” |

| Q45 | homy | 15k | 5.68 | 3.41 | “It is so nice here, I want to live here forever” |

| Q46 | hate | 1k | 1.96 | 6.26 | “Urgh! I really can’t stand him.” |

| Q47 | responsible | 1k | 2.50 | 5.00 | “This is my fault! I will pay the owner for the repairs” |

| Q48 | accuse | 2k | 3.38 | 5.48 | “I haven’t done anything but she is scolding me.” |

| Q49 | hostile | 3k | 2.35 | 5.39 | “I can’t stand noisy children! Get out of my restaurant!” |

| Q50 | curse | 4k | 2.90 | 5.20 | “This is the sixth time I’ve gotten a punctured tyre this month.” |

| Q51 | greed | 4k | 2.48 | 4.45 | “Yum! This ice-cream is so delicious, I want more!” |

| Q52 | insult | 4k | 2.62 | 5.30 | “Why do they have to say such nasty things to me?” |

| Q53 | intimidate | 4k | 2.84 | 5.27 | “He always shouts at me and says I would be fired if I didn’t do well.” |

| Q54 | frantic | 5k | 3.79 | 5.39 | “I have to find it quickly before they realize it’s missing.” |

| Q55 | horrified | 5k | 2.68 | 6.29 | “How could something so awful happen?!” |

| Q56 | reckless | 5k | 3.09 | 5.18 | “I don’t care what happens.” |

| Q57 | squirm | 7k | 3.86 | 5.29 | “Ugh! I can’t stand the sight of blood.” |

| Q58 | foreboding | 10k | 3.53 | 5.30 | “I think something bad is going to happen.” |

| Q59 | grumpy | 10k | 2.81 | 5.05 | “Why is everyone bugging me today?! I wish they would leave me alone.” |

| Q60 | disbelief | 12k | 4.21 | 5.58 | “There is no way this is actually true.” |

| Q61 | famished | 14k | 4.47 | 6.43 | “I haven’t eaten all day and now my stomach is hurting. I really need to eat something!” |

| Q62 | silly | 1k | 6.27 | 5.13 | “I’m acting so childish.” |

| Q63 | adventure | 2k | 7.40 | 6.36 | “I live for new experiences.” |

| Q64 | curious | 2k | 6.37 | 5.90 | “I really want to understand how it works.” |

| Q65 | defence | 2k | 5.36 | 5.11 | “I need to justify myself. I will not let her criticise me like this.” |

| Q66 | impulse | 4k | 5.16 | 5.33 | “I just had to have it.” |

| Q67 | gratitude | 5k | 6.67 | 5.09 | “My mother is so thoughtful and kind.” |

| Q68 | enchant | 6k | 7.16 | 5.27 | “I feel like I’ve stepped into a magical world.” |

| Q69 | flirt | 6k | 6.73 | 5.93 | “You’re so beautiful. You made me forget my pickup line” |

| Q70 | tickle | 6k | 6.14 | 5.86 | “I can’t stop laughing! I can’t stand it anymore.” |

| Q71 | hilarious | 7k | 7.80 | 6.11 | “I killed that joke. Everyone is laughing hysterically!” |

| Q72 | inquisitive | 9k | 6.00 | 5.33 | “I wonder how many planes, pilots, passengers, and bags are at this airport? I have so many questions!” |

| Q73 | euphoric | 11k | 7.80 | 5.25 | “We’re finally having a baby after trying for five years! This is amazing!” |

Appendix B

| Target Emotion | Freq | Dom 1 | Freq | Count 1 | NA1 (%) | Dom 2 | Freq | Count 2 | NA2 (%) | Dom 3 | Freq | Count 3 | NA3 (%) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dirty | 1k | Dirty * | 1k | 48 | 30.77 | Disgust | 2k | 35 | 22.44 | Un(comfort)able | 1k | 18 | 11.54 |

| Doubt | 1k | Doubt * | 1k | 53 | 33.97 | Confuse | 2k | 33 | 21.15 | Worry | 1k | 18 | 11.54 |

| Lazy | 1k | Lazy * | 1k | 111 | 71.15 | Relax | 2k | 24 | 15.38 | Tire | 1k | 19 | 12.18 |

| Defeat | 3k | Sad | 1k | 83 | 53.21 | Disappoint | 2k | 71 | 45.51 | Defeat * | 3k | 27 | 17.31 |

| Obsess | 4k | Excite | 1k | 50 | 32.05 | Happy | 1k | 34 | 21.79 | Obsess * | 4k | 16 | 10.26 |

| Dizzy | 5k | Dizzy * | 5k | 99 | 63.46 | Tire | 1k | 42 | 26.92 | Sick | 1k | 25 | 16.03 |

| Secret | 2k | Care | 1k | 31 | 19.87 | Cautious | 4k | 30 | 19.23 | Secret * | 2k | 30 | 19.23 |

| Relieve | 3k | Relieve * | 3k | 85 | 54.49 | Relief | 2k | 50 | 32.05 | Happy | 1k | 43 | 27.56 |

| Sympathy | 3k | Sad | 1k | 70 | 44.87 | Empathy | 6k | 41 | 26.28 | Sympathy * | 3k | 37 | 23.72 |

| Nostalgia | 6k | Nostalgic * | 7k | 63 | 40.38 | Happy | 1k | 59 | 37.82 | Sad | 1k | 27 | 17.31 |

| Responsible | 1k | Guilty | 2k | 66 | 42.31 | Responsible * | 1k | 40 | 25.64 | Worry | 1k | 19 | 12.18 |

| Greed | 4k | Happy | 1k | 72 | 46.15 | Satisfy | 2k | 27 | 17.31 | Greed * | 4k | 23 | 14.74 |

| Reckless | 5k | Reckless * | 5k | 36 | 23.08 | Care | 1k | 14 | 8.97 | Apathy | 7k | 9 | 5.77 |

| Disbelief | 12k | Doubt | 1k | 45 | 28.85 | Disbelief * | 12k | 32 | 20.51 | Sceptic | 4k | 26 | 16.67 |

| Silly | 1k | Happy | 1k | 56 | 35.90 | Fun | 1k | 36 | 23.08 | Silly * | 1k | 30 | 19.23 |

| Adventure | 2k | Excite | 1k | 81 | 51.92 | Adventure * | 2k | 69 | 44.23 | Happy | 1k | 31 | 19.87 |

| Curious | 2k | Curious * | 2k | 121 | 77.56 | Determine | 2k | 19 | 12.18 | Interest | 1k | 14 | 8.97 |

| Defence | 2k | Angry | 1k | 44 | 28.21 | Confident | 3k | 28 | 17.95 | Defence * | 2k | 26 | 16.67 |

| Impulse | 4k | Excite | 1k | 24 | 15.38 | Happy | 1k | 23 | 14.74 | Impulse * | 4k | 22 | 14.10 |

| Flirt | 6k | Flirt * | 6k | 42 | 26.92 | Confident | 3k | 24 | 15.38 | Happy | 1k | 17 | 10.90 |

| Inquisitive | 9k | Curious | 2k | 124 | 79.49 | Excite | 1k | 33 | 21.15 | Inquisitive * | 9k | 17 | 10.90 |
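The NA columns appear to express each response count as a percentage of a common denominator. The tabulated values are consistent, up to rounding, with 156 respondents; this figure is inferred from the table itself and is not stated in this appendix. A minimal sketch in Python, with an illustrative function name and constant:

```python
N_RESPONDENTS = 156  # assumption: inferred from the tabulated percentages

def agreement_percentage(count, n=N_RESPONDENTS):
    """Share of respondents giving a response, as a percentage to two decimals."""
    return round(100.0 * count / n, 2)

# Counts taken from the Dirty, Lazy and Inquisitive rows.
examples = [agreement_percentage(c) for c in (48, 111, 124)]
```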

Appendix C

| Target Emotion | Freq | Dom 1 | Freq | Count 1 | NA1 (%) | Dom 2 | Freq | Count 2 | NA2 (%) | Dom 3 | Freq | Count 3 | NA3 (%) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| bewilder | 5k | confuse * | 2k | 70 | 44.87 | curious | 2k | 58 | 37.18 | shock | 2k | 28 | 17.95 |

| dismay | 5k | sad | 1k | 54 | 34.62 | disappoint * | 2k | 47 | 30.13 | frustrate | 2k | 42 | 26.92 |

| inferior | 5k | sad | 1k | 56 | 35.90 | disappoint | 2k | 35 | 22.44 | depress | 2k | 13 | 8.33 |

| daze | 7k | confuse * | 2k | 35 | 22.44 | shock | 2k | 27 | 17.31 | distract | 4k | 12 | 7.69 |

| phony | 7k | worry | 1k | 50 | 32.05 | embarrass | 2k | 25 | 16.03 | anxious | 2k | 25 | 16.03 |

| drowsy | 9k | tire | 1k | 40 | 25.64 | dizzy | 5k | 36 | 23.08 | sick | 1k | 32 | 20.51 |

| vindictive | 10k | angry | 1k | 71 | 45.51 | vengeful * | 11k | 58 | 37.18 | revenge | 5k | 26 | 16.67 |

| luck | 1k | confident | 3k | 61 | 39.10 | hope | 1k | 56 | 35.90 | excite | 1k | 37 | 23.72 |

| support | 1k | happy | 1k | 53 | 33.97 | safe | 1k | 46 | 29.49 | grateful | 3k | 32 | 20.51 |

| trustworthy | 8k | happy | 1k | 52 | 33.33 | trust * | 1k | 40 | 25.64 | grateful | 3k | 31 | 19.87 |

| sociable | 9k | happy | 1k | 96 | 61.54 | joy | 2k | 22 | 14.10 | grateful | 3k | 18 | 11.54 |

| bashful | 13k | shy * | 1k | 77 | 49.36 | curious | 2k | 55 | 35.26 | scare | 1k | 24 | 15.38 |

| homy | 15k | comfort * | 1k | 58 | 37.18 | relax | 2k | 42 | 26.92 | happy | 1k | 39 | 25.00 |

| hate | 1k | annoy | 2k | 84 | 53.85 | angry | 1k | 55 | 35.26 | frustrate | 2k | 24 | 15.38 |

| accuse | 2k | confuse | 2k | 68 | 43.59 | sad | 1k | 23 | 14.74 | annoy | 2k | 15 | 9.62 |

| hostile | 3k | annoy | 2k | 90 | 57.69 | angry | 1k | 82 | 52.56 | frustrate | 2k | 24 | 15.38 |

| curse | 4k | frustrate | 2k | 37 | 23.72 | annoy | 2k | 30 | 19.23 | (un)luck(y) | 1k | 38 | 24.36 |

| insult | 4k | angry | 1k | 71 | 45.51 | sad | 1k | 28 | 17.95 | annoy | 2k | 20 | 12.82 |

| intimidate | 4k | sad | 1k | 52 | 33.33 | scare | 1k | 15 | 9.62 | worry | 1k | 14 | 8.97 |

| frantic | 5k | worry | 1k | 51 | 32.69 | anxious | 2k | 45 | 28.85 | panic * | 2k | 36 | 23.08 |

| horrified | 5k | sad | 1k | 62 | 39.74 | shock * | 2k | 39 | 25.00 | disbelief | 12k | 18 | 11.54 |

| squirm | 7k | scare | 1k | 77 | 49.36 | disgust | 2k | 56 | 35.90 | fear | 1k | 26 | 16.67 |

| foreboding | 10k | worry | 1k | 52 | 33.33 | anxious | 2k | 50 | 32.05 | scare | 1k | 34 | 21.79 |

| grumpy | 10k | annoy * | 2k | 105 | 67.31 | frustrate | 2k | 31 | 19.87 | irritate | 4k | 29 | 18.59 |

| famished | 14k | hungry * | 1k | 119 | 76.28 | tire | 1k | 22 | 14.10 | pain | 1k | 20 | 12.82 |

| gratitude | 5k | grateful * | 3k | 64 | 41.03 | love | 1k | 58 | 37.18 | happy | 1k | 51 | 32.69 |

| enchant | 6k | amaze | 2k | 46 | 29.49 | excite | 1k | 33 | 21.15 | happy | 1k | 28 | 17.95 |

| tickle | 6k | happy | 1k | 95 | 60.90 | fun | 1k | 30 | 19.23 | joy | 2k | 28 | 17.95 |

| hilarious | 7k | proud | 2k | 64 | 41.03 | happy | 1k | 62 | 39.74 | confident | 3k | 25 | 16.03 |

| euphoric | 11k | happy * | 1k | 67 | 42.95 | excite | 1k | 52 | 33.33 | joy * | 2k | 21 | 13.46 |

Appendix D

| Target Emotion | Freq | Examples | Sum of Accurate Responses |

|---|---|---|---|

| dirty | 1k | Frustrated, gross, annoyed | 35 |

| doubt | 1k | Anxious, unsure, uncertain | 66 |

| lazy | 1k | Unmotivated, overwhelmed, sluggish | 30 |

| defeat | 3k | Frustrated, dejected, depressed | 69 |

| obsess | 4k | Attracted, addicted, mesmerised | 35 |

| bewilder | 5k | Surprised, funny, puzzled | 62 |

| dismay | 5k | Dejected, hopeless, depressed | 90 |

| dizzy | 5k | Unwell, nauseous, weak | 35 |

| inferior | 5k | Jealous, insecure, worried | 66 |

| daze | 7k | Lost, distraught, disoriented | 40 |

| phony | 7k | Scared, fearful, ashamed | 63 |

| drowsy | 9k | Unwell, fatigue, nauseous | 30 |

| vindictive | 10k | Angry, hateful, resentful | 54 |

| luck | 1k | Optimistic, determined, positive | 33 |

| support | 1k | Loved, secure, comfortable | 55 |

| secret | 2k | Worried, nervous, scared | 54 |

| relieve | 3k | Grateful, thankful, reassured | 35 |

| sympathy | 3k | Pity, sorry, compassion | 35 |

| nostalgia | 6k | Longing, melancholic, wistful | 37 |

| trustworthy | 8k | Glad, appreciated, touched | 45 |

| sociable | 9k | Friendly, welcomed, popular | 30 |

| bashful | 13k | Nervous, confused, anxious | 46 |

| homy | 15k | Content, calm, cosy | 70 |

| hate | 1k | Irritated, disgusted, angry | 75 |

| responsible | 1k | Regret, remorse, sorry | 53 |

| accuse | 2k | Frustrated, misunderstood, wronged | 67 |

| hostile | 3k | Irritated, impatient, angry | 62 |

| curse | 4k | Sad, tired, disappointed | 41 |

| greed | 4k | Hungry, addicted, obsessed | 43 |

| insult | 4k | Hurt, upset, furious | 62 |

| intimidate | 4k | Stressed, hurt, discouraged | 56 |

| frantic | 5k | Scared, nervous, anxious | 52 |

| horrified | 5k | Worried, sorrowful, terrified | 55 |

| reckless | 5k | Fearless, impulsive, free | 30 |

| squirm | 7k | Nauseous, uncomfortable, terrified | 66 |

| foreboding | 10k | Fearful, paranoid, anxious | 47 |

| grumpy | 10k | Angry, impatient, agitated | 60 |

| disbelief | 12k | Unbelievable, suspicious, unimpressed | 33 |

| famished | 14k | Desperate, weak, suffering | 35 |

| silly | 1k | Playful, foolish, comical | 22 |

| adventure | 2k | Adventurous, happy, courageous | 29 |

| curious | 2k | Eager, intrigued, focused | 50 |

| defence | 2k | Determined, annoyed, indignant | 79 |

| impulse | 4k | Greedy, obsessed, tempted | 59 |

| gratitude | 5k | Appreciated, touched, thankful | 61 |

| enchant | 6k | Wonderous, awe, mesmerised | 65 |

| flirt | 6k | Attracted, lustful, infatuated | 55 |

| tickle | 6k | Amused, entertained, giggly | 46 |

| hilarious | 7k | Satisfied, accomplished, funny | 51 |

| inquisitive | 9k | Interested, intrigued, wonder | 45 |

| euphoric | 11k | Ecstatic, elated, overjoyed | 72 |


References

- Aryadoust, V., Ng, L. Y., & Sayama, H. (2021). A comprehensive review of Rasch measurement in language assessment: Recommendations and guidelines for research. Language Testing, 38(1), 6–40.

- Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., & Plumb, I. (2001). The “Reading the Mind in the Eyes” Test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. The Journal of Child Psychology and Psychiatry and Allied Disciplines, 42(2), 241–251.

- Barrett, L. F. (2017a). How emotions are made: The secret life of the brain. Pan Macmillan.

- Barrett, L. F. (2017b). The theory of constructed emotion: An active inference account of interoception and categorization. Social Cognitive and Affective Neuroscience, 12(1), 1–23.

- Barrett, L. F., & Westlin, C. (2021). Navigating the science of emotion. Emotion Measurement, 39–84.

- Baumann, J. F., & Graves, M. F. (2010). What is academic vocabulary? Journal of Adolescent & Adult Literacy, 54(1), 4–12.

- Bazhydai, M., Ivcevic, Z., Brackett, M. A., & Widen, S. C. (2019). Breadth of emotion vocabulary in early adolescence. Imagination, Cognition and Personality, 38(4), 378–404.

- Berscheid, E., Moore, B. S., & Isen, A. M. (1990). Contemporary vocabularies of emotion. In Affect in social behavior (pp. 22–38). Cambridge University Press.

- Bielak, J., & Mystkowska-Wiertelak, A. (2020). Investigating language learners’ emotion-regulation strategies with the help of the vignette methodology. System, 90, 102208.

- Bond, T. (2015). Applying the Rasch model: Fundamental measurement in the human sciences (3rd ed.). Routledge/Taylor & Francis Group.

- Boone, W. J. (2016). Rasch analysis for instrument development: Why, when, and how? CBE Life Sciences Education, 15(4), rm4.

- Boone, W. J., Yale, M. S., & Staver, J. R. (2014). Rasch analysis in the human sciences (pp. 1–482). Springer.

- Boyd, R. L., Ashokkumar, A., Seraj, S., & Pennebaker, J. W. (2022). The development and psychometric properties of LIWC-22. University of Texas at Austin.

- Bradley, M. M., & Lang, P. J. (1999). Affective norms for English words (ANEW): Instruction manual and affective ratings (Technical report C-1). The Center for Research in Psychophysiology.

- Brandenburger, M., & Schwichow, M. (2023). Utilizing Latent Class Analysis (LCA) to analyze response patterns in categorical data. In Advances in applications of Rasch measurement in science education (pp. 123–156). Springer.

- Chen, C., & Truscott, J. (2010). The effects of repetition and L1 lexicalization on incidental vocabulary acquisition. Applied Linguistics, 31(5), 693–713.

- Council of Europe. (2020). Common European framework of reference for languages: Learning, teaching, assessment companion volume. Council of Europe Publishing. Available online: www.coe.int/lang-cefr (accessed on 1 April 2023).

- Crossley, S., Salsbury, T., Titak, A., & McNamara, D. (2014). Frequency effects and second language lexical acquisition: Word types, word tokens, and word production. International Journal of Corpus Linguistics, 19, 301–332.

- Denham, S. A., Bassett, H. H., Brown, C., Way, E., & Steed, J. (2015). “I know how you feel”: Preschoolers’ emotion knowledge contributes to early school success. Journal of Early Childhood Research, 13(3), 252–262.

- DeVellis, R. F., & Thorpe, C. T. (2021). Scale development: Theory and applications. Sage Publications.

- Dewaele, J.-M. (2008). Dynamic emotion concepts of L2 learners and L2 users: A second language acquisition perspective. Bilingualism: Language and Cognition, 11(2), 173–175.

- Dewaele, J.-M. (2015). On emotions in foreign language learning and use. The Language Teacher, 39(3), 13–15.

- Dylman, A. S., Blomqvist, E., & Champoux-Larsson, M. F. (2020). Reading habits and emotional vocabulary in adolescents. Educational Psychology, 40(6), 681–694.

- Ebert, M., Ivcevic, Z., Widen, S. S., Linke, L., & Brackett, M. (2014). Breadth of emotion vocabulary in middle schoolers. Available online: https://elischolar.library.yale.edu/cgi/viewcontent.cgi?article=1038&context=dayofdata (accessed on 24 August 2021).

- Ekman, P. (1999). Facial expressions. In Handbook of Cognition and Emotion (pp. 301–320). John Wiley & Sons Ltd.

- Embretson, S. E., & Reise, S. P. (2013). Item response theory. Psychology Press.

- Encyclopaedia Britannica. (2022). Words for emotions vocabulary word list. Available online: https://www.britannica.com/dictionary/eb/3000-words/topic/emotions-vocabulary-english (accessed on 21 November 2022).

- Encyclopædia Britannica. (2024). Find definitions & meanings of words | Britannica dictionary. Available online: https://www.britannica.com/dictionary (accessed on 21 November 2022).

- Fabes, R. A., Eisenberg, N., Hanish, L. D., & Spinrad, T. L. (2001). Preschoolers’ spontaneous emotion vocabulary: Relations to likability. Early Education & Development, 12(1), 11–27.

- Ferré, P., Guasch, M., Stadthagen-Gonzalez, H., & Comesaña, M. (2022). Love me in L1, but hate me in L2: How native speakers and bilinguals rate the affectivity of words when feeling or thinking about them. Bilingualism: Language and Cognition, 25(5), 786–800.

- Fisher, W. P. J. (1992). Reliability statistics. Rasch Measurement Transactions, 6, 238.

- Glas, C. A. W., & Verhelst, N. D. (1995). Testing the Rasch Model. In G. H. Fischer, & I. W. Molenaar (Eds.), Rasch models: Foundations, recent developments, and applications (pp. 69–95). Springer.

- Goetze, J. (2023a). An appraisal-based examination of language teacher emotions in anxiety-provoking classroom situations using vignette methodology. The Modern Language Journal, 107(1), 328–352.

- Goetze, J. (2023b). Vignette methodology in applied linguistics. Research Methods in Applied Linguistics, 2(3), 100078.

- Grosse, G., Streubel, B., Gunzenhauser, C., & Saalbach, H. (2021). Let’s talk about emotions: The development of children’s emotion vocabulary from 4 to 11 years of age. Affective Science, 2(2), 150–162.

- Ha, A. Y. H., & Hyland, K. (2017). What is technicality? A technicality analysis model for EAP vocabulary. Journal of English for Academic Purposes, 28, 35–49.

- Hoemann, K., Xu, F., & Barrett, L. F. (2019). Emotion words, emotion concepts, and emotional development in children: A constructionist hypothesis. Developmental Psychology, 55(9), 1830–1849.

- Immordino-Yang, M. H., Yang, X. F., & Damasio, H. (2016). Cultural modes of expressing emotions influence how emotions are experienced. Emotion, 16(7), 1033.

- Israelashvili, J., Oosterwijk, S., Sauter, D., & Fischer, A. (2019). Knowing me, knowing you: Emotion differentiation in oneself is associated with recognition of others’ emotions. Cognition and Emotion, 33(7), 1461–1471.

- Kopp, C. B. (1989). Regulation of distress and negative emotions: A developmental view. Developmental Psychology, 25(3), 343–354.

- Lakoff, G. (2016). Language and emotion. Emotion Review, 8(3), 269–273.

- Lange, J., Heerdink, M. W., & van Kleef, G. A. (2022). Reading emotions, reading people: Emotion perception and inferences drawn from perceived emotions. Current Opinion in Psychology, 43, 85–90.

- Laukka, P., & Elfenbein, H. A. (2020). Cross-cultural emotion recognition and in-group advantage in vocal expression: A meta-analysis. Emotion Review, 13(1), 3–11.

- Lemhöfer, K., & Broersma, M. (2012). Introducing LexTALE: A quick and valid lexical test for advanced learners of English. Behavior Research Methods, 44(2), 325.

- Linacre, J. M. (2024). A user’s guide to WINSTEPS® MINISTEP: Rasch-model computer programs. Program Manual 5.8.0, 719. WINSTEPS.

- Lindquist, K. A. (2021). Language and emotion: Introduction to the special issue. Affective Science, 2(2), 91–98.

- Mair, P., Hatzinger, R., Maier, M. J., Rusch, T., & Mair, M. P. (2016). Package ‘eRm’. R Foundation.

- Masrai, A. (2019). Vocabulary and reading comprehension revisited: Evidence for high-, mid-, and low-frequency vocabulary knowledge. Sage Open, 9(2), 2158244019845182.

- Mavrou, I. (2021). Emotional intelligence, working memory, and emotional vocabulary in L1 and L2: Interactions and dissociations. Lingua, 257, 103083.

- Mayer, J. D., Caruso, D. R., & Salovey, P. (2016). The ability model of emotional intelligence: Principles and updates. Emotion Review, 8(4), 290–300.

- Mayer, J. D., Salovey, P., & Caruso, D. (2012). Models of emotional intelligence. In Handbook of intelligence (pp. 396–420). Cambridge University Press.

- Merriam-Webster. (2024). Merriam-Webster: America’s most trusted dictionary. Available online: https://www.merriam-webster.com/ (accessed on 21 November 2022).

- Milton, J. (2013). Measuring the contribution of vocabulary knowledge to proficiency in the four skills. In C. Bardel, C. Lindqvist, & B. Laufer (Eds.), L2 vocabulary acquisition, knowledge and use: New perspectives on assessment and corpus analysis (pp. 57–78). Amsterdam; Eurosla.

- Nation, P. (2004). A study of the most frequent word families in the British National Corpus. In Vocabulary in a second language (pp. 3–13). John Benjamins Publishing Company.

- Nation, P., & Beglar, D. (2007). A vocabulary size test. The Language Teacher, 31(7), 9–13.

- Ng, B. C., Cui, C., & Cavallaro, F. (2019). The annotated lexicon of Chinese emotion words. WORD, 65(2), 73–92.

- Nook, E. C., Sasse, S. F., Lambert, H. K., McLaughlin, K. A., & Somerville, L. H. (2017). Increasing verbal knowledge mediates development of multi-dimensional emotion representations. Nature Human Behaviour, 1(12), 881–889.

- Nook, E. C., Stavish, C. M., Sasse, S. F., Lambert, H. K., Mair, P., McLaughlin, K. A., & Somerville, L. H. (2020). Charting the development of emotion comprehension and abstraction from childhood to adulthood using observer-rated and linguistic measures. Emotion, 20(5), 773–792.

- Pavlenko, A. (2008). Emotion and emotion-laden words in the bilingual lexicon. Bilingualism, 11(2), 147–164.

- Pavlenko, A. (2012). Affective processing in bilingual speakers: Disembodied cognition? International Journal of Psychology, 47(6), 405–428.

- Pentón Herrera, L. J., & Darragh, J. J. (2024). Social-emotional learning in English language teaching. University of Michigan Press.

- Pérez-García, E., & Sánchez, M. J. (2020). Emotions as a linguistic category: Perception and expression of emotions by Spanish EFL students. Language, Culture and Curriculum, 33(3), 274–289.

- Qian, D. D., & Lin, L. H. F. (2019). The relationship between vocabulary knowledge and language proficiency. In The Routledge handbook of vocabulary studies (pp. 66–80). Routledge.

- Revelle, W. (2023). Procedures for psychological, psychometric, and personality research [R package psych version 2.3.12]. Available online: https://CRAN.R-project.org/package=psych (accessed on 11 November 2022).

- RStudio Team. (2020). RStudio: Integrated development environment for R (4.3.3). RStudio, PBC.

- Sánchez, M. J., & Pérez-García, E. (2020). Emotion(less) textbooks? An investigation into the affective lexical content of EFL textbooks. System, 93, 102299.

- Schmitt, N., & Schmitt, D. (2014). A reassessment of frequency and vocabulary size in L2 vocabulary teaching. Language Teaching, 47(4), 484–503.

- Schmitt, N., & Schmitt, D. (2020). Vocabulary in language teaching. Cambridge University Press.

- Sette, S., Spinrad, T. L., & Baumgartner, E. (2017). The relations of preschool children’s emotion knowledge and socially appropriate behaviors to peer likability. International Journal of Behavioral Development, 41(4), 532–541.

- Smith, A. B., Rush, R., Fallowfield, L. J., Velikova, G., & Sharpe, M. (2008). Rasch fit statistics and sample size considerations for polytomous data. BMC Medical Research Methodology, 8, 33.

- Smith, E. V., Jr. (2002). Detecting and evaluating the impact of multidimensionality using item fit statistics and principal component analysis of residuals. Journal of Applied Measurement, 3(2), 205–231.

- Stanley, D. (2021). Create American Psychological Association (APA) style tables [R package apaTables version 2.0.8]. Available online: https://CRAN.R-project.org/package=apaTables (accessed on 2 November 2022).

- Streubel, B., Gunzenhauser, C., Grosse, G., & Saalbach, H. (2020). Emotion-specific vocabulary and its contribution to emotion understanding in 4- to 9-year-old children. Journal of Experimental Child Psychology, 193, 104790.

- Szabo, C. Z., Bong, W. L. J., Wang, Y., Chee, A., & Lee, S. T. (forthcoming). Developing a productive emotion vocabulary test for adult speakers of English as an additional language. [Manuscript in preparation].

- Takizawa, K. (2024). What contributes to fluent L2 speech? Examining cognitive and utterance fluency link with underlying L2 collocational processing speed and accuracy. Applied Psycholinguistics, 45(3), 516–541.

- Taylor, J. E., Beith, A., & Sereno, S. C. (2020). LexOPS: An R package and user interface for the controlled generation of word stimuli. Behavior Research Methods, 52(6), 2372–2382.

- van Heuven, W. J. B., Mandera, P., Keuleers, E., & Brysbaert, M. (2014). SUBTLEX-UK: A new and improved word frequency database for British English. Quarterly Journal of Experimental Psychology, 67(6), 1176–1190.

- Vine, V., Boyd, R. L., & Pennebaker, J. W. (2020). Natural emotion vocabularies as windows on distress and well-being. Nature Communications, 11(1), 1–9.

- Warriner, A. B., Kuperman, V., & Brysbaert, M. (2013). Norms of valence, arousal, and dominance for 13,915 English lemmas. Behavior Research Methods, 45(4), 1191–1207.

- Webb, S., Sasao, Y., & Ballance, O. (2017). The updated Vocabulary Levels Test: Developing and validating two new forms of the VLT. ITL-International Journal of Applied Linguistics, 168(1), 33–69.

- Weidman, A. C., Steckler, C. M., & Tracy, J. L. (2017). The jingle and jangle of emotion assessment: Imprecise measurement, casual scale usage, and conceptual fuzziness in emotion research. Emotion (Washington, D.C.), 17(2), 267–295.

- Whissell, C. M. (1989). The Dictionary of Affect in Language. In The measurement of emotions (pp. 113–131). Academic Press.

- Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L. D., François, R., Grolemund, G., Hayes, A., Henry, L., Hester, J., Kuhn, M., Lin Pedersen, T., Miller, E., Bache, S. M., Müller, K., Ooms, J., Robinson, D., Seidel, D. P., Spinu, V., … Yutani, H. (2019). Welcome to the Tidyverse. Journal of Open Source Software, 4(43), 1686.

- Wilkens, R., Dalla Vecchia, A., Boito, M. Z., Padró, M., & Villavicencio, A. (2014). Size does not matter. Frequency does. A study of features for measuring lexical complexity. In Advances in artificial intelligence – IBERAMIA 2014 (Vol. 8864, pp. 129–140). Lecture notes in computer science (Including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). Springer.

- Wright, B. D. (1994). Reasonable mean-square fit values. Rasch Meas Transac, 8, 370. [Google Scholar]

- Zhao, J., & Huang, J. (2023). A comparative study of frequency effect on acquisition of grammar and meaning of words between Chinese and foreign learners of English language. Frontiers in Psychology, 14, 1125483. [Google Scholar] [CrossRef]

- Zhu, Y., Zhang, B., Wang, Q. A., Li, W., & Cai, X. (2018). The principle of least effort and Zipf distribution. Journal of Physics: Conference Series, 1113(1), 012007. [Google Scholar] [CrossRef]

- Zipf, G. K. (2016). Human behavior and the principle of least effort: An introduction to human ecology. Ravenio Books. [Google Scholar]

| Demographics | N (%) |

|---|---|

| Gender | |

| Male | 64 (41%) |

| Female | 92 (59%) |

| Language Background | |

| L1 English | 82 (53%) |

| L2 English | 74 (47%) |

| Nationality | |

| Malaysian | 126 (81%) |

| Non-Malaysian | 30 (19%) |

| Level | Range | N | Min | Max | Mean | SD |

|---|---|---|---|---|---|---|

| Advanced | 80–100 | 103 | 80.00 | 100.00 | 91.74 | 5.75 |

| Upper intermediate | 60–79 | 24 | 61.25 | 78.75 | 71.04 | 5.83 |

| Lower intermediate | <60 | 10 | 40.00 | 55.00 | 48.38 | 4.88 |

| Item | Target Emotion | Dom 1 | Dom 2 | Dom 3 | Match % |

|---|---|---|---|---|---|

| Q23 | dirty | Yes | Yes | Yes | 100 |

| Q24 | doubt | Yes | Yes | Yes | 100 |

| Q25 | lazy | Yes | Yes | Yes | 100 |

| Q26 | defeat | Yes | Yes | Yes | 100 |

| Q27 | obsess | Yes | Yes | Yes | 100 |

| Q28 | bewilder | Yes | Yes | Yes | 100 |

| Q29 | dismay | Yes | Yes | Yes | 100 |

| Q30 | dizzy | Yes | Yes | Yes | 100 |

| Q31 | inferior | Yes | Yes | Yes | 100 |

| Q32 | daze | Yes | Yes | No | 66.66 |

| Q33 | phony | Yes | Yes | No | 66.66 |

| Q34 | drowsy | Yes | Yes | Yes | 100 |

| Q35 | vindictive | Yes | Yes | Yes | 100 |

| Q36 | luck | Yes | Yes | Yes | 100 |

| Q37 | support | Yes | Yes | No | 66.66 |

| Q38 | secret | Yes | Yes | Yes | 100 |

| Q39 | relieve | Yes | Yes | Yes | 100 |

| Q40 | sympathy | Yes | Yes | Yes | 100 |

| Q41 | nostalgia | Yes | Yes | No | 66.66 |

| Q42 | trustworthy | Yes | Yes | Yes | 100 |

| Q43 | sociable | Yes | Yes | No | 66.66 |

| Q44 | bashful | Yes | Yes | Yes | 100 |

| Q45 | homy | Yes | Yes | Yes | 100 |

| Q46 | hate | Yes | Yes | No | 66.66 |

| Q47 | responsible | Yes | Yes | Yes | 100 |

| Q48 | accuse | Yes | Yes | No | 66.66 |

| Q49 | hostile | Yes | Yes | Yes | 100 |

| Q50 | curse | Yes | Yes | Yes | 100 |

| Q51 | greed | Yes | Yes | No | 66.66 |

| Q52 | insult | Yes | Yes | Yes | 100 |

| Q53 | intimidate | Yes | Yes | No | 66.66 |

| Q54 | frantic | Yes | Yes | Yes | 100 |

| Q55 | horrified | Yes | Yes | Yes | 100 |

| Q56 | reckless | Yes | Yes | No | 66.66 |

| Q57 | squirm | Yes | Yes | No | 66.66 |

| Q58 | foreboding | Yes | Yes | Yes | 100 |

| Q59 | grumpy | Yes | Yes | No | 66.66 |

| Q60 | disbelief | Yes | Yes | Yes | 100 |

| Q61 | famished | Yes | Yes | No | 66.66 |

| Q62 | silly | Yes | Yes | Yes | 100 |

| Q63 | adventure | Yes | Yes | Yes | 100 |

| Q64 | curious | Yes | Yes | Yes | 100 |

| Q65 | defence | Yes | Yes | Yes | 100 |

| Q66 | impulse | Yes | Yes | Yes | 100 |

| Q67 | gratitude | Yes | Yes | Yes | 100 |

| Q68 | enchant | Yes | Yes | Yes | 100 |

| Q69 | flirt | Yes | Yes | Yes | 100 |

| Q70 | tickle | Yes | Yes | Yes | 100 |

| Q71 | hilarious | Yes | Yes | No | 66.66 |

| Q72 | inquisitive | Yes | Yes | No | 66.66 |

| Q73 | euphoric | Yes | Yes | Yes | 100 |

| Item | Chisq | df | p-Value | Outfit MSQ | Infit MSQ | Outfit t | Infit t | Discrim |

|---|---|---|---|---|---|---|---|---|

| Q23 | 152.294 | 155 | 0.546 | 0.976 | 0.974 | −0.239 | −0.350 | 0.218 |

| Q24 | 171.465 | 155 | 0.173 | 1.099 | 1.060 | 1.262 | 0.966 | 0.021 |

| Q25 | 157.887 | 155 | 0.420 | 1.012 | 0.996 | 0.159 | −0.031 | 0.149 |

| Q26 | 138.577 | 155 | 0.824 | 0.888 | 0.899 | −2.401 | −2.458 | 0.447 |

| Q27 | 144.565 | 155 | 0.715 | 0.927 | 0.972 | −0.862 | −0.402 | 0.246 |

| Q28 | 151.313 | 155 | 0.569 | 0.970 | 0.978 | −0.579 | −0.491 | 0.237 |

| Q29 | 145.396 | 155 | 0.698 | 0.932 | 0.941 | −0.896 | −0.974 | 0.333 |

| Q30 | 161.952 | 155 | 0.335 | 1.038 | 1.026 | 0.579 | 0.470 | 0.097 |

| Q35 | 145.824 | 155 | 0.689 | 0.935 | 0.944 | −1.316 | −1.320 | 0.334 |

| Q39 | 162.260 | 155 | 0.329 | 1.040 | 1.028 | 0.821 | 0.659 | 0.100 |

| Q41 | 167.880 | 155 | 0.227 | 1.076 | 1.060 | 1.271 | 1.202 | 0.040 |

| Q42 | 142.110 | 155 | 0.763 | 0.911 | 0.929 | −1.091 | −1.080 | 0.356 |

| Q44 | 162.648 | 155 | 0.321 | 1.043 | 1.047 | 0.908 | 1.126 | 0.086 |

| Q45 | 166.265 | 155 | 0.254 | 1.066 | 1.068 | 0.974 | 1.218 | 0.022 |

| Q47 | 155.877 | 155 | 0.465 | 0.999 | 0.995 | 0.004 | −0.101 | 0.200 |

| Q54 | 147.651 | 155 | 0.650 | 0.946 | 0.969 | −0.636 | −0.459 | 0.238 |

| Q55 | 152.778 | 155 | 0.535 | 0.979 | 0.983 | −0.330 | −0.338 | 0.234 |

| Q58 | 168.624 | 155 | 0.215 | 1.081 | 1.053 | 1.014 | 0.833 | 0.051 |

| Q59 | 155.565 | 155 | 0.472 | 0.997 | 0.992 | −0.010 | −0.098 | 0.175 |

| Q60 | 160.045 | 155 | 0.374 | 1.026 | 1.024 | 0.297 | 0.336 | 0.093 |

| Q61 | 149.983 | 155 | 0.599 | 0.961 | 0.976 | −0.311 | −0.235 | 0.196 |

| Q63 | 146.094 | 155 | 0.684 | 0.937 | 0.947 | −1.357 | −1.290 | 0.325 |

| Q64 | 135.482 | 155 | 0.869 | 0.868 | 0.934 | −1.053 | −0.636 | 0.291 |

| Q67 | 155.872 | 155 | 0.465 | 0.999 | 1.014 | 0.005 | 0.312 | 0.145 |

| Q69 | 153.436 | 155 | 0.520 | 0.984 | 0.979 | −0.121 | −0.221 | 0.181 |

| Q71 | 171.739 | 155 | 0.170 | 1.101 | 1.074 | 1.705 | 1.513 | −0.012 |

| Q73 | 151.808 | 155 | 0.557 | 0.973 | 0.961 | −0.472 | −0.826 | 0.315 |
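
The flagging rules described in Section 4.2.1 (t statistics beyond −2 for Q26, a negative discrimination value for Q71) can be illustrated on a few rows of the table above. The following is a minimal sketch rather than the authors' analysis script: the symmetric cutoff of |t| > 2 is an assumption based on the wording in the text, and the helper name `flag_items` and the tuple layout are hypothetical.

```python
# Selected rows copied from the dichotomous item-fit table:
# (item, outfit_t, infit_t, discrimination).
ROWS = [
    ("Q23", -0.239, -0.350, 0.218),
    ("Q26", -2.401, -2.458, 0.447),
    ("Q45", 0.974, 1.218, 0.022),
    ("Q71", 1.705, 1.513, -0.012),
]

T_LIMIT = 2.0  # assumed cutoff: |t| > 2 counts as out of range


def flag_items(rows, t_limit=T_LIMIT):
    """Return {item: [reasons]} for rows that break either rule."""
    flagged = {}
    for item, outfit_t, infit_t, discrim in rows:
        reasons = []
        if abs(outfit_t) > t_limit or abs(infit_t) > t_limit:
            reasons.append("t out of range")
        if discrim < 0:
            reasons.append("negative discrimination")
        if reasons:
            flagged[item] = reasons
    return flagged


if __name__ == "__main__":
    for item, reasons in flag_items(ROWS).items():
        print(item, "-", ", ".join(reasons))
```

Keeping the two rules separate makes it easy to see which criterion an item failed, which matters here because Q26 and Q71 were flagged for different reasons.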

| Item | z-Statistics | p-Value |

|---|---|---|

| Q23 | 1.056 | 0.291 |

| Q24 | 0.865 | 0.387 |

| Q25 | 0.945 | 0.344 |

| Q26 | −1.987 | 0.047 |

| Q27 | −1.072 | 0.284 |

| Q28 | −0.494 | 0.621 |

| Q29 | −1.050 | 0.294 |

| Q30 | −0.262 | 0.793 |

| Q35 | −1.679 | 0.093 |

| Q39 | 0.643 | 0.520 |

| Q41 | 1.118 | 0.264 |

| Q42 | −1.590 | 0.112 |

| Q44 | 1.858 | 0.063 |

| Q45 | 1.683 | 0.092 |

| Q47 | −0.098 | 0.922 |

| Q54 | 0.521 | 0.602 |

| Q55 | −0.906 | 0.365 |

| Q58 | 0.695 | 0.487 |

| Q59 | 0.755 | 0.450 |

| Q60 | 1.599 | 0.110 |

| Q61 | −1.073 | 0.283 |

| Q63 | −1.589 | 0.112 |

| Q64 | −1.017 | 0.309 |

| Q67 | 0.601 | 0.548 |

| Q69 | 0.305 | 0.760 |

| Q71 | 1.939 | 0.052 |

| Item | Chisq | df | p-Value | Outfit MSQ | Infit MSQ | Outfit t | Infit t | Discrim |

|---|---|---|---|---|---|---|---|---|

| Q23 | 161.713 | 155 | 0.340 | 1.037 | 1.033 | 0.462 | 0.435 | 0.133 |

| Q24 | 149.850 | 155 | 0.602 | 0.961 | 0.978 | −0.386 | −0.205 | 0.255 |

| Q25 | 163.957 | 155 | 0.296 | 1.051 | 1.050 | 0.505 | 0.498 | 0.037 |

| Q26 | 155.784 | 155 | 0.467 | 0.999 | 0.999 | 0.028 | 0.032 | 0.192 |

| Q27 | 161.377 | 155 | 0.346 | 1.034 | 1.013 | 0.410 | 0.175 | 0.133 |

| Q28 | 139.445 | 155 | 0.810 | 0.894 | 0.914 | −0.878 | −0.746 | 0.349 |

| Q29 | 132.924 | 155 | 0.900 | 0.852 | 0.896 | −0.940 | −0.723 | 0.385 |

| Q30 | 152.918 | 155 | 0.532 | 0.980 | 0.982 | −0.177 | −0.162 | 0.257 |

| Q35 | 159.021 | 155 | 0.396 | 1.019 | 1.018 | 0.226 | 0.213 | 0.166 |

| Q39 | 151.046 | 155 | 0.575 | 0.968 | 0.980 | −0.313 | −0.182 | 0.231 |

| Q41 | 163.181 | 155 | 0.311 | 1.046 | 1.037 | 0.522 | 0.430 | 0.134 |

| Q42 | 156.556 | 155 | 0.450 | 1.004 | 1.001 | 0.070 | 0.042 | 0.220 |

| Q44 | 142.253 | 155 | 0.760 | 0.912 | 0.927 | −0.891 | −0.758 | 0.337 |

| Q45 | 183.286 | 155 | 0.060 | 1.175 | 1.096 | 1.566 | 0.945 | 0.021 |

| Q47 | 139.361 | 155 | 0.811 | 0.893 | 0.899 | −1.185 | −1.130 | 0.391 |

| Q54 | 142.180 | 155 | 0.761 | 0.911 | 0.907 | −0.853 | −0.935 | 0.379 |

| Q55 | 147.801 | 155 | 0.647 | 0.947 | 0.948 | −0.560 | −0.558 | 0.312 |

| Q58 | 153.714 | 155 | 0.514 | 0.985 | 0.993 | −0.127 | −0.044 | 0.210 |

| Q59 | 148.962 | 155 | 0.622 | 0.955 | 0.967 | −0.379 | −0.278 | 0.268 |

| Q60 | 140.637 | 155 | 0.789 | 0.902 | 0.915 | −1.115 | −0.978 | 0.348 |

| Q61 | 149.637 | 155 | 0.606 | 0.959 | 0.960 | −0.369 | −0.360 | 0.243 |

| Q63 | 149.346 | 155 | 0.613 | 0.957 | 0.957 | −0.447 | −0.452 | 0.274 |

| Q64 | 146.730 | 155 | 0.670 | 0.941 | 0.937 | −0.617 | −0.652 | 0.328 |

| Q67 | 150.628 | 155 | 0.584 | 0.966 | 0.951 | −0.199 | −0.341 | 0.274 |

| Q69 | 151.652 | 155 | 0.561 | 0.972 | 0.964 | −0.288 | −0.385 | 0.239 |

| Q71 | 158.685 | 155 | 0.403 | 1.017 | 1.001 | 0.195 | 0.039 | 0.210 |

| Q73 | 147.618 | 155 | 0.651 | 0.946 | 0.963 | −0.560 | −0.375 | 0.285 |

| Items | z-Statistic | p-Value |

|---|---|---|

| Q23.c1 | 0.567 | 0.570 |

| Q23.c2 | 0.467 | 0.640 |

| Q24.c1 | −0.527 | 0.598 |

| Q24.c2 | −0.474 | 0.635 |

| Q25.c1 | 1.810 | 0.070 |

| Q25.c2 | 1.616 | 0.106 |

| Q26.c1 | −0.997 | 0.319 |

| Q26.c2 | −0.416 | 0.677 |

| Q27.c1 | 0.257 | 0.797 |

| Q27.c2 | −0.775 | 0.439 |

| Q28.c1 | −0.978 | 0.328 |

| Q28.c2 | −0.695 | 0.487 |

| Q29.c1 | 0.029 | 0.977 |

| Q29.c2 | −0.465 | 0.642 |

| Q30.c1 | 2.294 | 0.022 |

| Q30.c2 | 1.845 | 0.065 |

| Q35.c1 | 0.309 | 0.757 |

| Q35.c2 | 1.409 | 0.159 |

| Q39.c1 | −1.054 | 0.292 |

| Q39.c2 | −0.828 | 0.408 |

| Q41.c1 | 1.931 | 0.054 |

| Q41.c2 | 2.202 | 0.028 |

| Q42.c1 | 0.292 | 0.770 |

| Q42.c2 | 0.626 | 0.531 |

| Q44.c1 | −0.969 | 0.333 |

| Q44.c2 | −1.025 | 0.305 |

| Q45.c1 | −0.010 | 0.992 |

| Q45.c2 | 0.621 | 0.534 |

| Q47.c1 | −0.378 | 0.705 |

| Q47.c2 | −0.855 | 0.392 |

| Q54.c1 | 0.740 | 0.459 |

| Q54.c2 | −0.089 | 0.929 |

| Q55.c1 | −0.684 | 0.494 |

| Q55.c2 | 0.163 | 0.870 |

| Q58.c1 | 1.137 | 0.256 |

| Q58.c2 | 1.041 | 0.298 |

| Q59.c1 | −0.113 | 0.910 |

| Q59.c2 | −0.312 | 0.755 |

| Q60.c1 | −0.784 | 0.433 |

| Q60.c2 | −1.255 | 0.209 |

| Q61.c1 | 0.553 | 0.580 |

| Q61.c2 | −0.124 | 0.901 |

| Q63.c1 | 1.047 | 0.295 |

| Q63.c2 | 0.467 | 0.641 |

| Q64.c1 | −0.267 | 0.790 |

| Q64.c2 | −0.647 | 0.517 |

| Q67.c1 | −0.251 | 0.802 |

| Q67.c2 | −0.093 | 0.926 |

| Q69.c1 | −0.217 | 0.828 |

| Q69.c2 | −0.301 | 0.763 |

| Q71.c1 | 0.701 | 0.483 |

| Q71.c2 | 0.457 | 0.648 |
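
The p-values in the Wald test tables appear consistent with a two-sided normal test on the z-statistic, $p = 2(1 - \Phi(|z|))$. The sketch below checks this for three reported rows. It is an illustration under that assumption only; `two_sided_p` is a hypothetical helper and not part of the authors' analysis.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a standard normal z: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2))


# (label, reported z, reported p) copied from the table above.
REPORTED = [
    ("Q30.c1", 2.294, 0.022),
    ("Q30.c2", 1.845, 0.065),
    ("Q41.c2", 2.202, 0.028),
]

if __name__ == "__main__":
    for label, z, p_reported in REPORTED:
        p = two_sided_p(z)
        verdict = "significant" if p < 0.05 else "not significant"
        print(f"{label}: z = {z:+.3f}, p = {p:.3f} "
              f"(reported {p_reported}), {verdict} at 0.05")
```

Under this reading, |z| > 1.96 and p < 0.05 are the same criterion, which is why Q30.c2 (z = 1.845) falls just short of significance.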

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chee, A.J.E.; Szabo, C.Z.; Ambrose, S. Measuring Emotion Recognition Through Language: The Development and Validation of an English Productive Emotion Vocabulary Size Test. Languages 2025, 10, 204. https://doi.org/10.3390/languages10090204
