1. Introduction

The structure of words in human language reflects a long history of adaptation to diverse functional and cognitive pressures. Far from being arbitrary, linguistic form evolves under constraints that promote communicative efficiency and processing ease. Prior research has demonstrated that phonological distinctiveness prevents confusion in speech perception (Wedel et al., 2018), communicative efficiency drives word-length optimization relative to predictability and frequency (Piantadosi et al., 2011), and learning and memory limitations shape lexical organization and transmission (Bybee, 2006; Kirby et al., 2015; Zuidema & De Boer, 2009). Together, these factors suggest that linguistic systems tend to balance multiple pressures that favor effective communication.

Yet, written language introduces an additional modality through which such pressures operate. Orthography is not merely a symbolic representation of sound but a multimodal system that integrates visual, phonological, and semantic cues. Reading recruits both sensory and linguistic processes: visual decoding activates phonological codes and semantic associations even during silent reading (C. A. Perfetti & Hart, 2008; Rayner, 1998). The perceptual appearance of text (its letterforms, contrast, and spacing) affects not only legibility but also reading fluency and word recognition (Beier & Larson, 2010; Tinker, 1963). Despite this, the visual dimension of linguistic form has received relatively little systematic attention compared with the auditory domain.

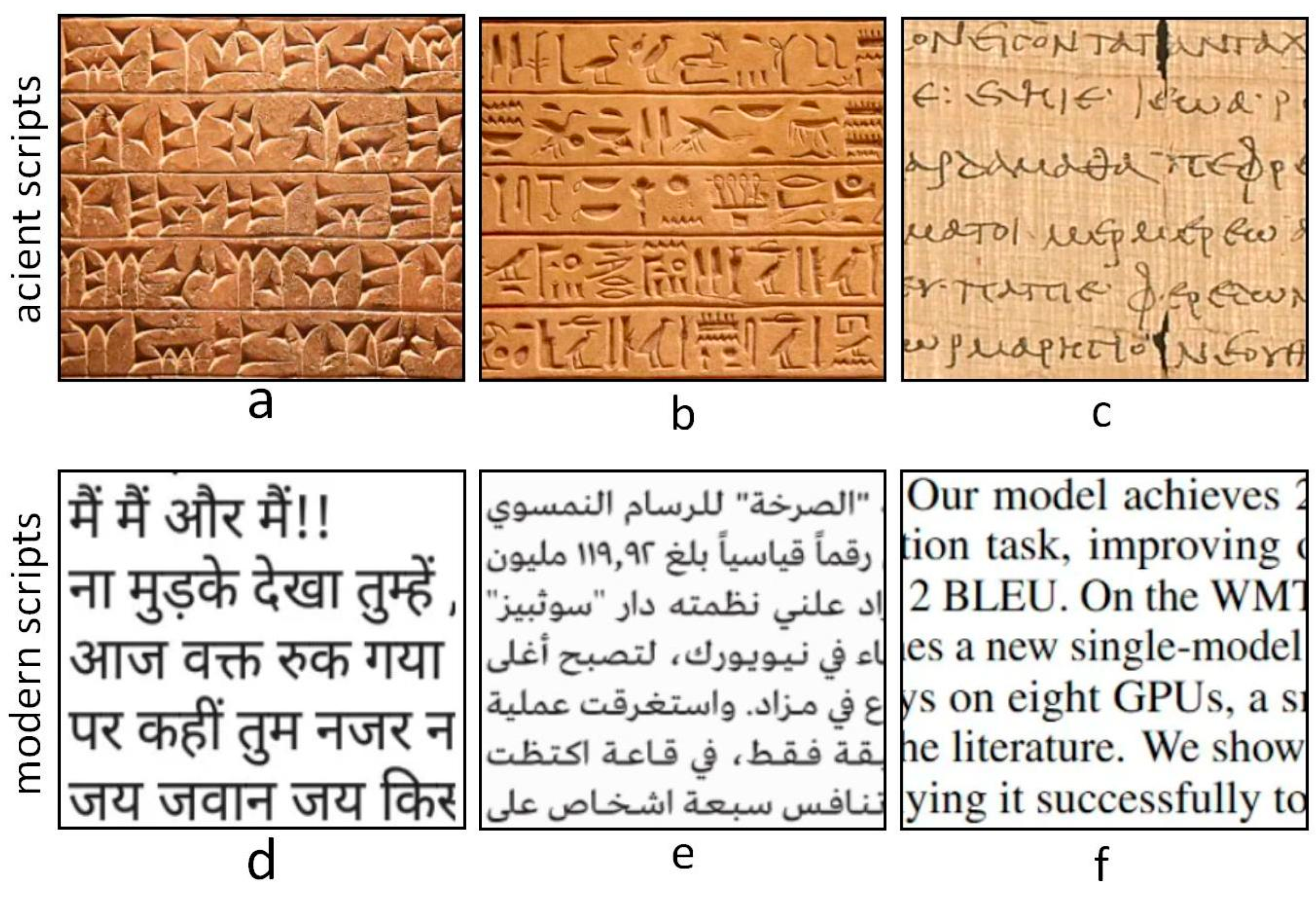

A preliminary illustration of this phenomenon is presented in Figure 1, which compares representative examples of ancient and modern writing systems across several language families. The upper panels display early scripts such as Sumerian cuneiform, Egyptian hieroglyphs, and ancient Greek cursive, while the lower panels show modern counterparts including Devanagari, Arabic, and Latin. As writing systems evolved, the visual separation between words became increasingly pronounced: early scripts show continuous symbol sequences with limited spacing, whereas modern scripts exhibit clearer boundaries and stronger visual differentiation. This visible progression suggests that word-level visual distinctiveness may have gradually increased through cultural evolution, improving legibility and reducing perceptual confusability. Although the comparison is illustrative, it provides an intuitive motivation for examining whether such visual discriminability systematically shapes lexical form across languages.

Evidence from cognitive psychology and typography supports this interpretation. Experimental studies show that increased visual differentiation among graphemes enhances letter identification (Geyer, 1977), reduces error under noisy conditions (Bernard, 2016), and improves reading efficiency. Perceptually distinct graphemes lower confusability (Mueller & Weidemann, 2012), while even subtle typographic refinements, such as spacing adjustments or stroke width variation, can significantly affect lexical decision performance (Beier & Larson, 2010; Tinker, 1963). These findings underscore that orthographic visual distinctiveness is cognitively functional, yet prior work has largely examined perception within fixed orthographies rather than its potential role as a shaping force in the evolution of written form.

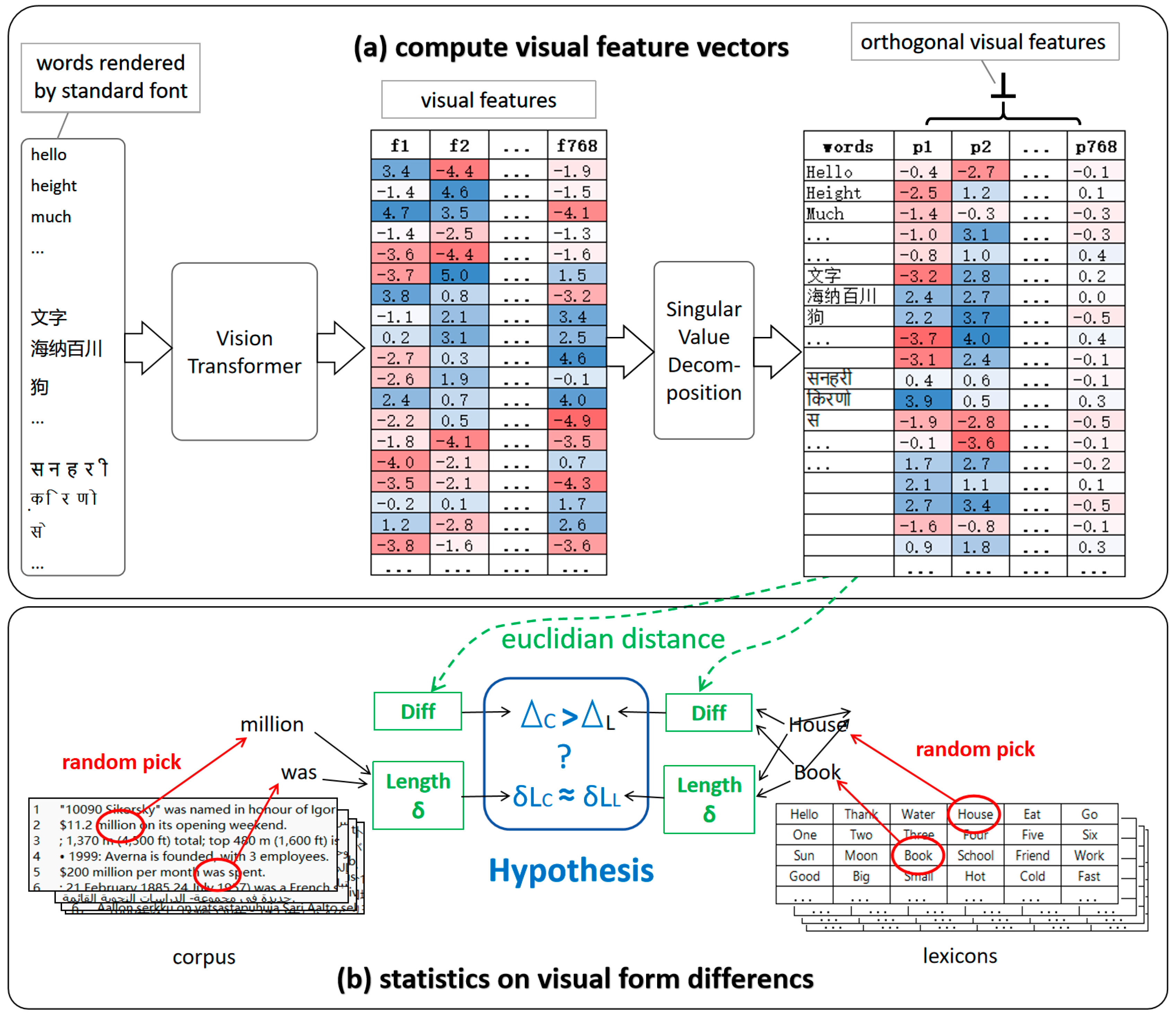

Building on these insights, the present study explores whether orthographic visual distinctiveness systematically constrains lexical form across languages. A direct historical analysis would be ideal but remains infeasible due to limited diachronic corpora. As an alternative, we adopt a large-scale, cross-linguistic approach, leveraging data from 131 languages to test whether words that co-occur in natural corpora tend to be more visually distinct than expected by chance. This method assumes that, if written language has been shaped by long-term visual optimization, then word pairs that co-occur in natural language should display greater visual dissimilarity than randomly paired words drawn from the lexicon.
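The logic of this comparison can be sketched in a few lines of Python. This is a minimal illustration rather than the study's pipeline: it assumes that "co-occurring" means adjacent words within a sentence, that the lexicon is simply the set of word types in the corpus, and that random pairs are drawn uniformly. The function names `cooccurring_pairs` and `random_lexicon_pairs` are hypothetical.

```python
import random


def cooccurring_pairs(sentences):
    """Return pairs of adjacent, distinct words from tokenized sentences.

    Treating adjacency as co-occurrence is an assumption of this sketch.
    """
    pairs = []
    for tokens in sentences:
        for left, right in zip(tokens, tokens[1:]):
            if left != right:
                pairs.append((left, right))
    return pairs


def random_lexicon_pairs(lexicon, n_pairs, seed=0):
    """Draw n_pairs pairs of distinct word types uniformly from the lexicon."""
    rng = random.Random(seed)
    words = sorted(lexicon)  # sorted so the draw is reproducible for a given seed
    return [tuple(rng.sample(words, 2)) for _ in range(n_pairs)]


# Toy corpus, invented for illustration only.
sentences = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["a", "dog", "ran"],
]
lexicon = {word for tokens in sentences for word in tokens}

corpus_pairs = cooccurring_pairs(sentences)
baseline_pairs = random_lexicon_pairs(lexicon, n_pairs=len(corpus_pairs))
```

A visual distance would then be computed for every pair in both lists, and the two resulting distributions compared.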

To operationalize visual structure, we employ Vision Transformers (ViTs), a class of deep neural networks that process images as sequences of visual patches and, through self-attention mechanisms, approximate human shape-based visual perception. Self-attention allows the model to globally weight and integrate information across all parts of an image, enabling sensitivity to overall shape and structural configuration rather than only local visual details. In contrast to traditional convolutional neural network (CNN)-based models, which primarily rely on local convolution filters and hierarchical receptive fields, ViTs directly capture global visual relationships. ViTs have demonstrated strong shape bias (Dehghani et al., 2023) and high cross-script generalizability, successfully modeling diverse character systems including Chinese (Dan et al., 2022), Persian numerals (Ardehkhani et al., 2024), Hangul (Shana et al., 2024), and Manchu (Zhou et al., 2024). Their ability to extract orthographic visual features enables a scalable and language-neutral comparison of visual distinctiveness across writing systems.
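To make the two ideas in this paragraph concrete (an image as a sequence of patches, and self-attention as global weighting across those patches), the following pure-Python sketch may help. It is a deliberately simplified toy and not the model used in the study: it assumes a single attention head with identity query, key, and value projections, whereas real ViTs use learned projections, multiple heads, positional embeddings, and many layers. The names `patchify` and `self_attention` are hypothetical.

```python
import math


def patchify(image, patch):
    """Split a 2D grid (list of rows) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product attention with identity projections.

    Identity projections are a simplification made for this sketch.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        weights = softmax(scores)  # one weight per patch, summing to 1
        outputs.append([sum(w * tok[i] for w, tok in zip(weights, tokens))
                        for i in range(dim)])
    return outputs


# A 4x4 toy "image" split into four 2x2 patches.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
patches = patchify(image, 2)
mixed = self_attention(patches)
```

Each output vector is a weighted mixture of every patch in the image, which is the sense in which attention integrates information globally rather than through local filters.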

To isolate orthographic visual distinctiveness from simpler metrics, we include a control analysis based on word-length difference, which correlates with both visual complexity and lexical processing difficulty (Changizi et al., 2006; New et al., 2006; O’Regan et al., 1984). By comparing visual dissimilarity while accounting for word-length variation, we separate the contribution of structural contrast from mere size effects.
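As a rough illustration of the length-based control measure, the sketch below computes the absolute length difference for a few word pairs. How length is counted is not specified here, so the choice of Unicode code points after NFC normalization is an assumption; counting grapheme clusters would be a reasonable alternative for scripts with combining marks. The function name `length_difference` is hypothetical.

```python
import unicodedata


def length_difference(word_a, word_b):
    """Absolute difference in length, counted in code points after NFC.

    Counting code points (not grapheme clusters) is an assumption of this sketch.
    """
    a = unicodedata.normalize("NFC", word_a)
    b = unicodedata.normalize("NFC", word_b)
    return abs(len(a) - len(b))


# The third pair spells the same word with a combining accent and a
# precomposed accent; NFC normalization makes their lengths agree.
pairs = [("cat", "sat"), ("the", "letter"), ("cafe\u0301", "caf\u00e9")]
differences = [length_difference(a, b) for a, b in pairs]
```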

Our results show that, in most languages, co-occurring word pairs are visually more distinct than randomly paired words, and this pattern persists after controlling for length. These findings suggest that visual distinctiveness functions as an independent, modality-specific constraint in the organization of written lexicons. Beyond their linguistic implications, the results also demonstrate that computational vision models can reveal cognitively relevant regularities in text, bridging perceptual and linguistic structure. This perspective extends functional theories of language design by incorporating visual perceptual pressures into models of lexical and orthographic evolution.

3. Results

To evaluate whether orthographic visual distinctiveness is systematically enhanced in natural language use, we analyzed pairwise visual distances between word forms across 131 languages.

The comparison between co-occurring words in natural corpora and randomly paired words from lexicons revealed a clear and consistent trend: in nearly all languages, naturally co-occurring words are visually more distinct than expected by chance. This effect persists after controlling for word length and is robust across writing systems, indicating that visual differentiation functions as an independent structural property of written language.

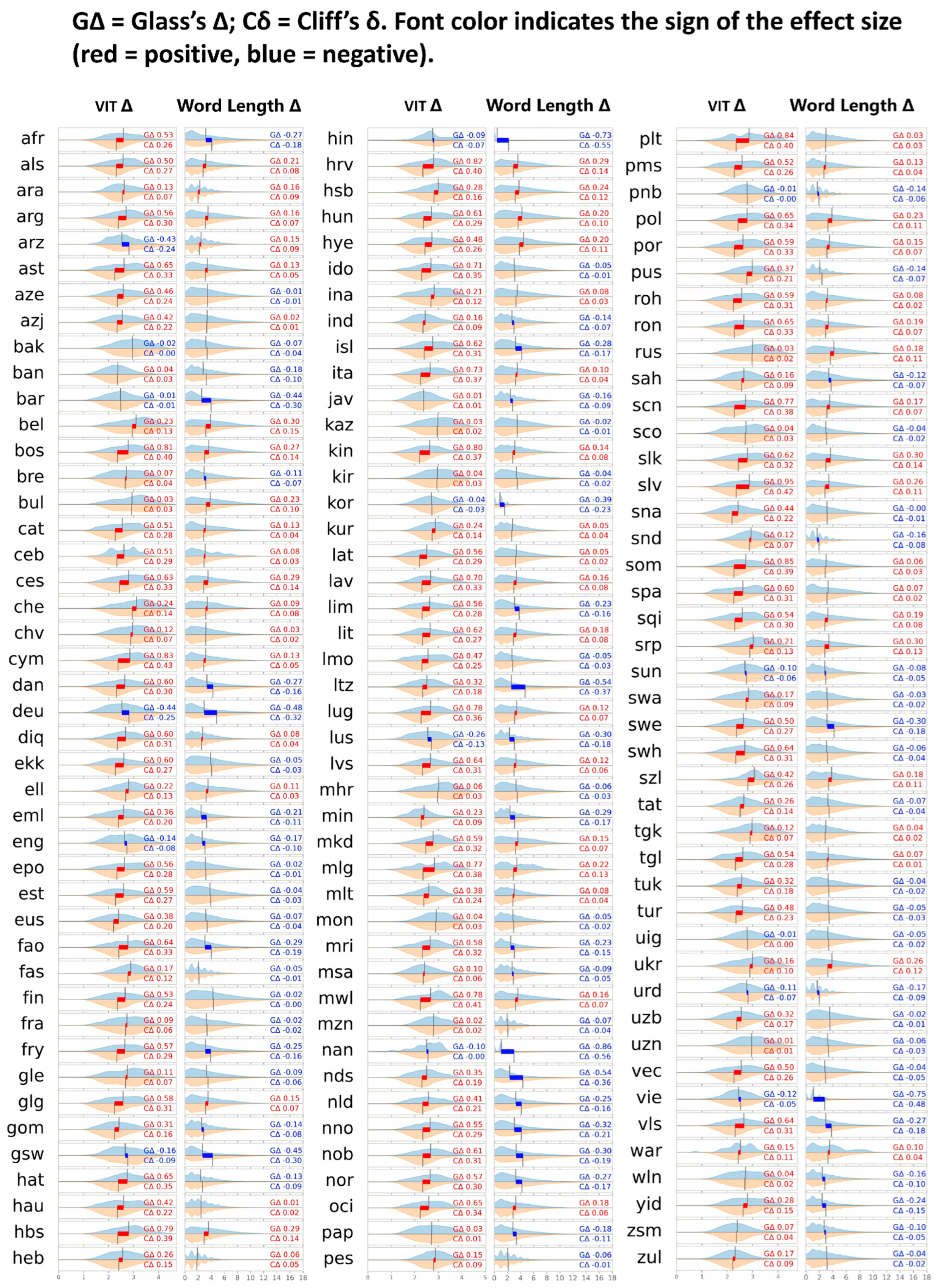

The same comparison was performed on word-length differences, which served as a structural baseline to ensure that visual effects were not merely reflections of size variation. Results from all 131 languages are summarized in Figure 3 and Table 1.

As illustrated in the ridge plots, for nearly every language the blue distributions (corpus pairs) show higher mean visual distances than the orange distributions (lexicon pairs). Effect sizes are positive in the large majority of languages. More detailed statistics are given in Table 1.

Taken together, the figure and table report mean and median effect sizes for the visual and length-based measures (Glass’s Δ and Cliff’s δ). Visual effects show a strong positive bias across languages, whereas length effects remain small and variable. Glass’s Δ exceeds 0.2 in 85 languages and is positive in 118, while Cliff’s δ exceeds 0.1 in 88 and is positive in 118. The unimodal and symmetric shape of most distributions suggests that the enhancement reflects a genuine central tendency rather than a few outliers. These findings indicate that words that co-occur in natural usage are visually more distinct than would be expected by chance, supporting the hypothesis of enhanced orthographic contrast in real language data.

In contrast, word-length differences display weaker and more variable effects. The corresponding distributions are often skewed or multimodal, and effect sizes remain small: Glass’s Δ exceeds 0.2 in only 16 languages (positive in 59), and Cliff’s δ exceeds 0.1 in 15 (positive in 60). There is no systematic pattern showing that corpus pairs differ consistently in length from random pairs. This confirms that the observed visual distinctiveness cannot be explained by trivial structural variation.
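The per-language counts reported above (how many languages exceed a threshold, and how many show a positive effect) amount to a simple tally over one effect size per language. A hedged sketch, with hypothetical language labels and invented values that are not taken from the study:

```python
def tally(effect_sizes, threshold):
    """Count languages whose effect exceeds the threshold, and those above zero."""
    above = sum(1 for value in effect_sizes.values() if value > threshold)
    positive = sum(1 for value in effect_sizes.values() if value > 0)
    return above, positive


# Invented values for four hypothetical languages; not results from the study.
toy_glass_delta = {"lang_a": 0.35, "lang_b": 0.12, "lang_c": -0.05, "lang_d": 0.21}
above, positive = tally(toy_glass_delta, threshold=0.2)
```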

Overall, across 131 languages, visual-form distances derived from ViT embeddings reveal a robust and widespread enhancement of orthographic visual distinctiveness in natural corpora. This large-scale pattern suggests that the visual form of written words is subject to independent structural pressures that promote visual discriminability, a theme further explored in the Discussion.

4. Discussion

This section discusses the broader implications of our findings on orthographic visual distinctiveness and its role in shaping written language. We begin by summarizing the main empirical results and their theoretical significance and then relate them to prior research in orthography, perception, and multimodal language processing. Subsequent subsections address cross-linguistic and typological perspectives, methodological considerations, and potential limitations. Finally, we discuss how these findings inform our understanding of human reading versus model-based “reading” and outline directions for future research and applications in linguistics and multimodal NLP.

4.1. Summary of Findings and Theoretical Implications

This study provides cross-linguistic evidence that orthographic visual distinctiveness in written word forms is systematically enhanced in natural language use. By comparing word pairs sampled from corpora and lexicons across 131 languages, we found that naturally co-occurring words exhibit significantly greater visual distinctiveness, even when controlling for word length. These results demonstrate that visual discriminability is a stable and pervasive property of written language, rather than a by-product of simple structural variation.

Across languages, the visual distance between corpus-based word pairs is consistently larger than that of random lexical pairings. The effect is robust: Glass’s Δ exceeds 0.2 in 85 languages and is positive in 118, while Cliff’s δ exceeds 0.1 in 88 and is positive in 119.

Moreover, both corpus and lexicon distributions are largely unimodal and symmetric, suggesting that the enhancement of visual distinctiveness represents a central tendency across languages rather than the influence of a few outliers.

In contrast, word-length differences show greater variability and lack systematic directionality, confirming that the observed visual effect is not reducible to size or orthographic density.

These findings extend the concept of distinctiveness-based selection, previously established in phonology (Wedel et al., 2018) and lexical semantics (Piantadosi et al., 2011), into the visual domain of written language. Just as spoken languages evolve toward greater phonological contrast to minimize auditory ambiguity, writing systems appear to evolve toward greater visual distinctiveness, reducing perceptual overlap and improving readability. This convergence implies that orthographic visual distinctiveness, like phonological contrast, serves as a functional mechanism optimizing language for communication.

In addition, the results support the integration of visual features into multimodal models of language representation (Kirby et al., 2015), suggesting that perceptual constraints form an essential part of the communicative design of linguistic systems.

4.2. Relation to Prior Work in Orthographic and Perceptual Research

This study builds upon a long tradition of research in perceptual psychology and orthographic design, which has demonstrated that the visual structure of writing systems directly affects reading performance and lexical processing. At the level of individual graphemes, letter distinctiveness has been shown to improve recognition accuracy and reading speed (Geyer, 1977), particularly under degraded or low-contrast conditions (Bernard, 2016). Similarly, graphemic similarity influences lexical access and confusability during word recognition (Mueller & Weidemann, 2012), while typographic variation, including stroke width, spacing, and font style, can modulate both readability and cognitive load even in familiar scripts (Beier & Larson, 2010; Tinker, 1963). Together, these findings underscore that visual form is not a neutral carrier of linguistic information but a functional component of the reading process.

However, most previous studies have focused on within-script perception, employing controlled experiments or artificial stimuli to assess visual confusability and recognition speed. In contrast, our approach extends this inquiry to the language-system level, asking whether natural lexicons themselves show a cumulative bias toward visual differentiation. By analyzing thousands of real word pairs across 131 languages, we reveal a large-scale statistical tendency that complements experimental results: visual discriminability functions as an organizing pressure in written lexical form. This perspective introduces a bridge between micro-level perceptual effects and macro-level linguistic structure, suggesting that perceptual constraints observed in cognitive experiments may also shape the distributional design of orthographic systems over time.

Consequently, these findings provide a theoretical and empirical foundation for integrating perceptual principles into models of multimodal language representation and processing.

4.3. Cross-Linguistic and Typological Considerations

Our cross-language analysis offers insights into how orthographic visual distinctiveness interacts with script typology. While writing systems differ in mapping style (alphabetic vs. logographic), inventory size, directionality, and segmentation conventions (Sampson, 2016), our findings suggest a common tendency across typologies: word forms that co-occur tend to be visually more distinct than random pairings. This generality indicates that perceptual separation may operate as a script-independent constraint on written lexicons.

At the same time, typological factors may modulate the effect. For example, scripts with large grapheme inventories (e.g., Korean characters) or dense visual packing may rely more on shape differentiation (Miton & Morin, 2021), whereas alphabetic systems might exploit spacing, letter-contrast or boundary cues. Features such as cursive connectivity, diacritics, or script-mixing may impose additional visual constraints on word-form design (Meletis & Dürscheid, 2022).

From a typological perspective, our results invite a richer orientation: visual discriminability should be considered as an additional dimension of writing-system variation alongside traditional axes such as phonographic depth, inventory size and transparency. In other words, script design may reflect not only linguistic mapping decisions but also perceptual design pressures. Future research might examine how historical reforms, orthographic simplification, or script transitions correspond to changes in visual separability over time.

In sum, the cross-linguistic evidence supports a broad but nuanced picture: while script conventions vary widely, the drive toward enhanced orthographic visual distinctiveness between word forms appears to be a common structural tendency, thus contributing to our understanding of writing systems as perceptually grounded cultural artifacts.

4.4. Methodological Reflections: Multimodal Analysis

Our findings underline the need to regard written language not simply as a symbolic code but as a multimodal communicative system, where visual, phonological, and semantic information jointly shape linguistic form. Traditional corpus linguistics has focused primarily on lexical or syntactic structure, yet text is inherently visual: its layout, spacing, and letterforms affect readability and cognitive processing (C. A. Perfetti & Hart, 2008).

By representing words as visual objects, this study introduces a scalable way to quantify orthographic form. Using deep vision models such as the Vision Transformer, we can approximate human shape-based perception and thus measure visual distinctiveness across scripts in a language-neutral manner. The key contribution is not technical but conceptual: it demonstrates how computational vision can serve as a methodological bridge between corpus linguistics and the study of orthography, enabling quantitative comparisons of visual structure that were previously unattainable.

In this sense, the multimodal perspective adopted here expands the analytical scope of linguistic research, offering new tools to investigate how perceptual constraints interact with lexical organization and script design.

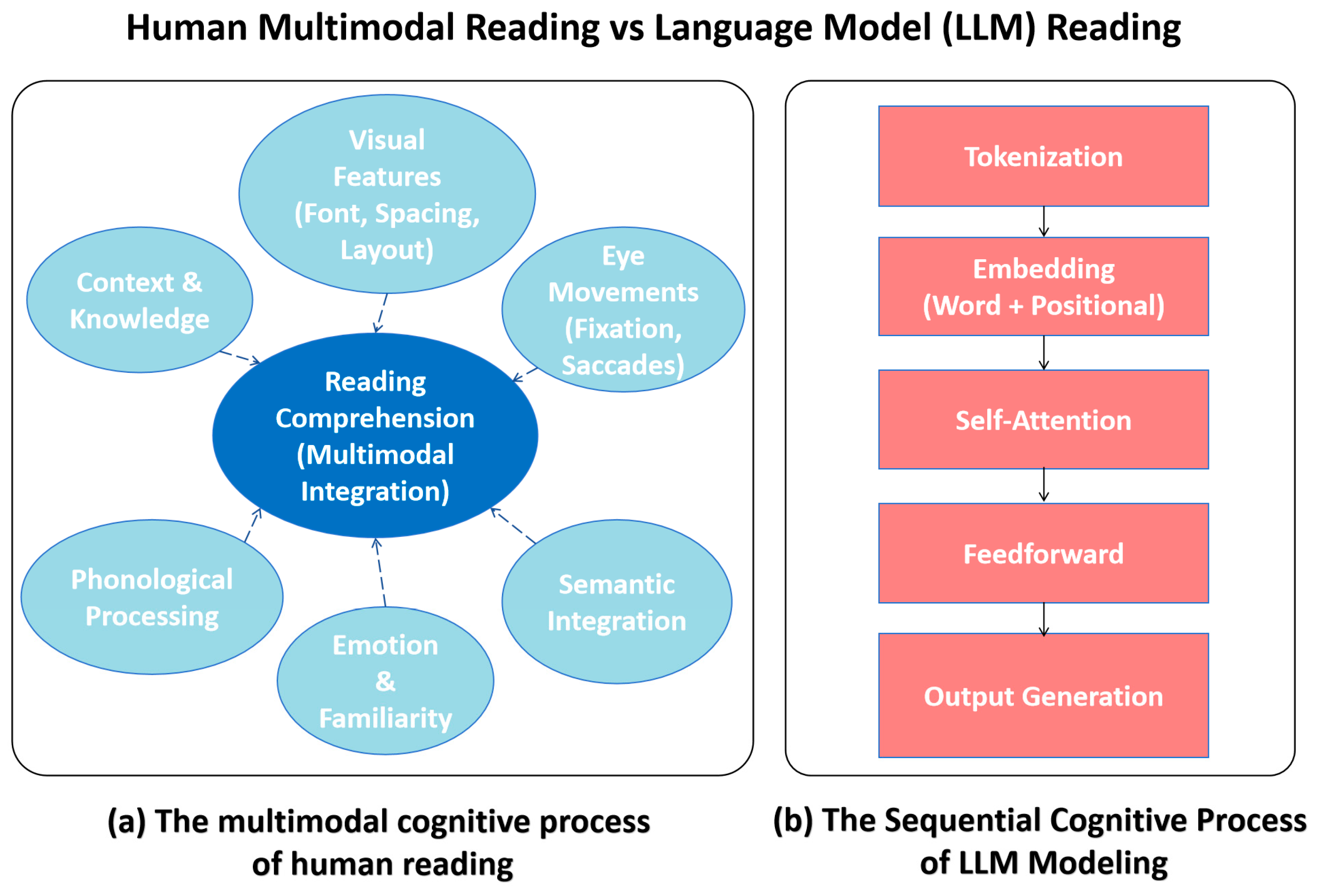

4.5. Human Reading vs. Language Model “Reading”: A Multimodal Gap

Human reading is a deeply multimodal cognitive process, integrating orthographic, phonological, and semantic information with visual properties such as font, spacing, and layout, and dynamically modulated by context, attention, and familiarity (C. Perfetti, 2007; Rayner, 1998; Scaltritti et al., 2019; Slattery & Rayner, 2013). Reading therefore engages both perceptual and linguistic systems: visual form serves not merely as a carrier of symbolic information but as a perceptual interface that shapes recognition and comprehension.

In contrast, current large language models (LLMs) treat text as purely symbolic token sequences without perceptual grounding. They process these token sequences through statistical and attentional mechanisms yet remain blind to modality-specific cues such as orthographic visual distinctiveness, spacing, or graphemic structure. This difference reveals a fundamental multimodal gap between human reading and machine processing. While humans rely on the integration of perceptual and linguistic information, LLMs operate on abstracted symbols detached from sensory context.

Our findings highlight this gap: the demonstrated role of orthographic visual distinctiveness underscores how perception constrains linguistic structure in ways current models fail to capture. Incorporating visually informed representations (for instance, features derived from Vision Transformer encodings) may therefore help develop cognitively grounded multimodal language models that better approximate human reading (see Figure 4).

4.6. Limitations and Future Directions

Despite its large cross-linguistic scope, this study has several limitations.

First, the ViT-based visual features used here approximate human shape perception but do not capture the full complexity of reading, such as eye-movement dynamics, familiarity effects, or contextual facilitation.

Second, although word length was explicitly controlled, other psycholinguistically relevant variables—including lexical frequency, predictability, and semantic association—were not directly modeled. These factors may indirectly influence visual distinctiveness and warrant further investigation in extended statistical frameworks.

Third, script-level properties—such as cursiveness, diacritics, or connected writing (e.g., in Arabic or Devanagari)—were not explicitly analysed and could moderate the observed effects.

Accordingly, the present study focuses on alphabetic and non-cursive writing systems in which word-level units are explicitly defined and visually separable. This design choice necessarily limits the typological coverage of the current analysis. In particular, the exclusion of logographic systems such as Chinese and mixed morpho-syllabic systems such as Japanese, as well as cursive writing systems with continuous character connections, means that the present findings cannot yet be generalized to all script types. Extending the present framework to segmentation-dependent scripts and to highly connected writing systems remains an important direction for future research.

Future work should therefore examine these factors within and across script families, integrating typological stratification to better isolate visual and structural influences. In addition, extending the analysis to historical scripts and diachronic corpora would provide direct evidence of how visual optimization unfolds over time.

The findings also point to several directions for future research and application. Theoretically, models of language evolution and orthographic change could incorporate visual discriminability as a central constraint, simulating how phonological, semantic, and visual pressures jointly shape lexical structure. Practically, integrating visual constraints into text design and AI-based generation could enhance readability and accessibility for a broad range of readers.

More broadly, the study underscores the importance of combining visual, phonological, and semantic modeling in multimodal frameworks, paving the way for cognitively grounded approaches to written-language processing and cross-cultural communication.

5. Conclusions

This study provides large-scale cross-linguistic evidence that orthographic visual distinctiveness in written word forms is not arbitrary but reflects systematic pressures toward perceptual distinctiveness. By combining deep visual representations with multilingual corpora, we show that co-occurring words are visually more distinct than random lexical combinations, a pattern that remains robust across 131 languages and independent of word length. These findings suggest that written languages, like spoken ones, evolve under functional pressures that promote discriminability and communicative clarity.

The results extend theories of distinctiveness-based selection to the visual domain, positioning orthographic design as part of a broader adaptive system linking perception, cognition, and communication. They also underscore a key difference between human multimodal reading—which integrates orthographic, phonological, semantic, and contextual information—and the symbolic text processing of current language models. Recognizing this gap invites new perspectives on how perceptual factors shape linguistic form and how future models might incorporate visually grounded representations to better reflect human language processing.

Overall, modeling language as a multimodal system highlights that perception and communication are inseparable dimensions of linguistic structure. Understanding written language through this integrated lens enriches both theoretical linguistics and applied language technologies, bridging cognitive, typological, and computational approaches to the study of human communication.