Non-Native Listeners’ Use of Information in Parsing Ambiguous Casual Speech

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Materials and Procedures

2.3. Statistical Analysis

3. Results

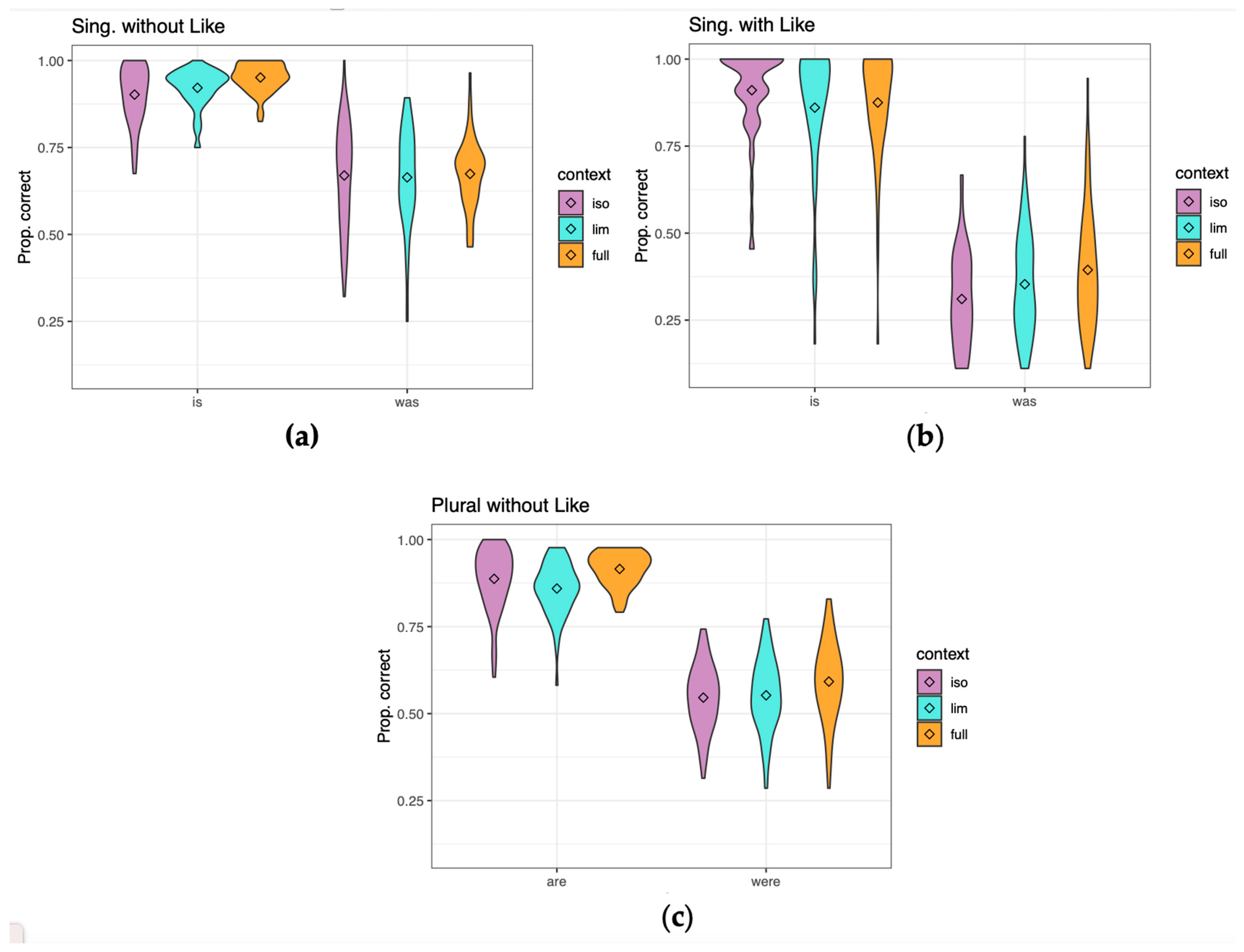

3.1. Proportion Correct

3.2. Relationship to English Proficiency

3.3. Detectability and Bias

4. Discussion

4.1. Comparison to Native Listeners’ Results

4.2. Use of Various Types of Context Information When Not Followed by ‘Like’

4.3. Effects of Following ‘Like’

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

1. We also analyzed all of the results using traditional ANOVAs, which gave similar results for significance/non-significance in nearly all cases and provided confirmation of the significant interactions. The major difference in outcomes was that, in the ANOVAs, some of the pairwise comparisons of Limited Context to the other two contexts that are significant in the LMEs were significant only by subjects or only by items, and were at p < 0.10 on the other test.

2. The model chosen for ‘is’ without ‘like’, ‘is’ with ‘like’, ‘was’ with ‘like’, ‘are’ without ‘like’, and ‘were’ without ‘like’ was Correct ~ Context + (1|Subject) + (1|Item). The model chosen for ‘was’ without ‘like’ was Correct ~ Context + (1 + Context|Subject) + (1|Item).

3. Model for both ‘was’ and ‘were’ without ‘like’ as well as ‘was like’ (below): glmer(Correct ~ Context * Language + (1 + Context|Subject) + (1|Item)). For ‘was’, however, the improvement relative to the same model without the interaction is only marginal (p = 0.082). For ‘were’, the improvement is fully significant (p < 0.001).

4. A reviewer suggests applying a Bonferroni correction for these tests of simple effects in order to be more conservative, even though they are motivated by statistically significant interactions. There are 12 tests of simple effects in all, so Bonferroni correction requires p < 0.00417 for each test to reach significance. This result thus remains significant with correction, as do all other significant results unless otherwise noted.

5. These two comparisons, for the ‘was like’ conditions, do not reach significance with Bonferroni correction.

6. The non-native listeners do not show improvement with either type of context in this ‘was like’ condition under the stricter criterion of α = 0.00417 with Bonferroni correction. Under either criterion, their perception does not improve with the Limited context.
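The Bonferroni threshold quoted in the notes above can be reproduced with a few lines of arithmetic. The sketch below assumes an uncorrected criterion of α = 0.05, which is consistent with the stated threshold of 0.00417 for 12 tests but is not given explicitly in the notes. The function names and the example p-values are hypothetical and serve only as illustration.

```python
# Minimal sketch of the Bonferroni arithmetic described in the notes.
# ASSUMPTION: the uncorrected criterion is alpha = 0.05 (not stated explicitly).
FAMILY_ALPHA = 0.05
N_TESTS = 12  # number of simple-effects tests reported in the notes


def bonferroni_alpha(alpha: float, n_tests: int) -> float:
    """Return the per-test threshold after Bonferroni correction."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


def survives_correction(p_value: float,
                        alpha: float = FAMILY_ALPHA,
                        n_tests: int = N_TESTS) -> bool:
    """True if p_value is below the Bonferroni-corrected threshold."""
    return p_value < bonferroni_alpha(alpha, n_tests)


corrected = bonferroni_alpha(FAMILY_ALPHA, N_TESTS)
print(f"{corrected:.5f}")  # 0.00417

# Hypothetical p-values, for illustration only (not results from the study).
for p in (0.001, 0.02):
    print(p, survives_correction(p))
```

A test with p = 0.02 would count as significant at α = 0.05 but not under the corrected criterion, which is the situation the notes describe for the ‘was like’ comparisons.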

References

- Baese-Berk, M. M., & Bradlow, A. R. (2021). Variability in speaking rate of native and nonnative speech. In R. Wayland (Ed.), Second language speech learning: Theoretical and empirical progress (pp. 312–334). Cambridge University Press.

- Baese-Berk, M. M., Morrill, T. H., & Dilley, L. C. (2016, May 31–June 3). Do non-native speakers use context speaking rate in spoken word recognition [Paper presentation]. 8th International Conference on Speech Prosody (SP2016) (Vol. 979983), Boston, MA, USA.

- Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48.

- Bradlow, A. R., & Alexander, J. A. (2007). Semantic and phonetic enhancements for speech-in-noise recognition by native and non-native listeners. The Journal of the Acoustical Society of America, 121(4), 2339–2349.

- Bradlow, A. R., & Bent, T. (2002). The clear speech effect for non-native listeners. The Journal of the Acoustical Society of America, 112(1), 272–284.

- Brand, S., & Ernestus, M. (2018). Listeners’ processing of a given reduced word pronunciation variant directly reflects their exposure to this variant: Evidence from native listeners and learners of French. Quarterly Journal of Experimental Psychology, 71, 1240–1259.

- Brouwer, S., Mitterer, H., & Huettig, F. (2012). Speech reductions change the dynamics of competition during spoken word recognition. Language and Cognitive Processes, 27(4), 539–571.

- Brown, M., Dilley, L. C., & Tanenhaus, M. K. (2012, August 1–4). Real-time expectations based on context speech rate can cause words to appear or disappear. 34th Annual Meeting of the Cognitive Science Society (Vol. 34, No. 34, pp. 1374–1379), Sapporo, Japan.

- Dilley, L. C., Morrill, T. H., & Banzina, E. (2013). New tests of the distal speech rate effect: Examining cross-linguistic generalization. Frontiers in Psychology, 4, 1002.

- Dilley, L. C., & Pitt, M. A. (2010). Altering context speech rate can cause words to appear or disappear. Psychological Science, 21(11), 1664–1670.

- E. F. Education First Ltd. (2019). EF EPI: EF English proficiency index: A ranking of 100 countries and regions by English skills. EF Education First.

- Ernestus, M., Baayen, H., & Schreuder, R. (2002). The recognition of reduced word forms. Brain and Language, 81(1–3), 162–173.

- Ernestus, M., Dikmans, M. E., & Giezenaar, G. (2017a). Advanced second language learners experience difficulties processing reduced word pronunciation variants. Dutch Journal of Applied Linguistics, 6(1), 1–20.

- Ernestus, M., Kouwenhoven, H., & Van Mulken, M. (2017b). The direct and indirect effects of the phonotactic constraints in the listener’s native language on the comprehension of reduced and unreduced word pronunciation variants in a foreign language. Journal of Phonetics, 62, 50–64.

- Ernestus, M., & Warner, N. (2011). An introduction to reduced pronunciation variants. Journal of Phonetics, 39(3), 253–260.

- Gerritsen, M., Van Meurs, F., Planken, B., & Korzilius, H. (2016). A reconsideration of the status of English in the Netherlands within the Kachruvian three circles model. World Englishes, 35(3), 457–474.

- Gottfried, T. L., Miller, J. L., & Payton, P. E. (1990). Effect of speaking rate on the perception of vowels. Phonetica, 47(3–4), 155–172.

- Greenberg, S. (1999). Speaking in shorthand—A syllable-centric perspective for understanding pronunciation variation. Speech Communication, 29, 159–176.

- Heffner, C. C., Dilley, L. C., McAuley, J. D., & Pitt, M. A. (2013). When cues combine: How distal and proximal acoustic cues are integrated in word segmentation. Language and Cognitive Processes, 28(9), 1275–1302.

- Johnson, K. (2004). Massive reduction in conversational American English. In K. Yoneyama, & K. Maekawa (Eds.), Spontaneous speech: Data and analysis. Proceedings of the 1st session of the 10th international symposium (pp. 29–54). The National International Institute for Japanese Language.

- Lemhöfer, K., & Broersma, M. (2012). Introducing LexTALE: A quick and valid lexical test for advanced learners of English. Behavior Research Methods, 44, 325–343.

- Marcoux, K., Cooke, M., Tucker, B. V., & Ernestus, M. (2022). The Lombard intelligibility benefit of native and non-native speech for native and non-native listeners. Speech Communication, 136, 53–62.

- Miller, J. L., & Volaitis, L. E. (1989). Effect of speaking rate on the perceptual structure of a phonetic category. Perception and Psychophysics, 46, 505–512.

- Morano, L., Bosch, L. T., & Ernestus, M. (2019). Looking for exemplar effects: Testing the comprehension and memory representations of r’duced words in Dutch learners of French. In S. Fuchs, J. Cleland, & A. Rochet-Capellan (Eds.), Speech perception and production: Learning and memory (pp. 245–277). Peter Lang.

- Morrill, T., Baese-Berk, M., & Bradlow, A. (2016). Speaking rate consistency and variability in spontaneous speech by native and non-native speakers of English. Proceedings of the International Conference on Speech Prosody, 2016, 1119–1123.

- Niebuhr, O., & Kohler, K. J. (2011). Perception of phonetic detail in the identification of highly reduced words. Journal of Phonetics, 39(3), 319–329.

- Nijveld, A., Bosch, L. T., & Ernestus, M. (2022). The use of exemplars differs between native and non-native listening. Bilingualism: Language and Cognition, 25(5), 841–855.

- Podlubny, R. G., Nearey, T. M., Kondrak, G., & Tucker, B. V. (2018). Assessing the importance of several acoustic properties to the perception of spontaneous speech. The Journal of the Acoustical Society of America, 143(4), 2255–2268.

- Smiljanić, R., & Bradlow, A. R. (2011). Bidirectional clear speech perception benefit for native and high-proficiency non-native talkers and listeners: Intelligibility and accentedness. The Journal of the Acoustical Society of America, 130(6), 4020–4031.

- van de Ven, M., & Ernestus, M. (2018). The role of segmental and durational cues in the processing of reduced words. Language and Speech, 61(3), 358–383.

- van de Ven, M., Ernestus, M., & Schreuder, R. (2012). Predicting acoustically reduced words in spontaneous speech: The role of semantic/syntactic and acoustic cues in context. Laboratory Phonology, 3(2), 455–481.

- van de Ven, M., Tucker, B. V., & Ernestus, M. (2010, September 26–30). Semantic facilitation in bilingual everyday speech comprehension [Paper presentation]. 11th Annual Conference of the International Speech Communication Association (Interspeech) (pp. 1245–1248), Makuhari, Japan.

- van de Ven, M., Tucker, B. V., & Ernestus, M. (2011). Semantic context effects in the comprehension of reduced pronunciation variants. Memory and Cognition, 39, 1301–1316.

- Volaitis, L. E., & Miller, J. L. (1992). Phonetic prototypes: Influence of place of articulation and speaking rate on the internal structure of voicing categories. The Journal of the Acoustical Society of America, 92(2), 723–735.

- Warner, N., Brenner, D., Tucker, B. V., & Ernestus, M. (2022). Native listeners’ use of information in parsing ambiguous casual speech. Brain Sciences, 12(7), 930.

| Condition | Context | d′ | β | Avg. Prop. Correct |
|---|---|---|---|---|
| is/was, no ‘like’ | Isolation | 1.733 | −0.739 | 0.786 |
| | Limited | 1.842 | −0.917 | 0.793 |
| | Full | 2.106 | −1.267 | 0.813 |
| is/was, with ‘like’ | Isolation | 0.843 | −0.780 | 0.609 |
| | Limited | 0.694 | −0.512 | 0.605 |
| | Full | 0.884 | −0.626 | 0.635 |
| are/were, no ‘like’ | Isolation | 1.326 | −0.726 | 0.717 |
| | Limited | 1.209 | −0.570 | 0.706 |
| | Full | 1.605 | −0.914 | 0.754 |
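The text available here does not say how the d′ and β values in the table above were derived from listeners' responses. As a hedged illustration only, the sketch below shows one common formulation under the equal-variance Gaussian signal detection model, computing sensitivity d′, the criterion c, and ln β from a hit rate and a false-alarm rate. The function names and the example rates are hypothetical, and it is an assumption (not something the source states) that the reported bias measure is on a log or criterion scale; the negative values in the table are at least incompatible with a raw likelihood-ratio β, which is always positive.

```python
from statistics import NormalDist

# Standard normal distribution used for the z-transform.
_STD_NORMAL = NormalDist()


def z(p: float) -> float:
    """Inverse standard-normal CDF; p must lie strictly between 0 and 1."""
    return _STD_NORMAL.inv_cdf(p)


def d_prime(hit_rate: float, fa_rate: float) -> float:
    """Sensitivity: d' = z(H) - z(F)."""
    return z(hit_rate) - z(fa_rate)


def criterion_c(hit_rate: float, fa_rate: float) -> float:
    """Response criterion: c = -(z(H) + z(F)) / 2."""
    return -(z(hit_rate) + z(fa_rate)) / 2


def log_beta(hit_rate: float, fa_rate: float) -> float:
    """Log likelihood-ratio bias: ln(beta) = (z(F)^2 - z(H)^2) / 2."""
    return (z(fa_rate) ** 2 - z(hit_rate) ** 2) / 2


# Hypothetical rates, for illustration only (not data from the study).
hit_rate, fa_rate = 0.85, 0.20
print(round(d_prime(hit_rate, fa_rate), 3))
print(round(criterion_c(hit_rate, fa_rate), 3))
print(round(log_beta(hit_rate, fa_rate), 3))
```

Under this formulation ln β equals c multiplied by d′, so the two bias measures always share a sign. Rates of exactly 0 or 1 have no finite z-score and would need a correction before use.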

| Condition | Context Comparison | Native Listeners | Non-Native Listeners |
|---|---|---|---|
| is, no ‘like’ | Iso. vs. Lim. | Iso. worse | Iso. worse |
| | Lim. vs. Full | Full better | Full better |
| was, no ‘like’ | Iso. vs. Lim. * | Iso. worse | non-sig. |
| | Lim. vs. Full n.s. | non-sig. | non-sig. |
| is, with ‘like’ | Iso. vs. Lim. | Iso. better | Iso. better |
| | Lim. vs. Full | non-sig. | non-sig. |
| was, with ‘like’ | Iso. vs. Lim. n.s. | Iso. worse | Iso. worse |
| | Lim. vs. Full n.s. | non-sig. | Full better |
| are, no ‘like’ | Iso. vs. Lim. | Iso. worse | Iso. better |
| | Lim. vs. Full | Full better | Full better |
| were, no ‘like’ | Iso. vs. Lim. * | Iso. worse | non-sig. |
| | Lim. vs. Full n.s. | non-sig. | Full better |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Warner, N.; Brenner, D.; Tucker, B.V.; Ernestus, M. Non-Native Listeners’ Use of Information in Parsing Ambiguous Casual Speech. Languages 2025, 10, 8. https://doi.org/10.3390/languages10010008