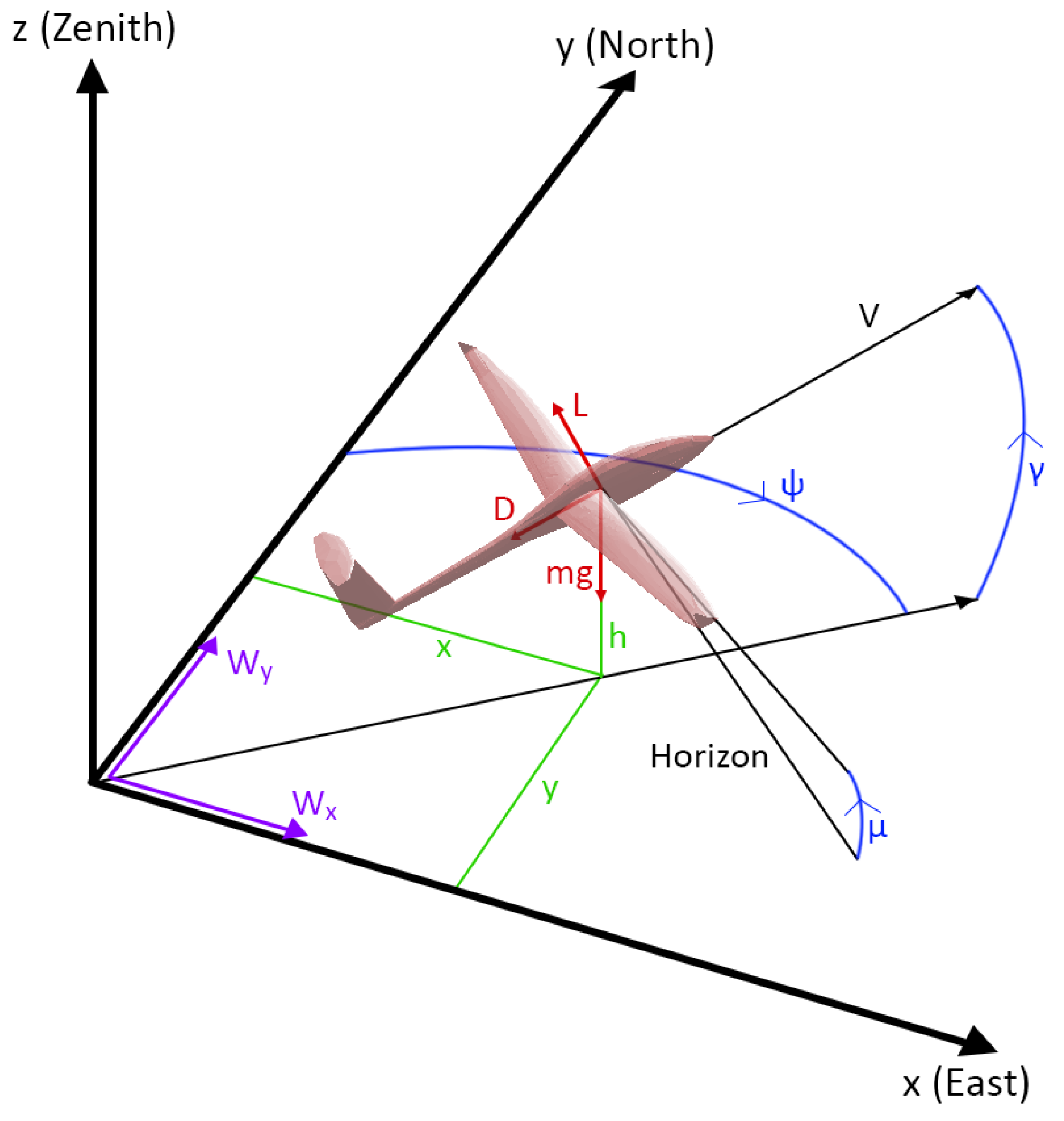

The following autonomous soaring test cases show the evolutionary approach’s capacity to generate simple and effective neurocontrollers. In the first case, a controller was evolved in a bidirectional wind environment using a biological albatross model to compare the trajectory of the neurocontroller to that of an albatross bird. The second case shows a neurocontroller evolved using a typical commercial SUAV model to demonstrate the NEAT-based training approach’s applicability to aerial vehicles, and the third test case presents an instance of SUAV thermal soaring. As was shown in Section 2.1, the state vector used as inputs to the evolving neural networks does not contain any explicit information on the local wind field, and as such, the following neurocontrollers evolved by interacting with the wind model without prior knowledge of the environmental conditions. Similarly, the networks also did not receive the simulation time, indicating that the following results did not arise from a generated schedule of control commands.

Although the length of the simulations can vary greatly between population members due to the inherently unique nature of neurocontroller species, the neural networks shown in the next section required an average CPU time of 0.224 min across all three test cases to evolve a successfully soaring neurocontroller. For reference, the entire evolutionary process was run on an Intel four-core CPU with 16 GB of memory.

4.1. Albatross Dynamic Soaring

The characteristics of the albatross model [1] are shown in Table 1. The parameters of both the flight agent and the wind were selected to compare the results against the data collected and presented by Sachs et al., who recorded the energy extraction cycles of wandering albatrosses through GPS signal tracking [3]. Parameters with the subscript 0 represent initial conditions at time t = 0.

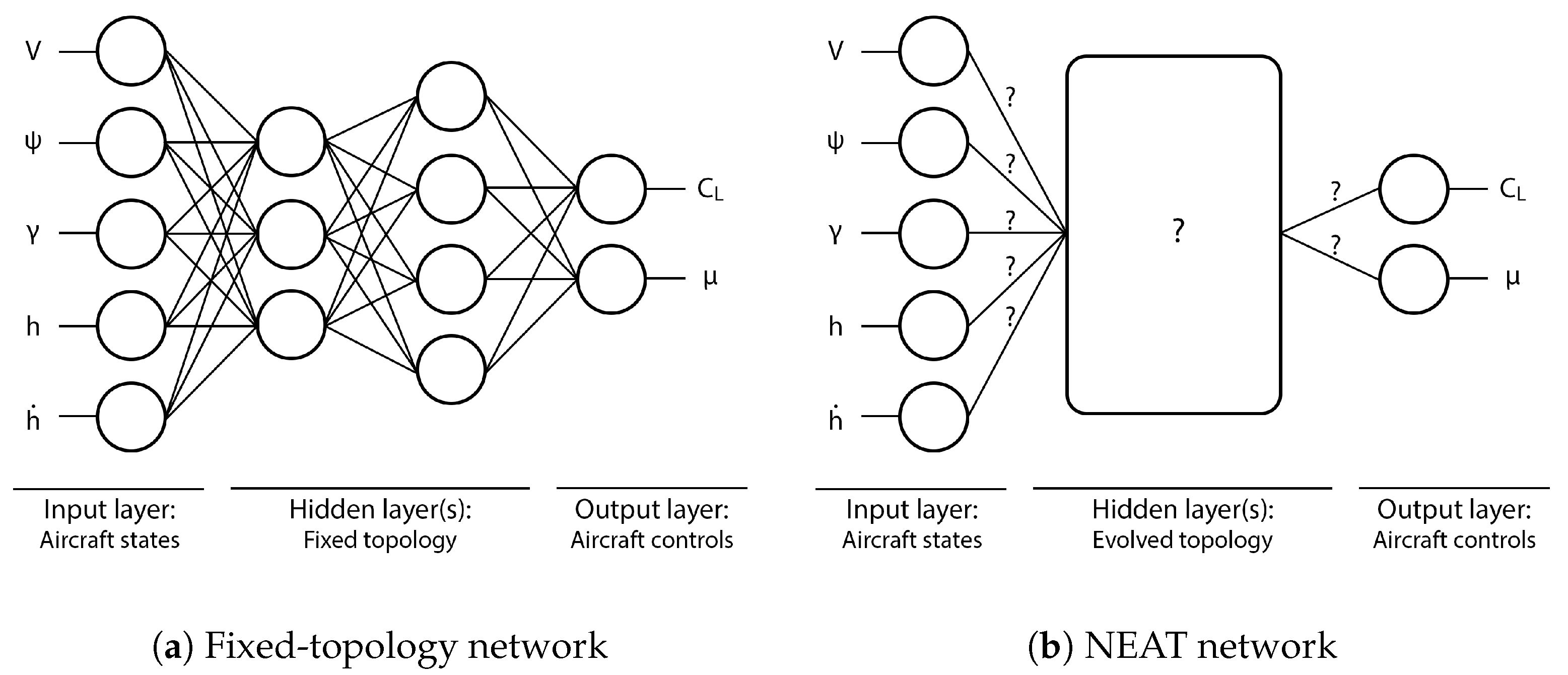

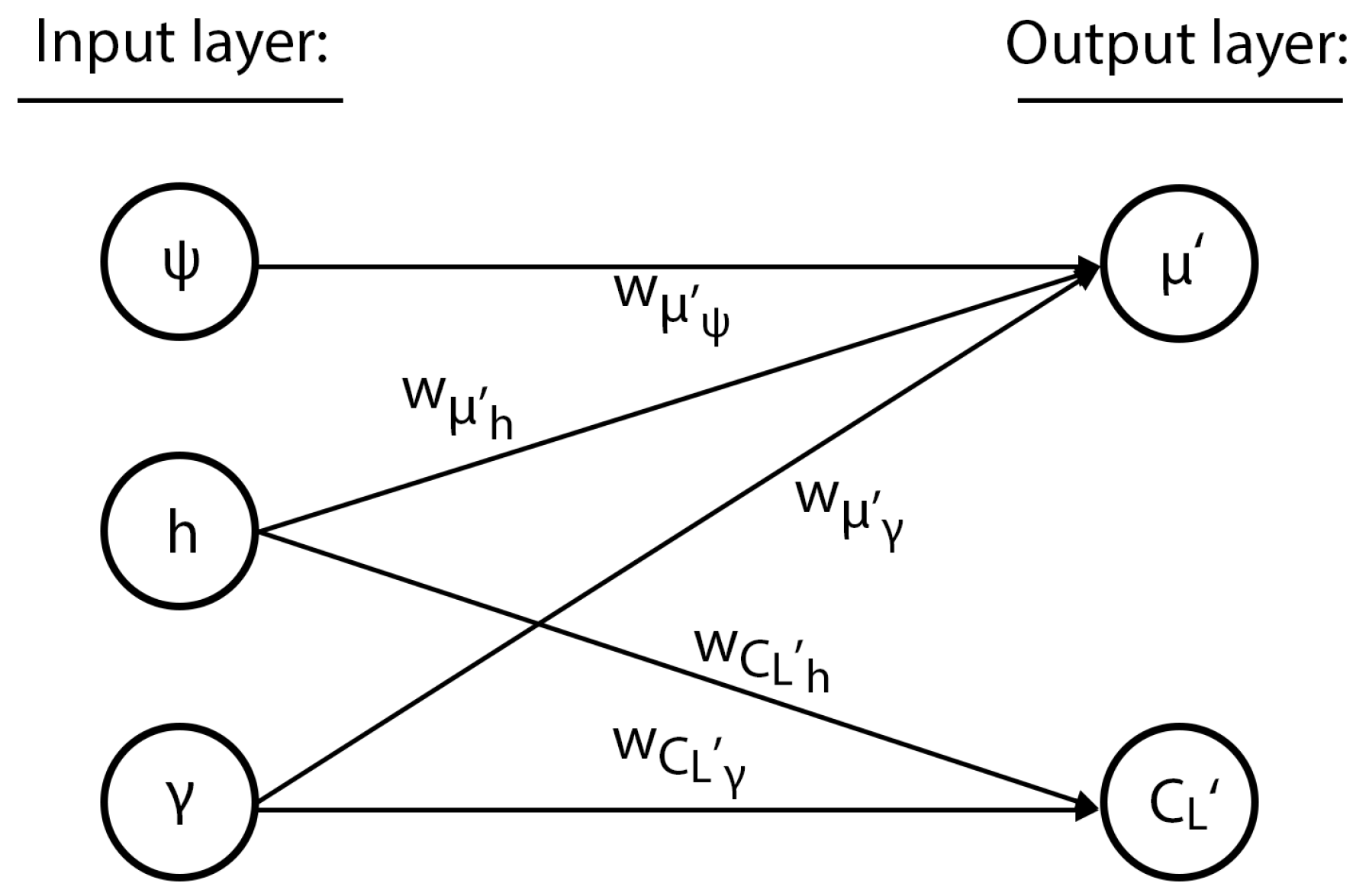

The neurocontroller that was trained using the albatross flight model with a two-dimensional wind profile described in Section 2.3 is shown in Figure 7. Evolved with both the distance-based reward and penalty functions detailed in Section 3.3, the neural network uses a reduced input space of three nodes and no hidden layers, defined only by direct connections to the output nodes.

The two bias nodes, as well as the connection weights w, take the values:

Mathematically, the feedforward network can be described as shown below, where Equation (47) converts the normalized outputs of the sigmoid function to values within the aircraft control limits:

The simplicity of the neural network revealed that the roll angle was determined by the agent’s height, with the lift coefficient determined by the height rate of change, the pitch angle, and the airspeed. This interpretability is important for the implementation and adoption of neurocontrol systems, where understanding the internal mechanisms of networks is a significant component in building trust in such systems. This characteristic of the neuroevolutionary method is further examined in the next test case.

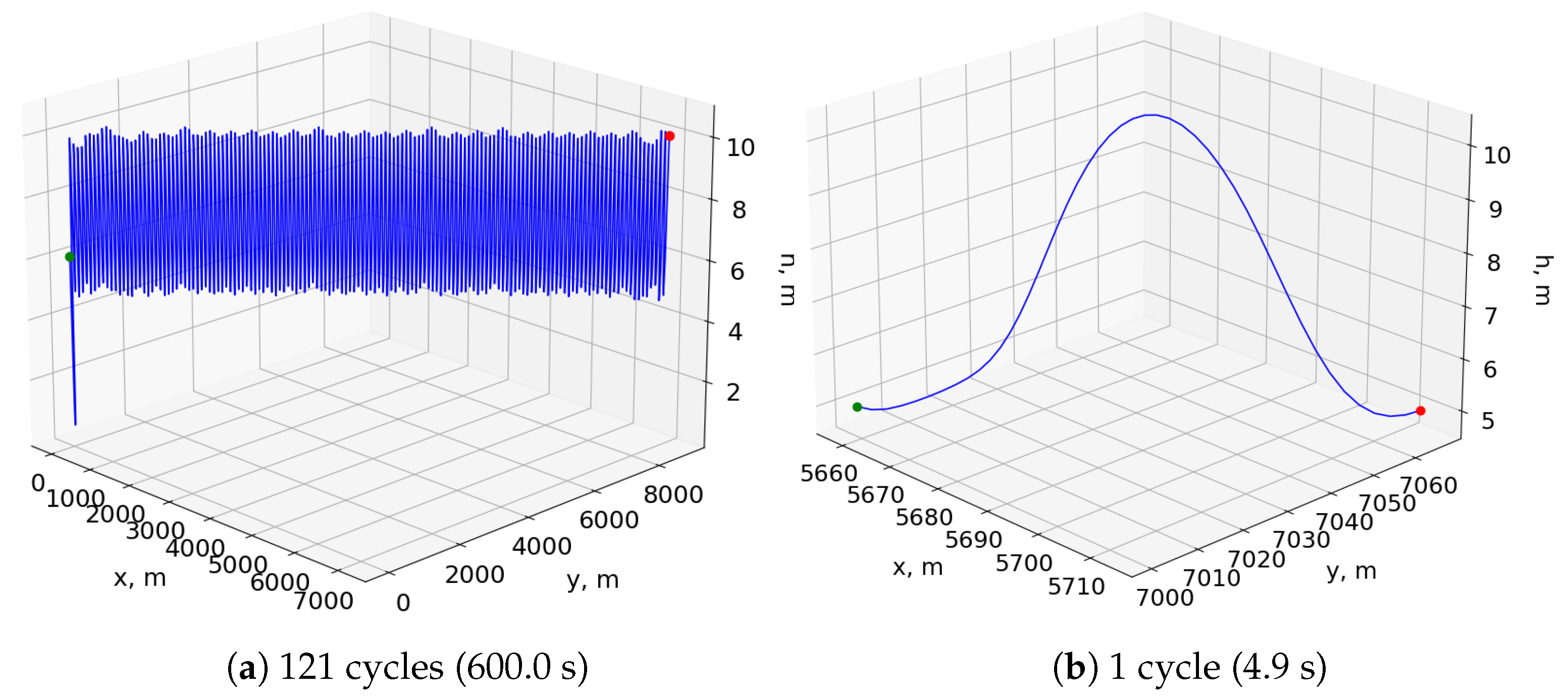

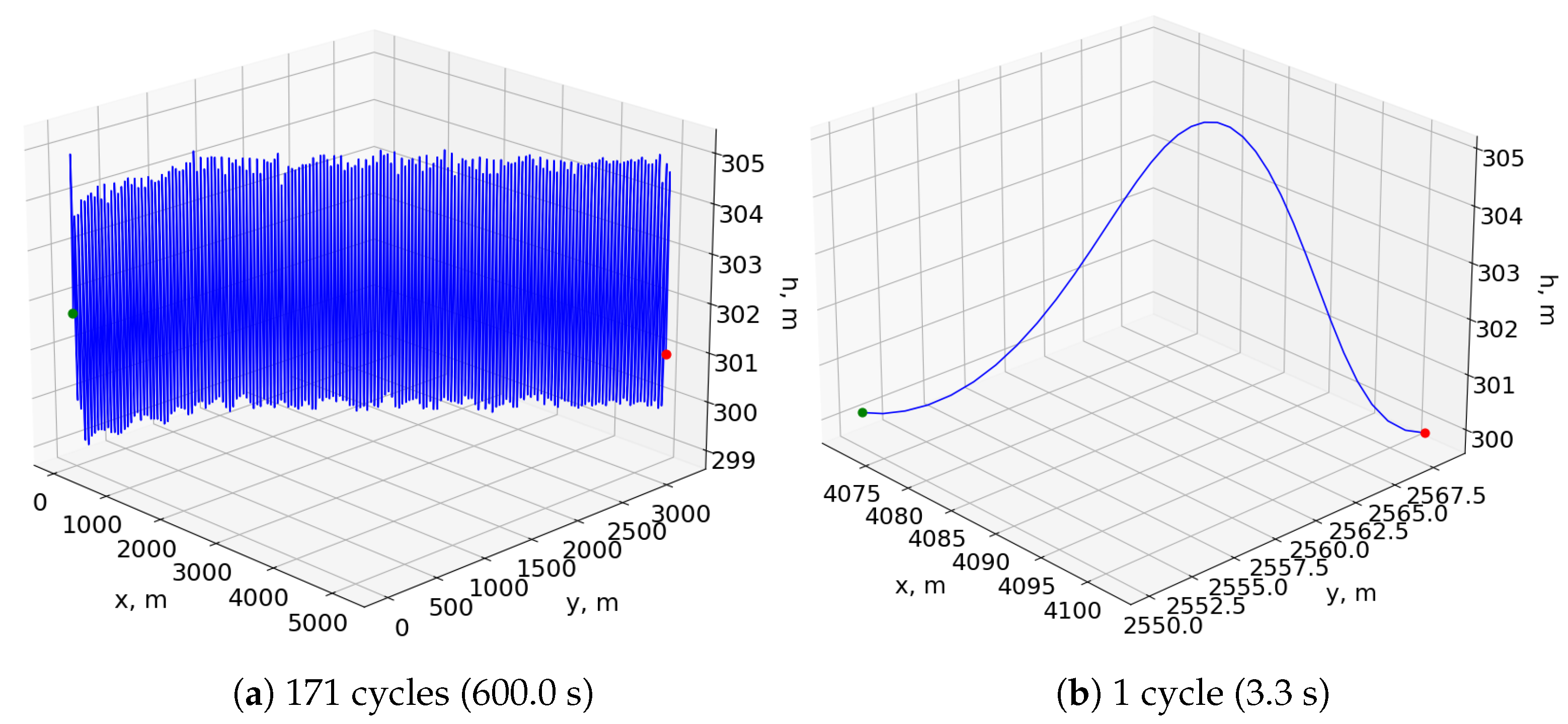

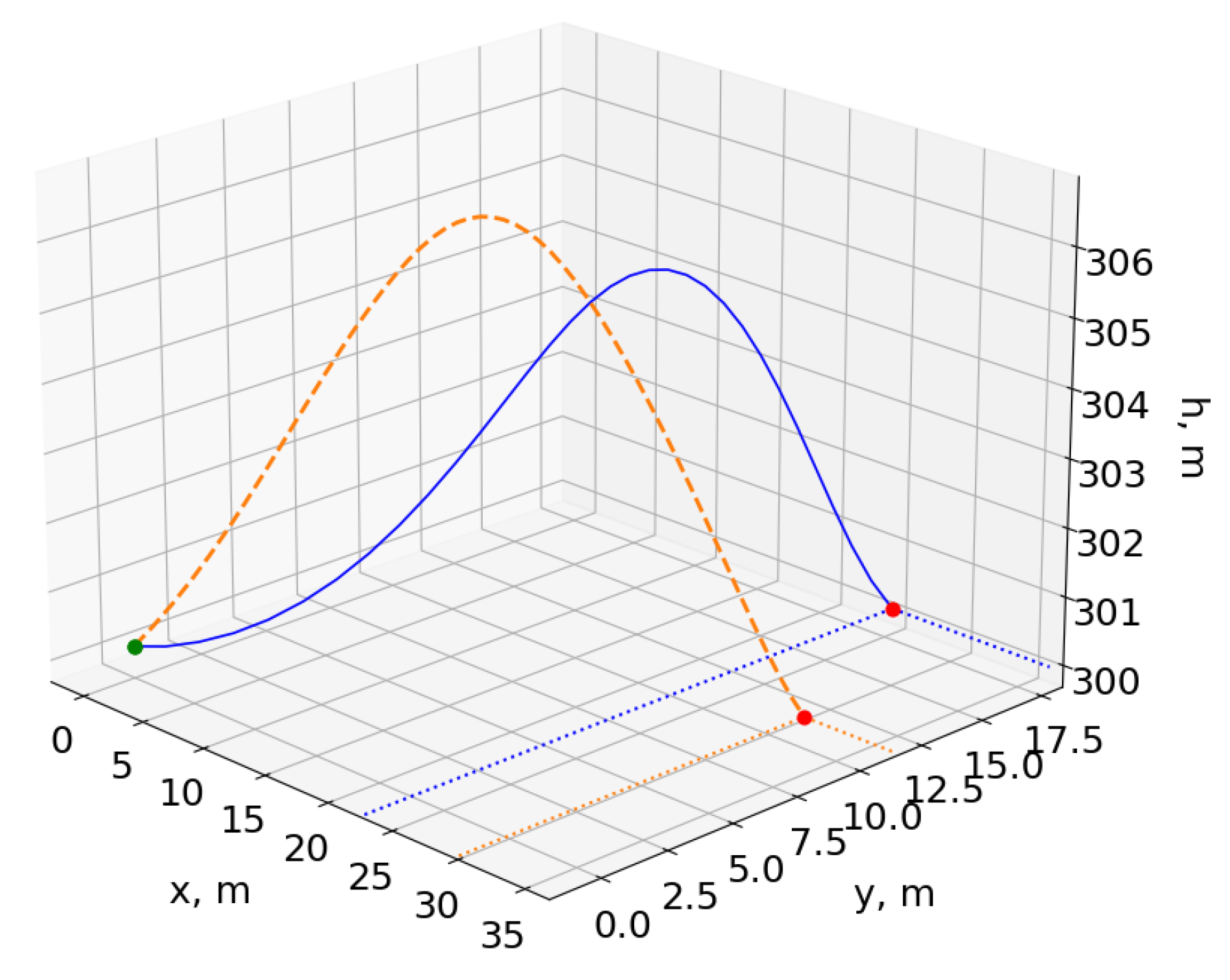

Figure 8a shows the resulting trajectory of simulating this neurocontroller for 600 s in the bidirectional wind environment, and Figure 8b shows a single period of the same trajectory after the aircraft reaches a stable pattern. The flight agent undergoes an initial transitional period before it reaches an equilibrium point conducive to sustained cyclical soaring.

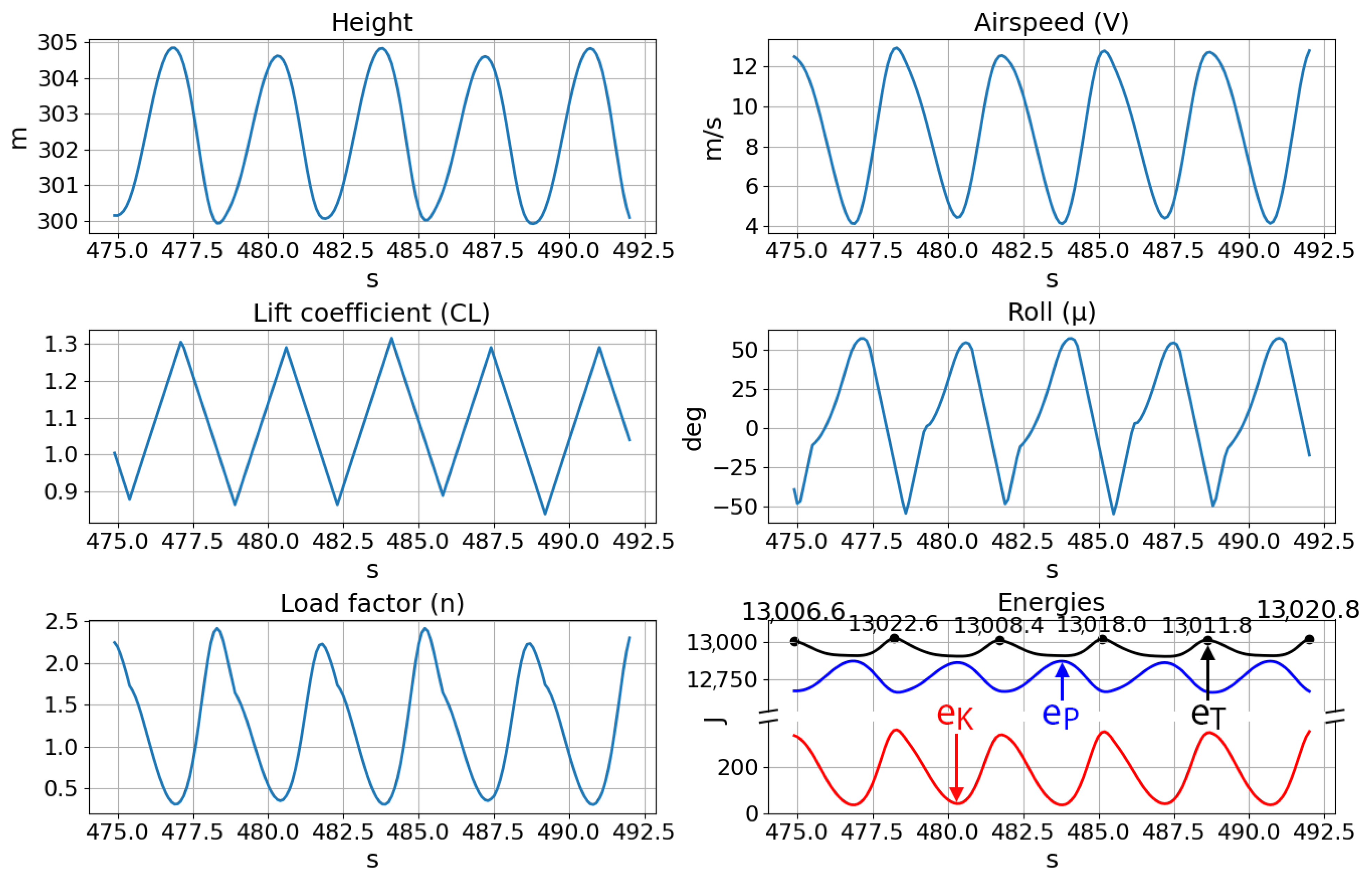

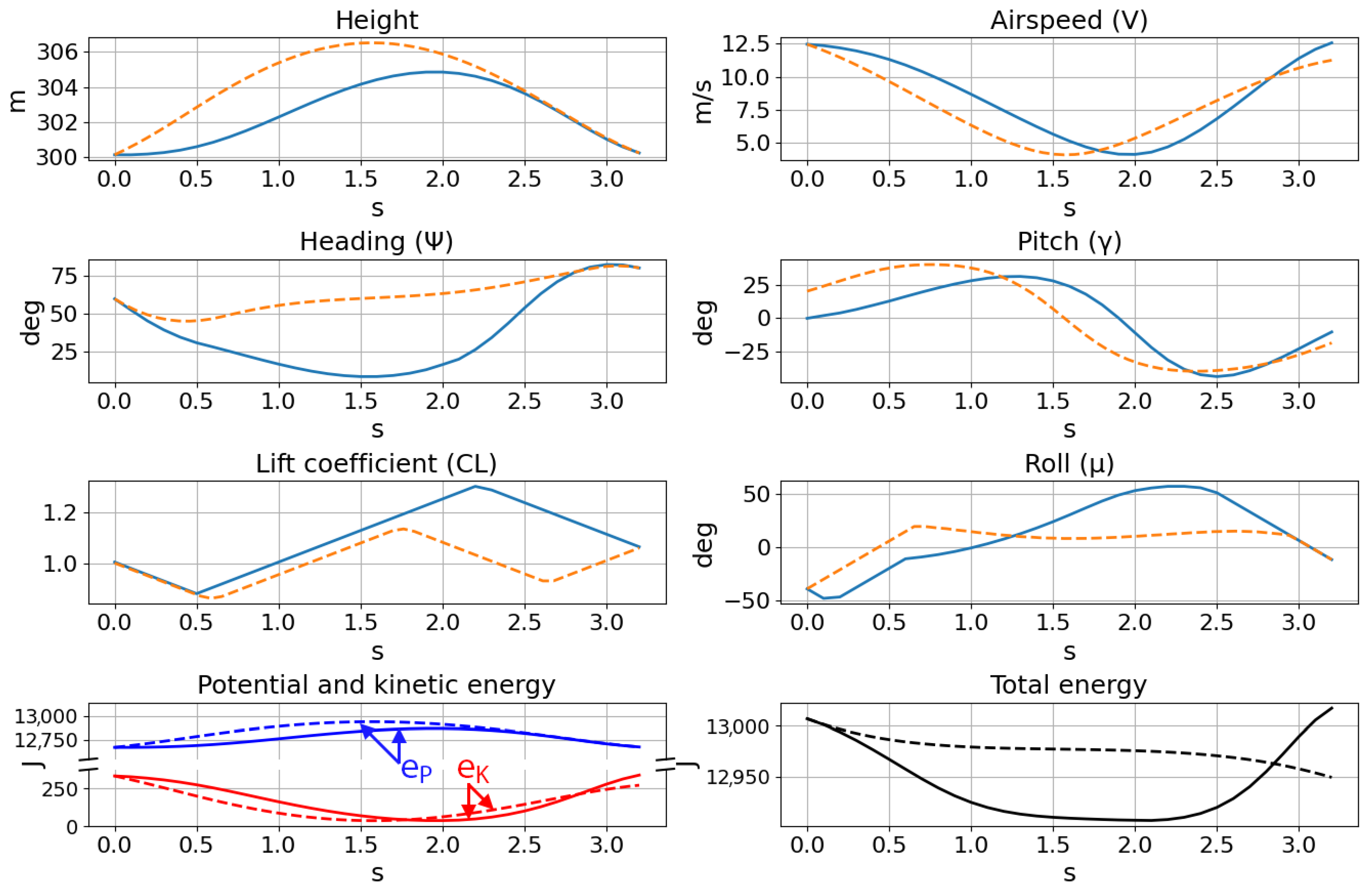

The smooth states of a consecutive five-cycle segment, shown in Figure 9, mimic the soaring trajectories of albatross birds [3]. Furthermore, examination of the maximum total energies of the five cycles reveals that the aircraft experiences positive net changes in energy between some periods and negative net changes between others, with this pattern of gaining and losing excess energy continuing throughout the test. Constantly accumulating additional energy between cycles is both naturally limited by the finite wind gradient and undesirable when maintaining stable soaring cycles. Therefore, sustained dynamic soaring is a problem of ensuring that the total energy of the agent does not fall below a certain threshold under which the ability to extract sufficient energy for future cycles is compromised. The test trajectories showed that the neurocontroller can manage the agent’s energy for continuous dynamic soaring, balancing any losses in energy with gains in subsequent cycles. This ability to generate continuous and stable soaring cycles after simple feedforward passes through a sparse neural network contrasts with the much more limited trajectory horizons of numerical optimization, which typically yields only a single-period trajectory after extensive computation. By ensuring that the fitness criteria in the genetic algorithm are in part a function of the time for which a particular species survives, the proposed method evolves sustainable soaring as a trait within the controller itself.

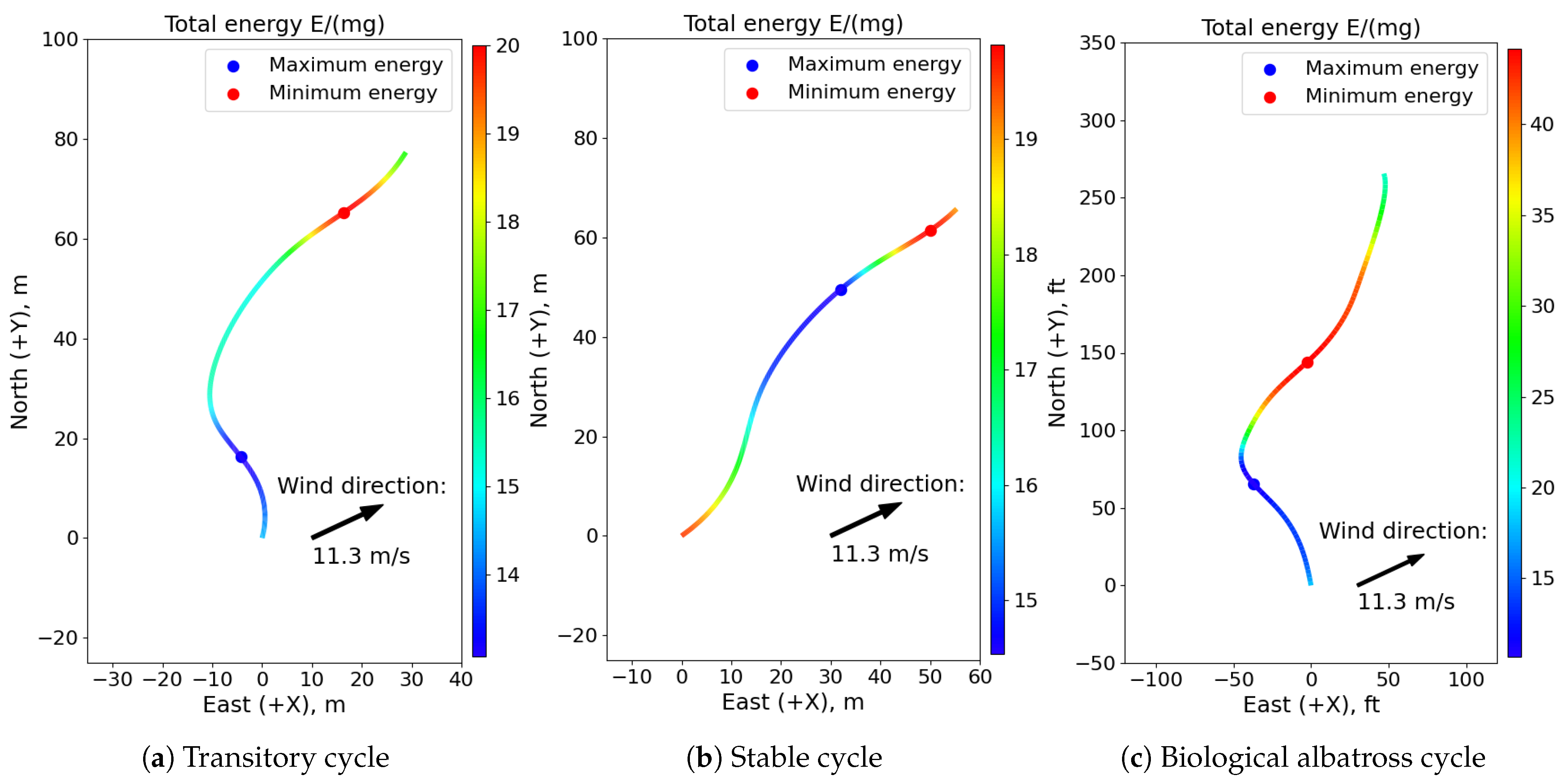

The differences in the total energy histories in the initial transitional phase of the soaring test, its stable phase, and a recorded cycle of a biological albatross flight captured by Sachs et al. [3] are illustrated in Figure 10, presented as specific energies. For the transitional cycle, the point of minimum energy occurs during the upwind climb phase as the flight agent uses the wind gradient to accumulate sufficient energy for sustained soaring. After increasing its total energy over multiple transitional cycles, the agent eventually reaches the altitude limit of the gradient and achieves a maximum airspeed that does not exceed control and structural limits. In the stable cycles, the minimum total energy point exists immediately before the downward sink, showing that the aircraft expends most of its energy during the upwind climb and high altitude turn phases before rapidly accelerating into the dive maneuver. This sharp fluctuation between extremes leaves little time to accumulate additional energy, which is necessary to prevent the agent from soaring out of the wind gradient layer or exceeding aircraft limits.
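The specific-energy comparison and the cycle-to-cycle energy bookkeeping discussed above can be illustrated with a short Python sketch. It assumes the usual definition of specific energy as total mechanical energy per unit mass; whether the speed term is the airspeed or the inertial speed depends on the definition adopted earlier in the paper and is left open here. The sample heights and speeds are hypothetical values chosen only to show one gain followed by one loss.

```python
G = 9.81  # gravitational acceleration, m/s^2

def specific_energy(height_m, speed_ms):
    """Total mechanical energy per unit mass in J/kg: g*h + V^2/2."""
    return G * height_m + 0.5 * speed_ms ** 2

def net_changes(cycle_peaks):
    """Change in peak specific energy between consecutive soaring cycles."""
    return [later - earlier
            for earlier, later in zip(cycle_peaks, cycle_peaks[1:])]

# Hypothetical (height, speed) samples at the energy peak of three cycles.
samples = [(12.0, 20.0), (12.5, 20.2), (12.2, 20.0)]
peaks = [specific_energy(h, v) for h, v in samples]

# A positive entry is a net gain between cycles, a negative entry a net loss.
deltas = net_changes(peaks)
```

Sustained soaring in this bookkeeping means that the entries of `deltas` alternate in sign or stay near zero, rather than drifting steadily in either direction.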

The recorded albatross flight resembles the transitional cycle of the neurocontroller more closely than the stable period, showing a stronger turn into the wind during the upwind climb phase to accrue excess energy. This aggressive maneuver from the albatross may have been necessary in the presence of a varying and uneven wind profile, unlike the deterministic wind experienced by the neurocontroller. In addition, a flying albatross is likely motivated by much more complex objectives, such as minimizing control effort, maximizing flight time, and pursuing prey, that extend beyond the relatively simple distance maximization criterion of the neurocontroller. Regardless, the trajectories of the neurocontroller show the smooth, continuous flight that is characteristic of albatross birds undergoing dynamic soaring. Discrepancies between flight paths can be attributed to the simulation’s unique wind modeling parameters and the inherently more complex behaviors of biological organisms.

4.2. SUAV Dynamic Soaring

Another neurocontroller was evolved using an SUAV model for the flight agent and a different set of wind parameters, all of which are described in

Table 2. The resulting neural network of

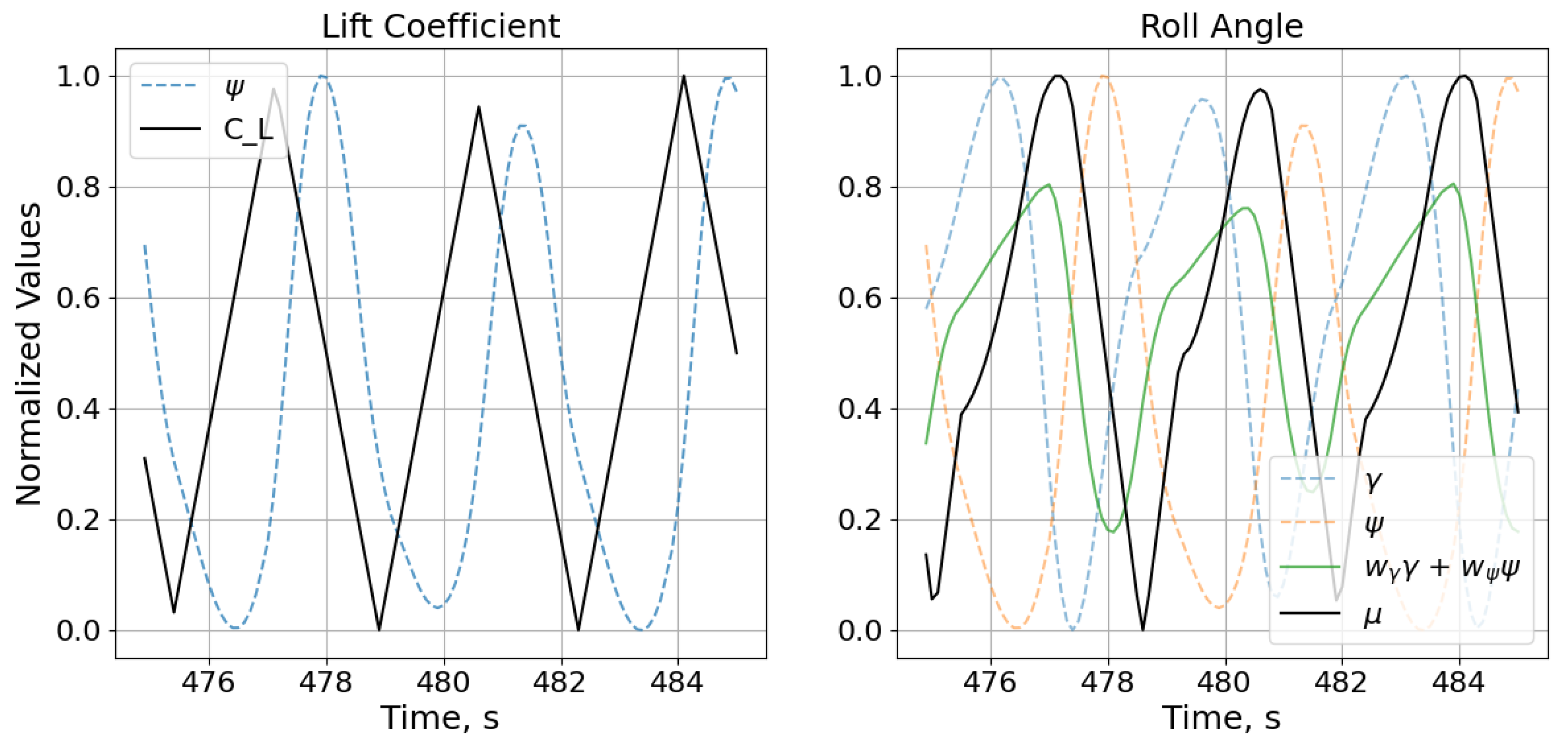

Figure 11 was evolved in a unidirectional wind environment and also only defined by input and output layers much the same as the albatross network, relying solely on the heading and pitch angles.

However, the topology of this neurocontroller is even more sparse than that of the albatross network. This reduction in network complexity can be attributed to the unidirectional wind profile of the environment in which the SUAV controller was evolved. There is simply one less dimension that the network must account for, and since the network must infer the wind model through its effects on the aircraft without receiving measurements of the local wind, this reduction of the wind profile has a nontrivial impact on the resulting topology. Nevertheless, the simplicity of the network further enables its interpretation. The network commands the roll angle based on the aircraft’s pitch and heading angles while determining the angle of attack based on where the aircraft is headed. The NEAT process indirectly encoded the characteristics of the wind profile that the neurocontroller was subjected to during evolution into the neural network’s topology and weights, and this knowledge of the environment was used to determine when to execute pitch and roll maneuvers that led to dynamic soaring trajectories. To further illustrate the close relation between the states and controls, Figure 12 shows the input signals plotted against the output control commands. Even without the effect of the sigmoid function and the subsequent scaling of the normalized controls, the outputs can be seen to track their respective inputs, albeit with offsets that are simply the result of the specific connection weights and biases. The proximity of the network’s inputs to its outputs in terms of the number of intermediary nodes and connections enables the observation and analytical tracking of the network’s feedforward process, and this topological traceability allows for a precise, intuitive understanding of the neurocontroller. This interpretability is an important aspect of validating neurocontrol schemes, and it is difficult to achieve with the abstract, black-box nature of densely interconnected deep neural networks.
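To make the traceability of such a sparse network concrete, the following minimal Python sketch implements a two-input, two-output feedforward pass with no hidden nodes, wired as described above: roll from the pitch and heading angles, and the lift coefficient (the angle-of-attack control) from the heading angle. All weights, biases, and control limits are hypothetical placeholders rather than the evolved values, and the plain logistic sigmoid and linear rescaling are assumptions about the activation and scaling steps.

```python
import math

def sigmoid(x):
    """Plain logistic function mapping any real input to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def scale_to_limits(y, lower, upper):
    """Linearly map a normalized output y in [0, 1] to [lower, upper]."""
    return lower + y * (upper - lower)

# Hypothetical parameters for illustration only (not the evolved values).
PARAMS = {
    "w_pitch_roll": 1.2,     # pitch   -> roll
    "w_heading_roll": -0.8,  # heading -> roll
    "w_heading_cl": 0.6,     # heading -> lift coefficient
    "b_roll": 0.1,           # bias on the roll output
    "b_cl": -0.2,            # bias on the lift coefficient output
}
ROLL_LIMITS = (math.radians(-60.0), math.radians(60.0))  # assumed, rad
CL_LIMITS = (0.0, 1.5)                                   # assumed

def sparse_controller(heading, pitch, p=PARAMS):
    """Direct input-to-output pass: weighted sum, sigmoid, rescale."""
    roll_sum = (p["w_pitch_roll"] * pitch
                + p["w_heading_roll"] * heading
                + p["b_roll"])
    cl_sum = p["w_heading_cl"] * heading + p["b_cl"]
    roll = scale_to_limits(sigmoid(roll_sum), *ROLL_LIMITS)
    cl = scale_to_limits(sigmoid(cl_sum), *CL_LIMITS)
    return roll, cl

roll_cmd, cl_cmd = sparse_controller(heading=math.radians(30.0),
                                     pitch=math.radians(5.0))
```

Because each output depends on at most two inputs through a single weighted sum, every command can be traced back to the states that produced it by inspection, which is the property the paragraph above describes.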

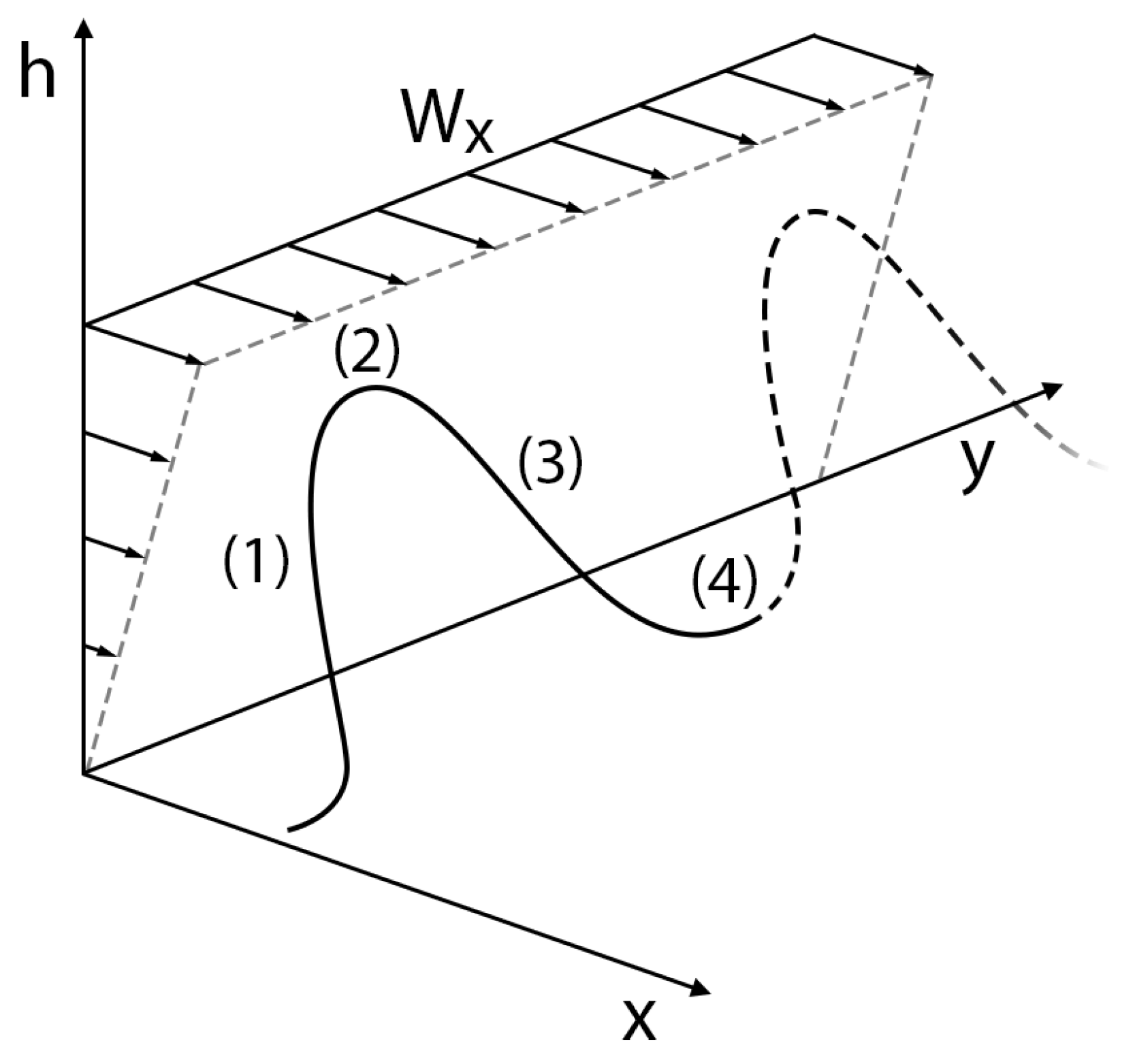

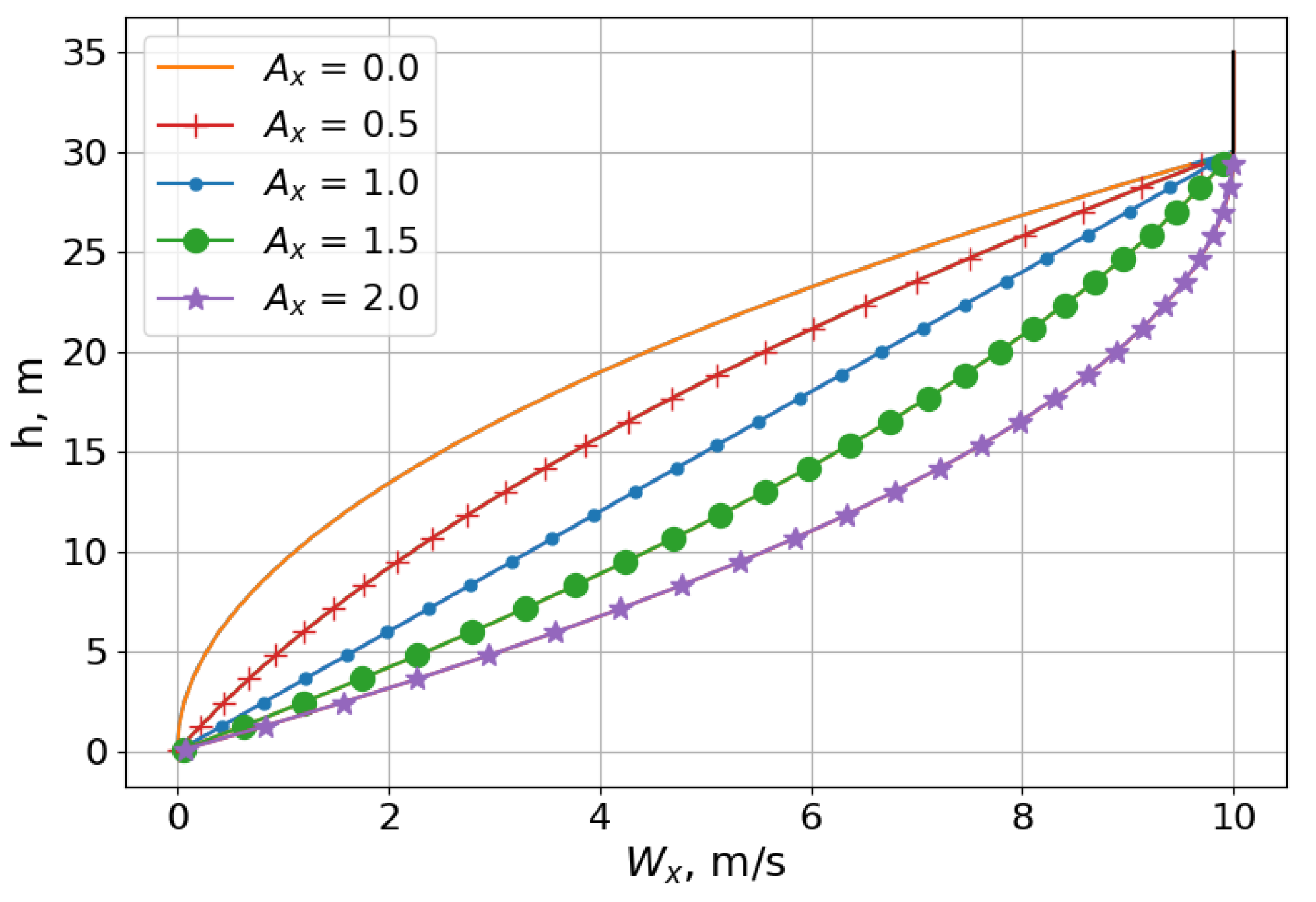

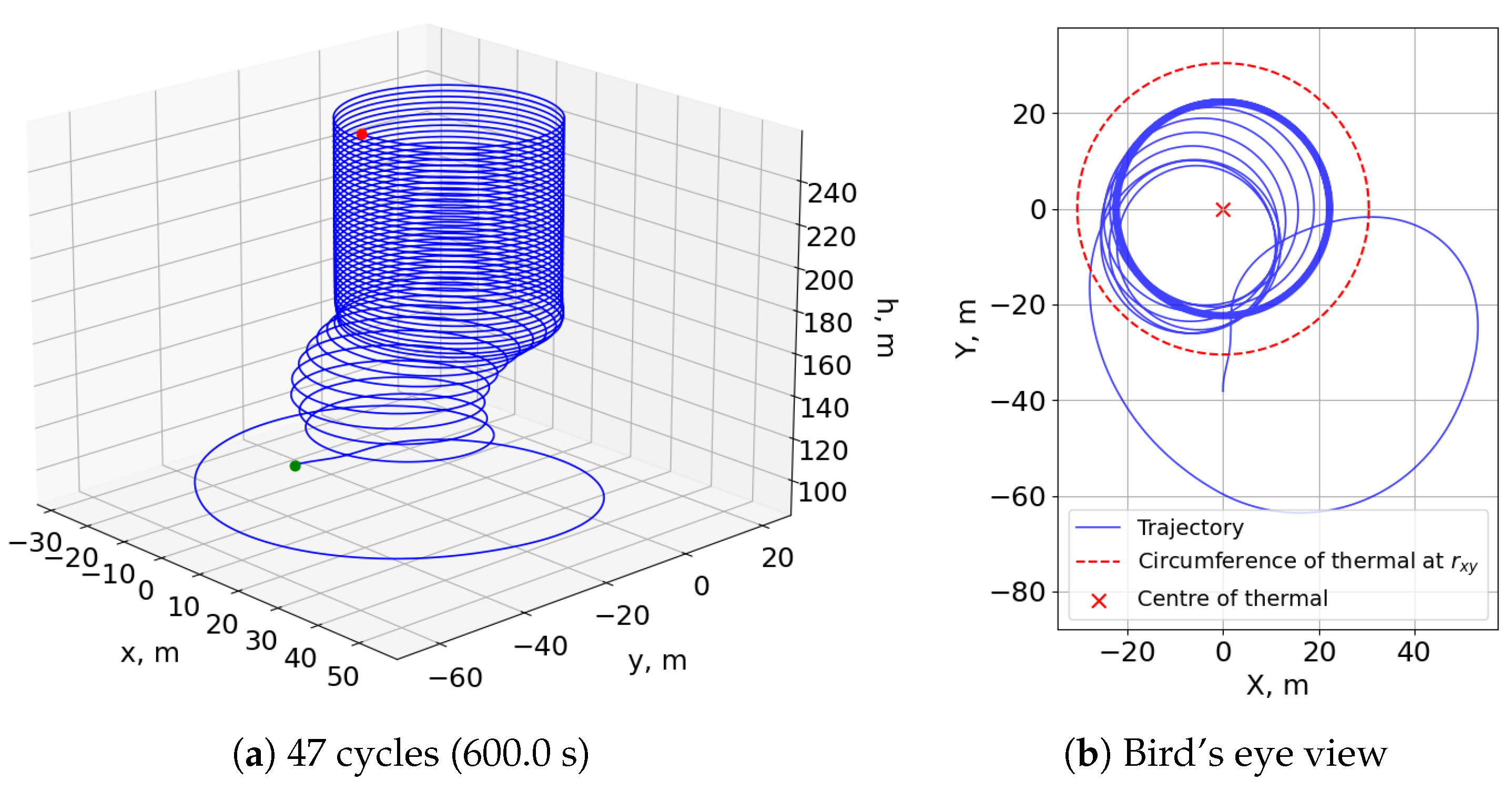

Figure 13 shows the trajectories at different scales that result from simulating this neurocontroller in a unidirectional wind environment. The plots show that the SUAV model achieved a stable pattern near the transition height of the wind profile, which was uniquely shaped in this test case. The altitudes at which the aircraft would soar were too high to use the wind profile detailed in Section 2.3, since the vertical gradient would be too weak when stretched over hundreds of meters. Therefore, the model used for this test case compressed the profile into a much smaller altitude range, as shown in Figure 14, allowing the lightweight SUAV to extract enough energy from the environment. To perform dynamic soaring with greater altitude fluctuations and longer soaring cycles, a stronger wind profile would be required, with a proportionately sufficient vertical wind gradient, as well as a more robust aircraft that can withstand the load factors associated with more strenuous flight maneuvers. Regardless, the trajectories exemplify the ability to evolve neurocontrollers for dynamic soaring trajectories at altitudes much higher than those experienced by soaring seabirds, with narrower wind gradients.

The smooth states of the multiperiod trajectory, shown in Figure 15, are desired when considering the response rates and limitations of real control systems and physical vehicles. The relatively abrupt changes in the lift coefficient and roll angle controls, however, are required for the rapid maneuvers of dynamic soaring, a characteristic that is also reflected by the cyclic history of the load factor. Additionally, the energy histories do not contain any significant reduction in the total energy between cycles, demonstrating that the neurocontroller can continuously soar given a constant wind profile. In reality, the finite nature of environmental winds would pose a greater obstacle to indefinite soaring than any periodic reduction in energy.

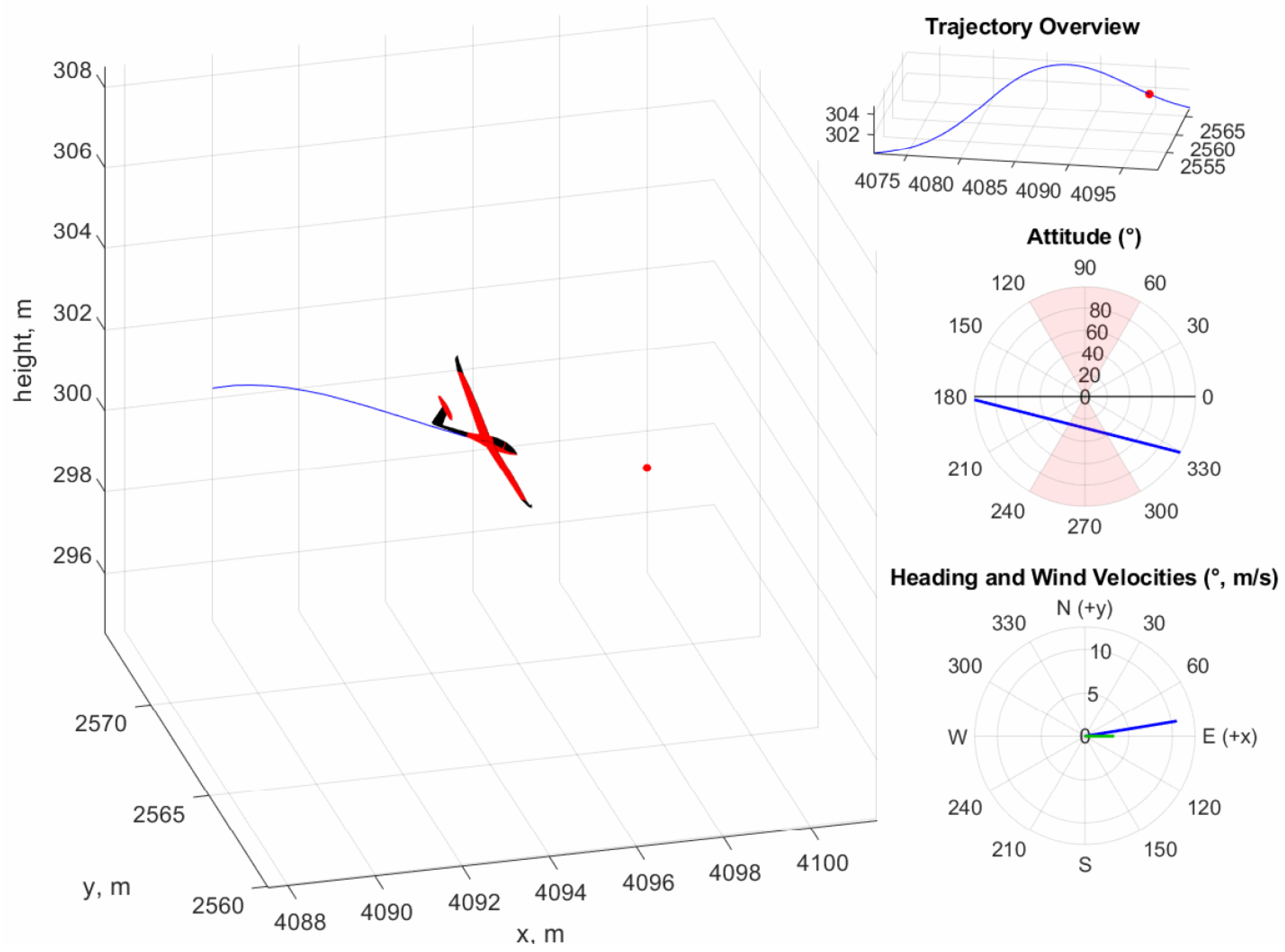

Figure 16 provides a dynamic visualization of the aircraft as it soars through a cycle of the trajectory. The observation of dynamic soaring behavior from such a simple neural network highlights the advantages of the neuroevolutionary method: the resulting controllers remain interpretable and are easier to implement on hardware-limited SUAV platforms than more complex neural networks.

To compare different trajectory planning and control approaches, a numerical trajectory optimization was performed for a single soaring cycle through a direct trapezoidal collocation nonlinear programming approach as described in Section 2.1, where the optimization was conducted with pyOPT using the SNOPT optimizer [25]. The SUAV and wind models were identical to those described in Table 2, and the boundary and path constraints are detailed in Table 3, with the initial and final conditions based on the neurocontroller trajectory of Figure 13b. The trajectory optimization also used the cost function shown in Equation (41) to maximize the total distance traveled over the soaring cycle.
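For readers unfamiliar with direct trapezoidal collocation, the sketch below shows the defect constraints that such a formulation asks the nonlinear programming solver to drive to zero between neighboring collocation points. It is a generic, pure-Python illustration and not the pyOPT/SNOPT setup used for the comparison; the function names and the double-integrator dynamics are hypothetical stand-ins for the aircraft equations of motion.

```python
def trapezoidal_defects(states, controls, dynamics, dt):
    """Defects x[k+1] - x[k] - dt/2 * (f[k] + f[k+1]) for each interval.

    states:   list of state vectors, one per collocation point
    controls: list of controls, one per collocation point
    dynamics: function (state, control) -> state derivative vector
    dt:       uniform time step between collocation points
    """
    derivs = [dynamics(x, u) for x, u in zip(states, controls)]
    defects = []
    for k in range(len(states) - 1):
        defects.append([
            x1 - x0 - 0.5 * dt * (f0 + f1)
            for x0, x1, f0, f1 in zip(states[k], states[k + 1],
                                      derivs[k], derivs[k + 1])
        ])
    return defects

def double_integrator(state, control):
    """Hypothetical stand-in dynamics: position' = velocity, velocity' = u."""
    _, velocity = state
    return [velocity, control]

# 50 collocation points along an exact constant-acceleration trajectory.
n_points, dt, accel = 50, 0.1, 2.0
times = [k * dt for k in range(n_points)]
states = [[0.5 * accel * t * t, accel * t] for t in times]
controls = [accel] * n_points

defects = trapezoidal_defects(states, controls, double_integrator, dt)
max_defect = max(abs(d) for row in defects for d in row)
```

In an actual optimization, the states and controls at every collocation point are decision variables, so the problem size grows with both the number of points and the trajectory length.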

The optimization process, consisting of 50 collocation points, took a CPU time of 1.55 min, nearly seven times longer than the entire evolutionary process that produced the SUAV neurocontroller of Figure 11, which, once trained, procedurally generates trajectories in real time. Furthermore, although the flight paths and traces of the two methods superimposed for comparison in Figure 17 and Figure 18 show similar trajectories, the energy histories indicate that the optimized flight path ultimately cannot be used for dynamic soaring. While the boundary conditions were satisfied, the optimization process does not take into account the practical requirement for repeatable trajectories when computing individual cycles one at a time. The singular focus on the cost function precludes any consideration of sustainable soaring, resulting in a decrease in the total energy after a single cycle. Attempting to calculate a longer trajectory composed of multiple soaring cycles is also significantly more costly in terms of computation time and resources, and optimizations performed with twice the number of collocation points yielded identical results.

Many nonlinear programming solvers require an initial guess for the trajectory solution. Consequently, trajectory optimization results are directly dependent on this initial solution provided to the optimizer, and therefore, the computed optimal trajectories are local solutions by nature. In contrast, NEAT allows for the emergence of more global results, since the random member initialization and speciation mechanisms enable the exploration of numerous different network structures and weights, expanding the search space of potential trajectory and control solutions.

Another consideration of using pre-optimized trajectories is that they are decoupled from the control scheme. Either a control framework must be designed around the limits of the trajectory optimization method, or the optimization method must take into account the tracking control scheme prior to the lengthy computation. On the contrary, the presented neurocontroller scheme combines both trajectory planning and aircraft control by imposing and enforcing physical constraints during the evolutionary process. The control outputs and resulting flight path are direct reactions to the state of the aircraft and its environment, reducing the complexity of an autonomous system from a multilayered planning and control approach to one that combines both aspects of soaring. These considerations make it difficult to directly apply numerical trajectory optimization to soaring problems.

The relative simplicity of the NEAT-based neurocontrollers presented in this section becomes further apparent when comparing their topologies to those of related neural networks. For instance, a recent work involving deep neural networks for dynamic soaring control trained three separate neurocontrollers for the angle of attack, bank angle, and wing morphing parameter, each of which consisted of 5 network layers of 16 nodes with 1201 network weight values [12]. Along with the extensive dataset of 1000 optimal trajectories that was required for training, such neurocontrollers make interpretation and implementation on physical systems a significant challenge.
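The scale of that difference can be checked with a short parameter count. The sketch below counts weights and biases in a fully connected network; the layer layout of five inputs, five hidden layers of 16 nodes, and one output is an assumption chosen because it reproduces the quoted figure of 1201, and it is not confirmed by the text here. The sparse count is likewise illustrative, taking the three connections and two output biases implied by the description of the SUAV network above.

```python
def dense_parameter_count(layer_sizes):
    """Weights plus biases of a fully connected feedforward network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Assumed layout: 5 inputs, five hidden layers of 16 nodes, 1 output.
deep_layout = [5, 16, 16, 16, 16, 16, 1]
deep_count = dense_parameter_count(deep_layout)

# Sparse SUAV-style network as described in the text (illustrative):
# three direct connections plus one bias per output.
sparse_count = 3 + 2

ratio = deep_count / sparse_count
```

Under these assumptions, each deep controller carries a few hundred times as many tunable values as the sparse network, which is the gap that makes manual inspection impractical.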

Another work by Li and Langelaan introduced a parameterized trajectory planning method that aimed to solve the lengthy computation times of numerical trajectory optimization [26]. The deep neural network resulting from the actor–critic reinforcement learning method used to generalize the parameterization approach consisted of an actor and a critic network, each composed of two fully interconnected layers of sixteen neurons. Considering that the decision-making actor network was solving only for parameters that represent a dynamic soaring trajectory and not the control commands themselves, the relative complexity of the neural network contrasts with the simple yet effective NEAT-based neurocontrollers. These related works in the field of dynamic soaring showcase the typical complexities of deep neural networks and highlight the training and implementation advantages of the evolutionary neurocontrol approach.

4.3. SUAV Thermal Soaring

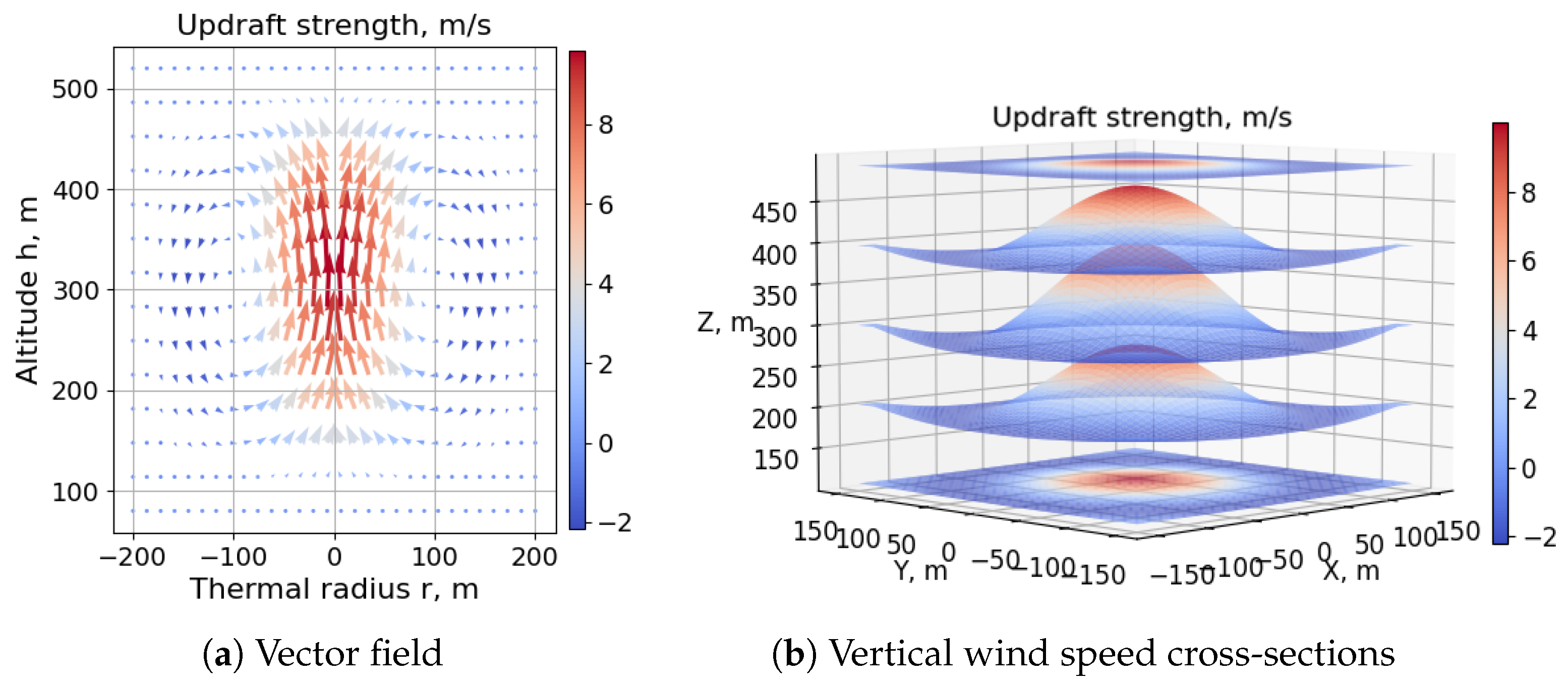

Table 4 details the parameters used to evolve and test the thermal soaring neurocontroller of Figure 19. The horizontal wind model used in the dynamic soaring test cases was disabled and replaced by the toroidal thermal bubble model described in Section 2.4.

The evolved neurocontroller has a single hidden neuron between the airspeed network input and the roll angle control output. The lift coefficient is a function of the height rate of change and the pitch angle, similar to the albatross network of Section 4.1, suggesting that despite the stochastic nature of the evolutionary process, there exists a set of typical connections that are more closely correlated with aerodynamics and control than with any specific model of the wind or flight agent.

In addition, the fitness function used to generate this neurocontroller consisted only of penalties, unlike the fitness functions of the dynamic soaring test cases, which also contained a reward mechanism. The trajectories of Figure 20 demonstrate that an aversion to the extreme penalty of crashing the model was a sufficient motivator for the evolutionary process to develop soaring behavior. The controller initially finds one edge of the thermal bubble before circling around and centering the rising toroid at a radius from the thermal center where the updraft is sufficiently strong for a sustained climb.

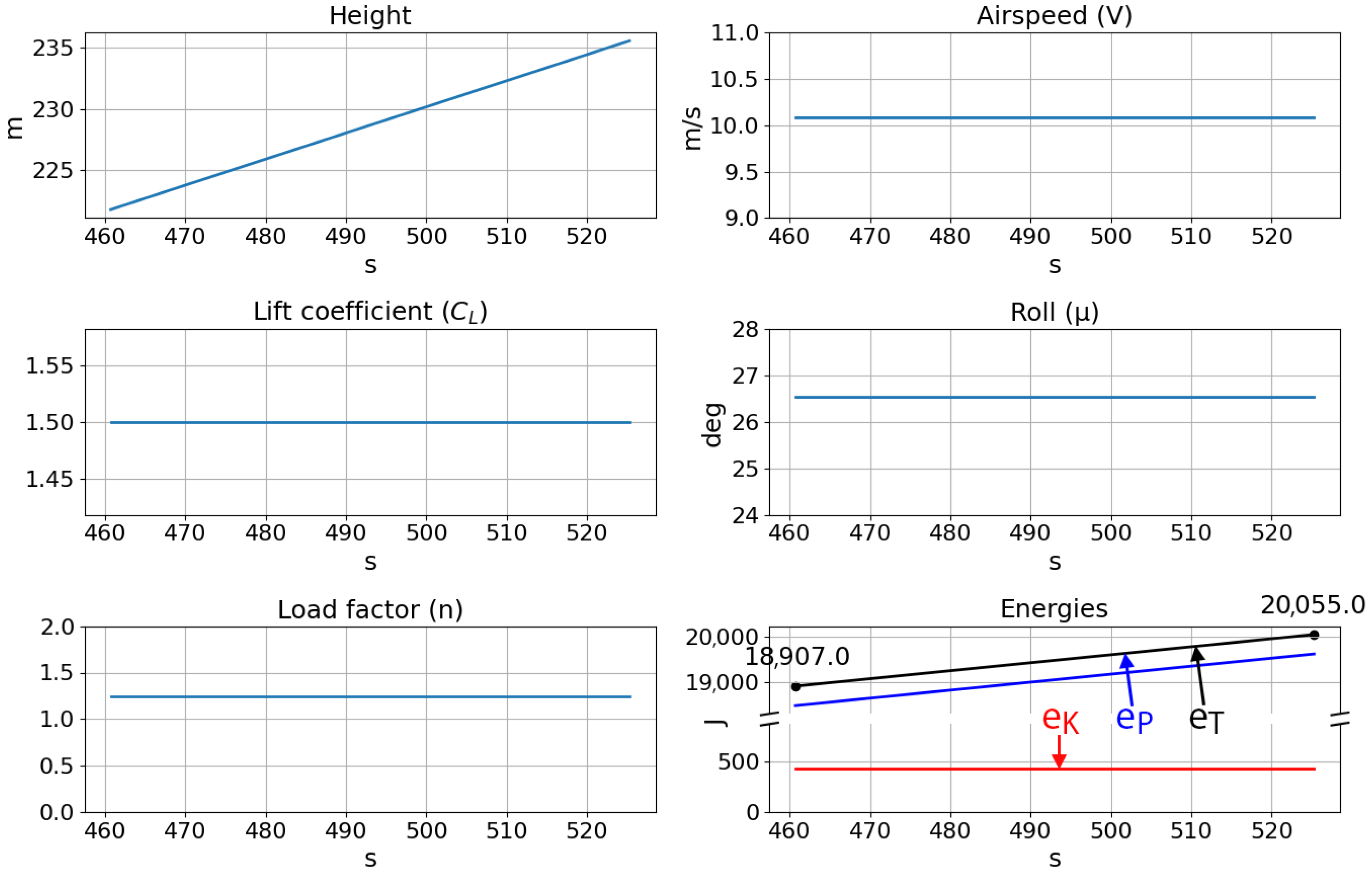

Lastly, the trajectory histories of five soaring loops presented in Figure 21 show the smooth and simple states and controls of the neurocontroller. The roll is maintained at a constant 26.5 degrees, and the lift coefficient also remains at the maximum value so that the SUAV can remain in an optimal soaring region. For instance, at too great a radius from the thermal center, the updraft strength will be insufficient for continued soaring. Conversely, at too small a radius, the roll angle required to circle the thermal will be greater, resulting in less vertical lift acting on the aircraft and compromising future soaring cycles. Due to these simple controls and the consequent behavior of the flight agent, the SUAV continually gains potential energy while its kinetic energy remains constant. In all, this test case demonstrated the developed neuroevolutionary method’s applicability to other flight maneuvers.
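The trade-off between circling radius and roll angle follows from the standard coordinated-turn relation, which the Python sketch below works through. It assumes a steady, coordinated, constant-speed turn and ignores the flight path angle; the 10 m/s airspeed is a hypothetical value, while the 26.5 degree roll angle is the one reported above.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def turn_radius(airspeed_ms, bank_rad):
    """Radius of a steady coordinated turn: R = V^2 / (g * tan(bank))."""
    return airspeed_ms ** 2 / (G * math.tan(bank_rad))

def bank_for_radius(airspeed_ms, radius_m):
    """Bank angle needed to hold a given turn radius at a given airspeed."""
    return math.atan(airspeed_ms ** 2 / (G * radius_m))

def vertical_lift_fraction(bank_rad):
    """Fraction of the lift force that remains vertical in a banked turn."""
    return math.cos(bank_rad)

bank = math.radians(26.5)   # roll angle reported for the thermal case
airspeed = 10.0             # hypothetical airspeed, m/s

radius = turn_radius(airspeed, bank)
lift_fraction = vertical_lift_fraction(bank)

# Halving the radius at the same airspeed demands a steeper bank,
# which leaves a smaller share of the lift to oppose weight.
tighter_bank = bank_for_radius(airspeed, 0.5 * radius)
tighter_fraction = vertical_lift_fraction(tighter_bank)
```

Under these assumptions, tightening the circle always raises the required bank and lowers the vertical lift fraction, consistent with the reasoning that too small a radius compromises the climb.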