Temporal-Sequence Offline Reinforcement Learning for Transition Control of a Novel Tilt-Wing Unmanned Aerial Vehicle

Abstract

1. Introduction

- Hardware-in-the-loop (HIL) simulations, based on a flight control board with custom-modified control logic, are employed for offline data acquisition using the newly designed tilt-wing UAV model;

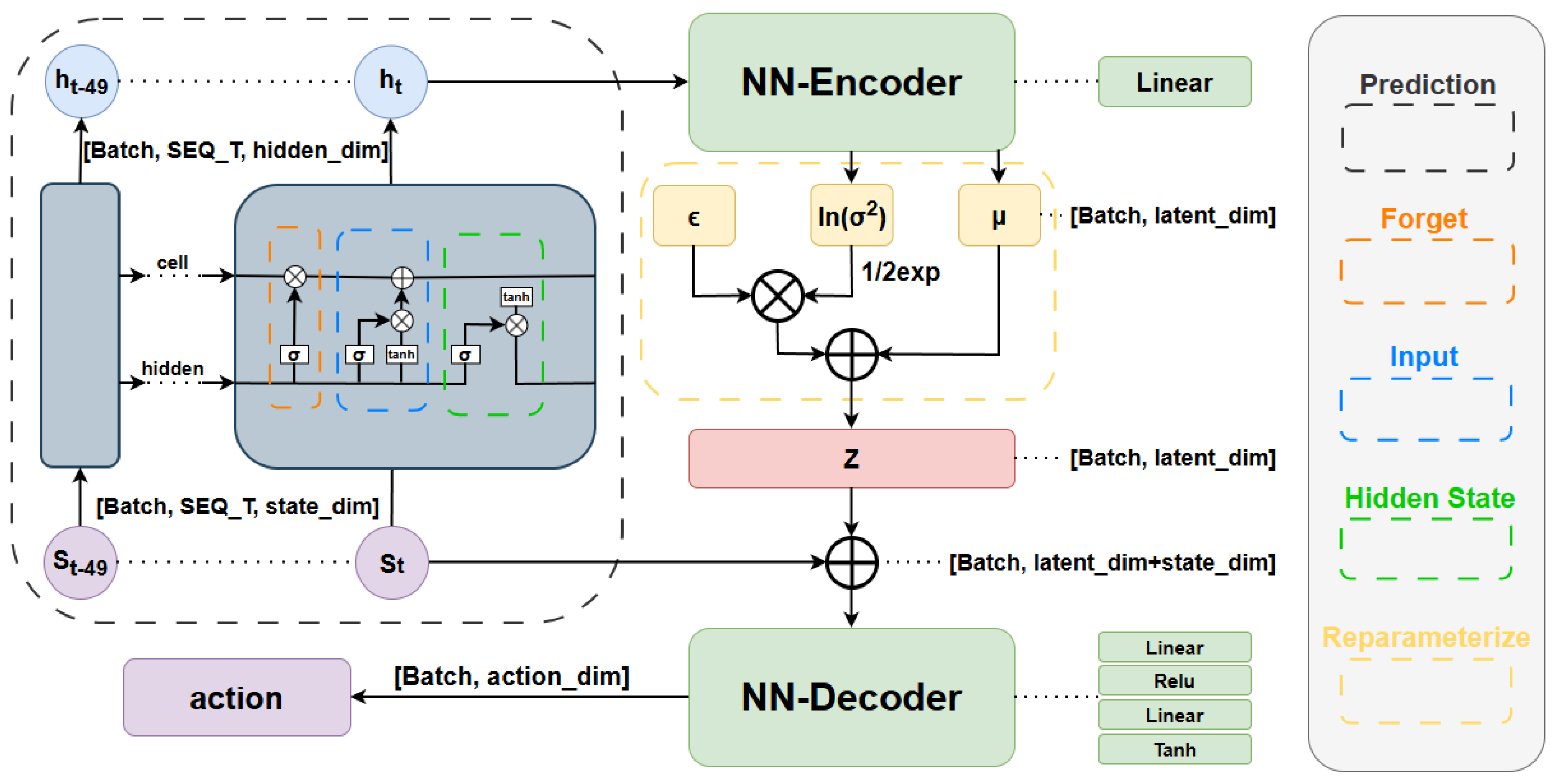

- We propose an offline reinforcement learning control algorithm named TSCQ, which leverages data-driven control for tilt-wing transitions. By incorporating additional predictive capability, the algorithm ensures the distributional consistency of entire action sequences;

- The superiority of the proposed method is validated through multiple comparative experiments and ablation studies conducted in simulation.
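To make the idea of working with entire action sequences more concrete, the following is a minimal sketch of how a logged trajectory could be cut into fixed-length windows before training. The function name `sliding_windows` and the window length used in the example are illustrative assumptions, not the authors' data pipeline.

```python
def sliding_windows(trajectory, seq_len):
    """Return every contiguous window of length seq_len from a trajectory.

    `trajectory` is any list of per-step records (for example, state-action
    pairs). Trajectories shorter than seq_len yield no windows.
    """
    last_start = len(trajectory) - seq_len
    return [trajectory[i:i + seq_len] for i in range(last_start + 1)]


# Hypothetical logged actions from one offline episode.
actions = [0.0, 0.1, 0.3, 0.2, 0.0]
windows = sliding_windows(actions, seq_len=3)
print(len(windows), windows[0])
```

Overlapping windows reuse each logged step several times, which is one common way to obtain more sequence samples from a limited offline dataset.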

2. Mathematical Model

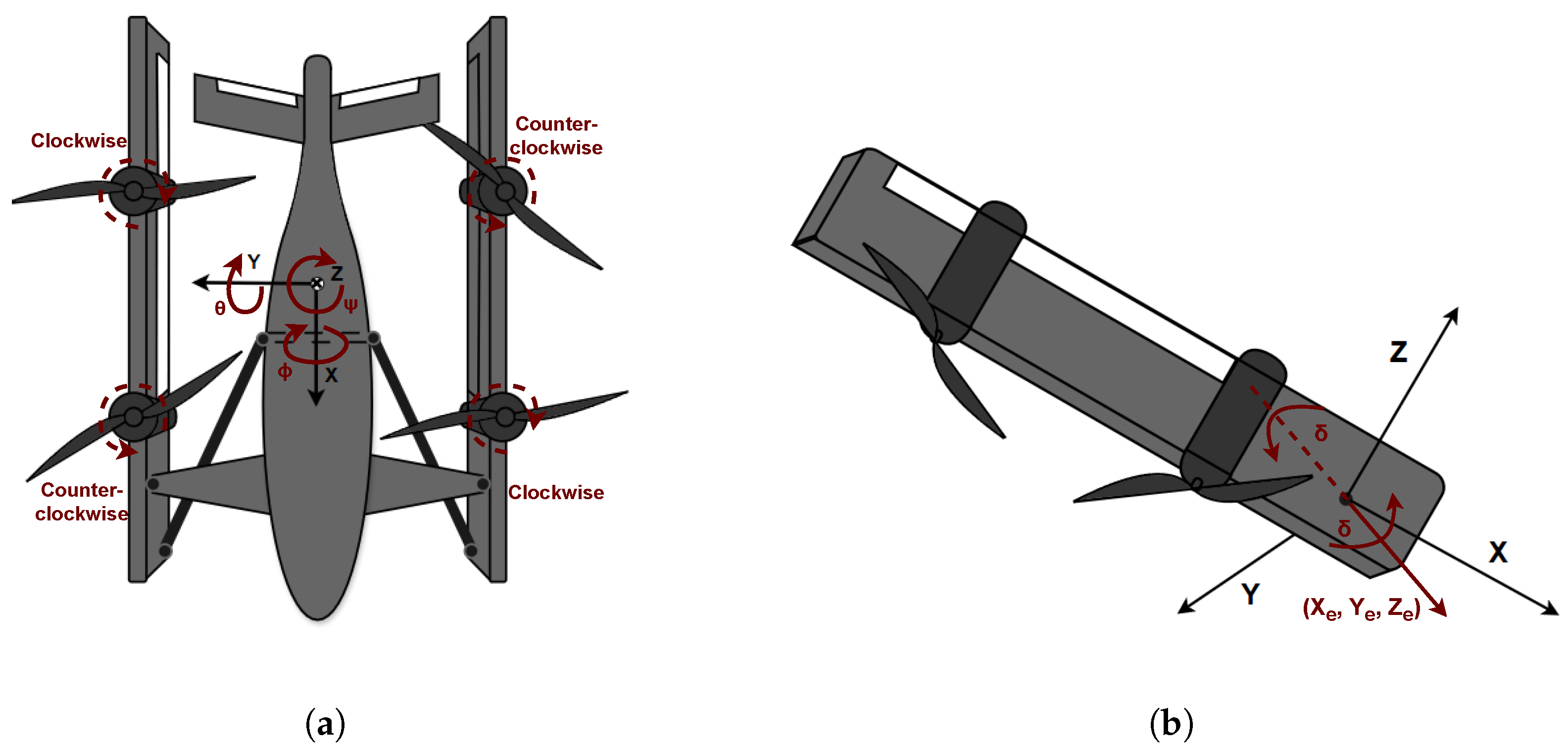

2.1. Coordinate System

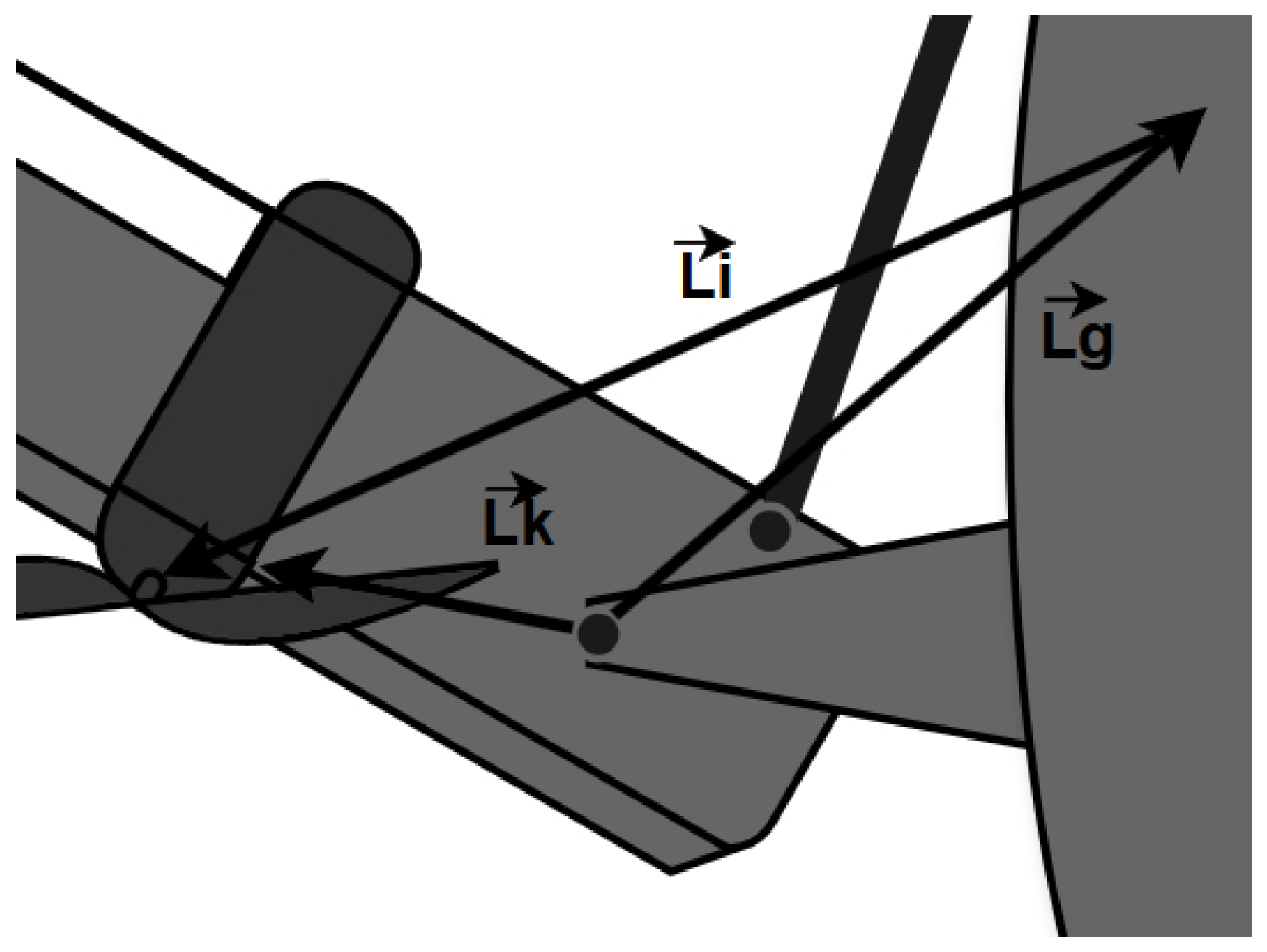

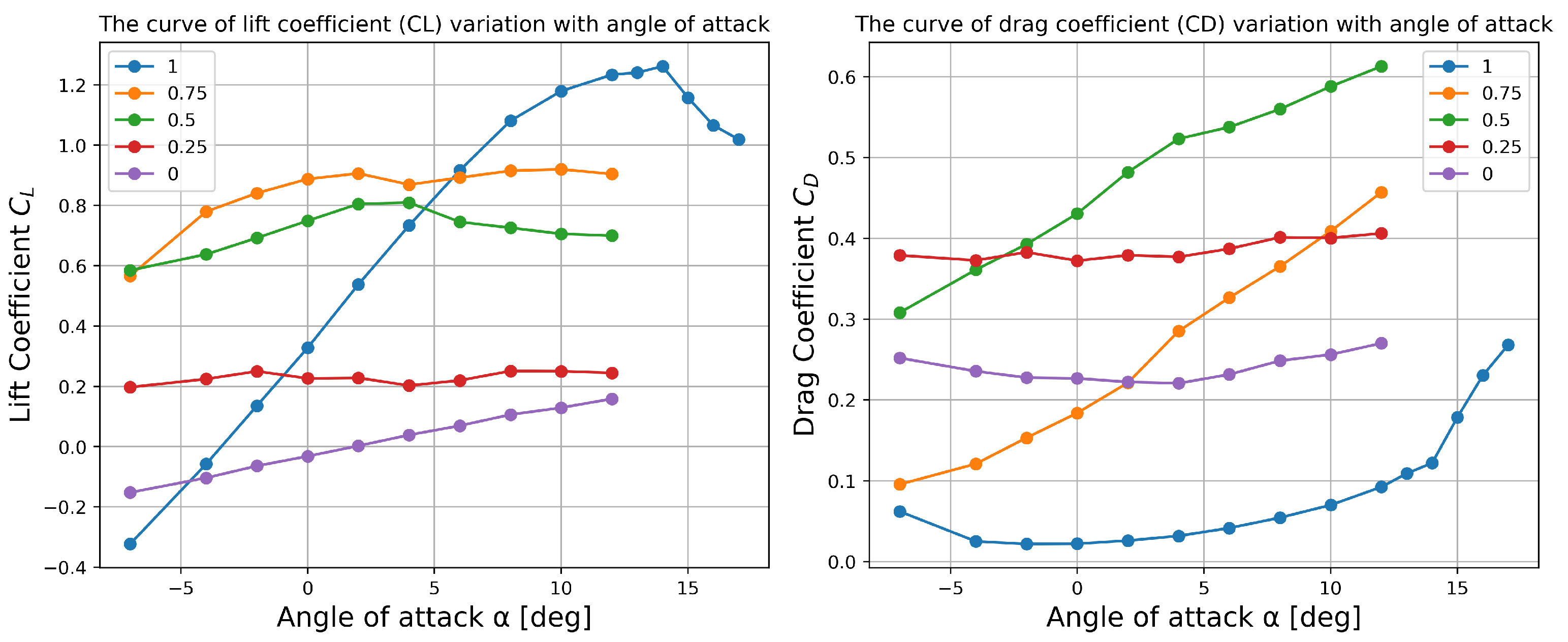

2.2. Model Description and Validation

3. Offline Dataset Generation

3.1. Hardware-in-the-Loop Simulation Methodologies

3.2. Data Augmentation and Refinement

4. Proposed Approach

4.1. Problem Formulation

- Minimizing pitch angle deviation:

- Maintaining the pitch rate q near zero:

- Avoiding altitude changes:
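The three objectives above can be combined into a single scalar reward. The sketch below is only an illustration under stated assumptions: the quadratic penalty form, the weight values, and the names `RewardWeights` and `transition_reward` are hypothetical and are not taken from the paper's reward definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    # Hypothetical weights chosen for illustration only.
    pitch: float = 1.0
    pitch_rate: float = 0.5
    altitude: float = 1.0


def transition_reward(theta, theta_ref, q, h, h_ref, w=RewardWeights()):
    """Negative weighted sum of squared deviations (assumed form).

    theta, theta_ref: pitch angle and its reference
    q:                pitch rate, ideally close to zero
    h, h_ref:         altitude and the altitude to be held
    """
    pitch_term = w.pitch * (theta - theta_ref) ** 2
    rate_term = w.pitch_rate * q ** 2
    altitude_term = w.altitude * (h - h_ref) ** 2
    return -(pitch_term + rate_term + altitude_term)


# A state on the reference scores zero; any deviation is penalized.
print(transition_reward(theta=0.0, theta_ref=0.0, q=0.0, h=50.0, h_ref=50.0))
print(transition_reward(theta=0.05, theta_ref=0.0, q=0.1, h=49.5, h_ref=50.0))
```

With this form the reward is largest (zero) when the pitch angle tracks its reference, the pitch rate is zero, and the altitude is unchanged.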

4.2. Algorithm Design and Implementation

5. Experimental Evaluation

- We conduct an ablation study to validate the effectiveness of the temporal sequence module by comparing the TSCQ approach with BCQ, which also uses the VAE module.

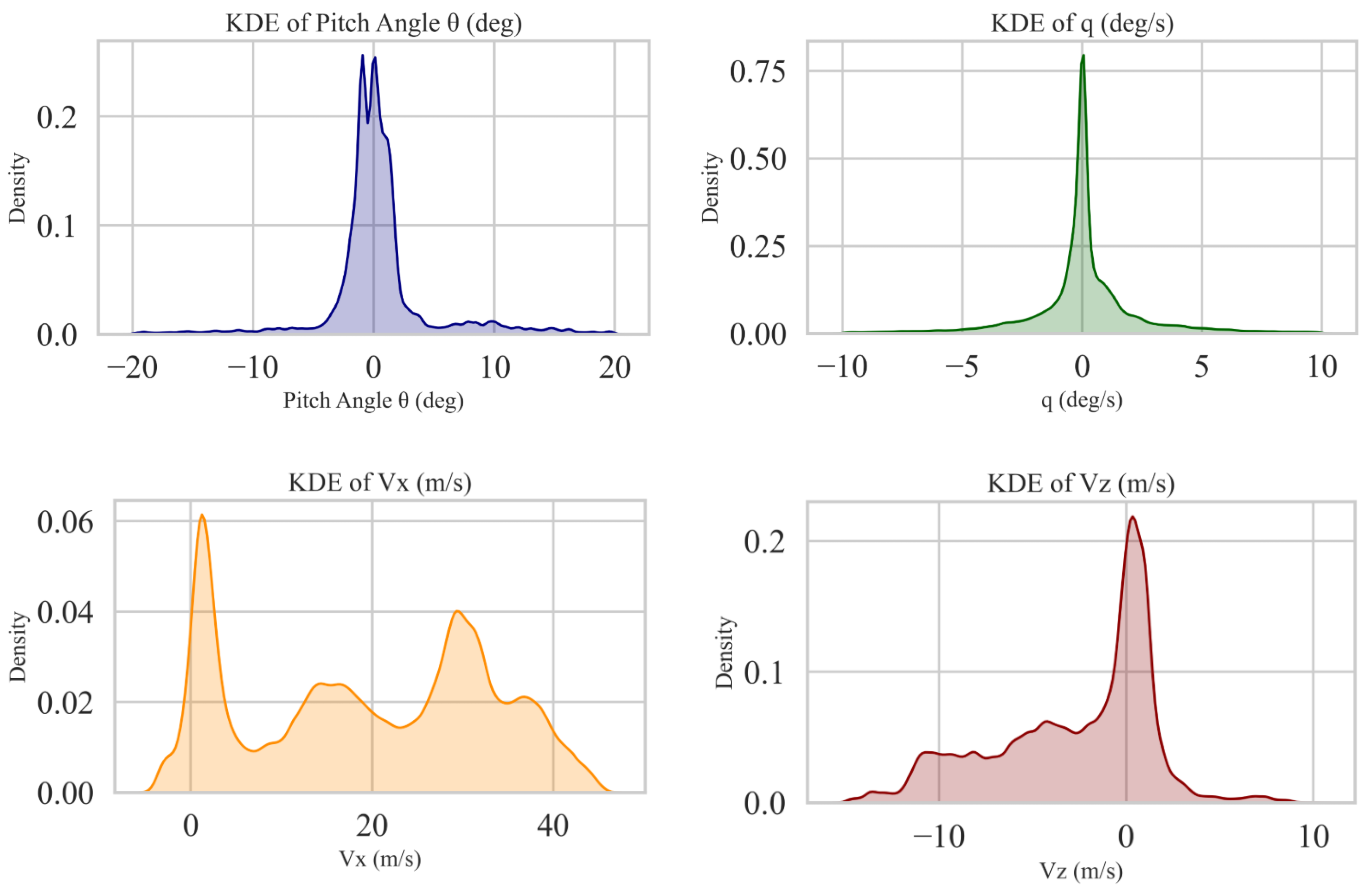

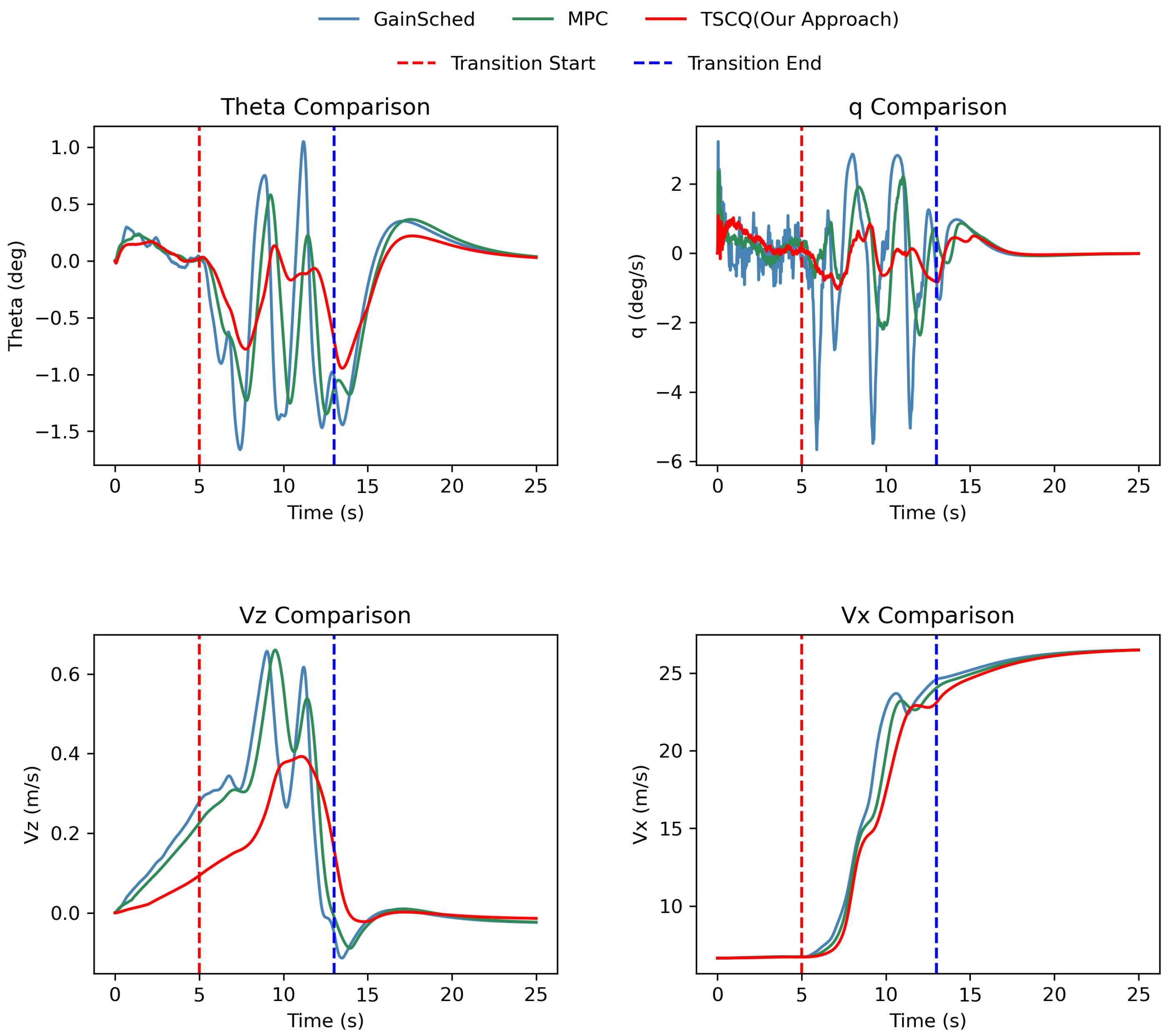

- Three controllers (gain scheduling, MPC, and TSCQ) are compared on the newly designed tilt-wing UAV over the entire transition process, from rotary-wing mode through the transition state to fixed-wing mode. The experimental results are presented as curves showing the variation over time of the pitch angle, the pitch rate q, the vertical speed, and the forward speed.

- We perform robustness experiments on the TSCQ approach. Following a Monte Carlo methodology, we run 300 trials for each experiment group, in which either the initial conditions are altered or random perturbations are introduced.

5.1. Ablation Study and Analysis

5.2. Comparison Experiment

5.3. Monte Carlo Experiments

- Transition time variation introduces the largest transient error, with the highest (1.236°) and (0.058°). This reflects the challenge of adapting to uncertain switching moments between flight modes. Despite this, the final vertical error remains minimal (0.012 m/s), showing strong recovery in altitude tracking.

- Sensor noise causes moderate errors across all metrics. The confidence intervals here are slightly wider, particularly for , suggesting higher sensitivity from trial to trial. This is likely due to measurement fluctuations that introduce irregular feedback delays.

- Motor degradation produces the highest vertical deviation during transition (0.422 m/s), likely caused by asymmetric thrust distribution. However, attitude errors remain tightly bounded, indicating that the controller compensates well through attitude correction.

- Lift/drag perturbation results in the lowest errors overall, with (1.007°) and (0.028°), along with narrow confidence intervals across all metrics. This suggests that the aerodynamic model effectively absorbs such variations, making them the least disruptive among the four.
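The mean errors and confidence intervals discussed above can be computed from per-trial metrics in a few lines. The sketch below uses a normal-approximation 95% interval on synthetic data; the sample values, the helper name `mean_with_ci`, and the choice of interval are assumptions for illustration and do not reproduce the paper's data or its exact statistical procedure.

```python
import math
import random
import statistics


def mean_with_ci(samples, z=1.96):
    """Return (mean, lower, upper) using a normal-approximation interval."""
    n = len(samples)
    mean = statistics.fmean(samples)
    sem = statistics.stdev(samples) / math.sqrt(n)  # standard error of the mean
    return mean, mean - z * sem, mean + z * sem


# Synthetic per-trial errors standing in for one group of 300 trials.
rng = random.Random(0)
errors = [abs(rng.gauss(1.0, 0.2)) for _ in range(300)]

mean, lower, upper = mean_with_ci(errors)
print(f"mean = {mean:.3f}, 95% CI = [{lower:.3f}, {upper:.3f}]")
```

A wider interval for one perturbation group than another, at the same number of trials, indicates larger trial-to-trial spread in that metric.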

6. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement


| Hyperparameter | Value | Hyperparameter | Value |
|---|---|---|---|
| GENERATOR_LR | 5 × 10⁻⁵ | BATCH_SIZE | 64 |
| CRITIC_LR | 5 × 10⁻⁴ | EPOCHS | 100 |
| MSE_WEIGHT | 1.0 | HIDDEN_DIM | 256 |
| KL_WEIGHT | 1.0 | LATENT_DIM | 128 |
| Q_VALUE_COEF ¹ | 0.1 | TAU | 0.005 |
| ACTION_LIMIT | 1.0 | GAMMA | 0.99 |
| POLICY_DELAY | 2.0 | 1.0 | |
| SEQ_T ² | 50.0 | 0.3 | |
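For readers who want to reuse these settings, the named hyperparameters can be collected in a single configuration object. The sketch below is an assumption-laden illustration: the class `TrainConfig` and the helpers `soft_update` and `clip_action` are hypothetical, the two unnamed values in the table (1.0 and 0.3) are omitted because their names are not recoverable, and TAU is interpreted in its conventional sense as a Polyak averaging coefficient for target networks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values copied from the hyperparameter table; field names are lowercase
    # versions of the table entries.
    generator_lr: float = 5e-5
    critic_lr: float = 5e-4
    mse_weight: float = 1.0
    kl_weight: float = 1.0
    q_value_coef: float = 0.1
    action_limit: float = 1.0
    policy_delay: int = 2
    seq_t: int = 50
    batch_size: int = 64
    epochs: int = 100
    hidden_dim: int = 256
    latent_dim: int = 128
    tau: float = 0.005
    gamma: float = 0.99


def soft_update(target, online, tau):
    """Polyak averaging: move target parameters a fraction tau toward online."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]


def clip_action(action, limit):
    """Clamp a scalar action to the range [-limit, limit]."""
    return max(-limit, min(limit, action))


cfg = TrainConfig()
print(soft_update([0.0, 1.0], [1.0, 1.0], cfg.tau))
print(clip_action(1.7, cfg.action_limit))
```

With TAU = 0.005 the target parameters move only 0.5% of the way toward the online parameters per update, which is the usual motivation for such a small value.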

| Perturbation Type | Parameter | Success Rate |
|---|---|---|
| Transition time variation | 8–11 s ¹ | 96.67% |
| Sensor noise | 5% perturbation ² | 94.67% |
| Motor degradation | 5% perturbation ² | 99.00% |
| Lift/drag perturbation | 10% perturbation ³ | 94.00% |
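As a rough check on how precisely these rates are known, each percentage can be converted back to a success count and given a binomial confidence interval. The sketch below assumes each rate is out of the 300 trials per group described in Section 5, and uses the Wilson score interval, which is a choice made here for illustration rather than a method stated in the paper.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a binomial proportion (95% for z = 1.96)."""
    p = successes / trials
    denom = 1.0 + z * z / trials
    center = (p + z * z / (2.0 * trials)) / denom
    half = z * math.sqrt(p * (1.0 - p) / trials + z * z / (4.0 * trials ** 2)) / denom
    return center - half, center + half


TRIALS = 300  # trials per experiment group, as stated in Section 5
rates = {
    "Transition time variation": 0.9667,
    "Sensor noise": 0.9467,
    "Motor degradation": 0.9900,
    "Lift/drag perturbation": 0.9400,
}

# Back-calculate the integer number of successful trials in each group.
counts = {name: round(rate * TRIALS) for name, rate in rates.items()}

for name, k in counts.items():
    low, high = wilson_interval(k, TRIALS)
    print(f"{name}: {k}/{TRIALS}, 95% CI = [{low:.3f}, {high:.3f}]")
```

Under this assumption the reported rates correspond to 290, 284, 297, and 282 successful trials, respectively.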

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jin, S.; Zhao, W. Temporal-Sequence Offline Reinforcement Learning for Transition Control of a Novel Tilt-Wing Unmanned Aerial Vehicle. Aerospace 2025, 12, 435. https://doi.org/10.3390/aerospace12050435
