Abstract

Directly recognizing the relative attitude of unmanned aerial vehicles (UAVs) is challenging because operating environments vary and edge devices are resource-constrained. Furthermore, publicly accessible datasets of UAV landing markers are scarce. To tackle these challenges, we first constructed a dataset of UAV landing markers called the UAV landing marker dataset (ULMD). Then, we enhanced the You Only Look Once (YOLO) model to devise a model specifically tailored for directly recognizing the relative attitude of UAVs, termed UAV relative attitude YOLO (URA-YOLO). Within URA-YOLO, we propose an enhanced multiscale feature fusion (EMF) module that increases the network’s perceptual range and extracts feature information corresponding to various image sizes. Additionally, we propose a lightweight and efficient feature extraction (LE) module to acquire high-dimensional semantic information. Finally, to mitigate background noise interference, we propose an efficient layer aggregation network with convolutional block attention (ELAN-CA) module. The experimental results demonstrate that our model outperforms the baseline by 10.8% in terms of accuracy while occupying only 5.8 M in size, a reduction of 6.5%, thereby achieving a satisfactory balance between performance and resource consumption.

1. Introduction

Unmanned aerial vehicle (UAV) technology finds widespread application across diverse domains including logistics and distribution, search-and-rescue operations, and military endeavors [1,2,3,4]. In these contexts, the precise and real-time acquisition of relative attitude information within a specific environment is essential for effective UAV control and navigation. However, directly recognizing the relative attitudes of UAVs using object detection algorithms remains challenging. The changes in the appearance of landing markers across attitudes are frequently minimal, while the image quality of these markers may fluctuate under different environmental conditions. Algorithms must effectively distinguish features across diverse scenes, particularly in fluctuating weather conditions, where changing lighting and background interference make detection more difficult. In addition, the conventional mode of downlinking data for ground processing struggles to meet the demands of highly time-sensitive applications like military surveillance and emergency rescue [5]. Taking the WorldView-4 satellite as an example, the images it collects every day cover an area of 680,000 km² [6], and the huge amount of data it generates takes up a large amount of bandwidth and storage resources during downlink transmission, resulting in high processing latency. In this case, the traditional ground processing mode cannot respond quickly to task demands, especially in highly time-sensitive tasks that require real-time processing and decision making. At the same time, edge devices are limited in compute capability and battery life, which raises the demands on algorithms in terms of robustness, speed, and scalability.

Currently, research on UAV attitude detection focuses on methods that utilize a combination of sensors [7] and traditional image algorithms. Sensors commonly employ Inertial Measurement Units (IMUs), Global Positioning Systems (GPSs), and gyroscopes to attain real-time UAV attitude information [8,9,10]. As shown in the literature [11], this method significantly improves the localization accuracy in indoor environments by fusing Ultra-Wideband (UWB) signals with IMU data. However, in outdoor dynamically changing environments, vision-based techniques show more potential. The literature [12] indicates that combining a monocular camera with an inertial navigation system (INS) can effectively combine image information and inertial data to provide higher accuracy and robustness in UAV attitude recognition.

However, the development of high-precision sensors is intricate and comes with substantial costs. In the realm of traditional image algorithms, relative attitude detection is frequently achieved through the transformation between the ground coordinate system and the camera coordinate system, coupled with feature point extraction algorithms. This process involves intricate calculations, which can potentially compromise the stability of the algorithm. Among deep learning detectors, region-based convolutional neural networks (R-CNNs) [13] and region-based fully convolutional networks (R-FCNs) [14] are characterized by prolonged training and inference times, rendering them inadequate for meeting real-time operational demands. Hence, this paper introduces a novel approach centered on UAV relative attitude detection, leveraging the You Only Look Once (YOLO) [15] object detection algorithm. This method encompasses image classification, position determination, and semantic understanding to directly discern the attitude of landing markers relative to the UAV.

In this research, we propose a high-accuracy detector intended for full integration into real-time processing in the future. For feature enhancement, we propose an enhanced multiscale feature fusion (EMF) module. A parameter-based approach is first utilized to generate new feature representations by extending the receptive field of the backbone network through multi-branch dilated convolution. In addition, we introduce a parameter-free hierarchy that generates multiple feature maps through maxpool operations of different sizes after the dilated convolution and then fuses the feature information of each level. To efficiently extract features for different poses and to obtain a smaller model, we carefully design a lightweight and efficient feature extraction (LE) module. This module replaces the convolutional block with 3 × 3 convolutional kernels in the original ELAN structure to build a better backbone. Finally, to facilitate accurate localization and detection of regions of interest while mitigating noise interference, we introduce ELAN-CA, an efficient layer aggregation network equipped with a convolutional block attention module.

The main contributions of this article are as follows:

- (1) We propose an enhanced multiscale feature fusion (EMF) module for acquiring multi-scale image features and fusing them. Each enhanced scale feature branch enlarges the perceptual range of the network using dilated convolution and extracts feature information for the corresponding image size.

- (2) We propose a lightweight and efficient feature extraction (LE) module to capture high-dimensional semantic information. This structure adopts a two-branch design: one branch preserves key features to capture essential semantic information, while the other branch utilizes Ghost Convolution to reduce module complexity and extract further feature information from the image.

- (3) We propose an efficient layer aggregation network with convolutional block attention (ELAN-CA) module aimed at capturing essential image features more effectively. The incorporation of the attention module into the layer aggregation network prioritizes crucial information during the feature aggregation process.

- (4) We propose URA-YOLO for detecting the relative attitude of UAVs. Our proposed approach demonstrates superior performance compared to several benchmark models.

- (5) A new dataset (ULMD) is constructed based on independently designed landing markers. The ULMD comprises images captured under various simulated degradation conditions, including Gaussian noise, image blurring, and fog. The objective of this dataset is to increase the model’s detection accuracy under various environmental circumstances while also strengthening its overall robustness.

2. Related Works

2.1. UAV Attitude Detection

With the rapid advancement of computer vision technology, algorithms for UAV attitude detection are continually emerging. To detect landing markers, traditional detection algorithms typically combine techniques like the Hough algorithm, the Sobel operator, and the adaptive thresholding approach with exterior contour, texture, or color information, or combinations of these features. Ref. [16] uses the Hough algorithm to detect circles and arcs and calculates the center coordinates. Ref. [17] uses adaptive thresholding to extract the outlines of the markers from the grayscale image and then extracts the marker bits of the image using the maximum inter-class variance (OTSU) algorithm for recognition. Recent advancements in deep learning have enabled object detectors to locate objects using an end-to-end learning framework, dynamically extracting image attributes. Ref. [18] develops a model based on an enhanced convolutional neural network, aiming to enhance the generalization performance of the classifier.

2.2. Lightweight Model Frameworks

In the field of image classification, early models ranged from AlexNet [19] to Visual Geometry Group Networks (VGG) [20] and then to GoogLeNet [21] and Residual Networks (ResNet) [22]. This evolution involved incrementally deepening the network architecture to extract more intricate and informative features. However, the quantity and complexity of model parameters also rise with the model’s depth. In recent years, the ongoing advancement of edge devices like drones has underscored the significance of lightweight design as a crucial performance metric for detectors [23]. There are primarily two options. One is the model compression technique based on pruning [24,25,26,27]. Its essence is to reduce the complexity and memory consumption of the model by removing some of the connections or parameters from the neural network. The other is to create more effective convolutional computation techniques that lower the model’s parameter count without compromising its functionality. Currently, the mainstream lightweight models include MobileNet [28], EfficientNet [29], and GhostNet [30]. In GhostNet, the parameter count is notably reduced by partitioning the input channels into a primary path and an auxiliary path. The primary path conducts convolutional operations, while the auxiliary path executes low-cost convolutional operations.

2.3. Multi-Scale Feature Extraction

To address the issue of scale sensitivity in convolutional features, numerous methods employ a multi-scale feature extraction strategy. This approach enables the network to adeptly adapt to features of varying scales. These methods can be classified into two groups: parameter-based and parameter-free methods.

The parameter-based approach is to design a network structure with learnable parameters by learning the input data and extracting features. Dilated convolution [31] enlarges the receptive field by adjusting the dilation rate of convolution, introducing gaps in the feature map to aggregate multi-scale contextual information. ASPP [32] utilizes multiple parallel layers of dilated convolution with distinct dilation rates across layers, followed by concatenation of the convolutional layers to produce multi-scale feature representations. RFBNet [33] introduces convolutions with varying kernel sizes to broaden the receptive field, thereby enhancing the feature’s scale variation.

Parameter-free approaches aim to fully utilize existing feature representations without introducing new learnable units. Multi-scale feature fusion can be accomplished simply by combining or merging feature maps from several layers. The spatial pyramid [15] uses multiple max-pooling layers of different sizes to capture information at different scales and finally concatenates their results. FPN [34] is also a parameter-free operation that combines high-resolution features at the bottom level with semantic information at the top level using top-down paths and lateral connections. PANet [35] further introduces bottom-up paths for more efficient feature aggregation.

2.4. Attention Mechanism

Attention mechanisms have found extensive application in deep learning models. The SE attention mechanism [36] amplifies the response to significant channels while dampening the response to feature channels deemed irrelevant to the current task. The CBAM [37] introduces a sequential attention structure from channel to space, inspired by the SE attention mechanism. This structure synthesizes the correlation between channel and spatial dimensions, selectively focusing on relevant regions while disregarding irrelevant areas. This enhancement aims to improve the performance of the attention mechanism in image processing tasks. In addition, the CA attention mechanism [38] further considers the interactions between channels and embeds the horizontal and vertical position information into the channel attention to improve the long-distance relationship extraction capability.
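To make the difference between global channel pooling and direction-aware pooling concrete, the following minimal sketch contrasts the two on a single toy channel. It only illustrates the pooling step; the subsequent convolution, nonlinearity, and gating of the actual SE and CA modules are omitted, and the function names are hypothetical.

```python
def global_average(channel):
    """SE-style squeeze: one scalar per channel, so spatial position is lost."""
    values = [v for row in channel for v in row]
    return sum(values) / len(values)


def directional_averages(channel):
    """CA-style pooling: average along the width and along the height separately.

    Returns (per_row, per_col): per_row[i] is the mean of row i (keeps the
    vertical position), per_col[j] is the mean of column j (keeps the
    horizontal position).
    """
    height, width = len(channel), len(channel[0])
    per_row = [sum(row) / width for row in channel]
    per_col = [sum(channel[i][j] for i in range(height)) / height
               for j in range(width)]
    return per_row, per_col


# Toy 3x4 channel with a response in the middle row, columns 1 and 2.
channel = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 4.0, 4.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
per_row, per_col = directional_averages(channel)
print(global_average(channel))  # a single number, no location information
print(per_row)                  # peaks at row 1
print(per_col)                  # peaks at columns 1 and 2
```

The two directional vectors still indicate where the response lies, which is the positional information that a single global average discards.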

3. Proposed Method

In this section, we first present the overall structure of our model. In this study, YOLOv7-tiny is chosen as the base model for UAV relative attitude detection, mainly because it is lightweight and well suited for real-time edge processing tasks. YOLOv7-tiny achieves a good balance between detection accuracy, computational efficiency, and model size. Considering the limited computational resources and battery life of UAV edge devices, YOLOv7-tiny is able to minimize the number of model parameters and FLOPs while maintaining a high detection capability. While new-generation models like YOLOv8 may offer improvements in detection accuracy, they typically increase the computational burden and model size, which is not ideal for the resource-constrained UAV environments we study. YOLOv7-tiny provides us with a solid foundation, allowing us to focus on improving its feature extraction and attention mechanisms without significantly increasing the computational overhead. Next, we will explain each of our suggestions for improvement.

3.1. Overview

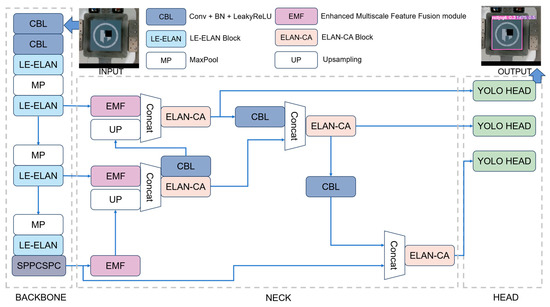

The overall architecture of URA-YOLO is shown in Figure 1. To ensure efficient feature extraction and stable performance, the backbone network of the URA-YOLO model integrates three key modules that play complementary, layered roles. Firstly, the LE-ELAN module enhances the feature extraction capability of the model while maintaining computational efficiency, thus extending the effective receptive field and preserving key information while reducing the number of parameters. Next, the EMF module is introduced to enhance the multi-scale feature fusion capability of the model. By passing features from the LE module to EMF, the module is able to combine features from different scales to capture fine-grained local details and wider contextual information, leading to the better detection of objects at different scales and angles. Finally, the ELAN-CA module is integrated into the feature fusion process, applying an attention mechanism to further optimize the features provided by the EMF module. By prioritizing the extraction of critical features and suppressing noise, ELAN-CA improves the quality of the fused features and the detection accuracy. Through the synergy of these three modules, an efficient and accurate URA-YOLO model is formed, which can effectively perform UAV relative attitude detection in real environments.

Figure 1.

The proposed framework of the URA-YOLO model. “LE-ELAN”, “ELAN-CA”, and “EMF” are our improved modules, details of which are explained in the following sections. “Concat” denotes the concatenation of two feature maps along the channel dimension. “SPPCSPC” is an enhanced version of spatial pyramid pooling.

3.2. Light and Efficient (LE) Module

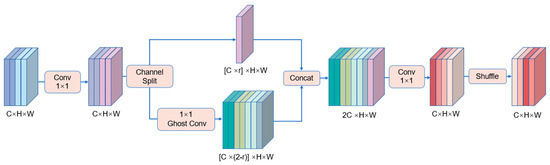

Since the attitude changes in landing markers are usually minimal, false alarms caused by similar features can occur in the detection task. However, the backbone of YOLOv7-tiny has limited feature extraction capability. Therefore, this paper designs an LE module, as shown in Figure 2.

Figure 2.

Schematic diagram of LE module. The feature maps of several channels are represented by distinct colors. Ghost Convolution is employed to streamline the module’s complexity, while the concept of “split–transform–merge” is introduced to optimize the effective utilization of feature information.

The LE module begins with a 1 × 1 convolution (Conv) operation, aimed at increasing the number of channels in the input feature map from c to 2c while keeping the spatial size of the feature map constant. The LE module employs this mapping to transform the input data into a higher-dimensional feature representation, drawing inspiration from the inverted residual block found in MobileNetV2 [39]. Following the ideas in [40], we devise an intermediate extension structure rooted in the concept of “split–transform–merge.” This structure facilitates efficient feature mapping and channel extension. The feature map is split into two branches following the first 1 × 1 convolution; in this paper, the split ratio is set to 0.25. The smaller ratio effectively reduces the computational burden, while retaining the original feature information and avoiding the loss of important details in the feature extraction process. In addition, Ghost Convolution is able to extract new effective features while reducing the computational burden, which further enhances the performance of the network. We also tried other split ratios (e.g., 0.5 and 0.75) and found that the ratio of 0.25 achieves the best balance between accuracy and computational efficiency, ensuring high detection accuracy without an excessive increase in computational overhead, as shown in Table 1.

Table 1.

Experiments with different split ratios.

The first branch is the identity branch, which is not subjected to any manipulation and forms an identity mapping to preserve the key features of the target. However, the mapping operation involves a large number of matrix operations and convolution operations, which usually incur a certain computational burden. MobileNetV2 chooses to use depthwise separable convolution to reduce the complexity. To optimize the complexity of the residual structure, the LE module introduces Ghost Conv [30]. The other branch is passed through a Ghost Conv with a 1 × 1 kernel for further feature extraction. Ghost Convolution mitigates redundant computations arising from similar intermediate feature maps while adeptly extracting and leveraging information from the input feature maps. Finally, to realize the flow of information between channels, we concatenate the two branches again and then shuffle the channel order. The mathematical expression for LE can be written as follows:

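$$
X' = F_{1\times 1}(X), \qquad [X_1, X_2] = \mathrm{Split}_{\alpha}(X'), \qquad Y = S\big(C\big(X_1,\, G_{1\times 1}(X_2)\big)\big)
$$

where $F_{1\times 1}$ and $G_{1\times 1}$ denote the standard convolution and Ghost Convolution operations with kernel size 1 × 1, respectively; $C$ is the feature map concatenation operation; $X$ is the input feature map; $X_1$ and $X_2$ denote the identity branch and the Ghost branch obtained by channel splitting at the split ratio $\alpha$; $S$ denotes the shuffle operation; and $Y$ denotes the output of the LE module.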
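The channel bookkeeping of the split, transform, merge, and shuffle steps described above can be traced on channel labels alone. The following is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the identity branch receives the `split_ratio` share of the expanded channels, that the Ghost branch preserves its channel count, and that the shuffle is a deterministic two-group interleave in the style of ShuffleNet. The function names are hypothetical.

```python
def channel_shuffle(channels, groups=2):
    """Interleave channels across groups (assumed ShuffleNet-style shuffle)."""
    n = len(channels)
    if n % groups:
        raise ValueError("channel count must be divisible by groups")
    per_group = n // groups
    # Equivalent to reshaping to (groups, per_group), transposing, flattening.
    return [channels[g * per_group + i]
            for i in range(per_group) for g in range(groups)]


def le_channel_flow(c, split_ratio=0.25):
    """Trace channel labels through an LE-like block with c input channels."""
    # 1x1 convolution: expand c -> 2c channels (labels only, no arithmetic).
    expanded = [f"x{i}" for i in range(2 * c)]
    # Assumption: the identity branch takes the `split_ratio` share.
    n_identity = int(len(expanded) * split_ratio)
    identity = expanded[:n_identity]
    # Stand-in for the 1x1 Ghost Convolution on the remaining channels.
    ghost = [f"g({name})" for name in expanded[n_identity:]]
    # Merge the two branches, then shuffle so information mixes across them.
    return channel_shuffle(identity + ghost, groups=2)


print(le_channel_flow(4))
# ['x0', 'g(x4)', 'x1', 'g(x5)', 'g(x2)', 'g(x6)', 'g(x3)', 'g(x7)']
```

After the shuffle, untouched and transformed channels sit next to each other, so a following convolution sees both kinds of features in every local channel neighborhood.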

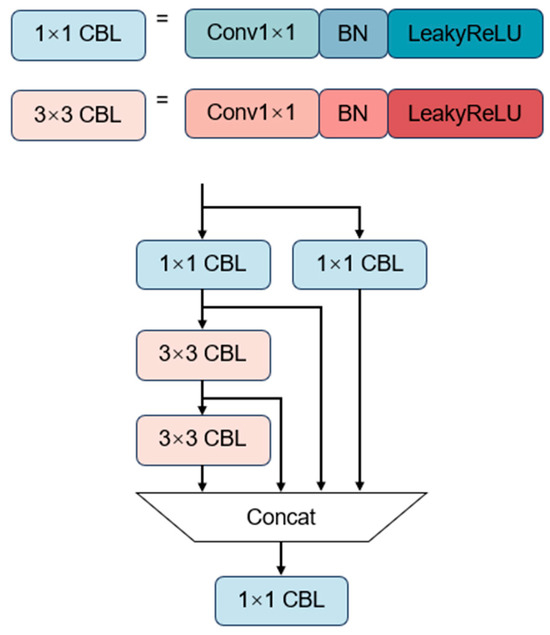

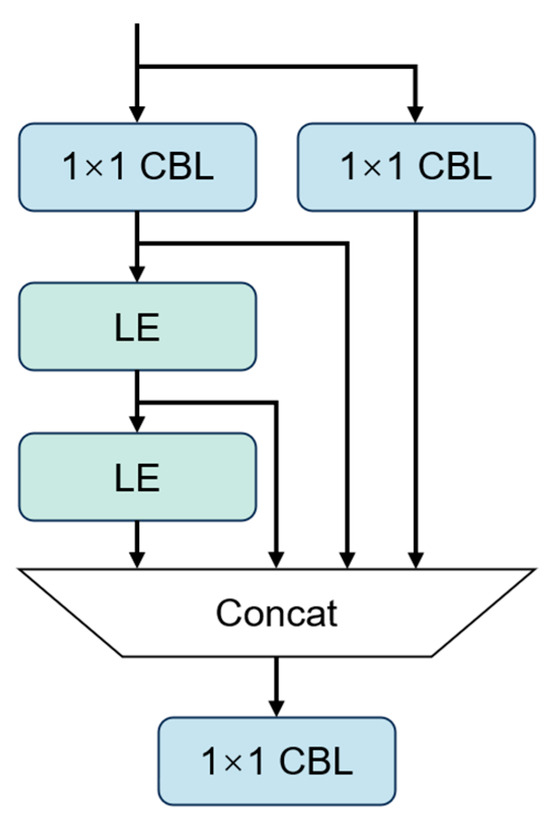

3.3. LE-ELAN and ELAN-CA

The ELAN structure in YOLOv7-tiny is used to regulate the longest and shortest gradient paths, thus facilitating the learning and convergence of deeper models. The structure is shown in Figure 3. In this paper, the LE module is used instead of the 3 × 3 convolution in the ELAN structure to enable the residual structure to extract richer features.

Figure 3.

Detailed diagram of the ELAN module. It consists of 1 × 1 and 3 × 3 convolutional modules for gradient path optimization and efficient aggregation of feature information, respectively.

The structure is shown in Figure 4. We compare the LE module with a 3 × 3 convolutional block, and Table 2 shows the parameters (Params) and floating-point operations (FLOPs) of the two modules. Compared to the 3 × 3 standard convolutional block, feature extraction consumption is greatly decreased by our LE module. The LE-ELAN module is represented as follows:

Figure 4.

Detailed diagram of the LE-ELAN module. The 3 × 3 convolution module is replaced with the LE module.

Table 2.

Difference between LE module and 3 × 3 convolution.
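As a rough illustration of why a Ghost Convolution is cheaper than a standard 3 × 3 convolution, the sketch below counts weights for a single layer. It is not a reproduction of Table 2: it ignores biases, normalization layers, and the 1 × 1 expansion inside the LE module, and it assumes the common Ghost configuration in which half of the output channels come from a primary convolution and the other half from a depthwise 3 × 3 "cheap" operation. The function names and the 64-channel example are hypothetical.

```python
def conv_params(c_in, c_out, kernel_size):
    """Weights of a standard convolution (bias and normalization ignored)."""
    return c_in * c_out * kernel_size * kernel_size


def ghost_conv_params(c_in, c_out, kernel_size=1, cheap_kernel=3, ratio=2):
    """Weights of a Ghost Convolution under the assumptions stated above.

    A primary convolution produces c_out // ratio intrinsic channels; the
    remaining channels are generated by a depthwise cheap_kernel x cheap_kernel
    operation, one filter per generated channel.
    """
    intrinsic = c_out // ratio
    generated = c_out - intrinsic
    primary = c_in * intrinsic * kernel_size * kernel_size
    cheap = generated * cheap_kernel * cheap_kernel
    return primary + cheap


standard = conv_params(64, 64, 3)   # 36864 weights
ghost = ghost_conv_params(64, 64)   # 2048 + 288 = 2336 weights
print(standard, ghost)
```

Because FLOPs scale with the weight count times the spatial size of the output, the same ordering carries over to the computational cost at a fixed resolution.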

In addition, as shown in Figure 5, we introduced a CA attention mechanism at the rear of the ELAN structures in the neck, with the aim of highlighting significant channel and positional features while suppressing insignificant ones. This approach effectively mitigates the loss of positional and local information, resolves ambiguity between objects and backgrounds, and consequently enhances the detection of subtle changes in the target. To further validate the effectiveness of the CA attention mechanism, we designed a series of comparative experiments in which several mainstream attention mechanisms were applied to the neck structure, as shown in Table 3.

Figure 5.

Simplified structure diagram of the model with CA added.

Table 3.

Comparative experiments of different attention mechanisms.

The experimental results show that the SE (Squeeze-and-Excitation) module makes poor use of spatial information in the multi-scale target detection task, and its ability to model the spatial relationship of the features is limited, which leads to poor target recognition in complex backgrounds. ECA (Efficient Channel Attention) is more computationally efficient than SE, but its spatial feature modeling ability is still weak, and its results fall short of expectations, especially when dealing with multi-scale targets in complex backgrounds. Although the CBAM (convolutional block attention module) considers both channel and spatial attention, it is less computationally efficient in fusing these two types of information, especially in the task of multi-scale feature fusion. By comparing the SE, ECA, CBAM, and CA attention mechanisms, we verified the superiority of the CA mechanism in spatial information modeling and target detection. Therefore, we finally decided to apply the CA attention mechanism to the rear part of the ELAN in the neck structure to enhance the detection of multi-scale targets and improve the overall detection performance of the model. The ELAN-CA module is represented as follows:

3.4. Enhanced Multiscale Feature Fusion (EMF) Module

Traditional convolutional neural networks have limitations in dealing with feature extraction at different scales. The extraction capability of the current backbone network is somewhat limited, especially in the neck stage where the extracted features may lack sufficient semantic information. To address this challenge, we propose an enhanced multiscale feature fusion (EMF) module, which is designed to obtain feature representations that are highly sensitive to small-scale variations.

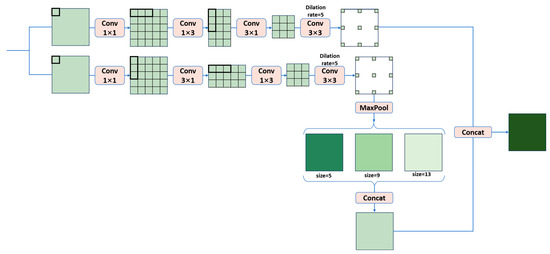

The EMF module comprises a hierarchical structure that amalgamates contextual information across multiple scales. The overall structure is shown in Figure 6. Inspired by RFB-s [33] and ACNet [41], the EMF module employs two branches with dilated convolution and performs cascaded standard convolution operations on these two branches with kernel sizes of 1 × 1, 3 × 1, 1 × 3, and 3 × 3, respectively. First, the input feature map undergoes a 1 × 1 convolution operation to adjust the number of channels. Following the ACNet design, two parallel 1 × 3 and 3 × 1 convolution operations are conducted to capture information along the horizontal and vertical directions. This step aims to deepen the network and enhance the abstraction of the input features. This combination has the property of parameter sharing, which can improve the computational efficiency. Dilated convolution further enhances the extraction of underlying information by enlarging the receptive field to capture more contextual information, and the 3 × 3 convolutional kernel can capture a wider range of spatial information. Parameter-based design strategies are thus integrated into the EMF module, providing richer information for feature extraction and contextual understanding of the model.

Figure 6.

Illustration of the EMF module. The module includes two parallel 1 × 3 and 3 × 1 convolution operations and dilated convolution to extract richer feature information, and it improves the utilization of multi-scale features through maximum pooling operations at different scales to further enhance the feature representation.

During the dilated convolution stage, expanding the receptive field enhances the network’s capacity to perceive information across various scales within the image. On this basis, performing spatial pyramid operations can further extend the receptive field while pooling the feature maps at different scales to capture multi-scale information. This operation helps the network grasp both the intricate details and the overarching structure of the image, and thus better recognize semantic information about objects at different scales in the image, such as subtle differences in landing markers at different angles.

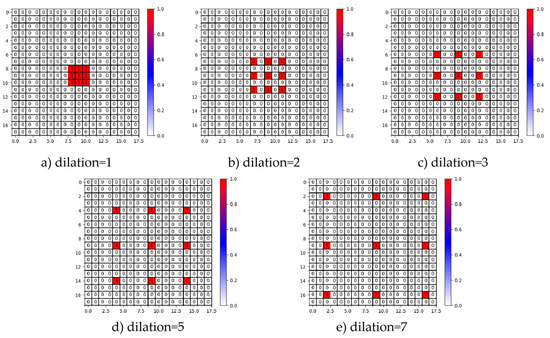

We chose a dilation rate of 5. Smaller dilation rates (e.g., 2 or 3), while providing a certain range of contextual information, are not effective in enhancing the network’s ability to perceive features over a large range. Conversely, a larger dilation rate (e.g., 7) can enlarge the receptive field, but it also increases the computational complexity and may lead to excessive smoothing, which may lose some detailed information. To verify the effect of different dilation rates, we conducted experiments comparing dilation rates of 1, 2, 3, 5, and 7, evaluated the mAP under each setting, and further expressed the change in the receptive field through the pixel position parameters, as shown in Figure 7. The experimental results show that when the dilation rate is 5, the model is able to effectively extract key features while maintaining high accuracy, especially in the fusion of multi-scale information, as shown in Table 4.

Figure 7.

(a–e) The pixel position parameters for dilation rates of 1, 2, 3, 5, and 7, respectively.

Table 4.

Comparison of different dilation rates for the dilated convolution in the EMF module.
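For reference, the spatial span covered by a single dilated kernel follows the standard relation k_eff = k + (k - 1)(d - 1), where k is the kernel size and d the dilation rate. The short sketch below evaluates it for a 3 × 3 kernel at the dilation rates compared above; it describes only the span of one layer, not the receptive field of the whole network, and the function name is hypothetical.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span (in positions) covered by one dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


for rate in (1, 2, 3, 5, 7):
    span = effective_kernel_size(3, rate)
    print(f"dilation {rate}: 3x3 kernel spans {span}x{span} positions")
# dilation 1 -> 3x3, 2 -> 5x5, 3 -> 7x7, 5 -> 11x11, 7 -> 15x15
```

The number of weights stays at nine in every case; only the spacing between the sampled positions grows, which is why very large rates sample the input sparsely.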

However, it is important to note that the spatial pyramid operation increases the computational burden as it requires down-sampling of the feature map at multiple scales, resulting in more computation. To reduce the computational overhead while maintaining effectiveness, we choose to perform the spatial pyramid operation on only one of the two dilated convolution branches. By concatenating the output of the other dilated convolution with the branch that performs the spatial pyramid operation as well as the branch that does not perform any operation, the following mathematical expression for the EMF module can be obtained.

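$$
F_1 = D_5\big(f_{1\times 3}(f_{1\times 1}(X))\big), \qquad F_2 = D_5\big(f_{3\times 1}(f_{1\times 1}(X))\big),
$$

$$
P_i = M_{k_i}(F_2), \quad i = 1, \dots, n, \qquad Y = C\big(F_1, F_2, P_1, \dots, P_n\big)
$$

where $D_5$ denotes a dilated convolution operation with a dilation rate of 5; $f_{1\times 3}$, $f_{3\times 1}$, and $f_{1\times 1}$ denote the standard convolution operations with kernel sizes of 1 × 3, 3 × 1, and 1 × 1, respectively; $M_{k_i}$ is the maxpool operation with kernel size $k_i$, and $P_i$ is the corresponding feature map; $n$ denotes the number of maxpool operations, which is set to 3; $F_1$ and $F_2$ denote the two branches’ output feature maps following standard and dilated convolution; $C$ is the feature map concatenation operation; and $X$ and $Y$ are the input and output feature maps of the EMF module.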

The core concept of the EMF module is to derive new multiscale data through convolution operations within a parameter-based approach, and then optimize the existing feature maps using a parameter-free approach to further enhance the representation of multiscale data. The hierarchical structure of the spatial pyramid allows feature information at different scales to be superimposed on each other, thus enhancing the comprehensive representation of the feature map and enabling the model to better adapt to changes in information at different scales.
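The parameter-free part of this design can be sketched without any learnable weights. The example below applies stride-1 max pooling with several kernel sizes to one feature map and returns the unpooled map together with the pooled ones, ready for channel-wise concatenation. It is a minimal sketch, not the authors' code: the kernel sizes (3, 5, 7) are illustrative placeholders, and border positions simply use the part of the window that lies inside the map.

```python
def max_pool_same(feature_map, kernel_size):
    """Stride-1 max pooling that keeps the spatial size of the input."""
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd to keep the window centered")
    height, width = len(feature_map), len(feature_map[0])
    radius = kernel_size // 2
    pooled = []
    for i in range(height):
        row = []
        for j in range(width):
            # Clip the window at the borders instead of padding explicitly.
            window = [feature_map[y][x]
                      for y in range(max(0, i - radius), min(height, i + radius + 1))
                      for x in range(max(0, j - radius), min(width, j + radius + 1))]
            row.append(max(window))
        pooled.append(row)
    return pooled


def pooling_pyramid(feature_map, kernel_sizes=(3, 5, 7)):
    """Return the unpooled map followed by one pooled map per kernel size."""
    return [feature_map] + [max_pool_same(feature_map, k) for k in kernel_sizes]


# A single activation in the middle of a 5x5 map spreads further per level.
impulse = [[0] * 5 for _ in range(5)]
impulse[2][2] = 1
levels = pooling_pyramid(impulse)
print([sum(map(sum, level)) for level in levels])  # [1, 9, 25, 25]
```

Each level responds to the same activation over a larger neighborhood, which is how stacking the levels exposes context at several scales at once.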

4. The Experiment

In this section, we first describe the experimental setup, including the dataset, implementation details, and evaluation metrics. Subsequently, we conduct an ablation study to assess the performance of URA-YOLO and juxtapose it with other prominent algorithms.

4.1. Experimental Setup

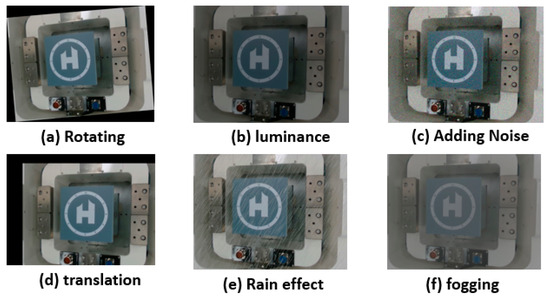

Datasets. We developed a dataset, named ULMD, in part to facilitate the training and validation of our proposed method, as shown in Figure 8. An indoor environment was chosen for the data collection process to ensure stable and consistent lighting conditions. The landing marker was placed on a high-precision inertial navigation test stand with a monocular camera directly above the platform, and 1029 images of the landing marker were collected at different horizontal and vertical axis positions. To simulate the subtle oscillations that may occur in actual flight, we set the rotation increment of the transverse swing angle to 0.5 degrees; this interval effectively covers small-amplitude attitude changes while ensuring a sufficient amount of data. A smaller sampling interval helps the model learn more detailed features: in practical applications the UAV’s flight attitude may change only slightly, and this interval captures these changes accurately, thus improving the detection ability of the model. The vertical rotation increment is 0.25 degrees. Since attitude changes in the vertical direction have a more significant impact on target recognition, a smaller vertical increment ensures that detailed features are accurately captured, which improves the stability and robustness of the model in complex environments. This allows for more diversity in the data collected and covers a wider range of situations. To enhance the accuracy of detecting transverse and longitudinal swing angles, we analyzed different weather variations and flight states. Based on this, a data augmentation method with combined variations is proposed. The method performs operations such as randomly adding noise, cropping, translating, rotating, mirroring, fogging, and raining on the captured images. The effect of each operation is shown in Figure 9. Finally, the ULMD contains a total of 5145 images and 1029 labels, including 70 pose angles.

Figure 8.

UAV landing marker dataset.

Figure 9.

(a–f) The results of data augmentation for the different operations, respectively.
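Two of the degradations mentioned above, additive noise and fog, can be sketched on a grayscale image stored as nested lists. This is a hedged illustration only: the text does not specify the noise level or the fog model, so the Gaussian standard deviation and the uniform blend toward a constant airlight value used here are assumptions, and the function names are hypothetical.

```python
import random


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise to each pixel and clip to [0, 255]."""
    return [[min(255.0, max(0.0, pixel + rng.gauss(0.0, sigma)))
             for pixel in row] for row in image]


def add_fog(image, transmission, airlight=255.0):
    """Blend every pixel toward a constant airlight (assumed fog model).

    Uses I' = I * t + A * (1 - t) with a uniform transmission t in [0, 1];
    smaller t means denser fog.
    """
    return [[pixel * transmission + airlight * (1.0 - transmission)
             for pixel in row] for row in image]


rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[0.0, 100.0], [200.0, 255.0]]
noisy = add_gaussian_noise(image, sigma=10.0, rng=rng)
foggy = add_fog(image, transmission=0.5)
print(foggy)  # [[127.5, 177.5], [227.5, 255.0]]
```

Fog compresses the contrast toward the airlight value, while noise perturbs pixels independently, so the two operations stress different aspects of the detector.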

Evaluation Metrics. To validate the effectiveness of the proposed method, we use mAP50, floating-point operations (FLOPs), Params, and frames per second (FPS) as evaluation metrics to comprehensively assess the proposed network. The average precision (AP) of a category is the area under its precision–recall (P-R) curve with the IoU threshold set to 0.5 [42]; mAP50 is the mean of the AP over all categories and therefore provides a comprehensive assessment of the model’s performance across categories. To evaluate and contrast the computational complexity of various networks, the space complexity (Params) and time complexity (FLOPs) are selected to illustrate the relative merits of the approaches. Furthermore, FPS indicates the number of images that can be processed per second.
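The two ingredients of mAP50, the IoU test and the area under the P-R curve, can be sketched as follows. This is a minimal sketch under stated assumptions: boxes are given as (x1, y1, x2, y2), detections are already sorted by descending confidence and flagged as true or false positives at the 0.5 IoU threshold, and the area is computed with the all-point interpolation convention, which may differ from the exact convention used in the experiments. The function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(is_true_positive, num_ground_truth):
    """Area under the P-R curve for one category (all-point interpolation)."""
    if num_ground_truth <= 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for flag in is_true_positive:
        tp += 1 if flag else 0
        fp += 0 if flag else 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    area, previous_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        area += (recall - previous_recall) * precision
        previous_recall = recall
    return area


print(iou((0, 0, 2, 2), (0, 0, 2, 1)))           # 0.5
print(average_precision([True, False, True], 2))  # 0.8333...
```

mAP50 is then the mean of `average_precision` over all categories, with a detection counted as a true positive when its IoU with an unmatched ground-truth box of the same category is at least 0.5.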

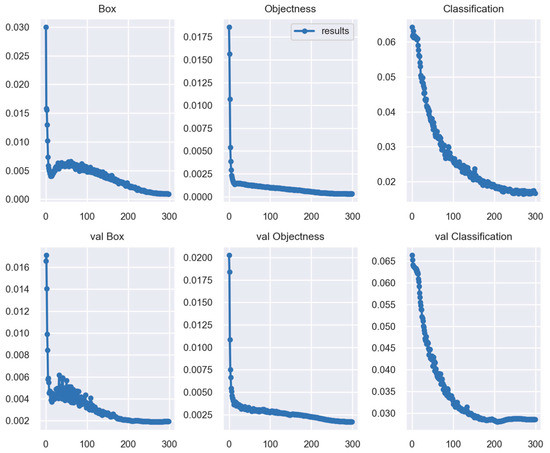

Implementation Details. In the training phase, we randomly divide the dataset into a training set (3601 images), a validation set (515 images), and a test set (1029 images) according to the ratio of 7:1:2, and all images are scaled to 640 × 640. The proposed model is implemented in PyTorch 2.1.1 and Python 3.8 and deployed on workstations equipped with NVIDIA A5500 GPUs. We choose a stochastic gradient descent optimizer with a momentum of 0.9 and a weight decay of 0.0005; the initial learning rate is set to 0.01, and warmup_epochs is set to 3.0. The total number of epochs is 300 and the batch size is 32. The loss curves during training are shown in Figure 10. From the trend of the curves, it can be observed that both the training loss and the validation loss approach a steady state in the later stages of training. The small gap between the two at that stage suggests that the training has converged.

Figure 10.

Training loss and validation loss curves. The horizontal axis represents the number of epochs and the vertical axis represents the loss value. The training loss curves are shown in the first row of graphs, and the validation loss curves in the second row.

4.2. Ablation Experiments

We utilized YOLOv7-tiny as a reference model for conducting ablation studies, evaluating the validity and effectiveness of LE-ELAN, ELAN-CA, and EMF in the model. The experimental results are listed in Table 5, where a tick indicates that the module was used in the model.

Table 5.

Results of the ablation experiment.

First, we replace the ELAN structure in the backbone with LE-ELAN. The experimental results show that LE-ELAN significantly reduces the parameter count and computational cost of the entire model while improving its feature extraction capability. Compared with the baseline model, the mAP50 improves by 3.3%, while the parameters and FLOPs are reduced by 14.9% and 15.2%, respectively. LE-ELAN therefore increases the model’s accuracy and is particularly effective at reducing model parameters.

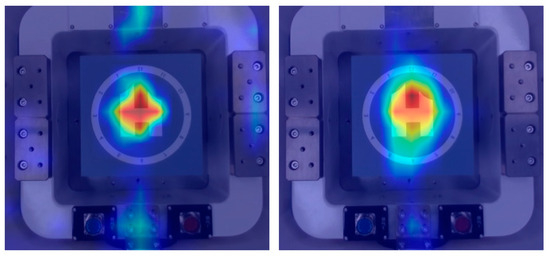

Next, we incorporate ELAN-CA into the model and compare two ways of combining ELAN and CA. Table 6 shows the experimental results.

Table 6.

Results of different CA module addition methods.

MIX CA refers to directly replacing the last convolutional layer of the ELAN module with a CA layer, whereas ADD CA appends a separate CA layer after the output layer of the ELAN module. Although the two differ little in parameters and computational overhead, ADD CA is more effective, so we adopt the ADD CA method. The output heatmaps before and after the addition of ELAN-CA are shown in Figure 11. In the figure, red indicates the area the model focuses on, while green indicates the scope of the model. With ELAN-CA, the model focuses more on valuable features and provides more accurate localization information with less interference from background noise.

Figure 11.

Comparison of heatmaps before and after the addition of ELAN-CA.

Finally, we use the EMF module in the neck. According to the experimental results, when the EMF module is used alone, the model’s accuracy improves by 6.5% compared to the baseline model; however, the number of parameters and FLOPs inevitably increase by 7.4% and 5.1%, respectively. This indicates that the EMF module is highly effective in enhancing model accuracy without substantially increasing the parameter count and computational cost. The dilated convolution and spatial pyramid techniques enlarge the receptive field efficiently, enabling the model to gather more global information.

We evaluated LE-ELAN, ELAN-CA, and EMF individually, and as can be seen from Table 5, each improves the model to a varying degree when used alone. When they are applied jointly, their combined effect is better, and the model performs best when all three are applied simultaneously. With all three modules, the mAP50 increases by 10.8% compared to the original model, while, with the assistance of LE-ELAN, the number of parameters and computational cost are reduced by 6.5% and 8.7%, respectively. This outcome underscores the superior target detection and localization capabilities of our approach, while keeping the number of parameters and computational cost at the level of a lightweight model.
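The receptive-field effect of dilated convolution mentioned above follows from the standard relation k_eff = k + (k − 1)(d − 1) for kernel size k and dilation rate d. The sketch below evaluates it for a 3 × 3 kernel; the dilation rates 1, 2, and 3 and the 80 × 80 feature map are illustrative assumptions, not the values used inside the EMF module.

```python
def effective_kernel(k, d):
    """Spatial extent covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def conv_output_size(size, k, d=1, stride=1, padding=0):
    """Output side length of a convolution over a square input."""
    return (size + 2 * padding - effective_kernel(k, d)) // stride + 1


for d in (1, 2, 3):  # assumed example rates
    k_eff = effective_kernel(3, d)
    # With padding = d, a 3 x 3 dilated convolution keeps the map size.
    out = conv_output_size(80, 3, d=d, padding=d)
    print(f"dilation={d}: covers {k_eff}x{k_eff}, weights=9, output={out}")
```

The number of weights stays at nine for every rate, which is why dilation widens the receptive field without adding parameters to the convolution itself.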

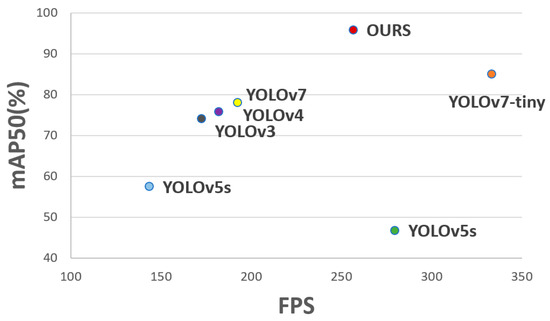

4.3. Comparison Experiments

To assess URA-YOLO, we benchmarked it against popular object detection algorithms on our dataset. YOLO algorithms have undergone multiple iterations and are frequently employed in industrial applications. We therefore primarily use the YOLO family for comparison, including YOLOv3 [43], YOLOv4 [15], YOLOv5, and YOLOv7 [44]. To ensure the experiment’s rigor, we selected YOLOv5-s and YOLOv7-tiny, whose model sizes are similar to that of our model. The comparisons are conducted under identical experimental environments and parameter settings. The results are presented in Table 7.

Table 7.

Results of different algorithms on ULMD.

Our proposed algorithm outperforms the compared algorithms on mAP50, exceeding the highest value among them by 10.8%. At the same time, our algorithm has the smallest model size. We therefore conclude that our algorithm strikes a favorable balance between accuracy and model size.

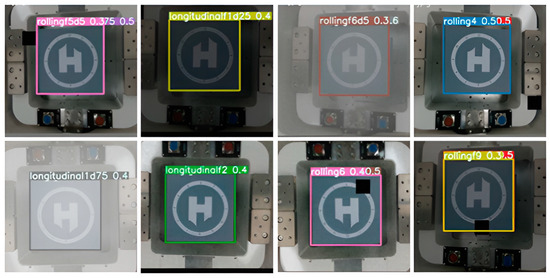

Figure 12 shows this comparison visually. The scatter plot, with FPS on the horizontal axis and mAP50 on the vertical axis, reflects each algorithm’s balance between detection speed and accuracy. Our proposed method lies toward the upper right corner, indicating the best overall performance. Some test samples are given in Figure 13. Although the images in the test samples partially overlap, the model still outputs the corresponding text description of the detection results. The results show that URA-YOLO makes its predictions by combining multi-scale feature information and local texture features.
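For reference, an FPS figure of the kind plotted on the horizontal axis is commonly obtained by timing repeated inference calls after a few warm-up runs. The sketch below shows that pattern with a stand-in workload; `measure_fps`, `dummy_infer`, and the warm-up count are hypothetical and do not describe the authors' measurement procedure.

```python
import time


def measure_fps(infer, inputs, warmup=3):
    """Return images processed per second by the callable `infer`."""
    # Warm-up calls are excluded so one-time setup costs do not skew timing.
    for x in inputs[:warmup]:
        infer(x)
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed


def dummy_infer(image):
    """Stand-in for a model forward pass (assumed placeholder workload)."""
    return sum(i * i for i in range(10_000))


fps = measure_fps(dummy_infer, list(range(50)))
print(f"{fps:.1f} images per second")
```

When timing a model on a GPU, the device usually has to be synchronized before each clock reading, because kernel launches are asynchronous and would otherwise make the measured time too short.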

Figure 12.

Comprehensive comparison of algorithm performance.

Figure 13.

Sample landing marker detection results. Our URA-YOLO model still produces detections under dimly lit, subtly changing conditions.

To more fully evaluate the performance of the URA-YOLO model, we replaced the backbone network with several popular lightweight convolutional neural network architectures, such as MobileNet, GhostNet, and ShuffleNet, and compared their performance in object detection tasks, as shown in Table 8. We compare the accuracy (mAP), inference speed (FPS), and computational complexity (FLOPs) of these lightweight models, and the results show that while these lightweight networks have significant advantages in speed and computational efficiency, they may sacrifice some accuracy. In contrast, the URA-YOLO model provides higher precision through optimized backbone and feature extraction strategies, especially in complex scenes, showing stronger robustness and accuracy.

Table 8.

Results of different backbone networks on ULMD.

While our model does not achieve the best speed, it achieves the highest accuracy on the created dataset. A likely reason is that our algorithm incorporates more branches than YOLOv7-tiny, which increases memory access cost and consequently slows inference somewhat. For tasks requiring high precision, the inference speed can be improved by reducing the input image resolution or by applying quantization or pruning techniques to meet real-time requirements.
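To give a rough sense of the resolution trade-off, the sketch below estimates how the FLOPs of a fully convolutional detector scale with input size. It assumes that the cost is proportional to the number of input pixels, which is a common approximation; the candidate sizes 416 and 320 are illustrative and are not evaluated in this paper.

```python
def scaled_flops(flops_at_base, base_size, new_size):
    """Estimate FLOPs at a new square input size.

    Assumes cost grows with the number of pixels, i.e. with the square
    of the side length, as in a fully convolutional network.
    """
    return flops_at_base * (new_size / base_size) ** 2


for size in (640, 416, 320):  # 640 is the training resolution used here
    ratio = scaled_flops(1.0, 640, size)
    print(f"{size} x {size}: about {ratio:.2%} of the FLOPs at 640 x 640")
```

Actual speed gains depend on memory access and hardware utilization as well as FLOPs, so the ratio is only an upper-level estimate, and accuracy on small markers may drop at lower resolutions.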

5. Conclusions

In this study, we introduce image recognition approaches to UAV relative attitude recognition. First, we collected image data of landing markers at different attitudes and constructed a dataset. Then, based on YOLOv7-tiny, we propose a deep learning framework with an improved structure that enhances the ability to recognize the relative attitude of UAVs, i.e., the URA-YOLO model. The model further lightens YOLOv7-tiny, reducing the model parameters by 6.5%. Meanwhile, its feature extraction and target localization capabilities are enhanced by techniques such as residual structure, attention mechanism, and dilated convolution, which increases the mAP50 by 10.8%. The experimental results demonstrate that the URA-YOLO model can meet the requirements of the UAV relative attitude recognition task for robustness, accuracy, and speed. However, some limitations still exist:

- (1) Before hardware deployment, the URA-YOLO approach needs to be further optimized for inference speed and memory usage, especially in real-time applications, such as on edge computing devices (e.g., NVIDIA Jetson) for UAVs. Despite the lightweight design of the model, it may still face computational and memory bottlenecks with high-resolution images and in complex environments. Therefore, future research will focus on quantization, model pruning, and hardware acceleration techniques to improve FPS and memory utilization.

- (2) Currently, the proposed method has only been validated on the ULMD dataset, which mainly simulates UAV attitude detection under specific conditions. The effectiveness of the URA-YOLO method still needs to be further investigated and validated against the challenges of complex environments (e.g., different weather conditions, flight attitude changes, uneven illumination, etc.) and lower-quality images in real flight. In future work, we plan to extend to more real-world UAV flight data and conduct field tests to evaluate the adaptability and robustness of the method in different environments.

During our research, we found that existing deep learning networks have difficulties in capturing the relative attitude of UAVs using only single-modal data (e.g., RGB camera images). For example, single-image data may lead to poor detection performance in low-light, blurred, or complex backgrounds. Therefore, a future research direction may be to use multi-source sensors (e.g., IMU, LiDAR, RGBD camera) that work in concert to acquire information from multiple dimensions, and to fuse data from different sensors to improve the accuracy and robustness of attitude detection. This multi-sensor fusion strategy can effectively compensate for the shortcomings of a single sensor, especially in complex environments with strong disturbances such as wind, waves, and vibration, and has broad application prospects.

Author Contributions

Conceptualization: F.Y.; Methodology: J.Q.; Formal analysis and investigation: J.Q.; Writing—original draft preparation: J.Q.; Writing—review and editing: J.W. and F.X.; Funding acquisition: X.C.; Resources: J.Q. and F.Y.; Supervision: J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Equipment Development Department of the Central Military Commission, grant number 347C0B5D. This research was funded by Ningbo Natural Science Foundation, grant number 2023J340. This research was funded by Ningbo Municipal People’s Government, grant number 2023Z044. This research was funded by Ningbo Major Innovation Project 2025, grant number 2022Z040. This research was funded by Ningbo Major Innovation Project 2035, grant number 2024Z063. This research was funded by Yongjiang Talent Project of Ningbo, grant number 2022A-012-G.

Data Availability Statement

The experimental data have been presented within this paper. For any additional information or data inquiries, please reach out to the first author or the corresponding author.

Acknowledgments

The author greatly appreciates the corresponding author, Wang, for his guidance, and Xu and Chen for their help.

Conflicts of Interest

The authors have no competing interests to declare that are relevant to the content of this article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAVs | Unmanned aerial vehicles |
| ULMD | UAV landing marker dataset |
| EMF | Enhanced multiscale feature fusion |
| LE | Lightweight and efficient feature extraction module |

References

- Muhammad, B.; Gregersen, A. Maritime Drone Services Ecosystem-Potentials and Challenges. In Proceedings of the 2022 IEEE International Black Sea Conference on Communications and Networking (BlackSeaCom), Sofia, Bulgaria, 6–9 June 2022; pp. 6–13.

- Bayhan, E.; Ozkan, Z.; Namdar, M.; Basgumus, A. Deep Learning Based Object Detection and Recognition of Unmanned Aerial Vehicles. In Proceedings of the 2021 3rd International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 11–13 June 2021; pp. 1–5.

- Zhao, C.; Liu, R.W.; Qu, J.; Gao, R. Deep learning-based object detection in maritime unmanned aerial vehicle imagery: Review and experimental comparisons. Eng. Appl. Artif. Intell. 2024, 128, 107513.

- Yang, T.; Jiang, Z.; Sun, R.; Cheng, N.; Feng, H. Maritime Search and Rescue Based on Group Mobile Computing for Unmanned Aerial Vehicles and Unmanned Surface Vehicles. IEEE Trans. Ind. Inform. 2020, 16, 7700–7708.

- Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for Small Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

- European Commission; Joint Research Centre. New Sensors Benchmark Report on WorldView-4: Geometric Benchmarking over Maussane Test Site for CAP Purposes; Publications Office: Luxembourg, 2017.

- Wang, Y.; Lu, J.; Li, Z.; Chu, Y. Fault detection for a class of non-linear networked control systems in the presence of Markov sensors assignment with partially known transition probabilities. IET Control Theory Appl. 2015, 9, 1491–1500.

- Bostanci, E.; Bostanci, B.; Kanwal, N.; Clark, A.F. Sensor fusion of camera, GPS and IMU using fuzzy adaptive multiple motion models. Soft Comput. 2018, 22, 2619–2632.

- Adarsh, S.; Ramachandran, K.I. Design of Sensor Data Fusion Algorithm for Mobile Robot Navigation Using ANFIS and Its Analysis Across the Membership Functions. Autom. Control Comput. Sci. 2018, 52, 382–391.

- Zhang, Z.; Wang, H.; Chen, W. A real-time visual-inertial mapping and localization method by fusing unstable GPS. In Proceedings of the 2018 13th World Congress on Intelligent Control and Automation (WCICA), Changsha, China, 4–8 July 2018; pp. 1397–1402.

- Alonge, F.; D’Ippolito, F.; Garraffa, G.; Sferlazza, A. Hybrid Observer for Indoor Localization with Random Time-of-Arrival Measurments. In Proceedings of the 2018 IEEE 4th International Forum on Research and Technology for Society and Industry (RTSI), Palermo, Italy, 10–13 September 2018; pp. 1–6.

- Cao, Y.; Liang, H.; Fang, Y.; Peng, W. Research on Application of Computer Vision Assist Technology in High-precision UAV Navigation and Positioning. In Proceedings of the 2020 IEEE 3rd International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 27–29 September 2020; pp. 453–458.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-based Fully Convolutional Networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Nice, France, 2016; Volume 29.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Sun, Y.; Li, H. Control of Rotor Drone’s Autonomous Landing on Moving Unmanned Surface Vehicle. Electron. Opt. Control 2021, 28, 86–89.

- Sun, K.; Yu, Y.; Feng, Y. Research on Target Tracking and Landing Method of Autonomous UAV Based on Vision. J. Shenyang Ligong Univ. 2022, 41, 21–28.

- Song, Y.; Huang, X.; Zhang, X.; Wang, H. UAV abnormal flight detection based on improved convolutional neural network. Inf. Technol. 2023, 47, 51–57+62.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, Z.; Li, Y.; Shuang, F.; Huang, Z.; Wang, R. EMB-YOLO: Dataset, method and benchmark for electric meter box defect detection. J. King Saud Univ. Comput. Inf. Sci. 2024, 36, 101936.

- Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning Efficient Convolutional Networks Through Network Slimming. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2736–2744.

- He, Y.; Zhang, X.; Sun, J. Channel Pruning for Accelerating Very Deep Neural Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1389–1397.

- Guo, S.; Wang, Y.; Li, Q.; Yan, J. DMCP: Differentiable Markov Channel Pruning for Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1539–1547.

- Chang, J.; Lu, Y.; Xue, P.; Xu, Y.; Wei, Z. Automatic channel pruning via clustering and swarm intelligence optimization for CNN. Appl. Intell. 2022, 52, 17751–17771.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 18–23 January 2019; pp. 6105–6114.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Berlin, Germany, 2018; pp. 385–400.

- Lin, T.-Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Ding, X.; Guo, Y.; Ding, G.; Han, J. ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1911–1920.

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).