Experimental Evaluation of Multi- and Single-Drone Systems with 1D LiDAR Sensors for Stockpile Volume Estimation

Abstract

1. Introduction

2. 1D LiDAR Drone-Borne Approaches for Stockpile Volume Estimation

2.1. Overview

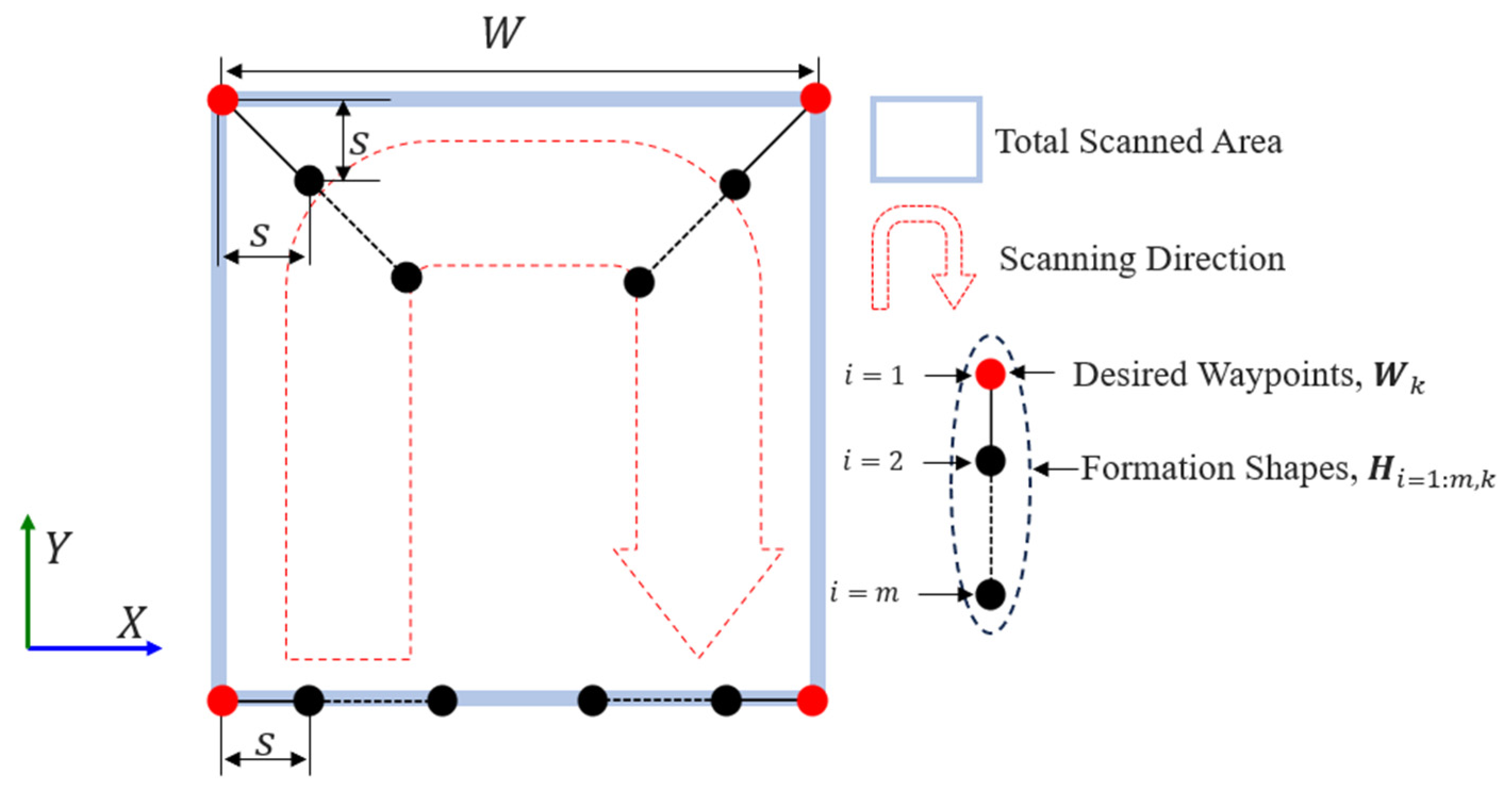

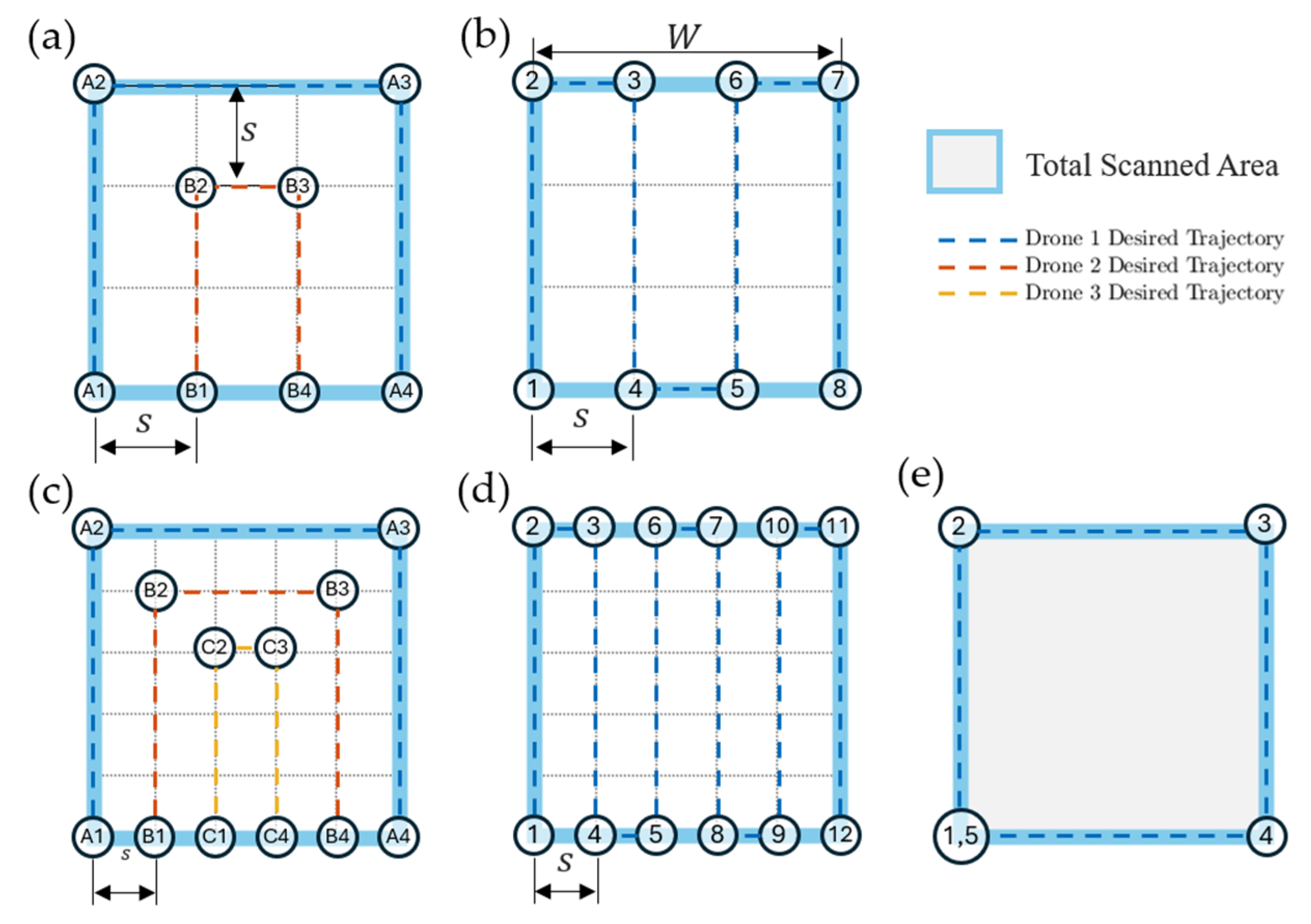

2.2. Multi-Drone Agents with Static 1D LiDAR Sensors

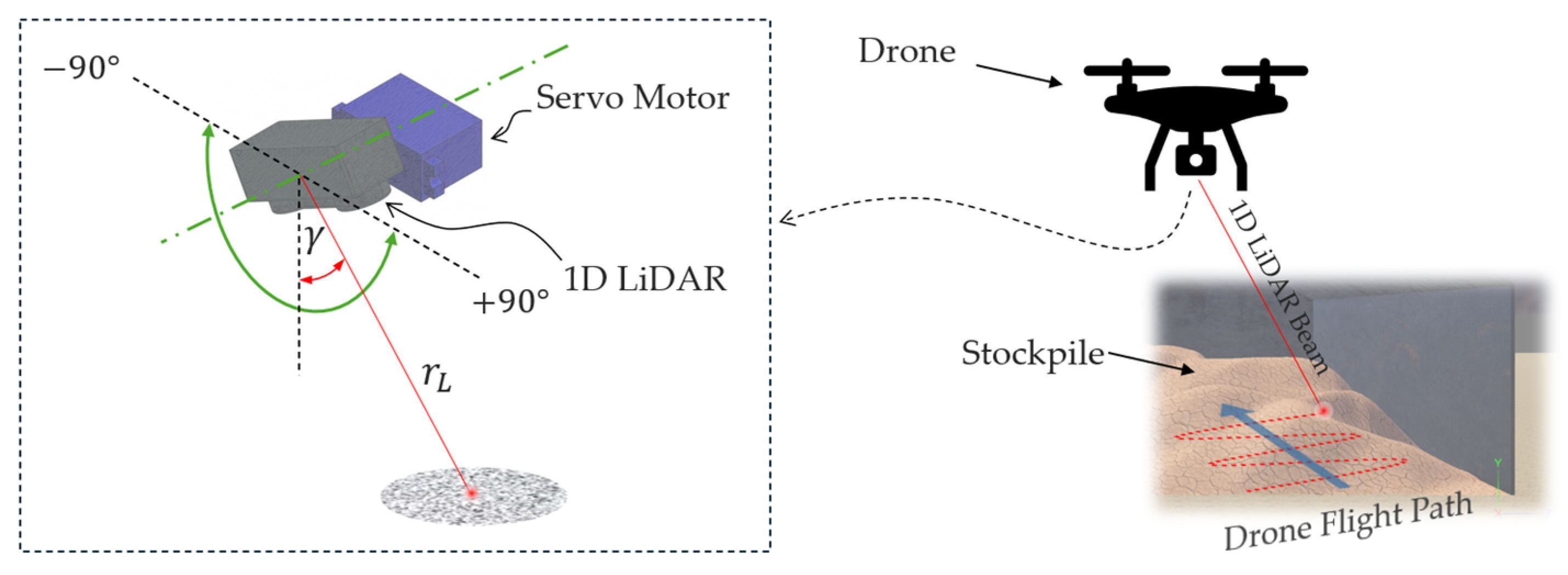

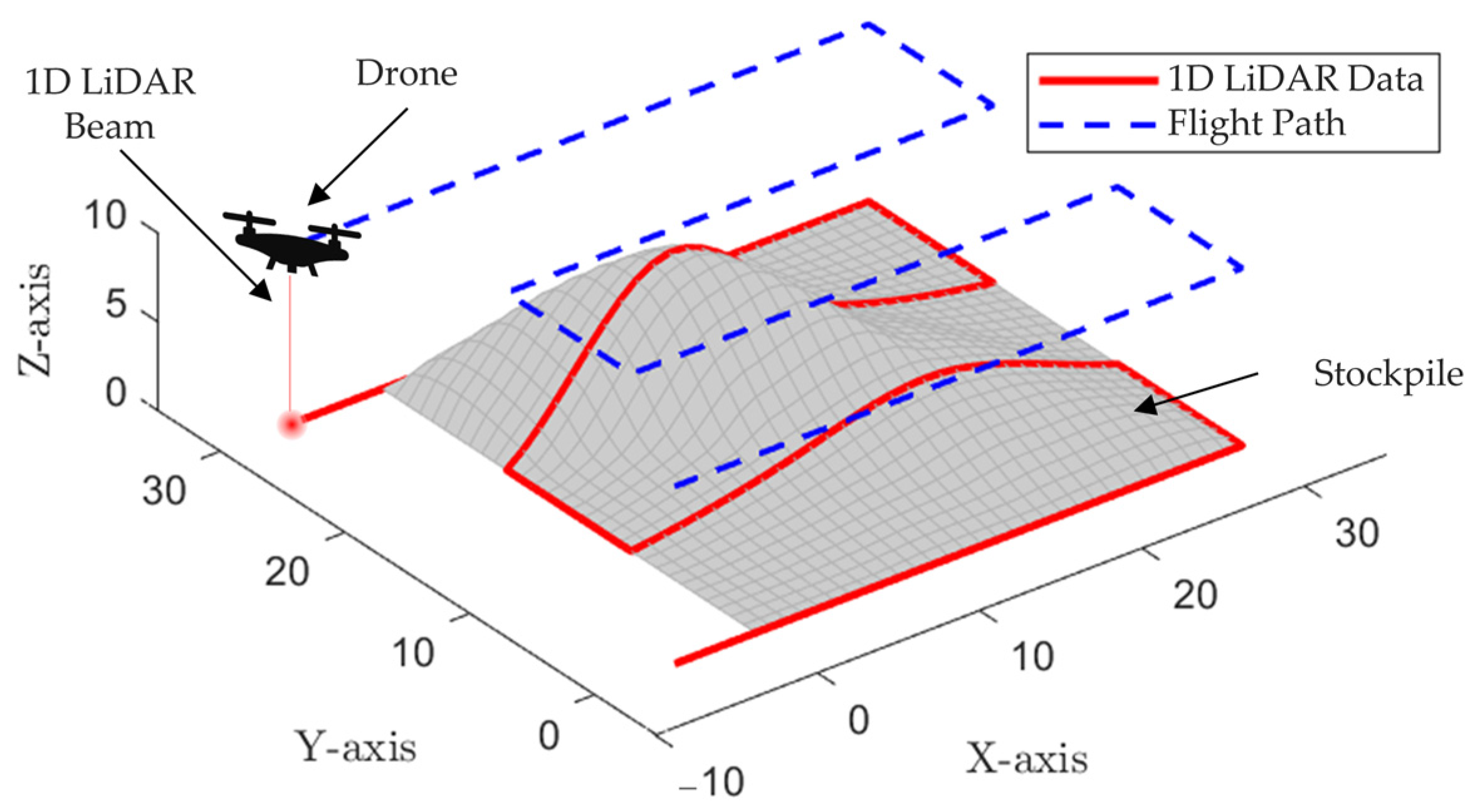

2.3. Single Drone with an Actuated 1D LiDAR Sensor

2.4. Single Drone with Static 1D LiDAR Sensor

2.5. Point Cloud Generation and Registration

3. Experimental Work

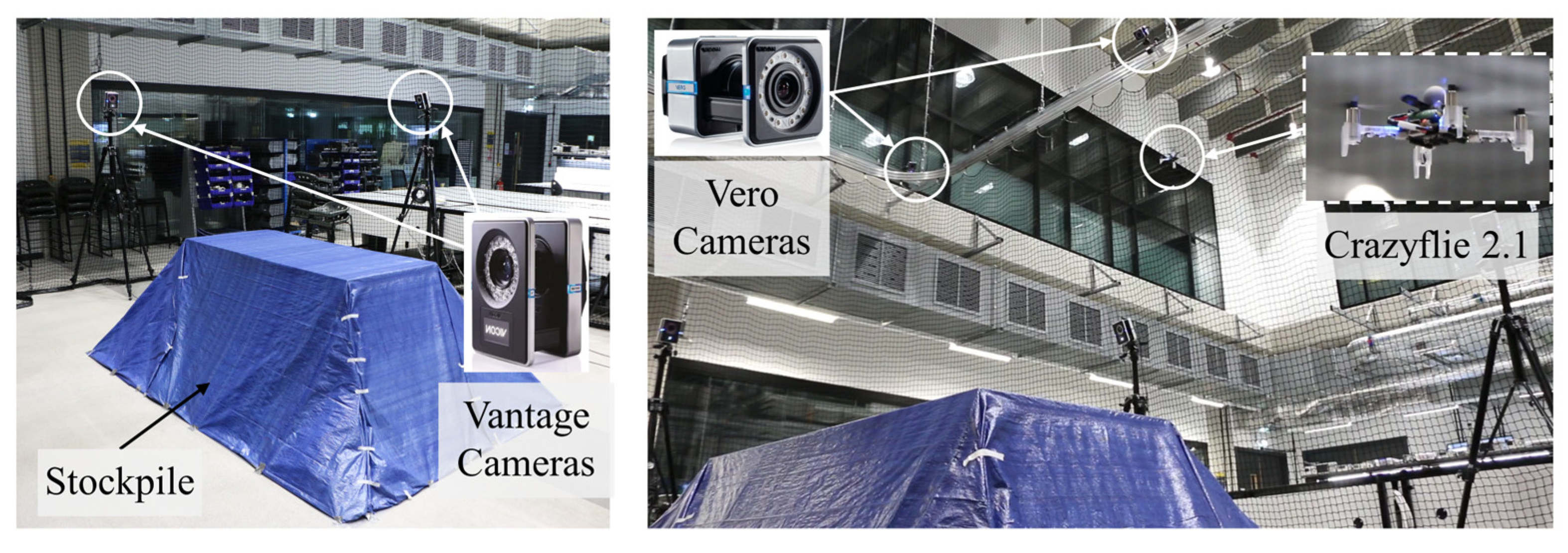

3.1. Setup for Multi-Drone Agents and Single-Drone with Static 1D LiDAR Sensors

- Initialisation of dedicated log files for each drone, cataloguing timestamp, position and orientation (sourced from the VICON system), desired positions, and depth range;

- Activation of a ROS node to receive the VICON data, which a separate thread simultaneously records in the log file;

- Establishing connectivity with drones and updating the drones’ position estimator with their current positions from the VICON system;

- Execution of a closed-loop function at 10 Hz that continuously passes the desired trajectory from the formation control to the drone’s desired position function in the Crazyflie Python library, while simultaneously updating the drone’s position estimator with the VICON data.
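The four setup steps above can be condensed into a single-threaded loop. This is a minimal sketch under stated assumptions, not the authors' Code S1: the callbacks `read_vicon`, `read_range`, `send_setpoint` and `send_extpose`, the CSV column names, and the fixed 0° yaw are all hypothetical. On real hardware, the two `send_*` callbacks could plausibly be bound to cflib's `Commander.send_position_setpoint(x, y, z, yaw)` and `Extpos.send_extpose(x, y, z, qx, qy, qz, qw)`, and `read_vicon` to the latest pose received by the ROS VICON node; these bindings should be checked against the library version in use.

```python
import csv
import io
import time
from typing import Callable, Sequence, TextIO, Tuple

# Pose as (x, y, z, qx, qy, qz, qw): position [m] plus orientation quaternion.
Pose = Tuple[float, float, float, float, float, float, float]
Waypoint = Tuple[float, float, float]

# Hypothetical log columns: timestamp, VICON pose, desired position, depth range.
LOG_FIELDS = ["t_s", "x", "y", "z", "qx", "qy", "qz", "qw",
              "x_des", "y_des", "z_des", "range_m"]


def run_control_loop(
    trajectory: Sequence[Waypoint],
    read_vicon: Callable[[], Pose],
    read_range: Callable[[], float],
    send_setpoint: Callable[[float, float, float, float], None],
    send_extpose: Callable[..., None],
    log_file: TextIO,
    rate_hz: float = 10.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Send one desired waypoint per tick at about rate_hz and log each tick as a CSV row."""
    period = 1.0 / rate_hz
    writer = csv.writer(log_file)
    writer.writerow(LOG_FIELDS)
    t0 = clock()
    for x_des, y_des, z_des in trajectory:
        tick = clock()
        pose = read_vicon()                      # latest motion-capture pose
        send_extpose(*pose)                      # refresh the onboard position estimator
        send_setpoint(x_des, y_des, z_des, 0.0)  # yaw held at 0 deg (assumption)
        writer.writerow([tick - t0, *pose, x_des, y_des, z_des, read_range()])
        remaining = period - (clock() - tick)    # keep the loop close to rate_hz
        if remaining > 0:
            sleep(remaining)
    return len(trajectory)


if __name__ == "__main__":
    # Dry run with stand-in callbacks instead of a real drone and VICON feed.
    buf = io.StringIO()
    n = run_control_loop(
        trajectory=[(0.0, 0.0, 1.5), (0.1, 0.0, 1.5), (0.2, 0.0, 1.5)],
        read_vicon=lambda: (0.0, 0.0, 1.5, 0.0, 0.0, 0.0, 1.0),
        read_range=lambda: 1.2,
        send_setpoint=lambda x, y, z, yaw: None,
        send_extpose=lambda *pose: None,
        log_file=buf,
    )
    print(n, "ticks logged")
    print(buf.getvalue())
```

Injecting `clock` and `sleep` keeps the timing logic testable without hardware; the original setup instead records the VICON stream on a separate thread, which this sketch does not model.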

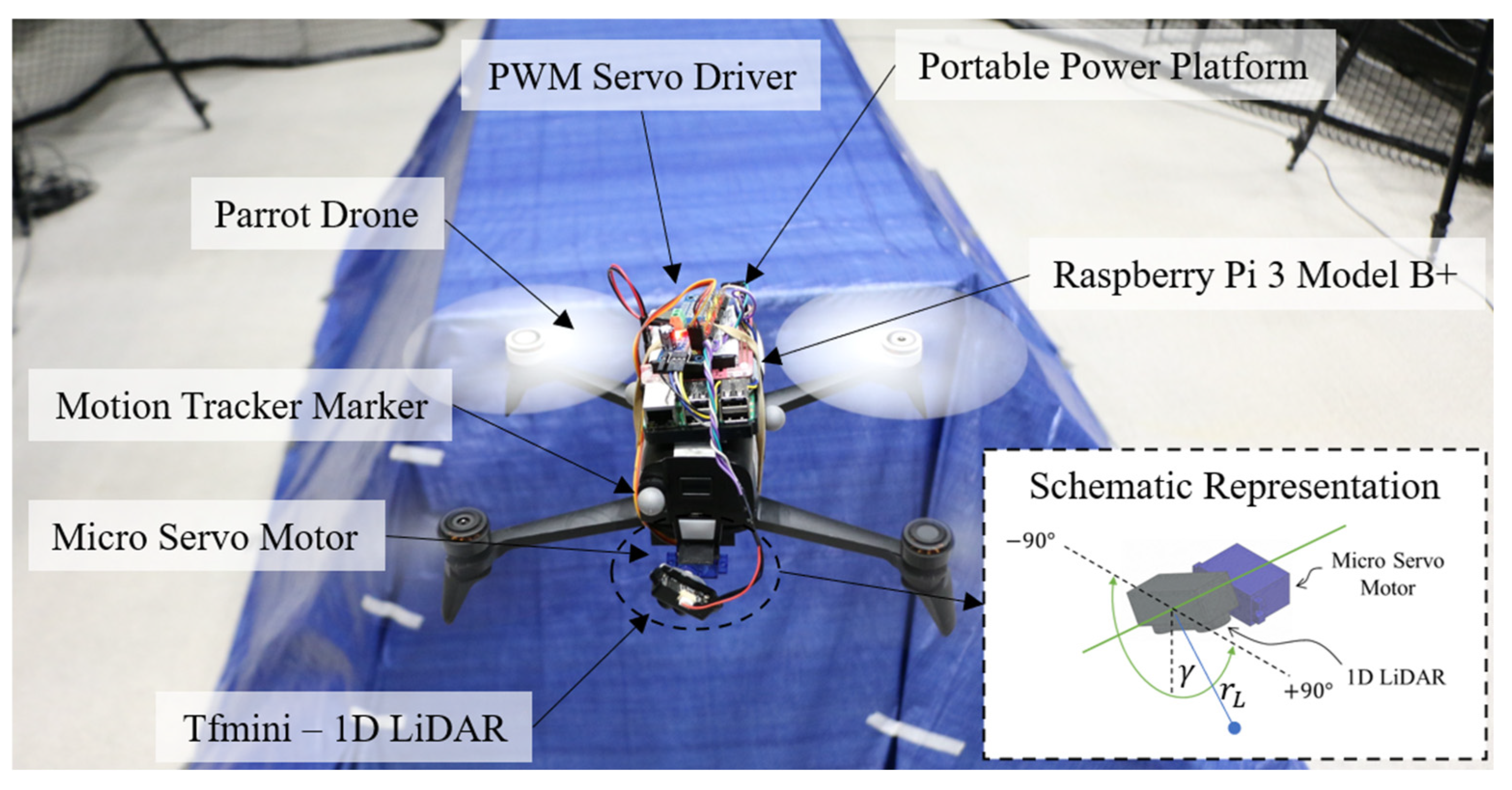

3.2. Setup for the Single Drone with the Actuated 1D LiDAR Sensor

- TFMini LiDAR Sensor (Benewake, Beijing, China): To measure distances ranging from 30 cm to 12 m;

- Servo Motor SG90 (Tower Pro, Taipei, Taiwan): A lightweight motor providing about 180° of rotation (90° either side of centre) to actuate the TFMini LiDAR sensor;

- PWM Servo Motor Driver (AZDelivery, Deggendorf, Germany): To ensure smooth and efficient servo motor operation;

- Raspberry Pi 3 Model B+ (Raspberry Pi Foundation, Cambridge, UK): Serves as the central control unit, driving the servo motor, running the LiDAR sensor, and acquiring and storing the servo angle and LiDAR data;

- PiJuice HAT (PiSupply, London, UK): A portable power platform powering both the Raspberry Pi unit and the sensor array;

- Parrot Bebop 2 Drone (Parrot, Paris, France): The aerial vehicle carrying the payload, fitted with four markers so that the VICON system can track its position and orientation.
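To turn logged servo angles, LiDAR ranges and VICON poses into a point cloud, each reading has to be projected into the world frame. The sketch below shows one way to do this under assumptions that are ours, not the paper's: the LiDAR sits at the drone's body origin with no lever arm, points straight down at a servo angle of 0°, and the servo sweeps the beam about the body x-axis; attitude is given as roll, pitch and yaw in radians (ZYX convention) with z pointing up. The 0.3 m to 12 m validity window is the TFMini range listed above; the function names are hypothetical.

```python
import math
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]
# (range [m], servo angle [deg], drone position, roll, pitch, yaw [rad])
Sample = Tuple[float, float, Vec3, float, float, float]

TFMINI_MIN_M, TFMINI_MAX_M = 0.3, 12.0  # TFMini measurement range


def rotation_zyx(roll: float, pitch: float, yaw: float) -> List[List[float]]:
    """Body-to-world rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def lidar_point_world(range_m: float, servo_deg: float, drone_pos: Vec3,
                      roll: float = 0.0, pitch: float = 0.0, yaw: float = 0.0) -> Vec3:
    """Project one range reading into the world frame."""
    theta = math.radians(servo_deg)
    # Beam in the body frame: straight down at 0 deg, swept about the body x-axis.
    p_body = (0.0, range_m * math.sin(theta), -range_m * math.cos(theta))
    r = rotation_zyx(roll, pitch, yaw)
    x, y, z = (drone_pos[i] + sum(r[i][k] * p_body[k] for k in range(3))
               for i in range(3))
    return (x, y, z)


def build_point_cloud(samples: Iterable[Sample]) -> List[Vec3]:
    """Project all samples, dropping readings outside the sensor's valid range."""
    cloud: List[Vec3] = []
    for range_m, servo_deg, pos, roll, pitch, yaw in samples:
        if TFMINI_MIN_M <= range_m <= TFMINI_MAX_M:
            cloud.append(lidar_point_world(range_m, servo_deg, pos, roll, pitch, yaw))
    return cloud


if __name__ == "__main__":
    # A level drone 2 m up, sweeping the servo across its range.
    sweep = [(2.0 / math.cos(math.radians(a)), a, (0.0, 0.0, 2.0), 0.0, 0.0, 0.0)
             for a in range(-60, 61, 30)]
    for p in build_point_cloud(sweep):
        print(tuple(round(c, 3) for c in p))
```

A real mounting would add a fixed sensor offset and any servo-to-body misalignment before the rotation; outlier rejection beyond the simple range check is not attempted here.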

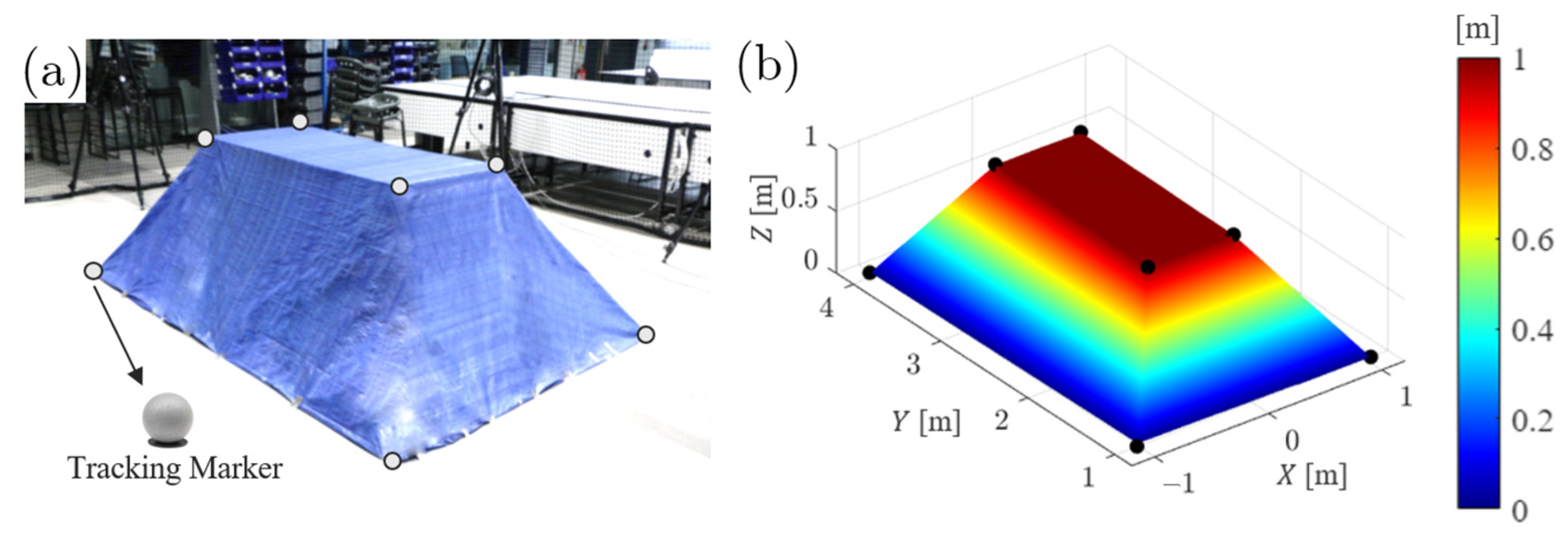

3.3. Reference Stockpile

3.4. Data Collection

4. Results and Discussion

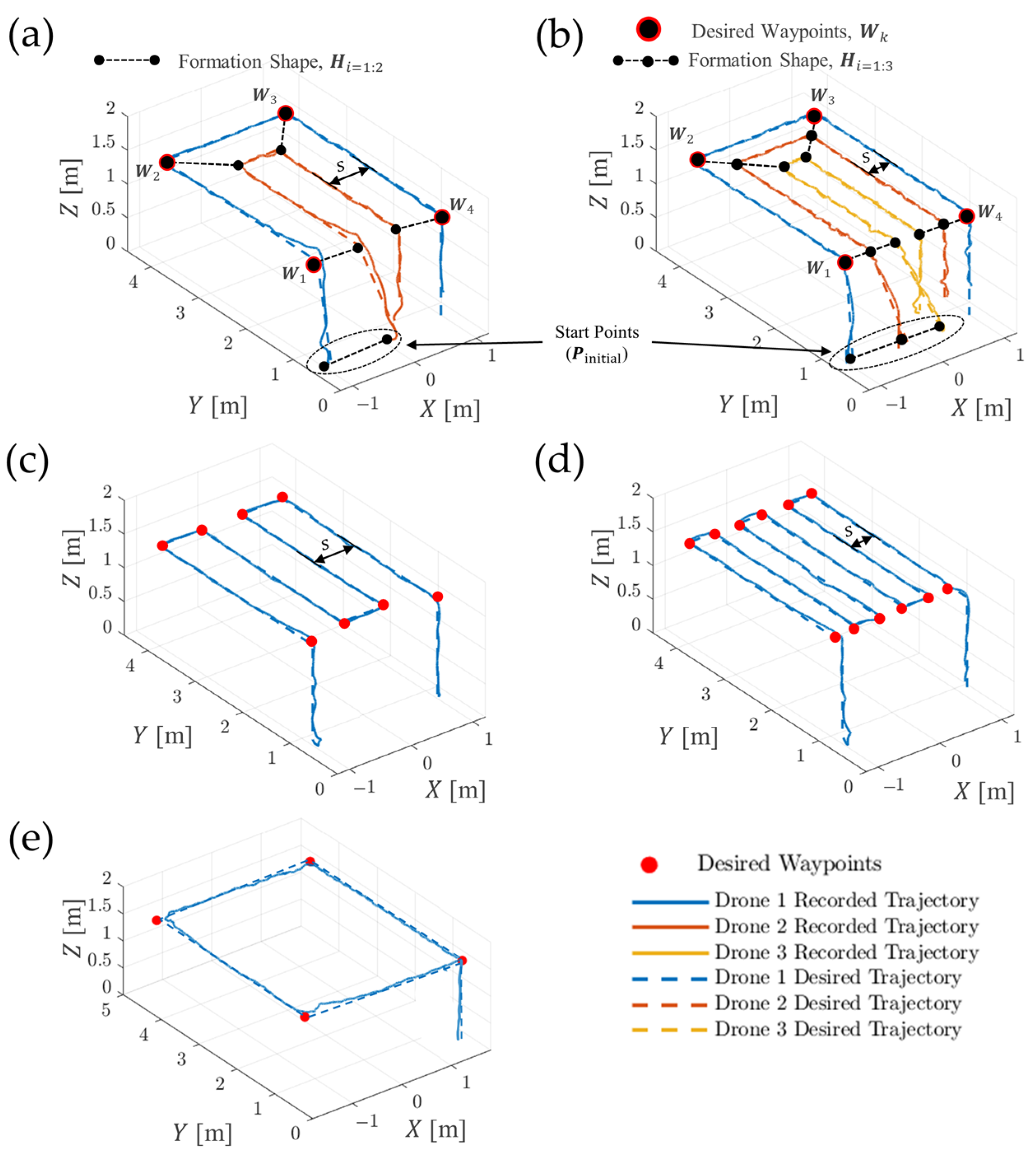

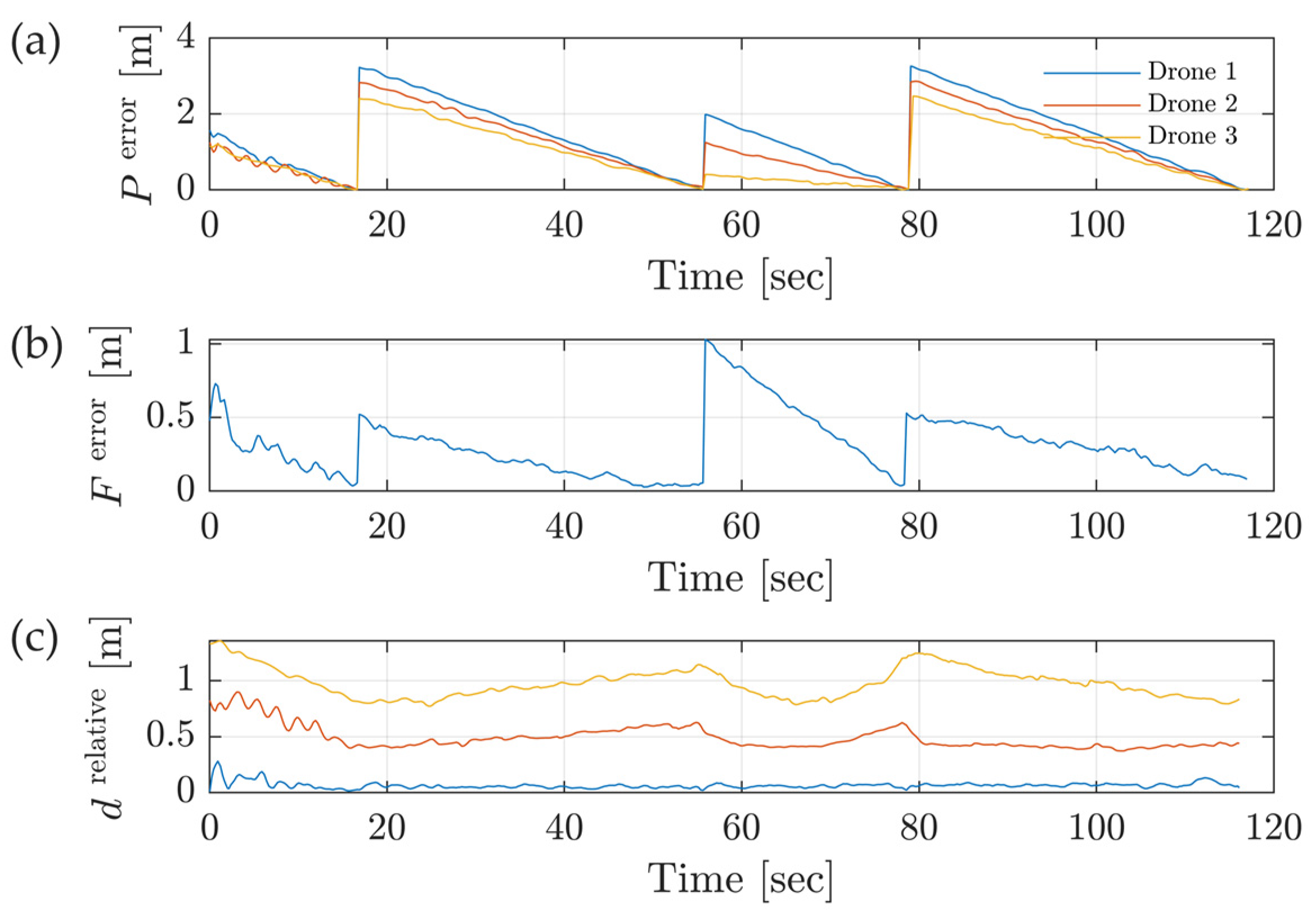

4.1. Flight Test Performance

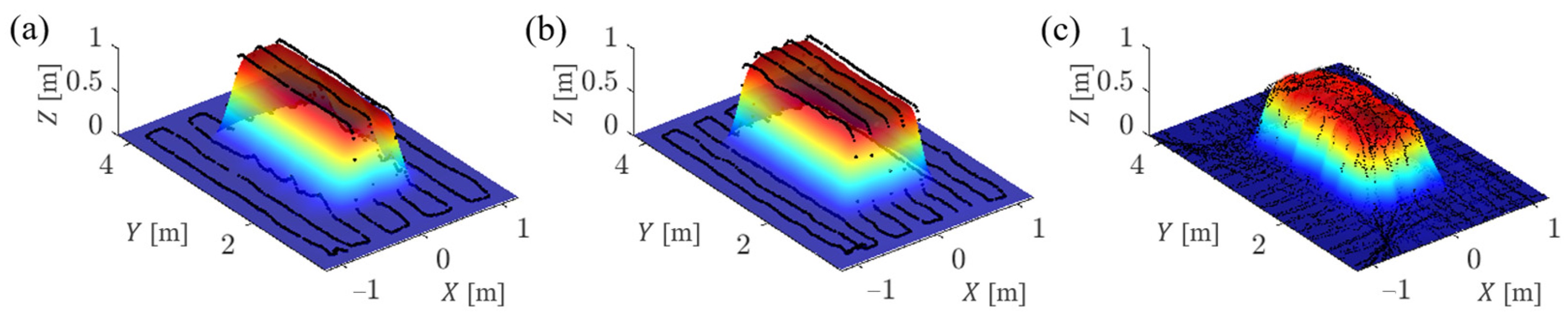

4.2. Point Cloud Registration and Stockpile Reconstruction

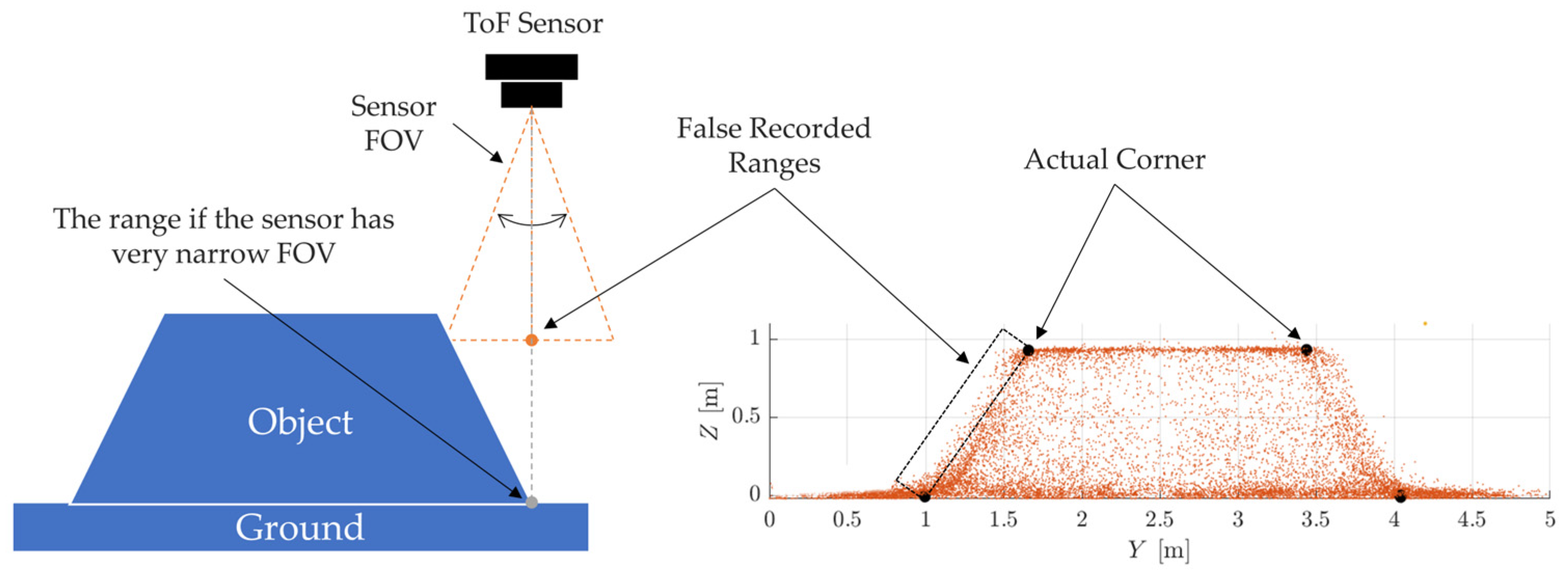

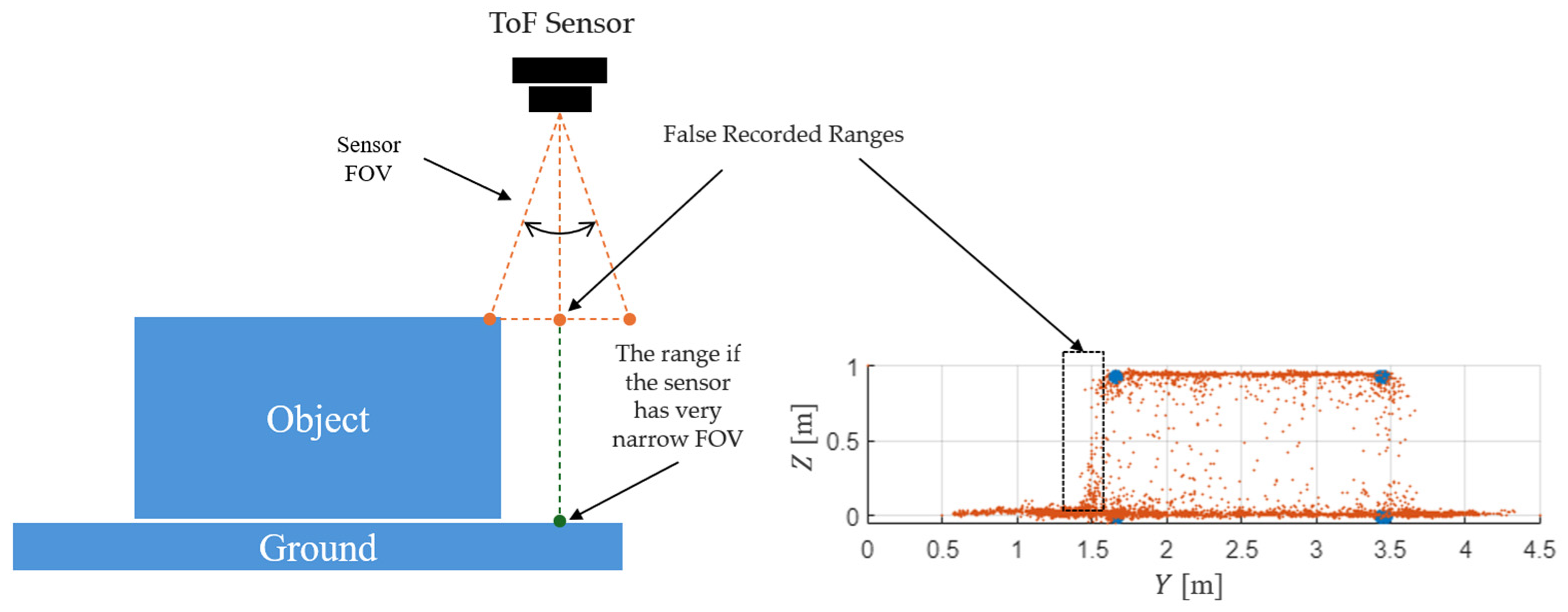

4.3. Comparative Analysis with a Second Object: Rectangular Prism Stockpile

4.4. Comparative Analysis of the Proposed Approaches

5. Final Comments

5.1. Outcomes

- A new adaptive formation control approach was developed for drone formation and trajectory tracking, ensuring smooth transitions between formation shapes by dynamically adjusting the drones’ velocities;

- Experimental tests were performed to scan an example stockpile in an indoor environment using multi-drone systems of Crazyflie micro drones, demonstrating the proposed formation algorithm in real flight with an average deviation of 0.23% between the desired and actual paths of each drone within the formation;

- For comparison, the stockpile was also scanned using a single drone carrying either a static or an actuated 1D LiDAR; the latter approach had previously been proposed on the basis of simulations, and this work demonstrates its efficacy experimentally;

- The approaches were integrated by developing Python code to control the drones and merge the motion tracking data via ROS communication; this code is provided in the Supplementary Materials (Code S1);

- In terms of volumetric estimation of the reference trapezoidal prism stockpile considered in this study, the Crazyflie-based configurations (a formation of two or three drones, or a single drone following comparable zigzag paths) produced similar results, with a promising average volumetric error of 1.3%. In contrast, the servo-actuated 1D LiDAR approach showed a higher average volumetric error of 4.4%, owing to the large number of outlier points and the common LiDAR bias when scanning at non-vertical angles;

- For the second scanned shape, a smaller rectangular prism, the volumetric error increased markedly, owing to the difficulty of reconstructing sharp edges and the effect of the ToF sensor’s FOV on object detection;

- In terms of flight time, both the multi-drone approach and the single drone with the actuated 1D LiDAR significantly reduce mission duration compared with a single drone carrying a static sensor along a zigzag trajectory. Although deploying multiple drones increases the initial investment, it adds redundancy to the system and is beneficial when a larger area must be scanned than a single drone could fully cover within its battery limits. The single drone with the actuated 1D LiDAR appears to offer a balance between flight time and cost; however, its mechanical complexity, data outliers, and noise increase the estimated volumetric error.
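The flight-time argument in the last point can be illustrated with a simple coverage model. This is a hypothetical back-of-the-envelope sketch, not the mission planner used in the experiments: it assumes a rectangular area scanned in straight parallel lanes at constant speed, ignores take-off, landing, turns and formation reconfiguration, and lets N drones fly line abreast, one lane apart, so that the lanes are shared between them. The area dimensions, lane spacing and speed in the example are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Survey:
    """Hypothetical rectangular survey area scanned in parallel lanes."""
    width_m: float         # extent across the lanes
    length_m: float        # length of each straight lane
    lane_spacing_m: float  # distance between adjacent lanes (sensor swath)
    speed_mps: float       # constant cruise speed


def lanes_needed(s: Survey) -> int:
    return math.ceil(s.width_m / s.lane_spacing_m)


def single_drone_path_m(s: Survey) -> float:
    """Zigzag (boustrophedon) path: every lane plus the short hops between them."""
    n = lanes_needed(s)
    return n * s.length_m + (n - 1) * s.lane_spacing_m


def formation_path_m(s: Survey, n_drones: int) -> float:
    """Per-drone path when n_drones fly line abreast, one lane apart."""
    passes = math.ceil(lanes_needed(s) / n_drones)
    # Between passes the whole formation shifts sideways by n_drones lanes.
    return passes * s.length_m + (passes - 1) * n_drones * s.lane_spacing_m


def mission_time_s(path_m: float, s: Survey) -> float:
    return path_m / s.speed_mps


if __name__ == "__main__":
    area = Survey(width_m=3.0, length_m=4.0, lane_spacing_m=0.5, speed_mps=0.3)
    for n in (1, 2, 3):
        path = formation_path_m(area, n)
        print(f"{n} drone(s): {path:.1f} m per drone, ~{mission_time_s(path, area):.0f} s")
```

Because the drones fly simultaneously, the per-drone path length sets the mission time. An actuated sensor that sweeps a wider swath on each pass could be represented, crudely, by a larger `lane_spacing_m`.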

5.2. Future Work

- Develop an automated approach for selecting waypoints and formation topologies to optimise coverage of the desired area;

- Investigate adaptive path optimisation techniques for the multi-agent system, particularly for larger stockpile areas, to improve efficiency while maintaining coordinated flight and uniform coverage;

- Test the proposed multi-drone approach in conjunction with the dynamic formation strategy in large stockpile storages, where active collision avoidance would be essential;

- Integrate a leader–follower multi-agent system, providing the leader drone with enhanced capabilities, such as obstacle detection, and facilitating real-time information sharing with follower drones to further optimise operations;

- Integrate narrow FOV sensors within micro drones, which promises more accurate data acquisition, fewer errors, and better reconstruction of fine details.
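The FOV effect noted in the outcomes, and the motivation for narrow FOV sensors above, follow from simple beam geometry: a sensor with full cone angle α at distance d illuminates a spot of diameter D = 2d·tan(α/2), and surface features narrower than D, such as a sharp edge, are averaged or smeared within it. The FOV values in the example (27° for a wide ToF sensor and 2° for a narrow LiDAR beam) are illustrative assumptions and should be checked against the datasheets of the actual sensors.

```python
import math


def footprint_diameter_m(distance_m: float, fov_deg: float) -> float:
    """Diameter of the spot illuminated by a conical beam of full angle fov_deg."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    # Illustrative FOVs (assumptions; check the sensor datasheets).
    for label, fov in [("wide ToF, ~27 deg", 27.0), ("narrow LiDAR, ~2 deg", 2.0)]:
        for h in (1.5, 2.0):
            print(f"{label} at {h} m: {footprint_diameter_m(h, fov):.3f} m")
```

With these assumed values, the wide cone spans roughly 0.72 m at 1.5 m range, against about 0.05 m for the 2° beam, which is why fine details are easier to resolve with a narrow FOV.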

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Methods | Volume at 1.5 m altitude [m³] | Error at 1.5 m altitude [%] | Volume at 2.0 m altitude [m³] | Error at 2.0 m altitude [%] |
|---|---|---|---|---|
| Multi-Drone (2 Drones) | 3.03 | 1.0 | 3.08 | 2.7 |
| Multi-Drone (3 Drones) | 3.01 | 0.3 | 3.04 | 1.3 |
| Single Drone (Zigzag Path) | 3.05 | 1.7 | 3.08 | 2.7 |
| Single Drone (Finer Zigzag Path) | 2.98 | −0.7 | 3.03 | 1.0 |
| Single Drone (Actuated 1D LiDAR) | 3.11 | 3.7 | 3.15 | 5.0 |

Reference volume: 3.0 m³.
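The Error column of the volume table can be reproduced from the estimated volumes as the signed percentage deviation from the 3.0 m³ reference, 100 × (V_est − V_ref) / V_ref. The sketch below does exactly that; the dictionary simply transcribes the table, and the function names are illustrative.

```python
from statistics import mean
from typing import Dict, Iterable, Tuple

REFERENCE_VOLUME_M3 = 3.0

# Estimated volumes [m^3] at (1.5 m, 2.0 m) altitude, transcribed from the table.
ESTIMATES: Dict[str, Tuple[float, float]] = {
    "Multi-Drone (2 Drones)": (3.03, 3.08),
    "Multi-Drone (3 Drones)": (3.01, 3.04),
    "Single Drone (Zigzag Path)": (3.05, 3.08),
    "Single Drone (Finer Zigzag Path)": (2.98, 3.03),
    "Single Drone (Actuated 1D LiDAR)": (3.11, 3.15),
}

STATIC_METHODS = [
    "Multi-Drone (2 Drones)",
    "Multi-Drone (3 Drones)",
    "Single Drone (Zigzag Path)",
    "Single Drone (Finer Zigzag Path)",
]


def percent_error(estimated: float, reference: float = REFERENCE_VOLUME_M3) -> float:
    """Signed percentage error; positive means the volume was overestimated."""
    return 100.0 * (estimated - reference) / reference


def error_table(estimates: Dict[str, Tuple[float, float]] = ESTIMATES) -> Dict[str, Tuple[float, float]]:
    """Per-method errors at both altitudes, rounded to one decimal as in the table."""
    return {name: (round(percent_error(v15), 1), round(percent_error(v20), 1))
            for name, (v15, v20) in estimates.items()}


def mean_error(names: Iterable[str], estimates: Dict[str, Tuple[float, float]] = ESTIMATES) -> float:
    """Mean signed error over both altitudes for the selected methods (unrounded)."""
    return mean(percent_error(v) for name in names for v in estimates[name])


if __name__ == "__main__":
    for name, (e15, e20) in error_table().items():
        print(f"{name:34s} {e15:5.1f} {e20:5.1f}")
    print(f"static-sensor mean: {mean_error(STATIC_METHODS):.2f} %")
    print(f"actuated mean:      {mean_error(['Single Drone (Actuated 1D LiDAR)']):.2f} %")
```

Averaging the unrounded errors gives 1.25% for the four static-sensor configurations, consistent with the 1.3% quoted in Section 5.1, and about 4.33% for the actuated 1D LiDAR; the 4.4% quoted in Section 5.1 corresponds to averaging the rounded table entries 3.7% and 5.0%.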

| Approaches | Advantages | Disadvantages |
|---|---|---|
| Multi-drone agents with static 1D LiDAR | | |
| Single drone with static 1D LiDAR | | |
| Single drone with an actuated 1D LiDAR | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alsayed, A.; Bana, F.; Arvin, F.; Quinn, M.K.; Nabawy, M.R.A. Experimental Evaluation of Multi- and Single-Drone Systems with 1D LiDAR Sensors for Stockpile Volume Estimation. Aerospace 2025, 12, 189. https://doi.org/10.3390/aerospace12030189
