New Approaches for the Use of Extended Mock-Ups for the Development of Air Traffic Controller Working Positions

Abstract

1. Introduction

1.1. Controller Working Position

1.2. Software Development in the ATC-Domain

2. CWP Development Workflow

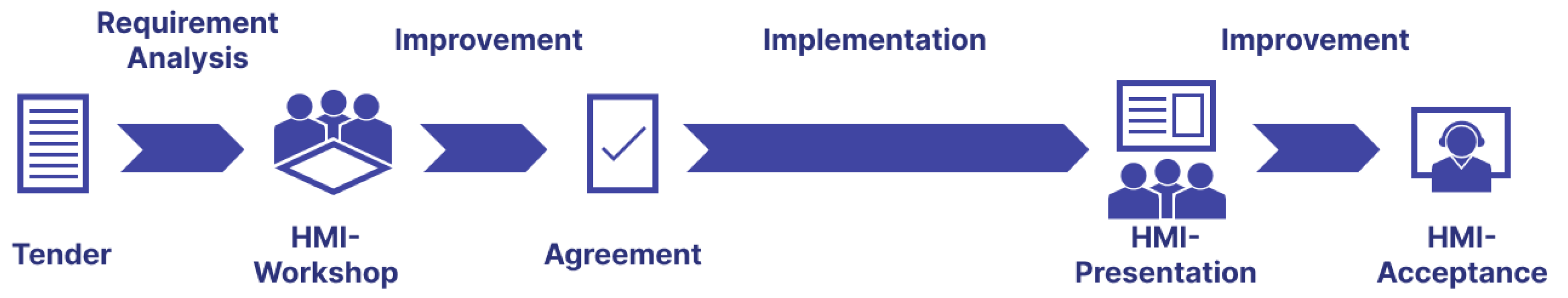

2.1. Current Project Development Workflow

- The process is not user-oriented. The tender does not include workflows or use cases because it serves to formally describe a system as the basis for a commercial offer. The same applies to the supplier's specification phase, which aims to reach a solution agreement as quickly and as inexpensively as possible. Overall, the controllers, as users of the system, play only a subordinate role in the workflow.

- The decision-making process during one or two days of HMI workshops is insufficient, often rushing towards a consensus without allowing controllers sufficient time to weigh the advantages and disadvantages of new workflows presented.

- Technical abstractions are challenging for controllers, who typically do not have a software development background. Wireframes, drawings, use cases, and requirements are too abstract for them to engage with effectively.

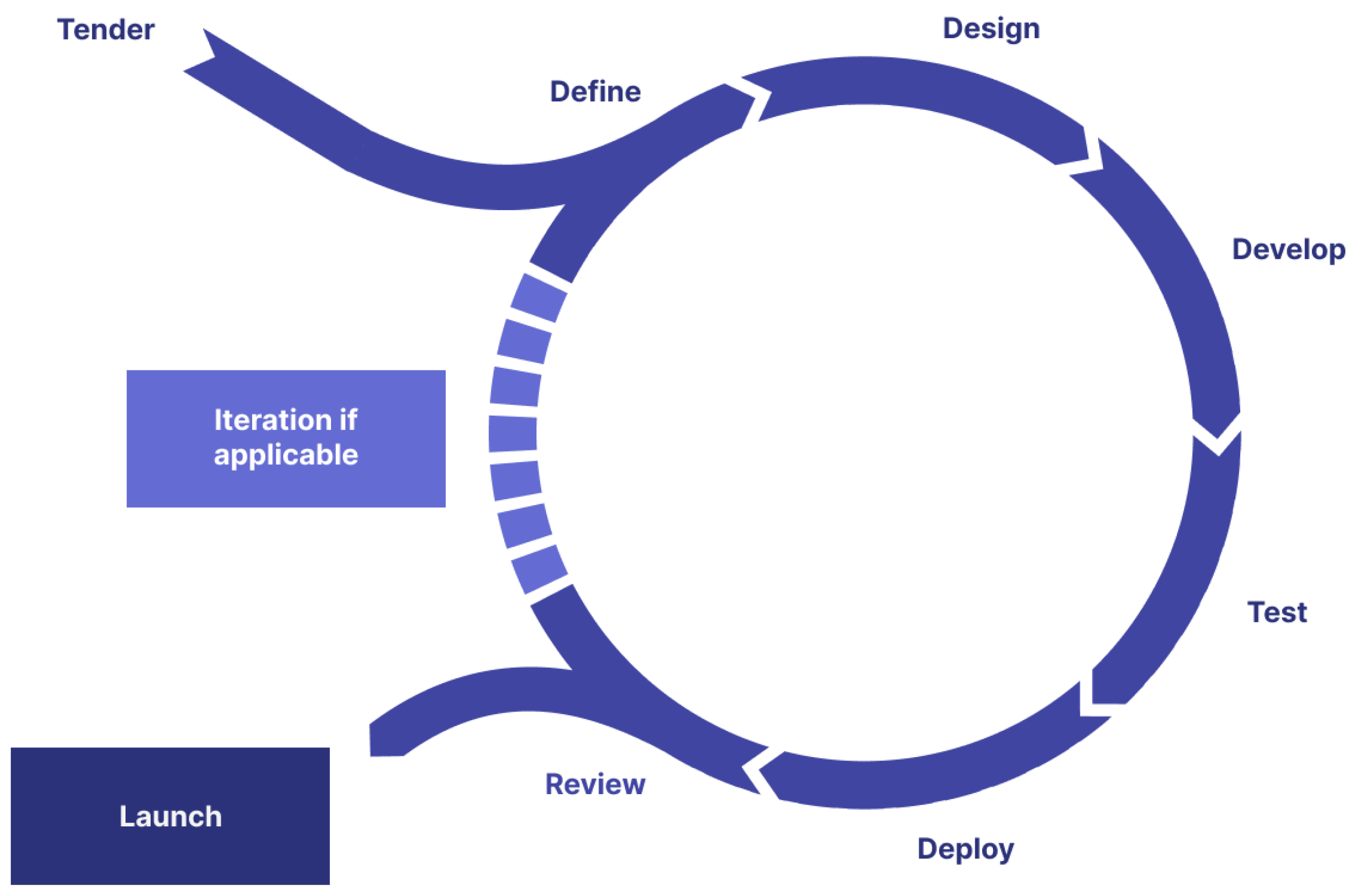

2.2. Agile Approach

- Conducting repeated HMI workshops with a larger group of controllers is impractical due to financial, temporal, and resource constraints. Neither the supplier nor the customers are able to support multiple, extensive workshops.

- Creating multiple HMI prototypes is prohibitively expensive. The presentation of HMI prototypes requires either face-to-face interactions between controllers and developers or the ability for controllers to independently operate prototype software. Given that the supplier’s clientele is globally dispersed, frequent travel for HMI feature development is unfeasible. Additionally, ATC security policies typically prevent controllers from installing software on their systems.

- ATC tenders often clash with agile methods because of their fixed formal specifications, pre-defined timelines, fixed prices, and missing customer commitment.

- There is insufficient continuous work to justify a full-time HMI designer position within the scope of a project. Moreover, a designer’s creativity may stagnate over time without fresh challenges. On the other hand, a good HMI designer must know the customer domain, and the ATC user domain requires very specific know-how.

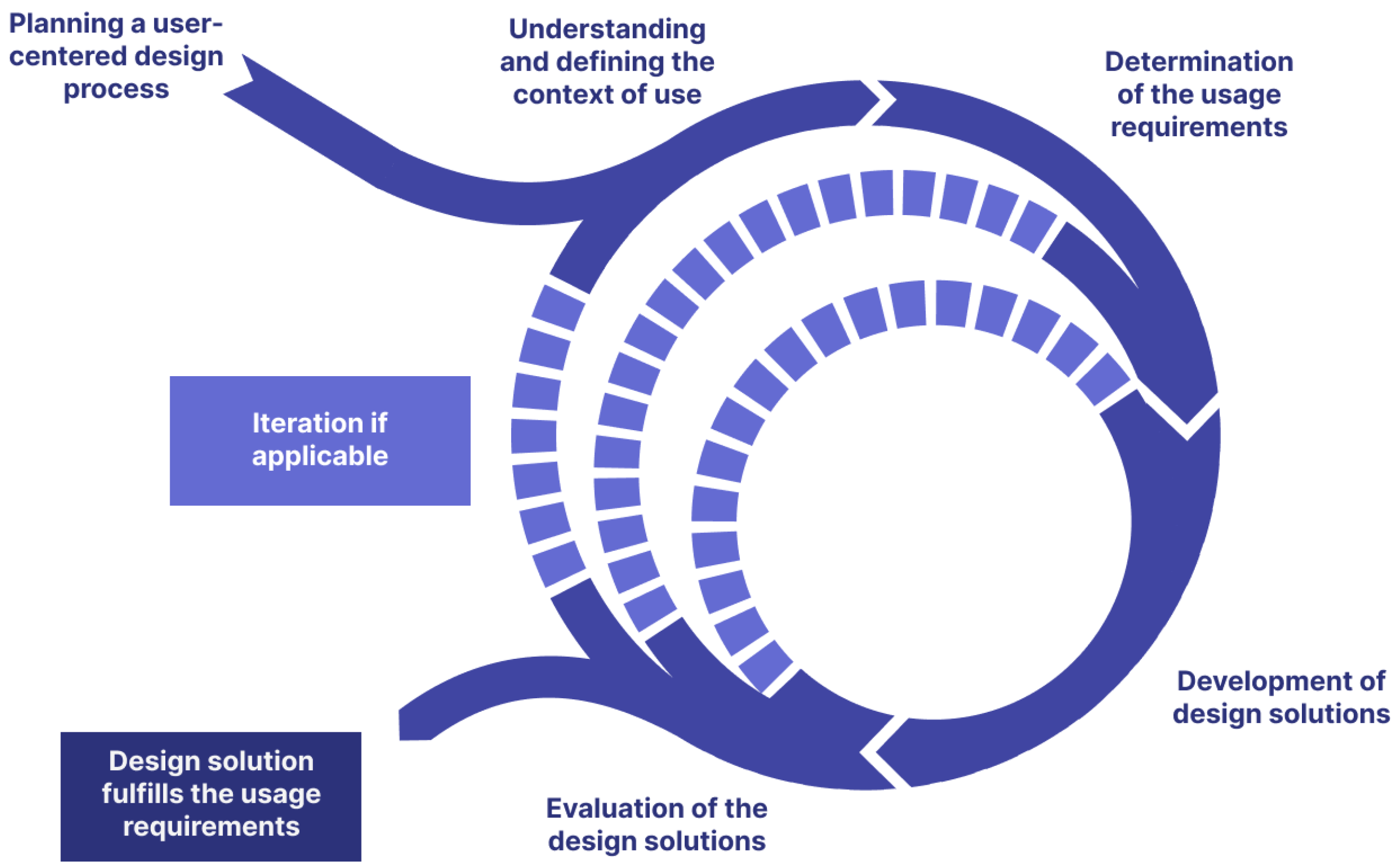

2.3. User-Centred Design

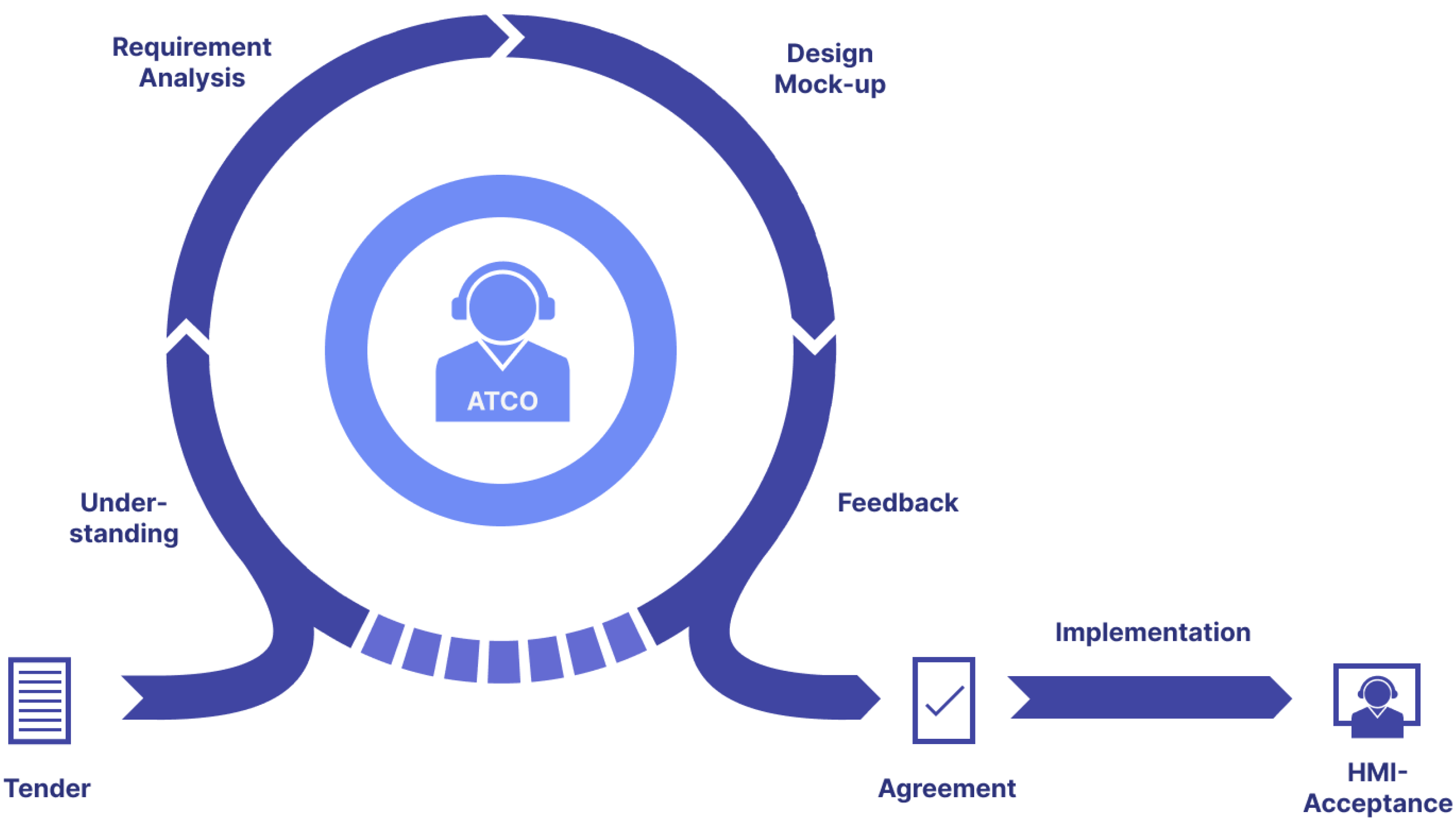

2.4. The New Workflow

3. Applying the New Workflow: A Case Study

3.1. Initial Phase and First Workshops

3.2. Main Workshop

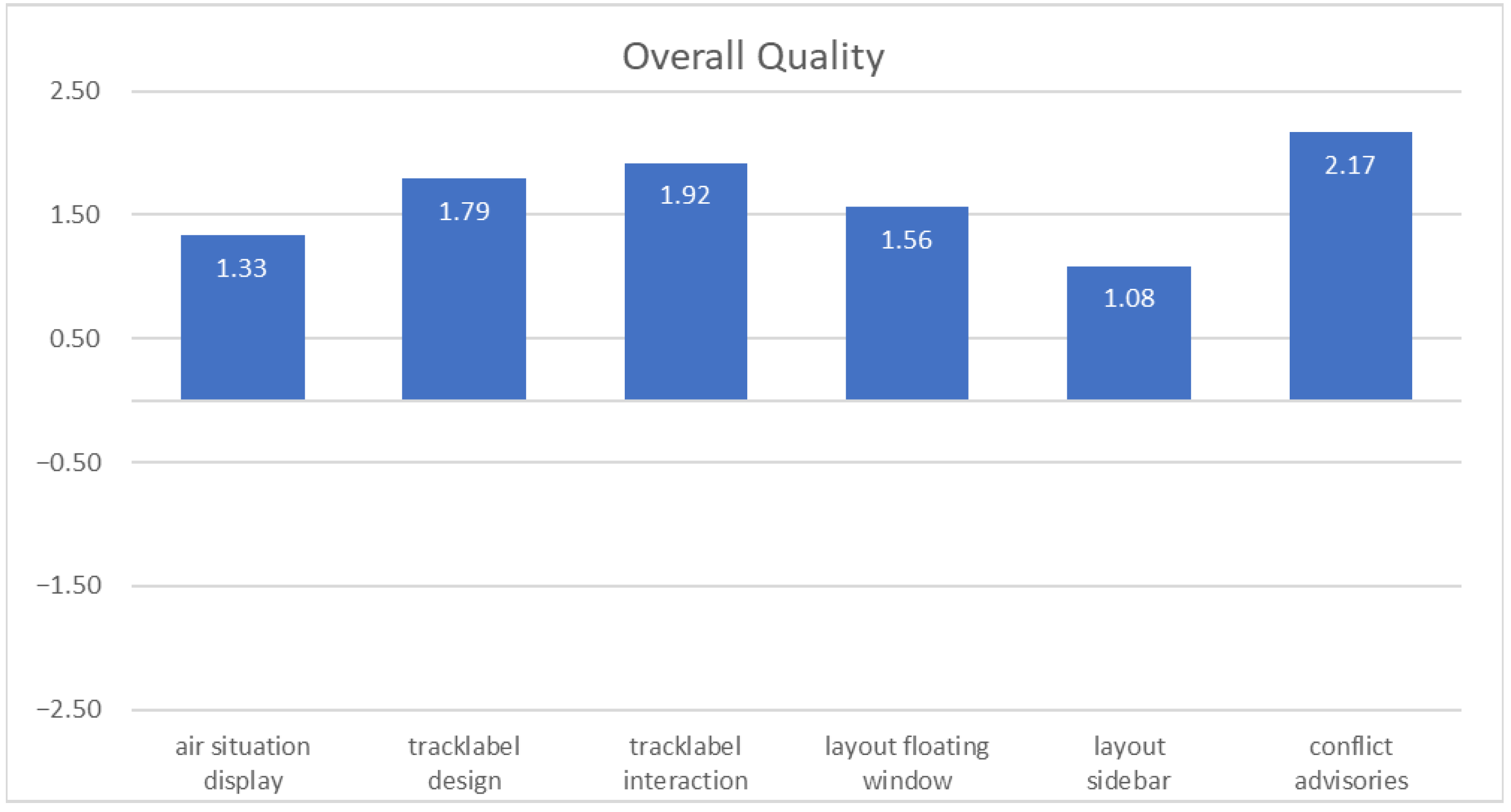

- Air situation display design

- Track label design

- Track label interactions

- Layout

- Advisories and conflicts

3.3. Follow-Up Workshop

- Inclusion of previous feedback

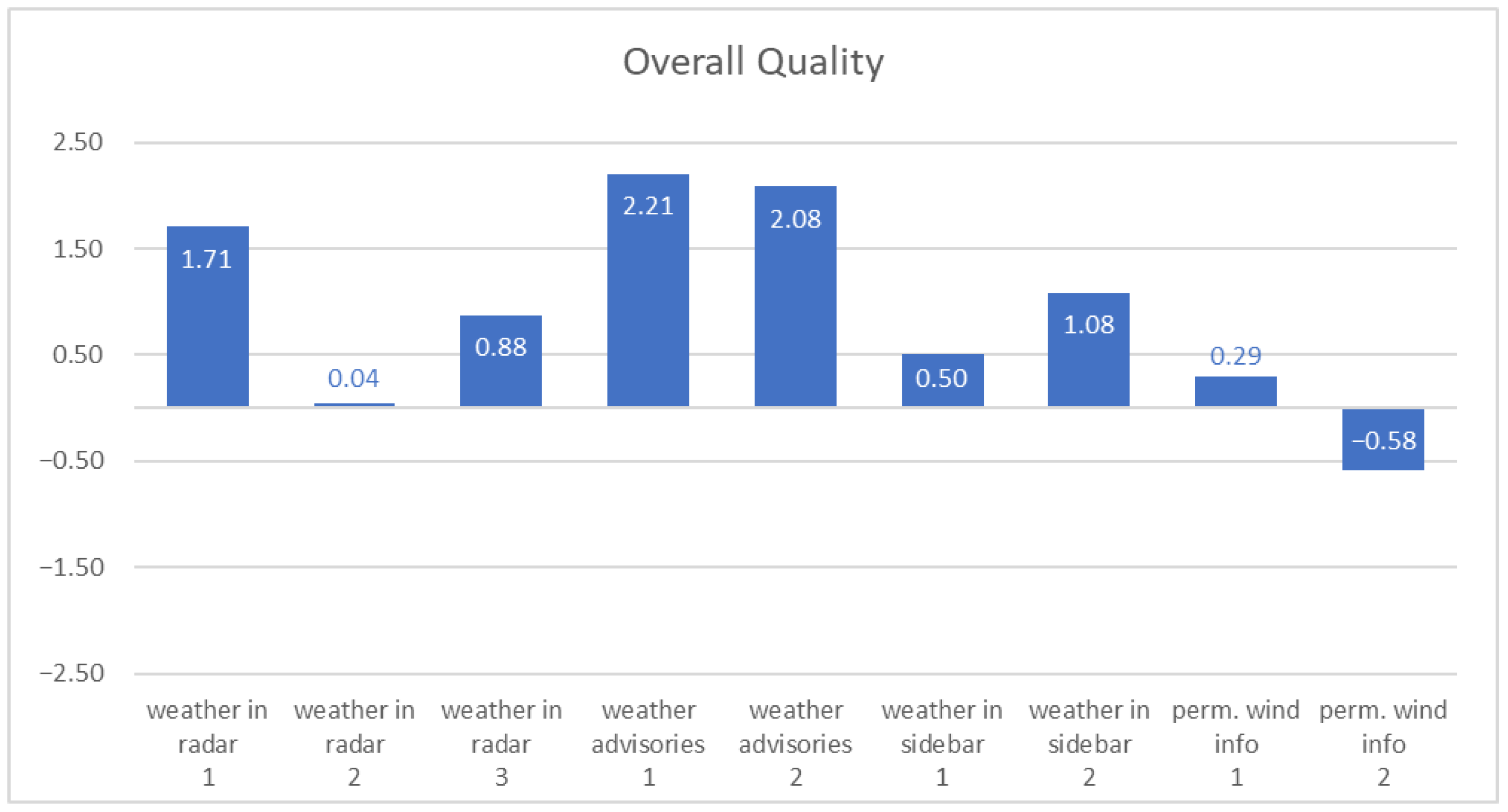

- Weather in radar screen (three variants)

- Weather advisories (two variants)

- Weather in sidebar (two variants)

- Permanent wind information (two variants)

3.4. Questionnaires

3.4.1. User Experience Questionnaire

3.4.2. Feature Questionnaire

3.4.3. Workflow Questionnaire

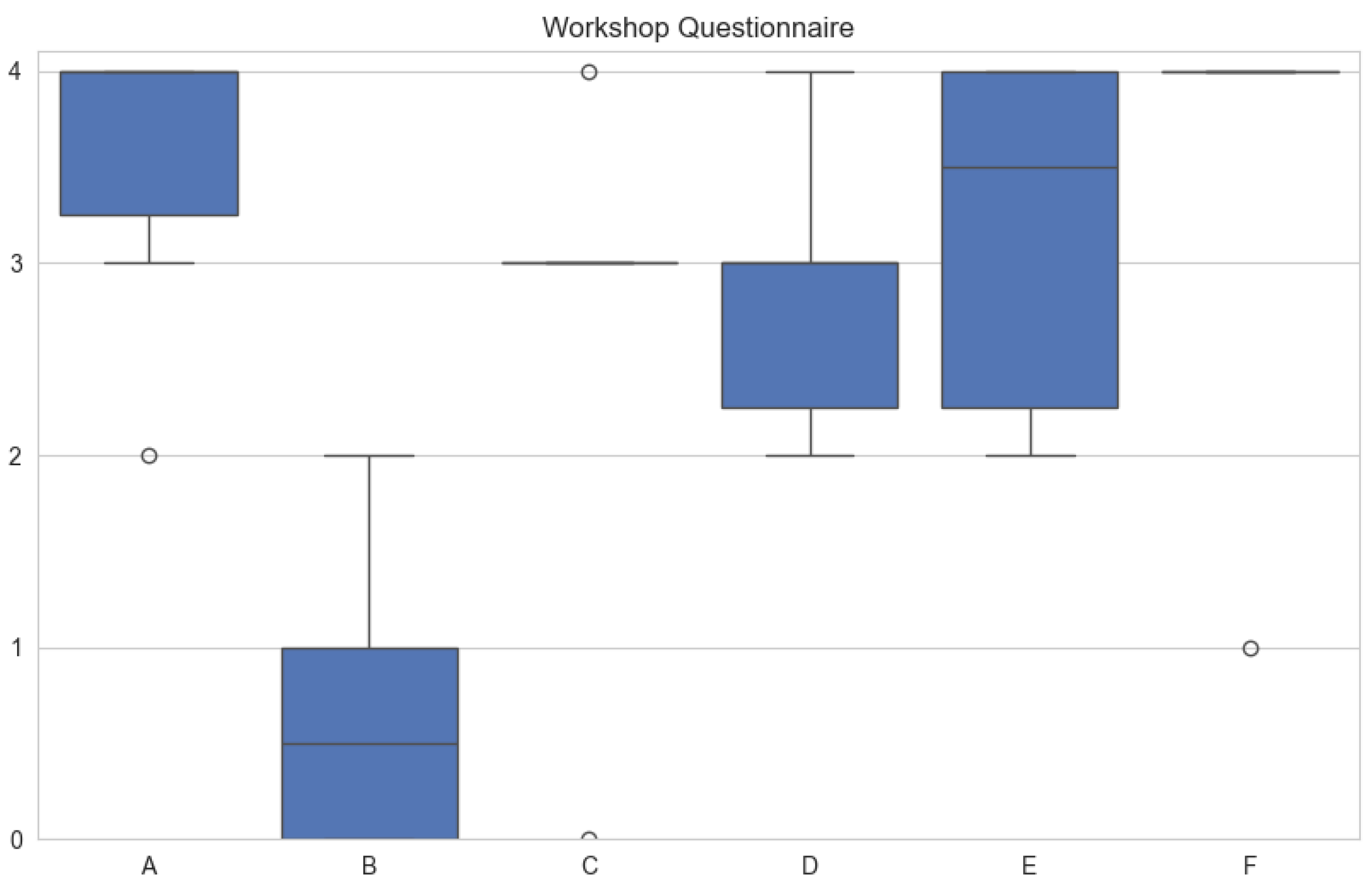

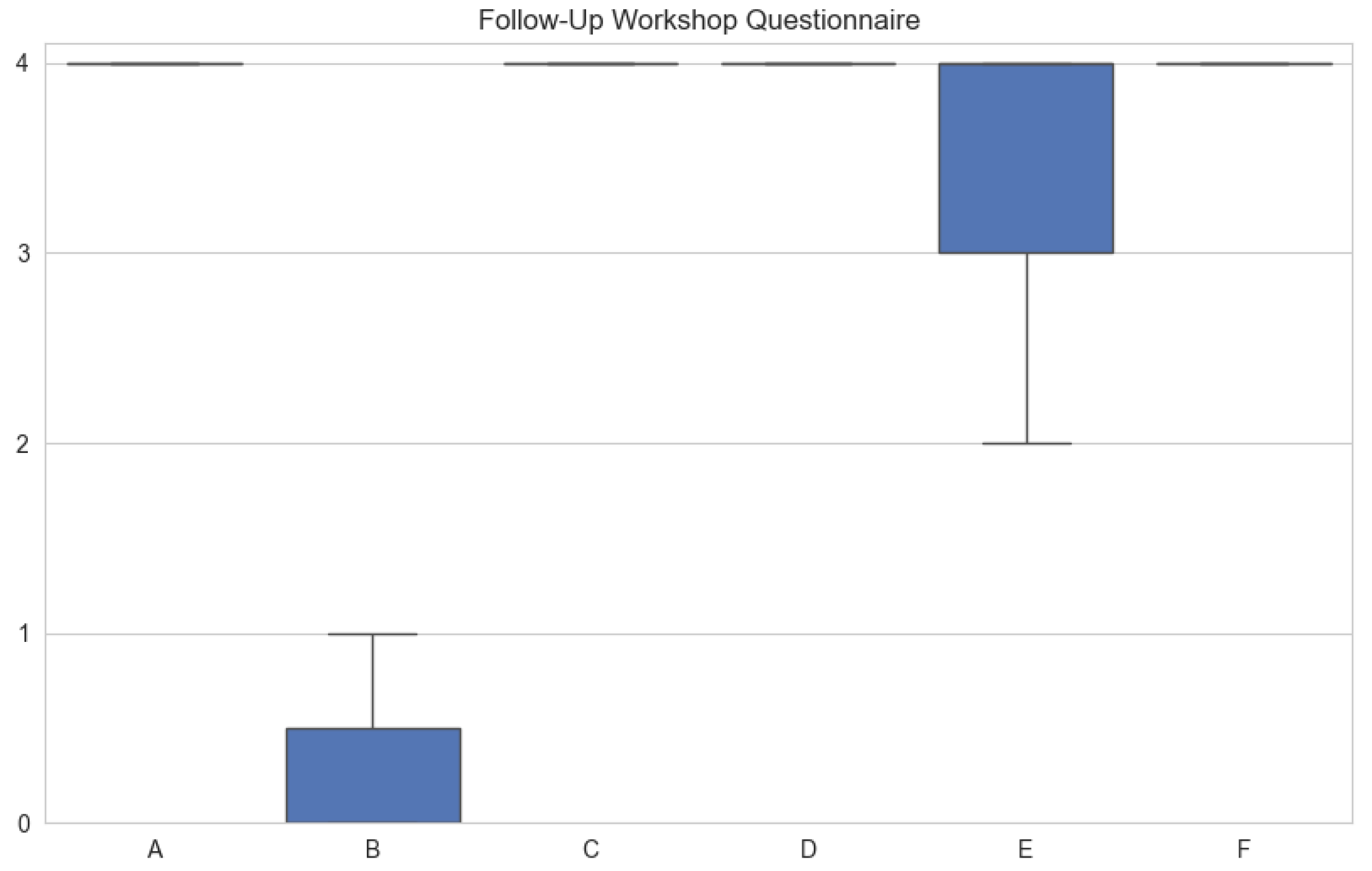

- (A) I can very well imagine being involved in the design process on a regular basis through the design evaluation process.

- (B) I find the evaluation process to be unnecessarily complex.

- (C) I can imagine most ATCOs understanding the evaluation process quickly.

- (D) I had enough information available to participate in the design evaluation process.

- (E) The mock-ups had enough functionality/contained enough information.

- (F) I think it is important that ATCOs are involved early in the design process.

3.4.4. Mock-Up Tool Questionnaire

- (A) I find the mock-ups easy to use.

- (B) I think I would need technical support to use the mock-ups.

- (C) I imagine that most people will learn to master the mock-ups quickly.

- (D) I find the mock-ups very cumbersome to use.

- (E) I felt very confident using the mock-ups.

- (F) I had to learn a lot of things before I could work with the mock-ups.
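Items (B), (D), and (F) above are worded negatively, so raw agreement ratings cannot be averaged directly with the positively worded items. The following is a minimal sketch of one common way to align such items before averaging. It is not taken from the article: the 5-point agreement scale, the polarity map, the function names, and the sample responses are all assumptions made for illustration.

```python
from statistics import mean

# Hypothetical polarity map for the six mock-up tool items (A-F).
# True = negatively worded; inferred from the item wording only.
NEGATIVE_ITEMS = {"A": False, "B": True, "C": False, "D": True, "E": False, "F": True}

# Assumed 5-point agreement scale; the scale is not stated in the source.
SCALE_MIN, SCALE_MAX = 1, 5


def align(item: str, rating: int) -> int:
    """Return the rating on a 'higher is better' scale, flipping negative items."""
    if not SCALE_MIN <= rating <= SCALE_MAX:
        raise ValueError(f"rating {rating} outside {SCALE_MIN}..{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - rating if NEGATIVE_ITEMS[item] else rating


def item_means(responses: list[dict[str, int]]) -> dict[str, float]:
    """Average the aligned ratings per item across all participants."""
    return {
        item: mean(align(item, r[item]) for r in responses)
        for item in NEGATIVE_ITEMS
    }


# Made-up responses from two fictional participants, for illustration only.
example = [
    {"A": 5, "B": 1, "C": 4, "D": 2, "E": 4, "F": 1},
    {"A": 4, "B": 2, "C": 4, "D": 1, "E": 5, "F": 2},
]
print(item_means(example))
```

After alignment, a higher mean reads as a more favourable rating for every item, which makes the positive and negative statements directly comparable.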

4. Validation Results

4.1. Main Workshop Results

4.1.1. User Experience Questionnaire (Short) Results

4.1.2. Workflow Evaluation Questionnaire

4.1.3. Mock-Up Tool Evaluation Results

4.1.4. Qualitative Results

4.2. Follow-Up Workshop Results

4.2.1. Follow-Up Workshop User Experience Questionnaire (Short) Results

4.2.2. Follow-Up Workshop Workflow Evaluation Questionnaire

4.2.3. Follow-Up Workshop Qualitative Results

5. Discussion

5.1. Main Workshop and Workflow

5.2. Remote Workshop

6. Summary and Outlook

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| ADS-B | Automatic Dependent Surveillance-Broadcast |
| AMAN | Arrival Manager |
| ANSP | Air Navigation Service Provider |
| A-SMGCS | Advanced Surface Movement Guidance and Control System |
| ATC | Air Traffic Control |
| ATCO | Air Traffic Controller |
| CPDLC | Controller Pilot Data Link Communication |
| CWP | Controller Working Position |
| DLR | Deutsches Zentrum für Luft- und Raumfahrt |
| DMAN | Departure Manager |
| HMI | Human Machine Interface |
| ISO | International Organization for Standardization |
| MTCD | Medium-term Conflict Detection |
| MUAC | Maastricht Upper Area Control |
| SME | Subject Matter Expert |
| STCA | Short-term Conflict Alert |
| TSAS | Terminal Sequencing and Spacing |
| UEQ(S) | User Experience Questionnaire (Short) |
| UX | User Experience |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nöhren, L.; Tyburzy, L.; Temme, M.-M.; Muth, K.; Hofmann, T.; Heßler, D.; Tenberg, F.; Viet, E.; Wimmer, M. New Approaches for the Use of Extended Mock-Ups for the Development of Air Traffic Controller Working Positions. Aerospace 2025, 12, 114. https://doi.org/10.3390/aerospace12020114
