Situation Awareness Discrimination Based on Physiological Features for High-Stress Flight Tasks

Abstract

1. Introduction

2. Methodology

2.1. Flight Simulation Experiment

2.1.1. Apparatus

2.1.2. Experimental Design and Task

2.1.3. Participants

2.1.4. Procedure

2.2. Data Acquisition and Preprocessing

2.2.1. SA Measurement and Labeling

2.2.2. Physiological Measurement and Feature Extraction

2.3. Deep Learning Models and Methods

2.3.1. Model Architecture

2.3.2. Model Processing and Evaluation

3. Results

3.1. General Results

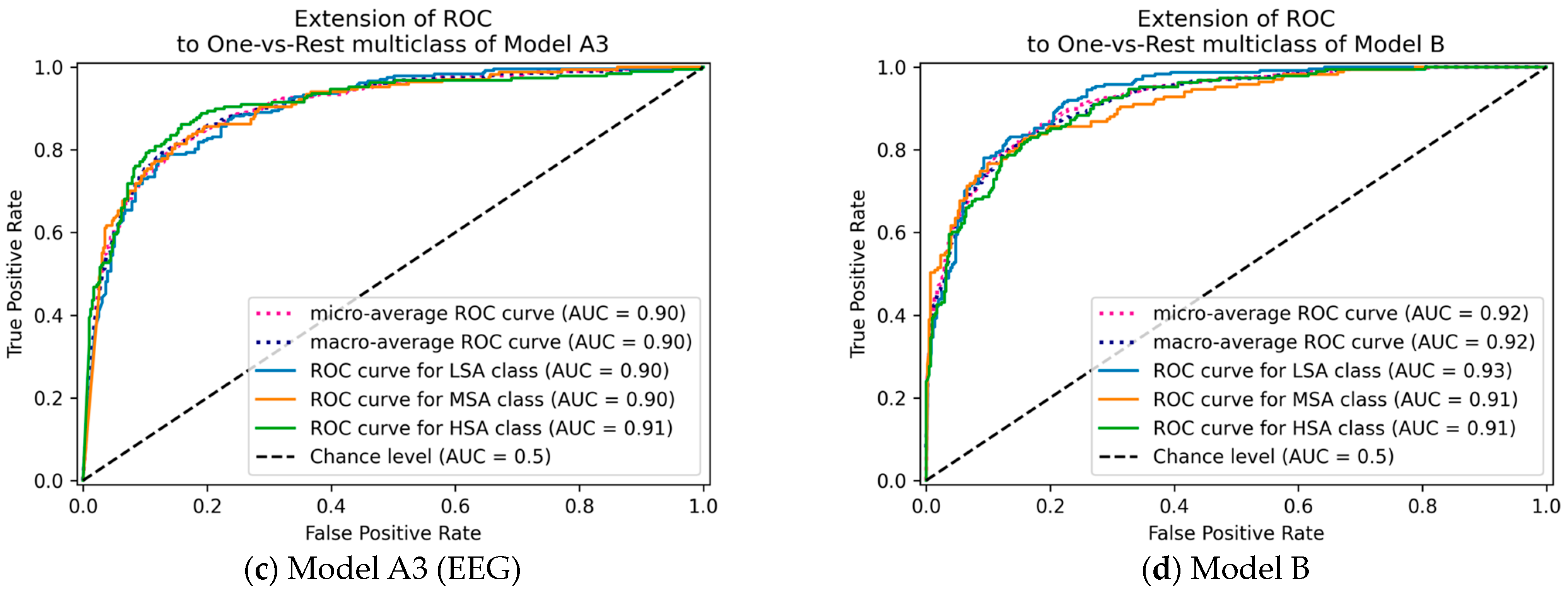

3.2. Unimodal Models

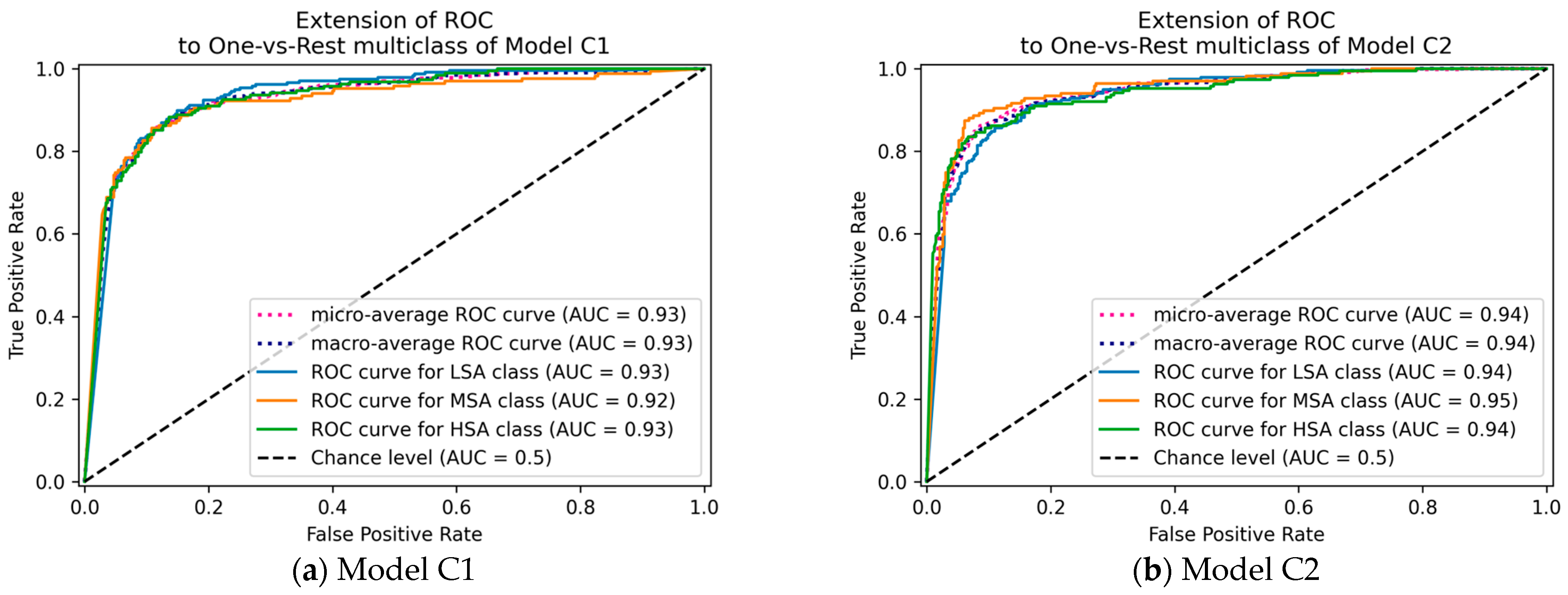

3.3. Multimodal Models

4. Discussion

5. Conclusions

- (1) The EEG modality and SW/FW features demonstrate promising potential in SA discrimination, as evidenced by the unimodal model comparison, where the EEG modality model outperformed the ET and HRV modalities.

- (2) The attention mechanism improves the SA discrimination capability of the EEG features compared to the MLP structure by efficiently incorporating relevant information from channel locations.

- (3) Decision-level fusion integrates unique information from multimodal features and effectively increases the accuracy of the SA model, achieving a best accuracy of 83.41% in triple-class SA discrimination.
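To make the idea of decision-level fusion in conclusion (3) concrete, the following is a minimal sketch, not the authors' implementation. It assumes each unimodal model (ET, HRV, EEG) outputs class probabilities and that these are combined by a weighted average (soft voting); the fusion rule actually used in the paper is not given in this excerpt. The function names, the example probabilities, and the reading of LSA/MSA/HSA as low/medium/high SA are all assumptions made for illustration.

```python
from typing import List, Optional, Sequence

# Class names as they appear in the results table (assumed: low/medium/high SA).
LABELS = ["LSA", "MSA", "HSA"]


def fuse_decisions(model_probs: Sequence[Sequence[float]],
                   weights: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted average of per-model class probabilities (soft voting)."""
    if not model_probs:
        raise ValueError("need at least one model output")
    if weights is None:
        weights = [1.0] * len(model_probs)  # equal trust in every modality
    if len(weights) != len(model_probs):
        raise ValueError("one weight per model is required")
    total = sum(weights)
    n_classes = len(model_probs[0])
    fused = [0.0] * n_classes
    for probs, w in zip(model_probs, weights):
        if len(probs) != n_classes:
            raise ValueError("all models must score the same classes")
        for k, p in enumerate(probs):
            fused[k] += w * p / total
    return fused


def predict_label(fused: Sequence[float]) -> str:
    """Return the label with the highest fused probability."""
    best = max(range(len(fused)), key=lambda k: fused[k])
    return LABELS[best]


# Hypothetical softmax outputs of three unimodal models for one sample.
et_probs = [0.50, 0.30, 0.20]
hrv_probs = [0.40, 0.35, 0.25]
eeg_probs = [0.10, 0.20, 0.70]

fused = fuse_decisions([et_probs, hrv_probs, eeg_probs])
print(predict_label(fused), [round(p, 3) for p in fused])
```

In this made-up sample the ET and HRV models lean towards LSA, yet the confident EEG output shifts the fused decision. Non-uniform `weights` would be one way to favor the stronger modality.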

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Eye Movement | ET Features | Unit | Description |
|---|---|---|---|
| Fixation | Average duration of fixations | [ms] | The average duration of the fixations in the interval. |
| Fixation | Fixation frequency | [N/min] | Number of fixations per minute. |
| Saccade | Average peak velocity of saccades | [deg/s] | The average peak velocity of all saccades in the interval. |
| Saccade | Average amplitude of saccades | [deg] | The average amplitude of all saccades in the interval. |
| Saccade | Saccade frequency | [N/min] | Number of saccades per minute. |
| Blink | Blink frequency | [N/min] | Number of blinks per minute. |

| Domain | HRV Features | Unit | Description |
|---|---|---|---|
| Time domain | HR | [N/min] | Number of heartbeats per minute. |
| Time domain | SDNN | [ms] | The standard deviation of the RR intervals. |
| Time domain | RMSSD | [ms] | The square root of the mean of the squared successive differences between adjacent RR intervals. |
| Frequency domain | HFn | No unit | The normalized spectral power of high frequencies (0.15 to 0.4 Hz). |
| Non-linear domain | SD1/SD2 | No unit | Ratio of SD1 (standard deviation perpendicular to the line of identity) to SD2 (standard deviation along the line of identity). Describes the ratio of short-term to long-term variations in HRV. |
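The time-domain and non-linear HRV features in the table follow directly from a series of RR intervals. The sketch below shows one way to compute them using only the Python standard library. It is an illustration under stated assumptions rather than the paper's pipeline: the function name and the sample RR series are hypothetical, the sample standard deviation is assumed, and SD1 and SD2 are obtained from the common closed-form identities SD1² = ½·SDSD² and SD2² = 2·SDNN² − ½·SDSD², where SDSD is the standard deviation of successive differences. HFn is omitted because it requires spectral estimation.

```python
import math
from statistics import mean, stdev
from typing import Dict, Sequence


def hrv_features(rr_ms: Sequence[float]) -> Dict[str, float]:
    """Compute HR, SDNN, RMSSD and SD1/SD2 from RR intervals in milliseconds."""
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    # Successive differences between adjacent RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]

    hr = 60000.0 / mean(rr_ms)                       # heartbeats per minute
    sdnn = stdev(rr_ms)                              # sample SD of RR intervals
    rmssd = math.sqrt(mean([d * d for d in diffs]))  # root mean square of diffs

    # Poincare descriptors from the closed-form identities (an assumption here).
    sdsd = stdev(diffs)
    sd1 = math.sqrt(0.5) * sdsd
    sd2 = math.sqrt(max(2.0 * sdnn ** 2 - 0.5 * sdsd ** 2, 0.0))
    ratio = sd1 / sd2 if sd2 > 0 else float("nan")

    return {"HR": hr, "SDNN": sdnn, "RMSSD": rmssd, "SD1/SD2": ratio}


# Hypothetical RR series (ms), for illustration only.
rr = [800, 820, 840, 830, 810, 790]
feats = hrv_features(rr)
print({name: round(value, 3) for name, value in feats.items()})
```

On very short or strongly alternating series the SD2 term can reach zero, which is why the ratio falls back to NaN instead of dividing by zero.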

| Models | Input Features | Feature Size |
|---|---|---|
| Model A1 | ET features | 6 |
| Model A2 | HRV features | 5 |
| Model A3/Model B | EEG features | 18 |
| Model C1/Model C2 | ET, HRV, and EEG features | 29 |

| Model | Accuracy | Precision | Recall | F1 Score | AUC (LSA) | AUC (MSA) | AUC (HSA) |
|---|---|---|---|---|---|---|---|
| Model A1 | 0.6062 ± 0.0079 | 0.6194 ± 0.0190 | 0.6062 ± 0.0079 | 0.6081 ± 0.0092 | 0.78 ± 0.02 | 0.75 ± 0.01 | 0.76 ± 0.02 |
| Model A2 | 0.5747 ± 0.0114 | 0.5907 ± 0.0247 | 0.5747 ± 0.0114 | 0.5778 ± 0.0106 | 0.75 ± 0.01 | 0.74 ± 0.01 | 0.75 ± 0.01 |
| Model A3 | 0.7659 ± 0.0100 | 0.7729 ± 0.0079 | 0.7659 ± 0.0100 | 0.7661 ± 0.0099 | 0.90 ± 0.01 | 0.90 ± 0.01 | 0.91 ± 0.01 |
| Model B | 0.7710 ± 0.0058 | 0.7727 ± 0.0060 | 0.7710 ± 0.0058 | 0.7712 ± 0.0058 | 0.93 ± 0.01 | 0.91 ± 0.01 | 0.91 ± 0.01 |
| Model C1 | 0.8145 ± 0.0101 | 0.8155 ± 0.0101 | 0.8145 ± 0.0101 | 0.8146 ± 0.0101 | 0.93 ± 0.01 | 0.92 ± 0.01 | 0.93 ± 0.01 |
| Model C2 | 0.8341 ± 0.0059 | 0.8347 ± 0.0059 | 0.8341 ± 0.0059 | 0.8341 ± 0.0059 | 0.94 ± 0.01 | 0.95 ± 0.01 | 0.94 ± 0.01 |
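In the table, the Accuracy and Recall columns are identical for every model. That pattern is what support-weighted averaging of per-class recall produces, although this excerpt does not state which averaging scheme was used. The sketch below computes accuracy and weighted precision, recall, and F1 from a three-class confusion matrix; the function name and the matrix values are hypothetical and are not the paper's data.

```python
from typing import Dict, List


def weighted_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall and F1.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total

    precision = recall = f1 = 0.0
    for i in range(n):
        support = sum(cm[i])                         # samples truly in class i
        predicted = sum(cm[r][i] for r in range(n))  # samples predicted as i
        p_i = cm[i][i] / predicted if predicted else 0.0
        r_i = cm[i][i] / support if support else 0.0
        f_i = 2 * p_i * r_i / (p_i + r_i) if (p_i + r_i) else 0.0
        weight = support / total                     # class share of the data
        precision += weight * p_i
        recall += weight * r_i
        f1 += weight * f_i

    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical confusion matrix; rows and columns ordered LSA, MSA, HSA.
cm = [
    [80, 15, 5],
    [10, 75, 15],
    [5, 10, 85],
]
metrics = weighted_metrics(cm)
print({name: round(value, 4) for name, value in metrics.items()})
```

Because each class's recall is weighted by its share of the samples, the weighted recall reduces to the number of correct predictions divided by the total, which is the accuracy.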


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qian, C.; Liu, S.; Wanyan, X.; Feng, C.; Li, Z.; Sun, W.; Wang, Y. Situation Awareness Discrimination Based on Physiological Features for High-Stress Flight Tasks. Aerospace 2024, 11, 897. https://doi.org/10.3390/aerospace11110897