1. Introduction

In the 21st century, with the development of information technology, UAVs have become a popular industry in this new round of global technological and industrial revolution. With the extensive use of UAVs, the importance of UAV safety has been paid increasing attention. A UAV is a complex system with multidisciplinary integration, high integration, high intelligence, and low-redundancy design [

1]. Therefore, improving the safety of UAVs is an important goal in the industry. Due to the presence of a large number of factors, such as the electrical system, engine, and flight control, UAV faults are difficult to detect in business scenarios [

2,

3]. In UAV Flight Data Sets, most of the data are normal data, and the unbalanced data cause difficulties in detecting faults [

4]. The traditional fault detection method is to monitor a certain factor; when the safety range is exceeded, a fault occurs. However, UAVs are complex systems, and a fault is caused by multiple factors [

5]. At present, self-supervised fault detection based on Auto-Encoders has become the main research direction [

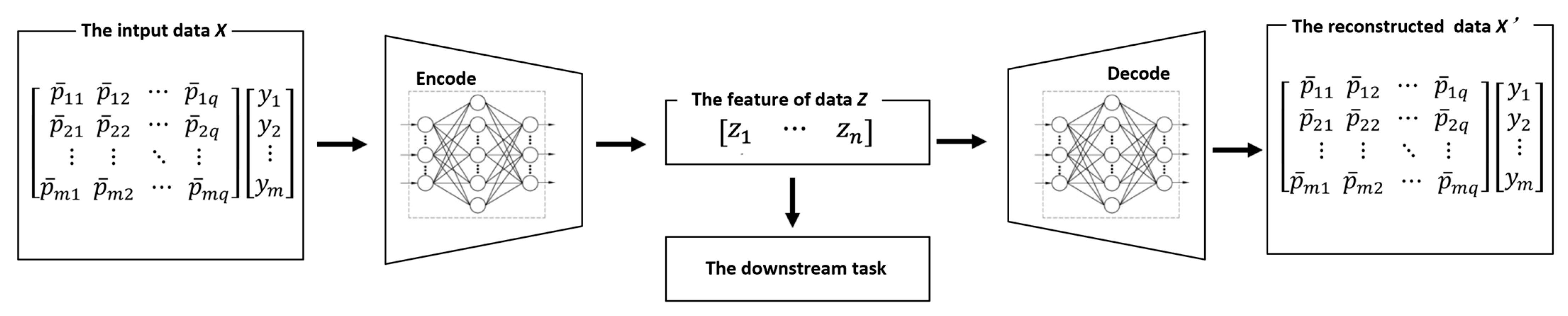

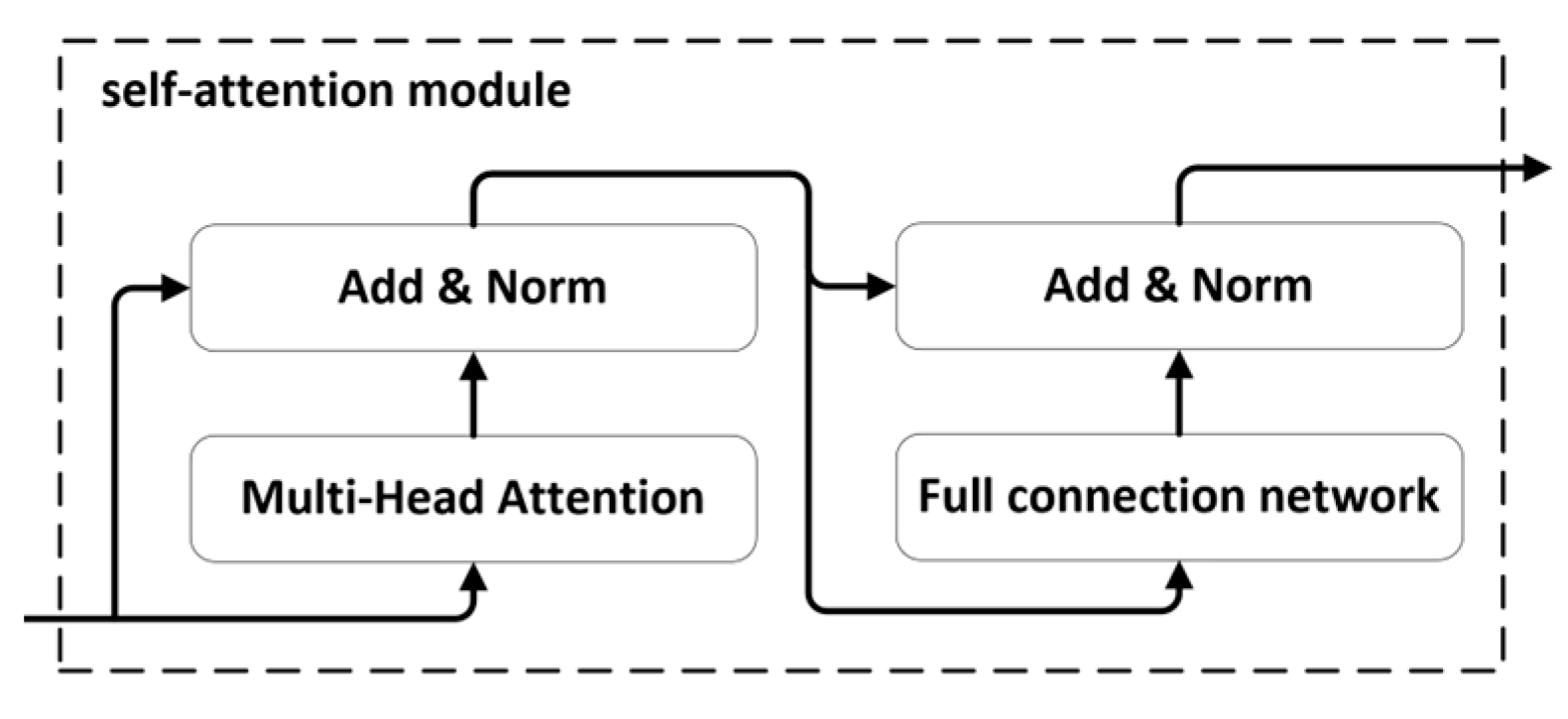

6]. Representation learning is used to extract features. With the help of an Auto-Encoder, features from the flight data are extracted to build a Self-supervised Fault Detection Model for UAVs.

The multiple features in the data contained in the UAV Flight Data Sets included navigation control, the electrical system, the engine, steering gear, flight control, flight dynamics, and the responder [

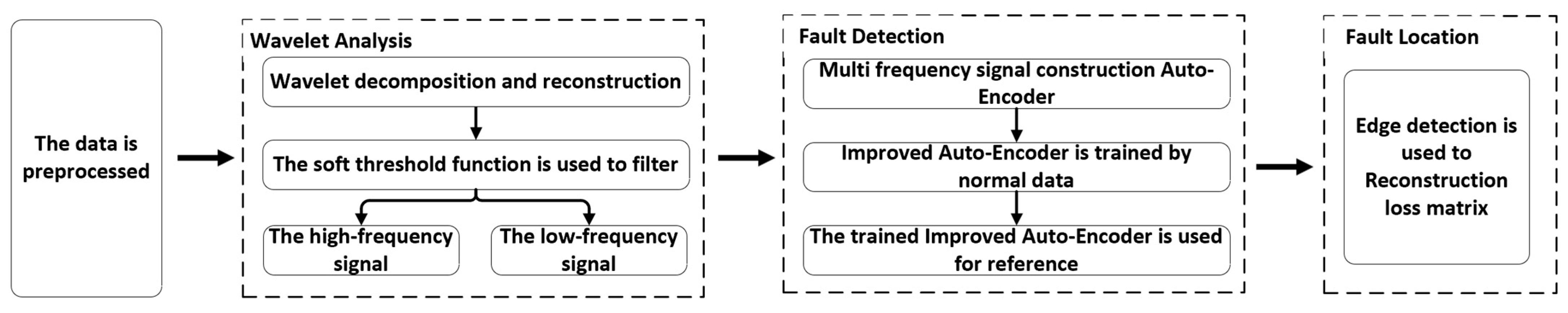

1]. An Auto-Encoder was used to extract the features and reduce the dimensions of the data. In the Auto-Encoder, a high-frequency signal in the data will affect the feature extraction of the model. We used wavelet analysis to extract the small-amplitude high-frequency signal in the data, which is similar to a random Gaussian signal. There was no need to pay attention to the random Gaussian signals with fewer features, and the Auto-Encoder was used for the feature extraction and reconstruction of the low-frequency signals with different frequencies. The normal data were used for training. The reconstruction loss of the low-frequency signals with different frequencies was weighted and averaged. The reconstruction loss was used as the criterion for fault detection. This method overcomes the problem of unbalanced data and low signal-to-noise ratio in data sets. To improve the effectiveness of the fault localization, an edge detection operator was used to calculate the reconstruction loss of the features in the UAV Flight Data Sets. The features with large reconstruction losses were considered fault features. The proposed method was verified in the UAV Flight Data Sets, and the results suggest the proposed prediction model has better performance. Compared with other studies, this study provides the following innovations:

Aiming at the problem of UAV fault detection, we developed a new Self-supervised Fault Detection Model for UAVs based on an Auto-Encoder and wavelet analysis;

As an efficient representation learning model, the Auto-Encoder overcomes the problem of insufficient fault data in the data sets and reduces the dimensions of the data. Wavelet analysis was used to process the data, which overcomes the problem of low signal-to-noise ratio in data sets;

The loss of the Auto-Encoder and the edge detection operator were used to locate fault factors for further fault detection. Faults caused by multiple factors were detected.

The rest of this paper is organized as follows. In Section 2, related works are introduced, including the data feature extraction and model improvement. Section 3 shows our overall framework and proposed method. In Section 4, the effectiveness of the proposed Self-supervised Fault Detection Model for UAVs is evaluated using the UAV Flight Data Sets, and the proposed model is compared with other models in the literature. In Section 5, the study is concluded.

4. Experiment and Discussion

To verify the efficiency of the developed Self-supervised Fault Detection Model, we conducted experiments on the UAV Flight Data Sets. The experiments were implemented in Python on a personal computer with an Intel(R) Core(TM) i7-7500U CPU, an NVIDIA GeForce 940MX GPU, 16 GB of memory, and a 64-bit Windows 11 system.

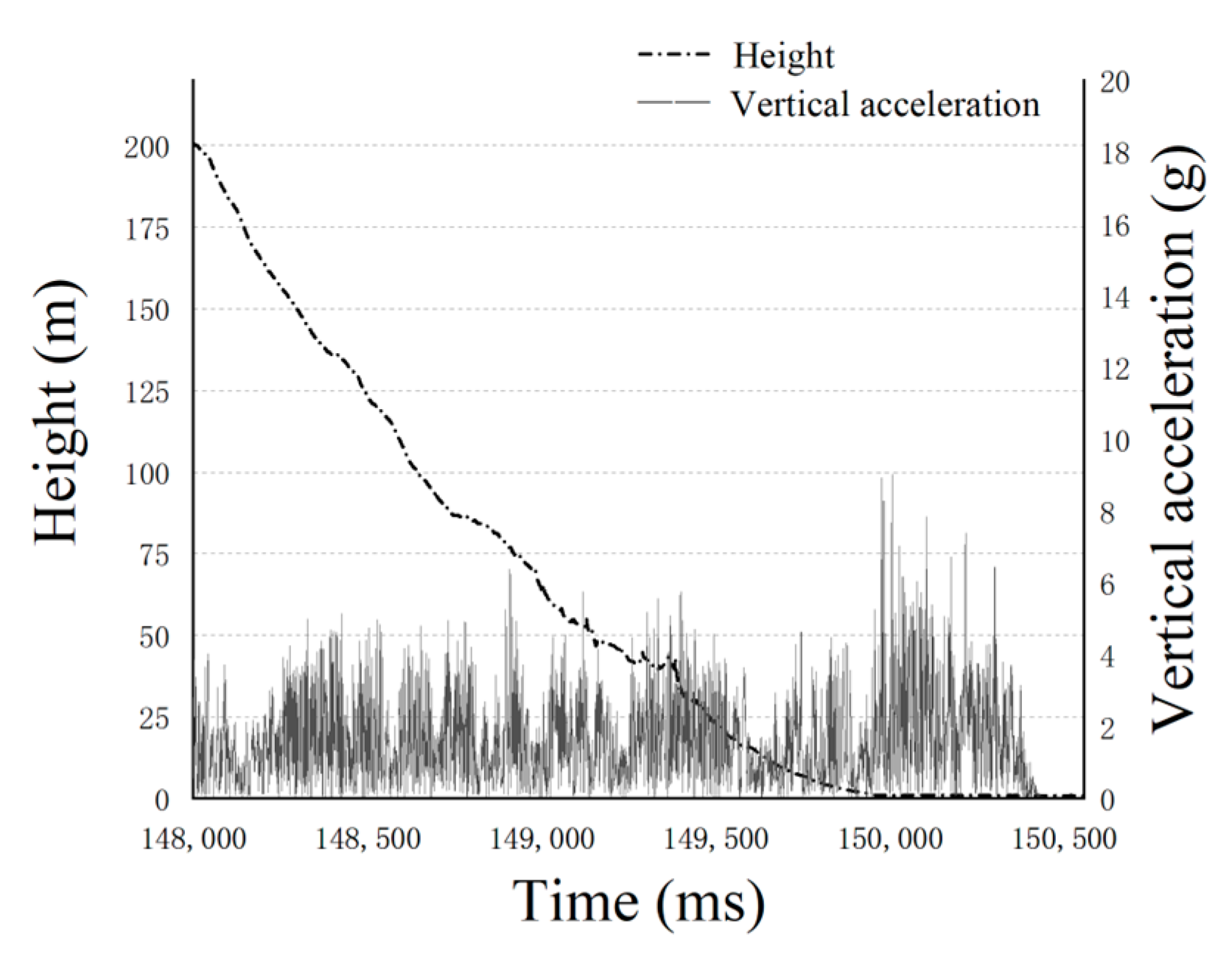

A hard landing is considered a major fault in the process of UAV landing. A hard landing means that the vertical acceleration of the UAV exceeds a threshold value when the UAV lands. A hard landing can cause equipment damage and make the UAV unusable. The vertical acceleration of the UAV during landing is obtained from the flight data. A hard landing is defined by a binary indicator: the indicator is 1, meaning that a hard landing occurs, when the average vertical acceleration of the UAV during landing exceeds the hard-landing threshold. In practice, the threshold is specified relative to the acceleration of gravity. For example, the vertical acceleration of a hard landing is shown in Figure 8.
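The labeling rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `is_hard_landing`, the sample values, and the threshold of 1.5 times the acceleration of gravity are all hypothetical, since the threshold used in the study is not restated here.

```python
from statistics import mean

G = 9.80665  # standard acceleration of gravity in m/s^2


def is_hard_landing(vertical_acc, threshold_g):
    """Return 1 if the mean vertical acceleration during landing exceeds
    the threshold, else 0.

    vertical_acc: vertical acceleration samples (m/s^2) in the landing phase.
    threshold_g: hypothetical threshold, expressed as a multiple of g.
    """
    return 1 if mean(vertical_acc) > threshold_g * G else 0


# Illustrative values only; 1.5 g is NOT the threshold used in the paper.
soft = [9.9, 10.4, 11.0, 10.2]
hard = [14.0, 17.5, 16.2, 15.1]
labels = [is_hard_landing(soft, 1.5), is_hard_landing(hard, 1.5)]
```

Averaging over the landing phase, as the definition does, keeps a single noisy sample from triggering the label.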

In this study, the flight data of 28 sorties of a certain UAV were collected. Each sortie record contains multiple landings of the UAV. We selected the data recorded before landing for fault detection. A total of 127 representative factors were selected, and 8838 data samples were obtained. Among them, 432 samples were faults and the other 8406 were normal, so the ratio of fault data to all data was 4.88%. There was a total of 228,600 fault data and 893,826 normal data. The flight data were preprocessed as per Equation (1) and split along the time dimension: in the experiment, the original flight data of each sortie were divided into data intervals of a fixed length.
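Splitting a sortie along the time dimension can be illustrated as follows. This is a sketch under stated assumptions: the intervals are taken to be consecutive and non-overlapping, a trailing remainder is dropped, and both the helper name `split_into_intervals` and the interval length of 4 samples are hypothetical.

```python
def split_into_intervals(samples, interval_len):
    """Split a time-ordered list of samples into consecutive,
    non-overlapping intervals of interval_len samples each.

    A trailing remainder shorter than interval_len is dropped.
    """
    if interval_len <= 0:
        raise ValueError("interval_len must be positive")
    n_full = len(samples) // interval_len
    return [samples[i * interval_len:(i + 1) * interval_len]
            for i in range(n_full)]


# Toy sortie of 10 time steps; the real interval length is not assumed here.
sortie = list(range(10))
intervals = split_into_intervals(sortie, 4)
```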

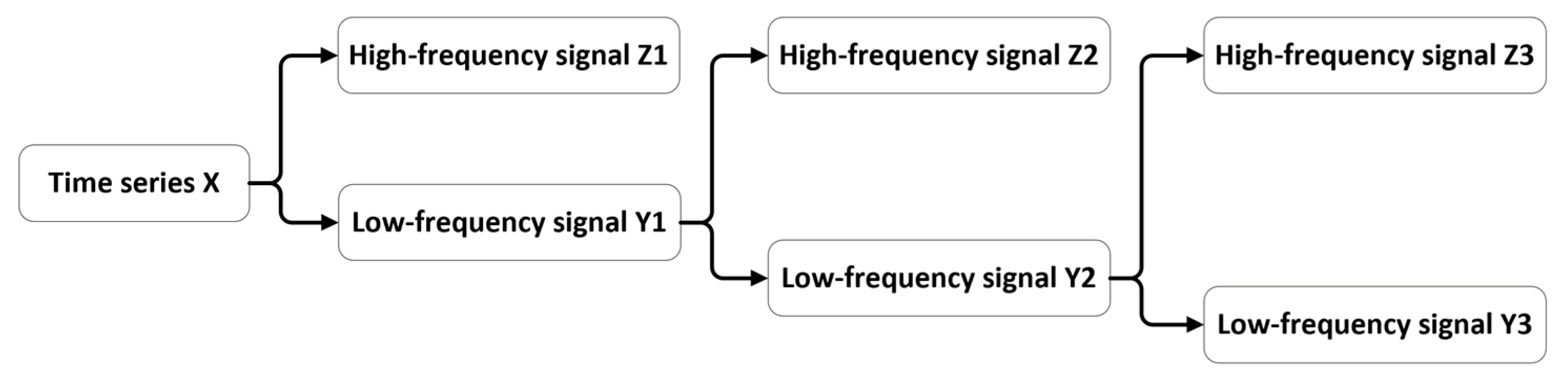

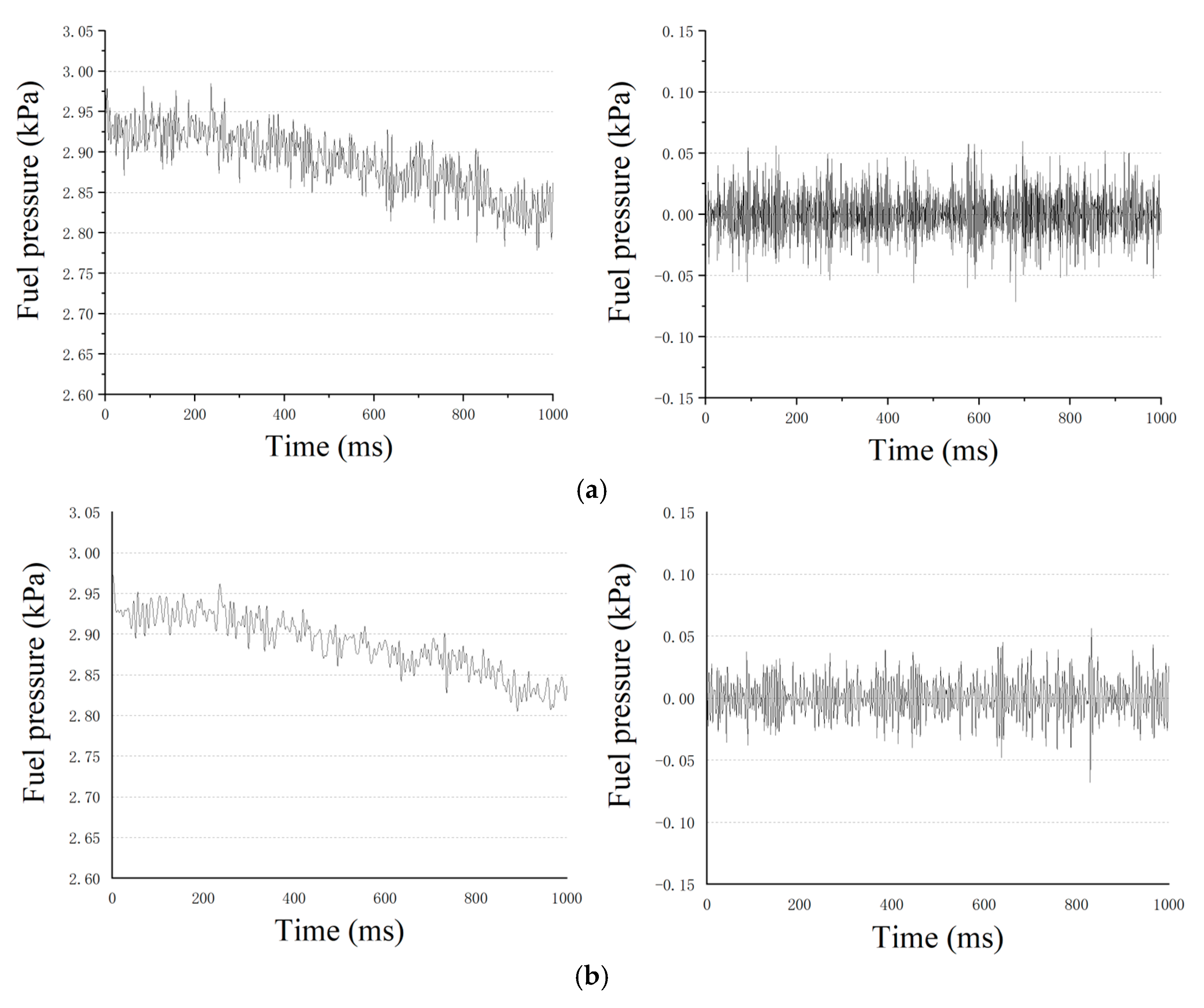

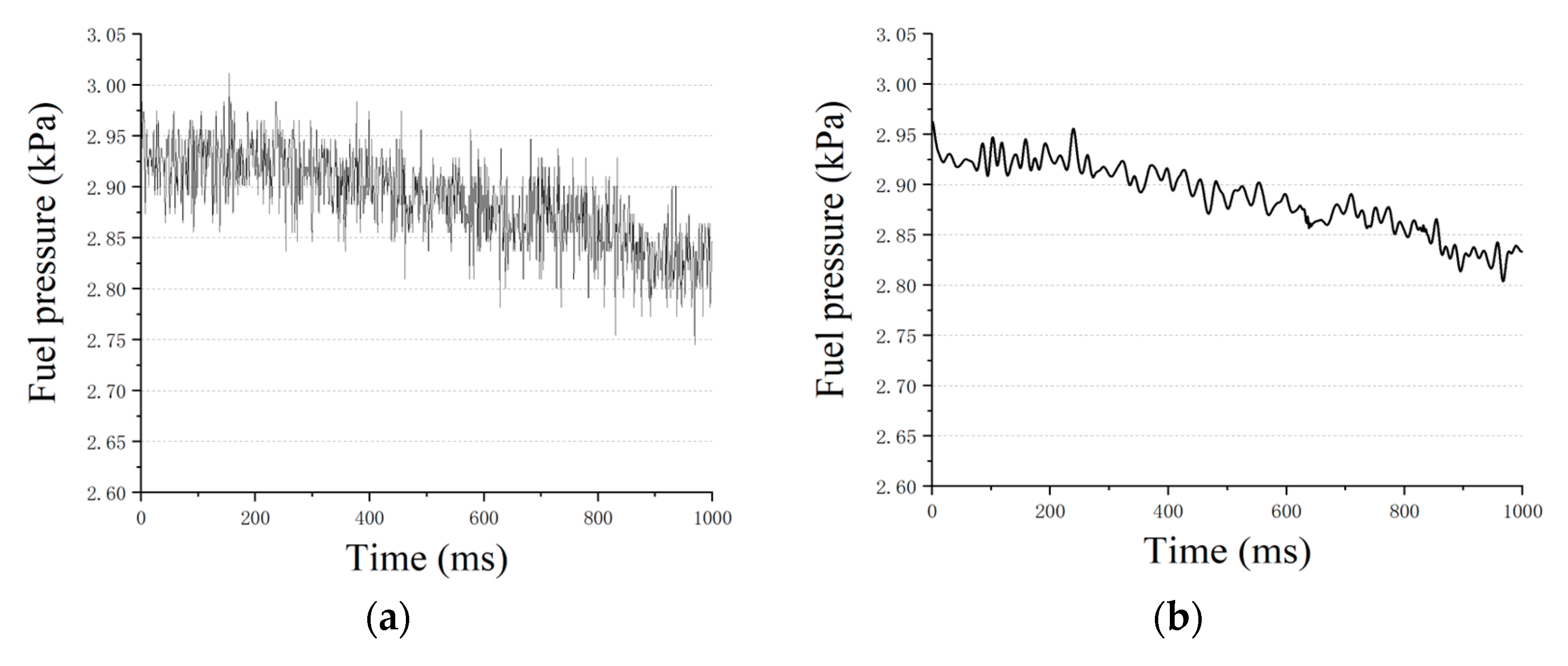

Some of the fuel pressure data were selected as an example. Wavelet decomposition and reconstruction divide the signal into two parts: a low-frequency signal and a high-frequency signal. The process of the signal decomposition is shown in Figure 9, in which the results of the first-, second-, and third-level decompositions are shown in Figure 9a, Figure 9b, and Figure 9c, respectively.

In Figure 9, the signal is gradually decomposed by frequency at each level of decomposition. The high-frequency signal was filtered out by wavelet analysis. We believe that the prediction model should not focus on the small-amplitude high-frequency signal. Therefore, it was filtered out to prevent it from affecting the temporal features extracted by the Auto-Encoder. The time series and the low-frequency signal are shown in Figure 10a,b, respectively.

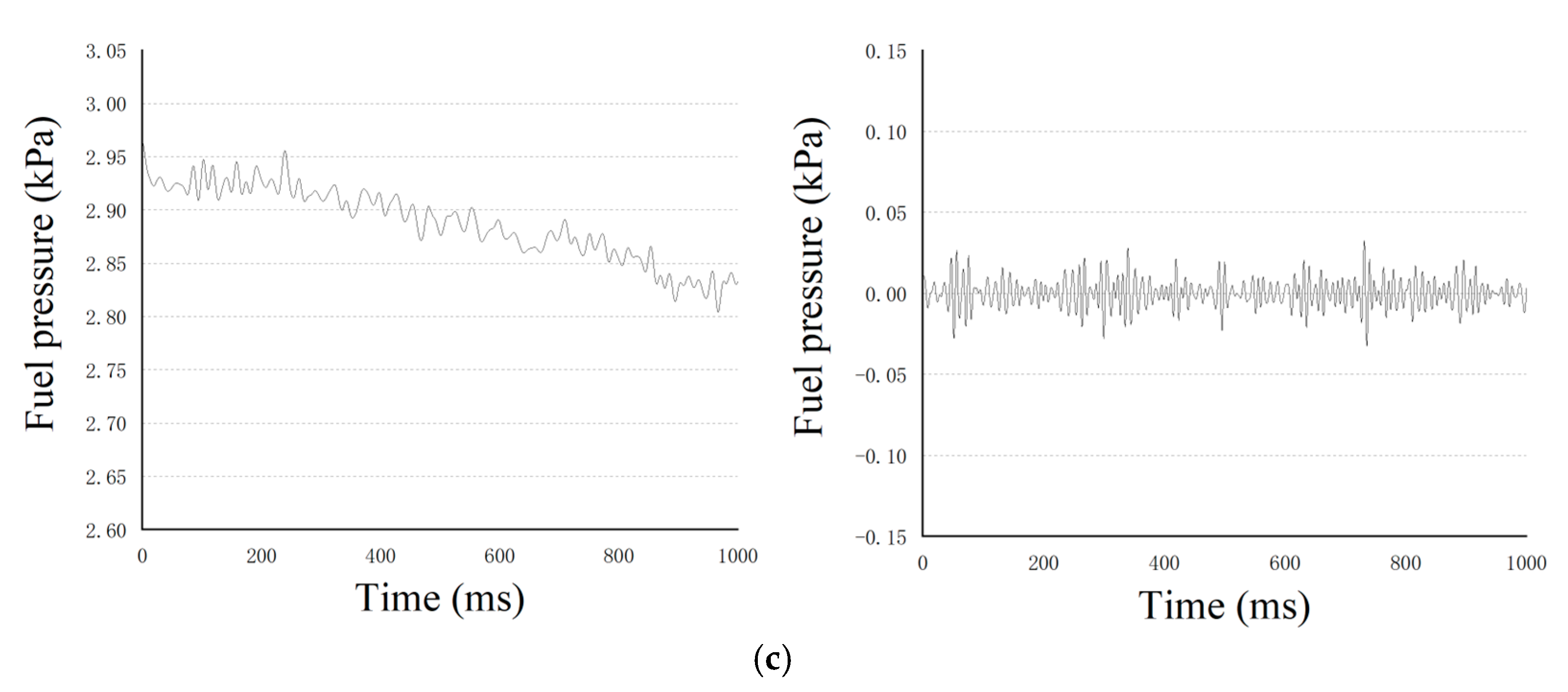

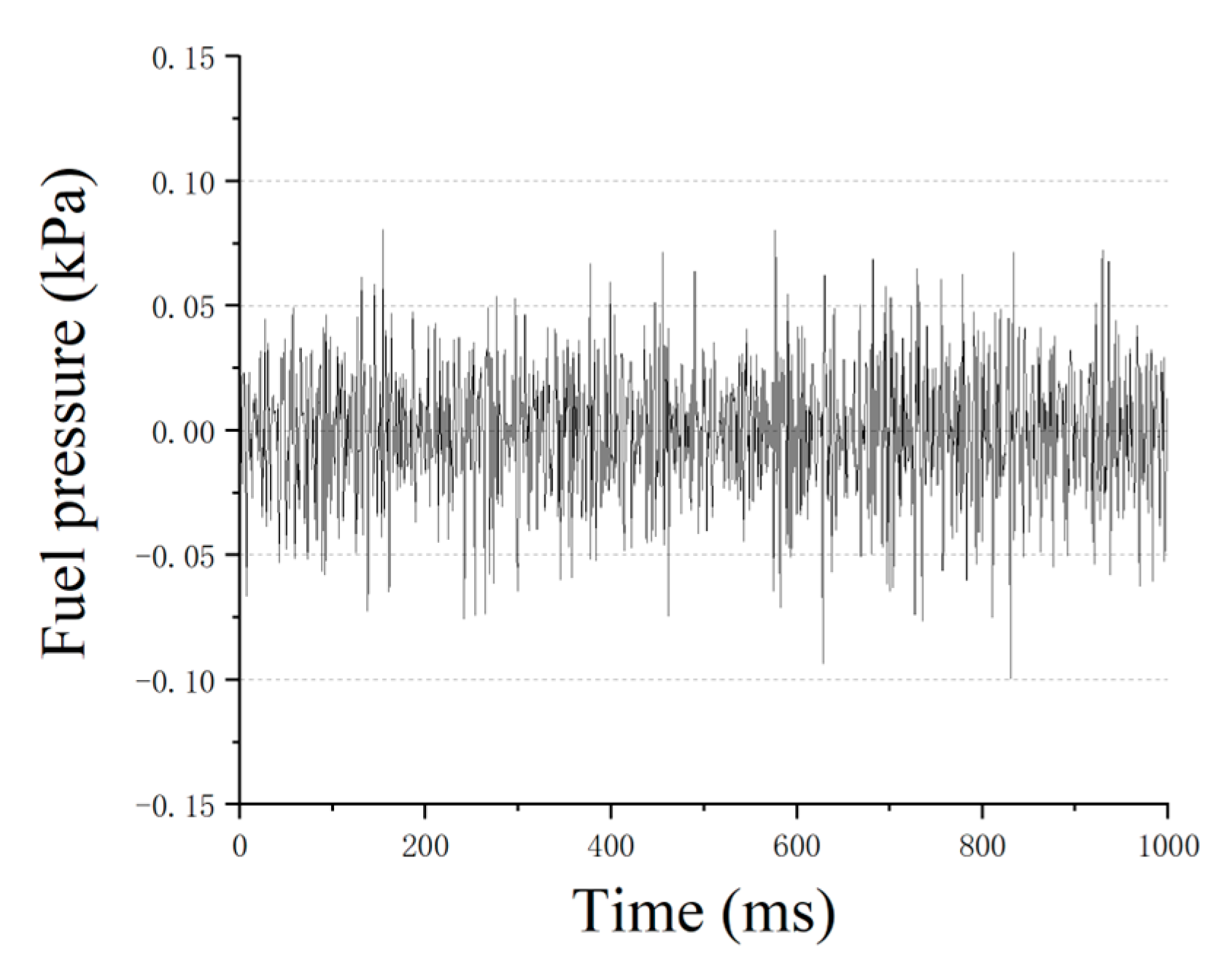

The filtered small-amplitude high-frequency signal is shown in Figure 11.

In Figure 11, the small-amplitude high-frequency signal resembles a Gaussian signal. It contains few valuable features, and it interferes with the extraction of the temporal features in the signals. In contrast, the low-frequency signal contains almost all of the features of the data and directly determines the trend of the predicted signal. Therefore, the Auto-Encoder should focus on the low-frequency signal. In addition, extracting features separately at different frequencies helps the Auto-Encoder pay more attention to important features.
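The separation into low- and high-frequency parts can be sketched with a multi-level Haar wavelet transform written in plain Python. The choice of the Haar basis is an assumption made for brevity, as the wavelet basis used in the study is not stated here, and the function names and sample values are hypothetical. The low-frequency part is obtained by setting all detail coefficients to zero before reconstruction, and the high-frequency part is the residual.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_step(signal):
    """One level of the Haar DWT: return (approximation, detail)."""
    approx = [(signal[i] + signal[i + 1]) / SQRT2
              for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / SQRT2
              for i in range(0, len(signal), 2)]
    return approx, detail


def haar_inverse_step(approx, detail):
    """Invert one Haar level."""
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / SQRT2)
        out.append((a - d) / SQRT2)
    return out


def low_frequency_part(signal, levels):
    """Reconstruct the signal from the deepest approximation only,
    with every detail (high-frequency) coefficient set to zero."""
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    approx = list(signal)
    for _ in range(levels):
        approx, _detail = haar_step(approx)
    for _ in range(levels):
        approx = haar_inverse_step(approx, [0.0] * len(approx))
    return approx


# Toy signal: a step with small fluctuations (not real fuel pressure data).
signal = [1.0, 1.2, 0.9, 1.1, 3.0, 3.1, 2.9, 3.2]
low = low_frequency_part(signal, 2)
high = [s - l for s, l in zip(signal, low)]
```

With two Haar levels, the low-frequency part equals the mean of each block of four samples, so `low` keeps the step between the two halves while `high` holds only the small fluctuations around it.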

The proposed model was tested on the UAV Flight Data Sets, and several indicators were used to evaluate its performance comprehensively. In the experiment, the label of the fault data was 1 and the label of the normal data was 0. Two-thirds of all data were used as the training set, and the remaining one-third was used as the test set. The parameters set for the improved Auto-Encoder are shown in Table 2.

The parameters of Equation (19) were determined by several experiments. The parameters

,

, and

of Equation (19) are as follows:

The parameters

of Equation (23) are as follows:

Only the normal data in the training set were used for training, and the reconstruction loss was used as the criterion for fault detection, which avoids the impact of the unbalanced data. We randomly selected two-thirds of the data to train the model and used the remaining data as the test set to verify the effectiveness of the model. The test set contained 171 fault data and 2775 normal data.
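Using the reconstruction loss as the detection criterion can be sketched as follows. This is an illustration under stated assumptions, not the authors' procedure: the per-band losses are taken as given (no Auto-Encoder is trained here), the band weights are hypothetical, and choosing the threshold as an empirical quantile of the losses on normal training data is an assumption about how a threshold might be set.

```python
def weighted_loss(band_losses, weights):
    """Weighted average of the per-band reconstruction losses."""
    if len(band_losses) != len(weights):
        raise ValueError("one weight per frequency band is required")
    return sum(w * l for w, l in zip(weights, band_losses)) / sum(weights)


def fit_threshold(normal_losses, quantile=0.95):
    """Pick a threshold as an empirical quantile of the losses observed
    on normal training data (an assumed rule, not taken from the paper)."""
    ordered = sorted(normal_losses)
    idx = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[idx]


def detect(loss, threshold):
    """Label 1 (fault) if the loss exceeds the threshold, else 0 (normal)."""
    return 1 if loss > threshold else 0


weights = [0.5, 0.3, 0.2]  # hypothetical weights for three frequency bands
normal_band_losses = [
    [0.10, 0.12, 0.08],
    [0.11, 0.09, 0.10],
    [0.13, 0.10, 0.12],
    [0.09, 0.11, 0.07],
]
normal_losses = [weighted_loss(b, weights) for b in normal_band_losses]
threshold = fit_threshold(normal_losses)

test_band_losses = [[0.10, 0.10, 0.10], [0.80, 0.95, 0.90]]
predictions = [detect(weighted_loss(b, weights), threshold)
               for b in test_band_losses]
```

Because the threshold is fitted on normal data only, no fault samples are needed at training time, which is how this kind of detector sidesteps the class imbalance.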

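Accuracy, Precision, Recall, F1 score, and AUC were selected as the evaluation indicators of the model’s predictive ability. The confusion matrix is shown in Table 3.

From Table 3, Accuracy, Precision, Recall, and F1 score are defined by the confusion matrix, as follows [45,46]:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where $TP$, $TN$, $FP$, and $FN$ denote the numbers of true positives, true negatives, false positives, and false negatives, respectively, with fault data treated as the positive class.

AUC is defined as the area under the ROC curve, that is, the area enclosed by the curve and the coordinate axis. The results on the test set are shown in Table 4.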
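As a worked illustration of these indicators, the sketch below computes Accuracy, Precision, Recall, and F1 score from confusion-matrix counts, and AUC through its rank interpretation (the probability that a randomly chosen fault sample scores higher than a randomly chosen normal sample, with ties counted as one half). The counts and scores are toy values chosen for easy arithmetic, not results from the paper, and the function names are hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def auc(scores, labels):
    """AUC as the fraction of (fault, normal) pairs in which the fault
    sample has the higher score; ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy confusion matrix and scores (label 1 = fault, 0 = normal).
m = classification_metrics(tp=8, fp=2, fn=2, tn=88)
area = auc([0.9, 0.8, 0.3, 0.2, 0.1], [1, 0, 1, 0, 0])
```

In this toy case the accuracy is 0.96 while precision and recall are both 0.8, which shows why accuracy alone can look optimistic on unbalanced data.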

A variety of fault detection models were selected for comparison, including GBDT, random forest, SVM, RNN, and CNN. The confusion matrices of the GBDT, random forest, SVM, RNN, and CNN are shown in Table 5.

The comparison of the verification results on the UAV Flight Data Sets is shown in Table 6.

From Table 6, the accuracy of the proposed method was 0.9101, and the developed method achieved better results in most situations. The accuracies of the GBDT and random forest were 0.7801 and 0.7410, respectively. They are common ensemble learning models, which improve the classification effect by adding decision trees. The SVM’s accuracy was 0.6167. The SVM generally performs better than other algorithms on small training sets, but it is not suitable for large data sets and is sensitive to the selection of parameters and kernel functions. The accuracies of the RNN and CNN were 0.8778 and 0.8805, respectively. The results for the RNN and CNN showed low precision and high recall. Because of the imbalanced data, these models extracted insufficient features from the fault data and therefore had an insufficient ability to identify fault data, so fault data were wrongly detected as normal data [47]. The proposed method alleviates this problem. It uses the large number of normal data in the UAV Flight Data Sets to fully extract the features of normal data. Because the features of the fault data differ greatly from those of the normal data, the Auto-Encoder cannot accurately reconstruct the fault data. Therefore, accurate fault detection can be achieved through the reconstruction loss.

To verify the importance of filtering, we repeated the experiment with the traditional Auto-Encoder, which was trained on the original, unfiltered data. The other steps were the same as in the method developed in this paper. The results of both are shown in Table 7.

From Table 7, the accuracy of the traditional Auto-Encoder was 0.9080, compared with 0.9101 for the proposed method. The filtering effectively reduced the interference of the high-frequency signal with feature extraction, so that important features were extracted.

To explore the influence of the number of wavelet layers on the model, signals filtered with different numbers of wavelet layers were tested. The structure of the model is similar to that shown in Figure 5. The only difference is the number of input data frequencies (the model in Figure 5 takes data of three frequencies as input, and the number of wavelet layers is three). The other steps are the same as in the method developed in this paper. In the experiment, the number of wavelet layers was set to 1, 2, 3, and 4. The results of the experiment are shown in Table 8.

From Table 8, the accuracies of the models with 1, 2, 3, and 4 wavelet layers were 0.9080, 0.9080, 0.9101, and 0.9035, respectively. The best number of wavelet layers was three. Increasing the number of wavelet layers from one to three improved the accuracy from 0.9080 to 0.9101; we believe that the greater the number of wavelet layers, the more effective the signal filtering. When the number of wavelet layers was four, the accuracy of the model decreased. We believe that too much filtering causes the loss of important features in the signal.

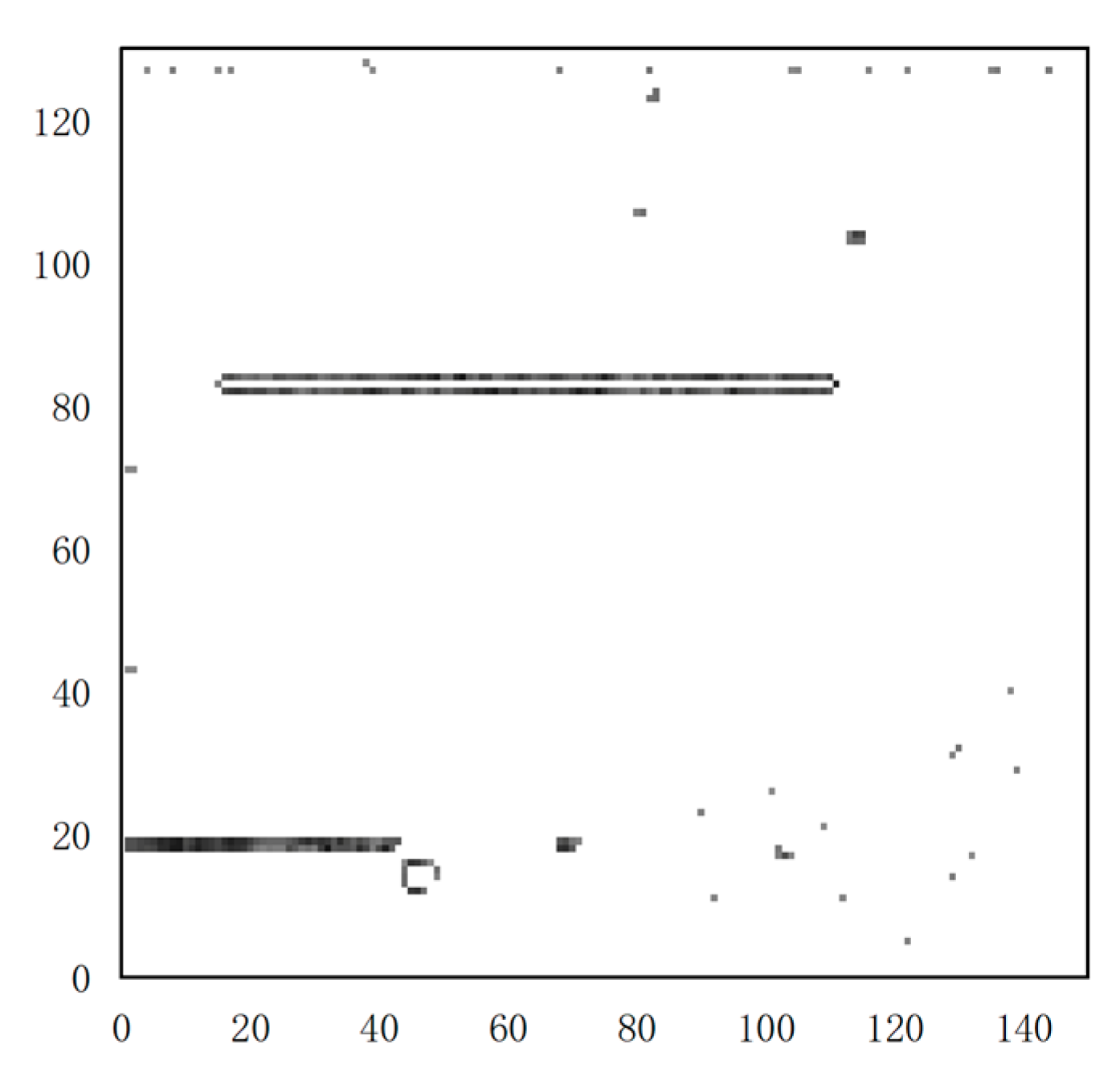

The efficiency of the developed fault factor location method was then verified. The proposed fault factor location method is based on the reconstruction loss of the Auto-Encoder and the edge detection operator. The first segment of the fault data was selected as an example. The value given by Equation (22) for these fault data is 0.87. The reconstruction loss matrix was obtained by Equation (28). In the reconstruction loss matrix, we should pay attention to the elements with large gradient changes, so the edge detection operator shown in Equation (25) is applied to the matrix. To avoid errors, only factors whose detection results exceed a threshold are selected. To show the data more clearly, a visualization of the edge detection results is shown in Figure 12.
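The idea of locating fault factors through large gradients in the reconstruction loss matrix can be sketched as follows. Several choices here are assumptions made for illustration: a 4-neighbour Laplacian stands in for the edge detection operator of Equation (25), the matrix is laid out with rows as time steps and columns as factors, border values are replicated, and the threshold of 1.0 and the toy losses are hypothetical.

```python
def laplacian_response(matrix):
    """Absolute 4-neighbour Laplacian of a 2-D list, with edge values
    replicated at the border."""
    rows, cols = len(matrix), len(matrix[0])

    def at(r, c):
        r = min(max(r, 0), rows - 1)
        c = min(max(c, 0), cols - 1)
        return matrix[r][c]

    return [[abs(4 * at(r, c) - at(r - 1, c) - at(r + 1, c)
                 - at(r, c - 1) - at(r, c + 1))
             for c in range(cols)]
            for r in range(rows)]


def flag_factors(matrix, threshold):
    """Return the column indices (factors) whose strongest edge response
    exceeds the threshold."""
    response = laplacian_response(matrix)
    return [c for c in range(len(matrix[0]))
            if max(row[c] for row in response) > threshold]


# Toy reconstruction loss matrix: rows = time steps, columns = factors.
loss = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
flagged = flag_factors(loss, threshold=1.0)
```

The element with a loss of 0.9 produces a response of about 3.2, while its neighbours produce about 0.8, so a threshold between the two flags only factor 2. A lower threshold would also flag the neighbouring factors, which is why thresholding the detection result helps avoid false localizations.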

In Figure 12, some elements in the matrix clearly have large gradients (the areas circled in black). A large gradient means that the element has a greater reconstruction loss than the surrounding elements. The factors corresponding to these elements in the matrix cause the UAV fault. For example, in the first segment of the data, the factors that can be detected include the temperature of the generator (factor 16), rudder ratio (factor 17), and pitch angle (factor 81). We speculate that the fault is due to mechanical fatigue and disturbance from the external air flow. To show the effectiveness of the proposed model more clearly, more experimental results are shown in Table 9.

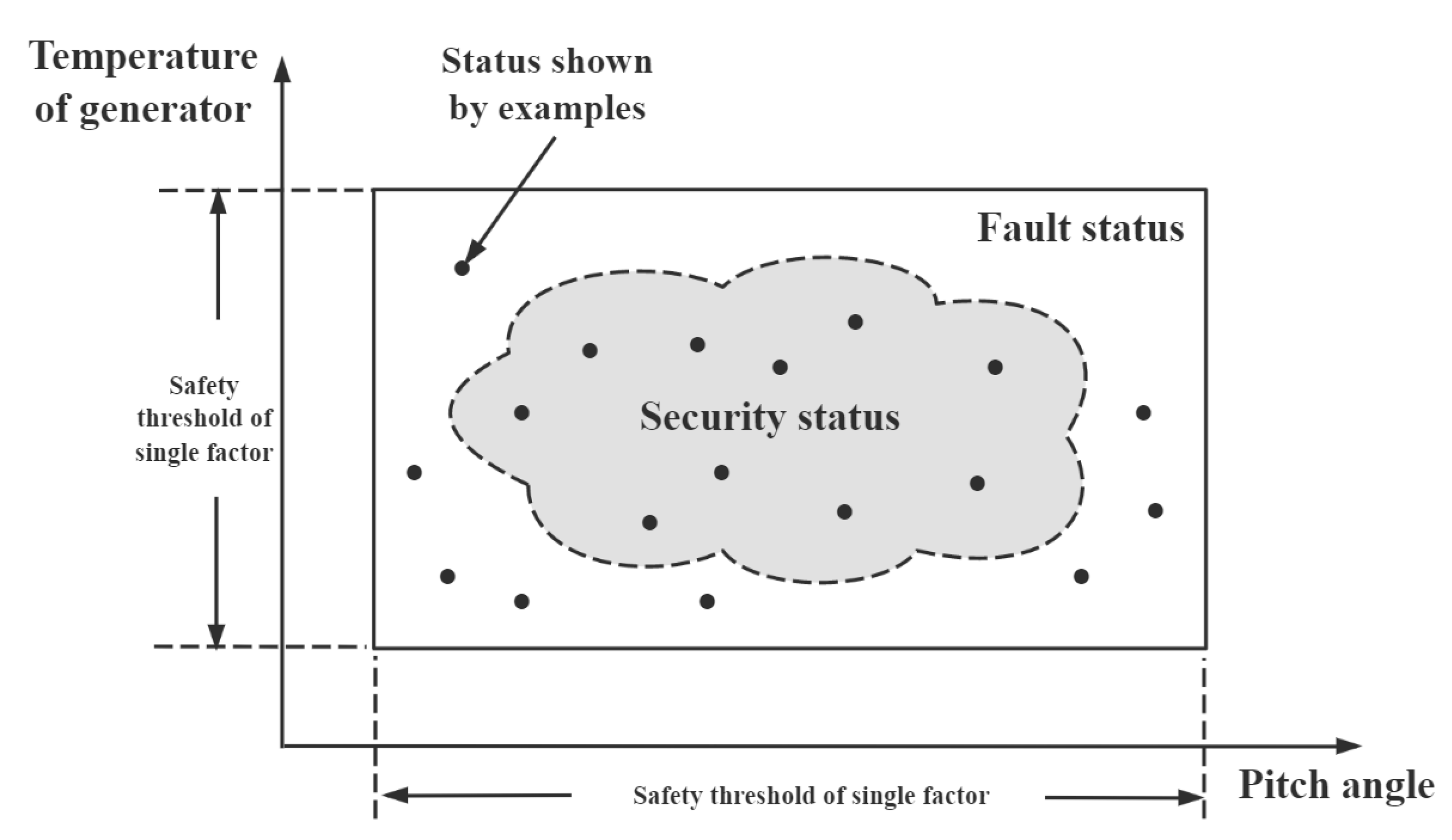

At the end of this study, we explain why the proposed model achieved good results. The traditional fault detection method monitors a single factor and reports a fault when that factor exceeds its safety range. However, UAVs are complex systems, and faults are caused by multiple factors. In some cases, multiple factors of a UAV are coupled with each other, and a fault may occur even if every individual factor is within its normal range. Traditional methods focus only on the influence of a single factor on the fault or do not comprehensively consider the coupling of multiple factors. To illustrate the coupling of multiple factors intuitively, the security domain description was used. The security domain of the temperature of the generator and the pitch angle is shown in Figure 13.

In Figure 13, neither factor exceeds its safety range, but the combined deviation of multiple factors still causes a fault. The proposed model can effectively detect this case.

5. Conclusions

In this study, a new Self-supervised Fault Detection Model for UAVs based on an improved Auto-Encoder was proposed.

In the improved model, only normal data were used as training data to extract the normal features of the flight data and reduce the dimensions of the data, which avoids the impact of the unbalanced data. We used wavelet analysis to extract low-frequency signals at different frequencies from the flight data, and the Auto-Encoder was used for the feature extraction and reconstruction of these signals. Because the high-frequency signal in the data interferes with the extraction of data features by the Auto-Encoder, using wavelet analysis to filter noise was helpful for feature extraction. The reconstruction loss was used as the criterion for fault detection, since fault data produce a higher reconstruction loss. To improve the effectiveness of fault localization at inference, a novel fault factor location method was proposed: the edge detection operator was used to detect obvious changes in the reconstruction loss, which usually indicate the fault factors in the data. The proposed Self-supervised Fault Detection Model for UAVs was evaluated according to accuracy, precision, recall, F1 score, and AUC on the UAV Flight Data Sets. The experimental results show that the developed model had the highest fault detection accuracy among those tested, at 91.01%. Moreover, an explanation of the Self-supervised Fault Detection Model’s results is provided.

It should be noted that only a few fault data were in the data set, and the features of the fault data could not be fully utilized in this study. The Auto-Encoder was used to extract the features of the normal data. In future work, we intend to extract the features of the fault data. In recent years, transfer learning has achieved success in many fields. We intend to analyze the fault data in other data sets through transfer learning. The transferred data can help enrich the features of the fault data. We expect that the full extraction of the fault data features can improve the effectiveness of UAV fault diagnosis. In addition, multiple faults are detected by the proposed model. However, we are unable to explain the reasons for faults caused by multiple factors (it is difficult to explain by the deep learning model), which requires practical and specific analysis. Using the deep learning model to explain the mechanism of a fault is also a future research direction.

In summary, this study analyzed the features of UAV Flight Data Sets and proposed a novel Self-supervised Fault Detection Model for UAVs. In the experiments, the proposed model achieved higher fault detection accuracy than the compared models.