Jointly Modeling Autoregressive Conditional Mean and Variance of Non-Negative Valued Time Series

Abstract

1. Introduction

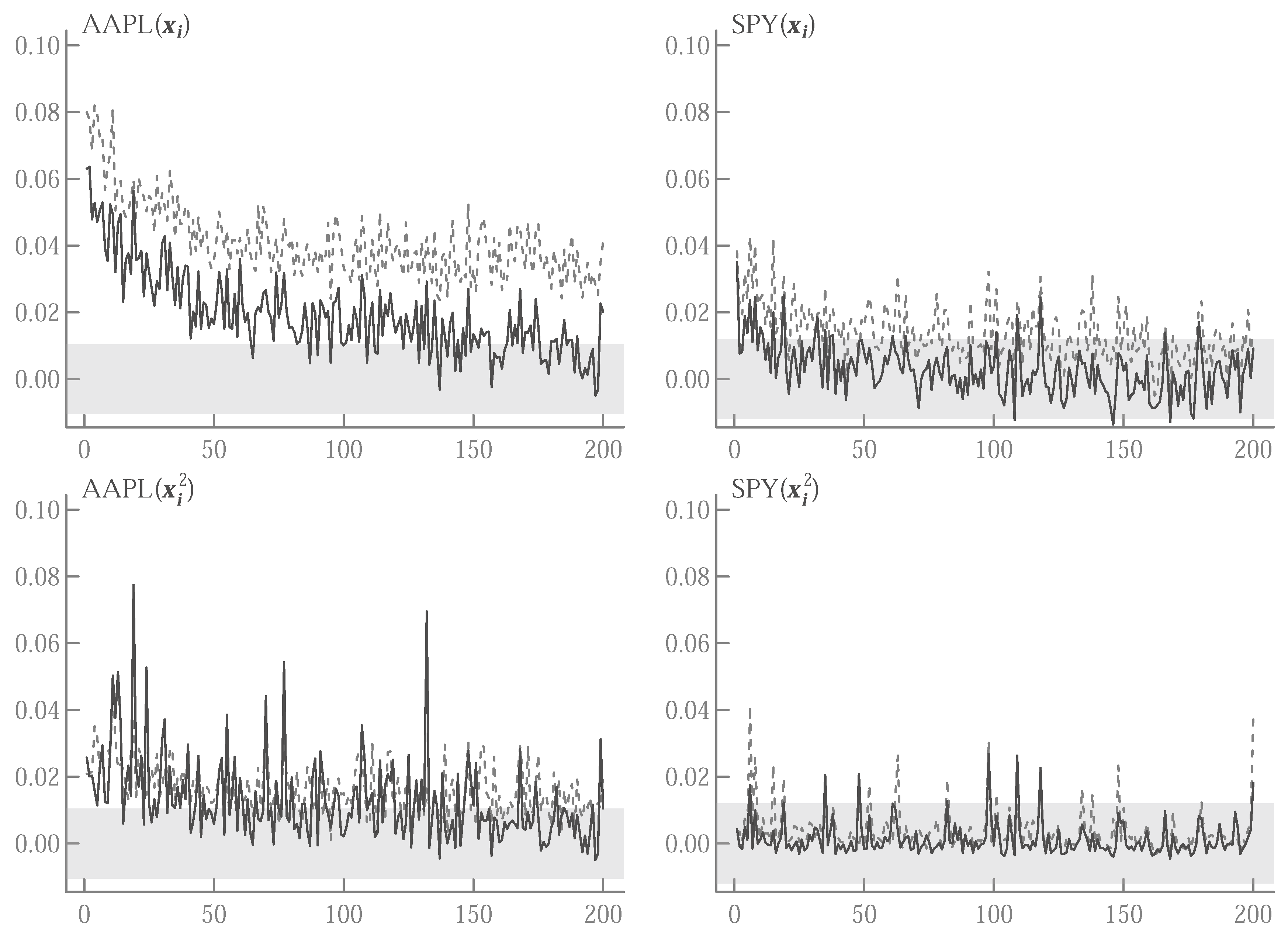

2. Model Specifications

2.1. Time-Varying Variance Parameter

2.2. Conditional Autoregression in Logs

3. Empirical Application

3.1. Data

3.2. Estimation

3.3. Conditional Moment Tests

4. Concluding Remarks

Supplementary Materials

Funding

Conflicts of Interest


| | AAPL | | | SPY | | |
|---|---|---|---|---|---|---|
| | Reg | CS10 | CS30 | Reg | CS10 | CS30 |
| n | 36,331 | 36,331 | 36,331 | 27,877 | 27,877 | 27,877 |
| mean | 1.00 | 1.00 | 1.00 | 1.00 | 0.99 | 0.99 |
| | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| | 2.66 | 2.57 | 2.85 | 3.35 | 3.26 | 3.31 |
| max | 56.68 | 54.35 | 185.52 | 101.59 | 106.94 | 98.32 |
| | 0.40 | 0.40 | 0.40 | 0.54 | 0.54 | 0.54 |
| | [0.000] | [0.000] | [0.000] | [0.000] | [0.000] | [0.000] |
| | 0.54 | 0.54 | 0.53 | 0.38 | 0.38 | 0.38 |
| | (0.004) | (0.004) | (0.004) | (0.004) | (0.004) | (0.004) |
| | 0.36 | 0.36 | 0.36 | 0.45 | 0.45 | 0.45 |
| | (0.003) | (0.003) | (0.003) | (0.004) | (0.004) | (0.004) |
| | 0.94 | 0.88 | 0.98 | 0.99 | | |

| MEM | MEMZ | LNZ | LNZ-G | LNZ-E |
|---|---|---|---|---|
| 0.008 | 0.056 | −0.269 | −0.238 | −0.205 |
| (0.002) | (0.006) | (0.044) | (0.042) | (0.042) |
| 0.074 | 0.092 | 0.690 | 0.669 | 0.684 |
| (0.009) | (0.008) | (0.041) | (0.043) | (0.043) |
| 0.924 | 0.906 | −0.408 | −0.385 | −0.409 |
| (0.010) | (0.008) | (0.036) | (0.035) | (0.035) |
| 0.853 | 0.873 | 0.877 | | |
| (0.017) | (0.015) | (0.015) | | |
| 15.360 | 1.181 | 0.059 | | |
| (0.115) | (0.430) | (0.018) | | |
| 0.089 | 0.198 | | | |
| (0.017) | (0.021) | | | |
| 0.299 | 0.082 | | | |
| (0.174) | (0.006) | | | |
| 0.860 | 0.104 | | | |
| (0.040) | (0.018) | | | |
| 0.925 | | | | |
| (0.011) | | | | |
| −0.849 | −1.515 | −0.590 | −0.584 | −0.582 |
| 1.699 | 3.030 | 1.180 | 1.169 | 1.164 |
| 1.700 | 3.031 | 1.181 | 1.172 | 1.166 |
| 1.699 | 3.030 | 1.180 | 1.170 | 1.165 |

| MEM | MEMZ | LNZ | LNZ-G | LNZ-E |
|---|---|---|---|---|
| 0.024 | 0.179 | −0.151 | −0.148 | −0.121 |
| (0.007) | (0.030) | (0.039) | (0.038) | (0.038) |
| 0.037 | 0.134 | 0.934 | 0.871 | 0.883 |
| (0.007) | (0.021) | (0.046) | (0.046) | (0.046) |
| 0.942 | 0.858 | −0.428 | −0.389 | −0.406 |
| (0.011) | (0.021) | (0.039) | (0.038) | (0.038) |
| 0.835 | 0.850 | 0.852 | | |
| (0.015) | (0.015) | (0.015) | | |
| 13.424 | 2.206 | 0.172 | | |
| (0.163) | (0.376) | (0.046) | | |
| 0.155 | 0.353 | | | |
| (0.014) | (0.029) | | | |
| 1.798 | 0.110 | | | |
| (0.266) | (0.011) | | | |
| 0.693 | 0.251 | | | |
| (0.035) | (0.032) | | | |
| 0.829 | | | | |
| (0.024) | | | | |
| −0.964 | −1.468 | −0.831 | −0.826 | −0.825 |
| 1.928 | 2.936 | 1.663 | 1.654 | 1.651 |
| 1.929 | 2.938 | 1.665 | 1.657 | 1.654 |
| 1.929 | 2.937 | 1.664 | 1.655 | 1.652 |

| | AAPL | | | | SPY | | | |
|---|---|---|---|---|---|---|---|---|
| | | [p-val] | | [p-val] | | [p-val] | | [p-val] |
| , | | | | | | | | |
| MEM | 0.24 | [0.888] | 0.42 | [0.811] | 63.85 | [0.000] | 66.62 | [0.000] |
| MEMZ | 134.14 | [0.000] | 247.40 | [0.000] | 32.47 | [0.000] | 40.45 | [0.000] |
| LNZ | 74.42 | [0.000] | 134.16 | [0.000] | 69.81 | [0.000] | 110.31 | [0.000] |
| LNZ-G | 16.51 | [0.000] | 16.63 | [0.000] | 1.08 | [0.583] | 1.15 | [0.563] |
| LNZ-E | 23.00 | [0.000] | 28.73 | [0.000] | 4.79 | [0.091] | 5.47 | [0.065] |
| , | | | | | | | | |
| MEM | 10.11 | [0.039] | 10.01 | [0.040] | 70.17 | [0.000] | 74.67 | [0.000] |
| MEMZ | 148.55 | [0.000] | 275.39 | [0.000] | 37.17 | [0.000] | 47.10 | [0.000] |
| LNZ | 78.59 | [0.000] | 156.46 | [0.000] | 72.63 | [0.000] | 121.73 | [0.000] |
| LNZ-G | 21.11 | [0.000] | 20.11 | [0.000] | 2.22 | [0.696] | 2.34 | [0.674] |
| LNZ-E | 28.05 | [0.000] | 32.92 | [0.000] | 5.47 | [0.242] | 6.48 | [0.166] |
| , | | | | | | | | |
| MEM | 185.52 | [0.000] | 192.12 | [0.000] | 163.57 | [0.000] | 167.92 | [0.000] |
| MEMZ | 285.97 | [0.000] | 311.37 | [0.000] | 72.65 | [0.000] | 72.52 | [0.000] |
| LNZ | 7.49 | [0.058] | 10.48 | [0.015] | 6.22 | [0.101] | 7.59 | [0.055] |
| LNZ-G | 0.10 | [0.991] | 0.11 | [0.991] | 0.11 | [0.991] | 0.11 | [0.991] |
| LNZ-E | 0.73 | [0.866] | 0.80 | [0.850] | 0.28 | [0.964] | 0.28 | [0.964] |
| , | | | | | | | | |
| MEM | 193.96 | [0.000] | 193.70 | [0.000] | 111.43 | [0.000] | 112.49 | [0.000] |
| MEMZ | 286.87 | [0.000] | 288.54 | [0.000] | 46.36 | [0.000] | 46.47 | [0.000] |
| LNZ | 7.47 | [0.024] | 10.32 | [0.006] | 5.99 | [0.050] | 7.24 | [0.027] |
| LNZ-G | 0.10 | [0.950] | 0.10 | [0.950] | 0.04 | [0.979] | 0.04 | [0.979] |
| LNZ-E | 0.63 | [0.729] | 0.69 | [0.707] | 0.13 | [0.938] | 0.13 | [0.938] |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kawakatsu, H. Jointly Modeling Autoregressive Conditional Mean and Variance of Non-Negative Valued Time Series. Econometrics 2019, 7, 48. https://doi.org/10.3390/econometrics7040048
