Abstract

The analysis and modeling of categorical time series requires quantifying the extent of dispersion and serial dependence. The dispersion of categorical data is commonly measured by the Gini index or the entropy, but the recently proposed extropy measure can also be used for this purpose. Regarding signed serial dependence in categorical time series, we consider three types of κ-measures. By analyzing bias properties, it is shown that each of the κ-measures is related to one of the above-mentioned dispersion measures. Statistical inference based on the sample versions of these dispersion and dependence measures requires knowledge of their distribution. Therefore, we study the asymptotic distributions and bias corrections of the considered dispersion and dependence measures, and we investigate the finite-sample performance of the resulting asymptotic approximations with simulations. The application of the measures is illustrated with real-data examples from politics, economics and biology.

1. Introduction

In many applications, the available data are not of quantitative nature (e.g., real numbers or counts) but consist of observations from a given finite set of categories. In the present article, we are concerned with data about political goals in Germany, fear states in the stock market, and phrases in a bird's song. For stochastic modeling, we use a categorical random variable X, i.e. a qualitative random variable taking one of a finite number of categories, e.g. $m+1$ categories with some $m \in \mathbb{N}$. If these categories are unordered, X is said to be a nominal random variable, whereas an ordinal random variable requires a natural order of the categories (Agresti 2002). To simplify notation, we always assume the possible outcomes to be arranged in a certain order (either lexicographical or natural order), i.e. we denote the range (state space) as $\mathcal{S} = \{s_0, s_1, \ldots, s_m\}$. The stochastic properties of X can be determined based on the vector of marginal probabilities $\boldsymbol{p} = (p_0, \ldots, p_m)^\top \in [0;1]^{m+1}$, where $p_j = P(X = s_j)$ (probability mass function, PMF). We abbreviate $s_k(\boldsymbol{p}) := \sum_{j=0}^{m} p_j^k$ for $k \in \mathbb{N}$, where $s_1(\boldsymbol{p}) = 1$ has to hold. The subscripts $0, \ldots, m$ are used for $\mathcal{S}$ and $\boldsymbol{p}$ to emphasize that only m of the $m+1$ probabilities can be freely chosen because of the constraint $p_0 = 1 - p_1 - \ldots - p_m$.

Well-established dispersion measures for quantitative data, such as the variance or the interquartile range, cannot be applied to qualitative data. For a categorical random variable X, one commonly defines dispersion with respect to the uncertainty in predicting the outcome of X (Kvålseth 2011b; Rao 1982; Weiß and Göb 2008). This uncertainty is maximal for a uniform distribution on $\mathcal{S}$ (a reasonable prediction is impossible if all states are equally probable, thus maximal dispersion), whereas it is minimal for a one-point distribution (i.e., all probability mass concentrates on one category, so a perfect prediction is possible). Obviously, categorical dispersion is just the opposite concept to the concentration of a categorical distribution. To measure the dispersion of the categorical random variable X, the most common approach is to use either the (normalized) Gini index (also called the index of qualitative variation, IQV) (Kvålseth 1995; Rao 1982), defined as

$$\nu_G = \frac{m+1}{m}\,\big(1 - s_2(\boldsymbol{p})\big), \tag{1}$$

or the (normalized) entropy (Blyth 1959; Shannon 1948), given by

$$\nu_{En} = -\frac{1}{\ln(m+1)}\sum_{j=0}^{m} p_j \ln p_j. \tag{2}$$

Both measures are minimized by a one-point distribution and maximized by the uniform distribution on $\mathcal{S}$. While nominal dispersion is always expressed with respect to these extreme cases, it should be mentioned that there is an alternative scenario of maximal ordinal variation, namely the extreme two-point distribution; however, this is not considered further here.
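As a minimal illustration, Equations (1) and (2) can be coded in Python as follows. The function names are hypothetical, and the PMF is passed as a list $(p_0, \ldots, p_m)$ with $m \ge 1$. Summands with $p_j = 0$ are skipped in the entropy, i.e. the convention $0 \cdot \ln 0 := 0$ is assumed.

```python
import math
from typing import Sequence


def gini_index(p: Sequence[float]) -> float:
    """Normalized Gini index nu_G = (m+1)/m * (1 - s_2(p)), Equation (1)."""
    m = len(p) - 1  # categories s_0, ..., s_m
    s2 = sum(pj * pj for pj in p)  # s_2(p)
    return (m + 1) / m * (1.0 - s2)


def entropy_index(p: Sequence[float]) -> float:
    """Normalized entropy, Equation (2); summands with p_j = 0 are treated as 0."""
    m = len(p) - 1
    h = sum(-pj * math.log(pj) for pj in p if pj > 0)
    return h / math.log(m + 1)


if __name__ == "__main__":
    for pmf in ([0.25, 0.25, 0.25, 0.25], [0.75, 0.25], [1.0, 0.0, 0.0]):
        print(pmf, round(gini_index(pmf), 4), round(entropy_index(pmf), 4))
```

For the two-point PMF (0.75, 0.25), for example, this gives $\nu_G = 0.75$ and $\nu_{En} \approx 0.8113$. The uniform PMF yields 1 for both measures, and the one-point PMF yields 0.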

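If we consider a (stationary) categorical process $(X_t)_{t\in\mathbb{Z}}$ instead of a single random variable, then not only marginal properties are relevant, but also information about the serial dependence structure (Weiß 2018). The (signed) autocorrelation function (ACF), as it is commonly applied in the case of real-valued processes, cannot be used for categorical data. However, one may use a type of Cohen's κ instead (Cohen 1960). A κ-measure of signed serial dependence in categorical time series is given by (see Weiß 2011, 2013; Weiß and Göb 2008)

$$\kappa(h) = \frac{\sum_{j=0}^{m}\big(p_{jj}(h) - p_j^2\big)}{1 - s_2(\boldsymbol{p})} \in \Big[-\frac{s_2(\boldsymbol{p})}{1 - s_2(\boldsymbol{p})};\ 1\Big]. \tag{3}$$

Equation (3) is based on the lagged bivariate probabilities $p_{ij}(h) = P(X_t = s_i, X_{t-h} = s_j)$ for $i, j = 0, \ldots, m$ and $h \in \mathbb{N}$. It holds that $\kappa(h) = 0$ for serial independence at lag h, and the strongest degree of positive (negative) dependence is indicated if all $p_{jj}(h) = p_j$ (all $p_{jj}(h) = 0$), i.e., if the event $X_{t-h} = s_j$ is necessarily followed by $X_t = s_j$ ($X_t \neq s_j$).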

Motivated by a mobility index discussed by Shorrocks (1978), a simplified type of κ-measure, referred to as the modified κ, was defined by Weiß (2011, 2013):

$$\kappa^*(h) = \frac{1}{m}\sum_{j=0}^{m}\frac{p_{jj}(h) - p_j^2}{p_j} \in \Big[-\frac{1}{m};\ 1\Big]. \tag{4}$$

Except for the fact that the lower bound of the range differs from the one in Equation (3) (note that this lower bound is free of distributional parameters), $\kappa^*(h)$ has the same properties as stated before for $\kappa(h)$. The computation of $\kappa^*(h)$ is simpler than that of $\kappa(h)$ and, in particular, its sample version has a simpler asymptotic normal distribution; see Section 5 for details. Unfortunately, $\kappa^*(h)$ is not defined if even one of the $p_j$ equals 0, whereas $\kappa(h)$ is well defined for any marginal distribution that is not a one-point distribution. For the sample version, this issue may happen quite frequently if the given time series is short (a possible circumvention is to replace all summands with $\hat p_j = 0$ by 0). For this reason, $\kappa^*(h)$ appears to be of limited practical use for quantifying signed serial dependence. It should be noted that a similar "zero problem" happens with the entropy in Equation (2), and, indeed, we work out a further relation between $\kappa^*(h)$ and $\nu_{En}$ below.
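The sample versions of Equations (3) and (4) only require the univariate relative frequencies $\hat p_j$ and the lag-h frequencies $\hat p_{jj}(h)$. The following Python sketch assumes that the time series is a sequence of hashable labels. It estimates $\hat p_j$ from all T observations and $\hat p_{jj}(h)$ from the $T-h$ lagged pairs; this is one common convention, not necessarily the one used in the cited works. All function names are hypothetical, and `modified_kappa` applies the circumvention of replacing summands with $\hat p_j = 0$ by 0.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def lagged_frequencies(x: Sequence[Hashable], h: int, states: Sequence[Hashable]
                       ) -> Tuple[Dict[Hashable, float], Dict[Hashable, float]]:
    """Relative frequencies p_hat_j (all T values) and p_hat_jj(h) (T - h lag-h pairs)."""
    T = len(x)
    counts = Counter(x)
    # count the times t with x_t == x_{t-h}, split by the common state
    matches = Counter(x[t] for t in range(h, T) if x[t] == x[t - h])
    p_hat = {s: counts[s] / T for s in states}
    p_hat_jj = {s: matches[s] / (T - h) for s in states}
    return p_hat, p_hat_jj


def cohen_kappa(x, h, states) -> float:
    """Sample version of Cohen's kappa(h), Equation (3)."""
    p, pjj = lagged_frequencies(x, h, states)
    s2 = sum(v * v for v in p.values())
    return sum(pjj[s] - p[s] ** 2 for s in states) / (1.0 - s2)


def modified_kappa(x, h, states) -> float:
    """Sample version of kappa*(h), Equation (4); summands with p_hat_j = 0 are set to 0."""
    p, pjj = lagged_frequencies(x, h, states)
    m = len(states) - 1
    return sum((pjj[s] - p[s] ** 2) / p[s] for s in states if p[s] > 0) / m
```

For the strictly alternating series `"ab" * 50`, both functions return -1 at lag 1 and 1 at lag 2. These are the bounds of perfect negative and positive dependence for two equiprobable states.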

In the recent work by Lad et al. (2015), extropy was introduced as a complementary dual to the entropy. Its normalized version is given by

$$\nu_{Ex} = \frac{\sum_{j=0}^{m}(1-p_j)\ln(1-p_j)}{m\,\ln\frac{m}{m+1}}. \tag{5}$$

Here, the zero problem obviously only happens if one of the $p_j$ equals 1 (i.e., in the case of a one-point distribution). Like the Gini index in Equation (1) and the entropy in Equation (2), the extropy takes its minimal (maximal) value 0 (1) for a one-point (the uniform) distribution; thus, Equation (5) also constitutes a normalized measure of nominal variation. In Section 2, we analyze its properties in comparison to the Gini index and the entropy. In particular, we focus on the respective sample versions $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ (see Section 3). Statistical inference based on $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ requires knowledge about their distribution. Up to now, only the asymptotic distribution of $\hat\nu_G$ and, in part, of $\hat\nu_{En}$ has been derived; in Section 3, comprehensive results for all considered dispersion measures are provided. These asymptotic distributions are then used as approximations to the true sample distributions of $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$, which is further investigated with simulations and a real application (see Section 4).
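Equation (5) can be sketched analogously. The function name is hypothetical, and the PMF is passed as a list with $m \ge 1$. Only a probability $p_j = 1$ creates a $0 \cdot \ln 0$ term, which is set to 0 here.

```python
import math
from typing import Sequence


def extropy_index(p: Sequence[float]) -> float:
    """Normalized extropy, Equation (5), for a PMF p = (p_0, ..., p_m) with m >= 1."""
    m = len(p) - 1
    # A term with p_j = 1 would be 0 * ln 0; it is treated as 0 (one-point case only).
    num = sum((1.0 - pj) * math.log(1.0 - pj) for pj in p if pj < 1.0)
    # Both numerator and denominator are <= 0, so the ratio lies in [0, 1].
    return num / (m * math.log(m / (m + 1)))
```

For two categories (m = 1), the normalized extropy coincides with the normalized entropy. For example, the PMF (0.75, 0.25) gives approximately 0.8113 for both.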

The second part of this paper is dedicated to the analysis of serial dependence. As a novel competitor to the measures in Equations (3) and (4), a new type of modified κ is proposed, namely

$$\kappa^{\star}(h) = \sum_{j=0}^{m}\frac{p_{jj}(h) - p_j^2}{1 - p_j} \in \Big[-\sum_{j=0}^{m}\frac{p_j^2}{1-p_j};\ 1\Big]. \tag{6}$$

Again, this constitutes a measure of signed serial dependence, which shares the before-mentioned (in)dependence properties with $\kappa(h)$ and $\kappa^*(h)$. However, in contrast to $\kappa^*(h)$, the newly proposed $\kappa^{\star}(h)$ does not have a division-by-zero problem: except for the case of a one-point distribution, $\kappa^{\star}(h)$ is well defined. Section 3.2 shows that each of these κ-measures is related to one of the dispersion measures in some sense: $\kappa(h)$ is related to $\nu_G$, $\kappa^*(h)$ to $\nu_{En}$, and $\kappa^{\star}(h)$ to $\nu_{Ex}$. In Section 5, we analyze the sample version of $\kappa^{\star}(h)$ in comparison to those of $\kappa(h)$ and $\kappa^*(h)$, and we derive its asymptotic distribution under the null hypothesis of serial independence. This allows us to test for significant dependence in categorical time series. The performance of this $\kappa^{\star}$-test, in comparison to the tests based on $\hat\kappa(h)$ and $\hat\kappa^*(h)$, is analyzed in Section 6, where two further real applications are also presented. Finally, we conclude in Section 7.
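The following sketch of the sample version of $\kappa^{\star}(h)$ uses a hypothetical function name, `star_kappa`, and the same frequency conventions as assumed before. It shows that unobserved categories need no special treatment: a state with $\hat p_j = 0$ simply contributes a zero summand, and only a one-point sample must be excluded.

```python
from collections import Counter


def star_kappa(x, h, states):
    """Sample version of kappa_star(h), Equation (6)."""
    T = len(x)
    counts = Counter(x)
    matches = Counter(x[t] for t in range(h, T) if x[t] == x[t - h])
    total = 0.0
    for s in states:
        p = counts[s] / T               # p_hat_j, from all T observations
        p_jj = matches[s] / (T - h)     # p_hat_jj(h), from the T - h lag-h pairs
        if p >= 1.0:
            raise ValueError("kappa_star is undefined for a one-point sample")
        total += (p_jj - p * p) / (1.0 - p)   # zero summand if s is never observed
    return total
```

Adding an unobserved third category therefore does not change the result, in contrast to the zero problem of $\hat\kappa^*(h)$.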

2. Extropy, Entropy and Gini Index

As extropy, entropy and Gini index all serve the same task, it is interesting to know their relations and differences. An important practical issue is the "$0\cdot\ln 0$"-problem mentioned above. It never occurs for the Gini index, occurs for the extropy only in the case of a (deterministic) one-point distribution, and always occurs for the entropy if even one $p_j = 0$. Lad et al. (2015) further compared the non-normalized versions of entropy and extropy, and they showed that the entropy is never smaller than the extropy. Combining this with a logarithmic inequality from Love (1980), it follows that

$$1 - s_2(\boldsymbol{p}) \;\le\; -\sum_{j=0}^{m}(1-p_j)\ln(1-p_j) \;\le\; -\sum_{j=0}^{m}p_j\ln p_j \tag{7}$$

(see Appendix B.1 for further details).
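The ordering (7) can be checked numerically for randomly drawn PMFs. The following Python sketch is an illustration, not a proof. The helper names are hypothetical, and PMFs are drawn from a flat Dirichlet distribution via normalized Gamma variates.

```python
import math
import random


def nonnormalized_measures(p):
    """Return (1 - s_2(p), extropy, entropy) without normalization, as in Equation (7)."""
    gini = 1.0 - sum(q * q for q in p)
    extropy = -sum((1 - q) * math.log(1 - q) for q in p if q < 1)
    entropy = -sum(q * math.log(q) for q in p if q > 0)
    return gini, extropy, entropy


def random_pmf(rng, size):
    """Random PMF from a flat Dirichlet distribution (normalized Gamma(1, 1) draws)."""
    g = [rng.gammavariate(1.0, 1.0) for _ in range(size)]
    total = sum(g)
    return [v / total for v in g]


def check_inequality_7(trials=10_000, seed=1):
    """True if 1 - s_2 <= extropy <= entropy holds (up to rounding) for all drawn PMFs."""
    rng = random.Random(seed)
    for _ in range(trials):
        p = random_pmf(rng, rng.randint(2, 10))  # between 2 and 10 categories
        g, j, h = nonnormalized_measures(p)
        if not (g <= j + 1e-12 and j <= h + 1e-12):
            return False
    return True
```

For two categories, extropy and entropy coincide, so the right-hand inequality in (7) becomes an equality.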

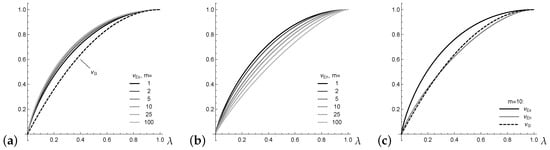

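Things change, however, for the normalized versions $\nu_G$, $\nu_{En}$ and $\nu_{Ex}$. For illustration, assume an underlying Lambda distribution with $\lambda \in (0;1)$, defined by the probability vector $\boldsymbol{p}_\lambda = \big(1 - \lambda\frac{m}{m+1},\ \frac{\lambda}{m+1}, \ldots, \frac{\lambda}{m+1}\big)^\top$ (Kvålseth 2011a). Note that $\lambda \to 0$ leads to a one-point distribution, whereas $\lambda \to 1$ leads to the uniform distribution; indeed, $\boldsymbol{p}_\lambda = (1-\lambda)\,(1, 0, \ldots, 0)^\top + \lambda\,\big(\frac{1}{m+1}, \ldots, \frac{1}{m+1}\big)^\top$ can be understood as a mixture of these boundary cases. For $\boldsymbol{p}_\lambda$, the Gini index satisfies $\nu_G = \lambda(2-\lambda)$ for all m (see Kvålseth (2011a)). In addition, the extropy has rather stable values for varying m (see Figure 1a), whereas the entropy values in Figure 1b change greatly. This complicates the interpretation of the actual level of normalized entropy.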

Figure 1.

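Normalized dispersion measures for the Lambda distribution $\boldsymbol{p}_\lambda$, plotted against λ: $\nu_{Ex}$ for different m in (a), $\nu_{En}$ for different m in (b), and a comparison of the measures for a fixed m in (c).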

Finally, the example plotted in Figure 1c shows that, in contrast to Equation (7), there is no fixed order between the normalized entropy $\nu_{En}$ and the normalized Gini index $\nu_G$. In this and many further numerical experiments, however, the inequalities $\nu_{Ex} \ge \nu_G$ and $\nu_{Ex} \ge \nu_{En}$ were observed to hold. We formulate these inequalities here as a general conjecture.
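The Lambda example can be reproduced with a few lines of Python. The sketch below uses hypothetical function names. It constructs $\boldsymbol{p}_\lambda$ as the mixture described above and prints the three normalized measures for several m. The Gini column stays at $\lambda(2-\lambda)$, which equals 0.75 for λ = 0.5, whereas the entropy column changes with m.

```python
import math


def lambda_distribution(lam, m):
    """Lambda distribution on m + 1 categories: (1 - lam) * one-point + lam * uniform."""
    return [1 - lam * m / (m + 1)] + [lam / (m + 1)] * m


def gini_index(p):
    m = len(p) - 1
    return (m + 1) / m * (1 - sum(q * q for q in p))


def entropy_index(p):
    m = len(p) - 1
    return -sum(q * math.log(q) for q in p if q > 0) / math.log(m + 1)


def extropy_index(p):
    m = len(p) - 1
    return sum((1 - q) * math.log(1 - q) for q in p if q < 1) / (m * math.log(m / (m + 1)))


if __name__ == "__main__":
    lam = 0.5
    for m in (1, 2, 5, 10, 50):
        p = lambda_distribution(lam, m)
        print(m, round(gini_index(p), 4), round(entropy_index(p), 4), round(extropy_index(p), 4))
```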

From now on, we turn towards the sample versions $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$. These are obtained by replacing the probabilities $p_j$ with the respective estimates $\hat p_j$, which are computed as relative frequencies from the given sample data $x_1, \ldots, x_T$. As detailed in Section 3, $x_1, \ldots, x_T$ are assumed to be time series data, but we also consider the case of independent and identically distributed (i.i.d.) data.

3. Distribution of Sample Dispersion Measures

To derive the asymptotic distribution of statistics computed from $x_1, \ldots, x_T$, Weiß (2013) assumed that the nominal process $(X_t)_{t\in\mathbb{Z}}$ is $\phi$-mixing with exponentially decreasing weights, such that the CLT on p. 200 in Billingsley (1999) is applicable. This condition is satisfied not only in the i.i.d.-case, but also for, among others, the so-called NDARMA models introduced by Jacobs and Lewis (1983) (see Appendix A.1 for details). Weiß (2013) then derived the asymptotic distribution of $\sqrt{T}(\hat{\boldsymbol{p}} - \boldsymbol{p})$, which is the normal distribution $\mathrm{N}(\boldsymbol{0}, \boldsymbol{\Sigma})$ with $\boldsymbol{\Sigma} = (\sigma_{ij})_{i,j=0,\ldots,m}$ given by

$$\sigma_{ij} = p_j(\delta_{i,j} - p_i) + \sum_{h=1}^{\infty}\big(p_{ij}(h) + p_{ji}(h) - 2p_ip_j\big). \tag{8}$$

Using this result, the asymptotic properties of $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ can be derived, as shown in Appendix B.2. The following subsections present and compare these properties in detail.

3.1. Asymptotic Normality

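As shown in Appendix B.2, provided that $\boldsymbol{p}$ is neither a one-point distribution nor the uniform distribution, all variation measures $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ are asymptotically normally distributed. More precisely, $\hat\nu_G$ is asymptotically normally distributed with variance

$$\sigma_G^2 = \frac{4(m+1)^2}{m^2\,T}\,\big(s_3(\boldsymbol{p}) - s_2^2(\boldsymbol{p})\big)\Big(1 + 2\sum_{h=1}^{\infty}\underbrace{\frac{\sum_{i,j=0}^{m}p_ip_j\big(p_{ij}(h) - p_ip_j\big)}{s_3(\boldsymbol{p}) - s_2^2(\boldsymbol{p})}}_{=:\ \vartheta_G(h)}\Big), \tag{9}$$

a result already known from Weiß (2013). Here, $\vartheta_G(h)$ might be understood as a measure of serial dependence, in analogy to the measures in Equations (3), (4) and (6). In particular, $\vartheta_G(h) = 0$ in the i.i.d.-case, and $\vartheta_G(h) = \kappa(h)$ for NDARMA processes (Appendix A.1).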

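Analogously, $\hat\nu_{En}$ is asymptotically normally distributed with variance

$$\sigma_{En}^2 = \frac{1}{T\,\ln^2(m+1)}\Big(\sum_{j=0}^{m}p_j\ln^2 p_j - \Big(\sum_{j=0}^{m}p_j\ln p_j\Big)^2\Big)\Big(1 + 2\sum_{h=1}^{\infty}\underbrace{\frac{\sum_{i,j=0}^{m}\ln p_i\,\ln p_j\,\big(p_{ij}(h) - p_ip_j\big)}{\sum_{j}p_j\ln^2 p_j - \big(\sum_{j}p_j\ln p_j\big)^2}}_{=:\ \vartheta_{En}(h)}\Big). \tag{10}$$
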
In the i.i.d.-case, where the last factor becomes 1 (cf. Appendix A.1), this result was given by Blyth (1959), whereas the general expression in Equation (10) can be found in the work of Weiß (2013).

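A novel result follows for the extropy, where $\hat\nu_{Ex}$ is asymptotically normally distributed with variance

$$\sigma_{Ex}^2 = \frac{1}{T\,m^2\ln^2\frac{m+1}{m}}\Big(\sum_{j=0}^{m}p_j\ln^2(1-p_j) - \Big(\sum_{j=0}^{m}p_j\ln(1-p_j)\Big)^2\Big)\Big(1 + 2\sum_{h=1}^{\infty}\underbrace{\frac{\sum_{i,j=0}^{m}\ln(1-p_i)\ln(1-p_j)\big(p_{ij}(h) - p_ip_j\big)}{\sum_{j}p_j\ln^2(1-p_j) - \big(\sum_{j}p_j\ln(1-p_j)\big)^2}}_{=:\ \vartheta_{Ex}(h)}\Big). \tag{11}$$

Again, the last factor becomes 1 in the i.i.d.-case as $\vartheta_{Ex}(h) = 0$, and $\vartheta_{Ex}(h) = \kappa(h)$ for NDARMA processes (Appendix A.1).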

In Equations (9)–(11), the notations $\vartheta_G(h)$, $\vartheta_{En}(h)$ and $\vartheta_{Ex}(h)$ have been introduced (see the respective expressions under the curly brackets) to highlight the similar structure of the asymptotic variances and to locate the effect of serial dependence. In principle, one might use $\vartheta_G(h)$, $\vartheta_{En}(h)$ and $\vartheta_{Ex}(h)$ as measures of serial dependence in categorical time series, although their definitions are probably too complex for practical use. In Section 3.2, when analyzing the bias of $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$, analogous relations to the κ-measures defined in Section 1 are established.
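In the i.i.d. case (all ϑ-terms equal to 0), Equations (9)–(11) are delta-method variances of the form $\boldsymbol{g}^\top\big(\operatorname{diag}(\boldsymbol{p}) - \boldsymbol{p}\boldsymbol{p}^\top\big)\boldsymbol{g}/T$, where $\boldsymbol{g}$ is the gradient of the respective measure. The following Python sketch uses this form and multiplies it by a user-supplied factor that stands for $1 + 2\sum_h \vartheta(h)$. The names and the plug-in CI helper are hypothetical, and bias correction is ignored.

```python
import math


def _iid_quadratic_form(p, grad):
    """grad' (diag(p) - p p') grad, i.e. Equation (8) without serial terms, sandwiched."""
    a = sum(pj * gj * gj for pj, gj in zip(p, grad))
    b = sum(pj * gj for pj, gj in zip(p, grad))
    return a - b * b


def asymptotic_variance(p, T, measure, dependence_factor=1.0):
    """Delta-method variance of the sample measure 'G', 'En' or 'Ex'.

    dependence_factor stands for the last factor in Equations (9)-(11),
    1 + 2 * sum_h theta(h); it equals 1 for i.i.d. data.
    """
    m = len(p) - 1
    if measure == "G":
        grad = [-2.0 * (m + 1) / m * pj for pj in p]
    elif measure == "En":  # requires all p_j > 0 (zero problem)
        grad = [-(math.log(pj) + 1.0) / math.log(m + 1) for pj in p]
    elif measure == "Ex":  # requires all p_j < 1
        grad = [(math.log(1.0 - pj) + 1.0) / (m * math.log((m + 1) / m)) for pj in p]
    else:
        raise ValueError(f"unknown measure {measure!r}")
    return _iid_quadratic_form(p, grad) * dependence_factor / T


def plug_in_ci(nu_hat, p_hat, T, measure, dependence_factor=1.0, z=1.959963984540054):
    """Approximate two-sided 95% CI nu_hat -/+ z * sd, with p replaced by p_hat."""
    sd = math.sqrt(asymptotic_variance(p_hat, T, measure, dependence_factor))
    return nu_hat - z * sd, nu_hat + z * sd
```

The constant parts of the gradients cancel because the rows of $\operatorname{diag}(\boldsymbol{p}) - \boldsymbol{p}\boldsymbol{p}^\top$ sum to zero, so the results agree with the closed-form first factors of Equations (9)–(11).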

3.2. Asymptotic Bias

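In Appendix B.2, we express the variation measures $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ as centered quadratic polynomials (at least approximately), and subsequently derive a bias formula. For the sample Gini index, it follows that

$$E[\hat\nu_G] \approx \nu_G\,\Big(1 - \frac{1}{T}\Big(1 + 2\sum_{h=1}^{\infty}\kappa(h)\Big)\Big). \tag{12}$$

This formula was also derived by Weiß (2013), and it leads to the exact corrective factor $T/(T-1)$ in the i.i.d.-case. For $\hat\nu_{En}$ and $\hat\nu_{Ex}$, such bias formulae have not been available so far. However, from our derivations in Appendix B.2, we newly obtain that

$$E[\hat\nu_{En}] \approx \nu_{En} - \frac{m}{2T\ln(m+1)}\Big(1 + 2\sum_{h=1}^{\infty}\kappa^*(h)\Big). \tag{13}$$

In the i.i.d.-case, the last factor reduces to 1, and $\kappa^*(h) = \kappa(h)$ for NDARMA processes (Appendix A.1). Comparing Equations (12) and (13), we see that the effect of serial dependence on the bias is always expressed in terms of a κ-measure: the ordinary $\kappa(h)$ (Equation (3)) for the Gini index, and the modified $\kappa^*(h)$ (Equation (4)) for the entropy. For the extropy, it turns out that the newly proposed κ-measure from Equation (6) takes this role:

$$E[\hat\nu_{Ex}] \approx \nu_{Ex} - \frac{1}{2T\,m\ln\frac{m+1}{m}}\Big(1 + 2\sum_{h=1}^{\infty}\kappa^{\star}(h)\Big). \tag{14}$$

In the i.i.d.-case, the last factor again reduces to 1, and $\kappa^{\star}(h) = \kappa(h)$ also holds for NDARMA processes (Appendix A.1). Altogether, Equations (12)–(14) show a common structure regarding the effect of serial dependence. Furthermore, the computed bias corrections imply the correspondences $\nu_G \leftrightarrow \kappa(h)$, $\nu_{En} \leftrightarrow \kappa^*(h)$ and $\nu_{Ex} \leftrightarrow \kappa^{\star}(h)$. The sample versions of $\kappa(h)$, $\kappa^*(h)$ and $\kappa^{\star}(h)$ are analyzed later in Section 5.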
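Solving Equations (12)–(14) for the population value gives simple bias corrections. In the sketch below, the function name is hypothetical. The argument `dependence_sum` stands for $\sum_{h\ge1}$ of the relevant κ-measure; it is 0 for i.i.d. data and would otherwise have to be estimated, e.g. by truncating the sum. How to choose such a truncation is left open here.

```python
import math


def bias_corrected(nu_hat, m, T, measure, dependence_sum=0.0):
    """Invert the bias approximations (12)-(14) for a sample dispersion value nu_hat.

    dependence_sum = sum_{h>=1} kappa(h) for 'G', kappa*(h) for 'En' and kappa_star(h)
    for 'Ex' (0 for i.i.d. data).
    """
    f = 1.0 + 2.0 * dependence_sum
    if measure == "G":   # E[nu_hat] ~ nu_G * (1 - f / T); f = 1 gives the factor T / (T - 1)
        return nu_hat / (1.0 - f / T)
    if measure == "En":  # E[nu_hat] ~ nu_En - m f / (2 T ln(m + 1))
        return nu_hat + m * f / (2.0 * T * math.log(m + 1))
    if measure == "Ex":  # E[nu_hat] ~ nu_Ex - f / (2 T m ln((m + 1) / m))
        return nu_hat + f / (2.0 * T * m * math.log((m + 1) / m))
    raise ValueError(f"unknown measure {measure!r}")
```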

3.3. Asymptotic Properties for Uniform Distribution

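The asymptotic normality established in Section 3.1 does not apply to the deterministic case of a one-point distribution, and we also have to exclude the boundary case of a uniform distribution $\boldsymbol{p} = \boldsymbol{u} := \big(\tfrac{1}{m+1}, \ldots, \tfrac{1}{m+1}\big)^\top$. As shown in Appendix B.2, the asymptotic distribution of $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ in the uniform case is not a normal distribution but a quadratic-form one. All three statistics can be related to Pearson's $\chi^2$-statistic:

$$T\,m\,\big(1 - \hat\nu_G\big) \;\approx\; 2T\ln(m+1)\,\big(1 - \hat\nu_{En}\big) \;\approx\; 2T\,m^2\ln\tfrac{m+1}{m}\,\big(1 - \hat\nu_{Ex}\big) \;\approx\; T\sum_{j=0}^{m}\frac{\big(\hat p_j - \frac{1}{m+1}\big)^2}{\frac{1}{m+1}}, \tag{15}$$

where the relation for $\hat\nu_G$ even holds exactly.

The actual asymptotic distribution can now be derived by applying Theorem 3.1 in Tan (1977) to the asymptotic result in Equation (8), which requires computing the eigenvalues of $(m+1)\,\boldsymbol{\Sigma}$. In special cases, however, one is not faced with a general quadratic-form distribution but with a $\chi^2$-distribution; this happens for NDARMA processes and, in particular, in the i.i.d.-case (see Weiß (2013)). Then, defining $c := 1 + 2\sum_{h=1}^{\infty}\kappa(h)$, each of the statistics in Equation (15) asymptotically follows c times a $\chi^2_m$-distribution (with c = 1 in the i.i.d.-case).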
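A test for uniformity based on Equation (15) can be sketched as follows. The $\chi^2_m$ survival function is computed from the series expansion of the regularized lower incomplete gamma function, so only the standard library is needed. All names are hypothetical, and the factor c (1 for i.i.d. data) has to be supplied or estimated separately.

```python
import math
from collections import Counter


def chi2_sf(x, k):
    """P(chi^2_k > x), via the series of the regularized lower incomplete gamma function."""
    if x <= 0:
        return 1.0
    a, y = k / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 0
    while term > total * 1e-17 and n < 100_000:
        n += 1
        term *= y / (a + n)   # y^n / (a (a+1) ... (a+n))
        total += term
    lower = math.exp(a * math.log(y) - y - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def uniformity_statistics(x, states):
    """The three statistics of Equation (15), computed from the sample x."""
    T, m = len(x), len(states) - 1
    counts = Counter(x)
    p = [counts[s] / T for s in states]
    nu_g = (m + 1) / m * (1 - sum(q * q for q in p))
    nu_en = -sum(q * math.log(q) for q in p if q > 0) / math.log(m + 1)
    nu_ex = sum((1 - q) * math.log(1 - q) for q in p if q < 1) / (m * math.log(m / (m + 1)))
    return {
        "G": T * m * (1 - nu_g),
        "En": 2 * T * math.log(m + 1) * (1 - nu_en),
        "Ex": 2 * T * m ** 2 * math.log((m + 1) / m) * (1 - nu_ex),
    }


def uniformity_p_values(x, states, c=1.0):
    """Approximate p-values under H0 (uniform), using statistic / c ~ chi^2_m."""
    m = len(states) - 1
    return {key: chi2_sf(stat / c, m) for key, stat in uniformity_statistics(x, states).items()}
```

Since the statistic for $\hat\nu_G$ coincides exactly with Pearson's $\chi^2$-statistic, comparing the two provides a convenient check of an implementation.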

4. Simulations and Applications

Section 4.1 presents some simulation results regarding the quality of the asymptotic approximations for $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ derived in Section 3. Section 4.2 then applies these measures within a longitudinal study about the most important goals in politics in Germany.

4.1. Finite-Sample Performance of Dispersion Measures

In applications, the normal and $\chi^2$-distributions derived in Section 3 are used as approximations to the true distributions of $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$. Therefore, the finite-sample performance of these approximations had to be analyzed, which was done by simulation (with 10,000 replications per scenario). In the tables provided in Appendix C, the simulated means (Table A1) and standard deviations (Table A2) of $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ are reported and compared to the respective asymptotic approximations. Then, a common application scenario was considered: the computation of two-sided 95% confidence intervals (CIs) for $\nu_G$, $\nu_{En}$ and $\nu_{Ex}$. Since the true parameter values are not known in practice, estimated parameters have to be plugged into the formulae for mean and variance given in Section 3. The simulated coverage rates reported in Table A3 refer to such plug-in CIs. For these simulations, we used either an i.i.d. data-generating process (DGP) or an NDARMA DGP (see Appendix A.1), namely a DMA(1) or a DAR(1) process. These were combined with the marginal distributions summarized in Table 1: the first two were used before by Weiß (2011, 2013), the third by Kvålseth (2011b), and the remaining ones are Lambda distributions $\boldsymbol{p}_\lambda$ (see Kvålseth 2011a).

Table 1.

Marginal distributions considered in Section 4.1 together with the corresponding dispersion values.

Finally, we used the results in Section 3.3 to test the null hypothesis (level 5%) of a uniform distribution ($\boldsymbol{p} = \boldsymbol{u}$) based on $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$. The considered alternatives were Lambda distributions $\boldsymbol{p}_\lambda$ with λ < 1, and the DGPs were as presented above. The simulated rejection rates are summarized in Table A4. Note that the required factor c equaled $(1+\phi_1)/(1-\phi_1)$ in the DAR(1) case (where $\kappa(h) = \phi_1^h$) and $1 + 2\varphi_0\varphi_1$ in the DMA(1) case, and was thus easy to estimate from the given time series data.

Let us now investigate the simulation results. Comparing the simulated mean values in Table A1 with the true dispersion values in Table 1, we observed a considerable negative bias for small sample sizes, which became even larger with increasing serial dependence. Fortunately, this bias is explained very well by the asymptotic bias formulae of Section 3.2 in all of the considered scenarios. With some limitations, this conclusion also applies to the standard deviations reported in Table A2; however, for small sample sizes and increasing serial dependence, the discrepancy between asymptotic and simulated values increased. As a result, the coverage rates in Table A3 were rather poor for sample size 100; reliable CIs generally required a sample size of at least 250. It should also be noted that the Gini index and the extropy performed very similarly and often slightly worse than the entropy. Finally, the rejection rates in Table A4 for the tests of uniformity showed similar sizes (columns referring to the uniform null; slightly above 0.05 for the smallest sample size) but slightly different power values: best for the extropy and worst for the entropy.

4.2. Application: Goals in Politics

The monitoring of public mood and political attitudes over time is important for decision makers as well as for social scientists. Since 1980, the German General Social Survey ("ALLBUS") has been carried out every second year by the "GESIS—Leibniz Institute for the Social Sciences" (exception: an additional survey took place in 1991 after the German reunification). Up to and including 1990, the survey was conducted only in Western Germany; from 1991 on, it covered all of Germany. In what follows, we consider the cumulative report for 1980–2016 in GESIS—Leibniz Institute for the Social Sciences (2018), and there the question "If you had to choose between these different goals, which one would seem to you personally to be the most important?". The four possible (nominal) answers are

- $s_0$: “To maintain law and order in this country”;

- $s_1$: “To give citizens more influence on government decisions”;

- $s_2$: “To fight rising prices”; and

- $s_3$: “To protect the right of freedom of speech”.

The sample sizes of this longitudinal study varied between 2795 and 3754 and are thus sufficiently large for the asymptotics derived in Section 3. If one only looks at the mode as a summary measure of location, there is not much change over time: from 1980 to 2000 and again in 2016, the largest share of respondents considered $s_0$ (law and order) the most important goal in politics, whereas $s_1$ (influence) was judged most important between 2002 and 2014.

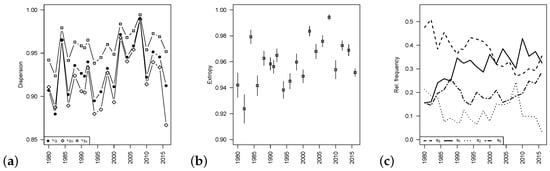

Much more fluctuation is visible in the dispersion measures in Figure 2a. Although the absolute values of the measures differ, the general shapes of the graphs are quite similar. The dispersion measures usually take rather large values (≥0.90 in most of the years), which shows that each of the possible goals is considered the most important one by a large part of the population. On the other hand, the different goals never have the same popularity. Even in 2008, when all measures give a value very close to 1, the corresponding uniformity tests clearly reject the null of a uniform distribution (p-values approximately 0 throughout).

Figure 2.

ALLBUS data from Section 4.2: (a) dispersion measures; (b) extropy with 95% CIs; and (c) relative frequencies; plotted against year of survey.

Let us now analyze the development of the importance of the political goals in more detail, using the extropy for illustration. Figure 2b shows the approximate 95% CIs over time (a bias correction has no visible effect because of the large sample sizes). There are phases where successive CIs overlap, and these are interrupted by breaks in the dispersion behavior. Such breaks happen, e.g., in 1984 (possibly related to a change of government in Germany in 1982/83), in 2002 (perhaps related to 9/11), or in 2008 and 2010 (Lehman bankruptcy and economic crisis). These changes in dispersion go along with reallocations of probability mass, as can be seen from Figure 2c. The frequency curves of $s_0$ and $s_1$ show the above-mentioned change in mode, where the switch back to $s_0$ (law and order) might be caused by the refugee crisis. In addition, the curve for $s_2$ (fight rising prices) helps to explain the pattern: it shows that $s_2$ was important for the respondents at the beginning of the 1980s (when Germany suffered from very high inflation) and in 2008 (economic crisis), but not otherwise. Altogether, the dispersion measures together with their approximate CIs give a very good summary of the distributional changes over time.

5. Measures of Signed Serial Dependence

Having discussed the analysis of the marginal properties of a categorical time series, we now turn to the analysis of serial dependence. In Section 1, two known measures of signed serial dependence were briefly surveyed: Cohen's $\kappa(h)$ in Equation (3) and its modification $\kappa^*(h)$ in Equation (4). In Section 3.2, we found a connection of these measures to $\nu_G$ and $\nu_{En}$, respectively. Motivated by the zero problem of $\kappa^*(h)$, a new type of modified κ was proposed in Equation (6), the measure $\kappa^{\star}(h)$, which turned out to be related to $\nu_{Ex}$.

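Replacing the (bivariate) probabilities in Equations (3), (4) and (6) with the respective (bivariate) relative frequencies $\hat p_j$ and $\hat p_{jj}(h)$ computed from $x_1, \ldots, x_T$ yields the sample versions $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat\kappa^{\star}(h)$ of these dependence measures. Knowledge of their asymptotic distribution is particularly relevant for the i.i.d.-case, because it allows us to test for significant serial dependence in the given time series. As shown by Weiß (2011, 2013), $\hat\kappa(h)$ then has an asymptotic normal distribution, and it holds approximately that

$$\hat\kappa(h) \;\overset{\text{approx.}}{\sim}\; \mathrm{N}\Big(-\frac{1}{T},\ \frac{1}{T}\Big(1 - \frac{1 + 2s_3(\boldsymbol{p}) - 3s_2(\boldsymbol{p})}{\big(1 - s_2(\boldsymbol{p})\big)^2}\Big)\Big). \tag{16}$$

The sample version of $\kappa^*(h)$ has a simpler asymptotic normal distribution (Weiß 2011, 2013):

$$\hat\kappa^*(h) \;\overset{\text{approx.}}{\sim}\; \mathrm{N}\Big(-\frac{1}{T},\ \frac{1}{mT}\Big), \tag{17}$$
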
but it suffers from the before-mentioned zero problem, especially for short time series.
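In practice, Equations (16) and (17) are used with $\boldsymbol{p}$ replaced by $\hat{\boldsymbol{p}}$ to obtain critical values for a test of serial independence at a given lag h. A minimal sketch with hypothetical names, for a two-sided test at the 5% level:

```python
import math


def null_moments(p, T, measure):
    """Approximate i.i.d.-null mean and variance of kappa_hat(h), Eq. (16), or kappa*_hat(h), Eq. (17)."""
    m = len(p) - 1
    s2 = sum(q ** 2 for q in p)
    s3 = sum(q ** 3 for q in p)
    if measure == "kappa":
        var = (1.0 - (1.0 + 2.0 * s3 - 3.0 * s2) / (1.0 - s2) ** 2) / T
    elif measure == "kappa_mod":
        var = 1.0 / (m * T)
    else:
        raise ValueError(f"unknown measure {measure!r}")
    return -1.0 / T, var


def critical_bounds(p, T, measure, z=1.959963984540054):
    """Acceptance region of the 5%-level test of serial independence at lag h."""
    mean, var = null_moments(p, T, measure)
    sd = math.sqrt(var)
    return mean - z * sd, mean + z * sd
```

A sample value outside the returned interval indicates significant serial dependence at the considered lag.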

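Thus, it remains to derive the asymptotics of the novel $\hat\kappa^{\star}(h)$ under the null of an i.i.d. sample $X_1, \ldots, X_T$. The starting point is an extension of the limiting result in Equation (8). Under appropriate mixing assumptions (see Section 3), Weiß (2013) derived the joint asymptotic distribution of all univariate and equal-bivariate relative frequencies, i.e. of all $\hat p_j$ and $\hat p_{jj}(h)$. After centering and multiplying by $\sqrt{T}$, their limit is the $2(m+1)$-dimensional normal distribution $\mathrm{N}(\boldsymbol{0}, \boldsymbol{\Sigma}^{(h)})$. The covariance matrix $\boldsymbol{\Sigma}^{(h)}$ consists of four blocks. The block of the $\hat p_j$ is given by Equation (8), whereas the blocks involving the $\hat p_{jj}(h)$ contain infinite sums of higher-order lagged joint probabilities; Weiß (2013) gives the full expressions (Equation (18)).

This rather complex general result simplifies greatly in special cases such as an NDARMA DGP (Weiß 2013) and, in particular, for an i.i.d. DGP, where the blocks have the entries

$$\sigma_{ij} = p_j(\delta_{i,j} - p_i),\qquad \sigma_{i,jj} = 2p_j^2(\delta_{i,j} - p_i),\qquad \sigma_{ii,jj} = \delta_{i,j}\,p_i^2(1 + 2p_i) - 3p_i^2p_j^2.$$

Here, $\sigma_{i,jj}$ denotes the asymptotic covariance of $\sqrt{T}\hat p_i$ and $\sqrt{T}\hat p_{jj}(h)$, and $\sigma_{ii,jj}$ that of $\sqrt{T}\hat p_{ii}(h)$ and $\sqrt{T}\hat p_{jj}(h)$.

Now, the asymptotic properties of $\hat\kappa^{\star}(h)$ can be derived, as done in Appendix B.3. $\hat\kappa^{\star}(h)$ is asymptotically normally distributed. Its mean and variance (Equations (19) and (20)) can be approximated by plugging the entries of $\boldsymbol{\Sigma}^{(h)}$ into a second-order and a first-order Taylor expansion of Equation (6), respectively. In the i.i.d.-case, we simply have

$$\hat\kappa^{\star}(h) \;\overset{\text{approx.}}{\sim}\; \mathrm{N}\Big(-\frac{1}{T},\ \frac{1}{T}\Big(\sum_{j=0}^{m}\frac{p_j^2(1 - 2p_j)}{(1-p_j)^2} + \Big(\sum_{j=0}^{m}\frac{p_j^2}{1-p_j}\Big)^2\Big)\Big). \tag{21}$$
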
Comparing Equations (16), (17) and (21), we see that all three measures have the same asymptotic bias $-1/T$, but their asymptotic variances generally differ. An exception to the latter statement is the case of a uniform distribution, where the asymptotic variances also coincide and equal $1/(mT)$ (see Appendix B.3).
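All three sample measures can be written as $\sum_j g_j\,(\hat p_{jj}(h) - \hat p_j^2)$ with weights $g_j = 1/(1-s_2(\boldsymbol{p}))$, $g_j = 1/(m\,p_j)$ and $g_j = 1/(1-p_j)$, respectively. Under the null, estimating these weights does not affect the asymptotic variance. For i.i.d. data, a delta-method computation then yields the variance $\frac{1}{T}\big(\sum_j p_j^2g_j^2 + (\sum_j p_j^2g_j)^2 - 2\sum_j p_j^3g_j^2\big)$. This computation is our own illustration, not taken from Appendix B.3. The Python sketch below uses hypothetical names and implements this single formula. With the three weight choices, it reproduces Equations (16), (17) and (21), and for the uniform distribution all three variances equal $1/(mT)$.

```python
def iid_null_variance(p, T, weights):
    """Delta-method variance of sum_j g_j (p_hat_jj(h) - p_hat_j^2) under i.i.d. data."""
    B = sum(q * q * g * g for q, g in zip(p, weights))    # sum p_j^2 g_j^2
    A = sum(q * q * g for q, g in zip(p, weights))        # sum p_j^2 g_j
    C = sum(q ** 3 * g * g for q, g in zip(p, weights))   # sum p_j^3 g_j^2
    return (B + A * A - 2.0 * C) / T


def kappa_weights(p, measure):
    """Weights g_j for kappa (Eq. (3)), kappa* (Eq. (4)) and kappa_star (Eq. (6))."""
    m = len(p) - 1
    if measure == "kappa":
        s2 = sum(q * q for q in p)
        return [1.0 / (1.0 - s2)] * len(p)
    if measure == "kappa_mod":   # requires all p_j > 0
        return [1.0 / (m * q) for q in p]
    if measure == "kappa_star":  # requires all p_j < 1
        return [1.0 / (1.0 - q) for q in p]
    raise ValueError(f"unknown measure {measure!r}")
```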

6. Simulations and Applications

Section 6.1 presents simulation results on the quality of the asymptotic approximations for $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat\kappa^{\star}(h)$ derived in Section 5, as well as on their power against different types of serial dependence. Two real-data examples are discussed in Sections 6.2 and 6.3: first an ordinal time series with rather strong positive dependence, then a nominal time series exhibiting negative dependencies.

6.1. Finite-Sample Performance of Serial Dependence Measures

In analogy to Section 4.1, we compared the finite-sample performance of the normal approximations in Equations (16), (17) and (21) via simulations. As power scenarios, we included not only NDARMA models but also the NegMarkov model described in Appendix A.2. These models were combined with marginal distributions from Table 1 plus one further distribution; for the NegMarkov model, the two marginals with very low dispersion could not be used. The full simulation results are available from the author upon request, but excerpts are shown in the sequel to illustrate the main findings. As before, all tables are collected in Appendix C.

First, we discuss the distributional properties of $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat\kappa^{\star}(h)$ under the null of an i.i.d. DGP. The common mean approximation $-1/T$ worked very well without exception. The quality of the approximations of the standard deviations is investigated in Table A5. Generally, the actual marginal dispersion had a strong influence. For large dispersion, asymptotic and simulated standard deviations agreed very well, with typically only very small deviations. For low dispersion, we found some discrepancy for small sample sizes and increasing h. Depending on the measure, the asymptotic approximation resulted in lower values (discrepancy up to 0.004) or in larger values (discrepancy up to 0.002) than the simulated ones. Consequently, when testing for serial independence, we expect the size to exceed the nominal 5%-level in the first case and to fall below it in the second case. This was roughly confirmed by the results in Table A6 (and by further simulations), where the sizes deviated from 0.05 by up to about 0.01.

A more complex picture was observed with regard to the power of $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat\kappa^{\star}(h)$ (see Table A6). For positive dependence (DMA(1) and DAR(1)), $\hat\kappa^*(h)$ performed best for marginal distributions close to uniformity, whereas $\hat\kappa(h)$ had superior power for lower marginal dispersion levels (and $\hat\kappa^{\star}(h)$ performed second-best). For negative dependence (NegMarkov), in contrast, $\hat\kappa^{\star}(h)$ was the optimal choice, whereas $\hat\kappa^*(h)$ might have rather poor power, especially for low dispersion. Thus, while both $\hat\kappa(h)$ and $\hat\kappa^{\star}(h)$ showed a more-or-less balanced performance with respect to positive and negative dependence, $\hat\kappa^*(h)$ showed a sharp contrast.

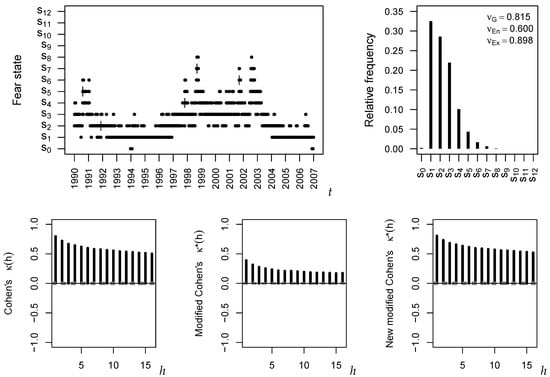

6.2. Application: Fear Index

The Volatility Index (VIX) serves as a benchmark for U.S. stock market volatility, and increasing VIX values are interpreted as indications of greater fear in the market (Hancock 2012). Hancock (2012) distinguished between the ordinal fear states given in Table 2. From the historical closing rates of the VIX offered by the website https://finance.yahoo.com/, a time series of daily fear states was computed for the trading days in the period 1990–2006 (before the beginning of the financial crisis). The obtained time series is plotted in Figure 3.

Table 2.

Definition of fear states for Volatility Index (VIX) according to Hancock (2012).

Figure 3.

Fear states time series plot and PMF (top); and plots of $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat\kappa^{\star}(h)$ (bottom).

As shown in the top panel of Figure 3, several of the fear states were never observed during 1990–2006; thus, zero frequencies affect the computation of $\hat\kappa^*(h)$ and $\hat\nu_{En}$. The marginal distribution itself deviates visibly from a uniform distribution, so it is reasonable that the dispersion measures $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ are clearly below 1. The PMF mainly concentrates on the low to moderate fear states; high anxiety (or more) occurred only on a few of the trading days. It is even more important to investigate the development of these fear states over time. While negative serial dependence would indicate a permanent fluctuation between the states, positive dependence would imply some kind of inertia regarding the respective states. The serial dependence structure is shown in the bottom panel of Figure 3, where the critical values for level 5% (dashed lines) were computed according to the asymptotics in Section 5. All measures indicated significantly positive dependence; thus, the U.S. stock market tends to stay in a state once attained. However, $\hat\kappa^*(h)$ (Figure 3, center) produced notably smaller values than $\hat\kappa(h)$ and $\hat\kappa^{\star}(h)$ (Figure 3, left and right). Since the time series plot, with its long runs of single states, implies a rather strong positive dependence, the values produced by $\hat\kappa^*(h)$ did not appear very plausible. Thus, $\hat\kappa(h)$ and $\hat\kappa^{\star}(h)$, which resulted in very similar values, appeared to be better interpretable in the given example. Note that the discrepancy between $\hat\kappa^*(h)$ and the other two measures went along with the discrepancy between $\hat\nu_{En}$ and the other two dispersion measures.

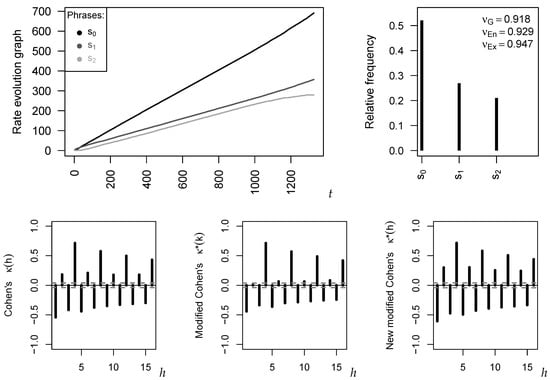

6.3. Application: Wood Pewee

Animal behavior studies are an integral part of research in biology and psychology. The time series example studied in the present section dates back to one of the pioneers of ethology, Wallace Craig. The data were originally presented by Craig (1943) and further analyzed (among others) in Chapter 6 of the work by Weiß (2018). They constitute a nominal time series of length T, where the three states $s_0$, $s_1$ and $s_2$ represent the different phrases in the morning twilight song of the Wood Pewee ("pee-ah-wee", "pee-oh" and "ah-di-dee", respectively). Since the range of a nominal time series lacks a natural order, a time series plot is not possible. Instead, Figure 4 shows a rate evolution graph, where the cumulative frequencies of the individual states are plotted against time t. From the roughly linear increase, we conclude that the time series behaves stably over time (Weiß 2018).
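A rate evolution graph only needs the cumulative counts of each state. A minimal Python sketch with a hypothetical function name returns one path per state, which can then be plotted against t with any plotting library.

```python
from typing import Dict, Hashable, List, Sequence


def rate_evolution(x: Sequence[Hashable], states: Sequence[Hashable]) -> Dict[Hashable, List[int]]:
    """Cumulative frequency of each state at times t = 1, ..., T (one path per state)."""
    counts = {s: 0 for s in states}
    paths: Dict[Hashable, List[int]] = {s: [] for s in states}
    for obs in x:
        counts[obs] += 1
        for s in states:
            paths[s].append(counts[s])
    return paths
```

Roughly straight paths, with slopes given by the marginal probabilities, indicate a stable marginal behavior.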

Figure 4.

Wood Pewee time series: rate evolution graph and PMF plot (top); and plots of $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat\kappa^{\star}(h)$ (bottom).

The dispersion measures $\hat\nu_G$, $\hat\nu_{En}$ and $\hat\nu_{Ex}$ all led to values between 0.90 and 0.95, indicating that all three phrases are frequently used (but not equally often) within the morning twilight song of the Wood Pewee. This is confirmed by the PMF plot in Figure 4, which shows some preference for one of the phrases. The serial dependence plots in the bottom panel of Figure 4 reveal a quite complex serial dependence structure, with both positive and negative dependence values and a periodic pattern. Positive values occur for even lags h (with particularly large values for multiples of 4), and negative values for odd lags. The positive values indicate a tendency to repeat a phrase, and such repetitions seem to be particularly likely after every fourth phrase. Negative values, in contrast, indicate a change of the phrase; e.g., the same phrase is rarely presented twice in a row. While $\hat\kappa(h)$ and $\hat\kappa^{\star}(h)$ gave a very clear (and similar) picture of the rhythmic structure, it was again $\hat\kappa^*(h)$ that produced some implausible values, e.g., the non-significant value at lag 2. Thus, both data examples indicate that $\hat\kappa^*(h)$ should be used with caution in practice (also because of the zero problem). A decision between $\hat\kappa(h)$ and $\hat\kappa^{\star}(h)$ is more difficult: $\hat\kappa(h)$ is well established and slightly advantageous for uncovering positive dependencies, whereas $\hat\kappa^{\star}(h)$ is computationally simpler and performs very well regarding negative dependencies.

7. Conclusions

This work discusses approaches for measuring dispersion and serial dependence in categorical time series. Asymptotic properties of the novel extropy measure for categorical dispersion are derived and compared to those of Gini index and entropy. Simulations showed that all three measures performed quite well, with slightly better coverage rates for the entropy but computational advantages for Gini index and extropy. The extropy was most reliable if testing the null hypothesis of a uniform distribution. The application and interpretation of these measures was illustrated with a longitudinal study about the most important political goals in Germany.

The analysis of the asymptotic bias of Gini index, entropy and extropy uncovered a relation between these three measures and three types of κ-measures for signed serial dependence. While two of these measures, namely $\kappa(h)$ and $\kappa^*(h)$, have already been discussed in the literature, the "κ-counterpart" to the extropy turned out to be a new type of modified Cohen's κ, denoted by $\kappa^{\star}(h)$. The asymptotics of $\hat\kappa^{\star}(h)$ were investigated and utilized for testing for serial dependence. A simulation study as well as two real-data examples (time series of fear states and song of the Wood Pewee) showed that $\hat\kappa^*(h)$ has several drawbacks, while both $\hat\kappa(h)$ and $\hat\kappa^{\star}(h)$ work very well in practice. The advantages of $\hat\kappa^{\star}(h)$ are computational simplicity and a superior performance regarding negative dependencies.

Funding

This research received no external funding.

Acknowledgments

The author thanks the editors and the three referees for their useful comments on an earlier draft of this article.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Some Models for Categorical Processes

This appendix provides a brief summary of those models for categorical processes that are used for simulations and illustrative computations in this article. More background on these and further models for categorical processes can be found in the book by Weiß (2018).

Appendix A.1. NDARMA Models for Categorical Processes

The NDARMA model (“new” discrete autoregressive moving-average model) was proposed by Jacobs and Lewis (1983), and its definition can be stated as follows (Weiß and Göb 2008):

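Let $(X_t)_{\mathbb{Z}}$ and $(\epsilon_t)_{\mathbb{Z}}$ be categorical processes with state space $\mathcal{S}$, where $(\epsilon_t)_{\mathbb{Z}}$ is i.i.d. with marginal distribution $\mathbf{p}$, and where $\epsilon_t$ is independent of $(X_s)_{s<t}$. Let

$$\mathbf{D}_t = (\alpha_{t,1},\ldots,\alpha_{t,p},\beta_{t,0},\ldots,\beta_{t,q}) \sim \mathrm{Mult}(1;\,\phi_1,\ldots,\phi_p,\varphi_0,\ldots,\varphi_q)$$

be i.i.d. multinomial random vectors, which are independent of $(\epsilon_t)_{\mathbb{Z}}$ and of $(X_s)_{s<t}$. Then, $(X_t)_{\mathbb{Z}}$ is said to be an NDARMA(p, q) process (and the cases $q=0$ and $p=0$ are referred to as a DAR(p) process and a DMA(q) process, respectively) if it follows the recursion

$$X_t = \alpha_{t,1}\cdot X_{t-1} + \ldots + \alpha_{t,p}\cdot X_{t-p} + \beta_{t,0}\cdot\epsilon_t + \ldots + \beta_{t,q}\cdot\epsilon_{t-q}.$$

(Here, if the state space is not numerically coded, we assume $0\cdot s = 0$, $1\cdot s = s$ and $s + 0 = s$ for each $s\in\mathcal{S}$.)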
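The recursion can be simulated directly: at each time t, a single multinomial draw decides which of the past observations $X_{t-1},\ldots,X_{t-p}$ or innovations $\epsilon_t,\ldots,\epsilon_{t-q}$ is copied. The Python sketch below assumes categories coded as $0,\ldots,m$, starts the process from i.i.d. draws and discards a burn-in period. The function name `simulate_ndarma` and the burn-in length are our own choices and are not taken from the cited papers.

```python
import numpy as np

def simulate_ndarma(T, p, phi, varphi, rng, burn_in=200):
    """Simulate T observations of an NDARMA(P, Q) process with categories 0..m.

    p      : marginal PMF of the i.i.d. innovations eps_t
    phi    : (phi_1, ..., phi_P), probabilities of copying X_{t-1}, ..., X_{t-P}
    varphi : (varphi_0, ..., varphi_Q), probabilities of copying eps_t, ..., eps_{t-Q}
    """
    phi = np.asarray(phi, dtype=float)
    varphi = np.asarray(varphi, dtype=float)
    P, Q = len(phi), len(varphi) - 1
    weights = np.concatenate([phi, varphi])            # parameters of D_t
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("phi and varphi must sum to 1")
    n = T + burn_in
    eps = rng.choice(len(p), size=n + Q, p=p)          # eps[t + Q] holds eps_t
    choice = rng.choice(P + Q + 1, size=n, p=weights)  # position of the 1 in D_t
    x = np.empty(n, dtype=int)
    for t in range(n):
        k = choice[t]
        if k < P:                                      # alpha_{t,k+1} = 1
            lag = k + 1
            # start-up only: without enough past values, fall back to eps_t
            x[t] = x[t - lag] if t >= lag else eps[t + Q]
        else:                                          # beta_{t,i} = 1, i = k - P
            x[t] = eps[t - (k - P) + Q]
    return x[burn_in:]

rng = np.random.default_rng(7)
p = np.array([0.1, 0.2, 0.3, 0.4])
x_dar = simulate_ndarma(5000, p, phi=[0.4], varphi=[0.6], rng=rng)      # DAR(1)
x_dma = simulate_ndarma(5000, p, phi=[], varphi=[0.75, 0.25], rng=rng)  # DMA(1)
```

For DAR(1), Cohen's κ at lag h equals $\phi_1^h$; for DMA(1), $\kappa(1)=\varphi_0\varphi_1$ (here 0.1875) and $\kappa(h)=0$ for $h\ge2$. For long simulated series, the sample κ should be close to these values.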

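NDARMA processes have several attractive properties. For example, $X_t$ and $\epsilon_t$ have the same stationary marginal distribution: $P(X_t = s_j) = p_j = P(\epsilon_t = s_j)$ for all $j = 0,\ldots,m$. Their serial dependence structure is characterized by a set of Yule–Walker-type equations for the serial dependence measure Cohen’s κ from Equation (3) (Weiß and Göb 2008):

$$\kappa(h) = \sum_{j=1}^{p}\phi_j\,\kappa(|h-j|) + \sum_{i=0}^{q-h}\varphi_{i+h}\,r(i) \quad\text{for } h\ge 1,$$

where the $r(i)$ satisfy $r(i) = \sum_{j=\max\{0,\,i-p\}}^{i-1}\phi_{i-j}\,r(j) + \varphi_i\,\mathbb{1}(0\le i\le q)$. It should be noted that NDARMA processes satisfy $\kappa(h)\ge 0$, i.e., they can only handle positive serial dependence. Another important property is that the bivariate distributions at lag h are $P(X_t = s_i, X_{t-h} = s_j) = \delta_{i,j}\,\kappa(h)\,p_j + (1-\kappa(h))\,p_i\,p_j$. This implies that $p_{jj}(h) - p_j^2 = \kappa(h)\,p_j(1-p_j)$ and, as a consequence, that all of the serial dependence measures mentioned in this work coincide for NDARMA processes: $\kappa(h) = \kappa^*(h) = \tilde\kappa(h)$.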
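The coincidence of the three κ-measures for NDARMA processes can be checked numerically. The Python sketch below builds the lag-h bivariate PMF $P(X_t=s_i,X_{t-h}=s_j)=\delta_{i,j}\,\kappa\,p_j+(1-\kappa)\,p_ip_j$ for an arbitrary marginal PMF and evaluates the three measures. It assumes the forms $\sum_j d_j/(1-\sum_j p_j^2)$, $\frac1m\sum_j d_j/p_j$ and $\sum_j d_j/(1-p_j)$ with $d_j = p_{jj}(h)-p_j^2$. As a contrast, a perturbed bivariate PMF with the same marginals, which is not of NDARMA type, is included; all numbers are illustrative.

```python
import numpy as np

def kappa_measures(p, P2):
    """Population kappa, kappa* and kappa-tilde from the marginal PMF p and the
    lag-h bivariate PMF P2[i, j] = P(X_t = s_i, X_{t-h} = s_j)."""
    m = len(p) - 1
    d = np.diag(P2) - p ** 2                     # d_j = p_jj(h) - p_j^2
    kappa = d.sum() / (1 - np.sum(p ** 2))
    kappa_star = np.sum(d / p) / m
    kappa_tilde = np.sum(d / (1 - p))
    return np.array([kappa, kappa_star, kappa_tilde])

p = np.array([0.1, 0.2, 0.3, 0.4])
k = 0.35                                         # any kappa(h) in [0, 1]
P2_ndarma = k * np.diag(p) + (1 - k) * np.outer(p, p)
print(kappa_measures(p, P2_ndarma))              # all three equal 0.35 (up to rounding)

# Same marginals, extra mass on (s_0, s_0) and (s_1, s_1): not of NDARMA type
B = np.zeros((4, 4))
B[:2, :2] = [[1, -1], [-1, 1]]                   # zero row and column sums
P2_other = np.outer(p, p) + 0.02 * B
print(kappa_measures(p, P2_other))               # approx. 0.0571, 0.1, 0.0472
```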

Finally, Weiß (2013) showed that an NDARMA process is $\phi$-mixing with exponentially decreasing weights, such that the CLT on p. 200 in Billingsley (1999) is applicable.

Appendix A.2. Markov Chains for Categorical Processes

A discrete-valued Markov process of order p is characterized by a “memory of length p”, in the sense that

$$P(X_t = x_t \mid X_{t-1} = x_{t-1}, X_{t-2} = x_{t-2}, \ldots) = P(X_t = x_t \mid X_{t-1} = x_{t-1}, \ldots, X_{t-p} = x_{t-p})$$

has to hold for all $x_t, x_{t-1}, \ldots \in \mathcal{S}$. In the case $p = 1$, $(X_t)_{\mathbb{Z}}$ is commonly called a Markov chain. If the transition probabilities of the Markov chain do not change with time t, i.e., if $P(X_t = s_i \mid X_{t-1} = s_j) = p_{i|j}$ for all t, it is said to be homogeneous (analogously for higher-order Markov processes). An example of a parsimoniously parametrized homogeneous Markov chain (Markov process) is the DAR(1) process (DAR(p) process) according to Appendix A.1, which always exhibits positive serial dependence.
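For the DAR(1) process of Appendix A.1, the recursion yields the transition probabilities $P(X_t=s_i\mid X_{t-1}=s_j)=\phi_1\,\delta_{i,j}+(1-\phi_1)\,p_i$. They do not depend on t, so the chain is homogeneous. The Python sketch below (helper names are hypothetical) builds this matrix and recovers the stationary distribution as a left eigenvector for the eigenvalue 1. It then evaluates Cohen's κ at lag h from the h-step transition matrix, which for DAR(1) should equal $\phi_1^h$.

```python
import numpy as np

def dar1_transition_matrix(p, phi1):
    """Row j, column i: P(X_t = s_i | X_{t-1} = s_j) = phi1*delta_ij + (1-phi1)*p_i."""
    p = np.asarray(p, dtype=float)
    return phi1 * np.eye(len(p)) + (1 - phi1) * np.tile(p, (len(p), 1))

def stationary_distribution(M):
    """Solve pi M = pi via the left eigenvector of M for the eigenvalue closest to 1."""
    vals, vecs = np.linalg.eig(M.T)
    v = np.real(vecs[:, np.argmin(np.abs(vals - 1))])
    return v / v.sum()

def cohen_kappa_chain(M, h):
    """Cohen's kappa at lag h for a stationary homogeneous Markov chain."""
    pi = stationary_distribution(M)
    pjj = pi * np.diag(np.linalg.matrix_power(M, h))   # P(X_t = X_{t-h} = s_j)
    return np.sum(pjj - pi ** 2) / (1 - np.sum(pi ** 2))

p = np.array([0.1, 0.2, 0.3, 0.4])
M = dar1_transition_matrix(p, phi1=0.4)
print(stationary_distribution(M))   # recovers p
print(cohen_kappa_chain(M, 3))      # 0.4**3 = 0.064 (up to rounding)
```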

A parsimoniously parametrized Markov model with negative serial dependence was proposed by Weiß (2011), the “Negative Markov model” (NegMarkov model). For a given probability vector and some , its transition probabilities are defined by

The resulting ergodic Markov chain has the stationary marginal distribution

As an example, if , then the become such that p is also a uniform distribution. However, the conditional distribution given is not uniform:

Appendix B. Proofs

Appendix B.1. Derivation of the inequality in Equation (7)

The first inequality in Equation (7) was shown by Lad et al. (2015). Using the inequality for from Love (1980), it follows for that

Consequently, we have

as well as

Appendix B.2. Derivations for Sample Dispersion Measures

For studying the asymptotic properties of the sample Gini index according to Equation (1), it is important to know that can be exactly rewritten as the centered quadratic polynomial

Since holds exactly, this representation immediately implies an exact way of bias computation, , where is approximately given by according to Equation (8). Furthermore, provided that , the resulting linear approximation together with the asymptotic normality of can be used to derive the asymptotic result in Equation (9) (Delta method). Here, has to be excluded, because then the linear term in Equation (A3) vanishes. Hence, in the boundary case , we end up with an asymptotic quadratic-form distribution instead of a normal one. In fact, it is easily seen that then coincides with Pearson’s $\chi^2$-statistic with respect to ; see Section 4 in Weiß (2013) for the asymptotics.
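Both facts used here are purely algebraic and can be checked numerically. Writing $\nu_{\rm G}=\frac{m+1}{m}(1-\sum_j p_j^2)$ (assumed to match Equation (1)) and expanding $\hat p_j^2 = p_j^2 + 2p_j(\hat p_j-p_j) + (\hat p_j - p_j)^2$ gives the centered representation $\hat\nu_{\rm G} = \nu_{\rm G} - \frac{m+1}{m}\big(2\sum_j p_j(\hat p_j-p_j) + \sum_j(\hat p_j-p_j)^2\big)$, which may differ in notation from Equation (A3). In the uniform case, $T\,m\,(1-\hat\nu_{\rm G})$ equals Pearson's statistic $\sum_j (N_j - T/(m+1))^2/(T/(m+1))$ computed from the category counts $N_j$. The sketch draws multinomial counts only for illustration; both identities hold for any sample.

```python
import numpy as np

def gini(q, m):
    """Normalized Gini index (m+1)/m * (1 - sum_j q_j^2)."""
    return (m + 1) / m * (1 - np.sum(q ** 2))

rng = np.random.default_rng(2019)
m, T = 3, 250
p = np.array([0.1, 0.2, 0.3, 0.4])                # illustrative true PMF
p_hat = rng.multinomial(T, p) / T

# Exact centered quadratic representation of the sample Gini index
centered = gini(p, m) - (m + 1) / m * (2 * np.sum(p * (p_hat - p)) + np.sum((p_hat - p) ** 2))
print(gini(p_hat, m), centered)                   # equal up to rounding

# Uniform boundary case: T*m*(1 - sample Gini) equals Pearson's X^2 vs. uniform
u = np.full(m + 1, 1 / (m + 1))
counts = rng.multinomial(T, u)
expected = T * u
pearson = np.sum((counts - expected) ** 2 / expected)
print(pearson, T * m * (1 - gini(counts / T, m)))  # equal up to rounding
```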

For the entropy in Equation (2), no exact polynomial representation such as Equation (A3) exists, so a Taylor approximation has to be used instead:

Apart from that, one can proceed as before, and an approximate bias formula follows from

For , the linear approximation implied by Equation (A4) can be used to establish the asymptotic normality of with variance

see also the results in Blyth (1959) and Weiß (2013). Note that, in the i.i.d. case, where , one computes

Finally, in the boundary case , the linear term vanishes again, such that

equals Pearson’s $\chi^2$-statistic.
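In the i.i.d. case, $V[\hat p_j]=p_j(1-p_j)/T$, and the second derivative of $-p\ln p$ is $-1/p$. The second-order Taylor approximation therefore gives the bias $-\frac{1}{2T\ln(m+1)}\sum_j(1-p_j) = -\frac{m}{2T\ln(m+1)}$ for the normalized entropy, which is the classical Miller–Madow correction rescaled by $1/\ln(m+1)$. The following Monte Carlo sketch compares this approximation with the simulated bias. The PMF, T, number of replications and seed are arbitrary illustrative choices.

```python
import numpy as np

def norm_entropy(P, m):
    """Normalized entropy along the last axis, with 0 * log(0) := 0."""
    safe = np.where(P > 0, P, 1.0)
    return -np.sum(P * np.log(safe), axis=-1) / np.log(m + 1)

rng = np.random.default_rng(1)
p = np.array([0.1, 0.2, 0.3, 0.4])
m, T, reps = len(p) - 1, 100, 20000
P_hat = rng.multinomial(T, p, size=reps) / T      # reps i.i.d. samples of length T

sim_bias = norm_entropy(P_hat, m).mean() - norm_entropy(p, m)
approx_bias = -m / (2 * T * np.log(m + 1))
corrected = norm_entropy(P_hat, m) - approx_bias  # bias-corrected estimates
print(sim_bias, approx_bias, corrected.mean() - norm_entropy(p, m))
```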

Analogous derivations apply to the extropy in Equation (5). Starting from the Taylor approximation

it follows that

For , the linear approximation implied by Equation (A5) can be used to establish the asymptotic normality of with variance

Note that, in the i.i.d. case, where , one computes

as before. Finally, in the boundary case , the linear term vanishes again, such that

equals Pearson’s $\chi^2$-statistic.
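The three bias approximations share one structure, and this structure is what links each dispersion measure to one κ-measure. The following sketch assumes that $T\,V[\hat p_j]\to\sigma_{jj}=p_j(1-p_j)+2\sum_{h\ge1}\big(p_{jj}(h)-p_j^2\big)$, the usual long-run variance of a relative frequency under summable serial dependence; the notation of Equation (8) may differ. It further uses $\kappa(h)=\sum_j d_j(h)/(1-\sum_j p_j^2)$, $\kappa^*(h)=\frac1m\sum_j d_j(h)/p_j$ and $\tilde\kappa(h)=\sum_j d_j(h)/(1-p_j)$ with $d_j(h)=p_{jj}(h)-p_j^2$. Writing $\nu_{\rm G}$, $\nu_{\rm En}$, $\nu_{\rm Ex}$ for the normalized Gini index, entropy and extropy, each has the form $c\sum_j f(p_j)$ plus a constant. A second-order Taylor expansion then gives $E[\hat\nu]-\nu\approx\frac{c}{2T}\sum_j f''(p_j)\,\sigma_{jj}$, hence:

$$
\begin{aligned}
\text{Gini index } \big(c=\tfrac{m+1}{m},\ f(p)=-p^2\big):\quad & E[\hat\nu_{\rm G}]-\nu_{\rm G} \approx -\frac{m+1}{mT}\sum_{j=0}^{m}\sigma_{jj} = -\frac{\nu_{\rm G}}{T}\Big(1+2\sum_{h\ge1}\kappa(h)\Big),\\
\text{entropy } \big(c=\tfrac{1}{\ln(m+1)},\ f(p)=-p\ln p\big):\quad & E[\hat\nu_{\rm En}]-\nu_{\rm En} \approx -\frac{1}{2T\ln(m+1)}\sum_{j=0}^{m}\frac{\sigma_{jj}}{p_j} = -\frac{m}{2T\ln(m+1)}\Big(1+2\sum_{h\ge1}\kappa^*(h)\Big),\\
\text{extropy } \big(c=\tfrac{1}{m\ln\frac{m+1}{m}},\ f(p)=-(1-p)\ln(1-p)\big):\quad & E[\hat\nu_{\rm Ex}]-\nu_{\rm Ex} \approx -\frac{1}{2mT\ln\frac{m+1}{m}}\sum_{j=0}^{m}\frac{\sigma_{jj}}{1-p_j} = -\frac{1+2\sum_{h\ge1}\tilde\kappa(h)}{2mT\ln\frac{m+1}{m}}.
\end{aligned}
$$

For i.i.d. data, all κ-terms vanish. For the Gini index, the resulting bias $-\nu_{\rm G}/T$ is then exact, because the sample Gini index is a quadratic polynomial in the relative frequencies.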

Appendix B.3. Derivations for Measures of Signed Serial Dependence

We partition as , and we define

Then,

and

all other second-order derivatives equal 0. Thus, a second-order Taylor approximation of is given by

Hence, using Equation (18), it follows that

Furthermore, the Delta method implies that with

Note that, under the null of an i.i.d. DGP, we have the simplifications

Thus, the second-order Taylor approximation of then simplifies to

Furthermore,

This leads to Equation (21).
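A quick simulation can serve as a sanity check on such null approximations. For $\hat\kappa^*(h)$, our own delta-method calculation under i.i.d. data (not taken from Equation (21)) linearizes $m\,\hat\kappa^*(h)$ to $\frac{1}{T-h}\sum_t Y_t - 1$ with $Y_t=\sum_j \mathbb{1}(X_t=X_{t-h}=s_j)/p_j$. Under the null, the $Y_t$ have variance m and are serially uncorrelated, which suggests a standard deviation of about $1/\sqrt{mT}$. The Python sketch below compares this value with the simulated standard deviation. The helper name, PMF, T, number of replications and seed are illustrative assumptions, and summands with $\hat p_j=0$ are set to 0, as described for the zero problem.

```python
import numpy as np

def kappa_star_hat(X, m, h):
    """Sample kappa*(h) for each row of X (integer codes 0..m); summands with
    estimated p_j = 0 are set to 0 (workaround for the zero problem)."""
    total = np.zeros(X.shape[0])
    for j in range(m + 1):
        pj = np.mean(X == j, axis=1)
        pjj = np.mean((X[:, h:] == j) & (X[:, :-h] == j), axis=1)
        safe = np.where(pj > 0, pj, 1.0)
        total += np.where(pj > 0, (pjj - pj ** 2) / safe, 0.0)
    return total / m

rng = np.random.default_rng(3)
p = np.array([0.1, 0.2, 0.3, 0.4])
m, T, reps = len(p) - 1, 250, 4000
X = rng.choice(m + 1, size=(reps, T), p=p)        # reps i.i.d. series (null DGP)
k1 = kappa_star_hat(X, m, h=1)
print(k1.std(ddof=1), 1 / np.sqrt(m * T))         # simulated vs. approximate SD
```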

Appendix C. Tables

Table A1.

Asymptotic vs. simulated mean (M-a vs. M-s) of the sample Gini index, entropy and extropy for DGPs i.i.d., DMA(1) with parameter 0.25, and DAR(1) with parameter 0.40.

| Measure | Gini index | | | | | | Entropy | | | | | | Extropy | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| DGP | i.i.d. | | DMA(1), 0.25 | | DAR(1), 0.40 | | i.i.d. | | DMA(1), 0.25 | | DAR(1), 0.40 | | i.i.d. | | DMA(1), 0.25 | | DAR(1), 0.40 | |

| T | M-a | M-s | M-a | M-s | M-a | M-s | M-a | M-s | M-a | M-s | M-a | M-s | M-a | M-s | M-a | M-s | M-a | M-s |

| 100 | 0.970 | 0.970 | 0.967 | 0.967 | 0.957 | 0.957 | 0.969 | 0.968 | 0.965 | 0.964 | 0.954 | 0.954 | 0.982 | 0.982 | 0.980 | 0.980 | 0.975 | 0.975 | |

| 250 | 0.976 | 0.976 | 0.975 | 0.975 | 0.971 | 0.971 | 0.975 | 0.975 | 0.973 | 0.973 | 0.969 | 0.969 | 0.986 | 0.986 | 0.985 | 0.985 | 0.983 | 0.983 | |

| 500 | 0.978 | 0.978 | 0.977 | 0.977 | 0.975 | 0.975 | 0.977 | 0.977 | 0.976 | 0.976 | 0.974 | 0.974 | 0.987 | 0.987 | 0.987 | 0.987 | 0.985 | 0.985 | |

| 1000 | 0.979 | 0.979 | 0.979 | 0.979 | 0.978 | 0.978 | 0.978 | 0.978 | 0.978 | 0.978 | 0.977 | 0.977 | 0.988 | 0.988 | 0.987 | 0.987 | 0.987 | 0.987 | |

| 100 | 0.627 | 0.627 | 0.625 | 0.625 | 0.619 | 0.619 | 0.649 | 0.649 | 0.645 | 0.644 | 0.634 | 0.634 | 0.739 | 0.739 | 0.737 | 0.737 | 0.731 | 0.731 | |

| 250 | 0.631 | 0.631 | 0.630 | 0.630 | 0.627 | 0.627 | 0.655 | 0.655 | 0.654 | 0.654 | 0.649 | 0.649 | 0.743 | 0.743 | 0.742 | 0.742 | 0.739 | 0.739 | |

| 500 | 0.632 | 0.632 | 0.632 | 0.631 | 0.630 | 0.630 | 0.657 | 0.657 | 0.657 | 0.656 | 0.654 | 0.654 | 0.744 | 0.744 | 0.743 | 0.743 | 0.742 | 0.742 | |

| 1000 | 0.633 | 0.633 | 0.632 | 0.633 | 0.632 | 0.632 | 0.658 | 0.658 | 0.658 | 0.658 | 0.657 | 0.657 | 0.744 | 0.744 | 0.744 | 0.744 | 0.744 | 0.743 | |

| 100 | 0.759 | 0.759 | 0.756 | 0.756 | 0.749 | 0.749 | 0.756 | 0.755 | 0.752 | 0.751 | 0.741 | 0.741 | 0.842 | 0.842 | 0.840 | 0.840 | 0.835 | 0.835 | |

| 250 | 0.764 | 0.764 | 0.762 | 0.762 | 0.760 | 0.760 | 0.762 | 0.762 | 0.761 | 0.761 | 0.757 | 0.757 | 0.846 | 0.846 | 0.845 | 0.845 | 0.843 | 0.843 | |

| 500 | 0.765 | 0.765 | 0.765 | 0.765 | 0.763 | 0.763 | 0.764 | 0.764 | 0.764 | 0.764 | 0.762 | 0.762 | 0.847 | 0.847 | 0.846 | 0.847 | 0.845 | 0.845 | |

| 1000 | 0.766 | 0.766 | 0.766 | 0.766 | 0.765 | 0.765 | 0.766 | 0.765 | 0.765 | 0.765 | 0.764 | 0.764 | 0.847 | 0.847 | 0.847 | 0.847 | 0.847 | 0.847 | |

| 100 | 0.433 | 0.433 | 0.431 | 0.431 | 0.427 | 0.428 | 0.486 | 0.486 | 0.482 | 0.481 | 0.471 | 0.471 | 0.568 | 0.568 | 0.566 | 0.566 | 0.560 | 0.561 | |

| 250 | 0.436 | 0.436 | 0.435 | 0.435 | 0.433 | 0.434 | 0.492 | 0.492 | 0.491 | 0.491 | 0.487 | 0.487 | 0.572 | 0.572 | 0.571 | 0.571 | 0.569 | 0.569 | |

| 500 | 0.437 | 0.437 | 0.436 | 0.436 | 0.435 | 0.436 | 0.495 | 0.495 | 0.494 | 0.494 | 0.492 | 0.492 | 0.573 | 0.573 | 0.572 | 0.572 | 0.571 | 0.571 | |

| 1000 | 0.437 | 0.437 | 0.437 | 0.437 | 0.436 | 0.436 | 0.496 | 0.496 | 0.495 | 0.495 | 0.494 | 0.494 | 0.573 | 0.573 | 0.573 | 0.573 | 0.573 | 0.573 | |

| 100 | 0.743 | 0.743 | 0.740 | 0.740 | 0.733 | 0.733 | 0.764 | 0.764 | 0.760 | 0.759 | 0.749 | 0.749 | 0.827 | 0.827 | 0.824 | 0.824 | 0.819 | 0.819 | |

| 250 | 0.747 | 0.747 | 0.746 | 0.746 | 0.743 | 0.743 | 0.770 | 0.770 | 0.768 | 0.769 | 0.764 | 0.765 | 0.830 | 0.830 | 0.829 | 0.829 | 0.827 | 0.827 | |

| 500 | 0.749 | 0.749 | 0.748 | 0.748 | 0.747 | 0.747 | 0.772 | 0.772 | 0.771 | 0.772 | 0.769 | 0.769 | 0.831 | 0.831 | 0.831 | 0.831 | 0.830 | 0.830 | |

| 1000 | 0.749 | 0.749 | 0.749 | 0.749 | 0.748 | 0.748 | 0.773 | 0.773 | 0.773 | 0.773 | 0.772 | 0.772 | 0.832 | 0.832 | 0.832 | 0.832 | 0.831 | 0.831 | |

| 100 | 0.928 | 0.928 | 0.925 | 0.925 | 0.916 | 0.916 | 0.929 | 0.929 | 0.925 | 0.925 | 0.915 | 0.915 | 0.956 | 0.956 | 0.953 | 0.954 | 0.948 | 0.948 | |

| 250 | 0.934 | 0.934 | 0.932 | 0.932 | 0.929 | 0.929 | 0.936 | 0.936 | 0.934 | 0.934 | 0.930 | 0.930 | 0.959 | 0.959 | 0.958 | 0.958 | 0.956 | 0.956 | |

| 500 | 0.936 | 0.936 | 0.935 | 0.935 | 0.933 | 0.933 | 0.938 | 0.938 | 0.937 | 0.937 | 0.935 | 0.935 | 0.960 | 0.960 | 0.960 | 0.960 | 0.959 | 0.959 | |

| 1000 | 0.937 | 0.937 | 0.936 | 0.936 | 0.935 | 0.935 | 0.939 | 0.939 | 0.939 | 0.939 | 0.938 | 0.938 | 0.961 | 0.961 | 0.961 | 0.961 | 0.960 | 0.960 | |

Table A2.

Asymptotic vs. simulated standard deviation (S-a vs. S-s) of the sample Gini index, entropy and extropy for DGPs i.i.d., DMA(1) with parameter 0.25, and DAR(1) with parameter 0.40.

| Measure | Gini index | | | | | | Entropy | | | | | | Extropy | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| DGP | i.i.d. | | DMA(1), 0.25 | | DAR(1), 0.40 | | i.i.d. | | DMA(1), 0.25 | | DAR(1), 0.40 | | i.i.d. | | DMA(1), 0.25 | | DAR(1), 0.40 | |

| T | S-a | S-s | S-a | S-s | S-a | S-s | S-a | S-s | S-a | S-s | S-a | S-s | S-a | S-s | S-a | S-s | S-a | S-s |

| 100 | 0.017 | 0.019 | 0.020 | 0.023 | 0.027 | 0.032 | 0.017 | 0.020 | 0.020 | 0.024 | 0.027 | 0.033 | 0.011 | 0.012 | 0.012 | 0.014 | 0.016 | 0.020 | |

| 250 | 0.011 | 0.011 | 0.013 | 0.014 | 0.017 | 0.018 | 0.011 | 0.012 | 0.013 | 0.014 | 0.017 | 0.019 | 0.007 | 0.007 | 0.008 | 0.008 | 0.010 | 0.011 | |

| 500 | 0.008 | 0.008 | 0.009 | 0.009 | 0.012 | 0.012 | 0.008 | 0.008 | 0.009 | 0.009 | 0.012 | 0.013 | 0.005 | 0.005 | 0.006 | 0.006 | 0.007 | 0.008 | |

| 1000 | 0.006 | 0.006 | 0.006 | 0.007 | 0.008 | 0.009 | 0.006 | 0.006 | 0.006 | 0.007 | 0.008 | 0.009 | 0.003 | 0.003 | 0.004 | 0.004 | 0.005 | 0.005 | |

| 100 | 0.071 | 0.071 | 0.084 | 0.083 | 0.109 | 0.106 | 0.063 | 0.064 | 0.074 | 0.075 | 0.097 | 0.096 | 0.057 | 0.058 | 0.067 | 0.068 | 0.088 | 0.088 | |

| 250 | 0.045 | 0.045 | 0.053 | 0.053 | 0.069 | 0.068 | 0.040 | 0.040 | 0.047 | 0.047 | 0.061 | 0.061 | 0.036 | 0.036 | 0.043 | 0.043 | 0.055 | 0.055 | |

| 500 | 0.032 | 0.032 | 0.037 | 0.037 | 0.049 | 0.049 | 0.028 | 0.029 | 0.033 | 0.033 | 0.043 | 0.043 | 0.026 | 0.026 | 0.030 | 0.030 | 0.039 | 0.039 | |

| 1000 | 0.023 | 0.023 | 0.027 | 0.027 | 0.035 | 0.035 | 0.020 | 0.020 | 0.024 | 0.024 | 0.031 | 0.031 | 0.018 | 0.018 | 0.021 | 0.021 | 0.028 | 0.028 | |

| 100 | 0.058 | 0.058 | 0.068 | 0.068 | 0.088 | 0.086 | 0.053 | 0.054 | 0.062 | 0.063 | 0.081 | 0.081 | 0.042 | 0.043 | 0.049 | 0.050 | 0.064 | 0.065 | |

| 250 | 0.037 | 0.037 | 0.043 | 0.043 | 0.056 | 0.055 | 0.033 | 0.034 | 0.039 | 0.039 | 0.051 | 0.051 | 0.027 | 0.027 | 0.031 | 0.031 | 0.041 | 0.041 | |

| 500 | 0.026 | 0.026 | 0.030 | 0.030 | 0.039 | 0.039 | 0.024 | 0.024 | 0.028 | 0.028 | 0.036 | 0.036 | 0.019 | 0.019 | 0.022 | 0.022 | 0.029 | 0.029 | |

| 1000 | 0.018 | 0.018 | 0.021 | 0.021 | 0.028 | 0.028 | 0.017 | 0.017 | 0.020 | 0.020 | 0.025 | 0.025 | 0.013 | 0.013 | 0.016 | 0.016 | 0.020 | 0.020 | |

| 100 | 0.078 | 0.077 | 0.092 | 0.090 | 0.119 | 0.115 | 0.072 | 0.073 | 0.085 | 0.085 | 0.110 | 0.109 | 0.073 | 0.073 | 0.085 | 0.086 | 0.111 | 0.110 | |

| 250 | 0.049 | 0.049 | 0.058 | 0.058 | 0.075 | 0.075 | 0.046 | 0.046 | 0.054 | 0.054 | 0.070 | 0.070 | 0.046 | 0.046 | 0.054 | 0.054 | 0.070 | 0.071 | |

| 500 | 0.035 | 0.035 | 0.041 | 0.041 | 0.053 | 0.053 | 0.032 | 0.032 | 0.038 | 0.038 | 0.049 | 0.049 | 0.033 | 0.033 | 0.038 | 0.038 | 0.050 | 0.050 | |

| 1000 | 0.025 | 0.025 | 0.029 | 0.029 | 0.038 | 0.038 | 0.023 | 0.023 | 0.027 | 0.027 | 0.035 | 0.035 | 0.023 | 0.023 | 0.027 | 0.027 | 0.035 | 0.035 | |

| 100 | 0.065 | 0.064 | 0.076 | 0.075 | 0.099 | 0.096 | 0.056 | 0.057 | 0.066 | 0.067 | 0.086 | 0.086 | 0.048 | 0.048 | 0.056 | 0.056 | 0.073 | 0.073 | |

| 250 | 0.041 | 0.041 | 0.048 | 0.048 | 0.062 | 0.062 | 0.036 | 0.036 | 0.042 | 0.042 | 0.054 | 0.054 | 0.030 | 0.030 | 0.035 | 0.035 | 0.046 | 0.046 | |

| 500 | 0.029 | 0.029 | 0.034 | 0.034 | 0.044 | 0.044 | 0.025 | 0.025 | 0.029 | 0.029 | 0.038 | 0.038 | 0.021 | 0.021 | 0.025 | 0.025 | 0.032 | 0.033 | |

| 1000 | 0.020 | 0.020 | 0.024 | 0.024 | 0.031 | 0.031 | 0.018 | 0.018 | 0.021 | 0.021 | 0.027 | 0.027 | 0.015 | 0.015 | 0.018 | 0.018 | 0.023 | 0.023 | |

| 100 | 0.033 | 0.034 | 0.039 | 0.040 | 0.051 | 0.052 | 0.030 | 0.032 | 0.036 | 0.037 | 0.046 | 0.050 | 0.021 | 0.022 | 0.025 | 0.026 | 0.032 | 0.034 | |

| 250 | 0.021 | 0.021 | 0.025 | 0.025 | 0.032 | 0.032 | 0.019 | 0.019 | 0.022 | 0.023 | 0.029 | 0.030 | 0.013 | 0.013 | 0.016 | 0.016 | 0.020 | 0.021 | |

| 500 | 0.015 | 0.015 | 0.017 | 0.017 | 0.023 | 0.023 | 0.014 | 0.014 | 0.016 | 0.016 | 0.021 | 0.021 | 0.009 | 0.010 | 0.011 | 0.011 | 0.014 | 0.015 | |

| 1000 | 0.010 | 0.011 | 0.012 | 0.012 | 0.016 | 0.016 | 0.010 | 0.010 | 0.011 | 0.011 | 0.015 | 0.015 | 0.007 | 0.007 | 0.008 | 0.008 | 0.010 | 0.010 | |

Table A3.

Simulated coverage rate of 95% CIs for the Gini index, entropy and extropy, for DGPs i.i.d., DMA(1) with parameter 0.25, and DAR(1) with parameter 0.40.

| Coverage of | Gini index | | | Entropy | | | Extropy | | |

|---|---|---|---|---|---|---|---|---|---|

| T | i.i.d. | DMA(1), 0.25 | DAR(1), 0.40 | i.i.d. | DMA(1), 0.25 | DAR(1), 0.40 | i.i.d. | DMA(1), 0.25 | DAR(1), 0.40 |

| 100 | 0.895 | 0.893 | 0.899 | 0.904 | 0.901 | 0.905 | 0.892 | 0.890 | 0.897 | |

| 250 | 0.914 | 0.909 | 0.898 | 0.920 | 0.915 | 0.905 | 0.912 | 0.907 | 0.896 | |

| 500 | 0.931 | 0.922 | 0.912 | 0.935 | 0.927 | 0.918 | 0.930 | 0.921 | 0.910 | |

| 1000 | 0.938 | 0.935 | 0.926 | 0.940 | 0.937 | 0.930 | 0.938 | 0.934 | 0.925 | |

| 100 | 0.936 | 0.926 | 0.908 | 0.937 | 0.929 | 0.915 | 0.931 | 0.928 | 0.911 | |

| 250 | 0.944 | 0.941 | 0.933 | 0.945 | 0.942 | 0.937 | 0.944 | 0.941 | 0.935 | |

| 500 | 0.947 | 0.946 | 0.941 | 0.947 | 0.946 | 0.943 | 0.947 | 0.946 | 0.941 | |

| 1000 | 0.949 | 0.947 | 0.944 | 0.949 | 0.948 | 0.946 | 0.949 | 0.947 | 0.945 | |

| 100 | 0.931 | 0.920 | 0.904 | 0.936 | 0.926 | 0.918 | 0.930 | 0.919 | 0.901 | |

| 250 | 0.941 | 0.938 | 0.930 | 0.943 | 0.943 | 0.937 | 0.940 | 0.937 | 0.929 | |

| 500 | 0.945 | 0.945 | 0.940 | 0.946 | 0.946 | 0.943 | 0.944 | 0.944 | 0.939 | |

| 1000 | 0.948 | 0.947 | 0.945 | 0.948 | 0.948 | 0.947 | 0.948 | 0.947 | 0.945 | |

| 100 | 0.941 | 0.925 | 0.904 | 0.938 | 0.925 | 0.905 | 0.938 | 0.931 | 0.913 | |

| 250 | 0.947 | 0.939 | 0.929 | 0.943 | 0.940 | 0.931 | 0.948 | 0.942 | 0.934 | |

| 500 | 0.949 | 0.946 | 0.941 | 0.948 | 0.946 | 0.942 | 0.950 | 0.947 | 0.943 | |

| 1000 | 0.952 | 0.945 | 0.946 | 0.949 | 0.946 | 0.947 | 0.949 | 0.946 | 0.947 | |

| 100 | 0.933 | 0.922 | 0.904 | 0.938 | 0.928 | 0.915 | 0.939 | 0.923 | 0.906 | |

| 250 | 0.945 | 0.939 | 0.931 | 0.947 | 0.942 | 0.935 | 0.944 | 0.940 | 0.931 | |

| 500 | 0.946 | 0.945 | 0.941 | 0.947 | 0.946 | 0.943 | 0.947 | 0.945 | 0.941 | |

| 1000 | 0.948 | 0.947 | 0.945 | 0.949 | 0.948 | 0.946 | 0.949 | 0.947 | 0.945 | |

| 100 | 0.909 | 0.894 | 0.884 | 0.920 | 0.908 | 0.901 | 0.906 | 0.890 | 0.877 | |

| 250 | 0.933 | 0.923 | 0.908 | 0.936 | 0.929 | 0.919 | 0.931 | 0.921 | 0.905 | |

| 500 | 0.938 | 0.935 | 0.927 | 0.940 | 0.938 | 0.933 | 0.937 | 0.934 | 0.925 | |

| 1000 | 0.943 | 0.941 | 0.939 | 0.945 | 0.943 | 0.942 | 0.943 | 0.941 | 0.938 | |

Table A4.

Rejection rate when testing the null hypothesis of a uniform distribution at the 5% level based on the sample Gini index, entropy and extropy. DGPs: i.i.d., DMA(1) with parameter 0.25, and DAR(1) with parameter 0.40, with marginal distribution .

| Measure | | Gini index | | | | | | Entropy | | | | | | Extropy | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| DGP | T | 1.00 | 0.98 | 0.96 | 0.94 | 0.92 | 0.90 | 1.00 | 0.98 | 0.96 | 0.94 | 0.92 | 0.90 | 1.00 | 0.98 | 0.96 | 0.94 | 0.92 | 0.90 |

| i.i.d. | 100 | 0.049 | 0.056 | 0.081 | 0.122 | 0.183 | 0.271 | 0.050 | 0.057 | 0.082 | 0.120 | 0.178 | 0.262 | 0.050 | 0.058 | 0.084 | 0.127 | 0.190 | 0.281 |

| | 250 | 0.052 | 0.068 | 0.132 | 0.250 | 0.421 | 0.605 | 0.051 | 0.068 | 0.129 | 0.243 | 0.407 | 0.590 | 0.051 | 0.068 | 0.132 | 0.252 | 0.423 | 0.608 |

| | 500 | 0.050 | 0.088 | 0.223 | 0.465 | 0.723 | 0.899 | 0.050 | 0.087 | 0.219 | 0.455 | 0.712 | 0.893 | 0.050 | 0.088 | 0.225 | 0.468 | 0.727 | 0.901 |

| | 1000 | 0.051 | 0.132 | 0.420 | 0.782 | 0.961 | 0.997 | 0.051 | 0.132 | 0.414 | 0.775 | 0.959 | 0.997 | 0.051 | 0.133 | 0.423 | 0.785 | 0.962 | 0.997 |

| DMA(1), 0.25 | 100 | 0.054 | 0.060 | 0.076 | 0.109 | 0.150 | 0.213 | 0.057 | 0.063 | 0.079 | 0.109 | 0.150 | 0.210 | 0.055 | 0.062 | 0.078 | 0.112 | 0.155 | 0.219 |

| | 250 | 0.052 | 0.065 | 0.108 | 0.194 | 0.317 | 0.467 | 0.052 | 0.067 | 0.108 | 0.190 | 0.309 | 0.455 | 0.052 | 0.066 | 0.110 | 0.197 | 0.322 | 0.474 |

| | 500 | 0.051 | 0.077 | 0.172 | 0.350 | 0.575 | 0.778 | 0.052 | 0.077 | 0.169 | 0.343 | 0.564 | 0.769 | 0.051 | 0.078 | 0.174 | 0.354 | 0.579 | 0.782 |

| | 1000 | 0.049 | 0.106 | 0.315 | 0.634 | 0.879 | 0.978 | 0.050 | 0.106 | 0.310 | 0.625 | 0.873 | 0.976 | 0.050 | 0.107 | 0.317 | 0.638 | 0.881 | 0.978 |

| DAR(1), 0.40 | 100 | 0.056 | 0.059 | 0.067 | 0.088 | 0.112 | 0.146 | 0.059 | 0.062 | 0.071 | 0.091 | 0.115 | 0.147 | 0.059 | 0.062 | 0.071 | 0.092 | 0.118 | 0.153 |

| | 250 | 0.053 | 0.060 | 0.085 | 0.132 | 0.199 | 0.294 | 0.054 | 0.062 | 0.085 | 0.131 | 0.195 | 0.286 | 0.054 | 0.062 | 0.087 | 0.135 | 0.205 | 0.301 |

| | 500 | 0.050 | 0.066 | 0.120 | 0.218 | 0.366 | 0.535 | 0.051 | 0.067 | 0.119 | 0.213 | 0.356 | 0.522 | 0.051 | 0.067 | 0.121 | 0.221 | 0.371 | 0.542 |

| | 1000 | 0.050 | 0.083 | 0.199 | 0.404 | 0.647 | 0.846 | 0.050 | 0.083 | 0.195 | 0.396 | 0.636 | 0.838 | 0.050 | 0.084 | 0.200 | 0.408 | 0.651 | 0.850 |

Table A5.

Asymptotic vs. simulated standard deviation (S-a vs. S-s) of $\hat\kappa(h)$, $\hat\kappa^*(h)$ and $\hat{\tilde\kappa}(h)$ for i.i.d. DGPs with .

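| Measure | $\hat\kappa(h)$ | | | | $\hat\kappa^*(h)$ | | | | $\hat{\tilde\kappa}(h)$ | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| T | S-a | S-s, h = 1 | S-s, h = 2 | S-s, h = 3 | S-a | S-s, h = 1 | S-s, h = 2 | S-s, h = 3 | S-a | S-s, h = 1 | S-s, h = 2 | S-s, h = 3 |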

| 100 | 0.064 | 0.064 | 0.065 | 0.066 | 0.058 | 0.056 | 0.057 | 0.057 | 0.074 | 0.075 | 0.077 | 0.078 | |

| 250 | 0.040 | 0.041 | 0.041 | 0.041 | 0.037 | 0.036 | 0.036 | 0.036 | 0.047 | 0.047 | 0.048 | 0.048 | |

| 500 | 0.029 | 0.029 | 0.029 | 0.029 | 0.026 | 0.026 | 0.026 | 0.026 | 0.033 | 0.033 | 0.033 | 0.034 | |

| 1000 | 0.020 | 0.020 | 0.020 | 0.020 | 0.018 | 0.018 | 0.018 | 0.018 | 0.023 | 0.023 | 0.024 | 0.024 | |

| 100 | 0.060 | 0.060 | 0.061 | 0.061 | 0.058 | 0.057 | 0.057 | 0.058 | 0.063 | 0.064 | 0.064 | 0.065 | |

| 250 | 0.038 | 0.038 | 0.038 | 0.038 | 0.037 | 0.036 | 0.036 | 0.037 | 0.040 | 0.040 | 0.040 | 0.040 | |

| 500 | 0.027 | 0.027 | 0.027 | 0.027 | 0.026 | 0.026 | 0.026 | 0.026 | 0.028 | 0.028 | 0.028 | 0.028 | |

| 1000 | 0.019 | 0.019 | 0.019 | 0.019 | 0.018 | 0.018 | 0.018 | 0.018 | 0.020 | 0.020 | 0.020 | 0.020 | |

| 100 | 0.058 | 0.058 | 0.059 | 0.059 | 0.058 | 0.057 | 0.058 | 0.058 | 0.058 | 0.058 | 0.059 | 0.059 | |

| 250 | 0.037 | 0.036 | 0.037 | 0.037 | 0.037 | 0.036 | 0.037 | 0.036 | 0.037 | 0.037 | 0.037 | 0.037 | |

| 500 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | 0.026 | |

| 1000 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | 0.018 | |

Table A6.

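Rejection rate (RR) when testing the null hypothesis of i.i.d. data at the 5% level based on $\hat\kappa$, $\hat\kappa^*$ and $\hat{\tilde\kappa}$. DGPs: i.i.d., DMA(1) with parameter 0.15, DAR(1) with parameter 0.15, and NegMarkov with parameter 0.75.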

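| DGP | i.i.d. | | | DMA(1), 0.15 | | | DAR(1), 0.15 | | | NegMarkov, 0.75 | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| T | RR for $\hat\kappa$ | RR for $\hat\kappa^*$ | RR for $\hat{\tilde\kappa}$ | RR for $\hat\kappa$ | RR for $\hat\kappa^*$ | RR for $\hat{\tilde\kappa}$ | RR for $\hat\kappa$ | RR for $\hat\kappa^*$ | RR for $\hat{\tilde\kappa}$ | RR for $\hat\kappa$ | RR for $\hat\kappa^*$ | RR for $\hat{\tilde\kappa}$ |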

| 100 | 0.047 | 0.042 | 0.051 | 0.484 | 0.537 | 0.400 | 0.594 | 0.634 | 0.507 | 0.478 | 0.235 | 0.521 | |

| 250 | 0.049 | 0.048 | 0.049 | 0.851 | 0.896 | 0.756 | 0.925 | 0.947 | 0.861 | 0.886 | 0.654 | 0.908 | |

| 500 | 0.049 | 0.049 | 0.050 | 0.988 | 0.994 | 0.961 | 0.997 | 0.999 | 0.989 | 0.995 | 0.936 | 0.997 | |

| 1000 | 0.049 | 0.048 | 0.049 | 1.000 | 1.000 | 0.999 | 1.000 | 1.000 | 1.000 | 1.000 | 0.999 | 1.000 | |

| 100 | 0.048 | 0.047 | 0.049 | 0.540 | 0.559 | 0.509 | 0.662 | 0.673 | 0.631 | 0.338 | 0.268 | 0.353 | |

| 250 | 0.050 | 0.049 | 0.049 | 0.903 | 0.916 | 0.878 | 0.960 | 0.966 | 0.947 | 0.725 | 0.636 | 0.735 | |

| 500 | 0.050 | 0.049 | 0.051 | 0.996 | 0.997 | 0.992 | 0.999 | 1.000 | 0.999 | 0.958 | 0.920 | 0.961 | |

| 1000 | 0.049 | 0.050 | 0.050 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.998 | 1.000 | |

| 100 | 0.048 | 0.047 | 0.048 | 0.577 | 0.569 | 0.573 | 0.699 | 0.688 | 0.697 | 0.275 | 0.269 | 0.276 | |

| 250 | 0.047 | 0.048 | 0.048 | 0.925 | 0.924 | 0.924 | 0.973 | 0.972 | 0.973 | 0.640 | 0.636 | 0.641 | |

| 500 | 0.049 | 0.049 | 0.049 | 0.998 | 0.998 | 0.998 | 1.000 | 1.000 | 1.000 | 0.918 | 0.916 | 0.918 | |

| 1000 | 0.050 | 0.050 | 0.050 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 | 0.998 | 0.998 | 0.998 | |

References

- Agresti, Alan. 2002. Categorical Data Analysis, 2nd ed. Hoboken: John Wiley & Sons, Inc.

- Billingsley, Patrick. 1999. Convergence of Probability Measures, 2nd ed. New York: John Wiley & Sons, Inc.

- Blyth, Colin R. 1959. Note on estimating information. Annals of Mathematical Statistics 30: 71–79.

- Cohen, Jacob. 1960. A coefficient of agreement for nominal scales. Educational and Psychological Measurement 20: 37–46.

- Craig, Wallace. 1943. The Song of the Wood Pewee, Myiochanes virens Linnaeus: A Study of Bird Music. New York State Museum Bulletin No. 334. Albany: University of the State of New York.

- GESIS—Leibniz Institute for the Social Sciences. 2018. ALLBUS 1980–2016 (German General Social Survey). ZA4586 Data File (Version 1.0.0). Cologne: GESIS Data Archive. (In German)

- Hancock, Gwendolyn D’Anne. 2012. VIX and VIX futures pricing algorithms: Cultivating understanding. Modern Economy 3: 284–94.

- Jacobs, Patricia A., and Peter A. W. Lewis. 1983. Stationary discrete autoregressive-moving average time series generated by mixtures. Journal of Time Series Analysis 4: 19–36.

- Kvålseth, Tarald O. 1995. Coefficients of variation for nominal and ordinal categorical data. Perceptual and Motor Skills 80: 843–47.

- Kvålseth, Tarald O. 2011a. The lambda distribution and its applications to categorical summary measures. Advances and Applications in Statistics 24: 83–106.

- Kvålseth, Tarald O. 2011b. Variation for categorical variables. In International Encyclopedia of Statistical Science. Edited by Miodrag Lovric. Berlin: Springer, pp. 1642–45.

- Lad, Frank, Giuseppe Sanfilippo, and Gianna Agro. 2015. Extropy: Complementary dual of entropy. Statistical Science 30: 40–58.

- Love, Eric Russell. 1980. Some logarithm inequalities. Mathematical Gazette 64: 55–57.

- Rao, C. Radhakrishna. 1982. Diversity and dissimilarity coefficients: A unified approach. Theoretical Population Biology 21: 24–43.

- Shannon, Claude Elwood. 1948. A mathematical theory of communication. Bell System Technical Journal 27: 379–423, 623–56.

- Shorrocks, Anthony F. 1978. The measurement of mobility. Econometrica 46: 1013–24.

- Tan, Wai-Yuan. 1977. On the distribution of quadratic forms in normal random variables. Canadian Journal of Statistics 5: 241–50.

- Weiß, Christian H. 2011. Empirical measures of signed serial dependence in categorical time series. Journal of Statistical Computation and Simulation 81: 411–29.

- Weiß, Christian H. 2013. Serial dependence of NDARMA processes. Computational Statistics and Data Analysis 68: 213–38.

- Weiß, Christian H. 2018. An Introduction to Discrete-Valued Time Series. Chichester: John Wiley & Sons, Inc.

- Weiß, Christian H., and Rainer Göb. 2008. Measuring serial dependence in categorical time series. AStA Advances in Statistical Analysis 92: 71–89.

1. The “zero problem” for $\hat\kappa^*$ described after Equation (4) occurred mainly for and for distributions with low dispersion such as to , in about 0.5% of the i.i.d. simulation runs. It increased with positive dependence, to about 2% of the DAR(1) simulation runs. This problem was circumvented by replacing all affected summands by 0.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).