Abstract

This paper improves a kernel-smoothed test of symmetry by combining it with a new class of asymmetric kernels called the generalized gamma kernels. It is demonstrated that the improved test statistic has a normal limit under the null of symmetry and is consistent under the alternative. A test-oriented smoothing parameter selection method is also proposed to implement the test. Monte Carlo simulations indicate superior finite-sample performance of the test statistic. It is worth emphasizing that this performance is achieved using the first-order normal limit and a small number of observations, despite the test’s nonparametric convergence rate and sample-splitting procedure.

Keywords:

asymmetric kernel; degenerate U-statistic; generalized gamma kernels; nonparametric kernel testing; smoothing parameter selection; symmetry test; two-sample goodness-of-fit test

MSC:

62G10; 62G20

JEL:

C12; C14

1. Introduction

Symmetry and conditional symmetry play a key role in numerous fields of economics and finance. Economists often focus on asymmetry in price adjustments (Bacon [1]), innovations in asset markets (Campbell and Hentschel [2]), or policy shocks (Clarida and Gertler [3]). In addition, mean-variance analysis in finance is consistent with investors’ portfolio decision making if and only if asset returns are elliptically distributed (e.g., Chamberlain [4]; Owen and Rabinovitch [5]; Appendix B in Chapter 4 of Ingersoll [6]). Moreover, conditional symmetry of the disturbance distribution is often a key regularity condition in regression analysis. In particular, convergence properties of adaptive estimation and robust regression estimation are typically explored under this condition. For the former, Bickel [7] and Newey [8] demonstrate that conditional symmetry of the disturbance distribution suffices for adaptive estimators to attain their efficiency bounds in the contexts of linear regression and moment-condition models, respectively. For the latter, Carroll and Welsh [9] warn that inference based on robust regression estimation can be invalid when the regression disturbance is asymmetrically distributed. Indeed, symmetry of the disturbance distribution is often a key assumption for consistency of parameter estimators in certain versions of robust regression estimation (e.g., Lee [10,11]; Zinde-Walsh [12]; Bondell and Stefanski [13]). Based on their simulation studies, Baldauf and Santos Silva [14] also argue that a lack of conditional symmetry in the disturbance distribution may lead to inconsistent parameter estimates in robust regression estimation.

In view of the importance of symmetry, a number of tests for symmetry and conditional symmetry have been proposed. The tests can be classified into kernel and non-kernel methods. Examples of the former include Fan and Gencay [15], Ahmad and Li [16], Zheng [17], Diks and Tong [18], and Fan and Ullah [19]. The latter include tests based on: (i) sample moments (Randles et al. [20]; Godfrey and Orme [21]; Bai and Ng [22]; Premaratne and Bera [23]); (ii) regression percentiles (Newey and Powell [24]); (iii) the martingale transformation (Bai and Ng [25]); (iv) empirical processes (Delgado and Escanciano [26]; Chen and Tripathi [27]); and (v) Neyman’s smooth test (Fang et al. [28]). Our focus is on the test by Fernandes, Mendes and Scaillet [29] (abbreviated as “FMS” hereafter). While this test can be viewed as a kernel-smoothed test, it has a unique feature. When a probability density function (“pdf”) is symmetric about zero, its shapes on the positive and negative sides must be mirror images of each other. Then, after estimating the pdfs on the positive and negative sides separately using the positive observations and the absolute values of the negative observations, respectively, FMS examine whether symmetry holds by gauging the closeness between the two density estimates. By this nature, we call the test the split-sample symmetry test (“SSST”) hereafter. One of the features of the SSST is that it relies on asymmetric kernels with support on $[0,\infty)$, such as the gamma (“G”) kernel by Chen [30]. Asymmetric kernel estimators are nonnegative and free of boundary bias, and they achieve the optimal convergence rate (in the mean integrated squared error sense) within the class of nonnegative kernel estimators. It is also reported (e.g., p. 597 of Gospodinov and Hirukawa [31]; p. 651 of FMS) that asymmetric kernel-based estimation and inference possess nice finite-sample properties. The split-sample approach is expected to result in some efficiency loss. However, it can attain the same convergence rate as the smoothed symmetry tests using symmetric kernels. Furthermore, unlike these tests, the SSST does not require continuity of density derivatives at the origin.
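The split into a positive side and a mirrored negative side can be sketched in a few lines of Python (NumPy assumed). The helper name `split_sample` is hypothetical, and assigning exact zeros to the positive side is our own convention; for a continuous U this choice is immaterial.

```python
import numpy as np

def split_sample(u):
    """Split observations into the positive side and the absolute values
    of the negative side (zeros go to the positive side in this sketch)."""
    u = np.asarray(u, dtype=float)
    x = u[u >= 0]   # positive-side observations
    y = -u[u < 0]   # absolute values of negative observations
    return x, y

rng = np.random.default_rng(0)
u = rng.standard_normal(50)
x, y = split_sample(u)
print(len(x), len(y))  # the two sub-sample sizes add up to N = 50
```

Both sub-samples live on $[0,\infty)$, which is why kernels with that support are the natural smoothing device here.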

The aim of this paper is to improve the SSST further by combining it with the generalized gamma (“GG”) kernels, a new class of asymmetric kernels with support on $[0,\infty)$ recently proposed by Hirukawa and Sakudo [32]. Our particular focus is on two special cases of the GG kernels, namely, the modified gamma (“MG”) and Nakagami-m (“NM”) kernels. While superior finite-sample performance of the MG kernel has been reported in the literature, the NM kernel is also expected to have an advantage when applied to the SSST. It is known that the finite-sample performance of a kernel density estimator depends on how close the shape of the underlying density is to that of the chosen kernel. As shown in Section 2, the NM kernel collapses to the half-normal pdf when smoothing is made at the origin, and this shape is likely to be close to the positive side of single-peaked symmetric distributions. We also pay particular attention to smoothing parameter selection. While existing articles on asymmetric kernel-smoothed tests (e.g., Fernandes and Grammig [33]; FMS) simply borrow choice methods based on optimality for density estimation, we tailor the idea of test-oriented smoothing parameter selection by Kulasekera and Wang [34,35] to the SSST.

The SSST with the GG kernels plugged in preserves all appealing properties documented in FMS. First, the SSST has a normal limit under the null of symmetry and it is also consistent under the alternative. Hence, unlike the tests by Delgado and Escanciano [26] and Chen and Tripathi [27], no simulated critical values are required. Second, Monte Carlo simulations indicate superior finite-sample performance of the SSST smoothed by the GG kernels. The performance is confirmed even when the entire sample size is 50, despite a nonparametric convergence rate and a sample-splitting procedure. Remarkably, the superior performance is based simply on first-order asymptotic results, and thus the assistance of bootstrapping appears to be unnecessary, unlike most of the smoothed tests employing fixed, symmetric kernels. This result complements previous findings on asymmetric kernel-smoothed tests by Fernandes and Grammig [33] and FMS.

The remainder of this paper is organized as follows. In Section 2 a brief review of a family of the GG kernels is provided. Section 3 proposes symmetry and conditional symmetry tests based on the GG kernels. Their limiting null distributions and power properties are also explored. As an important practical problem, Section 4 discusses the smoothing parameter selection. Our particular focus is on the choice method for power optimality. Section 5 conducts Monte Carlo simulations to investigate finite-sample properties of the test statistics. Section 6 summarizes the main results of the paper. Proofs are provided in the Appendix.

This paper adopts the following notational conventions: $\Gamma(a) = \int_0^\infty t^{a-1}\exp(-t)\,dt$ $(a > 0)$ is the gamma function; $\mathbf{1}\{\cdot\}$ signifies an indicator function; $\lfloor\cdot\rfloor$ denotes the integer part; $\|A\| = \{\mathrm{tr}(A^\top A)\}^{1/2}$ is the Euclidean norm of matrix A; and $c\,(>0)$ denotes a generic constant, the value of which varies from statement to statement. The expression “$X \stackrel{d}{\sim} Y$” reads “A random variable X obeys the distribution Y.” The expression “$a_n \sim b_n$” is used whenever $a_n/b_n \to 1$ as $n \to \infty$. Lastly, in order to describe different asymptotic properties of an asymmetric kernel estimator across positions of the design point relative to the smoothing parameter $b$ that shrinks toward zero, we denote by “interior x” and “boundary x” a design point x that satisfies $x/b \to \infty$ and $x/b \to \kappa$ for some $\kappa > 0$ as $b \to 0$, respectively.

2. Family of the GG Kernels: A Brief Review

Before proceeding, we provide a concise review of the family of the GG kernels. The family constitutes a new class of asymmetric kernels, and it consists of a specific functional form and a set of common conditions, as in Definition 1 below. The name “GG kernels” comes from the fact that the pdf of the GG distribution by Stacy [36] is chosen as the functional form. A major advantage of the family is that, for each asymmetric kernel generated from this class, asymptotic properties of the kernel estimators (e.g., density and regression estimators) can be derived by manipulating the conditions directly, as with symmetric kernels.

Definition 1.

(Hirukawa and Sakudo [32], Definition 1) Let be a continuous function of the design point x and the smoothing parameter b. For such , consider the pdf of , i.e.,

This pdf is said to be a family of the GG kernels if it satisfies each of the following conditions:

Condition 1.

, where is some constant, the function satisfies for some constants , and the connection between x and at is smooth.

Condition 2.

, and for , α satisfies for some constant . Moreover, connections of α and γ at , if any, are smooth.

Condition 3.

, for some constant .

Condition 4.

.

Condition 5.

, , where constants depend only on ν.

The family embraces the following two special cases¹. Putting

and in (1) generates the MG kernel

It can be found that this is equivalent to the one proposed by Chen [30] by recognizing that on p. 473 of Chen [30] and . The same and also yields the NM kernel

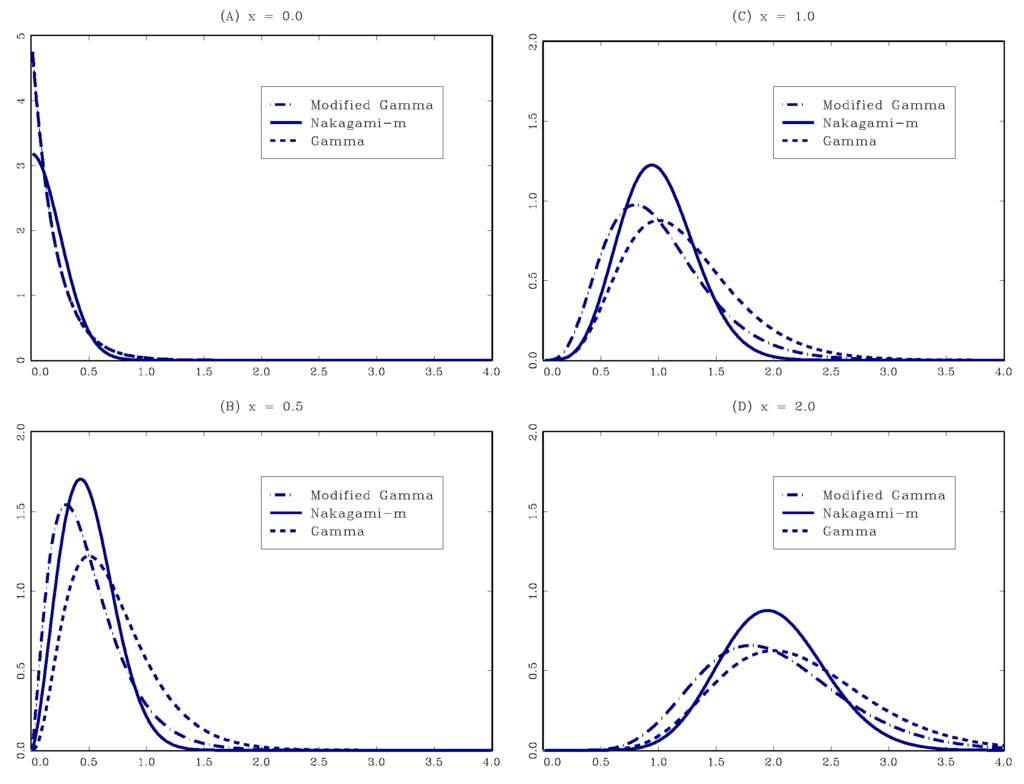

The GG kernels are designed to inherit all the appealing properties of the MG kernel. We conclude this section by summarizing these properties. The first two are basic. First, by construction, the GG kernels are free of boundary bias and always generate nonnegative density estimates everywhere. Second, the shape of each GG kernel varies with the position at which smoothing is made; in other words, the amount of smoothing changes in a locally adaptive manner. To illustrate this property, Figure 1 plots the shapes of the MG and NM kernels at four different design points () at which the smoothing is performed. For reference, the G kernel is also drawn in each panel. When smoothing is made at the origin (Panel (A)), the NM kernel collapses to a half-normal pdf, whereas the others reduce to exponential pdfs. As the design point moves away from the boundary (Panels (B–D)), the shape of each kernel becomes flatter and closer to symmetric. We should stress that Figure 1 is drawn with the value of the smoothing parameter fixed at . Unlike variable bandwidth methods for fixed, symmetric kernels (e.g., Abramson [37]), adaptive smoothing with these kernels is achieved by a single smoothing parameter, which makes them much more appealing in empirical work.
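To make the locally adaptive shape concrete, the Python sketch below codes the MG kernel in the form of Chen’s [30] modified gamma kernel: a gamma pdf with scale b and shape $\rho_b(x) = x/b$ for $x \ge 2b$ and $\rho_b(x) = \{x/(2b)\}^2 + 1$ for $0 \le x < 2b$. This parameterization is our assumption based on the stated equivalence with Chen [30], and the function names are hypothetical. At $x = 0$ the shape equals one, so the kernel reduces to the exponential pdf with mean b, in line with Panel (A) of Figure 1; the NM kernel is not coded because its formula is not reproduced here.

```python
import numpy as np
from scipy.stats import gamma

def rho(x, b):
    """Shape function of the modified gamma kernel (assumed Chen-type form).
    The two branches meet smoothly at x = 2b, where both equal 2."""
    x = np.asarray(x, dtype=float)
    return np.where(x >= 2 * b, x / b, 0.25 * (x / b) ** 2 + 1.0)

def mg_kernel(u, x, b):
    """Kernel with design point x evaluated at data point(s) u:
    the Gamma(shape=rho_b(x), scale=b) pdf."""
    return gamma.pdf(u, rho(x, b), scale=b)

b = 0.2
u = np.linspace(0.0, 5.0, 6)
print(mg_kernel(u, 0.0, b))  # at x = 0: exponential pdf exp(-u / b) / b
print(mg_kernel(u, 2.0, b))  # at x = 2: unimodal and closer to symmetric
```

A single b drives all design points, yet the effective shape changes with x through $\rho_b(x)$, which is the sense in which the smoothing is locally adaptive.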

Figure 1.

Shapes of the GG Kernels When .

The remaining three properties concern density estimates using the GG kernels. Third, when best implemented, each GG density estimator attains Stone’s [38] optimal convergence rate in the mean integrated squared error sense within the class of nonnegative kernel density estimators. Fourth, unlike many other asymmetric kernels including the G kernel, the leading bias of each GG density estimator contains only the second-order derivative of the true density over the interior region. Fifth, the variance of the GG estimator tends to decrease as the design point moves away from the boundary. This property is particularly advantageous for estimating distributions that have long tails with sparse data.

3. Tests for Symmetry and Conditional Symmetry Smoothed by the GG Kernels

3.1. SSST as a Special Case of Two-Sample Goodness-of-Fit Tests

This section proposes to combine the SSST with the GG kernels, explores the asymptotic properties of the test statistic, and finally extends the scope of the test to testing the null of conditional symmetry. The SSST can be characterized as a special case of the two-sample tests for equality of two unknown densities investigated by Anderson, Hall and Titterington [39]. Suppose that we are interested in testing symmetry of the distribution of a random variable $U$. Without loss of generality, we hypothesize that the distribution is symmetric about zero. If U has a pdf, then under the null its shapes on the positive and negative sides of the real line must be mirror images of each other. Let f and g be the pdfs to the right and left of the origin, respectively. Then, we would like to test the null hypothesis

$$H_0: f(u) = g(u) \text{ for almost all } u \in [0,\infty)$$

against the alternative

$$H_1: f(u) \neq g(u) \text{ on a set of positive Lebesgue measure.}$$

Accordingly, a natural test statistic should be built on the integrated squared error (“ISE”)

$$I = \int_0^\infty \{f(u) - g(u)\}^2\,du = \int_0^\infty \{f(u) - g(u)\}\,d\{F(u) - G(u)\},$$

where F and G are the cumulative distribution functions corresponding to f and g, respectively.
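As a quick numerical illustration of I, the Python sketch below (SciPy assumed; the densities are hypothetical choices) evaluates the integral by quadrature. When g is the mirror image of f, for example both are the half-normal pdf implied by a standard normal U, I is exactly zero; replacing g with an exponential pdf of mean 3 makes I strictly positive.

```python
import numpy as np
from scipy.integrate import quad
from scipy.stats import expon, halfnorm

def ise(f, g):
    """Integrated squared error I = int_0^inf {f(u) - g(u)}^2 du."""
    value, _ = quad(lambda u: (f(u) - g(u)) ** 2, 0.0, np.inf)
    return value

f = halfnorm.pdf                              # right side of N(0, 1)
g_sym = halfnorm.pdf                          # mirror image under symmetry
def g_asym(u):
    return expon.pdf(u, scale=3.0)            # hypothetical asymmetric left side

print(ise(f, g_sym), ise(f, g_asym))
```

The sample analog replaces f and g by kernel estimates and F − G by empirical measures, so I itself is never computed from known densities in practice.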

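The name of the SSST comes from the way a sample analog to I is constructed. A random sample of N observations $\{U_j\}_{j=1}^{N}$ is split into two sub-samples, namely, $\{X_i\}_{i=1}^{N_1} = \{U_j : U_j \ge 0\}$ and $\{Y_i\}_{i=1}^{N_2} = \{-U_j : U_j < 0\}$, where $N_1 + N_2 = N$. Given the sub-samples, f and g can be estimated using a GG kernel $K_{GG(x,b)}(\cdot)$ with the smoothing parameter b as

$$\hat f(x) = \frac{1}{N_1}\sum_{i=1}^{N_1} K_{GG(x,b)}(X_i) \quad\text{and}\quad \hat g(x) = \frac{1}{N_2}\sum_{i=1}^{N_2} K_{GG(x,b)}(Y_i),$$

respectively². Similarly, F and G are replaced with their empirical measures $F_{N_1}$ and $G_{N_2}$. In addition, because $N_1$ and $N_2$ are roughly equal under $H_0$, without loss of generality and for ease of exposition, we assume that N is even and that $N_1 = N_2 = n\,(= N/2)$. Using a short-handed notation finally yields the sample analog to I as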

Although we could use itself as the test statistic, the probability limit of gives rise to a non-vanishing centering term in the asymptotic null distribution. Because this term is likely to cause size distortions in finite samples, we use only to construct the test statistic. Now can be rewritten as

where and . Observe that is symmetric between and and that almost surely under . It follows that is a degenerate U-statistic, and thus we may apply a martingale central limit theorem (e.g., Theorem 1 of Hall [40]; Theorem 4.7.3 of Koroljuk and Borovskich [41]).
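To see where the U-statistic comes from, write $K_x(u)$ for a kernel with design point x evaluated at u, take kernel-average estimates of f and g from the positive-side sample $\{X_i\}$ and the mirrored negative-side sample $\{Y_i\}$, and set $N_1 = N_2 = n$. Then $\int (\hat f - \hat g)\,d(F_n - G_n)$ expands to $n^{-2}\sum_i\sum_j \{K_{X_j}(X_i) - K_{X_j}(Y_i) - K_{Y_j}(X_i) + K_{Y_j}(Y_i)\}$, and deleting the $i = j$ terms leaves a U-statistic-type double sum. The Python sketch below computes this off-diagonal part with the MG kernel coded in the form of Chen’s modified gamma kernel. The expansion, the $1/\{n(n-1)\}$ normalization and all names are our own assumptions for illustration; the exact standardization and variance estimator of Theorem 1 are not reproduced.

```python
import numpy as np
from scipy.stats import gamma

def mg_kernel(u, x, b):
    """Modified gamma kernel (assumed Chen-type form): the
    Gamma(shape=rho_b(x), scale=b) pdf at data u, design point x."""
    x = np.asarray(x, dtype=float)
    shape = np.where(x >= 2 * b, x / b, 0.25 * (x / b) ** 2 + 1.0)
    return gamma.pdf(u, shape, scale=b)

def offdiag_ise_stat(x, y, b):
    """Off-diagonal part of the double-sum sample analog of I (N1 = N2 = n).
    Entry [i, j] of each matrix is K_{design_j}(data_i)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    if len(y) != n:
        raise ValueError("this sketch assumes equal sub-sample sizes")
    k_xx = mg_kernel(x[:, None], x[None, :], b)  # K_{X_j}(X_i)
    k_yx = mg_kernel(y[:, None], x[None, :], b)  # K_{X_j}(Y_i)
    k_xy = mg_kernel(x[:, None], y[None, :], b)  # K_{Y_j}(X_i)
    k_yy = mg_kernel(y[:, None], y[None, :], b)  # K_{Y_j}(Y_i)
    h = k_xx - k_yx - k_xy + k_yy
    np.fill_diagonal(h, 0.0)                     # drop the i == j terms
    return h.sum() / (n * (n - 1))

rng = np.random.default_rng(0)
n, b = 200, 0.1
x = np.abs(rng.standard_normal(n))           # right side of N(0, 1)
y_null = np.abs(rng.standard_normal(n))      # symmetric case
y_alt = rng.exponential(scale=3.0, size=n)   # hypothetical asymmetric case
print(offdiag_ise_stat(x, y_null, b), offdiag_ise_stat(x, y_alt, b))
```

Under the null each summand has mean zero, which is the degeneracy exploited by the martingale central limit theorem; under the alternative the statistic settles near a smoothed version of I.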

Before describing the asymptotic properties of , we make two remarks. First, applying the idea of two-sample goodness-of-fit tests to symmetry testing is not new. Ahmad and Li [16] and Fan and Ullah [19] have also studied the symmetry test based on the closeness of two density estimates measured by the ISE. In our notation, they estimate densities using two samples, namely, the original entire sample and the one obtained by flipping the sign of each observation. Because each of these samples has support on the entire real line by construction, a standard symmetric kernel is employed for density estimation, unlike in the SSST. Second, if X and Y are drawn from two different distributions with support on $[0,\infty)$, then can be viewed as a pure two-sample goodness-of-fit test. It can be immediately applied to testing for equality of two unknown distributions of nonnegative economic and financial variables such as incomes, wages, short-term interest rates, and insurance claims.

To present the convergence properties of , we make the following assumptions.

Assumption 1.

Two random samples and are drawn independently from univariate distributions that have pdfs f and g with support on $[0,\infty)$, respectively.

Assumption 2.

f and g are twice continuously differentiable on $[0,\infty)$, and , , , .

Assumption 3.

The smoothing parameter satisfies as .

Assumption 4.

Let and be two independent copies of X and Y, respectively. Then, the following hold:

- (a)

- ; and .

- (b)

- ;; ;and , where is a kernel-specific constant given in Condition 5 of Definition 1.

Assumptions 1–3 are standard in the asymmetric kernel smoothing literature. Assumption 4, on the other hand, has a different flavor. Convergence results on the test statistic are built on several different moment approximations. While Definition 1 implies the statements in Lemma A2 in the Appendix, it is unclear whether the definition also admits approximations such as those in Assumption 4. The difficulty comes from the fact that, unlike with symmetric kernels, the roles of design points and data points are not interchangeable in asymmetric kernels. What makes the problem more complicated is that the functional forms of in the GG kernels are not fully specified in Definition 1. Because not all GG kernels may admit the moment approximations (a) and (b), we choose to make an extra assumption. Note that the MG and NM kernels fulfill Assumption 4, as documented in the next lemma.

Lemma 1.

If Assumptions 1–3 hold, then each of the MG and NM kernels satisfies Assumption 4.

The theorem below delivers the convergence properties of and provides a consistent estimator of its asymptotic variance.

Theorem 1.

Suppose that Assumptions 1–4 and hold.

- (i)

- Under , as , wherewhich reduces to under , and is a kernel-specific constant given in Condition 5 of Definition 1.

- (ii)

- A consistent estimator of is given by

We make a few remarks. First, it follows from Lemma 1 and Theorem 1 that the MG and NM kernels can be safely employed for the SSST, where values of for these kernels are . It also follows from Proposition 1 of FMS and Theorem 1 that the limiting null distributions of using the G and MG kernels coincide, as expected. Second, while a similar form of the asymptotic variance can be found in Proposition 1 of FMS, takes a more general form. Accordingly, the variance estimator is consistent under both $H_0$ and $H_1$. Third, it can be inferred from Theorem 1 that the test statistic becomes . As a consequence, the SSST is a one-sided test that rejects $H_0$ in favor of $H_1$ if the statistic exceeds $z_\alpha$, where $z_\alpha$ is the upper α-percentile of $N(0,1)$.
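Because the rejection rule is one-sided with standard normal critical values, it fits in a few lines of Python (SciPy assumed; `ssst_reject` is a hypothetical helper name). `norm.ppf(1 - alpha)` returns the upper α-percentile $z_\alpha$, about 1.645 at α = 0.05 and 1.282 at α = 0.10.

```python
from scipy.stats import norm

def ssst_reject(t_stat, alpha=0.05):
    """One-sided rule: reject H0 in favor of H1 when the standardized
    statistic exceeds z_alpha, the upper alpha-percentile of N(0, 1)."""
    z_alpha = norm.ppf(1.0 - alpha)
    return bool(t_stat > z_alpha)

print(round(norm.ppf(0.95), 3), round(norm.ppf(0.90), 3))  # 1.645 1.282
```

Large negative values never lead to rejection, reflecting that the ISE-based statistic drifts upward, not downward, under the alternative.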

The next proposition refers to consistency of the SSST. Observe that the power approaches one for local alternatives with convergence rates no faster than , as well as for fixed alternatives.

Proposition 1.

If Assumptions 1–4 hold, then under , as for any non-stochastic sequence satisfying .

3.2. SSST When Two Sub-Samples Have Unequal Sample Sizes

Convergence results in the previous section rely on the assumption that the two sub-samples have the same sample size, i.e., that $N_1 = N_2$, which has been maintained so far. In reality, $N_1 \neq N_2$ is often the case, in particular when the entire sample size is odd or when $H_1$ is true.

Handling this case requires more tedious calculations. When $N_1 \neq N_2$, can be rewritten as

Following Fan and Ullah [19], we deliver convergence results under the assumption that the two sample sizes $N_1$ and $N_2$ diverge at the same rate. The asymptotic variance of and its consistent estimate are also provided. Because the essential arguments are the same as those for Theorem 1 and Proposition 1, we omit the proofs of Theorem 2 and Proposition 2 and simply state the results. Observe that when $N_1 = N_2$, these results collapse to Theorem 1 and Proposition 1, respectively.

Theorem 2.

Suppose that Assumptions 1–4 and for some constant hold.

- (i)

- Under , as , wherewhich reduces to under .

- (ii)

- A consistent estimator of is given by

Proposition 2.

If Assumptions 1–4 and hold, then under , as for any non-stochastic sequence satisfying .

The next corollary is a natural outcome from Theorem 2 and comes from the fact that under , or holds. Because N could be odd in this context, n should read .

Corollary 1.

If Assumptions 1–4 and hold, then so that .

3.3. Extension to a Test for Conditional Symmetry

So far we have maintained the assumption that the random variable U is observable and has a distribution that is symmetric about zero. However, often U is unobservable or the axis of symmetry is not zero. The former is typical when we are interested in symmetry of the distribution of the disturbance conditional on regressors in regression analysis. In this scenario, the test is conducted after U is replaced with the residual. For the latter, the test should be based on location-adjusted observations, i.e., transformed observations with an estimate of the axis of symmetry (e.g., the sample mean or the sample median) subtracted from U. These aspects motivate us to generalize the SSST to the testing for conditional symmetry.

Following FMS, we consider testing for symmetry in the conditional distribution of with within a semiparametric framework. Specifically, for a parameter space and a function , it suffices to check whether the conditional distribution of is symmetric about for some . Observe that this is equivalent to testing whether there is such that the conditional distribution of is symmetric about zero.

However, implementing this type of testing strategy requires estimating the conditional pdf of nonparametrically. This is cumbersome, given the curse of dimensionality in and the need to choose yet another smoothing parameter. Instead, as in Zheng [17], Bai and Ng [25] and Delgado and Escanciano [26], we assume that there are a parameter space and a function that can attain symmetry of the marginal distribution of about zero for some . Given the dependence of V (and thus U) on , we can finally rewrite our testing scheme as one that tests, for a suitable parameter space Θ and a function , symmetry of the marginal distribution of about zero for some Θ.

Accordingly, the conditional symmetry test consists of the following two steps. First, we estimate given N observations and denote a consistent estimator of as . Second, the test is conducted using . As before, the entire sample is split into two sub-samples and . Then, the test statistics, namely, and for equal () and unequal () sample sizes, can be obtained by replacing in and with , respectively.
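The two steps can be sketched for a linear location model, assuming (hypothetically) that the residual is $y - \theta_0 - \theta_1 x$ with θ estimated by least squares, as in the simulation design of Section 5. The helper name, the coefficients 1 and 2, and the Student-t disturbance are illustrative choices only.

```python
import numpy as np

def residual_subsamples(y, x):
    """Step 1: least-squares fit of y on (1, x). Step 2: split the residuals
    into positive values and absolute values of negative ones."""
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return coef, resid[resid >= 0], -resid[resid < 0]

rng = np.random.default_rng(42)
N = 5000
x = rng.standard_normal(N)
u = rng.standard_t(df=5, size=N)   # hypothetical symmetric disturbance
y = 1.0 + 2.0 * x + u              # hypothetical coefficients
coef, pos, neg = residual_subsamples(y, x)
print(coef, len(pos), len(neg))
```

With an intercept in the regression the residuals sum to zero, so the two sub-samples have equal sums even though their sizes $N_1$ and $N_2$ generally differ; this is why the unequal-size statistics are the relevant ones in practice.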

Our remaining task is to demonstrate that there is no asymptotic cost in the test statistics with replaced by its estimator , as long as at a suitable rate of convergence. To control the convergence rate, we make Assumption 5 below. Observe that it allows for nonparametric rates of convergence; see Hansen [42], for instance, for uniform convergence rates of kernel estimators.

Assumption 5.

and uniformly over for some .

Theorem 3 below provides combinations of the shrinking rate q for b and the convergence rate r for that can establish the first-order asymptotic equivalence between () and () when two sub-samples have equal (unequal) sample sizes.

Theorem 3.

If Assumptions 1–5 hold, then under ,

as when and

as when , provided that belong to the set .

The set given in the theorem can be expressed as the triangular region formed by the corners , and on the plane. The theorem also indicates that, to avoid an additional cost from estimating , we must employ a sub-optimal smoothing parameter, i.e., undersmooth, as is the case with other kernel-smoothed tests. Moreover, FMS set and obtain the lower bound of r as . Indeed, the set provided in Theorem 3 overlaps with the one derived by FMS .

4. Smoothing Parameter Selection

How to choose the value of the smoothing parameter b is an important practical problem. Nonetheless, the issue appears not to have been well addressed in the literature on testing problems using asymmetric kernels. While Fernandes and Grammig [33] adopt a method inspired by Silverman’s [43] rule of thumb, FMS adjust the value chosen via cross-validation. Both methods choose the smoothing parameter value from the viewpoint of optimality for density estimation. Such choices cannot be justified in theory or practice, because estimation-optimal values need not be optimal for testing purposes. In contrast, there are a few works on test-oriented smoothing parameter selection. For the test of equality of two unknown regression curves, Kulasekera and Wang [34,35] analytically explore the idea of choosing the smoothing parameter value that maximizes the power while preserving the size. Gao and Gijbels [44] combine this idea with the Edgeworth expansion for a bootstrap specification test of parametric regression models.

Below we tailor the procedure by Kulasekera and Wang [35] to the SSST. For a realistic setup, we consider only the case of $N_1 \neq N_2$. Their basic idea stems from sub-sampling. Without loss of generality, assume that and are ordered samples. Then, the entire sample can be split into M sub-samples, where is a non-stochastic sequence that satisfies as . Given such M and , the mth sub-sample is defined as . This sub-sample yields the analogues to (3) and (4) as
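The exact sub-sample definition is not reproduced here. One natural construction for ordered samples, which we assume purely for illustration (it may differ from Kulasekera and Wang’s indexing), deals the order statistics out in turn, so that every sub-sample spans the whole range of the data. The helper name is hypothetical.

```python
import numpy as np

def interleaved_subsamples(sample, M):
    """Sort the sample and deal the order statistics into M sub-samples:
    sub-sample m (0-based) takes the m-th, (m+M)-th, ... order statistics,
    keeping floor(len(sample) / M) points each."""
    s = np.sort(np.asarray(sample, dtype=float))
    k = len(s) // M                      # common sub-sample size
    return [s[m : m + k * M : M] for m in range(M)]

rng = np.random.default_rng(3)
x_subs = interleaved_subsamples(rng.exponential(size=103), M=4)
print([len(s) for s in x_subs])          # four sub-samples of 25 points each
```

Applying the same function to the other ordered sample with the same M gives paired sub-samples of different sizes when $N_1 \neq N_2$.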

and

where

It follows that the test statistic using the mth sub-sample becomes

Also denote the set of admissible values for as for some prespecified exponent and two constants . Moreover, let

where is the critical value for the size α test using the mth sub-sample. We pick the power-maximized , and the smoothing parameter value follows.

The behavior of can be examined by considering the local alternative

where satisfies and . Also let , where is the critical value for the size α test using the entire sample. For such , define . Then, is optimal in the sense of Proposition 3. The proof is omitted, because it is a minor modification of the one for Theorem 2.1 of Kulasekera and Wang [35]; indeed it can be established by recognizing that under , as in Proposition 3 of Fernandes and Grammig [33].

Proposition 3.

If Assumptions 1–4, and hold, then as .

We conclude this section by describing how to obtain the selected smoothing parameter value in practice. Step 1 reflects that M should diverge but remain smaller than both $N_1$ and $N_2$ in finite samples. Step 3 follows the implementation methods in Kulasekera and Wang [34,35]. Finally, Step 4 accounts for the possibility of more than one maximizer of the estimated power.

| Step 1: Choose some and specify . |

| Step 2: Make M sub-samples of sizes . |

| Step 3: Pick two constants and define . |

| Step 4: Set and find by a grid search. |

| Step 5: Obtain and calculate . |
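Steps 3–5 amount to a grid search, which the Python sketch below mirrors under stated assumptions: the bandwidth on a sub-sample of size k is $b = c\,k^{-q}$ with c on a finite grid; the estimated power at c is the share of the M sub-sample statistics exceeding a given critical value; ties are broken by keeping the first grid point; and the final bandwidth is $\hat c\,n^{-q}$ for a user-supplied full-sample size n. These conventions, the function name and the toy statistic in the usage example are hypothetical; `stat` stands for any function returning a standardized test statistic.

```python
import numpy as np

def select_bandwidth(x_subs, y_subs, stat, n, q, c_grid, crit):
    """Power-maximizing grid search over b = c * size**(-q).

    x_subs, y_subs: lists of M paired sub-samples; stat(xs, ys, b) returns a
    standardized statistic; crit is the one-sided critical value."""
    best_c, best_power = None, -1.0
    for c in c_grid:
        rejections = []
        for xs, ys in zip(x_subs, y_subs):
            k = min(len(xs), len(ys))            # sub-sample size
            rejections.append(stat(xs, ys, c * k ** (-q)) > crit)
        power = float(np.mean(rejections))       # estimated power at c
        if power > best_power:                   # keeps the first maximizer
            best_c, best_power = c, power
    return best_c * n ** (-q), best_power

def toy_stat(xs, ys, b):
    # Hypothetical statistic that grows as b shrinks, for illustration only.
    return (xs.mean() - ys.mean()) / b

x_subs = [np.array([1.0, 2.0])] * 4
y_subs = [np.array([0.0, 0.0])] * 4
b_hat, power = select_bandwidth(x_subs, y_subs, toy_stat, n=8, q=0.5,
                                c_grid=[0.5, 1.0, 2.0, 3.0], crit=1.645)
print(b_hat, power)
```

Selecting the constant c rather than b itself lets the sub-sample and full-sample bandwidths share the same shrinking rate q.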

5. Finite-Sample Performance

5.1. Setup

It is widely recognized that the first-order asymptotic distributions of kernel-smoothed tests approximate their finite-sample distributions poorly, because the terms omitted from the first-order asymptotics are highly sensitive to the smoothing parameter value in finite samples. On the other hand, Fernandes and Grammig [33] and FMS report superior finite-sample properties of asymmetric kernel-smoothed tests. To see which perspective prevails, this section investigates the finite-sample performance of the SSST test statistic via Monte Carlo simulations.

To make a direct comparison with the results of FMS, we focus on the conditional symmetry test using the same linear regression model as in FMS. The data are generated as follows. First, the regressor x is drawn from . Second, the disturbance u, which is independent of x, is drawn from one of the eight zero-mean distributions given in Table 1. Distributions labeled “S” (symmetric) and “A” (asymmetric) are used to investigate the size and power properties of the test statistic, respectively. All the distributions are popular choices in the literature; in particular, the generalized lambda distribution (“GLD”) of Ramberg and Schmeiser [45] is known to nest a wide variety of symmetric and asymmetric distributions³. Finally, the dependent variable y is generated by setting .

Table 1.

Distributions of the Disturbance u in the Simulation Study.

We are interested in testing symmetry of the conditional distribution of y given x. For this purpose, the SSST is applied to the least-squares residuals using the sample , where are least-squares estimates of . Finite-sample size and power properties of the test statistic for two sub-samples with unequal sample sizes are examined against nominal 5% and 10% levels. The MG and NM kernels (denoted “-MG” and “-NM”, respectively) are employed as examples of the GG kernels.

Finite-sample properties of -MG and -NM are evaluated in comparison with other versions of the SSST. First, two versions of FMS’s original test statistic, which is equivalent to our statistic using the G kernel, are considered. “FMS-G-O” is FMS’s truly original statistic, whereas “FMS-G-AltVar” replaces its variance estimator with the one given in Theorem 2. Second, our statistic using the G kernel (denoted “-G”) is also calculated. Notice that FMS-G-AltVar and -G take exactly the same form. The only difference is the method of choosing the smoothing parameter b, which is discussed shortly. The effects of changing the variance estimator, the method of choosing b, and the kernel can be examined by comparing FMS-G-O with FMS-G-AltVar, FMS-G-AltVar with -G, and -G with -MG or -NM, respectively.

The smoothing parameter b for FMS-G-O and FMS-G-AltVar is determined by adjusting the value chosen by a cross-validation criterion; see p. 657 of FMS for details. On the other hand, the values of b for -G, -MG and -NM are selected by the power-optimality criterion in the previous section. Implementation details are as follows: (i) all critical values in are set at ; (ii) the shrinking rate of b is set at because of the $\sqrt{N}$-consistency of least-squares estimates and Theorem 3; (iii) three different values are considered for δ, namely, ; and (iv) the interval for is set equal to . The sample size is , and 1000 replications are drawn for each combination of the sample size N and the distribution of u.

5.2. Simulation Results

Table 2 presents finite-sample rejection frequencies of each test statistic against nominal 5% and 10% levels across 1000 Monte Carlo samples. Critical values are simply based on the first-order normal limit, i.e., 1.645 and 1.282 correspond to the 5% and 10% levels, respectively.

Table 2.

Size and Power of the SSST.

Panel (A) reports size properties. At first glance, the results of FMS-G-O are close to those reported in Table 3 of FMS: the statistic tends to over-reject the null slightly relative to the nominal size. Comparing FMS-G-O with FMS-G-AltVar reveals that replacing the variance formula tends to decrease the rejection frequencies. Changing the method of choosing b reduces the rejection frequencies further, and -G tends to under-reject the null mildly. The effects of alternative kernel choices are mixed. While -G and -MG have similar size properties, -NM looks more conservative in the sense that its rejection frequencies are slightly smaller. The impact of varying δ is minor at best. A concern is that all test statistics exhibit size distortions for S4. However, this distribution is platykurtic and has sharp boundaries at . A platykurtic distribution is the exception rather than the rule in economics and finance, and a distribution with compact support violates Assumption 1. In sum, all test statistics exhibit good size properties, although their convergence rates are nonparametric, their effective sample sizes are roughly half the entire sample size N, and no size-correction devices such as bootstrapping are used.

Panel (B) refers to power properties. The rejection frequencies of each test statistic approach one as the sample size N grows, which confirms the consistency of the SSST. There is a substantial improvement in power as the sample size increases from to 100. Most rejection frequencies are nearly one for N as small as . On closer inspection, it is hard to judge whether changing the variance formula from FMS-G-O to FMS-G-AltVar affects power properties favorably or adversely. However, once the smoothing parameter value is chosen via the power-optimality criterion, power properties generally improve. Power properties of -G and -MG again look alike, whereas -NM appears to be more powerful than these two. Because the power tends to decrease with δ, it could be safe to choose from the viewpoint of power maximization. Indeed, for and , each of -G, -MG and -NM exhibits better power properties than FMS-G-O and FMS-G-AltVar.

For convenience, Panel (B) also presents size-adjusted powers, where the best-case scenario (i.e., ) is considered for -G, -MG and -NM. These three test statistics again outperform FMS’s original statistics in terms of size-adjusted power, and -NM appears to have the best power properties among the three. All in all, the Monte Carlo results indicate superior size and power properties of the SSST with the GG kernels plugged in.
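Size-adjusted power replaces the asymptotic critical value with an empirical one, so that tests with different actual sizes are compared on an equal footing. The Python sketch below follows one common convention, which we assume here because the exact recipe is not stated: take the (1 − α) sample quantile of statistics simulated under a symmetric (null) design as the critical value, then report the rejection rate under the alternative. The function name and the simulated inputs are hypothetical.

```python
import numpy as np

def size_adjusted_power(null_stats, alt_stats, alpha=0.05):
    """Rejection rate under the alternative when the critical value is the
    empirical (1 - alpha) quantile of statistics simulated under the null."""
    crit = np.quantile(np.asarray(null_stats, dtype=float), 1.0 - alpha)
    return float(np.mean(np.asarray(alt_stats, dtype=float) > crit))

rng = np.random.default_rng(7)
null_stats = rng.standard_normal(1000) - 0.3   # hypothetical under-sized test
alt_stats = rng.standard_normal(1000) + 2.0    # hypothetical alternative
print(size_adjusted_power(null_stats, alt_stats))
```

An under-rejecting test gets a lower empirical critical value under this adjustment, so its size-adjusted power can exceed its raw rejection frequency.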

6. Conclusions

The SSST developed by FMS is built on the idea of gauging the closeness between the right and left sides of the axis of symmetry of an unknown pdf. To implement the test, we split the entire sample into two sub-samples and estimate both sides of the pdf nonparametrically using asymmetric kernels with support on . This paper has improved the SSST by combining it with the newly proposed GG kernels. The test statistic can be interpreted as a standardized version of a degenerate U-statistic. We establish convergence properties of the test statistic and provide the asymptotic variance formulae separately for the cases of two sub-samples with equal and unequal sample sizes. It is demonstrated that the SSST smoothed by the GG kernels has a normal limit under the null of symmetry and is consistent under the alternative. As part of the implementation, we also propose selecting the smoothing parameter via a power-optimality criterion. Monte Carlo simulations indicate that the GG kernel-smoothed SSST with the power-maximizing smoothing parameter value plugged in enjoys superior finite-sample properties. It should be stressed that this good performance is grounded on the first-order normal limit and a small number of observations, despite the nonparametric convergence rate and the sample-splitting procedure.
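The sample-splitting idea can be illustrated with a deliberately simplified sketch. It is not the SSST itself (which is a standardized degenerate U-statistic); it only shows how two independent sub-samples yield estimates of the right side f(x) and the left side g(x) of a pdf on the positive half-line. Several assumptions are made here and are not taken from the paper: the axis of symmetry is known to be zero; the first half of the sample estimates f(x), the pdf of X at x > 0, and the second half estimates g(x), the pdf of X at -x; Chen's [30] modified gamma (MG) kernel is used; and the discrepancy is a Riemann-sum integrated squared difference on a fixed grid. All function names are hypothetical.

```python
import math
import random


def mg_shape(x, b):
    """Shape parameter of Chen's (2000) modified gamma (MG) kernel."""
    if x >= 2.0 * b:
        return x / b
    return 0.25 * (x / b) ** 2 + 1.0


def mg_kernel(u, x, b):
    """Gamma density with shape mg_shape(x, b) and scale b, evaluated at u > 0."""
    a = mg_shape(x, b)
    return math.exp((a - 1.0) * math.log(u) - u / b - math.lgamma(a) - a * math.log(b))


def side_density(values, n_sub, x, b):
    """Estimate at x > 0 from the positive values of a sub-sample of size n_sub."""
    return sum(mg_kernel(u, x, b) for u in values) / n_sub


def split_sample_discrepancy(sample, b, grid_step=0.05, grid_max=4.0):
    """Riemann-sum integrated squared difference between right- and left-side estimates."""
    half = len(sample) // 2
    first, second = sample[:half], sample[half:]
    right = [v for v in first if v > 0.0]    # estimates f(x) = pdf of X at x
    left = [-v for v in second if v < 0.0]   # estimates g(x) = pdf of X at -x
    total = 0.0
    steps = int(round(grid_max / grid_step))
    for k in range(1, steps + 1):
        x = k * grid_step
        diff = side_density(right, len(first), x, b) - side_density(left, len(second), x, b)
        total += diff * diff * grid_step
    return total


random.seed(1)
symmetric = [random.gauss(0.0, 1.0) for _ in range(400)]
skewed = [random.expovariate(1.0) - 0.3 for _ in range(400)]
print(split_sample_discrepancy(symmetric, b=0.2))  # expected to be small
print(split_sample_discrepancy(skewed, b=0.2))     # expected to be noticeably larger
```

Because the two estimates are built from disjoint halves of the sample, they are independent, which is what makes a U-statistic representation of the discrepancy tractable; the price is that each side uses only about N/2 observations.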

Appendix A. Appendix

Appendix A.1. Proof of Lemma 1

Because the proof for the MG kernel is essentially the same as those for Lemmata 1(e) and 2 of FMS, we prove only the case of the NM kernel. Among all the statements, we concentrate on demonstrating that

All the remaining statements can be shown in the same manner. To approximate the gamma function, we frequently refer to the following well-known formulae:

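- Stirling's formula ("SF"): $\Gamma(a+1)=\sqrt{2\pi}\,a^{a+1/2}\exp(-a)\left\{1+\frac{1}{12a}+O(a^{-2})\right\}$ as $a\to\infty$;

- Legendre's duplication formula ("LDF"): $\Gamma(2a)=\frac{2^{2a-1}}{\sqrt{\pi}}\,\Gamma(a)\,\Gamma\left(a+\frac{1}{2}\right)$.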
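Both approximations are easy to sanity-check numerically. The sketch below is only an illustration, not part of the proof: it compares log-gamma values from Python's `math.lgamma` with the leading term of Stirling's formula, Γ(a+1) ≈ √(2π) a^{a+1/2} e^{-a}{1 + 1/(12a)}, and with Legendre's duplication formula, Γ(2a) = 2^{2a-1} π^{-1/2} Γ(a) Γ(a+1/2). The helper names are hypothetical.

```python
import math


def stirling_ratio(a):
    """Gamma(a + 1) divided by its leading Stirling term sqrt(2*pi) a^(a+1/2) e^(-a)."""
    log_leading = 0.5 * math.log(2.0 * math.pi) + (a + 0.5) * math.log(a) - a
    return math.exp(math.lgamma(a + 1.0) - log_leading)


def duplication_gap(a):
    """Absolute log-scale gap between the two sides of Legendre's duplication formula."""
    lhs = math.lgamma(2.0 * a)
    rhs = ((2.0 * a - 1.0) * math.log(2.0) - 0.5 * math.log(math.pi)
           + math.lgamma(a) + math.lgamma(a + 0.5))
    return abs(lhs - rhs)


for a in (5.0, 50.0, 500.0):
    r = stirling_ratio(a)
    # (r - 1) * 12a should approach 1 as a grows, reflecting the 1/(12a) correction.
    print(a, r, (r - 1.0) * 12.0 * a, duplication_gap(a))
```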

In addition, proofs of the above statements require the following lemma. Its proof is virtually the same as those for Lemmata A.1 and A.2 of Fernandes and Monteiro [46], and thus it is omitted.

Lemma A1.

For a constant and two numbers ,

if , and

if .

Proof of (A1).

We apply the trimming argument as on p. 476 of Chen [30]. For some ,

where for interior . The proof then proceeds in the following two steps:

- Step 1: approximating
- Step 2: approximating

Step 1: Define

for . Then,

where the first term is denoted as , and the second term can be viewed as the pdf of . Moreover, can be further rewritten as

and an approximation to each of , and is provided separately.

By LDF, becomes

Then, by SF, an approximation to is given by

Next, it follows from LDF and SF that

Hence,

and thus

Furthermore, (A9) also implies that

Then,

Substituting (A8), (A10) and (A11) into (A7) finally yields

where

Then, for a random variable ,

By the property of GG random variables, (A5), (A9), and (A10),

Finally, a first-order Taylor expansion of around gives

which completes Step 1.

Step 2: For some , we split the interval for y into four subintervals as follows:

Also denote . Then, by (A4) and the change of variable ,

as .

Next, it follows from (A4) that

where . By the change of variable , the right-hand side becomes

Because and , we have

by letting t shrink toward zero. On the other hand, again by (A4) and the change of variable ,

so that

Hence, we can conclude that .

It can also be demonstrated, with the assistance of (A3), that and . Therefore, , and thus (A1) is established. □

Proof of (A2).

Again, for some ,

where for interior and the order of the remainder term is by construction. Observe that

It follows from (A9) that

for . Similarly,

Substituting (A13)–(A15) into (A12) and using SF, we have

As before, for some , consider

It follows from (A4) that

where . Then, by the change of variable ,

or .

Next, (A4) implies that

where . By the change of variable , the right-hand side becomes

so that

by letting t shrink toward zero, where . Notice that we may safely assume that : Assumption 2 ensures that f and g are bounded, and thus it must be the case that in the vicinity of the origin. On the other hand, (A4) also yields

By the change of variable ,

and thus

Hence, we can conclude that .

It also follows from (A3) that and . Therefore, , and thus (A2) is also established. □

Appendix A.2. Proof of Theorem 1

Because (ii) follows immediately once (i) is established, we concentrate on (i). The proof strategy for (i) largely follows that for Theorem 1.1 of Fernandes and Monteiro [46] and requires the three lemmata below.

Lemma A2.

Let and be two independent copies of X and Y, respectively. Then, under Assumptions 1–3, the following hold:

- (a) ; ; ; and , where is given in Condition 5 of Definition 1.

- (b) ; ; ; and .

- (c) ; and .

- (d) ; ; ; and .

Lemma A3.

If Assumptions 1–4 and hold, then

Lemma A4.

If Assumptions 1–4 and hold, then and

for some , where .

Appendix A.2.1. Proof of Lemma A2

The variance approximation in Theorem 1 of Hirukawa and Sakudo [32] and the trimming argument on p. 476 of Chen [30] yield (a). On the other hand, the bias approximation in Theorem 1 of Hirukawa and Sakudo [32] is applied to (b)–(d). As a consequence, (b) can be established by recognizing that , for instance. Moreover, (c) and (d) follow from the proofs for (d) and (f) in Lemma A1 of FMS. □

Appendix A.2.2. Proof of Lemma A3

Because , we have

With the assistance of Assumption 4 and Lemma A2, we can pick out the leading terms of and as:

The result immediately follows. □

Appendix A.2.3. Proof of Lemma A4

It follows from Lemma A3 that

Next, by Jensen's and $c_r$-inequalities,

Furthermore, applying the $c_r$-inequality repeatedly yields

under . Essentially the same arguments as in the proofs of Lemmata 1 and A2 establish that is bounded by . It follows from that in the neighborhood of the origin, and thus holds. Hence, . Similarly, , and thus . It can also be shown that each of , and is bounded by . As a result,

Using the $c_r$-inequality and , we also have

Again, by the $c_r$-inequality,

where for some as so that is ensured. Therefore,

and thus is demonstrated.
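The $c_r$-inequality invoked repeatedly in this proof states that $|a+b|^r \le c_r(|a|^r+|b|^r)$ for $r>0$, where $c_r=1$ if $r\le 1$ and $c_r=2^{r-1}$ if $r>1$. A quick numerical spot-check is sketched below; it is purely illustrative, and the grid of values and the helper names are arbitrary choices.

```python
import itertools


def c_r(r):
    """Constant of the c_r-inequality for r > 0."""
    return 1.0 if r <= 1.0 else 2.0 ** (r - 1.0)


def cr_inequality_holds(a, b, r, tol=1e-12):
    """Check |a + b|^r <= c_r (|a|^r + |b|^r), allowing for rounding error."""
    lhs = abs(a + b) ** r
    rhs = c_r(r) * (abs(a) ** r + abs(b) ** r)
    return lhs <= rhs * (1.0 + tol) + tol


values = [-3.0, -0.5, 0.0, 0.25, 1.0, 2.0]
exponents = [0.5, 1.0, 2.0, 4.0]
assert all(cr_inequality_holds(a, b, r)
           for a, b in itertools.product(values, repeat=2) for r in exponents)

# For r > 1 the bound is attained at a = b: |2a|^r = 2^(r-1) * (|a|^r + |a|^r).
print(abs(1.0 + 1.0) ** 4.0, c_r(4.0) * (1.0 + 1.0))  # 16.0 16.0
```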

In the end, by (A16)–(A18),

as long as . This completes the proof. □

Appendix A.2.4. Proof of Theorem 1

It follows from Lemma A4 that a martingale central limit theorem for a degenerate U-statistic (Theorem 4.7.3 of Koroljuk and Borovskich [41], to be precise) applies. Moreover, by Lemma A3, the asymptotic variance of the normal limit becomes

□

Appendix A.3. Proof of Proposition 1

The proof closely follows that for Theorem 2.2 of Fan and Ullah [19]. Under , . Moreover, and , regardless of whether or is true. Therefore, , and thus is a divergent stochastic sequence with an expansion rate of . The result immediately follows. □

Appendix A.4. Proof of Theorem 3

For brevity, we focus only on the case of equal sample sizes in the two sub-samples. The proof largely follows that for Proposition 5 of FMS, who consider the Taylor expansion

where and are partial derivatives of with respect to the first and second arguments evaluated at , respectively, and is the remainder term of a smaller order. The only difference between their proof and ours is that we derive the range of within which

is the case. Because each of and is , the left-hand side is bounded by

This becomes if satisfy , and . □

Acknowledgments

We would like to thank the editor Kerry Patterson, four anonymous referees, Yohei Yamamoto, and the participants of seminars at Hitotsubashi University and the Development Bank of Japan for their constructive comments and suggestions. We are also grateful to Marcelo Fernandes, Eduardo Mendes and Olivier Scaillet for providing us with the computer codes used for the Monte Carlo simulations in Fernandes, Mendes and Scaillet [29]. This research was supported, in part, by a grant from the Japan Society for the Promotion of Science (grant number 15K03405). The views expressed herein are those of the authors and do not necessarily reflect the views of the Development Bank of Japan.

Author Contributions

The authors contributed equally to the paper as a whole.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- R.W. Bacon. "Rockets and feathers: The asymmetric speed of adjustment of UK retail gasoline prices to cost changes." Energy Econ. 13 (1991): 211–218.

- J.Y. Campbell, and L. Hentschel. "No news is good news: An asymmetric model of changing volatility in stock returns." J. Financ. Econ. 31 (1992): 281–318.

- R. Clarida, and M. Gertler. "How the Bundesbank conducts monetary policy." In Reducing Inflation: Motivation and Strategy. Edited by C.D. Romer and D.H. Romer. Chicago, IL, USA: University of Chicago Press, 1997, pp. 363–412.

- G. Chamberlain. "A characterization of the distributions that imply mean-variance utility functions." J. Econ. Theory 29 (1983): 185–201.

- J. Owen, and R. Rabinovitch. "On the class of elliptical distributions and their applications to the theory of portfolio choice." J. Finance 38 (1983): 745–752.

- J.E. Ingersoll Jr. Theory of Financial Decision Making. Savage, MD, USA: Rowman & Littlefield, 1987.

- P.J. Bickel. "On adaptive estimation." Ann. Stat. 10 (1982): 647–671.

- W.K. Newey. "Adaptive estimation of regression models via moment restrictions." J. Econom. 38 (1988): 301–339.

- R.J. Carroll, and A.H. Welsh. "A note on asymmetry and robustness in linear regression." Am. Stat. 42 (1988): 285–287.

- M.-J. Lee. "Mode regression." J. Econom. 42 (1989): 337–349.

- M.-J. Lee. "Quadratic mode regression." J. Econom. 57 (1993): 1–19.

- V. Zinde-Walsh. "Asymptotic theory for some high breakdown point estimators." Econom. Theory 18 (2002): 1172–1196.

- H.D. Bondell, and L.A. Stefanski. "Efficient robust regression via two-stage generalized empirical likelihood." J. Am. Stat. Assoc. 108 (2013): 644–655.

- M. Baldauf, and J.M.C. Santos Silva. "On the use of robust regression in econometrics." Econ. Lett. 114 (2012): 124–127.

- Y. Fan, and R. Gencay. "A consistent nonparametric test of symmetry in linear regression models." J. Am. Stat. Assoc. 90 (1995): 551–557.

- I.A. Ahmad, and Q. Li. "Testing symmetry of unknown density functions by kernel method." J. Nonparametr. Stat. 7 (1997): 279–293.

- J.X. Zheng. "Consistent specification testing for conditional symmetry." Econom. Theory 14 (1998): 139–149.

- C. Diks, and H. Tong. "A test for symmetries of multivariate probability distributions." Biometrika 86 (1999): 605–614.

- Y. Fan, and A. Ullah. "On goodness-of-fit tests for weakly dependent processes using kernel method." J. Nonparametr. Stat. 11 (1999): 337–360.

- R.H. Randles, M.A. Fligner, G.E. Policello II, and D.A. Wolfe. "An asymptotically distribution-free test for symmetry versus asymmetry." J. Am. Stat. Assoc. 75 (1980): 168–172.

- L.G. Godfrey, and C.D. Orme. "Testing for skewness of regression disturbances." Econ. Lett. 37 (1991): 31–34.

- J. Bai, and S. Ng. "Tests for skewness, kurtosis, and normality for time series data." J. Bus. Econ. Stat. 23 (2005): 49–58.

- G. Premaratne, and A. Bera. "A test for symmetry with leptokurtic financial data." J. Financ. Econom. 3 (2005): 169–187.

- W.K. Newey, and J.L. Powell. "Asymmetric least squares estimation and testing." Econometrica 55 (1987): 819–847.

- J. Bai, and S. Ng. "A consistent test for conditional symmetry in time series models." J. Econom. 103 (2001): 225–258.

- M.A. Delgado, and J.C. Escanciano. "Nonparametric tests for conditional symmetry in dynamic models." J. Econom. 141 (2007): 652–682.

- T. Chen, and G. Tripathi. "Testing conditional symmetry without smoothing." J. Nonparametr. Stat. 25 (2013): 273–313.

- Y. Fang, Q. Li, X. Wu, and D. Zhang. "A data-driven test of symmetry." J. Econom. 188 (2015): 490–501.

- M. Fernandes, E.F. Mendes, and O. Scaillet. "Testing for symmetry and conditional symmetry using asymmetric kernels." Ann. Inst. Stat. Math. 67 (2015): 649–671.

- S.X. Chen. "Probability density function estimation using gamma kernels." Ann. Inst. Stat. Math. 52 (2000): 471–480.

- N. Gospodinov, and M. Hirukawa. "Nonparametric estimation of scalar diffusion models of interest rates using asymmetric kernels." J. Empir. Finance 19 (2012): 595–609.

- M. Hirukawa, and M. Sakudo. "Family of the generalised gamma kernels: A generator of asymmetric kernels for nonnegative data." J. Nonparametr. Stat. 27 (2015): 41–63.

- M. Fernandes, and J. Grammig. "Nonparametric specification tests for conditional duration models." J. Econom. 127 (2005): 35–68.

- K.B. Kulasekera, and J. Wang. "Smoothing parameter selection for power optimality in testing of regression curves." J. Am. Stat. Assoc. 92 (1997): 500–511.

- K.B. Kulasekera, and J. Wang. "Bandwidth selection for power optimality in a test of equality of regression curves." Stat. Probab. Lett. 37 (1998): 287–293.

- E.W. Stacy. "A generalization of the gamma distribution." Ann. Math. Stat. 33 (1962): 1187–1192.

- I.S. Abramson. "On bandwidth variation in kernel estimates—A square root law." Ann. Stat. 10 (1982): 1217–1223.

- C.J. Stone. "Optimal rates of convergence for nonparametric estimators." Ann. Stat. 8 (1980): 1348–1360.

- N.H. Anderson, P. Hall, and D.M. Titterington. "Two-sample test statistics for measuring discrepancies between two multivariate probability density functions using kernel-based density estimates." J. Multivar. Anal. 50 (1994): 41–54.

- P. Hall. "Central limit theorem for integrated square error of multivariate nonparametric density estimators." J. Multivar. Anal. 14 (1984): 1–16.

- V.S. Koroljuk, and Y.V. Borovskich. Theory of U-Statistics. Dordrecht, The Netherlands: Kluwer Academic Publishers, 1994.

- B.E. Hansen. "Uniform convergence rates for kernel estimation with dependent data." Econom. Theory 24 (2008): 726–748.

- B.W. Silverman. Density Estimation for Statistics and Data Analysis. London, UK: Chapman & Hall, 1986.

- J. Gao, and I. Gijbels. "Bandwidth selection in nonparametric kernel testing." J. Am. Stat. Assoc. 103 (2008): 1584–1594.

- J.S. Ramberg, and B.W. Schmeiser. "An approximate method for generating asymmetric random variables." Commun. ACM 17 (1974): 78–82.

- M. Fernandes, and P.K. Monteiro. "Central limit theorem for asymmetric kernel functionals." Ann. Inst. Stat. Math. 57 (2005): 425–442.

- 1 Hirukawa and Sakudo [32] present the Weibull kernel as yet another special case. However, it has not been confirmed that this kernel satisfies Lemma 1 below, and thus it is not investigated in this paper.

- 2 It is possible to use different asymmetric kernels and/or different smoothing parameters to estimate f and g. For convenience, however, we employ the same asymmetric kernel function and a single smoothing parameter.

- 3 Although the GLDs corresponding to A3 and A4 are used in Zheng [17] and FMS, they turn out to have non-zero means. Therefore, we adjust the values of and while maintaining skewness and kurtosis, so that the resulting distributions have zero means.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license ( http://creativecommons.org/licenses/by/4.0/).