Abstract

With the advent of the Internet of Things (IoT) concept and its integration with smart city sensing, smart connected health systems have emerged as integral components of smart city services. Hard sensing-based data acquisition through wearables or invasive probes, coupled with soft sensing-based acquisition such as crowd-sensing, results in hidden patterns in the aggregated sensor data. Recent research aims to uncover these patterns through conventional artificial neural networks with many hidden perceptron layers, namely through deep learning. In this article, we review deep learning techniques that can be applied to sensed data to improve prediction and decision making in smart health services. Furthermore, we present a comparison and taxonomy of these methodologies based on the types of sensors and sensed data. We also provide thorough discussions of the open issues and research challenges in each category.

1. Introduction

Smart cities are built on the foundation of information and communication technologies with the sole purpose of connecting citizens and technology to improve the overall quality of life. While quality of life includes ease of mobility and access to quality healthcare, amongst others, effective management and sustainability of resources, economic development and growth complement the fundamental requirements of smart cities. These goals are achieved by proper management and processing of the data acquired from dedicated or non-dedicated sensor networks. In most cases, the information gathered is refined continuously to produce further information that improves efficiency and effectiveness within the smart city.

In the Internet of Things (IoT) era, the interplay between mobile networks, wireless communications and artificial intelligence is transforming the way humans live, driven by technological advancements such as improved computing power, high-performance processing and huge memory capacities. With the advent of cyber-physical systems, which seamlessly integrate physical systems with computing and communication resources, the paradigm shift from the conventional city concept towards a new urban design has been coined the smart city. Basically, a smart city is envisioned to be ICT driven and capable of offering various services such as smart driving, smart homes, smart living, smart governance and smart health, just to mention a few []. ICT-driven management and control, together with the pervasive use of sensors in smart devices for the good and well-being of citizens, give these services the desired level of intelligence. Besides continuously informing the citizens, being liveable and ensuring the well-being of the citizens are reported among the requirements of smart cities []. Therefore, the smart city concept requires the transformation of health services through the sensor and IoT enablement of medical devices, communications and decision support. This ensures the availability, ubiquity and personal customization of services, as well as ease of access to them. As stated in [], the criteria for the smartness of an environment are various. Accordingly, the authors of that study investigate a smart city in the following dimensions: (1) the technology angle (i.e., digital, intelligent, virtual, ubiquitous and information city), (2) the people angle (creative city, humane city, learning city and knowledge city) and (3) the community angle (smart community).

Furthermore, in the same vein, Anthopoulos (see []) divides the smart city into the following eight components: (1) smart infrastructures, where facilities utilize sensors and chips; (2) smart transportation, where vehicular networks along with the communication infrastructure are deployed for monitoring purposes; (3) smart environments, where ICTs are used to monitor the environment and acquire useful information regarding environmental sustainability; (4) smart services, where ICTs are used for the provision of community health, tourism, education and safety; (5) smart governance, which aims at the proper delivery of government services; (6) smart people, who use ICTs to access and increase human creativity; (7) smart living, where technology is used to improve the quality of life; and (8) smart economy, where businesses and organizations develop and grow through the use of technology. Given these components, a smart health system within a smart city appears to be one of the leading gateways to a more productive and liveable structure that ensures the well-being of the community.

Assurance of quality healthcare is a social sustainability concept in a smart city. Social sustainability denotes the liveability and well-being of communities in an urban setting []. The large population of cities makes improved healthcare services essential, because a single case of negligence or improper health service might lead to an outbreak of diseases and infections, which might become epidemic and cost much more to curtail. However, for these health services to be beneficial, the methods and channels of delivery need to be of high quality. Various research studies have investigated adequate methods for the effective delivery of healthcare. One of these methods is smart health, which is basically the provision of health services using the sensing capabilities and infrastructures of smart cities. In recent years, smart health has gained wide recognition due to the increasing number of technological devices and the ability to process the data gathered from these devices with minimum error.

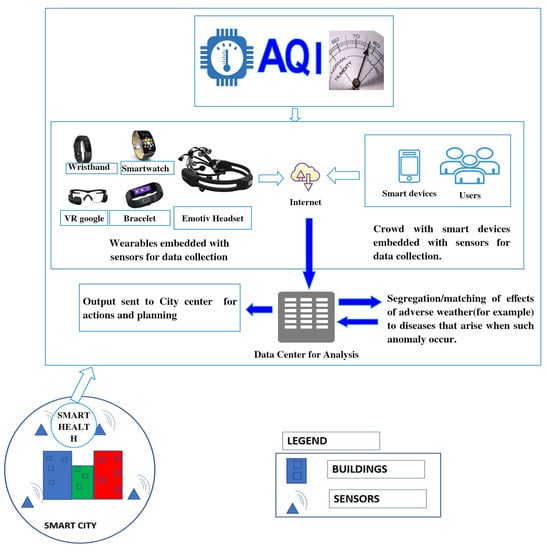

As recent research states, proper management and development of smart health are the key to the success of the smart city ecosystem []. Smart health involves the use of sensors in smart devices and specifically manufactured/prototyped wearable sensors/bio-patches for proper monitoring of the health status of individuals living within a smart city, as shown in Figure 1. This example depicts an air quality monitoring scenario aimed at ensuring healthier communities. Smart city infrastructure builds upon networked sensors that can be either dedicated or non-dedicated. As an integral component of the smart city, smart health systems utilize devices with embedded sensors (as non-dedicated sensing components) for environmental and ambient data collection, such as temperature, air quality index (AQI) and humidity. In addition, wearables and carry-on sensors (as the dedicated sensing components) are utilized to acquire medical data from individuals. Both dedicated and non-dedicated sensory data are transmitted to data centres as inputs to processing and further decision making. To achieve this goal, the existing smart city framework coupled with IoT networking needs to be leveraged.

Figure 1.

Smart health embedded within a smart city. An example scenario is illustrated to detect the air quality indicator to ensure healthier communities (figure produced by Creately Online Diagram, Cinergix Pvt. Ltd., Mentone, Australia).

In other words, a smart city needs to provide the required framework for smart health to grow rapidly and achieve its aim. Ensuring the quality of big sensed data acquisition is one key challenge of smart health, and the ability to leverage these data is an important aspect of smart health development, as well as of building a sustainable smart city structure [,,]. Applications of smart health within smart cities are various. For example, Zulfiqar et al. [] proposed an intelligent system for detecting and monitoring patients who might have voice complications. Such a system is necessary because a number of services within smart cities are voice enabled; as such, any voice disorder might translate into problems with everyday services within the smart city. The same problem has been studied by the researchers in [], where voice data and ECG signals were used as the inputs to a voice pathology detection system. Furthermore, with the aim of ensuring air quality within a smart city, the researchers in [] developed a cloud-based air quality monitoring system for environmental health monitoring. The motivation for environmental health monitoring is ensuring the wellness of communities in a smart city for sustainability. The body area network within a smart city can be used for ECG monitoring to warn an individual of any heart-related problem, especially cardiac arrest [], and it also helps in determining the nature of human kinematic actions to improve the quality of healthcare whenever needed []. Data management of patients is also one of the applications of smart health in smart cities that is of paramount importance. As discussed in [], proper management of patient records at both the data entry and application levels ensures that patients get the required treatment when due, and it also helps in the development of personalized medicine applications [].
Furthermore, the scope of smart health in smart cities is not limited to the physiological phenomena in human bodies; it also extends to the environment and the physical building blocks of the smart infrastructure. Indeed, a mismanaged environment and/or mismanaged physical structures can result in unhealthy users and communities, which is unsustainable for a smart city. To address this problem, the researchers in [] created a system to monitor structural health within a city using wireless sensor networks (WSNs). It is worth mentioning that structural health monitoring also enables inferential decision-making services.

With these in mind, calls for new techniques that ensure proper health service delivery have emerged. As an evolving concept, machine intelligence has attracted the healthcare sector by introducing effective decision support mechanisms applied to sensory data acquired through various media such as wearables or body area networks []. Machine learning techniques have undergone substantial improvements during the evolution of artificial intelligence and its integration with sensor and actuator networks. Among these incremental improvements, deep learning has emerged as the most powerful tool thanks to its high-level abstraction of complex big data, particularly big multimedia data [].

Deep learning (DL) derives from conventional artificial neural networks (ANNs) with many hidden perceptron layers that can help in identifying hidden patterns []. Although having many hidden perceptron layers in a deep neural network is promising, when the concept of deep learning was initially coined, it was limited mostly by the computational power of the available computing systems. However, with the improved computational capability of computing systems, as well as the rise of cloudified distributed models, deep learning has become a strong tool for analysing sensory data (particularly multimedia sensory data) and assisting in long-term decisions. The basic idea of deep learning is to mimic, to some extent, how the human brain processes information. Thus, in a sensor and actuator network setting, the deep learning network receives sensory input and passes it through successive layers to produce an output. During training, this process is repeated iteratively, and the weights of the network links are adapted so that the output matches the desired output for each input. With the widespread use of heterogeneous sensors such as wearables, medical imaging sensors, invasive sensors or embedded sensors in smart devices to acquire medical data, the emergence and applicability of deep learning is quite visible in modern-day healthcare, from diagnosis to prognosis to health management.

While shallow learning algorithms apply shallow feature representations to sensor data, deep learning seeks to extract hierarchical representations [,] from large-scale data. Thus, the idea is to use deep architectural models with multiple layers of non-linear transformations []. For instance, the authors in [] use a shallow network with a covariance representation of 3D sensor data in order to recognize human actions from skeletal data. On the other hand, in the study in [], the AlexNet model and histogram of oriented gradients features are used to obtain deep features from the data acquired through 3D depth sensors.

In this article, we provide a thorough review of the deep learning approaches that can be applied to sensor data in smart health applications in smart cities. The motivation behind this study is that deep learning techniques are among the key enablers of digital health technology within a smart city framework, owing to the performance and accuracy issues that conventional machine learning techniques experience with high-dimensional data. It is worth noting, however, that deep learning is not a total replacement for machine learning, but an effective tool for coping with dimensionality issues in several applications such as smart health []. To this end, this article aims to highlight the emergence of deep learning techniques in smart health within a smart city ecosystem and, at the same time, to give future directions by discussing the challenges and open issues that are still pertinent. Accordingly, we provide a taxonomy of sensor data acquisition and processing techniques in smart health applications. Our taxonomy and review of deep learning approaches provide insights into deep learning algorithms for particular smart health applications.

This work is organized as follows. In Section 2, we briefly discuss the transition from conventional machine learning methodologies to deep learning methods. Section 3 gives a brief overview of the use of deep learning techniques in sensor network applications and of the major deep learning techniques applied to sensory data, while Section 4 provides insights into the smart health applications where deep learning can be used to process and interpret sensed data. Section 5 presents outstanding challenges and opportunities for researchers in processing big sensed health data with deep learning. Finally, Section 6 concludes the article by summarizing the reviewed methodologies and future directions.

2. Analysis of Sensory Data in E-Health

2.1. Conventional Machine Learning on Sensed Health Data

With the advent of the WSN concept, machine learning has been identified as a viable solution to reduce the capital and operational expenditures of network design, as well as to improve network lifetime []. Presently, the majority of machine learning techniques use a combination of feature extraction and modality-specific algorithms, such as those used to identify/recognize handwriting and/or speech []. This normally requires a large dataset and powerful computing resources to support a tremendous amount of background tasks. Furthermore, despite tedious efforts, certain issues are bound to arise, and these techniques perform poorly in the presence of inconsistencies and diversity in the dataset. In most cases, one of the major advantages of machine learning is feature learning, where a machine is trained on some datasets and the output provides a valuable representation of the initial features.

Applications of machine learning algorithms on sensory data are various, such as telemedicine [,,], air quality monitoring [], indoor localization [] and smart transportation []. However, conventional machine learning still has certain limitations, such as the inability to optimize non-differentiable, discontinuous loss functions or to always obtain results within a feasible training duration. These and many other issues encountered by machine learning techniques paved the way for deep learning as a more robust learning tool.

2.2. Deep Learning on Sensed Health Data

In [], deep learning is defined as a collection of algorithmic procedures that ‘mimic the brain’. More specifically, deep learning involves learning layers via algorithmic steps. These layers enable the definition of hierarchical knowledge that derives from simpler knowledge []. There have been several attempts to build and design computers that are equipped with the ability to think. Until recently, this effort translated into rule-based learning, which is a ‘top-down’ approach that involves creating rules for all possible circumstances []. However, this approach does not scale, since a finite rule base cannot cover every possible circumstance.

These issues can be remedied by replacing rule-based learning with a bottom-up approach, namely learning from experience. Labelled data form the experience: they are used as training input to the system, so that the training procedure is built upon past experiences. The learning-from-experience approach is well suited for applications such as spam filtering. On the other hand, the majority of the data collected by multimedia sensors (e.g., pictures, video feeds, sounds, etc.) are not properly labelled [].

Real-world problems that involve processing of multimedia sensor data, such as speech or face recognition, are challenging to represent digitally due to the possibly infinite problem domain. Describing the problem adequately is especially hard in the presence of multi-dimensional features: as the number of dimensions grows, the volume of the feature space increases so quickly that the available data become sparse, and training on sparse data does not lead to meaningful results. Nevertheless, such ‘infinite choice’ problems are common in the processing of sensory data, which are mostly acquired from multimedia sensors []. These issues pave the way for deep learning, as deep learning algorithms are designed to work with hard and/or intuitive problems, which are defined with no or very few rules on high-dimensional features. The absence of a rule set forces the system to learn to cope with unforeseen circumstances [].
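The sparsity problem can be quantified with a standard textbook calculation that is not taken from the cited works: if data are spread uniformly over a d-dimensional unit hypercube, a sub-cube that contains a fraction r of the data must have edge length $e_d(r) = r^{1/d}$. The short Python sketch below (the helper name `edge_length` is hypothetical) evaluates this for a few dimensionalities.

```python
def edge_length(fraction: float, dims: int) -> float:
    """Edge length of a sub-cube holding `fraction` of uniformly distributed
    data in a `dims`-dimensional unit hypercube: e_d(r) = r ** (1 / d)."""
    if not 0.0 < fraction <= 1.0 or dims < 1:
        raise ValueError("fraction must be in (0, 1] and dims must be >= 1")
    return fraction ** (1.0 / dims)


if __name__ == "__main__":
    for d in (1, 2, 10, 100):
        # Share of each feature's range needed to capture 10% of the data.
        print(f"d={d:>3}: edge length = {edge_length(0.10, d):.3f}")
```

For r = 0.1, the edge length is 0.1 in one dimension but roughly 0.79 in ten dimensions, so a neighbourhood holding only 10% of the data already spans about 80% of the range of every feature.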

Another characteristic of deep learning is the discovery of intricate structure in large datasets. To achieve this, deep learning uses the backpropagation algorithm to indicate how the internal parameters that compute the representation in each layer from the representation in the previous layer should be adjusted []. As such, it can be stated that representation learning is possible on partially labelled or unlabelled sensory data.
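The backpropagation idea can be illustrated with a minimal NumPy sketch, which is not the method of any cited work: a two-layer network with an assumed tanh hidden layer and sigmoid output is trained on the toy XOR problem, and the gradient of the loss is passed from the output layer back to the first layer to update every weight. The function names (`forward`, `backprop`, `train`) and all hyperparameters are hypothetical choices.

```python
import numpy as np


def sigmoid(z):
    """Logistic function used by the output unit."""
    return 1.0 / (1.0 + np.exp(-z))


def forward(params, X):
    """Forward pass: tanh hidden layer followed by a sigmoid output unit."""
    W1, b1, W2, b2 = params
    H = np.tanh(X @ W1 + b1)   # hidden representation
    P = sigmoid(H @ W2 + b2)   # predicted probability of class 1
    return H, P


def loss(params, X, y):
    """Mean binary cross-entropy between predictions and labels."""
    _, P = forward(params, X)
    P = np.clip(P, 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(P) + (1 - y) * np.log(1 - P)))


def backprop(params, X, y):
    """Gradients of the loss w.r.t. every parameter, propagated backwards
    from the output layer to the first layer via the chain rule."""
    W1, b1, W2, b2 = params
    H, P = forward(params, X)
    dZ2 = (P - y) / X.shape[0]            # error at the output pre-activation
    dW2, db2 = H.T @ dZ2, dZ2.sum(axis=0)
    dZ1 = (dZ2 @ W2.T) * (1.0 - H ** 2)   # error sent back through tanh
    dW1, db1 = X.T @ dZ1, dZ1.sum(axis=0)
    return [dW1, db1, dW2, db2]


def train(X, y, hidden=8, lr=0.5, epochs=2000, seed=0):
    """Full-batch gradient descent: repeat forward pass, backprop, update."""
    rng = np.random.default_rng(seed)
    params = [rng.normal(0.0, 0.5, (X.shape[1], hidden)), np.zeros(hidden),
              rng.normal(0.0, 0.5, (hidden, 1)), np.zeros(1)]
    for _ in range(epochs):
        grads = backprop(params, X, y)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


# Toy XOR data: not linearly separable, so the hidden layer is required.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

if __name__ == "__main__":
    print("initial loss:", loss(train(X, y, epochs=0), X, y))
    print("trained loss:", loss(train(X, y), X, y))
```

Calling `train(X, y, epochs=0)` simply returns the random initial parameters, which makes it easy to compare the loss before and after the iterative weight updates.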

Acharya et al. [] used deep learning techniques (specifically, CNNs) for the diagnosis and detection of coronary artery disease from signals acquired from the electrocardiogram (ECG) and achieved an accuracy of 94.95%. The authors in [] proposed the use of deep neural networks (DNNs) for the active and automatic classification of ECG signals. Furthermore, in order to detect epileptic conditions early enough, deep learning with edge computing for the localization of epileptogenicity using electroencephalography (EEG) data has been proposed in []. Emotional well-being is a key state in human life. With this in mind, the authors in [] classified positive and negative emotions using deep belief networks (DBNs) and EEG data. The aim is to accurately capture the moment an emotional swing occurs. Their work yielded an 87.62% classification accuracy. The authors in [] designed BGMonitor for detecting blood glucose concentration and used a multi-task deep learning approach to analyse and process the data and to make further inferences. The research yielded an accuracy of 82.14% when compared to the conventional methods.

3. Deep Learning Methods and Big Sensed Data

3.1. Deep Learning on Sensor Network Applications

Sensors are key enablers of the objects (things) of emerging IoT networks []. The aggregation of sensors forms a network whose purpose, amongst others, is to generate and aggregate data for inferential purposes. The data generated from sensors need to be refined before undergoing any analytics procedure. This has led to various methods for the proper and adequate processing of sensed data from sensor and actuator networks. These methods depend on the type and applications of the sensed data. Deep learning, one such method, can be applied in sensor and actuator network applications to process sensor-generated data effectively and efficiently []. The output of a deep learning network can be used for decision making. Costilla-Reyes et al. used a convolutional neural network to learn spatio-temporal features derived from tomography sensors, which yielded an effective and efficient approach to the performance classification of gait patterns using a limited number of experimental samples []. Transportation is another important service in a smart city architecture, and the ability to acquire quality (i.e., high-value) data from users is key to developing a smart transportation architecture. For example, the authors in [] developed a mechanism that uses a deep neural network to learn the transportation modes of mobile users. In the same study, integrating a deep learning-driven decision-making system with a smart transportation architecture was shown to achieve 95% classification accuracy. Besides these, deep learning has helped in analysing the power usage patterns of vehicles using the sensors embedded in smartphones []. The goal of the study in [] is the timely prediction of the power consumption behaviour of vehicles using smartphones. The use of sensors in healthcare has led to significant achievements, and deep learning is being used to leverage sensors and actuators for proper healthcare delivery.
For instance, in assessing the level of Parkinson’s disease, Eskofier et al. [] used convolutional neural networks (CNNs) for the classification and detection of key features of Parkinson’s disease based on data generated from wearable sensors. The results showed that deep learning techniques work well with sensor data when compared with other methods. Moreover, deep learning techniques, particularly CNNs, were used to estimate energy consumption while using wearable sensors for health condition monitoring []. Furthermore, using sensory data generated from an infrared distance sensor, a deep learning classifier was developed for fall detection, especially amongst the elderly population []. Besides these, with respect to security, combining biometric sensors and CNNs has resulted in a more robust approach for detecting spoofing and security breaches in digital systems []. Yin et al. [] used a deep convolutional network for visual object recognition, another application area of deep learning in the analysis of big sensed data, whereas for the early detection of deforestation, Barreto et al. [] proposed a multilayer perceptron technique. In both studies, the input data are acquired via remote sensing.

3.2. Major Deep Learning Methods in Medical Sensory Data

In this subsection, we discuss the major deep learning methods that are used in e-health applications on medical sensory data. The notation used throughout is summarized in Table 1.

Table 1.

Basic notations used in the article. Notations are grouped into three categories: stand-alone symbols, vectors between units of different layers and symbols for functions.

3.2.1. Deep Feedforward Networks

Deep feed-forward networks can be counted among the first-generation deep learning models and are based on multilayer perceptrons [,]. Basically, a feed-forward network aims at approximating some function $f^*$. A feed-forward network defines a mapping $y = f(x; \theta)$ and learns the values of the parameters $\theta$ that result in the best approximation of $f^*$. Information in these networks flows from the input x being evaluated, through the intermediate computations that define f, to the output y.

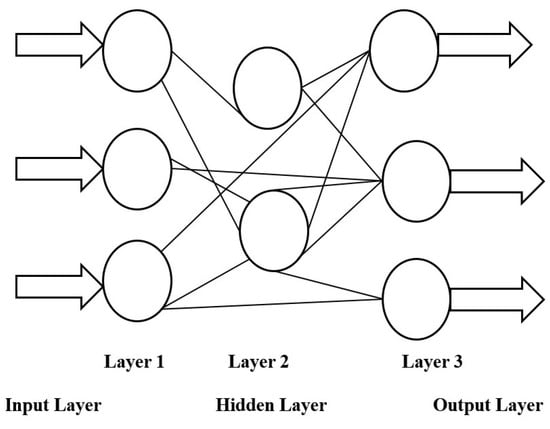

During training, the aim is to drive $f(x)$ to match $f^*(x)$, where each example x is accompanied by a label $y \approx f^*(x)$. In most cases, the learning algorithm decides how to use the intermediate layers in order to obtain the best approximation of $f^*$. Since the training data do not show the desired output for each of these layers, they are referred to as hidden layers, such as Layer 2 in the illustration in Figure 2 below.

Figure 2.

A basic deep feed-forward network.

A typical model for the deep feed-forward network is described as follows. Given an input x with D components, a hidden layer of M units and K outputs, output i is formulated as shown in Equation (1):

$$y_i(x) = \sigma\left( \sum_{j=1}^{M} w^{(2)}_{ij}\, \sigma\left( \sum_{k=1}^{D} w^{(1)}_{jk}\, x_k \right) \right), \qquad i = 1, \dots, K, \qquad (1)$$

where σ is the activation function, $w^{(1)}_{jk}$ is the weight from input unit k to hidden unit j, and $w^{(2)}_{ij}$ is the weight from hidden unit j to output unit i. Collecting these weights gives the matrices $W^{(1)} \in \mathbb{R}^{M \times D}$ and $W^{(2)} \in \mathbb{R}^{K \times M}$; bias terms are omitted for brevity.
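The forward computation of Equation (1) can be sketched in a few lines of NumPy. This is an illustrative sketch that assumes a logistic activation σ in both layers and no bias terms; the names `feedforward`, `W1` (an M × D matrix of input-to-hidden weights) and `W2` (a K × M matrix of hidden-to-output weights) are hypothetical.

```python
import numpy as np


def sigma(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + np.exp(-z))


def feedforward(x, W1, W2):
    """Compute y_i = sigma(sum_j W2[i, j] * sigma(sum_k W1[j, k] * x[k]))."""
    hidden = sigma(W1 @ x)      # M hidden-unit activations
    return sigma(W2 @ hidden)   # K output-unit activations


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    D, M, K = 4, 3, 2           # input size, hidden units, outputs
    x = rng.normal(size=D)
    W1 = rng.normal(size=(M, D))
    W2 = rng.normal(size=(K, M))
    print(feedforward(x, W1, W2))   # K values, each in (0, 1)
```

Adding more hidden layers repeats the `sigma(W @ h)` step once per layer, which is what turns this shallow network into a deep one.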

3.2.2. Autoencoder

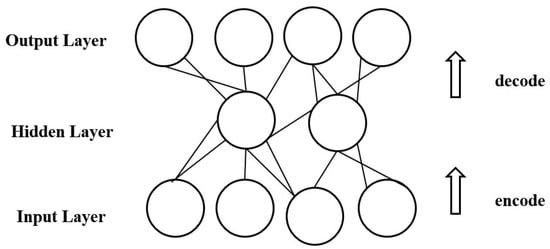

An autoencoder is a neural network that is trained to copy its input to its output [,]. In most cases, an autoencoder is implemented as a three-layer neural network (see Figure 3) whose output units correspond directly to its input units: in the figure, every output i is linked back to input i. The hidden layer h of the autoencoder represents the input by a code. Thus, the network can be described minimally by two main components: (1) an encoder function $h = f(x)$; (2) a decoder function that produces a reconstruction of the input, $r = g(h)$. Traditionally, autoencoders were used for dimensionality reduction or feature learning, but more recently, theoretical connections between autoencoders and latent variable models have brought them to the forefront of generative modelling.

Figure 3.

Autoencoder network.

Below are the various types of autoencoders.

- Undercomplete autoencoders [] constrain the code to have a smaller dimension than the input. This bottleneck forces the autoencoder to capture the most salient features of the training data.

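- Regularized autoencoders [] make it possible to train any autoencoder architecture successfully, choosing the code dimension and the capacity of the encoder/decoder based on the complexity of the distribution to be modelled. Instead of limiting model capacity, they use a loss function that encourages other properties, such as sparsity of the code or robustness to noise.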

- Sparse autoencoders [] add a sparsity penalty $\Omega(h)$ on the code layer h to the reconstruction error, i.e., they minimize $L(x, g(f(x))) + \Omega(h)$. Sparse autoencoders are typically used to learn features for another task such as classification.

- Denoising autoencoders [] change the reconstruction error term of the cost function instead of adding a penalty to the cost function. Thus, a denoising autoencoder minimizes $L(x, g(f(\tilde{x})))$, where $\tilde{x}$ is a copy of x that has been corrupted by noise.

- Contractive autoencoders [] introduce an explicit regularizer on the code $h = f(x)$ that encourages the derivatives of f to be as small as possible, namely $\Omega(h) = \lambda \left\lVert \frac{\partial f(x)}{\partial x} \right\rVert_F^2$. Contractive autoencoders are trained to resist perturbations of the input; as such, they map a neighbourhood of input points to a smaller neighbourhood of output points.
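A minimal NumPy sketch of a denoising autoencoder, i.e., one that minimizes $L(x, g(f(\tilde{x})))$ where $\tilde{x}$ is a noise-corrupted copy of x, is shown below. Because its code has fewer units than the input, it is also undercomplete. The sigmoid encoder, linear decoder, Gaussian corruption, squared-error loss and all hyperparameters are assumptions chosen for brevity, and the function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_denoising_autoencoder(X, code_dim=3, noise_std=0.1, lr=0.1,
                                epochs=500, seed=0):
    """Full-batch gradient descent on mean ||g(f(x_tilde)) - x||^2.

    Encoder f: sigmoid(x_tilde @ W_enc + b_enc); decoder g: h @ W_dec + b_dec.
    code_dim < X.shape[1] makes the autoencoder undercomplete as well.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_enc, b_enc = rng.normal(0.0, 0.1, (d, code_dim)), np.zeros(code_dim)
    W_dec, b_dec = rng.normal(0.0, 0.1, (code_dim, d)), np.zeros(d)
    for _ in range(epochs):
        X_tilde = X + rng.normal(0.0, noise_std, X.shape)  # corrupt the input
        H = sigmoid(X_tilde @ W_enc + b_enc)                # code h = f(x_tilde)
        R = H @ W_dec + b_dec                               # reconstruction g(h)
        dR = 2.0 * (R - X) / n                              # error w.r.t. CLEAN x
        dZ = (dR @ W_dec.T) * H * (1.0 - H)                 # back through sigmoid
        W_dec -= lr * (H.T @ dR)
        b_dec -= lr * dR.sum(axis=0)
        W_enc -= lr * (X_tilde.T @ dZ)
        b_enc -= lr * dZ.sum(axis=0)
    return W_enc, b_enc, W_dec, b_dec


def reconstruct(X, params):
    """Encode and decode X without corruption."""
    W_enc, b_enc, W_dec, b_dec = params
    return sigmoid(X @ W_enc + b_enc) @ W_dec + b_dec


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical 8-dimensional data that lie in a 2-D subspace.
    X = 0.3 * rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))
    params = train_denoising_autoencoder(X)
    print("reconstruction MSE:", np.mean((reconstruct(X, params) - X) ** 2))
```

The key design point is that the loss compares the reconstruction of the corrupted input with the clean input, so the network cannot succeed by learning the identity mapping.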

3.2.3. Convolutional Neural Networks

Convolutional neural networks (CNNs) use convolution in place of general matrix multiplication in at least one of their layers [,,]. CNNs have multiple layers of receptive fields, i.e., small sets of neurons that each process a portion of the input image []. The outputs of these sets of neurons are tiled so that their input regions overlap, yielding a higher-resolution representation of the original image. This sequence is repeated in each layer. It is also worth mentioning that the dimensions of a CNN depend mostly on the size of the data.

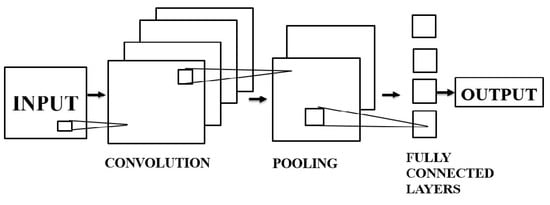

The CNN architecture consists of three distinct types of layers: (1) the convolutional layer, (2) the pooling layer and (3) the fully-connected layer. As illustrated in Figure 4, fully-connected layers can follow a number of convolutional and subsampling layers, although this is not a requirement for CNNs.

Figure 4.

CNN architecture.

- Convolutional layer: The convolutional layer takes an $m \times m \times r$ image as the input, where m and r denote the height/width of the image and the number of channels, respectively. The convolutional layer contains k filters (or kernels) of size $n \times n \times q$, where $n < m$ and q can be less than or equal to the number of channels r (i.e., $q \le r$). Here, q may vary for each kernel, and, assuming unit stride and no padding, each of the k resulting feature maps has a size of $(m - n + 1) \times (m - n + 1)$.

- Pooling layers: These are a key aspect of CNNs and are, in general, applied after the convolutional layers. A pooling layer subsamples its input. The most common way of pooling is to apply a max operation to the output of each filter; pooling over the complete matrix is not necessary. With respect to classification, pooling gives an output matrix of fixed size, thereby reducing the dimensionality of the output while keeping important information.

- Fully-connected layers: Every unit in a fully-connected layer is connected to every unit in the preceding layer.
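The three layer types can be sketched in plain NumPy as follows. This is an illustrative sketch rather than an efficient implementation. It assumes square images, unit stride, no padding, kernels that span all r channels (q = r) and 2 × 2 non-overlapping max pooling, and the function names are hypothetical. Like most deep learning libraries, it computes cross-correlation, which differs from mathematical convolution only by a flip of the kernel.

```python
import numpy as np


def conv2d(image, kernels):
    """Valid convolution of an m x m x r image with k kernels of shape
    n x n x r; returns k feature maps of size (m - n + 1) x (m - n + 1)."""
    m, _, _ = image.shape
    k, n, _, _ = kernels.shape
    out = np.zeros((m - n + 1, m - n + 1, k))
    for f in range(k):
        for i in range(m - n + 1):
            for j in range(m - n + 1):
                out[i, j, f] = np.sum(image[i:i + n, j:j + n, :] * kernels[f])
    return out


def max_pool(maps, size=2):
    """Non-overlapping max pooling over size x size windows of each map."""
    h, w, k = maps.shape
    h2, w2 = h // size, w // size
    trimmed = maps[:h2 * size, :w2 * size, :]
    return trimmed.reshape(h2, size, w2, size, k).max(axis=(1, 3))


def fully_connected(x, W, b):
    """Every input unit is connected to every output unit."""
    return W @ x.ravel() + b


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((8, 8, 3))                    # m = 8, r = 3
    kernels = rng.normal(size=(4, 3, 3, 3))          # k = 4 kernels, n = 3
    maps = np.maximum(conv2d(image, kernels), 0.0)   # ReLU after convolution
    pooled = max_pool(maps)                          # 6x6x4 -> 3x3x4
    W = rng.normal(size=(2, pooled.size))            # e.g. 2 output classes
    scores = fully_connected(pooled, W, np.zeros(2))
    print(maps.shape, pooled.shape, scores.shape)    # (6, 6, 4) (3, 3, 4) (2,)
```

With m = 8 and n = 3, each feature map has size 8 − 3 + 1 = 6, matching the (m − n + 1) rule for the convolutional layer.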

3.2.4. Deep Belief Network

The deep belief network (DBN) is a generative graphical model built on stochastic latent variables: the connections between its top two layers are undirected, whereas all other connections are directed. It is a type of neural network composed of latent variables connected between multiple layers [,]. Despite the connections between layers, there are no connections between units within each layer. A DBN can first learn to reconstruct its inputs probabilistically and then be trained further to perform classification. In fact, the learning principle of DBNs is “one layer at a time via a greedy learning algorithm”.

The properties of DBN are:

- Generative weights are learned through a layer-by-layer process that determines how the variables in layer ℓ depend on the variables in layer ℓ+1, the layer immediately above it.

- After learning, the values of the latent variables in every layer can be inferred in a single bottom-up pass, starting from the data observed in the bottom layer.

It is worth noting that a DBN with one hidden layer is simply a restricted Boltzmann machine (RBM). To train a DBN, an RBM is first trained using contrastive divergence or stochastic maximum likelihood. A second RBM is then trained to model the distribution defined by sampling the hidden units of the first RBM when it is driven by the data. This process can be repeated as many times as desired to add further layers to the DBN.
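Greedy layer-wise DBN training can be sketched in NumPy as follows. The sketch assumes binary visible and hidden units and trains each RBM with one-step contrastive divergence (CD-1). As a common practical simplification, it passes the hidden-unit probabilities of one RBM, rather than sampled states, to the next. The function names and hyperparameters are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_rbm(V, n_hidden, lr=0.1, epochs=300, seed=0):
    """Train a binary RBM with one-step contrastive divergence (CD-1).

    Returns weights W (visible x hidden), visible biases a, hidden biases b.
    """
    rng = np.random.default_rng(seed)
    n, n_visible = V.shape
    W = rng.normal(0.0, 0.01, (n_visible, n_hidden))
    a, b = np.zeros(n_visible), np.zeros(n_hidden)
    for _ in range(epochs):
        ph0 = sigmoid(V @ W + b)                          # p(h = 1 | v), data
        h0 = (rng.random(ph0.shape) < ph0).astype(float)  # sampled hidden states
        pv1 = sigmoid(h0 @ W.T + a)                       # one-step reconstruction
        ph1 = sigmoid(pv1 @ W + b)                        # p(h = 1 | reconstruction)
        W += lr * (V.T @ ph0 - pv1.T @ ph1) / n           # <vh>_data - <vh>_recon
        a += lr * (V - pv1).mean(axis=0)
        b += lr * (ph0 - ph1).mean(axis=0)
    return W, a, b


def train_dbn(V, layer_sizes, **rbm_kwargs):
    """Greedy layer-wise training: each new RBM models the hidden-unit
    activities of the RBM below it when that RBM is driven by the data."""
    layers, inputs = [], V
    for n_hidden in layer_sizes:
        W, a, b = train_rbm(inputs, n_hidden, **rbm_kwargs)
        layers.append((W, a, b))
        # Pass hidden probabilities (not samples) upwards, a common simplification.
        inputs = sigmoid(inputs @ W + b)
    return layers


if __name__ == "__main__":
    # Hypothetical binary data: two 6-bit prototype patterns, 50 copies each.
    V = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]] * 50, dtype=float)
    for W, a, b in train_dbn(V, [4, 2]):
        print(W.shape)
```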

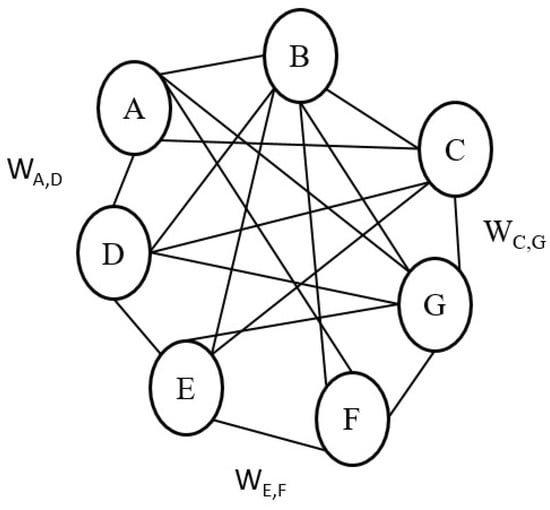

3.2.5. Boltzmann Machine

As a special type of neural network, the Boltzmann machine (BM) consists of symmetrically connected, neuron-like units that make stochastic on/off decisions, as shown in Figure 5 []. In order to identify features that exhibit complex regularities in data, a BM utilizes learning algorithms that are well suited for search and learning problems.

Figure 5.

Boltzmann network.

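To visualize the operation of a BM better, suppose unit i is given the opportunity to update its binary state. First, the unit calculates its total input $z_i$, which is the sum of its bias $b_i$ and the weights on the connections from other active units, as formulated in Equation (2):

$$z_i = b_i + \sum_{j} s_j w_{ij}, \qquad (2)$$

where $w_{ij}$ denotes the weight on the connection between units i and j, and $s_j$ is defined as in Equation (3):

$$s_j = \begin{cases} 1, & \text{if unit } j \text{ is on}, \\ 0, & \text{otherwise}. \end{cases} \qquad (3)$$

Unit i then turns on with the probability formulated in Equation (4):

$$\operatorname{prob}(s_i = 1) = \frac{1}{1 + e^{-z_i}}. \qquad (4)$$

If the units are updated sequentially, in any order that does not depend on their total inputs, the network is expected to eventually reach a Boltzmann distribution, in which the probability of a state vector $\mathbf{v}$ and its energy are given by Equations (5) and (6), respectively:

$$P(\mathbf{v}) = \frac{e^{-E(\mathbf{v})}}{\sum_{\mathbf{u}} e^{-E(\mathbf{u})}}, \qquad (5)$$

$$E(\mathbf{v}) = -\sum_i s_i^{\mathbf{v}} b_i - \sum_{i<j} s_i^{\mathbf{v}} s_j^{\mathbf{v}} w_{ij}, \qquad (6)$$

where $s_i^{\mathbf{v}}$ is the state of unit i in state vector $\mathbf{v}$, and the sum in the denominator of Equation (5) runs over all possible binary state vectors $\mathbf{u}$.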
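The link between the stochastic update rule and the Boltzmann distribution can be checked numerically on a toy network. The NumPy sketch below uses hypothetical helper names and arbitrarily chosen weights and biases. It enumerates all states of a three-unit BM to compute the exact distribution, then compares that distribution with the state frequencies produced by repeatedly applying the logistic update to one unit at a time.

```python
import itertools

import numpy as np


def energy(s, W, b):
    """Equation (6): E = -sum_i s_i b_i - sum_{i<j} s_i s_j w_ij.
    W is symmetric with a zero diagonal, so 0.5 * s W s = sum_{i<j} s_i s_j w_ij."""
    return -s @ b - 0.5 * s @ W @ s


def boltzmann_distribution(W, b):
    """Equation (5), computed exactly by enumerating every binary state vector."""
    states = np.array(list(itertools.product([0, 1], repeat=len(b))), dtype=float)
    unnormalized = np.exp([-energy(s, W, b) for s in states])
    return states, unnormalized / unnormalized.sum()


def gibbs_frequencies(W, b, sweeps=20000, seed=0):
    """Apply Equations (2)-(4) to one unit at a time and count visited states."""
    rng = np.random.default_rng(seed)
    s = np.zeros(len(b))
    counts = {}
    for _ in range(sweeps):
        for i in range(len(b)):
            z_i = b[i] + W[i] @ s                                   # Eq. (2)
            s[i] = float(rng.random() < 1.0 / (1.0 + np.exp(-z_i)))  # Eq. (4)
        counts[tuple(s)] = counts.get(tuple(s), 0) + 1
    return {state: c / sweeps for state, c in counts.items()}


if __name__ == "__main__":
    # Hypothetical symmetric weights (zero diagonal) and biases for 3 units.
    W = np.array([[0.0, 1.5, -1.0], [1.5, 0.0, 0.5], [-1.0, 0.5, 0.0]])
    b = np.array([-0.5, 0.2, 0.1])
    states, probs = boltzmann_distribution(W, b)
    freqs = gibbs_frequencies(W, b)
    for s, p in zip(states, probs):
        print(s, f"exact={p:.3f}", f"sampled={freqs.get(tuple(s), 0.0):.3f}")
```

Exact enumeration is feasible only for a handful of units, because the sum in Equation (5) has $2^N$ terms for N units; this is why sampling is used in practice.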

When a BM is used for search, the weights on the connections can be chosen so that the energy of each state vector represents the cost of that vector; the stochastic updates then help the network escape poor local optima. Learning in a BM takes place in two different manners: with hidden units or without hidden units.

There are various types of BM, and some of them are listed below:

Conditional Boltzmann machines model the data vectors and their distribution in such a way that any extension, no matter how simple it is, leads to conditional distributions.

Mean field Boltzmann machines compute the state of a unit based on the present state of other units in the network by using the real values of mean fields.

Higher-order Boltzmann machines have stochastic dynamics and learning rules that can accommodate more complex energy functions, e.g., energy terms involving interactions among three or more units.

Restricted Boltzmann machines (RBMs): RBMs consist of two types of layers (i.e., visible and hidden), with no connections between units within the same layer [,].

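Because there are no connections between hidden units, the hidden units $\mathbf{h}$ are conditionally independent given the visible units $\mathbf{v}$, so an unbiased sample from the posterior distribution $p(\mathbf{h} \mid \mathbf{v})$ can be obtained in a single step for a given data vector. However, heavy computation is required to get unbiased samples from the model distribution $p(\mathbf{v}, \mathbf{h})$ [].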

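Mathematically, the energy function of an RBM is given in Equation (7), and the corresponding joint probability distribution in Equation (8):

$$E(\mathbf{v}, \mathbf{h}) = -\sum_{i} a_i v_i - \sum_{j} b_j h_j - \sum_{i}\sum_{j} v_i w_{ij} h_j \tag{7}$$

$$p(\mathbf{v}, \mathbf{h}) = \frac{1}{Z} e^{-E(\mathbf{v}, \mathbf{h})}, \qquad Z = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})} \tag{8}$$

In the equations, $b_j$ and $a_i$ stand for the biases of the hidden and visible units, respectively, $w_{ij}$ is the weight between visible unit $i$ and hidden unit $j$, and $Z$ denotes the partition function.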
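The following sketch (an illustration with hypothetical names and random parameters, not taken from the cited works) evaluates the RBM energy and joint distribution for a tiny RBM, computing the partition function $Z$ by enumerating every $(\mathbf{v}, \mathbf{h})$ pair. It then checks the conditional-independence property: the exact $p(\mathbf{h} \mid \mathbf{v})$ read off the joint table equals the product of per-unit logistic probabilities.

```python
import itertools

import numpy as np


def rbm_energy(v, h, W, a, b):
    # Equation (7): E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i w_ij h_j
    return -a @ v - b @ h - v @ W @ h


def joint_table(W, a, b):
    """Equation (8) by brute force: rows index v, columns index h (tiny RBMs only)."""
    n_v, n_h = W.shape
    vs = [np.array(x, dtype=float) for x in itertools.product([0, 1], repeat=n_v)]
    hs = [np.array(x, dtype=float) for x in itertools.product([0, 1], repeat=n_h)]
    unnorm = np.array([[np.exp(-rbm_energy(v, h, W, a, b)) for h in hs] for v in vs])
    Z = unnorm.sum()  # partition function
    return vs, hs, unnorm / Z


def hidden_posterior(v, W, b):
    # With no hidden-hidden connections, p(h_j = 1 | v) = sigmoid(b_j + sum_i v_i w_ij).
    return 1.0 / (1.0 + np.exp(-(b + v @ W)))


rng = np.random.default_rng(1)
W = rng.normal(size=(3, 2))  # illustrative random parameters
a = rng.normal(size=3)
b = rng.normal(size=2)
vs, hs, P = joint_table(W, a, b)

k = 5  # vs[5] is v = (1, 0, 1)
exact_cond = P[k] / P[k].sum()  # p(h | v) from the joint table
q = hidden_posterior(vs[k], W, b)
factorized = np.array([np.prod(np.where(h == 1, q, 1 - q)) for h in hs])
print(np.allclose(exact_cond, factorized))  # True
```

The brute-force $Z$ scales exponentially with the number of units, which is why practical RBM training relies on approximations such as contrastive divergence rather than on exact likelihood gradients.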

Once one hidden layer has been learned, its activities can be treated as the input data for training another RBM. This in turn leads to cascaded learning of multiple hidden layers, so that the entire network can be viewed as a single model, and each added layer can improve a variational lower bound on the log probability of the training data [].

4. Sensory Data Acquisition and Processing Using Deep Learning in Smart Health

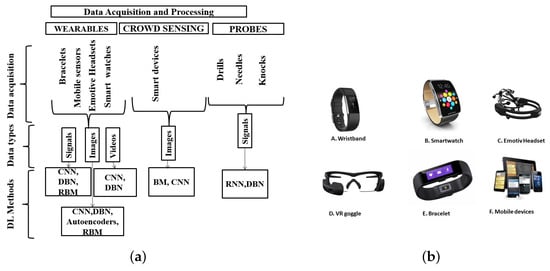

Accurate data acquisition and processing are key to effective healthcare delivery. However, ensuring the accuracy of the acquired data has been one of the typical challenges in smart healthcare systems, owing both to the nature of the data needed for quality assurance in healthcare delivery and to the methods used for data acquisition. This challenge has led to the development of innovative data acquisition techniques that complement upgraded versions of methods already in use. These improvements in data acquisition have, in turn, improved the processing and interpretation of sensory data, and have recently translated into improved quality of healthcare delivery. In this section, we briefly discuss the methods of sensory health data acquisition and processing as they relate to deep learning, as well as how the generated sensory data types are processed using deep learning techniques. Figure 6a presents a brief taxonomy of data acquisition and processing. Data acquisition is performed mainly via wearables and probes as dedicated sensors, and via built-in sensors of mobile devices as non-dedicated sensors.

Figure 6.

Data acquisition methods and processing techniques. (a) Taxonomy of sensory data acquisition and processing techniques; (b) types of wearables/carry-ons.

4.1. Sensory Data Acquisition and Processing via Wearables and Carry-Ons

Wearables and carry-ons have appeared as crucial components of personalized medicine, aiming at performance and quality improvement in healthcare delivery. All wearables are equipped with built-in sensors that are used in data acquisition, and these smart devices come in various forms, as shown in Figure 6b. The sensors in smart watches acquire data on heart rate, movement, blood oxygen level and skin temperature. Virtual reality (VR) goggles capture video/image data, whereas the emotive headset mainly senses brain signals. Wrist bands and bracelets sense heart rate, body mass index (BMI), movement data (i.e., accelerometer and gyroscope) and temperature. Mobile devices provide non-dedicated sensing by acquiring sensory data regarding location, movement and BMI. Although these devices have similar functions, the sensing functions used depend mainly on the situations or needs for which they are required. The sensors embedded in these devices are the main resources for data acquisition and generation. The output data can take the form of signals, images or videos, each of varying importance and usefulness.

Data generated from wearables and carry-ons are processed according to their data types, and the deep learning techniques used in this regard also depend on the data types and intended applications. This section discusses the various deep learning methods used to process the different data types generated from wearables and carry-ons.

- Image processing: Deep learning techniques play a major role in image processing for health advancements. Prominent amongst these methods are CNNs, DBNs, autoencoders and RBMs. The authors in [] use CNNs to create a new network architecture for multi-channel data acquisition and supervised feature learning. Extracting features from brain images (e.g., magnetic resonance imaging (MRI) and functional magnetic resonance imaging (fMRI)) can help in the early diagnosis and prognosis of severe diseases such as glioma. Moreover, the authors in [] use DBNs for the classification of mammography images in order to detect calcifications that may be indicators of breast cancer; with high detection accuracy, proper diagnosis of breast cancer becomes possible in radiology. Kuang and He [] modified a DBN and used it to classify attention deficit hyperactivity disorder (ADHD) from fMRI images. Similarly, Li et al. [] used RBMs to train on and process datasets generated from MRI and positron emission tomography (PET) scans with the aim of accurately diagnosing Alzheimer’s disease. Using deep CNNs and clinical images, Esteva et al. [] detected and classified melanoma, a type of skin cancer; according to their research, this method outperforms previously available skin cancer classification techniques. In the same context, Peyman and Hamid [] showed that CNNs perform better in skin lesion segmentation on clinical and dermoscopy images, and argue that CNNs require less preprocessing than other known methods.

- Signal processing: Signal processing is an area of applied computing that has been evolving since its inception, and it is an extremely important tool in diverse fields, including the processing of medical sensory data. As methods for accurate processing of sensory signals continue to improve, deep learning has emerged as a robust technique for signal processing. For instance, Ha and Choi use improved versions of CNNs to process the signals derived from embedded sensors in mobile devices for the recognition of human activities []. Human activity recognition is an important aspect of ubiquitous computing; one example of its application is the diagnosis of, and the provision of support and care for, people with limited mobility. The authors in [] propose applying a CNN-based methodology to sensed data for the prediction of sleep quality. In the corresponding study, the CNN model is used to classify the factors that contribute to efficient and poor sleeping habits from wearable sensor data []. Furthermore, deep CNNs and deep feed-forward networks have been applied to data acquired via wearable sensors for human activity recognition [].

- Video processing: Deep learning techniques are also used to process videos generated by wearable devices and carry-ons. Prominent amongst these applications is human activity recognition, where CNNs process video data generated by wearables and/or multimedia sensors [,,].
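As a rough illustration of how a CNN consumes wearable sensor signals for activity recognition, the sketch below segments a tri-axial accelerometer stream into overlapping windows and applies one temporal convolution layer with ReLU and global max pooling. It is an untrained forward pass with random filters and synthetic data; the window width, step, filter count and the function names `sliding_windows` and `conv1d_features` are assumptions for illustration, not the architectures of the cited studies.

```python
import numpy as np


def sliding_windows(signal, width, step):
    """Split a (T, channels) sensor stream into overlapping (n_windows, width, channels) segments."""
    starts = range(0, signal.shape[0] - width + 1, step)
    return np.stack([signal[s:s + width] for s in starts])


def conv1d_features(windows, kernels, bias):
    """One temporal convolution layer, ReLU, then global max pooling over time.

    windows: (n, width, c_in); kernels: (n_filters, k, c_in); bias: (n_filters,)
    Returns an (n, n_filters) feature matrix, one row per window.
    """
    n, width, _ = windows.shape
    n_filters, k, _ = kernels.shape
    out = np.empty((n, width - k + 1, n_filters))
    for t in range(width - k + 1):
        patch = windows[:, t:t + k, :]  # (n, k, c_in)
        out[:, t, :] = np.einsum("nkc,fkc->nf", patch, kernels) + bias
    out = np.maximum(out, 0.0)  # ReLU
    return out.max(axis=1)  # global max pooling over time


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    accel = rng.normal(size=(500, 3))  # synthetic stand-in for a tri-axial accelerometer stream
    windows = sliding_windows(accel, width=128, step=64)
    kernels = 0.1 * rng.normal(size=(8, 5, 3))  # untrained, randomly initialized filters
    features = conv1d_features(windows, kernels, np.zeros(8))
    print(windows.shape, features.shape)  # (6, 128, 3) (6, 8)
```

In a trained model, the filters would be learned by backpropagation and the per-window features passed to a classifier that outputs activity labels.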

4.2. Data Acquisition via Probes

Data acquisition using probes was an early-stage data gathering technique, and its development has been made possible by technological enhancements attached to the probing tools. With these enhancements, acquiring sensory readings of medical data has become possible. Probes can take the form of needles, drills and sometimes knocks, with feedback generated via the technological enhancements attached to the probing tools. Probes have undergone a revolution as modern science and traditional medicine are being harmonized, with both playing vital roles in healthcare delivery.

Data generated via probes are usually in the form of signals, and processing them requires deep learning techniques that, in most cases, are adapted for this purpose. As an example, Cheron et al. [] used the invasive electrode injection method to acquire the signals needed to formulate the kinematic relation between electromyography (EMG) and the trajectory of the arm during movements. To this end, the authors used a dynamic recurrent neural network to process these signals and showed the correlation between EMG and arm trajectory.

4.3. Data Acquisition via Crowd-Sensing

Mobile crowd-sensing is a non-dedicated sensing concept in which data are acquired using the built-in sensors of smart devices. As the capabilities of smart devices such as smartphones and tablets have improved tremendously during the last decade, a typical smartphone today is equipped with tens of built-in sensors. Thus, ubiquity and improved sensing, computing and communication capabilities have enabled mobile smart devices to be used as data acquisition tools in critical applications, including smart health and emergency preparedness [,]. This type of data acquisition involves users moving towards a particular location and being implicitly recruited to capture the required data with the built-in sensors of their smart devices []. Crowd-sensing envisions a robust data collection approach in which users have the leverage and the ability to choose and report more data for experimental purposes in real time []. Consequently, this type of data acquisition increases the amount of data available for any purpose, especially for smart health and smart city applications []. Moreover, crowd-sensed data are big, especially in volume and velocity; hence, effective, application-specific processing methods are required to analyse crowd-sensed datasets. Such methods are needed because, besides volume and velocity, the variety and heterogeneity of sensors in mobile crowd-sensing are also considerable, producing an enormous amount of data that may be only partially labelled in some cases.

As an example of a smart health application that uses mobile crowd-sensed data, Pan et al. [] introduced AirTick, which utilizes crowd-sensed image data to obtain air quality information. To this end, AirTick applies Boltzmann machines as the deep learning method to process the crowd-sensed images. Furthermore, the authors in [] proposed cleaner and healthier neighbourhoods and developed a mobile crowd-sensing application called SpotGarbage, which allows users to capture images of garbage in their locality and send them to a data hub where the data are analysed. In the SpotGarbage application, CNNs are used as the deep learning method to analyse the crowd-sensed images.

Based on the review of the different data acquisition techniques and the deep learning methodologies applied to the data sensed under them, a brief summary is presented in Table 2. The table lists the data acquisition techniques, their corresponding data types and examples of the deep learning techniques used.

Table 2.

Summary of data acquisition methods, data types and examples of deep learning techniques used. Three data acquisition categories are defined, which acquire images, one-dimensional signals and videos. CNN, DBN, restricted Boltzmann machine (RBM) and BM are the deep learning methods used to analyse big sensed data.

5. Deep Learning Challenges in Big Sensed Data: Opportunities in Smart Health Applications

Deep learning can assist in the processing of sensory data through classification, as well as prediction via learning. Training a deep learning model on a dataset involves making predictions, detecting errors and improving prediction quality over time. Based on the review of the state of the art in the previous sections, integrating sensed data in smart health applications with deep learning yields promising outcomes. On the other hand, several challenges and open issues need to be addressed before such integration can be realized. As these challenges arise from the nature of deep learning, sensor deployment and sensory data acquisition, addressing them paves the way towards robust smart health applications. Accordingly, in this section, we introduce the challenges and open issues in the integration of deep learning with smart health sensory data, and then present opportunities to cope with these challenges in various applications that integrate deep learning with sensory data.

5.1. Challenges and Open Issues

Deep learning techniques have recently attracted researchers from many fields for sustainable and efficient smart health delivery in smart environments. However, the application of deep learning techniques to sensory data still faces challenges. Indeed, in most smart health applications, CNNs have been introduced as a way to cope with the challenges from which other deep learning networks suffer. Thanks to these improvements, CNNs have to date been identified as the most useful tool in most smart health cases.

To fully exploit deep learning techniques on medical sensory data, certain challenges need to be addressed by researchers in this field. The challenges faced by deep learning techniques in smart health are mostly related to the acquisition, quality and dimensionality of data, since inferences or decisions are made based on the output of the processed data. As seen in the previous section, data acquisition takes place in heterogeneous settings, i.e., various devices with their own sampling frequencies, operating systems and data formats. This heterogeneity generally results in a data plane with very high dimensionality. The higher the dimension, the more difficult training becomes, which ultimately leads to longer times for result generation. Moreover, determining the depth of the network architecture needed to obtain a favourable result is another outstanding challenge, since the depth of the network also impacts the training time.

The value and trustworthiness of the data constitute another challenge that impacts the success of deep learning algorithms in a smart health setting. As any deep learning technique is supposed to be applied to big sensed health data, novel data acquisition techniques that ensure the highest level of trustworthiness for the acquired data are urgently needed.

Uncertainty in the acquired sensor data remains a grand challenge, as a significant amount of the acquired data is partially labelled or unlabelled. Therefore, novel mechanisms to quantify and cope with uncertainty in the sensed data are needed to improve the accuracy, as well as the efficiency, of deep learning techniques in smart health applications.

Furthermore, data acquisition via non-dedicated sensors is also possible in smart city sensing []. When non-dedicated sensors are used for data acquisition in smart health, knowing how much data should be acquired prior to processing is a big challenge. Since dedicated and non-dedicated sensors are mostly coupled in smart health applications, determining the amount of data required from different wearables becomes a grand challenge as well. Recent research proposes the use of compressive sensing methods in participatory or opportunistic sensing via mobile devices [], so that data explosion in non-dedicated acquisition can be prevented. However, in the presence of non-dedicated sensing systems, dynamically determining the number of wearables/sensors that can ensure the desired amount of data is another open issue to be addressed prior to analysing the big sensed data via deep learning networks.
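A minimal sketch of the compressive sensing idea mentioned above, assuming a signal that is sparse in the sample domain, a random Gaussian sensing matrix and orthogonal matching pursuit (OMP) for recovery. These are generic textbook choices, not necessarily those of the cited work, and the sizes and the function name `omp` are illustrative. A device would transmit only the m measurements `y` instead of the n raw samples. Real physiological signals are usually sparse only in a transform basis (e.g., wavelets), in which case the sensing matrix would be composed with that basis.

```python
import numpy as np


def omp(Phi, y, sparsity):
    """Orthogonal matching pursuit: greedily recover a sparse x from y = Phi @ x."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(sparsity):
        j = int(np.argmax(np.abs(Phi.T @ residual)))  # column most correlated with the residual
        if j not in support:
            support.append(j)
        coef, *_ = np.linalg.lstsq(Phi[:, support], y, rcond=None)  # re-fit on the support
        residual = y - Phi[:, support] @ coef
    x_hat = np.zeros(Phi.shape[1])
    x_hat[support] = coef
    return x_hat


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, m, k = 256, 128, 5  # signal length, measurements, nonzeros (illustrative)
    x = np.zeros(n)
    idx = rng.choice(n, size=k, replace=False)
    x[idx] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
    Phi = rng.normal(size=(m, n)) / np.sqrt(m)  # random Gaussian sensing matrix
    y = Phi @ x  # m compressed measurements instead of n samples
    x_hat = omp(Phi, y, k)
    print(np.max(np.abs(x_hat - x)))  # recovery error, near zero when OMP succeeds
```

The number of measurements needed grows roughly with the sparsity level rather than the raw signal length, which is the property that lets compressive sensing curb data volume at the non-dedicated end.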

In addition, ensuring the trustworthiness of the acquired sensory data prior to deep learning analysis remains an open issue, although auction and game-theoretic solutions have been proposed to increase user involvement in the trustworthiness assurance stage for data acquired via non-dedicated sensors [,]. When dedicated and non-dedicated sensors collaborate in data acquisition, coping with the reliability of the non-dedicated end still requires efficient solutions. It is worth noting that deep learning can also be used for behaviour analysis of non-dedicated sensors in such an environment, with the aim of eliminating unreliable sensing sources from the data acquisition.

Last but not least, recent research points out the emergence of IoT-driven data acquisition systems [,].

5.2. Opportunities in Smart Health Applications for Deep Learning

In this section, we discuss some of the applications of deep learning. To this end, we categorize these applications into three main groups for easy reference. Table 3 shows a summary of these applications together with the deep learning methods used under the three categories, namely medical imaging, bioinformatics and predictive analysis. The table is a useful reference to select the appropriate deep learning technique(s) while aiming to address the challenges and open issues in the previous subsection.

Table 3.

Smart health applications with their respective deep learning techniques on medical sensory data. Applications are grouped into three categories: Medical imaging, bioinformatics and predictive analysis. Each application addresses multiple problems on sensed data through various deep learning techniques. DNN, deep neural network.

- Medical imaging: Deep learning techniques have helped improve healthcare through accurate disease detection and recognition. An example is the detection of melanoma: deep learning algorithms learn important features related to melanoma from a set of medical images and run their learning-based prediction algorithms to detect the presence or likelihood of the disease. Furthermore, using images from MRI, fMRI and other sources, deep learning has helped in 3D brain reconstruction using autoencoders and deep CNNs [], neural cell classification using CNNs [], brain tissue classification using DBNs [,], tumour detection using DNNs [,] and Alzheimer’s diagnosis using DNNs [].

- Bioinformatics: The applications of deep learning in bioinformatics have seen a resurgence in the diagnosis and treatment of many terminal diseases. Examples can be seen in cancer diagnosis, where deep autoencoders are applied to gene expression input data [], and in gene selection/classification and gene variant analysis using microarray data with the aid of deep belief networks []. Moreover, deep belief networks play a key role in protein slicing/sequencing [,].

- Predictive analysis: Disease prediction has gained momentum with the advent of learning-based systems. Given the capability of deep learning to predict the occurrence of diseases accurately, predictive analysis of the future likelihood of diseases has made significant progress. Techniques used for the predictive analysis of diseases include autoencoders [], recurrent neural networks [] and CNNs [,]. On the other hand, in order to improve prediction accuracy, sensory data monitoring medical phenomena have to be coupled with sensory data monitoring human behaviour. Coupling data acquired from medical and behavioural sensors helps in conducting effective analysis of human behaviour in order to find patterns that could help in disease prediction and prevention.

6. Conclusions

With the growing need for, and widespread use of, sensor and actuator networks in smart cities, there is a growing demand for top-notch methods for the acquisition and processing of big sensed data. Among smart city services, smart healthcare applications are becoming a part of daily life, helping to prolong the lives of members of society and improve their quality of life. Given the heterogeneous and varied types of data generated on a daily basis, sensor and actuator networks (i.e., wearables, carry-ons and other medical sensors) call for effective acquisition of sensed data, as well as accurate and efficient processing to derive conclusions, predictions and recommendations regarding the health state of individuals. Deep learning is an effective tool for processing big sensed data, especially in these settings. Although deep learning evolved from the traditional artificial neural network concept, it has become a rapidly evolving field of its own with the advent of improved computational power and the convergence of wired/wireless communication systems. In this article, we have briefly discussed the growing concept of smart health within the smart city framework by highlighting its major benefits for the social sustainability of the smart city infrastructure. We have provided a comprehensive survey of the use of deep learning techniques to analyse sensory data in e-health and presented the major deep learning techniques, namely deep feed-forward networks, autoencoders, convolutional neural networks, deep belief networks, Boltzmann machines and restricted Boltzmann machines. Furthermore, we have introduced various data acquisition mechanisms, namely wearables, probes and crowd-sensing. Following these, we have also linked the surveyed deep learning techniques to existing use cases in the analysis of medical sensory data.
In order to provide a thorough understanding of these linkages, we have categorized the sensory data acquisition techniques based on the available technology for data generation. In the last part of this review article, we have studied smart health applications that involve sensors and actuators and visited specific use cases in those applications, along with the existing deep learning solutions to effectively analyse sensory data. To facilitate a thorough understanding of these applications and their requirements, we have classified them under three categories: medical imaging, bioinformatics and predictive analysis. Furthermore, we have provided a thorough discussion of the open issues and challenges in big sensed data in smart health, mainly focusing on the data acquisition and processing aspects from the standpoint of deep learning techniques.

Acknowledgments

This material is based upon work supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) under Grant RGPIN/2017-04032.

Author Contributions

Alex Adim Obinikpo and Burak Kantarci conceived and pursued the literature survey on deep learning techniques on big sensed data for smart health applications, reviewed the state of the art, challenges and opportunities, and made conclusions. They both wrote the paper. Alex Adim Obinikpo created the illustrative images.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Guelzim, T.; Obaidat, M.; Sadoun, B. Chapter 1—Introduction and overview of key enabling technologies for smart cities and homes. In Smart Cities and Homes; Obaidat, M.S., Nicopolitidis, P., Eds.; Morgan Kaufmann: Boston, MA, USA, 2016; pp. 1–16. [Google Scholar]

- Liu, D.; Huang, R.; Wosinski, M. Development of Smart Cities: Educational Perspective. In Smart Learning in Smart Cities; Springer: Singapore, 2017; pp. 3–14. [Google Scholar]

- Nam, T.; Pardo, T.A. Conceptualizing smart city with dimensions of technology, people, and institutions. In Proceedings of the 12th Annual International Digital Government Research Conference: Digital Government Innovation in Challenging Times, College Park, MD, USA, 12–15 June 2011; ACM: New York, NY, USA, 2011; pp. 282–291. [Google Scholar]

- Anthopoulos, L.G. The Rise of the Smart City. In Understanding Smart Cities: A Tool for Smart Government or an Industrial Trick? Springer: Cham, Switzerland, 2017; pp. 5–45. [Google Scholar]

- Munzel, A.; Meyer-Waarden, L.; Galan, J.P. The social side of sustainability: Well-being as a driver and an outcome of social relationships and interactions on social networking sites. Technol. Forecast. Soc. Change 2017, in press. [Google Scholar] [CrossRef]

- Fan, M.; Sun, J.; Zhou, B.; Chen, M. The smart health initiative in China: The case of Wuhan, Hubei province. J. Med. Syst. 2016, 40, 62. [Google Scholar] [CrossRef] [PubMed]

- Ndiaye, M.; Hancke, G.P.; Abu-Mahfouz, A.M. Software Defined Networking for Improved Wireless Sensor Network Management: A Survey. Sensors 2017, 17, 1031. [Google Scholar] [CrossRef] [PubMed]

- Pramanik, M.I.; Lau, R.Y.; Demirkan, H.; Azad, M.A.K. Smart health: Big data enabled health paradigm within smart cities. Expert Syst. Appl. 2017, 87, 370–383. [Google Scholar] [CrossRef]

- Nef, T.; Urwyler, P.; Büchler, M.; Tarnanas, I.; Stucki, R.; Cazzoli, D.; Müri, R.; Mosimann, U. Evaluation of three state-of-the-art classifiers for recognition of activities of daily living from smart home ambient data. Sensors 2015, 15, 11725–11740. [Google Scholar] [CrossRef] [PubMed]

- Ali, Z.; Muhammad, G.; Alhamid, M.F. An Automatic Health Monitoring System for Patients Suffering from Voice Complications in Smart Cities. IEEE Access 2017, 5, 3900–3908. [Google Scholar] [CrossRef]

- Hossain, M.S.; Muhammad, G.; Alamri, A. Smart healthcare monitoring: A voice pathology detection paradigm for smart cities. Multimedia Syst. 2017. [Google Scholar] [CrossRef]

- Mehta, Y.; Pai, M.M.; Mallissery, S.; Singh, S. Cloud enabled air quality detection, analysis and prediction—A smart city application for smart health. In Proceedings of the 2016 3rd MEC International Conference on Big Data and Smart City (ICBDSC), Muscat, Oman, 15–16 March 2016; pp. 1–7. [Google Scholar]

- Sahoo, P.K.; Thakkar, H.K.; Lee, M.Y. A Cardiac Early Warning System with Multi Channel SCG and ECG Monitoring for Mobile Health. Sensors 2017, 17, 711. [Google Scholar] [CrossRef] [PubMed]

- Kim, T.; Park, J.; Heo, S.; Sung, K.; Park, J. Characterizing dynamic walking patterns and detecting falls with wearable sensors using Gaussian process methods. Sensors 2017, 17, 1172. [Google Scholar] [CrossRef] [PubMed]

- Yeh, Y.T.; Hsu, M.H.; Chen, C.Y.; Lo, Y.S.; Liu, C.T. Detection of potential drug-drug interactions for outpatients across hospitals. Int. J. Environ. Res. Public Health 2014, 11, 1369–1383. [Google Scholar] [CrossRef] [PubMed]

- Venkatesh, J.; Aksanli, B.; Chan, C.S.; Akyurek, A.S.; Rosing, T.S. Modular and Personalized Smart Health Application Design in a Smart City Environment. IEEE Internet Things J. 2017, PP, 1. [Google Scholar] [CrossRef]

- Rajaram, M.L.; Kougianos, E.; Mohanty, S.P.; Sundaravadivel, P. A wireless sensor network simulation framework for structural health monitoring in smart cities. In Proceedings of the 2016 IEEE 6th International Conference on Consumer Electronics-Berlin (ICCE-Berlin), Berlin, Germany, 5–7 September 2016; pp. 78–82. [Google Scholar]

- Hijazi, S.; Page, A.; Kantarci, B.; Soyata, T. Machine Learning in Cardiac Health Monitoring and Decision Support. IEEE Comput. 2016, 49, 38–48. [Google Scholar] [CrossRef]

- Ota, K.; Dao, M.S.; Mezaris, V.; Natale, F.G.B.D. Deep Learning for Mobile Multimedia: A Survey. ACM Trans. Multimedia Comput. Commun. Appl. 2017, 13, 34. [Google Scholar] [CrossRef]

- Yu, D.; Deng, L. Deep Learning and Its Applications to Signal and Information Processing [Exploratory DSP]. IEEE Signal Process. Mag. 2011, 28, 145–154. [Google Scholar] [CrossRef]

- Larochelle, H.; Bengio, Y. Classification using discriminative restricted Boltzmann machines. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 536–543. [Google Scholar]

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408. [Google Scholar]

- Wang, L.; Sng, D. Deep Learning Algorithms with Applications to Video Analytics for A Smart City: A Survey. arXiv, 2015; arXiv:1512.03131. [Google Scholar]

- Cavazza, J.; Morerio, P.; Murino, V. When Kernel Methods Meet Feature Learning: Log-Covariance Network for Action Recognition From Skeletal Data. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Keceli, A.S.; Kaya, A.; Can, A.B. Action recognition with skeletal volume and deep learning. In Proceedings of the 2017 25th Signal Processing and Communications Applications Conference (SIU), Antalya, Turkey, 15–18 May 2017; pp. 1–4. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Alsheikh, M.A.; Lin, S.; Niyato, D.; Tan, H.P. Machine Learning in Wireless Sensor Networks: Algorithms, Strategies, and Applications. IEEE Commun. Surv. Tutor. 2014, 16, 1996–2018. [Google Scholar] [CrossRef]

- Mohri, M.; Rostamizadeh, A.; Talwalkar, A. Foundations of Machine Learning; MIT Press: Cambridge, MA, USA, 2012. [Google Scholar]

- Clifton, L.; Clifton, D.A.; Pimentel, M.A.F.; Watkinson, P.J.; Tarassenko, L. Predictive Monitoring of Mobile Patients by Combining Clinical Observations with Data from Wearable Sensors. IEEE J. Biomed. Health Inform. 2014, 18, 722–730. [Google Scholar] [CrossRef] [PubMed]

- Tsiouris, K.M.; Gatsios, D.; Rigas, G.; Miljkovic, D.; Seljak, B.K.; Bohanec, M.; Arredondo, M.T.; Antonini, A.; Konitsiotis, S.; Koutsouris, D.D.; et al. PD_Manager: An mHealth platform for Parkinson’s disease patient management. Healthcare Technol. Lett. 2017, 4, 102–108.

- Hu, K.; Rahman, A.; Bhrugubanda, H.; Sivaraman, V. HazeEst: Machine Learning Based Metropolitan Air Pollution Estimation from Fixed and Mobile Sensors. IEEE Sens. J. 2017, 17, 3517–3525.

- Tariq, O.B.; Lazarescu, M.T.; Iqbal, J.; Lavagno, L. Performance of Machine Learning Classifiers for Indoor Person Localization with Capacitive Sensors. IEEE Access 2017, 5, 12913–12926.

- Jahangiri, A.; Rakha, H.A. Applying Machine Learning Techniques to Transportation Mode Recognition Using Mobile Phone Sensor Data. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2406–2417.

- Schmidhuber, J. Deep Learning in Neural Networks: An Overview. Neural Netw. 2014, 61, 85–117.

- Deng, L.; Yu, D. Deep learning: Methods and applications. Found. Trends Signal Process. 2014, 7, 197–387.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Do, T.M.T.; Gatica-Perez, D. The places of our lives: Visiting patterns and automatic labeling from longitudinal smartphone data. IEEE Trans. Mob. Comput. 2014, 13, 638–648.

- Al Rahhal, M.M.; Bazi, Y.; AlHichri, H.; Alajlan, N.; Melgani, F.; Yager, R.R. Deep learning approach for active classification of electrocardiogram signals. Inform. Sci. 2016, 345, 340–354.

- Acharya, U.R.; Fujita, H.; Lih, O.S.; Adam, M.; Tan, J.H.; Chua, C.K. Automated Detection of Coronary Artery Disease Using Different Durations of ECG Segments with Convolutional Neural Network. Knowl.-Based Syst. 2017, 132, 62–71.

- Hosseini, M.P.; Tran, T.X.; Pompili, D.; Elisevich, K.; Soltanian-Zadeh, H. Deep Learning with Edge Computing for Localization of Epileptogenicity Using Multimodal rs-fMRI and EEG Big Data. In Proceedings of the 2017 IEEE International Conference on Autonomic Computing (ICAC), Columbus, OH, USA, 17–21 July 2017; pp. 83–92.

- Zheng, W.L.; Zhu, J.Y.; Peng, Y.; Lu, B.L. EEG-based emotion classification using deep belief networks. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo (ICME), Chengdu, China, 14–18 July 2014; pp. 1–6.

- Gu, W. Non-intrusive blood glucose monitor by multi-task deep learning: PhD forum abstract. In Proceedings of the 16th ACM/IEEE International Conference on Information Processing in Sensor Networks, Pittsburgh, PA, USA, 18–20 April 2017; ACM: New York, NY, USA, 2017; pp. 249–250.

- Anagnostopoulos, T.; Zaslavsky, A.; Kolomvatsos, K.; Medvedev, A.; Amirian, P.; Morley, J.; Hadjieftymiades, S. Challenges and Opportunities of Waste Management in IoT-Enabled Smart Cities: A Survey. IEEE Trans. Sustain. Comput. 2017, 2, 275–289.

- Taleb, S.; Al Sallab, A.; Hajj, H.; Dawy, Z.; Khanna, R.; Keshavamurthy, A. Deep learning with ensemble classification method for sensor sampling decisions. In Proceedings of the 2016 International Wireless Communications and Mobile Computing Conference (IWCMC), Paphos, Cyprus, 5–9 September 2016; pp. 114–119.

- Costilla-Reyes, O.; Scully, P.; Ozanyan, K.B. Deep Neural Networks for Learning Spatio-Temporal Features from Tomography Sensors. IEEE Trans. Ind. Electron. 2018, 65, 645–653.

- Fang, S.H.; Fei, Y.X.; Xu, Z.; Tsao, Y. Learning Transportation Modes From Smartphone Sensors Based on Deep Neural Network. IEEE Sens. J. 2017, 17, 6111–6118.

- Xu, X.; Yin, S.; Ouyang, P. Fast and low-power behavior analysis on vehicles using smartphones. In Proceedings of the 2017 6th International Symposium on Next Generation Electronics (ISNE), Keelung, Taiwan, 23–25 May 2017; pp. 1–4.

- Eskofier, B.M.; Lee, S.I.; Daneault, J.F.; Golabchi, F.N.; Ferreira-Carvalho, G.; Vergara-Diaz, G.; Sapienza, S.; Costante, G.; Klucken, J.; Kautz, T.; et al. Recent machine learning advancements in sensor-based mobility analysis: Deep learning for Parkinson’s disease assessment. In Proceedings of the 2016 IEEE 38th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 655–658.

- Zhu, J.; Pande, A.; Mohapatra, P.; Han, J.J. Using deep learning for energy expenditure estimation with wearable sensors. In Proceedings of the 2015 17th International Conference on E-health Networking, Application & Services (HealthCom), Boston, MA, USA, 14–17 October 2015; pp. 501–506.

- Jankowski, S.; Szymański, Z.; Dziomin, U.; Mazurek, P.; Wagner, J. Deep learning classifier for fall detection based on IR distance sensor data. In Proceedings of the 2015 IEEE 8th International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Warsaw, Poland, 24–26 September 2015; Volume 2, pp. 723–727.

- Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcao, A.X.; Rocha, A. Deep representations for iris, face, and fingerprint spoofing detection. IEEE Trans. Inform. Forensics Secur. 2015, 10, 864–879.

- Yin, Y.; Liu, Z.; Zimmermann, R. Geographic information use in weakly-supervised deep learning for landmark recognition. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 1015–1020.

- Barreto, T.L.; Rosa, R.A.; Wimmer, C.; Moreira, J.R.; Bins, L.S.; Cappabianco, F.A.M.; Almeida, J. Classification of Detected Changes from Multitemporal High-Res Xband SAR Images: Intensity and Texture Descriptors From SuperPixels. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 5436–5448.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Alain, G.; Bengio, Y.; Rifai, S. Regularized auto-encoders estimate local statistics. Proc. CoRR 2012, 1–17.

- Rifai, S.; Bengio, Y.; Dauphin, Y.; Vincent, P. A generative process for sampling contractive auto-encoders. arXiv, 2012; arXiv:1206.6434.

- Abdulnabi, A.H.; Wang, G.; Lu, J.; Jia, K. Multi-Task CNN Model for Attribute Prediction. IEEE Trans. Multimedia 2015, 17, 1949–1959.

- Deng, L.; Abdel-Hamid, O.; Yu, D. A deep convolutional neural network using heterogeneous pooling for trading acoustic invariance with phonetic confusion. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 6669–6673.

- Aghdam, H.H.; Heravi, E.J. Guide to Convolutional Neural Networks: A Practical Application to Traffic-Sign Detection and Classification; Springer: Cham, Switzerland, 2017.

- Huang, G.; Lee, H.; Learned-Miller, E. Learning hierarchical representations for face verification with convolutional deep belief networks. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2518–2525.

- Ackley, D.H.; Hinton, G.E.; Sejnowski, T.J. A learning algorithm for Boltzmann machines. Cognit. Sci. 1985, 9, 147–169.

- Salakhutdinov, R.; Mnih, A.; Hinton, G. Restricted Boltzmann machines for collaborative filtering. In Proceedings of the 24th International Conference on Machine Learning, Corvallis, OR, USA, 20–24 June 2007; pp. 791–798.

- Ribeiro, B.; Gonçalves, I.; Santos, S.; Kovacec, A. Deep Learning Networks for Off-Line Handwritten Signature Recognition. In Proceedings of the 2011 CIARP 16th Iberoamerican Congress on Pattern Recognition, Pucón, Chile, 15–18 November 2011; pp. 523–532.

- Nie, D.; Zhang, H.; Adeli, E.; Liu, L.; Shen, D. 3D Deep Learning for Multi-Modal Imaging-Guided Survival Time Prediction of Brain Tumor Patients; Springer: Cham, Switzerland, 2016.

- Rose, D.C.; Arel, I.; Karnowski, T.P.; Paquit, V.C. Applying deep-layered clustering to mammography image analytics. In Proceedings of the 2010 Biomedical Sciences and Engineering Conference, Oak Ridge, TN, USA, 25–26 May 2010; pp. 1–4.

- Kuang, D.; He, L. Classification on ADHD with Deep Learning. In Proceedings of the 2014 International Conference on Cloud Computing and Big Data, Wuhan, China, 12–14 November 2014; pp. 27–32.

- Li, F.; Tran, L.; Thung, K.H.; Ji, S.; Shen, D.; Li, J. A Robust Deep Model for Improved Classification of AD/MCI Patients. IEEE J. Biomed. Health Inform. 2015, 19, 1610–1616.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Sabouri, P.; GholamHosseini, H. Lesion border detection using deep learning. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 1416–1421.

- Ha, S.; Choi, S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 381–388.

- Sathyanarayana, A.; Joty, S.; Fernandez-Luque, L.; Ofli, F.; Srivastava, J.; Elmagarmid, A.; Arora, T.; Taheri, S. Sleep quality prediction from wearable data using deep learning. JMIR mHealth uHealth 2016, 4, e125.

- Hammerla, N.Y.; Halloran, S.; Ploetz, T. Deep, convolutional, and recurrent models for human activity recognition using wearables. arXiv, 2016; arXiv:1604.08880.

- Baccouche, M.; Mamalet, F.; Wolf, C.; Garcia, C.; Baskurt, A. Sequential deep learning for human action recognition. In International Workshop on Human Behavior Understanding; Springer: Berlin/Heidelberg, Germany, 2011; pp. 29–39.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale video classification with convolutional neural networks. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

- Cheron, G.; Draye, J.P.; Bourgeois, M.; Libert, G. A dynamic neural network identification of electromyography and arm trajectory relationship during complex movements. IEEE Trans. Biomed. Eng. 1996, 43, 552–558.

- Page, A.; Hijazi, S.; Askan, D.; Kantarci, B.; Soyata, T. Research Directions in Cloud-Based Decision Support Systems for Health Monitoring Using Internet-of-Things Driven Data Acquisition. Int. J. Serv. Comput. 2016, 4, 18–34.

- Guo, B.; Han, Q.; Chen, H.; Shangguan, L.; Zhou, Z.; Yu, Z. The Emergence of Visual Crowdsensing: Challenges and Opportunities. IEEE Commun. Surv. Tutor. 2017, PP, 1.

- Ma, H.; Zhao, D.; Yuan, P. Opportunities in mobile crowd sensing. IEEE Commun. Mag. 2014, 52, 29–35.

- Haddawy, P.; Frommberger, L.; Kauppinen, T.; De Felice, G.; Charkratpahu, P.; Saengpao, S.; Kanchanakitsakul, P. Situation awareness in crowdsensing for disease surveillance in crisis situations. In Proceedings of the Seventh International Conference on Information and Communication Technologies and Development, Singapore, 15–18 May 2015; p. 38.

- Cardone, G.; Foschini, L.; Bellavista, P.; Corradi, A.; Borcea, C.; Talasila, M.; Curtmola, R. Fostering participaction in smart cities: a geo-social crowdsensing platform. IEEE Commun. Mag. 2013, 51, 112–119.

- Pan, Z.; Yu, H.; Miao, C.; Leung, C. Crowdsensing Air Quality with Camera-Enabled Mobile Devices. In Proceedings of the Twenty-Ninth IAAI Conference, San Francisco, CA, USA, 6–9 February 2017; pp. 4728–4733.

- Mittal, G.; Yagnik, K.B.; Garg, M.; Krishnan, N.C. SpotGarbage: Smartphone app to detect garbage using deep learning. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 940–945.

- Habibzadeh, H.; Qin, Z.; Soyata, T.; Kantarci, B. Large Scale Distributed Dedicated- and Non-Dedicated Smart City Sensing Systems. IEEE Sens. J. 2017, 17, 7649–7658.

- Xu, L.; Hao, X.; Lane, N.D.; Liu, X.; Moscibroda, T. More with Less: Lowering User Burden in Mobile Crowdsourcing through Compressive Sensing. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 September 2015; ACM: New York, NY, USA, 2015; pp. 659–670.

- Pouryazdan, M.; Kantarci, B. The Smart Citizen Factor in Trustworthy Smart City Crowdsensing. IT Prof. 2016, 18, 26–33.

- Pouryazdan, M.; Kantarci, B.; Soyata, T.; Song, H. Anchor-Assisted and Vote-Based Trustworthiness Assurance in Smart City Crowdsensing. IEEE Access 2016, 4, 529–541.

- Farahani, B.; Firouzi, F.; Chang, V.; Badaroglu, M.; Constant, N.; Mankodiya, K. Towards fog-driven IoT eHealth: Promises and challenges of IoT in medicine and healthcare. Future Gener. Comput. Syst. 2018, 78, 659–676.

- Kleesiek, J.; Urban, G.; Hubert, A.; Schwarz, D.; Maier-Hein, K.; Bendszus, M.; Biller, A. Deep MRI brain extraction: A 3D convolutional neural network for skull stripping. Neuroimage 2016, 129, 460–469.

- Fritscher, K.; Raudaschl, P.; Zaffino, P.; Spadea, M.F.; Sharp, G.C.; Schubert, R. Deep Neural Networks for Fast Segmentation of 3D Medical Images. In Medical Image Computing and Computer-Assisted Intervention—MICCAI; Springer: Cham, Switzerland, 2016.

- Fakoor, R.; Ladhak, F.; Nazi, A.; Huber, M. Using deep learning to enhance cancer diagnosis and classification. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013.

- Khademi, M.; Nedialkov, N.S. Probabilistic Graphical Models and Deep Belief Networks for Prognosis of Breast Cancer. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications, Miami, FL, USA, 9–11 December 2015; pp. 727–732.

- Angermueller, C.; Lee, H.J.; Reik, W.; Stegle, O. DeepCpG: Accurate prediction of single-cell DNA methylation states using deep learning. Genome Biol. 2017, 18, 67.

- Tian, K.; Shao, M.; Wang, Y.; Guan, J.; Zhou, S. Boosting Compound-Protein Interaction Prediction by Deep Learning. Methods 2016, 110, 64–72.

- Che, Z.; Purushotham, S.; Khemani, R.; Liu, Y. Distilling Knowledge from Deep Networks with Applications to Healthcare Domain. Ann. Chirurgie 2015, 40, 529–532.

- Lipton, Z.C.; Kale, D.C.; Elkan, C.; Wetzel, R. Learning to Diagnose with LSTM Recurrent Neural Networks. In Proceedings of the International Conference on Learning Representations (ICLR 2016), San Juan, Puerto Rico, 2–4 May 2016.

- Liang, Z.; Zhang, G.; Huang, J.X.; Hu, Q.V. Deep learning for healthcare decision making with EMRs. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine, Belfast, UK, 2–5 November 2014; pp. 556–559.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).