Graphene–PLA Printed Sensor Combined with XR and the IoT for Enhanced Temperature Monitoring: A Case Study

Abstract

1. Introduction

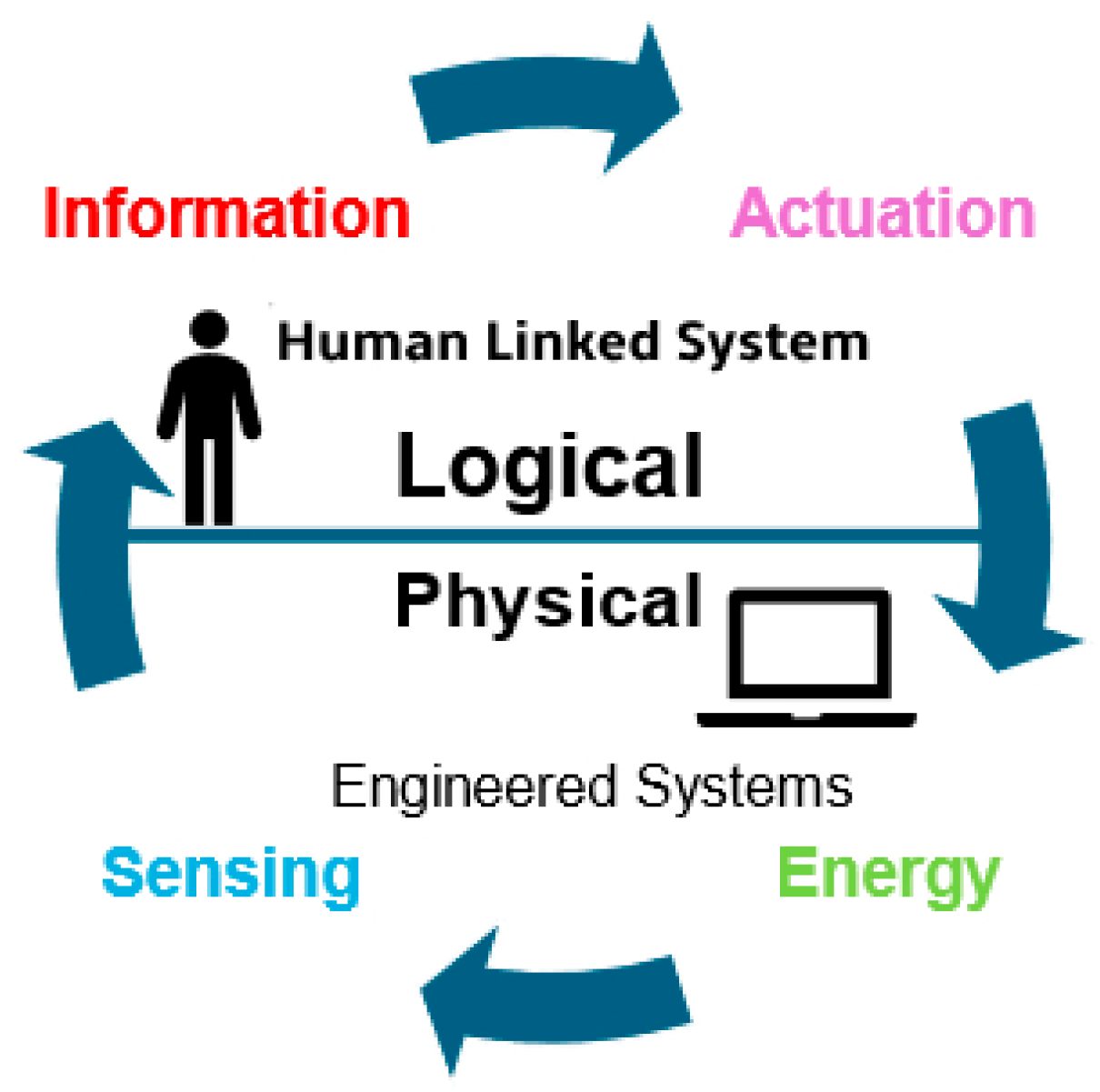

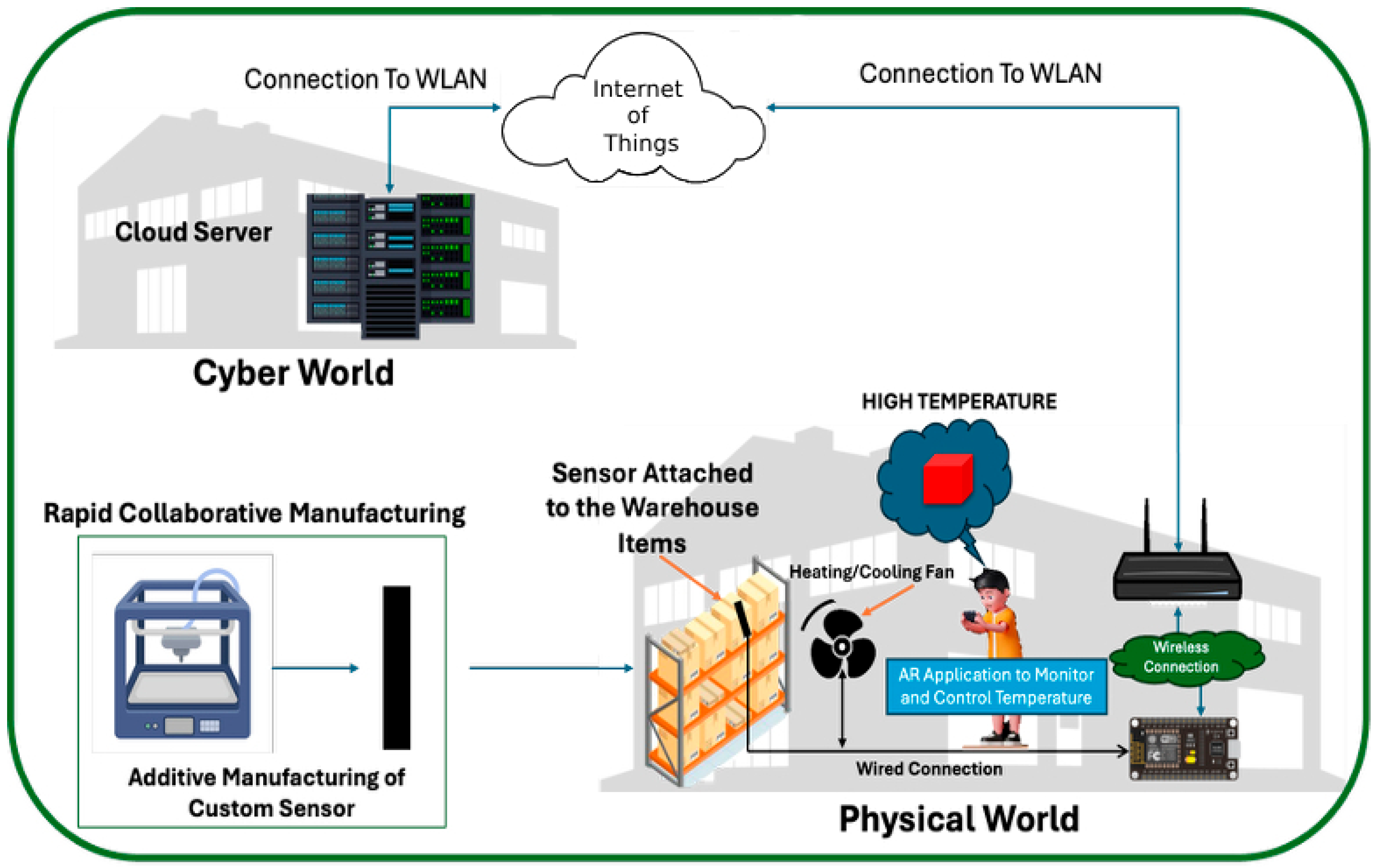

1.1. Role of Cyber–Physical Systems and the Internet of Things in Smart Factories

1.2. Additive Manufacturing for Custom Sensor Solutions

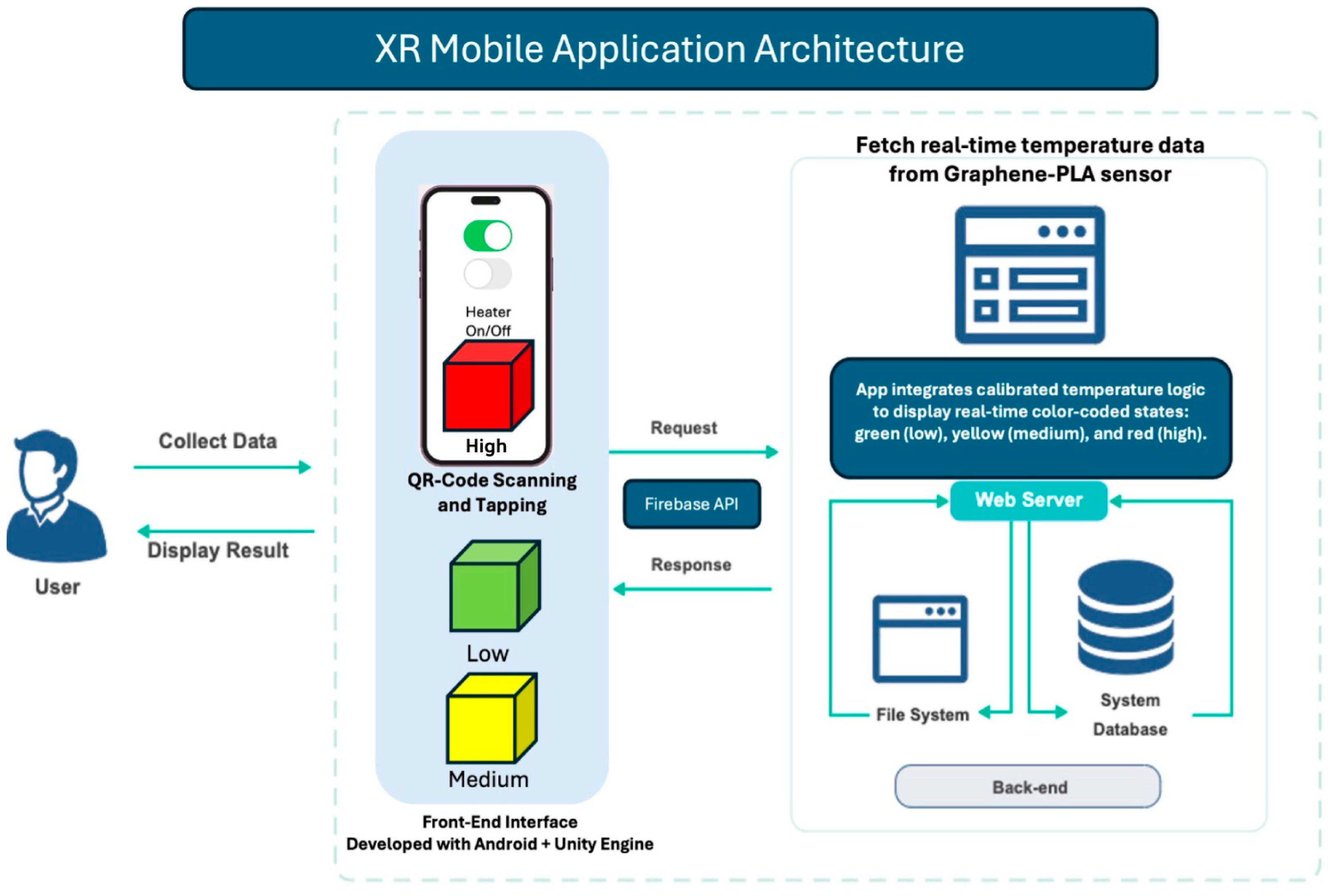

1.3. Extended Reality for Operational Management in Factories

1.4. Objective and Novelty of This Industry 4.0 Case Study

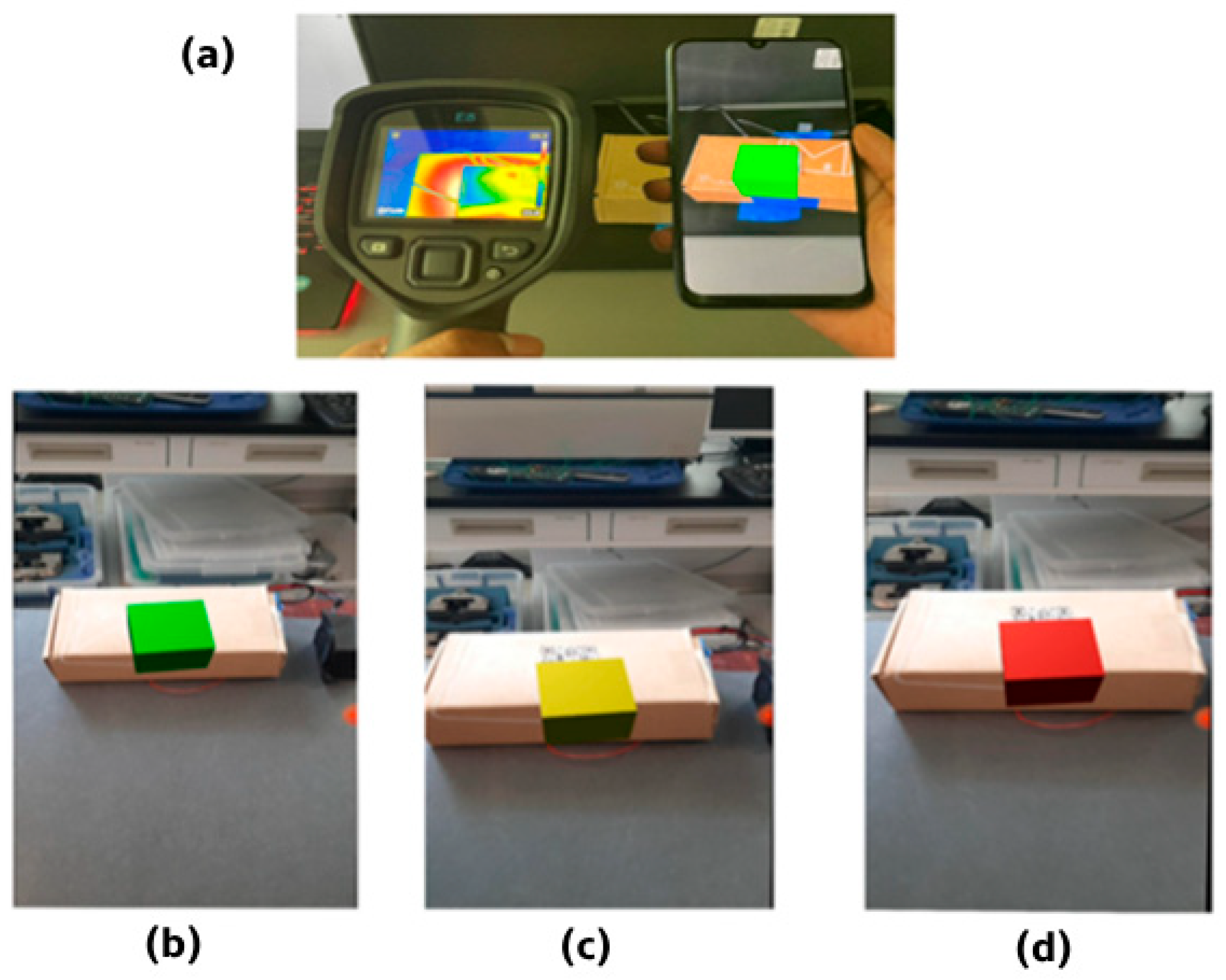

- (a) In the case of manual operation, the user would need to use two independent systems to (i) monitor and (ii) control the part temperature;

- (b) The thermal camera must be carried by the user across the inspection locations;

- (c) The user would need to move to the control switches and operate them physically (which can be prohibitive if the part temperature is too high or if the part is not physically easy to reach).

Novelty: A Human-in-the-Loop (HITL) CPS-Based System Demonstrator via Integration of XR, an AM-Based Sensing Modality

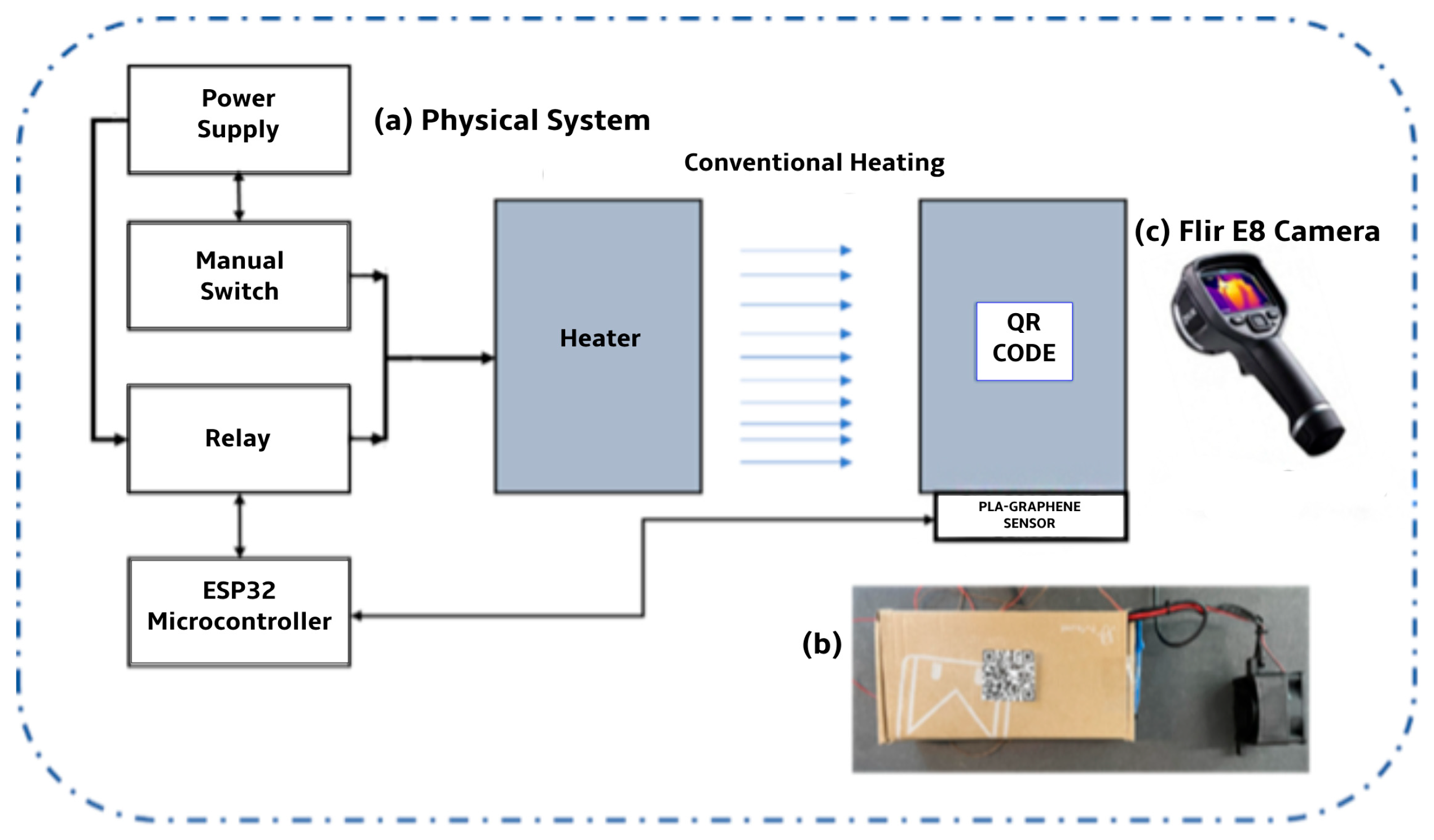

2. Material and Methods

User Study

- Metric 1: Response time—the time taken to “turn off” the heater after reaching the maximum temperature.

- Metric 2: Error—change in temperature upon completing the task.

- Metric 3: Presence—after each experiment, every participant assessed the feeling of presence in the virtual environment using the Holistic Presence Questionnaire (HPQ) [58]; the scores were calculated based on the official source for the HPQ questionnaire.

- Metric 4: Ease and frequency of use, intuitiveness, orientation, speed, and fatigue. Following the completion of each experiment, the participants rated the ease and frequency of use, intuitiveness, speed, and fatigue of the method on a 5-point Likert scale [60] (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree).
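Metrics 1 and 2 can be illustrated with a minimal Python sketch. The `Sample` record, the log layout, and the 60 °C limit are hypothetical and not taken from the study. The sketch also assumes that the error in Metric 2 is the temperature overshoot beyond the limit at the moment the heater is switched off, which is only one possible reading of the definition above.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float          # seconds since the trial started (hypothetical log field)
    temp_c: float     # measured part temperature in °C
    heater_on: bool   # heater state at this sample


def response_time_and_error(samples, max_temp_c):
    """Return (response time in s, temperature error in °C) for one trial."""
    # First sample at which the part reaches the maximum temperature.
    reached = next(s for s in samples if s.temp_c >= max_temp_c)
    # First sample at or after that moment where the heater is off.
    off = next(s for s in samples if s.t >= reached.t and not s.heater_on)
    response_time = off.t - reached.t
    # Assumed reading of Metric 2: overshoot beyond the limit at switch-off.
    error = off.temp_c - max_temp_c
    return response_time, error


# Made-up trial log, for illustration only.
trial = [
    Sample(0.0, 58.0, True),
    Sample(1.0, 60.0, True),
    Sample(2.0, 60.6, True),
    Sample(3.0, 61.0, False),
]
rt, err = response_time_and_error(trial, max_temp_c=60.0)
print(f"response time: {rt:.2f} s, error: {err:.2f} °C")
```

In this made-up trial the limit is reached at 1.0 s and the heater is off at 3.0 s, so the response time is 2.0 s and the error is 1.0 °C.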

3. Results and Discussion

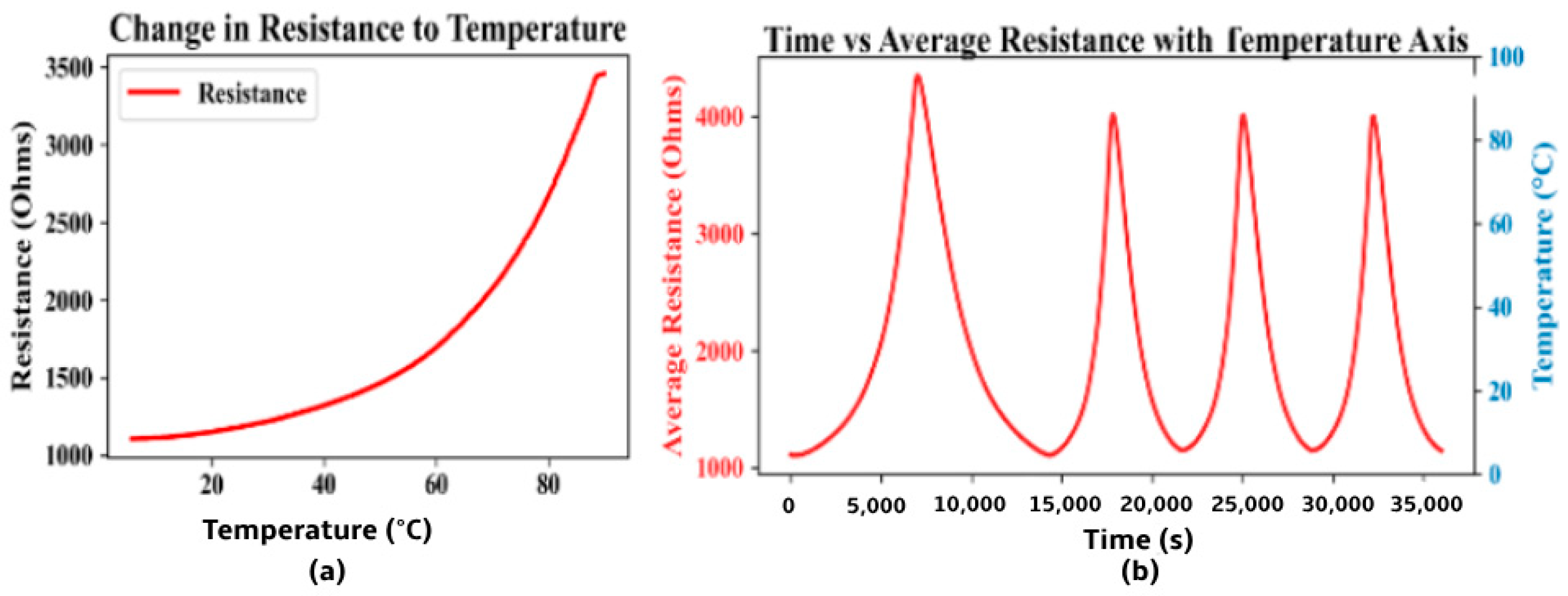

3.1. 3D-Printed Graphene Temperature Sensor

3.2. XR-Based Temperature Monitor and Control

3.2.1. Evaluation of the User Study Metrics

3.2.2. Evaluation of Metric 4: Ease and Frequency of Use, Speed, Fatigue, Trust, and Reliability

3.3. Discussion: Challenges and Future Perspective

- Hands-on Training: conduct more structured, hands-on workshops to enhance user familiarity and confidence with the XR environment and its reliability.

- Iterative Usability Testing: refine XR interface elements iteratively to minimize cognitive load and enhance intuitiveness and ease of use.

- Latency Reduction: optimize data pipelines and networking to ensure real-time sensor updates without perceptible delays.

- Multiple Feedback Mechanisms: integrate multiple forms of user feedback, including visual, haptic, and auditory cues, alongside color-coded indicators to reinforce user confidence in sensor readings.

- Ongoing Validation Exercises: regularly benchmark XR sensor readings against established physical methods (such as thermal cameras), transparently presenting performance metrics within the XR interface itself.

- User Feedback Loops: systematically solicit and incorporate participant feedback after each CPS design trial, allowing iterative improvements that continually enhance trust and user satisfaction.
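The validation exercise suggested above could be sketched as follows. This is a minimal illustration rather than the procedure used in the study: the function name `benchmark` and the paired readings are hypothetical, and bias and mean absolute error are merely two common agreement measures that such a benchmark might report.

```python
from statistics import mean


def benchmark(sensor_c, reference_c):
    """Compare paired readings; return (bias, mean absolute error) in °C."""
    if len(sensor_c) != len(reference_c) or not sensor_c:
        raise ValueError("need two non-empty series of equal length")
    # Signed difference between the sensor and the reference for each pair.
    diffs = [s - r for s, r in zip(sensor_c, reference_c)]
    bias = mean(diffs)
    mae = mean(abs(d) for d in diffs)
    return bias, mae


# Hypothetical paired readings: printed sensor vs. thermal camera.
sensor = [40.5, 50.0, 61.0]
camera = [40.0, 50.5, 60.0]
bias, mae = benchmark(sensor, camera)
print(f"bias: {bias:.2f} °C, MAE: {mae:.2f} °C")
```

A positive bias would indicate that the sensor reads higher than the reference on average, and either figure could be displayed in the XR interface alongside the live reading.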

4. Conclusions

- The graphene-PLA sensor exhibited a thermal coefficient of resistance (TCR) of approximately 0.0061 °C⁻¹, demonstrating a promising capability for real-time temperature assessments and achieving a response time of 1.1 min while maintaining a drift of approximately −0.5% over a 12-h period.

- The user study experiment data, based on both quantitative and qualitative metrics, favored the sensor-XR-based temperature monitoring and control system over the traditional manual method using a thermal camera. Namely, implementing the sensor in conjunction with the XR interface led to improved user response efficiency, reducing the response time to 1.97 s, compared with 4.36 s for the conventional method. The new system also improved accuracy, with an error of 0.91 °C versus 2.23 °C.

- Another improvement was the fact that participants were able to monitor and control the temperature without the need to physically move from their monitoring location (as opposed to the manual method). This would reduce fatigue when using the sensor-XR system while enhancing safety, for example, when handling components with excessive temperature or radiation. The average recorded movement speed for participants during the manual method was 0.191 m/s.

- Participants commented on the increased degree of freedom and multitasking capabilities provided by the sensor-XR system within the working floor space. However, they also indicated concerns regarding trust in such automated systems, reflected in a relatively low score of 2.83/5.00. This suggests a broader issue for future studies regarding the enhancement of the user acceptance of emerging digital technologies under Industry 4.0, as well as the continual training and upskilling of operators in their use.
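To illustrate how a TCR of this size relates resistance to temperature, the sketch below assumes the standard linear model R(T) = R0 · (1 + α · (T − T0)) and inverts it. Only α ≈ 0.0061 °C⁻¹ comes from the text above. The reference resistance `r0_ohm`, the reference temperature `t0_c`, and the sample reading are hypothetical, and the linear model is itself an assumption that may not hold across the sensor's full operating range.

```python
TCR_PER_C = 0.0061  # reported TCR, in 1/°C


def temperature_from_resistance(r_ohm, r0_ohm, t0_c, alpha=TCR_PER_C):
    """Invert R = R0 * (1 + alpha * (T - T0)) to estimate T in °C."""
    if r0_ohm <= 0 or alpha == 0:
        raise ValueError("r0_ohm must be positive and alpha non-zero")
    return t0_c + (r_ohm / r0_ohm - 1.0) / alpha


# Hypothetical calibration point: 1000 ohm at 25 °C.
estimate_c = temperature_from_resistance(r_ohm=1061.0, r0_ohm=1000.0, t0_c=25.0)
print(f"estimated temperature: {estimate_c:.1f} °C")
```

With these illustrative numbers, a 6.1% rise in resistance corresponds to a rise of about 10 °C above the reference temperature.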

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Other (Secondary) Experimental Factors Considered

| Factor | Description |
|---|---|
| Distance from the Target | The object of the temperature control can be defined as the target. Regarding thermal cameras, the temperature measurement accuracy is directly related to the distance between the camera and the object being measured. Furthermore, the distance between the physical control switch also affects the time taken to respond to a high-temperature measurement. Similarly, when using the XR tool, the camera’s focus can be affected by the distance between the user and the object, and the image target might not be activated. However, because of the IoT, the temperature control is not affected by the distance between the object and the user. Here, the distance between the users was fixed at 2 m. In addition, the floor was marked with red tape to ensure the users maintained the distance uniformly. |
| Number of targets | The number of targets to measure can affect the experiment’s time and the user’s fatigue level. Here, the target number was fixed at one object. However, the model can be scaled to as many targets as needed. |
| Devices used | The instruments used during tests can impact the user experience, response time, fatigue, and other factors due to the device’s specifications, such as screen size, camera focal length, phone weight, and device resolution. Here, to expose all participants to the same device treatment, the same FLIR E8 thermal camera was used (for Experiment 1) and the same Samsung M30 Android smartphone (for Experiment 2). |
| Target location and direction | Changes in the direction of the target at each trial would introduce interactions, and differences in the target could demand increased mobility, which may contribute to the user’s fatigue and increase the time taken to complete the task. Here, throughout the experiments, the target was placed in the same direction in front of the user. |

References

- Nuanmeesri, S.; Tharasawatpipat, C.; Poomhiran, L. Transfer Learning Artificial Neural Network-based Ensemble Voting of Water Quality Classification for Different Types of Farming. Eng. Technol. Appl. Sci. Res. 2024, 14, 15384–15392.

- Klieštik, T.; Kral, P.; Bugaj, M.; Durana, P. Generative Artificial Intelligence of Things Systems, Multisensory Immersive Extended Reality Technologies, and Algorithmic Big Data Simulation and Modelling Tools in Digital Twin Industrial Metaverse. Equilibrium. Q. J. Econ. Econ. Policy 2024, 19, 429–461.

- Nuanmeesri, S. The Affordable Virtual Learning Technology of Sea Salt Farming across Multigenerational Users through Improving Fitts’ Law. Sustainability 2024, 16, 7864.

- Zheng, D.; Fu, X.; Liu, X.; Xing, L.; Peng, R. Modeling and Analysis of Cascading Failures in Industrial Internet of Things Considering Sensing-Control Flow and Service Community. IEEE Trans. Reliab. 2024, 74, 2723–2737.

- Fu, X.; Pace, P.; Aloi, G.; Li, W.; Fortino, G. Toward robust and energy-efficient clustering wireless sensor networks: A double-stage scale-free topology evolution model. Comput. Netw. 2021, 200, 108521.

- Baumers, M.; Tuck, C.; Wildman, R.; Ashcroft, I.; Hague, R. Shape Complexity and Process Energy Consumption in Electron Beam Melting: A Case of Something for Nothing in Additive Manufacturing? J. Ind. Ecol. 2016, 21, S157–S167.

- Ching, N.T.; Ghobakhloo, M.; Iranmanesh, M.; Maroufkhani, P.; Asadi, S. Industry 4.0 Applications for Sustainable Manufacturing: A Systematic Literature Review and a Roadmap to Sustainable Development. J. Clean. Prod. 2022, 334, 130133.

- Yao, X.; Ma, N.; Zhang, J.; Wang, K.; Yang, E.; Faccio, M. Enhancing Wisdom Manufacturing as Industrial Metaverse for Industry and Society 5.0. J. Intell. Manuf. 2022, 35, 235–255.

- Kammerer, K.; Pryss, R.; Sommer, K.; Reichert, M. Towards Context-Aware Process Guidance in Cyber-Physical Systems with Augmented Reality. In Proceedings of the 2018 4th International Workshop on Requirements Engineering for Self-Adaptive, Collaborative, and Cyber Physical Systems (RESACS), Banff, AB, Canada, 20 August 2018; pp. 44–49.

- Mourtzis, D.; Angelopoulos, J. Development of an Extended Reality-Based Collaborative Platform for Engineering Education: Operator 5.0. Electronics 2023, 12, 3663.

- Yang, C.; Tu, X.; Autiosalo, J.; Ala-Laurinaho, R.; Mattila, J.; Salminen, P.; Tammi, K. Extended Reality Application Framework for a Digital-Twin-Based Smart Crane. Appl. Sci. 2022, 12, 6030.

- Liu, X.; Cao, J.; Yang, Y.; Jiang, S. CPS-based smart warehouse for Industry 4.0: A survey of the underlying technologies. Computers 2018, 7, 13.

- Odeyinka, O.F.; Omoegun, O.G. Warehouse Operations: An Examination of Traditional and Automated Approaches in Supply Chain Management. IntechOpen 2023.

- Sodiya, E.O.; Umoga, U.J.; Amoo, O.O.; Atadoga, A. AI-driven warehouse automation: A comprehensive review of systems. GSC Adv. Res. Rev. 2024, 18, 272–282.

- Oliveira, G.; Röning, J.; Plentz, P.; Carvalho, J. Efficient task allocation in smart warehouses with multi-delivery stations and heterogeneous robots. arXiv 2022, arXiv:2203.00119.

- Sahara, C.; Aamer, A. Real-time data integration of an Internet-of-Things-based smart warehouse: A case study. Int. J. Pervasive Comput. Commun. 2021, 18, 622–644.

- Affia, I.; Aamer, A. An Internet of Things-based smart warehouse infrastructure: Design and application. J. Sci. Technol. Policy Manag. 2021, 13, 90–109.

- Geest, M.; Teki, B.; Catal, C. Smart warehouses: Rationale, challenges and solution directions. Appl. Sci. 2021, 12, 219.

- Hefnawy, A.; Bouras, A.; Cherifi, C. IoT for smart city services. In Proceedings of the 2016 Augmented Human International Conference, Geneva, Switzerland, 25–27 February 2016; pp. 10–15.

- Zheng, P.; Lin, T.; Chen, C.; Xu, X. A systematic design approach for service innovation of smart product-service systems. J. Clean. Prod. 2018, 201, 657–667.

- Zhang, J.-H.; Li, Z.; Liu, Z.; Li, M.; Guo, J.; Du, J.; Cai, C.; Zhang, S.; Sun, N.; Li, Y.; et al. Inorganic Dielectric Materials Coupling Micro-/Nanoarchitectures for State-of-the-Art Biomechanical-to-Electrical Energy Conversion Devices. Adv. Mater. 2025, 2419081.

- Bose, S.; Kar, B.; Roy, M.; Gopalakrishnan, P.; Basu, A. Adepos. In Proceedings of the ASPDAC 19: 24th Asia and South Pacific Design Automation Conference, Tokyo, Japan, 21–24 January 2019; pp. 597–602.

- Tan, Y.; Yang, W.; Yoshida, K.; Takakuwa, S. Application of IoT-aided simulation to manufacturing systems in cyber-physical system. Machines 2019, 7, 2.

- Dewi, I.; Shofa, R. Development of warehouse management system to manage warehouse operations. JAISI 2023, 1, 15–23.

- Burns, M.; Manganelli, J.; Wollman, D.; Boring, R.L.; Gilbert, S.; Griffor, E.; Lee, Y.C.; Nathan-Roberts, D.; Smith-Jackson, T. Elaborating the human aspect of the NIST framework for cyber-physical systems. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2018, 62, 450–454.

- Dafflon, B.; Moalla, N.; Ouzrout, Y. The challenges, approaches, and used techniques of CPS for manufacturing in Industry 4.0: A literature review. Int. J. Adv. Manuf. Technol. 2021, 113, 2395–2412.

- Peng, X.; Kuang, X.; Roach, D.J.; Wang, Y.; Hamel, C.M.; Lu, C.; Qi, H.J. Integrating digital light processing with direct ink writing for hybrid 3D printing of functional structures and devices. Addit. Manuf. 2021, 40, 101911.

- Xu, Z.; Kolev, S.; Todorov, E. Design, Optimization, Calibration, and a Case Study of a 3D-Printed, Low-Cost Fingertip Sensor for Robotic Manipulation. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; Available online: https://ieeexplore.ieee.org/document/6907253 (accessed on 15 June 2025).

- Patel, S.; Park, H.; Bonato, P.; Chan, L.; Rodgers, M. A review of wearable sensors and systems with application in rehabilitation. J. Neuroeng. Rehabil. 2012, 9, 21.

- Luo, Y.; Abidian, M.R.; Ahn, J.-H.; Akinwande, D.; Andrews, A.M.; Antonietti, M.; Bao, Z.; Berggren, M.; Berkey, C.A.; Bettinger, C.J.; et al. Technology Roadmap for Flexible Sensors. ACS Nano 2023, 17, 5211–5295.

- Dasarathy, B. Sensor fusion potential exploitation—Innovative architectures and illustrative applications. IEEE J. Mag. 1997, 85, 24–38.

- Ando, B.; Baglio, S. All-Inkjet Printed Strain Sensors. IEEE J. Mag. 2013, 13, 4874–4879.

- Correia, V.; Caparros, C.; Casellas, C.; Francesch, L.; Rocha, J.G.; Lanceros-Mendez, S. Development of inkjet printed strain sensors. Smart Mater. Struct. 2013, 22, 105028.

- Luo, X.; Cheng, H.; Chen, K.; Gu, L.; Liu, S.; Wu, X. Multi-Walled Carbon Nanotube-Enhanced Polyurethane Composite Materials and the Application in High-Performance 3D Printed Flexible Strain Sensors. Compos. Sci. Technol. 2024, 257, 110818.

- Kim, K.; Park, J.; Suh, J.-H.; Kim, M.; Jeong, Y.; Park, I. 3D printing of multiaxial force sensors using carbon nanotube (CNT)/thermoplastic polyurethane (TPU) filaments. Sens. Actuators A Phys. 2017, 263, 493–500.

- Nag, A.; Feng, S.; Mukhopadhyay, S.C.; Kosel, J.; Inglis, D. 3D printed mould-based graphite/PDMS sensor for low-force applications. Sens. Actuators A Phys. 2018, 280, 525–534.

- Lee, B.M.; Nguyen, Q.H.; Shen, W. Flexible Multifunctional Sensors Using 3-D-Printed PEDOT:PSS Composites. IEEE J. Mag. 2024, 24, 7584–7592.

- Qian, F.; Jia, R.; Cheng, M.; Chaudhary, A.; Melhi, S.; Mekkey, S.D.; Zhu, N.; Wang, C.; Razak, F.; Xu, X.; et al. An overview of polylactic acid (PLA) nanocomposites for sensors. Adv. Compos. Hybrid Mater. 2024, 7, 75.

- Ionel, R.; Mâțiu-Iovan, L. Flying probe measurement accuracy improvement by external LCR integration. Measurement 2022, 190, 110703.

- Wolterink, G.; Sanders, R.; Krijnen, G. Thin, Flexible, Capacitive Force Sensors Based on Anisotropy in 3D-Printed Structures. In Proceedings of the 2018 IEEE SENSORS, New Delhi, India, 28–31 October 2018.

- Kebede, G.A.; Ahmad, A.R.; Lee, S.-C.; Lin, C.-Y. Decoupled Six-Axis Force–Moment Sensor with a Novel Strain Gauge Arrangement and Error Reduction Techniques. Sensors 2019, 19, 3012.

- Shatokhin, O.; Dzedzickis, A.; Pečiulienė, M.; Bučinskas, V. Extended Reality: Types and Applications. Appl. Sci. 2025, 15, 3282.

- Munir, A.; Aved, A.; Blasch, E. Situational Awareness: Techniques, Challenges, and Prospects. AI 2022, 3, 55–77.

- Alhakamy, A. Extended Reality (XR) Toward Building Immersive Solutions: The Key to Unlocking Industry 4.0. ACM Comput. Surv. 2024, 56, 1–38.

- Dodoo, J.E.; Al-Samarraie, H.; Alzahrani, A.I.; Tang, T. XR and Workers’ safety in High-Risk Industries: A comprehensive review. Saf. Sci. 2025, 185, 106804.

- Xi, N.; Chen, J.; Gama, F.; Riar, M.; Hamari, J. The challenges of entering the metaverse: An experiment on the effect of extended reality on workload. Inf. Syst. Front. 2023, 25, 659–680.

- Rivera, F.M.-L.; Mora-Serrano, J.; Oñate, E.; Montecinos-Orellana, S. A Comprehensive Framework for Integrating Extended Reality into Lifecycle-Based Construction Safety Management. Appl. Sci. 2025, 15, 5690.

- Lesch, V.; Züfle, M.; Bauer, A.; Iffländer, L.; Krupitzer, C.; Kounev, S. A literature review of IoT and CPS—What they are, and what they are not. J. Syst. Softw. 2023, 200, 111631.

- Mosqueira-Rey, E.; Hernández-Pereira, E.; Alonso-Ríos, D.; Bobes-Bascarán, J.; Fernández-Leal, Á. Human-in-the-loop machine learning: A state of the art. Artif. Intell. Rev. 2023, 56, 3005–3054.

- Yang, S.J.; Ogata, H.; Matsui, T.; Chen, N.-S. Human-centered artificial intelligence in education: Seeing the invisible through the visible. Comput. Educ. Artif. Intell. 2021, 2, 100008.

- Chen, H.; Li, S.; Fan, J.; Duan, A.; Yang, C.; Navarro-Alarcon, D.; Zheng, P. Human-in-the-Loop Robot Learning for Smart Manufacturing: A Human-Centric Perspective. IEEE J. Mag. 2025, 22, 11062–11086.

- Hassan, M.; Shraban, S.S.; Islam, A.; Basaruddin, K.S.; Ijaz, M.F.; Bin Kamarrudin, N.S.; Takemura, H. Integration of Extended Reality Technologies in Transportation Systems: A Bibliometric Analysis and Review of Emerging Trends, Challenges, and Future Research. Results Eng. 2025, 21, 105334.

- Conductive Graphene Filament. Filament2Print. Available online: https://filament2print.com/en/conductive/653-1508-conductive-graphene.html#/217-diameter-175_mm/626-format-spool_100_g (accessed on 15 June 2025).

- ESP32 Wi-Fi & Bluetooth SoC | Espressif Systems. Available online: https://www.espressif.com/en/products/socs/esp32 (accessed on 15 June 2025).

- Espressif Systems ESP32-DEVKITC-32E. DigiKey Electronics. Available online: https://www.digikey.in/en/products/detail/espressif-systems/ESP32-DEVKITC-32E/12091810 (accessed on 15 June 2025).

- Espressif, GitHub—Espressif/Arduino-esp32: Arduino Core for the ESP32. GitHub. Available online: https://github.com/espressif/arduino-esp32 (accessed on 15 June 2025).

- Firebase Data Storage. Available online: https://firebase.google.com/?gad_source=1&gad_campaignid=20100026061&gbraid=0AAAAADpUDOjTXyaVcul8Qsj3QW48AyiOT&gclid=CjwKCAjw3rnCBhBxEiwArN0QE9iPcmRp5jltY4X3ODtGV4-u2JhlcV74SBcdlbvRTm_j6PNmhUr8dBoCXS8QAvD_BwE&gclsrc=aw.ds (accessed on 15 June 2025).

- An Introduction to QR Code Technology. IEEE Conference Publication|IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/7966807 (accessed on 15 June 2025).

- Ozturkcan, S. Service innovation: Using augmented reality in the IKEA Place app. J. Inf. Technol. Teach. Cases 2020, 11, 8–13.

- Nuanmeesri, S.; Poomhiran, L. Perspective electrical circuit simulation with virtual reality. Int. J. Online Biomed. Eng. 2019, 15, 28–37.

- Liu, J.; Chen, H.; Zhao, Y. Thermal coefficient of resistance in thin films: Implications for temperature-sensitive devices. Appl. Phys. Lett. 2020, 116, 123501.

- Kuo, C.; Lin, Y.; Chang, C. Understanding the thermal coefficient of resistance in thermistors for enhanced performance. Sens. Actuators A 2019, 284, 232–241.

- Wang, Y.; Zhang, H. Characterization of the thermal coefficient of resistance in conductive polymers. Polym. Test. 2021, 92, 106828.

- Chen, L.; Li, Y. The influence of temperature on the electrical resistance of conductive materials. Mater. Sci. Eng. B 2017, 223, 1–23.

- Oksiuta, Z.; Jalbrzykowski, M.; Mystkowska, J.; Romanczuk, E.; Osiecki, T. Mechanical and Thermal Properties of Polylactide (PLA) Composites Modified with Mg, Fe, and Polyethylene (PE) Additives. Polymers 2020, 12, 2939.

- Pérez, M.A.; González, J. Thermal properties of polylactic acid (PLA) and its blends. Mater. Sci. Eng. C 2017, 80, 785.

- Rasal, R.M.; Janorkar, A.V.; Hirt, D.E. Poly(lactic acid) modifications. Prog. Polym. Sci. 2008, 33, 391–410.

- Auras, R.; Lim, L.T.; Selke, S.E.M.; Tsuji, H. Poly(Lactic Acid): Synthesis, Structures, Properties, Processing, and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Tsuji, H.; Eto, T.; Sakamoto, Y. Synthesis and Hydrolytic Degradation of Substituted Poly(DL-Lactic Acid)s. Materials 2011, 4, 1384–1398.

- Zhang, Y.; Zhao, Y.; Zhang, J. Graphene-based thermistors on flexible PDMS substrates for temperature sensing applications. Sens. Actuators A 2018, 273, 147–158.

- Htwe, Y.Z.N.; Mariatti, M. Printed graphene and hybrid conductive inks for flexible, stretchable, and wearable electronics: Progress, opportunities, and challenges. J. Sci. Adv. Mater. Devices 2022, 7, 100435.

- Makwana, M.V.; Patel, A.M. Multiwall Carbon Nanotubes: A Review on Synthesis and Applications. Nanosci. Nanotechnol. Asia 2022, 12, e131021197215.

- Xu, M.; Fralick, D.; Zheng, J.Z.; Wang, B.; Tu, X.M.; Feng, C. The differences and similarities between two-sample t-test and paired t-test. Shanghai Arch. Psychiatry 2017, 29, 184–188.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- O’Malley, M.; Woods, J.; Byrant, J.; Miller, L. How is western lowland gorilla (Gorilla gorilla gorilla) behavior and physiology impacted by 360° visitor viewing access? Anim. Behav. Cogn. 2021, 8, 468–480.

- Valencia, A.; Hincapie, R.A.; Gallego, R.A. Integrated Planning of MV/LV Distribution Systems with DG Using Single Solution-Based Metaheuristics with a Novel Neighborhood Search Method Based on the Zbus Matrix. J. Electr. Comput. Eng. 2022, 2022, 2617125.

- Akbulut, A.S. The effect of TMJ intervention on instant postural changes and dystonic contractions in patients diagnosed with dystonia: A case study. Diagnostics 2023, 13, 3177.

- Ding, M.; Zhao, D.; Wei, R.; Duan, Z.; Zhao, Y.; Li, Z.; Lin, T.; Li, C. Multifunctional elastomeric composites based on 3D graphene porous materials. Exploration 2023, 4, 20230057.

- Wang, K.; Sun, X.; Cheng, S.; Cheng, Y.; Huang, K.; Liu, R.; Yuan, H.; Li, W.; Liang, F.; Yang, Y.; et al. Multispecies-coadsorption-induced rapid preparation of graphene glass fiber fabric and applications in flexible pressure sensor. Nat. Commun. 2024, 15, 5040.

- Jiang, Q.; Wan, Y.; Qin, Y.; Qu, X.; Zhou, M.; Huo, S.; Wang, X.; Yu, Z.; He, H. Durable and Wearable Self-powered Temperature Sensor Based on Self-healing Thermoelectric Fiber by Coaxial Wet Spinning Strategy for Fire Safety of Firefighting Clothing. Adv. Fiber Mater. 2024, 6, 1387–1401.

- Ryu, W.M.; Lee, Y.; Son, Y.; Park, G.; Park, S. Thermally Drawn Multi-material Fibers Based on Polymer Nanocomposite for Continuous Temperature Sensing. Adv. Fiber Mater. 2023, 5, 1712–1724.

- Yin, W.; Qin, M.; Yu, H.; Sun, J.; Feng, W. Hyperelastic Graphene Aerogels Reinforced by In-suit Welding Polyimide Nano Fiber with Leaf Skeleton Structure and Adjustable Thermal Conductivity for Morphology and Temperature Sensing. Adv. Fiber Mater. 2023, 5, 1037–1049.

- Golkarnarenji, G.; Naebe, M.; Badii, K.; Milani, A.S.; Jazar, R.N.; Khayyam, H. A Machine Learning Case Study with Limited Data for Prediction of Carbon Fiber Mechanical Properties. Comput. Ind. 2019, 105, 123–132.

- Kasaei, A.; Abedian, A.; Milani, A.S. An Application of Quality Function Deployment Method in Engineering Materials Selection. Mater. Des. 2014, 55, 912–920.

| Control Method | Response Time (s), M | Response Time (s), SD | Error (°C), M | Error (°C), SD |
|---|---|---|---|---|
| Manual | 4.36 | 1.97 | 2.2 | 0.71 |
| XR-based | 1.97 | 0.73 | 0.9 | 0.40 |

| HPQ Dimension | M | SD |
|---|---|---|
| Sensory | 3.50 | 1.95 |
| Emotional | 3.83 | 1.60 |
| Cognitive | 3.50 | 1.60 |
| Behavioral | 3.83 | 1.64 |
| Reasoning | 4.0 | 1.67 |
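As a quick arithmetic check on the tabulated response-time and error means, the relative reduction achieved by the XR-based method can be computed as follows. The helper name `relative_reduction` is illustrative only. The input values are the means reported in the table, and the percentages are derived from them rather than stated in the source.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100.0


# Mean values from the table: manual vs. XR-based.
rt_reduction = relative_reduction(4.36, 1.97)   # response time, s
err_reduction = relative_reduction(2.2, 0.9)    # error, °C
print(f"response time: {rt_reduction:.1f}% lower")   # 54.8% lower
print(f"error: {err_reduction:.1f}% lower")          # 59.1% lower
```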

| Control Method | Ease and Frequency of Use, M (SD) | Intuitive, M (SD) | Trust, M (SD) | Fatigue, M (SD) | Reliability, M (SD) | Speed, M (SD) |
|---|---|---|---|---|---|---|
| XR-based | 4.5 (0.83) | 4.17 (0.75) | 2.83 (1.47) | 1.83 (0.98) | 3.83 (0.75) | 4.67 (0.51) |
| Manual | 1.83 (0.98) | 2.0 (0.89) | 3.00 (1.26) | 4.0 (0.89) | 4.17 (0.75) | 2.50 (1.04) |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Krishnamurthy, R.J.; Milani, A.S. Graphene–PLA Printed Sensor Combined with XR and the IoT for Enhanced Temperature Monitoring: A Case Study. J. Sens. Actuator Netw. 2025, 14, 68. https://doi.org/10.3390/jsan14040068