Abstract

Visually Impaired People (VIP) face significant challenges in their daily lives and often rely on other people or trained guide dogs when navigating outdoors. To address these challenges, researchers have developed Smart Stick (SS) systems as a more effective alternative to traditional aids. SS systems improve the mobility, reliability, safety, and accessibility of VIP by identifying obstacles and hazards in their path. This paper presents the design and real-world implementation of an SS based on an Arduino Nano microcontroller, a GPS module, a GSM module, a heart rate sensor, an ultrasonic sensor, a moisture sensor, a vibration motor, and a Buzzer. Based on the sensor data, the SS warns VIP about nearby obstacles and hazards. To improve the accuracy of the SS alert decision, the sensor data were used to train and test ten Machine Learning (ML) algorithms and identify the most accurate one. The alert decision, which accounts for the presence of obstacles, environmental conditions, and the user's health condition, was evaluated using several performance metrics. The AdaBoost, Gradient Boosting, and Random Forest algorithms outperformed the others, achieving an AUC and specificity of 100% and an accuracy, F1-score, precision, recall, and MCC of 99.9% in the cross-validation phase. These results indicate that integrating sensor data with ML algorithms allows the SS to help VIP live independently and move safely without assistance.

1. Introduction

Visually Impaired People (VIP) encounter challenges in their daily activities, particularly when travelling and obtaining accurate information about their surroundings. People with visual impairments often depend on assistance from others, and individuals who are blind need a cane to navigate and walk independently [1]. Challenging environmental conditions complicate matters further: a visually impaired person must stay alert to avoid falling into holes, colliding with obstacles, missing steps on staircases, or slipping on wet terrain. In an emergency, it may also be necessary to notify family members or caretakers and share the person's location [2]. According to World Health Organization (WHO) data, 2.2 billion people worldwide are blind or visually impaired [3,4]. About 217 million have impaired eyesight, and 36 million are considered blind. Notably, 82% of blindness cases occur in people aged 50 years or older [5].

Technological advances allow people with visual impairments to move around independently. Blind assistance devices include cameras that provide guidance and obstacle detection, wet/dry surface sensors, ultrasonic sensors, GPS and other sensing systems, and infrared sensors [6]. The Internet of Things (IoT) further enhances these devices by integrating their visual and physical aspects [7,8]. Artificial intelligence techniques, including Machine Learning (ML) and Deep Learning (DL) algorithms [9] such as neural networks [10], fuzzy logic control [11], Support Vector Machines (SVM) [12], Random Forest [13], Convolutional Neural Networks (CNN) [14], and generative adversarial networks [8], are expected to improve the quality of life of people with visual impairments. These algorithms are increasingly used to diagnose eye diseases and to build visual assistance tools [8].

This study aimed to develop and implement a smart stick (SS) system for VIP. The system incorporates an ultrasonic sensor to detect obstacles and a moisture sensor to detect wet ground, providing valuable environmental information to the user. A small DC vibration motor alerts users to obstacles while they move. In addition, a pulse sensor incorporated into the stick monitors the heart rate of the VIP. A GPS module tracks the user's location, and a GSM module shares it with family members or caregivers as SMS messages. Moreover, ten ML algorithms were trained on the sensor data to improve the accuracy of the warning decisions, and they were compared using several performance metrics of the alert decision.

The contribution of this paper is the design and practical implementation of an SS system equipped with moisture and ultrasonic sensors to recognize obstacles and ground conditions for VIP in indoor and outdoor environments, and a heart rate sensor to monitor the VIP's heart rate. The SS also includes a Buzzer and a vibration motor to alert VIP to hazards, and GPS and GSM modules to inform the VIP's family members via SMS for security and emergency support. Furthermore, ML models were used to improve the accuracy of alert decisions based on sensor data, and these models were compared to find the algorithm with the most accurate alert decisions.

The rest of this article is structured as follows: Section 2 reviews related work. Section 3 presents the SS system design. Section 4 explains the hardware of the SS system, covering the Arduino Nano microcontroller, the GPS module, the GSM module, the biomedical and environmental sensors, the actuators (vibration motor and Buzzer), and the power source. Section 5 introduces the software architecture of the SS. Section 6 details the ML algorithms, including data collection, algorithm implementation, and performance evaluation. Section 7 presents the results and discussion in two main subsections: results of the sensor measurements and results of the ML algorithms. Section 8 compares the results of our system with those reported in previous studies using ML algorithms. Finally, Section 9 concludes the research.

2. Related Work

Numerous research projects have recently developed SSs to assist individuals with visual impairments [3,15,16,17,18]. Panazan and Dulf [16] presented a smart cane with two ultrasonic sensors that warn the user of obstacles above and below via vibration and audio signals, and the device works well in various situations. The ultrasonic sensors measure obstacle distances between 2 and 400 cm, giving the VIP time to react. A Bluetooth-paired mobile app improves the cane's usability, particularly when the cane is misplaced. However, the study relied on conventional methods and did not incorporate intelligent techniques into the cane. Li et al. [17] developed a cost-effective and versatile AI-based assistive system for VIP that offers obstacle distance measurement and object detection. Using an SS and wearable smart glasses, the system can detect falls and monitor heart rate, ambient humidity and temperature, and body temperature. Object recognition is performed with the YOLOv5 deep neural network, with a reported accuracy of 92.16%.

Sahoo et al. [19] combined a walking stick with a programmable interface controller, using a Raspberry Pi as the control core. The system includes GPS, obstacle detection sensors, and alerting features: when an obstacle is recognized, a Buzzer or vibration alerts the VIP. The GPS module records the user's location, enabling the person in charge to follow the VIP through an Android application. Nguyen et al. [20] developed an intelligent blind stick with infrared and ultrasonic sensors, a Buzzer, and a vibration motor. The system can identify various obstructions, particularly when navigating stairs indoors, and includes an alert feature for detecting stairs. Built-in GSM and GPS modules provide the stick's real-time position to family members through a smartphone app. The stick has a detection range of 5 cm to 150 cm and offers affordability and obstacle detection above knee level, although its accuracy and reliability in real-world scenarios remain to be tested. Senthilnathan et al. [21] developed an energy-efficient, lightweight SS to help VIP identify their surroundings. The SS is equipped with a water sensor to distinguish dry from moist surfaces and an infrared sensor to detect obstacles, and it issues alerts when obstacles or wet ground are detected ahead of the user within a 70 cm radius. Khan et al. [22] paired a conventional stick with a smartphone to improve the ability of VIP to navigate within their surroundings.

Thi Pham et al. [23] developed a low-cost, easy-to-use walking cane for the visually impaired. Its key component is an ultrasonic sensor that analyzes reflected waves to detect obstacles. When an obstacle is detected, the microcontroller processes the signals to determine its direction and distance and provides real-time feedback through tones and vibration. Additional components include an MPU-6050 accelerometer, GPS, a SIM800L module, and an emergency call button. If the user falls and remains inactive for 5 s, the SIM800L calls a preconfigured contact and shares the GPS location as a Google Maps link. Islam et al. [13] developed a low-cost assistive system for obstacle detection and environment description using DL techniques. The proposed object detection model uses a Raspberry Pi embedded system, the TensorFlow Object Detection API, and SSDLite MobileNetV2 to aid VIP; the object detection and environment description modes were evaluated on a desktop computer. Bazi et al. [24] presented a portable camera-based method to help VIP identify multiple objects in images. This approach employs a multi-label convolutional SVM network, which uses forward supervised learning with linear SVMs to train its filters, and it achieved a sensitivity of 93.64% and a specificity of 92.17%. Gensytskyy et al. [25] used smartphone camera images as the input to a CNN model to facilitate VIP navigation in urban areas. The proposed system also attempts to account for the friction coefficient of the ground surface to provide safe navigation for blind and visually impaired users.

Chopda et al. [26] introduced a wearable intelligent voice assistant to help VIP in various aspects of daily life. The device uses computer vision and speech processing to offer functions such as emergency response, object recognition, and optical character recognition, and its hardware provides speech, sound, and haptic feedback to help users avoid obstacles. A companion smartphone app allows users to read SMS messages, customize device settings, locate the device with a button press if it is misplaced, and send emergency SOS messages. Ben Atitallah et al. [27] proposed an obstacle detection algorithm based on the YOLOv5 neural network, trained and tested on the MS COCO and IODR datasets covering outdoor and indoor scenes. The method achieved an average precision of 81.02% but has not been tested in real-world applications. Ma et al. [28] developed a low-cost smart cane that combines sensors, computer vision, and an edge-cloud system. The cane includes a high-speed camera and supports object and obstacle detection, traffic light detection, and fall detection to aid VIP navigation; the obstacle and fall detection accuracies were 92.5% and 90%, respectively.

This research differs from previous works by considering both environmental and health conditions. Sensor measurements of these conditions were used to train and test several ML algorithms to identify the model with the highest accuracy.

3. System Design

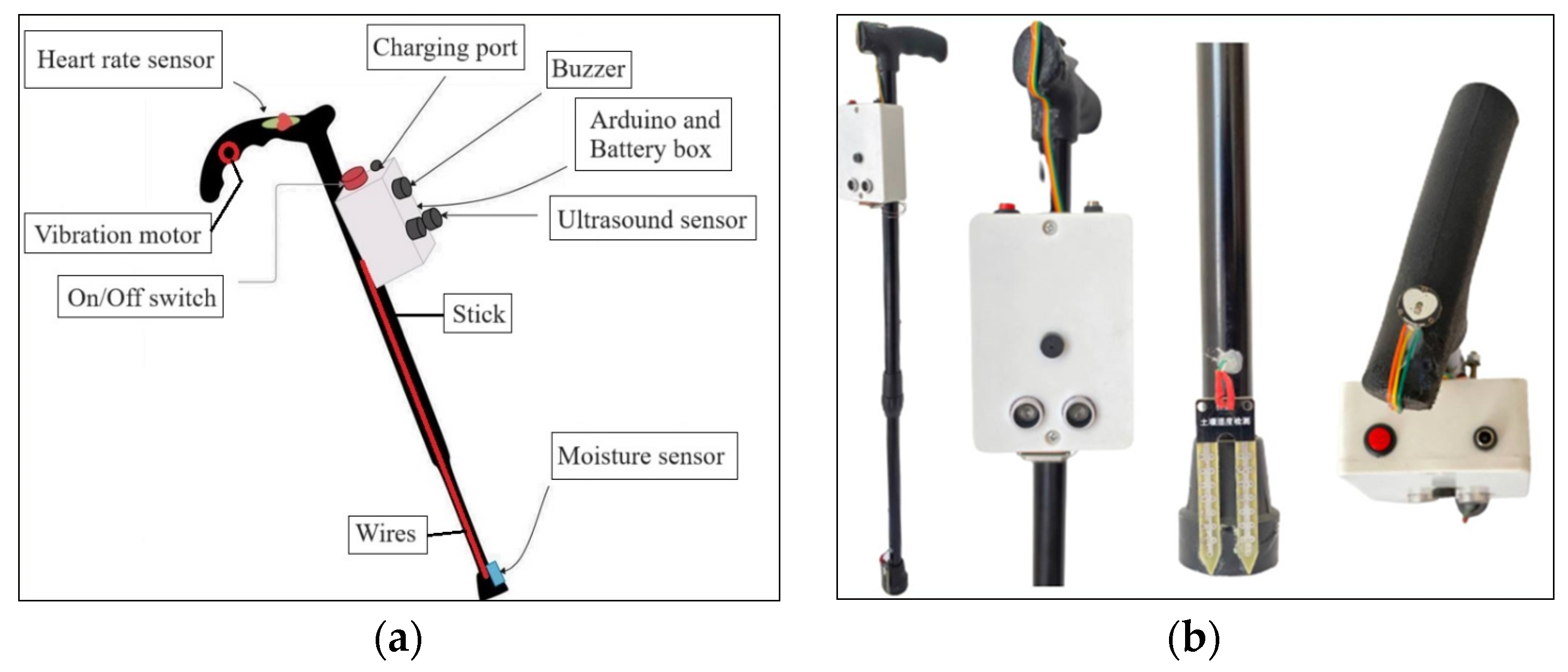

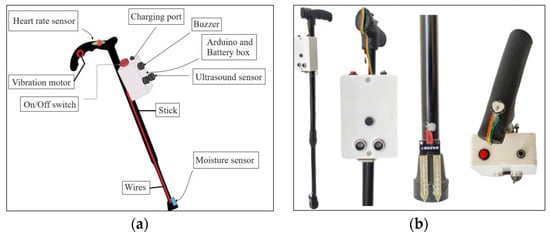

The SS system for VIP, shown in Figure 1a,b, is designed to enhance the mobility and safety of individuals with visual impairments. It comprises a stick, an Arduino Nano microcontroller, a Global Positioning System (GPS) module, a Global System for Mobile Communications (GSM) module, a heart rate sensor, an ultrasonic sensor, a moisture sensor, a vibration motor, a Buzzer, a battery, a battery charging port, a plastic enclosure, wires, and an on/off switch. The hardware and operating strategy of the SS are described in Section 4 and Section 5.

Figure 1.

The proposed SS system consists of (a) an SS equipped with all components and (b) an SS integrated with various sensors.

4. Hardware Architecture

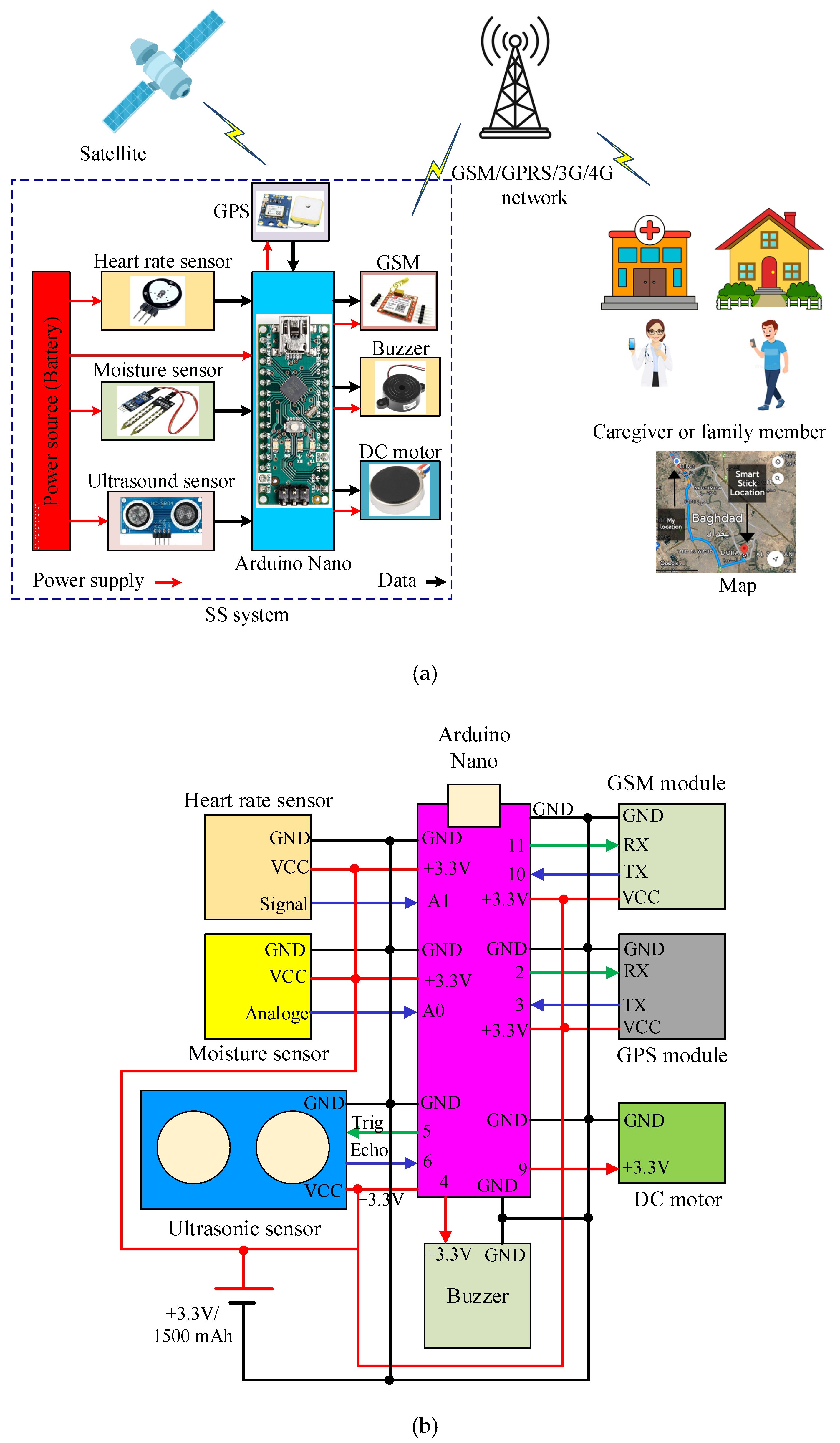

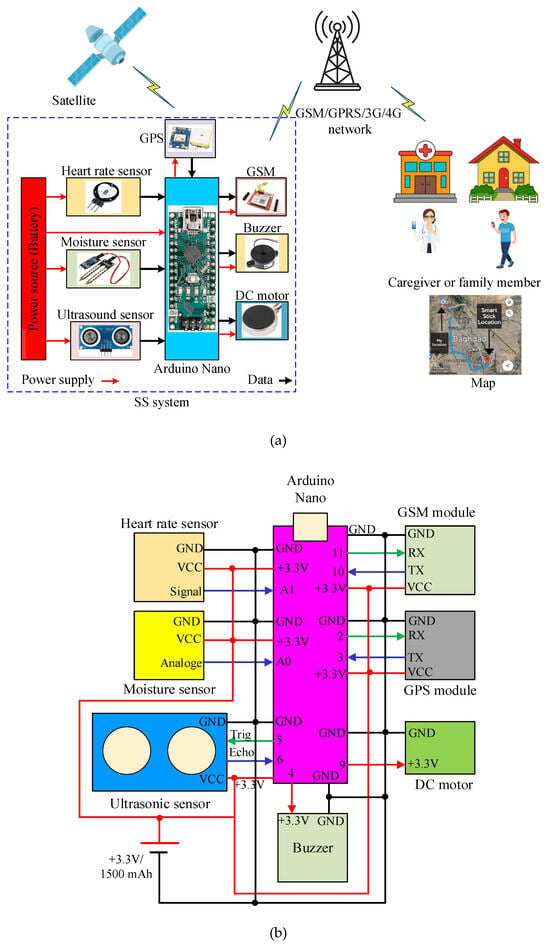

Figure 2a depicts the overall configuration of the SS system, highlighting its hardware components and their interconnections, while Figure 2b shows the wiring of all components to the Arduino Nano microcontroller. The ultrasonic, moisture, and heart rate sensors, the GPS and GSM wireless modules, the Buzzer, and the coin vibration motor are all connected to the Arduino Nano. The moisture and heart rate sensors each connect over three wires (two for the power supply and one for the measurement signal) to measure the ground condition and the VIP's heartbeat, respectively. The ultrasonic sensor connects over four wires (two for the power supply, one for the trigger signal output by the Arduino Nano, and one for the echo signal input to the Arduino) to measure the distance between obstacles and the VIP. The GPS and GSM modules each connect over four wires: two for the power supply, one for transmitting data, and one for receiving data. The GPS module obtains the VIP's position coordinates, which are sent as a message to the caregiver's or family member's mobile phone via the GSM module. The coin vibration motor and Buzzer each connect to the Arduino Nano over two wires: one ground wire and one on/off control wire. All components are powered by a 3.3 V, 1500 mAh lithium-ion battery. The main components of the SS system are detailed as follows:

Figure 2.

Whole SS system for VIP: (a) block diagram of the whole system and (b) wiring connections of the SS system.

4.1. Microcontroller-Based Arduino Nano

The Arduino Nano microcontroller is fundamental to the functionality of the SS designed for VIP. Acting as the brain of the device, it coordinates the operation of the sensors, modules, and actuators. The SS is driven by an ATmega328P-based Arduino Nano, which is compact, lightweight, cost-effective, programmed in C++, and designed for low energy consumption [29]. The microcontroller collects information from the array of sensors integrated into the device, and because it is programmable, it can be customized to meet the specific requirements of the SS.

In this work, the Arduino Nano acts as the central processing unit, handling the data from the sensors built into the SS. The heart rate, moisture, and ultrasonic sensors provide information about the VIP's well-being and environment, which is fed back to the VIP. The microcontroller is also interfaced with the GPS and GSM modules to provide the VIP's location to the caregiver or family members in real time. Moreover, the Arduino Nano drives a small DC vibration motor and a Buzzer that give the VIP vibration or sound feedback, making users more aware of their surroundings and helping them navigate.

4.2. GPS Module

A NEO-6M GPS module is employed to track the position of the VIP. The NEO-6M module [30] is designed to receive signals from 24 satellites [31] and compute accurate positions, including longitude and latitude. The GPS module [32] lets the SS continuously receive signals from Earth-orbiting satellites to locate the user precisely. By incorporating the NEO-6M, the SS can provide real-time position information, which helps VIP understand their surroundings and find the most suitable routes to their destinations. GPS integration also increases the device's usefulness by allowing family members to receive SMS messages with the VIP's position, which is valuable for providing immediate assistance when needed.
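The text does not state how the Arduino reads positions from the NEO-6M. Such modules typically emit NMEA 0183 sentences over their serial (TX) line, with latitude and longitude encoded as degrees and decimal minutes. The Python sketch below, written for illustration rather than as the firmware used in this work, converts a `$GPGGA` sentence into decimal degrees; the function names are hypothetical, and the checksum after `*` is not validated.

```python
from typing import Optional, Tuple


def nmea_to_decimal(value: str, hemisphere: str, degree_digits: int) -> float:
    """Convert an NMEA ddmm.mmmm / dddmm.mmmm field to signed decimal degrees."""
    degrees = int(value[:degree_digits])
    minutes = float(value[degree_digits:])
    decimal = degrees + minutes / 60.0
    # Southern latitudes and western longitudes are negative.
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (latitude, longitude) from a GGA sentence, or None without a fix."""
    fields = sentence.strip().split("*")[0].split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None
    # Field 6 is the fix quality; 0 (or empty) means no valid position yet,
    # in which case the caller would simply request the position again.
    if fields[6] in ("", "0"):
        return None
    latitude = nmea_to_decimal(fields[2], fields[3], degree_digits=2)
    longitude = nmea_to_decimal(fields[4], fields[5], degree_digits=3)
    return latitude, longitude


if __name__ == "__main__":
    gga = "$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47"
    print(parse_gga(gga))
```

Returning `None` when there is no fix matches the retry branch of the operation flowchart described in Section 5.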

4.3. GSM Module

The SS employs the GSM-based SIM800L module [33,34] to establish communication between the SS and the caregiver's or family member's mobile phone over the GSM network. The SIM800L is designed for mobile communication applications and operates on GSM networks, allowing the SS to send SMS messages or place phone calls. This feature is vital for VIP to contact their families or caregivers in an emergency or when help is needed. Combined with the GPS module, the GSM module lets the SS determine the VIP's position and send it to selected contacts, so family members receive updated positions in real time while the VIP is moving, increasing safety and enabling timely assistance. For instance, the GSM module can immediately send a notification if the SS detects a hazardous situation or the VIP needs urgent support.
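The SMS path can be illustrated with the standard GSM text-mode commands: `AT+CMGF=1` selects text mode, and `AT+CMGS="<number>"` starts a message whose body is terminated by Ctrl+Z (0x1A); the SIM800L accepts these AT commands. The Python sketch below only builds the command strings. The serial transport, the wait for the module's `>` prompt before the body is written, the phone number, and the message wording are all hypothetical and not taken from this work.

```python
from typing import List

CTRL_Z = "\x1a"  # terminates the SMS body in GSM text mode


def location_message(latitude: float, longitude: float, heart_rate_bpm: int) -> str:
    """Hypothetical SMS body combining the GPS fix and the latest heart rate."""
    return (
        f"SS alert: heart rate {heart_rate_bpm} bpm, "
        f"location {latitude:.6f},{longitude:.6f}"
    )


def sms_commands(phone_number: str, text: str) -> List[str]:
    """AT command sequence that sends one SMS in text mode."""
    return [
        "AT\r",                          # check that the module responds
        "AT+CMGF=1\r",                   # select SMS text mode
        f'AT+CMGS="{phone_number}"\r',   # start a message to this number
        text + CTRL_Z,                   # message body, written after the '>' prompt
    ]


if __name__ == "__main__":
    body = location_message(48.117300, 11.516667, 128)
    for command in sms_commands("+10000000000", body):
        print(repr(command))
```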

4.4. Biomedical and Environmental Sensors

The biomedical and environmental sensors used in this work are a heart rate sensor (SEN-11574), a moisture sensor (YL-69, based on the LM393 chip), and an ultrasonic PING sensor (HC-SR04) [35]. These sensors substantially improve the capability of the SS by providing important information about the health and environmental conditions of the VIP. The heart rate sensor, installed on the handle of the SS, is essential for monitoring the VIP's vital signs: it captures the VIP's heart rate in real time, providing information about the VIP's health and well-being.
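The SEN-11574 outputs an analog pulse waveform, and the text does not detail how beats are converted to a heart rate. A common approach is to timestamp detected beats and convert the average inter-beat interval (IBI, in milliseconds) with bpm = 60000 / IBI. The Python sketch below assumes beat detection has already been done upstream; the function name and the 10-interval averaging window are assumptions.

```python
from typing import Optional, Sequence


def bpm_from_beats(beat_times_ms: Sequence[float], window: int = 10) -> Optional[float]:
    """Average the most recent inter-beat intervals and convert them to bpm."""
    intervals = [later - earlier for earlier, later in zip(beat_times_ms, beat_times_ms[1:])]
    recent = intervals[-window:]
    if not recent:
        return None  # fewer than two beats: no interval to measure yet
    mean_ibi_ms = sum(recent) / len(recent)
    return 60000.0 / mean_ibi_ms


if __name__ == "__main__":
    beats = [0, 500, 1000, 1500, 2000]  # one beat every 500 ms
    print(bpm_from_beats(beats))
```

A beat every 500 ms corresponds to exactly 120 bpm, the heart rate threshold used in Section 5, so only a slightly faster rhythm would trigger an alert.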

In addition to the biomedical feature, a moisture sensor is used to sense wet ground, water, or mud. It identifies moisture levels along the VIP's route and indicates how moist or wet the surrounding ground is. By sensing the terrain, the SS can help VIP avoid wet or muddy areas, improving their mobility and comfort. This feature is particularly useful for VIP who encounter various terrains daily.

Moreover, the SS integrates an ultrasonic PING sensor (HC-SR04), which uses ultrasound waves to measure distance, letting the SS determine how close objects are to the VIP. The HC-SR04 is inexpensive, which makes it well suited to SS systems, and its non-contact measurement is particularly important for VIP because obstacles are detected before the user touches them. The HC-SR04 can measure distances from 2 to 400 cm [36] with a precision of up to 3 mm [37]. The sensor unit comprises an ultrasonic transmitter, a receiver, and control circuitry, and it integrates easily with Arduino microcontrollers, making it compatible with the SS hardware. The sensor detects objects in real time, strengthening the obstacle detection of the SS and helping users make appropriate decisions for easy and safe navigation. The distance D (in centimeters) between the object and the ultrasonic transmitter can be computed using Equation (1) [16]:

$$D = \frac{\nu \times T}{2} \qquad (1)$$

where ν is the speed of sound in cm/µs and T is the time, in microseconds, for the ultrasound signal to travel from the transmitter to the obstacle and, after reflection, back to the receiver.
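Because the echo time covers the path to the obstacle and back, the product of speed and time is halved. The Python sketch below evaluates this relation, assuming ν ≈ 0.0343 cm/µs (the speed of sound in air at about 20 °C); the constant and function names are illustrative, and in practice ν varies with air temperature.

```python
SPEED_OF_SOUND_CM_PER_US = 0.0343  # approx. speed of sound in air at ~20 °C
HC_SR04_MIN_CM = 2.0
HC_SR04_MAX_CM = 400.0


def echo_to_distance_cm(echo_time_us: float,
                        speed_cm_per_us: float = SPEED_OF_SOUND_CM_PER_US) -> float:
    """D = v * T / 2, where T is the round-trip echo time in microseconds."""
    return speed_cm_per_us * echo_time_us / 2.0


def within_sensor_range(distance_cm: float) -> bool:
    """True if the distance lies inside the HC-SR04's rated 2-400 cm span."""
    return HC_SR04_MIN_CM <= distance_cm <= HC_SR04_MAX_CM


if __name__ == "__main__":
    for echo_us in (1000, 2332, 30000):
        d = echo_to_distance_cm(echo_us)
        print(echo_us, round(d, 2), within_sensor_range(d))
```

With these values, an echo of roughly 2.33 ms corresponds to the 40 cm obstacle threshold used in Section 5.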

4.5. Actuators (Vibration Motor and Buzzer)

Actuators, specifically the vibration motor and Buzzer, play a pivotal role in the SS, enhancing user interaction and providing essential feedback. The vibration motor provides tactile feedback: integrated into the stick, it conveys information about the user's surroundings or alerts them to specific conditions, for example by vibrating when the SS detects an obstacle in the user's path. The intensity and pattern of the vibration can be adjusted to reflect the proximity and shape of the obstacle, allowing the user to interpret the feedback and make informed directional decisions. In this study, coin-type vibration motors powered by a 3.7 V battery were attached to the handle of the SS. The actuator specifications include a 10 mm outer diameter, 3 mm thickness, a rated voltage range of 1–6 V, 66 mA current draw, 12,000 rpm output speed, and 12 kHz frequency [38].
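The text notes that vibration intensity can be adjusted to reflect obstacle proximity but does not give a mapping. One simple option, sketched below in Python as an assumption rather than the method used in this work, scales a PWM duty value (0 to 255, the range accepted by Arduino's `analogWrite`) linearly with closeness inside the 40 cm alert range. The minimum duty of 80, intended to keep the motor spinning, is a hypothetical tuning value.

```python
def vibration_duty(distance_cm: float, alert_range_cm: float = 40.0,
                   min_duty: int = 80, max_duty: int = 255) -> int:
    """Map obstacle distance to a PWM duty value: closer obstacles vibrate harder."""
    if distance_cm >= alert_range_cm:
        return 0  # nothing within the alert range: motor off
    # 0 at the edge of the alert range, 1 when the obstacle touches the stick.
    closeness = 1.0 - max(distance_cm, 0.0) / alert_range_cm
    return round(min_duty + closeness * (max_duty - min_duty))


if __name__ == "__main__":
    for d in (50, 39, 20, 5, 0):
        print(d, vibration_duty(d))
```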

In this article, a piezo Buzzer serves as an auditory feedback element that generates different sound patterns, such as tones and simple beeps, to convey messages or alerts to the VIP. For example, the Buzzer can be programmed to produce a specific sound when the SS detects a change in the environment, such as an obstacle, a busy area, a specific marker, or wet or muddy ground. In such cases, the microcontroller triggers a feedback signal to alert the VIP, increasing their awareness of their surroundings. The Buzzer can also indicate the SS's operating state, battery status, or other essential information. Together, the Buzzer and vibration motor form a multi-sensory feedback system that improves the VIP's experience and navigation awareness, providing real-time environmental information that helps VIP move around safely and freely.

4.6. Power Source

A 3.3 V/1500 mAh lithium-ion battery powers the whole SS system, providing reliable and consistent power to all components and contributing to the overall efficiency and self-sufficiency of the SS. In addition, a power reduction scheme, such as a sleep/active strategy [39], can extend the battery lifetime of the system, and an energy harvesting system, such as wireless power transfer [40], could be used to charge the battery wirelessly. Overall, the SS system uses sensor data, microcontroller processing, and real-time communication to enhance VIP safety and navigation. However, the SS is intended for outdoor use and does not work efficiently indoors, because GPS is unsuitable for many indoor environments [41].
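The benefit of a sleep/active strategy can be estimated with a duty-cycle calculation: the average current is the time-weighted mean of the active and sleep currents, and the runtime is approximately the capacity divided by that average. The Python sketch below uses the 1500 mAh capacity from the text; the active and sleep currents are hypothetical placeholders, and regulator losses and capacity derating are ignored.

```python
def average_current_ma(active_ma: float, sleep_ma: float, active_fraction: float) -> float:
    """Time-weighted mean current for a device that is active a fraction of the time."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_ma + (1.0 - active_fraction) * sleep_ma


def runtime_hours(capacity_mah: float, active_ma: float, sleep_ma: float,
                  active_fraction: float) -> float:
    """Ideal battery runtime; ignores converter losses and capacity derating."""
    return capacity_mah / average_current_ma(active_ma, sleep_ma, active_fraction)


if __name__ == "__main__":
    # Hypothetical currents: 100 mA while sensing/alerting, 10 mA asleep.
    always_on = runtime_hours(1500, 100, 10, 1.0)
    duty_cycled = runtime_hours(1500, 100, 10, 0.1)
    print(f"always on: {always_on:.1f} h, 10% active: {duty_cycled:.1f} h")
```

Under these assumed currents, being active 10% of the time extends the ideal runtime from 15 h to about 79 h.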

5. Software Architecture

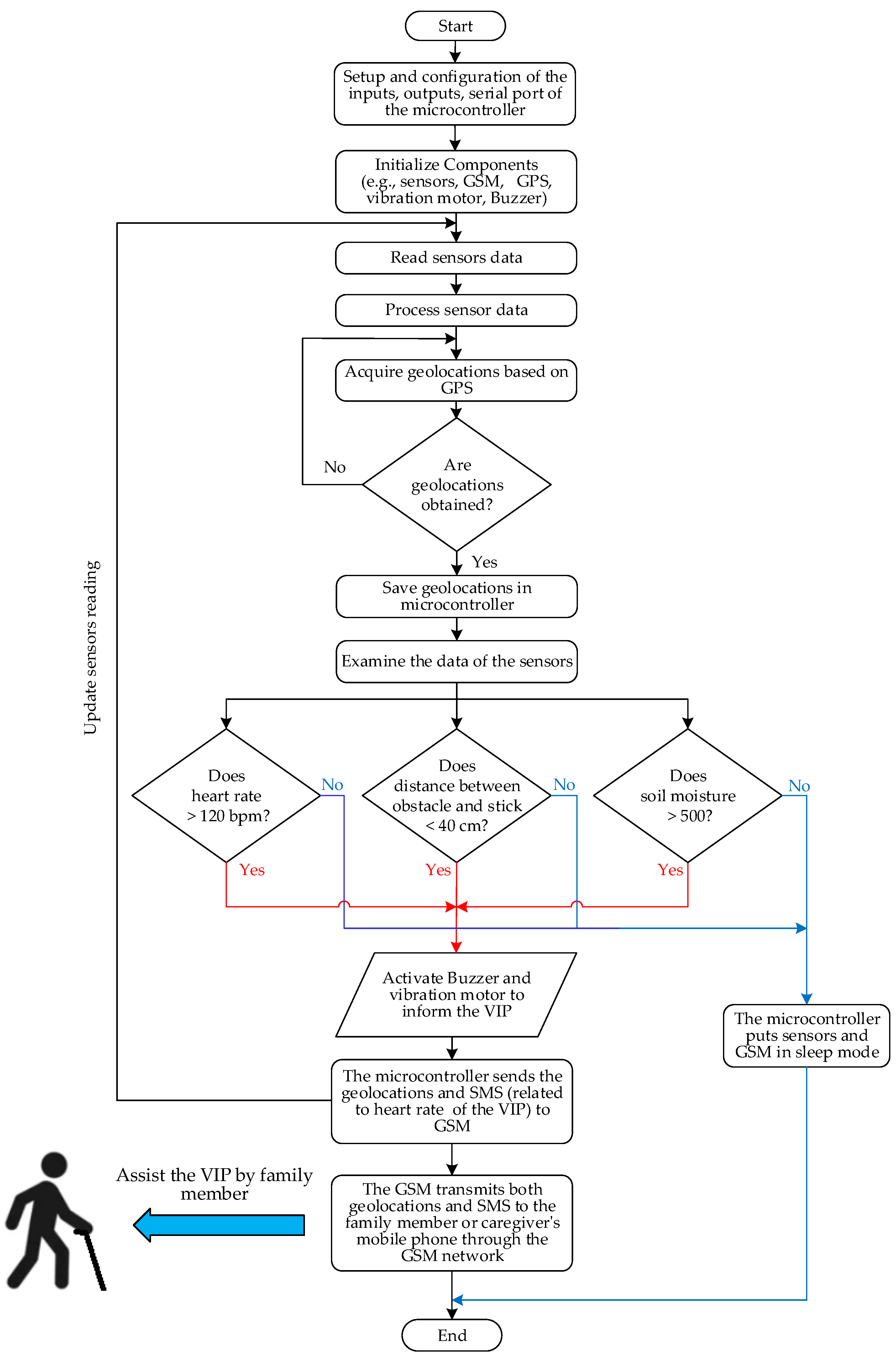

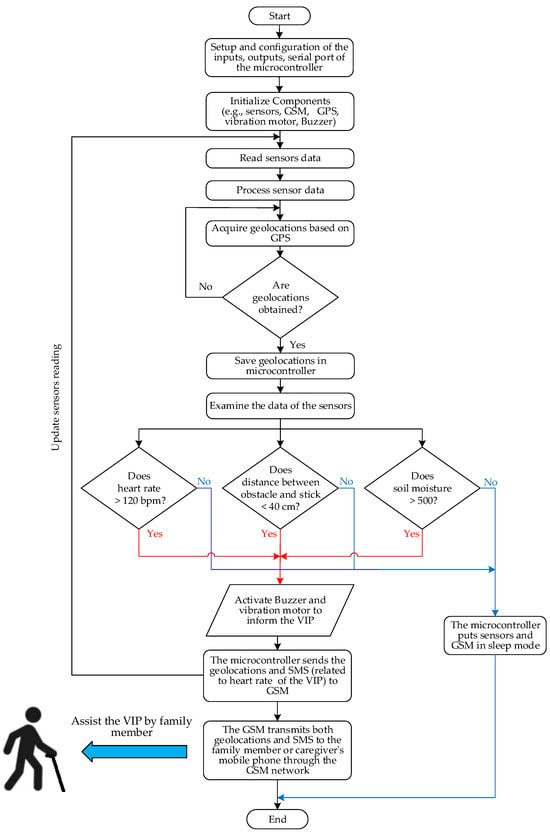

The operating sequence of the proposed algorithm for the SS system is shown in Figure 3. The steps of the algorithm are as follows:

Figure 3.

Operation flowchart of the SS system.

- Initially, the microcontroller's input and output ports and the serial port's data rate were configured.

- Next, the sensors and modules (heart rate, soil moisture, ultrasonic, GSM, and GPS) were initialized.

- The sensors acquired the relevant data, which were stored and processed in the microcontroller.

- The microcontroller prompted the GPS module to acquire the VIP's geolocation (latitude and longitude).

- If the geolocation was obtained successfully, it was stored in the microcontroller for later transmission to the family member or caregiver; otherwise, the process returned to the previous step to acquire the geolocation again.

- The stored sensor data were then tested. If the heart rate exceeded 120 bpm, an obstacle was detected at a distance of less than 40 cm, or the soil moisture reading exceeded 500, the microcontroller activated the Buzzer and DC vibration motor to alert the VIP to their health or environmental condition. Otherwise, the microcontroller put the sensors and the GSM module into sleep mode to conserve energy. The sensor data were processed at a timestep of 3 s, which was chosen to give the VIP a real-time warning signal (sound or vibration) and enable him or her to take the necessary action.

- The microcontroller transmitted the geolocation and an SMS (related to the VIP's heart rate) to the GSM module.

- The GSM module sent the geolocation and SMS to the family member's or caregiver's mobile phone via the GSM network.

- Finally, the family member assisted the VIP in emergencies.
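The threshold test in the sixth step can be written as a single Boolean rule; the ML target in Section 6 is likewise a binary alert (1 or 0). The Python sketch below restates the rule. The `Reading` type and function names are hypothetical, the thresholds (120 bpm, 40 cm, moisture reading 500) come from the text, and the 3 s processing loop and sleep handling are omitted.

```python
from dataclasses import dataclass

HEART_RATE_LIMIT_BPM = 120.0  # alert above this heart rate
OBSTACLE_LIMIT_CM = 40.0      # alert when an obstacle is closer than this
MOISTURE_LIMIT = 500          # alert when the moisture reading exceeds this


@dataclass
class Reading:
    """One processing step's sensor values (hypothetical container)."""
    heart_rate_bpm: float
    distance_cm: float
    moisture_raw: int


def alert_decision(reading: Reading) -> int:
    """Return 1 to drive the Buzzer and vibration motor, 0 to let the SS sleep."""
    abnormal = (
        reading.heart_rate_bpm > HEART_RATE_LIMIT_BPM
        or reading.distance_cm < OBSTACLE_LIMIT_CM
        or reading.moisture_raw > MOISTURE_LIMIT
    )
    return int(abnormal)


if __name__ == "__main__":
    print(alert_decision(Reading(85, 120, 300)))  # all conditions normal
    print(alert_decision(Reading(85, 25, 300)))   # obstacle at 25 cm
```

Values exactly at a threshold (120 bpm, 40 cm, or 500) do not trigger an alert, because the text uses strict "exceeded" and "less than" comparisons.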

6. Machine Learning Algorithms

ML algorithms are computational models that enable computers to learn patterns from data and make predictions without being explicitly programmed. They are broadly categorized into three types: supervised learning, unsupervised learning, and reinforcement learning. In supervised learning, algorithms learn from labeled data to make predictions about, or classify, new unseen data. Unsupervised learning finds patterns and relationships in unlabeled data, for example by clustering similar data points. Reinforcement learning trains an agent by trial and error, using the feedback it receives for its actions. ML models typically have a training phase and a testing phase: the training phase learns from historical data, while the testing phase evaluates performance on new data. Applications of ML span numerous fields, including medical diagnosis [42], customer segmentation, human activity recognition [43], agriculture [44], image recognition, recommendation systems, sport [45], autonomous vehicles, cybersecurity [46], speech recognition, natural language processing [47], fraud detection [48], industry [49], predictive maintenance, and sentiment analysis [50].

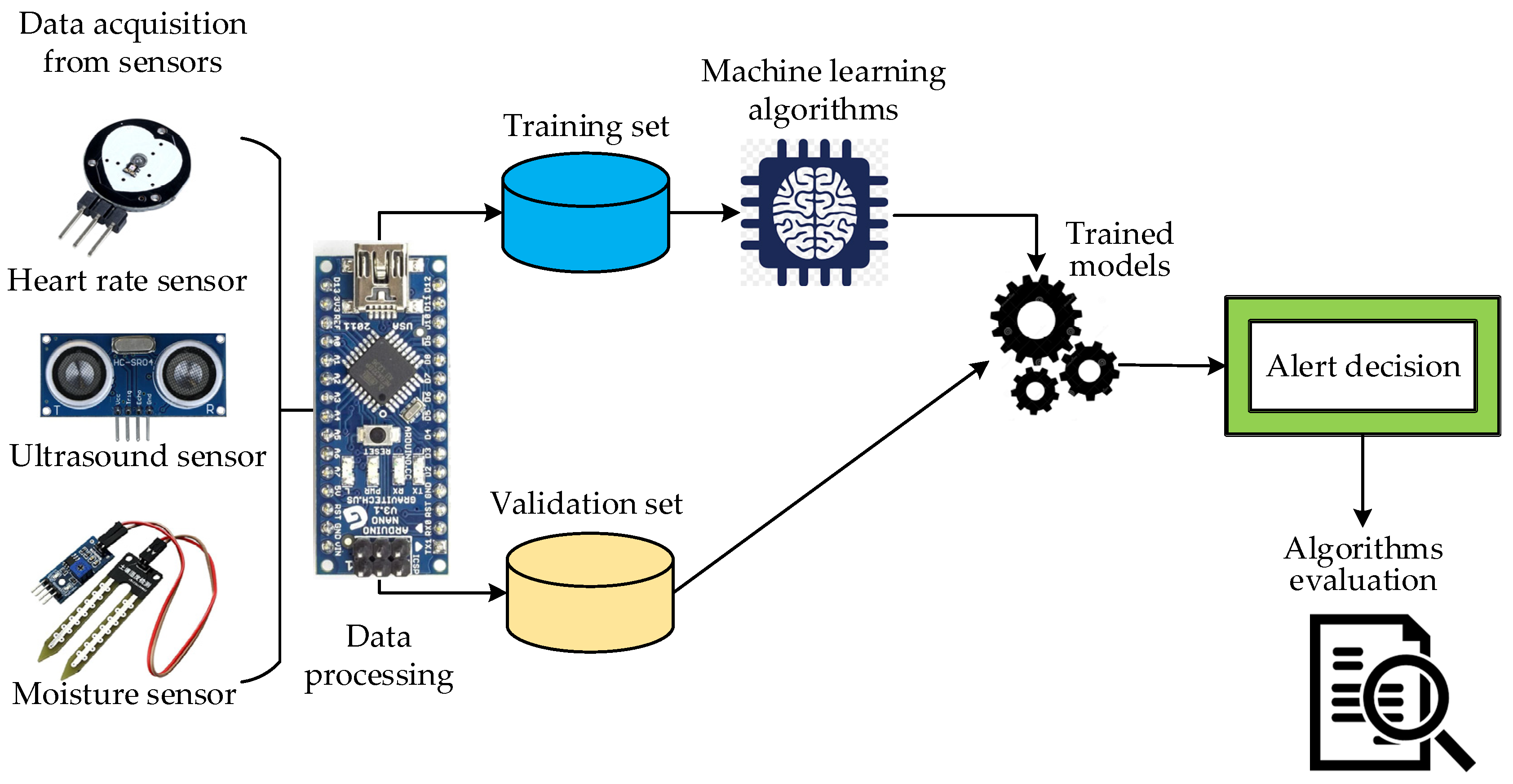

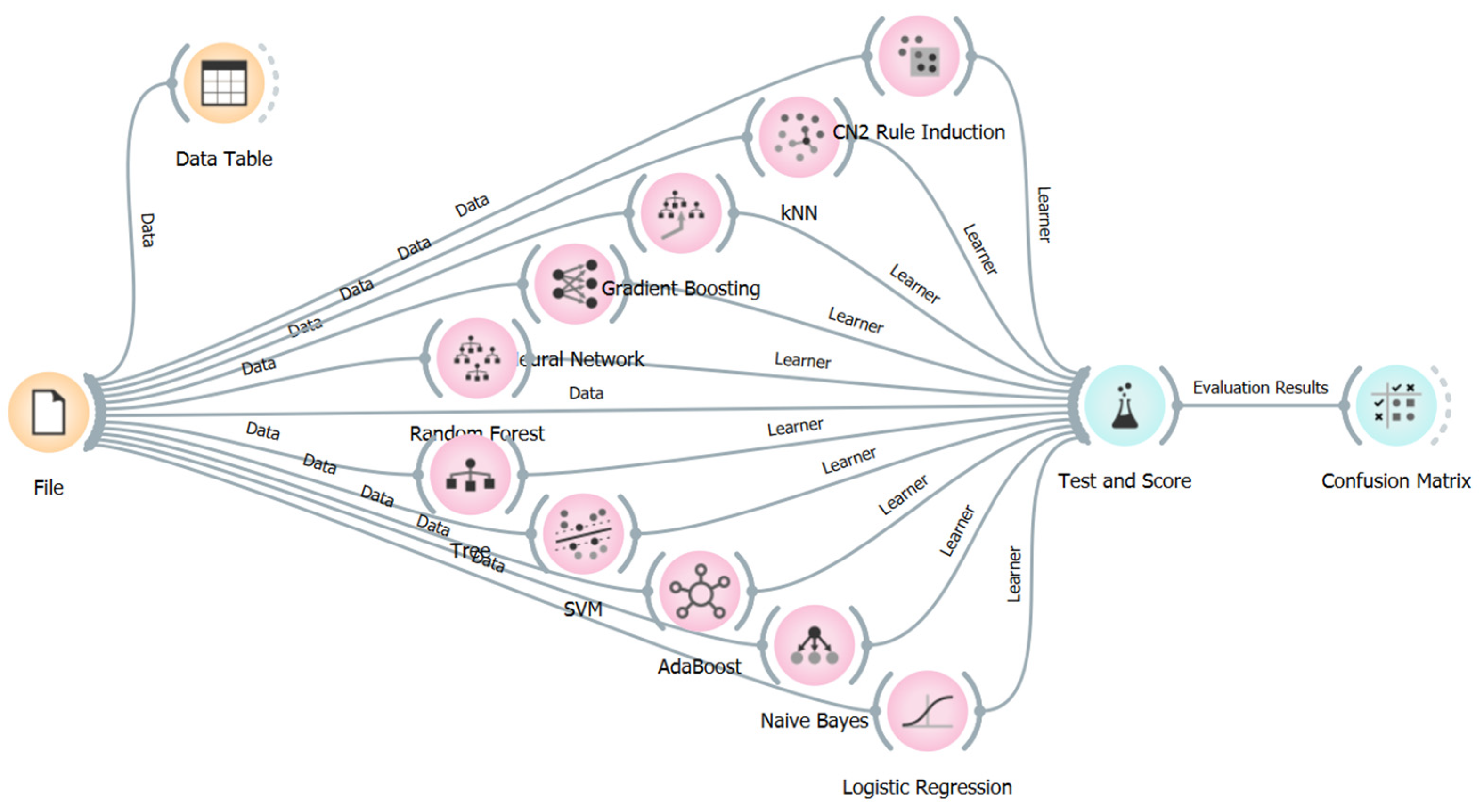

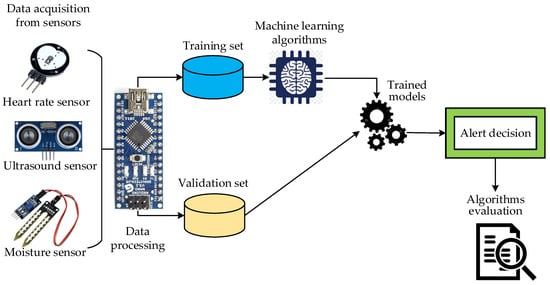

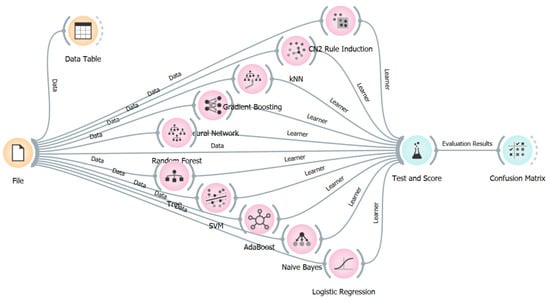

ML algorithms play an important role in improving the performance of smart canes for VIP. Figure 4 shows a schematic diagram of the ML algorithm developed in this research using the SS sensors. These algorithms enable the SS to process and analyze sensory data intelligently, such as data from the biomedical and environmental sensors, so that it can detect and respond to different environmental conditions. ML algorithms can be trained to detect obstacles, distinguish objects or terrain, and adapt response strategies, such as vibrations or alerts, to real-time conditions. By learning from data, an ML-enabled SS can provide dynamic, adaptive, and more accurate contextual assistance that further enhances the VIP's mobility and safety in dynamic environments.

Figure 4.

ML algorithm with input data from sensors.

6.1. Data Collection for ML Algorithm

In this paper, the dataset used to train and test the ML algorithms was obtained through several experiments and real-world data collection. The data were acquired from sensors that capture the physiological parameter (i.e., heart rate) and the environmental conditions (i.e., moisture and obstacles) of VIP. To ensure thorough coverage, the dataset included various daily activities and situations that VIP encounter in both indoor and outdoor settings, such as moving around the house, rooms, hallways, and stairs, as well as parks, crosswalks, streets, and sidewalks. A total of 4000 samples were recorded from the three sensors (heart rate, moisture, and distance), about 1333 readings per sensor. The dataset was then divided into 3000 samples for training and 1000 samples for cross-validation.
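The 3000/1000 division can be made reproducible with a seeded shuffle. The text does not say whether the split preserved the proportion of alert and no-alert samples, so the Python sketch below assumes a stratified split that shuffles each class separately and keeps the same class balance in both subsets. The function name, seed, and placeholder data are illustrative.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(samples: Sequence, labels: Sequence[Hashable],
                     train_fraction: float = 0.75, seed: int = 0) -> Tuple[List, List]:
    """Split (sample, label) pairs so each class keeps the same train/held-out ratio."""
    by_label: Dict[Hashable, List] = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_label[label].append((sample, label))

    rng = random.Random(seed)  # fixed seed -> reproducible split
    train, held_out = [], []
    for group in by_label.values():
        rng.shuffle(group)
        cut = round(len(group) * train_fraction)
        train.extend(group[:cut])
        held_out.extend(group[cut:])
    rng.shuffle(train)
    rng.shuffle(held_out)
    return train, held_out


if __name__ == "__main__":
    # Placeholder data: 4000 sample indices, one quarter labeled as alerts.
    xs = list(range(4000))
    ys = [1 if i % 4 == 0 else 0 for i in xs]
    train, held_out = stratified_split(xs, ys)
    print(len(train), len(held_out))
```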

6.2. Implementation of Machine Learning Algorithms

In this study, ten ML algorithms were assessed to identify the one with the best performance: CN2 Rule Induction, k-Nearest Neighbors (kNN), Gradient Boosting, Neural Network, Random Forest, Decision Tree, SVM, Adaptive Boosting (AdaBoost), Naïve Bayes, and Logistic Regression, as shown in Figure 5. The algorithms were implemented in the Orange software. The evaluation consisted of two phases, training and cross-validation: 3000 of the 4000 data samples were used for training, and the remaining 1000 were reserved for cross-validation. The number of training and validation iterations was set to 100. The input data comprised measurements from three sensors: heart rate in bpm (1333 samples), distance in centimeters (1333 samples), and moisture as integer values. The target (output) of the ML algorithms is a binary alert (1 or 0), which serves as a digital signal that activates the Buzzer and DC vibration motor to warn the VIP that one of the environmental or health conditions is abnormal. Several ML algorithms were tested to find the one with optimal performance across various criteria, supporting informed decision making in this context. The algorithms cover a broad spectrum of ML approaches, each with its own strengths and weaknesses, making them suitable for different tasks and datasets. The hyperparameters of the adopted ML algorithms are presented in Table 1.

Figure 5.

The adopted machine learning algorithms in this research.

Table 1.

Hyperparameters of the adopted ML models.

6.3. Performance Evaluation of Machine Learning Algorithms

The alert decisions of the ML algorithms were assessed using several metrics: Classification Accuracy (Acc), Precision (Prec), Recall (Rec), F1 score (F1), Specificity (Spec), Matthews Correlation Coefficient (MCC), and Area Under the Curve (AUC). Together, these metrics provide comprehensive insight into different aspects of algorithmic performance, enabling a thorough evaluation across multiple criteria. Table 2 lists the mathematical equations used to assess the performance of the adopted ML algorithms.
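For reference, the standard confusion-matrix definitions behind six of these metrics can be computed as in the Python sketch below, which treats alert = 1 as the positive class. It is an illustrative re-implementation, not Orange's code. Undefined ratios (for example, precision when nothing is predicted positive) are reported as 0 by convention, and AUC is omitted because it requires predicted scores rather than hard labels.

```python
import math
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, F1, specificity and MCC for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0  # guard against empty classes

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
        "specificity": ratio(tn, tn + fp),
        "mcc": ratio(tp * tn - fp * fn, mcc_den),
    }


if __name__ == "__main__":
    print(binary_metrics([1, 1, 0, 0, 1], [1, 0, 0, 0, 1]))
```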

Table 2.

Mathematical equations of the performance metrics [51,52,53].

7. Results and Discussion

This section presents the results obtained from the practical hardware implementation of the SS system and the ML algorithms. First, the measurements of the adopted sensors are presented; then, the results of the ML algorithms are introduced.

7.1. Results of Sensor Measurements

This part presents the sensor measurements of the SS system, including distance, heart rate, moisture, and geolocation estimation, in the following subsections.

7.1.1. Results of Distance Measurements

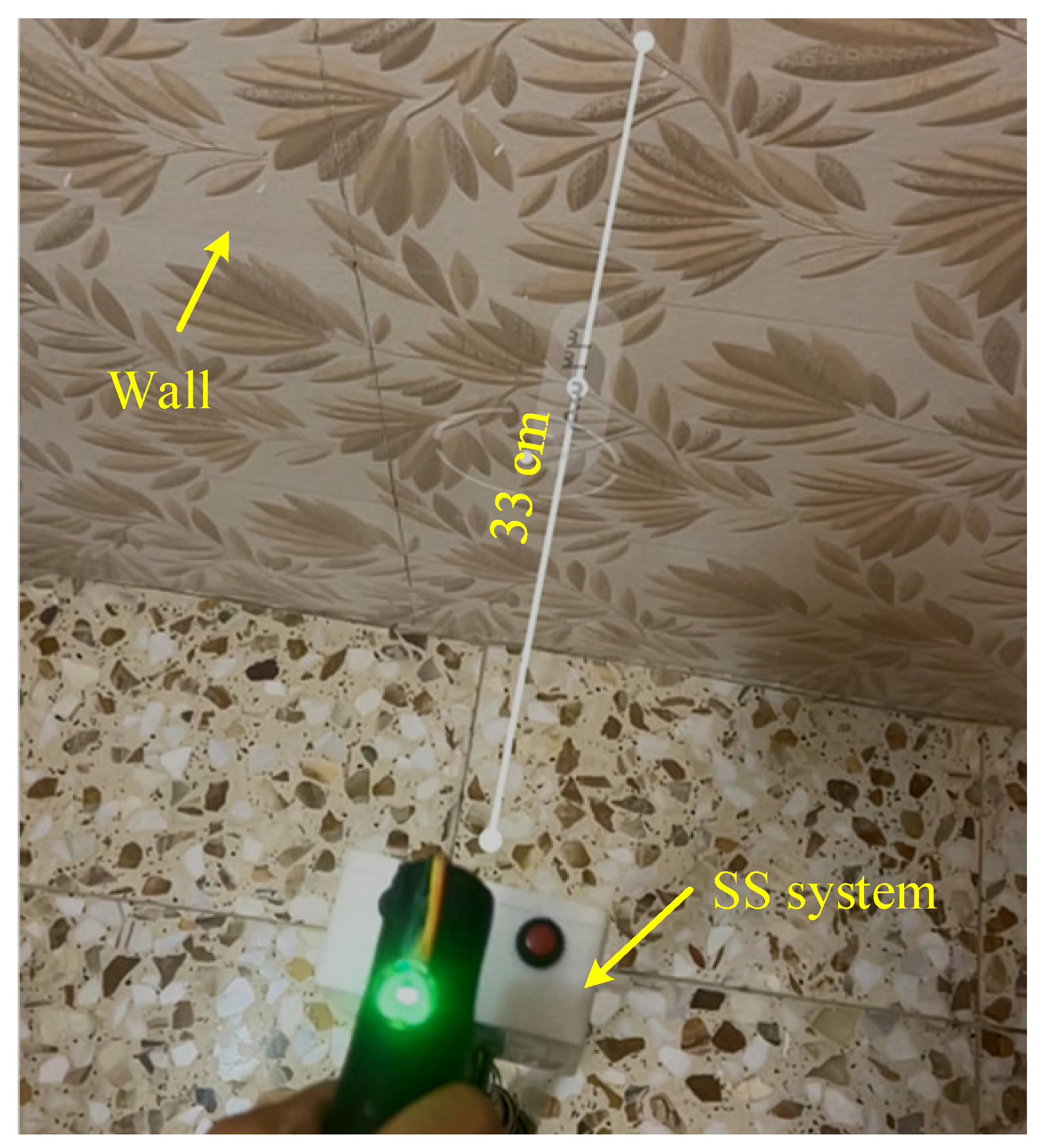

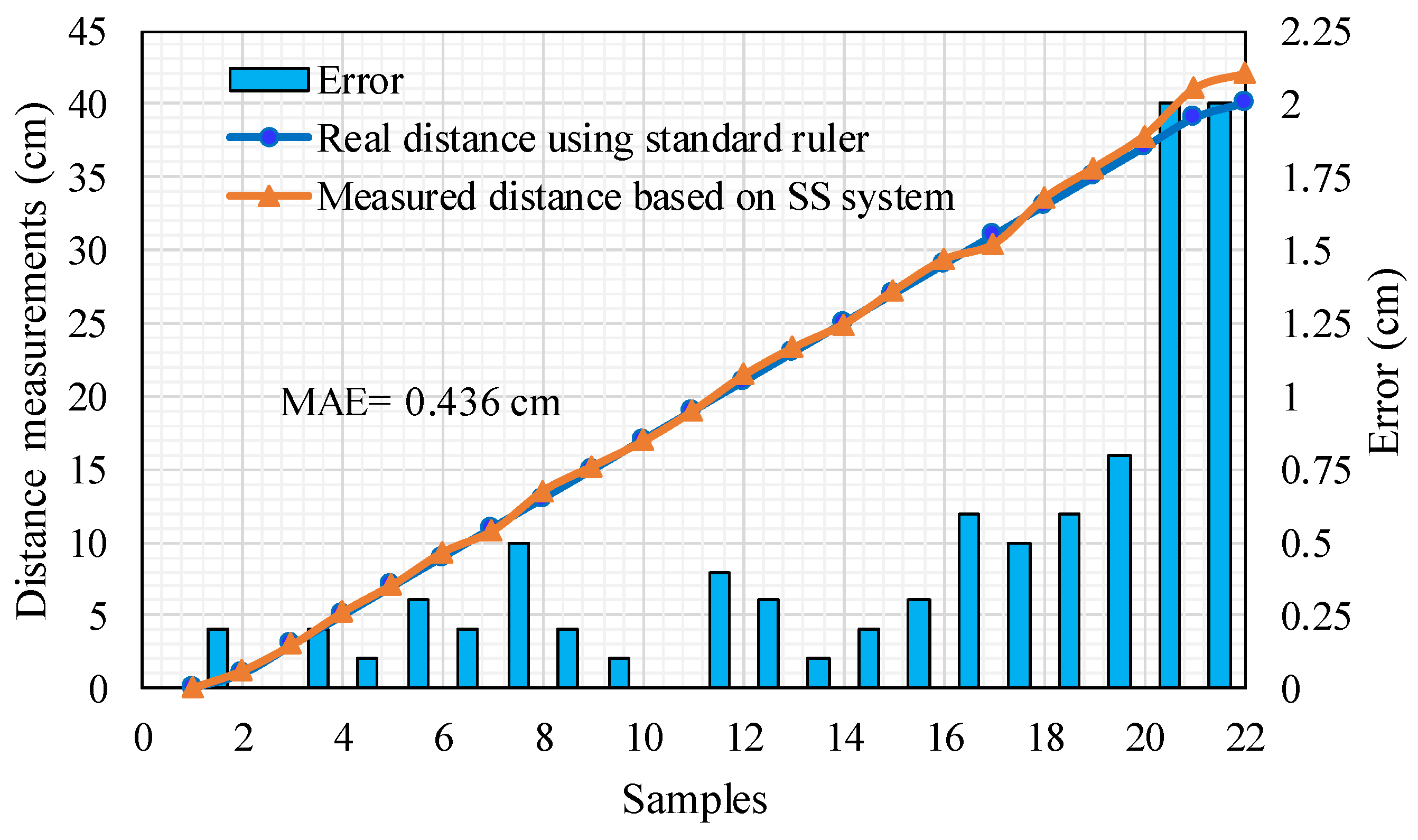

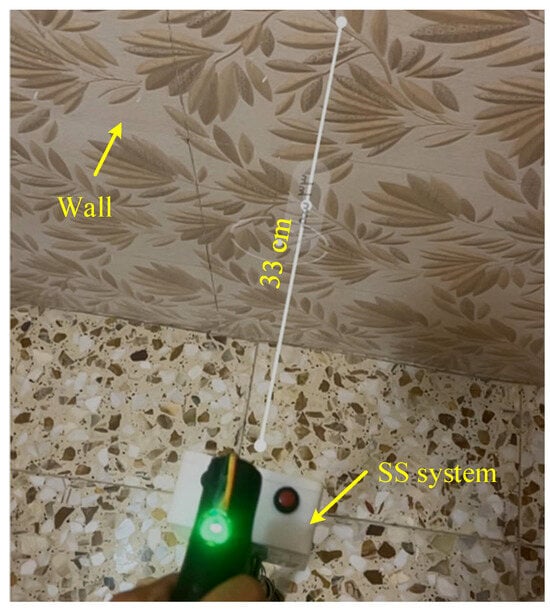

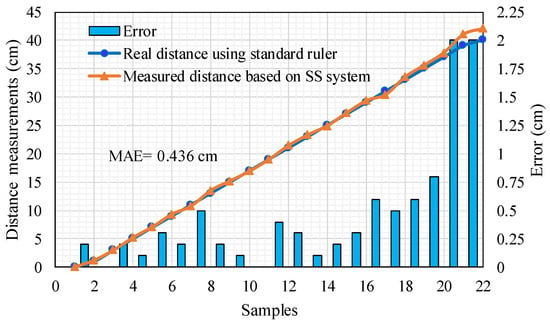

Distance measurements were conducted using an ultrasonic PING sensor (HC-SR04). The obstacle detection system is crucial to the SS because it enables timely identification of obstacles in the VIP's path, allowing users to avoid obstacles before reaching them. We tested the obstacle detection system using the ultrasonic sensor, evaluating its performance within a 40 cm range between the SS and obstacles. Figure 6 shows an example of the testing setup, measuring the distance between the SS and an obstacle (in this case, a wall). Figure 7 compares the distances measured by the ultrasonic sensor (red line with triangle markers) with reference measurements taken with a standard ruler (blue line with circle markers) for 22 samples, which both systems collected concurrently.

Figure 6.

Distance measurements at 33 cm.

Figure 7.

Distance measurements for both systems with errors and MAE.

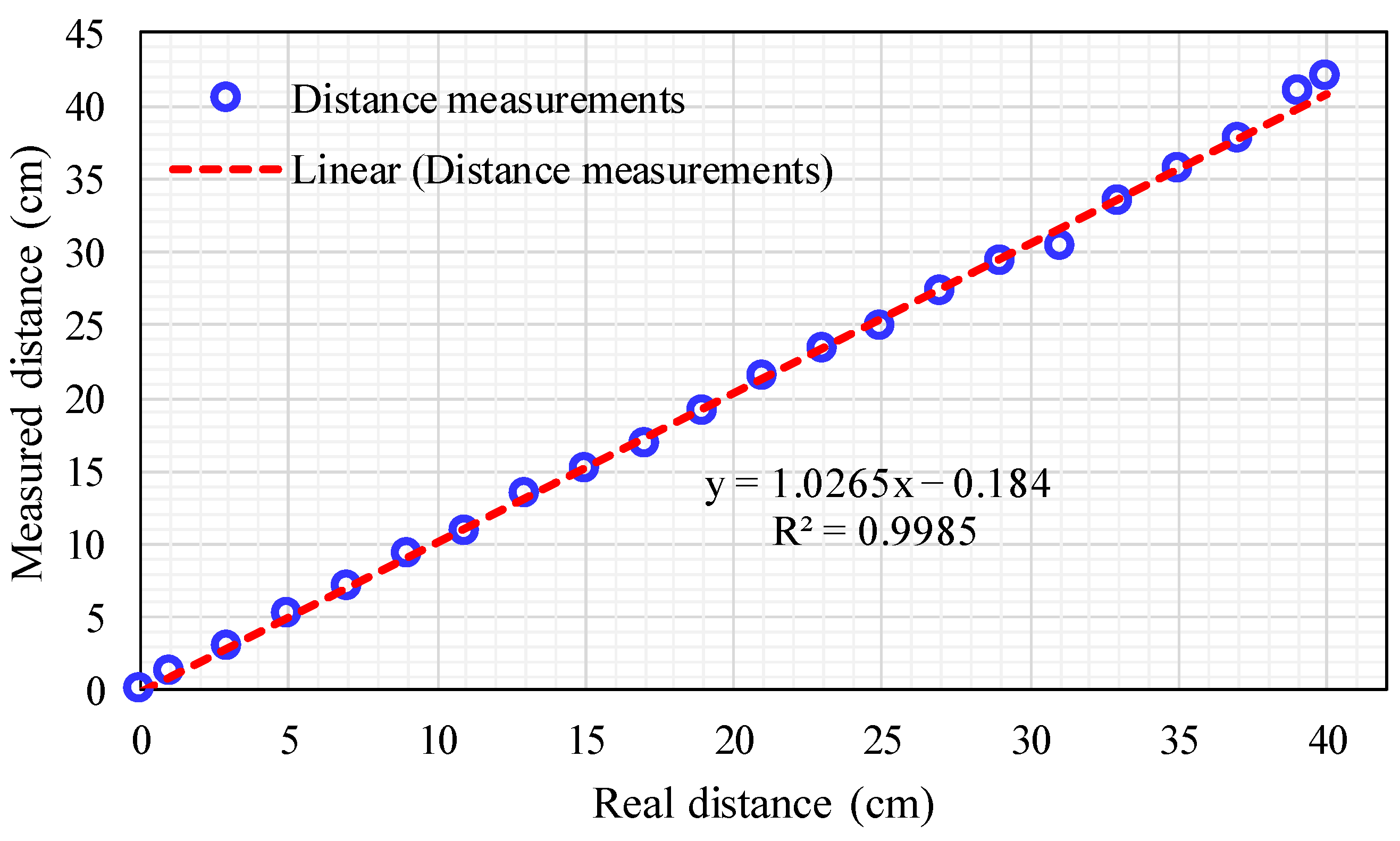

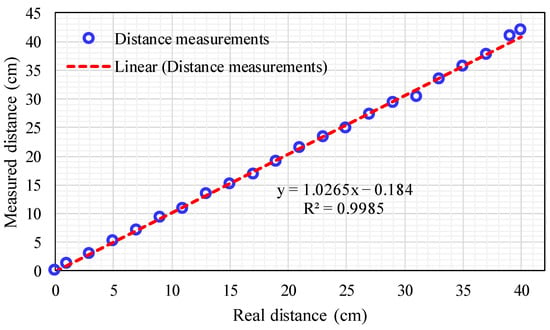

The sample number is plotted on the x-axis, and the distance measurements of both systems are plotted on the left y-axis. The error at each sample point is plotted on the right y-axis as a blue bar chart. The distance measurement error rises above 2 cm, particularly when the distance between the SS and the obstacle exceeds 40 cm, which indicates that the SS system works well at distances below 40 cm. Overall, the measurements of both systems agree closely: the SS system shows a mean absolute error (MAE) of 0.436 cm relative to the ruler measurements. The small remaining differences can be quantified with the correlation coefficient shown in Figure 8, where the real distance (ruler measurements) is plotted on the x-axis and the distance measured by the SS system’s ultrasonic sensor on the y-axis. The data points are highly consistent, with a correlation coefficient of 0.9985, indicating a strong correlation between the two sets of measurements.
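The MAE and correlation coefficient quoted above can be computed from paired readings as shown below. This sketch assumes the correlation coefficient is Pearson's r, since the text does not name the variant. The same two functions apply to the heart rate comparison in Section 7.1.2. The function names are illustrative.

```python
import math


def mean_absolute_error(reference, measured):
    """Mean absolute difference between paired reference and measured readings."""
    if len(reference) != len(measured) or not reference:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(r - m) for r, m in zip(reference, measured)) / len(reference)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)
```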

Figure 8.

The correlation coefficient between the two systems for distance measurements.

7.1.2. Results of Heart Rate Measurements

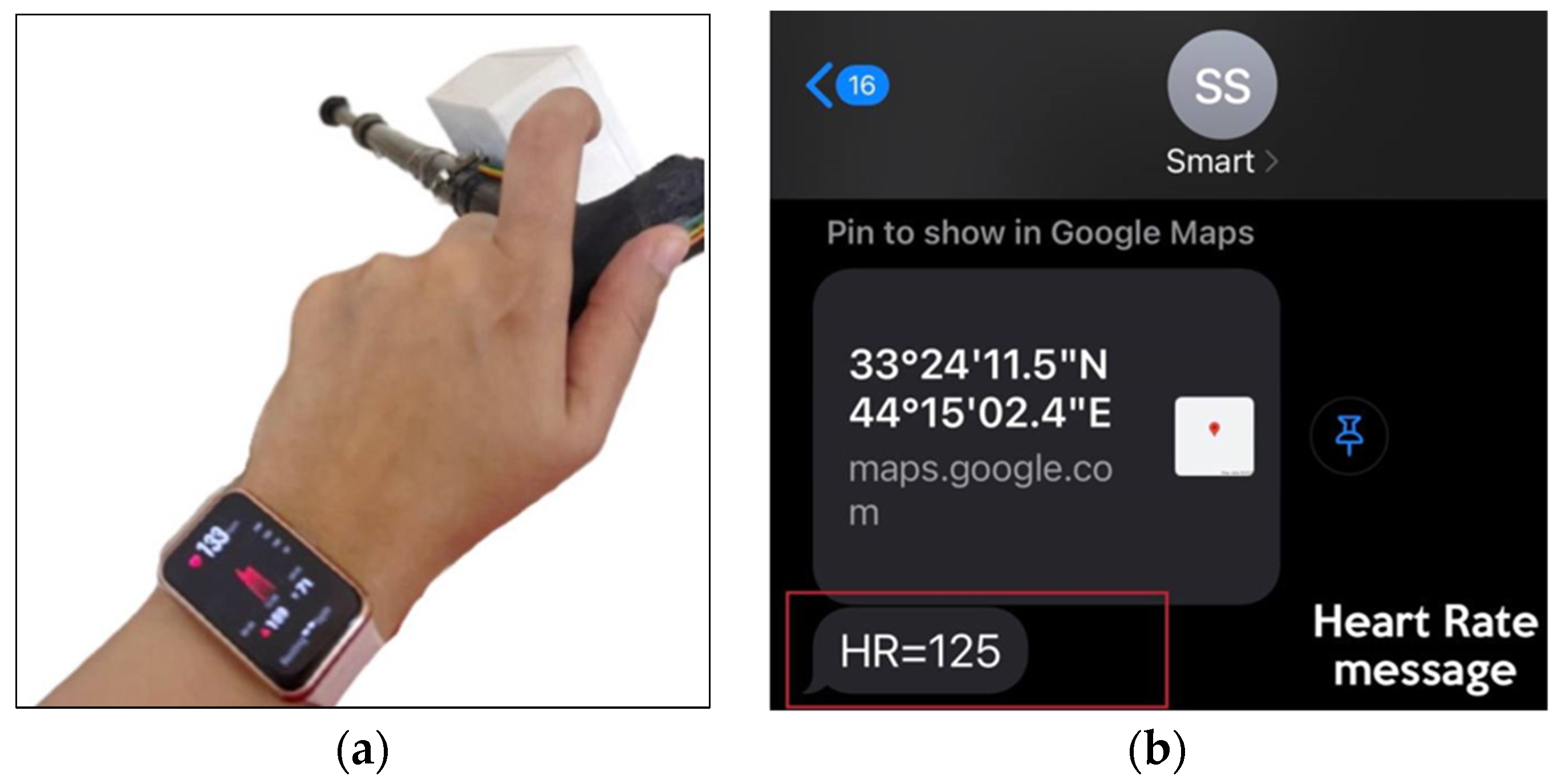

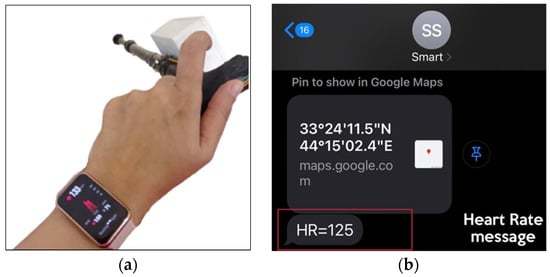

Heart rate measurements were obtained using a heart rate sensor (SEN-11574). Once the components are set up, the SS system measures the heart rate by capturing the heartbeats of the VIP. The VIP places a finger on the part of the stick where the heart rate sensor is attached, and the sensor captures the pulse and sends the measurements to the Arduino microcontroller. The microcontroller then relays the measured data to a family member’s mobile phone via GSM. To validate the accuracy of the heart rate sensor, its readings are compared with those of a benchmark device (a smartwatch) worn on the VIP’s wrist, as depicted in Figure 9a. Figure 9b displays the heart rate measurements captured by the SS system. Both systems collected heart rate data simultaneously.
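The text does not specify how the SEN-11574 pulse signal is converted into a bpm value. A common approach, assumed here for illustration only, is to detect individual beats, measure the inter-beat intervals (IBIs) in milliseconds, and convert their mean to beats per minute. The function name is hypothetical.

```python
def bpm_from_ibis(ibis_ms):
    """Average heart rate in beats per minute from inter-beat intervals (ms).

    60 000 ms per minute divided by the mean interval between beats.
    """
    if not ibis_ms or any(ibi <= 0 for ibi in ibis_ms):
        raise ValueError("need at least one positive inter-beat interval")
    mean_ibi = sum(ibis_ms) / len(ibis_ms)
    return 60_000 / mean_ibi
```

For instance, a mean IBI of 480 ms corresponds to 125 bpm, the elevated rate in Figure 9b that exceeds the 120 bpm threshold.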

Figure 9.

Heart rate measurements: (a) benchmark (smartwatch) and (b) the SS system received on the family member’s mobile phone.

During the heart rate experiment, participants were instructed to exercise to raise their heart rate, which allowed the functionality of the Buzzer and vibration motor to be assessed. In this scenario, the heart rate rose to 125 bpm, as depicted in Figure 9b, exceeding the predefined threshold of 120 bpm. Consequently, the microcontroller activated the Buzzer and vibration motor to alert the VIP.

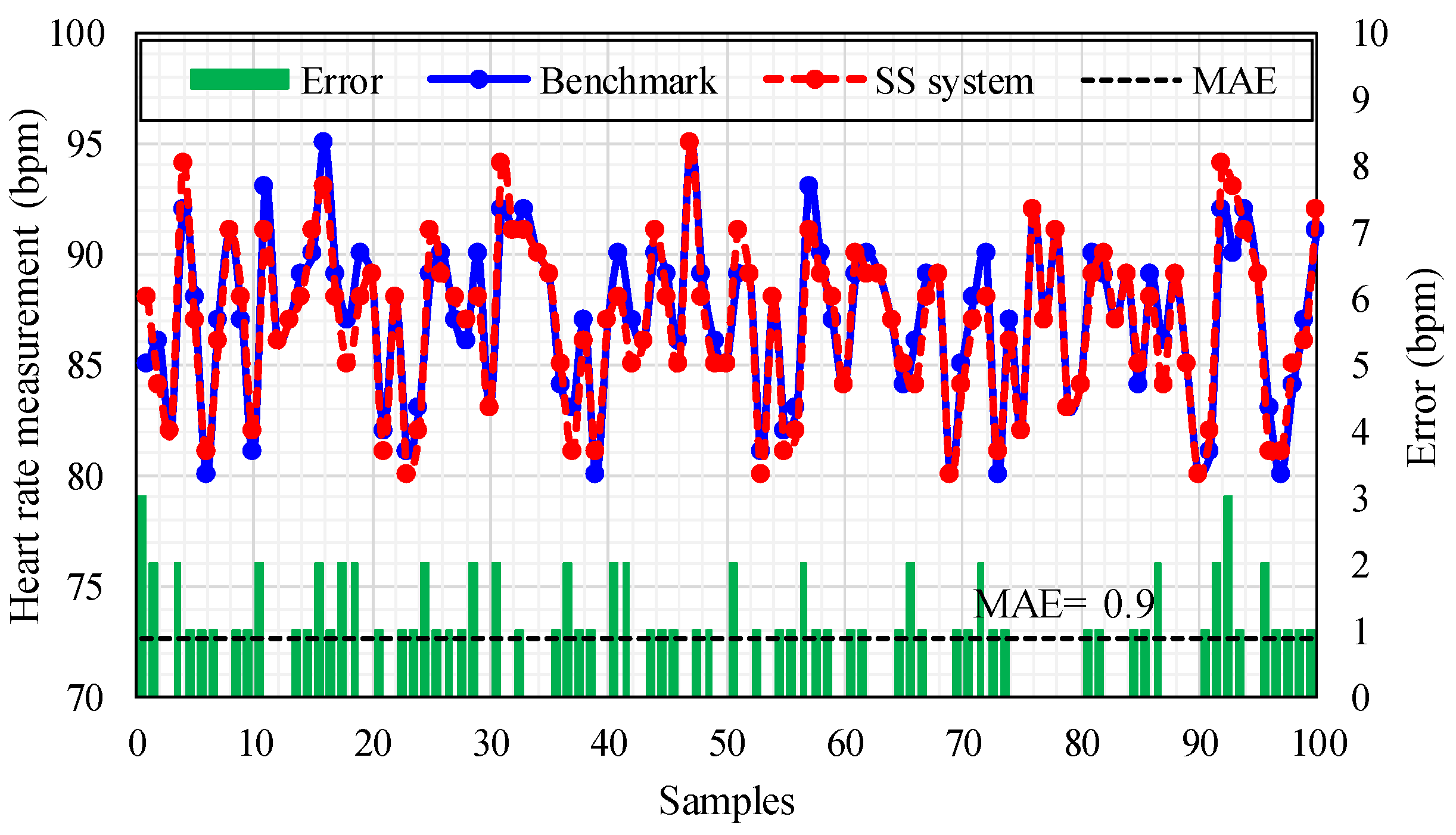

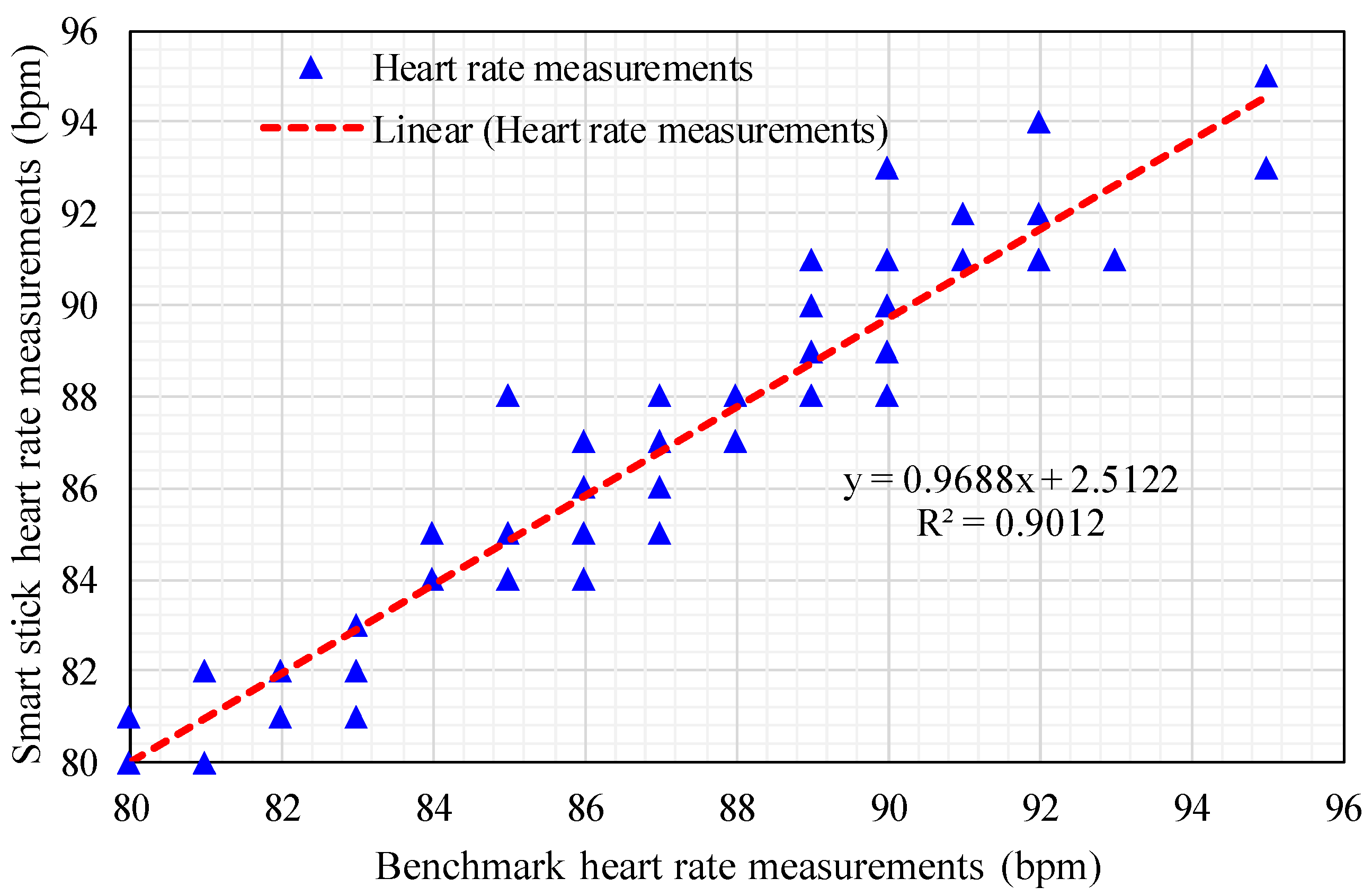

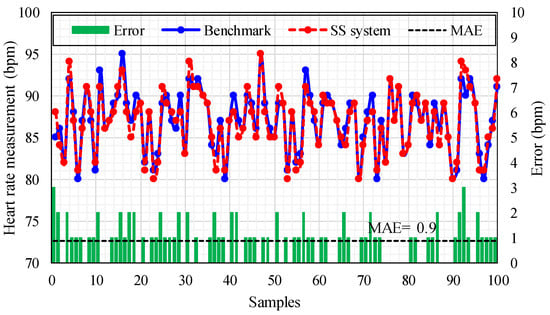

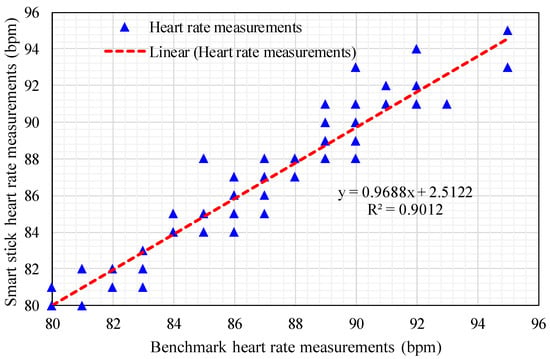

Figure 10 illustrates the heart rate measurements from both the benchmark and the SS system’s heart rate sensor across 100 samples. The x-axis represents the sample number, while the left y-axis shows the heart rate in beats per minute (bpm) for both systems. The blue line with circles denotes the benchmark measurements, and the red dashed line with circles denotes the SS measurements. The error at each sample point is displayed on the right y-axis (green bars); it ranges from 0 to 3 bpm over the 100 samples. The two systems agree closely, with the SS system showing a mean absolute error (MAE) of 0.9 bpm (dashed black line) relative to the benchmark. Figure 11 examines the differences further using the correlation coefficient: the x-axis represents the benchmark heart rate measurements, and the y-axis represents those of the SS system. The data points (blue triangles) are highly consistent, with a correlation coefficient of 0.901, indicating a good correlation between the two sets of measurements.

Figure 10.

Heart rate measurements for both systems with errors and MAE.

Figure 11.

The correlation coefficient between the two systems for heart rate measurements.

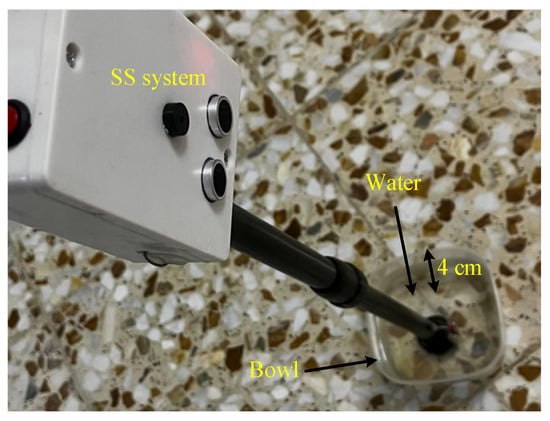

7.1.3. Results of Moisture Measurements

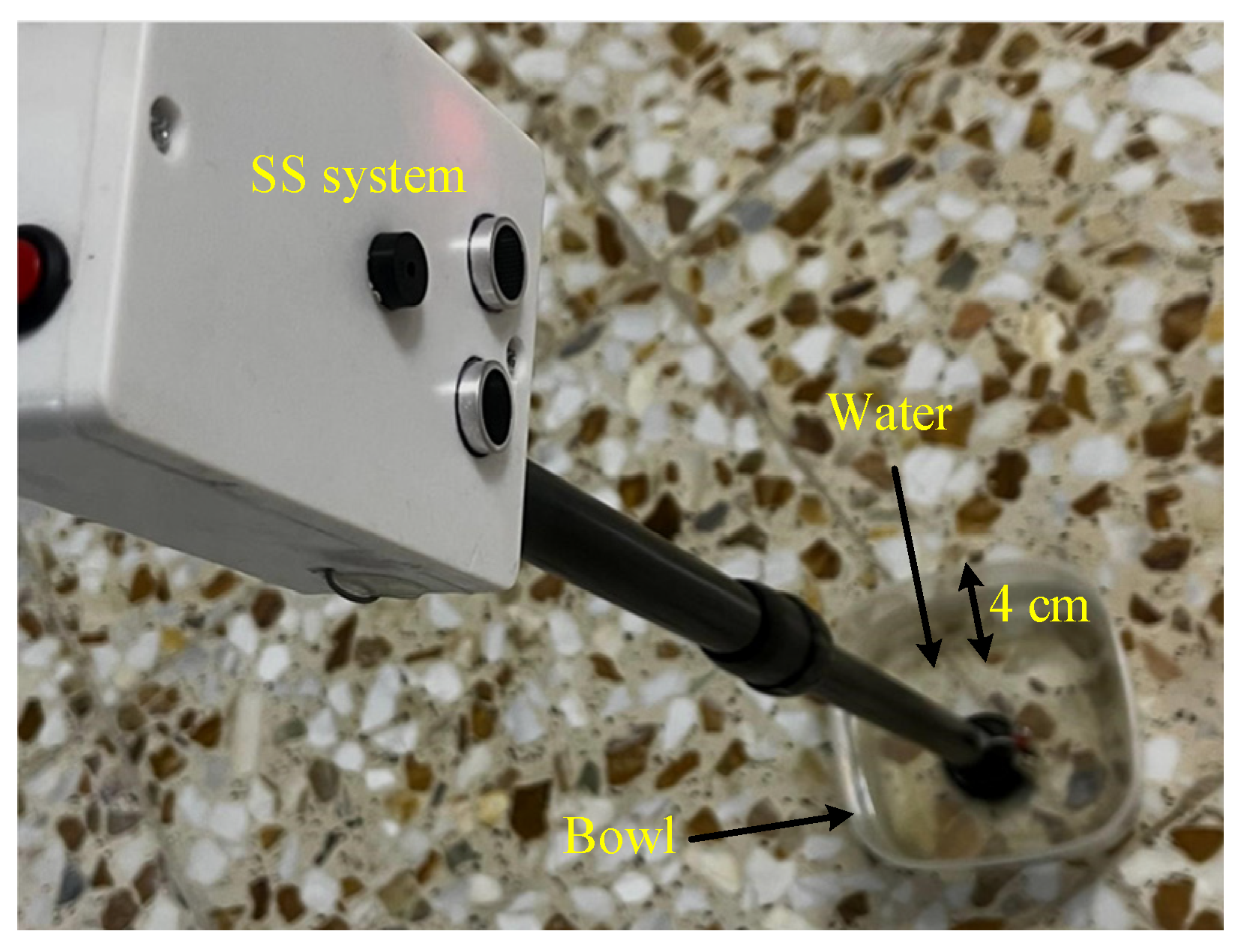

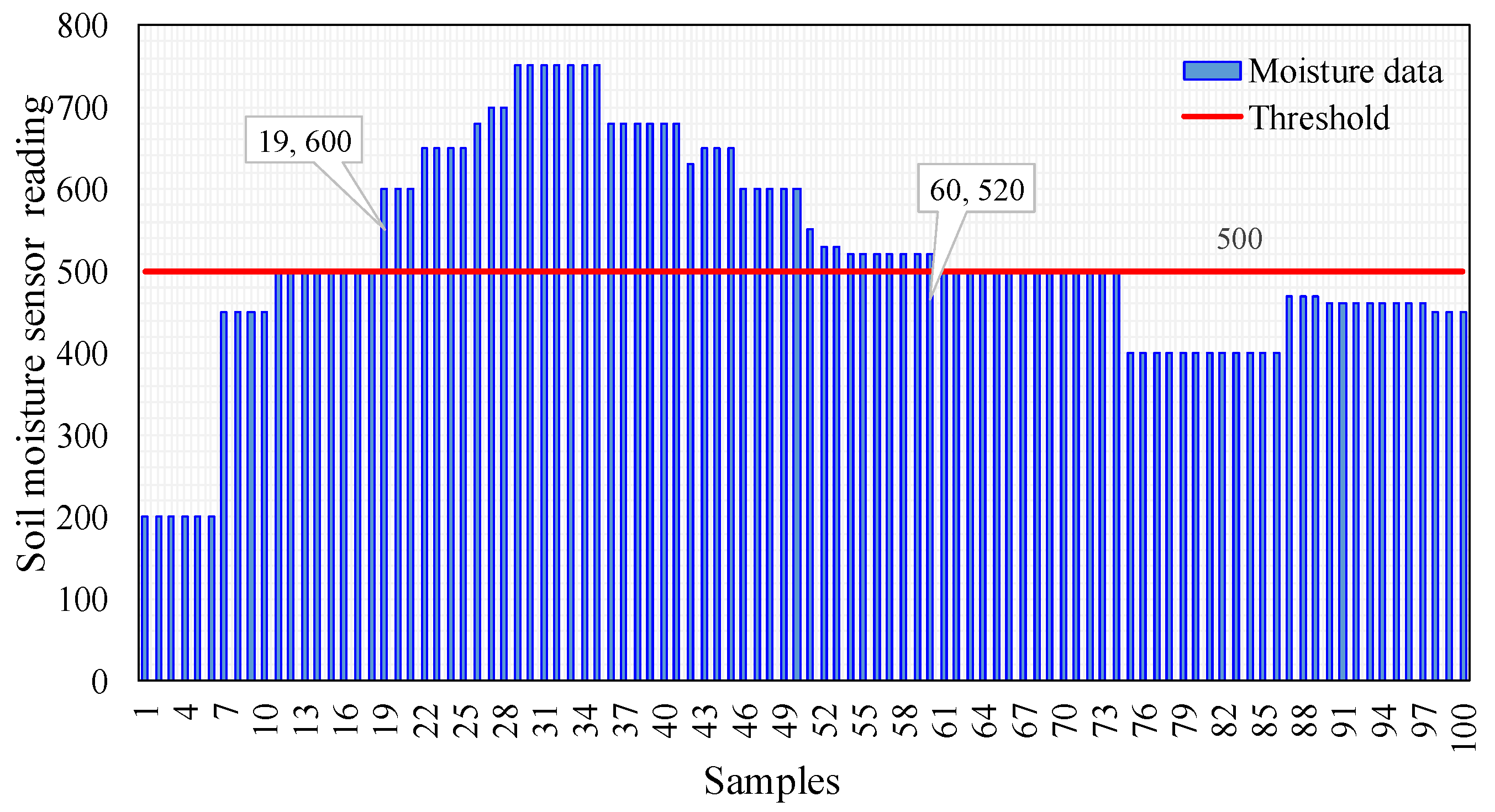

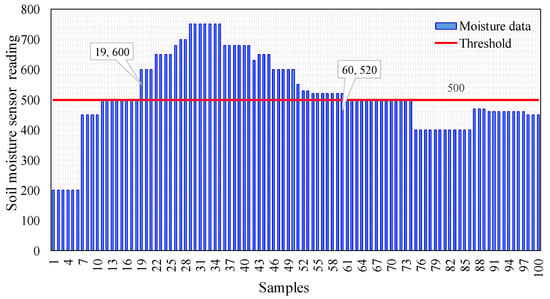

Moisture was measured using a YL-69 moisture sensor. The moisture sensor plays a crucial role for VIP by enhancing their environmental awareness and safety. By continuously monitoring the moisture level of the surrounding soil, the sensor enables VIP to assess the condition of the ground they are walking on. This information is especially important when changes in moisture signal potential hazards, such as slippery surfaces or uneven terrain. By notifying users of changes in soil moisture, the sensor helps them make informed decisions, navigate safely, and avoid potential hazards. When moisture is detected, the VIP feels the vibration motor on the SS grip or hears a sound alert. Figure 12 shows a sample testing arrangement, in which moisture was measured using a plastic bowl containing water to a depth of 4 cm.

Figure 12.

Soil moisture measurements test.

Figure 13 depicts the soil moisture sensor readings for 100 samples, which range from 200 to 750 and are expressed as integer values. A threshold of 500 (red line) was set: when a sensor reading exceeded this threshold, the microcontroller activated both the Buzzer and the vibration motor, and when the reading fell below 500, both remained off. As Figure 13 shows, the Buzzer and the vibration motor operated only between samples 19 and 60 (i.e., wet ground), where the sensor readings were between 520 and 600, above the threshold of 500. For the remaining samples, the readings were below the threshold, and the Buzzer and vibration motor remained off.

Figure 13.

Measurements of soil moisture sensor across different levels of soil moisture.

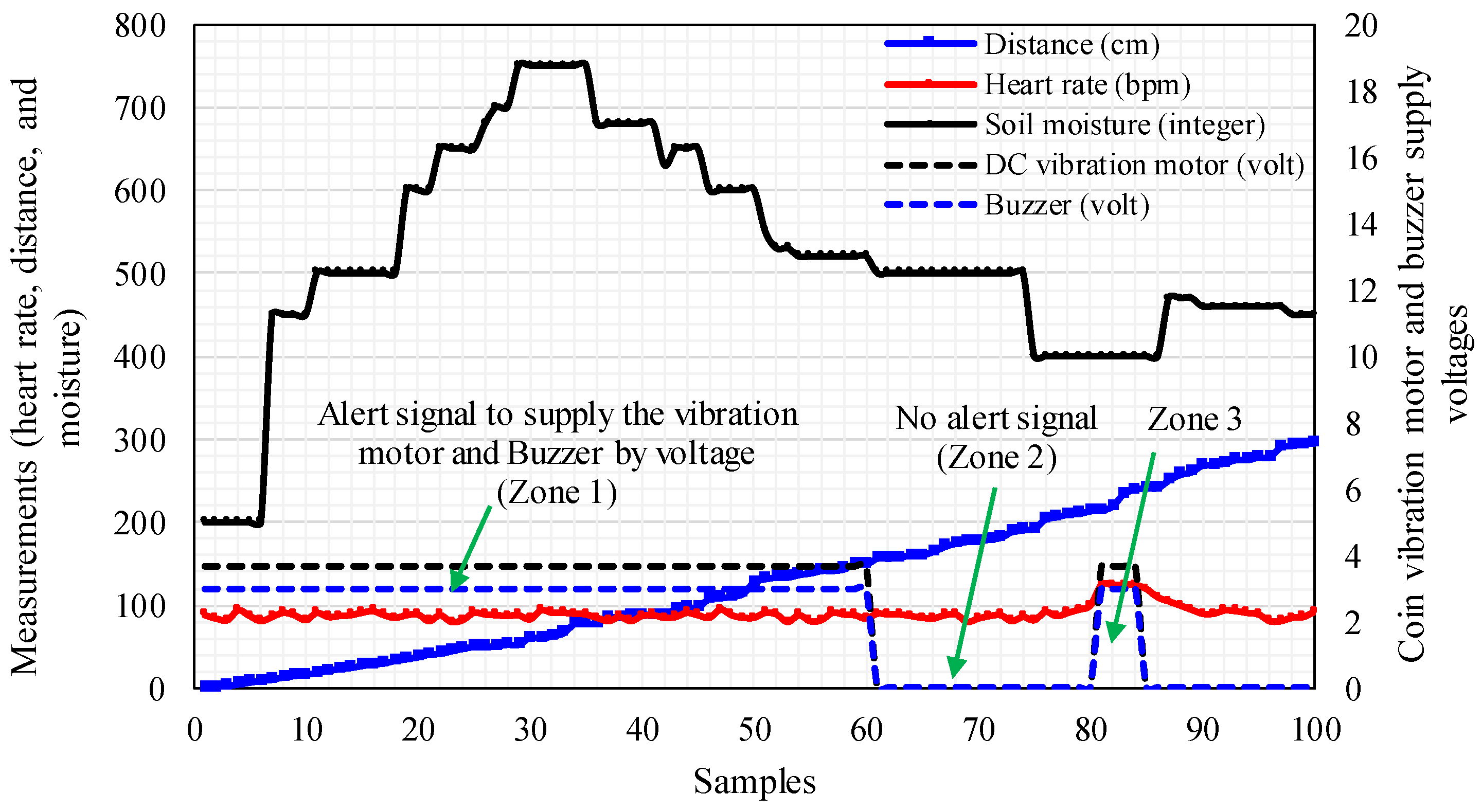

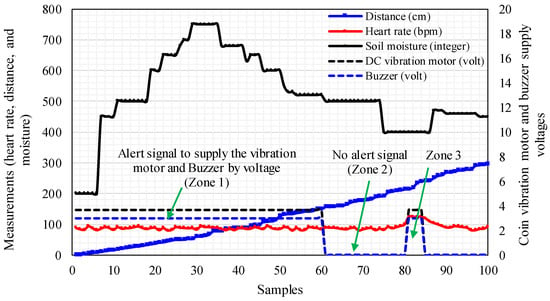

Figure 14 shows how the SS system monitors and responds to physiological and environmental parameters, namely heart rate, distance, and moisture. The SS system measures these parameters with its sensors, and the measurements determine the appropriate response. A predefined threshold for each parameter (40 cm for distance, 120 bpm for heart rate, and an integer value of 500 for moisture) defines when that parameter enters the critical zone, that is, when the obstacle distance falls below 40 cm or the heart rate or moisture reading rises above its threshold. If one or more sensors cross their threshold levels, the microcontroller activates the coin vibration motor and the Buzzer. Otherwise, when all sensor readings remain within their normal ranges, it switches off the motor and Buzzer. This ensures that VIP are promptly notified when physiological or environmental conditions reach critical points.

Figure 14.

Heart rate, distance, and moisture measurements with the corresponding supply voltages applied to the DC vibration motor and Buzzer.

Figure 14 shows the samples on the x-axis, and the sensor measurements and the supply voltages of the coin vibration motor and Buzzer on the left and right y-axes, respectively. The figure illustrates the alert/non-alert signal voltages that the microcontroller generates based on the SS measurements. Zone 1 shows that, for samples 1 to 60, the microcontroller triggers the alert signal, supplying the Buzzer and coin vibration motor with 3.0 and 3.7 V, respectively, because the moisture sensor reading exceeds its threshold (i.e., >500). In contrast, the microcontroller cuts the supply voltage to the Buzzer and coin vibration motor when all sensor measurements are within their normal ranges, as for samples 61 to 80, where the heart rate is 90 bpm, the distance is 157 cm, and the ground moisture level is less than or equal to 500; this is labeled “No alert signal” in Zone 2. In Zone 3 (samples 81 to 84), the heart rate is 125 bpm, the distance is 240 cm, and the ground moisture level is 400. The microcontroller therefore triggers the Buzzer and coin vibration motor because the heart rate exceeds its threshold, and the same scheme applies to the remaining zones.
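The alert rule described for Figure 14 can be expressed as an any-of-three threshold check. The threshold values (40 cm, 120 bpm, 500) come from the text. The comparison directions (alert when an obstacle is closer than 40 cm, or when heart rate or moisture rises above its threshold), the strict inequalities, and the names `Reading`, `alert_reasons`, and `should_alert` are assumptions made for this sketch, which is not the authors' firmware.

```python
from dataclasses import dataclass

# Threshold values from the text; comparison directions are assumptions.
DISTANCE_LIMIT_CM = 40.0       # alert when an obstacle is closer than this
HEART_RATE_LIMIT_BPM = 120.0   # alert when heart rate is above this
MOISTURE_LIMIT = 500           # alert when the moisture reading is above this


@dataclass
class Reading:
    heart_rate_bpm: float
    distance_cm: float
    moisture: int


def alert_reasons(reading):
    """List the parameters that are currently in their critical zone."""
    reasons = []
    if reading.distance_cm < DISTANCE_LIMIT_CM:
        reasons.append("obstacle")
    if reading.heart_rate_bpm > HEART_RATE_LIMIT_BPM:
        reasons.append("heart_rate")
    if reading.moisture > MOISTURE_LIMIT:
        reasons.append("moisture")
    return reasons


def should_alert(reading):
    """True when at least one parameter is critical (Buzzer and vibration
    motor powered); False means both are switched off."""
    return len(alert_reasons(reading)) > 0
```

Applied to the zones described above, the Zone 2 readings (90 bpm, 157 cm, moisture 500) produce no alert, while the Zone 3 readings (125 bpm, 240 cm, moisture 400) trigger an alert because of the heart rate alone.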

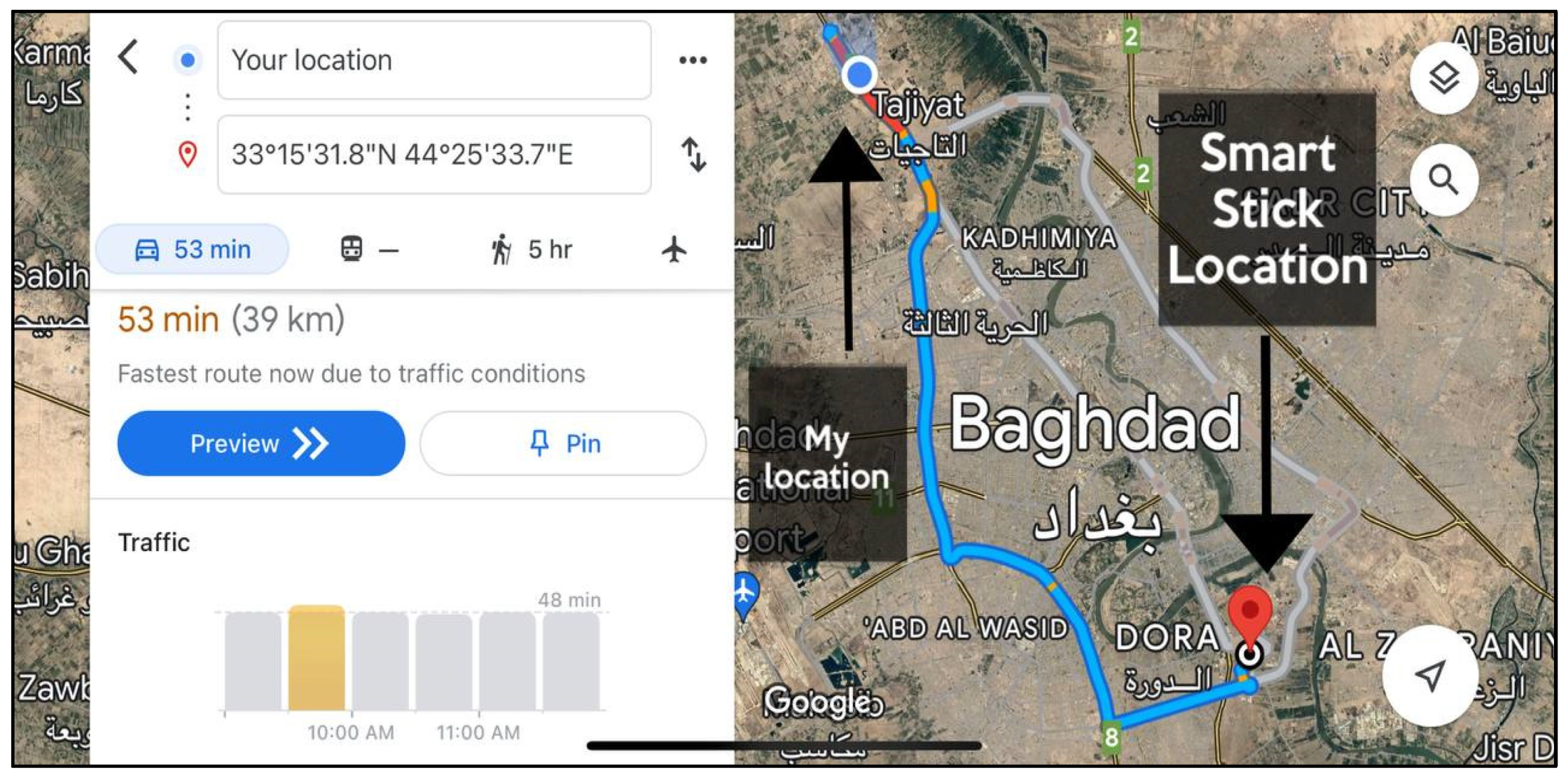

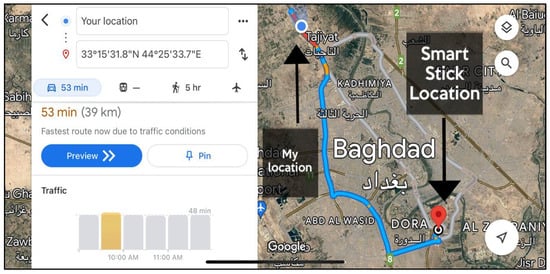

7.1.4. Results of Geolocations Estimation

The NEO-6M GPS module estimates the geolocation of the VIP during navigation, and the GPS and GSM modules together make the VIP’s position available to others. In an emergency, the SIM card in the SS system’s GSM module automatically sends the VIP’s geolocation as a text message to the mobile phone of a family member or caregiver, together with a Google Maps link. Figure 15 shows a Google map with the geolocation received by the family member. When a family member or caregiver receives the SMS message from the SS, they can tap the web link in the message, which opens Google Maps at the VIP’s coordinates and shows both the VIP’s location and their own. The map also shows the estimated time for the observer to travel to the VIP based on the road distance between them, as shown in Figure 15. In the figure, the red icon marks the VIP’s location (Smart Stick location), while the blue icon marks the location of the family member (My location). For example, the figure shows that they are 39 km apart, with an expected arrival time of 53 min within Baghdad city.
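The NEO-6M outputs positions as NMEA sentences, in which latitude is encoded as ddmm.mmmm and longitude as dddmm.mmmm, each followed by a hemisphere letter. The sketch below converts such fields to signed decimal degrees and builds a link in the documented Google Maps URLs search format, with the comma URL-encoded as `%2C`. The message wording, the function names, and the sample coordinates (chosen near Baghdad) are hypothetical; the paper does not give its SMS format or parsing code.

```python
def nmea_to_decimal(field, hemisphere):
    """Convert an NMEA coordinate field to signed decimal degrees.

    Latitude is ddmm.mmmm and longitude dddmm.mmmm: the two digits before
    the decimal point and everything after it are minutes.
    """
    dot = field.index(".")
    degrees = int(field[:dot - 2])
    minutes = float(field[dot - 2:])
    value = degrees + minutes / 60.0
    return -value if hemisphere in ("S", "W") else value


def maps_link(lat, lon):
    """Google Maps URLs 'search' link pointing at the given coordinates."""
    return ("https://www.google.com/maps/search/?api=1&query="
            f"{lat:.6f}%2C{lon:.6f}")


def emergency_sms(lat, lon):
    """Hypothetical SMS body; the paper does not give its exact wording."""
    return f"Smart Stick emergency. Location: {lat:.6f},{lon:.6f} {maps_link(lat, lon)}"


# Hypothetical fix near Baghdad, as it might appear in a $GPRMC sentence.
lat = nmea_to_decimal("3318.7890", "N")
lon = nmea_to_decimal("04421.6000", "E")
print(emergency_sms(lat, lon))
```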

Figure 15.

The mobile phone of a family member receives the geolocation information of a VIP using NEO-6M GPS and GSM.

7.2. Results of ML Algorithms

This part presents the results of the confusion matrix parameters and the performance metrics, as follows.

7.2.1. Results of Confusion Matrix Parameters

Table 3 and Table 4 present the confusion matrix parameters (TN, FP, FN, and TP) of the different ML algorithms for the training and cross-validation phases, respectively. These results show how well each algorithm classifies instances, correctly and incorrectly, into the different classes. In both tables, the TP and TN values are notably high for all algorithms, whereas the FP values are lower than the FN values, and the TP counts exceed all other entries. This indicates that the ML models perform strongly in alert decision-making. A total of 4000 samples were collected for each sensor, divided into 3000 samples for training and 1000 for cross-validation.
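The four confusion matrix parameters in Tables 3 and 4 can be tallied directly from the true and predicted alert labels. This is a minimal sketch, assuming a binary encoding with 1 = alert and 0 = no alert; the function name is illustrative. The counts are returned in the TN, FP, FN, TP order used in the tables and, for the cross-validation phase, would sum to the 1000 held-out samples.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Tally (TN, FP, FN, TP) for binary alert labels.

    Any label other than `positive` is treated as the negative class.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have equal length")
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1   # alert correctly raised
            else:
                fn += 1   # missed alert
        elif pred == positive:
            fp += 1       # false alarm
        else:
            tn += 1       # correctly silent
    return tn, fp, fn, tp
```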

Table 3.

Confusion matrix parameters for different ML algorithms for the training phase.

Table 4.

Confusion matrix parameters for different ML algorithms for the cross-validation phase.

Table 3 shows that the kNN algorithm performed well, and the Random Forest algorithm also showed strong results. Logistic Regression achieved decent performance but produced many false positives. Gradient Boosting and AdaBoost performed well, with low error rates. The Neural Network algorithm produced many errors. The SVM algorithm showed reasonable performance but with more errors. The Decision Tree algorithm showed a good balance and reliable accuracy. Naïve Bayes was very conservative. CN2 Rule Induction performed exceptionally well, with very low error rates.

Table 4 shows that kNN performed well. The Neural Network produced a high number of false negatives. Gradient Boosting achieved high accuracy. Naïve Bayes correctly identified all negative cases, with no false positives. AdaBoost performed exceptionally well, with no false negatives. Like Gradient Boosting, Random Forest had few false positives and no false negatives. The Decision Tree algorithm also performed very well, with few false positives and false negatives. The SVM algorithm showed reasonable performance but with some errors. CN2 Rule Induction achieved perfect performance, with no false positives or false negatives. Logistic Regression achieved good performance but produced many false positives and false negatives.

7.2.2. Results of Performance Metrics

The ML results can be analyzed further using metrics such as alert decision accuracy, precision, recall, specificity, MCC, AUC, and F1-score to evaluate the overall effectiveness of the algorithms, as presented in Table 5 and Table 6 for the training and cross-validation phases, respectively.

Table 5.

Performance metrics for different ML algorithms for the training phase.

Table 6.

Performance metrics for different ML algorithms for the cross-validation phase.

Table 5 presents the training performance of the ML algorithms. AdaBoost and Gradient Boosting stand out, with the highest alert decision accuracy, F1-score, precision, and recall, reaching 98.4%. Their MCC, specificity, and AUC peak at 96.6%, 97.7%, and 98.1%, respectively. Random Forest, SVM, Decision Tree, kNN, Logistic Regression, CN2 Rule Induction, and Naïve Bayes exhibit lower scores, while the Neural Network performs the least effectively among the evaluated algorithms. The training time ranges from 0.177 s (Decision Tree) to 19.24 s (CN2 Rule Induction).

Table 6 presents the cross-validation performance of the ML algorithms. AdaBoost, Gradient Boosting, Decision Tree, and Random Forest achieve the highest alert decision accuracy, F1-score, precision, MCC, and recall, reaching 99.9%, and their AUC and specificity peak at 100%. kNN, CN2 Rule Induction, SVM, Neural Network, and Logistic Regression exhibit lower scores, while Naïve Bayes performs the least effectively among the evaluated algorithms. The cross-validation time ranges from 0.001 s (Decision Tree) to 19.24 s (SVM).

By analyzing the performance of these algorithms, we can determine which algorithm is best suited to a particular application based on its accuracy and error rate. Accordingly, the algorithms can be classified into three categories:

- High-performance algorithms: Gradient Boosting, AdaBoost, CN2 Rule Induction, Random Forest, and Decision Tree (highlighted in bold).

- Moderate-performance algorithms: kNN, Neural Network, and SVM.

- Lower-performance algorithms: Logistic Regression and Naïve Bayes.

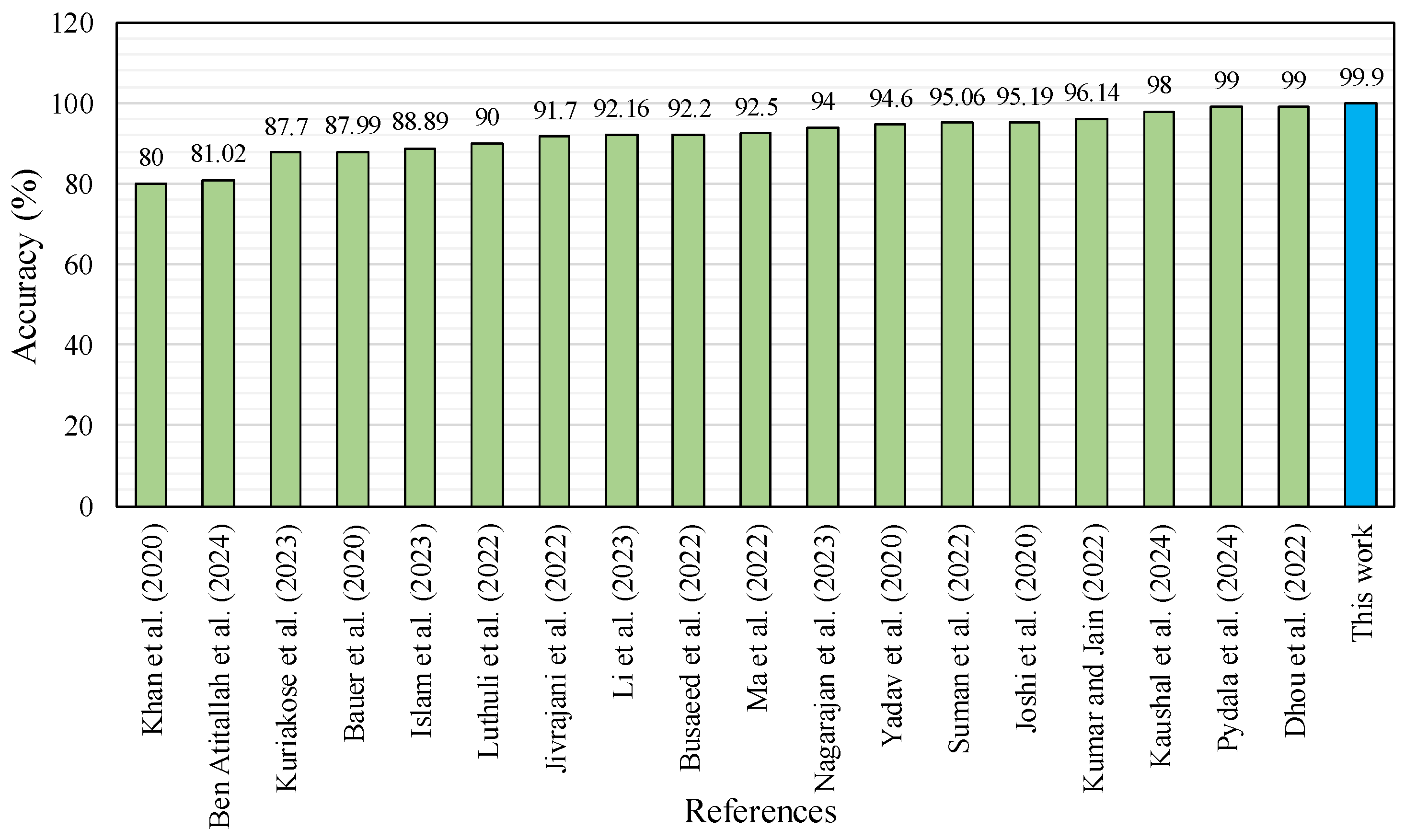

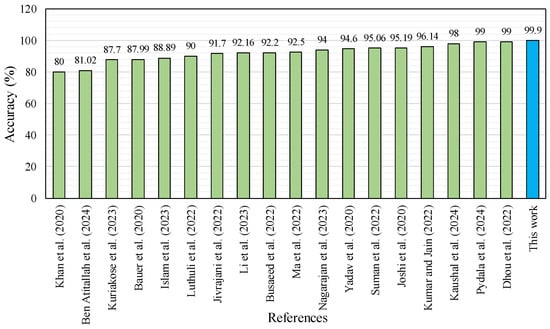

8. Comparison Results

Table 7 provides a detailed comparison between the results of the current study and those reported in previous studies that used ML algorithms. In particular, it includes comparisons with approaches such as the Gaussian Mixture Model (GMM)-based Plane Classifier, Light Detection and Ranging (LiDAR), Recurrent Neural Network (RNN), Deep Convolutional Neural Network (DCNN), Deep Residual Network (DRN), Deep Learning Navigation Assistant Visual Impairments (DeepNAVI), You Only Look Once (YOLO), and Region-based Convolutional Neural Network (R-CNN).

Our research used AdaBoost, Gradient Boosting, and Random Forest models to increase the accuracy of alert decisions. These models achieved an accuracy of 99.9%, higher than that of every other system compared in Table 7. This improvement highlights the effectiveness of the chosen methods in detecting and accurately classifying relevant information, and hence in making the right alert decisions for the VIP. In contrast, Khan et al. [36] reported a lower accuracy (80%) using a DL model based on Tesseract version 4. The differences in accuracy among the studies listed in Table 7 depend mainly on the type of ML algorithm adopted and the data processing method. The high accuracy achieved by our models indicates their robustness and flexibility, suggesting that ML approaches may provide better performance in similar applications in the future. Furthermore, our SS system relies on minimal, affordable, simple, and readily available hardware. Unlike other systems in Table 7, such as those in [17,28,36,54,55,56,57,58], our system does not require a Raspberry Pi, camera, accelerometer, or headphones, which are typically costly. Thus, the complexity and cost of the proposed SS system are reduced compared with previous systems.

To confirm the performance of the proposed SS system in terms of alert decision accuracy, its results are compared with the state of the art in Figure 16. The figure compares eighteen articles with our SS system; like our system, these works target VIP and adopt artificial intelligence. Figure 16 shows that the proposed SS system outperforms these works, achieving an alert decision accuracy of 99.9%, the highest among the compared artificial intelligence techniques.

Table 7.

Comparison between the current study with previous works.


Column groups: Sensors (Ultrasonic, Moisture, Heart Rate, Accelerometer); Actuator/Device (Vibration Motor, Buzzer, Camera, Earphone/Headphone); Communication Module (GPS, GSM); Processor (Raspberry Pi, Arduino); Detection Type (Obstacle Detection, Moisture Detection, Health Monitoring); Environments (Indoor, Outdoor); Performance Metric (Accuracy).

| Ref./Year | Task | ML Model | Ultrasonic | Moisture | Heart Rate | Accelerometer | Vibration Motor | Buzzer | Camera | Earphone/Headphone | GPS | GSM | Raspberry Pi | Arduino | Obstacle Detection | Moisture Detection | Health Monitoring | Indoor | Outdoor | Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jivrajani et al. [1]/2022 | Obstacle detection | CNN | ✓ | × | ✓ | × | × | × | ✓ | × | ✓ | ✓ | ✓ | × | ✓ | ✓ | ✓ | ✓ | ✓ | 91.7 |
| Luthuli et al. [54]/2022 | Detect obstacles and objects | CNN | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 90.00 |
| Yang et al. [59]/2024 | | A novel approach-based TENG | × | × | × | × | ✓ | ✓ | × | × | × | × | × | ✓ | ✓ | ✓ | × | × | × | N/A |
| Ben Atitallah et al. [27]/2024 | Obstacle detection | YOLO v5 neural network | × | × | × | × | × | × | × | × | × | × | × | × | ✓ | × | × | ✓ | ✓ | 81.02 |
| Ma et al. [28]/2022 | | YOLACT algorithm-based CNN | ✓ | × | × | ✓ | ✓ | × | ✓ | × | ✓ | × | ✓ | ✓ | ✓ | × | × | × | × | 92.5 |
| Khan et al. [36]/2020 | | Tesseract OCR engine | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 80.00 |
| Bauer et al. [60]/2020 | | | × | × | × | × | × | × | ✓ | × | × | × | × | × | ✓ | × | × | × | ✓ | 87.99 |
| Dhou et al. [55]/2022 | | | ✓ | × | × | ✓ | × | ✓ | ✓ | × | ✓ | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 89, 99, 98, 93 |
| Busaeed et al. [61]/2022 | Obstacle detection | Kstar | ✓ | × | × | × | ✓ | ✓ | ✓ | ✓ | × | × | × | ✓ | ✓ | × | × | ✓ | ✓ | 92.20 |
| Suman et al. [10]/2022 | Obstacle identification | RNN | ✓ | ✓ | × | × | × | × | ✓ | ✓ | × | × | × | ✓ | ✓ | ✓ | × | ✓ | ✓ | 95.06 (indoor), 87.68 (outdoor) |
| Kumar and Jain [56]/2022 | Recognize the environments and navigate | YOLO | ✓ | × | × | × | × | × | ✓ | ✓ | ✓ | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 96.14 |
| Nagarajan and Gopinath [9]/2023 | Object recognition | DCNN and DRN | × | × | × | × | × | × | × | × | × | × | × | × | ✓ | × | × | ✓ | × | 94.00 |
| Kuriakose et al. [62]/2023 | Navigation assistant | DeepNAVI | ✓ | × | × | × | × | × | ✓ | × | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 87.70 |
| Islam et al. [13]/2023 | | SSDLite MobileNetV2 | ✓ | × | × | × | × | × | ✓ | × | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 88.89 |
| Li et al. [17]/2023 | | YOLO v5 | ✓ | ✓ | ✓ | ✓ | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | ✓ | ✓ | ✓ | ✓ | 92.16 |
| Pydala et al. [63]/2024 | | | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | × | × | ✓ | × | × | × | × | 99.00 |
| Kaushal et al. [64]/2024 | Obstacle identification | CNN | ✓ | × | × | × | × | ✓ | ✓ | × | ✓ | ✓ | × | ✓ | ✓ | × | × | ✓ | ✓ | 98.00 |
| Yadav et al. [57]/2020 | Object identification | CNN | ✓ | × | × | × | ✓ | × | ✓ | ✓ | × | × | ✓ | ✓ | × | × | × | × | | 94.6 |
| Kumar et al. [65]/2024 | Obstacle detection | CNN-based MobileNet model | ✓ | × | × | × | × | × | × | ✓ | ✓ | ✓ | × | ✓ | ✓ | × | × | ✓ | × | N/A |
| Joshi et al. [58]/2020 | | YOLO v3 | ✓ | × | × | × | × | × | ✓ | ✓ | × | × | ✓ | × | ✓ | × | × | ✓ | ✓ | 95.19 |
| This work | | AdaBoost, Gradient Boosting, Random Forest | ✓ | ✓ | ✓ | × | ✓ | ✓ | × | × | ✓ | ✓ | × | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 99.9 |

✓: Found; ×: Not found; N/A: Not available; TENG: Triboelectric Nanogenerator; GMM: Gaussian Mixture Model-based Plane Classifier; LiDAR: Light Detection and Ranging; RNN: Recurrent Neural Network; DCNN: Deep Convolutional Neural Network; DRN: Deep Residual Network; DeepNAVI: Deep Learning Navigation Assistant Visual Impairments; YOLO: You Only Look Once; R-CNN: Region-based Convolutional Neural Network; LR: Logistic Regression.

Figure 16.

Comparison of accuracy of the SS system with previous works [1,9,10,13,17,27,28,36,54,55,56,57,58,60,61,62,63,64].

9. Conclusions

This study presented the development and implementation of a smart stick designed to improve the mobility and autonomy of VIP. Using GPS and GSM modules, heart rate, moisture, and ultrasonic sensors, a vibration motor, and an Arduino Nano microcontroller, the smart stick provides real-time feedback and alerts to its user. Integrating ML algorithms, particularly AdaBoost, Random Forest, and Gradient Boosting, proved highly effective, achieving 99.9% accuracy, F1-score, recall, precision, and MCC, and 100% AUC and specificity in the cross-validation phase. These results highlight the smart stick’s potential to considerably improve the quality of life of VIP, allowing them to navigate their surroundings with greater confidence and independence. This work marks an essential step toward more sophisticated assistive tools that support the independence and well-being of blind people and other VIP. The comparison with previous studies highlights the progress made in the current research and the potential of these findings to inform the future development and implementation of ML techniques. The high accuracy of our models demonstrates their current effectiveness and paves the way for new features and growth in the field.

The findings of this study have important implications for improving the independence and quality of life of VIP. The new SS system, equipped with sensors and advanced machine learning algorithms, represents a significant step forward in assistive technology.

The SS system demonstrates significant potential to enhance the mobility and safety of VIP. However, several approaches for future improvements and extensions could further optimize its functionality and user experience, as follows:

- Additional sensors, such as thermal imaging, light detection and ranging (LiDAR), infrared, and cameras, could enable the system to detect and classify obstacles more precisely, especially under varying environmental conditions.

- Adding other health sensors to the SS system, such as oxygen saturation and blood pressure monitors, could provide more thorough health monitoring.

- Using edge and cloud computing could increase real-time data processing capabilities and enable continuous learning and updating of the ML algorithms based on data collected from multiple VIP.

- Implementing deep learning algorithms could improve decision-making and pattern recognition, making the SS system more adaptive to complex and diverse scenarios.

- Reinforcement learning algorithms, such as Deep Q-Networks, could help the SS system learn specific user preferences and behaviors, resulting in a more personalized and effective travel experience.

- Combining the SS system with smart eyeglasses could improve VIP navigation in various surroundings.

- Another potential enhancement concerns battery usage, as the battery lifetime of the SS is currently limited. To overcome this power limitation, wireless power transfer could, for example, be used to charge the SS battery when the VIP is not using it.

- Expanding the feedback mechanisms to include haptic responses and voice signals could accommodate a wider range of user preferences and needs, improving the overall user experience.

- In the current work, the ML models were trained and tested offline. In the next step, we plan to run them in real time, which requires a more capable microcontroller, such as the Arduino Nano 33 BLE Sense, to combine sensor measurements with the ML algorithms.

By addressing these potential improvements and expansions, the SS navigation system can evolve into a comprehensive, user-friendly assistive technology to enhance the lives of VIP.

Author Contributions

Conceptualization, S.K.G. and H.S.K.; data curation, A.A.M. and L.A.S.; formal analysis, H.S.K., A.A.M. and L.A.S.; investigation, S.K.G., H.S.K. and A.A.M.; methodology, S.K.G. and A.A.M.; resources, L.A.S.; software, H.S.K.; supervision, S.K.G.; validation, S.K.G. and A.A.M.; visualization, L.A.S.; writing—original draft, S.K.G., H.S.K. and A.A.M.; writing—review and editing, S.K.G., H.S.K., A.A.M. and L.A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Dataset available on request from the authors.

Acknowledgments

The authors thank the Department of Medical Instrumentation Engineering Techniques faculty at the Electrical Engineering Technical College, Middle Technical University-Baghdad, Iraq, for cooperating.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jivrajani, K.; Patel, S.K.; Parmar, C.; Surve, J.; Ahmed, K.; Bui, F.M.; Al-Zahrani, F.A. AIoT-based smart stick for visually impaired person. IEEE Trans. Instrum. Meas. 2022, 72, 2501311. [Google Scholar] [CrossRef]

- Ashrafuzzaman, M.; Saha, S.; Uddin, N.; Saha, P.K.; Hossen, S.; Nur, K. Design and Development of a Low-cost Smart Stick for Visually Impaired People. In Proceedings of the International Conference on Science & Contemporary Technologies (ICSCT), Dhaka, Bangladesh, 5–7 August 2021; pp. 1–6. [Google Scholar]

- Farooq, M.S.; Shafi, I.; Khan, H.; Díez, I.D.L.T.; Breñosa, J.; Espinosa, J.C.M.; Ashraf, I. IoT Enabled Intelligent Stick for Visually Impaired People for Obstacle Recognition. Sensors 2022, 22, 8914. [Google Scholar] [CrossRef] [PubMed]

- Ben Atitallah, A.; Said, Y.; Ben Atitallah, M.A.; Albekairi, M.; Kaaniche, K.; Alanazi, T.M.; Boubaker, S.; Atri, M. Embedded implementation of an obstacle detection system for blind and visually impaired persons’ assistance navigation. Comput. Electr. Eng. 2023, 108, 108714. [Google Scholar] [CrossRef]

- Gharghan, S.K.; Al-Kafaji, R.D.; Mahdi, S.Q.; Zubaidi, S.L.; Ridha, H.M. Indoor Localization for the Blind Based on the Fusion of a Metaheuristic Algorithm with a Neural Network Using Energy-Efficient WSN. Arab. J. Sci. Eng. 2023, 48, 6025–6052. [Google Scholar] [CrossRef]

- Ali, Z.A. Design and evaluation of two obstacle detection devices for visually impaired people. J. Eng. Res. 2023, 11, 100–105. [Google Scholar] [CrossRef]

- Singh, B.; Ekvitayavetchanuku, P.; Shah, B.; Sirohi, N.; Pundhir, P. IoT-Based Shoe for Enhanced Mobility and Safety of Visually Impaired Individuals. EAI Endorsed Trans. Internet Things 2024, 10, 1–19. [Google Scholar] [CrossRef]

- Wang, J.; Wang, S.; Zhang, Y. Artificial intelligence for visually impaired. Displays 2023, 77, 102391. [Google Scholar] [CrossRef]

- Nagarajan, A.; Gopinath, M.P. Hybrid Optimization-Enabled Deep Learning for Indoor Object Detection and Distance Estimation to Assist Visually Impaired Persons. Adv. Eng. Softw. 2023, 176, 103362. [Google Scholar] [CrossRef]

- Suman, S.; Mishra, S.; Sahoo, K.S.; Nayyar, A. Vision Navigator: A Smart and Intelligent Obstacle Recognition Model for Visually Impaired Users. Mob. Inf. Syst. 2022, 2022, 9715891. [Google Scholar] [CrossRef]

- Mohd Romlay, M.R.; Mohd Ibrahim, A.; Toha, S.F.; De Wilde, P.; Venkat, I.; Ahmad, M.S. Obstacle avoidance for a robotic navigation aid using Fuzzy Logic Controller-Optimal Reciprocal Collision Avoidance (FLC-ORCA). Neural Comput. Appl. 2023, 35, 22405–22429. [Google Scholar] [CrossRef]

- Holguín, A.I.H.; Méndez-González, L.C.; Rodríguez-Picón, L.A.; Olguin, I.J.C.P.; Carreón, A.E.Q.; Anaya, L.G.G. Assistive Device for the Visually Impaired Based on Computer Vision. In Innovation and Competitiveness in Industry 4.0 Based on Intelligent Systems; Méndez-González, L.C., Rodríguez-Picón, L.A., Pérez Olguín, I.J.C., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 71–97. [Google Scholar] [CrossRef]

- Islam, R.B.; Akhter, S.; Iqbal, F.; Rahman, M.S.U.; Khan, R. Deep learning based object detection and surrounding environment description for visually impaired people. Heliyon 2023, 9, e16924. [Google Scholar] [CrossRef] [PubMed]

- Balasubramani, S.; Mahesh Rao, E.; Abdul Azeem, S.; Venkatesh, N. Design IoT-Based Blind Stick for Visually Disabled Persons. In Proceedings of the International Conference on Computing, Communication, Electrical and Biomedical Systems, Coimbatore, India, 25–26 March 2021; pp. 385–396. [Google Scholar]

- Dhilip Karthik, M.; Kareem, R.M.; Nisha, V.; Sajidha, S. Smart Walking Stick for Visually Impaired People. In Privacy Preservation of Genomic and Medical Data; Tyagi, A.K., Ed.; Wiley: Hoboken, NJ, USA, 2023; pp. 361–381. [Google Scholar]

- Panazan, C.-E.; Dulf, E.-H. Intelligent Cane for Assisting the Visually Impaired. Technologies 2024, 12, 75. [Google Scholar] [CrossRef]

- Li, J.; Xie, L.; Chen, Z.; Shi, L.; Chen, R.; Ren, Y.; Wang, L.; Lu, X. An AIoT-Based Assistance System for Visually Impaired People. Electronics 2023, 12, 3760. [Google Scholar] [CrossRef]

- Hashim, Y.; Abdulbaqi, A.G. Smart stick for blind people with wireless emergency notification. TELKOMNIKA (Telecommun. Comput. Electron. Control) 2024, 22, 175–181. [Google Scholar] [CrossRef]

- Sahoo, N.; Lin, H.-W.; Chang, Y.-H. Design and Implementation of a Walking Stick Aid for Visually Challenged People. Sensors 2019, 19, 130. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, H.Q.; Duong, A.H.L.; Vu, M.D.; Dinh, T.Q.; Ngo, H.T. Smart Blind Stick for Visually Impaired People; Springer International Publishing: Cham, Switzerland, 2022; pp. 145–165. [Google Scholar]

- Senthilnathan, A.; Palanivel, P.; Sowmiya, M. Intelligent stick for visually impaired persons. In Proceedings of the AIP Conference Proceedings, Coimbatore, India, 24–25 March 2022; p. 060016. [Google Scholar] [CrossRef]

- Khan, I.; Khusro, S.; Ullah, I. Identifying the walking patterns of visually impaired people by extending white cane with smartphone sensors. Multimed. Tools Appl. 2023, 82, 27005–27025. [Google Scholar] [CrossRef]

- Thi Pham, L.T.; Phuong, L.G.; Tam Le, Q.; Thanh Nguyen, H. Smart Blind Stick Integrated with Ultrasonic Sensors and Communication Technologies for Visually Impaired People. In Deep Learning and Other Soft Computing Techniques: Biomedical and Related Applications; Phuong, N.H., Kreinovich, V., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 1097, pp. 121–134. [Google Scholar]

- Bazi, Y.; Alhichri, H.; Alajlan, N.; Melgani, F. Scene Description for Visually Impaired People with Multi-Label Convolutional SVM Networks. Appl. Sci. 2019, 9, 5062.

- Gensytskyy, O.; Nandi, P.; Otis, M.J.D.; Tabi, C.E.; Ayena, J.C. Soil friction coefficient estimation using CNN included in an assistive system for walking in urban areas. J. Ambient Intell. Humaniz. Comput. 2023, 14, 14291–14307.

- Chopda, R.; Khan, A.; Goenka, A.; Dhere, D.; Gupta, S. An Intelligent Voice Assistant Engineered to Assist the Visually Impaired. In Proceedings of the Intelligent Computing and Networking, Mumbai, India, 25–26 February 2022; Lecture Notes in Networks and Systems; pp. 143–155.

- Ben Atitallah, A.; Said, Y.; Ben Atitallah, M.A.; Albekairi, M.; Kaaniche, K.; Boubaker, S. An effective obstacle detection system using deep learning advantages to aid blind and visually impaired navigation. Ain Shams Eng. J. 2024, 15, 102387.

- Ma, Y.; Shi, Y.; Zhang, M.; Li, W.; Ma, C.; Guo, Y. Design and Implementation of an Intelligent Assistive Cane for Visually Impaired People Based on an Edge-Cloud Collaboration Scheme. Electronics 2022, 11, 2266.

- Mahmood, M.F.; Mohammed, S.L.; Gharghan, S.K. Energy harvesting-based vibration sensor for medical electromyography device. Int. J. Electr. Electron. Eng. Telecommun. 2020, 9, 364–372.

- Kulurkar, P.; Kumar Dixit, C.; Bharathi, V.; Monikavishnuvarthini, A.; Dhakne, A.; Preethi, P. AI based elderly fall prediction system using wearable sensors: A smart home-care technology with IOT. Meas. Sens. 2023, 25, 100614.

- Jumaah, H.J.; Kalantar, B.; Halin, A.A.; Mansor, S.; Ueda, N.; Jumaah, S.J. Development of UAV-Based PM2.5 Monitoring System. Drones 2021, 5, 60.

- Ahmed, N.; Gharghan, S.K.; Mutlag, A.H. IoT-based child tracking using RFID and GPS. Int. J. Comput. Appl. 2023, 45, 367–378.

- Fakhrulddin, S.S.; Gharghan, S.K.; Al-Naji, A.; Chahl, J. An Advanced First Aid System Based on an Unmanned Aerial Vehicles and a Wireless Body Area Sensor Network for Elderly Persons in Outdoor Environments. Sensors 2019, 19, 2955.

- Fakhrulddin, S.S.; Gharghan, S.K. An Autonomous Wireless Health Monitoring System Based on Heartbeat and Accelerometer Sensors. J. Sens. Actuator Netw. 2019, 8, 39.

- Al-Naji, A.; Al-Askery, A.J.; Gharghan, S.K.; Chahl, J. A System for Monitoring Breathing Activity Using an Ultrasonic Radar Detection with Low Power Consumption. J. Sens. Actuator Netw. 2019, 8, 32.

- Khan, M.A.; Paul, P.; Rashid, M.; Hossain, M.; Ahad, M.A.R. An AI-based visual aid with integrated reading assistant for the completely blind. IEEE Trans. Hum. Mach. Syst. 2020, 50, 507–517.

- Zakaria, M.I.; Jabbar, W.A.; Sulaiman, N. Development of a smart sensing unit for LoRaWAN-based IoT flood monitoring and warning system in catchment areas. Internet Things Cyber-Phys. Syst. 2023, 3, 249–261.

- Sharma, A.K.; Singh, V.; Goyal, A.; Oza, A.D.; Bhole, K.S.; Kumar, M. Experimental analysis of Inconel 625 alloy to enhance the dimensional accuracy with vibration assisted micro-EDM. Int. J. Interact. Des. Manuf. 2023, 1–15.

- Gharghan, S.K.; Nordin, R.; Ismail, M. Energy Efficiency of Ultra-Low-Power Bicycle Wireless Sensor Networks Based on a Combination of Power Reduction Techniques. J. Sens. 2016, 2016, 7314207.

- Mahmood, M.F.; Gharghan, S.K.; Mohammed, S.L.; Al-Naji, A.; Chahl, J. Design of Powering Wireless Medical Sensor Based on Spiral-Spider Coils. Designs 2021, 5, 59.

- Mahdi, S.Q.; Gharghan, S.K.; Hasan, M.A. FPGA-Based neural network for accurate distance estimation of elderly falls using WSN in an indoor environment. Measurement 2021, 167, 108276.

- Prabhakar, A.J.; Prabhu, S.; Agrawal, A.; Banerjee, S.; Joshua, A.M.; Kamat, Y.D.; Nath, G.; Sengupta, S. Use of Machine Learning for Early Detection of Knee Osteoarthritis and Quantifying Effectiveness of Treatment Using Force Platform. J. Sens. Actuator Netw. 2022, 11, 48.

- Kaseris, M.; Kostavelis, I.; Malassiotis, S. A Comprehensive Survey on Deep Learning Methods in Human Activity Recognition. Mach. Learn. Knowl. Extr. 2024, 6, 842–876.

- Anarbekova, G.; Ruiz, L.G.B.; Akanova, A.; Sharipova, S.; Ospanova, N. Fine-Tuning Artificial Neural Networks to Predict Pest Numbers in Grain Crops: A Case Study in Kazakhstan. Mach. Learn. Knowl. Extr. 2024, 6, 1154–1169.

- Runsewe, I.; Latifi, M.; Ahsan, M.; Haider, J. Machine Learning for Predicting Key Factors to Identify Misinformation in Football Transfer News. Computers 2024, 13, 127.

- Onur, F.; Gönen, S.; Barışkan, M.A.; Kubat, C.; Tunay, M.; Yılmaz, E.N. Machine learning-based identification of cybersecurity threats affecting autonomous vehicle systems. Comput. Ind. Eng. 2024, 190, 110088.

- Jingning, L. Speech recognition based on mobile sensor networks application in English education intelligent assisted learning system. Meas. Sens. 2024, 32, 101084.

- Mutemi, A.; Bacao, F. E-Commerce Fraud Detection Based on Machine Learning Techniques: Systematic Literature Review. Big Data Min. Anal. 2024, 7, 419–444.

- Makulavičius, M.; Petkevičius, S.; Rožėnė, J.; Dzedzickis, A.; Bučinskas, V. Industrial Robots in Mechanical Machining: Perspectives and Limitations. Robotics 2023, 12, 160.

- Alslaity, A.; Orji, R. Machine learning techniques for emotion detection and sentiment analysis: Current state, challenges, and future directions. Behav. Inf. Technol. 2024, 43, 139–164.

- Gharghan, S.K.; Hashim, H.A. A comprehensive review of elderly fall detection using wireless communication and artificial intelligence techniques. Measurement 2024, 226, 114186.

- Chicco, D.; Warrens, M.J.; Jurman, G. The Matthews correlation coefficient (MCC) is more informative than Cohen’s Kappa and Brier score in binary classification assessment. IEEE Access 2021, 9, 78368–78381.

- Sannino, G.; De Falco, I.; De Pietro, G. Non-Invasive Risk Stratification of Hypertension: A Systematic Comparison of Machine Learning Algorithms. J. Sens. Actuator Netw. 2020, 9, 34.

- Luthuli, M.B.; Malele, V.; Owolawi, P.A. Smart Walk: A Smart Stick for the Visually Impaired. In Proceedings of the International Conference on Intelligent and Innovative Computing Applications, Balaclava, Mauritius, 8–9 December 2022; pp. 131–136.

- Dhou, S.; Alnabulsi, A.; Al-Ali, A.-R.; Arshi, M.; Darwish, F.; Almaazmi, S.; Alameeri, R. An IoT machine learning-based mobile sensors unit for visually impaired people. Sensors 2022, 22, 5202.

- Kumar, N.; Jain, A. A Deep Learning Based Model to Assist Blind People in Their Navigation. J. Inf. Technol. Educ. Innov. Pract. 2022, 21, 95–114.

- Yadav, D.K.; Mookherji, S.; Gomes, J.; Patil, S. Intelligent Navigation System for the Visually Impaired—A Deep Learning Approach. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 652–659.

- Joshi, R.C.; Yadav, S.; Dutta, M.K.; Travieso-Gonzalez, C.M. Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People. Entropy 2020, 22, 941.

- Yang, Z.; Gao, M.; Choi, J. Smart walking cane based on triboelectric nanogenerators for assisting the visually impaired. Nano Energy 2024, 124, 109485.

- Bauer, Z.; Dominguez, A.; Cruz, E.; Gomez-Donoso, F.; Orts-Escolano, S.; Cazorla, M. Enhancing perception for the visually impaired with deep learning techniques and low-cost wearable sensors. Pattern Recognit. Lett. 2020, 137, 27–36.

- Busaeed, S.; Mehmood, R.; Katib, I.; Corchado, J.M. LidSonic for visually impaired: Green machine learning-based assistive smart glasses with smart app and Arduino. Electronics 2022, 11, 1076.

- Kuriakose, B.; Shrestha, R.; Sandnes, F.E. DeepNAVI: A deep learning based smartphone navigation assistant for people with visual impairments. Expert Syst. Appl. 2023, 212, 118720.

- Pydala, B.; Kumar, T.P.; Baseer, K.K. VisiSense: A Comprehensive IOT-based Assistive Technology System for Enhanced Navigation Support for the Visually Impaired. Scalable Comput. Pract. Exp. 2024, 25, 1134–1151.

- Kaushal, R.K.; Kumar, T.P.; Sharath, N.; Parikh, S.; Natrayan, L.; Patil, H. Navigating Independence: The Smart Walking Stick for the Visually Impaired. In Proceedings of the 2024 5th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Lalitpur, Nepal, 18–19 January 2024; pp. 103–108.

- Kumar, A.; Surya, G.; Sathyadurga, V. Echo Guidance: Voice-Activated Application for Blind with Smart Assistive Stick Using Machine Learning and IoT. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).