Depth Imaging-Based Framework for Efficient Phenotypic Recognition in Tomato Fruit

Abstract

1. Introduction

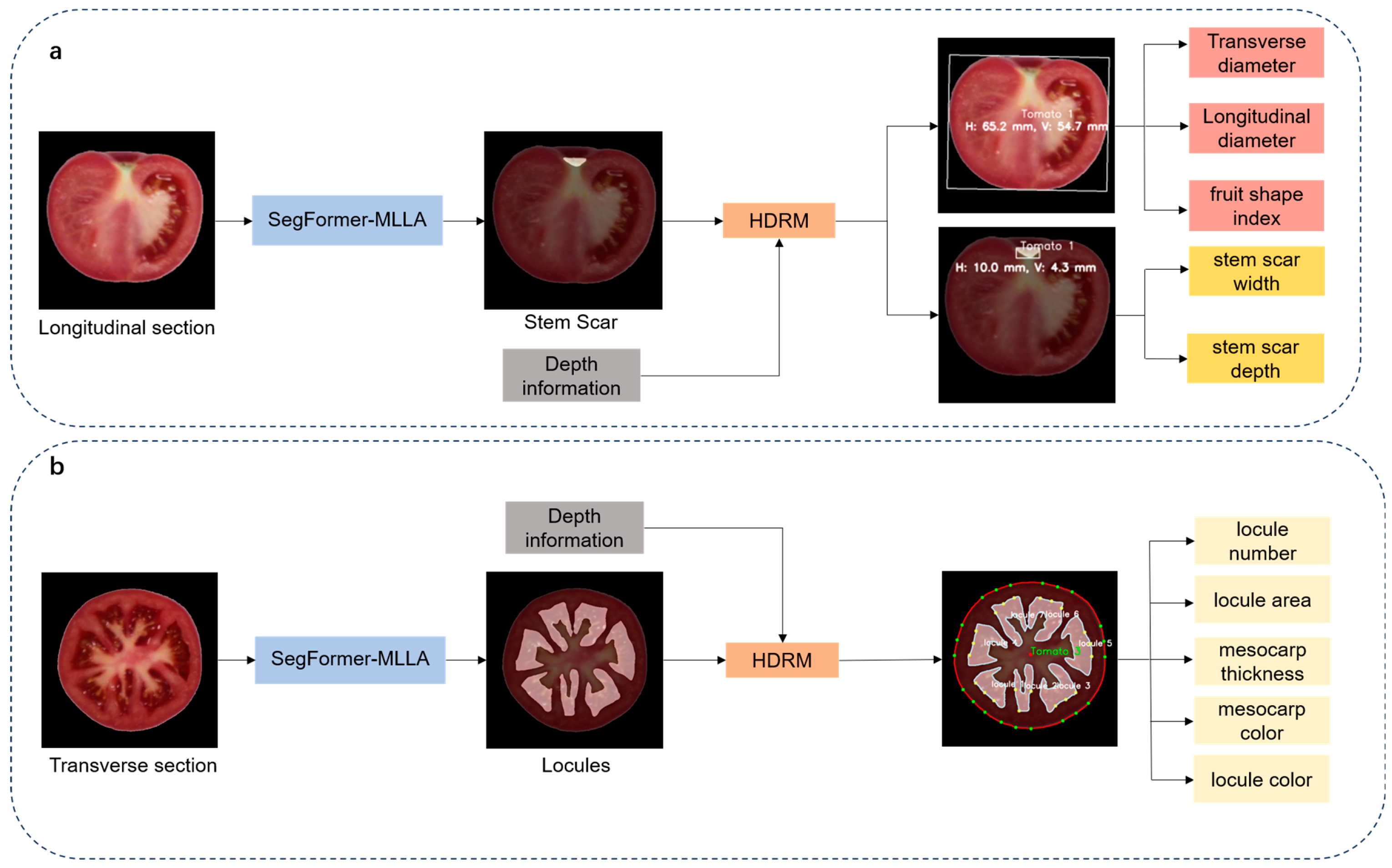

- The framework analyzes 12 phenotypic traits: fruit transverse and longitudinal diameters; shape index; stem scar structure, depth, and width; locule structure, number, and area; mesocarp thickness; mesocarp color; and locule color.

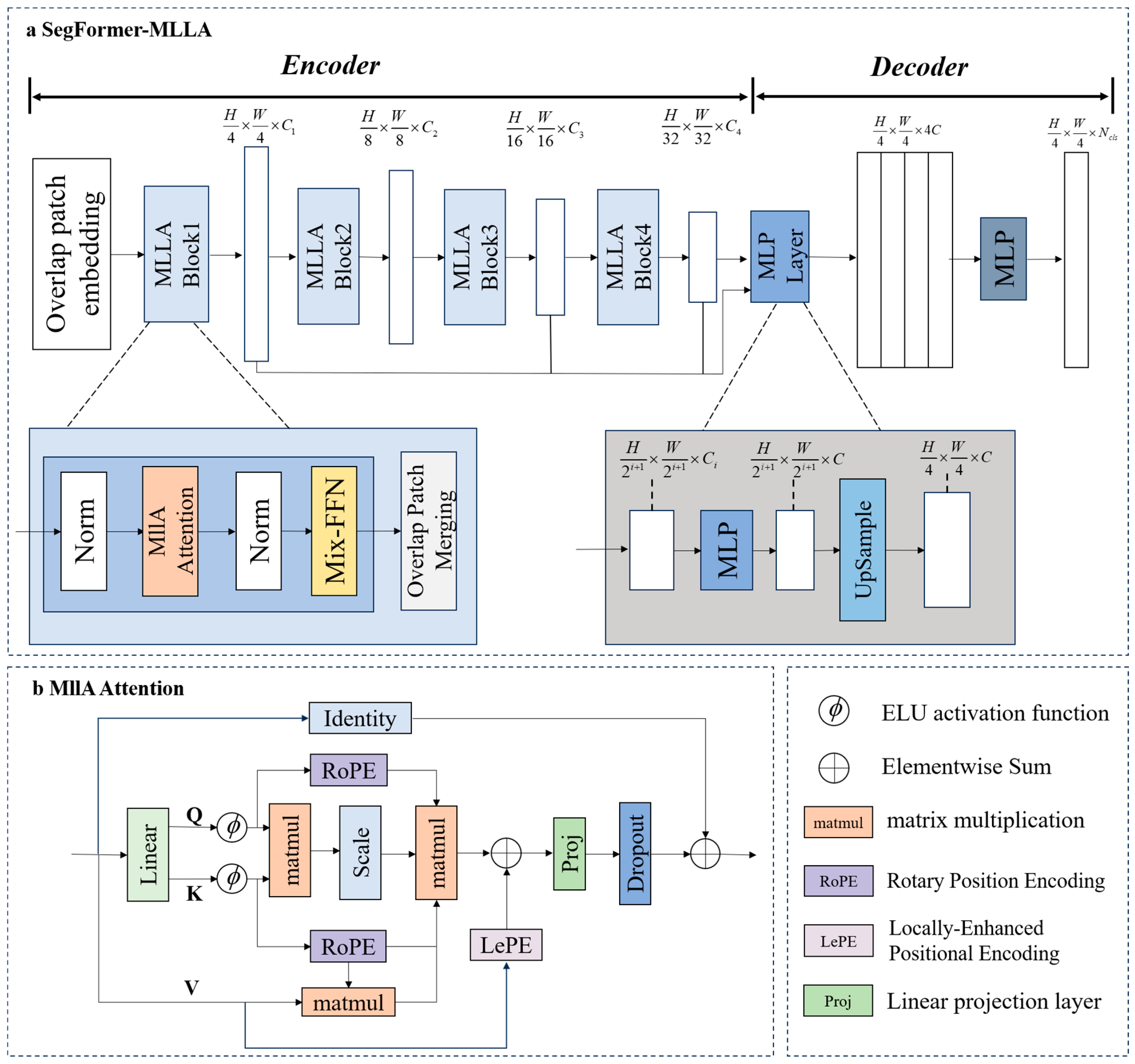

- Building on the SegFormer architecture [35], we introduced the Mamba-like linear attention (MLLA) mechanism [36] to develop SegFormer-MLLA, a model for segmenting tomato fruit phenotypic traits. The model improves computational efficiency while maintaining high segmentation accuracy, enabling precise segmentation of the locule and stem scar structures in tomato fruits.

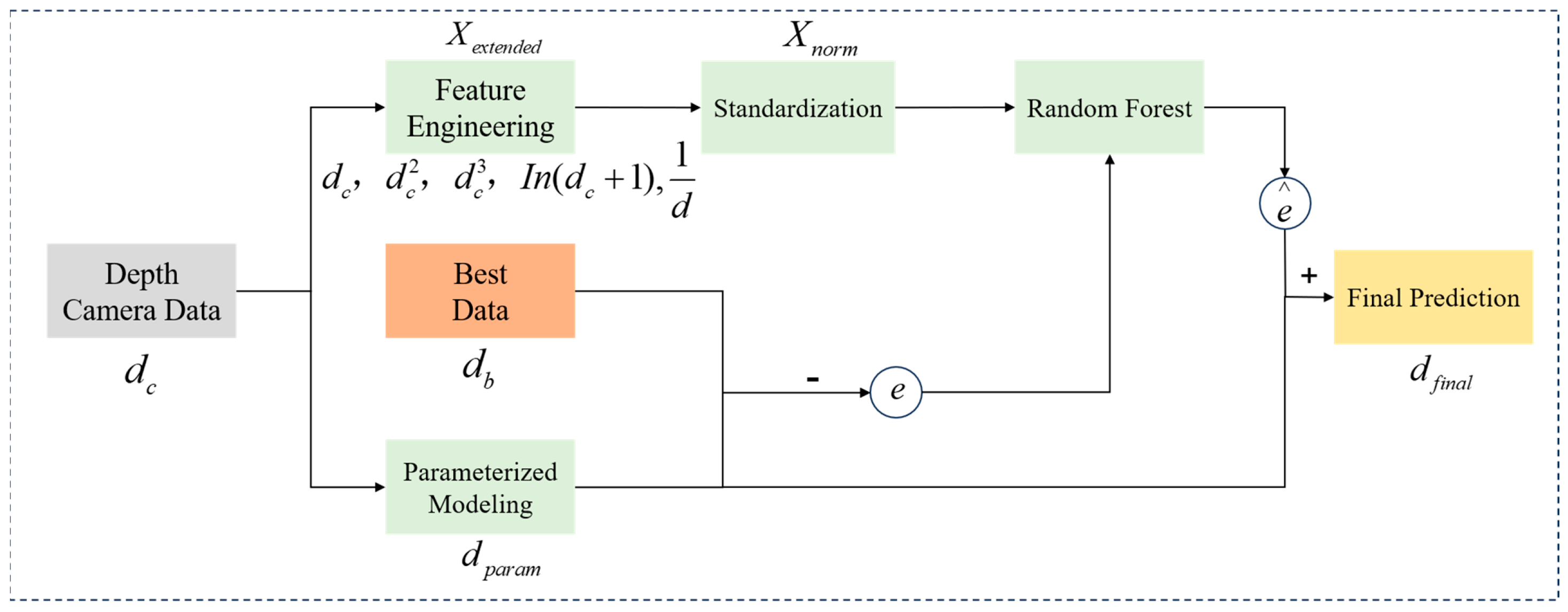

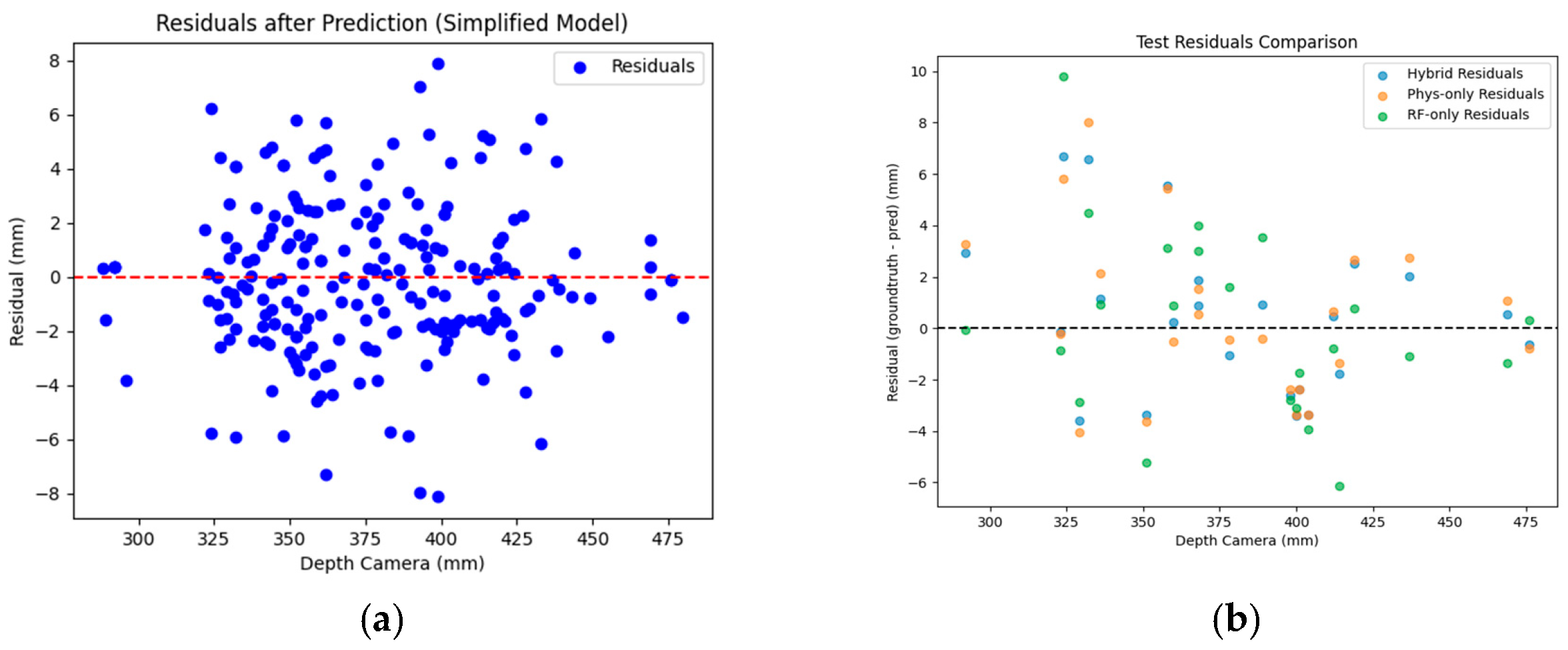

- Trait dimensions were measured by integrating depth information. To address depth errors caused by optical interference such as specular reflections, a Hybrid Depth Regression Model (HDRM) was designed. The model estimates the optimal depth distance for each tomato fruit image by fitting a parametric error model, calculating the residuals, and applying random forest-based residual correction.

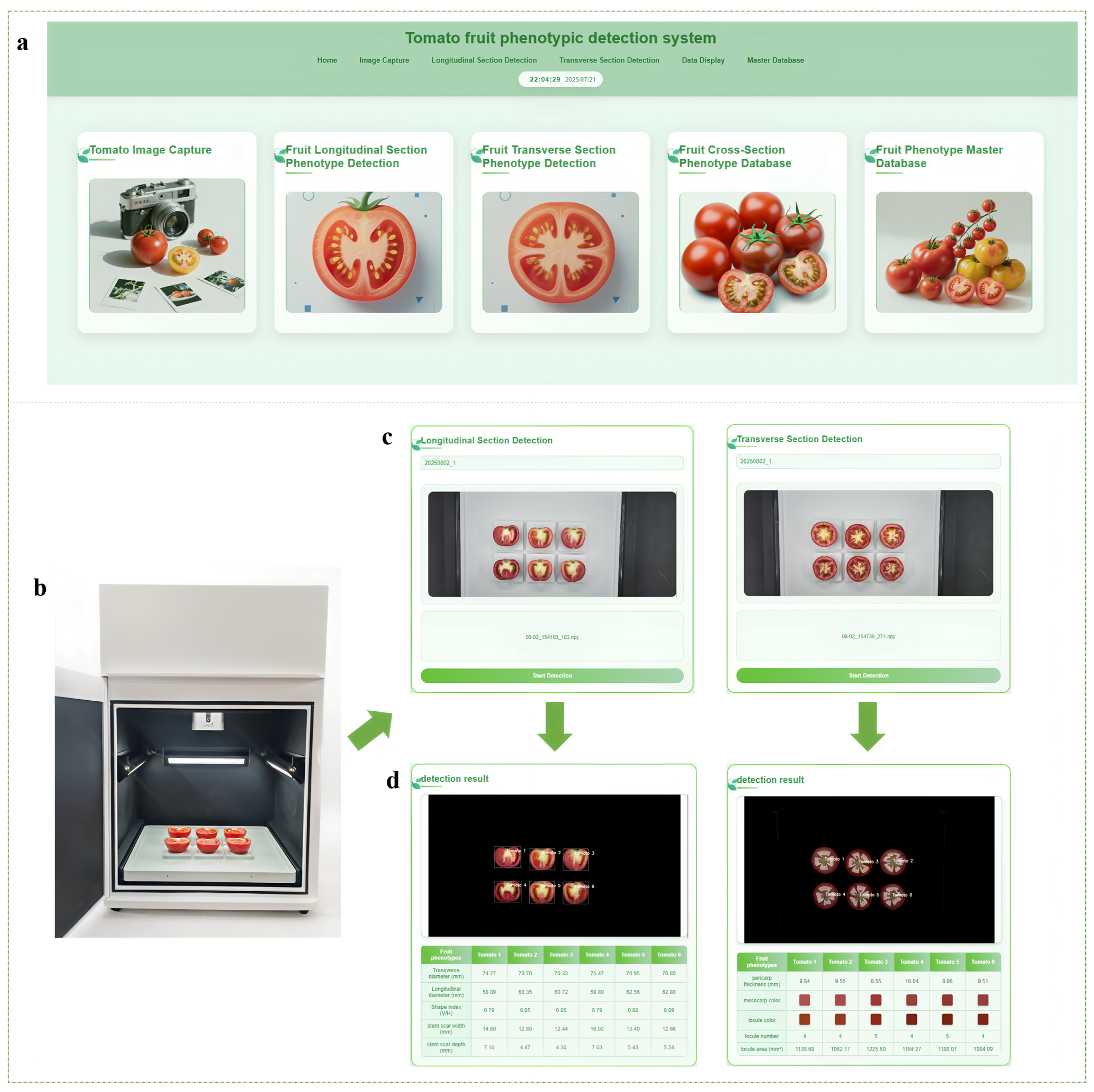

- We designed an intelligent detection system for tomato fruit phenomics analysis, which integrates both software and hardware components. During the detection process, each sample was assigned a corresponding label to establish a mapping with its phenotypic data, enabling efficient and accurate detection and data storage of tomato fruit phenotypic traits.

2. Materials and Methods

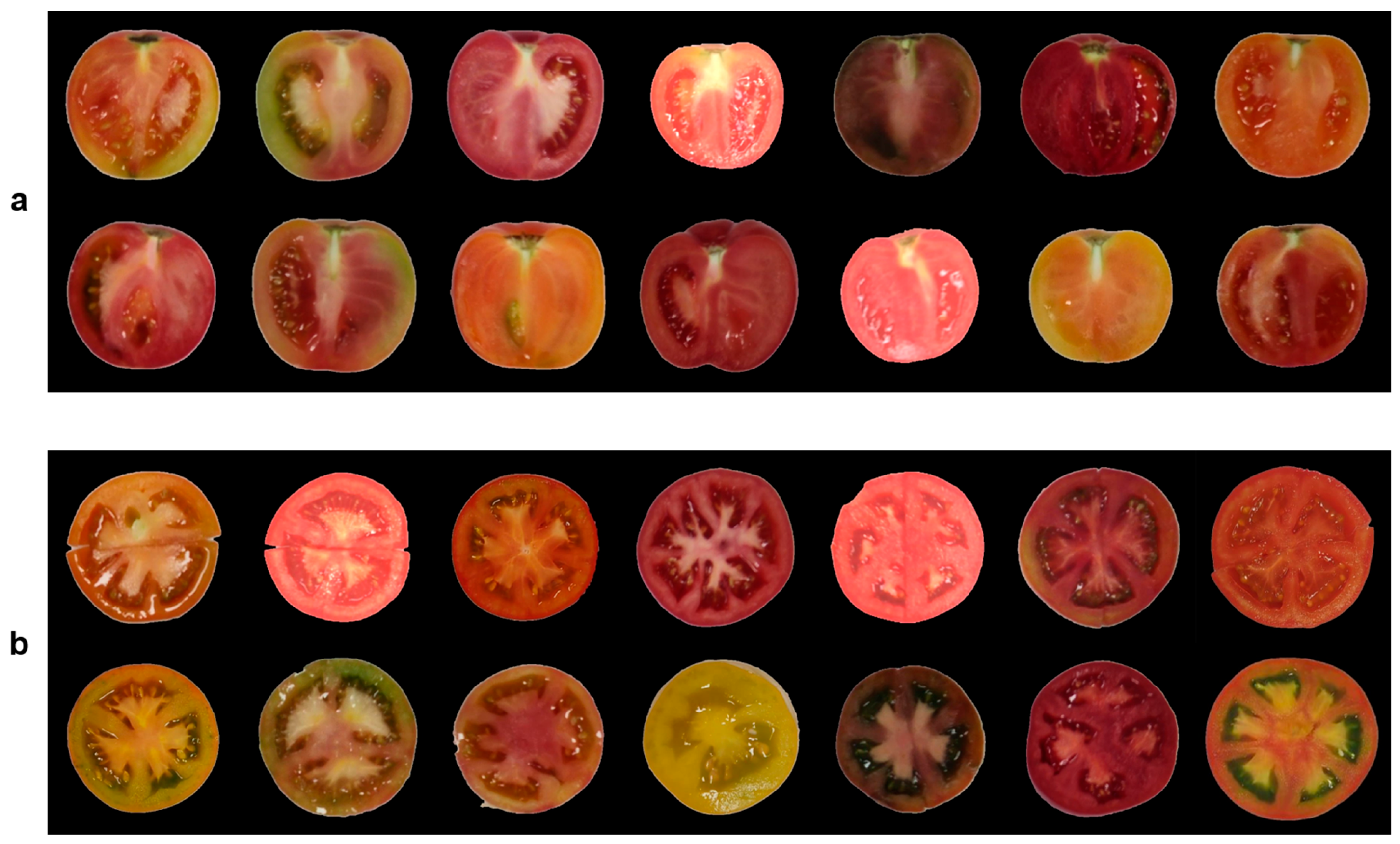

2.1. Image Acquisition

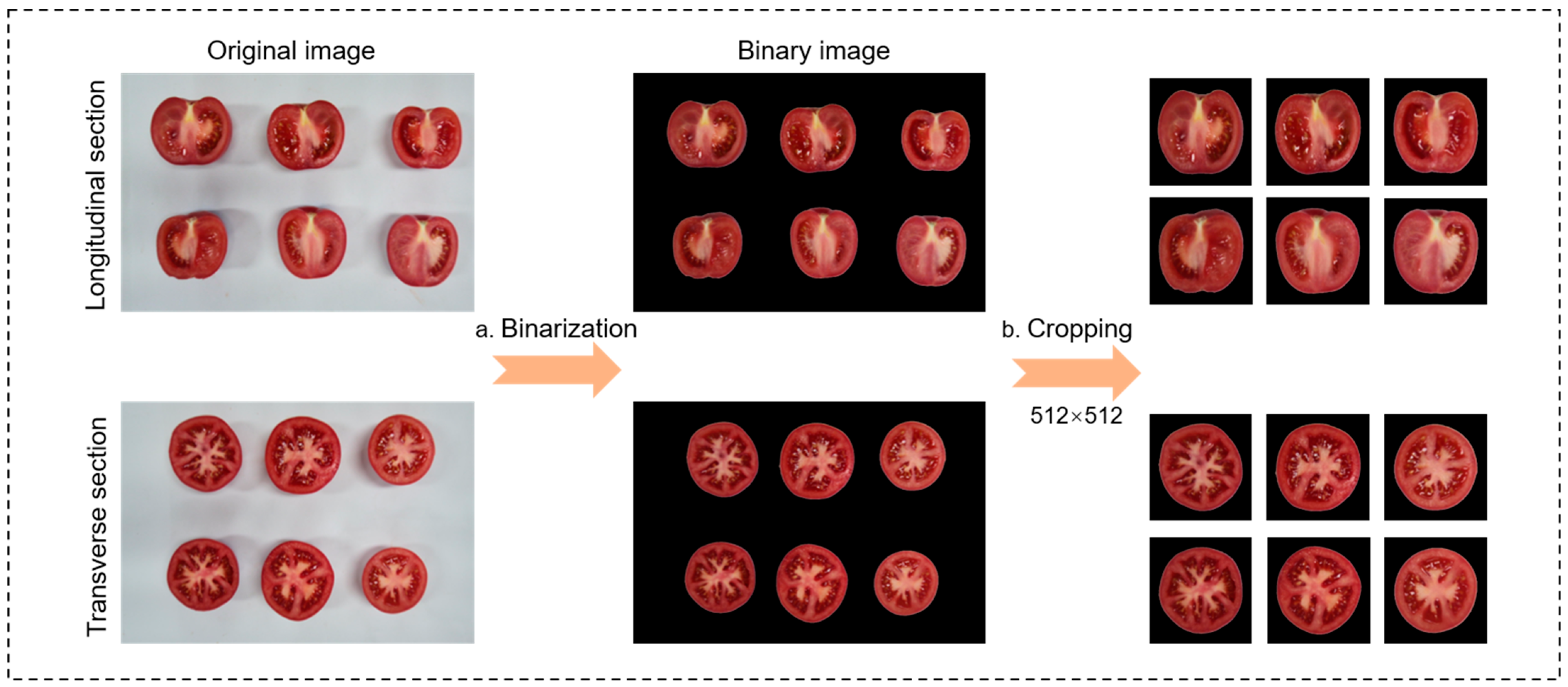

2.2. Image Preprocessing

1. Binarization module

2. Image cropping module

3. Data annotation module

4. Data partitioning module

2.3. SegFormer-MLLA Model

2.4. Tomato Fruit Phenotypic Size Transformation

- Parameterized Modeling for Error Correction. A nonlinear parametric model was established, and its optimal parameters were obtained by least-squares fitting of the objective function. Let $d_c$ denote the depth value measured by the camera, $d_b$ the optimal depth value obtained through multiple rounds of tuning and calibration, and $d_{param}$ the initial predicted depth value given by Equation (10), where α, β, ϕ, and γ are parameters derived from data fitting.

- Residual Calculation. The residual error $e$, i.e., the deviation of the parametric model's prediction $d_{param}$ from the optimal depth $d_b$, was calculated and passed to a random forest regression model for further correction.

- Feature Engineering. To further capture the nonlinear relationships within the residuals, an extended feature set $X_{extended}$ was constructed, comprising linear, quadratic, cubic, logarithmic, and reciprocal terms.

- Standardization. To prevent feature scale discrepancies from affecting model training, the feature set was standardized to obtain $X_{norm}$ by subtracting the feature mean μ and dividing by the feature standard deviation σ, ensuring consistent input to the random forest model.

- Random Forest Regressor for Residual Correction (RF). A random forest (RF) model was employed to predict the residual correction value. The RF model combines the outputs of multiple decision trees $T_k$ to produce its prediction.

- Final Corrected Model Depth. The final corrected depth is the sum of the parametric model prediction and the random forest residual prediction.
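Read together, the six steps suggest the chain of equations below. This is a hedged reconstruction from the prose only, not the authors' typeset equations. The functional form $f$ of the parametric model in Equation (10) is not recoverable here and is left abstract. The symbols $N$, $K$, $\hat{e}$, and $d_{final}$, the use of $d_c$ as the base feature, and the averaging of tree outputs (the usual convention for random forest regression) are assumptions.

$$
\begin{aligned}
(\alpha, \beta, \phi, \gamma) &= \arg\min \sum_{i=1}^{N} \bigl(d_{b,i} - f(d_{c,i};\ \alpha, \beta, \phi, \gamma)\bigr)^2 \\
d_{param} &= f(d_c;\ \alpha, \beta, \phi, \gamma) \\
e &= d_b - d_{param} \\
X_{extended} &= \bigl[\, d_c,\ d_c^{2},\ d_c^{3},\ \ln d_c,\ 1/d_c \,\bigr] \\
X_{norm} &= \frac{X_{extended} - \mu}{\sigma} \\
\hat{e} &= \frac{1}{K} \sum_{k=1}^{K} T_k(X_{norm}) \\
d_{final} &= d_{param} + \hat{e}
\end{aligned}
$$

Under this reading, the random forest is trained to predict $e$ from $X_{norm}$, so that $d_{final}$ approximates $d_b$ wherever the parametric model alone leaves a systematic error.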

2.5. Tomato Fruit Phenotype Recognition Process

- To extract morphological features from tomato sections, this study used image processing and threshold segmentation algorithms to generate a binary mask for each tomato fruit. A minimum bounding rectangle was fitted to each tomato fruit in the image, with its height defined as the longitudinal diameter and its width as the transverse diameter. The fruit shape index was calculated as the ratio of the longitudinal diameter to the transverse diameter.

- We used the SegFormer-MLLA model to segment the stem scar boundary efficiently and precisely. Based on the segmentation results, a minimum bounding rectangle was fitted to the stem scar, and the rectangle's dimensions gave the stem scar width and depth.

- Using the obtained data, we integrated RGB-D information from the depth camera and employed the HDRM model to optimize depth values, thereby converting pixel distances into physical dimensions and obtaining the actual values of the tomato fruit’s transverse diameter, longitudinal diameter, stem scar depth, and stem scar width.

- Tomato fruit transverse section images were processed at 512 × 512 resolution. The SegFormer-MLLA model was applied to segment the locule structure for quantitative analysis of locule number and area. To ensure systematic and traceable analysis, a numbering system was designed for tomato fruits and their internal locules, assigning unique identifiers to establish correspondence.

- Three rays were drawn from the centroid of each tomato fruit toward each locule. Experimental results showed that offsetting the two side rays by 12° from the central ray provided optimal performance. The minimum Euclidean distance between the intersection points of rays with the locule contour and the tomato outer contour was calculated, and their average was used as an approximate estimate of mesocarp thickness.

- Using the depth information provided by the depth image and the HDRM model, pixel-based measurements of mesocarp thickness and locule area were converted into actual physical dimensions.

- Further color recognition analysis was conducted. By averaging the RGB values of each tomato locule, the representative color features of the locule were obtained. Additionally, based on a tomato flesh mask (generated by subtracting the locule mask from the overall tomato mask), a morphological erosion algorithm was employed to extract the pericarp region near the tomato’s outer edge, and its color features were identified.
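The geometric steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. All function names are hypothetical. The bounding rectangle is axis-aligned (a rotated minimum-area rectangle would be needed for tilted fruit). The pixel-to-millimetre conversion assumes a pinhole camera with a known focal length in pixels, which the text does not specify. Ray intersections are found by marching over binary masks rather than by intersecting extracted contours.

```python
import math

def bounding_box_dims(mask):
    """(width_px, height_px) of the axis-aligned box around foreground pixels.
    Assumes the mask holds at least one foreground pixel."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return cols[-1] - cols[0] + 1, rows[-1] - rows[0] + 1

def shape_index(longitudinal, transverse):
    """Ratio of longitudinal to transverse diameter."""
    return longitudinal / transverse

def px_to_mm(length_px, depth_mm, focal_px):
    """Pinhole-camera assumption: physical size = pixels * depth / focal length."""
    return length_px * depth_mm / focal_px

def centroid(mask):
    """Mean (x, y) position of the foreground pixels."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    return sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts)

def last_hit(mask, origin, angle, step=0.25):
    """Largest distance along the ray at which the mask is still foreground."""
    h, w = len(mask), len(mask[0])
    r, last = 0.0, None
    while True:
        x = math.floor(origin[0] + r * math.cos(angle) + 0.5)
        y = math.floor(origin[1] + r * math.sin(angle) + 0.5)
        if not (0 <= x < w and 0 <= y < h):
            return last  # ray left the image
        if mask[y][x]:
            last = r
        r += step

def mesocarp_thickness_px(fruit, locule, side_deg=12.0):
    """Average gap between the locule's outer edge and the fruit's outer edge,
    measured along a central ray and two side rays offset by side_deg."""
    c = centroid(fruit)
    lc = centroid(locule)
    base = math.atan2(lc[1] - c[1], lc[0] - c[0])
    gaps = []
    for offset in (-side_deg, 0.0, side_deg):
        angle = base + math.radians(offset)
        inner = last_hit(locule, c, angle)
        outer = last_hit(fruit, c, angle)
        if inner is not None and outer is not None:
            gaps.append(outer - inner)
    if not gaps:
        raise ValueError("no ray crossed both the locule and the fruit")
    return sum(gaps) / len(gaps)

def disc(size, cx, cy, r):
    """Synthetic circular mask used only for the demonstration below."""
    return [[(x - cx) ** 2 + (y - cy) ** 2 <= r * r for x in range(size)]
            for y in range(size)]

# Synthetic example: a round fruit with one off-centre locule.
fruit = disc(101, 50, 50, 40)
locule = disc(101, 70, 50, 8)
width_px, height_px = bounding_box_dims(fruit)
thickness_px = mesocarp_thickness_px(fruit, locule)
print(width_px, height_px, shape_index(height_px, width_px), thickness_px)
# The depth (500 mm) and focal length (1000 px) are made-up demo values.
print(px_to_mm(thickness_px, depth_mm=500.0, focal_px=1000.0))
```

In a full pipeline the depth passed to `px_to_mm` would be the HDRM-corrected value rather than the raw camera reading, and the masks would come from thresholding and the SegFormer-MLLA outputs.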

3. Results

3.1. Experimental Environment

3.2. Evaluation Metrics

3.3. Evaluation of Segmentation Results

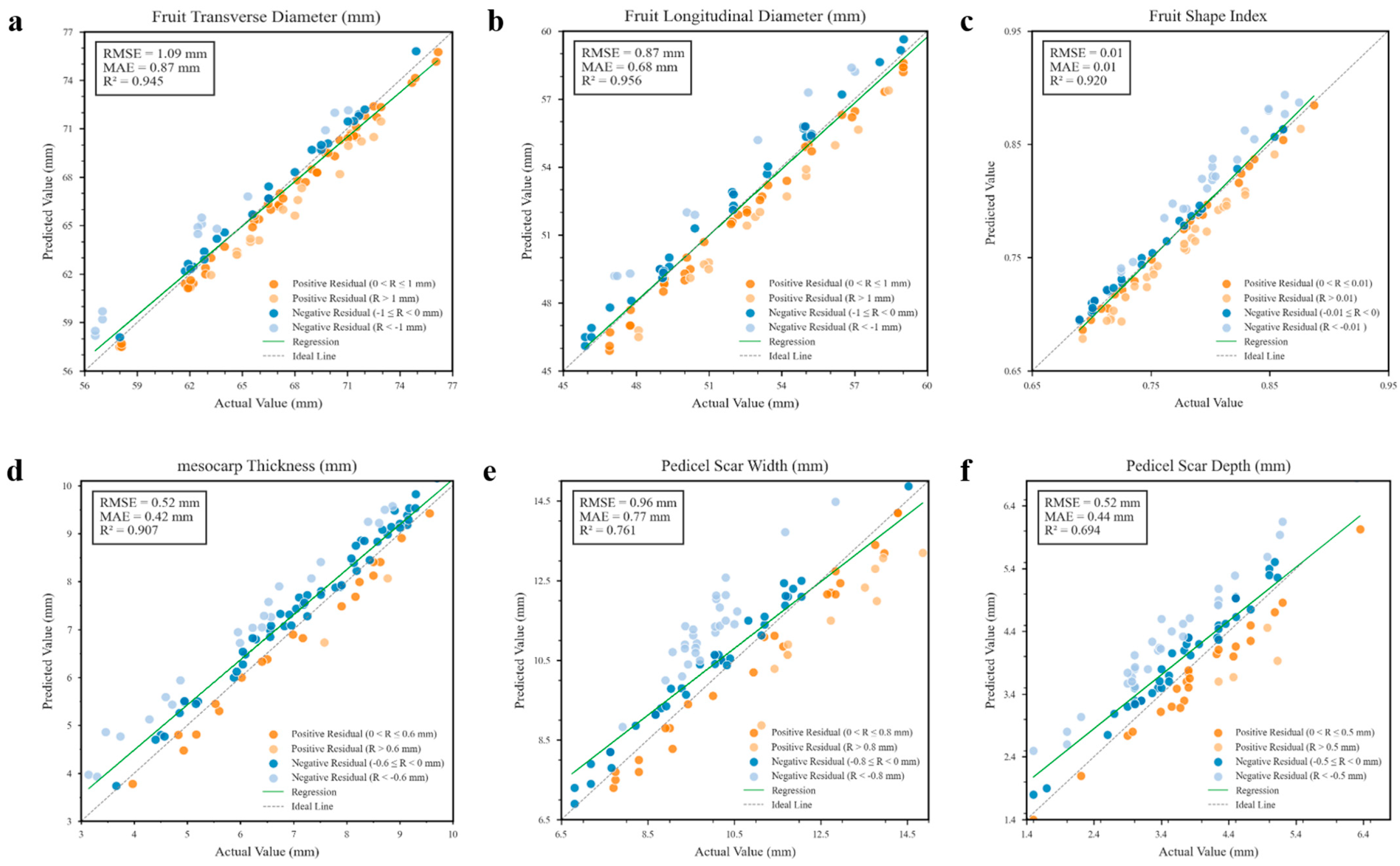

3.4. Tomato Fruit Size Phenotypic Information Extraction

3.5. Ablation Experiment

3.6. Device Detection and Software Development

4. Discussion

4.1. The Feasibility and Practical Significance of SegFormer-MLLA in Tomato Fruit Trait Analysis

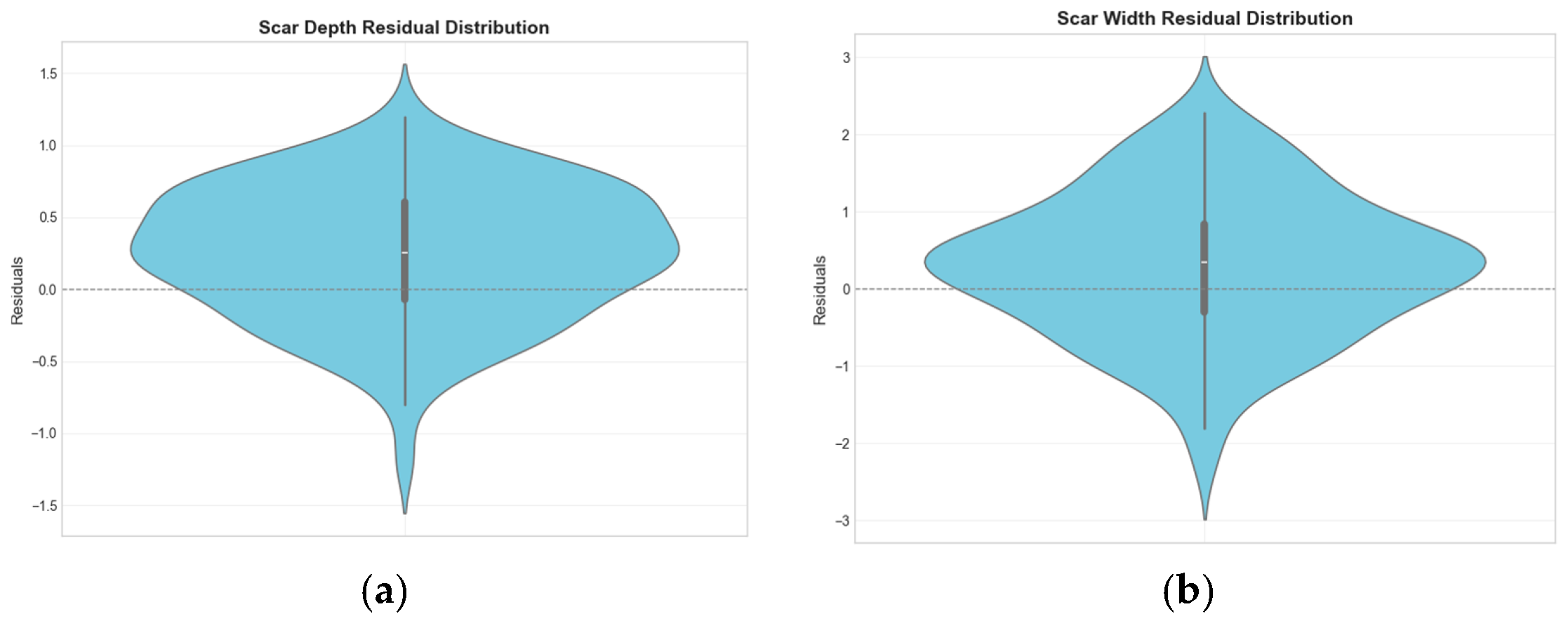

4.2. Effect of HDRM Model on Size Detection

4.3. Phenotypic Research of Tomato Stem Scars

4.4. Benefits of the Automated Phenotyping System

4.5. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kim, M.; Nguyen, T.T.P.; Ahn, J.-H.; Kim, G.-J.; Sim, S.-C. Genome-wide association study identifies QTL for eight fruit traits in cultivated tomato (Solanum lycopersicum L.). Hortic. Res. 2021, 8, 203.

2. Perveen, R.; Suleria, H.A.R.; Anjum, F.M.; Butt, M.S.; Pasha, I.; Ahmad, S.J. Tomato (Solanum lycopersicum) carotenoids and lycopenes chemistry; metabolism, absorption, nutrition, and allied health claims—A comprehensive review. Crit. Rev. Food Sci. Nutr. 2015, 55, 919–929.

3. Lin, T.; Zhu, G.; Zhang, J.; Xu, X.; Yu, Q.; Zheng, Z.; Zhang, Z.; Lun, Y.; Li, S.; Wang, X.; et al. Genomic analyses provide insights into the history of tomato breeding. Nat. Genet. 2014, 46, 1220–1226.

4. Zhu, G.; Wang, S.; Huang, Z.; Zhang, S.; Liao, Q.; Zhang, C.; Lin, T.; Qin, M.; Peng, M.; Yang, C.; et al. Rewiring of the Fruit Metabolome in Tomato Breeding. Cell 2018, 172, 249–261.e12.

5. Mata-Nicolás, E.; Montero-Pau, J.; Gimeno-Paez, E.; Garcia-Carpintero, V.; Ziarsolo, P.; Menda, N.; Mueller, L.A.; Blanca, J.; Cañizares, J.; van der Knaap, E.; et al. Exploiting the diversity of tomato: The development of a phenotypically and genetically detailed germplasm collection. Hortic. Res. 2020, 7, 66.

6. Oltman, A.E.; Jervis, S.M.; Drake, M.A. Consumer Attitudes and Preferences for Fresh Market Tomatoes. J. Food Sci. 2014, 79, S2091–S2097.

7. Tanksley, S.D. The Genetic, Developmental, and Molecular Bases of Fruit Size and Shape Variation in Tomato. Plant Cell 2004, 16 (Suppl. S1), S181–S189.

8. Mounet, F.; Moing, A.; Garcia, V.; Petit, J.; Maucourt, M.; Deborde, C.; Bernillon, S.; Le Gall, G.; Colquhoun, I.; Defernez, M.; et al. Gene and Metabolite Regulatory Network Analysis of Early Developing Fruit Tissues Highlights New Candidate Genes for the Control of Tomato Fruit Composition and Development. Plant Physiol. 2009, 149, 1505–1528.

9. Barrero, L.S.; Tanksley, S.D. Evaluating the genetic basis of multiple-locule fruit in a broad cross section of tomato cultivars. Theor. Appl. Genet. 2004, 109, 669–679.

10. van der Knaap, E.; Chakrabarti, M.; Chu, Y.H.; Clevenger, J.P.; Illa-Berenguer, E.; Huang, Z.; Keyhaninejad, N.; Mu, Q.; Sun, L.; Wang, Y.; et al. What lies beyond the eye: The molecular mechanisms regulating tomato fruit weight and shape. Front. Plant Sci. 2014, 5, 227.

11. Yang, J.; Liu, Y.; Liang, B.; Yang, Q.; Li, X.; Chen, J.; Li, H.; Lyu, Y.; Lin, T. Genomic basis of selective breeding from the closest wild relative of large-fruited tomato. Hortic. Res. 2023, 10, uhad142.

12. Kumar, P.; Irfan, M. Green ripe fruit in tomato: Unraveling the genetic tapestry from cultivated to wild varieties. J. Exp. Bot. 2024, 75, 3203–3205.

13. Zhang, J.; Lyu, H.; Chen, J.; Cao, X.; Du, R.; Ma, L.; Wang, N.; Zhu, Z.; Rao, J.; Wang, J.; et al. Releasing a sugar brake generates sweeter tomato without yield penalty. Nature 2024, 635, 647–656.

14. Yang, T.; Ali, M.; Lin, L.; Li, P.; He, H.; Zhu, Q.; Sun, C.; Wu, N.; Zhang, X.; Huang, T.; et al. Recoloring tomato fruit by CRISPR/Cas9-mediated multiplex gene editing. Hortic. Res. 2022, 10, uhac214.

15. Mansoor, S.; Karunathilake, E.M.B.M.; Tuan, T.T.; Chung, Y.S. Genomics, phenomics, and machine learning in transforming plant research: Advancements and challenges. Hortic. Plant J. 2025, 11, 486–503.

16. Einspanier, S.; Tominello-Ramirez, C.; Hasler, M.; Barbacci, A.; Raffaele, S.; Stam, R. High-Resolution Disease Phenotyping Reveals Distinct Resistance Mechanisms of Tomato Crop Wild Relatives against Sclerotinia sclerotiorum. Plant Phenomics 2024, 6, 0214.

17. Zhou, Y.; Zhang, Z.; Bao, Z.; Li, H.; Lyu, Y.; Zan, Y.; Wu, Y.; Cheng, L.; Fang, Y.; Wu, K.; et al. Graph pangenome captures missing heritability and empowers tomato breeding. Nature 2022, 606, 527–534.

18. Gan, L.; Song, M.; Wang, X.; Yang, N.; Li, H.; Liu, X.; Li, Y. Cytokinins are involved in regulation of tomato pericarp thickness and fruit size. Hortic. Res. 2022, 9, uhab041.

19. Fanourakis, D.; Papadakis, V.M.; Machado, M.; Psyllakis, E.; Nektarios, P.A. Non-invasive leaf hydration status determination through convolutional neural networks based on multispectral images in chrysanthemum. Plant Growth Regul. 2024, 102, 485–496.

20. Jiang, X.; Wang, J.; Xie, K.; Cui, C.; Du, A.; Shi, X.; Yang, W.; Zhai, R. PlantCaFo: An efficient few-shot plant disease recognition method based on foundation models. Plant Phenomics 2025, 7, 100024.

21. Zhang, J.; Xie, J.; Zhang, F.; Gao, J.; Yang, C.; Song, C.; Rao, W.; Zhang, Y. Greenhouse tomato detection and pose classification algorithm based on improved YOLOv5. Comput. Electron. Agric. 2024, 216, 108519.

22. Zhang, W.; Liu, Y.; Chen, K.; Li, H.; Duan, Y.; Wu, W.; Shi, Y.; Guo, W. Lightweight fruit-detection algorithm for edge computing applications. Front. Plant Sci. 2021, 12, 740936.

23. Yu, C.; Shi, X.; Luo, W.; Feng, J.; Zheng, Z.; Yorozu, A.; Hu, Y.; Guo, J. MLG-YOLO: A Model for Real-Time Accurate Detection and Localization of Winter Jujube in Complex Structured Orchard Environments. Plant Phenomics 2024, 6, 0258.

24. Wan, P.; Toudeshki, A.; Tan, H.; Ehsani, R. A methodology for fresh tomato maturity detection using computer vision. Comput. Electron. Agric. 2018, 146, 43–50.

25. Ireri, D.; Belal, E.; Okinda, C.; Makange, N.; Ji, C. A computer vision system for defect discrimination and grading in tomatoes using machine learning and image processing. Artif. Intell. Agric. 2019, 2, 28–37.

26. Deng, Y.; Xi, H.; Zhou, G.; Chen, A.; Wang, Y.; Li, L.; Hu, Y. An Effective Image-Based Tomato Leaf Disease Segmentation Method Using MC-UNet. Plant Phenomics 2023, 5, 0049.

27. Kang, R.; Huang, J.; Zhou, X.; Ren, N.; Sun, S. Toward Real Scenery: A Lightweight Tomato Growth Inspection Algorithm for Leaf Disease Detection and Fruit Counting. Plant Phenomics 2024, 6, 0174.

28. Tsaniklidis, G.; Makraki, T.; Papadimitriou, D.; Nikoloudakis, N.; Taheri-Garavand, A.; Fanourakis, D. Non-Destructive Estimation of Area and Greenness in Leaf and Seedling Scales: A Case Study in Cucumber. Agronomy 2025, 15, 2294.

29. Zhu, Y.; Gu, Q.; Zhao, Y.; Wan, H.; Wang, R.; Zhang, X.; Cheng, Y. Quantitative Extraction and Evaluation of Tomato Fruit Phenotypes Based on Image Recognition. Front. Plant Sci. 2022, 13, 859290.

30. Xu, S.; Shen, J.; Wei, Y.; Li, Y.; He, Y.; Hu, H.; Feng, X. Automatic plant phenotyping analysis of Melon (Cucumis melo L.) germplasm resources using deep learning methods and computer vision. Plant Methods 2024, 20, 166.

31. Xue, W.; Ding, H.; Jin, T.; Meng, J.; Wang, S.; Liu, Z.; Ma, X.; Li, J. CucumberAI: Cucumber Fruit Morphology Identification System Based on Artificial Intelligence. Plant Phenomics 2024, 6, 0193.

32. Park, J.; Kim, H.; Tai, Y.-W.; Brown, M.S.; Kweon, I. High quality depth map upsampling for 3D-TOF cameras. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: New York, NY, USA; pp. 1623–1630.

33. Mufti, F.; Mahony, R. Statistical analysis of signal measurement in time-of-flight cameras. ISPRS J. Photogramm. Remote Sens. 2011, 66, 720–731.

34. Kang, Z.; Zhou, B.; Fei, S.; Wang, N. Predicting the greenhouse crop morphological parameters based on RGB-D Computer Vision. Smart Agric. Technol. 2025, 11, 100968.

35. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

36. Han, D.; Wang, Z.; Xia, Z.; Han, Y.; Pu, Y.; Ge, C.; Song, J.; Song, S.; Zheng, B.; Huang, G. Demystify mamba in vision: A linear attention perspective. Adv. Neural Inf. Process. Syst. 2024, 37, 127181–127203.

37. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

38. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

39. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

40. Jiang, Y.; Li, Z.; Chen, X.; Xie, H.; Cai, J. Mlla-unet: Mamba-like linear attention in an efficient u-shape model for medical image segmentation. arXiv 2024, arXiv:2410.23738.

41. He, H.; Zhang, J.; Cai, Y.; Chen, H.; Hu, X.; Gan, Z.; Wang, Y.; Wang, C.; Wu, Y.; Xie, L. Mobilemamba: Lightweight multi-receptive visual mamba network. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 4497–4507.

42. Su, J.; Ahmed, M.; Lu, Y.; Pan, S.; Bo, W.; Liu, Y. RoFormer: Enhanced transformer with Rotary Position Embedding. Neurocomputing 2024, 568, 127063.

43. Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. Cswin transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12124–12134.

44. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 9–15 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

45. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

46. Xu, J.; Xiong, Z.; Bhattacharyya, S.P. PIDNet: A real-time semantic segmentation network inspired by PID controllers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 19529–19539.

47. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–22 June 2022; pp. 11976–11986.

48. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–22 June 2022; pp. 1290–1299.

49. Assad, T.; Jabeen, A.; Roy, S.; Bhat, N.; Maqbool, N.; Yadav, A.; Aijaz, T. Using an image processing technique, correlating the lycopene and moisture content in dried tomatoes. Food Humanit. 2024, 2, 100186.

50. Cardellicchio, A.; Solimani, F.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Detection of tomato plant phenotyping traits using YOLOv5-based single stage detectors. Comput. Electron. Agric. 2023, 207, 107757.

51. Rong, J.; Zhou, H.; Zhang, F.; Yuan, T.; Wang, P. Tomato cluster detection and counting using improved YOLOv5 based on RGB-D fusion. Comput. Electron. Agric. 2023, 207, 107741.

52. Wang, X.; Liu, J.; Zhu, X. Early real-time detection algorithm of tomato diseases and pests in the natural environment. Plant Methods 2021, 17, 43.

53. Rodríguez, G.R.; Moyseenko, J.B.; Robbins, M.D.; Huarachi Morejón, N.; Francis, D.M.; van der Knaap, E. Tomato Analyzer: A Useful Software Application to Collect Accurate and Detailed Morphological and Colorimetric Data from Two-dimensional Objects. J. Vis. Exp. 2010, 37, 1856.

54. Lei, L.; Yang, Q.; Yang, L.; Shen, T.; Wang, R.; Fu, C. Deep learning implementation of image segmentation in agricultural applications: A comprehensive review. Artif. Intell. Rev. 2024, 57, 149.

55. Leide, J.; Hildebrandt, U.; Hartung, W.; Riederer, M.; Vogg, G. Abscisic acid mediates the formation of a suberized stem scar tissue in tomato fruits. New Phytol. 2012, 194, 402–415.

| Models | Stem Scar IoU (%) | Stem Scar Dice (%) | Stem Scar Precision (%) | Stem Scar Recall (%) | Parameters (M) |
|---|---|---|---|---|---|
| Unet | 74.52 | 85.32 | 84.97 | 85.26 | 29.06 |
| Deeplabv3+ | 74.78 | 85.41 | 85.73 | 85.41 | 43.59 |
| Pidnet | 73.37 | 84.64 | 85.14 | 84.15 | 7.72 |
| Convnext | 76.95 | 86.97 | 87.96 | 86.01 | 59.28 |
| Mask2former | 77.64 | 89.12 | 85.76 | 89.12 | 44.00 |
| SegFormer-b0 | 77.82 | 87.46 | 87.34 | 87.58 | 3.72 |
| SegFormer-MLLA-a | 77.86 | 87.55 | 87.59 | 87.51 | 3.06 |
| SegFormer-b2 | 78.29 | 87.82 | 88.44 | 87.21 | 24.72 |
| SegFormer-MLLA-b | 78.36 | 87.87 | 88.15 | 87.59 | 18.98 |

| Models | Locule IoU (%) | Locule Dice (%) | Locule Precision (%) | Locule Recall (%) | Parameters (M) |
|---|---|---|---|---|---|
| Unet | 84.26 | 91.47 | 92.02 | 90.93 | 29.06 |
| Deeplabv3+ | 84.43 | 91.56 | 92.03 | 91.10 | 43.59 |
| Pidnet | 84.33 | 91.50 | 92.27 | 90.74 | 7.72 |
| Convnext | 84.62 | 91.67 | 91.46 | 91.88 | 59.28 |
| Mask2former | 85.15 | 91.98 | 92.48 | 91.49 | 44.00 |
| SegFormer-b0 | 84.84 | 91.80 | 92.33 | 91.27 | 3.72 |
| SegFormer-MLLA-a | 85.00 | 91.88 | 91.88 | 91.91 | 3.06 |
| SegFormer-b2 | 85.04 | 91.92 | 92.57 | 91.27 | 24.72 |
| SegFormer-MLLA-b | 85.24 | 92.03 | 92.47 | 91.59 | 18.98 |

| HDRM components enabled (one √ per component: Random Forest, Parametric Model) | Transverse Diameter RMSE (mm) | Transverse Diameter MAE (mm) | Longitudinal Diameter RMSE (mm) | Longitudinal Diameter MAE (mm) |
|---|---|---|---|---|
| none | 2.312 | 2.176 | 2.824 | 2.600 |
| √ | 1.153 | 0.946 | 0.991 | 0.818 |
| √ | 1.072 | 0.891 | 0.970 | 0.808 |
| √ √ | 1.064 | 0.880 | 0.965 | 0.803 |

| Modules enabled (one √ per module: SegFormer, MLLA, HDRM Random Forest, HDRM Parametric Model) | Mesocarp Thickness RMSE | Mesocarp Thickness MAE | Stem Scar Width RMSE | Stem Scar Width MAE | Stem Scar Depth RMSE | Stem Scar Depth MAE |
|---|---|---|---|---|---|---|
| √ | 0.682 | 0.586 | 1.163 | 0.957 | 0.553 | 0.457 |
| √ √ | 0.686 | 0.527 | 1.147 | 0.944 | 0.473 | 0.371 |
| √ √ | 0.484 | 0.378 | 0.990 | 0.740 | 0.447 | 0.367 |
| √ √ √ | 0.371 | 0.322 | 0.983 | 0.777 | 0.441 | 0.360 |
| √ √ | 0.463 | 0.355 | 0.998 | 0.780 | 0.449 | 0.367 |
| √ √ √ | 0.348 | 0.304 | 0.940 | 0.735 | 0.399 | 0.319 |
| √ √ √ | 0.458 | 0.353 | 0.986 | 0.772 | 0.447 | 0.367 |
| √ √ √ √ | 0.349 | 0.303 | 0.937 | 0.735 | 0.397 | 0.315 |

| Phenotypic Trait | Measured Size | Position 1 | Position 2 | Position 3 | Position 4 | Position 5 | Position 6 |
|---|---|---|---|---|---|---|---|
| transverse diameter (mm) | 74.91 | 74.61 | 73.36 | 75.17 | 74.28 | 73.20 | 74.48 |
| longitudinal diameter (mm) | 59.86 | 59.91 | 59.15 | 59.68 | 59.85 | 59.41 | 59.35 |
| shape index | 0.80 | 0.80 | 0.81 | 0.79 | 0.81 | 0.81 | 0.80 |
| stem scar width (mm) | 13.23 | 13.61 | 13.16 | 13.34 | 13.61 | 13.27 | 12.91 |
| stem scar depth (mm) | 4.67 | 4.64 | 5.13 | 4.99 | 5.28 | 5.32 | 5.31 |
| mesocarp thickness (mm) | 8.36 | 8.63 | 8.28 | 8.42 | 8.45 | 8.30 | 8.47 |
| locule number | 6 | 6 | 6 | 6 | 6 | 6 | 6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, J.; Dong, G.; Liu, Y.; Yuan, H.; Xu, Z.; Nie, W.; Zhang, Y.; Shi, Q. Depth Imaging-Based Framework for Efficient Phenotypic Recognition in Tomato Fruit. Plants 2025, 14, 3434. https://doi.org/10.3390/plants14223434
