MRSliceNet: Multi-Scale Recursive Slice and Context Fusion Network for Instance Segmentation of Leaves from Plant Point Clouds

Abstract

1. Introduction

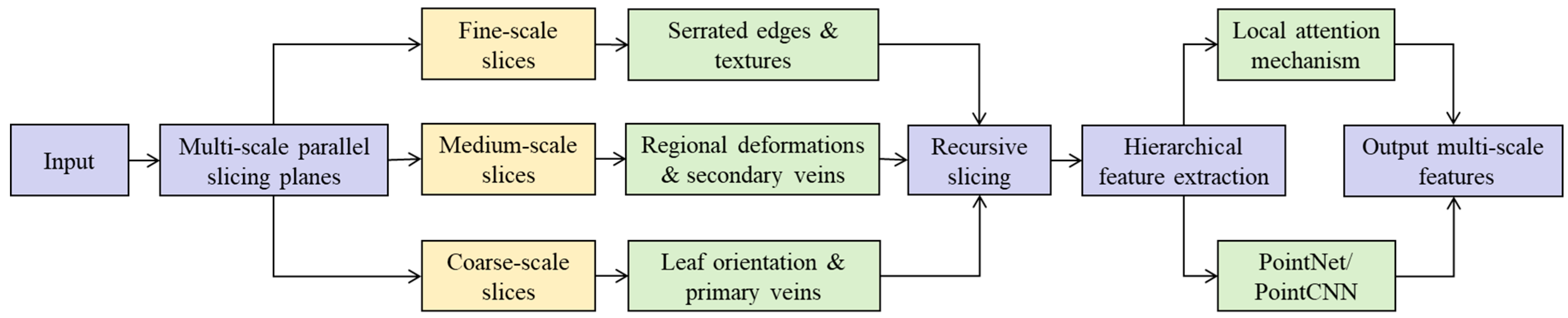

- Multi-scale Recursive Slicing Module (MRSM): This module simulates the process of “examining” local structures from multiple perspectives. It recursively slices the point cloud using multiple orthogonal planes across multi-scale spaces and employs a lightweight sub-network to extract rich local geometric features from each slice. This strategy captures the details of key regions, such as leaf margins and tips, efficiently and with targeted focus.

- Context Fusion Module (CFM): To integrate the multi-scale local features extracted by the MRSM with global morphological information, the CFM aggregates features across different scales and locations via attention mechanisms or graph convolutional networks. This enriches the feature representation of each point with both its local structural context and its position within the global structure, which helps the network handle occluded and adhering leaves.

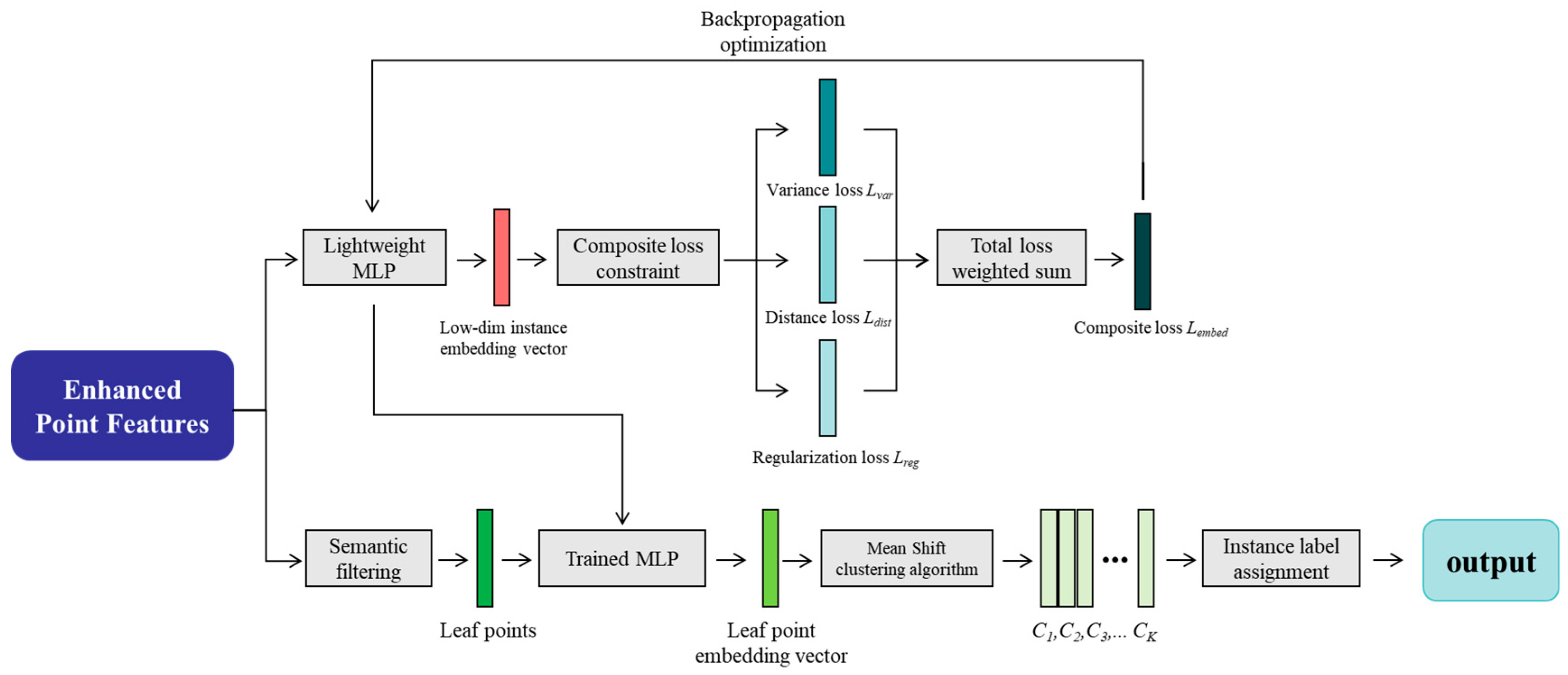

- Instance-Aware Clustering Head (IACH): The network ultimately outputs instance-aware feature embeddings for each point through this clustering head. Unlike simple clustering, this module is guided by a loss function that ensures points from the same instance are highly compact in the feature space, while those from different instances are distinctly separated, enabling accurate and automatic instance segmentation.
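The compact-within, separated-between behaviour described for the IACH can be illustrated with a generic pull/push embedding loss. This is a minimal NumPy sketch under stated assumptions, not the loss used in MRSliceNet: the function name `discriminative_loss` and the margins `delta_pull` and `delta_push` are hypothetical, and the exact formulation in the paper may differ.

```python
import numpy as np

def discriminative_loss(embeddings, instance_ids, delta_pull=0.5, delta_push=1.5):
    """Generic pull/push loss on per-point embeddings (margins are assumed values)."""
    ids = np.unique(instance_ids)
    # Mean embedding (centre) of every instance.
    centers = np.stack([embeddings[instance_ids == i].mean(axis=0) for i in ids])

    # Pull term: penalise points farther than delta_pull from their own centre.
    pull = 0.0
    for k, i in enumerate(ids):
        dist = np.linalg.norm(embeddings[instance_ids == i] - centers[k], axis=1)
        pull += np.mean(np.maximum(dist - delta_pull, 0.0) ** 2)
    pull /= len(ids)

    # Push term: penalise pairs of centres closer than 2 * delta_push.
    push = 0.0
    if len(ids) > 1:
        diff = centers[:, None, :] - centers[None, :, :]
        center_dist = np.linalg.norm(diff, axis=2)
        off_diag = ~np.eye(len(ids), dtype=bool)
        push = np.mean(np.maximum(2.0 * delta_push - center_dist[off_diag], 0.0) ** 2)

    return float(pull + push)

# Two tight, well-separated "leaves" in a 2D embedding space.
emb = np.array([[0.0, 0.0], [0.1, 0.0], [10.0, 0.0], [10.1, 0.0]])
good_ids = np.array([0, 0, 1, 1])   # labels agree with the clusters
bad_ids = np.array([0, 1, 0, 1])    # labels mix the clusters
print(discriminative_loss(emb, good_ids), discriminative_loss(emb, bad_ids))
```

With labels that agree with the embedding clusters the loss is zero. With mixed labels both terms become positive, which is the signal that would push a network towards a separable embedding space.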

2. Related Work

3. Materials and Methods

3.1. Discriminative Feature Extractor for Leaf Segmentation in Plant Point Clouds

3.1.1. Multi-Scale Slicing Strategy

- (1) The coarse-scale (with larger intervals, typically 5–10% of the plant height) captures the overall architecture, such as primary branch orientation and the global distribution of leaves, while being robust to noise and fine-scale clutter.

- (2) The medium-scale (with intervals calibrated to the size of typical leaf clusters or individual leaves) reveals meso-structural patterns like regional leaf deformations and secondary venation.

- (3) The fine-scale (with smaller intervals, approaching the resolution limit of the point cloud) precisely extracts high-resolution details, including serrated leaf margins, subtle curls, and insect perforations.
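The three scales above can be sketched as slab assignments along each coordinate axis. The snippet below is an illustrative sketch only. The coarse fraction (10% of the extent) follows the 5–10% range stated above, while the medium (3%) and fine (1%) fractions, the function names, and the `SCALES` dictionary are assumptions chosen for the example. The recursive refinement step is not covered.

```python
import numpy as np

# Slice thickness as a fraction of the point cloud's extent along an axis.
# Only the coarse value is taken from the text; the others are assumed.
SCALES = {"coarse": 0.10, "medium": 0.03, "fine": 0.01}

def slice_indices(points, axis, interval_frac):
    """Assign each point to a slab of thickness interval_frac * extent along `axis`."""
    coords = points[:, axis]
    lo = coords.min()
    extent = coords.max() - lo
    if extent == 0:
        # Degenerate case: all points share one coordinate, so there is one slab.
        return np.zeros(len(points), dtype=int)
    n_slices = int(np.ceil(1.0 / interval_frac - 1e-9))
    idx = np.floor((coords - lo) / (interval_frac * extent)).astype(int)
    # The topmost point lands exactly on the upper boundary; clip it into the last slab.
    return np.clip(idx, 0, n_slices - 1)

def multi_scale_slices(points, scales=SCALES):
    """Slab index per point for every (scale, axis) pair over the three orthogonal axes."""
    return {
        (name, axis): slice_indices(points, axis, frac)
        for name, frac in scales.items()
        for axis in range(3)
    }

# Synthetic stand-in for a plant point cloud (N x 3, columns are x, y, z).
points = np.random.default_rng(0).uniform(size=(500, 3))
slices = multi_scale_slices(points)
print({key: int(idx.max()) + 1 for key, idx in slices.items()})
```

Each entry maps a (scale, axis) pair to one slab index per point, so the points of a given slice can be gathered with a boolean mask and passed to a per-slice feature extractor.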

3.1.2. Recursive Operation Mechanism

3.1.3. Feature Learning and Fusion

3.1.4. Output and Feature Enhancement

3.2. Integration and Enhancement of Cross-Slice Semantic Information

3.2.1. Feature Alignment and Aggregation for Global Context Encoding

3.2.2. Integrating Multiscale Features via Pyramidal Fusion

3.2.3. Highly Descriptive Point Features

3.3. Instance-Aware Clustering Head for Discriminating and Segmenting Leaves

3.3.1. Towards a Discriminative Instance Embedding Space

3.3.2. From Embedding Space to Segmentation Masks

3.4. Implementation Details

4. Results

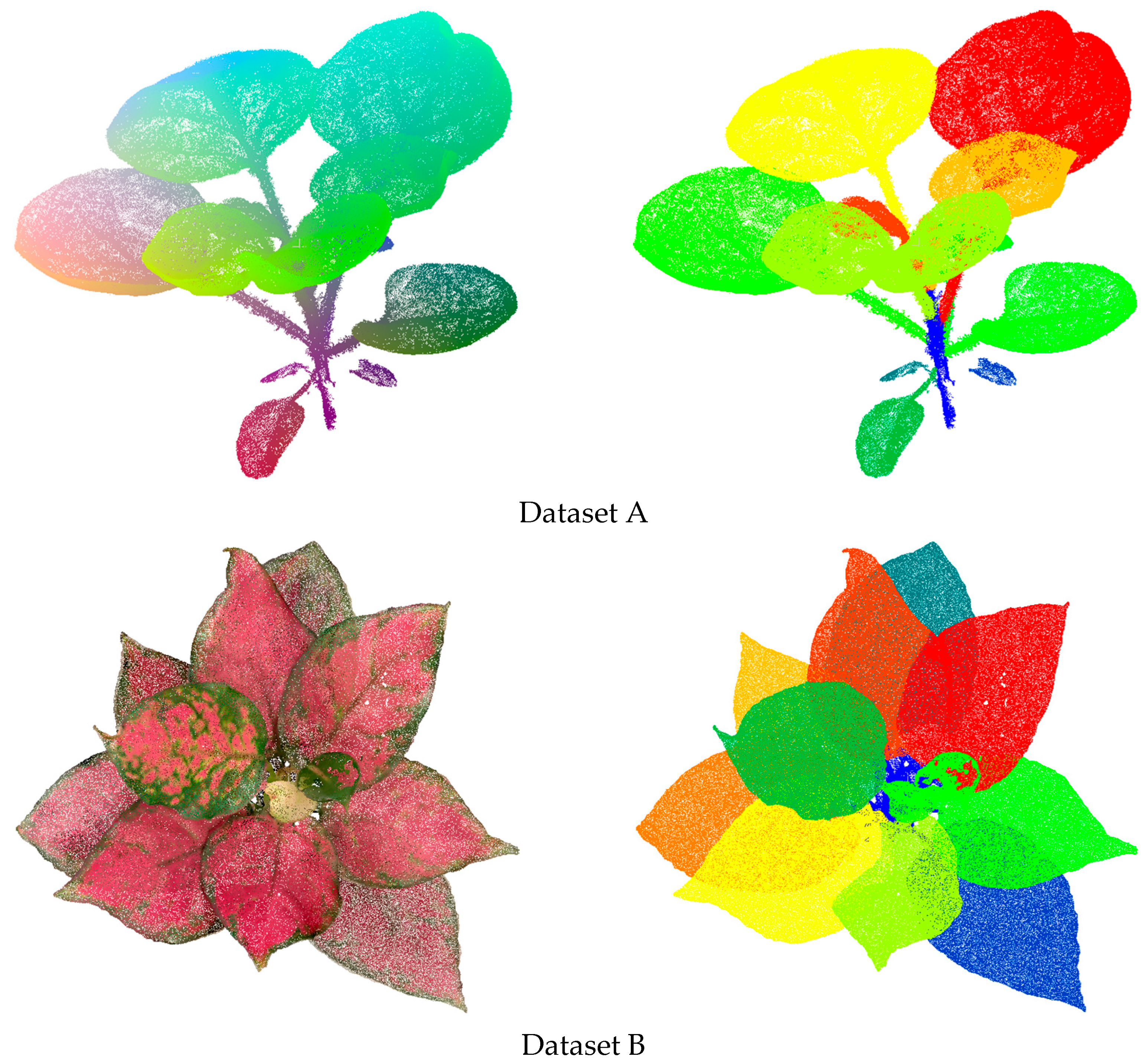

4.1. Dataset Description and Evaluation Criteria

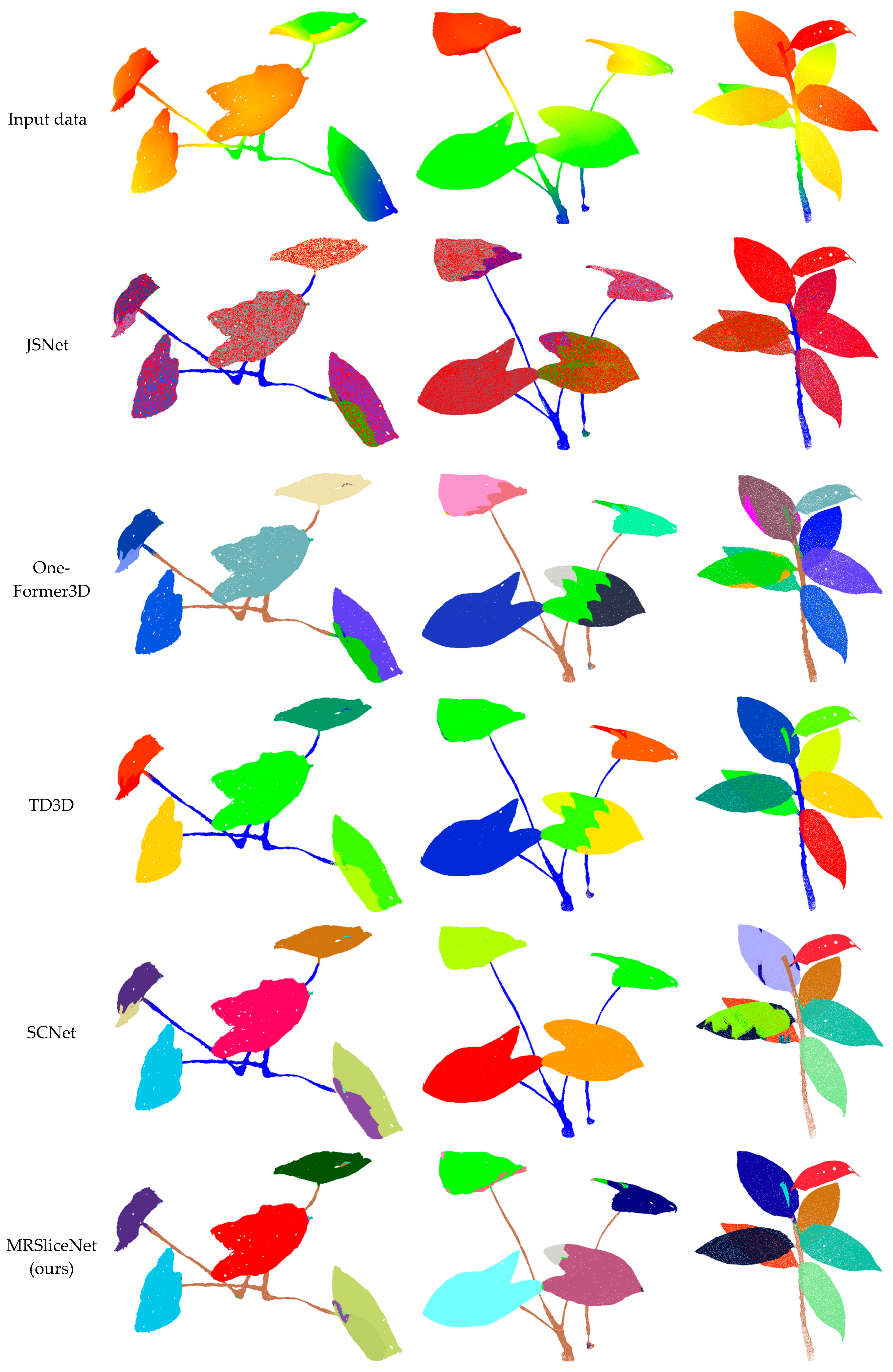

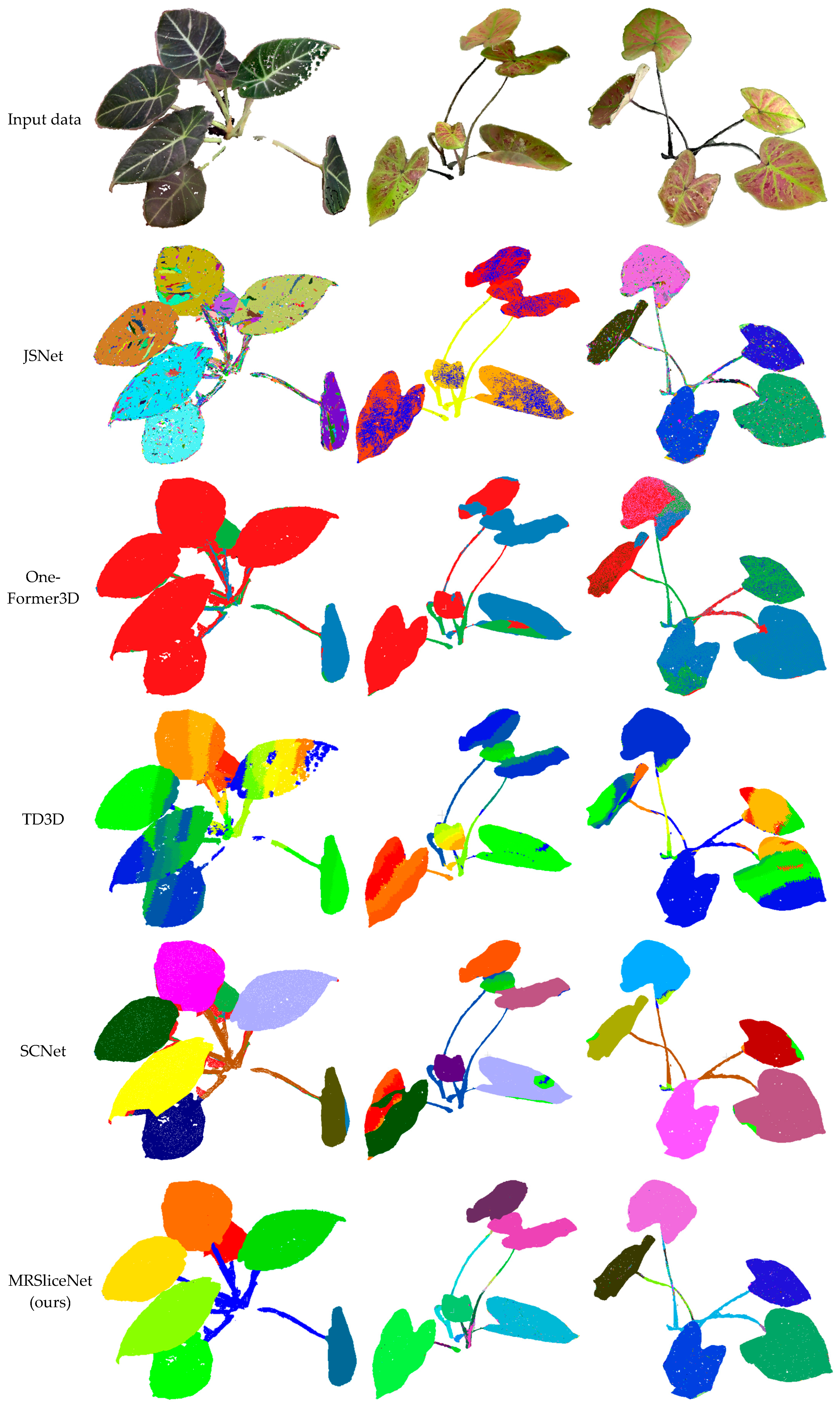

4.2. Qualitative Results

4.3. Quantitative Evaluation

| Methods | AP Stem (%) | AP Leaf (%) | AP Overall (%) | AP50 Stem (%) | AP50 Leaf (%) | AP50 Overall (%) | AP25 Stem (%) | AP25 Leaf (%) | AP25 Overall (%) |
|---|---|---|---|---|---|---|---|---|---|
| JSNet [61] | 9.30 | 18.60 | 14.98 | 17.10 | 29.20 | 23.60 | 20.78 | 33.80 | 26.16 |
| OneFormer3D [62] | 25.98 | 28.26 | 27.08 | 31.68 | 39.60 | 35.59 | 40.02 | 51.80 | 46.05 |
| TD3D [63] | 14.41 | 20.49 | 17.45 | 22.58 | 27.32 | 24.95 | 32.93 | 34.44 | 33.68 |
| SCNet [56] | 46.75 | 50.63 | 48.95 | 55.46 | 62.81 | 60.56 | 60.12 | 67.85 | 64.06 |
| MRSliceNet (ours) | 50.80 | 58.94 | 55.04 | 60.95 | 69.48 | 65.37 | 69.00 | 78.56 | 74.68 |

| Methods | AP Stem (%) | AP Leaf (%) | AP Overall (%) | AP50 Stem (%) | AP50 Leaf (%) | AP50 Overall (%) | AP25 Stem (%) | AP25 Leaf (%) | AP25 Overall (%) |
|---|---|---|---|---|---|---|---|---|---|
| JSNet [61] | 8.77 | 10.90 | 9.40 | 15.85 | 29.03 | 22.56 | 18.90 | 35.66 | 27.25 |
| OneFormer3D [62] | 27.74 | 30.69 | 29.52 | 33.44 | 39.31 | 36.93 | 40.77 | 45.70 | 42.73 |
| TD3D [63] | 12.62 | 20.50 | 17.56 | 20.05 | 27.34 | 23.19 | 29.51 | 34.47 | 32.49 |
| SCNet [56] | 49.23 | 52.33 | 51.47 | 52.15 | 55.69 | 54.09 | 59.50 | 69.18 | 64.83 |
| MRSliceNet (ours) | 51.12 | 55.08 | 53.78 | 62.89 | 65.76 | 64.00 | 70.13 | 76.79 | 73.45 |
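The AP50 and AP25 columns are conventionally read as average precision with a predicted instance counted as correct when its point-wise IoU with a ground-truth instance reaches 0.5 or 0.25, respectively. The sketch below illustrates only that matching step, under this assumed convention. The helper names `mask_iou` and `count_true_positives` are hypothetical, predictions are assumed to be sorted by descending confidence, and the full precision-recall integration is omitted.

```python
import numpy as np

def mask_iou(pred_mask, gt_mask):
    """Point-wise intersection over union of two boolean instance masks."""
    inter = np.logical_and(pred_mask, gt_mask).sum()
    union = np.logical_or(pred_mask, gt_mask).sum()
    return inter / union if union > 0 else 0.0

def count_true_positives(pred_masks, gt_masks, iou_threshold):
    """Greedy one-to-one matching of predictions to ground-truth instances."""
    matched = set()
    tp = 0
    for pred in pred_masks:
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(gt_masks):
            if j in matched:
                continue  # each ground-truth instance can be matched only once
            iou = mask_iou(pred, gt)
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_j is not None and best_iou >= iou_threshold:
            matched.add(best_j)
            tp += 1
    return tp

# Toy cloud of 10 points with two ground-truth leaves of 5 points each.
n = 10
gt_masks = [np.arange(n) < 5, np.arange(n) >= 5]
pred_masks = [np.arange(n) < 5,    # perfect match with the first leaf (IoU = 1.0)
              np.arange(n) >= 8]   # partial match with the second leaf (IoU = 2/5)
print(count_true_positives(pred_masks, gt_masks, 0.5),
      count_true_positives(pred_masks, gt_masks, 0.25))
```

The partially recovered leaf counts as a hit at the 0.25 threshold but not at 0.5, which is why AP25 values in the tables are consistently higher than AP50 values for the same method.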

4.4. Architecture Design Analysis

5. Experimental Analysis and Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jin, S.; Li, D.; Yun, T.; Tang, J.; Wang, K.; Li, S.; Yang, H.; Yang, S.; Xu, S.; Cao, L.; et al. Deep learning for three-dimensional (3D) plant phenomics. Plant Phenomics 2025, 7, 100107.

2. Diatta, A.; Abaye, O.; Battaglia, M.; Leme, J.; Seleiman, M.; Babur, E.; Thomason, W.E. Mungbean [Vigna radiata (L.) Wilczek] and its potential for crop diversification and sustainable food production in Sub-Saharan Africa: A review. Technol. Agron. 2024, 4, e031.

3. Xu, W.; Shrestha, A.; Wang, G.; Wang, T. Site-based climate-smart tree species selection for forestation under climate change. Clim. Smart Agric. 2024, 1, 100019.

4. Song, X.; Deng, Q.; Camenzind, M.; Luca, S.V.; Qin, W.; Hu, Y.; Minceva, M.; Yu, K. High-throughput phenotyping of canopy dynamics of wheat senescence using UAV multispectral imaging. Smart Agric. Technol. 2025, 12, 101176.

5. Huang, M.; Zhong, S.; Ge, Y.; Lin, H.; Chang, L.; Zhu, D.; Zhang, L.; Xiao, C.; Altan, O. Evaluating the Performance of SDGSAT-1 GLI Data in Urban Built-Up Area Extraction from the Perspective of Urban Morphology and City Scale: A Case Study of 15 Cities in China. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 17166–17180.

6. Hu, H.; Wang, J.; Nie, S.; Zhao, J.; Batley, J.; Edwards, D. Plant pangenomics, current practice and future direction. Agric. Commun. 2024, 2, 100039.

7. Walsh, J.J.; Mangina, E.; Negrão, S. Advancements in Imaging Sensors and AI for Plant Stress Detection: A Systematic Literature Review. Plant Phenomics 2024, 6, 0153.

8. Lv, X.; Zhang, X.; Gao, H.; He, T.; Lv, Z.; Zhangzhong, L. When crops meet machine vision: A review and development framework for a low-cost nondestructive online monitoring technology in agricultural production. Agric. Commun. 2024, 2, 100029.

9. Zhuang, T.; Liang, X.; Xue, B.; Tang, X. An in-vehicle real-time infrared object detection system based on deep learning with resource-constrained hardware. Intell. Robot. 2024, 4, 276–292.

10. Wang, P.; Tang, Y.; Liao, Z.; Yan, Y.; Dai, L.; Liu, S.; Jiang, T. Road-Side Individual Tree Segmentation from Urban MLS Point Clouds Using Metric Learning. Remote Sens. 2023, 15, 1992.

11. Tao, H.; Xu, S.; Tian, Y.; Li, Z.; Ge, Y.; Zhang, J.; Wang, Y.; Zhou, G.; Deng, X.; Zhang, Z.; et al. Proximal and remote sensing in plant phenomics: 20 years of progress, challenges, and perspectives. Plant Commun. 2022, 3, 100344.

12. Mahto, I.C.; Mathew, J. Compact deep learning models for leaf disease classification and recognition in precision agriculture. Neural Comput. Appl. 2025, 37, 18379–18399.

13. Xue, W.; Ding, H.; Jin, T.; Meng, J.; Wang, S.; Liu, Z.; Ma, X.; Li, J. CucumberAI: Cucumber Fruit Morphology Identification System Based on Artificial Intelligence. Plant Phenomics 2024, 6, 0193.

14. Wang, B.; Yang, C.; Zhang, J.; You, Y.; Wang, H.; Yang, W. IHUP: An Integrated High-Throughput Universal Phenotyping Software Platform to Accelerate Unmanned-Aerial-Vehicle-Based Field Plant Phenotypic Data Extraction and Analysis. Plant Phenomics 2024, 6, 0164.

15. Sun, H.; Huang, M.; Lin, H.; Ge, Y.; Zhu, D.; Gong, D.; Altan, O. Spatiotemporal Dynamics of Ecological Environment Quality in Arid and Sandy Regions with a Particular Remote Sensing Ecological Index: A Study of the Beijing-Tianjin Sand Source Region. Geo-Spat. Inf. Sci. 2025, 1–20.

16. Tsaniklidis, G.; Makraki, T.; Papadimitriou, D.; Nikoloudakis, N.; Taheri-Garavand, A.; Fanourakis, D. Non-Destructive Estimation of Area and Greenness in Leaf and Seedling Scales: A Case Study in Cucumber. Agronomy 2025, 15, 2294.

17. Krafft, D.; Scarboro, C.G.; Hsieh, W.; Doherty, C.; Balint-Kurti, P.; Kudenov, M. Mitigating Illumination-, Leaf-, and View-Angle Dependencies in Hyperspectral Imaging Using Polarimetry. Plant Phenomics 2024, 6, 0157.

18. Jin, S.; Sun, X.; Wu, F.; Su, Y.; Li, Y.; Song, S.; Xu, K.; Ma, Q.; Baret, F.; Jiang, D.; et al. Lidar sheds new light on plant phenomics for plant breeding and management: Recent advances and future prospects. ISPRS J. Photogramm. Remote Sens. 2021, 171, 202–223.

19. Ghaffari, A. Precision seed certification through machine learning. Technol. Agron. 2024, 4, e019.

20. Xin, J.; Tao, G.; Tang, Q.; Zou, F.; Xiang, C. Structural damage identification method based on Swin Transformer and continuous wavelet transform. Intell. Robot. 2024, 4, 200–215.

21. Lin, Y. Tracking Darwin’s footprints but with LiDAR for booting up the 3D and even beyond-3D understanding of plant intelligence. Remote Sens. Environ. 2024, 311, 114246.

22. Tang, L.; Asai, M.; Kato, Y.; Fukano, Y.; Guo, W. Channel Attention GAN-Based Synthetic Weed Generation for Precise Weed Identification. Plant Phenomics 2024, 6, 0122.

23. Al-sammarraie, M.; Ilbas, A. Harnessing automation techniques for supporting sustainability in agriculture. Technol. Agron. 2024, 4, e029.

24. Chen, M.; Han, K.; Yu, Z.; Feng, A.; Hou, Y.; You, S.; Soibelman, L. An Aerial Photogrammetry Benchmark Dataset for Point Cloud Segmentation and Style Translation. Remote Sens. 2024, 16, 4240.

25. Brunklaus, M.; Kellner, M.; Reiterer, A. Three-Dimensional Instance Segmentation of Rooms in Indoor Building Point Clouds Using Mask3D. Remote Sens. 2025, 17, 1124.

26. Zhu, Y.; Wang, Z.; Ye, Q.; Pang, L.; Wang, Q.; Zheng, X.; Hu, C. SPA-Net: An Offset-Free Proposal Network for Individual Tree Segmentation from TLS Data. Remote Sens. 2025, 17, 2292.

27. Chu, P.; Han, B.; Guo, Q.; Wan, Y.; Zhang, J. A Three-Dimensional Phenotype Extraction Method Based on Point Cloud Segmentation for All-Period Cotton Multiple Organs. Plants 2025, 14, 1578.

28. Wan, P.; Shao, J.; Jin, S.; Wang, T.; Yang, S.; Yan, G.; Zhang, W. A novel and efficient method for wood–leaf separation from terrestrial laser scanning point clouds at the forest plot level. Methods Ecol. Evol. 2021, 12, 2473–2486.

29. Li, M.; Zhang, W.; Pan, W.; Zhu, J.; Song, X.; Wang, C.; Liu, P. Three-dimensional reconstruction and phenotypic identification of the wheat plant using RealSense D455 sensor. Int. J. Agric. Biol. Eng. 2025, 18, 254–265.

30. Zhang, H.; Liu, N.; Xia, J.; Chen, L.; Chen, S. Plant Height Estimation in Corn Fields Based on Column Space Segmentation Algorithm. Agriculture 2025, 15, 236.

31. Song, H.; Wen, W.; Wu, S.; Guo, X. Comprehensive Review on 3D Point Cloud Segmentation in Plants. Artif. Intell. Agric. 2025, 15, 296–315.

32. Li, Y.; Wen, W.; Miao, T.; Wu, S.; Yu, Z.; Wang, X.; Guo, X.; Zhao, C. Automatic organ-level point cloud segmentation of maize shoots by integrating high-throughput data acquisition and deep learning. Comput. Electron. Agric. 2022, 193, 106702.

33. Zhang, S.; Chen, Y.; Wang, B.; Pan, D.; Zhang, W.; Li, A. SPTNet: Sparse Convolution and Transformer Network for Woody and Foliage Components Separation from Point Clouds. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5702718.

34. Genze, N.; Ajekwe, R.; Güreli, Z.; Haselbeck, F.; Grieb, M.; Grimm, D.G. Deep learning-based early weed segmentation using motion blurred UAV images of sorghum fields. Comput. Electron. Agric. 2022, 202, 107388.

35. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3D point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 4338–4364.

36. Roth, L.; Barendregt, C.; Bétrix, C.A.; Hund, A.; Walter, A. High-throughput field phenotyping of soybean: Spotting an ideotype. Remote Sens. Environ. 2022, 269, 112797.

37. Li, D.; Shi, G.; Kong, W.; Wang, S.; Chen, Y. A leaf segmentation and phenotypic feature extraction framework for Multiview stereo plant point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 2321–2336.

38. Jiang, T.; Liu, S.; Zhang, Q.; Xu, X.; Sun, J.; Wang, Y. Segmentation of individual trees in urban MLS point clouds using a deep learning framework based on cylindrical convolution network. Int. J. Appl. Earth Obs. Geoinf. 2023, 123, 103473.

39. Li, D.; Shi, G.; Li, J.; Chen, Y.; Zhang, S.; Xiang, S.; Jin, S. PlantNet: A dual-function point cloud segmentation network for multiple plant species. ISPRS J. Photogramm. Remote Sens. 2022, 184, 243–263.

40. Du, R.; Ma, Z.; Xie, P.; He, Y.; Cen, H. PST: Plant segmentation transformer for 3D point clouds of rapeseed plants at the podding stage. ISPRS J. Photogramm. Remote Sens. 2023, 195, 380–392.

41. Mirande, K.; Godin, C.; Tisserand, M.; Charlaix, J.; Besnard, F.; Hétroy-Wheeler, F. A graph-based approach for simultaneous semantic and instance segmentation of plant 3D point clouds. Front. Plant Sci. 2022, 13, 1012669.

42. Yang, X.; Miao, T.; Tian, X.; Wang, D.; Zhao, J.; Lin, L.; Zhu, C.; Yang, T.; Xu, T. Maize stem–leaf segmentation framework based on deformable point clouds. ISPRS J. Photogramm. Remote Sens. 2024, 211, 49–66.

43. Li, D.; Liu, L.; Xu, S.; Jin, S. TrackPlant3D: 3D organ growth tracking framework for organ-level dynamic phenotyping. Comput. Electron. Agric. 2024, 226, 109435.

44. Zhang, R.; Jin, S.; Zhang, Y.; Zang, J.; Wang, Y.; Li, Q.; Sun, Z.; Wang, X.; Zhou, Q.; Cai, J.; et al. PhenoNet: A two-stage lightweight deep learning framework for real-time wheat phenophase classification. ISPRS J. Photogramm. Remote Sens. 2024, 208, 136–157.

45. Huang, Q.; Wang, W.; Neumann, U. Recurrent Slice Networks for 3D Segmentation of Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

46. Luo, Z.; Liu, D.; Li, J.; Chen, Y.; Xiao, Z.; Junior, J.M.; Gonçalves, W.N.; Wang, C. Learning sequential slice representation with an attention-embedding network for 3D shape recognition and retrieval in MLS point clouds. ISPRS J. Photogramm. Remote Sens. 2020, 161, 147–163.

47. Ye, X.; Li, J.; Huang, H.; Du, L.; Zhang, X. 3D Recurrent Neural Networks with Context Fusion for Point Cloud Semantic Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

48. Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

49. Ma, X.; Zheng, B.; Jiang, G.; Liu, L. Cellular Network Traffic Prediction Based on Correlation ConvLSTM and Self-Attention Network. IEEE Commun. Lett. 2023, 27, 1909–1912.

50. Lv, Q.; Geng, L.; Cao, Z.; Cao, M.; Li, S.; Li, W. Adaptive Sparse Softmax: An Effective and Efficient Softmax Variant. IEEE Trans. Audio Speech Lang. Process. 2025, 33, 3148–3159.

51. Zhou, J.; Zeng, S.; Gao, G.; Chen, Y.; Tang, Y. A Novel Spatial–Spectral Pyramid Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5519314.

52. Liao, Y.; Zhu, H.; Zhang, Y.; Ye, C.; Chen, T.; Fan, J. Point Cloud Instance Segmentation with Semi-Supervised Bounding-Box Mining. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 10159–10170.

53. Chu, J.; Zhang, Y.; Li, S.; Leng, L.; Miao, J. Syncretic-NMS: A Merging Non-Maximum Suppression Algorithm for Instance Segmentation. IEEE Access 2020, 8, 114705–114714.

54. Jiang, T.; Zhang, Q.; Liu, S.; Liang, C.; Dai, L.; Zhang, Z.; Sun, J.; Wang, Y. LWSNet: A Point-Based Segmentation Network for Leaf-Wood Separation of Individual Trees. Forests 2023, 14, 1303.

55. Li, Z.; Li, M.; Shi, L.; Li, D. A novel fatigue driving detection method based on whale optimization and Attention-enhanced GRU. Intell. Robot. 2024, 4, 230–243.

56. Zhao, L.; Wu, S.; Fu, J.; Fang, S.; Liu, S.; Jiang, T. Panoptic Plant Recognition in 3D Point Clouds: A Dual-Representation Learning Approach with the PP3D Dataset. Remote Sens. 2025, 17, 2673.

57. Wang, Y.; Jiang, T.; Liu, J.; Li, X.; Liang, C. Hierarchical Instance Recognition of Individual Roadside Trees in Environmentally Complex Urban Areas from UAV Laser Scanning Point Clouds. ISPRS Int. J. Geo-Inf. 2020, 9, 595.

58. Schult, J.; Engelmann, F.; Hermans, A.; Litany, O.; Tang, S.; Leibe, B. Mask3D: Mask Transformer for 3D Semantic Instance Segmentation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023.

59. Wang, D. Unsupervised semantic and instance segmentation of forest point clouds. ISPRS J. Photogramm. Remote Sens. 2020, 165, 86–97.

60. Fang, Z.; Zhuang, C.; Lu, Z.; Wang, Y.; Liu, L.; Xiao, J. BGPSeg: Boundary-Guided Primitive Instance Segmentation of Point Clouds. IEEE Trans. Image Process. 2025, 34, 1454–1468.

61. Zhao, L.; Tao, W. JSNet: Joint instance and semantic segmentation of 3d point clouds. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; pp. 12951–12958.

62. Kolodiazhnyi, M.; Vorontsova, A.; Konushin, A.; Rukhovich, D. OneFormer3D: One Transformer for Unified Point Cloud Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

63. Kolodiazhnyi, M.; Vorontsova, A.; Konushin, A.; Rukhovich, D. Top-Down Beats Bottom-Up in 3D Instance Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024.

64. Wang, J.; Wang, C.; Chen, W.; Dou, Q.; Chi, W. Embracing the future: The rise of humanoid robots and embodied AI. Intell. Robot. 2024, 4, 196–199.

65. Jiang, T.; Wang, Y.; Liu, S.; Zhang, Q.; Zhao, L.; Sun, J. Instance recognition of street trees from urban point clouds using a three-stage neural network. ISPRS J. Photogramm. Remote Sens. 2023, 199, 305–334.

66. Fanourakis, D.; Papadakis, V.; Machado, M.; Psyllakis, E.; Nektarios, P. Non-invasive leaf hydration status determination through convolutional neural networks based on multispectral images in chrysanthemum. Plant Growth Regul. 2024, 102, 485–496.

| Methods | Module | AP Stem (%) | AP Leaf (%) | AP Overall (%) | AP50 Stem (%) | AP50 Leaf (%) | AP50 Overall (%) | AP25 Stem (%) | AP25 Leaf (%) | AP25 Overall (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Model A | Baseline (PointNet++) | 45.23 | 48.90 | 47.55 | 52.11 | 62.03 | 57.02 | 61.58 | 69.00 | 64.77 |
| Model B | +MRSM | 47.74 | 50.69 | 49.22 | 53.74 | 64.31 | 59.03 | 63.77 | 71.70 | 68.73 |
| Model C | +MRSM + CFM | 51.08 | 53.95 | 52.78 | 60.88 | 63.75 | 62.05 | 68.56 | 72.75 | 70.95 |
| Model D-NSF | +MRSM + CFM + IACH (No SF) | 51.11 | 54.60 | 53.01 | 61.25 | 65.00 | 63.35 | 69.15 | 75.03 | 72.19 |
| Model D | +MRSM + CFM + IACH (Full) | 51.12 | 55.08 | 53.78 | 62.89 | 65.76 | 64.00 | 70.13 | 76.79 | 73.45 |
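The contribution of each module can be read off the ablation table by differencing the overall AP of consecutive variants. The short sketch below does that arithmetic. The values are copied from the AP Overall column of the table, while the dictionary and function names are illustrative only.

```python
# Overall AP (%) per ablation variant, copied from the ablation table.
overall_ap = {
    "Model A": 47.55,      # Baseline (PointNet++)
    "Model B": 49.22,      # +MRSM
    "Model C": 52.78,      # +MRSM + CFM
    "Model D-NSF": 53.01,  # +MRSM + CFM + IACH (No SF)
    "Model D": 53.78,      # +MRSM + CFM + IACH (Full)
}

def incremental_gains(scores):
    """Difference in score between each variant and the one listed before it."""
    names = list(scores)
    return {
        f"{prev} -> {curr}": round(scores[curr] - scores[prev], 2)
        for prev, curr in zip(names, names[1:])
    }

gains = incremental_gains(overall_ap)
total_gain = round(overall_ap["Model D"] - overall_ap["Model A"], 2)

for step, gain in gains.items():
    print(f"{step}: {gain:+.2f} AP")
print(f"Model A -> Model D: {total_gain:+.2f} AP")
```

On these numbers, adding the CFM accounts for the largest single step in overall AP (+3.56), followed by the MRSM (+1.67). The full model improves on the baseline by 6.23 points.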

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, S.; Wang, G.; Fang, H.; Huang, M.; Jiang, T.; Wang, Y. MRSliceNet: Multi-Scale Recursive Slice and Context Fusion Network for Instance Segmentation of Leaves from Plant Point Clouds. Plants 2025, 14, 3349. https://doi.org/10.3390/plants14213349
