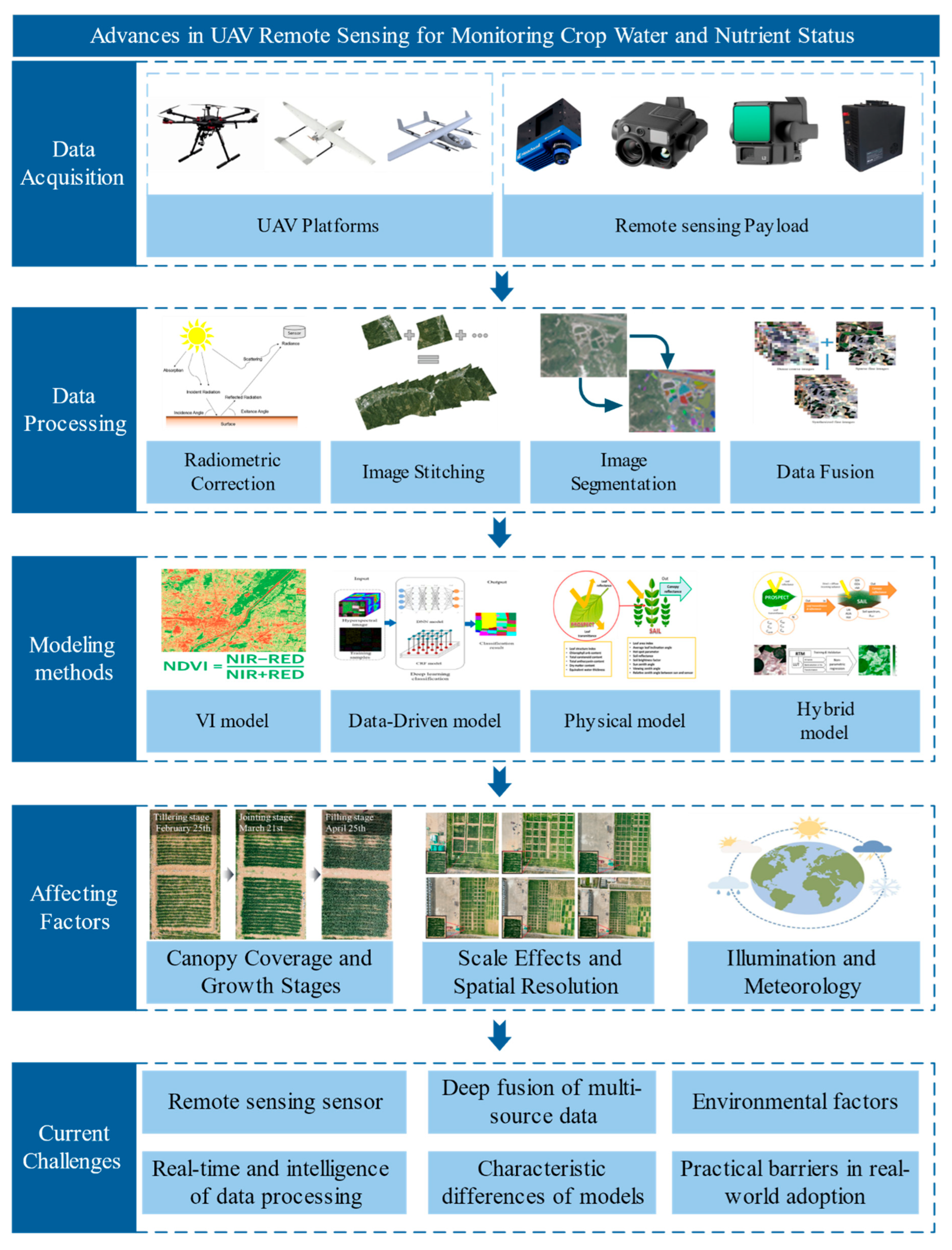

Advances in UAV Remote Sensing for Monitoring Crop Water and Nutrient Status: Modeling Methods, Influencing Factors, and Challenges

Abstract

1. Introduction

2. Data Acquisition and Processing

2.1. UAV Platforms

2.2. Remote Sensing Payload

2.3. Data Processing

2.3.1. Radiometric Correction

2.3.2. Image Stitching

2.3.3. Image Segmentation

2.3.4. Data Fusion

3. UAV-Based Remote Sensing Modeling Methods for Crop Water and Nutrient Status

3.1. VI-Based Approaches

3.1.1. Applications of VIs in Monitoring Crop Water Status

3.1.2. Applications of VIs in Monitoring Crop Nutrient Status

3.2. Data-Driven Approaches

3.2.1. Data-Driven Approaches for Crop Water Status Estimation

3.2.2. Data-Driven Approaches for Crop Nutrient Estimation

3.3. Physically Based Approaches

3.3.1. Canopy Radiative Transfer Models

3.3.2. Microwave Radiative Transfer Models

3.3.3. Energy Balance Models

3.4. Hybrid Models

3.5. Comparative Evaluation of Modeling Approaches

4. Key Factors Affecting UAV-Based Remote Sensing of Crop Water and Nutrient Status

4.1. Influence of Canopy Coverage and Growth Stage

4.2. The Impact of Scale Effects and Spatial Resolution

4.3. Effects of Illumination, Meteorology, and Other Environmental Factors

5. Challenges and Future Prospects for UAV-Based Monitoring of Crop Water and Nutrient Status

5.1. Current Challenges

- (1) Delayed development of proximal remote sensing sensors and insufficient specialization and adaptability. At present, spectral sensors used for crop water and nutrient monitoring are still dominated by general-purpose multispectral or TIR devices, lacking wavelength configurations and structural designs optimized for agricultural scenarios. For example, high-resolution sensors tailored to key spectral bands sensitive to crop water and nutrient status have not yet been developed, making it difficult to detect subtle changes in crop physiological conditions. In addition, under complex field conditions such as high temperature, humidity, and wind, current sensors often suffer from limited stability and adaptability, which restricts both data quality and monitoring frequency.

- (2) Lengthy data processing chains with limited real-time performance and intelligence. Although UAV-based remote sensing platforms offer high-resolution observation capabilities, the massive volume and diversity of the acquired imagery still require complex and time-consuming post-processing workflows. These steps include image mosaicking, radiometric correction, and parameter inversion, which are often labor-intensive and heavily dependent on manual intervention. These workflows hinder timely analysis, reduce data utilization efficiency, and fail to meet the rapid response demands of agricultural decision-making or support high-frequency, dynamic monitoring tasks.

- (3) Insufficient depth in multi-source data fusion and underutilization of spatial information. Current research efforts are largely focused on processing data from a single platform, with limited integration of remote sensing information across multiple platforms (including satellites, UAVs, and ground-based sensors) and modalities such as optical, TIR, and radar. In particular, systematic fusion methods are lacking in areas such as scale transformation, temporal gap-filling, and canopy structural reconstruction. This limits the ability to capture in-field variability and support regional-scale decision-making, constraining the spatial adaptability of precision agriculture.

- (4) Significant environmental interference in remote sensing inversion leads to high data uncertainty. UAV-based remote sensing primarily relies on passive sensors to capture surface reflectance, making it highly susceptible to solar angle, wind speed, atmospheric humidity, and cloud cover. During the early growth stages, strong soil background signals may obscure crop spectral features, while in later stages, dense canopy overlap can result in spectral saturation. Moreover, shadow occlusion and terrain variation further challenge radiometric consistency. Without correction, these factors can compromise the stability and reliability of model outputs.

- (5) Limited model generalization, cross-regional adaptability, and interpretability. Most existing models rely heavily on locally trained datasets, making them difficult to generalize across different crops, regions, seasons, and management practices. In complex agricultural environments, these models are prone to overfitting and transfer failures. Although DL-based “black-box” models often achieve high accuracy, they lack explicit physiological or physical interpretability, undermining their credibility in intelligent field diagnostics. This limitation hinders their practical deployment and scalability in real-world agricultural management.

- (6) Practical barriers in real-world adoption. Despite the rapid development of UAV-based remote sensing technologies, their widespread adoption in agricultural practice faces several practical barriers. High initial investment costs for UAV platforms and sensors, as well as the need for trained personnel to operate and maintain these systems, often limit their accessibility for smallholder farmers. In addition, regulatory restrictions (such as flight permits, operational safety requirements, and data privacy concerns) pose institutional challenges, especially in regions with evolving UAV policies. These factors hinder the scalability and routine use of UAV-based diagnostics, emphasizing the need for cost-effective solutions, simplified user interfaces, and policy support frameworks to facilitate broader implementation.
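
The soil-background effect noted in item (4) can be illustrated with a small worked example. The sketch below assumes a simple linear mixing model, in which a pixel's reflectance is the cover-weighted average of a vegetation and a bare-soil reflectance, and computes NDVI for the mixed pixel. The function names and all reflectance values are hypothetical and chosen only for illustration; they are not taken from any study cited in this review, and the sketch does not model the late-season saturation effect.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red)


def mixed_pixel_ndvi(cover: float,
                     veg=(0.05, 0.50),    # hypothetical (red, NIR) reflectance of canopy
                     soil=(0.25, 0.30)):  # hypothetical (red, NIR) reflectance of bare soil
    """NDVI of a pixel modeled as a linear mix of vegetation and soil.

    `cover` is the fraction of the pixel occupied by vegetation (0 to 1).
    """
    if not 0.0 <= cover <= 1.0:
        raise ValueError("cover must be in [0, 1]")
    red = cover * veg[0] + (1.0 - cover) * soil[0]
    nir = cover * veg[1] + (1.0 - cover) * soil[1]
    return ndvi(nir, red)


if __name__ == "__main__":
    # At low canopy cover the pixel NDVI stays close to the bare-soil value.
    for cover in (0.0, 0.1, 0.3, 0.6, 1.0):
        print(f"cover={cover:.1f}  NDVI={mixed_pixel_ndvi(cover):.3f}")
```

With these assumed values, a pixel at 10% canopy cover has an NDVI far closer to that of bare soil than to that of a closed canopy, so the crop signal contributes only a small part of what the sensor records at early growth stages.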

5.2. Future Prospects

- (1) Development of application-specific sensors and edge-intelligent devices for agricultural scenarios. To address the limitations of general-purpose sensors in crop monitoring, future efforts will focus on developing sensor modules designed to capture crop-sensitive spectral bands related to water and nutrient status. These sensors will be optimized for lightweight design, low power consumption, and enhanced resistance to field interference. Moreover, smart terminals with edge computing modules, such as UAVs or in-field sensor nodes, will enable real-time data processing, including image stitching, VI computation, and key region extraction during flight. Only essential information will be transmitted to the cloud, thereby reducing bandwidth demand and latency. This approach ensures stable, high-frequency sensing data to support in-field diagnosis of crop water and nutrient status.

- (2) Establishing an edge–cloud collaborative architecture to enhance processing efficiency and intelligence. Leveraging 5G communication and AI algorithms, a new dual-layer architecture can be established for UAV-based remote sensing that integrates data acquisition, transmission, and processing. This architecture consists of edge-level preprocessing and cloud-based deep analysis. Onboard UAV systems will handle basic tasks such as noise reduction, VI computation, and target segmentation, while high-complexity operations, such as radiometric correction, inversion modeling, and large-scale data analysis, will be performed in the cloud. This division improves processing efficiency, enables near real-time diagnosis, and promotes the transformation of UAV-based remote sensing from a “data acquisition tool” to an “intelligent decision-making system.”

- (3) Multi-scale collaborative remote sensing and 3D data fusion to enhance spatial awareness and decision support. Integrating UAVs, satellites, and ground-based platforms enables unified crop monitoring across centimeter- to kilometer-scale resolutions. Combining LiDAR and multispectral imagery makes it possible to generate high-precision 3D canopy models, allowing joint feature extraction of crop height, canopy structure, and spectral responses. Using these canopy models, AI algorithms can automatically detect spatially heterogeneous regions, such as zones of water stress or uneven fertilization. These outputs support precision fertilization and site-specific irrigation, improving the efficiency and operability of precision agriculture.

- (4) Developing dynamic correction mechanisms to improve environmental adaptability and reduce remote sensing uncertainty. To address the common environmental interferences in remote sensing inversion, adaptive models and correction frameworks should be developed, including coverage-adaptive models, physiology–spectrum coupled models, and real-time environmental correction systems. For instance, in the early growth stage, soil reflectance modeling can reduce spectral contamination, while in the grain-filling stage, multi-angle imaging can alleviate saturation effects. Additionally, integrating real-time meteorological variables, such as wind speed, humidity, and solar radiation, can guide radiometric correction algorithms to perform shadow compensation, angle normalization, and wind–temperature coupling adjustment, improving data consistency and reliability across time and space.

- (5) Integrating physical mechanisms with AI for interpretable models to improve generalization and robustness. To overcome limitations in model transferability and interpretability, a “pretraining–fine-tuning” paradigm can be adopted. Large-scale, multi-crop datasets can be used to build generalized base models, which are then rapidly adapted to specific regions or crops using small local samples. Furthermore, integrating physical priors with neural networks, for example by embedding radiative transfer models (e.g., PROSAIL) into the architecture, enables the incorporation of physical constraints during training. This hybrid approach enhances model stability, interpretability, and data efficiency, facilitating a transition from opaque “black-box” systems to transparent, knowledge- and data-driven solutions in agricultural remote sensing.

- (6) Promoting practical deployment through cost-effective design, policy support, and user-friendly tools. To overcome real-world barriers, future research and development should prioritize cost-effective UAV systems and simplified operational workflows tailored for agricultural end-users. Developing modular, low-cost UAV platforms with plug-and-play sensors can reduce entry barriers for small and medium-sized farms. In parallel, intuitive software interfaces and semi-automated workflows will lower the technical threshold for non-expert users. Moreover, establishing supportive regulatory frameworks and providing training programs or service outsourcing models will facilitate broader and safer UAV adoption in agricultural practice, bridging the gap between research innovations and field-level implementation.
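
The edge-side step described in items (1) and (2), computing a VI onboard and transmitting only essential information, might look roughly like the following sketch. It reduces one image tile to a few summary statistics that could be sent to the cloud in place of the raw pixels. The names `TileSummary` and `summarize_tile` and the threshold of 0.4 are hypothetical placeholders, not part of any existing system or a value recommended in this review. Plain Python lists are used to keep the example self-contained; an onboard implementation would more likely use an array library.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TileSummary:
    """Compact per-tile record intended for upload instead of raw imagery."""
    tile_id: str
    mean_ndvi: float
    low_ndvi_fraction: float  # share of valid pixels below the threshold
    valid_pixels: int


def ndvi(nir: float, red: float) -> float:
    """NDVI for one pixel; NaN when both bands are zero (no signal)."""
    total = nir + red
    return (nir - red) / total if total > 0 else float("nan")


def summarize_tile(tile_id: str,
                   nir: Sequence[Sequence[float]],
                   red: Sequence[Sequence[float]],
                   low_threshold: float = 0.4) -> TileSummary:
    """Compute NDVI per pixel and keep only summary statistics.

    `low_threshold` is an arbitrary illustrative cut-off, not a calibrated
    stress threshold.
    """
    values = [
        v
        for nir_row, red_row in zip(nir, red)
        for v in (ndvi(n, r) for n, r in zip(nir_row, red_row))
        if not math.isnan(v)
    ]
    if not values:
        return TileSummary(tile_id, float("nan"), float("nan"), 0)
    low = sum(1 for v in values if v < low_threshold)
    return TileSummary(tile_id, sum(values) / len(values),
                       low / len(values), len(values))


if __name__ == "__main__":
    nir_band = [[0.5, 0.3], [0.0, 0.4]]  # made-up reflectance values
    red_band = [[0.1, 0.3], [0.0, 0.1]]
    print(summarize_tile("tile-0001", nir_band, red_band))
```

The design choice here is that the payload size no longer depends on image resolution: each tile yields a fixed-size record, and the cloud side can request the full tile only when a summary flags it for closer inspection.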

Author Contributions

Funding

Conflicts of Interest

References

- Katsoulas, N.; Elvanidi, A.; Ferentinos, K.P.; Kacira, M.; Bartzanas, T.; Kittas, C. Crop reflectance monitoring as a tool for water stress detection in greenhouses: A review. Biosyst. Eng. 2016, 151, 374–398. [Google Scholar] [CrossRef]

- Zhang, Y.; Xiao, J.; Yan, K.; Lu, X.; Li, W.; Tian, H.; Wang, L.; Deng, J.; Lan, Y. Advances and Developments in Monitoring and Inversion of the Biochemical Information of Crop Nutrients Based on Hyperspectral Technology. Agronomy 2023, 13, 2163. [Google Scholar] [CrossRef]

- Hunt, E.; Daughtry, C. What good are unmanned aircraft systems for agricultural remote sensing and precision agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376. [Google Scholar] [CrossRef]

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Zhang, S.; Lizaga, I.; Zhang, Y.; Ge, X.; Zhang, Z.; Zhang, W.; Huang, Q.; Hu, Z. UAS-based remote sensing for agricultural Monitoring: Current status and perspectives. Comput. Electron. Agric. 2024, 227, 109501. [Google Scholar] [CrossRef]

- García-Berná, J.; Ouhbi, S.; Benmouna, B.; García-Mateos, G.; Fernández-Alemán, J.; Molina-Martínez, J. Systematic Mapping Study on Remote Sensing in Agriculture. Appl. Sci. 2020, 10, 3456. [Google Scholar] [CrossRef]

- Dong, H.; Dong, J.; Sun, S.; Bai, T.; Zhao, D.; Yin, Y.; Shen, X.; Wang, Y.; Zhang, Z.; Wang, Y. Crop water stress detection based on UAV remote sensing systems. Agric. Water Manag. 2024, 303, 109059. [Google Scholar] [CrossRef]

- Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.L.; He, Y. Intelligent agriculture: Deep learning in UAV-based remote sensing imagery for crop diseases and pests detection. Front. Plant Sci. 2024, 15, 1435016. [Google Scholar] [CrossRef] [PubMed]

- Fu, Y.Y.; Yang, G.J.; Pu, R.L.; Li, Z.H.; Li, H.L.; Xu, X.G.; Song, X.Y.; Yang, X.D.; Zhao, C.J. An overview of crop nitrogen status assessment using hyperspectral remote sensing: Current status and perspectives. Eur. J. Agron. 2021, 124, 126241. [Google Scholar] [CrossRef]

- Yang, G.J.; Liu, J.G.; Zhao, C.J.; Li, Z.H.; Huang, Y.B.; Yu, H.Y.; Xu, B.; Yang, X.D.; Zhu, D.M.; Zhang, X.Y.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111. [Google Scholar] [CrossRef]

- Karthikeyan, L.; Chawla, I.; Mishra, A.K. A review of remote sensing applications in agriculture for food security: Crop growth and yield, irrigation, and crop losses. J. Hydrol. 2020, 586, 124905. [Google Scholar] [CrossRef]

- Wu, B.F.; Zhang, M.; Zeng, H.W.; Tian, F.Y.; Potgieter, A.B.; Qin, X.L.; Yan, N.N.; Chang, S.; Zhao, Y.; Dong, Q.H.; et al. Challenges and opportunities in remote sensing-based crop monitoring: A review. Natl. Sci. Rev. 2023, 10, nwac290. [Google Scholar] [CrossRef] [PubMed]

- Virnodkar, S.S.; Pachghare, V.K.; Patil, V.C.; Jha, S.K. Remote sensing and machine learning for crop water stress determination in various crops: A critical review. Precis. Agric. 2020, 21, 1121–1155. [Google Scholar] [CrossRef]

- Liu, S.; Cheng, J.; Liang, L.; Bai, H.; Dang, W. Light-Weight Semantic Segmentation Network for UAV Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8287–8296. [Google Scholar] [CrossRef]

- Vergouw, B.; Nagel, H.; Bondt, G.; Custers, B. Drone Technology: Types, Payloads, Applications, Frequency Spectrum Issues and Future Developments. In The Future of Drone Use: Opportunities and Threats from Ethical and Legal Perspectives; Custers, B., Ed.; T.M.C. Asser Press: The Hague, The Netherlands, 2016; pp. 21–45. [Google Scholar]

- Sun, G.; Hu, T.; Chen, S.; Sun, J.; Zhang, J.; Ye, R.; Zhang, S.; Liu, J. Using UAV-based multispectral remote sensing imagery combined with DRIS method to diagnose leaf nitrogen nutrition status in a fertigated apple orchard. Precis. Agric. 2023, 24, 2522–2548. [Google Scholar] [CrossRef]

- Lazarević, B.; Carović-Stanko, K.; Safner, T.; Poljak, M. Study of High-Temperature-Induced Morphological and Physiological Changes in Potato Using Nondestructive Plant Phenotyping. Plants 2022, 11, 3534. [Google Scholar] [CrossRef]

- Ahmad, U.; Alvino, A.; Marino, S. A Review of Crop Water Stress Assessment Using Remote Sensing. Remote Sens. 2021, 13, 4155. [Google Scholar] [CrossRef]

- Hosseinpour-Zarnaq, M.; Omid, M.; Sarmadian, F.; Ghasemi-Mobtaker, H.; Alimardani, R.; Bohlol, P. Exploring the capabilities of hyperspectral remote sensing for soil texture evaluation. Ecol. Inform. 2025, 90, 103336. [Google Scholar] [CrossRef]

- Raj, R.; Walker, J.P.; Pingale, R.; Banoth, B.N.; Jagarlapudi, A. Leaf nitrogen content estimation using top-of-canopy airborne hyperspectral data. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102584. [Google Scholar] [CrossRef]

- Surase, R.R.; Kale, K.V.; Varpe, A.B.; Vibhute, A.D.; Gite, H.R.; Solankar, M.M.; Gaikwad, S.; Nalawade, D.B. Estimation of Water Contents from Vegetation Using Hyperspectral Indices; Springer: Singapore, 2019; pp. 247–255. [Google Scholar]

- Kumar, A.; Tripathi, R.P. Thermal Infrared Radiation for Assessing Crop Water Stress in Wheat. J. Agron. Crop Sci. 1990, 164, 268–272. [Google Scholar] [CrossRef]

- Mangus, D.L.; Sharda, A.; Zhang, N. Development and evaluation of thermal infrared imaging system for high spatial and temporal resolution crop water stress monitoring of corn within a greenhouse. Comput. Electron. Agric. 2016, 121, 149–159. [Google Scholar] [CrossRef]

- Eweys, O.A.; Elwan, A.; Borham, T.I. Retrieving topsoil moisture using RADARSAT-2 data, a novel approach applied at the east of the Netherlands. J. Hydrol. 2017, 555, 670–682. [Google Scholar] [CrossRef]

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039. [Google Scholar] [CrossRef]

- Tuominen, S.; Pekkarinen, A. Local radiometric correction of digital aerial photographs for multi source forest inventory. Remote Sens. Environ. 2004, 89, 72–82. [Google Scholar] [CrossRef]

- Weinreb, M.P.; Fleming, H.E. Empirical radiance corrections: A technique to improve satellite soundings of atmospheric temperature. Geophys. Res. Lett. 1974, 1, 298–301. [Google Scholar] [CrossRef]

- Hall, F.G.; Strebel, D.E.; Nickeson, J.E.; Goetz, S.J. Radiometric rectification: Toward a common radiometric response among multidate, multisensor images. Remote Sens. Environ. 1991, 35, 11–27. [Google Scholar] [CrossRef]

- Honkavaara, E.; Khoramshahi, E. Radiometric Correction of Close-Range Spectral Image Blocks Captured Using an Unmanned Aerial Vehicle with a Radiometric Block Adjustment. Remote Sens. 2018, 10, 256. [Google Scholar] [CrossRef]

- Luo, S.; Jiang, X.; Yang, K.; Li, Y.; Fang, S. Multispectral remote sensing for accurate acquisition of rice phenotypes: Impacts of radiometric calibration and unmanned aerial vehicle flying altitudes. Front. Plant Sci. 2022, 13, 958106. [Google Scholar] [CrossRef]

- Wang, Y.; Kootstra, G.; Yang, Z.; Khan, H.A. UAV multispectral remote sensing for agriculture: A comparative study of radiometric correction methods under varying illumination conditions. Biosyst. Eng. 2024, 248, 240–254. [Google Scholar] [CrossRef]

- Rosas, J.T.F.; de Carvalho Pinto, F.D.A.; Queiroz, D.M.D.; de Melo Villar, F.M.; Martins, R.N.; Silva, S.D.A. Low-cost system for radiometric calibration of UAV-based multispectral imagery. J. Spat. Sci. 2022, 67, 395–409. [Google Scholar] [CrossRef]

- Aragon, B.; Johansen, K.; Parkes, S.; Malbeteau, Y.; Al-Mashharawi, S.; Al-Amoudi, T.; Andrade, C.F.; Turner, D.; Lucieer, A.; McCabe, M.F. A Calibration Procedure for Field and UAV-Based Uncooled Thermal Infrared Instruments. Sensors 2020, 20, 3316. [Google Scholar] [CrossRef]

- Elfarkh, J.; Johansen, K.; Angulo, V.; Camargo, O.L.; McCabe, M.F. Quantifying Within-Flight Variation in Land Surface Temperature from a UAV-Based Thermal Infrared Camera. Drones 2023, 7, 617. [Google Scholar] [CrossRef]

- Wang, Z.; Zhou, J.; Ma, J.; Wang, Y.; Liu, S.; Ding, L.; Tang, W.; Pakezhamu, N.; Meng, L. Removing temperature drift and temporal variation in thermal infrared images of a UAV uncooled thermal infrared imager. ISPRS J. Photogramm. 2023, 203, 392–411. [Google Scholar] [CrossRef]

- Jakob, S.; Zimmermann, R.; Gloaguen, R. The Need for Accurate Geometric and Radiometric Corrections of Drone-Borne Hyperspectral Data for Mineral Exploration: MEPHySTo—A Toolbox for Pre-Processing Drone-Borne Hyperspectral Data. Remote Sens. 2017, 9, 88. [Google Scholar] [CrossRef]

- Song, L.; Li, H.; Chen, T.; Chen, J.; Liu, S.; Fan, J.; Wang, Q. An Integrated Solution of UAV Push-Broom Hyperspectral System Based on Geometric Correction with MSI and Radiation Correction Considering Outdoor Illumination Variation. Remote Sens. 2022, 14, 6267. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, X.; Gao, C.; Qiu, X.; Tian, Y.; Zhu, Y.; Cao, W. Rapid Mosaicking of Unmanned Aerial Vehicle (UAV) Images for Crop Growth Monitoring Using the SIFT Algorithm. Remote Sens. 2019, 11, 1226. [Google Scholar] [CrossRef]

- Ren, X.; Sun, M.; Zhang, X.; Liu, L. A Simplified Method for UAV Multispectral Images Mosaicking. Remote Sens. 2017, 9, 962. [Google Scholar] [CrossRef]

- Yang, Z.; Pu, F.; Chen, H.; He, Y.; Xu, X. IBEWMS: Individual Band Spectral Feature Enhancement Based Waterfront Environment UAV Multispectral Image Stitching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 221–240. [Google Scholar] [CrossRef]

- Xu, J.; Zhao, D.; Ren, Z.; Fu, F.; Sun, Y.; Fang, M. A Parallax Image Mosaic Method for Low Altitude Aerial Photography with Artifact and Distortion Suppression. J. Imaging 2022, 9, 5. [Google Scholar] [CrossRef]

- Mo, Y.; Kang, X.; Duan, P.; Li, S. A Robust UAV Hyperspectral Image Stitching Method Based on Deep Feature Matching. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14. [Google Scholar] [CrossRef]

- Turner, D.; Lucieer, A.; Malenovský, Z.; King, D.; Robinson, S. Spatial Co-Registration of Ultra-High Resolution Visible, Multispectral and Thermal Images Acquired with a Micro-UAV over Antarctic Moss Beds. Remote Sens. 2014, 6, 4003–4024. [Google Scholar] [CrossRef]

- Kapil, R.; Castilla, G.; Marvasti-Zadeh, S.M.; Goodsman, D.; Erbilgin, N.; Ray, N. Orthomosaicking Thermal Drone Images of Forests via Simultaneously Acquired RGB Images. Remote Sens. 2023, 15, 2653. [Google Scholar] [CrossRef]

- Bosilj, P.; Duckett, T.; Cielniak, G. Connected attribute morphology for unified vegetation segmentation and classification in precision agriculture. Comput. Ind. 2018, 98, 226–240. [Google Scholar] [CrossRef] [PubMed]

- Wu, J.; Liu, C.; Ouyang, A.; Li, B.; Chen, N.; Wang, J.; Liu, Y.d. Early Detection of Slight Bruises in Yellow Peaches (Amygdalus persica) Using Multispectral Structured-Illumination Reflectance Imaging and an Improved Ostu Method. Foods 2024, 13, 3843. [Google Scholar] [CrossRef]

- Singh, M.P.; Gayathri, V.; Chaudhuri, D. A Simple Data Preprocessing and Postprocessing Techniques for SVM Classifier of Remote Sensing Multispectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7248–7262. [Google Scholar] [CrossRef]

- Wang, Y.; Lv, J.; Xu, L.; Gu, Y.; Zou, L.; Ma, Z. A segmentation method for waxberry image under orchard environment. Sci. Hortic. 2020, 266, 109309. [Google Scholar] [CrossRef]

- Ren, C.N.; Liu, B.; Liang, Z.; Lin, Z.L.; Wang, W.; Wei, X.Z.; Li, X.J.; Zou, X.J. An Innovative Method of Monitoring Cotton Aphid Infestation Based on Data Fusion and Multi-Source Remote Sensing Using Unmanned Aerial Vehicles. Drones 2025, 9, 229. [Google Scholar] [CrossRef]

- Wen, H.; Hu, X.K.; Zhong, P. Detecting rice straw burning based on infrared and visible information fusion with UAV remote sensing. Comput. Electron. Agric. 2024, 222, 109078. [Google Scholar] [CrossRef]

- Wu, B.; Fan, L.Q.; Xu, B.W.; Yang, J.J.; Zhao, R.M.; Wang, Q.; Ai, X.T.; Zhao, H.X.; Yang, Z.R. UAV-based LiDAR and multispectral sensors fusion for cotton yield estimation: Plant height and leaf chlorophyll content as a bridge linking remote sensing data to yield. Ind. Crops Prod. 2025, 230, 121110. [Google Scholar] [CrossRef]

- Xian, G.L.; Liu, J.A.; Lin, Y.X.; Li, S.; Bian, C.S. Multi-Feature Fusion for Estimating Above-Ground Biomass of Potato by UAV Remote Sensing. Plants 2024, 13, 3356. [Google Scholar] [CrossRef] [PubMed]

- Veloso, A.; Mérmoz, S.; Bouvet, A.; Toan, T.; Planells, M.; Dejoux, J.; Ceschia, E. Understanding the temporal behavior of crops using Sentinel-1 and Sentinel-2-like data for agricultural applications. Remote Sens. Environ. 2017, 199, 415–426. [Google Scholar] [CrossRef]

- Wu, Z.J.; Cui, N.B.; Zhang, W.J.; Yang, Y.A.; Gong, D.Z.; Liu, Q.S.; Zhao, L.; Xing, L.W.; He, Q.Y.; Zhu, S.D.; et al. Estimation of soil moisture in drip-irrigated citrus orchards using multi-modal UAV remote sensing. Agric. Water Manag. 2024, 302, 108972. [Google Scholar] [CrossRef]

- Song, Z.S.; Zhang, Z.T.; Yang, S.Q.; Ding, D.Y.; Ning, J.F. Identifying sunflower lodging based on image fusion and deep semantic segmentation with UAV remote sensing imaging. Comput. Electron. Agric. 2020, 179, 105812. [Google Scholar] [CrossRef]

- Pinder, J.E.; McLeod, K.W. Indications of Relative Drought Stress in Longleaf Pine from Thematic Mapper Data. Photogramm. Eng. Remote Sens. 1999, 65, 495–501. [Google Scholar]

- Roujean, J.-L.; Breon, F.-M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384. [Google Scholar] [CrossRef]

- Huete, A. A comparison of vegetation indices over a global set of TM images for EOS-MODIS. Remote Sens. Environ. 1997, 59, 440–451. [Google Scholar] [CrossRef]

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Wilson, E.H.; Sader, S.A. Detection of forest harvest type using multiple dates of Landsat TM imagery. Remote Sens. Environ. 2002, 80, 385–396. [Google Scholar] [CrossRef]

- Pettorelli, N.; Vik, J.; Mysterud, A.; Gaillard, J.; Tucker, C.; Stenseth, N. Using the satellite-derived NDVI to assess ecological responses to environmental change. Trends Ecol. Evol. 2005, 20, 503–510. [Google Scholar] [CrossRef]

- Zeng, Y.; Hao, D.; Badgley, G.; Damm, A.; Rascher, U.; Ryu, Y.; Johnson, J.; Krieger, V.; Wu, S.; Qiu, H.; et al. Estimating near-infrared reflectance of vegetation from hyperspectral data. Remote Sens. Environ. 2021, 267, 112723. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Birth, G.S.; McVey, G.R. Measuring the Color of Growing Turf with a Reflectance Spectrophotometer. Agron. J. 1968, 60, 640–643. [Google Scholar] [CrossRef]

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Bannari, A.; Asalhi, H.; Teillet, P.M. Transformed difference vegetation index (TDVI) for vegetation cover mapping. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; Volume 3055, pp. 3053–3055. [Google Scholar]

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with Erts. In NASA Special Publication; Freden, S.C., Mercanti, E.P., Becker, M.A., Eds.; National Aeronautics and Space Administration: Washington, DC, USA, 1974; Volume 351, p. 309. [Google Scholar]

- Nguyen, C.T.; Chidthaisong, A.; Diem, P.K.; Huo, L. A Modified Bare Soil Index to Identify Bare Land Features during Agricultural Fallow-Period in Southeast Asia Using Landsat 8. Land 2021, 10, 231. [Google Scholar] [CrossRef]

- Decsi, K.; Kutasy, B.; Hegedűs, G.; Alföldi, Z.P.; Kálmán, N.; Nagy, Á.; Virág, E. Natural immunity stimulation using ELICE16INDURES® plant conditioner in field culture of soybean. Heliyon 2023, 9, e12907. [Google Scholar] [CrossRef]

- Gao, B.-c. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266. [Google Scholar] [CrossRef]

- Wang, L.; Qu, J.J. NMDI: A normalized multi-band drought index for monitoring soil and vegetation moisture with satellite remote sensing. Geophys. Res. Lett. 2007, 34, L20405. [Google Scholar] [CrossRef]

- Gao, W.; Gao, Z.; Niu, Z.; Roussos, P.; Sygrimis, N.; Zhang, D.; Li, M. Lightweighting of kiwifruit root soil water content inversion model based on novel vegetation indices. Smart Agric. Technol. 2025, 11, 100995. [Google Scholar] [CrossRef]

- Tanner, C.B. Plant Temperatures 1. Agron. J. 1963, 55, 210–211. [Google Scholar] [CrossRef]

- Idso, S.; Jackson, R.D.; Pinter, P.; Reginato, R.; Hatfield, J. Normalizing the stress-degree-day parameter for environmental variability☆. Agric. Meteorol. 1981, 24, 45–55. [Google Scholar] [CrossRef]

- Jackson, R.D.; Idso, S.B.; Reginato, R.J.; Pinter, P.J. Canopy temperature as a crop water stress indicator. Water Resour. Res. 1981, 17, 1133–1138. [Google Scholar] [CrossRef]

- Xu, J.; Zhao, Y.; Liu, Z. Research on Ecological Environment Change of Middle and Western Inner-Mongolia Region Using RS and GIS. Natl. Remote Sens. Bull. 2002, 6, 142–149. [Google Scholar] [CrossRef]

- Sandholt, I.; Rasmussen, K.; Andersen, J.A. A simple interpretation of the surface temperature/vegetation index space for assessment of surface moisture status. Remote Sens. Environ. 2002, 79, 213–224. [Google Scholar] [CrossRef]

- Zhang, R.; Bao, X.; Hong, R.; He, X.; Yin, G.; Chen, J.; Ouyang, X.; Wang, Y.; Liu, G. Soil moisture retrieval over croplands using novel dual-polarization SAR vegetation index. Agric. Water Manag. 2024, 306, 109159. [Google Scholar] [CrossRef]

- Rouse, J.; Haas, R.H.; Deering, D.; Schell, J.A.; Harlan, J. Monitoring the Vernal Advancement and Retrogradation (Green Wave Effect) of Natural Vegetation. [Great Plains Corridor]; National Aeronautics and Space Administration: Washington, DC, USA, 1973.

- Tian, Y.C.; Yao, X.; Yang, J.; Cao, W.X.; Hannaway, D.B.; Zhu, Y. Assessing newly developed and published vegetation indices for estimating rice leaf nitrogen concentration with ground- and space-based hyperspectral reflectance. Field Crops Res. 2011, 120, 299–310. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282. [Google Scholar] [CrossRef]

- Li, F.; Miao, Y.X.; Feng, G.H.; Yuan, F.; Yue, S.C.; Gao, X.W.; Liu, Y.Q.; Liu, B.; Ustine, S.L.; Chen, X.P. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crops Res. 2014, 157, 111–123. [Google Scholar] [CrossRef]

- Zhao, B.; Duan, A.W.; Ata-Ul-Karim, S.T.; Liu, Z.D.; Chen, Z.F.; Gong, Z.H.; Zhang, J.Y.; Xiao, J.F.; Liu, Z.G.; Qin, A.Z.; et al. Exploring new spectral bands and vegetation indices for estimating nitrogen nutrition index of summer maize. Eur. J. Agron. 2018, 93, 113–125.

- Zhang, Y.; Wang, Z.C.; Spohrer, K.; Reineke, A.J.; He, X.K.; Müller, J. Vegetation indices for the detection and classification of leaf nitrogen deficiency in maize. Eur. J. Agron. 2025, 168, 127665.

- Wang, J.J.; Shi, T.Z.; Liu, H.Z.; Wu, G.F. Successive projections algorithm-based three-band vegetation index for foliar phosphorus estimation. Ecol. Indic. 2016, 67, 12–20.

- Lu, J.S.; Yang, T.C.; Su, X.; Qi, H.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Monitoring leaf potassium content using hyperspectral vegetation indices in rice leaves. Precis. Agric. 2020, 21, 324–348.

- Yang, T.C.; Lu, J.S.; Liao, F.; Qi, H.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Retrieving potassium levels in wheat blades using normalised spectra. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102412.

- Chandel, A.; Khot, L.; Yu, L.-X. Alfalfa (Medicago sativa L.) crop vigor and yield characterization using high-resolution aerial multispectral and thermal infrared imaging technique. Comput. Electron. Agric. 2021, 182, 105999.

- Shukla, G.; Garg, R.; Srivastava, H.; Garg, P. Performance analysis of different predictive models for crop classification across an aridic to ustic area of Indian states. Geocarto Int. 2018, 33, 240–259.

- Huang, Y. Improved SVM-Based Soil-Moisture-Content Prediction Model for Tea Plantation. Plants 2023, 12, 2309.

- Zhao, L.; Qing, S.; Li, H.; Qiu, Z.; Niu, X.; Shi, Y.; Chen, S.; Xing, X. Estimating maize evapotranspiration based on hybrid back-propagation neural network models and meteorological, soil, and crop data. Int. J. Biometeorol. 2024, 68, 511–525.

- Han, X.; Zhong, Y.; Zhang, L. An Efficient and Robust Integrated Geospatial Object Detection Framework for High Spatial Resolution Remote Sensing Imagery. Remote Sens. 2017, 9, 666.

- Zhang, C.; Liu, J.; Shang, J.; Cai, H. Capability of crop water content for revealing variability of winter wheat grain yield and soil moisture under limited irrigation. Sci. Total Environ. 2018, 631–632, 677–687.

- Chen, H.; Chen, H.; Zhang, S.; Chen, S.; Cen, F.; Zhao, Q.; Huang, X.; He, T.; Gao, Z. Comparison of CWSI and Ts-Ta-VIs in moisture monitoring of dryland crops (sorghum and maize) based on UAV remote sensing. J. Integr. Agric. 2024, 23, 2458–2475.

- Savchik, P.; Nocco, M.; Kisekka, I. Mapping almond stem water potential using machine learning and multispectral imagery. Irrig. Sci. 2024, 43, 105–120.

- Divya Dharshini, S.; Anurag; Kumar, A.; Satpal; Kumar, M.; Priyanka, P.; Pugazenthi, K. Evaluation of machine-learning algorithms in estimation of relative water content of sorghum under different irrigated environments. J. Arid. Environ. 2025, 229, 105390.

- Babaeian, E.; Paheding, S.; Siddique, N.; Devabhaktuni, V.K.; Tuller, M. Estimation of root zone soil moisture from ground and remotely sensed soil information with multisensor data fusion and automated machine learning. Remote Sens. Environ. 2021, 260, 112434.

- Yang, N.; Zhang, Z.; Yang, X.; Dong, N.; Xu, Q.; Chen, J.; Sun, S.; Cui, N.; Ning, J. Evaluation of crop water status using UAV-based images data with a model updating strategy. Agric. Water Manag. 2025, 312, 109445.

- Yang, N.; Zhang, Z.; Zhang, J.; Yang, X.; Liu, H.; Chen, J.; Ning, J.; Sun, S.; Shi, L. Accurate estimation of winter-wheat leaf water content using continuous wavelet transform-based hyperspectral combined with thermal infrared on a UAV platform. Eur. J. Agron. 2025, 168, 127624.

- Jiang, X.T.; Gao, L.T.; Xu, X.; Wu, W.B.; Yang, G.J.; Meng, Y.; Feng, H.K.; Li, Y.F.; Xue, H.Y.; Chen, T.E. Combining UAV Remote Sensing with Ensemble Learning to Monitor Leaf Nitrogen Content in Custard Apple (Annona squamosa L.). Agronomy 2025, 15, 38.

- Zha, H.N.; Miao, Y.X.; Wang, T.T.; Li, Y.; Zhang, J.; Sun, W.C.; Feng, Z.Q.; Kusnierek, K. Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning. Remote Sens. 2020, 12, 215.

- Chen, R.Q.; Liu, W.P.; Yang, H.; Jin, X.L.; Yang, G.J.; Zhou, Y.; Zhang, C.J.; Han, S.Y.; Meng, Y.; Zhai, C.Y.; et al. A novel framework to assess apple leaf nitrogen content: Fusion of hyperspectral reflectance and phenology information through deep learning. Comput. Electron. Agric. 2024, 219, 108816.

- Xiao, Q.; Wu, N.; Tang, W.; Zhang, C.; Feng, L.; Zhou, L.; Shen, J.; Zhang, Z.; Gao, P.; He, Y. Visible and near-infrared spectroscopy and deep learning application for the qualitative and quantitative investigation of nitrogen status in cotton leaves. Front. Plant Sci. 2022, 13, 1080745.

- Zhang, X.; Han, L.X.; Sobeih, T.; Lappin, L.; Lee, M.A.; Howard, A.; Kisdi, A. The Self-Supervised Spectral-Spatial Vision Transformer Network for Accurate Prediction of Wheat Nitrogen Status from UAV Imagery. Remote Sens. 2022, 14, 1400.

- Du, R.Q.; Chen, J.Y.; Xiang, Y.Z.; Zhang, Z.T.; Yang, N.; Yang, X.Z.; Tang, Z.J.; Wang, H.; Wang, X.; Shi, H.Z.; et al. Incremental learning for crop growth parameters estimation and nitrogen diagnosis from hyperspectral data. Comput. Electron. Agric. 2023, 215, 108356.

- Dehghan-Shoar, M.H.; Kereszturi, G.; Pullanagari, R.R.; Orsi, A.A.; Yule, I.J.; Hanly, J. A physically informed multi-scale deep neural network for estimating foliar nitrogen concentration in vegetation. Int. J. Appl. Earth Obs. Geoinf. 2024, 130, 103917.

- Verhoef, W. Light scattering by leaf layers with application to canopy reflectance modeling: The SAIL model. Remote Sens. Environ. 1984, 16, 125–141.

- Jacquemoud, S.; Baret, F. PROSPECT: A model of leaf optical properties spectra. Remote Sens. Environ. 1990, 34, 75–91.

- Jacquemoud, S.; Verhoef, W.; Baret, F.; Bacour, C.; Zarco-Tejada, P.J.; Asner, G.P.; François, C.; Ustin, S.L. PROSPECT+SAIL models: A review of use for vegetation characterization. Remote Sens. Environ. 2009, 113, S56–S66.

- Li, X.; Strahler, A.H. Geometric-Optical Modeling of a Conifer Forest Canopy. IEEE Trans. Geosci. Remote Sens. 1985, GE-23, 705–721.

- Yang, N.; Zhang, Z.; Yang, X.; Zhang, J.; Zhang, B.; Xie, P.; Wang, Y.; Chen, J.; Shi, L. UAV-based stomatal conductance estimation under water stress using the PROSAIL model coupled with meteorological factors. Int. J. Appl. Earth Obs. Geoinf. 2025, 137, 104425.

- Pasqualotto, N.; Delegido, J.; Van Wittenberghe, S.; Verrelst, J.; Rivera, J.P.; Moreno, J. Retrieval of canopy water content of different crop types with two new hyperspectral indices: Water Absorption Area Index and Depth Water Index. Int. J. Appl. Earth Obs. Geoinf. 2018, 67, 69–78.

- Tripathi, R.; Sahoo, R.N.; Sehgal, V.K.; Tomar, R.K.; Chakraborty, D.; Nagarajan, S. Inversion of PROSAIL Model for Retrieval of Plant Biophysical Parameters. J. Indian Soc. Remote Sens. 2011, 40, 19–28.

- Li, Z.; Jin, X.; Yang, G.; Drummond, J.; Yang, H.; Clark, B.; Li, Z.; Zhao, C. Remote Sensing of Leaf and Canopy Nitrogen Status in Winter Wheat (Triticum aestivum L.) Based on N-PROSAIL Model. Remote Sens. 2018, 10, 1463.

- Li, D.; Wu, Y.; Berger, K.; Kuang, Q.; Feng, W.; Chen, J.M.; Wang, W.; Zheng, H.; Yao, X.; Zhu, Y.; et al. Estimating canopy nitrogen content by coupling PROSAIL-PRO with a nitrogen allocation model. Int. J. Appl. Earth Obs. Geoinf. 2024, 135, 104280.

- Fung, A.K.; Li, Z.; Chen, K.S. Backscattering from a randomly rough dielectric surface. IEEE Trans. Geosci. Remote Sens. 1992, 30, 356–369.

- Zhang, L.; Li, H.; Xue, Z. Calibrated Integral Equation Model for Bare Soil Moisture Retrieval of Synthetic Aperture Radar: A Case Study in Linze County. Appl. Sci. 2020, 10, 7921.

- Chen, K.S.; Wu, T.-D.; Tsang, L.; Li, Q.; Shi, J.; Fung, A.K. Emission of rough surfaces calculated by the integral equation method with comparison to three-dimensional moment method simulations. IEEE Trans. Geosci. Remote Sens. 2003, 41, 90–101.

- Attema, E.P.W.; Ulaby, F.T. Vegetation modeled as a water cloud. Radio Sci. 1978, 13, 357–364.

- Ulaby, F.T.; McDonald, K.; Sarabandi, K.; Dobson, M.C. Michigan Microwave Canopy Scattering Models (MIMICS). In Proceedings of the International Geoscience and Remote Sensing Symposium, ‘Remote Sensing: Moving Toward the 21st Century’, Edinburgh, UK, 12–16 September 1988; p. 1009.

- Romshoo, S.A.; Koike, M.; Onaka, S.; Oki, T.; Musiake, K. Influence of surface and vegetation characteristics on C-band radar measurements for soil moisture content. J. Indian Soc. Remote Sens. 2002, 30, 229–244.

- Zhang, X.; Chen, B.; Zhao, H.; Li, T.; Chen, Q. Physical-based soil moisture retrieval method over bare agricultural areas by means of multi-sensor SAR data. Int. J. Remote Sens. 2018, 39, 3870–3890.

- Yahia, O.; Guida, R.; Iervolino, P. Novel Weight-Based Approach for Soil Moisture Content Estimation via Synthetic Aperture Radar, Multispectral and Thermal Infrared Data Fusion. Sensors 2021, 21, 3457.

- Bastiaanssen, W.G.M.; Menenti, M.; Feddes, R.A.; Holtslag, A.A.M. A remote sensing surface energy balance algorithm for land (SEBAL). 1. Formulation. J. Hydrol. 1998, 212–213, 198–212.

- Allen, R.G.; Tasumi, M.; Trezza, R. Satellite-Based Energy Balance for Mapping Evapotranspiration with Internalized Calibration (METRIC)—Model. J. Irrig. Drain. Eng. 2007, 133, 380–394.

- Colaizzi, P. Advances in a Two-Source Energy Balance Model: Partitioning of Evaporation and Transpiration for Cotton. Trans. ASABE 2016, 59, 181–197.

- Hoffmann, H.; Nieto, H.; Jensen, R.; Guzinski, R.; Zarco-Tejada, P.; Friborg, T. Estimating evaporation with thermal UAV data and two-source energy balance models. Hydrol. Earth Syst. Sci. 2016, 20, 697–713.

- Khormizi, H.Z.; Malamiri, H.R.G.; Ferreira, C.S.S. Estimation of Evaporation and Drought Stress of Pistachio Plant Using UAV Multispectral Images and a Surface Energy Balance Approach. Horticulturae 2024, 10, 515.

- Na, L.; Qingshan, L.; Zimeng, L.; Yang, L.; Zongzheng, Y.; Liwei, S. Non-destructive method using UAVs for high-throughput water productivity assessment for winter wheat cultivars. Agric. Water Manag. 2025, 314, 109526.

- Impollonia, G.; Croci, M.; Blandinières, H.; Marcone, A.; Amaducci, S. Comparison of PROSAIL Model Inversion Methods for Estimating Leaf Chlorophyll Content and LAI Using UAV Imagery for Hemp Phenotyping. Remote Sens. 2022, 14, 5801.

- Ling, J.; Zeng, Z.; Shi, Q.; Li, J.; Zhang, B. Estimating Winter Wheat LAI Using Hyperspectral UAV Data and an Iterative Hybrid Method. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8782–8794.

- Bhadra, S.; Sagan, V.; Sarkar, S.; Braud, M.; Mockler, T.C.; Eveland, A.L. PROSAIL-Net: A transfer learning-based dual stream neural network to estimate leaf chlorophyll and leaf angle of crops from UAV hyperspectral images. ISPRS J. Photogramm. 2024, 210, 1–24.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Gitelson, A.A. Wide Dynamic Range Vegetation Index for Remote Quantification of Biophysical Characteristics of Vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Mutanga, O.; Masenyama, A.; Sibanda, M. Spectral saturation in the remote sensing of high-density vegetation traits: A systematic review of progress, challenges, and prospects. ISPRS J. Photogramm. 2023, 198, 297–309.

- Liu, Y.; Feng, H.; Fan, Y.; Yue, J.; Chen, R.; Ma, Y.; Bian, M.; Yang, G. Improving potato above ground biomass estimation combining hyperspectral data and harmonic decomposition techniques. Comput. Electron. Agric. 2024, 218, 108699.

- Pineda, M.; Barón, M.; Pérez-Bueno, M.-L. Thermal Imaging for Plant Stress Detection and Phenotyping. Remote Sens. 2021, 13, 68.

- Anconelli, S.; Mannini, P.; Battilani, A. CWSI and Baseline Studies to Increase Quality of Processing Tomatoes; International Society for Horticultural Science: Leuven, Belgium, 1994; pp. 303–306.

- Yu, F.H.; Zhao, D.; Guo, Z.H.; Jin, Z.Y.; Guo, S.; Chen, C.L.; Xu, T.Y. Characteristic Analysis and Decomposition of Mixed Pixels From UAV Hyperspectral Images in Rice Tillering Stage. Spectrosc. Spect. Anal. 2022, 42, 947–953.

- Bayat, B.; Van der Tol, C.; Verhoef, W. Remote Sensing of Grass Response to Drought Stress Using Spectroscopic Techniques and Canopy Reflectance Model Inversion. Remote Sens. 2016, 8, 557.

- Wang, J.J.; Ding, J.L.; Ge, X.Y.; Zhang, Z.; Han, L.J. Application of Fractional Differential Technique in Estimating Soil Water Content from Airborne Hyperspectral Data. Spectrosc. Spect. Anal. 2022, 42, 3559–3567.

- Li, J.; Wu, W.; Zhao, C.; Bai, X.; Dong, L.; Tan, Y.; Yusup, M.; Akelebai, G.; Dong, H.; Zhi, J. Effects of solar elevation angle on the visible light vegetation index of a cotton field when extracted from the UAV. Sci. Rep. 2025, 15, 18497.

- Yuan, X.; Lv, Z.; Laakso, K.; Han, J.; Liu, X.; Meng, Q.; Xue, S. Observation Angle Effect of Near-Ground Thermal Infrared Remote Sensing on the Temperature Results of Urban Land Surface. Land 2024, 13, 2170.

- Shafiee, S.; Mroz, T.; Burud, I.; Lillemo, M. Evaluation of UAV multispectral cameras for yield and biomass prediction in wheat under different sun elevation angles and phenological stages. Comput. Electron. Agric. 2023, 210, 107874.

- Liu, S.; Lu, L.; Mao, D.; Jia, L. Evaluating parameterizations of aerodynamic resistance to heat transfer using field measurements. Hydrol. Earth Syst. Sci. 2007, 11, 769–783.

| Rotor Type | Endurance Time | Maximum Altitude | Equipment Cost | Maintenance Complexity | Typical Platform |
|---|---|---|---|---|---|
| Multirotor | <1 h | Within a few hundred meters | Relatively low | Relatively simple | DJI M300 |
| Fixed-wing | Several hours | Several kilometers | Moderate | Moderate | HC-141 |
| Hybrid-wing | Several hours | Several kilometers | Relatively high | Relatively complex | CW-100 |

| Sensor Type | Spectral Range | Typical Payload | Applications |
|---|---|---|---|
| Optical remote sensing | 0.4–1.1 μm (Visible/NIR) | MicaSense RedEdge-P | Vegetation index-based assessment of nitrogen status; estimation of LAI |
| Hyperspectral sensor | 0.4–2.5 μm (Hundreds of narrow bands) | Cubert ULTRIS 5 | Inversion of soil organic matter; early detection of crop pests and diseases; nutrient deficiency diagnosis |
| Thermal infrared sensor | 8–14 μm | FLIR Vue Pro | Monitoring canopy temperature for water stress; estimation of surface water content via thermal inertia |
| Microwave remote sensing | 0.1 cm–1 m (C/X/L bands) | FSAR miniSAR | Soil moisture inversion; crop biomass estimation; flood and waterlogging monitoring |

| Vegetation Index | Formula | Reference |
|---|---|---|
| Drought Stress Index (DSI) | | [56] |
| Difference Vegetation Index (DVI) | | [57] |
| Enhanced Vegetation Index (EVI) | | [58] |
| Excess Green Index (ExG) | | [59] |
| Green Normalized Difference Vegetation Index (GNDVI) | | [60] |
| Normalized Difference Moisture Index (NDMI) | | [61] |
| Normalized Difference Vegetation Index (NDVI) | | [62] |
| Hyperspectral Near-Infrared Reflectance of Vegetation (NIRvH2) | | [63] |
| Optimized Soil-Adjusted Vegetation Index (OSAVI) | | [64] |
| Ratio Vegetation Index (RVI) | | [65] |
| Soil-Adjusted Vegetation Index (SAVI) | | [66] |
| Transformed Difference Vegetation Index (TDVI) | | [67] |
| Transformed Vegetation Index (TVI) | | [68] |
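
The formula column of the table above did not survive extraction. As a rough illustration only, the sketch below computes several of the listed indices from band reflectances, using the commonly cited textbook forms. These forms are an assumption: they may differ in band choice or coefficients from the definitions in the cited references. The function name `vegetation_indices`, the parameter `soil_l`, and the sample reflectance values are hypothetical.

```python
from typing import Dict


def vegetation_indices(blue: float, green: float, red: float,
                       nir: float, swir: float,
                       soil_l: float = 0.5) -> Dict[str, float]:
    """Compute common broadband vegetation indices from reflectance (0-1).

    The formulas are widely used textbook forms (an assumption here),
    not necessarily the exact definitions in the cited references.
    """
    # Chromatic coordinates (band / sum of visible bands) used by ExG
    total = red + green + blue
    r, g, b = red / total, green / total, blue / total

    return {
        "DVI": nir - red,
        "RVI": nir / red,
        "NDVI": (nir - red) / (nir + red),
        "GNDVI": (nir - green) / (nir + green),
        "NDMI": (nir - swir) / (nir + swir),
        # soil_l is the soil adjustment factor; 0.5 is the usual default
        "SAVI": (1 + soil_l) * (nir - red) / (nir + red + soil_l),
        "OSAVI": (nir - red) / (nir + red + 0.16),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "ExG": 2 * g - r - b,
    }


if __name__ == "__main__":
    # Hypothetical reflectances for a healthy green canopy pixel
    sample = vegetation_indices(blue=0.04, green=0.08, red=0.05,
                                nir=0.45, swir=0.20)
    for name, value in sample.items():
        print(f"{name}: {value:.3f}")
```

In practice the same expressions would be applied per pixel to calibrated reflectance rasters, with a guard for zero denominators over shadow or water pixels.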

| Modeling Approach | Accuracy | Scalability | Data Requirements | Robustness | Advantages |
|---|---|---|---|---|---|
| VI-based methods | Moderate; sensitive to canopy coverage | High; easy to implement | Low (few spectral bands) | Limited under heterogeneous or stressed conditions | Simple, interpretable, cost-effective |
| Data-driven models | High with sufficient training data | Moderate; limited cross-region transfer | High (large, labeled datasets required) | Variable; prone to overfitting | Nonlinear modeling capacity, flexible |
| Physically based models | Moderate-high; depends on parameterization | High; applicable across crops and regions | Medium-high (field + meteorological inputs) | Strong generalization across conditions | Mechanistic, interpretable |
| Hybrid models | High; balance of accuracy and interpretability | Promising, but not fully validated | Medium-high (multi-source data required) | Strong; potential for transferability | Combine physical priors with AI adaptability |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, X.; Chen, J.; Lu, X.; Liu, H.; Liu, Y.; Bai, X.; Qian, L.; Zhang, Z. Advances in UAV Remote Sensing for Monitoring Crop Water and Nutrient Status: Modeling Methods, Influencing Factors, and Challenges. Plants 2025, 14, 2544. https://doi.org/10.3390/plants14162544