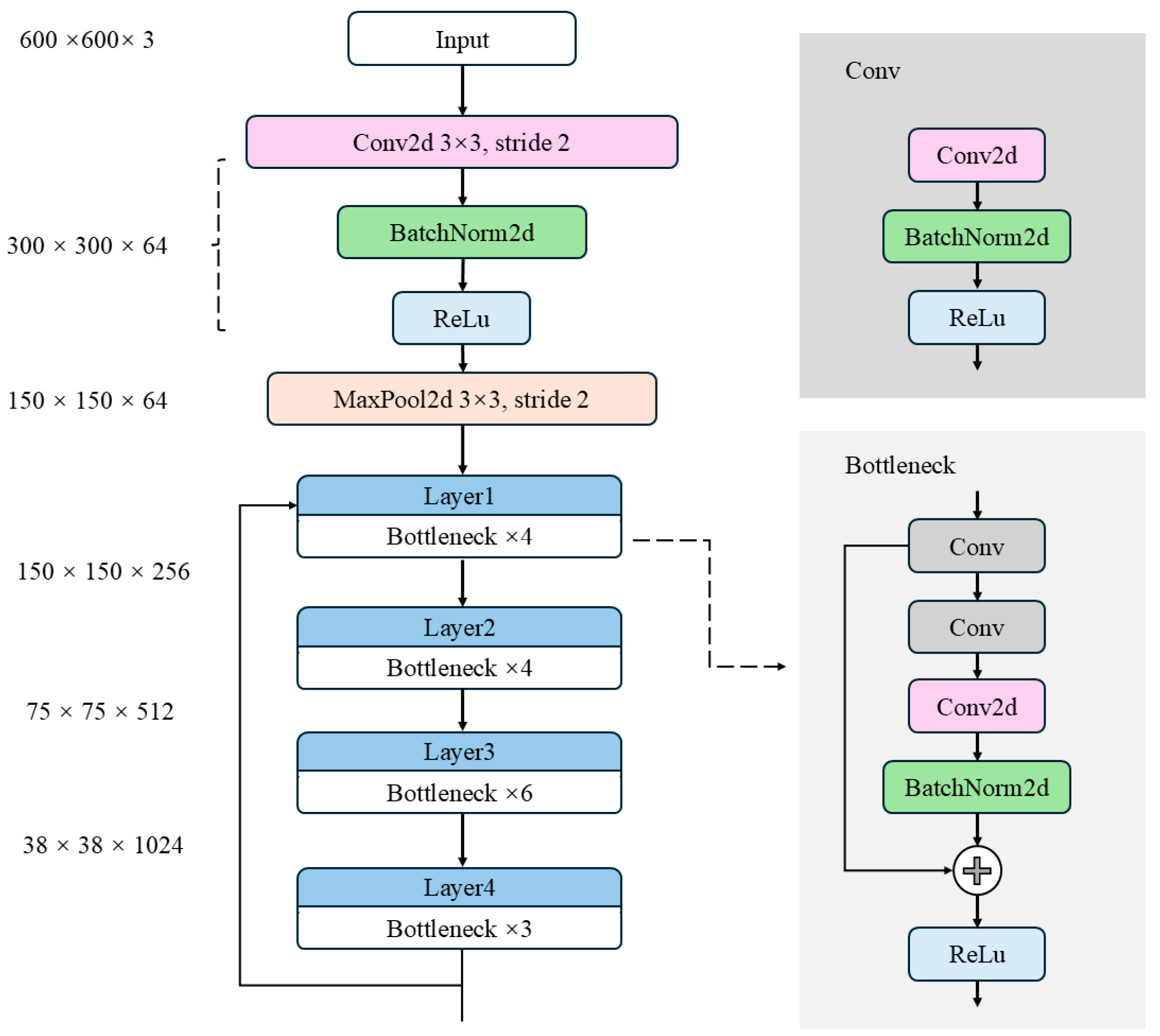

2.2.1. Lightweight Bottleneck Layer

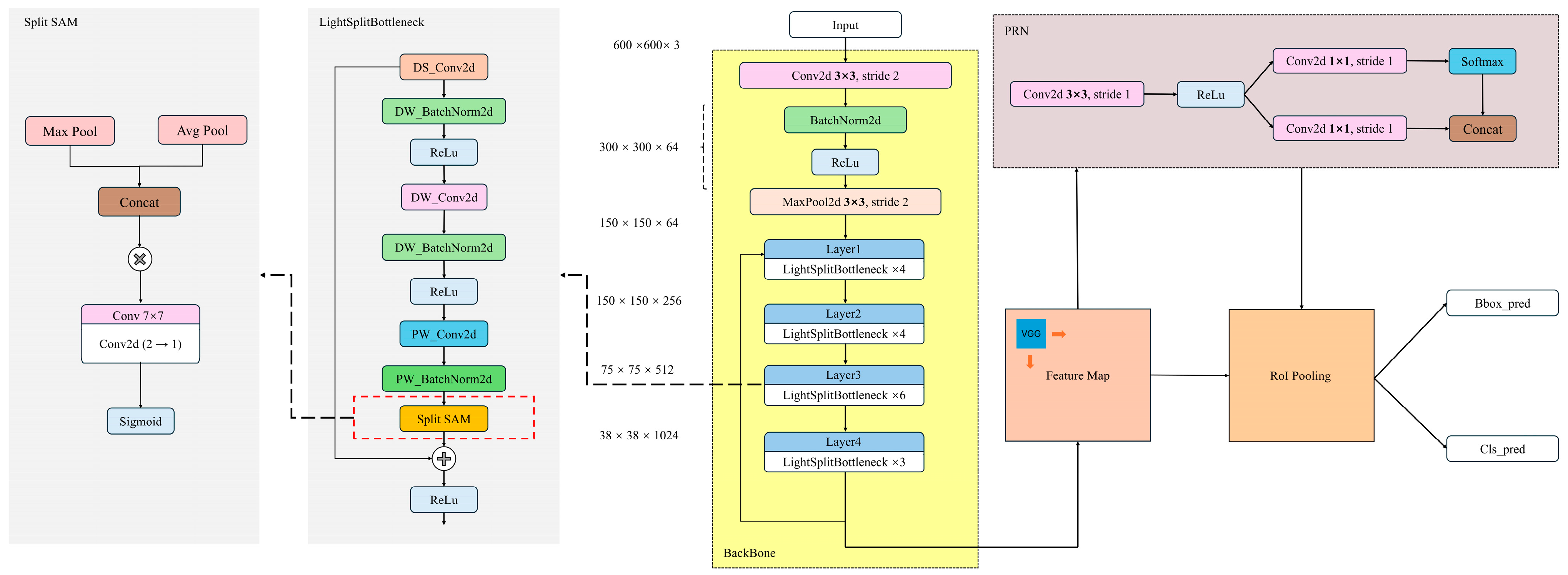

As depicted in Figure 7, the original Faster R-CNN backbone network [24] is composed of three key components: the feature extraction network layer, the region proposal network, and the RoI pooling layer. The input image is initially resized to a 600 × 600 × 3 format without loss of information, and then it is passed through the backbone network for feature extraction. In the Faster R-CNN architecture, feature extraction primarily relies on deep neural networks made up of multiple convolutional modules (e.g., Conv Blocks, which include Conv and Identity Blocks as shown in Figure 7, with Conv operating at a stride of 1). These modules are tasked with extracting high-level semantic features from the input image. However, as the model depth and parameter size increase, deploying Faster R-CNN on embedded devices and in real-time applications becomes increasingly challenging.

To tackle this challenge, recent research has focused on developing lightweight network structures. For instance, Zhu et al. [25] demonstrated that depthwise separable convolution significantly reduces the number of parameters and the computational cost by applying a single convolution kernel to each channel of the feature map. Pointwise convolution using 1 × 1 kernels then integrates information across channels at low cost. These techniques have proved effective in applications such as cashmere and wool fiber recognition. Many parts of wild plants, such as leaves and stems, have complex textures and loose structures similar to those of wool.

Inspired by these findings, this study introduces a lightweight bottleneck structure based on depthwise separable convolution to replace the traditional ResNet bottleneck module. This modification aims to reduce the computational load and model parameters in the Faster R-CNN backbone network. The design of this module incorporates structural optimization strategies commonly used in lightweight networks like MobileNet and ShuffleNet [26,27]. By decoupling convolution calculations across channels and spatial dimensions, this approach significantly reduces resource consumption while maintaining model performance.
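As a rough illustration of why this decoupling saves parameters, the standard weight counts for a single convolution layer can be compared. The symbols $k$ (kernel size), $C_{in}$, and $C_{out}$ (input and output channels) are introduced here for illustration only and do not appear in the original text; biases and batch normalization parameters are ignored.

$$
P_{\text{std}} = k^{2}\, C_{in}\, C_{out}, \qquad
P_{\text{dsc}} = \underbrace{k^{2}\, C_{in}}_{\text{depthwise}} + \underbrace{C_{in}\, C_{out}}_{\text{pointwise}}, \qquad
\frac{P_{\text{dsc}}}{P_{\text{std}}} = \frac{1}{C_{out}} + \frac{1}{k^{2}}
$$

For example, with $k = 3$ and $C_{out} = 256$, the ratio is approximately $0.115$, so the depthwise separable layer would use roughly one ninth of the weights of the standard layer.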

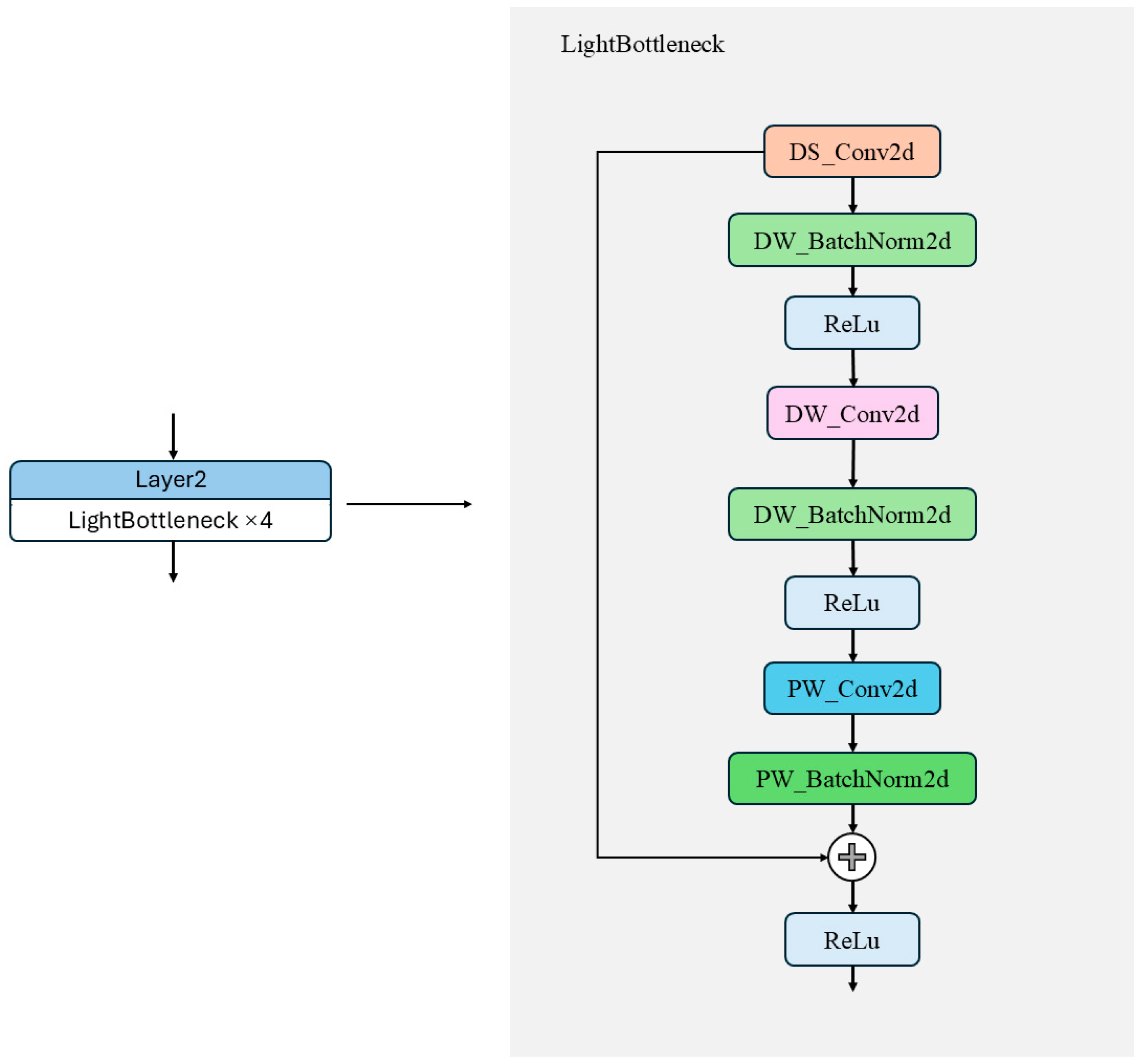

Specifically, the lightweight bottleneck module first employs a 1 × 1 depthwise separable convolution combination (DS_Conv2d and DW_BatchNorm2d) to compress the channels of the input feature map, thereby reducing subsequent computation. Next, a 3 × 3 depthwise convolution combination (DW_Conv2d and DW_BatchNorm2d) is applied for spatial feature extraction. This operation performs independent convolution within each channel, significantly reducing computational overhead. Finally, a 1 × 1 pointwise convolution combination (PW_Conv2d and PW_BatchNorm2d) restores the output feature channels to meet the dimensional requirements of the residual connection, as illustrated in Figure 8. Compared to traditional convolution, pointwise convolution has fewer parameters and lower computational cost. By integrating features extracted by multiple convolution kernels at different scales, this structure not only significantly reduces the parameter scale and FLOPs but also maintains strong feature representation capability.
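To make the saving concrete, the following minimal sketch counts convolution weights for one bottleneck block in both designs. It is an illustration under stated assumptions, not the authors' implementation: the channel widths (256 compressed to 64, as in an early ResNet50 stage) are assumed, the first 1 × 1 stage is counted as an ordinary 1 × 1 convolution, and biases and batch normalization parameters are ignored. All function names are hypothetical.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weights in a k x k convolution without bias.

    With groups == c_in == c_out this is a depthwise convolution,
    where each channel has its own single k x k kernel.
    """
    return (c_in // groups) * c_out * k * k


def resnet_bottleneck_params(c_in, c_mid):
    """1x1 compress -> 3x3 standard conv -> 1x1 restore."""
    return (conv_params(c_in, c_mid, 1)
            + conv_params(c_mid, c_mid, 3)
            + conv_params(c_mid, c_in, 1))


def lightweight_bottleneck_params(c_in, c_mid):
    """1x1 compress -> 3x3 depthwise conv -> 1x1 pointwise restore."""
    return (conv_params(c_in, c_mid, 1)
            + conv_params(c_mid, c_mid, 3, groups=c_mid)
            + conv_params(c_mid, c_in, 1))


if __name__ == "__main__":
    # Assumed widths: 256 input/output channels, 64 bottleneck channels.
    std = resnet_bottleneck_params(256, 64)         # 69632 weights
    lw = lightweight_bottleneck_params(256, 64)     # 33344 weights
    print(std, lw, round(lw / std, 3))
```

Under these assumptions the 3 × 3 stage shrinks from 36,864 weights to 576, and the block as a whole needs a little under half of the original weights; the remaining cost is dominated by the two 1 × 1 convolutions.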

2.2.2. Split SAM Attention Mechanism

Figure 7 illustrates the traditional ResNet architecture, particularly the ResNet50 model that employs bottleneck modules. While residual connections effectively mitigate the vanishing gradient problem and capture multi-level features by progressively increasing the number of channels, several significant issues remain.

First, the fixed receptive field limits the network’s adaptability when handling multi-scale objects, making it unable to flexibly adjust to features of different scales. This restricts the network’s ability to effectively capture both global information and fine-grained details.

Second, the information transmission efficiency is low. Although residual connections promote information flow between layers, there is no effective mechanism to dynamically adjust the attention weights of different levels. This results in insufficient fusion of high-level and low-level features, impairing the network’s ability to express subtle differences.

Finally, the computational complexity is high. While the bottleneck modules effectively improve the performance of deep networks, their gradually increasing computational cost, especially during cross-scale feature fusion, leads to redundant calculations due to the limited receptive field and insufficient information transmission. This impacts the network’s efficiency and inference speed.

These issues pose challenges for ResNet when dealing with complex scenarios and call for improvements in network design.

To address these problems, we propose an improved network architecture that combines a lightweight bottleneck module with an enhanced spatial attention module, named Split SAM, as shown in Figure 9. In this architecture, Split SAM plays a key role: it dynamically adjusts the focus areas of the feature maps and enhances the separation between foreground and background, substantially improving the network’s performance and efficiency.

By integrating the lightweight bottleneck module with Split SAM, we can enhance the network’s adaptability in multi-scale object detection and complex scenarios while maintaining lightweight computation. This integration also improves the accuracy and efficiency of feature fusion.

The core idea of the Split SAM spatial attention module is to divide the channel dimension of the input feature map into multiple subspaces and independently learn a spatial attention map for each subspace. Let the input feature map be defined as follows:

$$X \in \mathbb{R}^{B \times C \times H \times W}$$

Here, $B$ represents the batch size, $C$ is the number of channels, and $H$ and $W$ are the spatial dimensions of the feature map. The channel dimension is divided into $G$ subspaces, with each subspace containing $C/G$ channels:

$$X = [X_1, X_2, \ldots, X_G], \qquad X_i \in \mathbb{R}^{B \times (C/G) \times H \times W}$$

In our design, we keep $G$ small to reduce computational complexity and memory access cost, which is particularly important for deployment on resource-constrained edge devices. A larger $G$ leads to more convolution operations and fragmented memory access, increasing latency and energy consumption.

For each subspace $X_i$, Split SAM extracts the spatial context features of the group using max pooling and average pooling, as follows:

$$F_{\max}^{i} = \mathrm{MaxPool}(X_i), \qquad F_{\mathrm{avg}}^{i} = \mathrm{AvgPool}(X_i)$$

The two are concatenated along the channel dimension to obtain a two-channel feature map:

$$F^{i} = \mathrm{Concat}\left(F_{\max}^{i}, F_{\mathrm{avg}}^{i}\right) \in \mathbb{R}^{B \times 2 \times H \times W}$$

Then, a 7 × 7 convolutional layer is used to extract spatial attention features, and finally a Sigmoid activation function $\sigma$ is applied to generate the attention map:

$$A_i = \sigma\left(\mathrm{Conv}_{7 \times 7}\left(F^{i}\right)\right)$$

The obtained attention map is then element-wise multiplied with the original subgroup feature map:

$$Y_i = A_i \odot X_i$$

Finally, the outputs of all subgroups are concatenated back along the channel dimension to obtain the final output:

$$Y = \mathrm{Concat}(Y_1, Y_2, \ldots, Y_G)$$

This module acts only on the spatial dimensions of local feature maps: it changes neither the number of channels nor the spatial resolution, and it does not rely on any particular network structure or semantic level. As a lightweight attention module, Split SAM performs spatial modeling independently within each channel group, improving the local spatial response without adding structural complexity. It can therefore be inserted after any convolutional layer in the backbone network, helping the model capture spatial details such as object edges and textures.
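The Split SAM steps described above (channel split, max and average pooling, 7 × 7 convolution, Sigmoid, reweighting, and concatenation) can be sketched for a single image in plain Python. This is a minimal sketch under assumptions, not the authors' code: pooling is taken across the channels of each group, as in conventional spatial attention; the convolution uses zero padding so the resolution is preserved; and the names `spatial_attention`, `split_sam`, and `kernels` are hypothetical. A practical implementation would use a tensor library and learn the kernels during training.

```python
import math


def spatial_attention(group, kernel, bias=0.0):
    """Attention map (H x W) for one channel group.

    group:  list of feature maps, each an H x W nested list
    kernel: 2 x k x k weights, one k x k slice per pooled map (k odd)
    """
    h, w = len(group[0]), len(group[0][0])
    k = len(kernel[0])
    pad = k // 2
    # Pool across the group's channels: one max map and one mean map.
    pooled = [
        [[max(ch[i][j] for ch in group) for j in range(w)] for i in range(h)],
        [[sum(ch[i][j] for ch in group) / len(group) for j in range(w)]
         for i in range(h)],
    ]
    attn = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = bias
            for p in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:  # zero padding
                            s += kernel[p][di][dj] * pooled[p][y][x]
            attn[i][j] = 1.0 / (1.0 + math.exp(-s))  # Sigmoid
    return attn


def split_sam(x, kernels):
    """x: C x H x W nested lists; kernels: one 2 x k x k kernel per group."""
    g = len(kernels)
    if len(x) % g != 0:
        raise ValueError("channel count must be divisible by the group count")
    size = len(x) // g
    out = []
    for gi in range(g):
        group = x[gi * size:(gi + 1) * size]
        attn = spatial_attention(group, kernels[gi])
        # Reweight every channel of the group with the shared attention map,
        # appending in order so the groups are concatenated along channels.
        for ch in group:
            out.append([[v * a for v, a in zip(row, arow)]
                        for row, arow in zip(ch, attn)])
    return out
```

In this sketch the output has the same number of channels and the same resolution as the input, matching the property stated above. Each group carries its own 2 × 7 × 7 kernel (98 weights), so the attention cost grows linearly with the number of groups.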

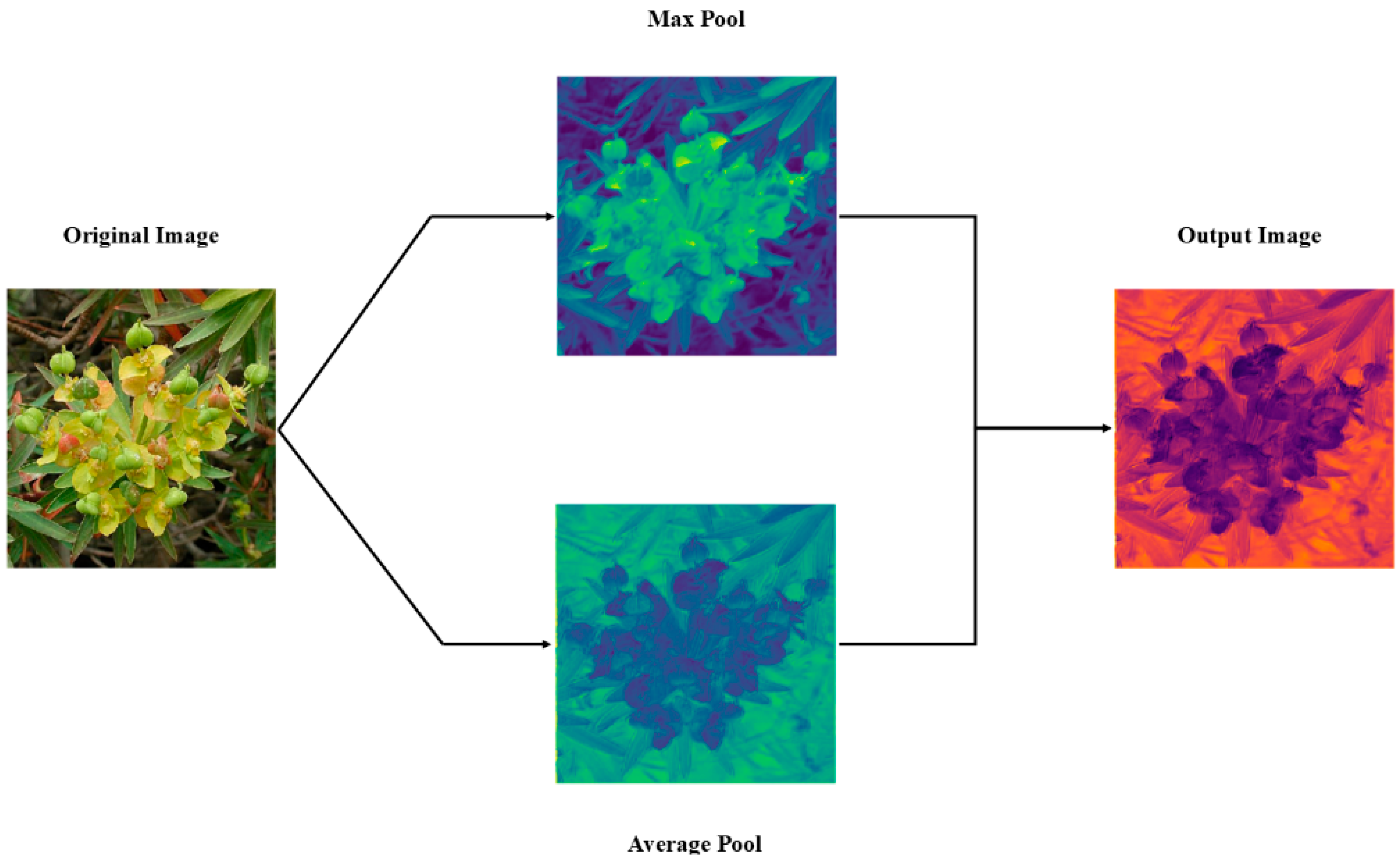

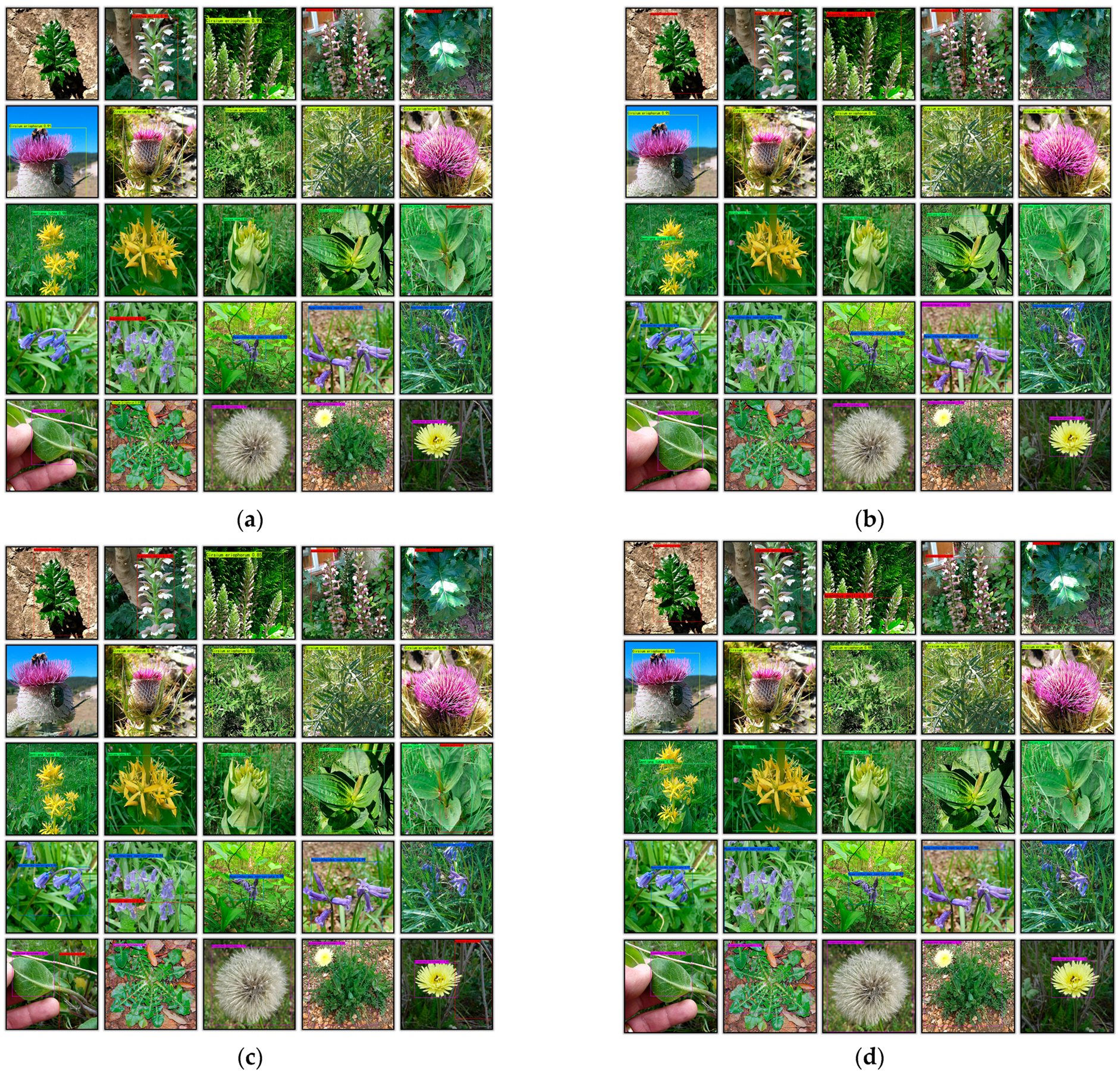

Figure 10 visualizes the attention map that Split SAM produces for an original image. The image is first processed through max pooling and average pooling, resulting in two feature maps. The max pooling map highlights the local maximum values in the image, making the foreground more prominent, while the average pooling map captures the smooth features of the image, which enhances the background features. These two feature maps are then merged to generate an output attention map, which reflects the importance of different regions in the image; the highlighted areas indicate where attention is concentrated. In Figure 10, the output attention map shows a significant chromatic difference between the foreground (the target plant) and the background (the wild environment). This indicates that the processing increases the contrast between foreground and background, so the main detection and recognition objects stand out more clearly. As a result, the model’s ability to distinguish foreground from background improves, and with it the detection and recognition accuracy.

After improving the backbone feature extraction network, the framework of the Light Split SAM Faster R-CNN (LS-FRCNN) is shown in Figure 11. The main modules and their functions are described as follows:

Region Proposal Network (RPN): This is a key component of the Faster R-CNN model. It consists of two-dimensional convolutional layers, batch normalization, and activation functions, which identify the regions in the feature map that may contain a target and generate candidate proposals. This reduces the number of regions that need to be processed subsequently, thereby improving detection efficiency.

RoI Pooling Layer: This is a specialized pooling layer that maps candidate regions of varying scales onto fixed-size feature maps, integrating multi-scale feature information to provide a unified input size for subsequent classification and bounding box regression.

Classification and Bounding Box Regression Module: This module consists of two parallel branches. The bbox_pred branch predicts bounding boxes by refining the proposals to accurately localize the objects; the cls_pred branch predicts the object classes using a Softmax function. The final output includes predictions of bounding boxes and class labels.
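To illustrate how the RoI pooling layer described above maps regions of different sizes onto a fixed-size output, the following minimal sketch applies max pooling to one region of a single-channel feature map. It is an assumption-laden illustration rather than the layer used in the paper: the region is given in feature-map cell coordinates with exclusive upper bounds, the bin boundaries use a simple floor/ceil scheme, and the name `roi_max_pool` is hypothetical.

```python
import math


def roi_max_pool(feature, roi, out_h, out_w):
    """Max-pool one region of an H x W feature map to out_h x out_w.

    feature: H x W nested list
    roi:     (x1, y1, x2, y2) in feature-map cells, x2 and y2 exclusive
    """
    x1, y1, x2, y2 = roi
    rh, rw = y2 - y1, x2 - x1
    if rh < 1 or rw < 1:
        raise ValueError("region must cover at least one cell")
    pooled = []
    for i in range(out_h):
        # Rows covered by output bin i; floor/ceil keeps every bin non-empty.
        r0 = y1 + math.floor(i * rh / out_h)
        r1 = y1 + math.ceil((i + 1) * rh / out_h)
        row = []
        for j in range(out_w):
            c0 = x1 + math.floor(j * rw / out_w)
            c1 = x1 + math.ceil((j + 1) * rw / out_w)
            row.append(max(feature[r][c]
                           for r in range(r0, r1)
                           for c in range(c0, c1)))
        pooled.append(row)
    return pooled


if __name__ == "__main__":
    fmap = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(roi_max_pool(fmap, (0, 0, 4, 4), 2, 2))  # [[5, 7], [13, 15]]
    print(roi_max_pool(fmap, (1, 0, 4, 3), 2, 2))  # [[6, 7], [10, 11]]
```

Both calls return a 2 × 2 grid even though the two regions differ in size, which is what gives the classification and regression branches a uniform input size.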

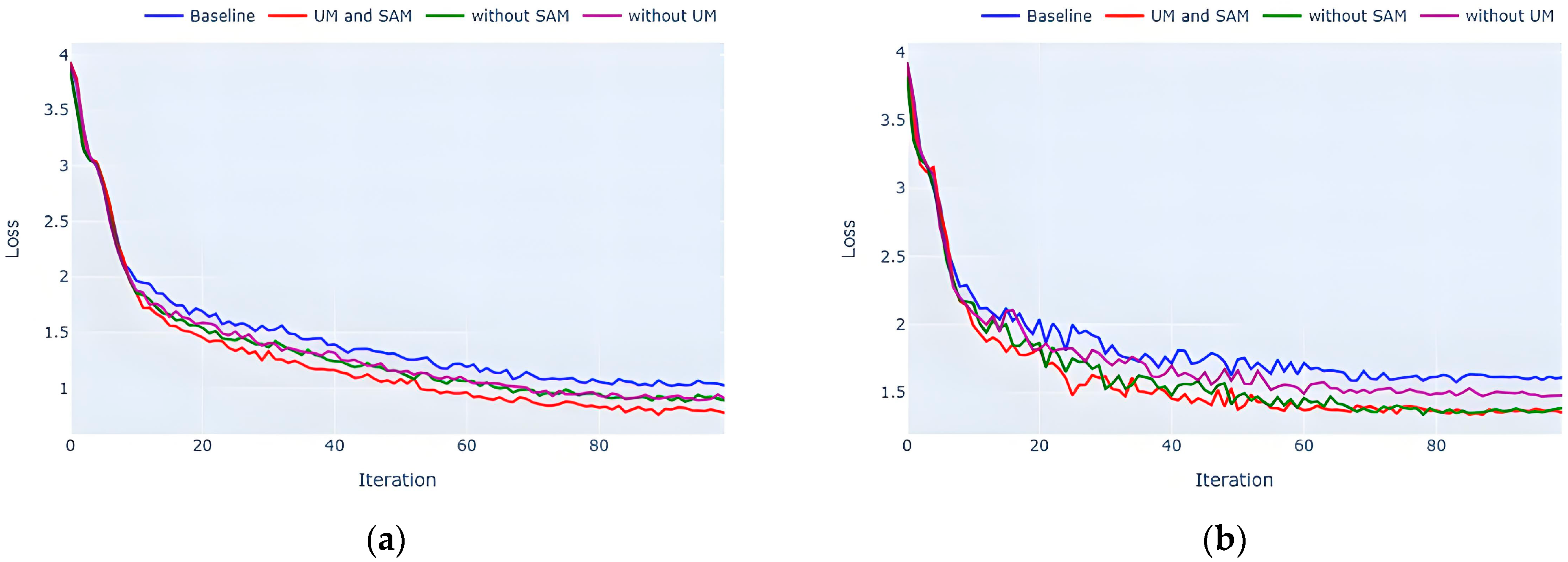

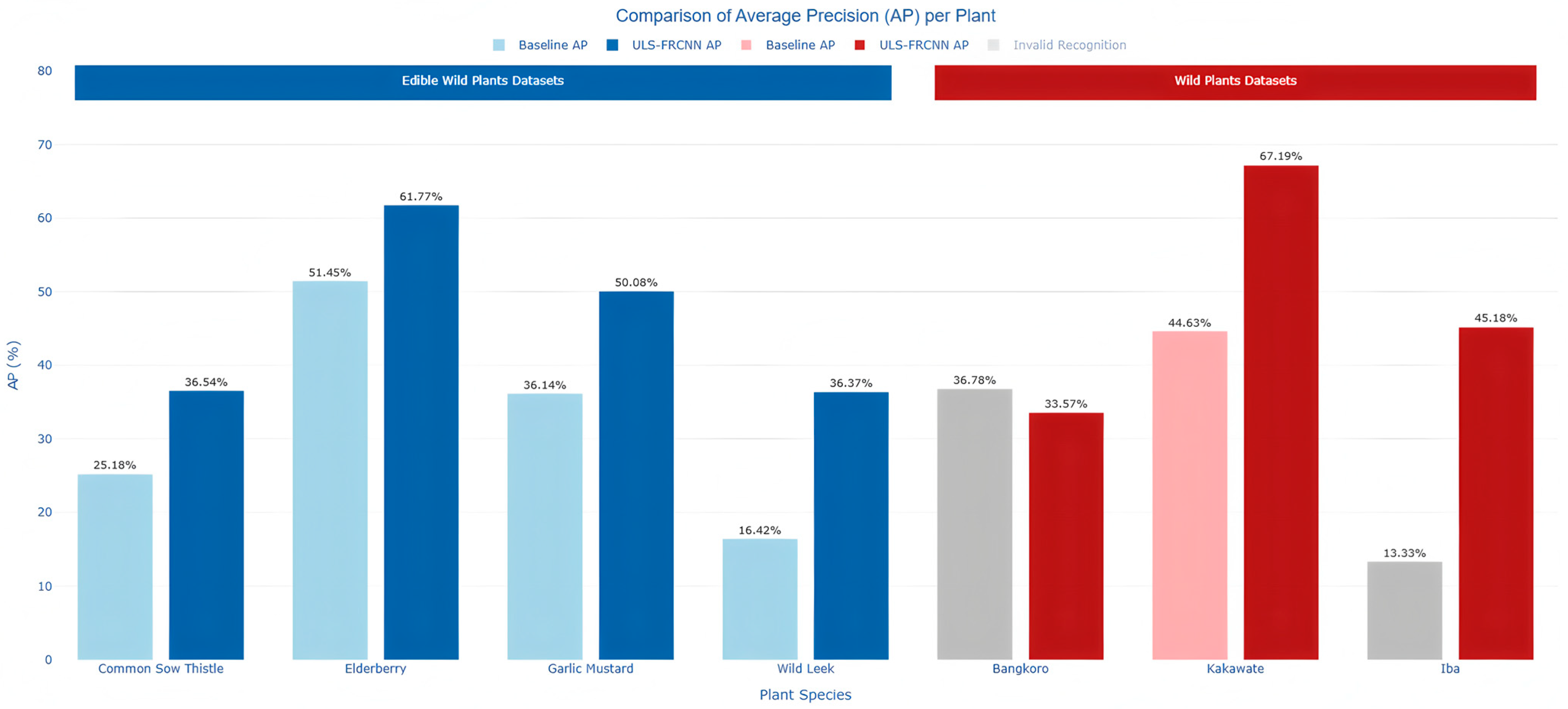

Compared with the original Faster R-CNN, our model makes the following improvements:

First, by introducing the lightweight bottleneck module, we address the high computational complexity and insufficient sensitivity to fine features of the traditional ResNet50 architecture. The use of depthwise separable convolutions not only reduces the computational load and the number of parameters but also enhances the ability to capture fine features.

Second, the introduction of the Split SAM module addresses the fixed receptive fields and inadequate foreground–background separation of the original feature extraction architecture. This module dynamically and efficiently adjusts the network’s focus on different areas, making important details more prominent. As a result, the network becomes considerably better at detecting objects of different sizes and recognizing targets against complex backgrounds.