FCA-STNet: Spatiotemporal Growth Prediction and Phenotype Extraction from Image Sequences for Cotton Seedlings

Abstract

1. Introduction

- Dataset Construction: Because no publicly available time-series image dataset captures cotton seedling growth under real field conditions in a form suited to morphological similarity recognition, we manually collected image sequences and expanded the dataset with data augmentation, thereby improving the generalization capability of the model.

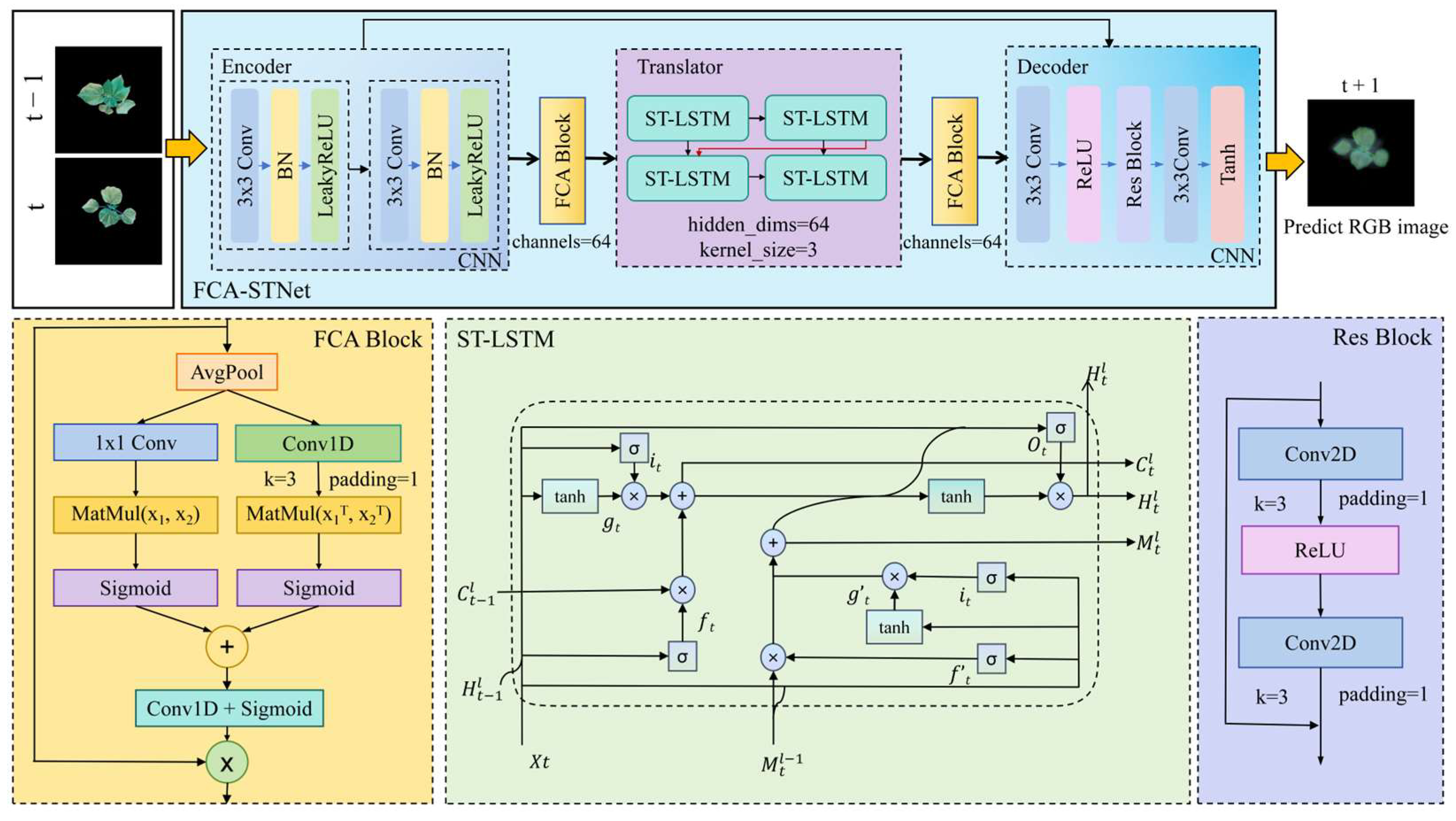

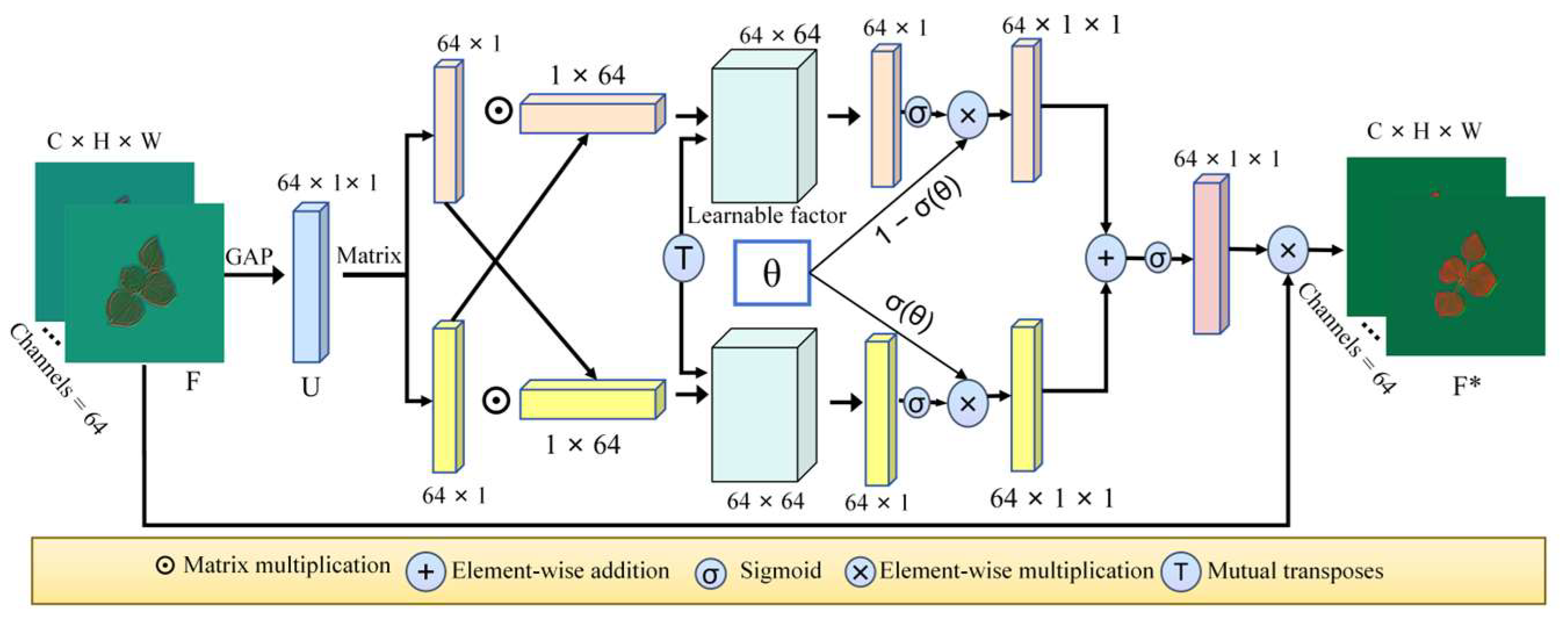

- FCA-STNet Architecture: We propose FCA-STNet, a prediction network that operates on RGB image sequences of cotton seedlings. The network is built on a self-designed STNet backbone tailored to extract spatiotemporal features when long-term dependencies and local variations coexist. An Adaptive Fine-Grained Channel Attention (FCA) module dynamically reweights channel-wise features to suppress interference from non-uniform lighting and wind disturbance. This yields clearer, more robust feature representations and helps the model adapt to the complex visual variations that occur during cotton seedling growth.

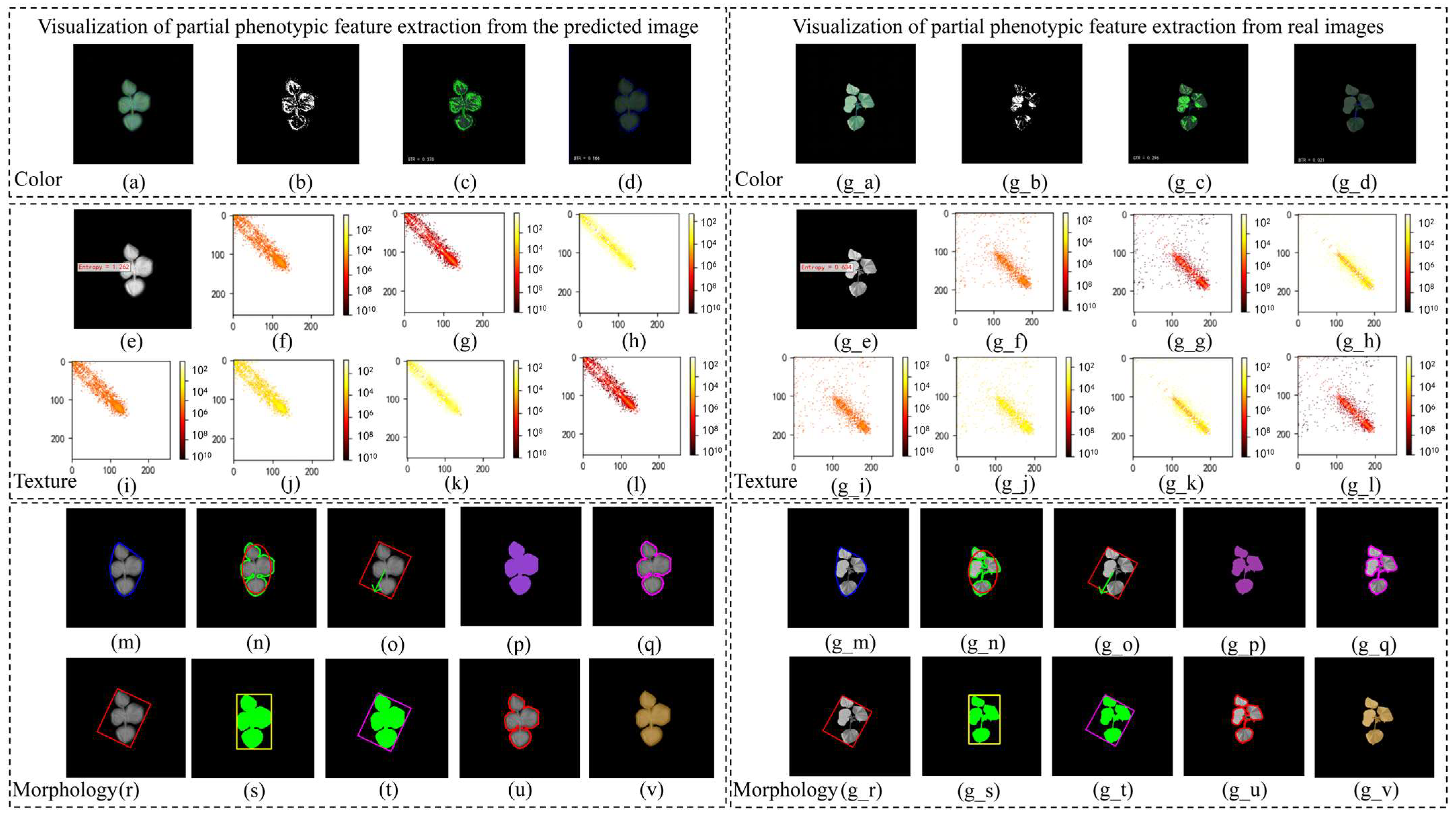

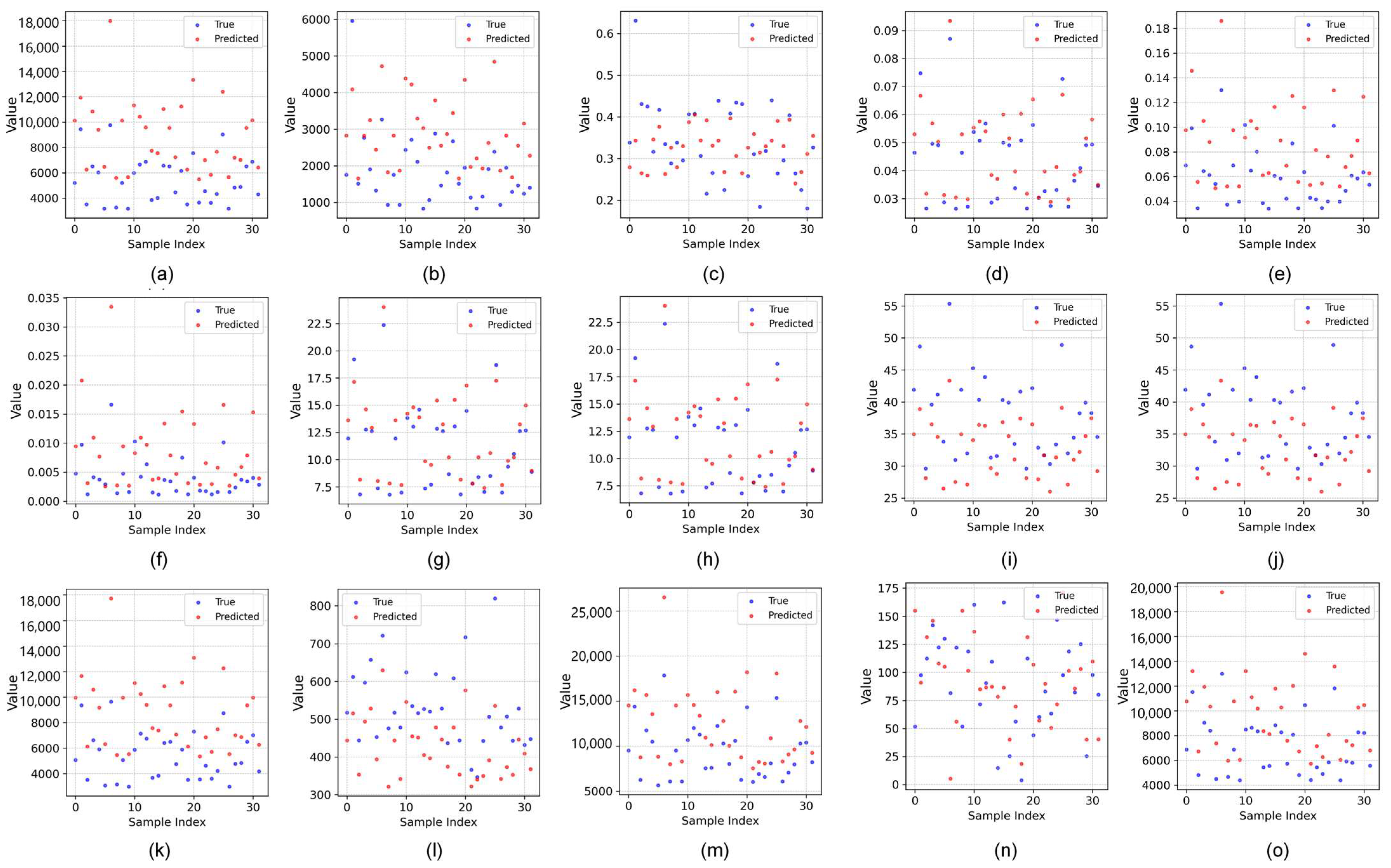

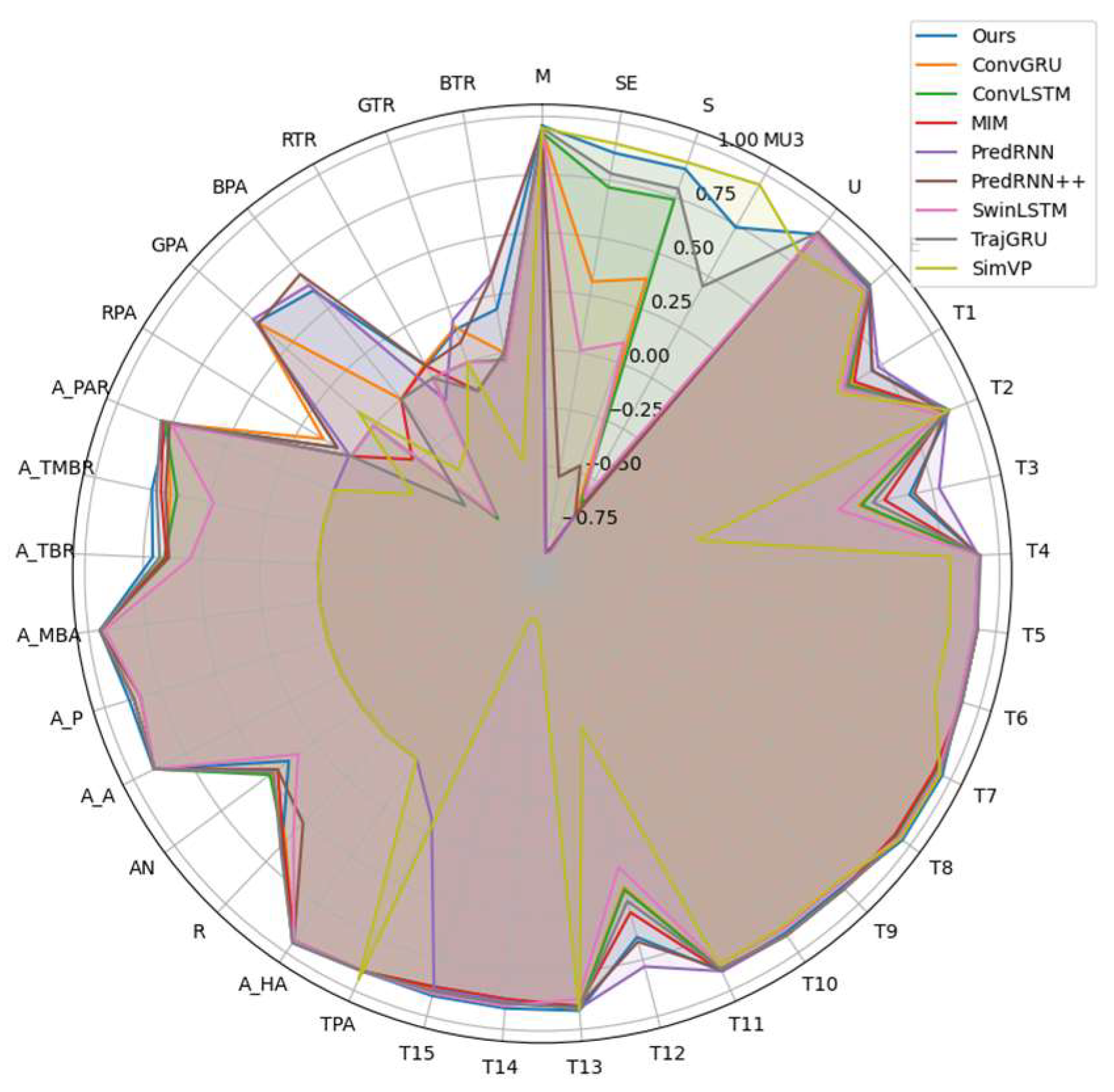

- Phenotypic Prediction and Evaluation: From the predicted image at time t + 1, we extract 37 phenotypic traits covering color, morphology, and texture. These traits serve both as a basis for evaluating model accuracy and as a visual reference for assessing the cotton seedling status at t + 1, thereby supporting the optimization of agronomic practices.
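The channel reweighting described in the FCA-STNet Architecture item above can be illustrated with a small NumPy sketch. This is a generic squeeze-and-excitation style gate used as a stand-in, not the FCA module itself, whose internal structure is not given in this section. The function name `channel_attention`, the weight matrices `w1` and `w2`, and the reduction ratio `r` are all hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(features, w1, w2):
    """Reweight the channels of a (C, H, W) feature map.

    Generic squeeze-and-excitation style gate (an assumption for
    illustration), not the FCA module from the paper.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    descriptor = features.mean(axis=(1, 2))        # shape (C,)
    # Excite: two small linear maps, ReLU in between, sigmoid gate at the end.
    hidden = np.maximum(w1 @ descriptor, 0.0)      # shape (C // r,)
    weights = sigmoid(w2 @ hidden)                 # shape (C,), values in (0, 1)
    # Reweight: scale every channel by its own gate value.
    return features * weights[:, None, None], weights


# Toy usage with random features and random (untrained) weights.
rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 2
features = rng.normal(size=(C, H, W))
w1 = rng.normal(size=(C // r, C)) * 0.1
w2 = rng.normal(size=(C, C // r)) * 0.1
reweighted, weights = channel_attention(features, w1, w2)
print(reweighted.shape, weights.round(3))
```

In a trained network the gate values would be learned, so channels dominated by lighting or motion artifacts could receive weights near zero while informative channels stay close to one.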

2. Results

2.1. Dataset Construction and Preprocessing

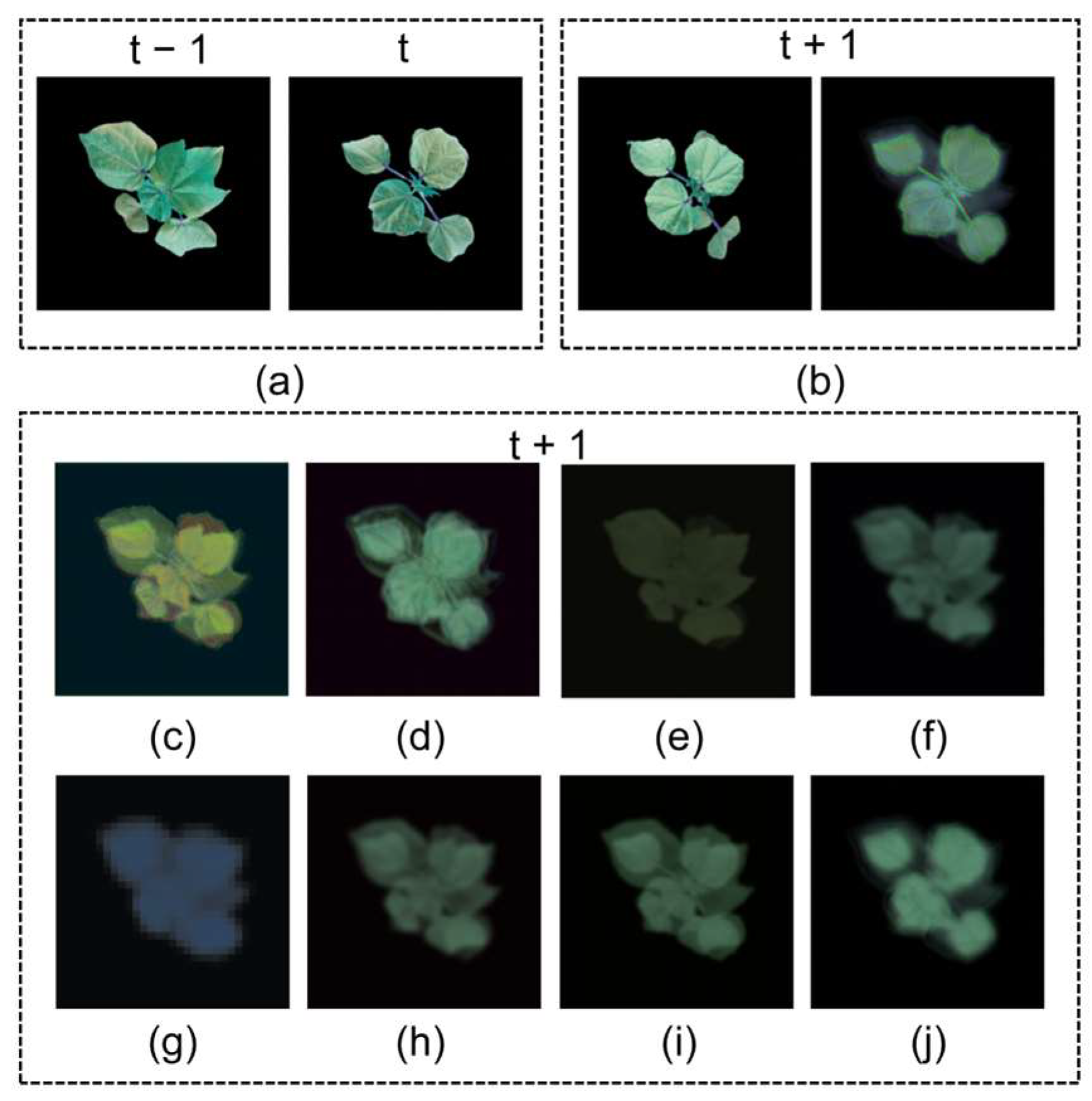

2.2. Prediction Results for Cotton Seedlings

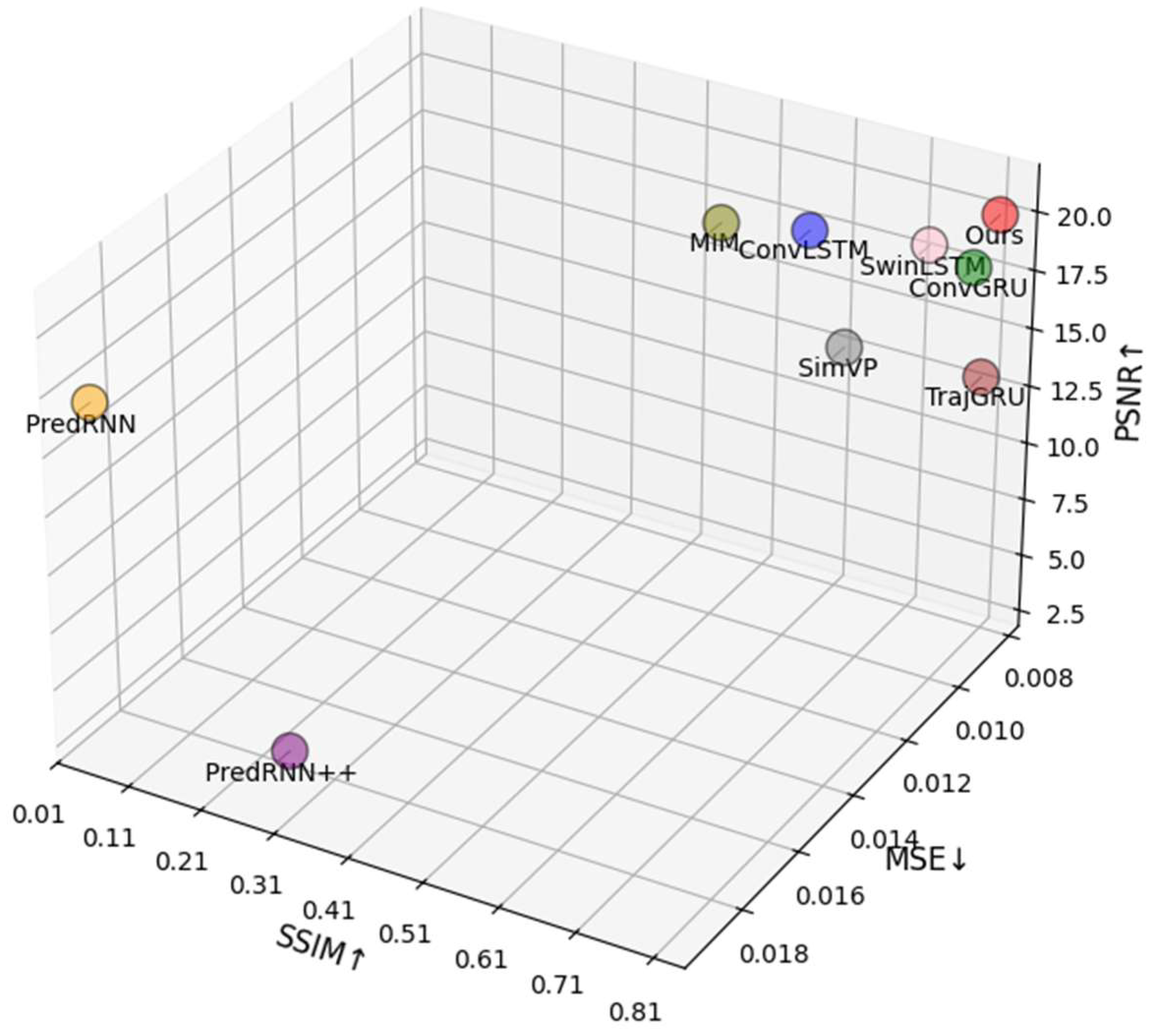

2.2.1. Comparison with Other Prediction Models

2.2.2. Ablation Study

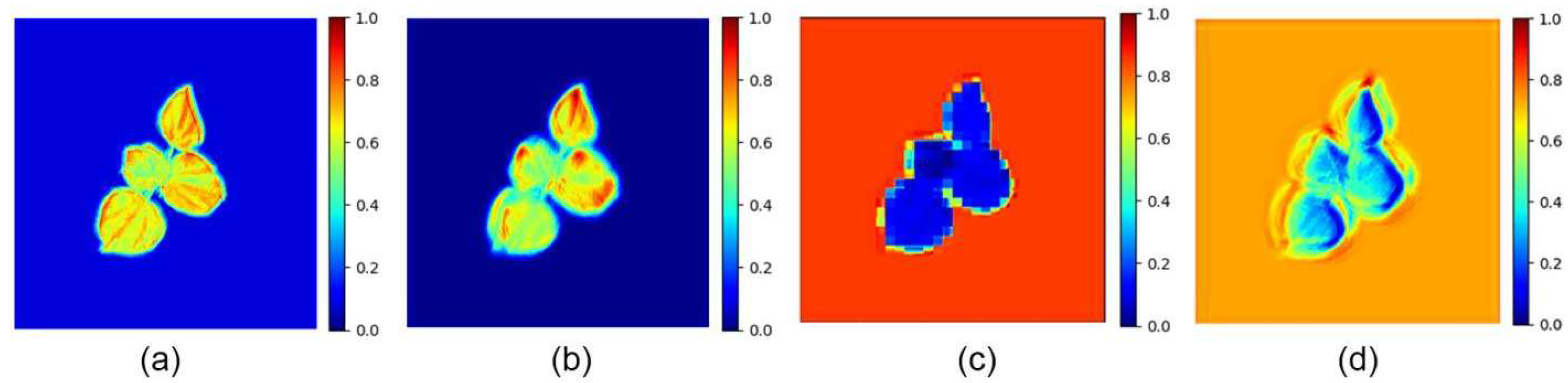

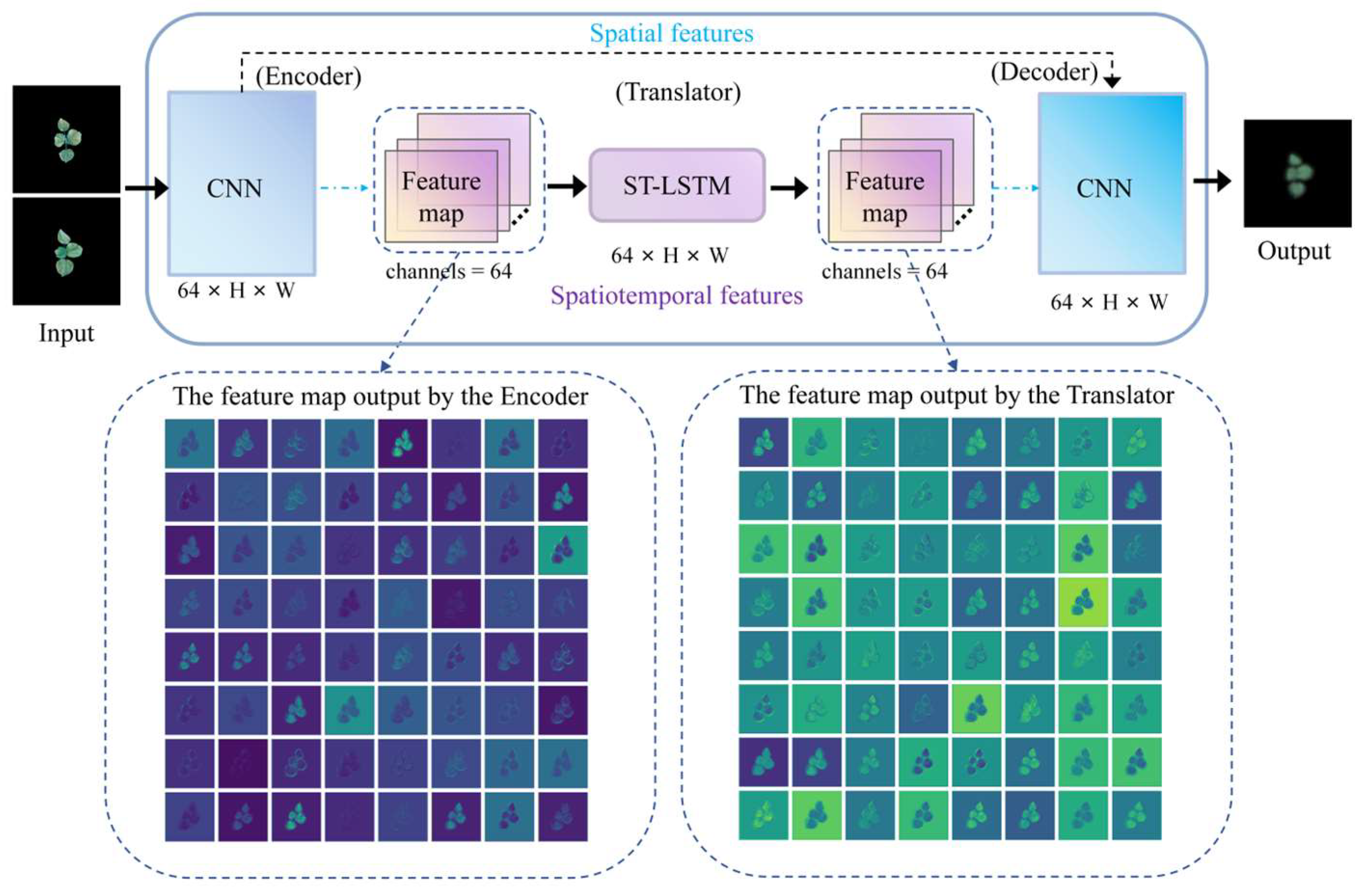

2.2.3. Spatiotemporal Feature Extraction in Translator

2.2.4. Comparison of Attention Mechanisms

2.3. Phenotypic Feature Correlation Analysis

3. Discussion

4. Materials and Methods

4.1. FCA-STNet Network Architecture

4.1.1. STNet Structure

4.1.2. FCA Module

4.1.3. Evaluation Metrics

4.2. Phenotypic Feature Extraction

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | MSE↓ | MAE↓ | SSIM↑ | PSNR↑ |
|---|---|---|---|---|
| PredRNN | 0.0188 | 0.1205 | 0.0233 | 16.2197 |
| PredRNN++ | 0.0184 | 0.0900 | 0.2660 | 3.0651 |
| MIM | 0.0107 | 0.0423 | 0.5435 | 20.0066 |
| ConvLSTM | 0.0091 | 0.0351 | 0.6038 | 18.5990 |
| SwinLSTM | 0.0099 | 0.0361 | 0.7873 | 20.3337 |
| ConvGRU | 0.0085 | 0.0326 | 0.7994 | 18.0765 |
| TrajGRU | 0.0084 | 0.0313 | 0.8115 | 13.3757 |
| SimVP | 0.0137 | 0.0555 | 0.8114 | 20.1878 |
| FCA-STNet (Ours) | 0.0086 | 0.0321 | 0.8339 | 20.7011 |
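For readers unfamiliar with the columns in the comparison table above, the following is a minimal sketch of three of the four metrics using their standard definitions. It assumes pixel values scaled to [0, 1] and a single predicted/target image pair; how the paper averages over frames and test sequences is not stated here, so the function names and the `max_value` parameter are illustrative only.

```python
import numpy as np


def mse(pred, target):
    """Mean squared error over all pixels (lower is better)."""
    return float(np.mean((pred - target) ** 2))


def mae(pred, target):
    """Mean absolute error over all pixels (lower is better)."""
    return float(np.mean(np.abs(pred - target)))


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB (higher is better).

    max_value is the largest possible pixel value; 1.0 is an assumption
    that matches images scaled to [0, 1].
    """
    err = mse(pred, target)
    if err == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / err))


# Toy usage: a prediction that is uniformly 0.1 too bright.
target = np.zeros((4, 4))
pred = np.full((4, 4), 0.1)
print(mse(pred, target), mae(pred, target), psnr(pred, target))
# roughly 0.01, 0.1 and 20 dB
```

SSIM is omitted because it requires local windowed statistics; scikit-image provides `skimage.metrics.structural_similarity` for that purpose.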

| Enabled components (STNet, FCA; Encoder, Translator) | MSE↓ | MAE↓ | SSIM↑ | PSNR↑ |
|---|---|---|---|---|
| √ | 0.0088 | 0.0322 | 0.7962 | 18.6170 |
| √ √ | 0.0086 | 0.0321 | 0.7993 | 19.7616 |
| √ √ | 0.0089 | 0.0318 | 0.7704 | 15.8190 |
| √ √ √ | 0.0086 | 0.0321 | 0.8339 | 20.7011 |

| Translator | Layers (1 / 2 / 3) | hidden_dims (32 / 64 / 128) | MSE↓ | MAE↓ | SSIM↑ | PSNR↑ |
|---|---|---|---|---|---|---|
| ST-LSTM | √ | √ | 0.0084 | 0.0311 | 0.7211 | 15.5391 |
| | √ | √ | 0.0084 | 0.0299 | 0.7946 | 16.5742 |
| | √ | √ | 0.0080 | 0.0315 | 0.7260 | 16.6728 |
| | √ | √ | 0.0088 | 0.0322 | 0.7962 | 18.6170 |
| | √ | √ | 0.0084 | 0.0340 | 0.6888 | 17.8139 |
| ConvLSTM | √ | √ | 0.0082 | 0.0324 | 0.7248 | 18.8963 |
| ConvGRU | √ | √ | 0.0085 | 0.0308 | 0.7802 | 16.6416 |

| Attention Mechanism | MSE↓ | MAE↓ | SSIM↑ | PSNR↑ |
|---|---|---|---|---|
| FCA | 0.0086 | 0.0321 | 0.8339 | 20.7011 |
| CGA | 0.0089 | 0.0411 | 0.0123 | 0.95110 |
| FSAS | 0.0083 | 0.0338 | 0.6591 | 19.8130 |
| EPA | 0.0092 | 0.0321 | 0.6921 | 15.0228 |

| Trait Category | Trait Type | Abbreviation | Definition | PCC |
|---|---|---|---|---|
| Texture Features | Gray Histogram | M | Mean | 0.96 |
| | | SE | Smoothness | 0.87 |
| | | S | Standard Deviation | 0.88 |
| | | MU3 | Third-Order Moment | 0.74 |
| | | U | Uniformity | 0.91 |
| | | E | Entropy | 0.90 |
| | GLCM Features | T1 | Correlation | 0.70 |
| | | T2 | Low-Gradient Emphasis | 0.92 |
| | | T3 | High-Gradient Emphasis | 0.65 |
| | | T4 | Energy | 0.91 |
| | | T5 | Gray-Level Non-Uniformity | 0.91 |
| | | T6 | Gradient Non-Uniformity | 0.91 |
| | | T7 | Gray Mean | 0.96 |
| | | T8 | Gradient Mean | 0.96 |
| | | T9 | Gray Entropy | 0.90 |
| | | T10 | Gradient Entropy | 0.90 |
| | | T11 | Mixed Entropy | 0.91 |
| | | T12 | Difference Moment | 0.65 |
| | | T13 | Inverse Difference Moment | 0.92 |
| | | T14 | Gray Standard Deviation | 0.91 |
| | | T15 | Gradient Standard Deviation | 0.91 |
| Morphological Features | Overall Morphology | TPA | Total Projected Area | 0.91 |
| | | A_HA | Convex Hull Area | 0.95 |
| | | R | Circularity | 0.65 |
| | | AN | Rotation Angle | 0.39 |
| | Average Leaf Shape | A_A | Average Leaf Area | 0.90 |
| | | A_P | Average Leaf Perimeter | 0.89 |
| | | A_MBA | Average Minimum Bounding Box Area | 0.95 |
| | Ratio-Based Morphology | A_TBR | Ratio of Average Total Area to Bounding Box | 0.71 |
| | | A_TMBR | Ratio of Average Total Area to Min Box | 0.75 |
| | | A_PAR | Perimeter-to-Area Ratio | 0.77 |
| Color Features | | RPA | Red Projected Area | 0.07 |
| | | GPA | Green Projected Area | 0.67 |
| | | BPA | Blue Projected Area | 0.60 |
| | | RTR | Ratio of Red Area to Total Projected Area | 0.06 |
| | | GTR | Ratio of Green Area to Total Projected Area | 0.15 |
| | | BTR | Ratio of Blue Area to Total Projected Area | 0.19 |
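To make the PCC column of the trait table above concrete, the sketch below computes the six gray-histogram traits (M, S, SE, MU3, U, E) for each image and then the Pearson correlation coefficient between the trait series from predicted and ground-truth images. The formulas are the common textbook first-order histogram statistics and the standard Pearson definition; whether the paper uses exactly these normalizations (in particular for smoothness) is an assumption, and the synthetic images stand in for real data.

```python
import numpy as np


def gray_histogram_traits(gray, levels=256):
    """First-order texture statistics of an integer-valued grayscale image.

    Textbook definitions, assumed here; the paper's exact formulas
    are not given in this section.
    """
    hist = np.bincount(gray.ravel(), minlength=levels).astype(float)
    p = hist / hist.sum()                      # normalized histogram
    z = np.arange(levels, dtype=float)         # gray levels 0..levels-1
    mean = float((z * p).sum())
    var = float(((z - mean) ** 2 * p).sum())
    nonzero = p[p > 0]                         # avoid log2(0) in the entropy
    return {
        "M": mean,
        "S": var ** 0.5,
        # Smoothness with variance normalized by (levels - 1)^2 (assumed).
        "SE": 1.0 - 1.0 / (1.0 + var / (levels - 1) ** 2),
        "MU3": float(((z - mean) ** 3 * p).sum()),
        "U": float((p ** 2).sum()),
        "E": float(-(nonzero * np.log2(nonzero)).sum()),
    }


def pearson(x, y):
    """Pearson correlation coefficient; undefined if either series is constant."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


# Synthetic stand-in data: ten "ground-truth" frames that get brighter over
# time, and "predictions" equal to the truth plus small integer noise.
rng = np.random.default_rng(0)
true_imgs = [rng.integers(0, 100 + 10 * t, size=(32, 32), dtype=np.uint8)
             for t in range(10)]
pred_imgs = [np.clip(img.astype(int) + rng.integers(-5, 6, size=img.shape),
                     0, 255).astype(np.uint8)
             for img in true_imgs]

true_mean = [gray_histogram_traits(img)["M"] for img in true_imgs]
pred_mean = [gray_histogram_traits(img)["M"] for img in pred_imgs]
pcc_mean = pearson(true_mean, pred_mean)
print(f"PCC for trait M on synthetic data: {pcc_mean:.4f}")
```

A PCC near 1 means the trait measured on predicted images tracks the trait measured on real images closely, which is how the high texture values and the low red-channel values in the table can be read.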

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wan, Y.; Han, B.; Chu, P.; Guo, Q.; Zhang, J. FCA-STNet: Spatiotemporal Growth Prediction and Phenotype Extraction from Image Sequences for Cotton Seedlings. Plants 2025, 14, 2394. https://doi.org/10.3390/plants14152394