A Comprehensive Review of Deep Learning Applications in Cotton Industry: From Field Monitoring to Smart Processing

Abstract

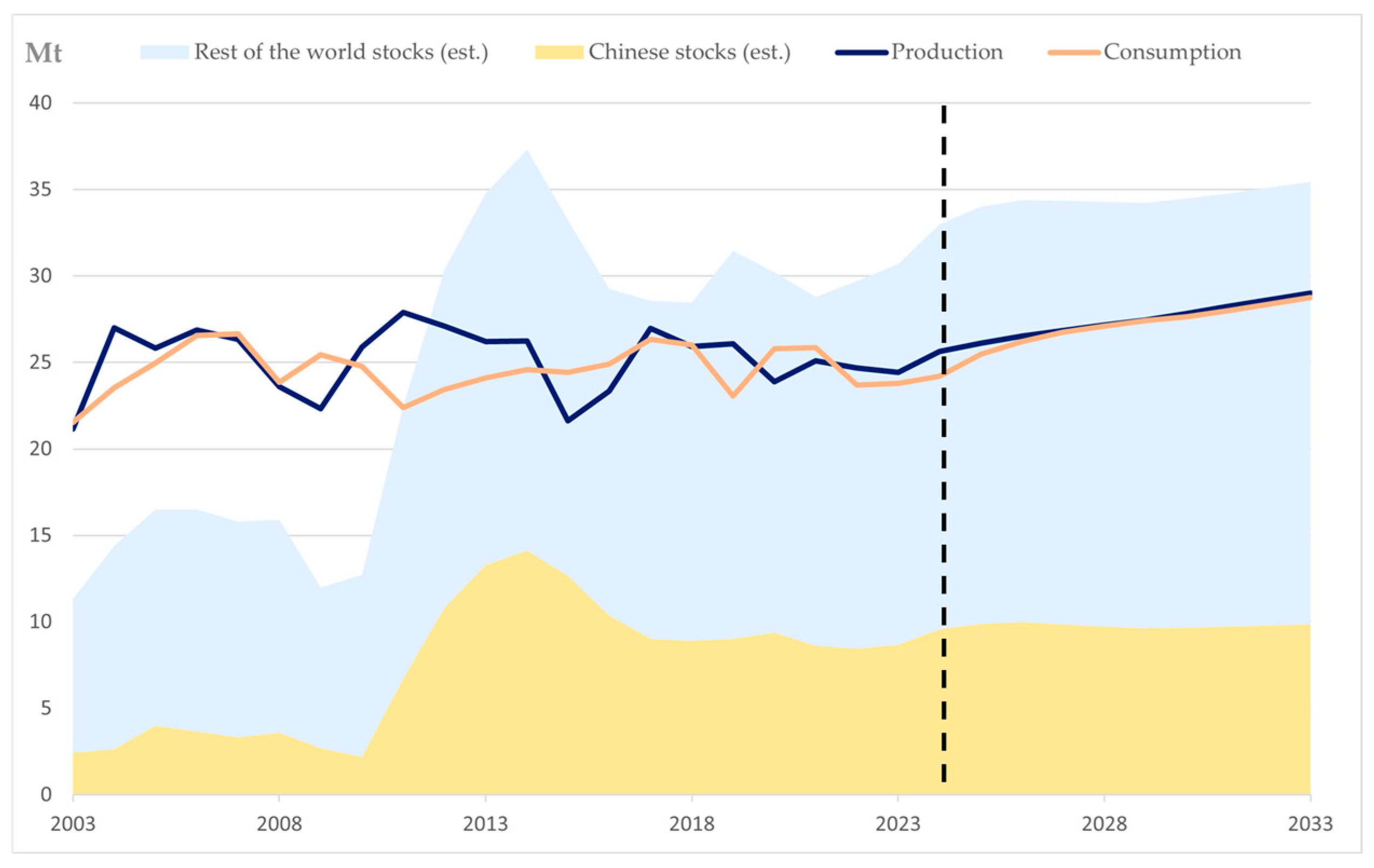

1. Introduction

- The application of deep learning in the cotton cultivation stage;

- The application of deep learning in cotton growth management;

- The application of deep learning in cotton harvesting and processing.

2. The Application of Deep Learning in the Cotton Cultivation Stage

2.1. Cotton Seed Selection and Variety Optimization

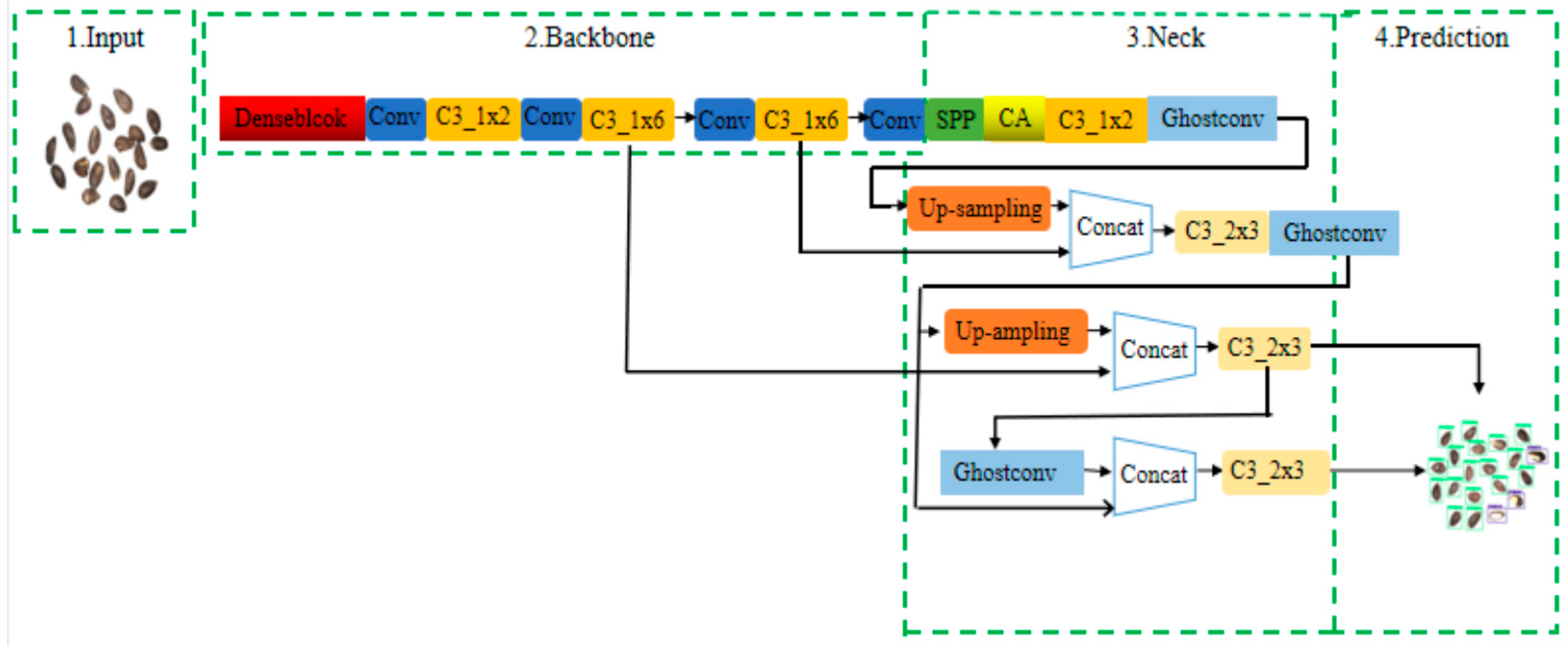

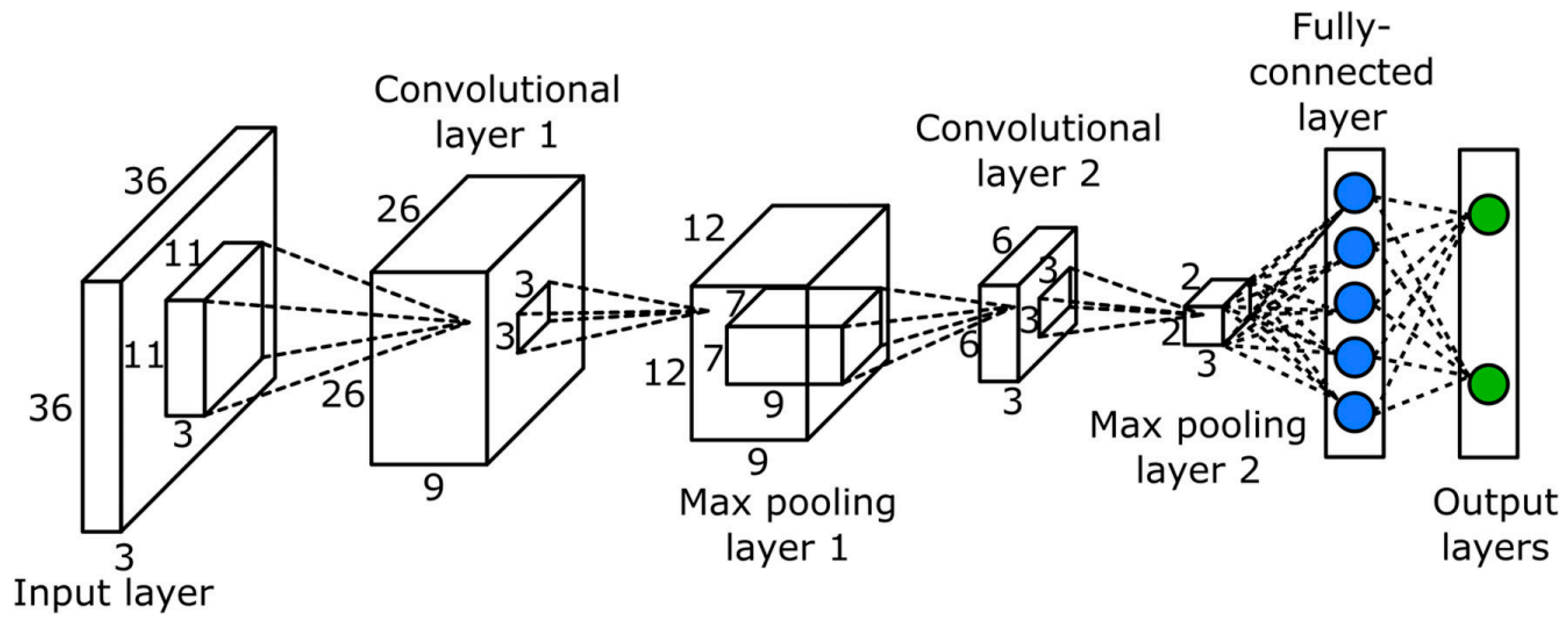

2.1.1. Deep Learning-Based Cotton Seed Quality Detection

- High equipment costs and deployment constraints: hyperspectral imaging systems are expensive, and deep learning models require substantial computational resources, which may limit their adoption in resource-constrained agricultural settings.

- Data acquisition difficulties: The training of deep learning models depends on large-scale, high-quality datasets. However, agricultural data collection is often constrained by environmental conditions, equipment limitations, and technical capacity, potentially leading to insufficient data quality and quantity, which undermines model performance.

- Limited model generalization: The variability of agricultural environments—including lighting conditions, soil types, and climatic changes—can affect model generalization. More robust models are needed to address these challenges.
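One widely used way to reduce a model's sensitivity to lighting variability is photometric data augmentation during training. The sketch below is illustrative only: it assumes a single-band (grayscale) seed image stored as nested lists of intensities in [0, 1] to stay dependency-free, and the jitter ranges are hypothetical defaults rather than values from the studies reviewed here.

```python
import random

def jitter_brightness_contrast(image, brightness=0.2, contrast=0.2, rng=None):
    """Return a copy of a grayscale image (rows of floats in [0, 1]) with random
    brightness and contrast jitter, clipped back to [0, 1].

    The jitter ranges are hypothetical defaults chosen for illustration."""
    rng = rng or random.Random()
    # Sample an additive brightness shift and a multiplicative contrast factor.
    delta = rng.uniform(-brightness, brightness)
    factor = rng.uniform(1.0 - contrast, 1.0 + contrast)
    # Contrast is scaled around the image mean, then the brightness shift is added.
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [
        [min(1.0, max(0.0, (p - mean) * factor + mean + delta)) for p in row]
        for row in image
    ]
```

In a training loop, each seed image would typically be passed through a call such as `jitter_brightness_contrast(img, rng=random.Random(seed))` with a fresh seed per epoch, so the model sees the same sample under many simulated illumination conditions.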

2.1.2. Cotton Genomic Data Analysis and Variety Improvement

- High costs and computational resource limitations: Genome-wide identification and expression analysis are costly, which may limit their large-scale application in resource-limited agricultural environments. Moreover, the computational resource requirements of deep learning models pose a challenge for research teams or agricultural enterprises with limited hardware capabilities.

- Database compatibility issues: although cotton-specific genomic databases have been gradually improved in recent years, poor compatibility between different databases makes data integration and sharing difficult, thus hindering the efficient use of data.

- Data annotation and model generalization: The application of deep learning in genomic research relies heavily on high-quality annotated data. However, data acquisition in agriculture is constrained by environmental conditions and experimental design, leading to varying data quality. Furthermore, the complexity of genomic data means that the generalization ability of deep learning models needs further optimization to enhance their adaptability to different environments and breeding contexts.
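Before genomic sequences can be used by a deep learning model, they are commonly converted into a numeric matrix. A minimal sketch of one-hot encoding is shown below; the A/C/G/T column order and the choice to map ambiguous bases (such as `N`) to all-zero vectors are assumptions, as other conventions are also in use.

```python
def one_hot_dna(sequence):
    """Encode a DNA string as a list of 4-element one-hot vectors in A, C, G, T order.

    Ambiguous or unknown bases (e.g., 'N') become all-zero vectors."""
    index = {"A": 0, "C": 1, "G": 2, "T": 3}
    encoded = []
    for base in sequence.upper():
        vector = [0, 0, 0, 0]
        if base in index:
            vector[index[base]] = 1
        encoded.append(vector)
    return encoded
```

For example, `one_hot_dna("ACGN")` yields `[[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0]]`, an L x 4 matrix that convolutional or recurrent models can consume directly.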

2.2. Soil Testing and Precision Sowing

2.2.1. Application of Remote Sensing and Computer Vision in Soil Quality Assessment

- When combined with field sampling, laboratory analysis, and machine learning, remote sensing can effectively monitor key soil parameters such as organic carbon, nitrogen content, and salinization, thereby informing optimized management strategies.

- UAV imagery, satellite data, and ground-based spectral measurements support nitrogen management, planting density optimization, yield prediction, and soil degradation monitoring.

- Deep learning demonstrated superior performance in soil quality assessment and prediction, enabling efficient and accurate analysis of soil data.

- Limited spatial resolution hampers detection of fine-scale soil variations, constraining precision in localized management.

- Multi-source data fusion is hindered by inconsistencies in format, resolution, and quality across datasets, complicating integration and error correction.

- Environmental variability—including climate change, pest outbreaks, and anthropogenic factors—affects soil quality, yet current models insufficiently account for such dynamic influences.

- Acquisition and application of high-resolution remote sensing data to improve the detection of small-scale variations in soil properties;

- Optimization of multi-source data fusion techniques to increase compatibility across data sources and improve the efficiency of information integration;

- Developing robust deep learning models adaptable to environmental variability, improving the reliability of soil quality assessments in precision agriculture.
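One basic step in multi-source fusion is bringing layers of different spatial resolution onto a common grid. The sketch below aggregates a fine-resolution grid (for example, a UAV-derived soil index) to a coarser grid by block averaging. It assumes the two layers are already co-registered and that the resolution ratio is an integer; real pipelines must also handle reprojection, partial edge blocks, and no-data values.

```python
def block_average(grid, factor):
    """Downsample a 2-D grid (list of equal-length rows) by averaging
    non-overlapping factor x factor blocks.

    Rows/columns that do not fill a complete block are dropped, so the output
    has floor(rows / factor) x floor(cols / factor) cells."""
    rows, cols = len(grid), len(grid[0])
    out = []
    for r0 in range(0, rows - rows % factor, factor):
        out_row = []
        for c0 in range(0, cols - cols % factor, factor):
            block = [grid[r][c]
                     for r in range(r0, r0 + factor)
                     for c in range(c0, c0 + factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out
```

For instance, averaging a 4 x 4 grid with `factor=2` produces a 2 x 2 grid whose cells match a sensor with twice the pixel size, after which the layers can be stacked as model inputs.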

2.2.2. Deep Learning-Based Intelligent Sowing System

- Vision- and deep learning-based intelligent systems can monitor seeding quality in real time, optimize parameters, and promote agricultural automation.

- Combining UAV remote sensing, morphological analysis, and intelligent algorithms improves cotton emergence detection and supports replanting decisions.

- Environmental adaptability: current systems need better resilience to varying soil types, climates, and crop species.

- Multimodal fusion and precision decision-making: integrating remote sensing, ground monitoring, and DL to refine seeding strategies is still under development.

- High hardware costs: dependence on precision sensors and automated machinery limits adoption, especially in resource-limited regions.

- Enhancing model adaptability to improve the generalization of intelligent seeding systems under diverse field conditions;

- Optimizing data fusion strategies by integrating multi-source data—including remote sensing, ground-based monitoring, and machinery operation data—to improve seeding precision;

- Reducing hardware costs and increasing system accessibility to promote the widespread adoption of intelligent seeding systems in global agricultural production.
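Vision-based seeding monitors typically measure the spacing between consecutive seeds, and those measurements are then summarized into quality metrics that can drive parameter adjustments. The sketch below follows the spacing thresholds commonly used in planter evaluation (spacings at or below 0.5 times the nominal spacing count as multiples; spacings above 1.5 times count as misses). The spacing values in the example are hypothetical.

```python
def seeding_indices(spacings_cm, nominal_cm):
    """Classify measured seed spacings against a nominal spacing.

    Returns (multiple_index, miss_index, quality_of_feed_index) as fractions:
    - multiples: spacing <= 0.5 * nominal
    - misses:    spacing >  1.5 * nominal
    - quality of feed: everything in between."""
    if not spacings_cm:
        raise ValueError("need at least one spacing measurement")
    n = len(spacings_cm)
    multiples = sum(1 for s in spacings_cm if s <= 0.5 * nominal_cm)
    misses = sum(1 for s in spacings_cm if s > 1.5 * nominal_cm)
    quality_of_feed = (n - multiples - misses) / n
    return multiples / n, misses / n, quality_of_feed
```

With hypothetical spacings `[10, 4, 11, 25, 9, 10, 10, 12, 10, 9]` and a 10 cm nominal spacing, the function returns `(0.1, 0.1, 0.8)`: one multiple, one miss, and 80% of spacings within tolerance. A rising miss index during operation could, for example, prompt a check of the metering unit.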

2.3. Pest and Disease Detection and Management

2.3.1. Diagnosis of Cotton Pests and Diseases

2.3.2. Early Warning Systems and Precision Prevention and Control Strategies

- Integrating early warning with precise, evidence-based control strategies;

- Designing intuitive, user-friendly system interfaces;

- Adapting technologies to suit resource-constrained agricultural settings to facilitate broader adoption in precision pest and disease management.
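Early warning systems of this kind typically convert model outputs, such as daily pest counts detected in trap images, into alerts. The sketch below assumes a hypothetical daily count series and a hypothetical action threshold. It smooths the counts with a rolling mean and raises an alert only when that mean first crosses the threshold, so a sustained outbreak does not generate repeated notifications.

```python
from collections import deque

def early_warnings(daily_counts, threshold, window=3):
    """Return the day indices on which the rolling mean of pest counts over the
    last `window` days rises above `threshold` (rising edges only)."""
    recent = deque(maxlen=window)
    alerts, above = [], False
    for day, count in enumerate(daily_counts):
        recent.append(count)
        if len(recent) < window:
            continue  # not enough history yet for a full window
        mean = sum(recent) / window
        if mean > threshold and not above:
            alerts.append(day)  # new crossing: issue an alert
        above = mean > threshold
    return alerts
```

For the hypothetical series `[2, 3, 4, 8, 12, 15, 6, 3, 2, 10, 14, 16]` with a threshold of 8 and a 3-day window, alerts are raised on days 5 and 10. In a deployed system, the threshold would be set from an agronomic action threshold rather than chosen arbitrarily.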

3. The Application of Deep Learning in Cotton Growth Management

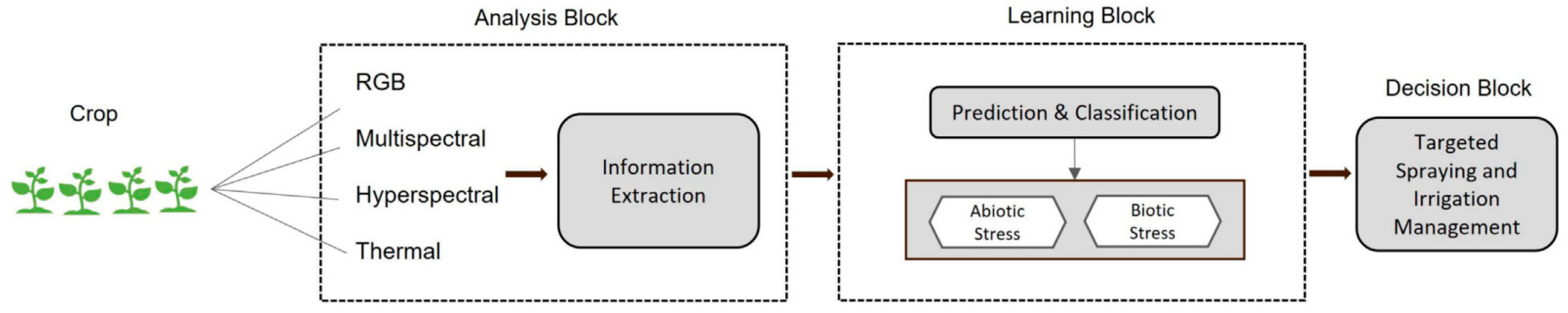

3.1. Crop Growth Monitoring and Health Assessment

3.1.1. Cotton Growth Monitoring

- Lighting variations: while preprocessing can mitigate illumination effects, extreme lighting and complex field environments (e.g., dynamic shadows) still affect detection stability, limiting practical deployment.

- Generalization limitations: models trained on data from specific cotton fields often lack validation across diverse regions, varieties, soils, and climates, reducing their adaptability.

- Model complexity: high computational demands and large parameter sizes hinder deployment on resource-limited platforms such as UAVs and field devices, necessitating lightweight, efficient architectures.
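Much of the saving in lightweight architectures such as MobileNet comes from replacing standard convolutions with depthwise separable ones (a per-channel k x k depthwise convolution followed by a 1 x 1 pointwise convolution). The arithmetic below counts weights for both layer types. The layer sizes in the example are hypothetical and biases are excluded by default.

```python
def conv_params(k, c_in, c_out, bias=False):
    """Number of parameters in a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def depthwise_separable_params(k, c_in, c_out, bias=False):
    """Parameters in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise
```

For a 3 x 3 layer with 128 input and 128 output channels, the standard convolution has 147,456 weights, whereas the separable version has 1,152 + 16,384 = 17,536, roughly 8.4 times fewer. This ratio, approximately 1/c_out + 1/k² of the original, is why such layers suit UAVs and field devices with limited memory and compute.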

3.1.2. Yield Prediction

- Model generalization: Most models are region-specific and lack cross-regional validation. Expanding datasets to cover diverse environments is essential to enhance adaptability and robustness.

- Quality of remote sensing data: UAV and satellite imagery are affected by weather, sensor precision, and acquisition methods, impacting model performance. Improved preprocessing techniques—such as denoising, illumination correction, and enhancement—are needed to ensure data consistency.

- Long-term prediction: Current models often focus on intra-season forecasts, with limited attention to multi-year trends. Incorporating multi-year remote sensing and meteorological data with temporal models (e.g., Transformers, Bi-LSTM) could improve long-term forecasting and strategic planning.
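Multi-year forecasting with temporal models such as Bi-LSTMs or Transformers requires reorganizing season-level records into fixed-length input sequences. The sketch below shows only that windowing step. It assumes each season is summarized as a feature vector (for example, hypothetical remote sensing and weather aggregates) aligned with that season's yield; the model itself is omitted.

```python
def make_windows(features, targets, window):
    """Pair each run of `window` consecutive seasons of features with the target
    (e.g., yield) of the season immediately after the run.

    Inputs and targets must be aligned and in chronological order."""
    if len(features) != len(targets):
        raise ValueError("features and targets must be aligned by season")
    return [
        (features[i:i + window], targets[i + window])
        for i in range(len(features) - window)
    ]
```

Because each input window ends strictly before its target season, no information from the predicted season leaks into the inputs. For the same reason, training and test sets would normally be split by year rather than at random.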

3.2. Intelligent Irrigation and Fertilization

- Model interpretability: deep learning models, though effective in uncertainty management, often lack transparency, limiting their applicability in agricultural settings that demand traceable and explainable decision-making.

- Data transmission and control limitations: in large-scale farms, data transmission delays—exacerbated by poor network infrastructure or high data volumes—can compromise real-time control, underscoring the need for efficient transmission protocols and edge computing.

- Data scarcity: In regions with limited or unstable meteorological and remote sensing data, model performance may degrade. Robust preprocessing and imputation techniques are essential to enhance model generalization and reliability.

- Integrating explainable AI (XAI) to improve model transparency and usability;

- Advancing edge computing and data transmission frameworks for real-time responsiveness;

- Enhancing data imputation and augmentation to support model performance in data-limited environments.
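As a simple baseline for the imputation direction above, short gaps in a meteorological time series can be filled by linear interpolation between the nearest observed values. The sketch assumes missing readings are marked as `None` and that leading or trailing gaps are filled with the nearest observation. More robust methods (for example, model-based or multi-station imputation) would be needed for long outages.

```python
def interpolate_gaps(values):
    """Fill None entries by linear interpolation between the nearest observed
    neighbours; leading/trailing gaps copy the nearest observed value."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            w = (i - left) / (right - left)  # fractional position inside the gap
            filled[i] = values[left] + w * (values[right] - values[left])
    return filled
```

For a hypothetical daily temperature series `[20.0, None, None, 26.0, None]`, the gaps are filled with approximately 22.0 and 24.0, and the final missing reading is set to 26.0.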

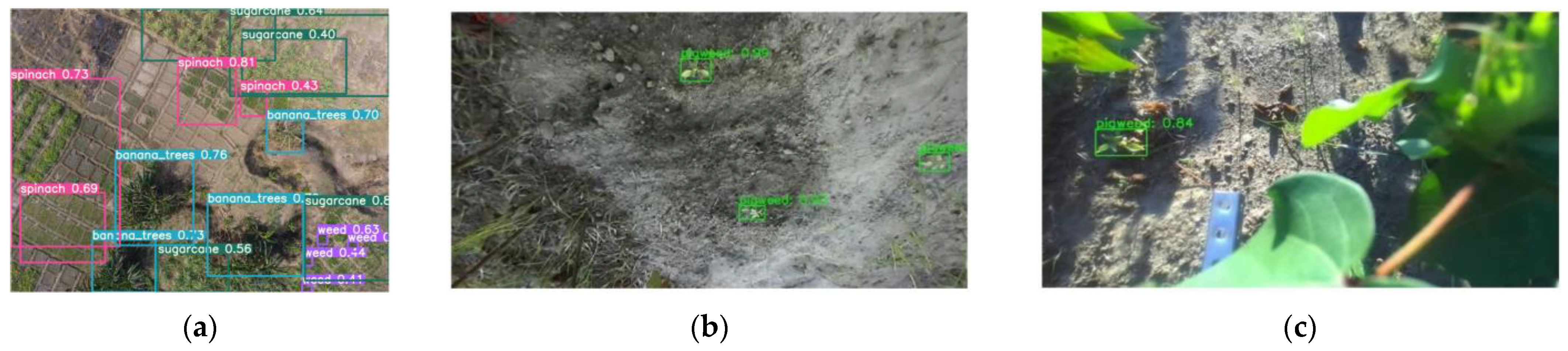

3.3. Weed Detection and Precision Weed Control

3.3.1. Field Weed Identification

- Complex field environments and weed variability: dynamic conditions—such as fluctuating lighting, soil backgrounds, and weed growth stages—can lead to recognition errors and reduce detection robustness.

- Limited generalization: many models are trained on region-specific datasets and recognize only a few weed species, with uncertain performance on unseen types or under diverse conditions.

- Short-term focus: Most research emphasizes single-season detection, with limited assessment of long-term weed control impacts on cotton growth and yield.

- Expanding dataset diversity to enhance model generalization across different environments, lighting, soil types, and weed stages;

- Incorporating multispectral and hyperspectral imaging to improve species-level discrimination, especially when weeds and crops have similar visual features;

- Developing long-term monitoring frameworks using temporal models (e.g., LSTM, Transformer) to assess the prolonged effects of weed management strategies on crop performance.
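A common first step in field weed identification is separating vegetation from the soil background, after which a deep learning classifier distinguishes weeds from cotton. The sketch below uses the Excess Green index (ExG = 2g − r − b on chromatic coordinates), a classic handcrafted vegetation index. The threshold of 0.1 is a hypothetical value; in practice it is often chosen adaptively (for example, with Otsu's method). Note that ExG alone cannot tell weeds from crop plants, which is one reason the spectral and deep learning approaches listed above are needed.

```python
def excess_green(r, g, b):
    """Excess Green index on chromatic coordinates: ExG = 2g - r - b."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no colour information
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn

def vegetation_mask(pixels, threshold=0.1):
    """Label each (R, G, B) pixel as vegetation (True) when ExG exceeds threshold.

    The default threshold is a hypothetical example value."""
    return [excess_green(*p) > threshold for p in pixels]
```

A green leaf pixel such as `(60, 160, 50)` scores about 0.78 and is kept, while a brownish soil pixel such as `(140, 110, 80)` scores about 0 and is masked out.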

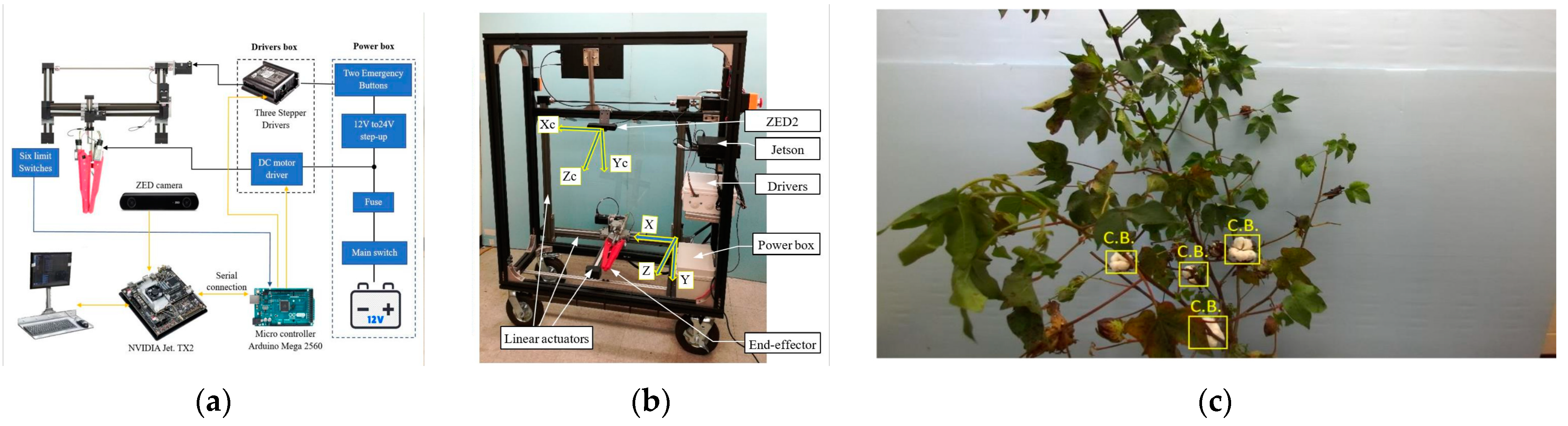

3.3.2. Weeding System and Intelligent Spraying System

A. Weeding System

- Limited generalizability and modularity: Most current systems are crop-specific and lack versatility. Future research can focus on modular tool designs that support quick attachment changes or develop multi-crop-compatible intelligent weeding platforms.

- Navigation and energy limitations: Autonomous navigation and energy supply remain critical bottlenecks. Enhancing localization accuracy and reducing reliance on human intervention, along with integrating renewable energy sources such as solar power, could improve performance and sustainability in large-scale operations.

B. Intelligent Spraying System

- Coordinated management in large-scale operations: In expansive fields, multiple UAVs or autonomous sprayers require effective task allocation, communication, and collaborative control. Optimizing path planning, avoiding redundant spraying, and coordinating task distribution are key research priorities;

- Precision spraying and environmental protection: While systems can adjust pesticide type and dosage based on disease classification, further improvements are needed to ensure chemicals are applied exclusively to target vegetation. Integration of path optimization, spray control algorithms, and advanced nozzle technologies is essential;

- Robustness under complex lighting conditions: Reduced recognition accuracy under shaded or uneven lighting remains a concern. Solutions may include multispectral imaging, high dynamic range techniques, and adaptive illumination correction algorithms.

- Developing collaborative control strategies for multi-device coordination;

- Enhancing environmental adaptability to maintain accuracy in complex conditions such as dense vegetation and low-light environments;

- Integrating multi-sensor fusion (e.g., RGB, thermal, and multispectral) to improve decision reliability in precision spraying.
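To make the idea of applying chemicals only to target vegetation concrete, the sketch below maps detected targets to the boom sections that should spray. It assumes a hypothetical boom divided into equal-width sections, each controlled by one nozzle, and assumes detector bounding boxes have already been projected to lateral extents in metres. Real systems must also compensate for travel speed, valve latency, and spray drift.

```python
def nozzles_to_open(targets, boom_width, n_nozzles, margin=0.0):
    """Return indices of boom sections whose lateral span overlaps any target.

    targets: list of (x_min, x_max) lateral extents in metres, measured from the
             left end of the boom (e.g., projected from detector bounding boxes).
    margin:  optional safety buffer in metres added on both sides of each target."""
    section = boom_width / n_nozzles
    open_ids = []
    for i in range(n_nozzles):
        left, right = i * section, (i + 1) * section
        # Open interval overlap test between the section and each buffered target.
        if any(x0 - margin < right and x1 + margin > left for x0, x1 in targets):
            open_ids.append(i)
    return open_ids
```

With a hypothetical 6 m boom split into 12 sections of 0.5 m and targets at `(0.7, 0.9)` and `(3.1, 3.6)`, only sections 1, 6, and 7 are opened, so the remaining nine sections stay closed over bare soil and crop rows.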

4. Application of Deep Learning in Cotton Harvesting and Processing

4.1. Intelligent Harvesting Robot

- Reliance on prior knowledge for obstacle avoidance: Most current algorithms depend on predefined information, limiting their ability to respond to unknown obstacles. Future research should explore self-supervised learning and model-free reinforcement learning to enable autonomous learning in complex environments.

- Limited navigation performance in complex terrains: Navigation accuracy and stability remain insufficient in scenarios involving curved paths, uneven terrain, and crop row transitions. Multimodal sensor fusion (e.g., combining RGB-D cameras and LiDAR) [159] may improve adaptability.

- Lack of open agricultural datasets: The scarcity of standardized datasets constrains model training and evaluation. Establishing large-scale, publicly available agricultural image and path datasets would enhance the generalization of deep learning models and accelerate intelligent equipment development.

- Developing adaptive obstacle avoidance strategies through autonomous learning in unknown environments;

- Optimizing path planning by combining reinforcement learning with dynamic search algorithms to improve performance in complex field conditions;

- Building comprehensive agricultural datasets to support robust model training and improve real-world applicability.
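As a reference point for the search-based component of path planning mentioned above, the sketch below implements A* on a 4-connected occupancy grid. The grid is a hypothetical abstraction of a field map (0 = traversable, 1 = obstacle), and the Manhattan-distance heuristic is admissible for unit-cost 4-connected moves. How such a planner would be combined with reinforcement learning is outside the scope of this sketch.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = obstacle)
    using A* with a Manhattan-distance heuristic.

    Returns a list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

On the grid `[[0, 0, 0, 0], [1, 1, 0, 1], [0, 0, 0, 0]]`, planning from `(0, 0)` to `(2, 0)` returns the only shortest route, which detours through the single gap at `(1, 2)`.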

4.2. Cotton Quality Inspection and Grading

4.2.1. Fiber Quality Inspection

- The quality of image capture may be influenced by lighting conditions and the positioning of samples, necessitating further optimization of hardware design to minimize the impact of environmental factors.

- For most models, detecting small targets remains a challenge. For instance, the detection capability of the model may decrease when identifying foreign fibers smaller than 0.5 mm².
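A short calculation shows why very small foreign fibers are hard to detect: at typical inspection resolutions they occupy only a handful of pixels. The sketch assumes square pixels, an in-focus object, and ignores fiber shape (a thin fiber may be narrower than a single pixel even when its area spans several). The resolutions used below are hypothetical.

```python
import math

def pixels_covered(area_mm2, mm_per_pixel):
    """Approximate number of pixels an object of the given area occupies when
    each pixel images a square of side mm_per_pixel."""
    return area_mm2 / (mm_per_pixel ** 2)

def required_resolution(area_mm2, min_pixels):
    """Pixel size (mm per pixel) needed for an object to cover at least min_pixels."""
    return math.sqrt(area_mm2 / min_pixels)
```

At 0.5 mm per pixel, a 0.5 mm² fiber covers only about 2 pixels; at 0.25 mm per pixel it covers about 8. Ensuring such a fiber covers at least 8 pixels therefore requires a pixel size of 0.25 mm or finer, which in turn raises camera, lighting, and throughput demands on a production line.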

4.2.2. Cotton Impurity Identification

- Limited datasets and poor model generalization: Most existing datasets are derived from controlled environments and fail to represent the variability of real-world conditions, such as lighting changes, background interference, and regional cotton color differences. Enhanced testing and optimization in diverse field and factory settings are needed to improve model robustness and generalizability.

- High computational complexity limiting real-time performance: On high-speed production lines, some deep learning models are too computationally intensive, hindering real-time detection. Future research should prioritize lightweight architectures (e.g., MobileNet, ShuffleNet) and employ techniques such as model pruning, quantization, and edge computing to enhance deployment efficiency.

- Expanding dataset size to enhance model generalization, ensuring high accuracy across varying lighting conditions, backgrounds, and cotton varieties;

- Optimizing model structures using lightweight CNNs, Transformers, and adaptive enhancement methods to ensure real-time, efficient detection;

- Incorporating multi-modal sensing technologies (e.g., polarization imaging, NIR spectroscopy) to enhance impurity recognition under complex conditions.
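The pruning and quantization techniques named above for production-line deployment can be illustrated on a flat list of weights. The sketch below shows unstructured magnitude pruning and symmetric per-tensor int8 quantization. It is a conceptual illustration only; frameworks apply these per layer, often with calibration data and fine-tuning, and the weights shown are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point weights."""
    return [scale * v for v in q]
```

Pruning `[0.1, -0.5, 0.05, 0.9]` at 50% sparsity zeroes the two smallest weights. After quantization, each weight is stored as an 8-bit integer plus one shared scale, and the reconstruction error per weight is at most half the scale, which is why accuracy often degrades only slightly while memory use falls roughly fourfold relative to 32-bit floats.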

5. Discussion

5.1. Challenges

5.1.1. High Cost of Data Acquisition and Annotation

5.1.2. Interpretability Issues of Deep Learning Models

5.1.3. Computational Resource Constraints and Challenges in Practical Deployment

5.2. Future Perspectives

- Developing XAI techniques, such as attention visualization and causal inference-based explanations, to reveal neural network decision processes;

- Creating lightweight, transparent models to reduce computational complexity, improve interpretability, and ensure their broad applicability in agriculture, thereby increasing trust in intelligent agricultural systems among farmers and researchers.

- Model compression and acceleration: Techniques such as pruning, quantization, knowledge distillation, and low-rank decomposition can significantly reduce model size and computational load. For instance, pruning eliminates redundant weights, while quantization replaces floating-point operations with low-bit integer calculations to accelerate inference [188];

- Lightweight network design: architectures such as MobileNet, ShuffleNet, EfficientDet, and YOLOv7 reduce resource consumption, making them suitable for deployment on mobile and embedded devices [189];

- Advanced architecture exploration: investigating state-of-the-art models such as YOLOv12 can enhance both detection accuracy and processing speed, improving adaptability in dynamic agricultural environments;

- Semi-supervised and self-supervised learning: These approaches reduce dependence on large labeled datasets and improve model generalization across diverse conditions. Additionally, methods such as wavelet interpolation transformations can be employed to further boost model robustness and performance [190,191].

- Optimizing in-memory computing architectures to reduce data transfer overhead, improve energy efficiency, and support heterogeneous hardware (e.g., GPUs, CPUs, and FPGAs) through universal scheduling frameworks [192];

- Leveraging cloud and distributed computing to provide more powerful training and inference capabilities for deep learning, while integrating cloud–edge collaborative computing to reduce data transmission and enhance the intelligence of agricultural equipment [193];

- Adopting hardware acceleration technologies, such as TensorRT, Google Edge TPU, and FPGAs, to boost model performance and reduce energy consumption [194];

- Designing energy-efficient AI devices to support low-power deep learning applications suitable for agricultural scenarios.

- Constructing multi-view, high-precision datasets that span growth stages, lighting conditions, and regions to improve model generalization;

- Applying transfer learning and few-shot learning to reduce dependence on extensive labeled data, enabling broader applicability in data-scarce regions [195];

- Fusing multi-modal data, such as remote sensing, meteorological, soil moisture, and growth data, to build comprehensive agricultural monitoring systems;

- Promoting open data sharing and establishing standardized cotton datasets to facilitate global collaboration and innovation.

- Early disease detection systems using multi-source data to monitor and control pests and diseases such as Fusarium wilt and cotton bollworm;

- Individual plant-level management through computer vision and UAVs for optimizing irrigation, fertilization, and pest control;

- Intelligent spraying and selective weeding systems using object detection to precisely apply agrochemicals, reducing environmental impact and improving efficiency.

- The large volumes of data generated during cotton cultivation and processing include personal information about farmers and agricultural workers. Appropriately handling and protecting these data, and ensuring that their use complies with ethical and legal standards, is therefore crucial for future development.

- Data sharing and collaboration should follow a governance framework that protects the interests of all parties involved, while also promoting data flow and sharing to support more precise agricultural decision-making.

- In regions with limited technological and financial resources, there may be challenges in adopting and benefiting from these technologies, which could further exacerbate the digital divide between rural and urban areas, as well as between countries.

- Future AI applications in agriculture should take into account accessibility across different regions and groups, ensuring that the use of AI does not widen the wealth gap, but instead helps a broader range of farmers and agricultural workers.

- As automation and intelligent equipment become more common, some traditional agricultural jobs may decrease or disappear. Therefore, future research should explore labor retraining and skill transition programs to help affected farmers and workers adapt to the changes brought by new technologies.

- Furthermore, the shift in agricultural production methods due to AI applications may affect agricultural policies, market supply and demand relationships, and global trade dynamics. Therefore, future studies should not only focus on technological advancements, but also conduct in-depth analysis of the potential socioeconomic consequences.
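The transfer learning and few-shot learning direction listed above can be illustrated with nearest-prototype classification in the style of prototypical networks. The sketch assumes that image embeddings have already been produced by a pretrained backbone (the transfer learning part, not shown). The two-dimensional embeddings and the class labels below are hypothetical.

```python
import math

def prototypes(support):
    """Average the embedding vectors of each class in a few-shot support set.

    support: dict mapping class label -> list of embedding vectors (same length)."""
    protos = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        protos[label] = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
    return protos

def classify(embedding, protos):
    """Assign the label of the nearest prototype by Euclidean distance."""
    return min(protos, key=lambda label: math.dist(embedding, protos[label]))
```

With only two labelled examples per class, such as `{"healthy": [[0.9, 0.1], [0.8, 0.2]], "diseased": [[0.1, 0.9], [0.2, 0.7]]}`, a new embedding `[0.7, 0.3]` is assigned to `"healthy"`. Adding a new class requires only a few labelled samples and no retraining of the backbone, which is what makes the approach attractive in data-scarce regions.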

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, Z.; Huang, J.; Yao, Y.; Peters, G.; Macdonald, B.; La Rosa, A.D.; Wang, Z.; Scherer, L. Environmental Impacts of Cotton and Opportunities for Improvement. Nat. Rev. Earth Environ. 2023, 4, 703–715.

- Tokel, D.; Dogan, I.; Hocaoglu-Ozyigit, A.; Ozyigit, I.I. Cotton Agriculture in Turkey and Worldwide Economic Impacts of Turkish Cotton. J. Nat. Fibers 2022, 19, 10648–10667.

- Chao, K. The Development of Cotton Textile Production in China; BRILL: Leiden, The Netherlands, 2020; Volume 74.

- Riello, G. Cotton Textiles and the Industrial Revolution in a Global Context. Past Present 2022, 255, 87–139.

- Kowalski, K.; Matera, R.; Sokołowicz, M.E. Cotton Matters. A Recognition and Comparison of the Cottonopolises in Central-Eastern Europe during the Industrial Revolution. Fibres Text. East. Eur. 2018, 26, 16–23.

- Khozhâlepesov, P.Z.; İsmailov, T.K. The Main Issues of the Development of Cotton and Textile Industry in the Republic of Uzbekistan. Int. Sci. J. Theor. Appl. Sci. 2022, 7, 158–160.

- Dochia, M.; Pustianu, M. Cotton dominant natural fibre: Production, properties and limitations in its production. Nat. Fibers 2017, 1.

- Chares Subash, M.; Muthiah, P. Eco-Friendly Degumming of Natural Fibers for Textile Applications: A Comprehensive Review. Clean. Eng. Technol. 2021, 5, 100304.

- Krifa, M.; Stevens, S.S. Cotton Utilization in Conventional and Non-Conventional textiles—A Statistical Review. Agric. Sci. 2016, 7, 747–758.

- Stevens, C.V. Industrial Applications of Natural Fibres: Structure, Properties and Technical Applications; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Randela, R. Integration of Emerging Cotton Farmers into the Commercial Agricultural Economy. Ph.D. Thesis, University of the Free State, Bloemfontein, South Africa, 2005.

- Salam, A. 3. Production, prices, and emerging challenges in the Pakistan cotton sector. In Cotton-Textile-Apparel Sectors of Pakistan; International Food Policy Research Institute: Washington, DC, USA, 2008; p. 22.

- Levidow, L.; Zaccaria, D.; Maia, R.; Vivas, E.; Todorovic, M.; Scardigno, A. Improving Water-Efficient Irrigation: Prospects and Difficulties of Innovative Practices. Agric. Water Manag. 2014, 146, 84–94.

- Dai, J.; Dong, H. Intensive Cotton Farming Technologies in China: Achievements, Challenges and Countermeasures. Field Crops Res. 2014, 155, 99–110.

- Luttrell, R.G.; Teague, T.G.; Brewer, M.J. Cotton Insect Pest Management. Cotton 2015, 57, 509–546.

- Adeleke, A.A. Technological Advancements in Cotton Agronomy: A Review and Prospects. Technol. Agron. 2024, 4, e008.

- Wang, H.; Zhang, X.; Mei, S. Shannon-Cosine Wavelet Precise Integration Method for Locust Slice Image Mixed Denoising. Math. Probl. Eng. 2020, 2020, 4989735.

- Tu, Y.-H.; Wang, R.-F.; Su, W.-H. Active Disturbance Rejection Control—New Trends in Agricultural Cybernetics in the Future: A Comprehensive Review. Machines 2025, 13, 111.

- Cui, K.; Li, R.; Polk, S.L.; Lin, Y.; Zhang, H.; Murphy, J.M.; Plemmons, R.J.; Chan, R.H. Superpixel-Based and Spatially-Regularized Diffusion Learning for Unsupervised Hyperspectral Image Clustering. IEEE Trans. Geosci. Remote Sens. 2024, 62, 4405818.

- Zhou, G.; Wang, R.-F. The Heterogeneous Network Community Detection Model Based on Self-Attention. Symmetry 2025, 17, 432.

- Cui, K.; Tang, W.; Zhu, R.; Wang, M.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Fine, P.; et al. Real-Time Localization and Bimodal Point Pattern Analysis of Palms Using UAV Imagery. arXiv 2024, arXiv:2410.11124.

- Cui, K.; Li, R.; Polk, S.L.; Murphy, J.M.; Plemmons, R.J.; Chan, R.H. Unsupervised Spatial-Spectral Hyperspectral Image Reconstruction and Clustering with Diffusion Geometry. In Proceedings of the 2022 12th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Rome, Italy, 13–16 September 2022; pp. 1–5.

- Zou, J.; Han, Y.; So, S.-S. Overview of Artificial Neural Networks. In Artificial Neural Networks: Methods and Applications; Humana Press: Totowa, NJ, USA, 2009; pp. 14–22.

- Wang, R.-F.; Su, W.-H. The Application of Deep Learning in the Whole Potato Production Chain: A Comprehensive Review. Agriculture 2024, 14, 1225.

- Wang, Z.; Wang, R.; Wang, M.; Lai, T.; Zhang, M. Self-Supervised Transformer-Based Pre-Training Method with General Plant Infection Dataset. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Urumqi, China, 18–20 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 189–202.

- Korir, N.K.; Han, J.; Shangguan, L.; Wang, C.; Kayesh, E.; Zhang, Y.; Fang, J. Plant Variety and Cultivar Identification: Advances and Prospects. Crit. Rev. Biotechnol. 2013, 33, 111–125.

- Kumar, V.; Aydav, P.S.S.; Minz, S. Crop Seeds Classification Using Traditional Machine Learning and Deep Learning Techniques: A Comprehensive Survey. SN Comput. Sci. 2024, 5, 1031.

- Zhu, S.; Zhou, L.; Gao, P.; Bao, Y.; He, Y.; Feng, L. Near-Infrared Hyperspectral Imaging Combined with Deep Learning to Identify Cotton Seed Varieties. Molecules 2019, 24, 3268.

- Liu, X.; Guo, P.; Xu, Q.; Du, W. Cotton Seed Cultivar Identification Based on the Fusion of Spectral and Textural Features. PLoS ONE 2024, 19, e0303219.

- Du, X.; Si, L.; Li, P.; Yun, Z. A Method for Detecting the Quality of Cotton Seeds Based on an Improved ResNet50 Model. PLoS ONE 2023, 18, e0273057.

- Li, Q.; Zhou, W.; Zhang, H. Integrating Spectral and Image Information for Prediction of Cottonseed Vitality. Front. Plant Sci. 2023, 14, 1298483.

- Liu, Z.; Wang, L.; Liu, Z.; Wang, X.; Hu, C.; Xing, J. Detection of Cotton Seed Damage Based on Improved YOLOv5. Processes 2023, 11, 2682.

- Liu, Y.; Lv, Z.; Hu, Y.; Dai, F.; Zhang, H. Improved Cotton Seed Breakage Detection Based on YOLOv5s. Agriculture 2022, 12, 1630.

- Zhang, H.; Li, Q.; Luo, Z. Efficient Online Detection Device and Method for Cottonseed Breakage Based on Light-YOLO. Front. Plant Sci. 2024, 15, 1418224.

- Yang, Z.; Gao, C.; Zhang, Y.; Yan, Q.; Hu, W.; Yang, L.; Wang, Z.; Li, F. Recent Progression and Future Perspectives in Cotton Genomic Breeding. J. Integr. Plant Biol. 2023, 65, 548–569.

- Su, J.; Song, S.; Wang, Y.; Zeng, Y.; Dong, T.; Ge, X.; Duan, H. Genome-Wide Identification and Expression Analysis of DREB Family Genes in Cotton. BMC Plant Biol. 2023, 23, 169.

- Xia, S.; Zhang, H.; He, S. Genome-Wide Identification and Expression Analysis of ACTIN Family Genes in the Sweet Potato and Its Two Diploid Relatives. Int. J. Mol. Sci. 2023, 24, 10930.

- Zhu, C.-C.; Wang, C.-X.; Lu, C.-Y.; Wang, J.-D.; Zhou, Y.; Xiong, M.; Zhang, C.-Q.; Liu, Q.-Q.; Li, Q.-F. Genome-Wide Identification and Expression Analysis of OsbZIP09 Target Genes in Rice Reveal Its Mechanism of Controlling Seed Germination. Int. J. Mol. Sci. 2021, 22, 1661.

- Zhu, T.; Liang, C.; Meng, Z.; Sun, G.; Meng, Z.; Guo, S.; Zhang, R. CottonFGD: An Integrated Functional Genomics Database for Cotton. BMC Plant Biol. 2017, 17, 101.

- Peng, Z.; Li, H.; Sun, G.; Dai, P.; Geng, X.; Wang, X.; Zhang, X.; Wang, Z.; Jia, Y.; Pan, Z.; et al. CottonGVD: A Comprehensive Genomic Variation Database for Cultivated Cottons. Front. Plant Sci. 2021, 12, 803736.

- Dai, F.; Chen, J.; Zhang, Z.; Liu, F.; Li, J.; Zhao, T.; Hu, Y.; Zhang, T.; Fang, L. COTTONOMICS: A Comprehensive Cotton Multi-Omics Database. Database 2022, 2022, baac080.

- Yu, J.; Jung, S.; Cheng, C.-H.; Lee, T.; Zheng, P.; Buble, K.; Crabb, J.; Humann, J.; Hough, H.; Jones, D.; et al. CottonGen: The Community Database for Cotton Genomics, Genetics, and Breeding Research. Plants 2021, 10, 2805.

- Li, L.; Chang, H.; Zhao, S.; Liu, R.; Yan, M.; Li, F.; El-Sheery, N.I.; Feng, Z.; Yu, S. Combining High-Throughput Deep Learning Phenotyping and GWAS to Reveal Genetic Variants of Fruit Branch Angle in Upland Cotton. Ind. Crops Prod. 2024, 220, 119180.

- Zhang, M.; Deng, Y.; Shi, W.; Wang, L.; Zhou, N.; Heng, W.; Zhang, Z.; Guan, X.; Zhao, T. Predicting Cold-Stress Responsive Genes in Cotton with Machine Learning Models. Crop Des. 2024, 4, 100085.

- Zhao, T.; Wu, H.; Wang, X.; Zhao, Y.; Wang, L.; Pan, J.; Mei, H.; Han, J.; Wang, S.; Lu, K.; et al. Integration of eQTL and Machine Learning to Dissect Causal Genes with Pleiotropic Effects in Genetic Regulation Networks of Seed Cotton Yield. Cell Rep. 2023, 42, 113111.

- Xu, N.; Fok, M.; Li, J.; Yang, X.; Yan, W. Optimization of Cotton Variety Registration Criteria Aided with a Genotype-by-Trait Biplot Analysis. Sci. Rep. 2017, 7, 17237.

- Li, Y.; Huang, G.; Lu, X.; Gu, S.; Zhang, Y.; Li, D.; Guo, M.; Zhang, Y.; Guo, X. Research on the Evolutionary History of the Morphological Structure of Cotton Seeds: A New Perspective Based on High-Resolution Micro-CT Technology. Front. Plant Sci. 2023, 14, 1219476.

- Dhaliwal, J.K.; Panday, D.; Saha, D.; Lee, J.; Jagadamma, S.; Schaeffer, S.; Mengistu, A. Predicting and Interpreting Cotton Yield and Its Determinants under Long-Term Conservation Management Practices Using Machine Learning. Comput. Electron. Agric. 2022, 199, 107107.

- Aghayev, A.; Řezník, T.; Konečný, M. Enhancing Agricultural Productivity: Integrating Remote Sensing Techniques for Cotton Yield Monitoring and Assessment. ISPRS Int. J. Geo-Inf. 2024, 13, 340.

- Jia, Y.; Li, Y.; He, J.; Biswas, A.; Siddique, K.H.; Hou, Z.; Luo, H.; Wang, C.; Xie, X. Enhancing Precision Nitrogen Management for Cotton Cultivation in Arid Environments Using Remote Sensing Techniques. Field Crops Res. 2025, 321, 109689.

- Carneiro, F.M.; de Brito Filho, A.L.; Ferreira, F.M.; Junior, G.d.F.S.; Brandao, Z.N.; da Silva, R.P.; Shiratsuchi, L.S. Soil and Satellite Remote Sensing Variables Importance Using Machine Learning to Predict Cotton Yield. Smart Agric. Technol. 2023, 5, 100292.

- Qi, G.; Chang, C.; Yang, W.; Zhao, G. Soil Salinity Inversion in Coastal Cotton Growing Areas: An Integration Method Using Satellite-Ground Spectral Fusion and Satellite-UAV Collaboration. Land Degrad. Dev. 2022, 33, 2289–2302.

- Tian, F.; Ransom, C.J.; Zhou, J.; Wilson, B.; Sudduth, K.A. Assessing the Impact of Soil and Field Conditions on Cotton Crop Emergence Using UAV-Based Imagery. Comput. Electron. Agric. 2024, 218, 108738.

- Feng, A.; Zhou, J.; Vories, E.; Sudduth, K.A. Prediction of Cotton Yield Based on Soil Texture, Weather Conditions and UAV Imagery Using Deep Learning. Precis. Agric. 2024, 25, 303–326.

- Ghazal, S.; Munir, A.; Qureshi, W.S. Computer Vision in Smart Agriculture and Precision Farming: Techniques and Applications. Artif. Intell. Agric. 2024, 13, 64–83.

- Zhao, D.; Arshad, M.; Li, N.; Triantafilis, J. Predicting Soil Physical and Chemical Properties Using Vis-NIR in Australian Cotton Areas. Catena 2021, 196, 104938.

- Liu, W.; Zhou, J.; Zhang, T.; Zhang, P.; Yao, M.; Li, J.; Sun, Z.; Ma, G.; Chen, X.; Hu, J. Key Technologies in Intelligent Seeding Machinery for Cereals: Recent Advances and Future Perspectives. Agriculture 2024, 15, 8.

- Li, Y.; Song, Z.; Li, F.; Yan, Y.; Tian, F.; Sun, X. Design and Test of Combined Air Suction Cotton Breed Seeder. J. Eng. 2020, 2020, 7598164.

- Abbas, Q.; Ahmad, S. Effect of Different Sowing Times and Cultivars on Cotton Fiber Quality under Stable Cotton-Wheat Cropping System in Southern Punjab, Pakistan. Pak. J. Life Soc. Sci. 2018, 16, 77–84.

- Kiliç, H.; Gürsoy, S. Effect of Seeding Rate on Yield and Yield Components of Durum Wheat Cultivars in Cotton-Wheat Cropping System. Sci. Res. Essays 2010, 5, 2078–2084.

- Wang, Y.; Yang, Y. Research on Application of Smart Agriculture in Cotton Production Management. In Proceedings of the 2020 International Workshop on Electronic Communication and Artificial Intelligence (IWECAI), Shanghai, China, 12–14 June 2020; pp. 120–123.

- Bai, S.; Yuan, Y.; Niu, K.; Shi, Z.; Zhou, L.; Zhao, B.; Wei, L.; Liu, L.; Zheng, Y.; An, S.; et al. Design and Experiment of a Sowing Quality Monitoring System of Cotton Precision Hill-Drop Planters. Agriculture 2022, 12, 1117.

- Supak, J.; Boman, R. Making Replant Decisions; Texas A&M AgriLife Extension Department: College Station, TX, USA, 2024.

- Butler, S. Making the Replant Decision: Utilization of an Aerial Platform to Guide Replant Decisions in Tennessee Cotton. Ph.D. Thesis, University of Tennessee, Knoxville, TN, USA, 2019.

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; La Grassa, R.; Boschetti, M. Deep Object Detection of Crop Weeds: Performance of YOLOv7 on a Real Case Dataset from UAV Images. Remote Sens. 2023, 15, 539. [Google Scholar] [CrossRef]

- Xu, Q.; Jin, M.; Guo, P. Enhancing Cotton Seedling Recognition: A Method for High-Resolution UAV Remote Sensing Images. Int. J. Remote Sens. 2025, 46, 105–118. [Google Scholar] [CrossRef]

- Hillocks, R. Integrated Management of Insect Pests, Diseases and Weeds of Cotton in Africa. Integr. Pest Manag. Rev. 1995, 1, 31–47. [Google Scholar] [CrossRef]

- He, Q.; Ma, B.; Qu, D.; Zhang, Q.; Hou, X.; Zhao, J. Cotton Pests and Diseases Detection Based on Image Processing. TELKOMNIKA Indones. J. Electr. Eng. 2013, 11, 3445–3450. [Google Scholar] [CrossRef]

- Manavalan, R. Towards an Intelligent Approaches for Cotton Diseases Detection: A Review. Comput. Electron. Agric. 2022, 200, 107255. [Google Scholar] [CrossRef]

- Toscano-Miranda, R.; Toro, M.; Aguilar, J.; Caro, M.; Marulanda, A.; Trebilcok, A. Artificial-Intelligence and Sensing Techniques for the Management of Insect Pests and Diseases in Cotton: A Systematic Literature Review. J. Agric. Sci. 2022, 160, 16–31. [Google Scholar] [CrossRef]

- M., A.; Zekiwos, M.; Bruck, A. Deep Learning-Based Image Processing for Cotton Leaf Disease and Pest Diagnosis. J. Electr. Comput. Eng. 2021, 2021, 9981437. [Google Scholar] [CrossRef]

- Memon, M.S.; Kumar, P.; Iqbal, R. Meta Deep Learn Leaf Disease Identification Model for Cotton Crop. Computers 2022, 11, 102. [Google Scholar] [CrossRef]

- Ganguly, S.; Jathin, S.; Mohak, M.; Sanjay, M.; Somashekhar, B.; Shobharani, N. Automated Detection and Classification of Cotton Leaf Diseases: A Computer Vision Approach. In Proceedings of the 2024 International Conference on Advances in Modern Age Technologies for Health and Engineering Science (AMATHE), Shivamogga, India, 16–17 May 2024; pp. 1–7. [Google Scholar]

- Remya, S.; Anjali, T.; Abhishek, S.; Ramasubbareddy, S.; Cho, Y. The Power of Vision Transformers and Acoustic Sensors for Cotton Pest Detection. IEEE Open J. Comput. Soc. 2024, 5, 356–367. [Google Scholar]

- Lin, Z.; Xie, L.; Bian, Y.; Zhou, L.; Zhang, X.; Shi, M. Research on Cotton Pest and Disease Identification Method Based on RegNet-CMTL. In Proceedings of the 2024 39th Youth Academic Annual Conference of Chinese Association of Automation (YAC), Dalian, China, 7–9 June 2024; pp. 1358–1363. [Google Scholar]

- Zhang, Y.; Ma, B.; Hu, Y.; Li, C.; Li, Y. Accurate Cotton Diseases and Pests Detection in Complex Background Based on an Improved YOLOX Model. Comput. Electron. Agric. 2022, 203, 107484. [Google Scholar] [CrossRef]

- Li, R.; He, Y.; Li, Y.; Qin, W.; Abbas, A.; Ji, R.; Li, S.; Wu, Y.; Sun, X.; Yang, J. Identification of Cotton Pest and Disease Based on CFNet-VoV-GCSP-LSKNet-YOLOv8s: A New Era of Precision Agriculture. Front. Plant Sci. 2024, 15, 1348402. [Google Scholar] [CrossRef]

- Gao, R.; Dong, Z.; Wang, Y.; Cui, Z.; Ye, M.; Dong, B.; Lu, Y.; Wang, X.; Song, Y.; Yan, S. Intelligent Cotton Pest and Disease Detection: Edge Computing Solutions with Transformer Technology and Knowledge Graphs. Agriculture 2024, 14, 247. [Google Scholar] [CrossRef]

- Yang, S.; Zhou, G.; Feng, Y.; Zhang, J.; Jia, Z. SRNet-YOLO: A Model for Detecting Tiny and Very Tiny Pests in Cotton Fields Based on Super-Resolution Reconstruction. Front. Plant Sci. 2024, 15, 1416940. [Google Scholar] [CrossRef]

- Caldeira, R.F.; Santiago, W.E.; Teruel, B. Identification of Cotton Leaf Lesions Using Deep Learning Techniques. Sensors 2021, 21, 3169. [Google Scholar] [CrossRef]

- Pechuho, N.; Khan, Q.; Kalwar, S. Cotton Crop Disease Detection Using Machine Learning via Tensorflow. PakJET 2020, 3, 126–130. [Google Scholar] [CrossRef]

- Wu, Q.; Zeng, J.; Wu, K. Research and Application of Crop Pest Monitoring and Early Warning Technology in China. Front. Agric. Sci. Eng. 2022, 9, 19–36. [Google Scholar] [CrossRef]

- Zhang, R.; Ma, T. Study on Early-Warning System of Cotton Production in Hebei Province. In Proceedings of the International Conference on Computer and Computing Technologies in Agriculture, Beijing, China, 18–20 October 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 973–980. [Google Scholar]

- Cao, B.; Zhou, P.; Chen, W.; Wang, H.; Liu, S. Real-Time Monitoring and Early Warning of Cotton Diseases and Pests Based on Agricultural Internet of Things. Procedia Comput. Sci. 2024, 243, 253–260. [Google Scholar] [CrossRef]

- Sitokonstantinou, V.; Koukos, A.; Kontoes, C.; Bartsotas, N.S.; Karathanassi, V. Semi-Supervised Phenology Estimation in Cotton Parcels with Sentinel-2 Time-Series. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 8491–8494. [Google Scholar]

- Islam, M.M.; Talukder, M.A.; Sarker, M.R.A.; Uddin, M.A.; Akhter, A.; Sharmin, S.; Al Mamun, M.S.; Debnath, S.K. A Deep Learning Model for Cotton Disease Prediction Using Fine-Tuning with Smart Web Application in Agriculture. Intell. Syst. Appl. 2023, 20, 200278. [Google Scholar] [CrossRef]

- Jia, B.; He, H.; Ma, F.; Diao, M.; Jiang, G.; Zheng, Z.; Cui, J.; Fan, H. Use of a Digital Camera to Monitor the Growth and Nitrogen Status of Cotton. Sci. World J. 2014, 2014, 602647. [Google Scholar] [CrossRef]

- Zhao, D.; Reddy, K.R.; Kakani, V.G.; Read, J.J.; Koti, S. Canopy Reflectance in Cotton for Growth Assessment and Lint Yield Prediction. Eur. J. Agron. 2007, 26, 335–344. [Google Scholar] [CrossRef]

- Yang, S.; Wang, R.; Zheng, J.; Zhao, P.; Han, W.; Mao, X.; Fan, H. Cotton Growth Monitoring Combined with Coefficient of Variation Method and Machine Learning Model. Bull. Surv. Mapp. 2024, 111–116. [Google Scholar] [CrossRef]

- Wan, S.; Zhao, K.; Lu, Z.; Li, J.; Lu, T.; Wang, H. A Modularized IoT Monitoring System with Edge-Computing for Aquaponics. Sensors 2022, 22, 9260. [Google Scholar] [CrossRef] [PubMed]

- Feng, A.; Zhou, J.; Vories, E.; Sudduth, K.A. Evaluation of Cotton Emergence Using UAV-Based Imagery and Deep Learning. Comput. Electron. Agric. 2020, 177, 105711. [Google Scholar] [CrossRef]

- Xu, R.; Li, C.; Paterson, A.H.; Jiang, Y.; Sun, S.; Robertson, J.S. Aerial Images and Convolutional Neural Network for Cotton Bloom Detection. Front. Plant Sci. 2018, 8, 2235. [Google Scholar] [CrossRef]

- Jiang, Y.; Li, C.; Xu, R.; Sun, S.; Robertson, J.S.; Paterson, A.H. DeepFlower: A Deep Learning-Based Approach to Characterize Flowering Patterns of Cotton Plants in the Field. Plant Methods 2020, 16, 156. [Google Scholar] [CrossRef]

- Wang, S.; Li, Y.; Yuan, J.; Song, L.; Liu, X.; Liu, X. Recognition of Cotton Growth Period for Precise Spraying Based on Convolution Neural Network. Inf. Process. Agric. 2021, 8, 219–231. [Google Scholar] [CrossRef]

- Jin, K.; Zhang, J.; Liu, N.; Li, M.; Ma, Z.; Wang, Z.; Zhang, J.; Yin, F. Improved MobileVit Deep Learning Algorithm Based on Thermal Images to Identify the Water State in Cotton. Agric. Water Manag. 2025, 310, 109365. [Google Scholar] [CrossRef]

- Zhao, L.; Um, D.; Nowka, K.; Landivar-Scott, J.L.; Landivar, J.; Bhandari, M. Cotton Yield Prediction Utilizing Unmanned Aerial Vehicles (UAV) and Bayesian Neural Networks. Comput. Electron. Agric. 2024, 226, 109415. [Google Scholar] [CrossRef]

- Yildirim, T.; Moriasi, D.N.; Starks, P.J.; Chakraborty, D. Using Artificial Neural Network (ANN) for Short-Range Prediction of Cotton Yield in Data-Scarce Regions. Agronomy 2022, 12, 828. [Google Scholar] [CrossRef]

- Meghraoui, K.; Sebari, I.; Pilz, J.; Ait El Kadi, K.; Bensiali, S. Applied Deep Learning-Based Crop Yield Prediction: A Systematic Analysis of Current Developments and Potential Challenges. Technologies 2024, 12, 43. [Google Scholar] [CrossRef]

- Mishra, S.; Mishra, D.; Santra, G.H. Applications of Machine Learning Techniques in Agricultural Crop Production: A Review Paper. Indian J. Sci. Technol 2016, 9, 1–14. [Google Scholar] [CrossRef]

- Shahid, M.F.; Khanzada, T.J.; Aslam, M.A.; Hussain, S.; Baowidan, S.A.; Ashari, R.B. An Ensemble Deep Learning Models Approach Using Image Analysis for Cotton Crop Classification in AI-Enabled Smart Agriculture. Plant Methods 2024, 20, 104. [Google Scholar] [CrossRef] [PubMed]

- Xu, W.; Chen, P.; Zhan, Y.; Chen, S.; Zhang, L.; Lan, Y. Cotton Yield Estimation Model Based on Machine Learning Using Time Series UAV Remote Sensing Data. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102511. [Google Scholar] [CrossRef]

- Oikonomidis, A.; Catal, C.; Kassahun, A. Hybrid Deep Learning-Based Models for Crop Yield Prediction. Appl. Artif. Intell. 2022, 36, 2031822. [Google Scholar] [CrossRef]

- Nguyen, L.H.; Zhu, J.; Lin, Z.; Du, H.; Yang, Z.; Guo, W.; Jin, F. Spatial-Temporal Multi-Task Learning for within-Field Cotton Yield Prediction. In Advances in Knowledge Discovery and Data Mining, Proceedings of the 23rd Pacific-Asia Conference, PAKDD 2019, Macau, China, 14–17 April 2019; Proceedings, Part I 23; Springer: Berlin/Heidelberg, Germany, 2019; pp. 343–354. [Google Scholar]

- Kang, X.; Huang, C.; Zhang, L.; Zhang, Z.; Lv, X. Downscaling Solar-Induced Chlorophyll Fluorescence for Field-Scale Cotton Yield Estimation by a Two-Step Convolutional Neural Network. Comput. Electron. Agric. 2022, 201, 107260. [Google Scholar] [CrossRef]

- Niu, H.; Peddagudreddygari, J.R.; Bhandari, M.; Landivar, J.A.; Bednarz, C.W.; Duffield, N. In-Season Cotton Yield Prediction with Scale-Aware Convolutional Neural Network Models and Unmanned Aerial Vehicle RGB Imagery. Sensors 2024, 24, 2432. [Google Scholar] [CrossRef]

- Zhang, M.; Chen, W.; Gao, P.; Li, Y.; Tan, F.; Zhang, Y.; Ruan, S.; Xing, P.; Guo, L. YOLO SSPD: A Small Target Cotton Boll Detection Model during the Boll-Spitting Period Based on Space-to-Depth Convolution. Front. Plant Sci. 2024, 15, 1409194. [Google Scholar] [CrossRef]

- Haghverdi, A.; Washington-Allen, R.A.; Leib, B.G. Prediction of Cotton Lint Yield from Phenology of Crop Indices Using Artificial Neural Networks. Comput. Electron. Agric. 2018, 152, 186–197. [Google Scholar] [CrossRef]

- Pravallika, K.; Karuna, G.; Anuradha, K.; Srilakshmi, V. Deep Neural Network Model for Proficient Crop Yield Prediction. Proc. E3S Web Conf. 2021, 309, 01031. [Google Scholar] [CrossRef]

- Liu, H.; Meng, L.; Zhang, X.; Susan, U.; Ning, D.; Sun, S. Estimation Model of Cotton Yield with Time Series Landsat Images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 215–220. [Google Scholar]

- Koudahe, K.; Sheshukov, A.Y.; Aguilar, J.; Djaman, K. Irrigation-Water Management and Productivity of Cotton: A Review. Sustainability 2021, 13, 10070. [Google Scholar] [CrossRef]

- Li, L.; Wang, H.; Wu, Y.; Chen, S.; Wang, H.; Sigrimis, N.A. Investigation of Strawberry Irrigation Strategy Based on K-Means Clustering Algorithm. Trans. Chin. Soc. Agric. Mach. 2020, 51, 295–302. [Google Scholar]

- Yuan, H.; Cheng, M.; Pang, S.; Li, L.; Wang, H.; NA, S. Construction and Performance Experiment of Integrated Water and Fertilization Irrigation Recycling System. Trans. Chin. Soc. Agric. Eng. 2014, 30, 72–78. [Google Scholar]

- Li, L.; Li, J.; Wang, H.; Georgieva, T.; Ferentinos, K.; Arvanitis, K.; Sygrimis, N. Sustainable Energy Management of Solar Greenhouses Using Open Weather Data on MACQU Platform. Int. J. Agric. Biol. Eng. 2018, 11, 74–82. [Google Scholar] [CrossRef]

- Phasinam, K.; Kassanuk, T.; Shinde, P.P.; Thakar, C.M.; Sharma, D.K.; Mohiddin, M.K.; Rahmani, A.W. Application of IoT and Cloud Computing in Automation of Agriculture Irrigation. J. Food Qual. 2022, 2022, 8285969. [Google Scholar] [CrossRef]

- Singh, G.; Sharma, D.; Goap, A.; Sehgal, S.; Shukla, A.; Kumar, S. Machine Learning Based Soil Moisture Prediction for Internet of Things Based Smart Irrigation System. In Proceedings of the 2019 5th International Conference on Signal Processing, Computing and Control (ISPCC), Solan, India, 10–12 October 2019; pp. 175–180. [Google Scholar]

- Janani, M.; Jebakumar, R. A Study on Smart Irrigation Using Machine Learning. Cell Cell. Life Sci. J. 2019, 4, 1–8. [Google Scholar] [CrossRef]

- Wang, H.; Fu, Q.; Meng, F.; Mei, S.; Wang, J.; Li, L. Optimal Design and Experiment of Fertilizer EC Regulation Based on Subsection Control Algorithm of Fuzzy and PI. Trans. Chin. Soc. Agric. Eng. 2016, 32, 110–116. [Google Scholar]

- Chen, Y.; Lin, M.; Yu, Z.; Sun, W.; Fu, W.; He, L. Enhancing Cotton Irrigation with Distributional Actor–Critic Reinforcement Learning. Agric. Water Manag. 2025, 307, 109194. [Google Scholar] [CrossRef]

- Ramirez, J.G.C. Optimizing Water and Fertilizer Use in Agriculture Through AI-Driven IoT Networks: A Comprehensive Analysis. Artif. Intell. Mach. Learn. Rev. 2025, 6, 1–7. [Google Scholar]

- Magesh, S. A Convolutional Neural Network Model and Algorithm Driven Prototype for Sustainable Tilling and Fertilizer Optimization. npj Sustain. Agric. 2025, 3, 5. [Google Scholar] [CrossRef]

- Zhu, F.; Zhang, L.; Hu, X.; Zhao, J.; Meng, Z.; Zheng, Y. Research and Design of Hybrid Optimized Backpropagation (BP) Neural Network PID Algorithm for Integrated Water and Fertilizer Precision Fertilization Control System for Field Crops. Agronomy 2023, 13, 1423. [Google Scholar] [CrossRef]

- Sami, M.; Khan, S.Q.; Khurram, M.; Farooq, M.U.; Anjum, R.; Aziz, S.; Qureshi, R.; Sadak, F. A Deep Learning-Based Sensor Modeling for Smart Irrigation System. Agronomy 2022, 12, 212. [Google Scholar] [CrossRef]

- Abioye, E.A.; Hensel, O.; Esau, T.J.; Elijah, O.; Abidin, M.S.Z.; Ayobami, A.S.; Yerima, O.; Nasirahmadi, A. Precision Irrigation Management Using Machine Learning and Digital Farming Solutions. AgriEngineering 2022, 4, 70–103. [Google Scholar] [CrossRef]

- Nalini, K.; Murhukrishnan, P.; Chinnusamy, C.; Vennila, C. Weeds of Cotton–A Review. Agric. Rev. 2015, 36, 140–146. [Google Scholar] [CrossRef]

- Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Zhou, W.; Memon, N. Automatic Visual Recognition, Detection and Classification of Weeds in Cotton Fields Based on Machine Vision. Crop Prot. 2025, 187, 106966. [Google Scholar] [CrossRef]

- Shen, B.; Chen, S.; Yin, J.; Mao, H. Image Recognition of Green Weeds in Cotton Fields Based on Color Feature. Trans. Chin. Soc. Agric. Eng. 2009, 25, 163–167. [Google Scholar]

- Jin, X.; Che, J.; Chen, Y. Weed Identification Using Deep Learning and Image Processing in Vegetable Plantation. IEEE Access 2021, 9, 10940–10950. [Google Scholar] [CrossRef]

- Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer Neural Network for Weed and Crop Classification of High Resolution UAV Images. Remote Sens. 2022, 14, 592. [Google Scholar] [CrossRef]

- Ajayi, O.G.; Ashi, J.; Guda, B. Performance Evaluation of YOLO v5 Model for Automatic Crop and Weed Classification on UAV Images. Smart Agric. Technol. 2023, 5, 100231. [Google Scholar] [CrossRef]

- Chang-Tao, Z.; Rui-Feng, W.; Yu-Hao, T.; Xiao-Xu, P.; Wen-Hao, S. Automatic Lettuce Weed Detection and Classification Based on Optimized Convolutional Neural Networks for Robotic Weed Control. Agronomy 2024, 14, 2838. [Google Scholar] [CrossRef]

- Rahman, A.; Lu, Y.; Wang, H. Performance Evaluation of Deep Learning Object Detectors for Weed Detection for Cotton. Smart Agric. Technol. 2023, 3, 100126. [Google Scholar] [CrossRef]

- Mwitta, C.; Rains, G.C.; Prostko, E.P. Autonomous Diode Laser Weeding Mobile Robot in Cotton Field Using Deep Learning, Visual Servoing and Finite State Machine. Front. Agron. 2024, 6, 1388452. [Google Scholar] [CrossRef]

- Hasan, A.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A Survey of Deep Learning Techniques for Weed Detection from Images. Comput. Electron. Agric. 2021, 184, 106067. [Google Scholar] [CrossRef]

- Maja, J.M.; Cutulle, M.; Barnes, E.; Enloe, J.; Weber, J. Mobile Robot Weeder Prototype for Cotton Production. 2021. Available online: https://www.cabidigitallibrary.org/doi/pdf/10.5555/20220278590 (accessed on 1 April 2025).

- Shao, L.; Gong, J.; Fan, W.; Zhang, Z.; Zhang, M. Cost Comparison between Digital Management and Traditional Management of Cotton fields—Evidence from Cotton Fields in Xinjiang, China. Agriculture 2022, 12, 1105. [Google Scholar] [CrossRef]

- Lytridis, C.; Pachidis, T. Recent Advances in Agricultural Robots for Automated Weeding. AgriEngineering 2024, 6, 3279–3296. [Google Scholar] [CrossRef]

- Qin, Y.-M.; Tu, Y.-H.; Li, T.; Ni, Y.; Wang, R.-F.; Wang, H. Deep Learning for Sustainable Agriculture: A Systematic Review on Applications in Lettuce Cultivation. Sustainability 2025, 17, 3190. [Google Scholar] [CrossRef]

- Quan, L.; Jiang, W.; Li, H.; Li, H.; Wang, Q.; Chen, L. Intelligent Intra-Row Robotic Weeding System Combining Deep Learning Technology with a Targeted Weeding Mode. Biosyst. Eng. 2022, 216, 13–31. [Google Scholar] [CrossRef]

- Chang, C.-L.; Xie, B.-X.; Chung, S.-C. Mechanical Control with a Deep Learning Method for Precise Weeding on a Farm. Agriculture 2021, 11, 1049. [Google Scholar] [CrossRef]

- Ilangovan, P.; Meena, K.; Begum, M.S. Weedbot: Weed Easy Extraction Using Deep Learning with a Robotic System. Proc. J. Phys. Conf. Ser. 2024, 2923, 012003. [Google Scholar] [CrossRef]

- Wang, R.-F.; Tu, Y.-H.; Chen, Z.-Q.; Zhao, C.-T.; Su, W.-H. A Lettpoint-Yolov11l Based Intelligent Robot for Precision Intra-Row Weeds Control in Lettuce. Available at SSRN 5162748. Available online: https://ssrn.com/abstract=5162748 (accessed on 1 April 2025).

- Barnes, E.; Morgan, G.; Hake, K.; Devine, J.; Kurtz, R.; Ibendahl, G.; Sharda, A.; Rains, G.; Snider, J.; Maja, J.M.; et al. Opportunities for Robotic Systems and Automation in Cotton Production. AgriEngineering 2021, 3, 339–362. [Google Scholar] [CrossRef]

- Fan, X.; Chai, X.; Zhou, J.; Sun, T. Deep Learning Based Weed Detection and Target Spraying Robot System at Seedling Stage of Cotton Field. Comput. Electron. Agric. 2023, 214, 108317. [Google Scholar] [CrossRef]

- Meena, S.B.; Karthickraja, A.; Saravanane, P. Harnessing AI and Robotics for Smart Weed Management in Cotton. In Recent Trends in Agriculture and Allied Sciences; JPS Scientific Publications: Tamil Nadu, India, 2024; pp. 87–94. [Google Scholar]

- Neupane, J.; Maja, J.M.; Miller, G.; Marshall, M.; Cutulle, M.; Greene, J.; Luo, J.; Barnes, E. The next Generation of Cotton Defoliation Sprayer. AgriEngineering 2023, 5, 441–459. [Google Scholar] [CrossRef]

- Chen, P.; Xu, W.; Zhan, Y.; Wang, G.; Yang, W.; Lan, Y. Determining Application Volume of Unmanned Aerial Spraying Systems for Cotton Defoliation Using Remote Sensing Images. Comput. Electron. Agric. 2022, 196, 106912. [Google Scholar] [CrossRef]

- Latif, G.; Alghazo, J.; Maheswar, R.; Vijayakumar, V.; Butt, M. Deep Learning Based Intelligence Cognitive Vision Drone for Automatic Plant Diseases Identification and Spraying. J. Intell. Fuzzy Syst. 2020, 39, 8103–8114. [Google Scholar] [CrossRef]

- Li, H.; Guo, C.; Yang, Z.; Chai, J.; Shi, Y.; Liu, J.; Zhang, K.; Liu, D.; Xu, Y. Design of Field Real-Time Target Spraying System Based on Improved YOLOv5. Front. Plant Sci. 2022, 13, 1072631. [Google Scholar] [CrossRef]

- Sabóia, H.d.S.; Mion, R.L.; Silveira, A.d.O.; Mamiya, A.A. Real-Time Selective Spraying for Viola Rope Control in Soybean and Cotton Crops Using Deep Learning. Eng. Agrícola 2022, 42, e20210163. [Google Scholar] [CrossRef]

- Gharakhani, H. Robotic Cotton Harvesting with a Multi-Finger End-Effector: Research, Design, Development, Testing, and Evaluation. Ph.D. Thesis, Mississippi State University, Oktibbeha, MS, USA, 2023. [Google Scholar]

- Thapa, S.; Rains, G.C.; Porter, W.M.; Lu, G.; Wang, X.; Mwitta, C.; Virk, S.S. Robotic Multi-Boll Cotton Harvester System Integration and Performance Evaluation. AgriEngineering 2024, 6, 803–822. [Google Scholar] [CrossRef]

- Rajendran, V.; Debnath, B.; Mghames, S.; Mandil, W.; Parsa, S.; Parsons, S.; Ghalamzan-E, A. Towards Autonomous Selective Harvesting: A Review of Robot Perception, Robot Design, Motion Planning and Control. J. Field Robot. 2024, 41, 2247–2279. [Google Scholar] [CrossRef]

- Oliveira, A.I.; Carvalho, T.M.; Martins, F.F.; Leite, A.C.; Figueiredo, K.T.; Vellasco, M.M.; Caarls, W. On the Intelligent Control Design of an Agricultural Mobile Robot for Cotton Crop Monitoring. In Proceedings of the 2019 12th International Conference on Developments in eSystems Engineering (DeSE), Kazan, Russia, 7–10 October 2019; pp. 563–568. [Google Scholar]

- Mwitta, C.; Rains, G.C. The Integration of GPS and Visual Navigation for Autonomous Navigation of an Ackerman Steering Mobile Robot in Cotton Fields. Front. Robot. AI 2024, 11, 1359887. [Google Scholar] [CrossRef]

- Wang, W.; Wu, Z.; Luo, H.; Zhang, B. Path Planning Method of Mobile Robot Using Improved Deep Reinforcement Learning. J. Electr. Comput. Eng. 2022, 2022, 5433988. [Google Scholar] [CrossRef]

- Yang, J.; Ni, J.; Li, Y.; Wen, J.; Chen, D. The Intelligent Path Planning System of Agricultural Robot via Reinforcement Learning. Sensors 2022, 22, 4316. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; He, Z.; Cao, D.; Ma, L.; Li, K.; Jia, L.; Cui, Y. Coverage Path Planning for Kiwifruit Picking Robots Based on Deep Reinforcement Learning. Comput. Electron. Agric. 2023, 205, 107593. [Google Scholar] [CrossRef]

- Wang, T.; Chen, B.; Zhang, Z.; Li, H.; Zhang, M. Applications of Machine Vision in Agricultural Robot Navigation: A Review. Comput. Electron. Agric. 2022, 198, 107085. [Google Scholar] [CrossRef]

- Li, Z.; Sun, C.; Wang, H.; Wang, R.-F. Hybrid Optimization of Phase Masks: Integrating Non-Iterative Methods with Simulated Annealing and Validation via Tomographic Measurements. Symmetry 2025, 17, 530. [Google Scholar] [CrossRef]

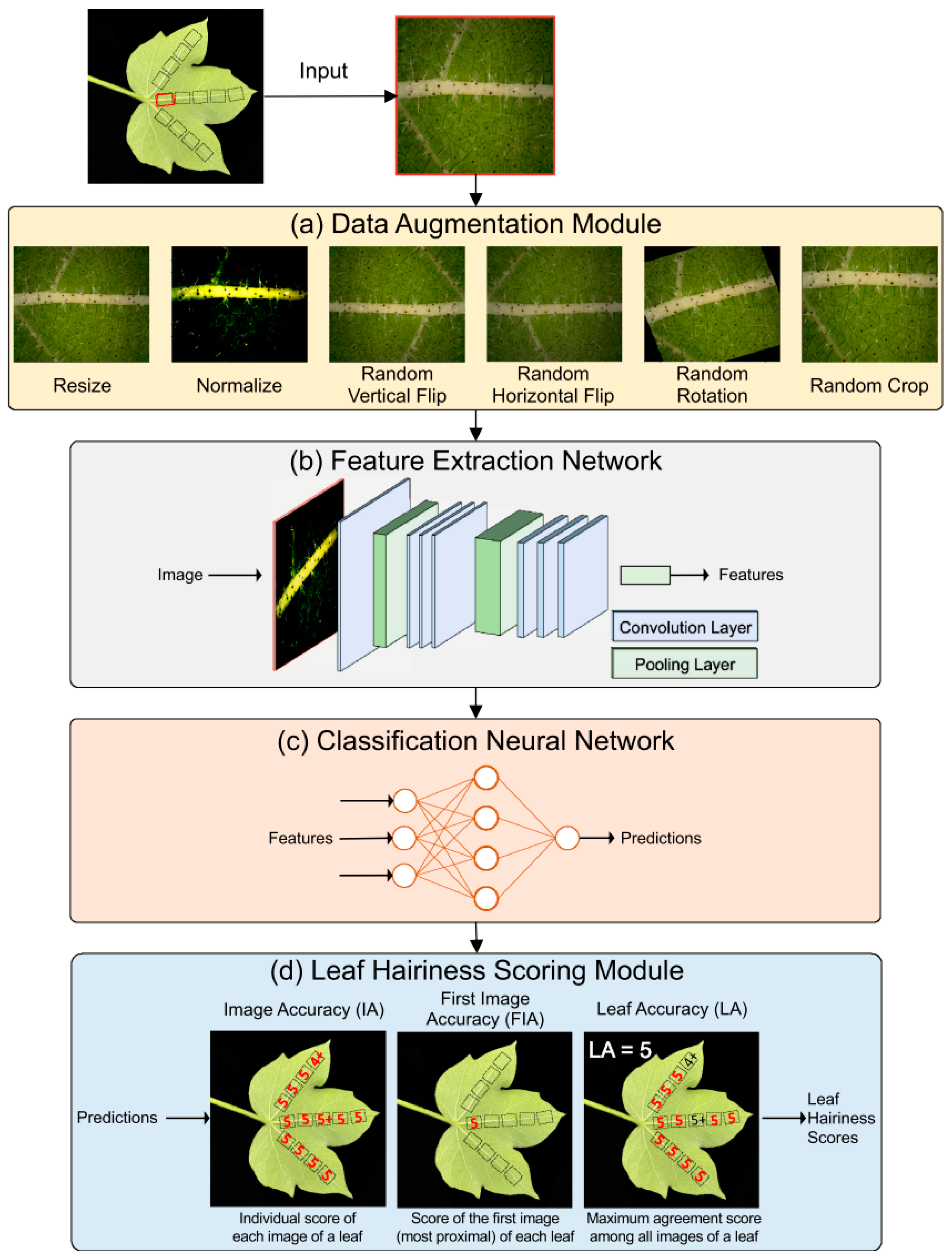

- Rolland, V.; Farazi, M.R.; Conaty, W.C.; Cameron, D.; Liu, S.; Petersson, L.; Stiller, W.N. HairNet: A Deep Learning Model to Score Leaf Hairiness, a Key Phenotype for Cotton Fibre Yield, Value and Insect Resistance. Plant Methods 2022, 18, 8. [Google Scholar] [CrossRef]

- Dai, N.; Jin, H.; Xu, K.; Hu, X.; Yuan, Y.; Shi, W. Prediction of Cotton Yarn Quality Based on Attention-GRU. Appl. Sci. 2023, 13, 10003. [Google Scholar] [CrossRef]

- Geng, L.; Yan, P.; Ji, Z.; Song, C.; Song, S.; Zhang, R.; Zhang, Z.; Zhai, Y.; Jiang, L.; Yang, K. A Novel Nondestructive Detection Approach for Seed Cotton Lint Percentage Using Deep Learning. J. Cotton Res. 2024, 7, 16. [Google Scholar] [CrossRef]

- Wang, R.; Zhang, Z.-F.; Yang, B.; Xi, H.-Q.; Zhai, Y.-S.; Zhang, R.-L.; Geng, L.-J.; Chen, Z.-Y.; Yang, K. Detection and Classification of Cotton Foreign Fibers Based on Polarization Imaging and Improved YOLOv5. Sensors 2023, 23, 4415. [Google Scholar] [CrossRef] [PubMed]

- Tantaswadi, P. Machine Vision for Quality Inspection of Cotton in the Textile Industry. Sci. Technol. Asia 2001, 6, 60–63. [Google Scholar]

- Zhou, W.; Xv, S.; Liu, C.; Zhang, J. Applications of near Infrared Spectroscopy in Cotton Impurity and Fiber Quality Detection: A Review. Appl. Spectrosc. Rev. 2016, 51, 318–332. [Google Scholar] [CrossRef]

- Fisher, O.J.; Rady, A.; El-Banna, A.A.; Emaish, H.H.; Watson, N.J. AI-Assisted Cotton Grading: Active and Semi-Supervised Learning to Reduce the Image-Labelling Burden. Sensors 2023, 23, 8671. [Google Scholar] [CrossRef]

- Zhang, C.; Li, T.; Zhang, W. The Detection of Impurity Content in Machine-Picked Seed Cotton Based on Image Processing and Improved YOLO V4. Agronomy 2021, 12, 66. [Google Scholar] [CrossRef]

- Li, Q.; Zhou, W.; Zhang, X.; Li, H.; Li, M.; Liang, H. Cotton-Net: Efficient and Accurate Rapid Detection of Impurity Content in Machine-Picked Seed Cotton Using near-Infrared Spectroscopy. Front. Plant Sci. 2024, 15, 1334961. [Google Scholar] [CrossRef]

- Xu, T.; Ma, A.; Lv, H.; Dai, Y.; Lin, S.; Tan, H. A Lightweight Network of near Cotton-Coloured Impurity Detection Method in Raw Cotton Based on Weighted Feature Fusion. IET Image Process. 2023, 17, 2585–2595. [Google Scholar] [CrossRef]

- Jiang, L.; Chen, W.; Shi, H.; Zhang, H.; Wang, L. Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton. Agriculture 2024, 14, 1499. [Google Scholar] [CrossRef]

- Wang, M.; Ren, Y.; Lin, Y.; Wang, S. The Tightly Super 3-Extra Connectivity and Diagnosability of Locally Twisted Cubes. Am. J. Comput. Math. 2017, 7, 127–144. [Google Scholar] [CrossRef]

- Wang, S.; Wang, M. The Strong Connectivity of Bubble-Sort Star Graphs. Comput. J. 2019, 62, 715–729. [Google Scholar] [CrossRef]

- Bishshash, P.; Nirob, A.S.; Shikder, H.; Sarower, A.H.; Bhuiyan, T.; Noori, S.R.H. A Comprehensive Cotton Leaf Disease Dataset for Enhanced Detection and Classification. Data Brief 2024, 57, 110913. [Google Scholar] [CrossRef]

- Muzaddid, M.A.A.; Beksi, W.J. NTrack: A Multiple-Object Tracker and Dataset for Infield Cotton Boll Counting. IEEE Trans. Autom. Sci. Eng. 2024, 21, 7452–7464. [Google Scholar] [CrossRef]

- Amani, M.A.; Marinello, F. A Deep Learning-Based Model to Reduce Costs and Increase Productivity in the Case of Small Datasets: A Case Study in Cotton Cultivation. Agriculture 2022, 12, 267. [Google Scholar] [CrossRef]

- Zhang, Y.; Tiňo, P.; Leonardis, A.; Tang, K. A Survey on Neural Network Interpretability. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 726–742. [Google Scholar] [CrossRef]

- Wu, C.; Gales, M.J.F.; Ragni, A.; Karanasou, P.; Sim, K.C. Improving Interpretability and Regularization in Deep Learning. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 256–265. [Google Scholar] [CrossRef]

- Dong, Y.; Su, H.; Zhu, J.; Zhang, B. Improving Interpretability of Deep Neural Networks with Semantic Information. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 975–983. [Google Scholar]

- Monga, V.; Li, Y.; Eldar, Y.C. Algorithm Unrolling: Interpretable, Efficient Deep Learning for Signal and Image Processing. IEEE Signal Process. Mag. 2021, 38, 18–44. [Google Scholar] [CrossRef]

- Askr, H.; El-dosuky, M.; Darwish, A.; Hassanien, A.E. Explainable ResNet50 Learning Model Based on Copula Entropy for Cotton Plant Disease Prediction. Appl. Soft Comput. 2024, 164, 112009. [Google Scholar] [CrossRef]

- Chen, Y.; Zheng, B.; Zhang, Z.; Wang, Q.; Shen, C.; Zhang, Q. Deep Learning on Mobile and Embedded Devices: State-of-the-Art, Challenges, and Future Directions. ACM Comput. Surv. 2020, 53, 84:1–84:37. [Google Scholar] [CrossRef]

- Wang, M.-J.-S.; Xiang, D.; Hsieh, S.-Y. G-Good-Neighbor Diagnosability under the Modified Comparison Model for Multiprocessor Systems. Theor. Comput. Sci. 2025, 1028, 115027. [Google Scholar] [CrossRef]

- Wang, M.; Xiang, D.; Qu, Y.; Li, G. The Diagnosability of Interconnection Networks. Discret. Appl. Math. 2024, 357, 413–428. [Google Scholar] [CrossRef]

- Pan, C.-H.; Qu, Y.; Yao, Y.; Wang, M.-J.-S. HybridGNN: A Self-Supervised Graph Neural Network for Efficient Maximum Matching in Bipartite Graphs. Symmetry 2024, 16, 1631. [Google Scholar] [CrossRef]

- Zhou, G.; Wang, R.-F.; Cui, K. A Local Perspective-Based Model for Overlapping Community Detection. arXiv 2025, arXiv:2503.21558. [Google Scholar]

- Wang, M.; Wang, S. Connectivity and Diagnosability of Center K-Ary n-Cubes. Discret. Appl. Math. 2021, 294, 98–107. [Google Scholar] [CrossRef]

- Wang, M.; Lin, Y.; Wang, S.; Wang, M. Sufficient Conditions for Graphs to Be Maximally 4-Restricted Edge Connected. Australas. J. Comb. 2018, 70, 123–136. [Google Scholar]

- Thompson, N.; Greenewald, K.; Lee, K.; Manso, G.F. The Computational Limits of Deep Learning. In Proceedings of the Computing within Limits, LIMITS, Online, 14–15 June 2023. [Google Scholar]

- Shuvo, M.M.H.; Islam, S.K.; Cheng, J.; Morshed, B.I. Efficient Acceleration of Deep Learning Inference on Resource-Constrained Edge Devices: A Review. Proc. IEEE 2023, 111, 42–91. [Google Scholar] [CrossRef]

- Wang, H.; Liu, J.; Liu, L.; Zhao, M.; Mei, S. Coupling Technology of OpenSURF and Shannon-Cosine Wavelet Interpolation for Locust Slice Images Inpainting. Comput. Electron. Agric. 2022, 198, 107110. [Google Scholar] [CrossRef]

- Wang, H.; Mei, S.-L. Shannon Wavelet Precision Integration Method for Pathologic Onion Image Segmentation Based on Homotopy Perturbation Technology. Math. Probl. Eng. 2014, 2014, 601841. [Google Scholar] [CrossRef]

- Ye, Z.; Gao, W.; Hu, Q.; Sun, P.; Wang, X.; Luo, Y.; Zhang, T.; Wen, Y. Deep Learning Workload Scheduling in GPU Datacenters: A Survey. ACM Comput. Surv. 2024, 56, 146:1–146:38. [Google Scholar] [CrossRef]

- Chan, K.Y.; Abu-Salih, B.; Qaddoura, R.; Al-Zoubi, A.M.; Palade, V.; Pham, D.-S.; Ser, J.D.; Muhammad, K. Deep Neural Networks in the Cloud: Review, Applications, Challenges and Research Directions. Neurocomputing 2023, 545, 126327. [Google Scholar] [CrossRef]

- Liu, H.-I.; Galindo, M.; Xie, H.; Wong, L.-K.; Shuai, H.-H.; Li, Y.-H.; Cheng, W.-H. Lightweight Deep Learning for Resource-Constrained Environments: A Survey. ACM Comput. Surv. 2024, 56, 1–42. [Google Scholar] [CrossRef]

- Li, H.; Wang, G.; Dong, Z.; Wei, X.; Wu, M.; Song, H.; Amankwah, S.O.Y. Identifying Cotton Fields from Remote Sensing Images Using Multiple Deep Learning Networks. Agronomy 2021, 11, 174. [Google Scholar] [CrossRef]

- Wang, M.; Lin, Y.; Wang, S. The Connectivity and Nature Diagnosability of Expanded k -Ary n -Cubes. RAIRO-Theor. Inform. Appl.-Inform. Théorique Appl. 2017, 51, 71–89. [Google Scholar] [CrossRef]

- Wang, M.; Lin, Y.; Wang, S. The Nature Diagnosability of Bubble-Sort Star Graphs under the PMC Model and MM∗ Model. Int. J. Eng. Appl. Sci. 2017, 4, 2394–3661. [Google Scholar]

- Wang, S.; Wang, Y.; Wang, M. Connectivity and Matching Preclusion for Leaf-Sort Graphs. J. Interconnect. Netw. 2019, 19, 1940007. [Google Scholar] [CrossRef]

| Criteria Type | Description |
|---|---|
| Inclusion | 1. The research focuses on specific stages within the cotton value chain, such as planting, pest and disease identification, harvesting, grading, and processing. |
| | 2. At least one mainstream deep learning model (e.g., CNN, RNN, Transformer, YOLO) is employed in the study. |
| | 3. The selected papers are published in either Chinese or English to ensure readability and accurate comprehension. |
| | 4. The studies are based on, or validated with, real-world field or factory data rather than purely theoretical models or simulation tests. |
| | 5. All selected papers are peer-reviewed journal or conference papers, ensuring academic rigor and verifiability. |
| Exclusion | 1. The study exclusively uses traditional machine learning methods (e.g., SVM, RF, and KNN) without employing deep learning techniques. |
| | 2. The paper is limited to a technical review or patent literature, without original experimental data or performance results. |
| | 3. The paper lacks a complete model architecture description or experimental validation, such as studies that describe methods without performance evaluation. |
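Taken together, the screening criteria act as a single predicate applied to each candidate paper: every inclusion criterion must hold and no exclusion criterion may apply. The sketch below is illustrative only and assumes a hypothetical `Paper` record whose fields (`cotton_stage`, `real_data`, `original_results`, and so on) are invented names, not part of the authors' actual workflow; the model set contains only the examples named in the table.

```python
from dataclasses import dataclass, field

# Example model families named in inclusion criterion 2 (illustrative, not exhaustive).
DEEP_MODELS = {"CNN", "RNN", "Transformer", "YOLO"}
ACCEPTED_LANGUAGES = {"English", "Chinese"}

@dataclass
class Paper:
    # Hypothetical screening record; all field names are assumptions for illustration.
    title: str
    language: str
    peer_reviewed: bool             # journal or conference paper
    cotton_stage: bool              # addresses a stage of the cotton value chain
    models: set = field(default_factory=set)
    real_data: bool = False         # based on or validated with field/factory data
    original_results: bool = False  # reports its own experimental results
    evaluated_model: bool = False   # describes the architecture and evaluates it

def passes_screening(p: Paper) -> bool:
    """True if every inclusion criterion holds and no exclusion criterion applies."""
    uses_deep_learning = bool(p.models & DEEP_MODELS)
    inclusion = (
        p.cotton_stage                         # inclusion 1
        and uses_deep_learning                 # inclusion 2 (also rules out exclusion 1)
        and p.language in ACCEPTED_LANGUAGES   # inclusion 3
        and p.real_data                        # inclusion 4
        and p.peer_reviewed                    # inclusion 5
    )
    exclusion = (
        not p.original_results                 # exclusion 2: review or patent only
        or not p.evaluated_model               # exclusion 3: no architecture or validation
    )
    return inclusion and not exclusion
```

Note that exclusion criterion 1 (traditional machine learning only) needs no separate check here, because inclusion criterion 2 already rejects any study without a deep learning model.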

| Application Type | Common Models | Common Evaluation Metrics | Typical Tasks/Examples |
|---|---|---|---|
| Image classification | CNN, ResNet, VGG, DenseNet | Accuracy, precision, recall, F1-score, AUC | Cotton species classification, disease type identification, and damage detection |
| Object detection | YOLO (v5/v8), Faster R-CNN, YOLOX, CenterNet | mAP (@0.5 or @0.5:0.95), precision, recall, FPS | Cotton damage detection, cotton bollworm identification, and disease localization |
| Image segmentation | U-Net, SegNet, DeepLabV3+ | IoU, Dice coefficient | Cotton field area segmentation, cotton plant recognition, and cotton leaf lesion area extraction |
| Regression prediction | LSTM, GRU, MLP, 1D-CNN, Transformer | RMSE, MAE, R², MSE | Cotton yield prediction, vitality forecasting, and growth stage estimation |
| Multimodal/fusion recognition | CNN + GLCM, Transformer + KGE, YOLO + SRNet | Accuracy, mAP, FPS, recall | Hyperspectral + texture feature fusion, knowledge graph-assisted recognition, and enhanced small-object detection |
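The evaluation metrics listed in the table follow standard definitions. As a minimal pure-Python sketch (the function names are hypothetical, and full mAP, which additionally ranks detections by confidence and averages precision over recall levels and classes, is omitted), the core quantities can be computed as follows:

```python
import math

def classification_metrics(y_true, y_pred, positive=1):
    """Precision, recall and F1-score for one class of a classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0

def mask_iou_dice(mask_a, mask_b):
    """IoU and Dice coefficient of two binary masks given as flat 0/1 sequences."""
    inter = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    size_a, size_b = sum(mask_a), sum(mask_b)
    union = size_a + size_b - inter
    # Convention assumed here: two empty masks count as perfect agreement.
    iou = inter / union if union else 1.0
    dice = 2 * inter / (size_a + size_b) if size_a + size_b else 1.0
    return iou, dice

def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE and R^2 (coefficient of determination)."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n
    mean = sum(y_true) / n
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    r2 = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return mse, math.sqrt(mse), mae, r2
```

In object detection, `box_iou` decides whether a predicted box matches a ground-truth box: mAP@0.5 uses a single IoU threshold of 0.5, while mAP@0.5:0.95 averages AP over thresholds from 0.5 to 0.95 in steps of 0.05 (the COCO convention). For real experiments, tested library equivalents such as scikit-learn's `precision_recall_fscore_support` and `r2_score` are preferable to hand-rolled code.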

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Z.-Y.; Xia, W.-K.; Chu, H.-Q.; Su, W.-H.; Wang, R.-F.; Wang, H. A Comprehensive Review of Deep Learning Applications in Cotton Industry: From Field Monitoring to Smart Processing. Plants 2025, 14, 1481. https://doi.org/10.3390/plants14101481
