Improvement of the YOLOv8 Model in the Optimization of the Weed Recognition Algorithm in Cotton Field

Abstract

1. Introduction

2. Results and Discussion

2.1. Experimental Platform

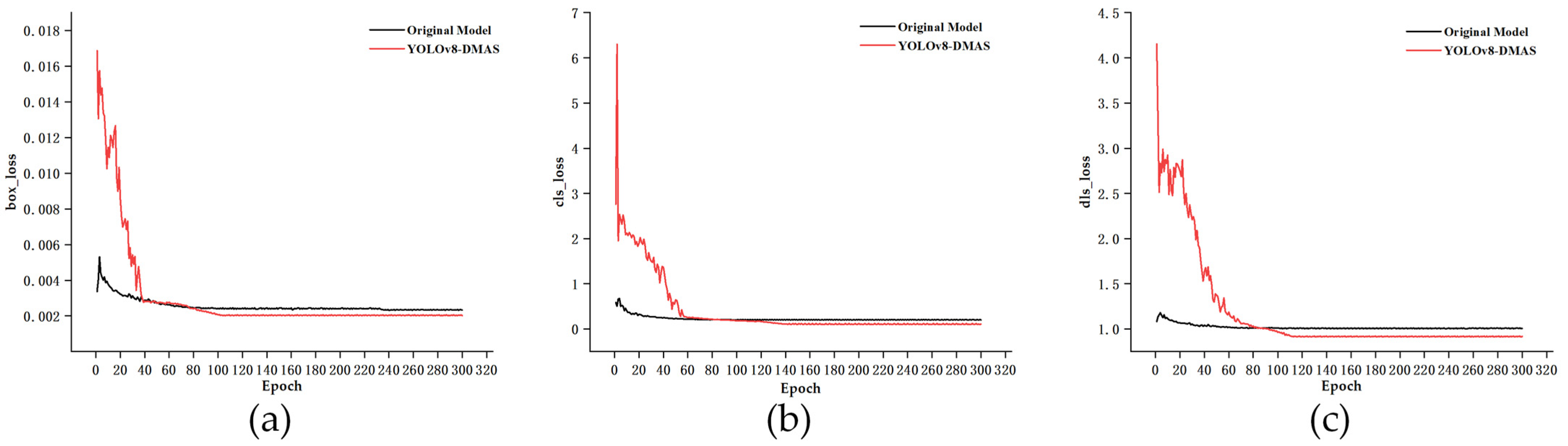

2.2. Performance Evaluation of Models
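The tables report precision (P), recall (R), mAP@0.5 and mAP@0.5:0.95. The sketch below shows the standard definitions behind those columns. It assumes VOC/COCO-style all-point interpolated AP and assumes each detection has already been matched to ground truth at a fixed IoU threshold. The paper's own evaluation code is not shown in this section, and the function names are hypothetical.

```python
from __future__ import annotations

# Hypothetical helper names; a sketch of standard detection metrics, not the paper's code.


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision P = TP / (TP + FP) and recall R = TP / (TP + FN)."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores: list[float], is_tp: list[bool], n_gt: int) -> float:
    """AP for one class: area under the precision-recall curve (all-point interpolation).

    is_tp[i] says whether detection i matched a ground-truth box at the chosen
    IoU threshold (0.5 for mAP@0.5); n_gt is the number of ground-truth boxes.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp_cum = fp_cum = 0
    recalls, precisions = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / n_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Pad so the curve spans recall 0..1.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas; steps where recall does not change contribute zero.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))


def mean_ap(ap_per_class: list[float]) -> float:
    """mAP: the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# mAP@0.5:0.95 averages mAP over these ten IoU thresholds (0.50, 0.55, ..., 0.95).
IOU_THRESHOLDS = [round(0.50 + 0.05 * t, 2) for t in range(10)]

p, r = precision_recall(tp=8, fp=2, fn=2)  # p = r = 0.8
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], n_gt=2)  # 5/6, about 0.833
```

mAP@0.5 uses only the first threshold, while mAP@0.5:0.95 rewards tighter boxes, which is why it is the lower of the two columns for every model in the tables.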

2.3. Ablation Experiments

2.4. Comparison Experiments

2.5. Visual Analysis of Test Results

3. Materials and Methods

3.1. Cotton Weed Dataset

3.1.1. Data Selection

3.1.2. Image Augmentation

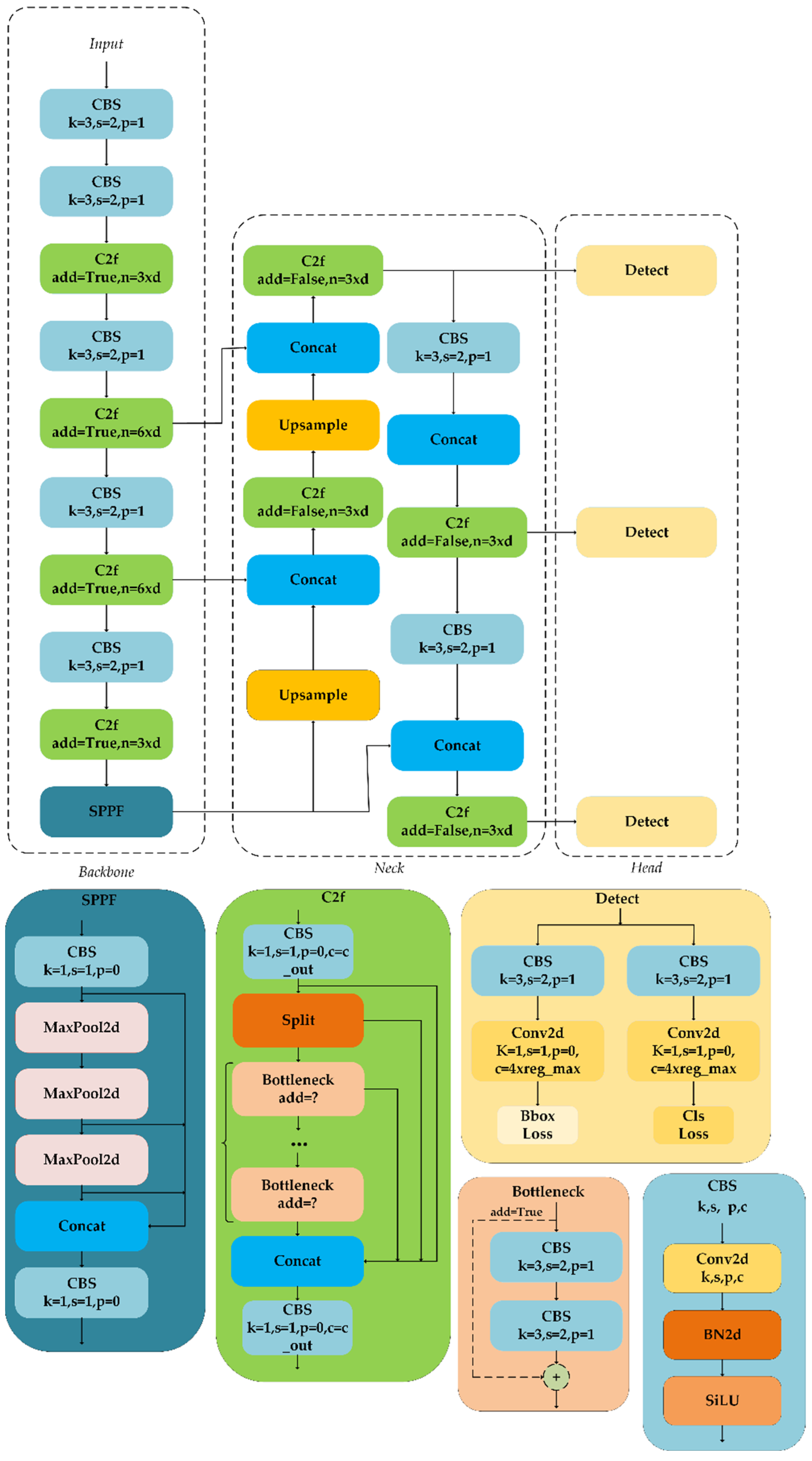
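The specific augmentation operations applied to the cotton weed images are not recoverable from this section. As an illustration only, the sketch below assumes two common ones: a horizontal flip, which is geometric and therefore requires the box labels to be transformed too, and a brightness change, which is photometric and leaves the labels unchanged. It uses YOLO's normalized label format and plain nested lists in place of an image library; all function names are hypothetical.

```python
# Hypothetical helper names; illustrative augmentations, not necessarily those used in the paper.


def hflip_image(img):
    """Mirror an image stored as rows of pixel values (H x W nested lists)."""
    return [list(reversed(row)) for row in img]


def hflip_yolo_labels(labels):
    """Mirror YOLO labels (class_id, x_center, y_center, width, height) normalized to [0, 1].

    Only the x centre changes; box size and y centre are unaffected.
    """
    return [(c, 1.0 - x, y, w, h) for c, x, y, w, h in labels]


def scale_brightness(img, factor):
    """Multiply pixel intensities by `factor`, clipping to the 8-bit range [0, 255].

    Photometric changes like this leave the bounding boxes untouched.
    """
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


img = [[10, 20, 30], [40, 50, 60]]
labels = [(0, 0.25, 0.5, 0.2, 0.4)]
print(hflip_image(img))          # [[30, 20, 10], [60, 50, 40]]
print(hflip_yolo_labels(labels)) # [(0, 0.75, 0.5, 0.2, 0.4)]
print(scale_brightness(img, 5))  # [[50, 100, 150], [200, 250, 255]]
```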

3.2. YOLOv8 Model

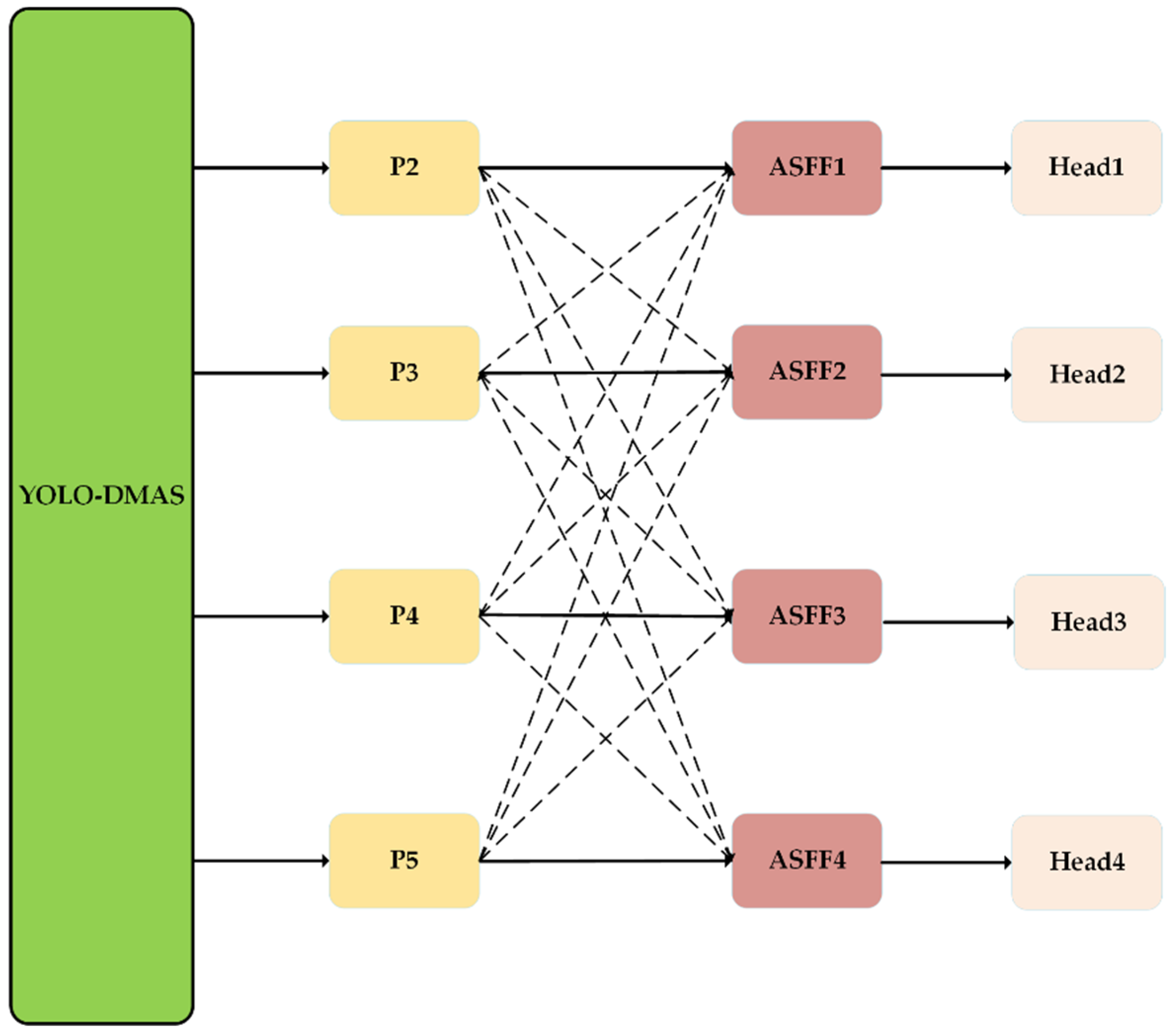

3.3. Improved YOLOv8-DMAS

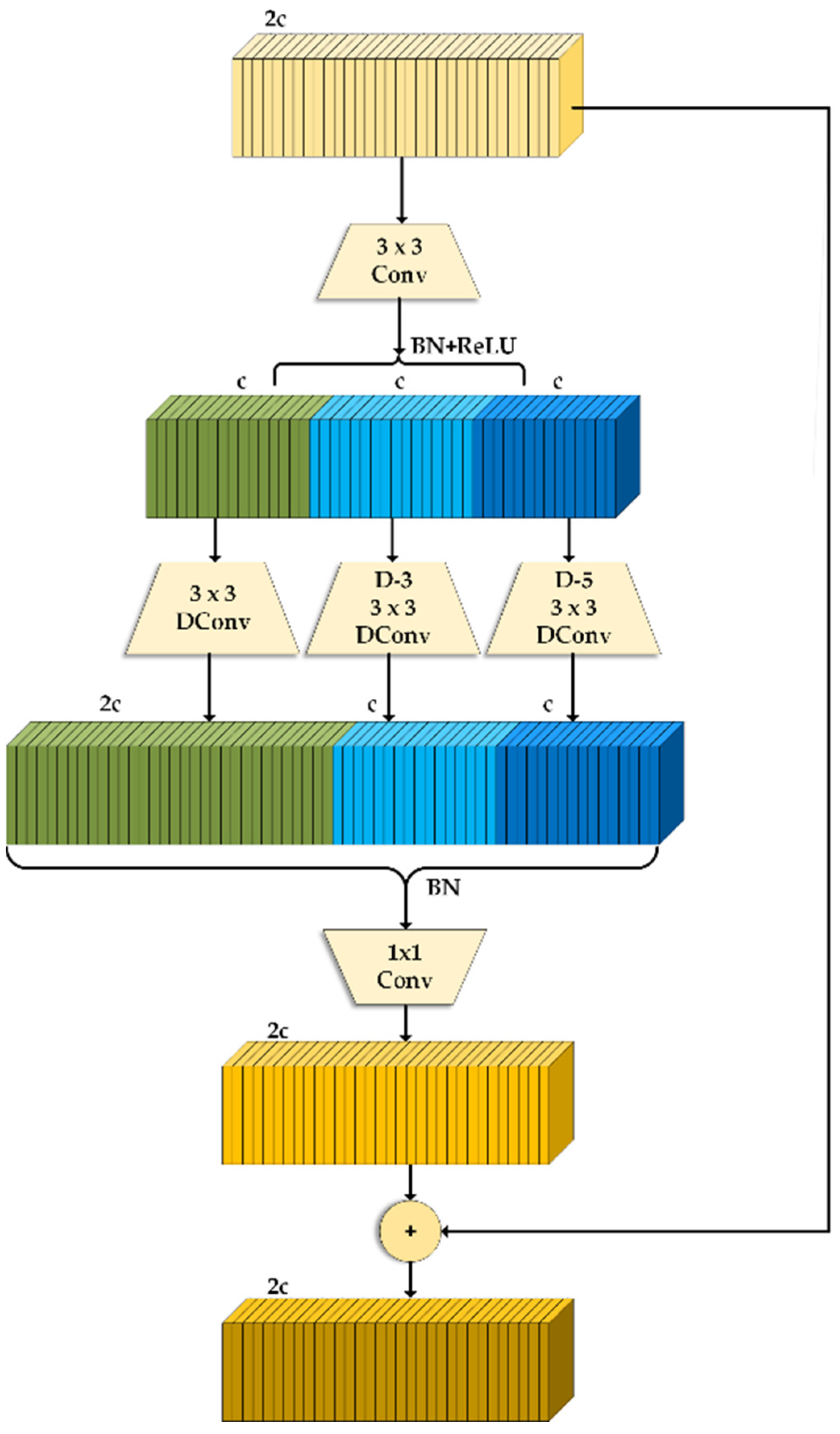

3.3.1. DWR Module

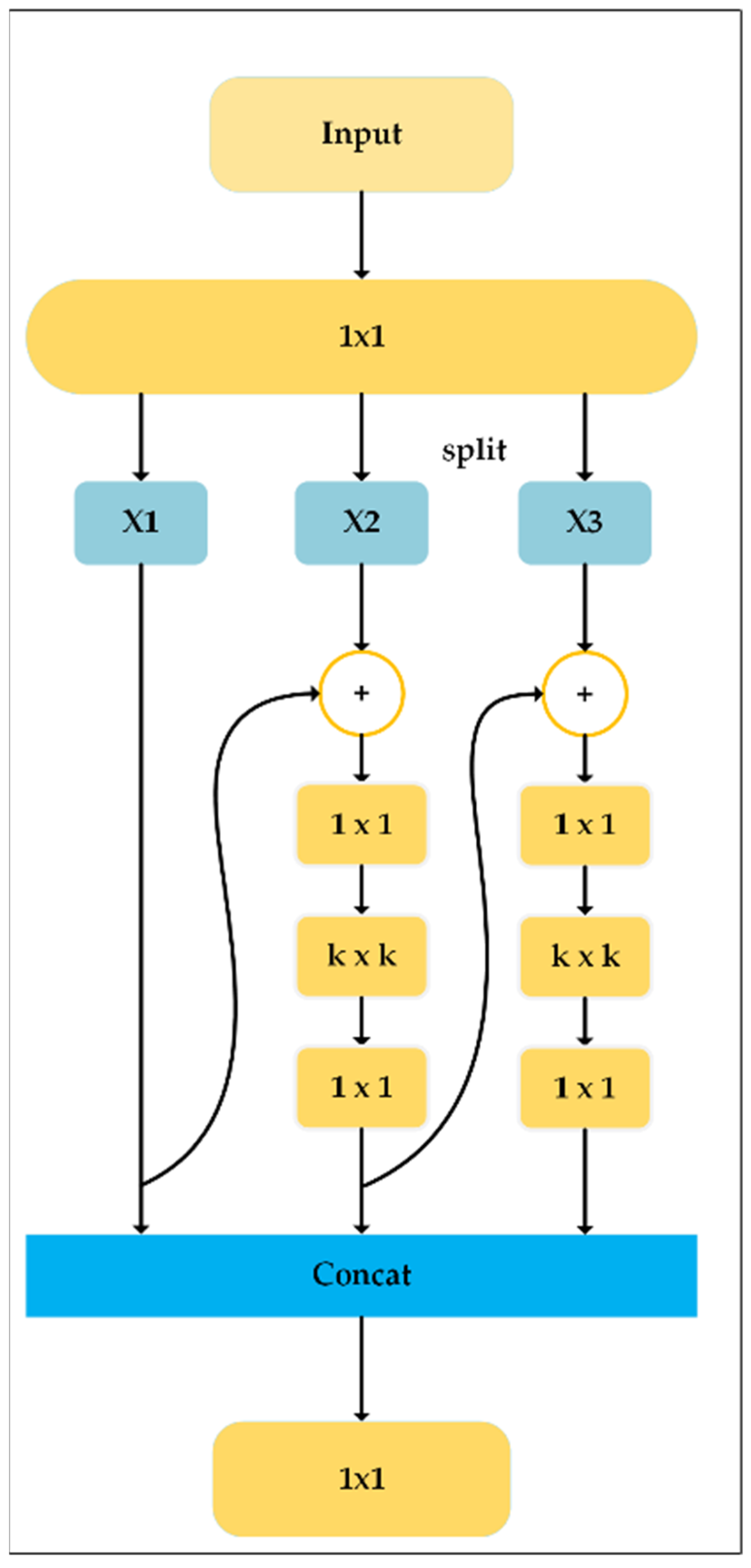
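As we understand DWRSeg (Wei et al., 2022), a dilation-wise residual (DWR) module first applies a 3×3 convolution ("region residualization") and then parallel 3×3 depthwise convolutions at several dilation rates ("semantic residualization"), whose outputs are concatenated, fused and added back to the input. The arithmetic below shows why dilation matters: each branch keeps 9 weights per channel but covers a wider area. The dilation rates 1, 3 and 5 are an assumption for illustration, and the helper names are hypothetical.

```python
from __future__ import annotations

# Hypothetical helper names; receptive-field arithmetic for stride-1 (dilated) convolutions.


def effective_kernel(k: int, d: int) -> int:
    """Spatial extent covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def receptive_field(kernel_extents: list[int]) -> int:
    """Receptive field of a chain of stride-1 convolutions with the given extents."""
    return 1 + sum(e - 1 for e in kernel_extents)


# Assumed DWR-style layout: a 3x3 conv, then parallel 3x3 depthwise branches
# with dilation rates 1, 3 and 5 (rates chosen for illustration).
for d in (1, 3, 5):
    ext = effective_kernel(3, d)
    rf = receptive_field([3, ext])
    print(f"dilation {d}: kernel extent {ext}, receptive field after the 3x3 conv {rf}")
```

The three branches end up with receptive fields of 5, 9 and 13 pixels at the same parameter cost, which is the kind of multi-scale context that helps when weeds of very different sizes appear in one image.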

3.3.2. Multi-Scale Block
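As we understand YOLO-MS (Chen et al., 2023), from which a multi-scale block of this kind is typically adapted, the block splits its input channels into $n$ groups and processes them hierarchically, so later branches see features already transformed by earlier ones. A sketch of that formulation follows. Here $\mathrm{IB}_{k\times k}$ denotes an inverted bottleneck containing a $k\times k$ depthwise convolution; the values of $n$ and $k$ used in YOLOv8-DMAS are not given in this section.

$$
\begin{aligned}
\{X_1, \dots, X_n\} &= \mathrm{Split}\big(\mathrm{Conv}_{1\times 1}(X)\big),\\
Y_1 &= X_1,\\
Y_i &= \mathrm{IB}_{k\times k}\big(Y_{i-1} + X_i\big), \qquad i = 2, \dots, n,\\
Y &= \mathrm{Conv}_{1\times 1}\big(\mathrm{Concat}(Y_1, \dots, Y_n)\big).
\end{aligned}
$$

Because each $Y_i$ depends on $Y_{i-1}$, the last branch aggregates information from all preceding groups, so a single block produces features with a range of effective receptive fields.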

3.3.3. Improved Detection Head
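If the improved detection head follows adaptive spatial feature fusion (ASFF, Liu et al., 2019), which the "A" in YOLOv8-DMAS may stand for, each output level fuses the three pyramid levels with per-pixel weights. These weights pass through a softmax and therefore sum to one: $y_{ij} = \alpha_{ij} x^{1}_{ij} + \beta_{ij} x^{2}_{ij} + \gamma_{ij} x^{3}_{ij}$. The sketch below shows only that weighting step on single-channel maps, which are assumed to be already resized to one resolution. It omits the 1×1 convolutions that produce the weight logits, and `asff_fuse` is a hypothetical name.

```python
import math

# Hypothetical helper name; sketch of the ASFF weighting step only (resizing and
# the convolutions that predict the logits are omitted).


def asff_fuse(features, logits):
    """Fuse three same-sized H x W maps with per-pixel softmax weights.

    features[l][i][j] is level l's (already resized) value at pixel (i, j);
    logits[l][i][j] is the unnormalized weight for that level at that pixel.
    """
    h, w = len(features[0]), len(features[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            z = [lg[i][j] for lg in logits]
            m = max(z)  # subtract the max before exp() for numerical stability
            e = [math.exp(v - m) for v in z]
            total = sum(e)
            # alpha + beta + gamma = 1 at every pixel
            fused[i][j] = sum(ek / total * f[i][j] for ek, f in zip(e, features))
    return fused


x1, x2, x3 = [[1.0, 2.0]], [[3.0, 4.0]], [[5.0, 6.0]]
equal = [[[0.0, 0.0]]] * 3  # equal logits give the plain per-pixel average
print(asff_fuse([x1, x2, x3], equal))
```

With learned logits, the head can favour, pixel by pixel, whichever pyramid level best matches the size of the weed at that location, instead of adding the levels with fixed weights.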

3.3.4. Soft-NMS Method
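Soft-NMS (Bodla et al., 2017) changes the suppression step of standard NMS. Standard NMS deletes every box whose IoU with the current top-scoring box $M$ exceeds a threshold; Soft-NMS instead decays that box's score, for example with the Gaussian penalty $s_i \leftarrow s_i\, e^{-\mathrm{IoU}(M, b_i)^2/\sigma}$. The sketch below implements the Gaussian variant. The values $\sigma = 0.5$ and the 0.001 score cut-off are illustrative defaults, not values taken from this paper, and the function names are hypothetical.

```python
import math

# Hypothetical helper names; a sketch of Gaussian Soft-NMS, not the paper's code.


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Gaussian Soft-NMS.

    Returns (index, rescored score) pairs in the order boxes were selected.
    Overlapping boxes are down-weighted by exp(-IoU^2 / sigma) instead of removed.
    """
    current = list(scores)
    remaining = list(range(len(boxes)))
    kept = []
    while remaining:
        m = max(remaining, key=lambda i: current[i])  # current top-scoring box
        remaining.remove(m)
        kept.append((m, current[m]))
        for i in remaining:
            overlap = iou(boxes[m], boxes[i])
            current[i] *= math.exp(-(overlap ** 2) / sigma)
        # Discard only boxes whose decayed score is now negligible.
        remaining = [i for i in remaining if current[i] >= score_thresh]
    return kept


boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
for idx, s in soft_nms(boxes, scores):
    print(idx, round(s, 3))
```

Here the first two boxes overlap heavily (IoU ≈ 0.82). Hard NMS at an IoU threshold of 0.5 would delete the second box; the sketch keeps it with its score lowered from 0.8 to about 0.21. This behaviour can help preserve detections of weeds growing close together.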

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| D (DWR) | M (MS-Block) | A (improved head) | S (Soft-NMS) | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|
| × | × | × | × | 94.1 | 88.4 | 93.4 | 88.4 | 11.2 | 28.6 |
| √ | × | × | × | 94.9 | 87.5 | 93.9 | 88.9 | 10.6 | 27.4 |
| √ | √ | × | × | 94.3 | 89.6 | 94.7 | 89.7 | 13.2 | 29.5 |
| √ | √ | √ | × | 95.3 | 91.1 | 94.5 | 91.4 | 19.03 | 51.2 |
| √ | √ | √ | √ | 95.8 | 92.2 | 95.5 | 92.1 | 19.03 | 51.2 |
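The ablation table can be read as cumulative changes against the unmodified YOLOv8s baseline in the first row. The short script below recomputes those deltas from the table values; the row labels are our own shorthand for the enabled modules.

```python
# Values copied from the ablation table:
# (enabled modules, mAP@0.5, mAP@0.5:0.95, params in M, GFLOPs). Row labels are shorthand.
ABLATION = [
    ("baseline", 93.4, 88.4, 11.2, 28.6),
    ("D", 93.9, 88.9, 10.6, 27.4),
    ("D+M", 94.7, 89.7, 13.2, 29.5),
    ("D+M+A", 94.5, 91.4, 19.03, 51.2),
    ("D+M+A+S", 95.5, 92.1, 19.03, 51.2),
]


def deltas_vs_baseline(rows):
    """Difference of each row from the first (baseline) row, rounded to 2 decimals."""
    _, b50, b5095, bparams, bflops = rows[0]
    return {
        name: (
            round(m50 - b50, 2),
            round(m5095 - b5095, 2),
            round(params - bparams, 2),
            round(flops - bflops, 2),
        )
        for name, m50, m5095, params, flops in rows
    }


for name, d in deltas_vs_baseline(ABLATION).items():
    print(f"{name:>8}: mAP@0.5 {d[0]:+.1f}, mAP@0.5:0.95 {d[1]:+.1f}, "
          f"params {d[2]:+.2f} M, GFLOPs {d[3]:+.1f}")
```

The full model gains 2.1 points of mAP@0.5 and 3.7 points of mAP@0.5:0.95, at about 1.7 times the parameters and 1.8 times the GFLOPs of the baseline. Most of that cost comes from the A step (+5.83 M parameters, +21.7 GFLOPs). The last two rows have identical parameter and GFLOP counts, consistent with Soft-NMS being a post-processing step with no learnable weights.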

| Algorithm | Parameters (M) | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | GFLOPs | FPS (frames/s) |
|---|---|---|---|---|---|---|---|
| YOLOv8s | 11.13 | 94.1 | 88.4 | 93.4 | 88.4 | 28.4 | 85.67 |
| YOLOv3 | 61.583 | 88.4 | 88.2 | 91.5 | 83.6 | 154.7 | 80.65 |
| YOLOv4-tiny | 6.057 | 94.1 | 79.00 | 89.6 | 61.7 | 6.9 | 92.18 |
| YOLOv5s | 7.023 | 93.9 | 88.2 | 93.7 | 87.3 | 15.8 | 85.19 |
| YOLOv7 | 37.256 | 94.2 | 88.0 | 93.4 | 87.6 | 105.3 | 74.07 |
| YOLOv8l | 43.64 | 94.5 | 88.8 | 94.0 | 89.7 | 165.5 | 73.69 |
| SSD | 50.21 | 83.4 | 86.6 | 89.6 | 49.2 | 360.7 | 47.938 |
| Faster R-CNN | 137.09 | 77.6 | 95.1 | 94.74 | 74.3 | 370.2 | 10.262 |
| Ours | 19.03 | 95.8 | 92.2 | 95.5 | 92.1 | 51.2 | 82.47 |
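The values in the speed column are most plausibly frames per second. Read as milliseconds per frame, they would make Faster R-CNN (10.262) roughly eight times faster than YOLOv8s (85.67), which is implausible for a two-stage detector. Under the frames-per-second assumption, per-frame latency is simply 1000/FPS, as the sketch below computes; `latency_ms` is a hypothetical helper.

```python
# Speed column of the comparison table, interpreted as frames per second (assumption).
FPS = {
    "YOLOv8s": 85.67, "YOLOv3": 80.65, "YOLOv4-tiny": 92.18, "YOLOv5s": 85.19,
    "YOLOv7": 74.07, "YOLOv8l": 73.69, "SSD": 47.938, "Faster R-CNN": 10.262,
    "Ours": 82.47,
}


def latency_ms(fps: float) -> float:
    """Hypothetical helper: average time per frame in milliseconds, 1000 / FPS."""
    return 1000.0 / fps


# Print models from fastest to slowest with their per-frame latency.
for model, fps in sorted(FPS.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:>12}: {fps:7.2f} FPS  ->  {latency_ms(fps):6.2f} ms/frame")
```

Under this reading, the improved model takes about 12.1 ms per frame versus about 11.7 ms for YOLOv8s, roughly 0.45 ms of extra latency. Faster R-CNN needs about 97 ms per frame.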

| Dataset Category | Division Ratio | Sample Size | Augmented |
|---|---|---|---|
| Training set | 70% | 7906 | √ |
| Validation set | 15% | 847 | × |
| Test set | 15% | 848 | × |
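The dataset table reports a 70/15/15 split, with augmentation applied only to the training set. The source does not say how images were assigned to subsets, so the minimal sketch below assumes a random per-image split with a fixed seed. `split_dataset` is a hypothetical helper.

```python
import random

# Hypothetical helper; assumes a random per-image split, which the source does not specify.


def split_dataset(items, ratios=(0.70, 0.15, 0.15), seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists.

    Train and val sizes are rounded from the ratios; test takes the remainder,
    so every item lands in exactly one subset.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Split image IDs first, then augment only the training subset.
train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Splitting before augmenting keeps augmented copies of a validation or test image out of the training set. Such leakage would otherwise inflate the reported metrics.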

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, L.; Yi, J.; He, P.; Tie, J.; Zhang, Y.; Wu, W.; Long, L. Improvement of the YOLOv8 Model in the Optimization of the Weed Recognition Algorithm in Cotton Field. Plants 2024, 13, 1843. https://doi.org/10.3390/plants13131843

Zheng L, Yi J, He P, Tie J, Zhang Y, Wu W, Long L. Improvement of the YOLOv8 Model in the Optimization of the Weed Recognition Algorithm in Cotton Field. Plants. 2024; 13(13):1843. https://doi.org/10.3390/plants13131843

Chicago/Turabian Style: Zheng, Lu, Junchao Yi, Pengcheng He, Jun Tie, Yibo Zhang, Weibo Wu, and Lyujia Long. 2024. "Improvement of the YOLOv8 Model in the Optimization of the Weed Recognition Algorithm in Cotton Field." Plants 13, no. 13: 1843. https://doi.org/10.3390/plants13131843

APA Style: Zheng, L., Yi, J., He, P., Tie, J., Zhang, Y., Wu, W., & Long, L. (2024). Improvement of the YOLOv8 Model in the Optimization of the Weed Recognition Algorithm in Cotton Field. Plants, 13(13), 1843. https://doi.org/10.3390/plants13131843