1. Introduction

Maize is a widely cultivated crop, valued for its high nutritional value and as an important food source for humans and animals [1]. It also plays an important role in food processing, where it is used to produce edible oil, animal feed, maize flour, beer, and other products [2], and it is a key raw material in many industrial production processes [3]. Since 2001, maize has surpassed rice to become the world's second-largest grain. It is estimated that maize, as the staple food for over 200 million people, provides about 15% of the world's protein and 20% of its calories [4]. Therefore, the impact of maize diseases and pests on maize yield and the resulting economic losses cannot be ignored [5]. The accurate identification of diseases and pests on maize leaves, followed by appropriate prevention and control measures, is crucial for reducing the economic losses they cause during the growing season. At present, the identification of maize diseases and pests relies mainly on manual inspection, in which a preliminary diagnosis is made based on people's knowledge of the characteristics of maize leaf diseases and pests. However, this approach is inefficient, susceptible to subjective factors such as mood and fatigue, and labor-intensive, resulting in high costs and making large-scale application difficult. To effectively reduce the impact of diseases and pests on maize growth and yield, they must be diagnosed as early as possible so that corresponding prevention and control measures can be taken, such as spraying pesticides, increasing fertilizer application, and introducing natural enemies of pests. This requires the precise identification of maize leaf diseases and pests to ensure that the correct treatment is adopted and that the challenges they pose are met effectively.

With the rapid development of computer vision technology, especially driven by deep learning applications such as image segmentation, classification, and detection, significant progress has been made in the automated identification of plant diseases and pests [6]. Using deep learning models to identify the disease and pest characteristics of plant leaves not only improves diagnostic accuracy but also enables early warning and timely intervention, effectively reducing the impact of diseases and pests on plant growth and yield. Nivethithaa and Vijayalakshmi (2023) proposed an optimized fish swarm optimization-support vector machine (FSOSVM) model for plant disease identification [7]. This model achieved accuracies of 98.3% and 98.9% in identifying rice and maize leaf diseases, respectively. However, the FSOSVM model identifies only four types of maize leaf diseases, which may not meet the need for diversified disease detection in actual agricultural production. Ramadan et al. (2023) used various convolutional neural network and transformer architectures to detect maize leaf diseases, among which the ViT-B/16 model performed best, with an accuracy of 94.51% [8]. However, compared to CNNs, the ViT model has higher computational resource requirements, which may limit identification efficiency in practical applications. Mehta et al. (2023) constructed and evaluated a federated learning CNN model for identifying and diagnosing maize diseases, achieving an overall accuracy of 89.4% on the test set and demonstrating adaptability and consistency across various disease categories [9]. However, an identification accuracy of 89.4% may not meet the requirements for the precise identification of maize leaf diseases and pests in practical applications.

Bhuyan et al. (2023) proposed a Res4net-CBAM model that combines the Res4net architecture with the Convolutional Block Attention Module (CBAM) [10]. This model can extract complex features related to different diseases and achieved an average identification accuracy of 98.27% on a self-built tea disease dataset. Although Res4net-CBAM has advantages in disease feature extraction, further optimization may be needed to deal with complex background interference in practical applications. Lv et al. (2020) integrated maize leaf disease feature enhancement with the DMS-Robust Alexnet approach to propose a novel method for maize disease identification [11]. This method achieved a classification accuracy of 98.62% on a dataset containing images of six types of maize leaves. However, the feature extraction ability of DMS-Robust Alexnet may still need to be improved when facing a wider variety of maize diseases and pests. Lin et al. (2021) designed EM-ERNet, a neural network based on the ResNet backbone that uses a particle swarm optimization ELM algorithm (PELM) to accelerate the fused network at the final fully connected layer, achieving excellent identification performance on public banana disease detection datasets [12]. However, because the banana images in these public datasets had already undergone background processing, the model's adaptability to real-world environments may be limited.

These studies provide valuable experience for identifying maize leaf diseases and pests, but the identification process still faces some challenges:

- (1)

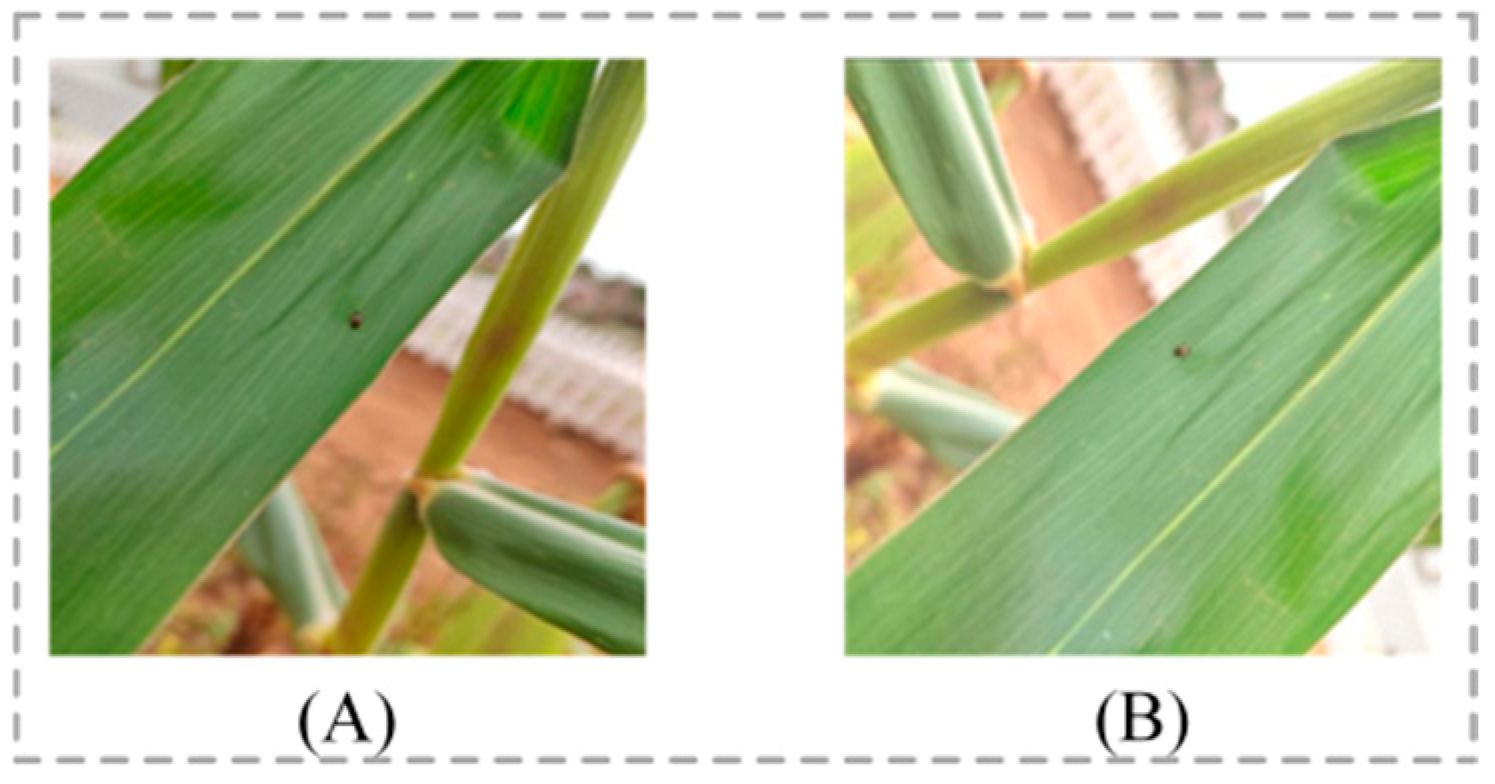

Complex background interference: Images captured in natural environments may have various complex backgrounds, as shown in

Figure 1A. These complex backgrounds may affect the model’s learning of disease and pest features, leading to the model capturing features unrelated to diseases and pests, thereby reducing identification accuracy.

- (2) Subtle disease and pest characteristics: Some diseases and pests produce relatively small features on maize leaves, as shown in Figure 1B. These subtle features occupy a small proportion of the pixels in the entire image, making feature extraction challenging.

To address complex background interference, Qian et al. (2022) proposed a maize leaf identification model based on transformers and self-attention [13]. The model represents the visual information of local image regions as tokens, calculates the correlations between local regions using attention mechanisms, and finally integrates global information for classification, effectively reducing the interference of complex backgrounds. Chen et al. (2020) used binary wavelet transform combined with Retinex (BWTR) for image denoising and enhancement and used the artificial bee colony-optimized KSW algorithm (ABCK) to separate tomato leaves from the background [14]. Finally, a dual-channel residual attention network model (B-ARNet) was used for image identification, with an overall identification accuracy of approximately 89%. Fang et al. (2022) proposed the HCA-MFFNet model, which uses the Hard Coordination Attention Mechanism (HCA) at different spatial scales to extract features of maize leaf disease images, reducing the impact of complex backgrounds [5]. The model achieved an average identification accuracy of 97.75% and an F1 score of 97.03% on a self-built dataset. Zhang et al. (2023) proposed BCTNet for detecting apple leaf diseases in unconstrained environments. In BCTNet, a Cross-Attention Module (CAM) is adopted to reduce the computational workload of the detection network in non-disease areas, thereby reducing the impact of background interference on the network's feature representation ability [15]. BCTNet achieved an accuracy of 85.23% and an average detection speed of 33 FPS on a self-built dataset.

To address subtle features, Alirezazadeh et al. (2022) applied the Convolutional Block Attention Module (CBAM) to the output feature maps of a CNN, highlighting important local regions and extracting more discriminative features [16]. This enabled EfficientNetB0 + CBAM to achieve a classification accuracy of 86.89% on the pear leaf disease dataset DiaMOS Plant. Zhang et al. (2021) proposed an apple disease detection method based on a deep multi-scale dual-channel convolutional neural network (DMCNN) [17]. An HSV color subspace analysis network and an RGB color subspace texture analysis network were used to extract features, and the DMCNN was constructed using a homologous feature cross-fusion mechanism. A detection rate of 99.5% was achieved on a self-built apple disease dataset. Li et al. (2023) proposed a tomato leaf disease identification method based on LMBRNet, which constructs a Comprehensive Grouping Discriminant Residual (CGDR) module and uses its multi-branch structure to capture diverse feature information about tomato leaf diseases across different dimensions and receptive fields, achieving an accuracy of 99.7% in tomato leaf disease identification [18]. Deng et al. (2023) proposed GR-ARNet, a novel image identification network based on ResNet50 that uses the Ghost Module to improve both the network's ability to extract deep features of banana leaf diseases and its identification speed [19]. The ResNeSt module is also used to adjust the weights of each channel, enhancing banana disease feature extraction and effectively reducing the identification error rate for similar diseases. The GR-ARNet model achieved an average accuracy of 96.98% and a precision of 89.31%.

These studies indicate that by combining advanced image processing techniques, attention mechanisms, multi-scale analysis, feature fusion, and innovative deep learning architectures, the accuracy and robustness of maize leaf disease and pest identification can be effectively improved. In order to further address the issues of complex background interference and subtle disease and pest characteristics in the identification of maize leaf diseases and pests, this study proposes an LFMNet model based on ResNext50. The following are the main contributions of this article:

- (1) Innovative design of the LFMNet model:

- (a) A localized multiscale inverted residual convolutional block (LMSB) was proposed, which addresses the loss of important features during initial down-sampling in traditional convolution by combining local attention mechanisms, inverted residual structures, and multi-scale feature extraction. It down-samples the image while retaining important disease and pest feature information, enabling the subsequent layers of the model to extract fine disease and pest features accurately.

- (b) The feature localization bottleneck (FLB) was proposed, which combines the bottleneck module with an attention mechanism to address the bottleneck module's shortcomings in handling complex background interference and extracting subtle features. The FLB helps the model focus on disease and pest regions in the feature map, reducing the interference of complex backgrounds and supporting the accurate extraction of subtle disease and pest features, thereby improving the accuracy and robustness of disease and pest identification.

- (c) The multi-hop local-feature fusion architecture (MLFFA) was proposed to overcome the shortcomings of ResNext50 in fine feature extraction. By combining Efficient Localized Convolution (ELC) with a multi-stage feature fusion strategy, it significantly improves the model's ability to capture and integrate subtle features of maize leaf diseases and pests, yielding more accurate identification.

- (2) On the self-built dataset, the LFMNet model achieved an average test accuracy of 95.68% and an F1 score of 95.83%. Compared with existing methods, LFMNet effectively reduces the interference of complex backgrounds and accurately extracts fine disease and pest features. LFMNet can not only accurately identify maize leaf diseases and pests but may also serve as a reference for identifying diseases and pests of other crops.

2. Maize Leaf Diseases and Pests Characteristics

The growth and yield of maize are affected not only by weed competition but also by various pests and pathogens, as well as by deficiencies in nutrients such as phosphorus and potassium.

The diseases and disorders considered here include northern leaf blight, gray leaf spot, common rust, blight, and mold, as well as phosphorus and potassium deficiency. Northern leaf blight is caused by Helminthosporium turcicum, which produces slender, gray-green to brown lesions on leaves [20]. Gray leaf spot is caused by Cercospora zeae-maydis and Cercospora zeina [21]. Its lesions are initially surrounded by a yellow halo and later develop into rectangular gray spots parallel to the leaf veins. Common rust causes orange-brown pustules to appear on the leaves, which, in severe cases, cover the entire leaf surface [22]. Blight is caused by infection with one or more species of Fusarium or Pythium and turns leaves grayish green, giving them a scalded or frost-damaged appearance. Mold is caused by infection with molds or miscellaneous fungi and produces black mold spots on the leaves. Phosphorus deficiency turns the leaf tips and edges purple-red, whereas potassium deficiency produces yellow stripes running from the leaf tips toward the base.

Maize pests include the leaf beetle, the red spider mite, Mythimna separata (Walker), and Phyllotreta striolata. Leaves damaged by the leaf beetle retain only a network of veins or the epidermis, which appears as small, irregular white spots from a distance [23]. The red spider mite covers the leaf surface with flocculent or mesh-like material [24]. Damage caused by Mythimna separata (Walker) leads to holes or irregular notches in the leaves [25]. Phyllotreta striolata infestation is recognizable by adult insects with yellow stripe markings visible on the leaves [26].

Table 1 provides a detailed description of the leaf characteristics of these 11 maize diseases and pests, providing important references for the identification and management of diseases and pests.

4. Experimentation and Analysis

This section demonstrates the effectiveness of LFMNet in identifying 12 types of maize leaves (11 disease and pest categories plus healthy leaves) through experiments. The section is divided into nine subsections: (1) Experimental Environment and Preparation; (2) Evaluation Indicators; (3) Performance Analysis of LFMNet; (4) Effectiveness Analysis of Individual Modules; (5) Ablation Experiment; (6) Comparison with Other Advanced Networks; (7) Confusion Matrix of Model Test Results; (8) Model Visualization of LFMNet; and (9) Generalization Experiments. We conducted comprehensive performance tests on the model and compared LFMNet with other models from various perspectives.

4.1. Experimental Environment and Preparation

To ensure the reliability of the experimental results and avoid the impact of changes in experimental conditions, all experiments in this study were conducted under the same hardware and software conditions. The hardware used includes an NVIDIA GeForce RTX 4090 GPU (NVIDIA, Santa Clara, CA, USA) and an Intel(R) Xeon(R) Platinum 8336C CPU (Intel, Santa Clara, CA, USA) at 2.30 GHz. The server runs the 64-bit Windows 10 operating system, and LFMNet was built on the PyTorch 2.1.0 framework.

Table 5 provides specific hardware and software details.

The input images are 256 × 256 pixels, and the dataset contains 19,451 images in total. The images were divided into a training set of 13,616 images, a validation set of 3889 images, and a test set of 1946 images, a ratio of approximately 7:2:1. The training set is used for model training; the validation set is used for tuning model and training parameters and for evaluating model performance during training; and the test set is used for the final assessment of model performance. After iterative tuning, the final parameter settings used during training are presented in Table 6.
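As an illustration of the approximately 7:2:1 division described above, the following Python sketch shuffles a list of sample identifiers once and cuts it into training, validation, and test partitions. It is a minimal sketch under stated assumptions, not the procedure used in this study: the function name `split_dataset`, the fixed seed, and the unstratified split are all illustrative choices.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T],
                  ratios: Tuple[float, float, float] = (0.7, 0.2, 0.1),
                  seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Hypothetical helper: shuffle once, then cut into train/val/test by ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n = len(shuffled)
    n_train = round(n * ratios[0])
    n_val = round(n * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder, so no sample is dropped
    return train, val, test
```

Applied to 19,451 items, this sketch yields 13,616 / 3,890 / 1,945 images, one image off from the reported 3889 / 1946 split, so the study's exact partitioning rule differs (per-class rounding would be one possible explanation). For class-imbalanced data, splitting each class separately and concatenating the results (stratification) keeps class proportions similar across the three sets.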

4.2. Evaluation Indicators

In this study, we evaluate the performance of the model using the following metrics: accuracy, precision, recall, F1 score, and testing time.
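These metrics are defined in terms of the counts TP, FP, FN, and TN described in the following paragraph. For the 12-class task, these quantities are typically computed per class (treating one class as positive and all others as negative) and then averaged; the averaging scheme is an assumption here, as the text does not state it.

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
F1               &= \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
\end{aligned}
```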

Here, TP (true positive) represents the number of positive samples correctly predicted as positive, FP (false positive) represents the number of negative samples incorrectly predicted as positive, FN (false negative) represents the number of positive samples incorrectly predicted as negative, and TN (true negative) represents the number of negative samples correctly predicted as negative. Precision measures the proportion of samples predicted as positive that are actually positive. Recall measures the proportion of actual positive samples that the model correctly predicts as positive. Accuracy measures the proportion of correct predictions among all predictions made by the model. The F1 score is the harmonic mean of precision and recall and balances the two. In image identification, these indicators evaluate model performance from different perspectives. Testing time measures the time the model, loaded with its trained weights, takes to complete performance testing on the test set.
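The following Python sketch shows how these metrics can be computed from a multi-class confusion matrix. It assumes macro averaging (per-class precision, recall, and F1 averaged with equal weight across classes), which is a common convention but is not confirmed by the text; the function name `macro_metrics` is illustrative.

```python
from typing import Dict, List


def macro_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1.

    cm[i][j] is the number of samples whose true class is i and
    whose predicted class is j (rows = truth, columns = prediction).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))  # diagonal = correct predictions

    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(cm[k]) - tp                        # truly k, predicted another class
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)

    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy 3-class example (not data from this study)
example = macro_metrics([[8, 2, 0], [1, 9, 0], [0, 0, 10]])
```

Note that a macro-averaged F1 is the mean of per-class F1 scores, so it need not equal the harmonic mean of the macro-averaged precision and recall.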

4.3. Performance Analysis of LFMNet

To evaluate the performance of LFMNet in identifying 12 types of maize leaves, we conducted training and validation on the MLDP dataset. We first trained the model and adjusted the training parameters based on the validation-set metrics during training. The accuracy and loss values for both the training and validation sets were recorded and plotted in Figure 4, which reflects, to some extent, the model's generalization ability on the dataset. As the graph shows, after 120 epochs of training, the training accuracy reached 99.99% and the training loss decreased to around 0.0009, while the validation accuracy reached 95.78% and the validation loss decreased to around 0.1548.

To minimize experimental variability, we conducted ten-fold cross-validation [34] on LFMNet. In this procedure, the dataset was divided into 10 subsets, each of which served in turn as the test set while the remaining 9 subsets were used as the training set. LFMNet was trained and tested in each iteration, and the results of the ten folds were aggregated. The accuracies from the ten-fold cross-validation are summarized in Figure 5, with an average accuracy of 95.46%.
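The ten-fold procedure described above can be sketched as follows. This is a minimal, unstratified version written for illustration; the function names `k_fold_indices` and `mean_accuracy` and the fixed seed are hypothetical, and the training step itself is not implemented.

```python
import random
from typing import List, Sequence, Tuple


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_indices, test_indices) pairs; each index is tested exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # Spread the remainder over the first folds so fold sizes differ by at most one
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(idx[start:start + size])
        start += size
    splits = []
    for i in range(k):
        test = folds[i]
        train = [j for fold in folds[:i] + folds[i + 1:] for j in fold]  # other 9 folds
        splits.append((train, test))
    return splits


def mean_accuracy(fold_accuracies: Sequence[float]) -> float:
    """Average the per-fold test accuracies."""
    return sum(fold_accuracies) / len(fold_accuracies)
```

In use, each `(train, test)` pair would be passed to the training and evaluation routine, and the ten resulting accuracies would be averaged with `mean_accuracy`, as with the 95.46% reported above.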

4.4. Effectiveness Analysis of Individual Modules

4.4.1. Effectiveness of LMSB

In this study, we propose a new initial down-sampling block, the LMSB, aimed at solving the problem of the traditional 7 × 7 convolution losing important feature information during initial down-sampling. To verify the effectiveness of the LMSB, we compared it with the ResNet-C [35] module. The experimental results are shown in Table 7.

The ResNet-C module replaces the 7 × 7 convolution with three consecutive 3 × 3 convolutions. The first 3 × 3 convolution down-samples the image, while the next two further extract features from the feature map. Although this design can provide more detailed feature extraction in some cases, it increases the overall number of parameters, and its performance in the maize disease and pest identification task is not ideal. This suggests that the ResNet-C module may be better suited to application scenarios with less demanding fine-feature extraction requirements.
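The parameter increase mentioned above can be checked with simple arithmetic. The sketch below counts convolution weights only (no biases or BatchNorm) and assumes the ResNet-C channel widths of 32, 32, and 64 from the "Bag of Tricks" paper by He et al.; the widths used in this study's comparison are not stated, and `conv_params` is an illustrative helper.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Number of weights in a k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k


# Standard ResNet stem: a single 7 x 7 convolution, 3 -> 64 channels, stride 2
stem_7x7 = conv_params(3, 64, 7)

# ResNet-C stem: three 3 x 3 convolutions; widths 32, 32, 64 assumed from "Bag of Tricks"
stem_resnet_c = conv_params(3, 32, 3) + conv_params(32, 32, 3) + conv_params(32, 64, 3)

print(stem_7x7, stem_resnet_c)  # 9408 28512
```

Under these assumptions, the ResNet-C stem has roughly three times as many weights as the single 7 × 7 convolution (28,512 vs. 9,408), although both are small relative to the full network.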

In contrast, the LMSB uses its multi-scale and feature localization characteristics to preserve important feature regions in the image while down-sampling, which is crucial for capturing subtle features in the subsequent network structures. The LMSB not only improves the extraction of disease and pest features but also keeps the number of parameters low, a significant advantage in model design. The experimental results in Table 7 show that the LMSB outperforms ResNet-C while maintaining parameter efficiency, especially in the maize disease and pest identification task, which requires high-precision feature extraction.

4.4.2. Effectiveness of FLB

In this study, to address the shortcomings of the bottleneck module in handling complex background interference and fine feature extraction, we proposed the FLB. To verify its effectiveness, we compared it with three existing bottleneck improvement schemes, namely CBAMB (built with CBAM), CAB (built with CA), and SEB (built with SE), as well as with the original bottleneck. The experimental results are shown in Table 8.

The CBAMB integrates the CBAM attention mechanism to optimize the bottleneck structure along both the channel and spatial dimensions, enabling the network to focus on information-rich channels and regions in the image and improving the model's ability to recognize image features. However, the spatial attention of CBAMB is a single two-dimensional map and is not as flexible or detailed as the ELA in the FLB, which may limit the performance of CBAMB in tasks that require identifying subtle spatial features. The CAB integrates the CA (coordinate attention) mechanism, which gives the module the ability to focus dynamically along the spatial dimensions and improves the accuracy and efficiency of image identification. However, although the CA mechanism in the CAB encodes coordinate information, it also reduces the channel dimension, which has a negative impact on the generated attention and limits the performance of the CAB. The SEB introduces the SE (squeeze-and-excitation) attention mechanism to adaptively weight channel features within the bottleneck structure, improving feature representation and enhancing the accuracy and generalization ability of the network. However, the SE mechanism in the SEB focuses mainly on the channel dimension, capturing statistics across channels through global average pooling, and lacks attention in the spatial dimension.
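To make the channel-only nature of SE attention concrete, the following pure-Python sketch implements a squeeze-and-excitation step on a small C × H × W feature map stored as nested lists: global average pooling, a two-layer bottleneck (reduction, ReLU, expansion, sigmoid), and channel-wise rescaling. The toy weights, the omission of biases, and the function name `se_attention` are illustrative assumptions; a real SEB learns these weights inside the network.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def se_attention(x: List[Matrix], w1: Matrix, w2: Matrix) -> List[Matrix]:
    """Squeeze-and-excitation on a C x H x W map.

    w1: (C/r) x C reduction weights; w2: C x (C/r) expansion weights (biases omitted).
    """
    # Squeeze: global average pooling gives one statistic per channel
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight per channel
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    s = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: every spatial position in channel c is multiplied by the same s[c]
    return [[[v * s[c] for v in row] for row in ch] for c, ch in enumerate(x)]
```

Because every position in channel c is multiplied by the same factor, SE can emphasize or suppress whole channels but cannot distinguish a lesion region from background within a channel, which is the spatial limitation noted above.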

Compared with the above schemes, the ELA attention mechanism in the FLB applies attention independently along the two spatial dimensions (height and width), allowing it to adjust the spatial features of the feature map more finely. According to the experimental results in Table 8, the FLB outperforms the CBAMB, CAB, and SEB on all metrics. We therefore chose the FLB as the basic component of LFMNet to improve its handling of complex background interference and its fine-feature extraction. The FLB's design emphasizes the fine extraction of spatial features, which is crucial for model performance in practical disease and pest identification tasks. With the FLB, LFMNet can identify diseases and pests on maize leaves more effectively, maintaining high accuracy even against complex and variable backgrounds.
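The following sketch illustrates, in simplified form, what applying attention independently along height and width means. Each channel is average-pooled along the width to obtain one descriptor per row and along the height to obtain one per column; each descriptor passes through a sigmoid, and every position is scaled by the product of its row and column weights. As we understand ELA, it additionally applies a 1D convolution and group normalization to the pooled vectors before the sigmoid; those learned steps are omitted here, and the function name `directional_attention` is hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def directional_attention(x: List[Matrix]) -> List[Matrix]:
    """Simplified height/width attention on a C x H x W map (no conv or normalization)."""
    out = []
    for ch in x:
        h, w = len(ch), len(ch[0])
        # Pool along the width: one descriptor per row (height direction)
        row_desc = [sum(row) / w for row in ch]
        # Pool along the height: one descriptor per column (width direction)
        col_desc = [sum(ch[i][j] for i in range(h)) / h for j in range(w)]
        a_h = [sigmoid(v) for v in row_desc]
        a_w = [sigmoid(v) for v in col_desc]
        # Each position is weighted by its own row weight and column weight
        out.append([[ch[i][j] * a_h[i] * a_w[j] for j in range(w)] for i in range(h)])
    return out
```

Unlike SE-style channel weighting, the resulting weights vary with position: rows and columns with strong responses (for example, along a lesion) receive larger weights than background rows and columns within the same channel.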

4.4.3. Effectiveness of the MLFFA

This study proposes the MLFFA to address the limitations of ResNext50 in extracting the subtle features of maize leaf diseases and pests. The core of the MLFFA design is its multi-hop local-feature fusion, which enables the network to capture and integrate subtle disease and pest features more effectively. To verify the effectiveness of the MLFFA, we compared it with the original ResNext50 and ResNet50 architectures.

The experimental results show that the MLFFA has significant advantages in capturing subtle features of maize leaf diseases and pests and delivers more accurate and robust disease and pest identification than ResNext50 and ResNet50. The specific results are shown in Table 9; the MLFFA outperforms both the ResNext50 and ResNet50 architectures on every evaluation metric. Based on these results, we adopt the MLFFA as the network architecture of LFMNet to achieve the effective extraction and fusion of fine disease and pest features.

4.5. Ablation Experiment

This paper proposes the LMSB, the FLB, and the MLFFA. To evaluate the effectiveness of these modules, we conducted ablation experiments. Here, the LMSB is the localized multiscale inverted residual convolutional block, the FLB is the feature localization bottleneck, and the MLFFA is the multi-hop local-feature fusion architecture proposed in this paper. Using the controlled-variable method, we progressively introduced the LMSB, FLB, and MLFFA into the ResNext50 model, forming seven different schemes. The model for each scheme was trained with the same training parameters on the MLDP dataset to ensure a fair comparison; these parameters are listed in Table 6 of Section 4.1. After training, all models were tested on the same test set, and the final results are summarized in Table 10. The results show that, with all other conditions held constant, the identification accuracy of the model improves progressively as the new modules are integrated. This finding supports the effectiveness of the LMSB, FLB, and MLFFA in improving model performance.

Through these experiments, we not only demonstrated the independent contributions of each module but also showed that, when they work together, they can significantly improve the accuracy of identifying maize leaf diseases and pests.
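The text does not enumerate the seven ablation schemes. One reading consistent with that count is every non-empty combination of the three modules added to the ResNext50 baseline (2^3 - 1 = 7); the sketch below lists these combinations under that assumption, using the module names from this paper and a hypothetical helper `ablation_schemes`.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

MODULES = ("LMSB", "FLB", "MLFFA")


def ablation_schemes(modules: Sequence[str] = MODULES) -> List[Tuple[str, ...]]:
    """Every non-empty subset of the modules, smallest subsets first."""
    schemes: List[Tuple[str, ...]] = []
    for size in range(1, len(modules) + 1):
        schemes.extend(combinations(modules, size))
    return schemes


for scheme in ablation_schemes():
    print("ResNext50 + " + " + ".join(scheme))
```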

4.6. Comparison with Other Advanced Networks

We compared LFMNet with other advanced networks: CSPResNext50 [39], ResNet50 [40], ResNext50, MobileNetv2 [41], HS-ResNet50 [42], GhostNetv3 [43], and Vision Transformer [44]. All models used the same training parameters, shown in Table 6, were trained for 120 epochs, and were tested on the same test set. The experimental results are presented in Table 11. We then analyzed the accuracy of each model on each type of maize leaf image, as illustrated in Figure 6. Here, numbers are used in place of the full names of the maize leaf types: 1 blight; 2 common rust; 3 gray leaf spot; 4 healthy; 5 leaf beetle; 6 mold; 7 northern leaf blight; 8 phosphorus deficiency; 9 potassium deficiency; 10 red spider mite; 11 Mythimna separata (Walker); 12 Phyllotreta striolata.

From Table 11, it is evident that LFMNet achieves the highest accuracy, precision, recall, and F1 score among the eight models. Figure 6 shows that LFMNet achieves the highest accuracy on 11 of the 12 leaf types; only for the third type (gray leaf spot) is its identification accuracy slightly below the best result. This result highlights the robustness and accuracy of LFMNet in identifying diverse diseases and pests on maize leaves.

4.7. Confusion Matrix of Model Test Results

The confusion matrices of LFMNet and the other models are shown in Figure 7. The subfigures represent the following: (A) ResNet50, (B) ResNext50, (C) MobileNet-v2, (D) Vision Transformer, (E) HS-ResNet50, (F) GhostNet-v3, (G) CSPResNext50, and (H) LFMNet. The leaf-type abbreviations in the confusion matrices are as follows: B, blight; CR, common rust; GLS, gray leaf spot; H, healthy; LB, leaf beetle; M, mold; NLB, northern leaf blight; PD1, phosphorus deficiency; PD2, potassium deficiency; RSM, red spider mite; MS, Mythimna separata (Walker); and PS, Phyllotreta striolata.

The confusion matrices clearly show the advantages of LFMNet in identifying the various maize leaf diseases and pests.
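For readers reproducing such matrices, the sketch below builds a 12 × 12 confusion matrix from true and predicted labels using the abbreviations above and derives per-class accuracy (the diagonal entry divided by the row total), which is how we read the per-type accuracies in Figure 6. The class order follows the numbering in Section 4.6 and is an assumption about how Figure 7 is laid out; the function names are illustrative.

```python
from typing import List, Sequence

# Abbreviations from Figure 7, in the order of the numbering used in Section 4.6 (assumed)
CLASSES = ["B", "CR", "GLS", "H", "LB", "M", "NLB", "PD1", "PD2", "RSM", "MS", "PS"]


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     classes: Sequence[str] = CLASSES) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    index = {name: i for i, name in enumerate(classes)}
    cm = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        cm[index[t]][index[p]] += 1
    return cm


def per_class_accuracy(cm: List[List[int]]) -> List[float]:
    """Fraction of each true class predicted correctly (diagonal / row sum)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]
```

Off-diagonal entries in a row show which classes a given leaf type is confused with, which is the information the matrices in Figure 7 convey visually.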

4.8. Model Visualization of LFMNet

To visually examine how different regions of different types of maize leaves influence LFMNet's disease and pest identification, we visualized a selection of images using the trained model weights. We used Grad-CAM to highlight the image regions that contribute most to LFMNet's predictions. Figure 8 shows the resulting heatmaps overlaid on the corresponding maize leaf images.

The visualization results in Figure 8 demonstrate LFMNet's ability to extract subtle features of maize leaf diseases and pests. The overlaid heatmaps show intuitively how the model focuses on key feature areas in the image. These areas are often decisive for identifying diseases and pests, and LFMNet accurately locates them and assigns them more attention, which contributes to its identification accuracy.
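Grad-CAM weights each activation map of a chosen convolutional layer by the spatial average of the gradient of the target class score with respect to that map, sums the weighted maps, and applies a ReLU. The pure-Python sketch below shows only this final computation, assuming the activations and gradients have already been captured (in PyTorch this is typically done with `register_forward_hook` and `register_full_backward_hook` on the target layer). The layer used in LFMNet is not stated, and `grad_cam` is a hypothetical helper name.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Grad-CAM heatmap from one layer's activations and gradients.

    activations[k] and gradients[k] are H x W maps for channel k; gradients
    hold d(score of the target class) / d(activation).
    """
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global average of the gradient over the spatial positions of channel k
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of activation maps, then ReLU to keep positive evidence only
    cam = [[max(0.0, sum(a * act[i][j] for a, act in zip(alphas, activations)))
            for j in range(w)] for i in range(h)]
    # Scale to [0, 1] so the map can be rendered as a heatmap
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```

The normalized map is then upsampled to the input resolution (256 × 256 here) and blended with the image to produce overlays like those in Figure 8.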

4.9. Generalization Experiments

Generalization experiments are crucial for evaluating the practical application potential of a model. By training and testing a model on diverse datasets, they assess its applicability and robustness, with particular attention to its performance under varied input conditions and its ability to accurately identify target objects (such as plant leaves) against complex backgrounds. Such testing verifies whether the model maintains consistent performance across a wide range of scenarios rather than only on its specific training data, that is, whether it can overcome the limitations of its training environment and make stable predictions in real-world applications. A comprehensive generalization evaluation therefore offers deeper insight into the model's generalization potential, provides an important basis for judging its reliability, and reveals problems and limitations that may arise during deployment, pointing the way for further optimization.

To comprehensively evaluate the generalization ability of LFMNet, we selected four standard datasets for experiments: Plant Village-Apple, Almond, Fish, and Plant Leaves, as shown in Table 12. These datasets cover different domains and thus test the applicability of the model across various scenarios. We also applied data augmentation to the Plant Village-Apple and Almond datasets to further test the robustness of the model on diverse data. The training parameters are shown in Table 6 of Section 4.1. Each dataset was divided into training and test sets at a ratio of approximately 9:1. LFMNet performed well on all four datasets: as shown in Table 13, its accuracy on the Plant Village-Apple, Almond, Fish, and Plant Leaves datasets reached 99.37%, 99.35%, 99.67%, and 99.44%, respectively. Together with the maize results, these findings highlight LFMNet's cross-domain generalization ability.

The generalization experiment has demonstrated that LFMNet not only performs well in identifying maize leaf diseases and pests but also maintains high accuracy in identifying different types of image data. This indicates that LFMNet has good generalization potential and can play an important role in practical applications.

5. Discussion

We propose an identification method for maize leaf diseases and pests based on LFMNet and verify its effectiveness through a series of experiments. Table 11 and Figure 6 show that LFMNet achieves higher accuracy and precision in the 12-class maize leaf classification task than other existing models.

GhostNet v3 is built around a lightweight design that offers efficient computation with a small model size. However, it did not show this advantage on the MLDP dataset. Its accuracy, precision, recall, and F1 score were 87.62%, 87.49%, 87.16%, and 87.25%, respectively, with a testing time of 7.78 s. Vision Transformer divides an image into small patches and linearly embeds them into a sequence, allowing the transformer to process the image much as it would a text sequence. However, Vision Transformer also performed poorly on the MLDP dataset, with accuracy, precision, recall, and F1 scores of 87.72%, 87.16%, 88.20%, and 87.59%, respectively, and a testing time of 7.27 s. ResNet50, which is built from residual blocks, achieved an accuracy of 89.88%, a precision of 89.63%, a recall of 89.86%, an F1 score of 89.68%, and a testing time of 3.47 s on the 12 maize leaf categories. MobileNetv2, a lightweight deep neural network architecture, maintained high performance with a testing time of 3.31 s, achieving an accuracy of 90.39%, a recall of 90.43%, and an F1 score of 90.56%. ResNext50 improves the representation ability of ResNet50 by introducing group convolution. Compared with ResNet50, ResNext50 achieved higher performance with a comparable number of parameters. It reached an accuracy of 90.54% with a testing time of 3.56 s, and a precision, recall, and F1 score of 90.81%, 90.14%, and 90.36%, respectively. CSPResNext50 is an efficient convolutional neural network architecture that combines the computational efficiency of the Cross-Stage Partial Network (CSPNet) with the feature representation capability of ResNext50. It achieved an accuracy of 92.19%, a precision of 92.45%, a recall of 92.14%, an F1 score of 92.28%, and a testing time of 4.86 s.
HS ResNet50 is an improved ResNet that enhances feature extraction by introducing a hierarchical-split block. It achieved an accuracy of 93.73%, a precision of 94.04%, a recall of 93.09%, and an F1 score of 93.49%. However, its testing time is relatively long, at 21.59 s, which may limit its use in practical applications. LFMNet achieved the best classification performance, with an accuracy of 95.68%, a precision of 95.91%, a recall of 95.78%, an F1 score of 95.83%, and a testing time of 4.71 s. Compared with ResNext50, LFMNet improved accuracy, precision, recall, and F1 score by 5.14, 5.10, 5.64, and 5.47 percentage points, respectively, at the cost of a 1.15 s increase in testing time.
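
The improvements of LFMNet over ResNext50 are absolute (percentage-point) differences between the reported scores. The short check below recomputes them from the values given in the text. It only restates the reported numbers and does not reproduce any experiment. The helper name absolute_differences is hypothetical.

```python
# Scores reported in the text (metrics in %, testing time in seconds).
resnext50 = {"accuracy": 90.54, "precision": 90.81, "recall": 90.14,
             "f1": 90.36, "test_time_s": 3.56}
lfmnet = {"accuracy": 95.68, "precision": 95.91, "recall": 95.78,
          "f1": 95.83, "test_time_s": 4.71}


def absolute_differences(new: dict, base: dict, ndigits: int = 2) -> dict:
    """Return new[k] - base[k] for every key, rounded to hide float noise."""
    return {key: round(new[key] - base[key], ndigits) for key in base}


diff = absolute_differences(lfmnet, resnext50)
print(diff)
# {'accuracy': 5.14, 'precision': 5.1, 'recall': 5.64, 'f1': 5.47, 'test_time_s': 1.15}
```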

Figure 7 shows the confusion matrices of the eight models. A confusion matrix is an important tool for evaluating classification models. It tabulates model predictions against actual labels and reveals which categories the model confuses, which helps guide model optimization. It also gives a more informative evaluation than overall accuracy when categories are imbalanced. The confusion matrices show that LFMNet still has room for improvement in identifying gray leaf spot (a disease) and Mythimna separata (Walker) (a pest). LFMNet's identification accuracy for gray leaf spot is 2.81 percentage points lower than that of MobileNetv2. For Mythimna separata (Walker), the highest identification accuracy among the eight models was only 86.59%.
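
To make the confusion-matrix reading concrete, the sketch below builds a confusion matrix from integer class labels. From it, the sketch derives per-class accuracy (the diagonal entry divided by its row sum, i.e. per-class recall), together with overall accuracy and macro-averaged precision, recall, and F1. The paper does not state how its precision, recall, and F1 scores were averaged. Macro averaging over classes, with F1 computed per class and then averaged, is an assumption here, and all function names are hypothetical.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """cm[i][j] = number of samples with true class i predicted as class j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_accuracy(cm: List[List[int]]) -> List[float]:
    """Diagonal / row sum: share of each true class identified correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def macro_scores(cm: List[List[int]]) -> Dict[str, float]:
    """Overall accuracy plus macro-averaged precision, recall, and F1."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    precisions, recalls, f1s = [], [], []
    for i in range(n):
        tp = cm[i][i]
        predicted_i = sum(cm[r][i] for r in range(n))  # column sum
        actual_i = sum(cm[i])                           # row sum
        p = tp / predicted_i if predicted_i else 0.0
        r = tp / actual_i if actual_i else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": sum(cm[i][i] for i in range(n)) / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy example with three classes (not data from the paper).
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
print(cm)                      # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
print(per_class_accuracy(cm))  # [0.5, 1.0, 0.5]
```

Reading a row of the matrix shows where one class's samples went. Reading a column shows which true classes were absorbed into one prediction, which is how weak categories such as those discussed above can be located.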

The performance improvements of LFMNet can be attributed to the following factors:

- (1)

The LMSB module proposed in LFMNet preserves important disease and pest feature regions during initial down-sampling, which helps the subsequent layers of the network extract fine features. According to Table 7, LMSB has a positive impact on all four key identification metrics. In Table 10, comparing schemes D, E, and G with schemes B, C, and F shows accuracy increases of 0.41, 1.90, and 0.95 percentage points, respectively, demonstrating the contribution of LMSB to performance improvement.

- (2)

To cope with complex background interference, LFMNet adopts the FLB component. As shown in Table 8, compared with the bottleneck, CBAMB, CAB, and SEB, the FLB improved accuracy by 2.45, 1.34, 1.80, and 1.96 percentage points, respectively. In Table 10, comparing schemes D, F, and G with schemes A, C, and E shows accuracy improvements of 3.08, 2.62, and 2.69 percentage points, respectively, further confirming the role of the FLB in improving the performance of LFMNet.

- (3)

To address the challenge of extracting subtle features, the MLFFA module proposed in this study is used in place of the ResNext50 architecture. Table 9 shows that, compared with ResNet50 and ResNext50, the MLFFA improved accuracy by 2.41 and 1.73 percentage points, respectively. In Table 10, comparing schemes E, F, and G with schemes A, B, and D shows accuracy increases of 2.67, 0.72, and 1.28 percentage points, respectively, demonstrating the effectiveness of the MLFFA.

LFMNet has shown excellent performance in identifying maize leaf diseases and pests, achieving high accuracy on the MLDP dataset and demonstrating good generalization ability on other datasets. This can raise the level of automation in identifying maize leaf diseases and pests and effectively compensate for the shortcomings of traditional manual identification methods.

However, to meet the needs of real-time processing in practical applications, the model parameters need further optimization. This includes increasing the computational speed of the model without sacrificing recognition accuracy, so that LFMNet can respond quickly in actual agricultural production environments. Meanwhile, leaves affected by the same disease exhibit different degrees of severity, such as early, middle, and late stages, and may look completely different at each stage. Diseased leaves therefore need to be further classified according to severity.

In future work, we plan to classify leaves with the same disease according to severity to improve the reliability of our research. We also plan to make LFMNet more lightweight to enhance its applicability in real-time scenarios. In addition, the current research focuses mainly on identifying diseases and pests at the image acquisition stage. In the future, we will expand the scope of our research and apply this technology to every stage of the maize growing cycle, providing more comprehensive and in-depth technical support for agricultural production.

6. Conclusions

The LFMNet model proposed in this study is based on the ResNext50 architecture. It significantly improves the accuracy and robustness of maize leaf disease and pest identification by introducing new modules, namely the LMSB, FLB, and MLFFA. Experimental results on the self-built dataset show that LFMNet achieved an average accuracy of 95.68% and an F1 score of 95.83%, demonstrating its strong performance. In addition, comparisons with other models and generalization tests show that LFMNet performs favorably on the image recognition tasks evaluated. This work is expected to raise the level of automation in disease and pest identification and effectively compensate for the shortcomings of traditional manual identification methods.

However, to meet the demand for real-time processing, the model parameters need further optimization to improve recognition speed while maintaining accuracy, so that LFMNet can respond quickly in actual agricultural production. Meanwhile, because the same disease looks different at different stages, we plan to classify leaves in more detail according to disease severity in future research to enhance the reliability of the study. In addition, we will expand our research scope and apply this technology to various stages of maize cultivation, providing more comprehensive technical support for agricultural production.