Development of a Low-Cost System for 3D Orchard Mapping Integrating UGV and LiDAR

Abstract

1. Introduction

2. Materials and Methods

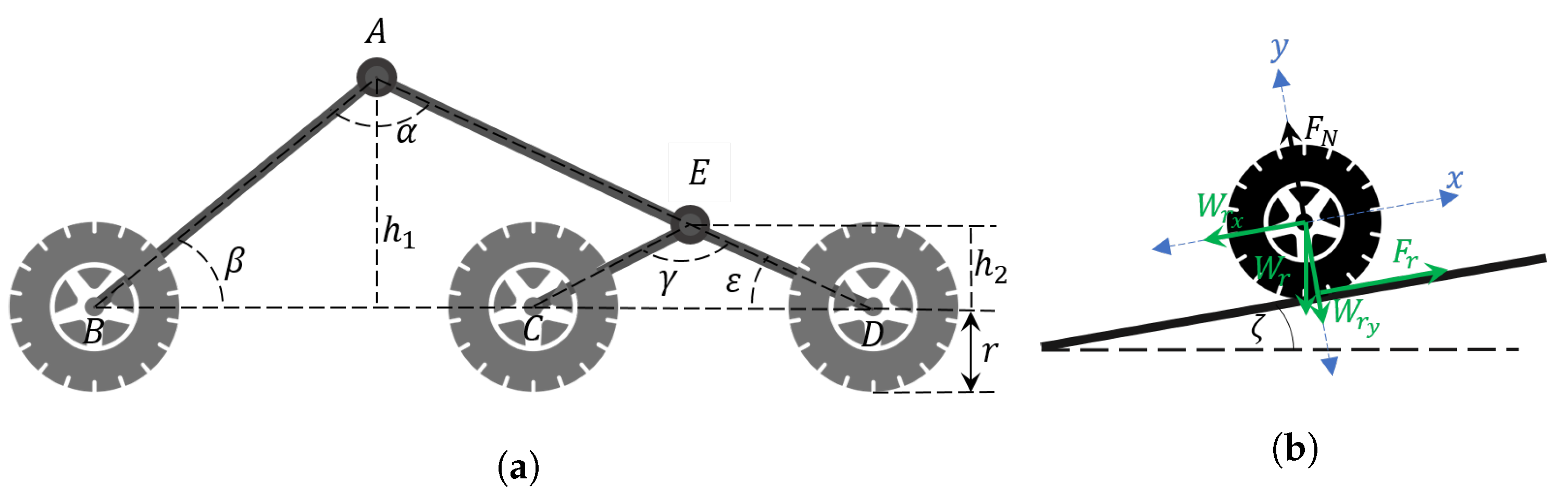

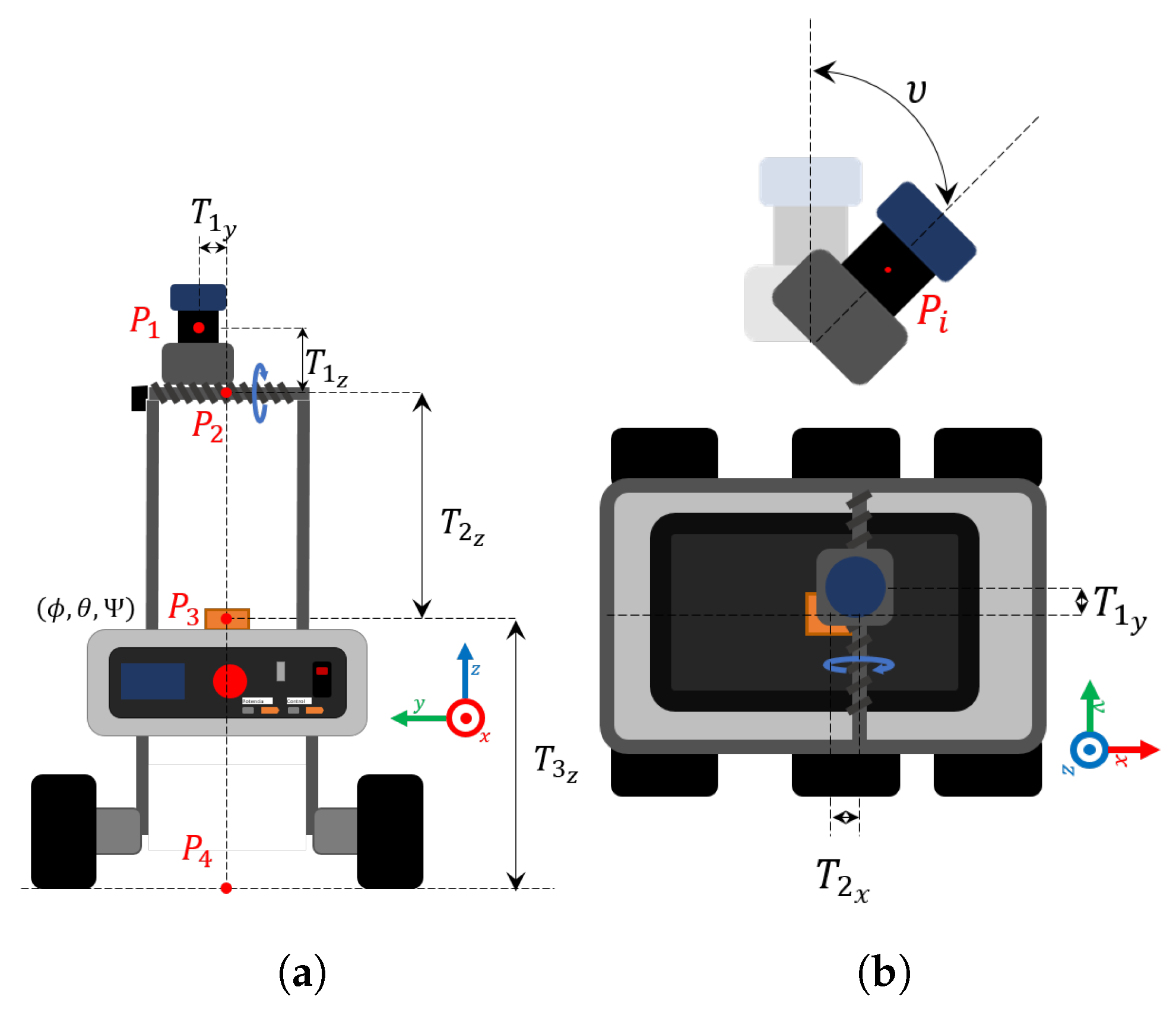

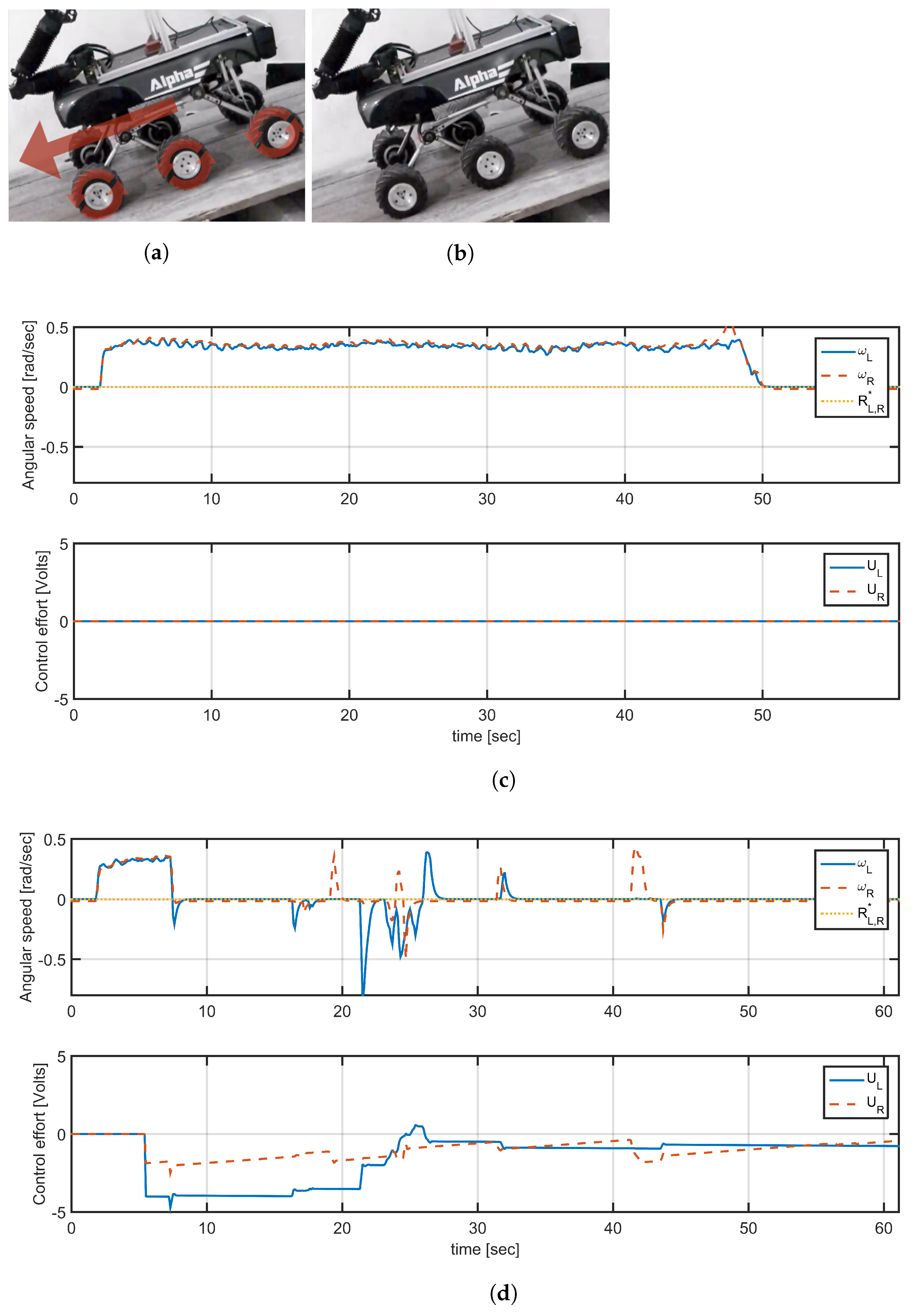

2.1. Terrestrial Mobile Robot

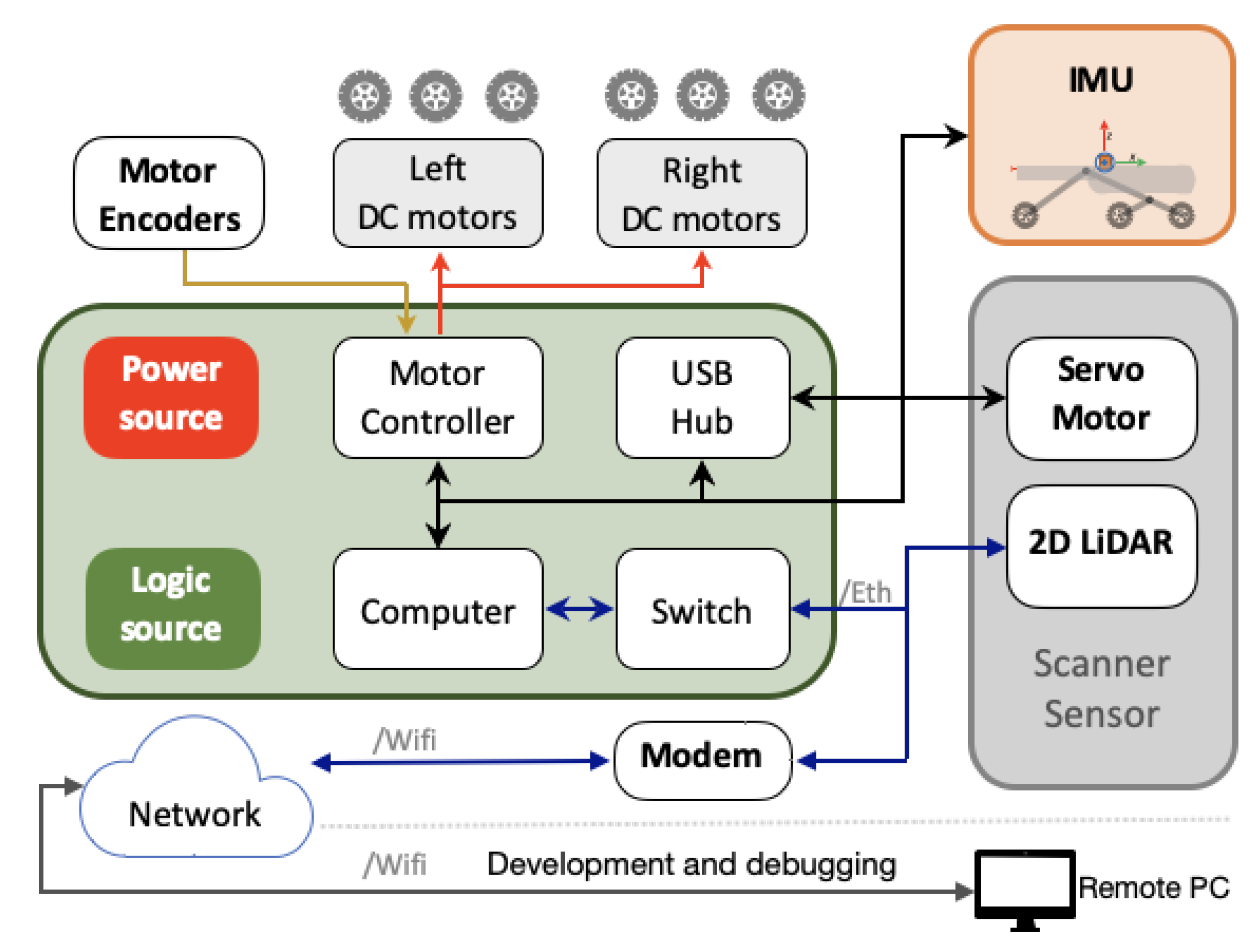

2.1.1. Hardware Design

2.1.2. Software Design

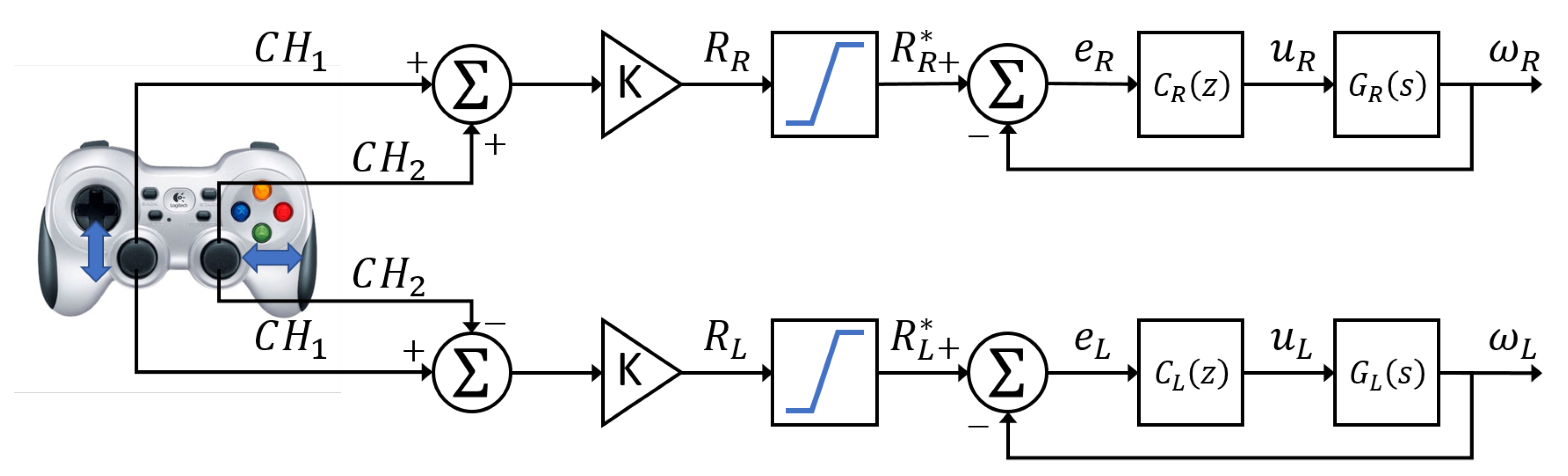

2.1.3. Embedded Cruise Control

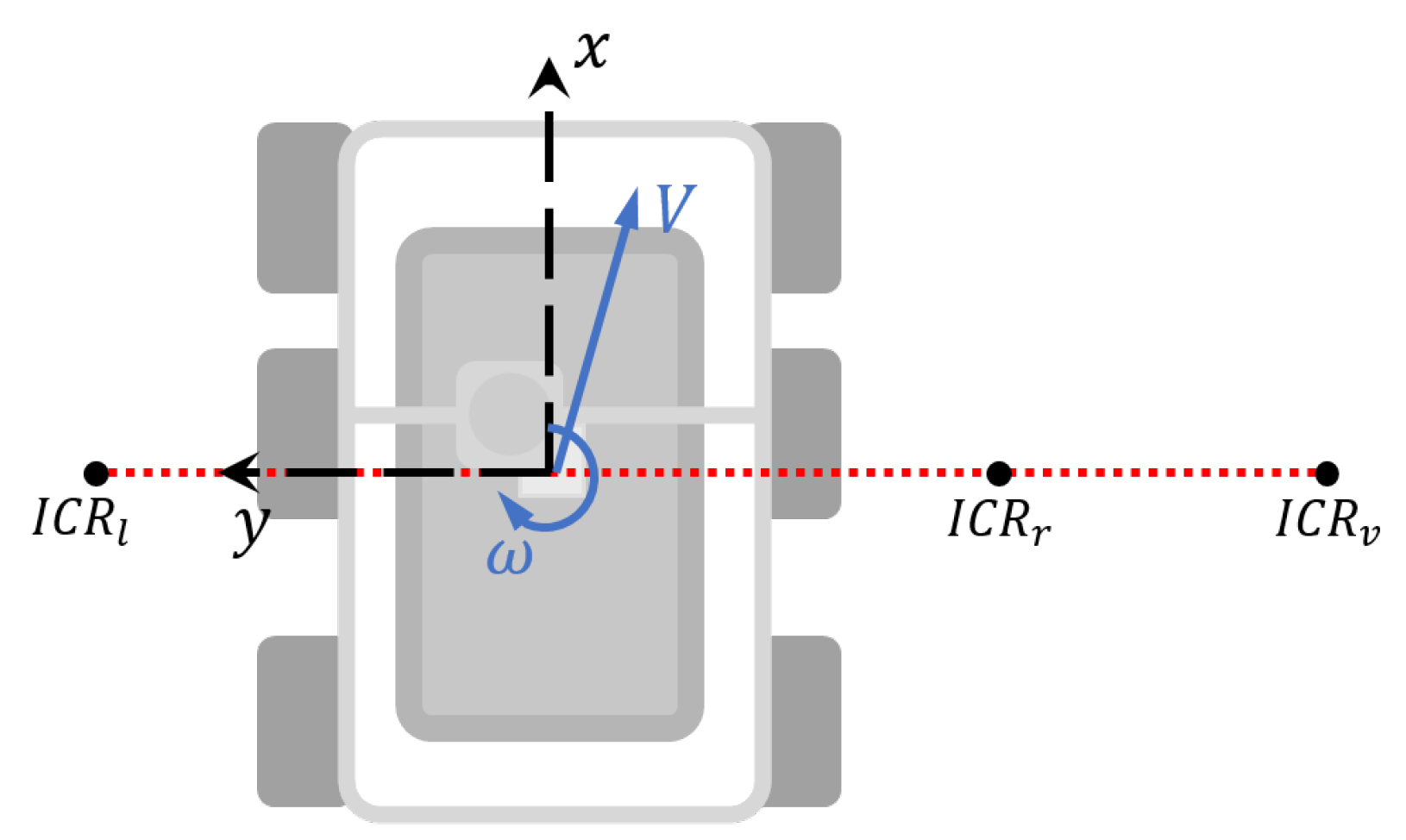

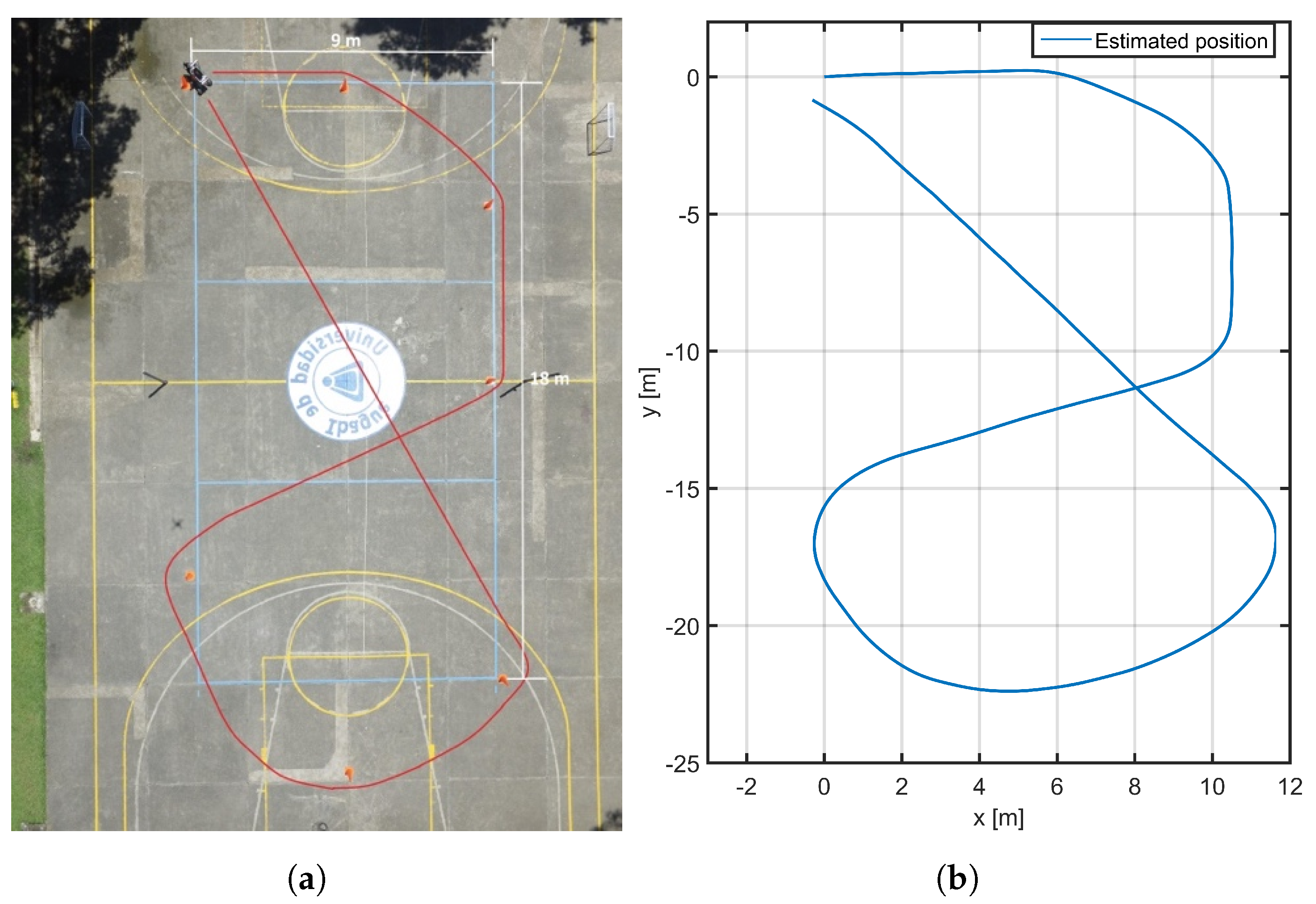

2.2. Pose Estimation

2.3. Lidar Mapping

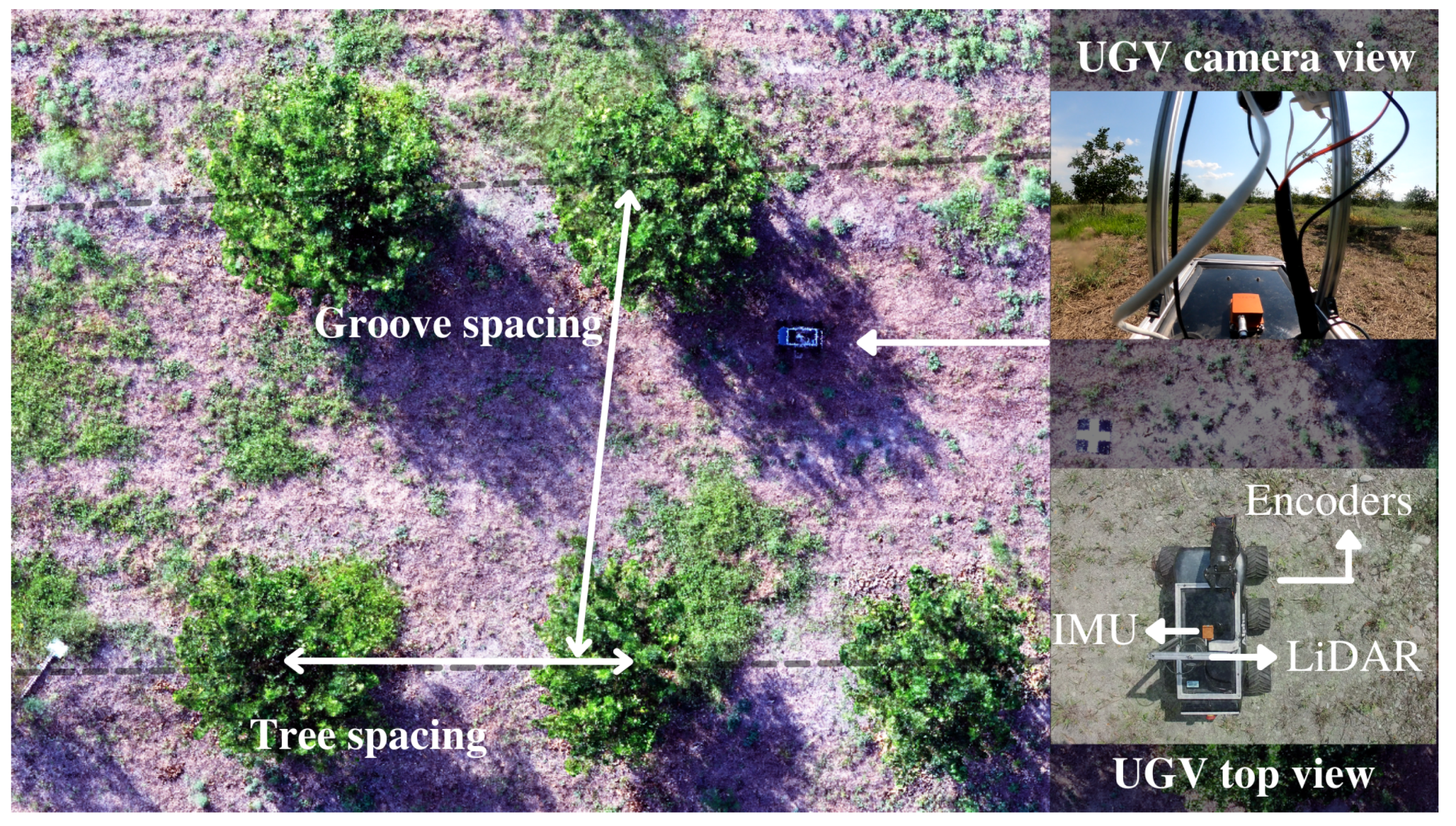

2.4. Field Data Collection

2.5. Crop Parameters Estimation

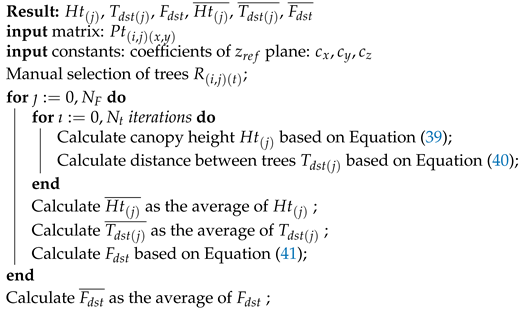

Algorithm 1: Crop parameters determination

3. Results

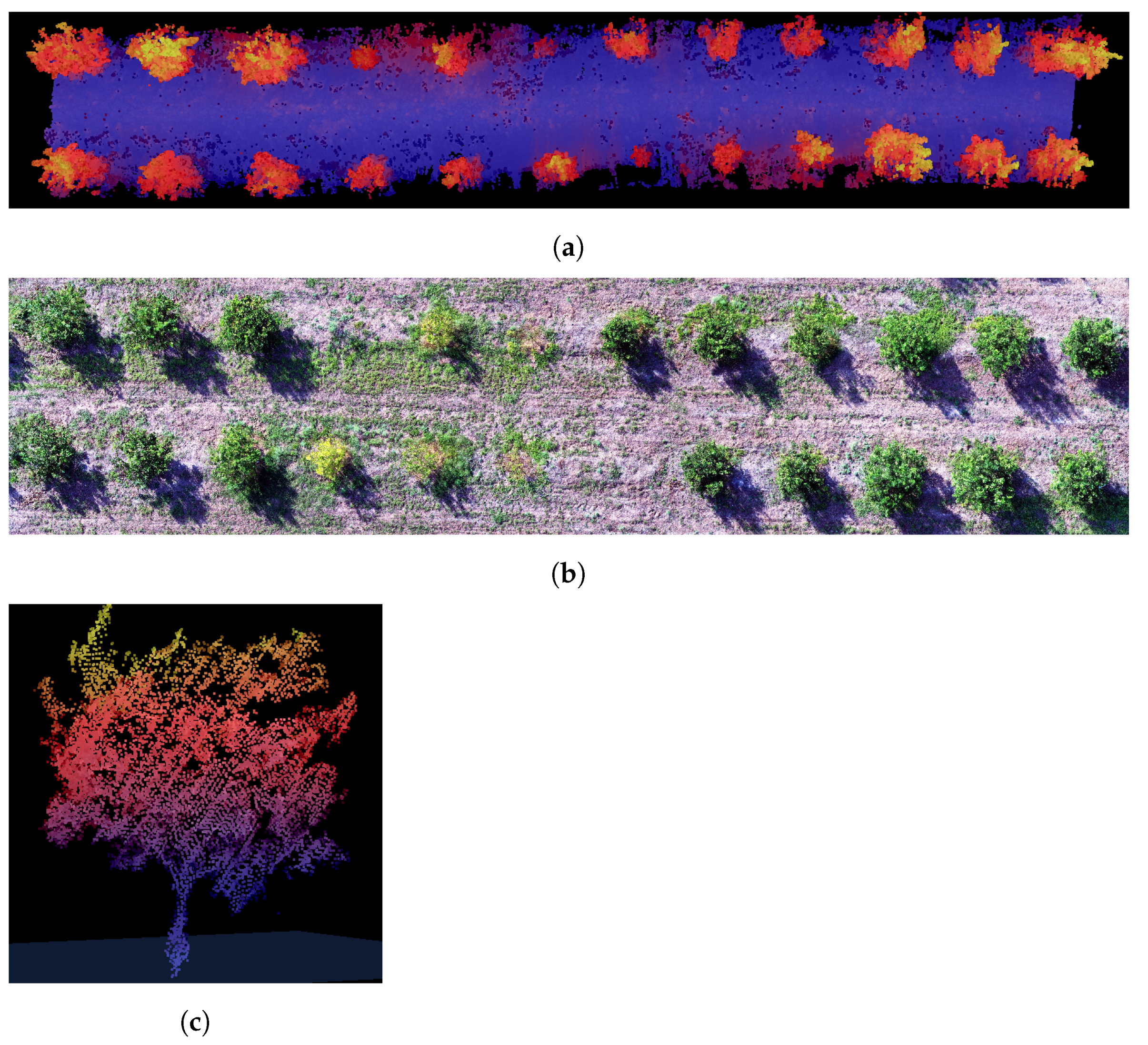

3.1. Generated Point Clouds

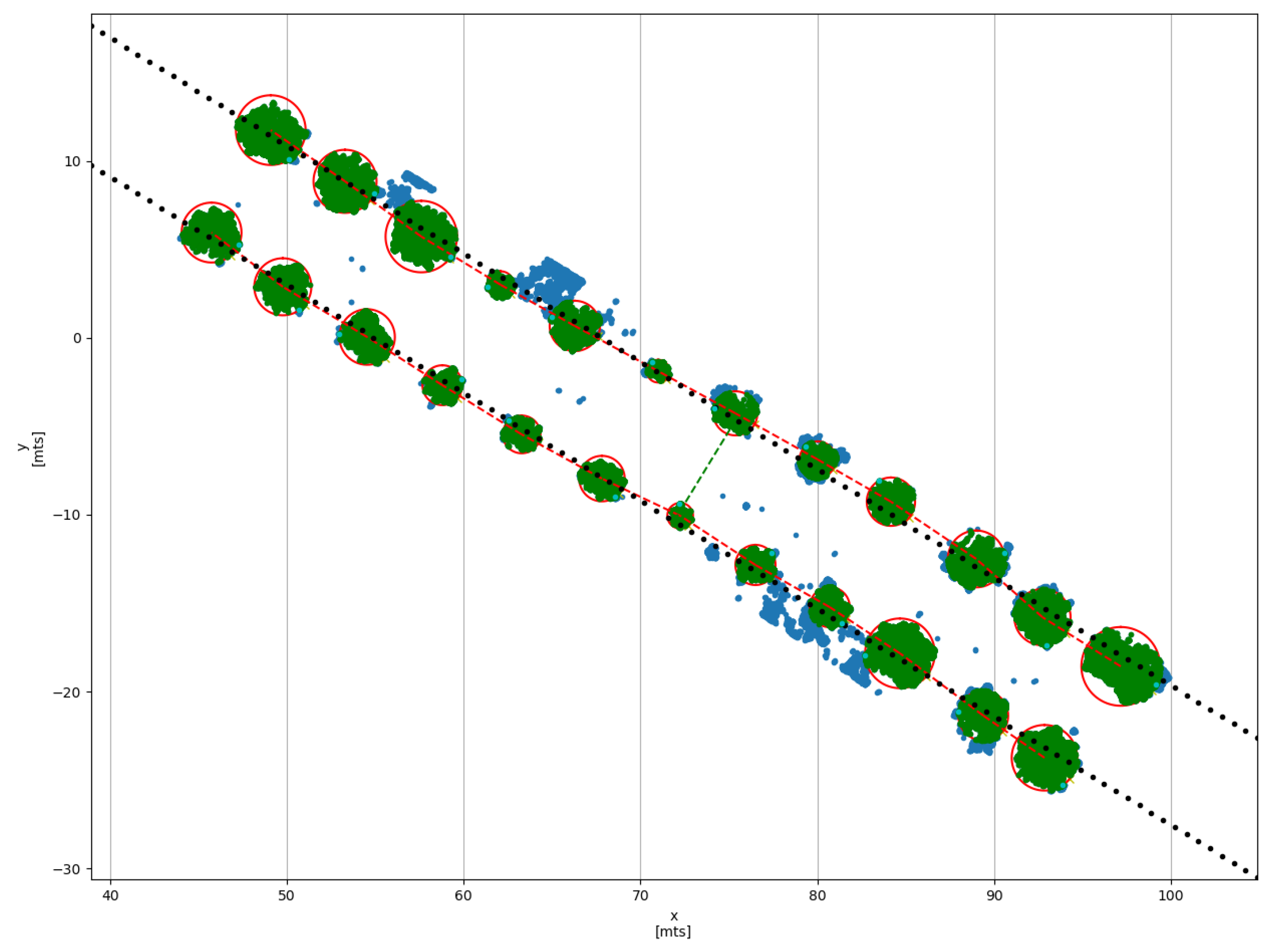

3.2. Crop Parameters Estimation

- Canopy height. Measures the height of the canopy and colors each point within the region green.

- Crown diameter. From the maximum radius that the user defines graphically with the red rings, the system records the canopy diameter of the corresponding tree.

- Distance between trees. Measures the tree-to-tree distance within the same furrow and draws a dashed red line between the tree centers.

- Distance between furrows. Measures the furrow-to-furrow distance by fitting a first-degree polynomial (a straight line) to each furrow, drawn as a dotted black line. The average distance between the two fitted lines over the evaluated range is shown as a dashed green line.
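The four measurements listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' Algorithm 1: the cloud is an N×3 NumPy array of (x, y, z) coordinates in meters, the ground elevation is known, tree centers and ring radii come from the user, and furrows run roughly along the x axis so that each can be fitted as y = m·x + c. The crown diameter is assumed to be twice the largest horizontal distance from the tree center among canopy points inside the ring, and the canopy threshold `min_z` is hypothetical. All function names are illustrative.

```python
import numpy as np


def _in_ring(points, center_xy, radius):
    """Mask of points whose horizontal (x, y) distance to center_xy is <= radius."""
    d = np.hypot(points[:, 0] - center_xy[0], points[:, 1] - center_xy[1])
    return d <= radius


def canopy_height(points, center_xy, radius, ground_z=0.0):
    """Tallest point inside the ring, measured above an assumed ground elevation.

    Also returns the mask of points inside the ring (the ones a viewer would color green).
    """
    mask = _in_ring(points, center_xy, radius)
    if not mask.any():
        raise ValueError("no points inside the ring")
    return float(points[mask, 2].max() - ground_z), mask


def crown_diameter(points, center_xy, radius, min_z):
    """Twice the largest horizontal distance from the tree center among canopy
    points (z >= min_z, a hypothetical canopy threshold) inside the ring."""
    sel = _in_ring(points, center_xy, radius) & (points[:, 2] >= min_z)
    if not sel.any():
        raise ValueError("no canopy points inside the ring")
    d = np.hypot(points[sel, 0] - center_xy[0], points[sel, 1] - center_xy[1])
    return float(2.0 * d.max())


def tree_distance(center_a, center_b):
    """Horizontal distance between two tree centers in the same furrow."""
    return float(np.hypot(center_b[0] - center_a[0], center_b[1] - center_a[1]))


def furrow_distance(centers_a, centers_b, x_min, x_max, n_samples=100):
    """Mean distance between two furrow lines over the evaluated range [x_min, x_max].

    Each furrow is fitted with a first-degree polynomial y = m*x + c through its
    tree centers; the perpendicular distance from points sampled on line A to
    line B is averaged.
    """
    a = np.asarray(centers_a, dtype=float)
    b = np.asarray(centers_b, dtype=float)
    m_a, c_a = np.polyfit(a[:, 0], a[:, 1], 1)
    m_b, c_b = np.polyfit(b[:, 0], b[:, 1], 1)
    xs = np.linspace(x_min, x_max, n_samples)
    ys = m_a * xs + c_a
    # Point-to-line distance from (xs, ys) to the line m_b*x - y + c_b = 0.
    return float(np.mean(np.abs(m_b * xs - ys + c_b) / np.hypot(m_b, 1.0)))


if __name__ == "__main__":
    cloud = np.array([[0.0, 0.0, 0.5], [0.3, 0.1, 2.0], [-1.2, 0.0, 2.5],
                      [0.0, 0.9, 3.2], [4.0, 4.0, 1.0]])
    height, green = canopy_height(cloud, (0.0, 0.0), radius=1.5)
    print(f"canopy height: {height:.2f} m ({int(green.sum())} points in ring)")
    print(f"crown diameter: {crown_diameter(cloud, (0.0, 0.0), 1.5, min_z=1.0):.2f} m")
    print(f"tree distance: {tree_distance((0.0, 0.0), (5.0, 0.0)):.2f} m")
    rows = furrow_distance([(0, 0), (5, 0), (10, 0)], [(0, 8), (5, 8), (10, 8)], 0, 10)
    print(f"furrow distance: {rows:.2f} m")
```

On the synthetic data this reports a canopy height of 3.20 m (4 points in the ring), a crown diameter of 2.40 m, a tree spacing of 5.00 m and a furrow spacing of 8.00 m. Averaging the perpendicular distance over a range, rather than taking it at one point, keeps the estimate meaningful when the two fitted lines are not exactly parallel.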

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Khanal, S.; KC, K.; Fulton, J.P.; Shearer, S.; Ozkan, E. Remote Sensing in Agriculture—Accomplishments, Limitations, and Opportunities. Remote Sens. 2020, 12, 3783.

2. Mallet, C.; Bretar, F. Full-waveform topographic lidar: State-of-the-art. ISPRS J. Photogramm. Remote Sens. 2009, 64, 1–16.

3. Jin, S.; Sun, X.; Wu, F.; Su, Y.; Li, Y.; Song, S.; Xu, K.; Ma, Q.; Baret, F.; Jiang, D.; et al. Lidar sheds new light on plant phenomics for plant breeding and management: Recent advances and future prospects. ISPRS J. Photogramm. Remote Sens. 2021, 171, 202–223.

4. Jin, S.; Su, Y.; Wu, F.; Pang, S.; Gao, S.; Hu, T.; Liu, J.; Guo, Q. Stem-Leaf Segmentation and Phenotypic Trait Extraction of Individual Maize Using Terrestrial LiDAR Data. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1336–1346.

5. Qiu, Q.; Sun, N.; Bai, H.; Wang, N.; Fan, Z.; Wang, Y.; Meng, Z.; Li, B.; Cong, Y. Field-based high-throughput phenotyping for maize plant using 3d LIDAR point cloud generated with a “phenomobile”. Front. Plant Sci. 2019, 10, 554.

6. Guo, T.; Fang, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Chen, Q.; Qiu, X.; Yao, X. Detection of wheat height using optimized multi-scan mode of LiDAR during the entire growth stages. Comput. Electron. Agric. 2019, 165, 104959.

7. Yuan, H.; Bennett, R.S.; Wang, N.; Chamberlin, K.D. Development of a peanut canopy measurement system using a ground-based lidar sensor. Front. Plant Sci. 2019, 10, 203.

8. Tsoulias, N.; Paraforos, D.S.; Fountas, S.; Zude-Sasse, M. Estimating canopy parameters based on the stem position in apple trees using a 2D lidar. Agronomy 2019, 9, 740.

9. Xu, L.; Xu, T.; Li, X. Corn Seedling Monitoring Using 3-D Point Cloud Data from Terrestrial Laser Scanning and Registered Camera Data. IEEE Geosci. Remote Sens. Lett. 2020, 17, 137–141.

10. Berk, P.; Stajnko, D.; Belsak, A.; Hocevar, M. Digital evaluation of leaf area of an individual tree canopy in the apple orchard using the LIDAR measurement system. Comput. Electron. Agric. 2020, 169, 105158.

11. Wu, D.; Phinn, S.; Johansen, K.; Robson, A.; Muir, J.; Searle, C. Estimating changes in leaf area, leaf area density, and vertical leaf area profile for Mango, Avocado, and Macadamia tree crowns using Terrestrial Laser Scanning. Remote Sens. 2018, 10, 1750.

12. Schulze-Brüninghoff, D.; Hensgen, F.; Wachendorf, M.; Astor, T. Methods for LiDAR-based estimation of extensive grassland biomass. Comput. Electron. Agric. 2019, 156, 693–699.

13. Wang, H.; Lin, Y.; Wang, Z.; Yao, Y.; Zhang, Y.; Wu, L. Validation of a low-cost 2D laser scanner in development of a more-affordable mobile terrestrial proximal sensing system for 3D plant structure phenotyping in indoor environment. Comput. Electron. Agric. 2017, 140, 180–189.

14. Gené-Mola, J.; Gregorio, E.; Auat Cheein, F.; Guevara, J.; Llorens, J.; Sanz-Cortiella, R.; Escolà, A.; Rosell-Polo, J.R. Fruit detection, yield prediction and canopy geometric characterization using LiDAR with forced air flow. Comput. Electron. Agric. 2020, 168, 105121.

15. Lemaire, T.; Lacroix, S.; Solà, J. A practical 3D bearing-only SLAM algorithm. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005.

16. Chakraborty, M.; Khot, L.R.; Sankaran, S.; Jacoby, P.W. Evaluation of mobile 3D light detection and ranging based canopy mapping system for tree fruit crops. Comput. Electron. Agric. 2019, 158, 284–293.

17. Malambo, L.; Popescu, S.C.; Horne, D.W.; Pugh, N.A.; Rooney, W.L. Automated detection and measurement of individual sorghum panicles using density-based clustering of terrestrial lidar data. ISPRS J. Photogramm. Remote Sens. 2019, 149, 1–13.

18. Nguyen, P.; Badenhorst, P.E.; Shi, F.; Spangenberg, G.C.; Smith, K.F.; Daetwyler, H.D. Design of an Unmanned Ground Vehicle and LiDAR Pipeline for the High-Throughput Phenotyping of Biomass in Perennial Ryegrass. Remote Sens. 2020, 13, 20.

19. Siebers, M.H.; Edwards, E.J.; Jimenez-Berni, J.A.; Thomas, M.R.; Salim, M.; Walker, R.R. Fast phenomics in vineyards: Development of grover, the grapevine rover, and LiDAR for assessing grapevine traits in the field. Sensors 2018, 18, 2924.

20. Rosell-Polo, J.R.; Gregorio, E.; Gene, J.; Llorens, J.; Torrent, X.; Arno, J.; Escola, A. Kinect v2 sensor-based mobile terrestrial laser scanner for agricultural outdoor applications. IEEE/ASME Trans. Mechatronics 2017, 22, 2420–2427.

21. Dassault Systèmes. SOLIDWORKS [Computer Software]. ©2002–2021. Available online: https://www.solidworks.com/ (accessed on 1 December 2021).

22. De Keyser, R.; Ionescu, C. FRtool: A frequency response tool for CACSD in Matlab. In Proceedings of the 2006 IEEE Conference on Computer Aided Control System Design, 2006 IEEE International Conference on Control Applications, 2006 IEEE International Symposium on Intelligent Control, Munich, Germany, 4–6 October 2006; pp. 2275–2280.

23. Huskić, G.; Buck, S.; Herrb, M.; Lacroix, S.; Zell, A. High-speed path following control of skid-steered vehicles. Int. J. Robot. Res. 2019, 38, 1124–1148.

24. Martínez, J.L.; Mandow, A.; Morales, J.; Pedraza, S.; García-Cerezo, A. Approximating kinematics for tracked mobile robots. Int. J. Robot. Res. 2005, 24, 867–878.

25. Mandow, A.; Martínez, J.L.; Morales, J.; Blanco, J.L.; García-Cerezo, A.; González, J. Experimental kinematics for wheeled skid-steer mobile robots. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 1222–1227.

26. Murcia, H.F.; Monroy, M.F.; Mora, L.F. 3D scene reconstruction based on a 2D moving LiDAR. In Communications in Computer and Information Science; Springer: Cham, Switzerland, 2018; Volume 942.

27. Barrero, O.; Tilaguy, S.; Nova, Y.M. Outdoors Trajectory Tracking Control for a Four Wheel Skid-Steering Vehicle. In Proceedings of the 2018 IEEE 2nd Colombian Conference on Robotics and Automation, Barranquilla, Colombia, 1–3 November 2018.

28. Pix4D. Professional Photogrammetry and Drone Mapping Software [Computer Software]. Available online: https://www.pix4d.com/ (accessed on 1 December 2021).

29. CloudCompare (Version 2.11.1) [GPL Software]. 2021. Available online: http://www.cloudcompare.org/ (accessed on 1 December 2021).

30. Torresan, C.; Berton, A.; Carotenuto, F.; Chiavetta, U.; Miglietta, F.; Zaldei, A.; Gioli, B. Development and Performance Assessment of a Low-Cost UAV Laser Scanner System (LasUAV). Remote Sens. 2018, 10, 1094.

31. Geng, B.; Ge, Y.; Scoby, D.; Leavitt, B.; Stoerger, V.; Kirchgessner, N.; Irmak, S.; Graef, G.; Schnable, J.; Awada, T. NU-Spidercam: A large-scale, cable-driven, integrated sensing and robotic system for advanced phenotyping, remote sensing, and agronomic research. Comput. Electron. Agric. 2019, 160, 71–81.

32. Virlet, N.; Sabermanesh, K.; Sadeghi-Tehran, P.; Hawkesford, M.J. Field Scanalyzer: An automated robotic field phenotyping platform for detailed crop monitoring. Funct. Plant Biol. 2016, 44, 143–153.

33. Walter, J.D.C.; Edwards, J.; McDonald, G.; Kuchel, H. Estimating Biomass and Canopy Height With LiDAR for Field Crop Breeding. Front. Plant Sci. 2019, 10, 1145.

34. Lumme, J.; Karjalainen, M.; Kaartinen, H.; Kukko, A.; Hyyppä, J.; Hyyppä, H.; Jaakkola, A.; Kleemola, J. Terrestrial laser scanning of agricultural crops. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 563–566.

35. Bargoti, S.; Underwood, J.P. Image Segmentation for Fruit Detection and Yield Estimation in Apple Orchards. J. Field Robot. 2017, 34, 1039–1060.

36. Ten Harkel, J.; Bartholomeus, H.; Kooistra, L. Biomass and Crop Height Estimation of Different Crops Using UAV-Based Lidar. Remote Sens. 2019, 12, 17.

37. Escolà, A.; Martínez-Casasnovas, J.A.; Rufat, J.; Arnó, J.; Arbonés, A.; Sebé, F.; Pascual, M.; Gregorio, E.; Rosell-Polo, J.R. Mobile terrestrial laser scanner applications in precision fruticulture/horticulture and tools to extract information from canopy point clouds. Precis. Agric. 2017, 18, 111–132.

38. Andújar, D.; Dorado, J.; Bengochea-Guevara, J.M.; Conesa-Muñoz, J.; Fernández-Quintanilla, C.; Ribeiro, Á. Influence of wind speed on RGB-D images in tree plantations. Sensors (Switzerland) 2017, 17, 914.

39. Rosell, J.R.; Sanz, R. A review of methods and applications of the geometric characterization of tree crops in agricultural activities. Comput. Electron. Agric. 2012, 81, 124–141.

40. Auat Cheein, F.A.; Guivant, J.; Sanz, R.; Escolà, A.; Yandún, F.; Torres-Torriti, M.; Rosell-Polo, J.R. Real-time approaches for characterization of fully and partially scanned canopies in groves. Comput. Electron. Agric. 2015, 118, 361–371.

41. Lin, K.; Lyu, M.; Jiang, M.; Chen, Y.; Li, Y.; Chen, G.; Xie, J.; Yang, Y. Improved allometric equations for estimating biomass of the three Castanopsis carlesii H. forest types in subtropical China. New For. 2017, 48, 115–135.

42. Rosell, J.R.; Llorens, J.; Sanz, R.; Arnó, J.; Ribes-Dasi, M.; Masip, J.; Escolà, A.; Camp, F.; Solanelles, F.; Gràcia, F.; et al. Obtaining the three-dimensional structure of tree orchards from remote 2D terrestrial LIDAR scanning. Agric. For. Meteorol. 2009, 149, 1505–1515.

| Application | Crop | Sensing Device | Localization Device | Platform | Ref. |
|---|---|---|---|---|---|
| Fruit detection, yield prediction, and canopy characterization | Apple | 3D Velodyne VLP-16 | GPS1200+ Leica RTK-GNSS | MTLS | [14] |
| Canopy mapping | Apple | 3D Velodyne VLP-16 | GPS 3D Robotics | Tractor | [16] |
| Panicle measurement | Sorghum | 3D FARO Focus X330 | RTK GNSS | Tractor | [17] |
| Precision fruticulture and horticulture | Vineyards | 3D MS Kinect V2 | RTK GNSS | UGV | [20] |
| Fast phenotyping | Vineyards | 2D SICK LMS-400 | GPS/IMU - Advanced Navigation, RTK | UGV | [19] |
| High-throughput phenotyping | Maize | 3D Velodyne HDL64-S3 | GNSS receiver with two antennas | UGV | [5] |
| High-throughput phenotyping | Ryegrass | 2D SICK LMS-400 | Here+ V2 RTK GPS | UGV | [18] |

| Variables | Values | Units |
|---|---|---|
| | | rad |
| | 20 | kg |
| | 5 | kg |
| | 0.7 | |
| | 0.5 | |
| | 0.7 | - |
| SF | 70 | % |
| g | 9.8 | m/s² |

| Elements | Power [W] |
|---|---|
| Computer (Jetson TK1) | 3 |
| USB hub TP-Link AC750 wireless router | 12 |
| LiDAR UTM-30LX-EW | 8.5 |
| Switch TP-Link | 4 |
| Servo motor Dynamixel AX-12A | 13.5 |
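Assuming the Power column is in watts, the table adds up to the electronics load the battery has to supply. The sketch below totals it and estimates runtime for a hypothetical battery; the 120 Wh capacity and the 0.8 usable fraction are illustrative assumptions, not the robot's specifications, and only the elements listed in the table are included.

```python
# Loads from the power table (assumed to be in watts).
POWER_W = {
    "Computer (Jetson TK1)": 3.0,
    "USB hub TP-Link AC750 wireless router": 12.0,
    "LiDAR UTM-30LX-EW": 8.5,
    "Switch TP-Link": 4.0,
    "Servo motor Dynamixel AX-12A": 13.5,
}


def total_power(loads: dict[str, float]) -> float:
    """Sum of the nominal power draw of the listed loads, in W."""
    return sum(loads.values())


def runtime_hours(battery_wh: float, loads: dict[str, float],
                  usable_fraction: float = 0.8) -> float:
    """Estimated runtime in hours for a battery of the given energy (Wh).

    usable_fraction is a hypothetical derating for depth of discharge and
    conversion losses; it is not a value from the article.
    """
    if battery_wh <= 0:
        raise ValueError("battery energy must be positive")
    return battery_wh * usable_fraction / total_power(loads)


if __name__ == "__main__":
    print(f"Total load: {total_power(POWER_W):.1f} W")  # Total load: 41.0 W
    # Hypothetical 12 V, 10 Ah pack (120 Wh); not the robot's actual battery.
    print(f"Estimated runtime: {runtime_hours(120.0, POWER_W):.2f} h")
```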

| Parameter | Value | Units |
|---|---|---|
| k | 0.1667 | rad/s |
| | 5.003 | rad/s |
| | 0.9033 | - |
| | 0.1 | s |
| Robustness | >0.8 | - |
| Settling time | <2 | s |
| Overshoot | <0.5 | - |
| | 6.677 | rad/s |
| | 28.656 | rad/s |
| | 0.0734 | rad/s |
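The table lists time-domain design targets for the cruise controller (settling time below 2 s, overshoot below 0.5). The sketch below shows one way to check such targets in simulation: a discrete PI speed loop around a first-order plant, with the PI zero placed on the plant pole. Everything numeric except those two targets is hypothetical: the plant gain and time constant, the PI gains, and the 0.1 s sample period (we assume the table's 0.1 s entry is the sample time). The overshoot limit is read as a fraction of the setpoint.

```python
import math


def simulate_pi_step(kp, ki, plant_gain, plant_tau, ts=0.1, t_end=5.0, setpoint=1.0):
    """Closed-loop step response of a discrete PI speed loop around a first-order plant.

    The plant K/(tau*s + 1) is discretized with a zero-order hold:
        y[k+1] = a*y[k] + K*(1 - a)*u[k],  with a = exp(-ts/tau),
    and the PI law uses a forward-Euler integral of the error.
    Returns the output sampled at t = 0, ts, 2*ts, ...
    """
    a = math.exp(-ts / plant_tau)
    b = plant_gain * (1.0 - a)
    y, integral = 0.0, 0.0
    ys = []
    for _ in range(int(round(t_end / ts))):
        ys.append(y)
        e = setpoint - y
        integral += e * ts
        u = kp * e + ki * integral
        y = a * y + b * u
    return ys


def step_metrics(ys, ts, setpoint=1.0, band=0.02):
    """Overshoot (fraction of the setpoint) and settling time (s) into a +/-band."""
    overshoot = max(0.0, (max(ys) - setpoint) / setpoint)
    settling = 0.0
    for k, y in enumerate(ys):
        if abs(y - setpoint) > band * abs(setpoint):
            settling = (k + 1) * ts  # last sample still outside the band
    return overshoot, settling


if __name__ == "__main__":
    TS = 0.1             # assumed sample period [s]
    K, TAU = 1.0, 0.5    # hypothetical plant gain [-] and time constant [s]
    KP = 2.0             # hypothetical proportional gain
    KI = KP / TAU        # cancel the plant pole with the PI zero
    ys = simulate_pi_step(KP, KI, K, TAU, ts=TS)
    ov, st = step_metrics(ys, TS)
    print(f"overshoot = {ov:.3f}, 2% settling time = {st:.1f} s")
```

Placing the PI zero on the plant pole makes the continuous-time loop first order, so the response approaches the setpoint without overshoot; with these hypothetical values the discrete loop enters the 2 % band in about 1 s, inside the tabulated limit.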

| Parameter | Reference [m] | Obtained [m] | RMSE [m] | | Ee [%] |
|---|---|---|---|---|---|
| | - | - | 0.308 | 0.732 | 9.282 |
| | - | - | 0.457 | 0.637 | 17.294 |
| | 5.087 | 5.116 | - | - | 0.575 |
| | 8.294 | 7.759 | - | - | 6.443 |
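The table reports an RMSE for two parameters and a relative error Ee for all four. The sketch below computes both metrics, assuming Ee is the absolute difference between the obtained and reference values divided by the reference, in percent (the exact definition used in the article is not shown here). Applied to the two rows with single reference and obtained values, it gives about 0.57 % and 6.45 %, consistent with the reported 0.575 % and 6.443 % up to the rounding of the tabulated distances.

```python
import math
from typing import Sequence


def rmse(reference: Sequence[float], estimated: Sequence[float]) -> float:
    """Root-mean-square error between paired reference and estimated values."""
    if len(reference) != len(estimated) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    sq = sum((e - r) ** 2 for r, e in zip(reference, estimated))
    return math.sqrt(sq / len(reference))


def relative_error_pct(reference: float, estimated: float) -> float:
    """Absolute error relative to the reference value, in percent (assumed definition of Ee)."""
    return abs(estimated - reference) / abs(reference) * 100.0


if __name__ == "__main__":
    # Rows of the table with a single (reference, obtained) pair, in meters.
    for ref, est in [(5.087, 5.116), (8.294, 7.759)]:
        print(f"Ee = {relative_error_pct(ref, est):.2f} %")  # 0.57 %, then 6.45 %
```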

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Murcia, H.F.; Tilaguy, S.; Ouazaa, S. Development of a Low-Cost System for 3D Orchard Mapping Integrating UGV and LiDAR. Plants 2021, 10, 2804. https://doi.org/10.3390/plants10122804
