Strawberry Fungal Leaf Scorch Disease Identification in Real-Time Strawberry Field Using Deep Learning Architectures

Abstract

1. Introduction

2. Materials and Methods

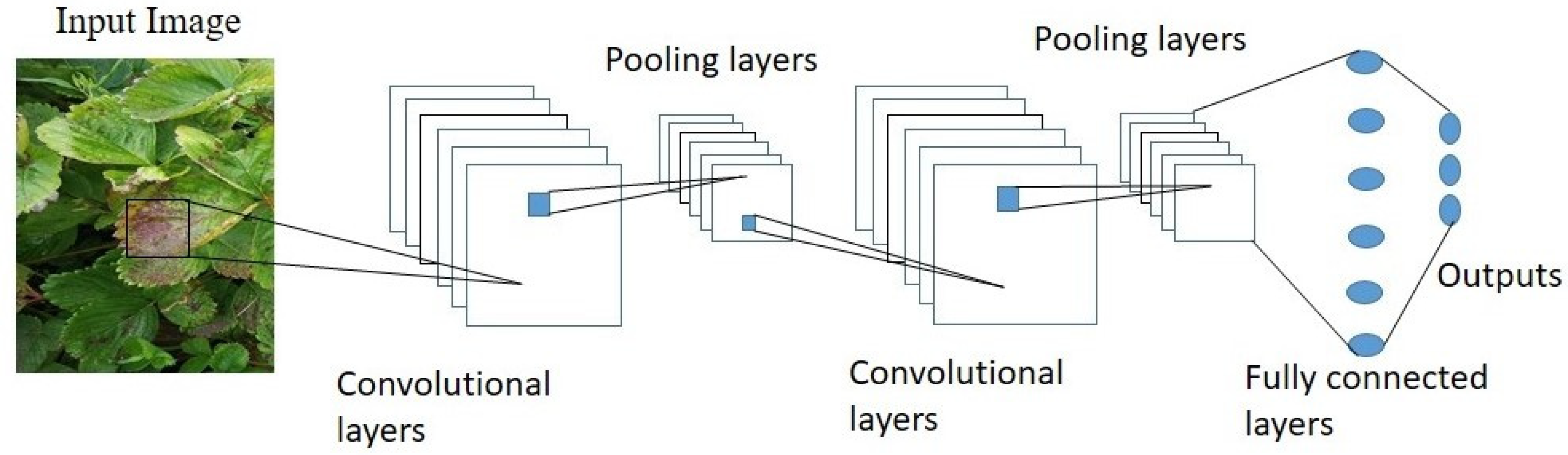

2.1. Convolutional Neural Network Models

2.1.1. AlexNet

2.1.2. VGG-16

2.1.3. SqueezeNet

2.1.4. EfficientNet

2.2. Dataset Description

2.3. CNN Models Training and Testing

2.4. CNN Model Evaluation Parameters

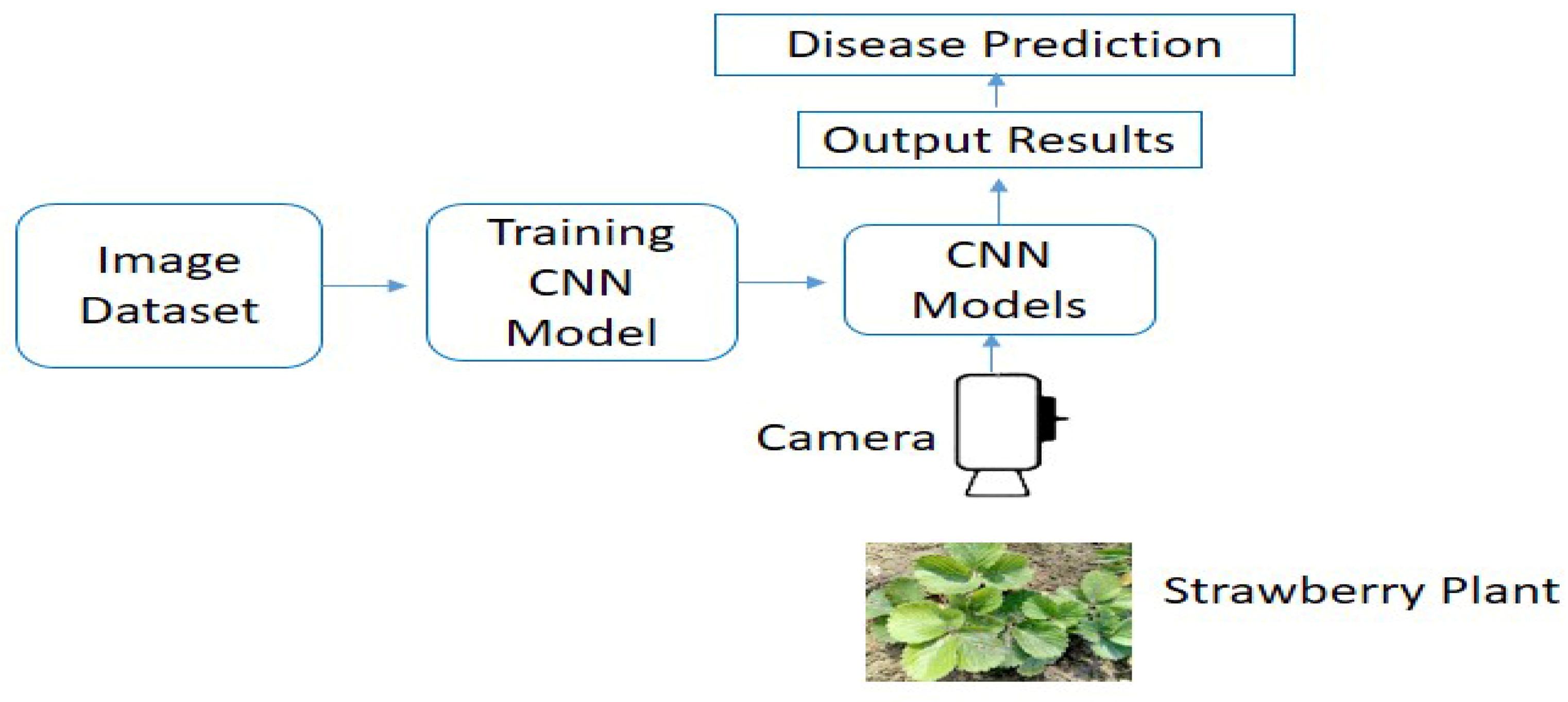

2.5. Performance Evaluation of Trained CNN Models for Real-Time Plant Disease Classification

Experimental Plan

3. Results

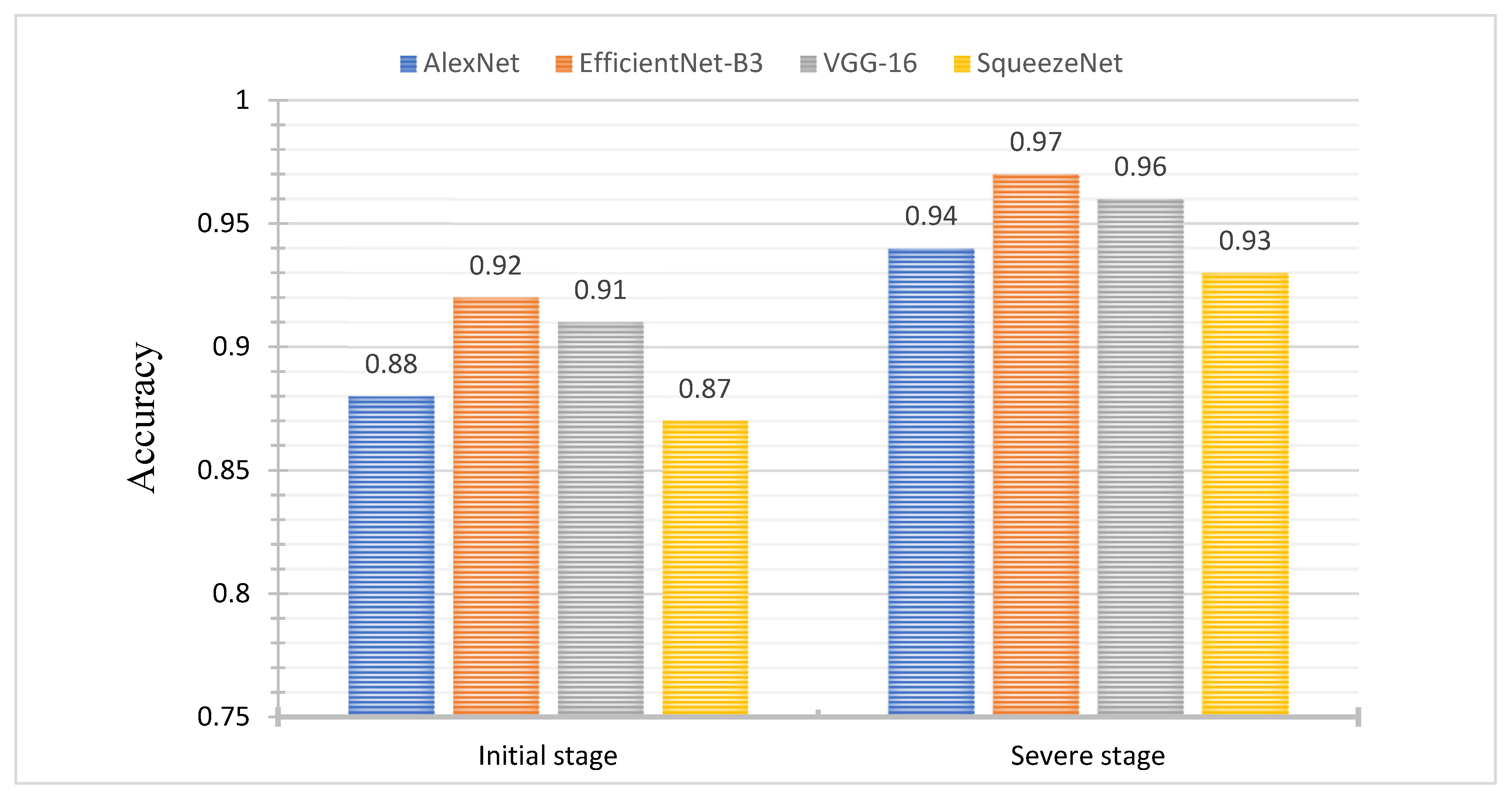

3.1. CNN Model Performance Results

CNN Model Inference Time

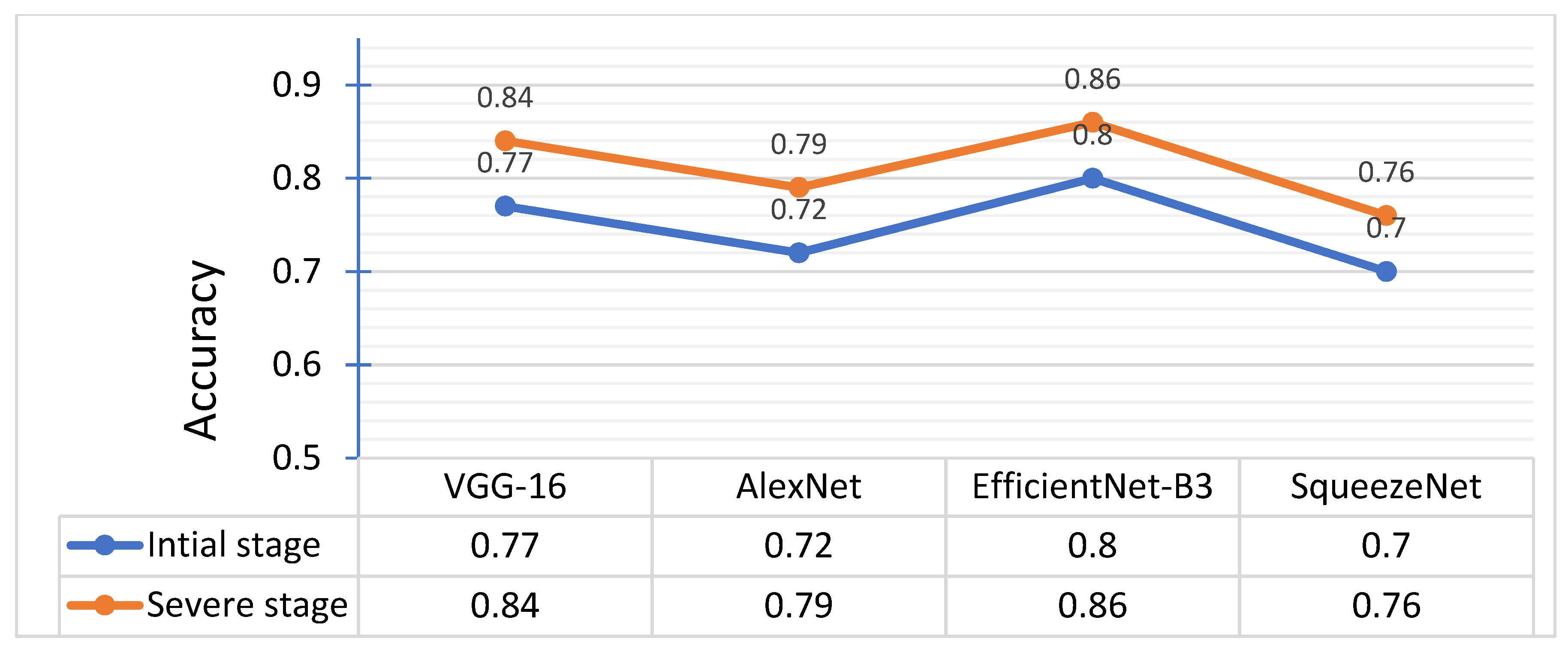

3.2. Performance of CNN Models in Real-Time Field Experiments

3.2.1. Field Experiment with Natural Lighting

3.2.2. Field Experiment with Controlled Sunlight Environment

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| Dataset Splitting Ratio | Subset | Total Leaf Images | Healthy Leaves | Initial Disease Stage | Severe Disease Stage |
|---|---|---|---|---|---|
| 80:20 | Training | 10,809 | 3595 | 3611 | 3603 |
| | Validation | 1756 | 580 | 590 | 585 |
| | Testing | 945 | 310 | 320 | 315 |
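The subset sizes in the dataset table can be checked against the stated 80:20 ratio. The sketch below uses counts transcribed from the table (the dictionary name `SPLITS` and both helper functions are our own, hypothetical names) to compute each subset's share of the 13,510 listed images and the class proportions within the training subset. On our reading, which the table does not state explicitly, the 80:20 ratio describes training versus the combined validation and testing images.

```python
# Image counts per subset, transcribed from the dataset table above.
SPLITS = {
    "Training":   {"total": 10809, "healthy": 3595, "initial": 3611, "severe": 3603},
    "Validation": {"total": 1756,  "healthy": 580,  "initial": 590,  "severe": 585},
    "Testing":    {"total": 945,   "healthy": 310,  "initial": 320,  "severe": 315},
}


def split_shares(splits: dict) -> dict:
    """Return each subset's fraction of all images, based on the listed totals."""
    grand_total = sum(s["total"] for s in splits.values())
    return {name: s["total"] / grand_total for name, s in splits.items()}


def class_shares(split: dict) -> dict:
    """Return the fraction of each class within one subset (class counts summed directly)."""
    n = split["healthy"] + split["initial"] + split["severe"]
    return {c: split[c] / n for c in ("healthy", "initial", "severe")}


if __name__ == "__main__":
    shares = split_shares(SPLITS)
    held_out = shares["Validation"] + shares["Testing"]
    print(f"training share: {shares['Training']:.3f}, held-out share: {held_out:.3f}")
    for cls, frac in class_shares(SPLITS["Training"]).items():
        print(f"  training {cls}: {frac:.3f}")
```

With these counts the training share is about 0.800, and each of the three classes makes up roughly one third of the training images, so the training subset is close to class-balanced.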

| Model | Disease Stage | Precision | Sensitivity/Recall | F1 Score | Inference Time (ms) |
|---|---|---|---|---|---|
| VGG-16 | Initial | 0.91 | 0.90 | 0.90 | 355 |
| VGG-16 | Severe | 0.96 | 0.95 | 0.95 | 349 |
| AlexNet | Initial | 0.88 | 0.89 | 0.88 | 109 |
| AlexNet | Severe | 0.94 | 0.93 | 0.93 | 111 |
| SqueezeNet | Initial | 0.87 | 0.88 | 0.87 | 76 |
| SqueezeNet | Severe | 0.93 | 0.92 | 0.92 | 66 |
| EfficientNet-B3 | Initial | 0.92 | 0.91 | 0.91 | 212 |
| EfficientNet-B3 | Severe | 0.98 | 0.97 | 0.97 | 222 |
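The F1 column in the table above is consistent with the standard definition of F1 as the harmonic mean of precision and recall, F1 = 2PR/(P + R). The sketch below recomputes F1 from the transcribed precision and recall values and converts each inference time into an approximate images-per-second figure, the quantity that matters for real-time use. The names `RESULTS`, `f1_score` and `frames_per_second` are hypothetical, and the throughput conversion assumes images are classified one at a time with no other overhead; the hardware on which the times were measured is not stated in this section.

```python
# (precision, recall, reported F1, inference time in ms) per model and disease stage,
# transcribed from the performance table above.
RESULTS = {
    ("VGG-16", "Initial"): (0.91, 0.90, 0.90, 355),
    ("VGG-16", "Severe"): (0.96, 0.95, 0.95, 349),
    ("AlexNet", "Initial"): (0.88, 0.89, 0.88, 109),
    ("AlexNet", "Severe"): (0.94, 0.93, 0.93, 111),
    ("SqueezeNet", "Initial"): (0.87, 0.88, 0.87, 76),
    ("SqueezeNet", "Severe"): (0.93, 0.92, 0.92, 66),
    ("EfficientNet-B3", "Initial"): (0.92, 0.91, 0.91, 212),
    ("EfficientNet-B3", "Severe"): (0.98, 0.97, 0.97, 222),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def frames_per_second(latency_ms: float) -> float:
    """Images per second if each image takes latency_ms and images run sequentially."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for (model, stage), (p, r, f1_reported, ms) in RESULTS.items():
        f1 = f1_score(p, r)
        print(f"{model:16s} {stage:8s} F1={f1:.3f} (reported {f1_reported:.2f}), "
              f"{frames_per_second(ms):5.1f} img/s")
```

The recomputed F1 values agree with the reported ones to within 0.005, consistent with the reported figures being truncated to two decimals. At the listed latencies, SqueezeNet would classify roughly 13 to 15 images per second, EfficientNet-B3 about 4.5 to 4.7, and VGG-16 fewer than 3.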

| Model | Disease Stage | Precision | Sensitivity | F1 Score |
|---|---|---|---|---|
| VGG-16 | Initial | 0.70 | 0.67 | 0.68 |
| VGG-16 | Severe | 0.80 | 0.78 | 0.78 |
| AlexNet | Initial | 0.66 | 0.63 | 0.64 |
| AlexNet | Severe | 0.75 | 0.73 | 0.73 |
| SqueezeNet | Initial | 0.64 | 0.61 | 0.62 |
| SqueezeNet | Severe | 0.73 | 0.71 | 0.71 |
| EfficientNet-B3 | Initial | 0.73 | 0.70 | 0.71 |
| EfficientNet-B3 | Severe | 0.83 | 0.81 | 0.81 |

| Model | Disease Stage | Precision | Sensitivity | F1 Score |
|---|---|---|---|---|
| VGG-16 | Initial | 0.78 | 0.75 | 0.76 |
| VGG-16 | Severe | 0.85 | 0.83 | 0.83 |
| AlexNet | Initial | 0.73 | 0.70 | 0.71 |
| AlexNet | Severe | 0.80 | 0.78 | 0.78 |
| SqueezeNet | Initial | 0.71 | 0.68 | 0.68 |
| SqueezeNet | Severe | 0.77 | 0.75 | 0.76 |
| EfficientNet-B3 | Initial | 0.80 | 0.77 | 0.78 |
| EfficientNet-B3 | Severe | 0.87 | 0.85 | 0.85 |
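The three model-results tables above report F1 separately for the initial and severe disease stages. As an illustrative summary of our own, not a metric the authors report, the sketch below averages each model's two stage-level F1 scores with equal weight and ranks the models within each table. The labels "results table 1" to "results table 3" are hypothetical names for the tables in their order of appearance, since they carry no captions here, and the equal weighting of the two stages is an assumption.

```python
# Per-model F1 scores as (initial stage, severe stage), transcribed from the
# three model-results tables above, in the order in which they appear.
F1_TABLES = {
    "results table 1": {
        "VGG-16": (0.90, 0.95), "AlexNet": (0.88, 0.93),
        "SqueezeNet": (0.87, 0.92), "EfficientNet-B3": (0.91, 0.97),
    },
    "results table 2": {
        "VGG-16": (0.68, 0.78), "AlexNet": (0.64, 0.73),
        "SqueezeNet": (0.62, 0.71), "EfficientNet-B3": (0.71, 0.81),
    },
    "results table 3": {
        "VGG-16": (0.76, 0.83), "AlexNet": (0.71, 0.78),
        "SqueezeNet": (0.68, 0.76), "EfficientNet-B3": (0.78, 0.85),
    },
}


def mean_f1(scores: tuple) -> float:
    """Unweighted mean of the stage-level F1 scores (equal weight per stage)."""
    return sum(scores) / len(scores)


def rank_by_mean_f1(table: dict) -> list:
    """Model names sorted from highest to lowest mean F1."""
    return sorted(table, key=lambda model: mean_f1(table[model]), reverse=True)


if __name__ == "__main__":
    for name, table in F1_TABLES.items():
        ranking = rank_by_mean_f1(table)
        summary = ", ".join(f"{m} ({mean_f1(table[m]):.3f})" for m in ranking)
        print(f"{name}: {summary}")
```

With the transcribed values, all three tables yield the same ordering: EfficientNet-B3 first, followed by VGG-16, AlexNet and SqueezeNet.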

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abbas, I.; Liu, J.; Amin, M.; Tariq, A.; Tunio, M.H. Strawberry Fungal Leaf Scorch Disease Identification in Real-Time Strawberry Field Using Deep Learning Architectures. Plants 2021, 10, 2643. https://doi.org/10.3390/plants10122643
