Accuracy Assessment of a UAV Block by Different Software Packages, Processing Schemes and Validation Strategies

Abstract

1. Introduction

2. Methods

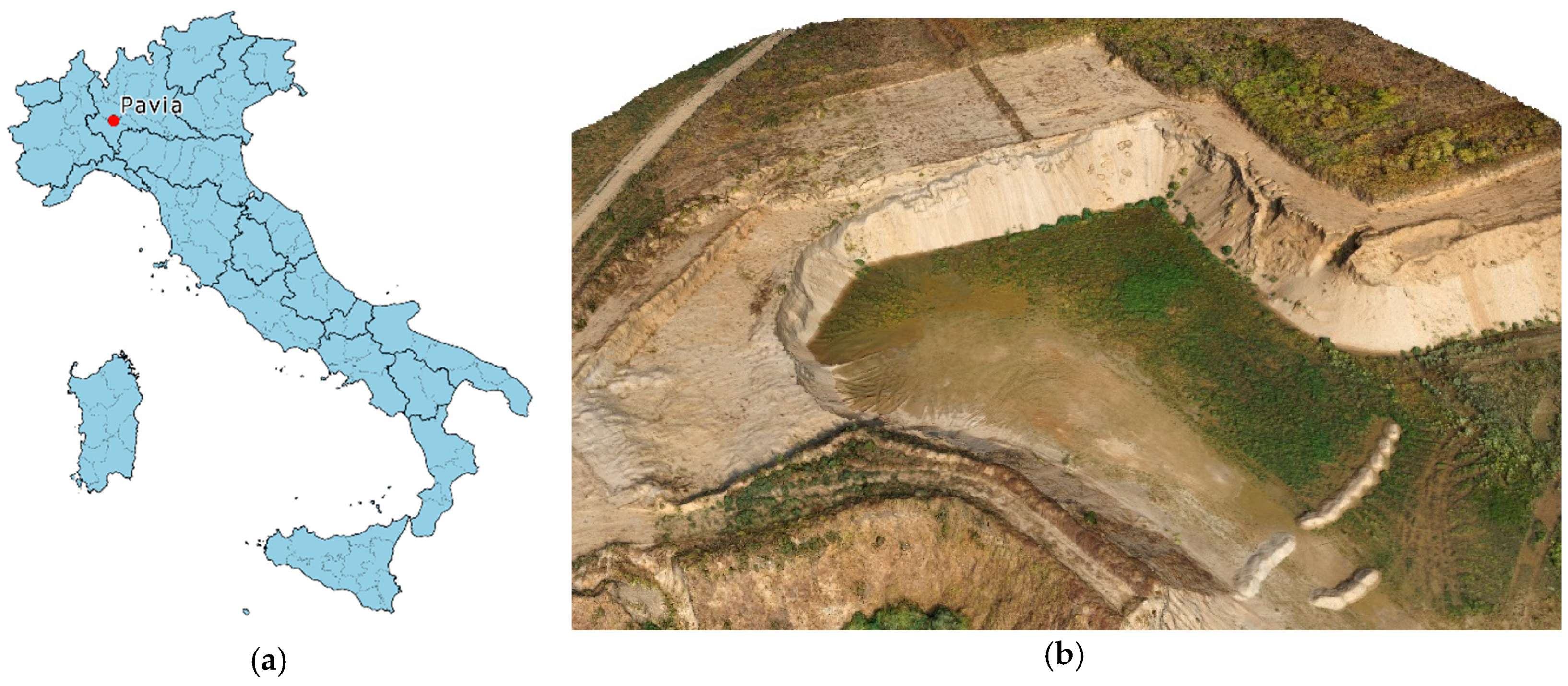

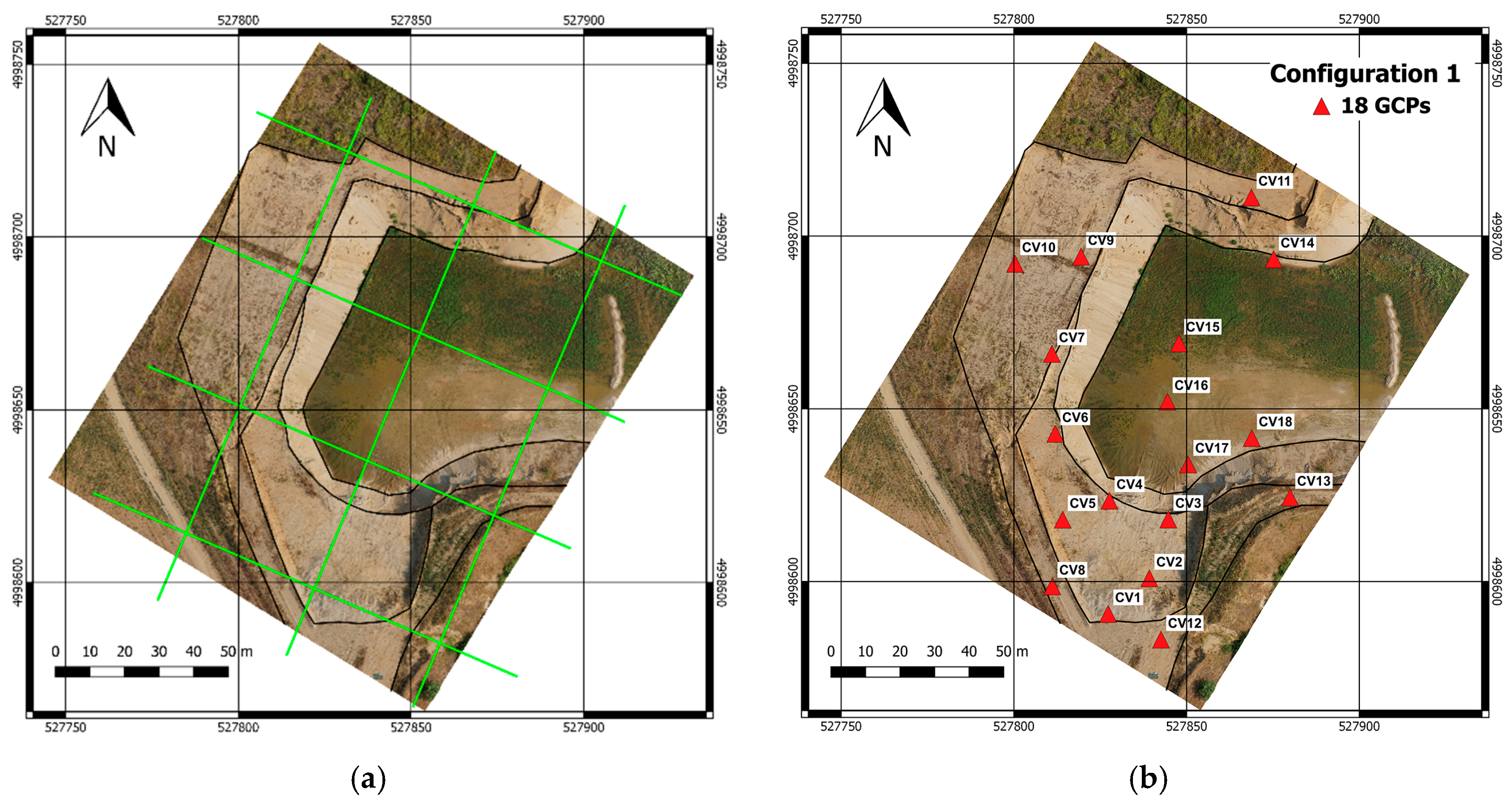

2.1. Data Acquisition

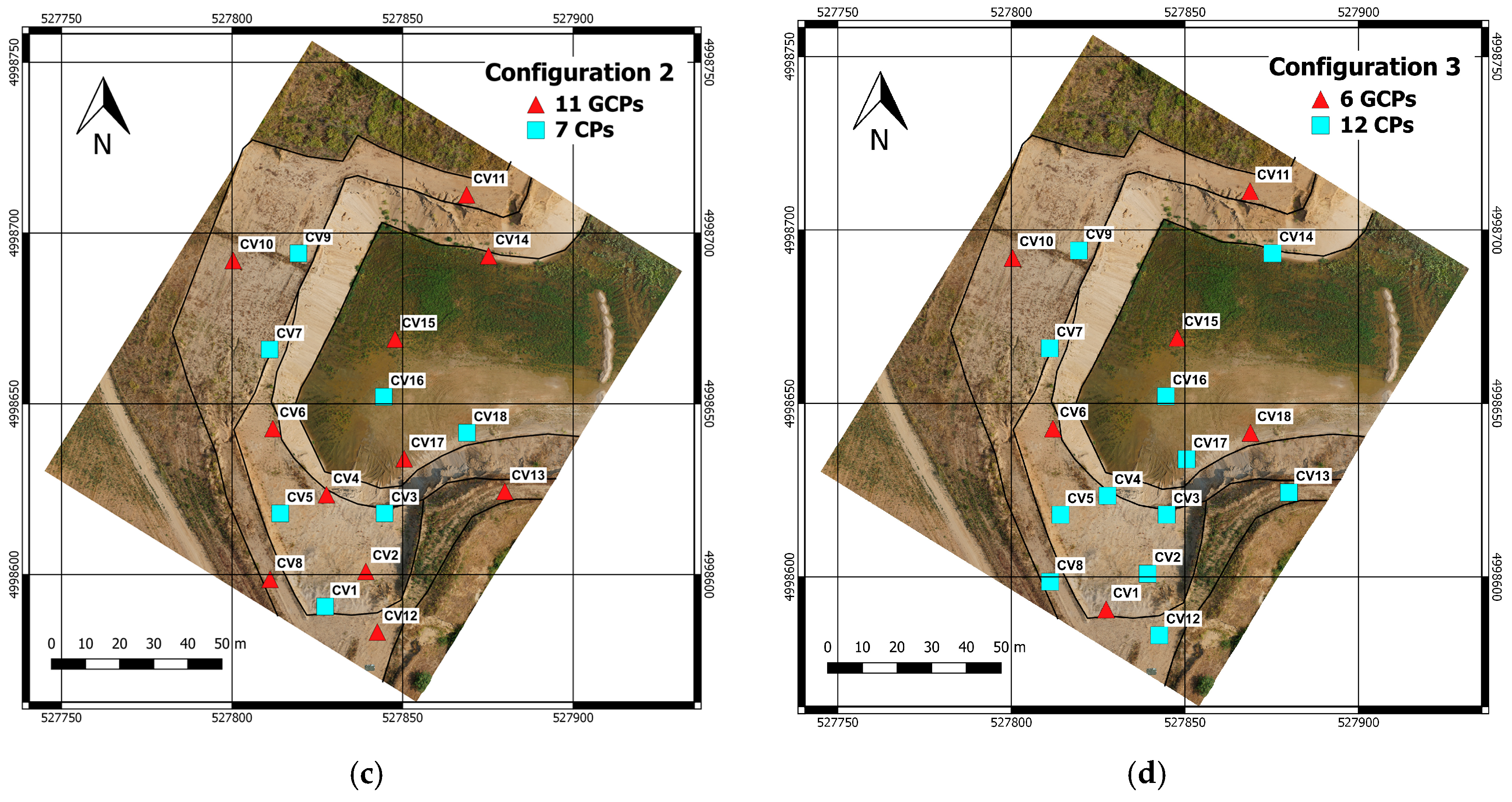

2.2. Data Processing

- Configuration 1—all the markers are used as GCPs, to perform robust camera calibration (18 GCPs/0 CPs);

- Configuration 2—an intermediate setup with strong ground control and still some check points (11 GCPs/7 CPs);

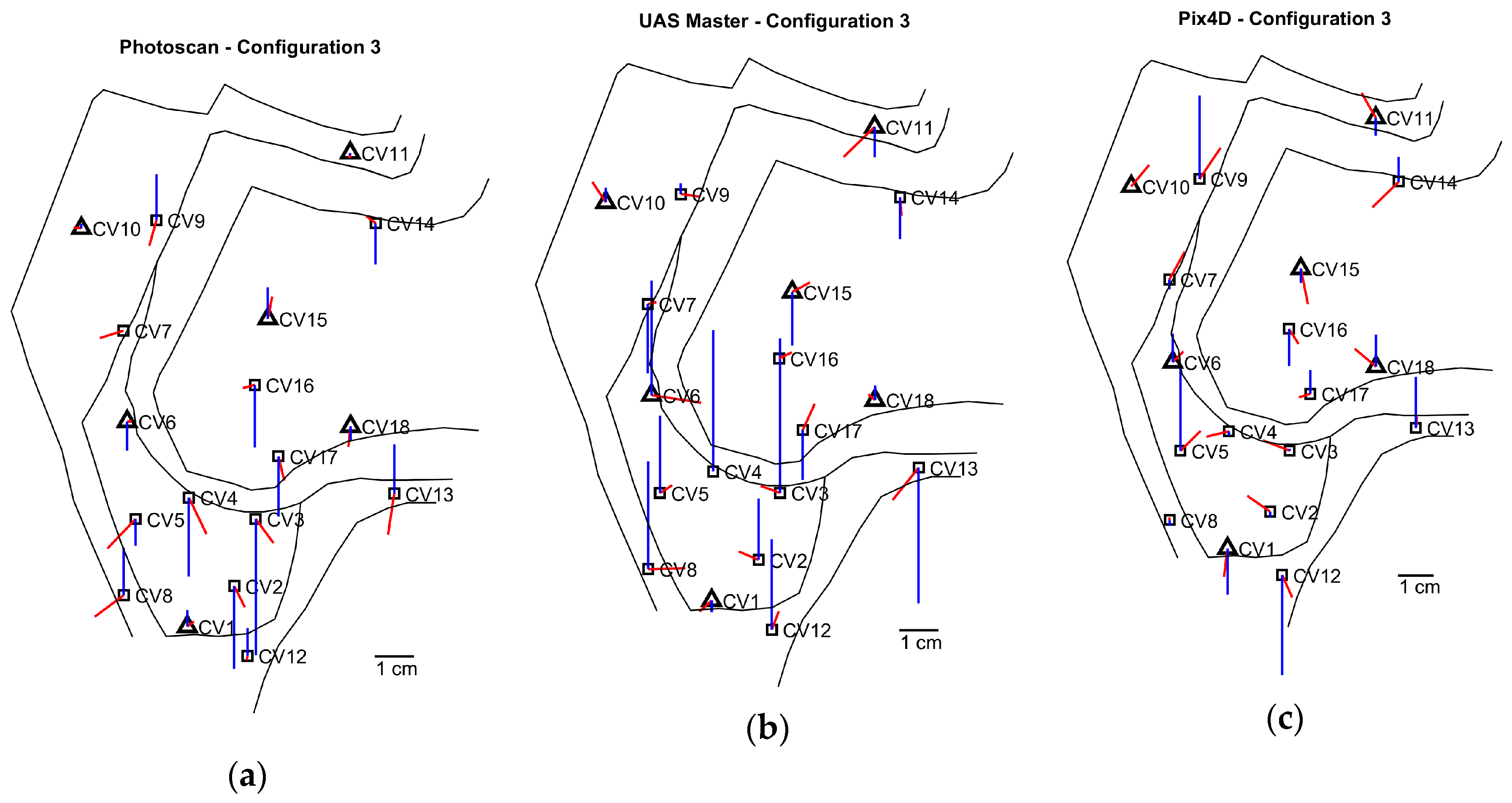

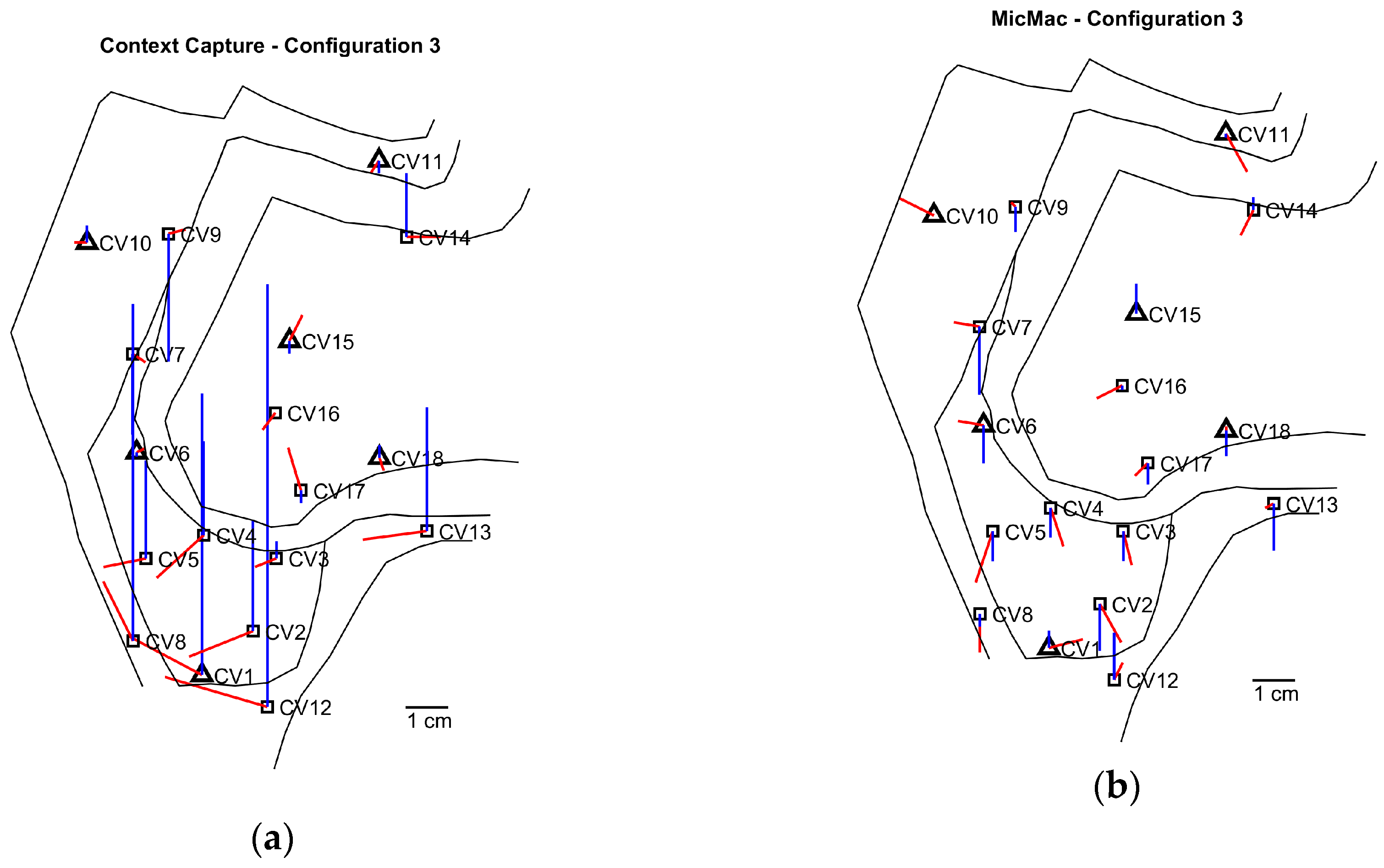

- Configuration 3—only six points are used as GCPs, that is realistic for routine surveying (6 GCPs/12 CPs).
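The three configurations differ only in how the same 18 surveyed markers are split between ground control points (GCPs, used in the adjustment) and check points (CPs, withheld and used only for validation). The sketch below makes that bookkeeping explicit. The marker IDs (`M01` to `M18`) and the particular GCP selections are hypothetical. They reproduce the GCP/CP counts of each configuration, not the actual marker choices made in the survey.

```python
# Hypothetical marker IDs: the section does not name the 18 markers.
ALL_MARKERS = [f"M{i:02d}" for i in range(1, 19)]


def split_markers(gcp_ids, all_ids):
    """Split markers into GCPs (used in the adjustment) and CPs (held out for checking)."""
    gcp_set = set(gcp_ids)
    unknown = gcp_set - set(all_ids)
    if unknown:
        raise ValueError(f"unknown marker IDs: {sorted(unknown)}")
    gcps = [m for m in all_ids if m in gcp_set]
    cps = [m for m in all_ids if m not in gcp_set]  # every non-GCP marker becomes a CP
    return gcps, cps


# Hypothetical GCP selections that only reproduce the counts of each configuration.
CONFIGURATIONS = {
    "Config 1": ALL_MARKERS,                        # 18 GCPs / 0 CPs
    "Config 2": ALL_MARKERS[::2] + ["M02", "M18"],  # 11 GCPs / 7 CPs
    "Config 3": ALL_MARKERS[::3],                   # 6 GCPs / 12 CPs
}

for name, selection in CONFIGURATIONS.items():
    gcps, cps = split_markers(selection, ALL_MARKERS)
    print(f"{name}: {len(gcps)} GCPs / {len(cps)} CPs")
```

Keeping GCPs and CPs as disjoint subsets of one marker list ensures that no check point also constrains the adjustment. This property is what makes the CP residuals an independent accuracy measure.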

2.2.1. Agisoft PhotoScan

2.2.2. Inpho UAS Master

2.2.3. Pix4D

2.2.4. Bentley ContextCapture

2.2.5. MicMac

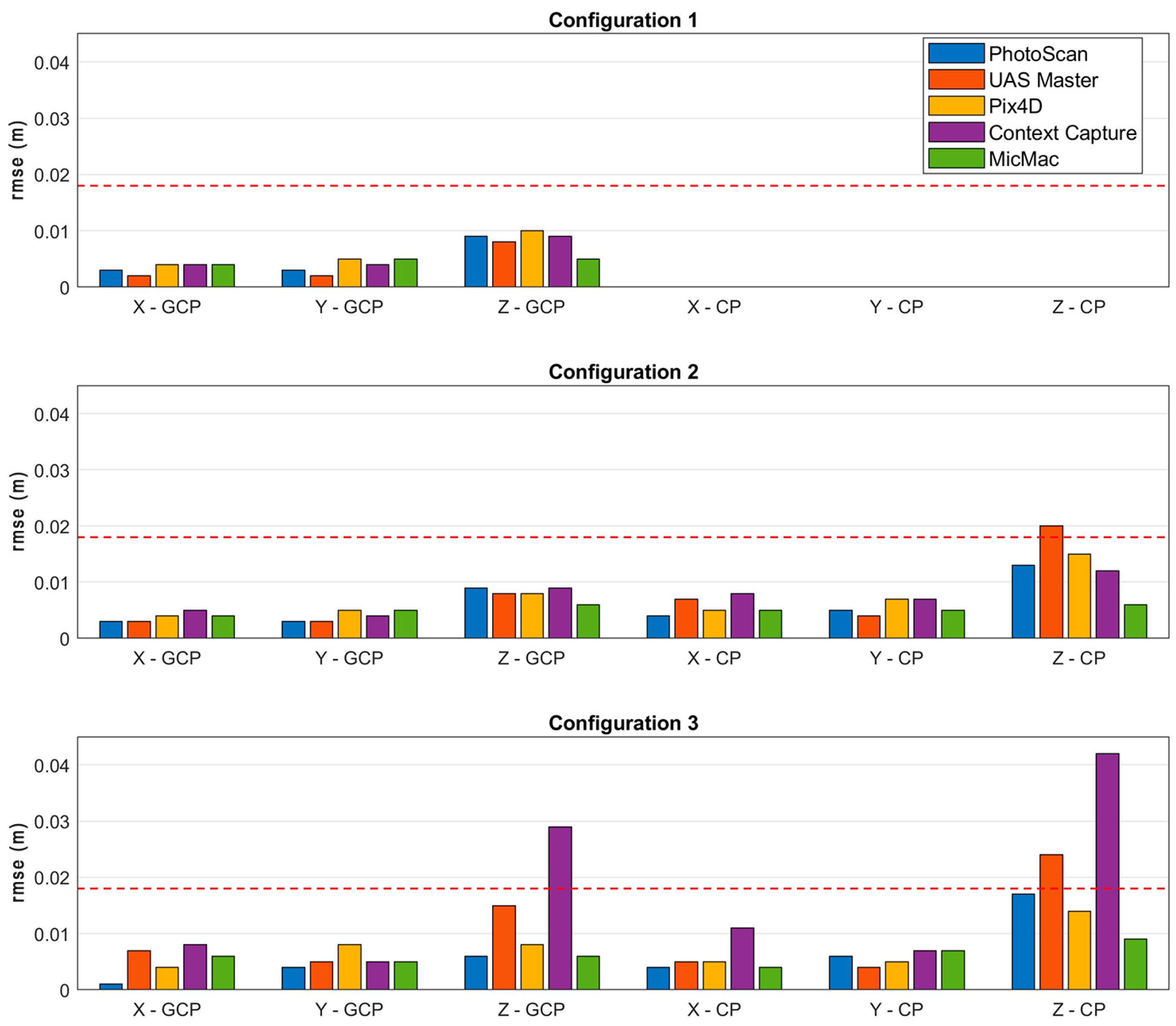

3. Bundle Block Adjustment Results Analysis

3.1. GCPs and CPs Residuals Analysis

3.2. Comparative Analysis Among Software Packages

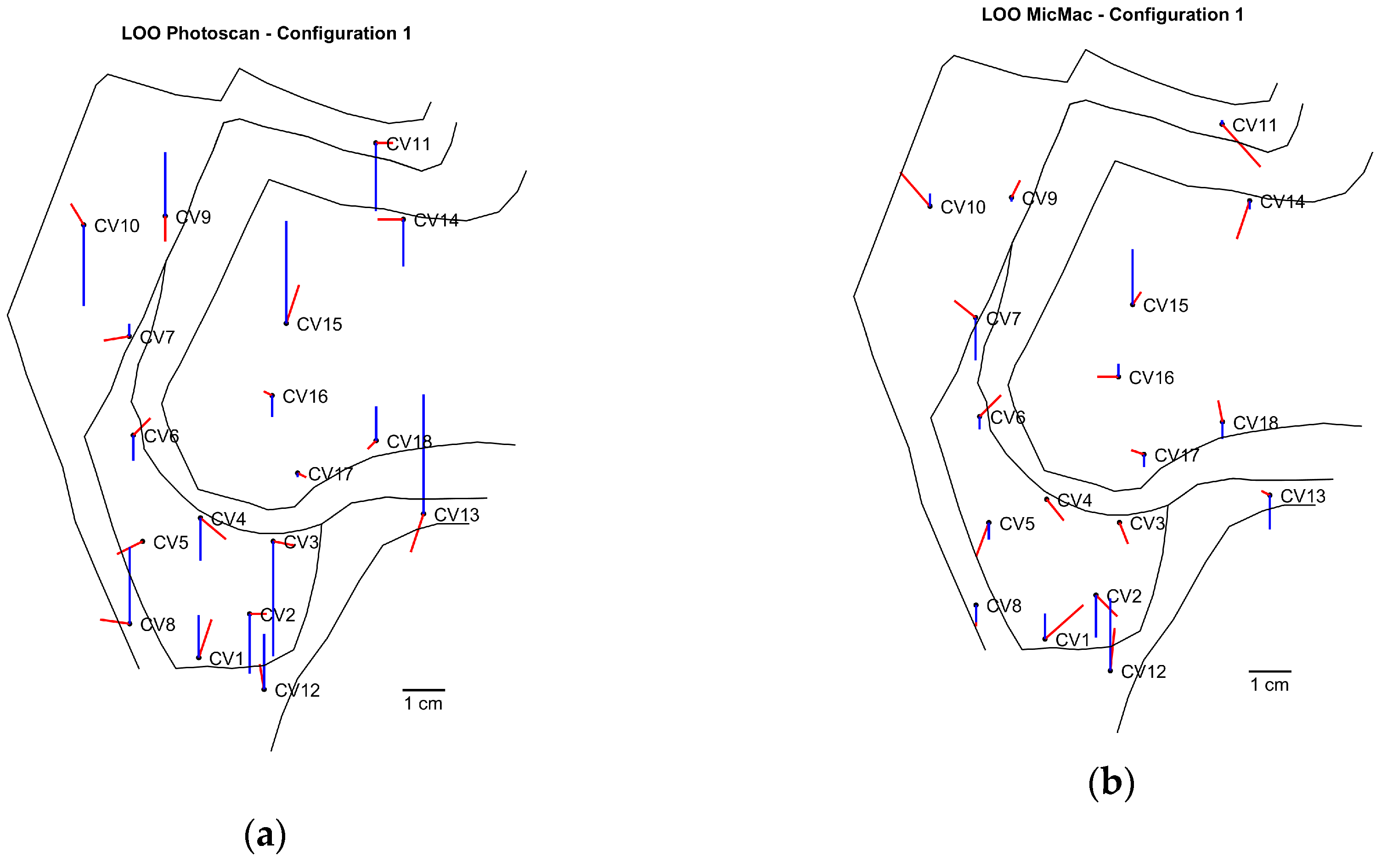

3.3. Cross-Validation

4. Discussion and Further Activities

5. Conclusions

Supplementary Materials

Author Contributions

Acknowledgments

Conflicts of Interest


| Block | Description |
|---|---|
| Block 1 | North-South linear strips, 70 m flying height (with respect to the upper part of the site), nadir images |
| Block 2 | East-West linear strips, 70 m, nadir |
| Block 3 | Radial linear strips, 70 m, nadir |
| Block 4 | Radial linear strips, 70 m, 30° oblique (off-nadir) |
| Block 5 | Circular trajectory, 30 m, 45° oblique |
| Block 6 | North-South linear strips, 40 m, nadir |
| Block 7 | East-West linear strips, 40 m, nadir |

| Config 1: 18 GCPs | Statistic | GCP X (m) | GCP Y (m) | GCP Z (m) | CP X (m) | CP Y (m) | CP Z (m) |
|---|---|---|---|---|---|---|---|
| PhotoScan | mean | 0.000 | 0.000 | 0.000 | - | - | - |
| | std | 0.003 | 0.003 | 0.009 | - | - | - |
| | rmse | 0.003 | 0.003 | 0.009 | - | - | - |
| UAS Master | mean | 0.000 | 0.000 | 0.000 | - | - | - |
| | std | 0.002 | 0.002 | 0.008 | - | - | - |
| | rmse | 0.002 | 0.002 | 0.008 | - | - | - |
| Pix4D | mean | 0.000 | 0.000 | −0.001 | - | - | - |
| | std | 0.004 | 0.005 | 0.010 | - | - | - |
| | rmse | 0.004 | 0.005 | 0.010 | - | - | - |
| ContextCapture | mean | 0.000 | 0.000 | 0.000 | - | - | - |
| | std | 0.004 | 0.004 | 0.009 | - | - | - |
| | rmse | 0.004 | 0.004 | 0.009 | - | - | - |
| MicMac | mean | 0.000 | 0.000 | 0.000 | - | - | - |
| | std | 0.004 | 0.005 | 0.005 | - | - | - |
| | rmse | 0.004 | 0.005 | 0.005 | - | - | - |

| Config 2: 11 GCPs/7 CPs | Statistic | GCP X (m) | GCP Y (m) | GCP Z (m) | CP X (m) | CP Y (m) | CP Z (m) |
|---|---|---|---|---|---|---|---|
| PhotoScan | mean | 0.000 | 0.000 | 0.000 | −0.001 | −0.001 | −0.001 |
| | std | 0.003 | 0.003 | 0.009 | 0.004 | 0.005 | 0.013 |
| | rmse | 0.003 | 0.003 | 0.009 | 0.004 | 0.005 | 0.013 |
| UAS Master | mean | 0.000 | 0.000 | 0.000 | 0.002 | −0.001 | 0.010 |
| | std | 0.003 | 0.003 | 0.008 | 0.007 | 0.004 | 0.017 |
| | rmse | 0.003 | 0.003 | 0.008 | 0.007 | 0.004 | 0.020 |
| Pix4D | mean | 0.000 | 0.000 | −0.001 | 0.002 | 0.002 | 0.003 |
| | std | 0.004 | 0.005 | 0.008 | 0.005 | 0.007 | 0.015 |
| | rmse | 0.004 | 0.005 | 0.008 | 0.005 | 0.007 | 0.015 |
| ContextCapture | mean | 0.001 | −0.001 | 0.000 | 0.001 | −0.002 | −0.003 |
| | std | 0.005 | 0.004 | 0.009 | 0.008 | 0.007 | 0.012 |
| | rmse | 0.005 | 0.004 | 0.009 | 0.008 | 0.007 | 0.012 |
| MicMac | mean | 0.000 | −0.001 | −0.001 | 0.000 | 0.000 | −0.003 |
| | std | 0.004 | 0.005 | 0.006 | 0.005 | 0.006 | 0.005 |
| | rmse | 0.004 | 0.005 | 0.006 | 0.005 | 0.005 | 0.006 |

| Config 3: 6 GCPs/12 CPs | Statistic | GCP X (m) | GCP Y (m) | GCP Z (m) | CP X (m) | CP Y (m) | CP Z (m) |
|---|---|---|---|---|---|---|---|
| PhotoScan | mean | 0.000 | 0.000 | 0.000 | −0.001 | −0.005 | −0.007 |
| | std | 0.001 | 0.004 | 0.006 | 0.004 | 0.004 | 0.016 |
| | rmse | 0.001 | 0.004 | 0.006 | 0.004 | 0.006 | 0.017 |
| UAS Master | mean | 0.000 | −0.001 | 0.002 | 0.001 | 0.00 | 0.007 |
| | std | 0.007 | 0.005 | 0.015 | 0.005 | 0.004 | 0.023 |
| | rmse | 0.007 | 0.005 | 0.015 | 0.005 | 0.004 | 0.024 |
| Pix4D | mean | 0.000 | 0.001 | −0.001 | −0.001 | 0.001 | 0.002 |
| | std | 0.004 | 0.008 | 0.008 | 0.005 | 0.005 | 0.014 |
| | rmse | 0.004 | 0.008 | 0.008 | 0.005 | 0.005 | 0.014 |
| ContextCapture | mean | −0.003 | 0.002 | 0.011 | −0.007 | 0.000 | 0.020 |
| | std | 0.007 | 0.005 | 0.027 | 0.009 | 0.007 | 0.037 |
| | rmse | 0.008 | 0.005 | 0.029 | 0.011 | 0.007 | 0.042 |
| MicMac | mean | 0.000 | 0.000 | −0.001 | −0.001 | −0.005 | −0.005 |
| | std | 0.006 | 0.005 | 0.006 | 0.003 | 0.005 | 0.007 |
| | rmse | 0.006 | 0.005 | 0.006 | 0.004 | 0.007 | 0.009 |
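Each table reports, per coordinate, the mean, standard deviation and RMSE of the residuals at GCPs and CPs. The following is a minimal sketch of how these three statistics relate, assuming each residual is the estimated minus the surveyed coordinate. It also assumes the population standard deviation (dividing by n); this section does not state whether n or n − 1 was used. Under that assumption, rmse² = mean² + std² exactly. This explains why rmse and std coincide whenever the mean is close to zero, as for most GCP columns above. The residual values used here are hypothetical.

```python
import math
import statistics


def residual_stats(residuals):
    """Return (mean, std, rmse) of one coordinate's residuals, in the input units.

    std is the population standard deviation (divide by n); using it here is an
    assumption, since the tables do not say whether n or n - 1 was used.
    """
    mean = statistics.fmean(residuals)
    std = statistics.pstdev(residuals)
    rmse = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    return mean, std, rmse


# Hypothetical Z residuals (m) at a handful of check points.
mean, std, rmse = residual_stats([0.012, -0.004, 0.021, 0.009, -0.002])

# With the population std, rmse**2 equals mean**2 + std**2 up to float rounding.
assert math.isclose(rmse**2, mean**2 + std**2, rel_tol=1e-9)

# The same identity applied to rounded table values (Config 2, UAS Master, CP Z):
# mean 0.010 m and std 0.017 m give about 0.0197 m, against 0.020 m in the table.
print(round(math.hypot(0.010, 0.017), 4))
```

Because the table entries are themselves rounded to the millimetre, this check can only confirm consistency to within about 1 mm.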

| Mean RMSE of the five packages | GCP X (m) | GCP Y (m) | GCP Z (m) | CP X (m) | CP Y (m) | CP Z (m) |
|---|---|---|---|---|---|---|
| Config 1: 18 GCPs | 0.003 | 0.004 | 0.008 | - | - | - |
| Config 2: 11 GCPs/7 CPs | 0.004 | 0.004 | 0.008 | 0.006 | 0.006 | 0.013 |
| Config 3: 6 GCPs/12 CPs | 0.005 | 0.005 | 0.013 | 0.006 | 0.006 | 0.021 |
| Config 3, excluding ContextCapture | | | 0.009 | | | 0.016 |
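The values in this summary table match the arithmetic mean of the five packages' RMSE values in the per-configuration tables. The two extra values (0.009 m and 0.016 m) match the Config 3 Z means when ContextCapture is left out. The sketch below reproduces that arithmetic as a consistency check. The RMSE values are transcribed from the Config 3 table in millimetres, which avoids floating-point rounding surprises. The helper name `mean_rmse` is hypothetical.

```python
# Z-component RMSE values (mm) transcribed from the Config 3 table: (GCP Z, CP Z).
CONFIG3_Z_RMSE_MM = {
    "PhotoScan": (6, 17),
    "UAS Master": (15, 24),
    "Pix4D": (8, 14),
    "ContextCapture": (29, 42),
    "MicMac": (6, 9),
}


def mean_rmse(table, exclude=()):
    """Average the per-package RMSE values, optionally leaving some packages out."""
    kept = [values for name, values in table.items() if name not in exclude]
    n = len(kept)
    gcp_mean = sum(v[0] for v in kept) / n
    cp_mean = sum(v[1] for v in kept) / n
    return gcp_mean, cp_mean


all_five = mean_rmse(CONFIG3_Z_RMSE_MM)                                # (12.8, 21.2) mm
without_cc = mean_rmse(CONFIG3_Z_RMSE_MM, exclude={"ContextCapture"})  # (8.75, 16.0) mm

# Rounded to whole millimetres, matching the table's three-decimal metre values.
print("all five packages:", [round(x) for x in all_five], "mm")      # GCP Z, CP Z
print("without ContextCapture:", [round(x) for x in without_cc], "mm")
```

Rounded to the millimetre, the results give 13/21 mm for all five packages and 9/16 mm without ContextCapture, matching 0.013/0.021 m and 0.009/0.016 m in the summary table. This indicates that the ContextCapture Config 3 solution largely drives the increase of the Z error with fewer GCPs.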

| Statistic | PhotoScan X (m) | PhotoScan Y (m) | PhotoScan Z (m) | MicMac X (m) | MicMac Y (m) | MicMac Z (m) |
|---|---|---|---|---|---|---|
| mean | 0.000 | 0.000 | 0.001 | 0.001 | 0.000 | 0.000 |
| std | 0.004 | 0.005 | 0.016 | 0.005 | 0.006 | 0.007 |
| rmse | 0.004 | 0.005 | 0.016 | 0.005 | 0.006 | 0.007 |
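Assume the cross-validation behind this table follows a leave-one-out scheme: each marker is withheld in turn, the block is re-adjusted with the remaining ones, and the withheld marker's residual is recorded. Under that assumption, the bookkeeping can be sketched as below. Re-running the PhotoScan or MicMac adjustment cannot be reproduced here. The hypothetical `fit_translation` function therefore stands in for the bundle adjustment, using a least-squares 3D shift between model and ground coordinates. Only the leave-one-out loop and the pooled mean/std/rmse are meant to carry over; all names and data are illustrative.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def fit_translation(model: Sequence[Point], truth: Sequence[Point]) -> Point:
    """Hypothetical stand-in for the adjustment: least-squares shift model -> ground."""
    n = len(model)
    return tuple(sum(t[k] - m[k] for m, t in zip(model, truth)) / n for k in range(3))


def leave_one_out(model: Sequence[Point], truth: Sequence[Point]) -> List[Point]:
    """Residual (predicted - surveyed) of each point when it is excluded from the fit."""
    residuals = []
    for i in range(len(model)):
        others = [j for j in range(len(model)) if j != i]
        shift = fit_translation([model[j] for j in others], [truth[j] for j in others])
        predicted = tuple(model[i][k] + shift[k] for k in range(3))
        residuals.append(tuple(predicted[k] - truth[i][k] for k in range(3)))
    return residuals


def summarize(values: Sequence[float]) -> Tuple[float, float, float]:
    """Pooled mean, population std and RMSE of the left-out residuals."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    rmse = math.sqrt(sum(v * v for v in values) / len(values))
    return mean, std, rmse


# Illustrative data: model = ground + constant offset + small X errors (1, -1, 0, 0).
truth = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (10.0, 10.0, 5.0)]
model = [(101.0, 200.0, 50.0), (109.0, 200.0, 50.0), (100.0, 210.0, 50.0), (110.0, 210.0, 55.0)]

res = leave_one_out(model, truth)
for axis, name in enumerate("XYZ"):
    print(name, summarize([r[axis] for r in res]))
```

Because each point is predicted from a solution it did not influence, the pooled statistics behave like CP statistics while still using all 18 markers. This plausibly explains why the cross-validation values resemble the CP columns of the configuration tables.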

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Casella, V.; Chiabrando, F.; Franzini, M.; Manzino, A.M. Accuracy Assessment of a UAV Block by Different Software Packages, Processing Schemes and Validation Strategies. ISPRS Int. J. Geo-Inf. 2020, 9, 164. https://doi.org/10.3390/ijgi9030164
