The Metaverse Is Geospatial: A System Model Architecture Integrating Spatial Computing, Digital Twins, and Virtual Worlds

Abstract

1. Introduction

2. Background and Related Work

2.1. 3D Virtual Worlds and the Metaverse: A Review

- It has a 3D graphical interface and integrated audio. An environment with a text interface alone does not constitute an advanced virtual world.

- It supports massively multi-user remote interactivity. Simultaneous interactivity among many users in remote physical locations is a minimum requirement, not an advanced feature.

- It is persistent. The virtual environment continues to operate even when a particular user is not connected.

- It is immersive. The environment’s level of spatial, environmental, and multisensory realism creates a sense of psychological presence. Users perceive themselves as “being inside”, “inhabiting”, or “residing within” the digital environment rather than observing it from outside, which intensifies their psychological experience.

- It emphasises user-generated activities and goals. In contrast to immersive games, where goals are built into the program, virtual worlds provide a more open-ended setting in which, as in real life, users can define their own activities and goals.

- Seamless transition: enabling users to visit and leave virtual worlds as easily as navigating between websites.

- Browser integration: incorporating virtual world viewers into web browsers.

- Avatar interoperability: ensuring avatars can move and function across different virtual worlds.

- Federation of virtual worlds: developing various methods to allow individual virtual worlds to operate independently yet be part of a larger interconnected network, similar to how websites function.

- Network infrastructure: basic communication in the Metaverse is supported by 5G networks.

- Cyber-reality interface: the Metaverse links and switches between the virtual and the real world through extended reality (XR), smart devices, and human–computer interaction.

- Data management and application: the Metaverse relies on cloud computing, big data, and edge computing to achieve large-scale data acquisition, analysis, storage, and transmission.

- Authentication mechanism: the Metaverse combines blockchain, which provides a transparent, stable, and reliable trust mechanism, with biometric technology to address problems such as data sovereignty determination, virtual identity authentication, and value attribution and circulation.

- Content generation: the Metaverse maps the physical world to cyberspace using digital twins.

- Smart cities: smart countries, smart city domains, and edge devices with sensor modalities (IoT).

- Users: citizens, organisations, and institutions.

- Metaverse components: communication infrastructure, Metaverse tools and platforms, and Metaverse world development and content enrichment utilities.

- Digital twins and metaverse pool: digital shops, digital towns, virtual world interview tools, and cloud-edge computing.

- Urban Metaverse as a Service (UMaaS): entertainment events, interaction with other digital users, virtual tourist journeys, event attendance, psychotherapy services, and game-playing services.

2.2. The Metaverse Is Geospatial

2.3. Geospatial Virtual World Definition

- Comprehensive 3D geospatial context (M): Every element within a GVW, including objects, users, and processes, is assigned precise three-dimensional geospatial coordinates. This ensures a verifiable and consistent mapping between the physical and virtual realms.

- Persistent, multi-user interaction (M): A GVW maintains continuous operation independent of individual user sessions and supports concurrent interactions. This persistence is evident from the uninterrupted availability and collaborative functionality of the environment.

- Social and collaborative focus (M): Beyond simple visualisation, GVWs facilitate rich communication (text, voice, video) and user-driven content creation, fostering a community-oriented digital ecosystem that transcends basic data display.

- Immersive 3D interaction and presence (M): Users can navigate among and manipulate three-dimensional geospatial elements, and communicate with one another, in real time through avatars or mixed reality interfaces, creating a strong sense of “inhabiting” the digital environment.

- Expandable and integrative (M): Built on modular architectures, GVWs can readily incorporate emerging technologies, such as AI, and adapt to evolving user needs and industry trends. They can also host virtual economies that support transactions, digital asset ownership, and novel business models within the 3D geospatial context.

- Rich simulation capabilities (O): Physics engines, animations, and behaviour systems enable complex, interactive simulations for applications ranging from urban planning and infrastructure management to cultural heritage preservation.

- Real-time synchronisation and data integration (O): GVWs continuously incorporate live sensor inputs, IoT streams, and data from external systems. This real-time data flow produces constant, measurable updates that align the virtual environment with physical world conditions.
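
The first mandatory property requires every object, user, and process to carry three-dimensional geospatial coordinates. One way to make that concrete is sketched below, assuming positions are stored as WGS 84 latitude, longitude, and ellipsoidal height and converted to Earth-centred, Earth-fixed (ECEF) Cartesian metres, the kind of global frame that virtual globes such as CesiumJS render in. The choice of WGS 84 and ECEF, and the names `GeodeticPosition` and `to_ecef`, are assumptions for illustration rather than part of the authors' model.

```python
import math
from dataclasses import dataclass

# WGS 84 ellipsoid parameters (assumed common reference for every GVW element)
WGS84_A = 6378137.0                 # semi-major axis, metres
WGS84_F = 1 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


@dataclass(frozen=True)
class GeodeticPosition:
    """Hypothetical position record: degrees for lat/lon, metres above the ellipsoid."""
    lat_deg: float
    lon_deg: float
    height_m: float


def to_ecef(p: GeodeticPosition) -> tuple[float, float, float]:
    """Convert geodetic coordinates to Earth-centred, Earth-fixed (ECEF) metres."""
    lat = math.radians(p.lat_deg)
    lon = math.radians(p.lon_deg)
    sin_lat = math.sin(lat)
    # Prime vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    x = (n + p.height_m) * math.cos(lat) * math.cos(lon)
    y = (n + p.height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + p.height_m) * sin_lat
    return x, y, z
```

For example, `to_ecef(GeodeticPosition(0.0, 0.0, 0.0))` returns `(6378137.0, 0.0, 0.0)`, the point where the equator meets the prime meridian on the ellipsoid surface. A production system would more likely delegate such transformations to an established library such as PROJ than hand-code them.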

3. Proposed Approach of the GVW System Model

3.1. Methodology

3.2. Design Principles and Use Case Scenario

3.3. System Overview and Scope

3.4. Class Diagram of a Geospatial Virtual World

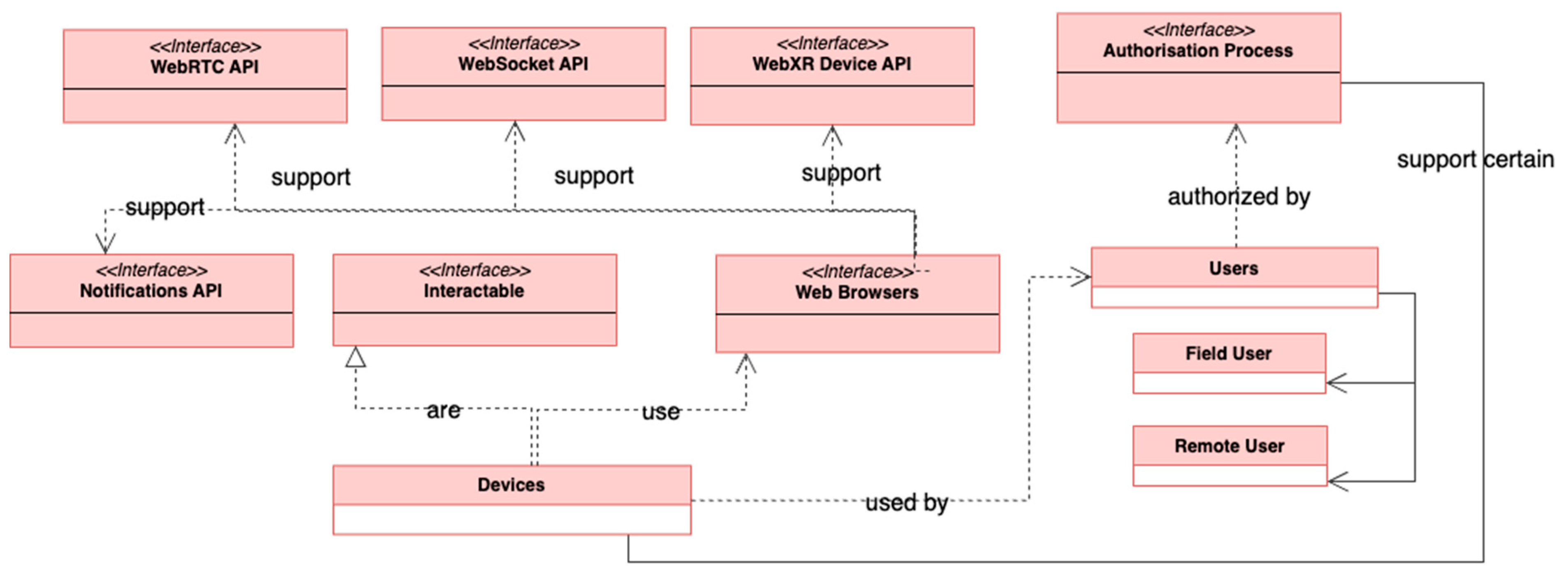

3.4.1. Access Layer

3.4.2. World Layer

3.4.3. Integration Layer

4. Discussion

4.1. Connection to Prior Work

4.2. Strengths and Weaknesses

4.3. Spatial Computing in the GVW System Model

4.4. Future Developments: Interoperability, Blockchain, and POC

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Pirotti, F.; Brovelli, M.A.; Prestifilippo, G.; Zamboni, G.; Kilsedar, C.E.; Piragnolo, M.; Hogan, P. An open source virtual globe rendering engine for 3D applications: NASA World Wind. Open Geospat. Data Softw. Stand. 2017, 2, 4.

2. Neves, J.N.; Câmara, A. Virtual environments and GIS. Geogr. Inf. Syst. 1999, 1, 557–565.

3. Ellis, S.R. What are virtual environments? IEEE Comput. Graph. Appl. 1994, 14, 17–22.

4. Tuttle, B.T.; Anderson, S.; Huff, R. Virtual globes: An overview of their history, uses, and future challenges. Geogr. Compass 2008, 2, 1478–1505.

5. Stone, R.J. Applications of virtual environments: An overview. In Handbook of Virtual Environments; CRC Press: Boca Raton, FL, USA, 2002; pp. 867–896.

6. Fauville, G.; Queiroz, A.C.M.; Bailenson, J.N. Virtual reality as a promising tool to promote climate change awareness. In Technology and Health; Elsevier: Amsterdam, The Netherlands, 2020; pp. 91–108.

7. Lee, J.G.; Kang, M. Geospatial big data: Challenges and opportunities. Big Data Res. 2015, 2, 74–81.

8. Open Geospatial Consortium. Our History. Available online: https://www.ogc.org/our-history/ (accessed on 10 January 2025).

9. Breunig, M.; Bradley, P.E.; Jahn, M.; Kuper, P.; Mazroob, N.; Rösch, N.; Al-Doori, M.; Stefanakis, E.; Jadidi, M. Geospatial data management research: Progress and future directions. ISPRS Int. J. Geo-Inf. 2020, 9, 95.

10. Cesium. CesiumJS. Available online: https://cesium.com/platform/cesiumjs/ (accessed on 10 January 2025).

11. Google. 3D Maps JavaScript Demo. Available online: https://mapsplatform.google.com/demos/3d-maps-javascript/ (accessed on 10 January 2025).

12. Evangelidis, K.; Papadopoulos, T. Is there life in Virtual Globes? Free Open Source Softw. Geospat. (FOSS4G) Conf. Proc. 2016, 16, 7.

13. Downey, S. History of the (virtual) worlds. J. Technol. Stud. 2014, 40, 54–66.

14. Dionisio, J.D.N.; Burns, W.G., III; Gilbert, R. 3D virtual worlds and the metaverse: Current status and future possibilities. ACM Comput. Surv. (CSUR) 2013, 45, 1–38.

15. Linden Lab. Second Life. Available online: https://secondlife.com/ (accessed on 10 January 2025).

16. OpenSimulator. Main Page. Available online: http://opensimulator.org/wiki/Main_Page (accessed on 10 January 2025).

17. Metaverse Standards Forum. Available online: https://metaverse-standards.org/ (accessed on 10 January 2025).

18. Gilbert, R.L. The PROSE (Psychological Research on Synthetic Environments) project: Conducting in-world psychological research on 3D virtual worlds. J. Virtual Worlds Res. 2011, 4.

19. Worlds Inc. Worlds. Available online: https://www.worlds.com/ (accessed on 10 January 2025).

20. Frey, D.; Royan, J.; Piegay, R.; Kermarrec, A.M.; Anceaume, E.; Le Fessant, F. Solipsis: A decentralized architecture for virtual environments. In Proceedings of the 1st International Workshop on Massively Multiuser Virtual Environments, Reno, NV, USA, 8 March 2008.

21. Kaplan, J.; Yankelovich, N. Open wonderland: An extensible virtual world architecture. IEEE Internet Comput. 2011, 15, 38–45.

22. Alatalo, T. An entity-component model for extensible virtual worlds. IEEE Internet Comput. 2011, 15, 30–37.

23. Lopes, C. Hypergrid: Architecture and protocol for virtual world interoperability. IEEE Internet Comput. 2011, 15, 22–29.

24. VRChat. VRChat. Available online: https://hello.vrchat.com/ (accessed on 10 January 2025).

25. Meta. AltspaceVR. Available online: https://www.meta.com/experiences/altspacevr/2133027990157329/ (accessed on 10 January 2025).

26. Active Worlds. Active Worlds. Available online: https://www.activeworlds.com/ (accessed on 10 January 2025).

27. Mozilla. End Support for Mozilla Hubs. Available online: https://support.mozilla.org/en-US/kb/end-support-mozilla-hubs (accessed on 10 January 2025).

28. Thompson, C.W. Virtual world architectures. IEEE Internet Comput. 2011, 15, 11–14.

29. Ricci, A.; Piunti, M.; Tummolini, L.; Castelfranchi, C. The mirror world: Preparing for mixed-reality living. IEEE Pervasive Comput. 2015, 14, 60–63.

30. Dorri, A.; Kanhere, S.S.; Jurdak, R. Multi-agent systems: A survey. IEEE Access 2018, 6, 28573–28593.

31. Modahl, M.; Agarwalla, B.; Saponas, T.S.; Abowd, G.; Ramachandran, U. UbiqStack: A taxonomy for a ubiquitous computing software stack. Pers. Ubiquitous Comput. 2006, 10, 21–27.

32. Evangelidis, K.; Papadopoulos, T.; Sylaiou, S. Mixed Reality: A reconsideration based on mixed objects and geospatial modalities. Appl. Sci. 2021, 11, 2417.

33. Evangelidis, K.; Sylaiou, S.; Papadopoulos, T. Mergin’mode: Mixed reality and geoinformatics for monument demonstration. Appl. Sci. 2020, 10, 3826.

34. George, A.H.; Fernando, M.; George, A.S.; Baskar, T.; Pandey, D. Metaverse: The next stage of human culture and the internet. Int. J. Adv. Res. Trends Eng. Technol. (IJARTET) 2021, 8, 1–10.

35. Wang, D.; Yan, X.; Zhou, Y. Research on Metaverse: Concept, development and standard system. In Proceedings of the 2021 2nd International Conference on Electronics, Communications and Information Technology (CECIT), Sanya, China, 27–29 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 983–991.

36. Duan, H.; Li, J.; Fan, S.; Lin, Z.; Wu, X.; Cai, W. Metaverse for social good: A university campus prototype. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 153–161.

37. Holonext. Metaverse 101: Understanding the Seven Layers. Available online: https://holonext.com/metaverse-101-understanding-the-seven-layers/ (accessed on 10 January 2025).

38. Setiawan, K.D.; Anthony, A. The essential factor of metaverse for business based on 7 layers of metaverse–systematic literature review. In Proceedings of the 2022 International Conference on Information Management and Technology (ICIMTech), Semarang, Indonesia, 11–12 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 687–692.

39. Kuru, K. Metaomnicity: Toward immersive urban metaverse cyberspaces using smart city digital twins. IEEE Access 2023, 11, 43844–43868.

40. Venugopal, J.P.; Subramanian AA, V.; Peatchimuthu, J. The realm of metaverse: A survey. Comput. Animat. Virtual Worlds 2023, 34, e2150.

41. Rawat, D.B.; El Alami, H. Metaverse: Requirements, architecture, standards, status, challenges, and perspectives. IEEE Internet Things Mag. 2023, 6, 14–18.

42. Wang, Y.; Su, Z.; Zhang, N.; Xing, R.; Liu, D.; Luan, T.H.; Shen, X. A survey on metaverse: Fundamentals, security, and privacy. IEEE Commun. Surv. Tutor. 2022, 25, 319–352.

43. Fu, Y.; Li, C.; Yu, F.R.; Luan, T.H.; Zhao, P.; Liu, S. A survey of blockchain and intelligent networking for the metaverse. IEEE Internet Things J. 2022, 10, 3587–3610.

44. Yang, L. Recommendations for metaverse governance based on technical standards. Humanit. Soc. Sci. Commun. 2023, 10, 1–10.

45. Sami, H.; Hammoud, A.; Arafeh, M.; Wazzeh, M.; Arisdakessian, S.; Chahoud, M.; Wehbi, O.; Ajaj, M.; Mourad, A.; Otrok, H.; et al. The metaverse: Survey, trends, novel pipeline ecosystem & future directions. IEEE Commun. Surv. Tutor. 2024, 26, 2914–2960.

46. Trunfio, M.; Rossi, S. Advances in metaverse investigation: Streams of research and future agenda. Virtual Worlds 2022, 1, 103–129.

47. Stubbs, A.; Hughes, J.J.; Eisikovits, N.; Burley, J. The Democratic Metaverse: Building an Extended Reality Safe for Citizens, Workers and Consumers; Institute for Ethics and Emerging Technologies: Boston, MA, USA, 2023.

48. Ball, M. The Metaverse: And How It Will Revolutionize Everything; Liveright Publishing: New York, NY, USA, 2022.

49. Open Geospatial Consortium. The Metaverse Is Geospatial. Available online: https://www.ogc.org/blog-article/the-metaverse-is-geospatial/ (accessed on 10 January 2025).

50. Ritterbusch, G.D.; Teichmann, M.R. Defining the metaverse: A systematic literature review. IEEE Access 2023, 11, 12368–12377.

51. Türk, T. The concept of metaverse, its future and its relationship with spatial information. Adv. Geomat. 2022, 2, 17–22.

52. Papadopoulos, T.; Evangelidis, K.; Kaskalis, T.H.; Evangelidis, G.; Sylaiou, S. Interactions in augmented and mixed reality: An overview. Appl. Sci. 2021, 11, 8752.

53. Epic Games. Unreal Engine 5.4 Is Now Available. Available online: https://www.unrealengine.com/en-US/blog/unreal-engine-5-4-is-now-available (accessed on 10 January 2025).

54. Habib, A.; Ghanma, M.; Morgan, M.; Al-Ruzouq, R. Photogrammetric and LiDAR data registration using linear features. Photogramm. Eng. Remote Sens. 2005, 71, 699–707.

55. Evangelidis, K.; Papadopoulos, T.; Papatheodorou, K.; Mastorokostas, P.; Hilas, C. 3D geospatial visualizations: Animation and motion effects on spatial objects. Comput. Geosci. 2018, 111, 200–212.

56. Naderi, H.; Shojaei, A. Digital twinning of civil infrastructures: Current state of model architectures, interoperability solutions, and future prospects. Autom. Constr. 2023, 149, 104785.

57. Papadopoulos, T.; Evangelidis, K.; Evangelidis, G.; Kaskalis, T. Immersive mixed reality experience empowered by the internet of things and geospatial technologies. In Proceedings of the Fourteenth International Conference on Computational Structures Technology, Montpellier, France, 23–25 August 2022.

58. Gantz, J.; Reinsel, D. The digital universe in 2020: Big data, bigger digital shadows, and biggest growth in the far east. IDC Iview IDC Anal. Future 2012, 2007, 1–16.

59. Sepasgozar, S.M. Differentiating digital twin from digital shadow: Elucidating a paradigm shift to expedite a smart, sustainable built environment. Buildings 2021, 11, 151.

60. Lv, Z.; Shang, W.L.; Guizani, M. Impact of digital twins and metaverse on cities: History, current situation, and application perspectives. Appl. Sci. 2022, 12, 12820.

61. Lee, L.H.; Braud, T.; Zhou, P.Y.; Wang, L.; Xu, D.; Lin, Z.; Kumar, A.; Bermejo, C.; Hui, P. All one needs to know about metaverse: A complete survey on technological singularity, virtual ecosystem, and research agenda. Found. Trends Hum. Comput. Interact. 2024, 18, 100–337.

62. Cortex2. Available online: https://cortex2.eu/ (accessed on 10 January 2025).

63. XR4ED. Available online: https://xr4ed.eu/ (accessed on 10 January 2025).

64. Chaturvedi, A.R.; Dolk, D.R.; Drnevich, P.L. Design principles for virtual worlds. MIS Q. 2011, 35, 673–684.

65. Nickerson, J.V.; Seidel, S.; Yepes, G.; Berente, N. Design principles for coordination in the metaverse. Acad. Manag. Annu. Meet. 2022, 2022, 15178.

66. Seidel, S.; Berente, N.; Nickerson, J.; Yepes, G. Designing the metaverse. In Proceedings of the 55th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2022.

| Design Principle | Short Description |
|---|---|
| Diverse user accommodation | Support various user types, including citizens, professionals, and domain experts, ensuring inclusivity and broad applicability. |
| Citizen-centric experience | Prioritise intuitive navigation, visualisation, and data access to make the environment meaningful and easy to use. |
| Persistent user-generated content | Ensure that users’ digital assets remain stable and accessible over time. |
| Scalable complexity | Allow for different layers of complexity, from simple interactions to complex analytics and simulations. |
| Real–virtual integration | Seamlessly merge real-world geospatial and sensor data into the virtual environment, bridging physical and digital domains. |
| Narrative composability | Enable smooth transitions between diverse experiences, fostering emergent storytelling opportunities and user-driven exploration. |
| Social assortativity | Facilitate social encounters and communities, ensuring users can easily engage with others as desired. |
| Path discoverability | Provide infrastructure and services that help users find relevant experiences and content based on their interests. |
| Balance of spatial, temporal, artifactual, actor, and transition tensions | Carefully manage world design, object fidelity, user roles, and transitions to create a coherent yet dynamic environment. |

| Name | Type | Short Description |
|---|---|---|
| Devices | Class | Represents user hardware such as computers, phones, and AR/VR headsets. |
| Interactable | Interface | Defines supported interactions for devices and scene objects. |
| Web Browsers | Class | Client platform for accessing and interacting with virtual environments. |
| WebSocket API | Interface | Enables real-time communication between clients and the server. |
| WebRTC API | Interface | Enables capturing and optionally streaming audio and/or video media. |
| Notifications API | Interface | Delivers timely alerts and reminders to user devices. |
| WebXR Device API | Interface | Supports immersive AR/VR experiences through compatible XR devices. |
| Authorisation Process | Interface | Ensures secure and controlled access to platform features and user data. |
| Users | Class | Represents users interacting with the environment through avatars. |
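
The Access Layer pairs an `Interactable` interface with devices, users, an authorisation process, and the WebXR Device API. As a hedged sketch in Python (the model itself is language-neutral), the classes below show one way these pieces could fit together: a device advertises the interactions it supports, an interaction is allowed only if the target supports it and the user is authorised, and a session mode is chosen from the modes the device reports. The strings `"immersive-vr"`, `"immersive-ar"`, and `"inline"` are the session modes defined by the WebXR Device API; everything else (`Device`, `User`, `authorise`, `can_interact`, `choose_session_mode`, and the preference order) is a hypothetical illustration.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Interactable(Protocol):
    """Anything (device or scene object) that declares the interactions it supports."""

    def supported_interactions(self) -> set[str]: ...


@dataclass
class Device:
    """User hardware; the kind labels and input names are hypothetical."""
    kind: str                                        # e.g. "desktop", "phone", "headset"
    xr_modes: set[str] = field(default_factory=set)  # WebXR session modes the device reports
    inputs: set[str] = field(default_factory=set)    # e.g. "select", "grab"

    def supported_interactions(self) -> set[str]:
        return set(self.inputs)


@dataclass
class User:
    """In this sketch a user acts in the world through a single avatar."""
    user_id: str
    avatar_id: str
    authenticated: bool = False
    permissions: set[str] = field(default_factory=set)


def authorise(user: User, permission: str) -> bool:
    """Stand-in for the Authorisation Process: deny unless authenticated and granted."""
    return user.authenticated and permission in user.permissions


def can_interact(user: User, target: Interactable, interaction: str) -> bool:
    """Allow an interaction only if the target supports it and the user is authorised."""
    return interaction in target.supported_interactions() and authorise(user, interaction)


def choose_session_mode(device: Device, prefer_ar: bool = False) -> str:
    """Pick the most immersive WebXR mode the device reports, falling back to "inline"."""
    order = ["immersive-ar", "immersive-vr"] if prefer_ar else ["immersive-vr", "immersive-ar"]
    for mode in order:
        if mode in device.xr_modes:
            return mode
    return "inline"
```

In a browser client, `xr_modes` would typically be filled from the results of `navigator.xr.isSessionSupported(mode)`; keeping authorisation separate from capability checks lets the same policy apply to devices and scene objects alike.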

| Name | Type | Short Description |
|---|---|---|
| Coordinate System | Interface | Defines spatial references for accurate positioning in 3D environments. |
| 3D Scenes | Class | Represents the virtual environment, including cameras, lights, and context. |
| Scene Objects | Class | Individual entities within the 3D scenes that users can interact with or manipulate. |
| Virtual Agents/NPCs | Class | Represents non-player characters that act autonomously within the virtual world. |
| Avatars | Class | Represents users or agents for interaction in the virtual environment. |
| Animations | Interface | Controls scene objects’ movement and visual transformations. |
| Actions | Interface | Defines tasks users or objects can execute. |
| Behaviours | Interface | Governs object responses to user interactions or events. |
| Physics Engine | Class | Simulates realistic physical interactions and dynamics within the 3D scenes. |
| Entitlement System | Interface | Permits specific actions based on the user’s access rights. |
| Time Spectrum | Interface | Orchestrates time-based events and environment changes. |
| Spatial Audio | Class | Enables location-based auditory feedback from the GVW. |
| Chat Bubble | Class | Enables chat communication between users. |
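
In the World Layer, Actions, Behaviours, and the Entitlement System jointly decide what happens when a user acts on a scene object. The following sketch uses hypothetical names throughout and assumes a simple allow-list policy: an action succeeds only if the target object defines a behaviour for it and the user holds an entitlement for that action, and denied attempts are logged. Positions are plain tuples assumed to be expressed in the scene's coordinate system.

```python
from dataclasses import dataclass, field
from typing import Callable

Position = tuple[float, float, float]  # assumed to be in the scene's coordinate system
Behaviour = Callable[["SceneObject", str], None]  # handler(object, user_id)


@dataclass
class SceneObject:
    object_id: str
    position: Position
    state: dict[str, object] = field(default_factory=dict)
    # Behaviours keyed by action name; each handler updates the object's state
    behaviours: dict[str, Behaviour] = field(default_factory=dict)


class EntitlementSystem:
    """Records which actions each user may perform (a hypothetical allow-list policy)."""

    def __init__(self) -> None:
        self._grants: dict[str, set[str]] = {}

    def grant(self, user_id: str, action: str) -> None:
        self._grants.setdefault(user_id, set()).add(action)

    def allows(self, user_id: str, action: str) -> bool:
        return action in self._grants.get(user_id, set())


@dataclass
class Scene:
    entitlements: EntitlementSystem
    objects: dict[str, SceneObject] = field(default_factory=dict)
    log: list[str] = field(default_factory=list)

    def add(self, obj: SceneObject) -> None:
        self.objects[obj.object_id] = obj

    def perform(self, user_id: str, action: str, object_id: str) -> bool:
        """Run an action on a scene object if it has a matching behaviour and the user is entitled."""
        obj = self.objects.get(object_id)
        if obj is None or action not in obj.behaviours:
            return False  # unknown object or unsupported action
        if not self.entitlements.allows(user_id, action):
            self.log.append(f"denied {action} on {object_id} for {user_id}")
            return False
        obj.behaviours[action](obj, user_id)
        return True


def toggle_open(obj: SceneObject, user_id: str) -> None:
    """Example behaviour: flip an 'open' flag and remember who triggered it."""
    obj.state["open"] = not obj.state.get("open", False)
    obj.state["last_user"] = user_id
```

A gate that only some users may open could then be modelled by granting `"toggle"` to those users and attaching `toggle_open` as the object's `"toggle"` behaviour. Keeping entitlement checks in the scene rather than inside each behaviour leaves individual behaviours free of access-control logic.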

| Name | Type | Short Description |
|---|---|---|
| IoT Devices | Class | Connects IoT devices and streams real-time data to the GVW. |
| APIs | Interface | Integrates external data, services, and functionalities into the GVW. |
| Location-Based Services | Interface | Supplies geographic context and queries for location-aware interactions. |
| Artificial Intelligence | Interface | Enables adaptive behaviour and decision making for automated components. |
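
The Integration Layer combines IoT Devices, which stream real-time data, with Location-Based Services, which answer geographic queries. A minimal sketch, assuming each reading carries a sensor id and a timestamp, keeps the latest reading per sensor (discarding messages from unknown sensors and stale or duplicate timestamps) and answers radius queries with the haversine great-circle distance on a spherical Earth. The spherical approximation, the drop-stale-data policy, and all class and function names are assumptions for illustration; ellipsoidal distances or a spatial index would be preferable at scale.

```python
import math
from dataclasses import dataclass
from typing import Optional

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius; spherical approximation (assumption)


@dataclass
class Reading:
    sensor_id: str
    timestamp: float  # seconds since the epoch (assumed)
    value: float


@dataclass
class Sensor:
    sensor_id: str
    lat: float  # degrees
    lon: float  # degrees
    latest: Optional[Reading] = None


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp guards against rounding pushing the argument slightly above 1
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


class IntegrationHub:
    """Hypothetical bridge between IoT streams, location queries, and the GVW."""

    def __init__(self) -> None:
        self.sensors: dict[str, Sensor] = {}

    def register(self, sensor: Sensor) -> None:
        self.sensors[sensor.sensor_id] = sensor

    def ingest(self, reading: Reading) -> bool:
        """Apply a streamed reading; ignore unknown sensors and stale or duplicate data."""
        sensor = self.sensors.get(reading.sensor_id)
        if sensor is None:
            return False
        if sensor.latest is not None and reading.timestamp <= sensor.latest.timestamp:
            return False
        sensor.latest = reading
        return True

    def nearby(self, lat: float, lon: float, radius_m: float) -> list[str]:
        """Location-based query: ids of sensors within radius_m, nearest first."""
        hits = sorted(
            (haversine_m(lat, lon, s.lat, s.lon), s.sensor_id) for s in self.sensors.values()
        )
        return [sensor_id for dist, sensor_id in hits if dist <= radius_m]
```

Rejecting out-of-order readings keeps the virtual state from moving backwards when network delivery is unordered, which is one simple way to approach the real-time synchronisation property described for GVWs.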

| Approach (Year) | Type (Short Description) | Real-time Multi-User (*) | Persistence (*) | Geospatial Focus | License |
|---|---|---|---|---|---|
| Worlds (1995) [19] | Platform (early 3D VW) | ✔ | ✔ | None | Proprietary |
| ActiveWorlds (1997) [26] | Platform (user-built worlds) | ✔ | ✔ | None | Proprietary |
| Second Life (2003) [15] | Platform (proprietary large-scale VW) | ✔ | ✔ | None | Proprietary |
| OpenSimulator (2007) [16] | Platform (open-source VW engine) | ✔ | ✔ | None | Open (BSD-like) |
| Solipsis (2009) [20] | Platform (decentralised VW) | ✔ | ✔ | None | Open-source |
| Hypergrid (2009) [23] | Protocol (connects multiple OpenSim grids) | ✔ | ✔ | None | Open-source |
| Virtual World Architectures (2011) [28] | Conceptual (survey/vision) | - | - | Varies | - |
| Open Wonderland (2011) [21] | Platform (3D VW) | ✔ | ✔ | Low | Open-source (GPL) |
| RealXtend (2011) [22] | Platform (virtual world) | ✔ | ✔ | Low | Open-source |
| VRChat (2014) [24] | Platform (social virtual reality) | ✔ | ✔ | None | Proprietary |
| Mirror World (2015) [29] | Conceptual framework (3-layer stack) | - | - | Medium | - |
| AltspaceVR (2015) [25] | Platform (social virtual reality) | ✔ | ✔ | None | Proprietary (Microsoft) |
| Mergin’Mode (2020) [33] | Platform (monument demonstration) | - | - | High | Open-source |
| Duan’s 3-Layer (2021) [36] | Conceptual (infrastructure, interaction, ecosystem) | - | - | Medium | - |
| Lee et al. (2021) [61] | Conceptual (digital twins-native continuum) | - | - | High | - |
| George et al. (2021) [34] | Conceptual (Metaverse spatial computing) | - | - | Medium | - |
| Kristian (2022) [38] | Conceptual (applies Radoff’s 7 layers to business) | - | - | Varies | - |
| Radoff’s 7 Layers (2022) [37] | Conceptual (experience, discovery, creator economy, spatial computing, decentralisation, human interface, and infrastructure) | - | - | Medium | - |
| metaOmnicity (2023) [39] | Conceptual (urban Metaverse architecture) | - | - | High | - |
| Jothi (2023), meta-stack [40] | Conceptual (four-level Metaverse stack) | - | - | Medium | - |
| Zhihan et al. (2023) [60] | Conceptual (digital cities in Metaverse) | - | - | High | - |
| Proposed GVW Model | System model (modular system architecture) | ✔ | ✔ | High | Open-source (MIT) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Papadopoulos, T.; Evangelidis, K.; Kaskalis, T.H.; Evangelidis, G. The Metaverse Is Geospatial: A System Model Architecture Integrating Spatial Computing, Digital Twins, and Virtual Worlds. ISPRS Int. J. Geo-Inf. 2025, 14, 126. https://doi.org/10.3390/ijgi14030126
