Using a Flexible Model to Compare the Efficacy of Geographical and Temporal Contextual Information of Location-Based Social Network Data for Location Prediction

Abstract

1. Introduction

1.1. Problem Statement

1.2. Main Contributions

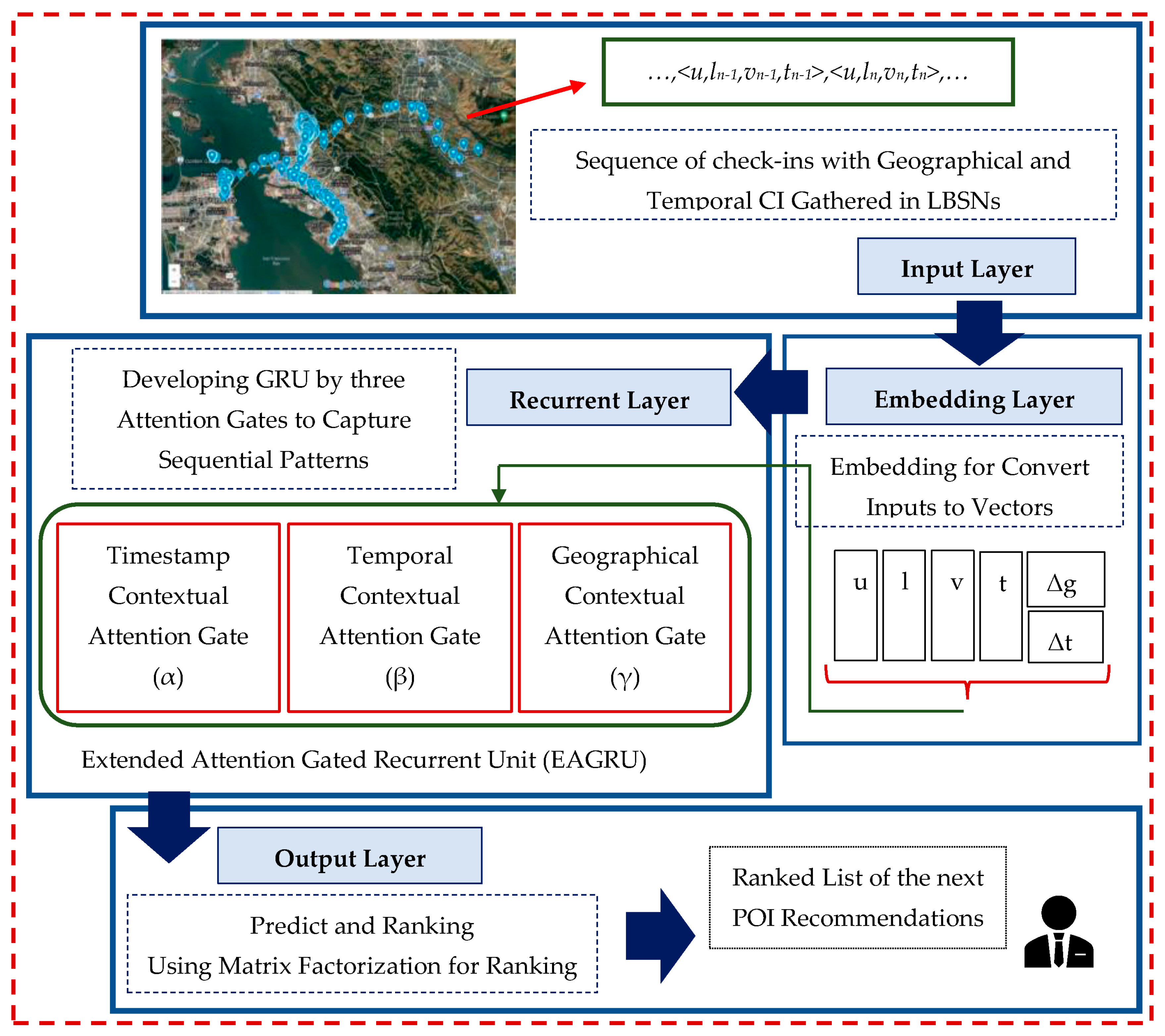

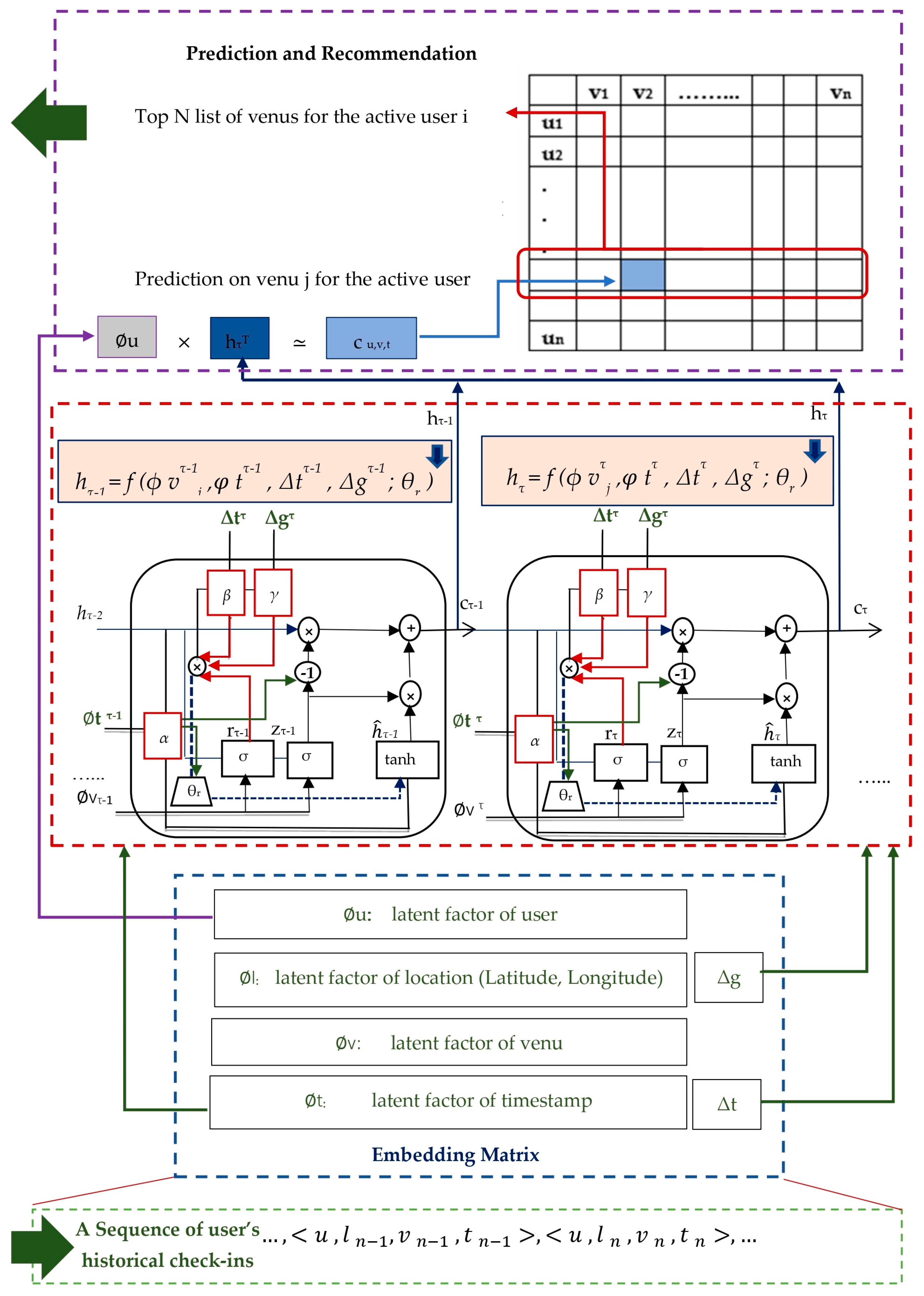

- The EAGRU approach combines the gated recurrent unit (GRU) model with the matrix factorization (MF) method in order to exploit the strengths of each while mitigating the weaknesses each has on its own. The recurrent layer of the proposed architecture extends the basic GRU model: taking advantage of the GRU's flexibility, three extra attention gates were added to it using an attention-based technique. These are the timestamp attention gate (α), the temporal contextual attention gate (β), and the geographical contextual attention gate (γ). Gate α controls the influence of the timestamps of previously visited locations, whereas β and γ control the effect of the hidden state of the previous recurrent unit based on the time interval and the geographical distance, respectively, between two successive check-ins. Because each type of contextual information (CI) is handled by its own gate, the model can be extended to further types of CI in the same way. Since these gates attend to geographical and temporal CI separately, the novelty of this research lies in defining them in the recurrent layer of the EAGRU model in order to compare their impact on predicting people's locations.

- We designed four experimental states to evaluate the effect of each attention gate added to the basic GRU model. Because these gates control the CI of the check-ins in users' trajectory data, the four states also measure how much each type of CI contributes to predicting people's locations. The experimental states are built on the EAGRU model, and their design is itself a contribution of this study: to the best of our knowledge, no previous research has evaluated the CI of trajectory data in the form presented here. This extension of the GRU model, together with the proposed experimental states, makes it possible for future research to evaluate the separate impact of additional types of CI in users' trajectory data.

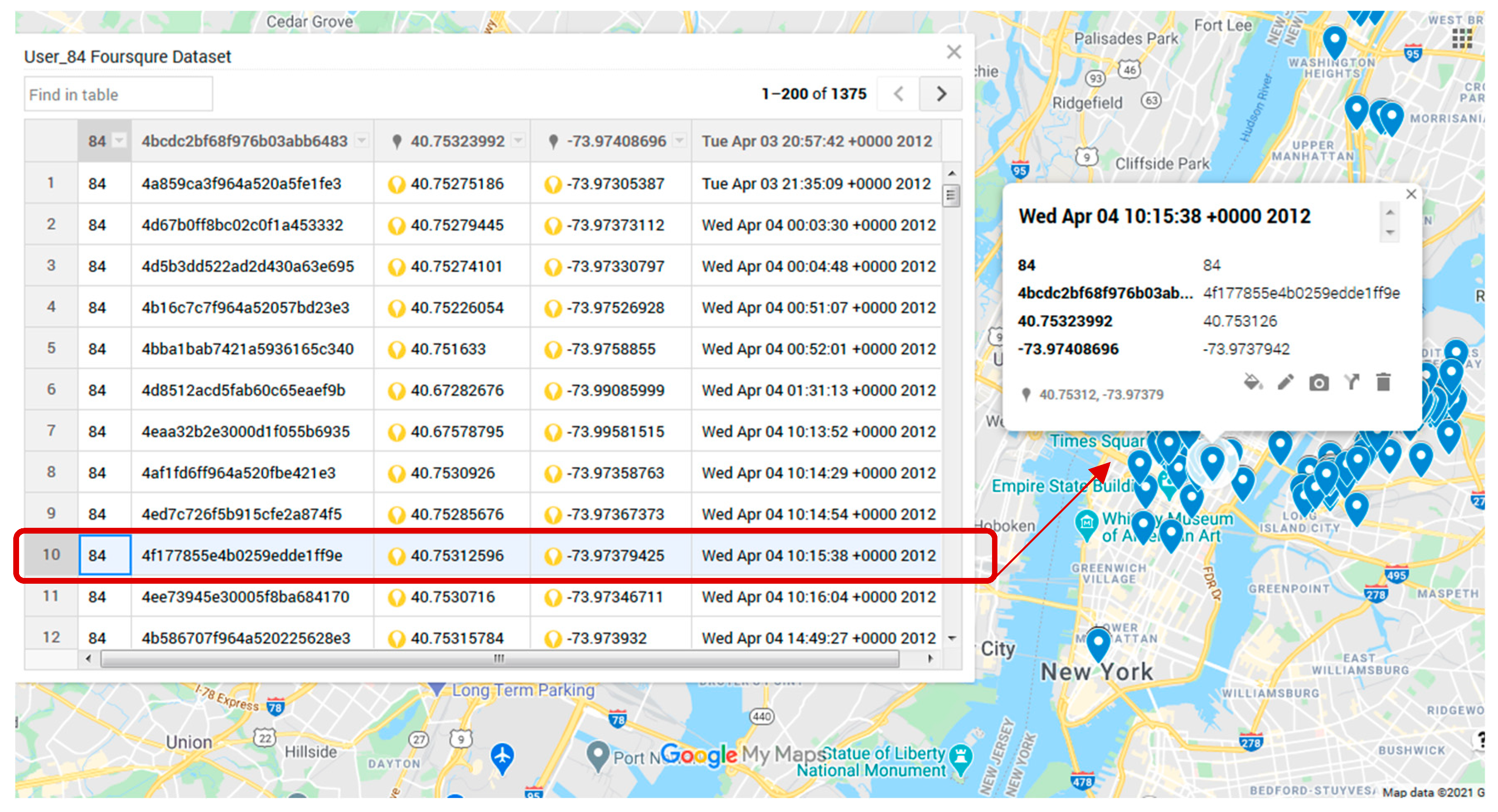

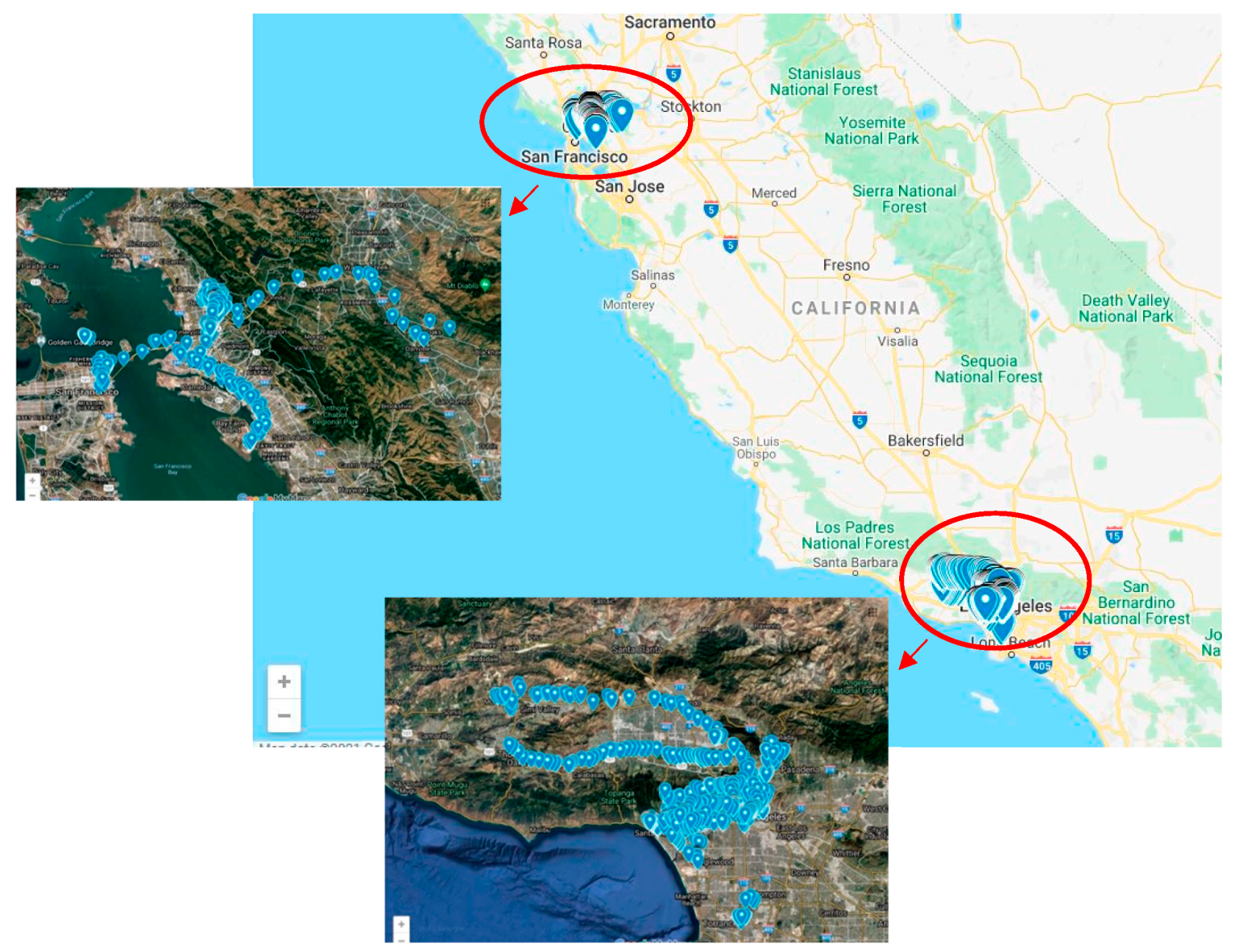

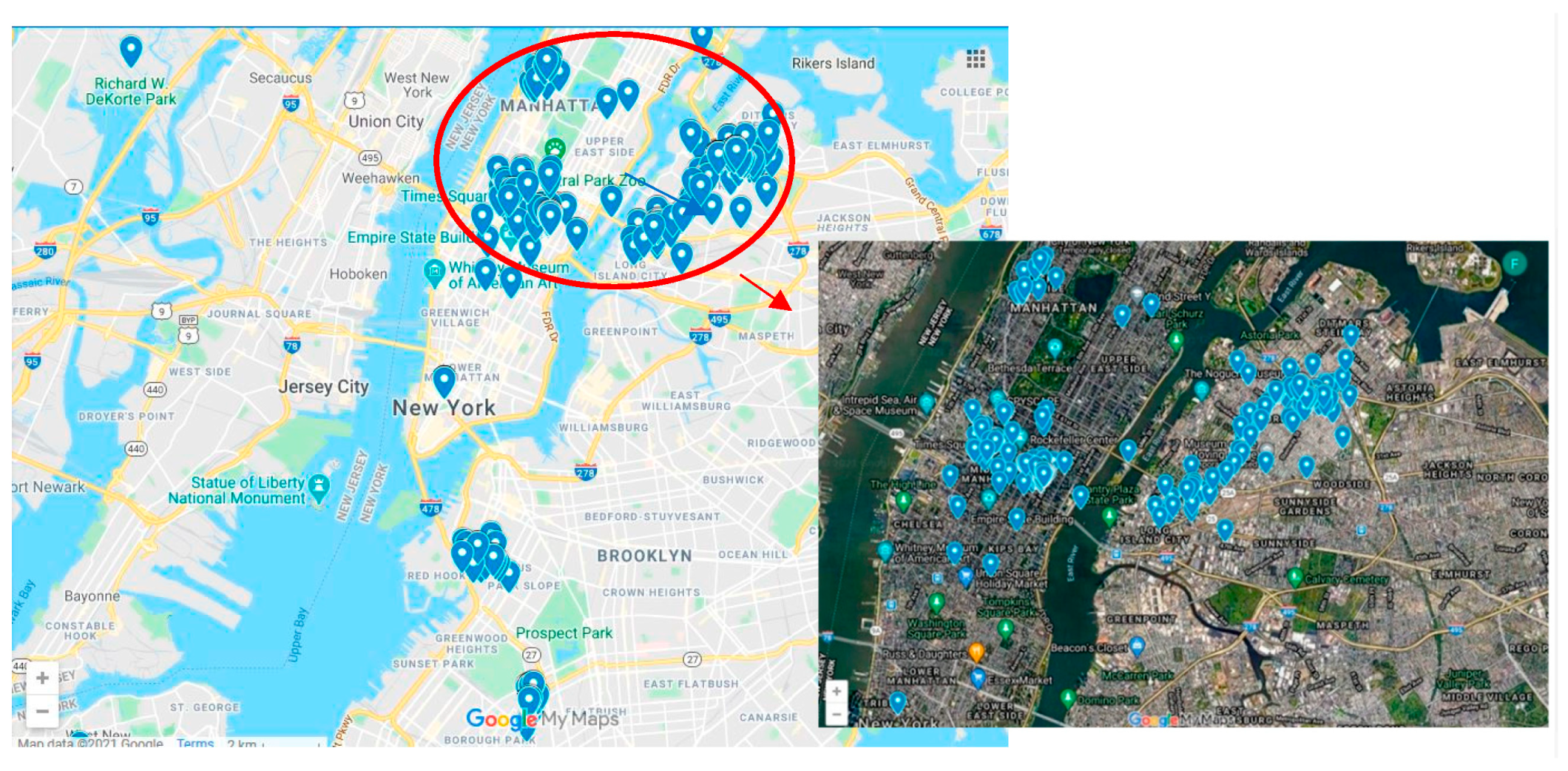

- Four comprehensive experiments were performed on two large-scale, real-world datasets, Gowalla [23] and Foursquare [24], which are widely used in related studies to predict users' POIs in LBSNs. The goal was to assess how effectively the geographical and temporal CI of check-in data can be used to predict the locations of LBSN users.

1.3. Organization

2. Review of the Related Works

3. Preliminaries

3.1. Definitions and Notations

3.2. The MF Method in the CF Approach

3.3. Recurrent Models in Deep Learning

4. Description of the Proposed Flexible Model

4.1. The EAGRU Model Layers

4.2. Developing the EAGRU Model

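$$\hat{h}_{\tau} = \tanh\left[ W\left( \phi_{v_{\tau}^{j}} + (\alpha \odot \phi_{t_{\tau}}) + (\beta \odot \Delta t_{\tau}) + \Delta g_{\tau} \right) + U\left( r_{\tau} \odot h_{\tau-1} \right) + b_{h} \right]$$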
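The candidate-state equation can be traced with a small, dependency-free sketch. It is an illustration under stated assumptions, not the authors' implementation: the gate vectors α, β, and r_τ are passed in precomputed (how EAGRU computes them is not reproduced here), Δt_τ and Δg_τ are taken to be vectors of the hidden size, and the final update h_τ = (1 − z_τ) ⊙ h_{τ−1} + z_τ ⊙ ĥ_τ is the common GRU convention, assumed rather than taken from the paper. As in the printed equation, Δg_τ enters without an explicit γ factor. All function names are hypothetical.

```python
import math

# Vectors are lists of floats; matrices are lists of rows (hypothetical layout).


def matvec(m, x):
    """Matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def add(*vecs):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vecs)]


def hadamard(a, b):
    """Element-wise (Hadamard) product, the ⊙ operator."""
    return [x * y for x, y in zip(a, b)]


def candidate_state(phi_v, phi_t, dt, dg, alpha, beta, r, h_prev, W, U, b_h):
    """ĥ_τ = tanh[W(φ_v + α⊙φ_t + β⊙Δt + Δg) + U(r⊙h_{τ-1}) + b_h]."""
    gated_input = add(phi_v, hadamard(alpha, phi_t), hadamard(beta, dt), dg)
    pre_act = add(matvec(W, gated_input), matvec(U, hadamard(r, h_prev)), b_h)
    return [math.tanh(x) for x in pre_act]


def gru_update(z, h_prev, h_cand):
    """Assumed GRU interpolation: h_τ = (1 - z)⊙h_{τ-1} + z⊙ĥ_τ."""
    return [(1 - zi) * hp + zi * hc for zi, hp, hc in zip(z, h_prev, h_cand)]


if __name__ == "__main__":
    I = [[1.0, 0.0], [0.0, 1.0]]
    Z = [[0.0, 0.0], [0.0, 0.0]]
    zero = [0.0, 0.0]
    ones = [1.0, 1.0]
    h_hat = candidate_state([0.5, -0.5], zero, zero, zero, ones, ones, ones, zero, I, Z, zero)
    print(h_hat, gru_update([0.5, 0.5], zero, h_hat))
```

Keeping the gates as inputs makes the ablation idea easy to see: removing a gate amounts to dropping its gated term from `gated_input`.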

4.3. Network Training

| Step | Algorithm 1: Training of EAGRU |
|---|---|
| Input | Set of users $U_s$ and set of historical check-in sequences $S_u$ |
| | // construct training instances |
| 1 | Initialize $D_u = \emptyset$, where $D_u$ is the set of check-in trajectory samples of user $u$ combined with negative POIs |
| 2 | For each user $u \in U_s$ do |
| 3 | For each check-in sequence $S_u = \{s_{t_1}^{u}, s_{t_2}^{u}, \ldots, s_{t_n}^{u}\}$ do |
| 4 | Get the set of negative samples |
| 5 | For each check-in activity in $S_u$ do |
| 6 | Compute the embedded vector $v_{\tau}^{u}$ |
| 7 | Compute the geographical context vector $g_{\tau}^{u}$ |
| 8 | Compute the temporal context vector $t_{\tau}^{u}$ |
| 9 | End for |
| 10 | Add the training instance ($\{v_{\tau}^{u}, g_{\tau}^{u}, t_{\tau}^{u}\}$, negative samples from step 4) to $D_u$ |
| 11 | End for |
| 12 | End for |
| | // train the model |
| 13 | Initialize the parameter set $\Theta$ |
| 14 | While the maximum number of iterations has not been reached do |
| 15 | For each user $u \in U_s$ do |
| 16 | Randomly select a batch of instances $D_u^{n}$ from $D_u$ |
| 17 | Find $\Theta$ minimizing the objective in Equation (29) on $D_u^{n}$ |
| 18 | End for |
| 19 | End while |
| 20 | Return the parameter set $\Theta$ |
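Steps 1 to 12 of Algorithm 1 can be sketched as follows. This is a hypothetical helper, not the authors' code, and several choices are assumptions: Δg is measured with the haversine great-circle distance, Δt is a time difference in seconds, and negatives are drawn uniformly from POIs not visited in the sequence. It keeps Δt and Δg as raw scalars rather than the vector representations t_τ^u and g_τ^u, and leaves POI embedding and training (steps 13 to 20) out.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class CheckIn:
    """One check-in: POI id, POI coordinates in degrees, timestamp in seconds."""
    poi: int
    lat: float
    lng: float
    ts: float


EARTH_RADIUS_KM = 6371.0


def haversine_km(a: CheckIn, b: CheckIn) -> float:
    """Great-circle distance in km between two check-ins (assumed Δg measure)."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lng - a.lng)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))


def build_instances(sequences_by_user, all_pois, n_neg=5, seed=0):
    """Hypothetical steps 1-12: {user: [((pois, dgs, dts), negatives), ...]}."""
    rng = random.Random(seed)
    instances = {}
    for user, sequences in sequences_by_user.items():
        d_u = []  # step 1: D_u starts empty
        for seq in sequences:
            # step 4: negatives are POIs not visited in this sequence (assumption)
            visited = {c.poi for c in seq}
            candidates = sorted(set(all_pois) - visited)
            negatives = rng.sample(candidates, min(n_neg, len(candidates)))
            pois, dgs, dts = [], [], []
            # steps 5-9: walk successive pairs; the first check-in has no predecessor
            for prev, cur in zip([None] + seq[:-1], seq):
                pois.append(cur.poi)
                dgs.append(0.0 if prev is None else haversine_km(prev, cur))
                dts.append(0.0 if prev is None else cur.ts - prev.ts)
            d_u.append(((pois, dgs, dts), negatives))  # step 10
        instances[user] = d_u
    return instances


if __name__ == "__main__":
    a = CheckIn(1, 0.0, 0.0, 0.0)
    b = CheckIn(2, 0.0, 1.0, 3600.0)
    print(build_instances({"u1": [[a, b]]}, [1, 2, 3, 4], n_neg=2))
```

Seeding the sampler keeps the negative samples reproducible across runs, which helps when comparing experimental states on identical training instances.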

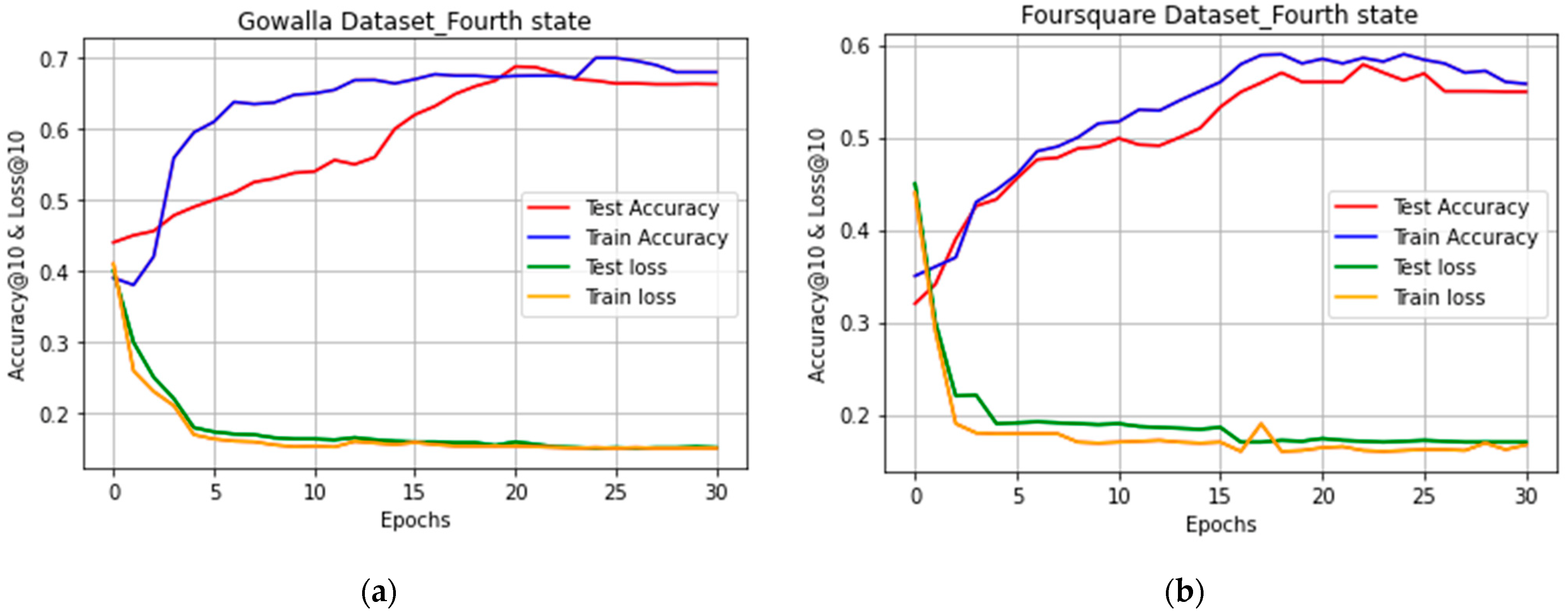

5. Experimental Results

- RQ1: How can the EAGRU architecture be developed to compare the efficacies of geographical and temporal CI associated with the sequence of check-ins for location prediction?

- RQ2: Which CI, geographical distance or time interval, has the greater effect on location prediction accuracy?

- RQ3: Does EAGRU, which leverages multiple types of CI, improve prediction by applying additional attention gates, and does it outperform previous methods?

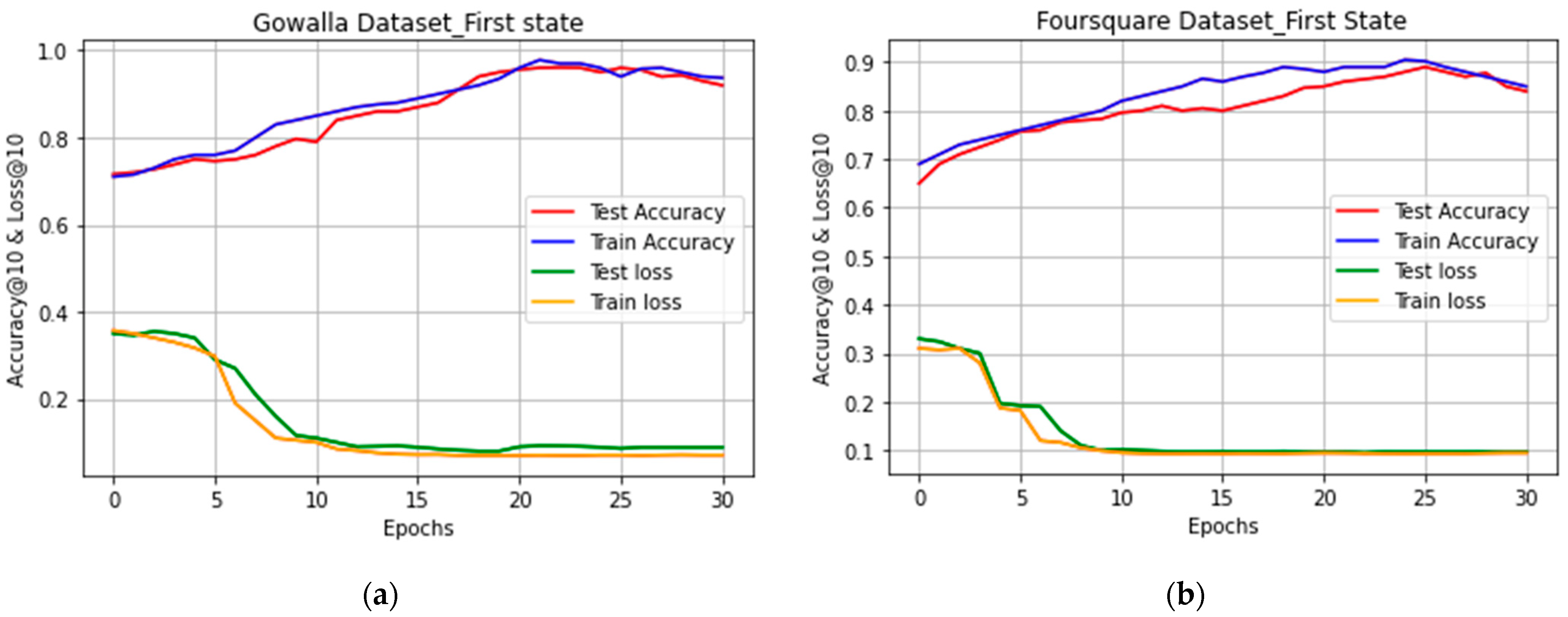

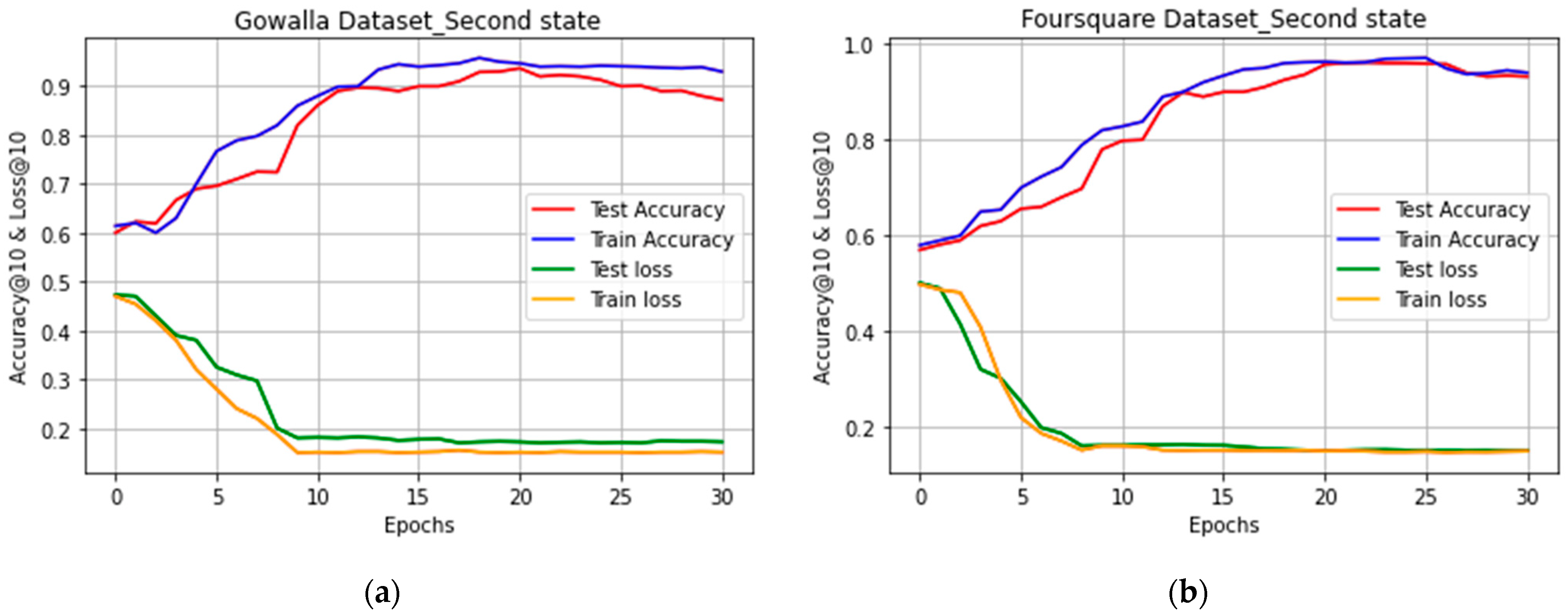

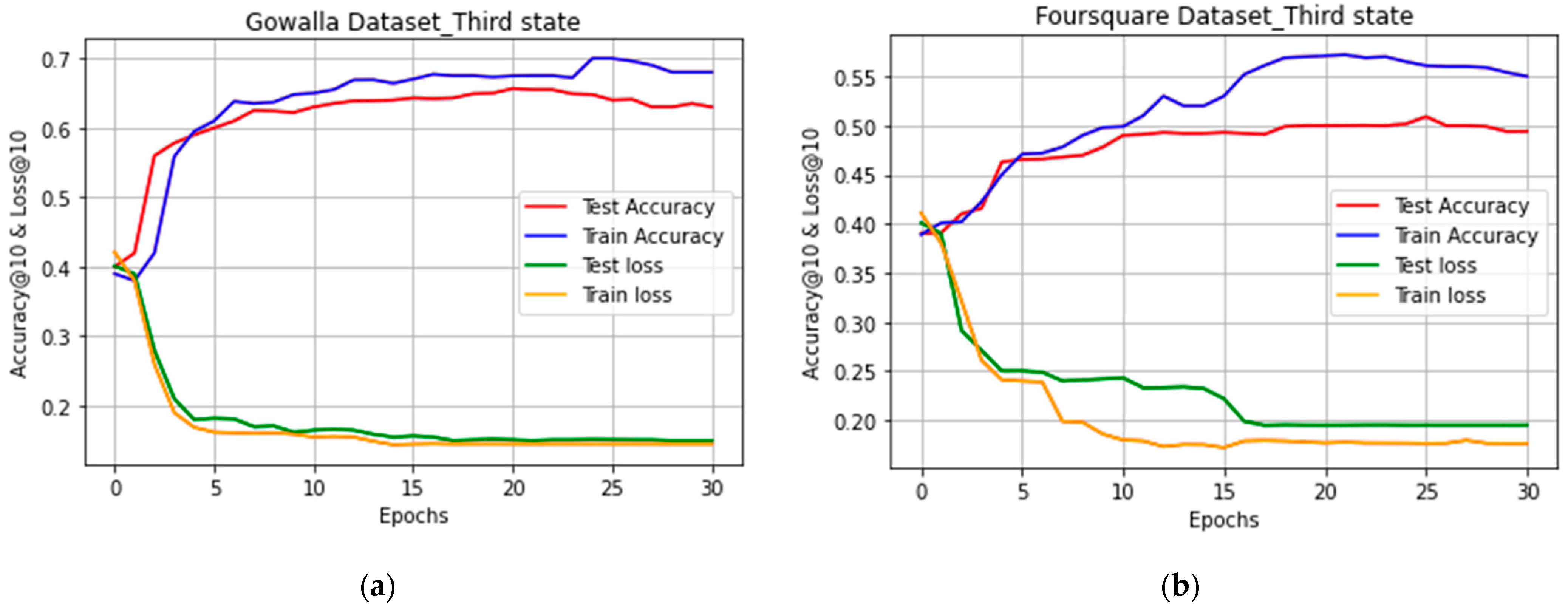

5.1. Incorporation of the Four Experiment States

5.2. Other Methods in Comparison

5.3. Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fan, X.; Guo, L.; Han, N.; Wang, Y.; Shi, J.; Yuan, Y. A Deep Learning Approach for Next Location Prediction. In Proceedings of the 22nd IEEE International Conference on Computer Supported Cooperative Work in Design (CSCWD), Nanjing, China, 9–11 May 2018.

2. Baral, R.; Li, T.; Zhu, X. CAPS: Context Aware Personalized POI Sequence Recommender System. arXiv 2018, arXiv:1803.01245.

3. Liu, C.; Liu, J.; Wang, J.; Xu, S.; Han, H.; Chen, Y. An Attention-Based Spatiotemporal Gated Recurrent Unit Network for Point-of-Interest Recommendation. ISPRS Int. J. Geo-Inf. 2019, 8, 355.

4. Huang, L.; Ma, Y.; Wang, S.; Liu, Y. An Attention-based Spatiotemporal LSTM Network for Next POI Recommendation. IEEE Trans. Serv. Comput. 2019, 14, 1585–1597.

5. Christoforidis, C.; Kefalas, P.; Papadopoulos, A.N.; Manolopoulos, Y. RELINE: Point-of-Interest Recommendations using Multiple Network Embeddings. J. Knowl. Inf. Syst. 2019, 63, 791–817.

6. Yuan, Q.; Cong, G.; Ma, Z.; Sun, A.; Magnenat-Thalmann, N. Time-aware Point-of-interest Recommendation. In Proceedings of the 36th ACM SIGIR Conference on Research and Development in Information Retrieval, Dublin, Ireland, 28 July–1 August 2013.

7. Luan, W.; Liu, G.; Jiang, C.; Qi, L. Partition-based collaborative tensor factorization for POI recommendation. IEEE/CAA J. Autom. Sin. 2017, 4, 437–446.

8. Maroulis, S.; Boutsis, I.; Kalogeraki, V. Context-aware Point-of-Interest Recommendation Using Tensor Factorization. In Proceedings of the IEEE International Conference on Big Data, Washington, DC, USA, 5–8 December 2016.

9. Gao, Q.; Zhou, F.; Trajcevski, G.; Zhang, K.; Zhong, T.; Zhang, F. Predicting Human Mobility via Variational Attention. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019.

10. Huang, L.; Ma, Y.; Liu, Y.; He, K. DAN-SNR: A Deep Attentive Network for Social-Aware Next Point-of-Interest Recommendation. ACM Trans. Internet Technol. 2020, 21, 1–27.

11. Manotumruksa, J.; Macdonald, C.; Ounis, I. A Contextual Attention Recurrent Architecture for Context-Aware Venue Recommendation. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018.

12. Yao, D.; Zhang, C.; Huang, J.; Bi, J. SERM: A Recurrent Model for Next Location Prediction in Semantic Trajectories. In Proceedings of the ACM Conference on Information and Knowledge Management, Singapore, 6–10 November 2017.

13. Li, J.; Liu, G.; Yan, C.; Jiang, C. LORI: A Learning-to-Rank-Based Integration Method of Location Recommendation. IEEE Trans. Comput. Soc. Syst. 2019, 6, 430–440.

14. Liu, Q.; Wu, S.; Wang, L.; Tan, T. Predicting the Next Location: A Recurrent Model with Geographical and Temporal Contexts. In Proceedings of the Conference on Artificial Intelligence (AAAI), Phoenix, AZ, USA, 12–17 February 2016.

15. Zhao, P.; Luo, A.; Liu, Y.; Xu, J.; Li, Z.; Zhuang, F.; Sheng, V.S.; Zhou, X. Where to Go Next: A Spatio-Temporal Gated Network for Next POI Recommendation. IEEE Trans. Knowl. Data Eng. 2020, 34, 2512–2524.

16. Wang, P.; Wang, H.; Zhang, H.; Lu, F.; Wu, S. A Hybrid Markov and LSTM Model for Indoor Location Prediction. IEEE Access 2019, 7, 185928–185940.

17. Zhang, Z.; Li, C.; Wu, Z.; Sun, A.; Ye, D.; Luo, X. NEXT: A Neural Network Framework for Next POI Recommendation. Front. Comput. Sci. 2017, 14, 314–333.

18. Liu, Q.; Wu, S.; Wang, D.; Li, Z.; Wang, L. Context-aware Sequential Recommendation. In Proceedings of the IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016.

19. Feng, J.; Li, Y.; Zhang, C.; Sun, F.; Meng, F.; Guo, A.; Jin, D. DeepMove: Predicting Human Mobility with Attention Recurrent Networks. In Proceedings of the International World Wide Web Conference Committee-IW3C2, Lyon, France, 23–27 April 2018.

20. Sun, K.; Qian, T.; Chen, T.; Liang, Y.; Nguyen, Q.V.H.; Yin, H. Where to Go Next: Modeling Long- and Short-Term User Preferences for Point-of-Interest Recommendation. In Proceedings of the Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020.

21. Liao, J.; Liu, T.; Liu, M.; Wang, J.; Wang, Y.; Sun, H. Multi-Context Integrated Deep Neural Network Model for Next Location Prediction. IEEE Access 2018, 6, 21980–21990.

22. Kala, K.U.; Nandhini, M. Context Category Specific sequence aware Point of Interest Recommender System with Multi Gated Recurrent Unit. J. Ambient Intell. Humaniz. Comput. 2019.

23. Cho, E.; Myers, S.A.; Leskovec, J. Friendship and mobility: User movement in location-based social networks. In Proceedings of the 17th ACM International Conference on Knowledge Discovery and Data Mining (SIGKDD), San Diego, CA, USA, 21–24 August 2011.

24. Yang, D.; Zhang, D.; Zheng, V.W.; Yu, Z. Modeling User Activity Preference by Leveraging User Geographical Temporal Characteristics in LBSNs. IEEE Trans. Syst. Man Cybern. Syst. 2014, 45, 129–142.

25. Zhao, S.; Zhao, T.; King, I.; Lyu, M.R. Geo-Teaser: Geo-Temporal Sequential Embedding Rank for Point-of-interest Recommendation. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017.

26. Dai, G.; Ma, C.; Xu, X. Short-term traffic flow prediction method for urban road sections based on space–time analysis and GRU. IEEE Access 2019, 7, 143025–143035.

27. Doan, K.D.; Yang, G.; Reddy, C.K. An Attentive Spatio-Temporal Neural Model for Successive Point of Interest. In Proceedings of the Springer Pacific-Asia Conference on Knowledge Discovery and Data Mining, Macau, China, 14–17 April 2019; pp. 346–358.

28. Liu, Y.; Song, Z.; Xu, X.; Rafique, W.; Zhang, X.; Shen, J.; Khosravi, M.; Qi, L. Bidirectional GRU networks-based next POI category prediction for healthcare. Int. J. Intell. Syst. 2020, 37, 4020–4040.

29. Gui, Z.; Sun, Y.; Yang, L.; Peng, D.; Li, F.; Wu, H.; Guo, C.; Guo, W.; Gong, J. LSI-LSTM: An attention-aware LSTM for real-time driving destination prediction by considering location semantics and location importance of trajectory points. Neurocomputing 2021, 440, 72–88.

30. Wang, X.; Liu, X.; Li, L.; Chen, X.; Liu, J.; Wu, H. Time-aware user modeling with check-in time prediction for next POI recommendation. In Proceedings of the IEEE International Conference on Web Services (ICWS), Chicago, IL, USA, 5–10 September 2021; pp. 125–134.

31. Liu, Y.; Pei, A.; Wang, F.; Yang, Y.; Zhang, X.; Wang, H.; Dai, H.; Qi, L.; Ma, R. An attention-based category-aware GRU model for the next POI recommendation. Int. J. Intell. Syst. 2021, 36, 3174–3189.

32. Li, F.; Gui, Z.; Zhang, Z.; Peng, D.; Tian, S.; Yuan, K.; Sun, Y.; Wu, H.; Gong, J.; Lei, Y. A hierarchical temporal attention-based LSTM encoder-decoder model for individual mobility prediction. Neurocomputing 2020, 403, 153–166.

33. Chen, Y.; Thaipisutikul, T.; Shih, T. A learning-based POI recommendation with spatiotemporal context awareness. IEEE Trans. Cybern. 2020, 52, 2453–2466.

34. Bokde, D.; Girase, S.; Mukhopadhyay, D. Role of Matrix Factorization Model in Collaborative Filtering Algorithm: A Survey. Int. J. Adv. Found. Res. Comput. 2014, 1, 111–118.

35. Gan, M.; Gao, L. Discovering Memory-Based Preferences for POI Recommendation in Location-Based Social Networks. ISPRS Int. J. Geo-Inf. 2019, 8, 279.

36. Bokde, D.; Girase, S.; Mukhopadhyay, D. Matrix Factorization Model in Collaborative Filtering Algorithms: A Survey. Procedia Comput. Sci. 2015, 49, 136–146.

37. Manotumruksa, J.; Macdonald, C.; Ounis, I. A Deep Recurrent Collaborative Filtering Framework for Venue Recommendation. In Proceedings of the ACM Conference on Information and Knowledge Management, Singapore, 6–10 November 2017.

38. Islam, M.A.; Mohammad, M.M.; Sarathi Das, S.S.; Eunus Ali, M. A Survey on Deep Learning Based Point-Of-Interest (POI) Recommendations. arXiv 2020, arXiv:2011.10187.

39. Semwal, V.B.; Gupta, A.; Lalwani, P. An optimized hybrid deep learning model using ensemble learning approach for human walking activities recognition. J. Supercomput. 2021, 77, 12256–12279.

40. Rendle, S.; Freudenthaler, C.; Gantner, Z.; Schmidt-Thieme, L. BPR: Bayesian Personalized Ranking from Implicit Feedback. In Proceedings of the 25th Conference on Uncertainty in Artificial Intelligence, Montreal, QC, Canada, 18–21 June 2009.

41. Yang, K.; Zhu, J. Next POI Recommendation via Graph Embedding Representation From H-Deepwalk on Hybrid Network. IEEE Access 2019, 7, 171105–171113.

42. Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

| Approach | Model | Method Summary | Challenges |
|---|---|---|---|
| CF-based | UM * [6] | Assuming that time plays an important role in POI recommendation and developing time-aware, CF-based POI recommendations for a given user at a specified time of day. | ChC1, ChC3, ChC5, ChC6, ChC7, and ChC8 |
| | PCTF [7] | Developing a generalization model of collaborative tensor factorization. To realize POI recommendation, all users' check-in behaviors were modeled as a three-mode "user-POI-time" tensor, and three feature matrices were constructed from different perspectives. Users' POI preferences were recovered using the partition-based collaborative tensor factorization method. | ChC1, ChC6, ChC7, ChC8, and low model efficiency |
| | CoTF [8] | Proposing a two-part method: an initialization phase that extracts the CI from check-ins and initializes a tensor structure, and a tensor factorization phase in which a stochastic gradient descent algorithm computes the latent factors of users, POIs, and context; finally, a tensor containing the recommendations for each user is reconstructed. | ChC1, ChC6, ChC7 |
| | GT-SEER [25] | Proposing a temporal POI embedding model that captures the sequential contexts of check-ins and their temporal characteristics on different days, and developing a geographically hierarchical pairwise ranking model that improves recommendation performance by incorporating geographical influence. | ChC1, ChC2, and weakness in handling sequences of consecutive check-ins whose interval is under a fixed time threshold |
| DL-based (RNN) | LORI [13] | Applying a confidence coefficient for each user in the integration process and designing a learning-to-rank-based algorithm to train the confidence coefficients. | ChD2, ChD3, ChD4, ChD7 |
| | ST-RNN [14] | Extending the RNN and using a transition matrix to capture the temporal cyclical effect and geographical influence. | ChD1, ChD3, ChD5 |
| | STGN [15] | Developing a spatio-temporal gated network by enhancing long short-term memory to model users' sequential visiting behaviors. Two time gates and two distance gates were designed to exploit time and distance intervals and to memorize them for modeling long-term interest. | ChD3, ChD5 |
| | SERM [12] | Jointly learning the embeddings of multiple factors (user, location, time, and keywords) and the transition parameters of an RNN in a unified framework. | ChD2, ChD3, ChD5, ChD9 |
| | CA-RNN [18] | Employing adaptive context-specific input matrices and adaptive context-specific transition matrices. | ChD1, ChD8, ChD9, and poor performance |
| | LSTPM [20] | Modeling long-term preferences with a non-local network and short-term preferences with a geo-dilated LSTM. | ChD3, ChD4, ChD9 |
| | MCI-DNN [21] | Integrating sequence context, input contexts, and user preferences into a cohesive framework, and jointly modeling the sequence context and the interaction of different kinds of input contexts by extending the recurrent neural network to capture the semantic pattern of user behaviors from check-in records. | ChD1, ChD2, ChD9, and poor performance |
| | STFSA [26] | Proposing a short-term traffic flow prediction model that combines spatio-temporal analysis with the GRU model. Temporal and spatial correlation analyses were performed on the collected traffic flow data, and a spatio-temporal feature selection algorithm was used to determine the optimal input time interval and spatial data volume. The GRU then processed the spatio-temporal feature information of the internal traffic flow in the matrix to make predictions. | ChD3, ChD9, less attention to the geographical distance of traffic flow data, and little flexibility to use other CIs in the model |
| DL-based (AM and RNN) | ATST-LSTM [4] | Using spatio-temporal CI and developing an attention-based spatio-temporal LSTM network to selectively focus on the relevant historical check-in records in a check-in sequence. | ChD7, ChD9, and high implementation complexity |
| | DeepMove [19] | Capturing complex dependencies and the multi-level periodicity of human mobility using embedding, GRU, and AM. | ChD2, ChD8, ChD9, and limited consideration of the time interval between two check-ins when modeling users' check-in behavior patterns |
| | DAN-SNR [10] | Using a self-attention mechanism instead of a recurrent architecture to model sequential influence and social influence in a unified manner. | ChD9, weakness in modeling sequential user data because no recurrent model is used, and low efficiency |
| | ASTEM [27] | Proposing a deep LSTM model with an attention mechanism for successive POI recommendation that captures both sequential and temporal/spatial characteristics in its learned representations. | ChD8, ChD9, and a larger number of model parameters due to the use of the LSTM model |
| | CARA [11] | Capturing the impact of geographical and temporal CI using the GRU model. An attention gate was defined for the timestamp to control the latent factor of time at each step; it captures the correlation between the latent factor $\phi_{t_\tau}$ at the current step $\tau$ and the hidden state $h_{\tau-1}$ of the previous step. | ChD9 |
| | ABG_poic [28] | Regarding the user's POI category as the user's interest preference. A bidirectional GRU captures the dynamic dependence of users' check-ins and is combined with an attention mechanism to selectively focus on historical check-in records, which improves the interpretability of the model. | ChD9, a stronger focus on the effect of check-in time, and weakness in attending to the CI of the geographical distance between consecutive check-ins |
| | LSI-LSTM [29] | Proposing a real-time individual driving destination prediction model based on an attention-aware LSTM that takes the location semantics and location importance of trajectory points into account. A trajectory location semantics extraction method enriches feature descriptions with prior knowledge for implicit travel intention learning. | ChD2, ChD8, ChD9, and possibly reduced efficiency due to the complex rules governing the sequence of people's mobility habits |
| | Time-aware [30] | Developing a POI recommendation method that includes a cross-graph neural network component, a multi-perspective self-attention component that captures users' comprehensive preferences, and a multi-task learning component. | ChD2, more attention to the time characteristic of check-ins, and possible weakness in modeling users' periodic behavior in long sequences |
| | ATCA-GRU [31] | Developing an attention-based category-aware GRU model that alleviates the sparsity of users' check-ins and captures short-term and long-term dependencies between check-ins. It predicts the probability of the user's next check-in category and recommends the top-K categories accordingly. | ChD2, ChD8, ChD9 |
| | HTA-LSTM [32] | Proposing a hierarchical temporal attention-based LSTM encoder–decoder model for individual location sequence prediction. The hierarchical temporal attention networks consist of location temporal attention and global temporal attention, which capture travel regularities with daily and weekly long-term dependencies, respectively. | ChD8, ChD9, and possibly reduced efficiency due to the complex rules of people's mobility habits in long sequences |
| | DeNavi [33] | Proposing a POI recommendation system of deep navigators to predict the next move. By including the time and distance intervals between POI check-ins in the memory unit, three learning models (DeNavi-LSTM, DeNavi-GRU, and DeNavi-Alpha) were developed to improve the performance of standard recurrent networks. | ChD2, ChD9, and little attention to the CI of the geographical distance between consecutive check-ins |

| Notation | Description |
|---|---|
| $u, l, v, t$ | user, location (longitude and latitude), venue or POI, timestamp |
| $\mathrm{lat}_v, \mathrm{lng}_v$ | latitude and longitude (geographical coordinates) of POI $v$ |
| $c_{u,v,t}$ | check-in recorded by user $u$ at POI $v$ at timestamp $t$ |
| $\Delta g, \Delta t$ | geographical distance and time interval between two successive check-ins |
| $S_u$ | set of all check-ins generated by user $u$ |
| $U_s, V, T$ | sets of users, POIs, and timestamps |
| $v_{\tau}^{u}$ | POI visited by user $u$ at timestamp $\tau$ |
| $t_{\tau}^{u}, g_{\tau}^{u}$ | vector representations of the time interval and the geographical distance |
| $tr_u$ | sequence of chronologically ordered check-ins of user $u$ |
| $\phi_u, \phi_v, \phi_t$ | latent factors of user $u$, POI $v$, and timestamp $t$ |
| $h, \hat{h}$ | hidden and candidate states of the EAGRU |
| $z_{\tau}, r_{\tau}$ | update and reset gates of the GRU |
| $\sigma$ | sigmoid function |

| Dataset | #Users | #Check-Ins | #POIs | Density |
|---|---|---|---|---|
| Gowalla | 1047 | 614,340 | 5011 | 0.1170 |
| Foursquare | 615 | 108,195 | 19,245 | 0.0091 |
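The Density column appears consistent with the fraction of the user-POI matrix covered by check-ins, #Check-Ins / (#Users × #POIs). That definition is an assumption inferred from the numbers rather than stated here; the sketch below applies it to the table's counts and agrees with the reported values to within 0.0001.

```python
# Dataset statistics copied from the table; density definition is assumed.
DATASETS = {
    "Gowalla": {"users": 1047, "checkins": 614_340, "pois": 5011},
    "Foursquare": {"users": 615, "checkins": 108_195, "pois": 19_245},
}


def density(users: int, checkins: int, pois: int) -> float:
    """Check-ins per cell of the users x POIs matrix (assumed definition)."""
    return checkins / (users * pois)


if __name__ == "__main__":
    for name, stats in DATASETS.items():
        print(f"{name}: {density(**stats):.4f}")
```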

| State | k | Recall@k (Gowalla) | Recall@k (Foursquare) |
|---|---|---|---|
| First | 5 | 0.7945 | 0.7033 |
| | 10 | 0.9063 | 0.8814 |
| Second | 5 | 0.7854 | 0.8312 |
| | 10 | 0.8940 | 0.9301 |
| Third | 5 | 0.4072 | 0.4178 |
| | 10 | 0.4275 | 0.4383 |
| Fourth | 5 | 0.5098 | 0.4987 |
| | 10 | 0.5451 | 0.5236 |
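Recall@k is not defined in this section. A common definition in next-location prediction, assumed here, is the share of test cases whose ground-truth next POI appears among the top-k ranked candidates. The sketch implements that definition; the function name and input layout are hypothetical.

```python
from typing import Hashable, Sequence


def recall_at_k(ranked_lists: Sequence[Sequence[Hashable]],
                ground_truth: Sequence[Hashable], k: int) -> float:
    """Fraction of test cases whose true next POI is in the top-k ranked POIs."""
    if len(ranked_lists) != len(ground_truth):
        raise ValueError("one ranked list per ground-truth POI is required")
    if not ground_truth:
        return 0.0
    hits = sum(1 for ranked, true_poi in zip(ranked_lists, ground_truth)
               if true_poi in ranked[:k])
    return hits / len(ground_truth)


if __name__ == "__main__":
    ranked = [[3, 1, 2], [5, 4, 6], [7, 8, 9]]
    truth = [1, 6, 0]
    for k in (1, 2, 3):
        print(k, recall_at_k(ranked, truth, k))
```

Because a larger k can only add hits, Recall@10 is never lower than Recall@5 for the same predictions, which matches every row of the table.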

Percentage of change in Recall@k relative to the first state

| State | Removed attention gate | k | Gowalla (%) | Foursquare (%) |
|---|---|---|---|---|
| Second | α | 5 | −1.15 | +18.19 |
| | | 10 | −1.36 | +5.53 |
| Third | γ | 5 | −48.75 | −40.59 |
| | | 10 | −52.83 | −50.27 |
| Fourth | β | 5 | −35.83 | −29.09 |
| | | 10 | −39.85 | −40.59 |
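The percentages match the relative change of each ablated state's Recall@k with respect to the first state, (Recall_state − Recall_first) / Recall_first × 100. The short sketch below recomputes them from the recall values; the dictionary layout and function name are illustrative only.

```python
# Recall@k per experimental state, from the recall table: {k: (Gowalla, Foursquare)}.
RECALL = {
    "First": {5: (0.7945, 0.7033), 10: (0.9063, 0.8814)},
    "Second": {5: (0.7854, 0.8312), 10: (0.8940, 0.9301)},
    "Third": {5: (0.4072, 0.4178), 10: (0.4275, 0.4383)},
    "Fourth": {5: (0.5098, 0.4987), 10: (0.5451, 0.5236)},
}
# Attention gate removed in each ablated state.
REMOVED_GATE = {"Second": "alpha", "Third": "gamma", "Fourth": "beta"}


def pct_change(baseline: float, value: float) -> float:
    """Relative change of `value` with respect to `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


if __name__ == "__main__":
    for state, gate in REMOVED_GATE.items():
        for k in (5, 10):
            base_gow, base_fsq = RECALL["First"][k]
            gow, fsq = RECALL[state][k]
            print(f"{state} (without {gate}), k={k}: "
                  f"Gowalla {pct_change(base_gow, gow):+.2f}%, "
                  f"Foursquare {pct_change(base_fsq, fsq):+.2f}%")
```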

| Method | Recall@5 (Gowalla) | Recall@10 (Gowalla) | Recall@5 (Foursquare) | Recall@10 (Foursquare) |
|---|---|---|---|---|
| GT-SEER | 0.0650 | 0.1200 | 0.1401 | 0.2001 |
| LSTPM | 0.2021 | 0.2510 | 0.3372 | 0.4091 |
| ASTEM | 0.1440 | 0.2660 | 0.3280 | 0.4140 |
| MCI-DNN | 0.3665 | 0.4396 | 0.2918 | 0.4006 |
| CARA | - | 0.7385 | - | 0.8851 |
| EAGRU | 0.7945 | 0.9063 | 0.7033 | 0.8814 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ghanaati, F.; Ekbatanifard, G.; Khoshhal Roudposhti, K. Using a Flexible Model to Compare the Efficacy of Geographical and Temporal Contextual Information of Location-Based Social Network Data for Location Prediction. ISPRS Int. J. Geo-Inf. 2023, 12, 137. https://doi.org/10.3390/ijgi12040137
