Deep Learning Semantic Segmentation for Land Use and Land Cover Types Using Landsat 8 Imagery

Abstract

1. Introduction

2. Materials and Methods

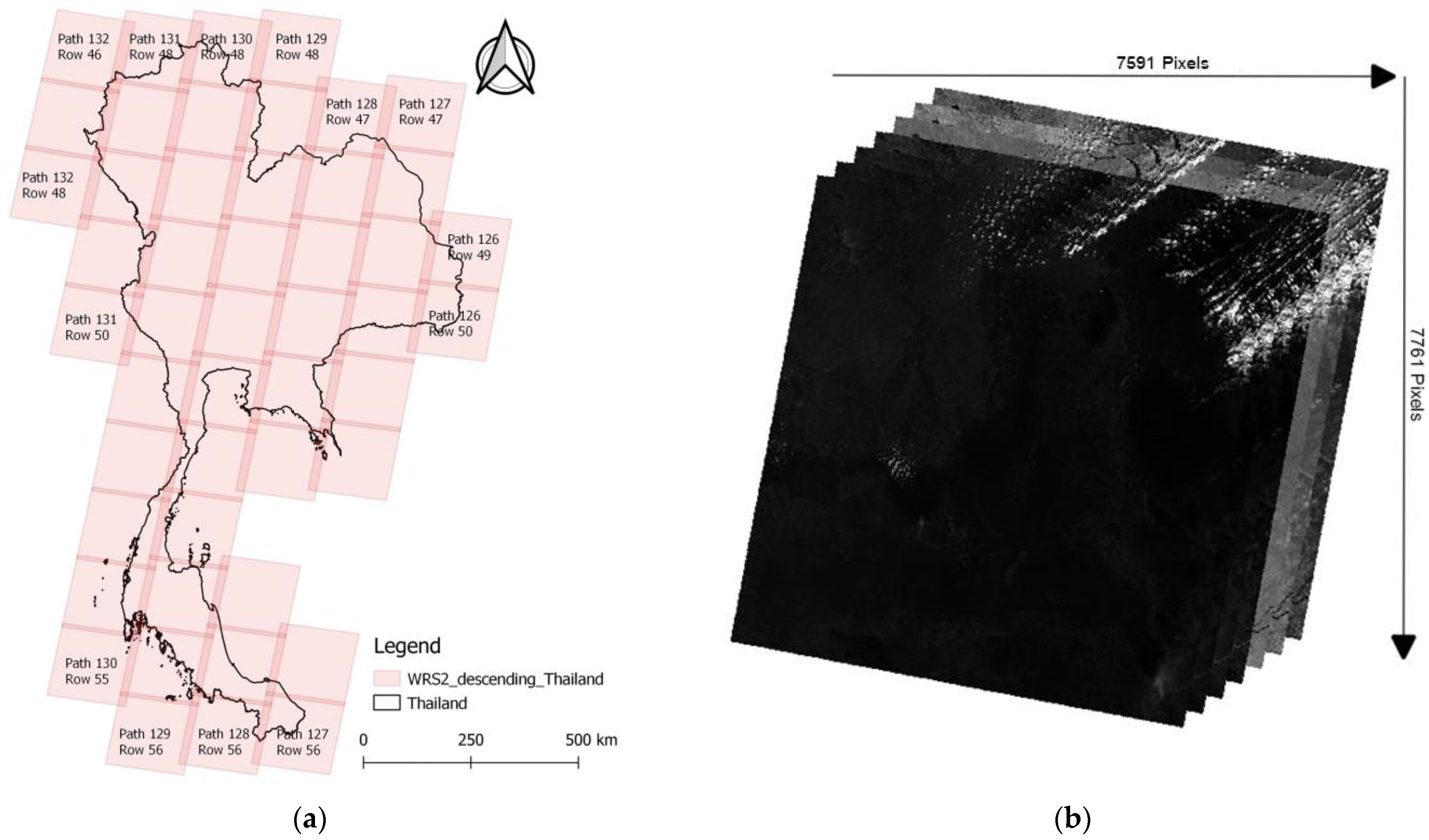

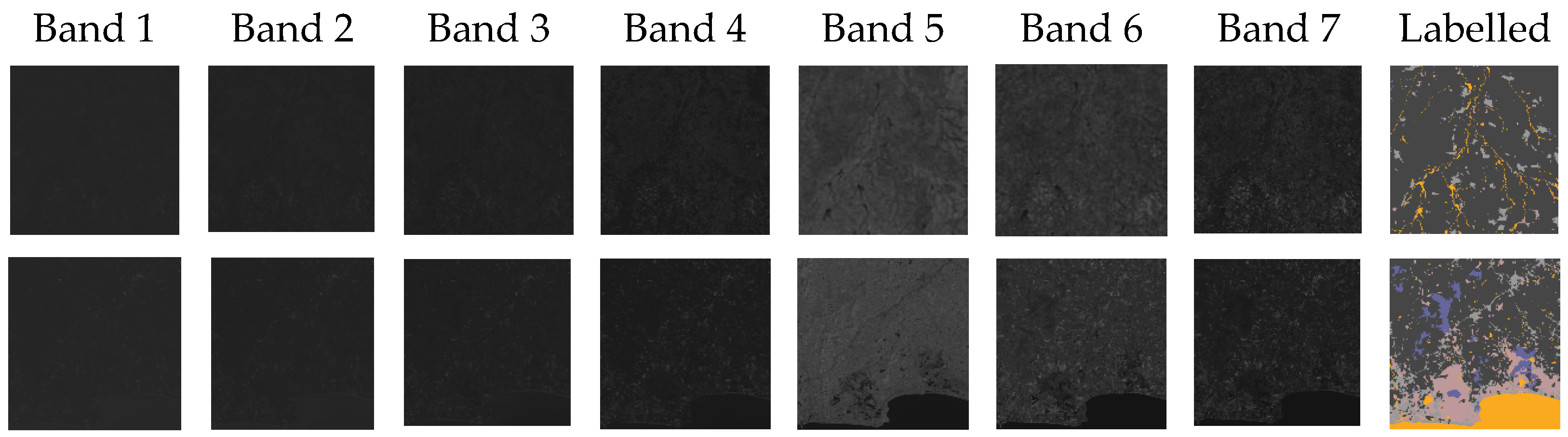

2.1. Land Use Dataset

2.2. Proposed Network Architecture

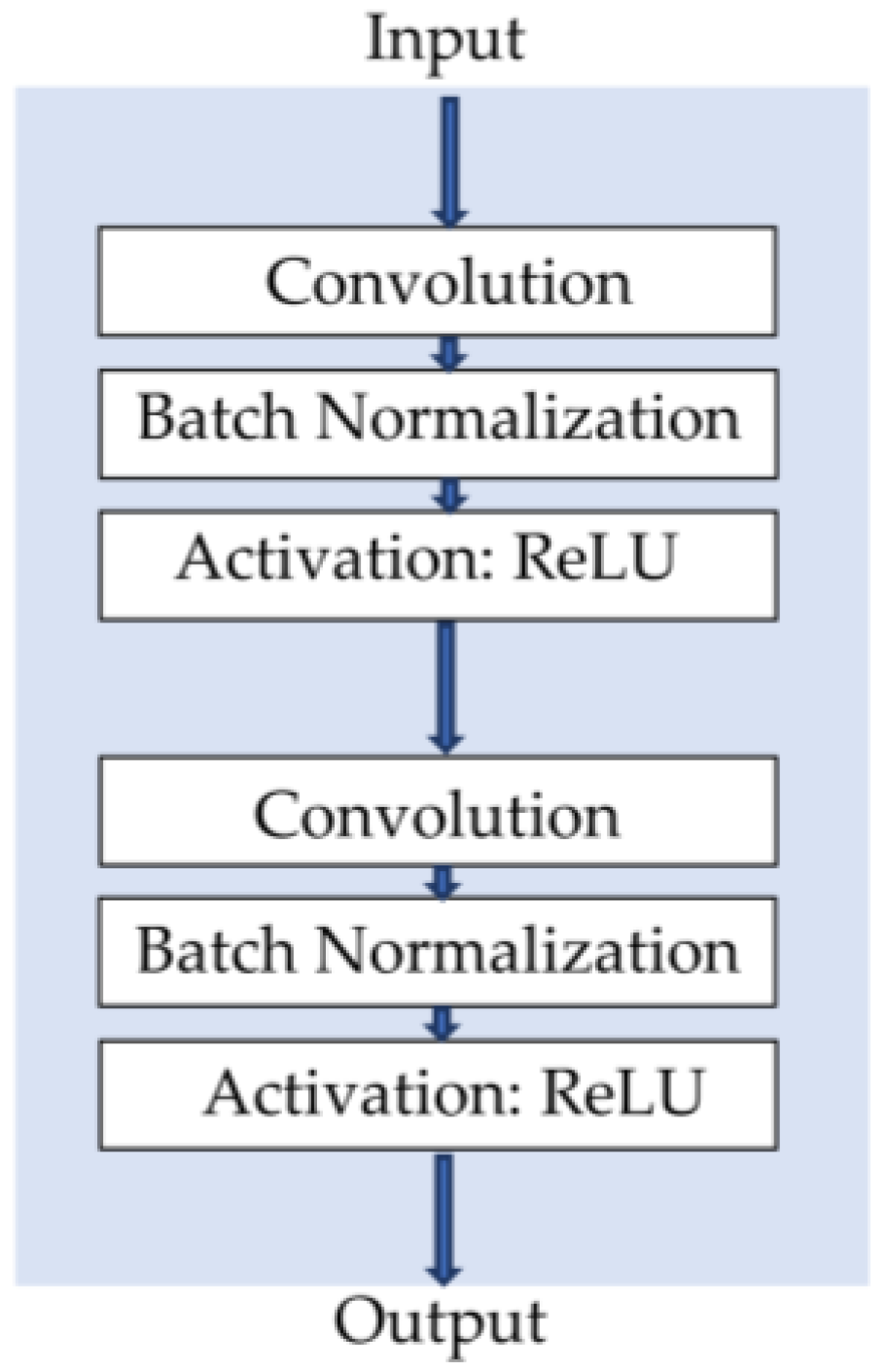

2.2.1. Convolution Block

2.2.2. Convolution Loop

2.2.3. The Proposed Network Architecture

2.3. Training

2.4. Evaluation Metrics

3. Experiments and Analysis

3.1. Experiment Designs

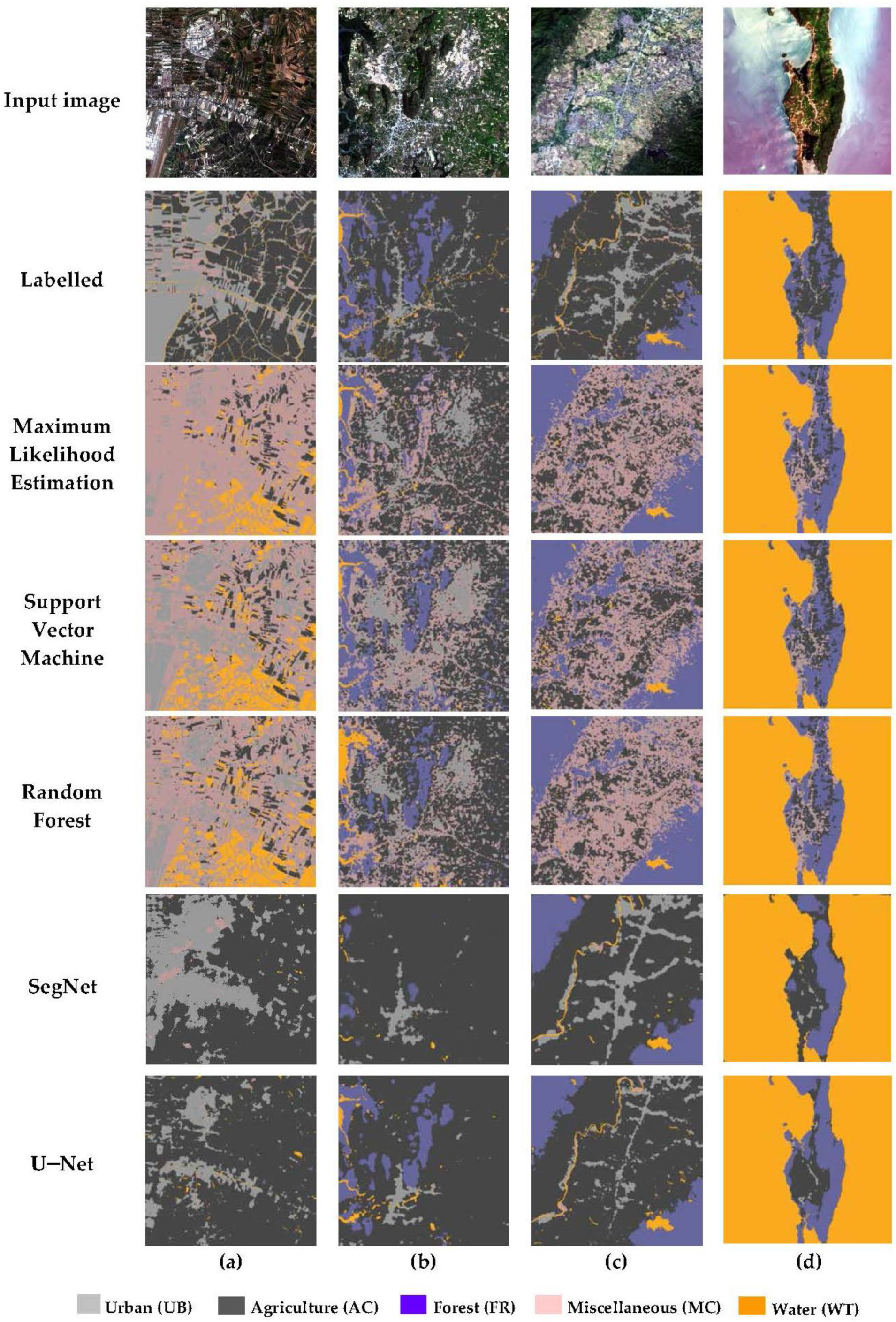

3.2. Experiment 1: Comparison of Pixel-Based Classification and Deep Learning Semantic Segmentation for Land Use Classification

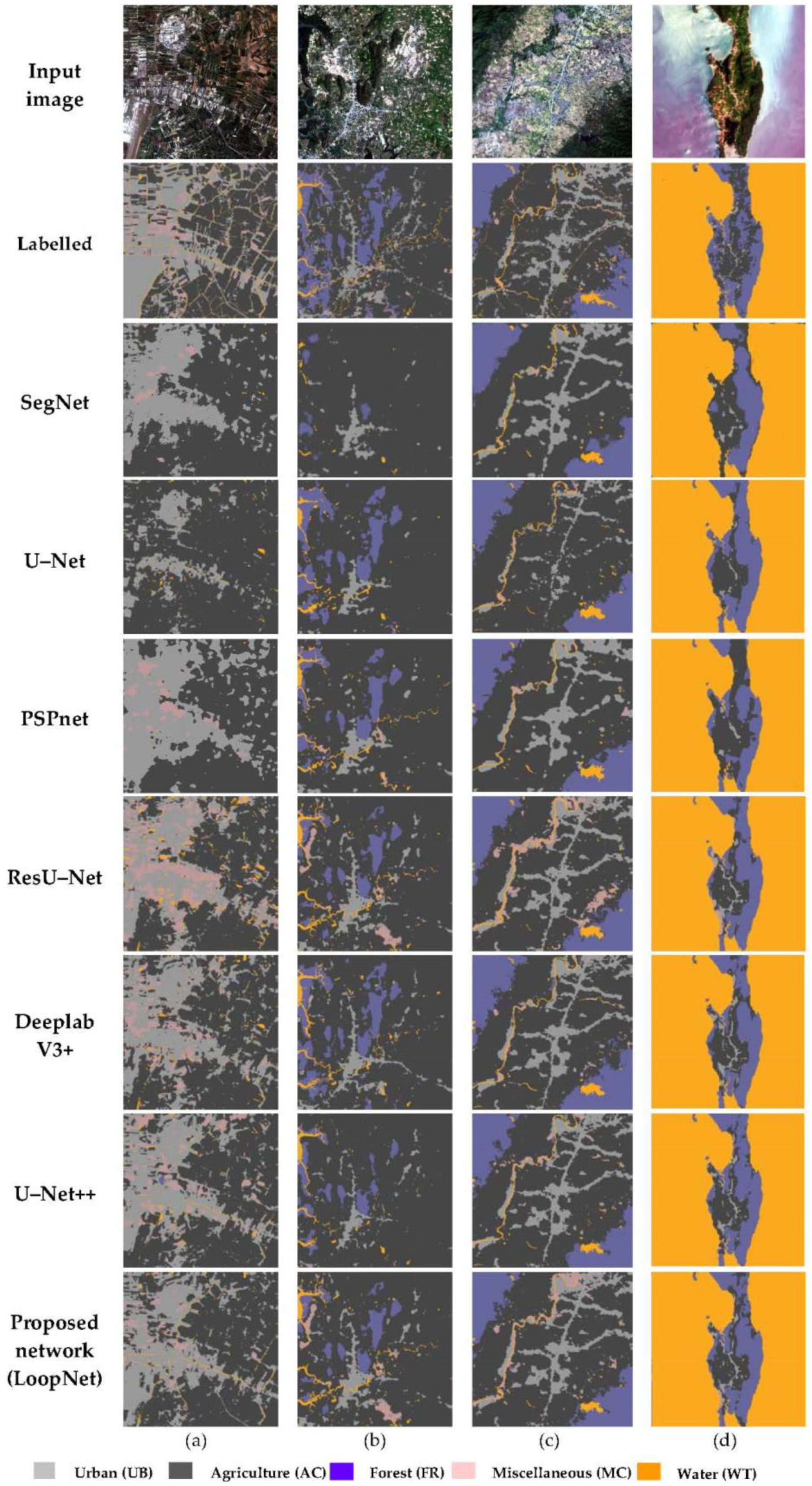

3.3. Experiment 2: Evaluation of the Proposed Network and State-of-the-Art Network Architecture for Land Use Segmentation

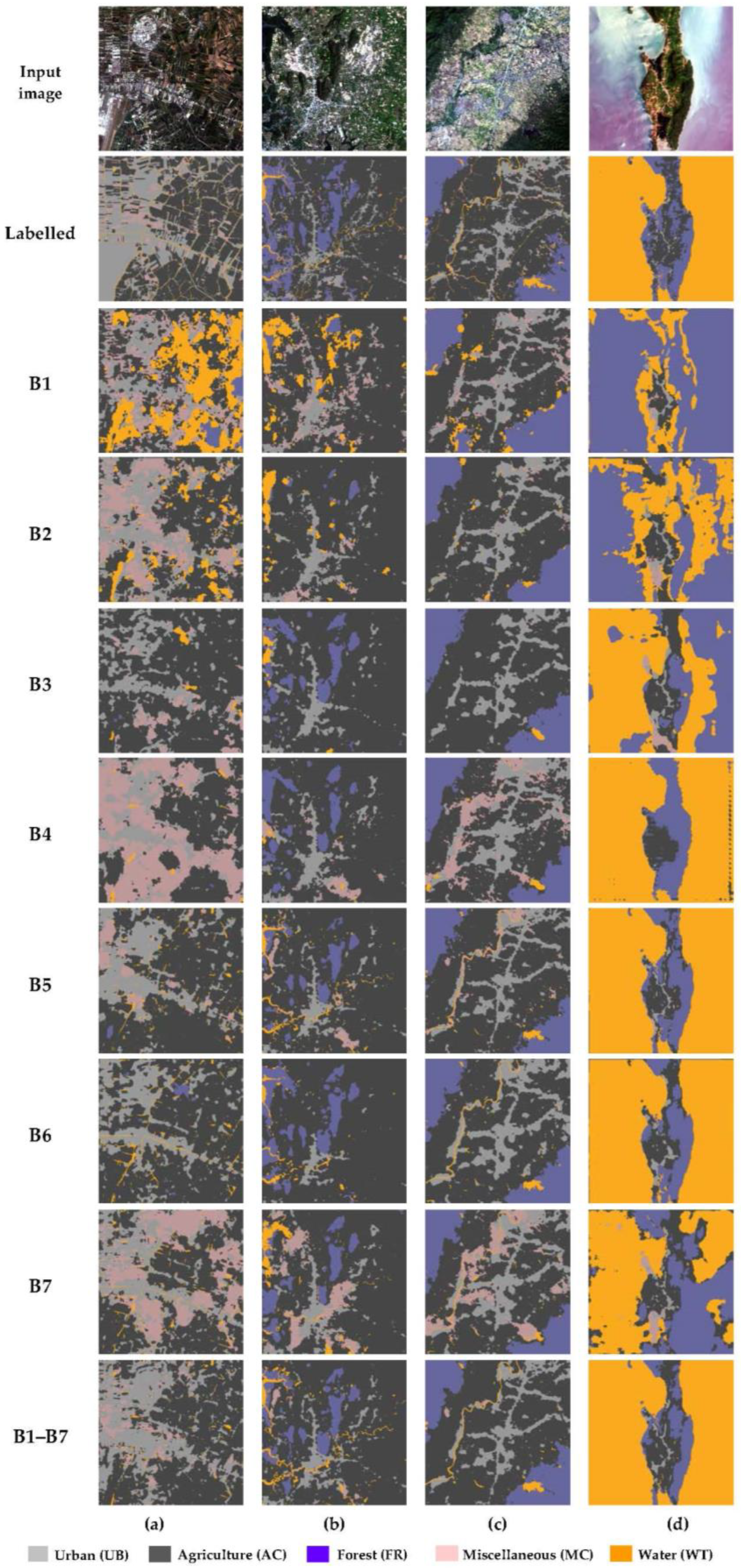

3.4. Experiment 3: Evaluation of Spectral Bands for Land Use Segmentation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Training | Validating | Testing |
|---|---|---|---|
| Land use dataset | 8000 | 2000 | 3600 |

| Method | IoU: BG | IoU: AC | IoU: FR | IoU: MC | IoU: UB | IoU: WT | Precision | Recall | mIoU | OA |
|---|---|---|---|---|---|---|---|---|---|---|
| Maximum likelihood estimation | 71.89 | 52.12 | 41.13 | 15.45 | 30.23 | 67.94 | 52.23 | 53.37 | 21.23 | 68.12 |
| Support Vector Machine | 73.76 | 58.45 | 48.09 | 19.54 | 36.54 | 70.98 | 55.61 | 53.76 | 24.67 | 72.86 |
| Random Forest | 73.65 | 61.11 | 55.56 | 18.47 | 33.64 | 69.72 | 56.85 | 54.21 | 26.14 | 74.69 |
| SegNet | 87.39 | 66.55 | 61.05 | 30.27 | 61.53 | 53.74 | 84.18 | 84.10 | 54.20 | 84.13 |
| U-Net | 88.33 | 67.57 | 58.85 | 30.75 | 52.75 | 57.55 | 81.94 | 81.92 | 55.09 | 81.93 |
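
Metrics such as per-class IoU, precision, recall, mIoU, and OA are conventionally derived from a pixel-level confusion matrix. The following is a minimal sketch under those standard definitions; it may differ from the authors' exact computation (for example, in how precision and recall are averaged across classes). The function name `segmentation_metrics` and the toy counts are hypothetical and are not taken from the article.

```python
def segmentation_metrics(cm):
    """Compute common segmentation metrics from a square confusion matrix.

    cm[i][j] is the number of pixels whose true class is i and whose
    predicted class is j. Assumes the matrix contains at least one pixel.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    iou, precision, recall = [], [], []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
        iou.append(tp / (tp + fp + fn) if (tp + fp + fn) else 0.0)
        precision.append(tp / (tp + fp) if (tp + fp) else 0.0)
        recall.append(tp / (tp + fn) if (tp + fn) else 0.0)
    return {
        "iou": iou,                               # one value per class
        "miou": sum(iou) / n,                     # unweighted mean over classes
        "precision": sum(precision) / n,          # macro average (an assumption)
        "recall": sum(recall) / n,                # macro average (an assumption)
        "oa": sum(cm[k][k] for k in range(n)) / total,  # overall accuracy
    }


# Toy 3-class confusion matrix with hypothetical pixel counts.
toy = [
    [50, 5, 5],
    [10, 20, 10],
    [0, 10, 40],
]
metrics = segmentation_metrics(toy)
print({name: value for name, value in metrics.items() if name != "iou"})
print([round(v, 4) for v in metrics["iou"]])
```

Multiplying the returned fractions by 100 gives values on the same scale as the table entries. A class with few correctly labelled pixels pulls mIoU down much more than it pulls OA down, which is one reason the two columns can diverge.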

| Method | IoU: BG | IoU: AC | IoU: FR | IoU: MC | IoU: UB | IoU: WT | Precision | Recall | mIoU | OA |
|---|---|---|---|---|---|---|---|---|---|---|
| SegNet | 87.39 | 66.55 | 61.05 | 31.27 | 31.53 | 53.74 | 84.18 | 84.10 | 64.20 | 84.13 |
| U-Net | 88.33 | 67.57 | 58.85 | 30.75 | 22.75 | 57.55 | 81.94 | 81.92 | 65.09 | 81.93 |
| PSPnet | 88.26 | 70.86 | 67.53 | 31.89 | 28.30 | 57.66 | 86.25 | 86.22 | 61.93 | 86.22 |
| ResU-Net | 88.68 | 70.10 | 68.94 | 37.21 | 39.65 | 58.85 | 85.50 | 85.40 | 65.75 | 85.44 |
| DeeplabV3+ | 88.29 | 72.13 | 69.78 | 34.65 | 46.60 | 60.78 | 87.15 | 87.10 | 66.17 | 85.12 |
| U-Net++ | 88.28 | 73.75 | 71.45 | 35.58 | 42.07 | 61.71 | 87.77 | 87.72 | 66.87 | 87.74 |
| LoopNet | 90.22 | 75.70 | 73.41 | 40.19 | 43.12 | 65.95 | 87.88 | 87.81 | 71.69 | 89.84 |

| Input band(s) | IoU: BG | IoU: AC | IoU: FR | IoU: MC | IoU: UB | IoU: WT | Precision | Recall | mIoU | OA |
|---|---|---|---|---|---|---|---|---|---|---|
| B1 | 89.45 | 64.17 | 49.76 | 28.02 | 48.79 | 38.57 | 77.89 | 76.36 | 50.23 | 77.32 |
| B2 | 88.18 | 62.33 | 48.65 | 33.74 | 62.91 | 35.81 | 75.24 | 74.35 | 54.73 | 74.78 |
| B3 | 87.43 | 66.18 | 61.26 | 31.08 | 54.56 | 46.16 | 83.96 | 83.88 | 52.45 | 83.12 |
| B4 | 88.26 | 67.85 | 65.58 | 30.15 | 57.40 | 56.53 | 84.88 | 82.40 | 51.74 | 83.40 |
| B5 | 88.24 | 66.84 | 63.54 | 32.33 | 54.96 | 58.86 | 83.98 | 83.86 | 51.67 | 83.91 |
| B6 | 88.17 | 65.34 | 66.04 | 30.60 | 59.32 | 58.49 | 82.66 | 82.53 | 54.96 | 82.59 |
| B7 | 88.23 | 66.93 | 62.77 | 35.23 | 62.41 | 50.89 | 81.93 | 81.80 | 54.78 | 81.86 |
| B1–B7 | 90.22 | 75.70 | 73.41 | 40.19 | 73.12 | 65.95 | 87.88 | 87.81 | 71.69 | 89.84 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Boonpook, W.; Tan, Y.; Nardkulpat, A.; Torsri, K.; Torteeka, P.; Kamsing, P.; Sawangwit, U.; Pena, J.; Jainaen, M. Deep Learning Semantic Segmentation for Land Use and Land Cover Types Using Landsat 8 Imagery. ISPRS Int. J. Geo-Inf. 2023, 12, 14. https://doi.org/10.3390/ijgi12010014
