4.1. Overall Framework and Workflow of the Model

In this paper, we propose a hybrid neural network model for Chinese place-name recognition. The overall structure of the model is shown in Figure 3. The model is divided into five parts: the input layer, the ALBERT layer, the BiLSTM layer, the CRF layer, and the output layer.

We describe the model from the bottom up, layer by layer. The input layer contains the individual words of a message, which serve as the input to the model.

The next layer represents each word as a vector using pretrained representations. It uses pretrained word embeddings to represent the words in the input sequence and, in particular, ALBERT, which captures the different semantics of a word in different contexts. Pretrained word embeddings capture the semantics of words based on their typical usage contexts and therefore provide static representations; by contrast, ALBERT provides a dynamic representation of a word by modeling the particular sentence in which the word is used. This layer captures four different aspects of a word, and their representation vectors are concatenated into a single large vector that represents each input word. These vectors are then used as the input to the next layer, a BiLSTM layer consisting of two layers of LSTM cells: a forward layer that captures information before the target word and a backward layer that captures information after it.

The BiLSTM layer combines the outputs of the two LSTM layers and feeds the combined output into a fully connected layer. The next layer is a CRF layer, which takes the output of the fully connected layer and performs sequence labeling. The CRF layer uses the standard BIEO tagging scheme from NER research, restricted to location entities. Thus, each word is annotated as “B–L” (the beginning of a location phrase), “I–L” (inside a location phrase), “E–L” (the end of a location phrase), or “O” (outside any location phrase).
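The BIEO scheme above can be illustrated with a small sketch that converts annotated location spans into per-character tags. The function name `bieo_tags` and the (start, end) span format are hypothetical, the labels are written with ASCII hyphens, and because the scheme as described has no dedicated single-character tag, the sketch assumes a one-character location is tagged “B–L” only.

```python
def bieo_tags(text, spans):
    """Tag each character of `text` with the BIEO location scheme.

    `spans` holds (start, end) character offsets of location mentions,
    with `end` exclusive (a hypothetical input format).
    """
    tags = ["O"] * len(text)
    for start, end in spans:
        if not 0 <= start < end <= len(text):
            raise ValueError(f"invalid span: {(start, end)}")
        tags[start] = "B-L"                 # first character of the location
        for i in range(start + 1, end - 1):
            tags[i] = "I-L"                 # interior characters
        if end - start > 1:
            tags[end - 1] = "E-L"           # last character of the location
        # Assumption: a one-character location keeps only "B-L", since the
        # scheme as described has no dedicated single-character tag.
    return tags


text = "我在北京市朝阳区"  # "I am in Chaoyang District, Beijing"
print(list(zip(text, bieo_tags(text, [(2, 5), (5, 8)]))))
```

Here “北京市” and “朝阳区” are tagged as two adjacent locations, each opening with “B-L” and closing with “E-L”.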

The workflow of the model is as follows:

(1) The input text X = (X1, X2, …, Xn), where Xi denotes the i-th word of the text, is fed into the ALBERT layer.

(2) The ALBERT layer serializes the input text and generates a feature vector Ci for each word Xi to enhance the text representation. The Transformer (Trm) encoders in ALBERT's word-vector representation layer then transform Ci into word vectors E = (E1, E2, …, En) that carry location features.

(3) Each Ei is used as the input at the corresponding time step of the BiLSTM layer. The forward LSTM produces F = (F1, F2, …, Fn) and the backward LSTM produces B = (B1, B2, …, Bn); concatenating them position by position yields the feature matrix H = (H1, H2, …, Hn), which captures contextual semantic information from both directions of the sentence.

(4) The CRF layer models the transition features between labels, captures the dependencies between adjacent labels, and outputs the label sequence Y = (Y1, Y2, …, Yn) as the final annotation result.
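The four workflow steps can be summarized by the shape of the data at each stage. The sketch below is a shape trace only, not an implementation. The function name `trace_shapes` and all sizes in the usage line (312-dimensional ALBERT vectors, 128 LSTM units per direction) are hypothetical, and the per-tag score matrix P corresponds to the fully connected layer placed between the BiLSTM and the CRF.

```python
def trace_shapes(n, d_albert, d_lstm, num_tags):
    """Shapes of the tensors produced at each workflow step for an n-character sentence."""
    return {
        "X": (n,),                  # step (1): input characters
        "E": (n, d_albert),         # step (2): ALBERT output vectors
        "F": (n, d_lstm),           # step (3): forward LSTM states
        "B": (n, d_lstm),           # step (3): backward LSTM states
        "H": (n, 2 * d_lstm),       # step (3): F and B concatenated per position
        "P": (n, num_tags),         # fully connected layer: one score per tag
        "Y": (n,),                  # step (4): one BIEO label per character
    }


# Hypothetical sizes: 8 characters, 312-d ALBERT vectors, 128 LSTM units per
# direction, and 4 tags (B-L, I-L, E-L, O).
for name, shape in trace_shapes(8, 312, 128, 4).items():
    print(name, shape)
```

The key point is that H is twice as wide as each LSTM direction, because the forward and backward states are concatenated rather than summed.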

4.2. BERT and ALBERT Pretraining Models

A pretraining model provides better initial parameters for a neural network, accelerates its convergence, and improves generalization on the target task. The development of pretraining models can be divided into two stages: shallow word embeddings and deep encoding. Shallow word embedding models are trained mainly on the information of the current word and its preceding words; they consider only the local information of the text and fail to make effective use of its global information [22,27]. BERT trains on the corpus with a bidirectional Transformer network, which has a stronger representational capability, and obtains deep bidirectional representations through pretraining [25]. BERT's “masked language model” (MLM) objective fuses the left and right contexts of the current word, and its next sentence prediction (NSP) objective captures sentence-level representations, yielding semantically rich, high-quality feature vectors. BERT has achieved remarkable results in tasks such as named-entity recognition [28], text classification, and machine translation [29].
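The MLM objective can be made concrete with a sketch of BERT-style input corruption. In the BERT recipe, about 15% of tokens are chosen as prediction targets; a chosen token is replaced by [MASK] 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time. This sketch simplifies the selection to an independent per-token draw, and the names `mask_tokens` and `SPECIAL` are hypothetical.

```python
import random

SPECIAL = {"[CLS]", "[SEP]"}

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """BERT-style MLM corruption (per-token selection is a simplification).

    A selected token becomes [MASK] 80% of the time, a random vocabulary
    token 10% of the time, and stays unchanged 10% of the time. `labels`
    holds the original token at selected positions and None elsewhere.
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue  # special tokens and unselected tokens pass through
        labels[i] = tok  # the model must predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = "[MASK]"
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise the original token is kept
    return inputs, labels


tokens = ["[CLS]", "我", "在", "北", "京", "[SEP]"]
vocab = ["我", "在", "北", "京", "上", "海"]
print(mask_tokens(tokens, vocab, mask_prob=0.5, seed=0))
```

Because the prediction targets can sit anywhere in the sentence, the model has to use context on both sides of each masked position. This is the sense in which MLM fuses the left and right contexts.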

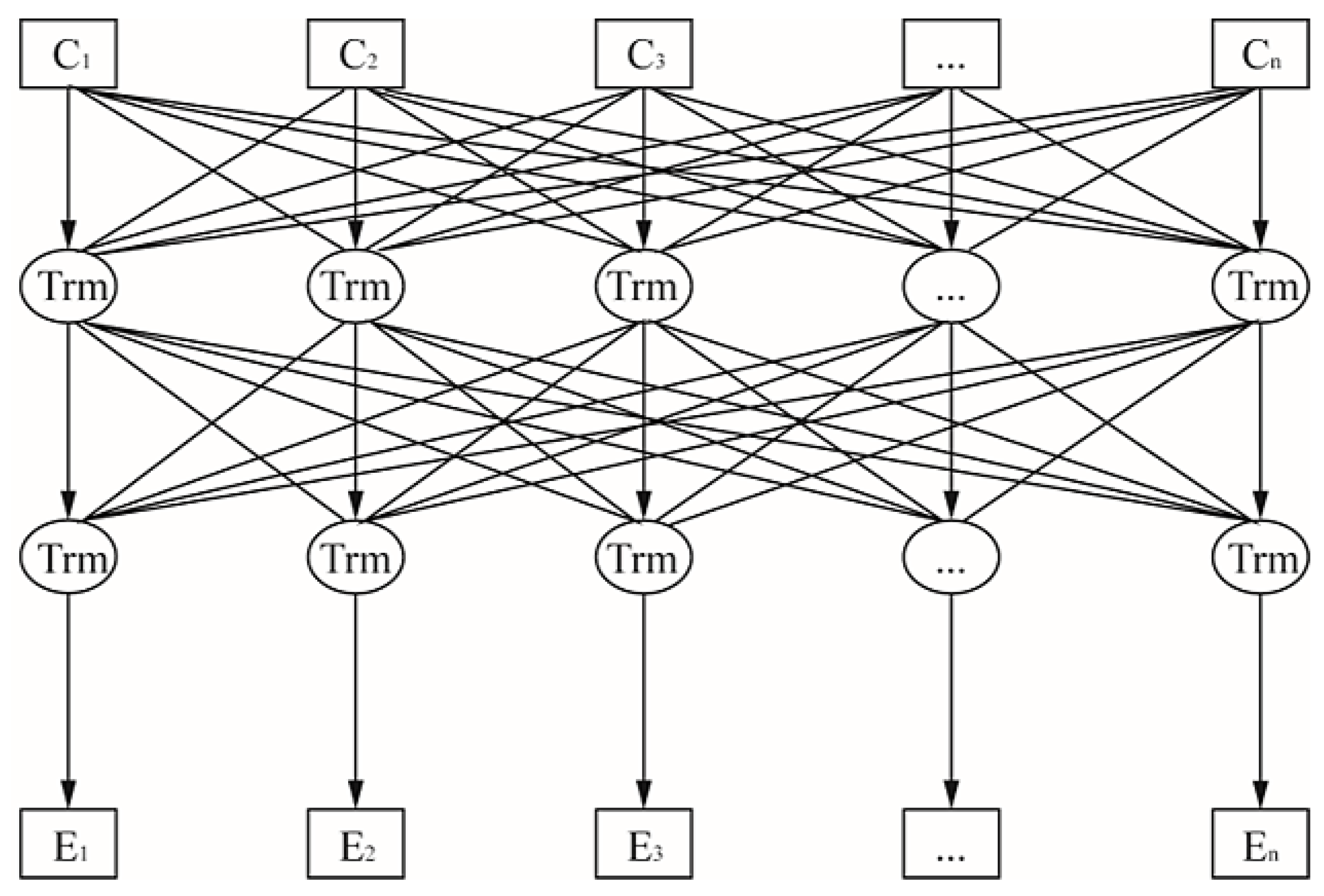

However, the BERT model contains hundreds of millions of parameters, so its training is easily limited by hardware memory. The ALBERT model is a lightweight pretrained language model based on BERT [30]. BERT uses a bidirectional Transformer encoder to obtain the feature representation of text; its model structure is shown in Figure 4. ALBERT has only about 10% of the parameters of the original BERT model while retaining comparable accuracy.

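The Transformer architecture on which BERT is built is composed of an encoder and a decoder; BERT itself uses only the encoder stack. In the original Transformer, the encoder consists of six identical layers. Each layer has two sub-layers: a multi-head self-attention mechanism and a fully connected feed-forward network. Because each sub-layer is wrapped with a residual connection followed by layer normalization, the output of each sub-layer can be written as:

$$\mathrm{Output} = \mathrm{LayerNorm}\big(x + \mathrm{Sublayer}(x)\big) \tag{1}$$

where $x$ is the input to the sub-layer and $\mathrm{Sublayer}(x)$ is the function implemented by the sub-layer itself.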
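The residual-plus-normalization wrapper can be sketched on a single vector as follows. This is a minimal illustration in which the learned gain and bias of layer normalization are omitted (an assumption made to keep it short), and `halve` is a hypothetical stand-in for the attention or feed-forward sub-layer.

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (learned gain and bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def add_and_norm(x, sublayer):
    """Residual connection followed by layer normalization: LayerNorm(x + Sublayer(x))."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])


# Hypothetical sub-layer standing in for attention or the feed-forward network.
def halve(v):
    return [0.5 * a for a in v]

out = add_and_norm([1.0, 2.0, 3.0], halve)
print(out)
```

The residual term lets the sub-layer learn only a correction to its input. Normalization then keeps the scale of activations stable from layer to layer.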

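The multi-head self-attention mechanism projects the query, key, and value matrices Q, K, and V through h different learned linear transformations, computes scaled dot-product attention for each projection, and finally concatenates the h attention results. The main calculations are:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{2}$$

$$\mathrm{head}_i = \mathrm{Attention}\big(QW_i^{Q}, KW_i^{K}, VW_i^{V}\big) \tag{3}$$

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O} \tag{4}$$

where $d_k$ is the dimension of the keys, $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the projection matrices of the $i$-th head, and $W^{O}$ is the output projection matrix.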
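The attention calculation can be traced with a pure-Python sketch of scaled dot-product and multi-head self-attention. In self-attention, Q, K, and V are all the same input x. The helper names and the random toy weights are hypothetical, and real implementations use batched tensor libraries rather than nested lists.

```python
import math
import random

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)                         # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def multi_head(x, w_q, w_k, w_v, w_o):
    """Self-attention with Q = K = V = x, projected separately for each of the h heads."""
    heads = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))[0]
             for wq, wk, wv in zip(w_q, w_k, w_v)]
    concat = [sum((head[i] for head in heads), []) for i in range(len(x))]  # Concat(head_1..head_h)
    return matmul(concat, w_o)


rng = random.Random(0)
def rand_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

n, d_model, h = 3, 4, 2                  # hypothetical sizes
d_k = d_model // h
x = rand_matrix(n, d_model)
w_q = [rand_matrix(d_model, d_k) for _ in range(h)]
w_k = [rand_matrix(d_model, d_k) for _ in range(h)]
w_v = [rand_matrix(d_model, d_k) for _ in range(h)]
w_o = rand_matrix(h * d_k, d_model)
y = multi_head(x, w_q, w_k, w_v, w_o)
print(len(y), len(y[0]))                 # prints: 3 4
```

Splitting d_model across h heads keeps the total computation close to that of a single full-width head. At the same time, each head can attend to a different pattern.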

The decoder has a structure similar to that of the encoder but adds a third sub-layer that performs attention over the encoder output.

ALBERT uses two methods to reduce the number of parameters: (i) factorized embedding parameterization, which decouples the hidden-layer size from the size of the vocabulary embedding matrix by decomposing the large vocabulary embedding matrix into two smaller matrices; and (ii) cross-layer parameter sharing, which shares parameters across all layers of the model and thereby significantly reduces the number of parameters without significantly affecting performance.
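The savings from both methods can be worked out with simple arithmetic. The sizes below (vocabulary V = 30,000, hidden size H = 768, embedding size E = 128, 12 layers, feed-forward width 3,072) are illustrative assumptions rather than the configuration used in this paper, and the per-layer count covers only the attention projections, feed-forward projections, and layer-norm parameters.

```python
def embedding_params(vocab_size, hidden_size, embed_size=None):
    """Token-embedding parameters.

    BERT: a single V x H matrix.
    ALBERT (factorized): a V x E matrix followed by an E x H projection.
    """
    if embed_size is None:
        return vocab_size * hidden_size
    return vocab_size * embed_size + embed_size * hidden_size

def layer_params(hidden, ffn):
    """Weights and biases of one Transformer encoder layer."""
    attn = 4 * (hidden * hidden + hidden)                # Q, K, V and output projections
    ff = (hidden * ffn + ffn) + (ffn * hidden + hidden)  # two feed-forward projections
    norms = 2 * (2 * hidden)                             # gain and bias of two LayerNorms
    return attn + ff + norms

def encoder_params(per_layer, num_layers, shared):
    """Cross-layer sharing stores one set of layer weights instead of num_layers sets."""
    return per_layer if shared else per_layer * num_layers


# Illustrative sizes: V = 30,000, H = 768, E = 128, 12 layers, FFN width 3,072.
print(embedding_params(30_000, 768))        # 23040000
print(embedding_params(30_000, 768, 128))   # 3938304
per_layer = layer_params(768, 3_072)        # 7087872
print(encoder_params(per_layer, 12, shared=False))  # 85054464
print(encoder_params(per_layer, 12, shared=True))   # 7087872
```

Under these assumptions, factorization shrinks the embedding block by roughly a factor of six, and sharing cuts the encoder weights by a factor equal to the number of layers.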

In the figure, C = (C1, C2, …, Cn) denotes the input sequence. Each of its characters is processed by a multilayer bidirectional Transformer (Trm) encoder to obtain the feature vectors of the text, E = (E1, E2, …, En). The input text is first converted into word embeddings, to which positional encodings for each word in the sentence are added. The model learns richer text features by combining multiple self-attention heads into multi-head attention. The output of the multi-head attention layer passes through an Add&Norm layer, where “Add” denotes adding the input and output of the multi-head attention layer and “Norm” denotes layer normalization. The result is then passed to the feed-forward layer (Feed Forward), whose output passes through a second Add&Norm layer.
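In BERT and ALBERT, the input vector at each position is formed by summing a token embedding, a segment embedding, and a position embedding. All three tables are learned in those models, whereas the original Transformer used fixed sinusoidal position encodings. The sketch below shows only the summation step. The tiny 2-dimensional tables and the name `input_representation` are hypothetical.

```python
def input_representation(token_ids, segment_ids, tok_table, seg_table, pos_table):
    """Sum token, segment, and position embeddings at each position (BERT-style input)."""
    if len(token_ids) > len(pos_table):
        raise ValueError("sequence longer than the position table")
    return [
        [t + s + p for t, s, p in zip(tok_table[tok], seg_table[seg], pos_table[pos])]
        for pos, (tok, seg) in enumerate(zip(token_ids, segment_ids))
    ]


# Hypothetical 2-dimensional tables; in practice all three are learned.
tok_table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
seg_table = [[0.0, 0.0], [10.0, 10.0]]
pos_table = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
vectors = input_representation([2, 0, 1], [0, 0, 1], tok_table, seg_table, pos_table)
print(vectors)
```

Because the position vector is added rather than concatenated, the same character at two different positions enters the encoder with two different vectors. This is how otherwise order-agnostic self-attention sees word order.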

The ALBERT model used in this paper has several design features that improve its performance on toponym recognition from social media messages. First, our ALBERT uses pretrained word embeddings derived specifically from social media messages. We performed the following steps on the collected text data: (1) data cleaning: we removed garbled characters and incomplete sentences to ensure that the sentences were fluent; (2) sentence segmentation: we added [CLS], [SEP], [MASK], and other special tokens to each text item, obtaining 25.6 GB of training data; and (3) training: we trained on an RTX 3090 GPU for 4 days, with the epoch set to 100,000 and the learning rate set to 5 × 10−5.
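Steps (1) and (2) can be sketched as follows. The cleaning rule here is a hypothetical stand-in for the unspecified criteria: it drops lines containing the Unicode replacement character U+FFFD (a common trace of garbled encoding) and lines without sentence-final punctuation. The sequence layout [CLS] A [SEP] B [SEP] with segment ids 0/1 follows the standard BERT input format. Treating each Chinese character as one token is an assumption, and the function names are hypothetical.

```python
def clean_lines(lines, enders="。！？!?"):
    """Hypothetical cleaning rule: drop lines with mojibake (U+FFFD) or without final punctuation."""
    return [ln.strip() for ln in lines
            if ln.strip() and "\ufffd" not in ln and ln.strip()[-1] in enders]

def format_pair(sent_a, sent_b=None, max_len=128):
    """Build a [CLS] A [SEP] (B [SEP]) sequence with segment ids, one token per character."""
    tokens = ["[CLS]"] + list(sent_a) + ["[SEP]"]
    segments = [0] * len(tokens)          # segment A covers [CLS], A and the first [SEP]
    if sent_b is not None:
        tokens += list(sent_b) + ["[SEP]"]
        segments += [1] * (len(sent_b) + 1)
    if len(tokens) > max_len:
        raise ValueError("sequence exceeds max_len; truncate the sentences first")
    return tokens, segments


lines = ["今天去了西湖。", "乱码\ufffd\ufffd。", "说到一半"]
kept = clean_lines(lines)
print(kept)
print(format_pair("北京", "上海"))
```

The [MASK] tokens mentioned in step (2) would then be introduced by an MLM corruption step applied to these formatted sequences.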

We used GloVe word embeddings (54,238 tokens; dictionary size 399 KB) trained on 2 billion texts comprising 11 billion tokens and 1.8 million vocabulary items collected from Baidu Encyclopedia, Weibo, WeChat, and other sources. Because these embeddings were trained on a large corpus of social media messages, they include many vernacular and out-of-vocabulary words used in social media messages. Previous geoparsing and NER models typically use word embeddings trained on well-formatted text, such as news articles, which do not cover many vernacular words. When such a word is encountered, a generic unknown-token embedding is usually used to represent it, and the actual semantics of the word are lost. Second, compared with the basic BiLSTM–CRF model, our model adds an ALBERT layer to capture the dynamic, contextualized semantics of words.
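The unknown-token problem described above can be illustrated with a vocabulary lookup. Any word missing from the embedding vocabulary collapses onto a single shared row. Both vocabularies below are hypothetical toy examples, with the slang word “打卡” (“to check in” at a place) present only in the social-media vocabulary. The function names are also hypothetical.

```python
def lookup(words, vocab, unk="<unk>"):
    """Map words to embedding-row ids; words missing from `vocab` share the <unk> row."""
    unk_id = vocab[unk]
    return [vocab.get(w, unk_id) for w in words]

def oov_rate(words, vocab):
    """Fraction of words that fall back to the unknown token."""
    return sum(w not in vocab for w in words) / len(words)


# Hypothetical vocabularies: only the social-media one contains the slang "打卡".
news_vocab = {"<unk>": 0, "我": 1, "在": 2, "西湖": 3}
social_vocab = {"<unk>": 0, "我": 1, "在": 2, "西湖": 3, "打卡": 4}
words = ["我", "在", "西湖", "打卡"]
print(lookup(words, news_vocab), oov_rate(words, news_vocab))
print(lookup(words, social_vocab), oov_rate(words, social_vocab))
```

With the news-style vocabulary, “打卡” receives the same id as every other unseen word, so any semantics specific to it are lost before the model sees the sentence.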

4.3. BiLSTM Layer

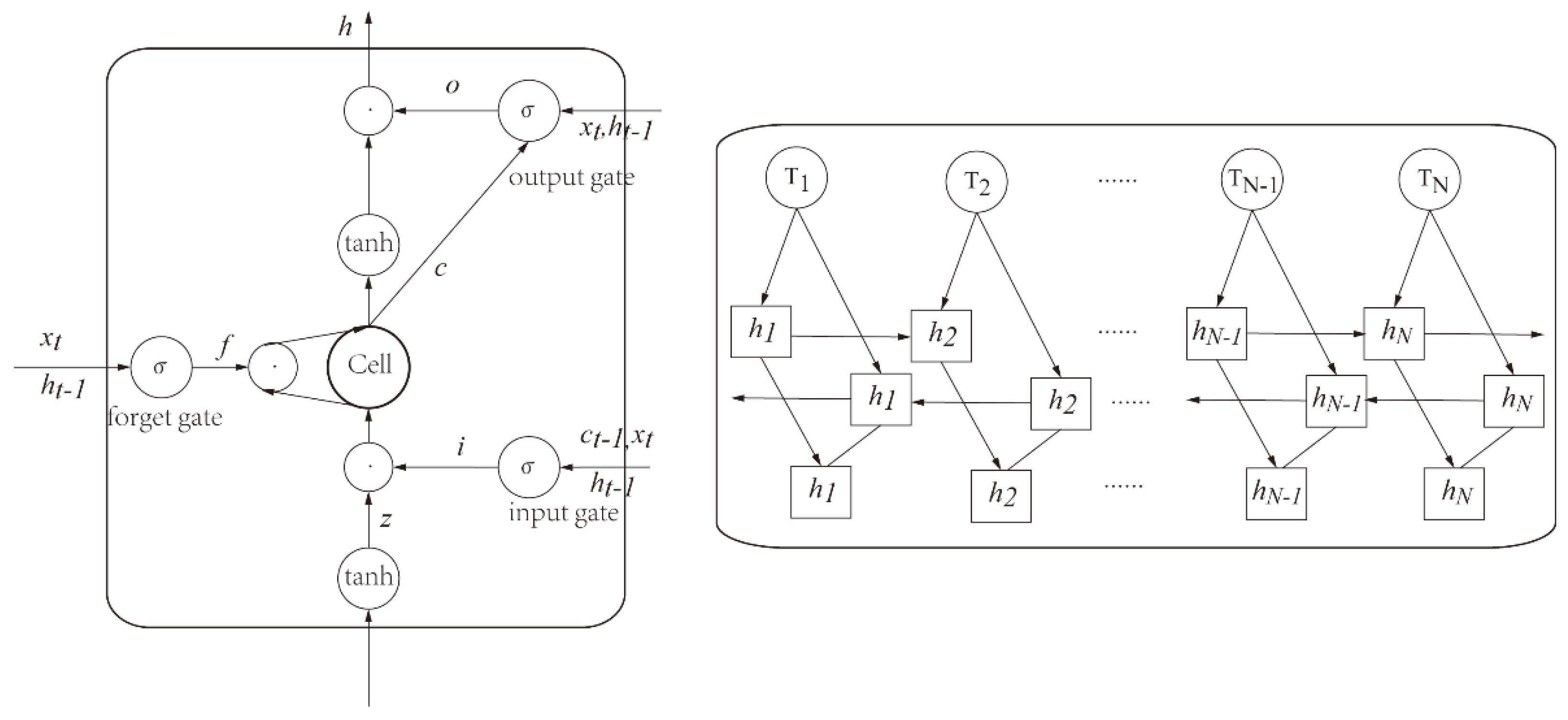

Recurrent neural networks are well suited to sequence annotation tasks because they can remember the historical information of text sequences. The LSTM model [31,32,33,34] incorporates specially designed memory units in the hidden layer. Compared with standard RNNs, it better mitigates the exploding and vanishing gradient problems that RNNs tend to suffer as sequence length increases. The neuron structure of the LSTM model is shown in Figure 5.

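The LSTM network consists of three gates and one cell state; the gates are the input gate, the forget gate, and the output gate. The input gate determines how much of the current input is saved to the cell state at the current time step. The forget gate selectively discards information from the previous cell state. The output gate determines the final output value based on the cell state. Through this three-gate structure, which maintains and updates the cell state as a long-term memory, the LSTM better addresses the long-term dependency problem of recurrent neural networks. A typical LSTM network can be expressed formally by Equations (5)–(10):

$$i_t = \sigma\big(W_i \cdot [h_{t-1}, x_t] + b_i\big) \tag{5}$$

$$f_t = \sigma\big(W_f \cdot [h_{t-1}, x_t] + b_f\big) \tag{6}$$

$$o_t = \sigma\big(W_o \cdot [h_{t-1}, x_t] + b_o\big) \tag{7}$$

$$\tilde{C}_t = \tanh\big(W_C \cdot [h_{t-1}, x_t] + b_C\big) \tag{8}$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{9}$$

$$h_t = o_t \odot \tanh(C_t) \tag{10}$$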

where $x_t$ represents the input word at time step $t$; $i_t$ the input (memory) gate; $f_t$ the forget gate; $o_t$ the output gate; $C_t$ the cell state; $\tilde{C}_t$ the temporary (candidate) cell state; $h_t$ the hidden-state output at each time step; $h_{t-1}$ the hidden state at the previous time step; $C_{t-1}$ the cell state at the previous time step; $W_i$, $W_f$, $W_o$, and $W_C$ the weight matrices of the current state; $b_i$, $b_f$, $b_o$, and $b_C$ the corresponding bias terms; $\sigma$ the sigmoid function; and $\odot$ element-wise multiplication.
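The LSTM update and the bidirectional concatenation used in the BiLSTM layer can be sketched in pure Python. Each step concatenates $[h_{t-1}, x_t]$, computes the three gates and the candidate state, and updates $C_t$ and $h_t$. The backward LSTM runs over the reversed sequence, and its outputs are re-aligned so that $H_t = [F_t ; B_t]$. The parameter dictionary layout, the helper names, and the random initialization are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def affine(W, v, b):
    """Compute W . v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; p maps names such as "W_i", "b_i" to the gate parameters."""
    z = h_prev + x_t                                                 # [h_{t-1}, x_t]
    i = [sigmoid(a) for a in affine(p["W_i"], z, p["b_i"])]          # input gate
    f = [sigmoid(a) for a in affine(p["W_f"], z, p["b_f"])]          # forget gate
    o = [sigmoid(a) for a in affine(p["W_o"], z, p["b_o"])]          # output gate
    c_tilde = [math.tanh(a) for a in affine(p["W_C"], z, p["b_C"])]  # candidate state
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

def run_lstm(xs, p, hidden):
    h, c = [0.0] * hidden, [0.0] * hidden
    outputs = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, p)
        outputs.append(h)
    return outputs

def bilstm(xs, p_fwd, p_bwd, hidden):
    """H_t = [F_t ; B_t]: a forward pass plus a backward pass over the reversed input."""
    F = run_lstm(xs, p_fwd, hidden)
    B = run_lstm(xs[::-1], p_bwd, hidden)[::-1]   # re-align backward states to positions
    return [f_t + b_t for f_t, b_t in zip(F, B)]

def random_params(input_dim, hidden, rng):
    """Hypothetical random initialization for the four gate blocks."""
    params = {}
    for g in ("i", "f", "o", "C"):
        params["W_" + g] = [[rng.uniform(-0.5, 0.5) for _ in range(hidden + input_dim)]
                            for _ in range(hidden)]
        params["b_" + g] = [0.0] * hidden
    return params


rng = random.Random(42)
xs = [[rng.uniform(-1, 1) for _ in range(5)] for _ in range(4)]  # 4 steps of 5-d inputs
H = bilstm(xs, random_params(5, 3, rng), random_params(5, 3, rng), hidden=3)
print(len(H), len(H[0]))   # prints: 4 6
```

The forward and backward directions have separate parameters. Because each $h_t$ is an output gate times a tanh, every feature in H lies strictly between -1 and 1.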

4.4. CRF Layer

The conditional random field (CRF) model is a discriminative probabilistic model [34]. It combines the advantages of the hidden Markov model (HMM) and the maximum entropy Markov model (MEMM): it relaxes the strict independence assumptions of the HMM and avoids the locally normalized decisions and resulting label bias problem of the MEMM. A CRF is well suited to labeling sequence data because it considers the dependencies among labels. It obtains the globally optimal label sequence from the relationships between adjacent labels, which adds constraints to the final predicted labels. For example, a tag starting with “B” cannot be followed by an “O” tag, and a tag starting with “E” cannot be followed by a tag starting with “I”. Given a model input $x = (x_1, x_2, \ldots, x_n)$ with a tag sequence $y = (y_1, y_2, \ldots, y_n)$, the score of the sentence is calculated by Equation (11):

$$s(x, y) = \sum_{i=1}^{n-1} A_{y_i, y_{i+1}} + \sum_{i=1}^{n} P_{i, y_i} \tag{11}$$

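where $P_{i, y_i}$ is the score of assigning label $y_i$ to the $i$-th character, output by the preceding layer, and $A$ is the transition score matrix, with $A_{j,k}$ the score of moving from label $j$ to label $k$. The CRF scores are normalized over all possible tag sequences $Y_x$, and the model is trained with the log-likelihood as the objective (its negative serving as the loss function), as shown in Equation (12):

$$\log p(y \mid x) = s(x, y) - \log \sum_{\tilde{y} \in Y_x} \exp\big(s(x, \tilde{y})\big) \tag{12}$$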
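The sentence score and the training loss can be sketched as follows. The normalizing term sums over exponentially many tag sequences. The forward algorithm computes it in time linear in sentence length by carrying, for each tag, the log-sum of all prefix scores ending in that tag. A brute-force enumeration is included only to check the result on a tiny example. The emission scores P and transition scores A in the usage lines are hypothetical numbers.

```python
import math
from itertools import product

def path_score(P, A, y):
    """s(x, y): emission scores P[i][y_i] plus transition scores A[y_i][y_{i+1}]."""
    emission = sum(P[i][tag] for i, tag in enumerate(y))
    transition = sum(A[y[i]][y[i + 1]] for i in range(len(y) - 1))
    return emission + transition

def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def log_partition(P, A):
    """log of the sum of exp(s(x, y)) over all tag sequences, via the forward algorithm."""
    k = len(A)
    alpha = list(P[0])   # log-scores of length-1 prefixes ending in each tag
    for i in range(1, len(P)):
        alpha = [logsumexp([alpha[s] + A[s][t] for s in range(k)]) + P[i][t]
                 for t in range(k)]
    return logsumexp(alpha)

def neg_log_likelihood(P, A, y):
    """Training loss: -log p(y | x) = log Z(x) - s(x, y)."""
    return log_partition(P, A) - path_score(P, A, y)

def brute_force_log_partition(P, A):
    """Exponential-time check that enumerates every tag sequence."""
    k = len(A)
    return logsumexp([path_score(P, A, y) for y in product(range(k), repeat=len(P))])


# Hypothetical scores for 3 characters and 2 tags.
P = [[1.0, 0.5], [0.2, 0.3], [0.0, 1.0]]
A = [[0.1, -0.5], [0.4, 0.2]]
print(log_partition(P, A), brute_force_log_partition(P, A))
print(neg_log_likelihood(P, A, [0, 1, 1]))
```

Minimizing this loss raises the score of the gold sequence relative to all competing sequences at once. This global normalization is what distinguishes a CRF from per-character classification.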

In the prediction phase, the Viterbi algorithm is used to find the optimal label sequence, as shown in Equation (13):

$$y^{*} = \arg\max_{\tilde{y} \in Y_x} s(x, \tilde{y}) \tag{13}$$
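Viterbi decoding, and the way transition scores enforce the BIEO constraints, can be sketched as follows. Disallowed transitions (such as “B-L” followed by “O”) receive a large negative score, so the decoder avoids them even when a per-character argmax would choose them. The transition penalties and emission scores are hypothetical. Start and end constraints (for example, a sentence should not begin with “I-L”) are omitted for brevity.

```python
def viterbi(P, A):
    """Return (best_score, best_path) maximizing sum_i P[i][y_i] + sum_i A[y_i][y_{i+1}]."""
    n, k = len(P), len(A)
    score = list(P[0])                 # best score of any prefix ending in each tag
    backpointers = []
    for i in range(1, n):
        new_score, pointers = [], []
        for t in range(k):
            prev = max(range(k), key=lambda s: score[s] + A[s][t])
            pointers.append(prev)
            new_score.append(score[prev] + A[prev][t] + P[i][t])
        score = new_score
        backpointers.append(pointers)
    last = max(range(k), key=lambda t: score[t])
    path = [last]
    for pointers in reversed(backpointers):   # follow pointers from the end
        path.append(pointers[path[-1]])
    path.reverse()
    return score[last], path


TAGS = ["B-L", "I-L", "E-L", "O"]
ALLOWED = {("B-L", "I-L"), ("B-L", "E-L"), ("I-L", "I-L"), ("I-L", "E-L"),
           ("E-L", "B-L"), ("E-L", "O"), ("O", "O"), ("O", "B-L")}
# Disallowed transitions (e.g. B-L -> O, E-L -> I-L) get a large penalty.
A = [[0.0 if (a, b) in ALLOWED else -1e4 for b in TAGS] for a in TAGS]

# Hypothetical emission scores: a per-character argmax would give B-L, O, O.
P = [[2.0, 0.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 1.0],
     [0.0, 0.0, 0.0, 1.0]]
best, path = viterbi(P, A)
print(best, [TAGS[t] for t in path])   # 3.0 ['B-L', 'E-L', 'O']
```

The decoder replaces the invalid greedy output “B-L, O, O” with the best valid sequence “B-L, E-L, O”. It runs in O(n·k²) time for n characters and k tags.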