Linking Geosocial Sensing with the Socio-Demographic Fabric of Smart Cities

Abstract

1. Introduction

1.1. Motivation

1.2. Related Work

1.3. Research Objectives

- What are the criteria for a geosocial sensor based on geosocial media to be used as a smart city “sensor”?

- What is the relation between the content or sentiment of geosocial media and socio-demographic indicators (e.g., of deprivation) at two different administrative scales, across space and over time?

1.4. Paper Structure

2. Materials and Methods

2.1. Data Sets

2.1.1. Twitter Data

2.1.2. Flickr Data

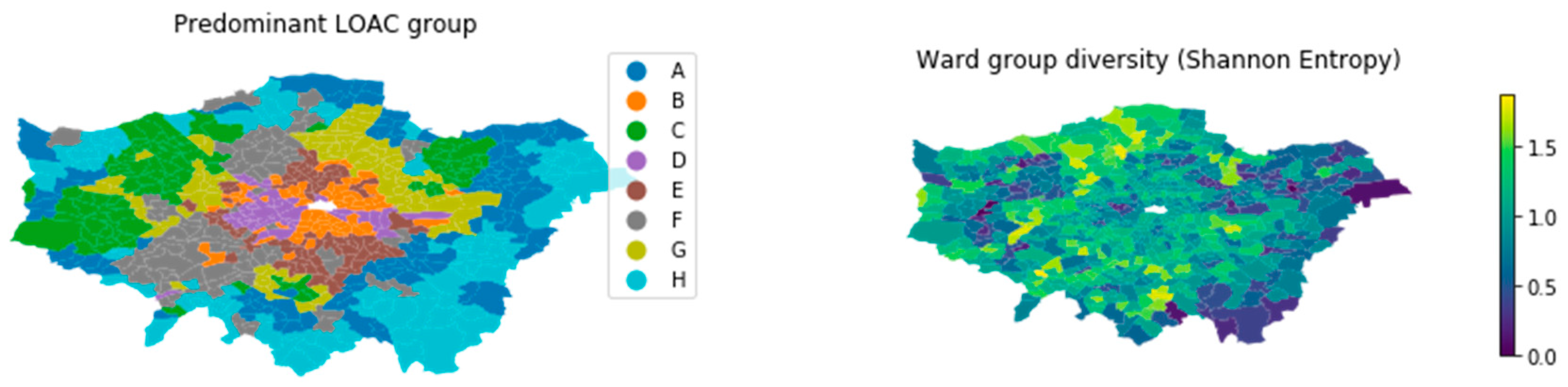

2.1.3. Socio-Demographic Data

2.2. Methods and Processing

- Use a dictionary of descriptive terms and calculate (spatial) TF-IDF scores (Section 2.2.1).

- Test several sentiment classifiers and validate results with manual inspection of a random sample of Tweets (Section 2.2.2).

- Apply the chosen sentiment analysis methods to the full Twitter data set, then correlate the resulting sentiments with socio-demographic indicators and model their relationship (Section 2.2.3).

- Analyse the spatial and temporal patterns of both the (spatial) TF-IDF scores and the sentiments (Section 2.2.4).
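A minimal sketch of how spatial TF-IDF scores might be computed follows. It assumes that all Tweets geolocated in one MSOA are pooled into a single document and that only terms from the descriptive dictionary are counted. The function name `spatial_tfidf`, the whitespace tokenisation, and the weighting (relative term frequency times log(N/df), with N the number of MSOAs) are illustrative assumptions, not necessarily the exact variant used in the study.

```python
import math
from collections import Counter


def spatial_tfidf(docs_by_area, vocabulary):
    """TF-IDF per area, treating all texts of one area as a single document.

    docs_by_area: dict mapping an area code (e.g., an MSOA code) to a list of texts.
    vocabulary: set of descriptive terms to score; all other tokens are ignored.
    Returns a dict: area -> {term: score}.
    """
    # Term counts per area (naive lower-case whitespace tokenisation)
    counts = {}
    for area, texts in docs_by_area.items():
        tokens = [tok for text in texts for tok in text.lower().split() if tok in vocabulary]
        counts[area] = Counter(tokens)

    n_areas = len(counts)
    # Document frequency: number of areas in which a term occurs at least once
    df = Counter(term for area_counts in counts.values() for term in area_counts)

    scores = {}
    for area, area_counts in counts.items():
        total = sum(area_counts.values())
        scores[area] = {
            term: (n / total) * math.log(n_areas / df[term])
            for term, n in area_counts.items()
        }
    return scores
```

Under this weighting, a term that occurs in every MSOA scores zero, so the highest-scoring terms are those that are frequent in one MSOA but rare across the city.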

2.2.1. Semantics with Spatial TF-IDF

- Find the Top-5 terms per Middle Layer Super Output Area (MSOA), i.e., rank the terms by their TF-IDF score within each MSOA and keep the five highest.

- Count how often each term appears in an MSOA Top-5, weighting each appearance by its reversed rank (i.e., first place scores 5, second place 4, and so on), and sum these scores to rank the terms by how prominently they appear across all MSOA Top-5 lists.

- For the five terms ranked highest in the previous step, look up their global and local TF-IDF scores in each MSOA.

- Compare the global and local TF-IDF scores of those overall top-ranked terms.
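The first two steps above can be sketched as follows. The sketch assumes the TF-IDF scores are already available as a mapping from MSOA to `{term: score}`. The function names and the alphabetical tie-breaking are hypothetical choices made for reproducibility, not details taken from the study.

```python
from collections import defaultdict


def top_terms_per_area(scores_by_area, k=5):
    """Step 1: the k highest-scoring terms per area (ties broken alphabetically)."""
    return {
        area: [term for term, _ in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]]
        for area, scores in scores_by_area.items()
    }


def rank_terms_by_reversed_ranks(top_terms, k=5):
    """Step 2: first place earns k points, second k - 1, ...; points are summed over areas."""
    points = defaultdict(int)
    for terms in top_terms.values():
        for position, term in enumerate(terms):
            points[term] += k - position
    # Highest total first; alphabetical order as a reproducible tie-breaker
    return sorted(points.items(), key=lambda kv: (-kv[1], kv[0]))
```

Because each appearance adds between 1 and 5 points, the summed score combines how often a term reaches an MSOA Top-5 with how high it ranks there.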

2.2.2. Sentiments from Geosocial Media

2.2.3. Sentiments and Socio-Demographic Indicators

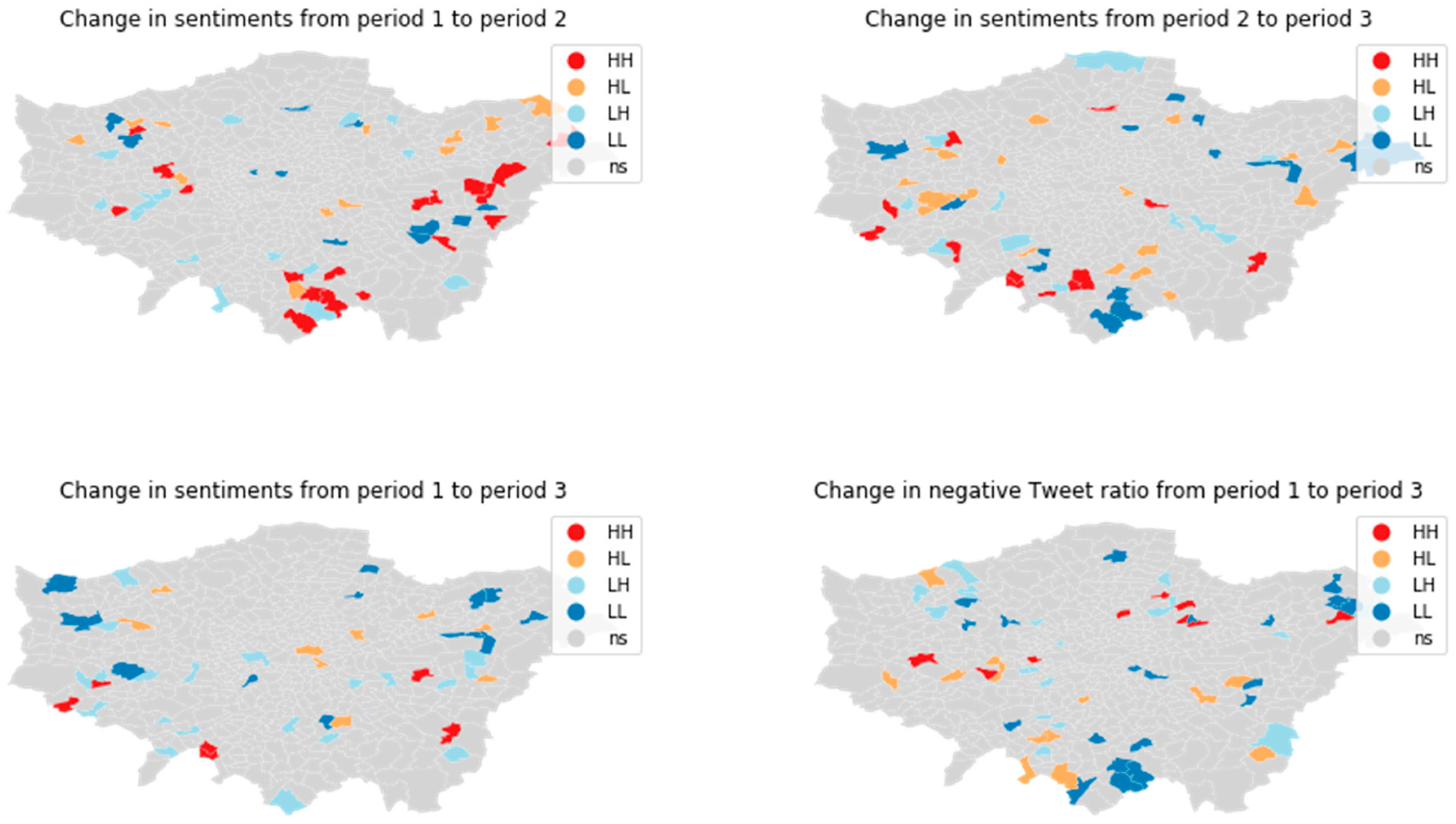

2.2.4. Spatial Distributions and Changes over Time

3. Results

3.1. Criteria for a Geosocial Sensor

3.2. Geosocial Semantics and Socio-Demographic Indicators

3.3. Sentiments and Socio-Demographic Indicators

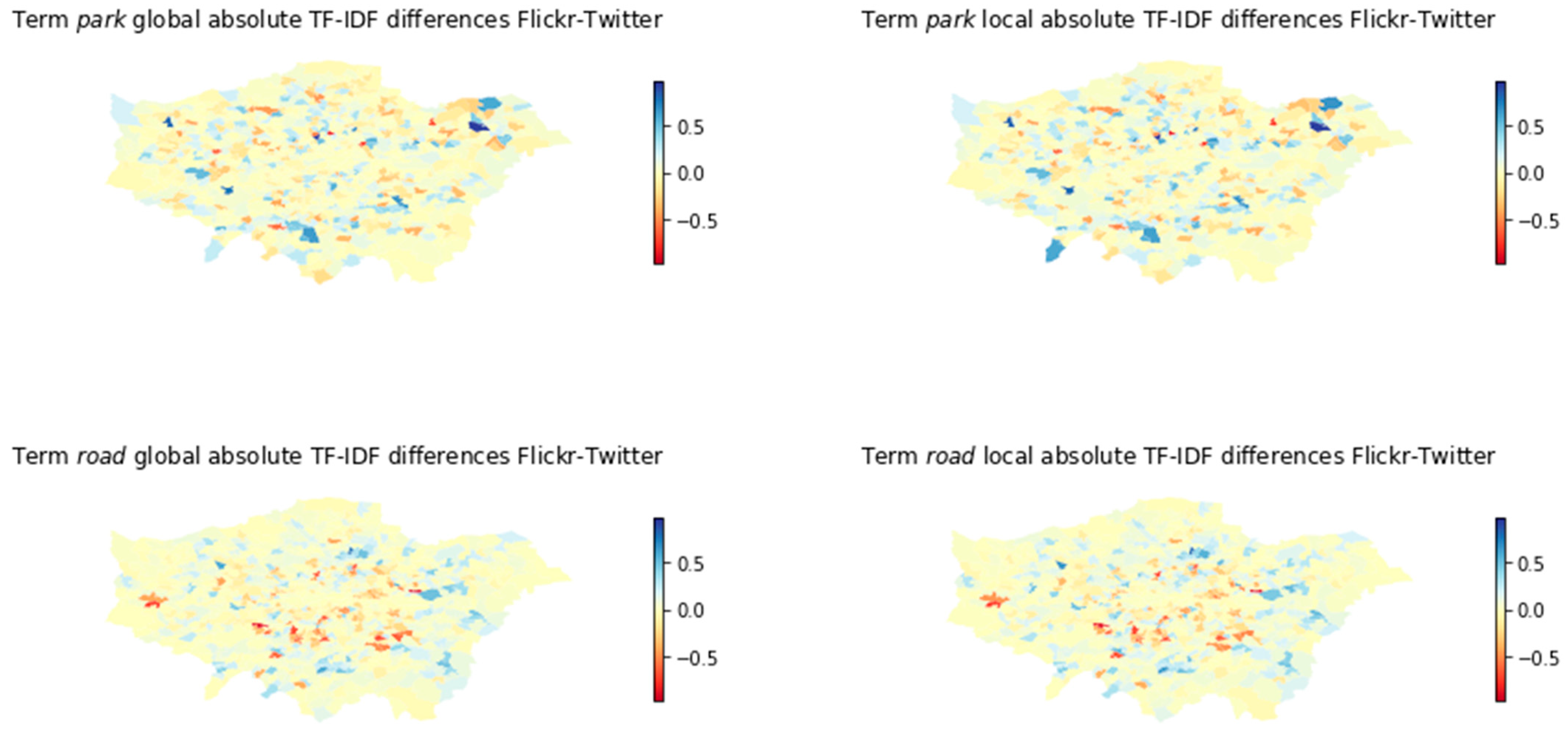

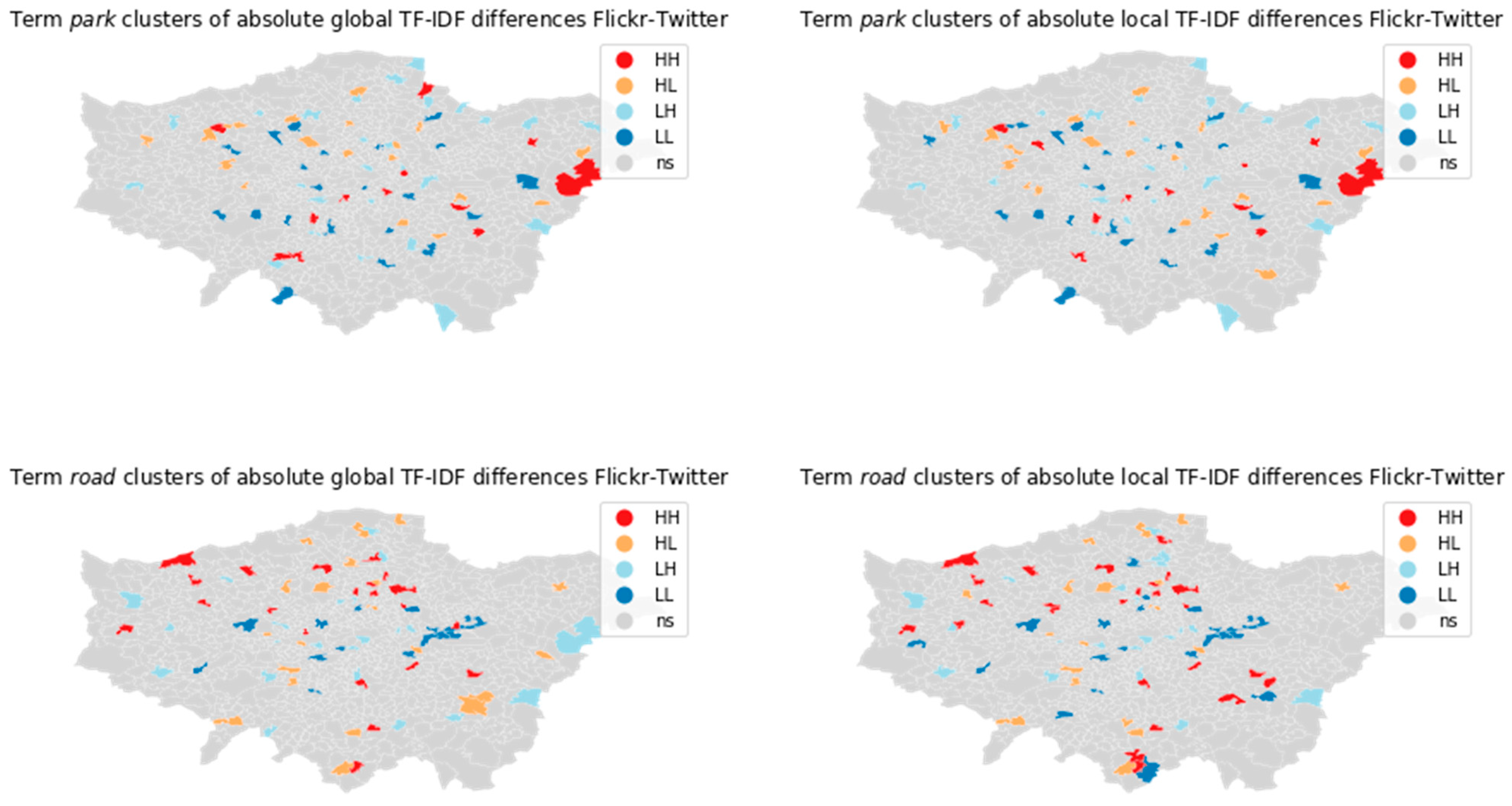

3.4. Geosocial Semantics in Space

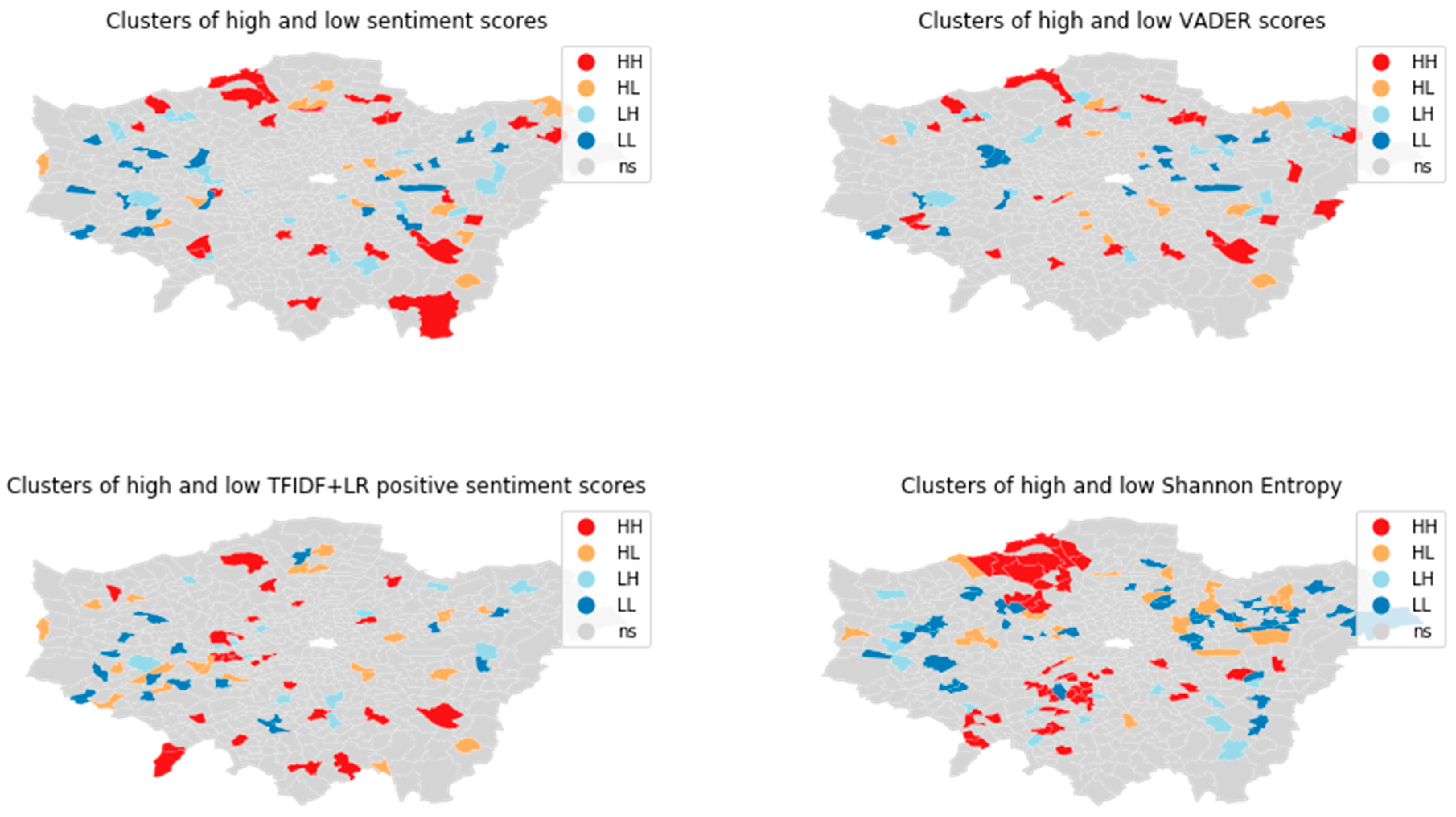

3.5. Spatial Distribution of Sentiments

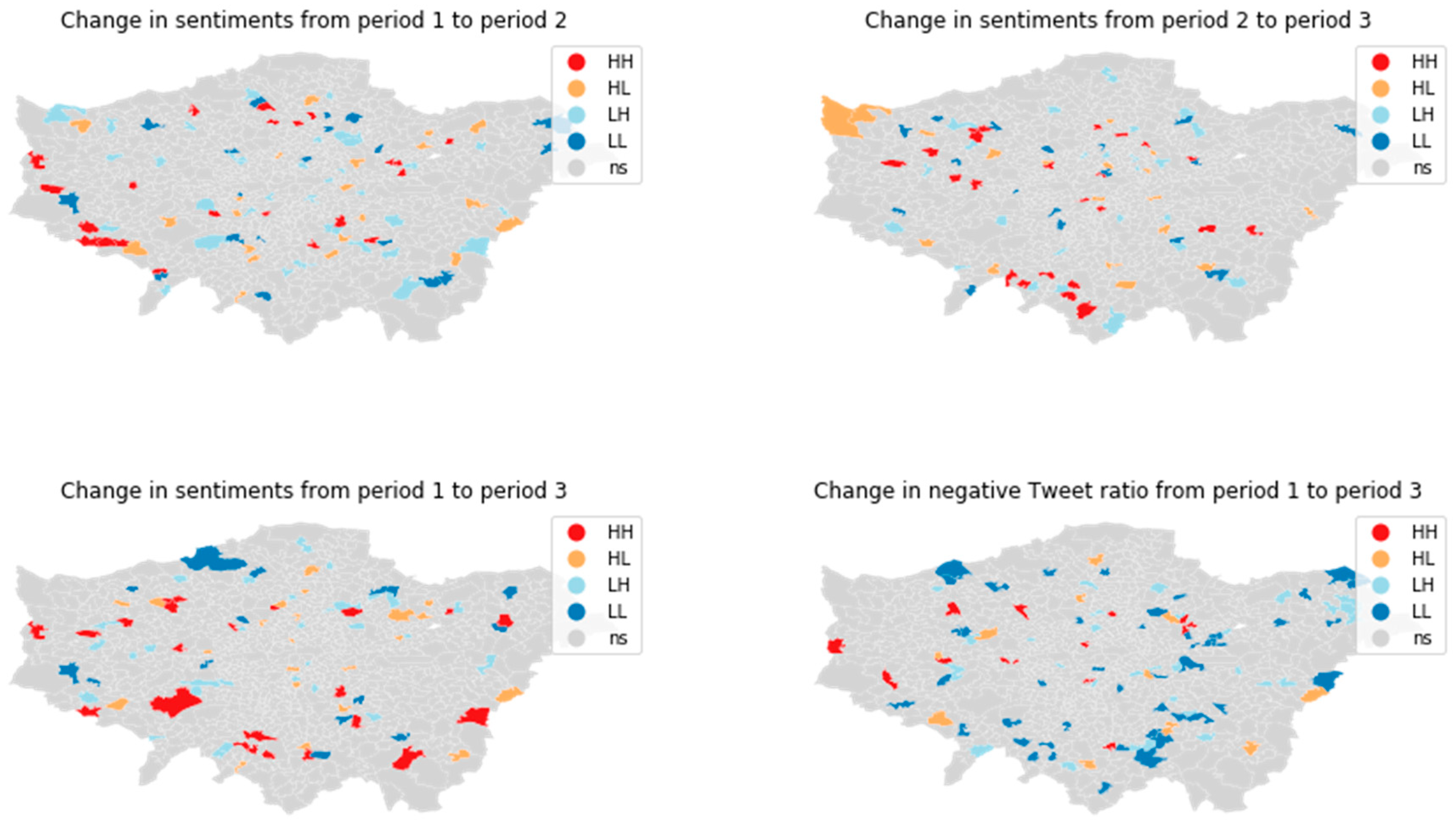

3.6. Developments over Time

4. Discussion and Conclusions

4.1. Discussion of Results and Study Design

4.2. Implications and Outlook

Supplementary Materials

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Variable | Explanation |
|---|---|
| age_0-15_perc, age_16-29_perc, age_30-44_perc, age_45-64_perc, age_65_perc | Percentage of the respective age group in the residential population |
| qual_4min | Percentage of residents whose highest level of qualification is Level 4 or above |
| hh_1p_perc | Percentage of single-person households |
| hh_cpl_perc | Percentage of couple households |
| hh_cpl_kids_perc | Percentage of couple households with children |
| BAME_perc | Percentage of Black, Asian, and Minority Ethnic residents |
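Sentiments are correlated with socio-demographic indicators such as those listed above. As an illustration only, the sketch below assumes a Spearman rank correlation between a per-MSOA sentiment measure and one indicator column (e.g., BAME_perc). The coefficient actually used in the study is not stated here, and the helper names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for idx in order[start:end + 1]:
            ranks[idx] = shared_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the (tie-averaged) ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

The two input lists must be aligned by MSOA, for example a list of average sentiment scores and the matching BAME_perc values. A rank-based coefficient is less sensitive than Pearson's r to the heavily skewed Tweet counts seen across MSOAs.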

| Algorithmic Classification | Manual: Negative | Manual: Neutral | Manual: Positive | Grand Total |
|---|---|---|---|---|
| Negative | 292 | 177 | 31 | 500 |
| Neutral | 20 | 381 | 99 | 500 |
| Positive | 0 | 192 | 308 | 500 |
| Grand Total | 312 | 750 | 438 | 1500 |

| Algorithmic Classification | Manual: Negative | Manual: Neutral | Manual: Positive | Grand Total |
|---|---|---|---|---|
| Negative | 293 | 191 | 16 | 500 |
| Neutral | 7 | 491 | 2 | 500 |
| Positive | 7 | 256 | 237 | 500 |
| Grand Total | 307 | 938 | 255 | 1500 |

| Algorithmic Classification | Manual: Negative | Manual: Neutral | Manual: Positive | Grand Total |
|---|---|---|---|---|
| Negative | 446 | 52 | 2 | 500 |
| Neutral | 14 | 411 | 75 | 500 |
| Positive | 1 | 107 | 392 | 500 |
| Grand Total | 461 | 570 | 469 | 1500 |
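In the confusion matrices above, rows give the algorithmic classification and columns give the manual classification, so the diagonal holds the agreements. A small helper (the name `classification_metrics` is hypothetical) computes overall agreement and per-class precision and recall from such a matrix.

```python
def classification_metrics(matrix, labels=("negative", "neutral", "positive")):
    """Agreement and per-class precision/recall from a confusion matrix.

    matrix[i][j] counts items the algorithm assigned to class i that were
    manually labelled as class j (rows: algorithmic, columns: manual).
    """
    total = sum(sum(row) for row in matrix)
    agreement = sum(matrix[k][k] for k in range(len(labels))) / total
    per_class = {}
    for k, label in enumerate(labels):
        predicted = sum(matrix[k])                 # row total
        actual = sum(row[k] for row in matrix)     # column total
        per_class[label] = {
            "precision": matrix[k][k] / predicted if predicted else 0.0,
            "recall": matrix[k][k] / actual if actual else 0.0,
        }
    return agreement, per_class
```

Applied to the three matrices above, overall agreement is 981/1500 (about 0.65), 1021/1500 (about 0.68) and 1249/1500 (about 0.83), respectively. Because each row sums to 500 by design, the precision values are directly comparable across classes.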

| Predominant Group | Tweet Count | Negative Tweet Count | Negative Tweet Ratio | Average Sentiment | Average VADER Score | Average TF-IDF+LR Positive Score | Shannon Entropy |
|---|---|---|---|---|---|---|---|
| A | 1012.54 | 19.42 | 0.02 | 1.18 | 0.33 | 0.74 | 0.95 |
| B | 7927.45 | 99.54 | 0.01 | 1.17 | 0.30 | 0.75 | 1.02 |
| C | 1849.19 | 42.72 | 0.02 | 1.15 | 0.29 | 0.73 | 0.74 |
| D | 36,185.93 | 348.89 | 0.01 | 1.18 | 0.33 | 0.76 | 0.94 |
| E | 4491.02 | 56.06 | 0.01 | 1.18 | 0.32 | 0.76 | 1.12 |
| F | 3277.74 | 53.75 | 0.02 | 1.19 | 0.34 | 0.75 | 1.16 |
| G | 2125.75 | 31.34 | 0.02 | 1.17 | 0.31 | 0.74 | 0.95 |
| H | 1009.00 | 13.14 | 0.02 | 1.19 | 0.35 | 0.75 | 0.87 |
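The table above reports averages over MSOAs that share a predominant group. The sketch below assumes per-MSOA records with a group label, a Tweet count and a negative Tweet count; the field names are hypothetical. It computes the negative Tweet ratio per MSOA first and then averages it, which is one plausible reading of the table and need not equal the ratio of the averaged counts.

```python
from collections import defaultdict


def group_means(records):
    """Average per-area metrics within each predominant group.

    records: iterable of dicts with the hypothetical keys 'group', 'tweets'
    (Tweet count, assumed > 0) and 'negative' (negative Tweet count).
    """
    acc = defaultdict(lambda: {"tweets": 0.0, "negative": 0.0, "ratio": 0.0, "n": 0})
    for r in records:
        g = acc[r["group"]]
        g["tweets"] += r["tweets"]
        g["negative"] += r["negative"]
        # Ratio is computed per area first, then averaged across the group
        g["ratio"] += r["negative"] / r["tweets"]
        g["n"] += 1
    return {
        group: {
            "tweet_count": g["tweets"] / g["n"],
            "negative_count": g["negative"] / g["n"],
            "negative_ratio": g["ratio"] / g["n"],
        }
        for group, g in acc.items()
    }
```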

| | Count P1 | Count P2 | Count P3 | Sent P1 | Sent P2 | Sent P3 | Sent P1 -> P2 | Sent P2 -> P3 | Sent P1 -> P3 | NTR P1 -> P3 |
|---|---|---|---|---|---|---|---|---|---|---|
| mean | 1508.01 | 1137.16 | 716.90 | 1.15 | 1.20 | 1.20 | 4.58 | 0.32 | 4.70 | 0.11 |
| std | 10,805.94 | 8773.74 | 4822.94 | 0.06 | 0.08 | 0.08 | 7.10 | 7.33 | 7.91 | 1.56 |
| min | 12.00 | 8.00 | 5.00 | 0.94 | 0.91 | 0.90 | −25.00 | −25.78 | −26.85 | −1.00 |
| 25% | 155.00 | 121.00 | 84.00 | 1.11 | 1.15 | 1.15 | 0.57 | −3.27 | 0.50 | −0.64 |
| 50% | 373.00 | 291.00 | 197.00 | 1.15 | 1.20 | 1.20 | 4.31 | 0.32 | 4.43 | −0.16 |
| 75% | 902.00 | 614.00 | 472.00 | 1.18 | 1.24 | 1.24 | 8.27 | 4.08 | 8.70 | 0.20 |
| max | 259,828 | 212,996 | 112,079 | 1.63 | 1.53 | 1.57 | 32.26 | 38.46 | 37.84 | 13.43 |
| total | 942,507 | 710,722 | 448,063 | | | | | | | |
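The period comparison above follows the layout of a standard descriptive summary: mean, sample standard deviation, minimum, quartiles and maximum. The sketch below assumes that the "Sent Px -> Py" columns hold per-MSOA relative changes in average sentiment, expressed in percent. It uses only the standard library, and the function names are hypothetical.

```python
import statistics


def percent_change(before, after):
    """Per-area relative change in percent (before values must be non-zero)."""
    return [(b - a) / a * 100 for a, b in zip(before, after)]


def describe(values):
    """Mean, sample standard deviation, min, quartiles and max of a list (n >= 2)."""
    # 'inclusive' uses linear interpolation between order statistics
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),
        "min": min(values),
        "25%": q1,
        "50%": q2,
        "75%": q3,
        "max": max(values),
    }
```

Averaging per-MSOA changes gives each MSOA equal weight regardless of its Tweet volume. This may explain why the mean change (4.58) differs from the change between the mean sentiments of P1 and P2.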

| SFOS | OECD | Geosocial Sensor |
|---|---|---|
| Traffic noise | -/- | Mentions of traffic noise |
| Air quality | Air quality | Mentions of air quality |
| Violence | Homicides; feeling safe at night | Mentions of violence |
| Burglaries | -/- | Mentions of burglaries |
| Road accidents | -/- | Mentions of road accidents |
| Nationalities | -/- | Language of Tweet, user profile information |
| Cultural demand | -/- | Mentions of wishes to visit cinemas, theaters, museums, etc. |
| Cultural offer | -/- | Mentions of visits |
| -/- | Recreational green space | Mentions of park visits or related activities |
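The rightmost column proposes geosocial proxies based on mentions. The sketch below shows naive keyword matching against hand-made keyword lists. The indicator names and keywords are hypothetical, and such lists would need curation and validation, for instance against a manually labelled sample as in Section 2.2.2.

```python
import re

# Hypothetical keyword lists; a real sensor would need curated, validated dictionaries.
INDICATOR_KEYWORDS = {
    "traffic_noise": ["traffic noise", "honking", "loud traffic"],
    "air_quality": ["air quality", "smog", "pollution"],
    "park_visits": ["park", "picnic", "green space"],
}


def count_mentions(texts, keywords=INDICATOR_KEYWORDS):
    """Count texts mentioning each indicator at least once (whole words, case-insensitive)."""
    patterns = {
        name: re.compile(r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b", re.IGNORECASE)
        for name, terms in keywords.items()
    }
    counts = {name: 0 for name in keywords}
    for text in texts:
        for name, pattern in patterns.items():
            if pattern.search(text):
                counts[name] += 1
    return counts
```

Word boundaries keep "park" from matching "Parking". However, place names such as "Hyde Park" would still be counted as park mentions, which is one reason such a sensor needs validation before use.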

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ostermann, F.O. Linking Geosocial Sensing with the Socio-Demographic Fabric of Smart Cities. ISPRS Int. J. Geo-Inf. 2021, 10, 52. https://doi.org/10.3390/ijgi10020052
