Real-Time Vertical Ground Reaction Force Estimation in a Unified Simulation Framework Using Inertial Measurement Unit Sensors

Abstract

1. Introduction

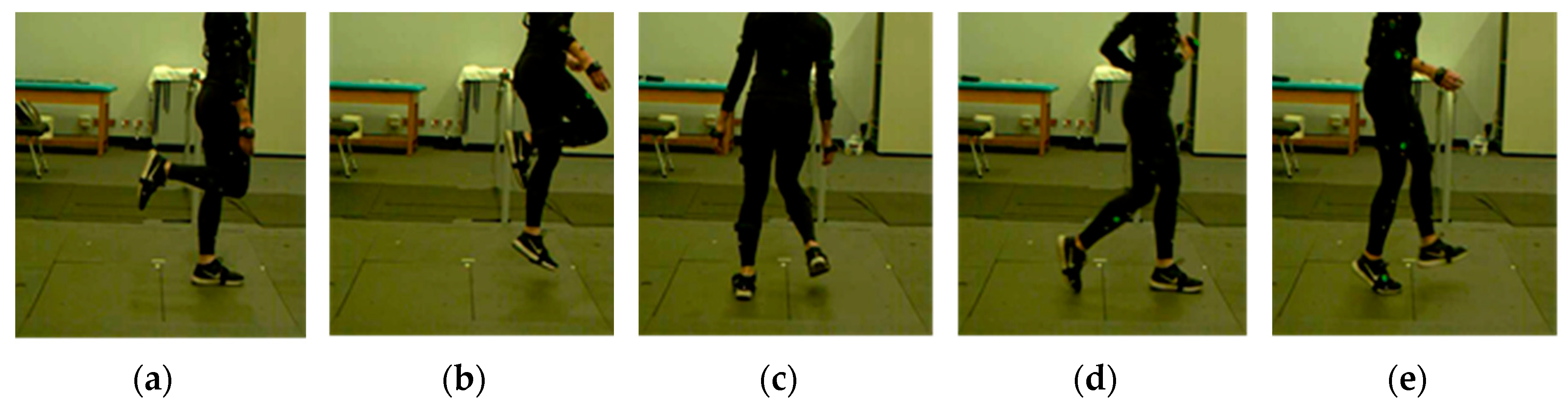

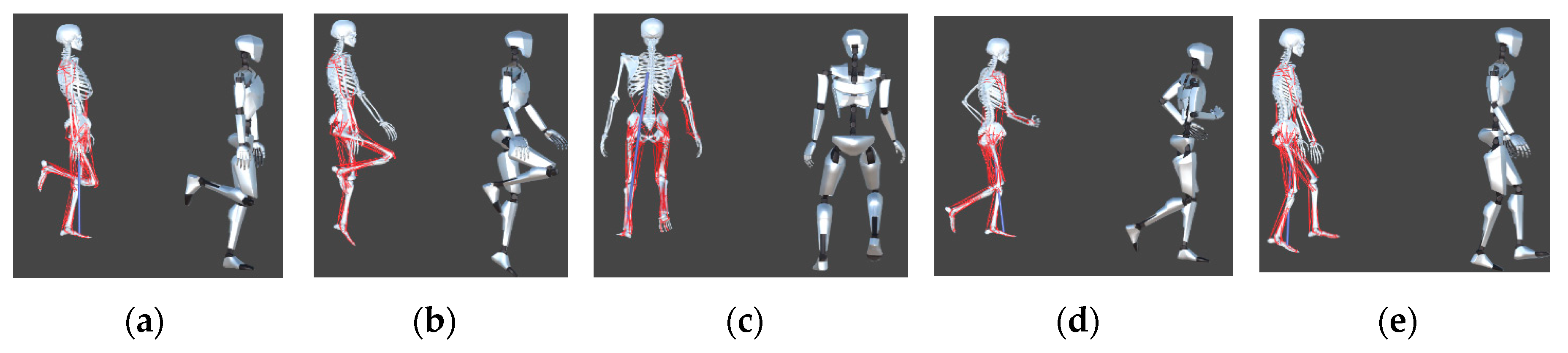

2. Methods

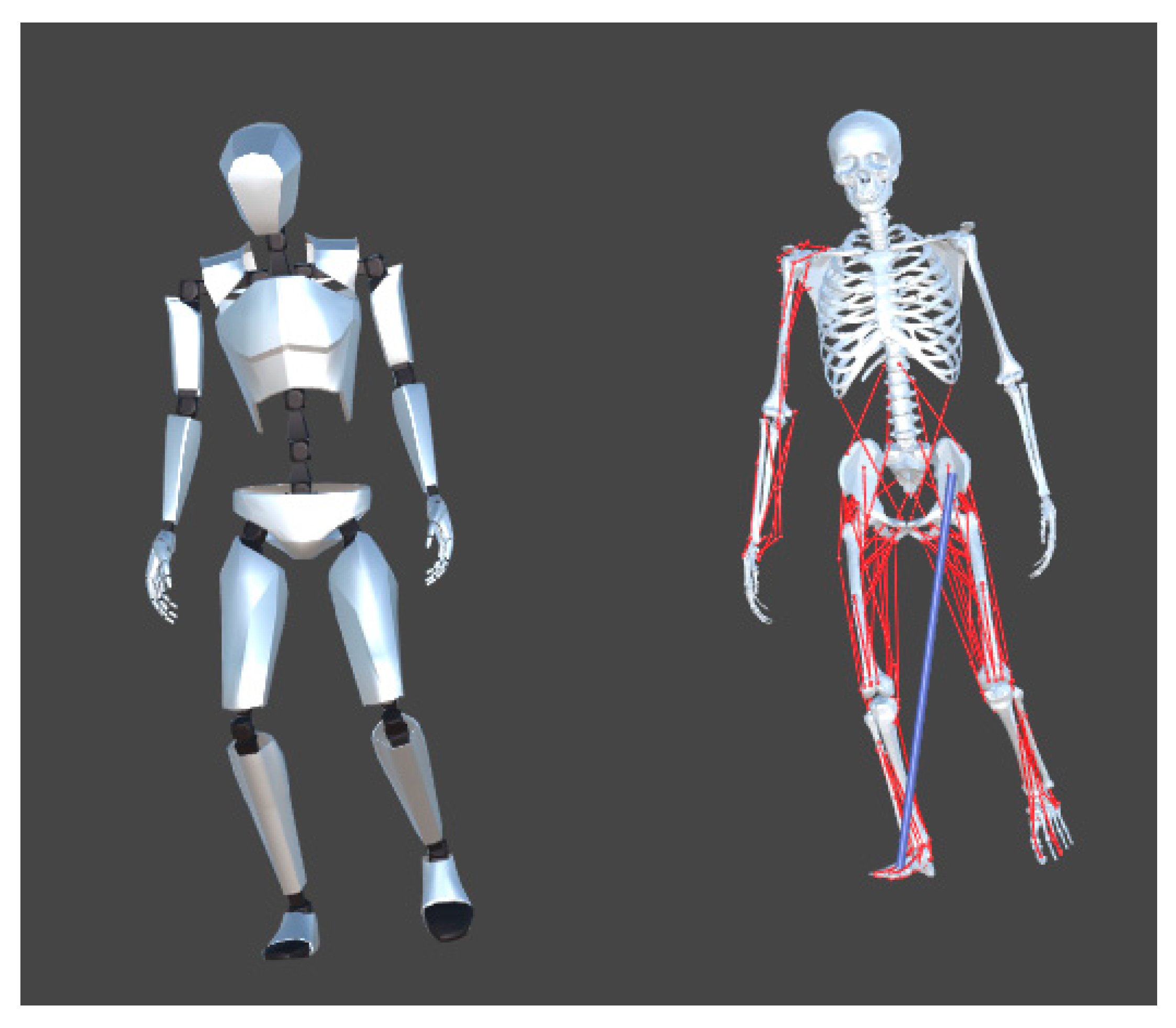

2.1. Framework

2.2. OpenSim

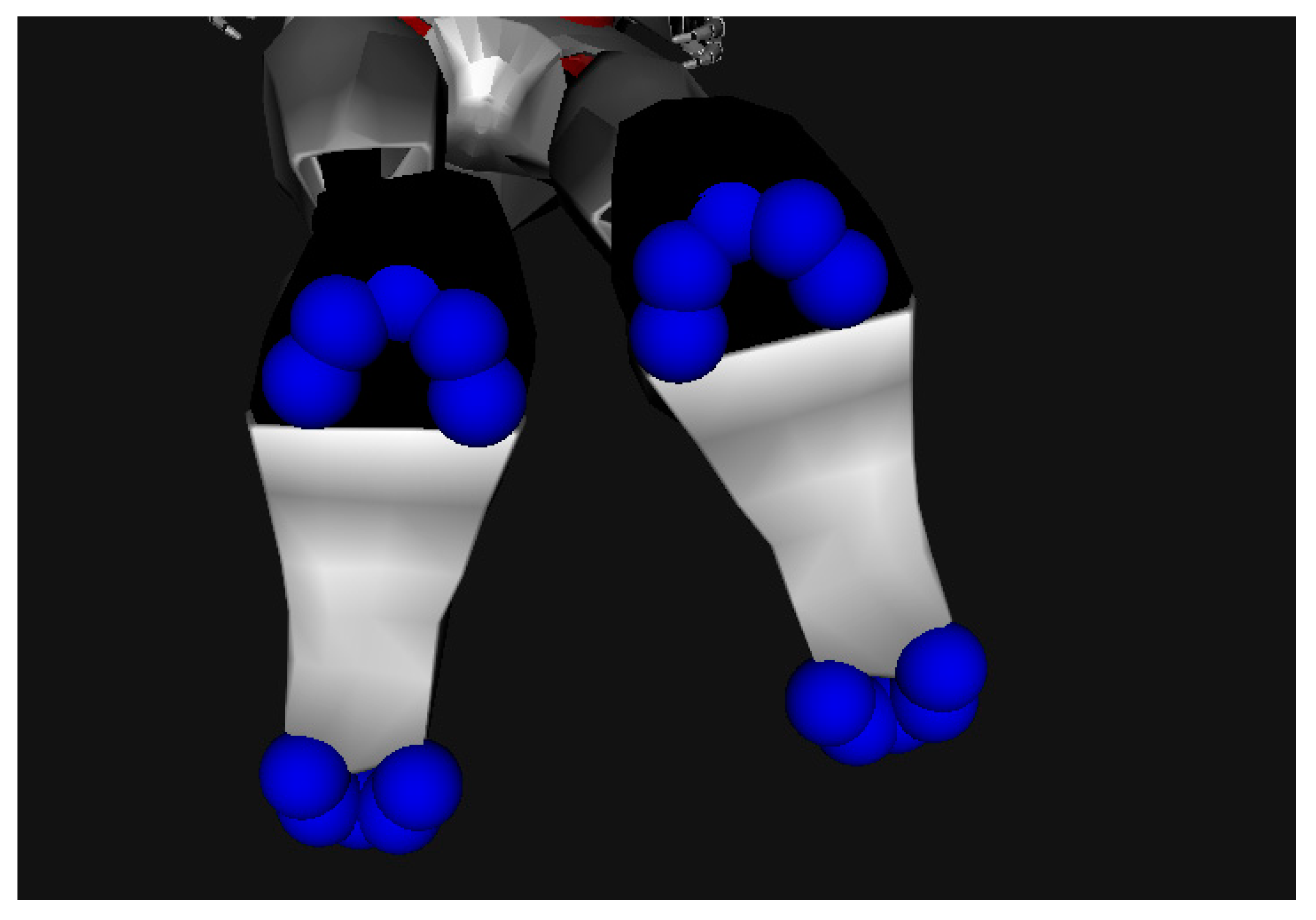

2.3. Ground Reaction Force Estimation

3. Experimental Setup

3.1. Subject Information

3.2. Equipment

3.3. Subject Preparation

3.4. Experimental Protocol

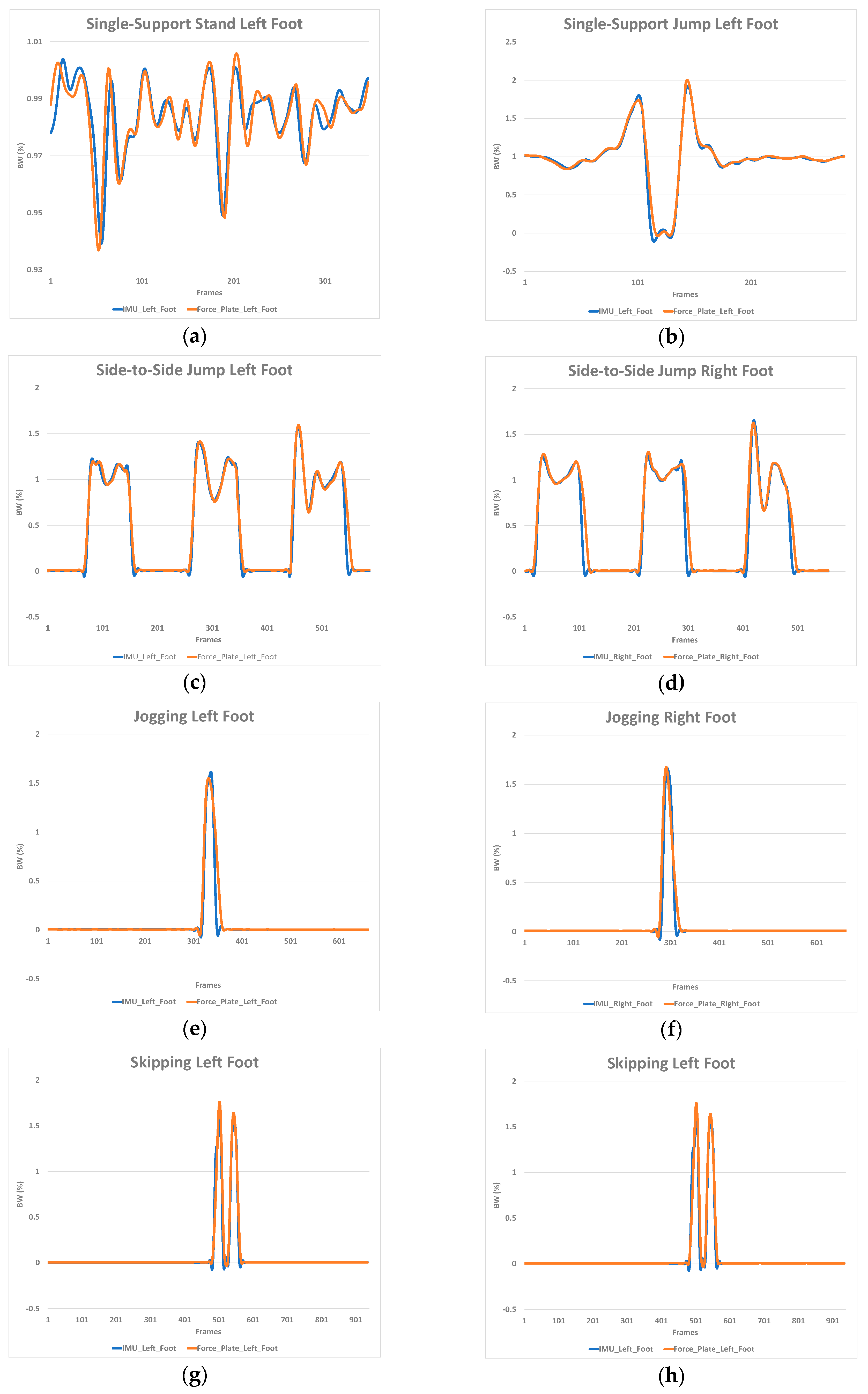

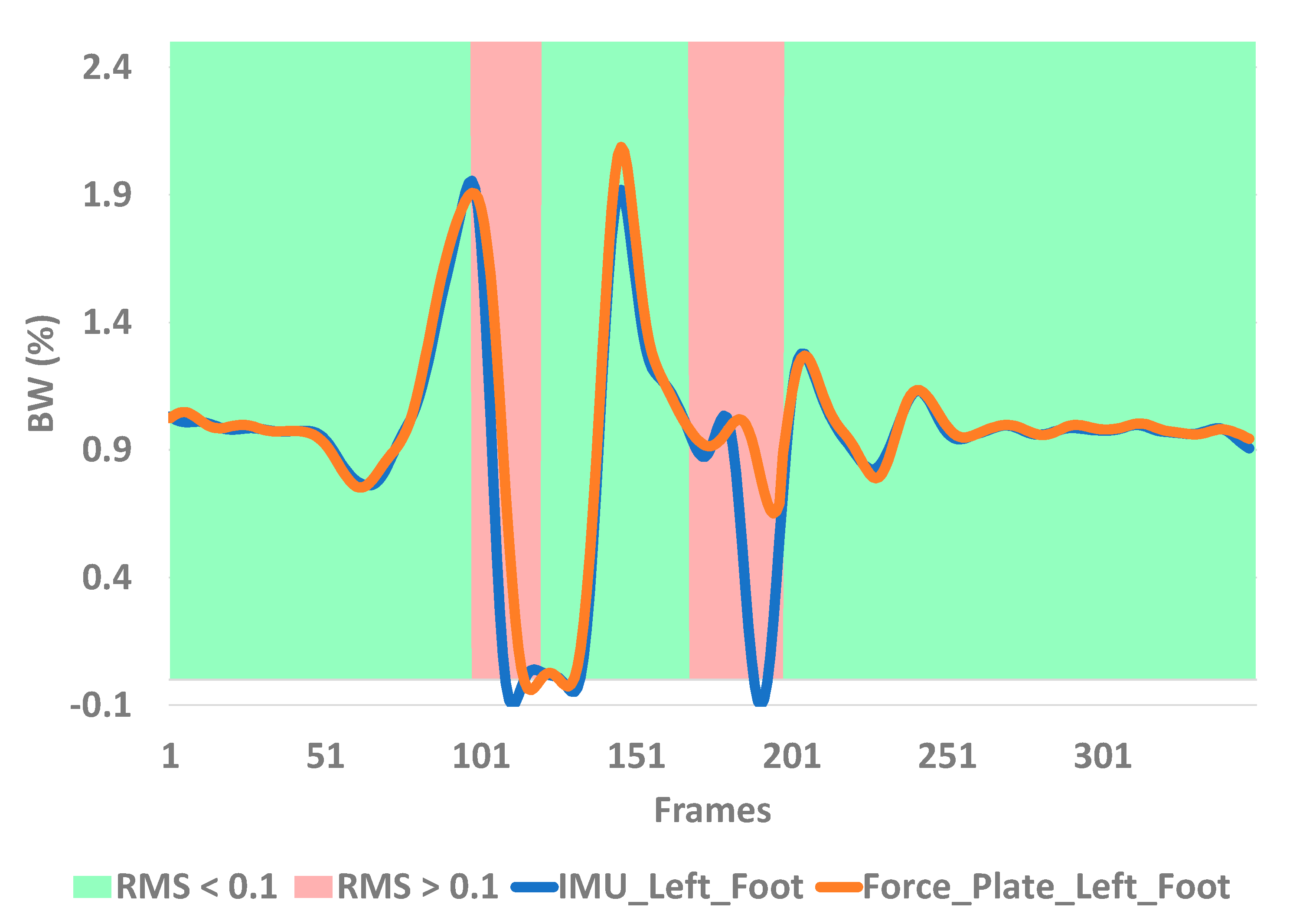

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Ethical Statements

| TASK | TRIAL 1 (BW) | TRIAL 2 (BW) | TRIAL 3 (BW) | TRIAL 4 (BW) |
|---|---|---|---|---|
| JOGGING | 0.106 | 0.080 | 0.074 | 0.090 |
| JOGGING, LEFT LEG | 0.117 | 0.087 | 0.085 | 0.091 |
| JOGGING, RIGHT LEG | 0.096 | 0.074 | 0.062 | 0.089 |
| SKIPPING | 0.102 | 0.087 | 0.121 | 0.091 |
| SKIPPING, LEFT LEG | 0.080 | 0.055 | 0.136 | 0.075 |
| SKIPPING, RIGHT LEG | 0.124 | 0.119 | 0.106 | 0.108 |
| LEFT LEG SINGLE-SUPPORT | 0.004 | 0.068 | 0.031 | 0.002 |
| LEFT LEG SINGLE-SUPPORT JUMP | 0.181 | 0.215 | 0.140 | 0.160 |
| SIDE-TO-SIDE JUMP | 0.274 | 0.108 | 0.154 | 0.158 |
| SIDE-TO-SIDE JUMP, LEFT LEG | 0.461 | 0.081 | 0.159 | 0.148 |
| SIDE-TO-SIDE JUMP, RIGHT LEG | 0.087 | 0.134 | 0.149 | 0.168 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Citation

Recinos, E.; Abella, J.; Riyaz, S.; Demircan, E. Real-Time Vertical Ground Reaction Force Estimation in a Unified Simulation Framework Using Inertial Measurement Unit Sensors. Robotics 2020, 9, 88. https://doi.org/10.3390/robotics9040088
