Probabilistic Allocation of Specialized Robots on Targets Detected Using Deep Learning Networks

Abstract

1. Introduction

2. Related Work

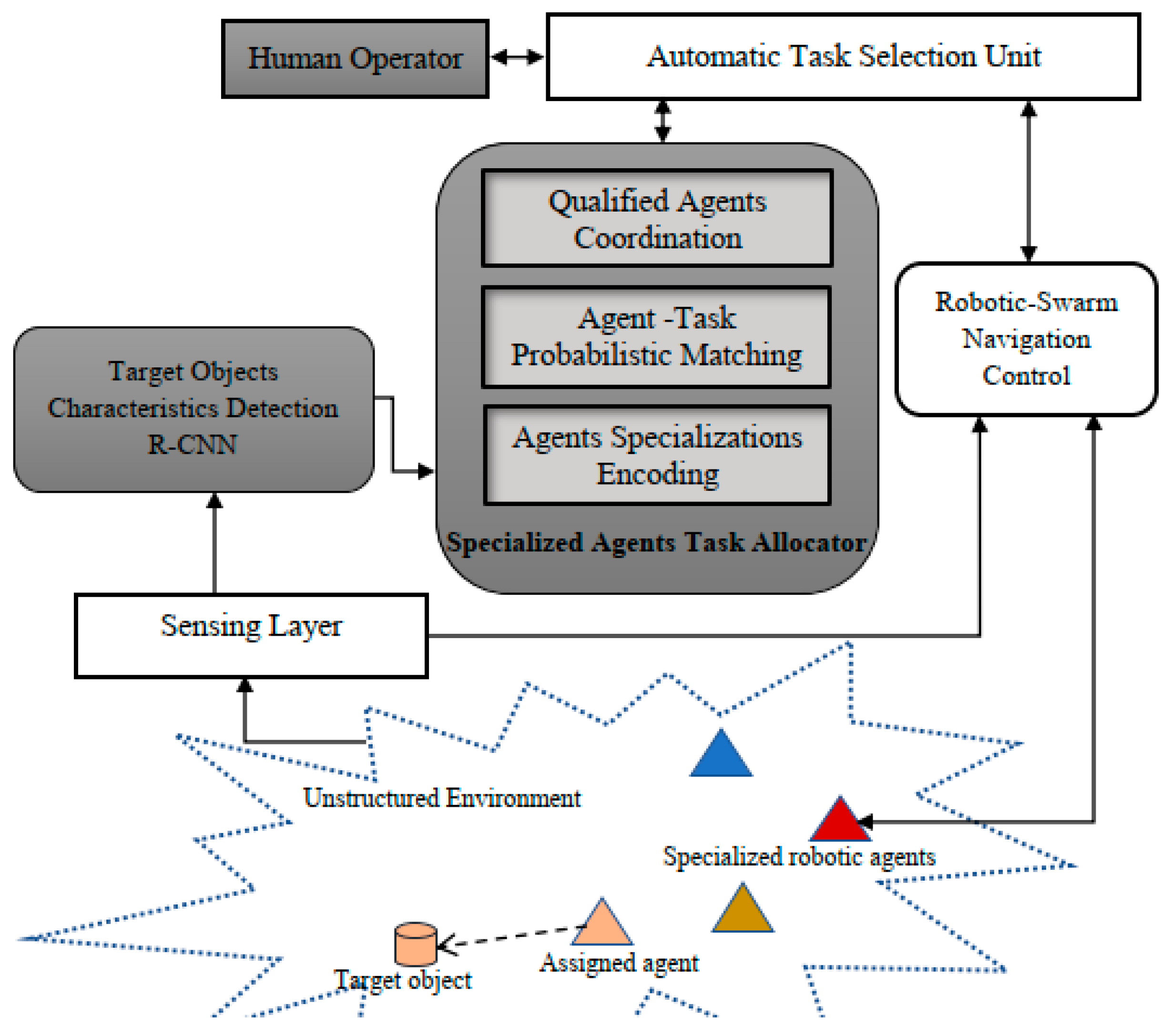

3. Proposed Framework

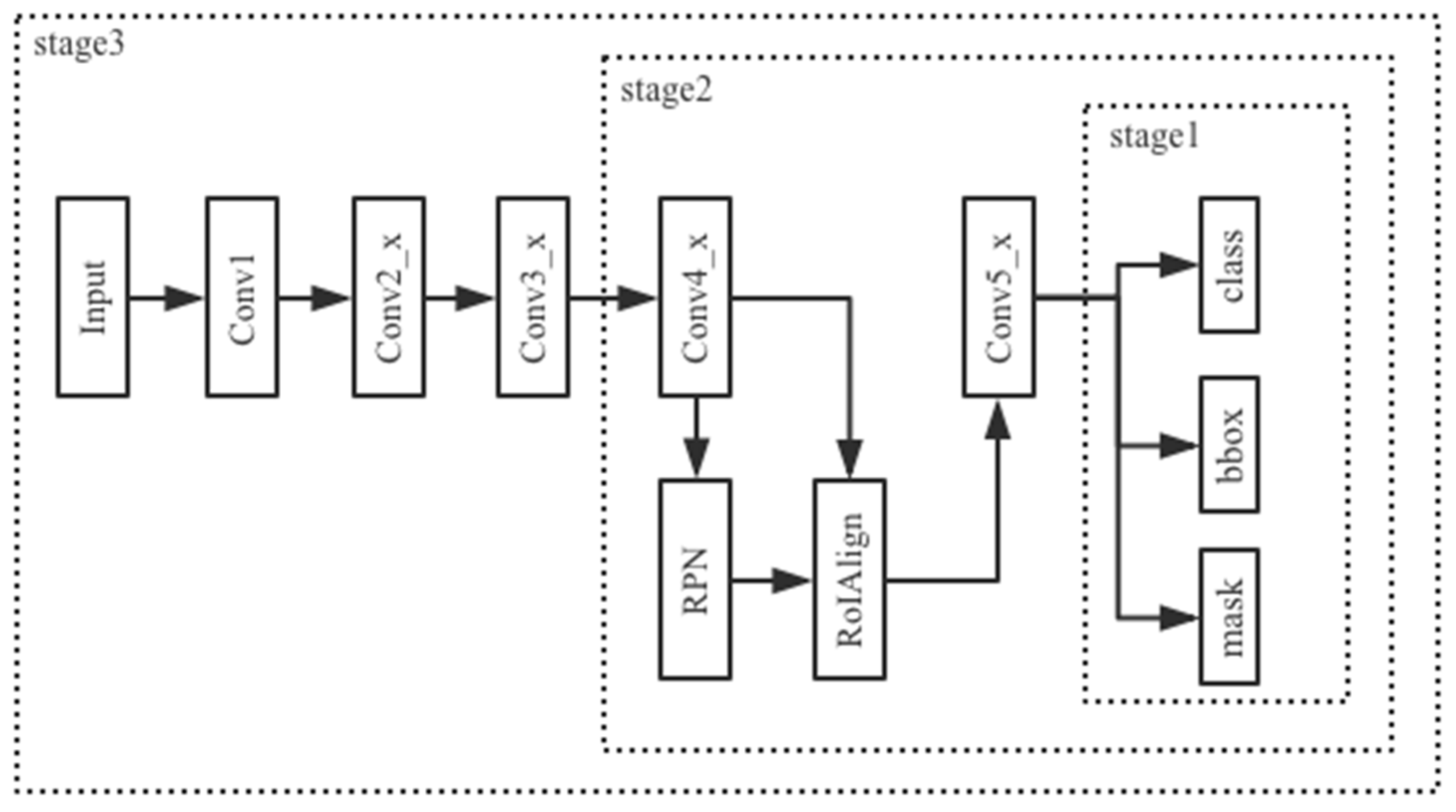

4. Target Object Recognition

4.1. Deep Learning Network Training

4.2. Target Objects Detection

5. Probabilistic Task Allocation Scheme

5.1. Specialization Definition and Coding

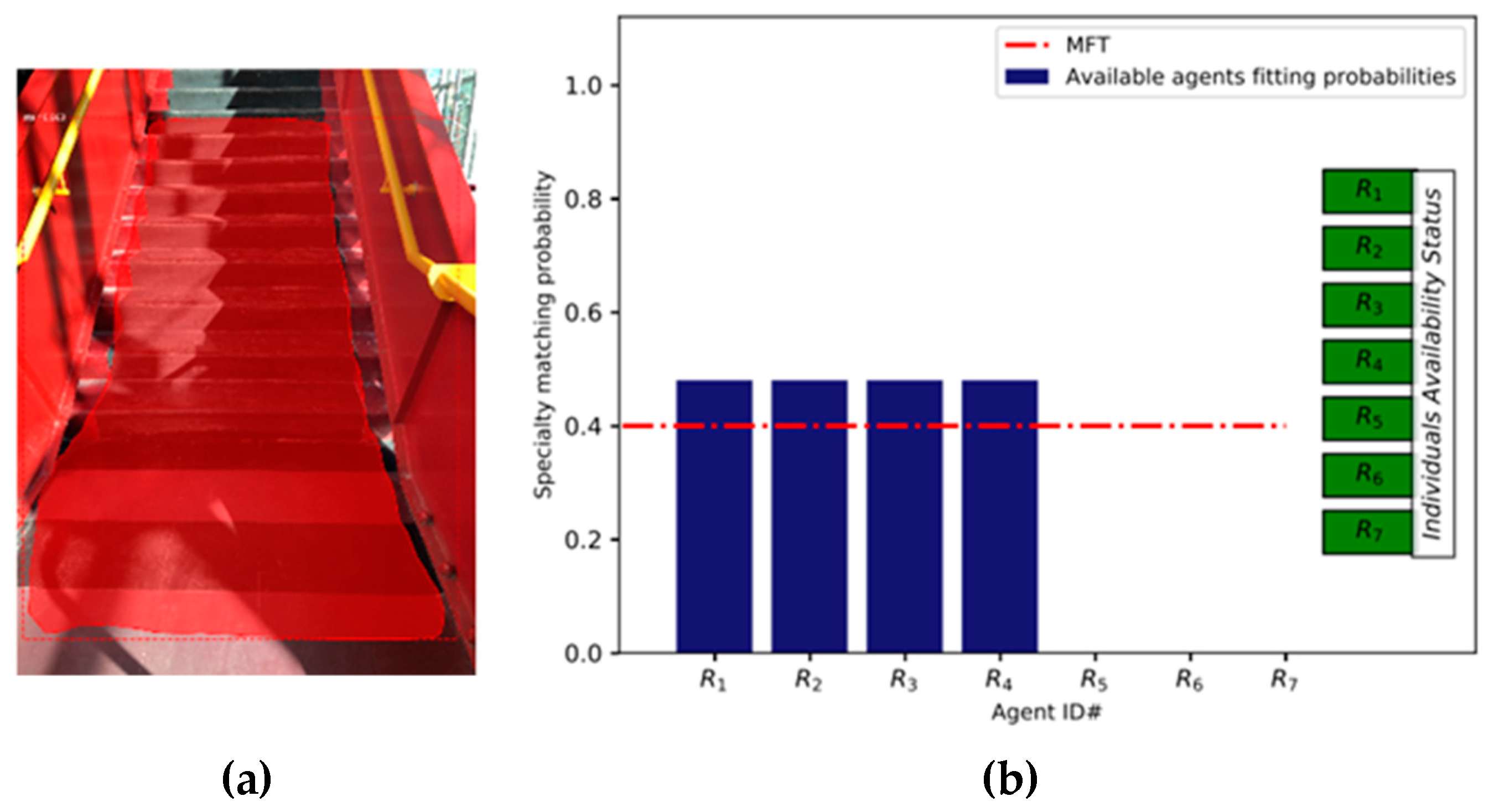

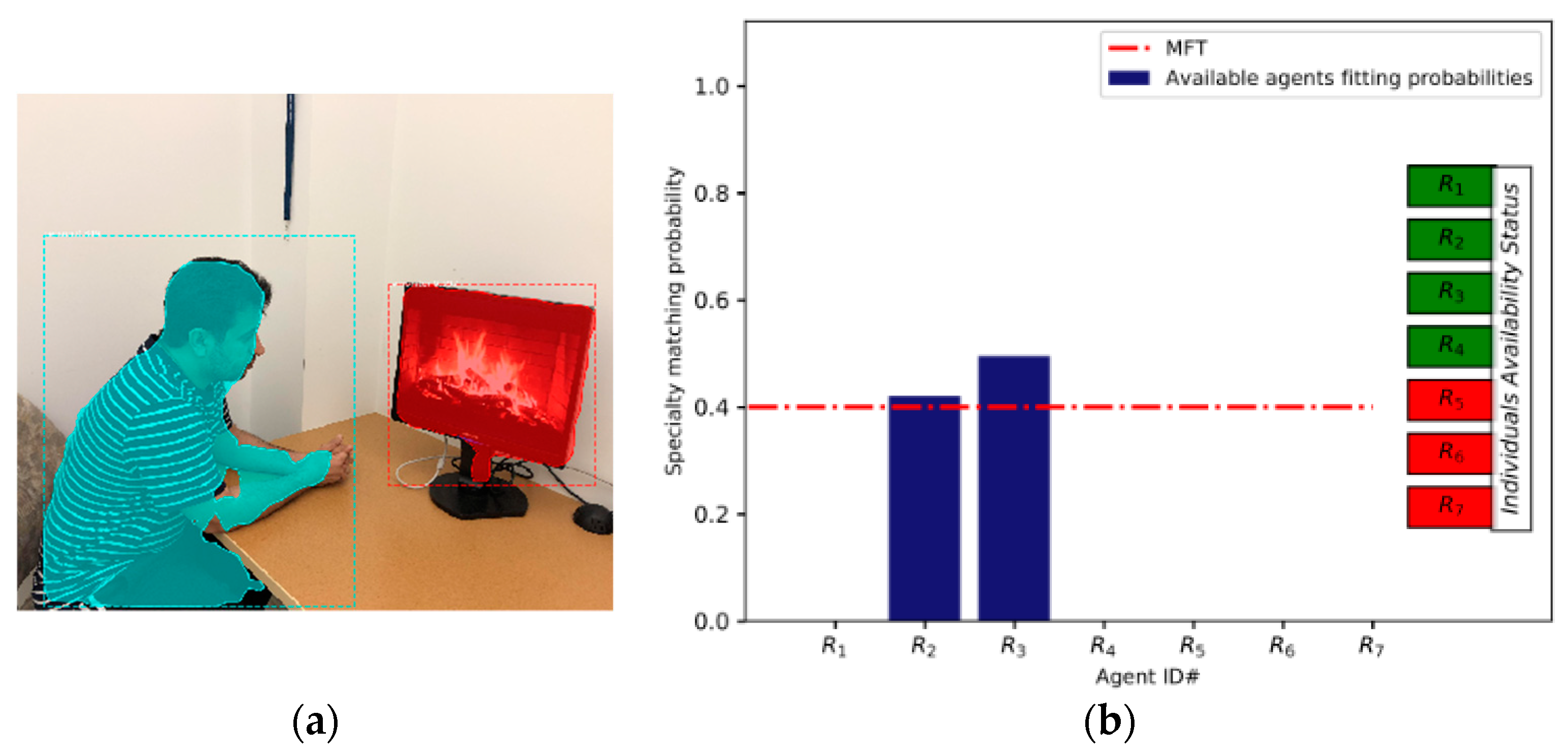

5.2. Agents Fitting Probabilities Computation

5.3. Qualified Agents Coordination

5.4. Human in the Loop

6. Experimental Results

7. Quantitative Analysis of Performance

8. Comparison

8.1. Interface Delay Task Allocation (IDTA)

8.2. Multiple Travelling Salesman Assignment (MTSA)

8.3. Taxonomy of Multi-Agent Task Allocation (An Optimization Approach)

8.4. Task-Allocation Algorithms in Multi-Robot Exploration

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Korte, B.; Vygen, J. Combinatorial Optimization: Theory and Algorithms; Springer: Berlin, Germany, 2008.

- Hall, P. On representatives of subsets. In Classic Papers in Combinatorics; Birkhäuser: Boston, MA, USA, 2009; pp. 58–62.

- Jones, C.; Matarić, M.J. Adaptive Division of Labor in Large-scale Minimalist Multi-robot Systems. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 27–31 October 2003; Volume 2, pp. 1969–1974.

- Smith, S.L.; Bullo, F. Target assignment for robotic networks: Asymptotic performance under limited communication. In Proceedings of the 2007 American Control Conference, New York, NY, USA, 9–13 July 2007; pp. 1155–1160.

- Claes, D.; Robbel, P.; Oliehoek, F.A.; Tuyls, K.; Hennes, D.; van der Hoek, W. Effective Approximations for Multi-Robot Coordination in Spatially Distributed Tasks. In Proceedings of the International Conference on Autonomous Agents and Multi-Agent Systems, Istanbul, Turkey, May 2015; pp. 881–890.

- Yasuda, T.; Kage, K.; Ohkura, K. Response Threshold-Based Task Allocation in a Reinforcement Learning Robotic Swarm. In Proceedings of the IEEE 7th International Workshop on Computational Intelligence and Applications (IWCIA), Hiroshima, Japan, 7–8 November 2014; pp. 189–194.

- Wu, H.; Li, H.; Xiao, R.; Liu, J. Modeling and simulation of dynamic ant colony’s labor division for task allocation of UAV swarm. Phys. A Stat. Mech. Appl. 2018, 491, 127–141.

- Matarić, M.; Sukhatme, G.; Østergaard, E. Multi-Robot Task Allocation in Uncertain Environments. Auton. Robot. 2003, 14, 255–263.

- Amigoni, F.; Brandolini, A.; Caglioti, V.; Di Lecce, C.; Guerriero, A.; Lazzaroni, M.; Lombardo, F.; Ottoboni, R.; Pasero, E.; Piuri, V.; et al. Agencies for perception in environmental monitoring. IEEE Trans. Instrum. Meas. 2006, 55, 1038–1050.

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet classification using binary convolutional neural networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 525–542.

- Lins, R.G.; Givigi, S.N.; Kurka, P.G. Vision-based measurement for localization of objects in 3-D for robotic applications. IEEE Trans. Instrum. Meas. 2015, 64, 2950–2958.

- Wu, W.; Payeur, P.; Al-Buraiki, O.; Ross, M. Vision-Based Target Objects Recognition and Segmentation for Unmanned Systems Task Allocation. In Proceedings of the International Conference on Image Analysis and Recognition, Waterloo, ON, Canada, 27–30 August 2019; Karray, F., Campilho, A., Yu, A., Eds.; Springer: Cham, Switzerland, 2019; pp. 252–263.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Al-Buraiki, O.; Payeur, P.; Castillo, Y.R. Task switching for specialized mobile robots working in cooperative formation. In Proceedings of the IEEE International Symposium on Robotics and Intelligent Sensors, Tokyo, Japan, 17–20 December 2016; pp. 207–212.

- Al-Buraiki, O.; Payeur, P. Agent-Task assignation based on target characteristics for a swarm of specialized agents. In Proceedings of the 13th Annual IEEE International Systems Conference, Orlando, FL, USA, 8–11 April 2019; pp. 268–275.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Bashiri, F.S.; LaRose, E.; Peissig, P.; Tafti, A.P. MCIndoor20000: A fully-labeled image dataset to advance indoor objects detection. Data Brief 2018, 17, 71–75.

- Everingham, M.; van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A Database and Web-based Tool for Image Annotation. Int. J. Comput. Vis. 2008, 77, 157–173.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hayes, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland; Lecture Notes in Computer Science, Volume 8693; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Brutschy, A.; Pini, G.; Pinciroli, C.; Birattari, M.; Dorigo, M. Self-organized task allocation to sequentially interdependent tasks in swarm robotics. Auton. Agents Multi-Agent Syst. 2014, 28, 101–125.

- Kulich, M.; Faigl, J.; Přeučil, L. On distance utility in the exploration task. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4455–4460.

- Gerkey, B.; Matarić, M. A formal analysis and taxonomy of task allocation in multi-robot systems. Int. J. Robot. Res. 2004, 23, 939–954.

- Faigl, J.; Simonin, O.; Charpillet, F. Comparison of task-allocation algorithms in frontier-based multi-robot exploration. In European Conference on Multi-Agent Systems; Springer: Cham, Switzerland, 2014; pp. 101–110.

| Labelling | Category | Number of Images | Training Set | Validation Set |
|---|---|---|---|---|
| Pre-labelled (195) | person | 101 | 80 | 21 |
| | tv-monitor (fire) | 94 | 85 | 9 |
| Manually labelled (350) | door | 125 | 98 | 27 |
| | sign | 117 | 97 | 20 |
| | stairs | 108 | 100 | 8 |
| Total | 5 classes | 545 | 460 | 85 |

| Detected Object | Agent Specialized Functionality |
|---|---|
| door | Open doors |
| stairs | Climb stairs |
| person | Assist people |
| tv-monitor (fire) | Extinguish fire |
| sign | Read signs |

| Agent ID# | Open Doors | Climb Stairs | Assist People | Extinguish Fire | Read Signs |
|---|---|---|---|---|---|
| 1 | 1 | 1 | 0 | 0 | 0 |
| 2 | 0 | 1 | 1 | 0 | 0 |
| 3 | 0 | 1 | 0 | 1 | 0 |
| 4 | 0 | 1 | 0 | 0 | 1 |
| 5 | 0 | 0 | 1 | 0 | 0 |
| 6 | 0 | 0 | 0 | 1 | 0 |
| 7 | 0 | 0 | 0 | 0 | 1 |
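The two tables above (detected object to functionality, and one binary specialty vector per agent) can be captured in a few lines. The sketch below is a hypothetical encoding, not code from the paper: the dictionary names, the lowercase labels, and the agent IDs 1 to 7 (taken from the row order of the specialty-vector table) are assumptions. It maps each detected class to the functionality it requires and lists the agents whose specialty vector covers it.

```python
# Hypothetical encoding of the specialty-vector table (not the authors' code).
# Column order follows the table: open doors, climb stairs, assist people,
# extinguish fire, read signs.
FUNCTIONALITIES = ["open doors", "climb stairs", "assist people",
                   "extinguish fire", "read signs"]

# Detected class label -> index of the functionality it calls for.
# "tv-monitor" is the pre-labelled class that stands in for fire.
CLASS_TO_FUNCTIONALITY = {
    "door": 0,
    "stairs": 1,
    "person": 2,
    "tv-monitor": 3,
    "fire": 3,
    "sign": 4,
}

# Agent ID -> binary specialty vector (1 = agent has that functionality).
SPECIALTY = {
    1: [1, 1, 0, 0, 0],
    2: [0, 1, 1, 0, 0],
    3: [0, 1, 0, 1, 0],
    4: [0, 1, 0, 0, 1],
    5: [0, 0, 1, 0, 0],
    6: [0, 0, 0, 1, 0],
    7: [0, 0, 0, 0, 1],
}


def specialized_agents(label: str) -> list[int]:
    """Return the IDs of agents whose specialty vector covers `label`."""
    k = CLASS_TO_FUNCTIONALITY[label]
    return [agent for agent, vec in SPECIALTY.items() if vec[k] == 1]


if __name__ == "__main__":
    for label in ["door", "stairs", "person", "tv-monitor", "sign"]:
        task = FUNCTIONALITIES[CLASS_TO_FUNCTIONALITY[label]]
        print(f"{label:>10} -> {task}: agents {specialized_agents(label)}")
```

Climbing stairs is shared by agents 1 to 4, while each of the other functionalities is held by at most two agents, which is what makes the later threshold test matter.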

| Target Objects Detection Confidence | Robot ID# | Availability (1: Available, 0: Withdrawn) | Available Agents Fitting Probabilities | User-Set MFT |
|---|---|---|---|---|
| Door: 0.00, Stairs: 0.96, Person: 0.00, Fire: 0.00, Sign: 0.00 | 1 | 1 | 0.48 | 0.4 |
| | 2 | 1 | 0.48 | |
| | 3 | 1 | 0.48 | |
| | 4 | 1 | 0.48 | |
| | 5 | 1 | 0.00 | |
| | 6 | 1 | 0.00 | |
| | 7 | 1 | 0.00 | |
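The fitting probabilities in this table are consistent with weighting the detection confidence by the share of an agent's specialties that match the target, i.e. fitting probability = confidence × (matching specialties / total specialties of the agent). Agents 1 to 4 each hold two specialties, one of them climbing stairs, giving 0.96 × 1/2 = 0.48, while agents 5 to 7 cannot climb stairs. This rule is inferred from the tabulated values rather than quoted from Section 5.2, and the function names below are hypothetical.

```python
# Hypothetical sketch: fitting probability rule inferred from the table values.
LABELS = ["door", "stairs", "person", "fire", "sign"]  # specialty-vector column order

# Agent ID -> binary specialty vector, as in the specialty-vector table.
SPECIALTY = {
    1: [1, 1, 0, 0, 0],
    2: [0, 1, 1, 0, 0],
    3: [0, 1, 0, 1, 0],
    4: [0, 1, 0, 0, 1],
    5: [0, 0, 1, 0, 0],
    6: [0, 0, 0, 1, 0],
    7: [0, 0, 0, 0, 1],
}


def fitting_probability(confidence: float, label: str, vector: list[int]) -> float:
    """Detection confidence scaled by the share of the agent's specialties that match."""
    k = LABELS.index(label)
    n_specialties = sum(vector)
    if n_specialties == 0 or vector[k] == 0:
        return 0.0  # the agent cannot handle this target at all
    return confidence * vector[k] / n_specialties


def fitting_table(confidence: float, label: str) -> dict[int, float]:
    """Fitting probability of every agent for a single detected target."""
    return {agent: fitting_probability(confidence, label, vec)
            for agent, vec in SPECIALTY.items()}


if __name__ == "__main__":
    # Stairs detected with confidence 0.96, all seven agents available.
    for agent, p in fitting_table(0.96, "stairs").items():
        print(f"agent {agent}: {p:.2f}")
```

Dividing by the number of specialties favours single-purpose agents: a person detected at 0.86 scores 0.86 for agent 5 but only 0.43 for agent 2.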

| Target Objects Detection Confidence | Robot ID# | Availability (1: Available, 0: Withdrawn) | Available Agents Fitting Probabilities | User-Set MFT |
|---|---|---|---|---|
| Door: 0.98, Stairs: 0.00, Person: 0.00, Fire: 0.00, Sign: 0.00 | 1 | 1 | 0.49 | 0.4 |
| | 2 | 1 | 0.0 | |
| | 3 | 1 | 0.0 | |
| | 4 | 1 | 0.0 | |
| | 5 | 0 | ---- | |
| | 6 | 0 | ---- | |
| | 7 | 0 | ---- | |

| Target Objects Detection Confidence | Robot ID# | Availability (1: Available, 0: Withdrawn) | Available Agents Fitting Probabilities | User-Set MFT |
|---|---|---|---|---|
| Door: 0.00, Stairs: 0.00, Person: 0.84, Fire: 0.99, Sign: 0.00 | 1 | 1 | 0.00 | 0.4 |
| | 2 | 1 | 0.42 | |
| | 3 | 1 | 0.49 | |
| | 4 | 1 | 0.00 | |
| | 5 | 0 | ---- | |
| | 6 | 0 | ---- | |
| | 7 | 0 | ---- | |
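The last two tables add agent availability and the user-set MFT. The following is a minimal sketch that assumes the same inferred fitting rule, assumes each agent's entry is its best fitting probability over all detected targets (agent 2 is scored on the person, agent 3 on the fire), and assumes an agent qualifies when its fitting probability reaches the MFT (how equality is treated is not stated in the tables). Withdrawn agents are reported as `None`, mirroring the "----" entries. All names are hypothetical.

```python
from typing import Optional

LABELS = ["door", "stairs", "person", "fire", "sign"]
SPECIALTY = {
    1: [1, 1, 0, 0, 0],
    2: [0, 1, 1, 0, 0],
    3: [0, 1, 0, 1, 0],
    4: [0, 1, 0, 0, 1],
    5: [0, 0, 1, 0, 0],
    6: [0, 0, 0, 1, 0],
    7: [0, 0, 0, 0, 1],
}


def fitting_probability(confidence: float, label: str, vector: list[int]) -> float:
    """Inferred rule: confidence times the share of matching specialties."""
    k = LABELS.index(label)
    n_specialties = sum(vector)
    if n_specialties == 0 or vector[k] == 0:
        return 0.0
    return confidence * vector[k] / n_specialties


def agent_fits(detections: dict[str, float],
               availability: dict[int, int]) -> dict[int, Optional[float]]:
    """Best fitting probability of each available agent over the detected targets.

    Withdrawn agents (availability 0) map to None, like the '----' entries.
    """
    fits: dict[int, Optional[float]] = {}
    for agent, vec in SPECIALTY.items():
        if not availability.get(agent, 0):
            fits[agent] = None
            continue
        fits[agent] = max(
            (fitting_probability(c, label, vec) for label, c in detections.items()),
            default=0.0,
        )
    return fits


def qualified_agents(fits: dict[int, Optional[float]], mft: float) -> list[int]:
    """Agents whose fitting probability reaches the MFT (>= is an assumption)."""
    return [a for a, p in fits.items() if p is not None and p >= mft]


if __name__ == "__main__":
    detections = {"door": 0.0, "stairs": 0.0, "person": 0.84, "fire": 0.99, "sign": 0.0}
    availability = {1: 1, 2: 1, 3: 1, 4: 1, 5: 0, 6: 0, 7: 0}
    fits = agent_fits(detections, availability)
    for agent, p in fits.items():
        print(agent, "----" if p is None else f"{p:.3f}")
    print("qualified with MFT = 0.4:", qualified_agents(fits, 0.4))
```

Agent 3 scores 0.495 here, which the table lists as 0.49. With agents 5 to 7 withdrawn, only the two-specialty agents 2 and 3 remain, and both clear the 0.4 threshold.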

| Metric | Person | Fire | Door | Sign | Stairs | Overall |
|---|---|---|---|---|---|---|
| Precision (%) | 98.2 | 87.5 | 91.2 | 94.7 | 86.4 | 92.9 |
| Recall (%) | 81.2 | 45.5 | 67.4 | 66.7 | 95.0 | 66.6 |
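Precision and recall are reported separately. Their harmonic mean, the F1 score, is not given in the source but follows directly from the table; the sketch below derives it, with the dictionary simply copying the table values. It makes the weak point of the fire class explicit: 87.5% precision with 45.5% recall gives an F1 of roughly 60%.

```python
# Precision and recall (%) per class, copied from the table above.
METRICS = {
    "Person": (98.2, 81.2),
    "Fire": (87.5, 45.5),
    "Door": (91.2, 67.4),
    "Sign": (94.7, 66.7),
    "Stairs": (86.4, 95.0),
    "Overall": (92.9, 66.6),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    for name, (p, r) in METRICS.items():
        print(f"{name:>8}: F1 = {f1_score(p, r):.1f}%")
```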

| No. | Door (confidence) | Stairs (confidence) | Person (confidence) | Fire (confidence) | Sign (confidence) | Fitting Probability of Assigned Agent(s) |
|---|---|---|---|---|---|---|
| 1 | 0.991 | 0.000 | 0.000 | 0.000 | 0.000 | Door: 0.49 |
| 2 | 0.000 | 0.965 | 0.000 | 0.000 | 0.000 | Stairs: 0.48 (several agents) |
| 3 | 0.000 | 0.000 | 0.858 | 0.901 | 0.000 | Person: 0.86; Fire: 0.90 |
| 4 | 0.000 | 0.000 | 0.987 | 0.000 | 0.788 | Person: 0.99; Sign: 0.79 |
| 5 | 0.000 | 0.000 | 0.985 | 0.968; 0.628 | 0.000 | Person: 0.98; Fire: 0.97; second fire target not assigned (0.31 < MFT) |
| 6 | 0.847 | 0.978 | 0.000 | 0.000 | 0.000 | Door: 0.42; Stairs: 0.49 |
| 7 | 0.000 | 0.000 | 0.000 | 0.000 | 0.984; 0.940; 0.657 | Sign: 0.98 and 0.47; no specialized agent is available to allocate the third sign target |
| 8 | 0.654 | 0.970 | 0.000 | 0.000 | 0.995; 0.991 | Door: not assigned (0.33 < MFT); Stairs: 0.49; Sign: 1; no specialized agent is available to allocate the second sign target |
| 9 | 0.993 | 0.000 | 0.000 | 0.000 | 0.733 | Door: 0.49; Sign: 0.73 |
| 10 | 0.000 | 0.995 | 0.000 | 0.000 | 0.000 | Stairs: 0.5 (several agents) |
| 11 | 0.000 | 0.000 | 0.000 | 0.987 | 0.843; 0.624 | Fire: 0.99; Sign: 0.84; second sign target not assigned (0.31 < MFT) |
| 12 | 0.000 | 0.000 | 0.000 | 0.000 | 0.928; 0.824 | Sign: 0.93 and 0.41 |
| 13 | 0.000 | 0.977 | 0.000 | 0.000 | 0.000 | Stairs: 0.49 |
| 14 | 0.000 | 0.000 | 0.630 | 0.913; 0.879 | 0.963 | Person: 0.63; Fire: 0.91 and 0.44; Sign: 0.96 |
| 15 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | None |
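When an image contains several targets, or several instances of the same class, each agent can take only one of them. The coordination rule of Section 5.3 is not reproduced here, so the sketch below is a hypothetical greedy stand-in, not the authors' scheme: classes are handled in table order, instances of a class by descending confidence, each target goes to the free agent with the highest fitting probability (ties to the lowest ID), and a target is left unassigned if that value falls below the MFT of 0.4. With all seven agents assumed available, it reproduces the fitting probabilities and unassigned targets listed for results 5, 7, 11, 12 and 14, but its ordering and tie-breaking are assumptions and it is not guaranteed to match every row.

```python
from typing import Optional

LABELS = ["door", "stairs", "person", "fire", "sign"]
SPECIALTY = {
    1: [1, 1, 0, 0, 0],
    2: [0, 1, 1, 0, 0],
    3: [0, 1, 0, 1, 0],
    4: [0, 1, 0, 0, 1],
    5: [0, 0, 1, 0, 0],
    6: [0, 0, 0, 1, 0],
    7: [0, 0, 0, 0, 1],
}
MFT = 0.4  # user-set threshold used in the tables above


def fitting_probability(confidence: float, label: str, vector: list[int]) -> float:
    """Inferred rule: confidence times the share of matching specialties."""
    k = LABELS.index(label)
    n_specialties = sum(vector)
    if n_specialties == 0 or vector[k] == 0:
        return 0.0
    return confidence * vector[k] / n_specialties


def allocate(targets: list[tuple[str, float]], available, mft: float = MFT
             ) -> list[tuple[str, float, Optional[int], Optional[float]]]:
    """Hypothetical greedy allocation: at most one target per agent.

    Returns (label, confidence, agent, fitting) tuples in processing order.
    agent is None when the target is left unassigned; fitting is None when
    no free agent has the required specialty at all.
    """
    free = set(available)
    # Assumed order: classes in table order, instances by descending confidence.
    ordered = sorted(targets, key=lambda t: (LABELS.index(t[0]), -t[1]))
    result = []
    for label, conf in ordered:
        candidates = [(fitting_probability(conf, label, SPECIALTY[a]), a)
                      for a in sorted(free)]
        candidates = [(p, a) for p, a in candidates if p > 0.0]
        if not candidates:
            result.append((label, conf, None, None))  # no specialized agent free
            continue
        best_p = max(p for p, _ in candidates)
        best_agent = min(a for p, a in candidates if p == best_p)  # tie: lowest ID
        if best_p >= mft:
            free.remove(best_agent)
            result.append((label, conf, best_agent, best_p))
        else:
            result.append((label, conf, None, best_p))  # below MFT: not assigned
    return result


if __name__ == "__main__":
    # Result 5 above: one person and two fire targets.
    for row in allocate([("person", 0.985), ("fire", 0.968), ("fire", 0.628)],
                        range(1, 8)):
        print(row)
```

In result 5 the single-purpose agents 5 and 6 take the person and the first fire; the only free fire-capable agent left is agent 3, whose fitting probability of 0.314 is below the MFT, so the second fire stays unassigned.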

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Al-Buraiki, O.; Wu, W.; Payeur, P. Probabilistic Allocation of Specialized Robots on Targets Detected Using Deep Learning Networks. Robotics 2020, 9, 54. https://doi.org/10.3390/robotics9030054
