Collection and Analysis of Human Upper Limbs Motion Features for Collaborative Robotic Applications

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

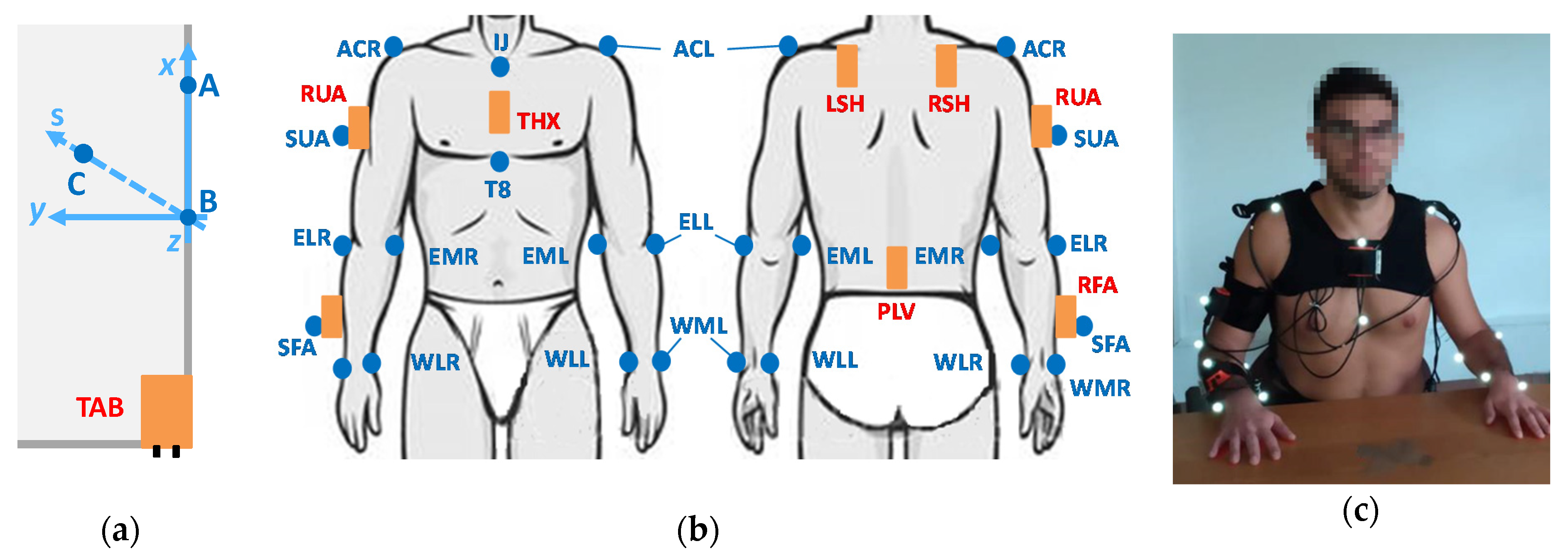

2.2. Instruments

2.2.1. IMUs

- Right forearm (RFA)

- Right upper arm (RUA)

- Shoulders (RSH, LSH)

- Sternum (THX)

- Pelvis (PLV)

2.2.2. Stereophotogrammetric System

- styloid processes (WMR, WLR, WML, WLL)

- elbow condyles (EMR, ELR, EML, ELL)

- acromions (ACR, ACL)

- on the suprasternal notch (IJ)

- the spinous process of the 8th thoracic vertebra (T8)

- on RFA-IMU (SFA)

- on RUA-IMU (SUA)

2.3. Protocol

- Start with the hands in the neutral position

- Pick the box of the color specified by the experimenter

- Place the box on the cross marked on the table

- Return the hands to the neutral position

- Pick the same box

- Put the box back in its initial position

- Return the hands to the neutral position

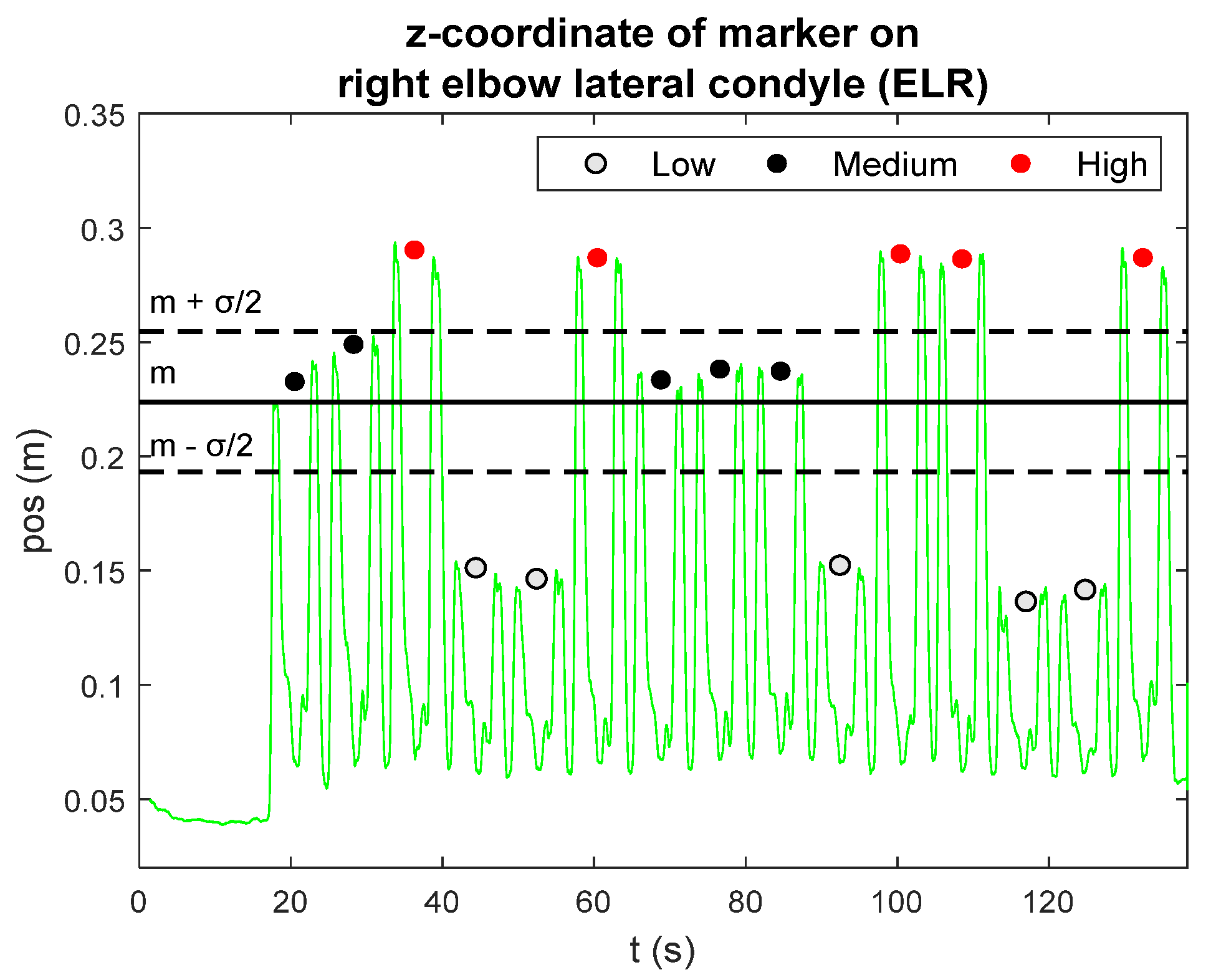

2.4. Signal Processing and Data Analysis

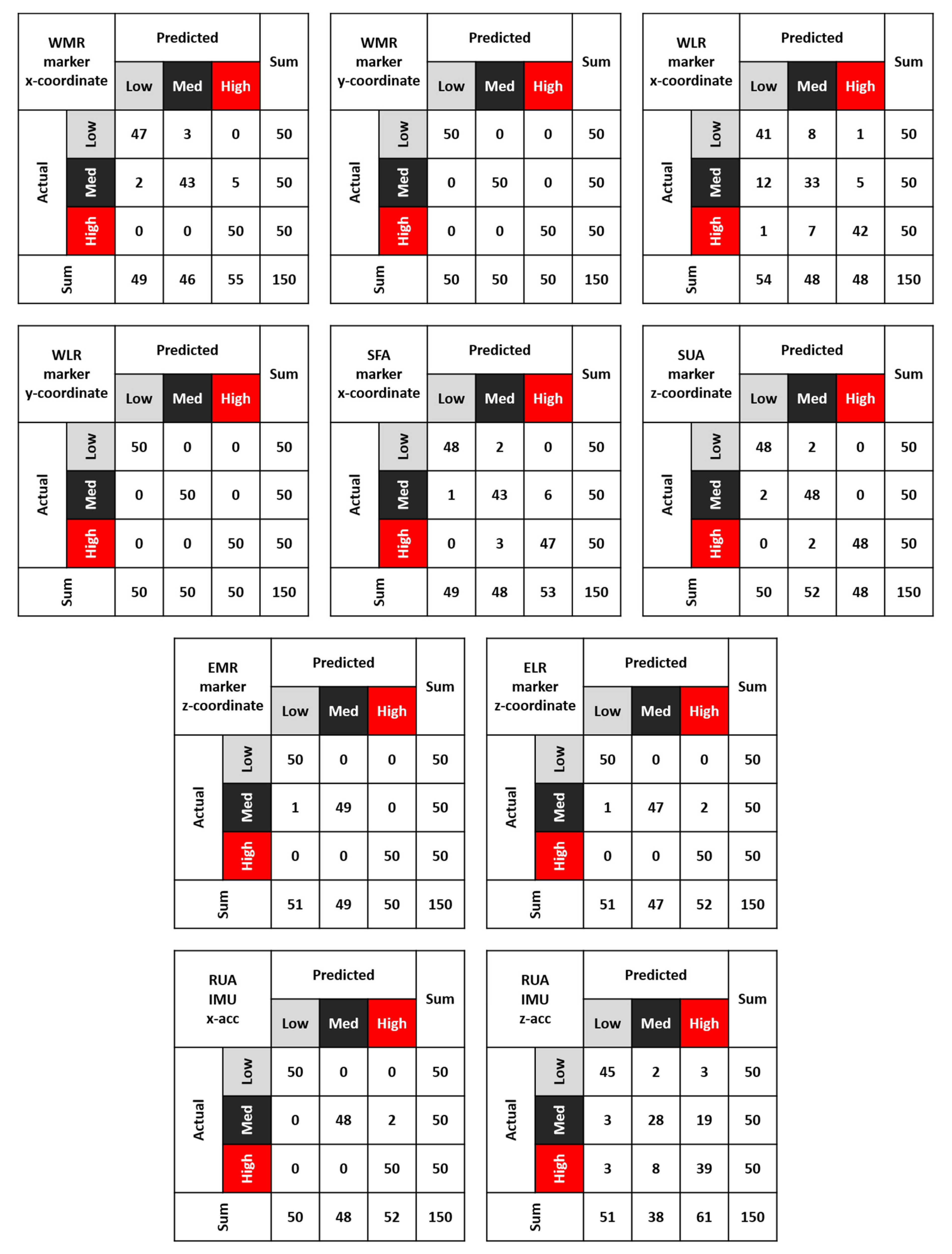

- X-coordinate of the marker on the right wrist medial styloid process (WMR)

- Y-coordinate of the marker on the right wrist medial styloid process (WMR)

- X-coordinate of the marker on the right wrist lateral styloid process (WLR)

- Y-coordinate of the marker on the right wrist lateral styloid process (WLR)

- X-coordinate of the marker on the forearm sensor (SFA)

- Z-coordinate of the marker on the upper arm sensor (SUA)

- Z-coordinate of the marker on the right elbow medial condyle (EMR)

- Z-coordinate of the marker on the right elbow lateral condyle (ELR)

- X-acceleration of the IMU on the right upper arm (RUA)

- Z-acceleration of the IMU on the right upper arm (RUA)
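The ten features listed above can be written down as a small lookup table, which makes it easy to pull the matching signal out of a recording. The following is only a minimal sketch: the `Feature` type, the `extract_features` helper, and the layout of `recording` (a dict keyed by source label and signal kind, holding `(x, y, z)` samples) are hypothetical and do not reflect the authors' actual processing pipeline.

```python
from typing import Dict, List, NamedTuple, Tuple


class Feature(NamedTuple):
    source: str  # marker or IMU label, e.g. "WMR" or "RUA"
    kind: str    # "coordinate" (marker) or "acceleration" (IMU)
    axis: str    # "x", "y", or "z"


# The ten features from the list above, in the same order.
FEATURES: List[Feature] = [
    Feature("WMR", "coordinate", "x"),
    Feature("WMR", "coordinate", "y"),
    Feature("WLR", "coordinate", "x"),
    Feature("WLR", "coordinate", "y"),
    Feature("SFA", "coordinate", "x"),
    Feature("SUA", "coordinate", "z"),
    Feature("EMR", "coordinate", "z"),
    Feature("ELR", "coordinate", "z"),
    Feature("RUA", "acceleration", "x"),
    Feature("RUA", "acceleration", "z"),
]

AXIS_INDEX = {"x": 0, "y": 1, "z": 2}

Sample = Tuple[float, float, float]


def extract_features(
    recording: Dict[Tuple[str, str], List[Sample]]
) -> Dict[str, List[float]]:
    """Return one scalar time series per feature.

    `recording` is an assumed layout: (source, kind) -> list of (x, y, z).
    """
    series: Dict[str, List[float]] = {}
    for feat in FEATURES:
        samples = recording[(feat.source, feat.kind)]
        idx = AXIS_INDEX[feat.axis]
        name = f"{feat.source} {feat.axis}-{feat.kind}"
        series[name] = [sample[idx] for sample in samples]
    return series
```

With this layout, `extract_features(recording)["WMR x-coordinate"]` would return the X-coordinate time series of the WMR marker.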

3. Results

4. Discussion

5. Conclusions

- Wrist and forearm trajectories during pick and place gestures lie mainly in a horizontal plane parallel to the table, whereas elbow and upper arm trajectories develop mainly along the vertical direction.

- The main contribution of upper arm acceleration during pick and place gestures occurs along the longitudinal and sagittal axes of the segment.

- Since the recognition algorithm achieved a good balance of precision and recall (F1-scores between 63.6% and 100.0%), all tested features can be used to recognize pick and place gestures at different heights.

- Prediction algorithms for human motion in an industrial context could be defined and trained by combining upper arm acceleration along the anatomical longitudinal axis with wrist horizontal coordinates and elbow vertical coordinates.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Age (years) | BMI (kg/m²) | Up (cm) | Fo (cm) | Tr (cm) | Ac (cm) |
|---|---|---|---|---|---|
| 24.7 ± 2.1 | 22.3 ± 3.0 | 27.8 ± 3.2 | 27.9 ± 1.5 | 49.1 ± 5.2 | 35.9 ± 3.6 |

| Features | F1-Score (%): Low | F1-Score (%): Medium | F1-Score (%): High |
|---|---|---|---|
| WMR x-coordinate | 94.9 | 89.6 | 95.2 |
| WMR y-coordinate | 100.0 | 100.0 | 100.0 |
| WLR x-coordinate | 78.8 | 67.3 | 85.7 |
| WLR y-coordinate | 100.0 | 100.0 | 100.0 |
| SFA x-coordinate | 97.0 | 87.8 | 91.3 |
| SUA z-coordinate | 96.0 | 94.1 | 98.0 |
| EMR z-coordinate | 99.0 | 99.0 | 100.0 |
| ELR z-coordinate | 99.0 | 96.9 | 98.0 |
| RUA x-acceleration | 100.0 | 98.0 | 98.0 |
| RUA z-acceleration | 89.1 | 63.6 | 70.3 |
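
The F1-score reported in the table is the harmonic mean of precision and recall. As a reminder of how it follows from a confusion matrix, here is a minimal sketch; the `per_class_f1` helper and the `toy` matrix are illustrative assumptions, and the counts are invented, not the study's data.

```python
from typing import List


def per_class_f1(confusion: List[List[int]]) -> List[float]:
    """Per-class F1 from a square confusion matrix.

    confusion[i][j] = number of samples of true class i predicted as class j.
    """
    n = len(confusion)
    scores = []
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                        # missed samples of class k
        fp = sum(confusion[i][k] for i in range(n)) - tp   # other classes labeled k
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        if precision + recall == 0.0:
            scores.append(0.0)
        else:
            scores.append(2 * precision * recall / (precision + recall))
    return scores


# Invented three-class example (e.g. low / medium / high), for illustration only.
toy = [
    [48, 2, 0],
    [3, 45, 2],
    [0, 1, 49],
]

for label, f1 in zip(["low", "medium", "high"], per_class_f1(toy)):
    print(f"{label}: F1 = {100 * f1:.1f}%")
```

A feature whose F1 is 100.0% in the table corresponds to a class with no false positives and no false negatives in such a matrix.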

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Digo, E.; Antonelli, M.; Cornagliotto, V.; Pastorelli, S.; Gastaldi, L. Collection and Analysis of Human Upper Limbs Motion Features for Collaborative Robotic Applications. Robotics 2020, 9, 33. https://doi.org/10.3390/robotics9020033
