A Novel Design and Implementation of Autonomous Robotic Car Based on ROS in Indoor Scenario

Abstract

1. Introduction

1.1. Background

1.2. Objectives behind this Article

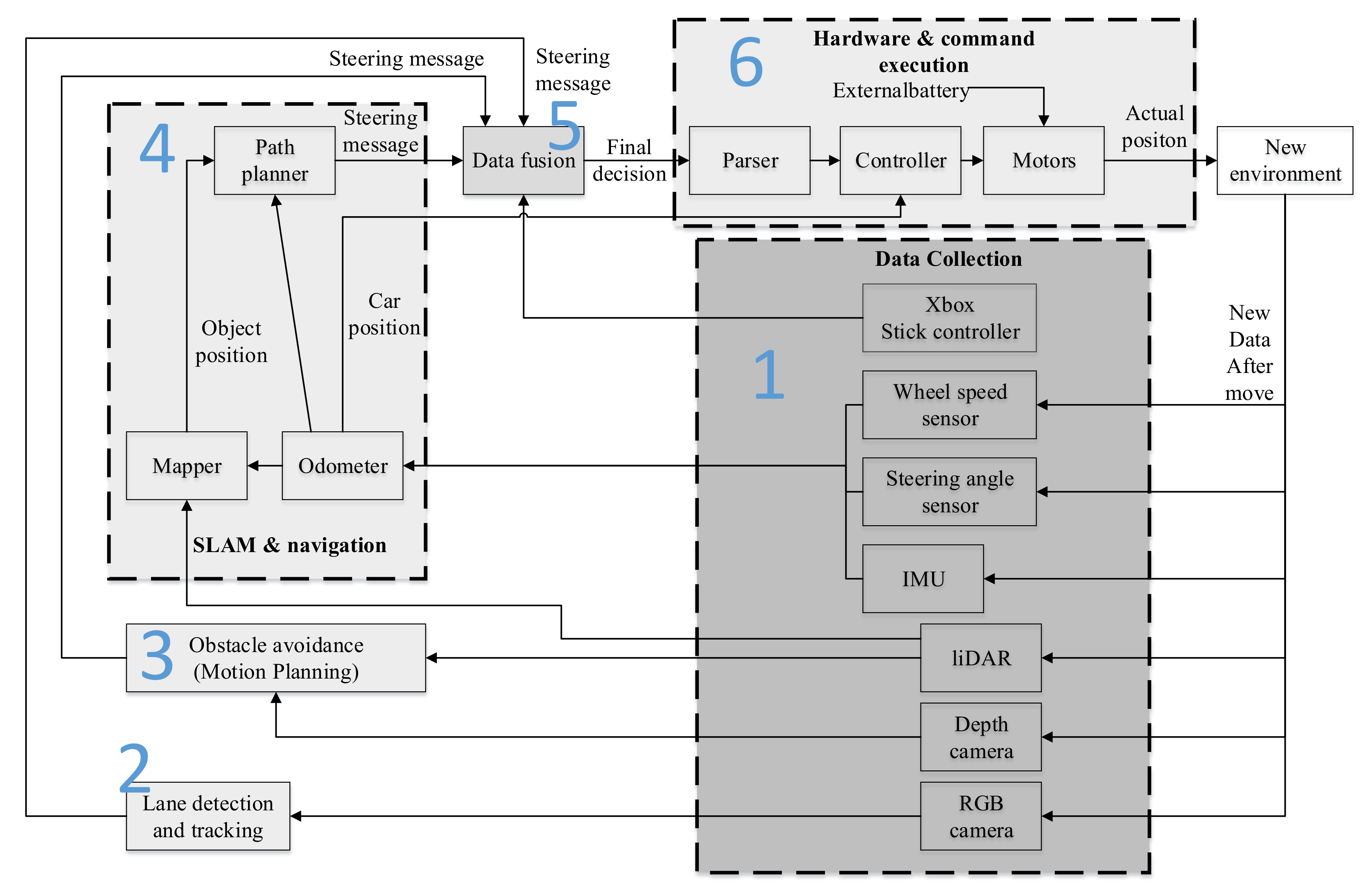

2. Control Loop of Intelligent Autonomous Robotic Car

3. Function Modules Design

3.1. Intelligent Obstacle Avoidance System

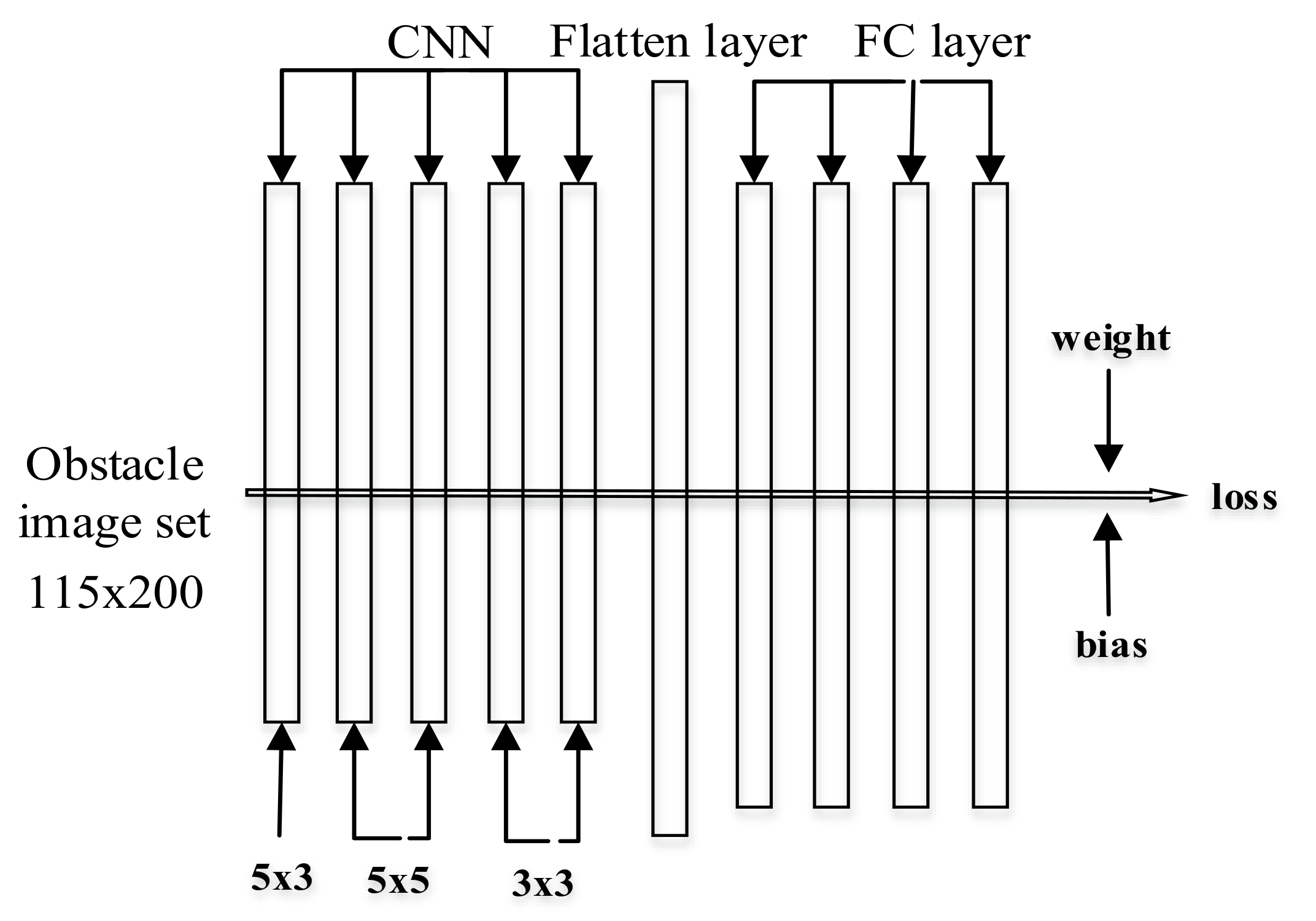

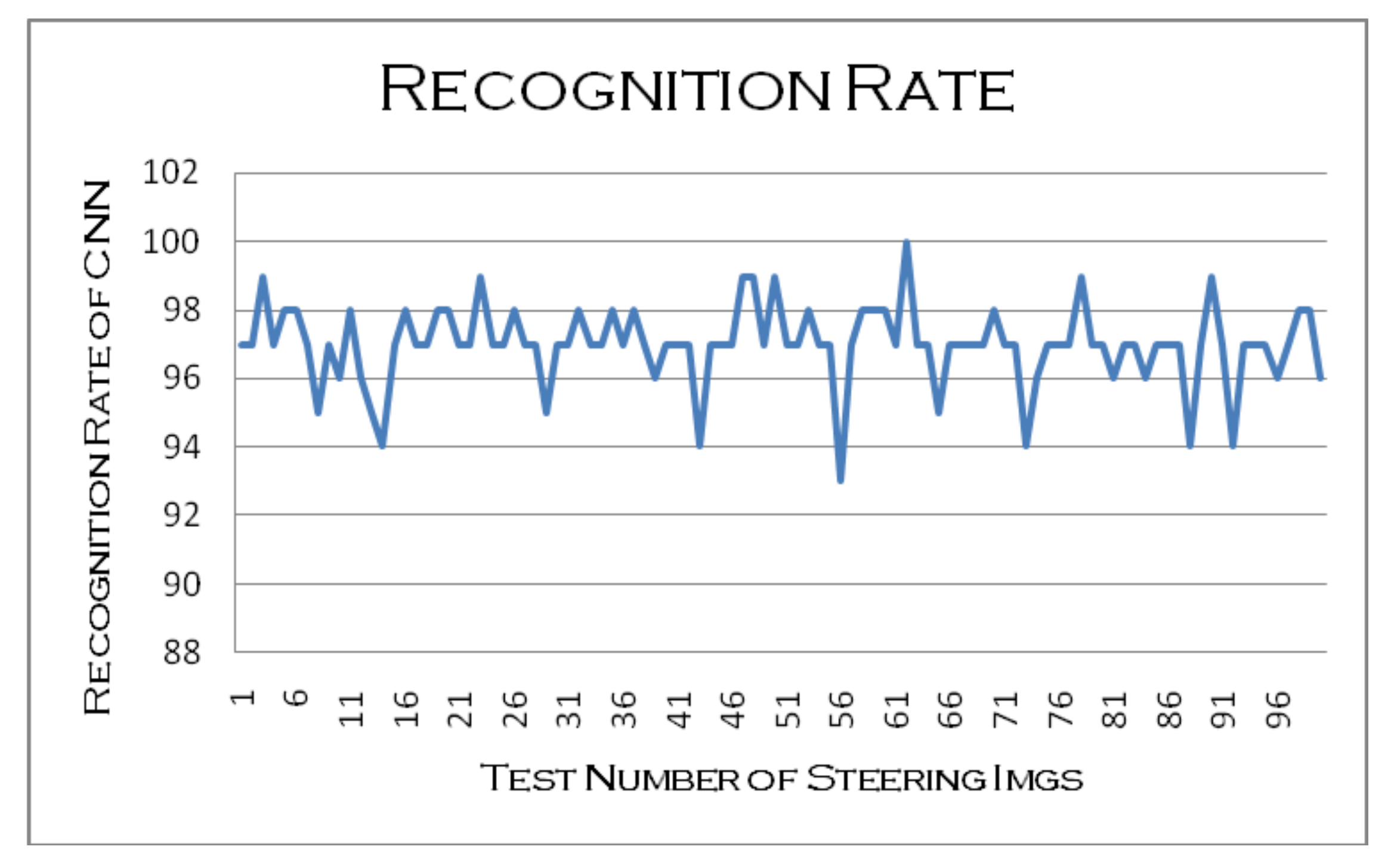

3.1.1. Obstacle Avoidance Based on CNN

3.1.2. Obstacle Avoidance Based on the Timed-Elastic-Band (TEB) Method

3.2. Simultaneous Localization and Mapping (SLAM) and Navigation System

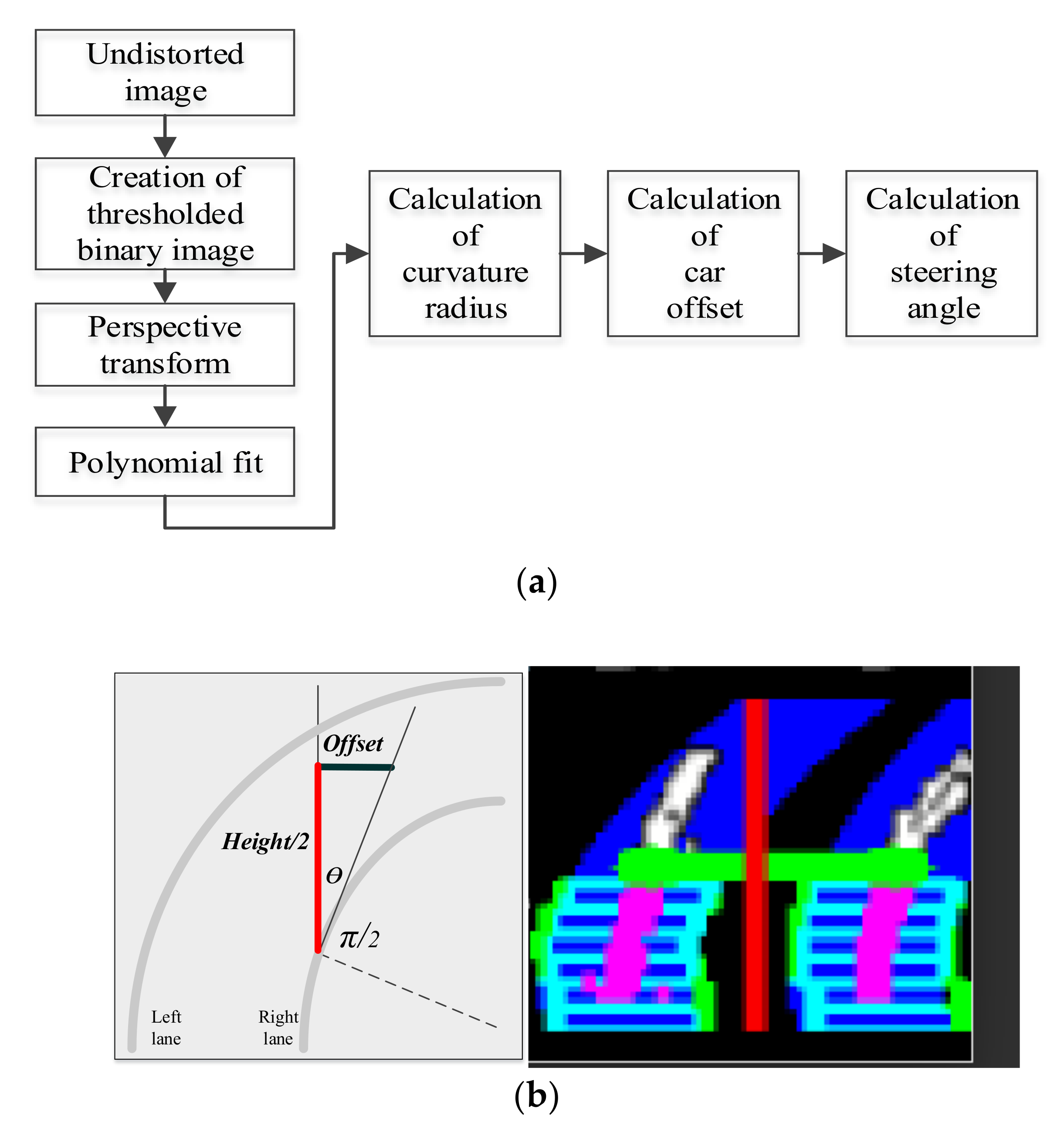

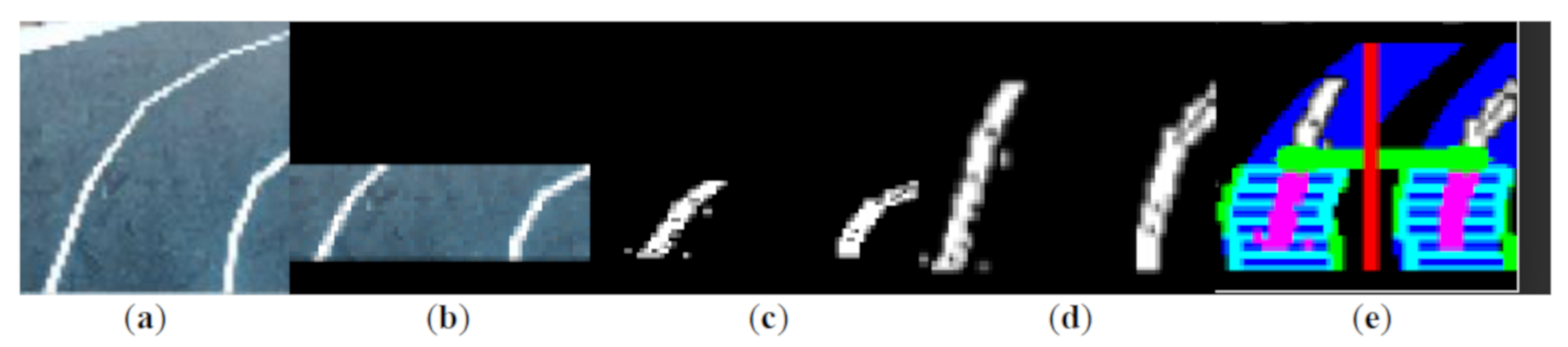

3.3. Lane Detection and Tracking

3.4. Data Fusion

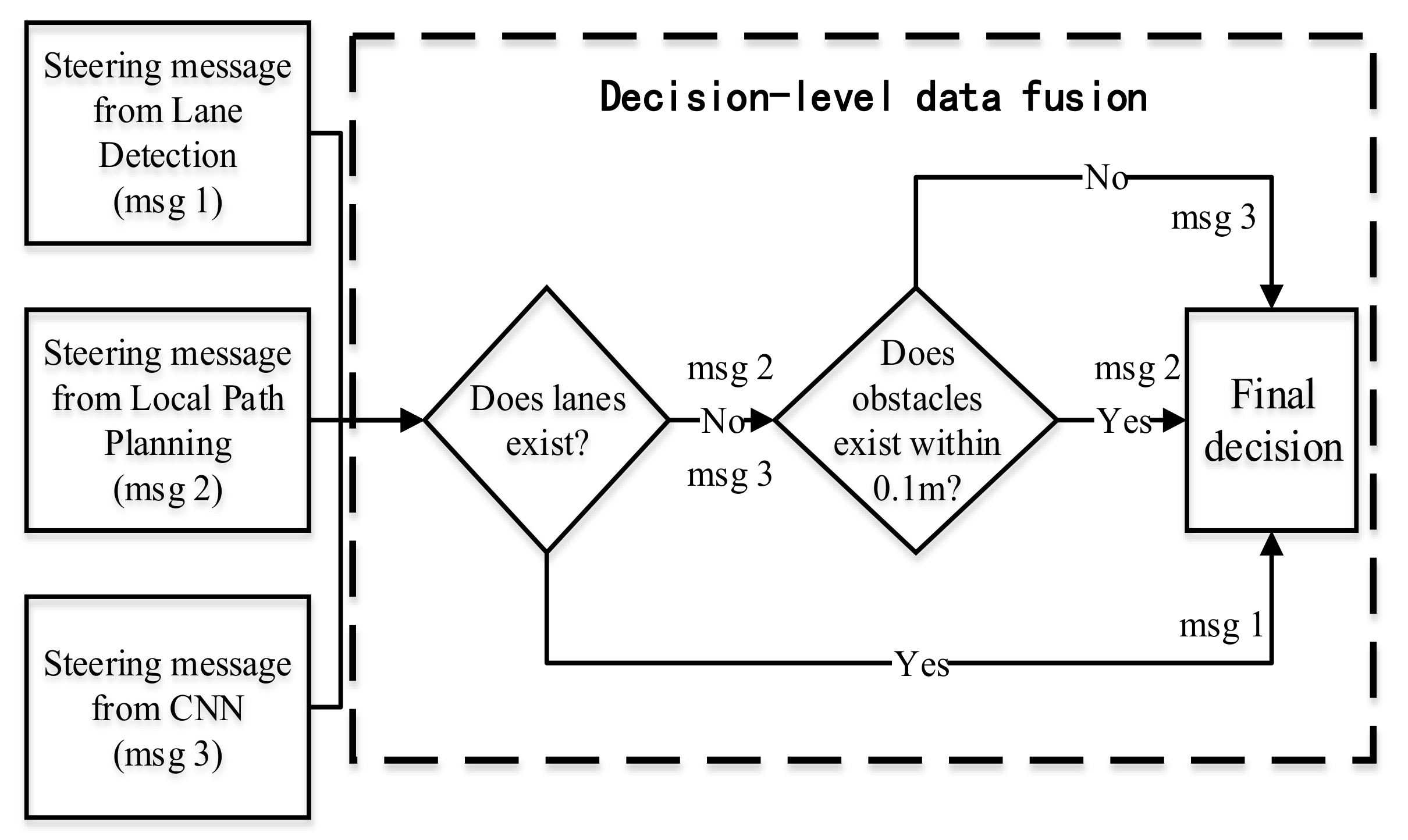

Decision-Level Data Fusion Design

3.5. Auxiliary Modules

3.5.1. Data Recording

3.5.2. Message Parsing

3.5.3. Proportional-Integral-Derivative (PID)

4. Experiments and Conclusions

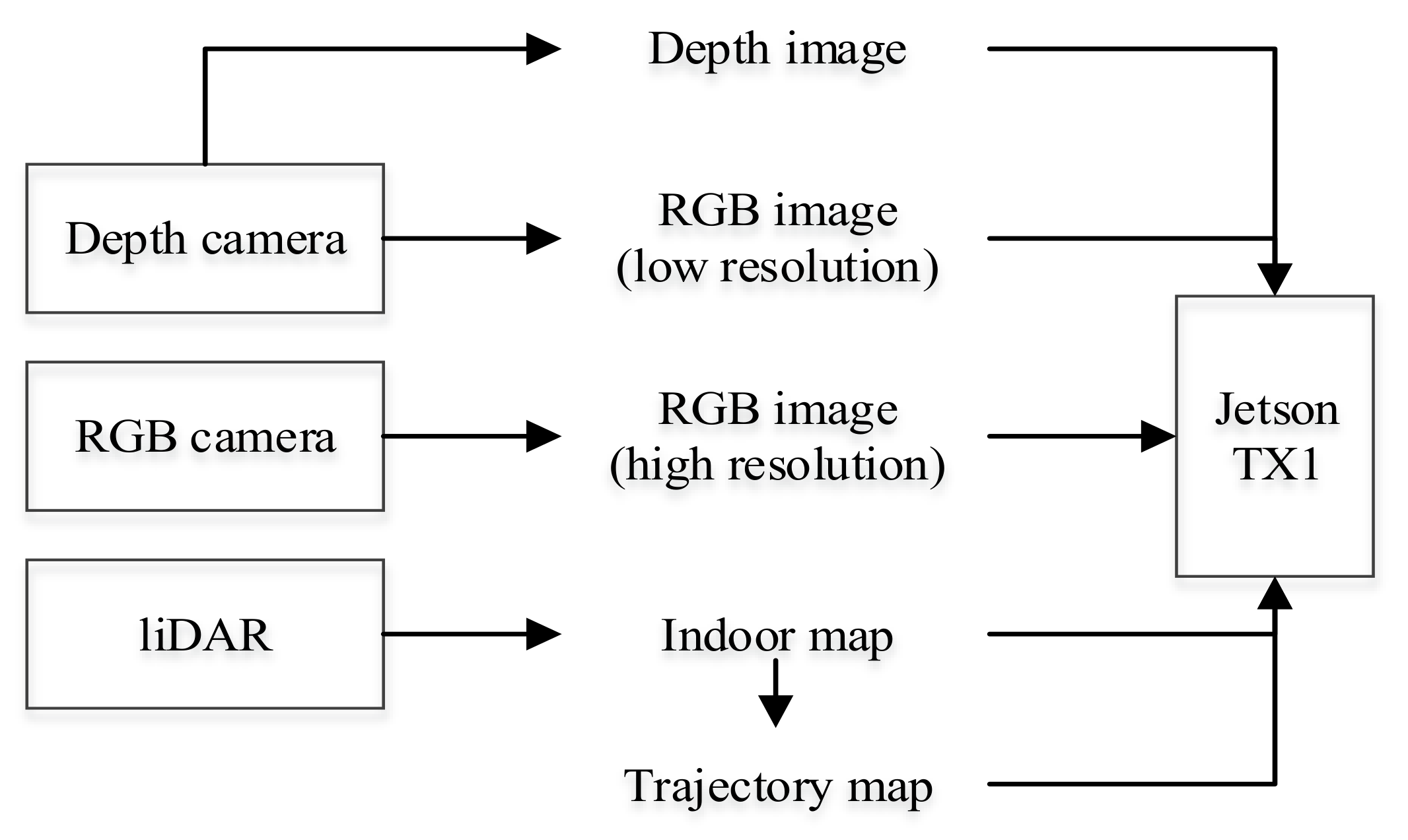

4.1. Hardware

4.2. Result of Lane Detection and Tracking

4.3. Result of Intelligent Obstacle Avoidance

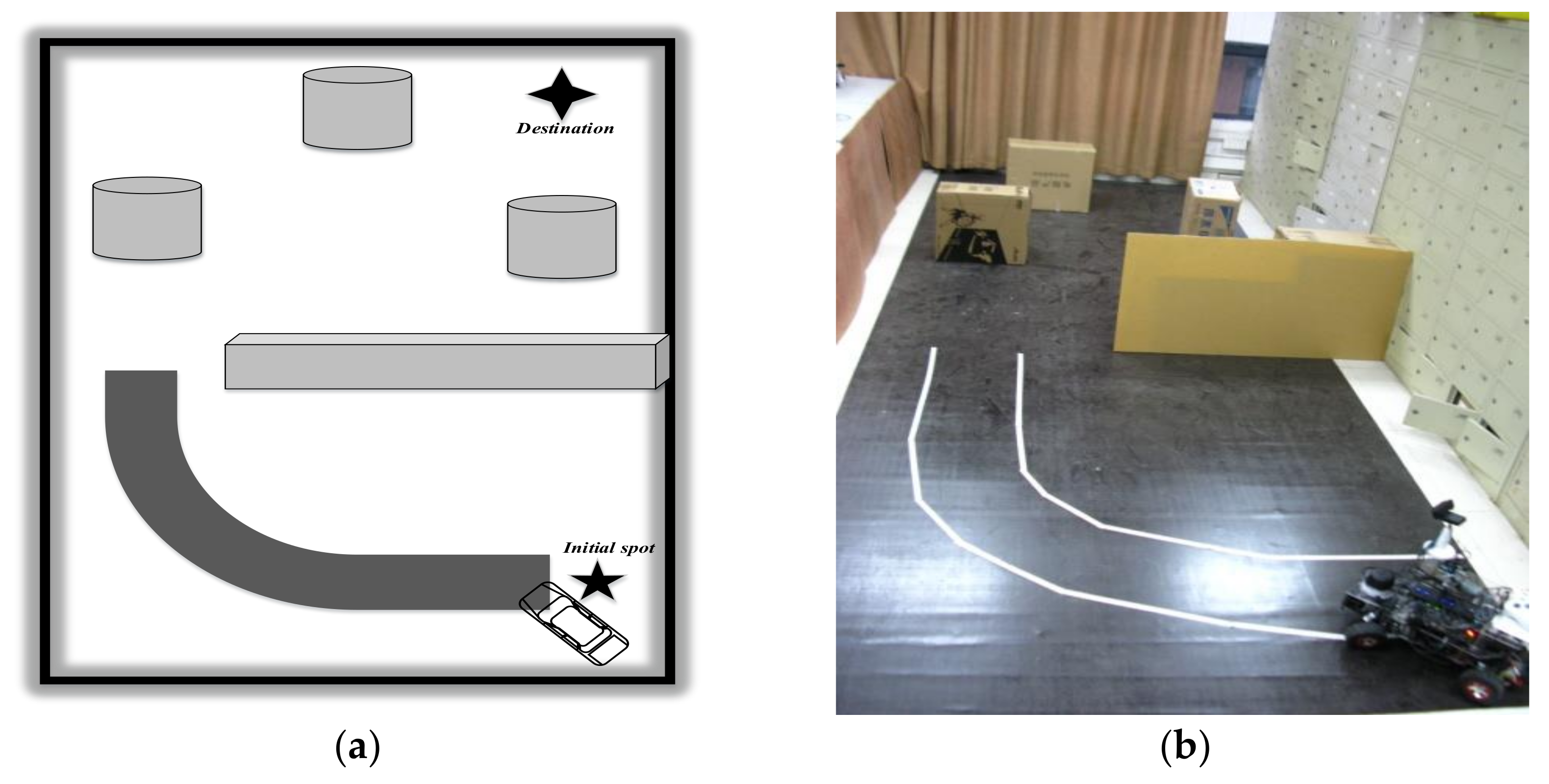

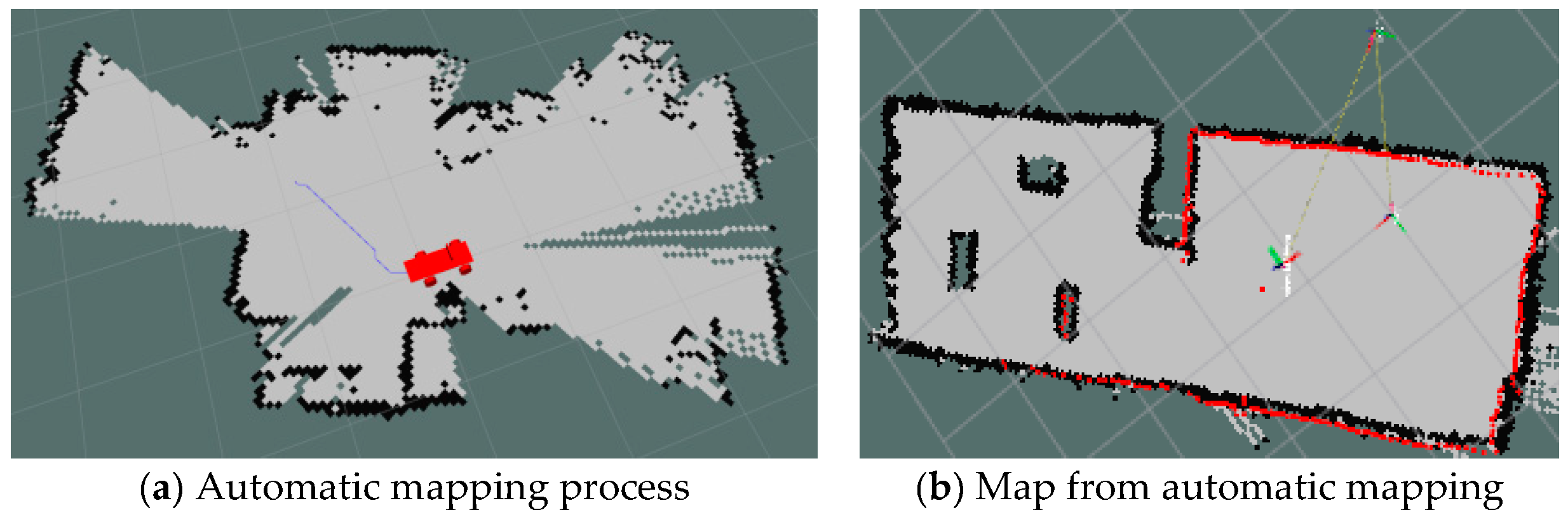

4.4. Result of SLAM

4.5. Result of Pose Estimation

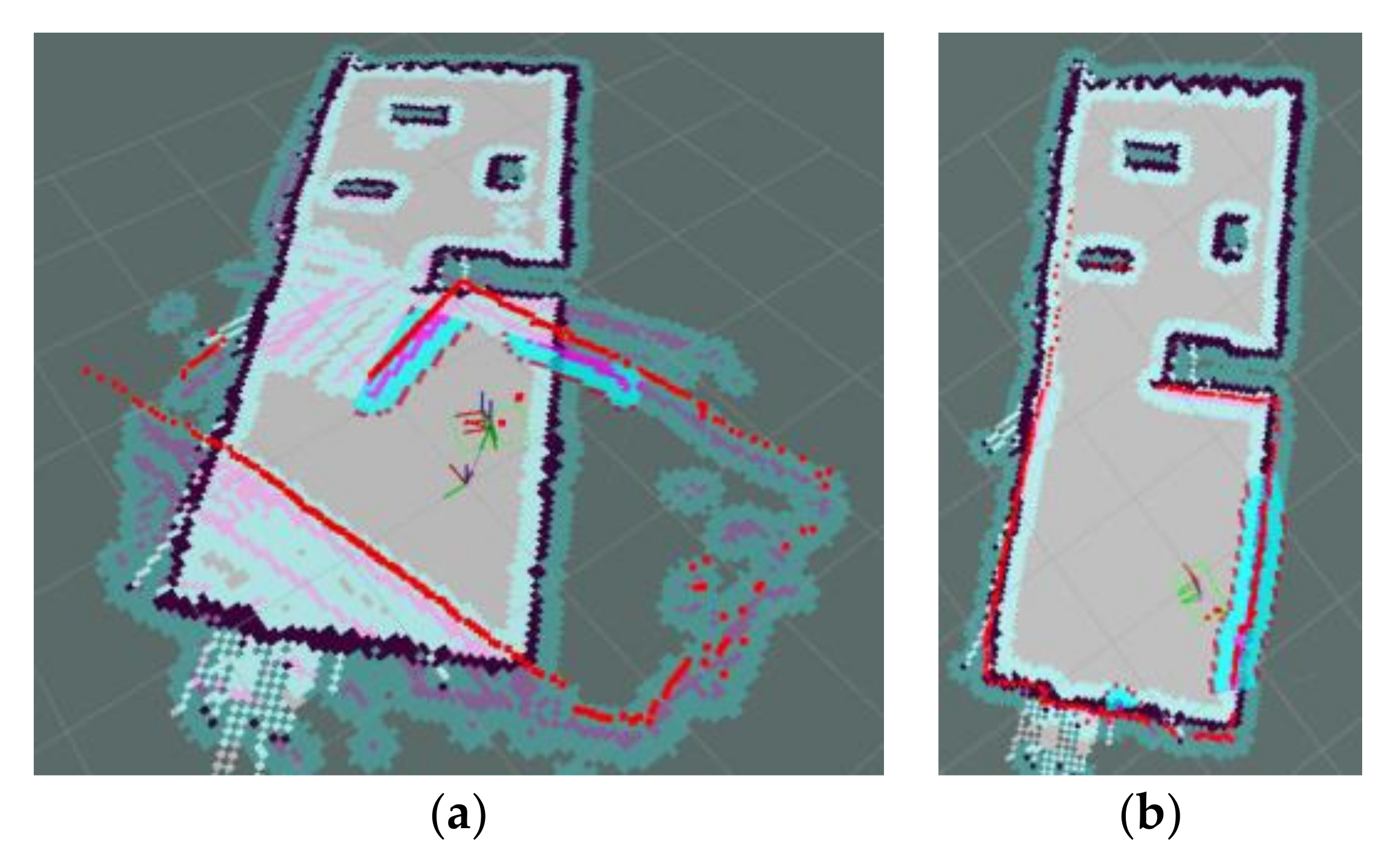

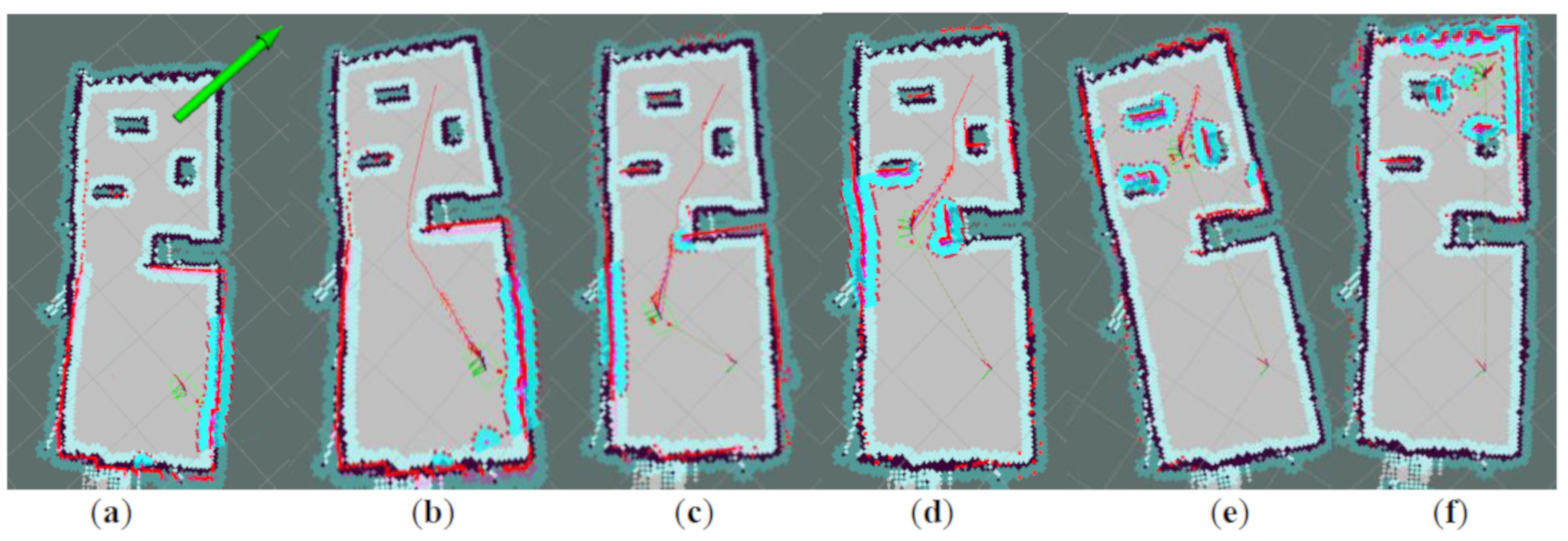

4.6. Result of Navigation

5. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, C.; Zhou, C.; Cao, W.; Li, F.; Jia, P. A Novel Design and Implementation of Autonomous Robotic Car Based on ROS in Indoor Scenario. Robotics 2020, 9, 19. https://doi.org/10.3390/robotics9010019
