Smart Agricultural Machine with a Computer Vision-Based Weeding and Variable-Rate Irrigation Scheme

Abstract

1. Introduction

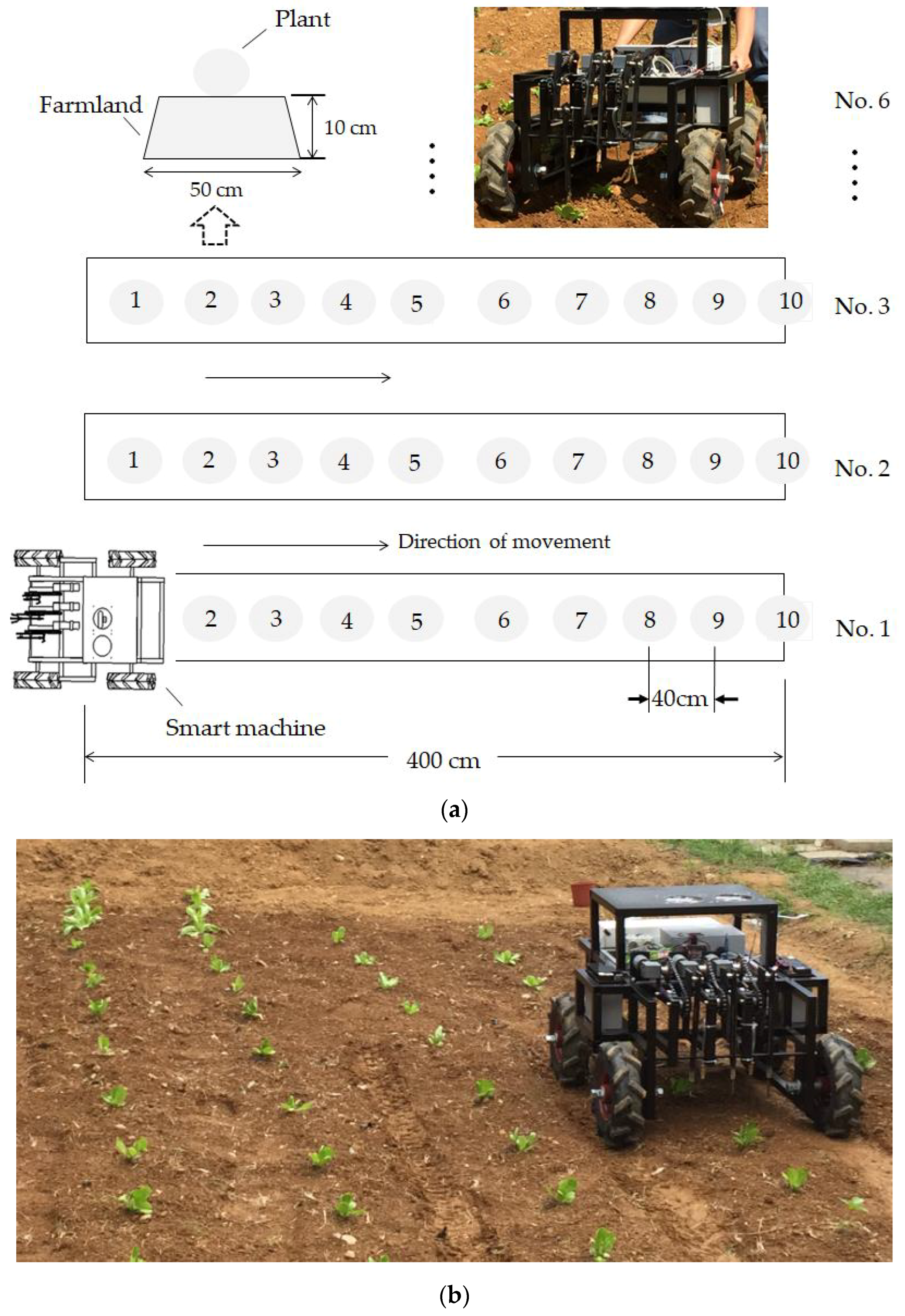

2. Materials and Methods

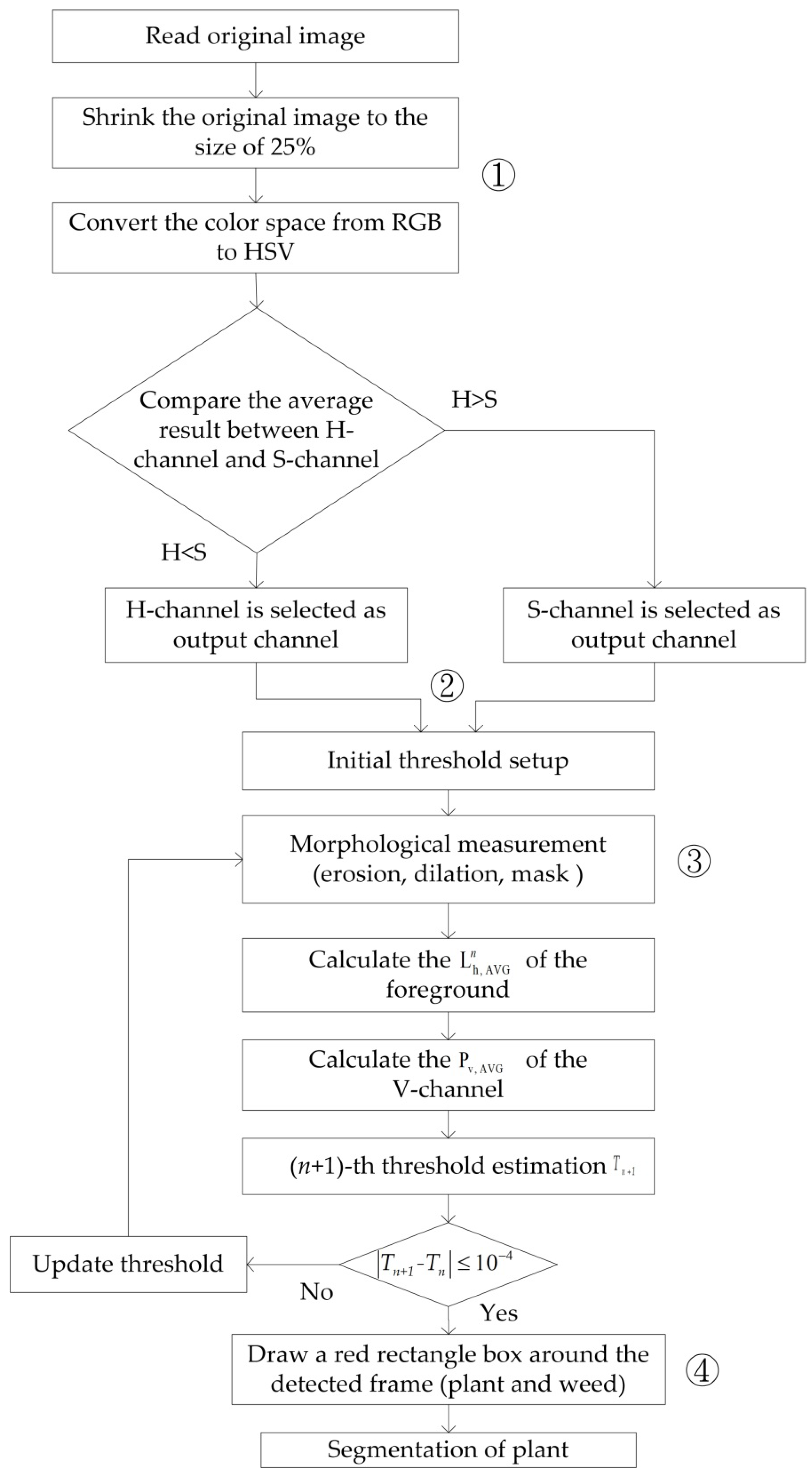

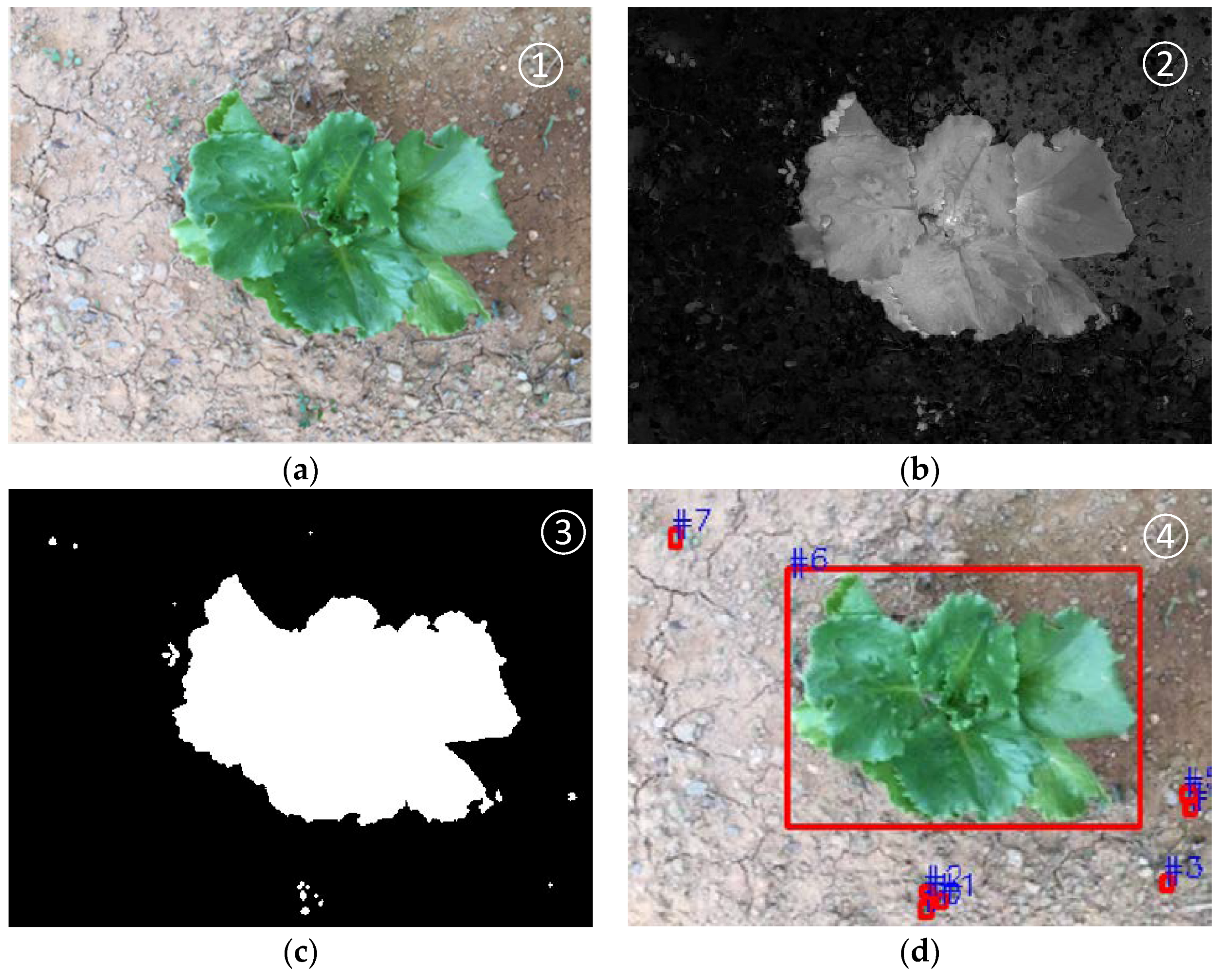

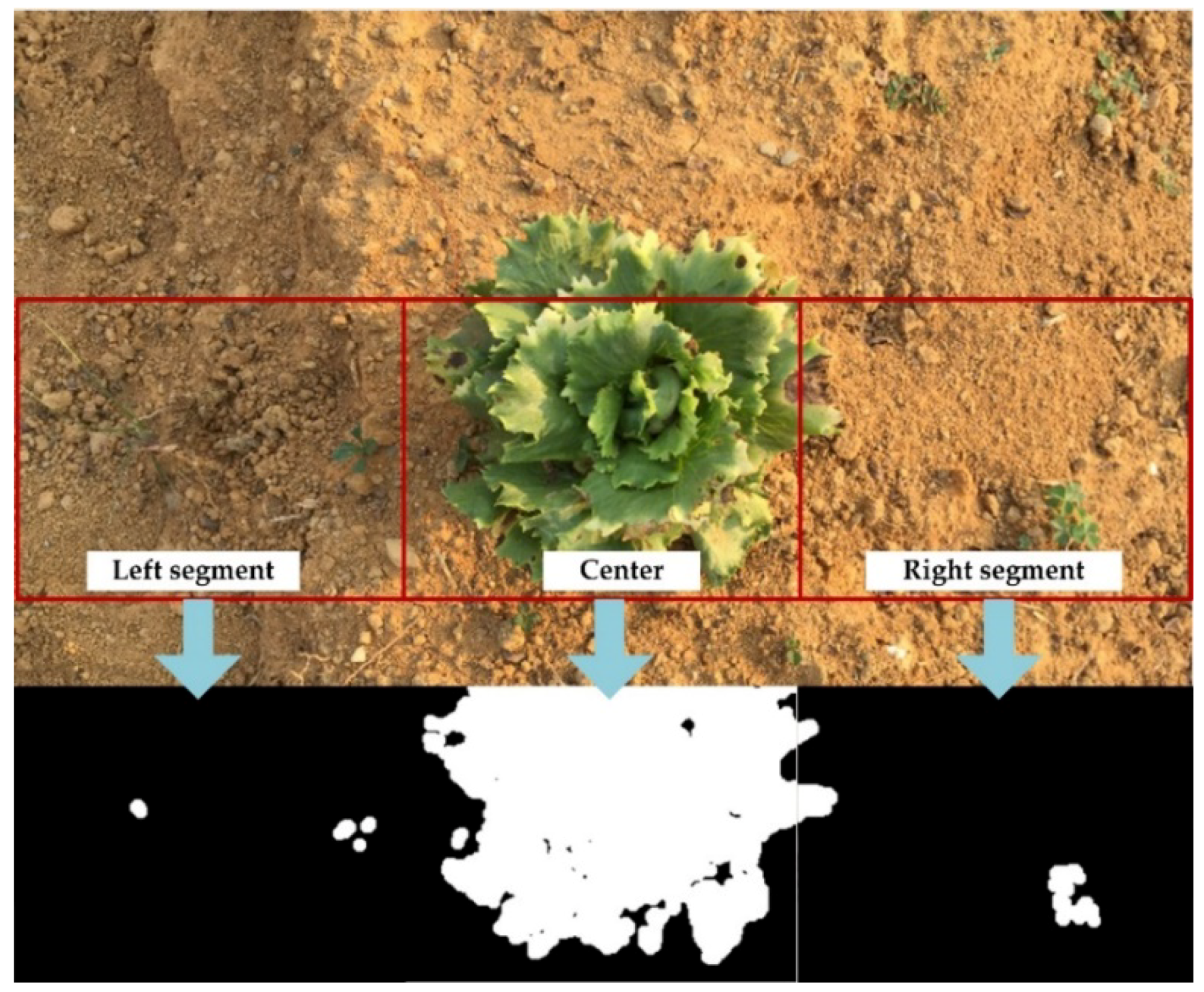

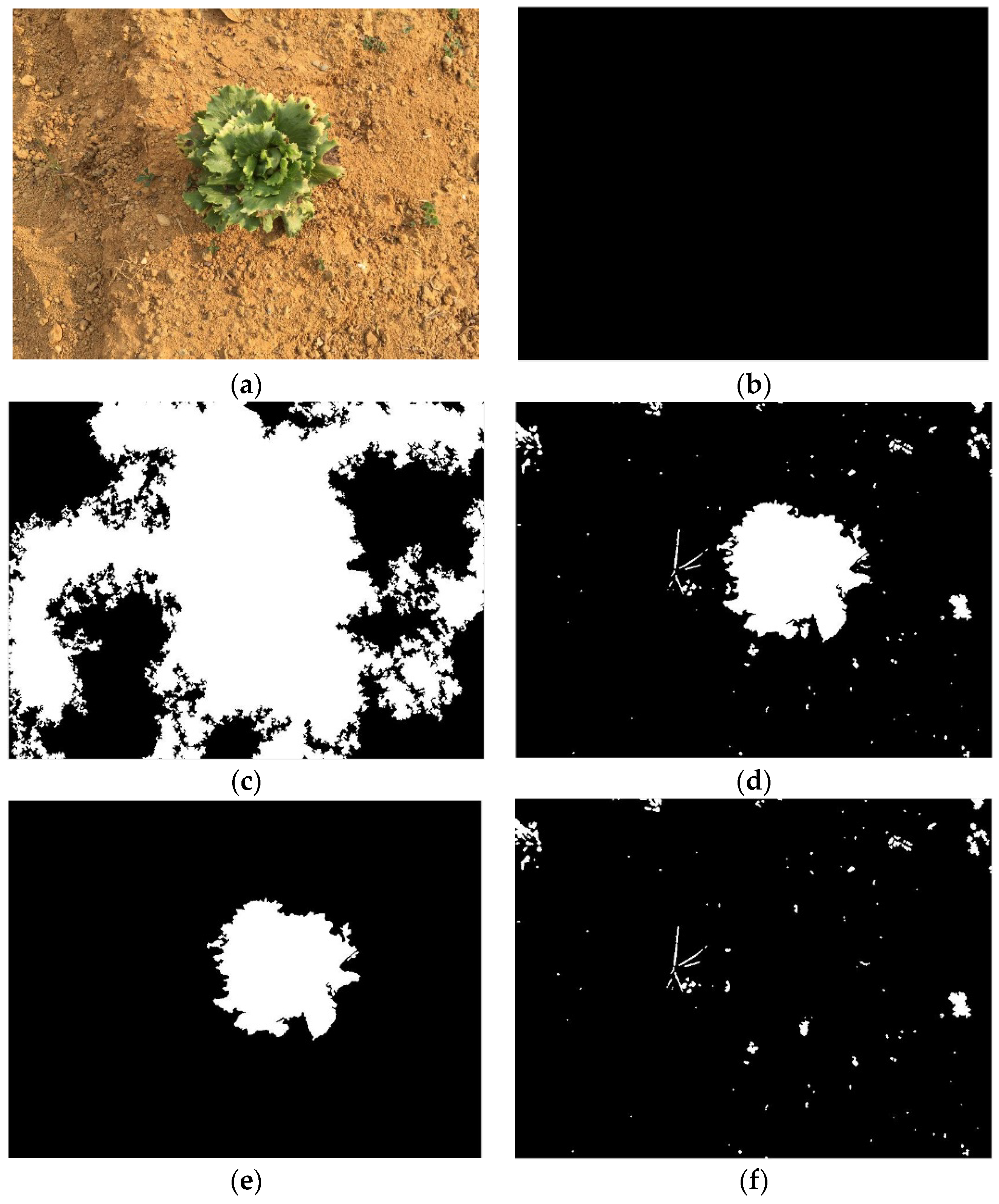

2.1. Image Processing Technique

2.1.1. Weed/Plant Classification

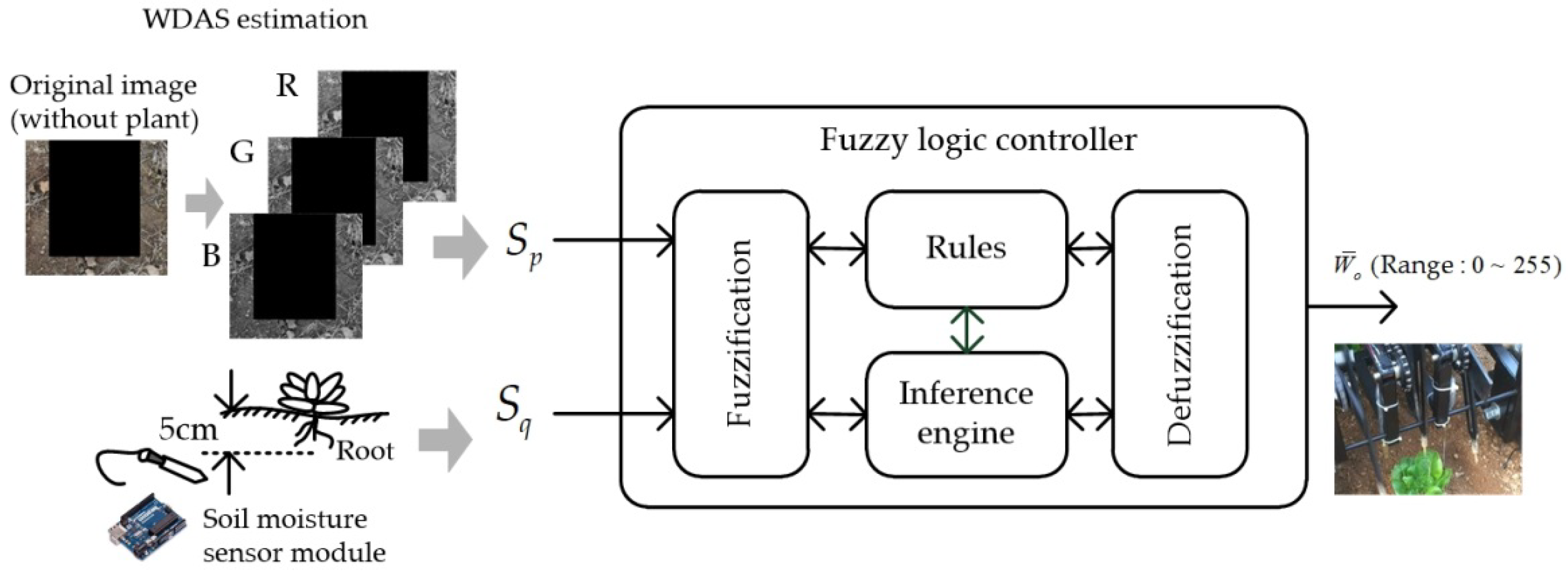

2.1.2. Soil Surfaces Moisture Content Estimation

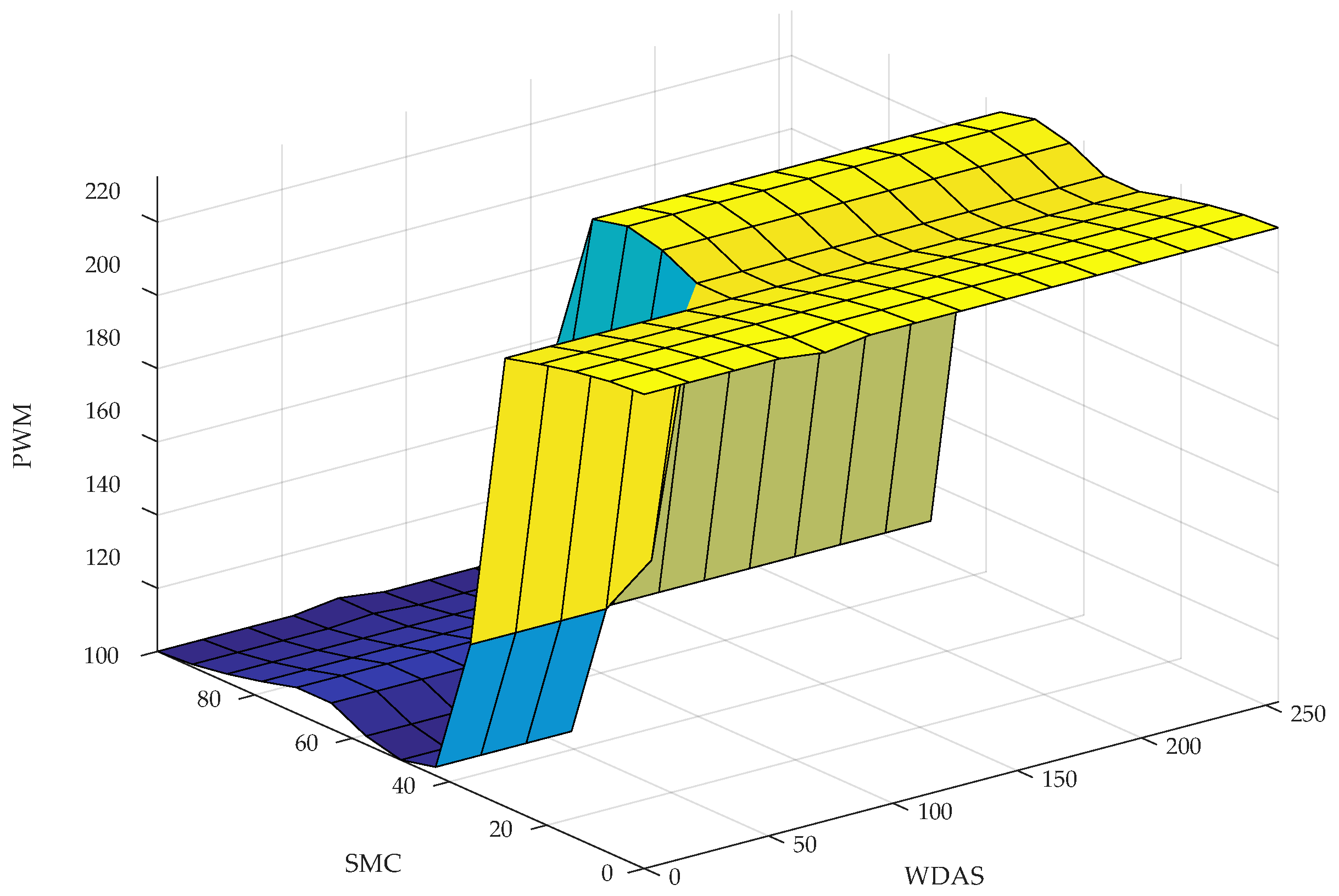

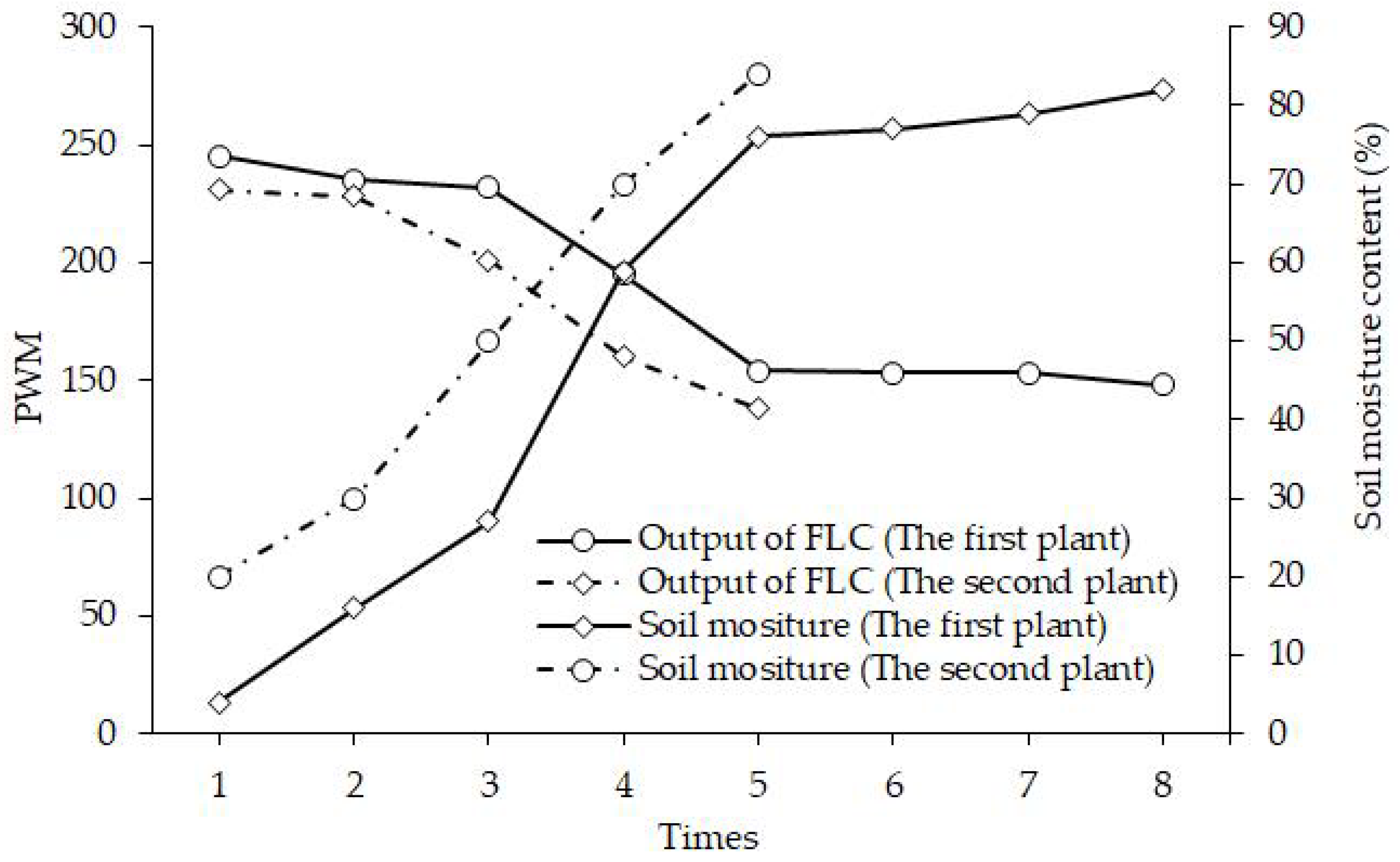

2.2. Variable Rate Irrigation Method

2.3. Multi-Tasking Process

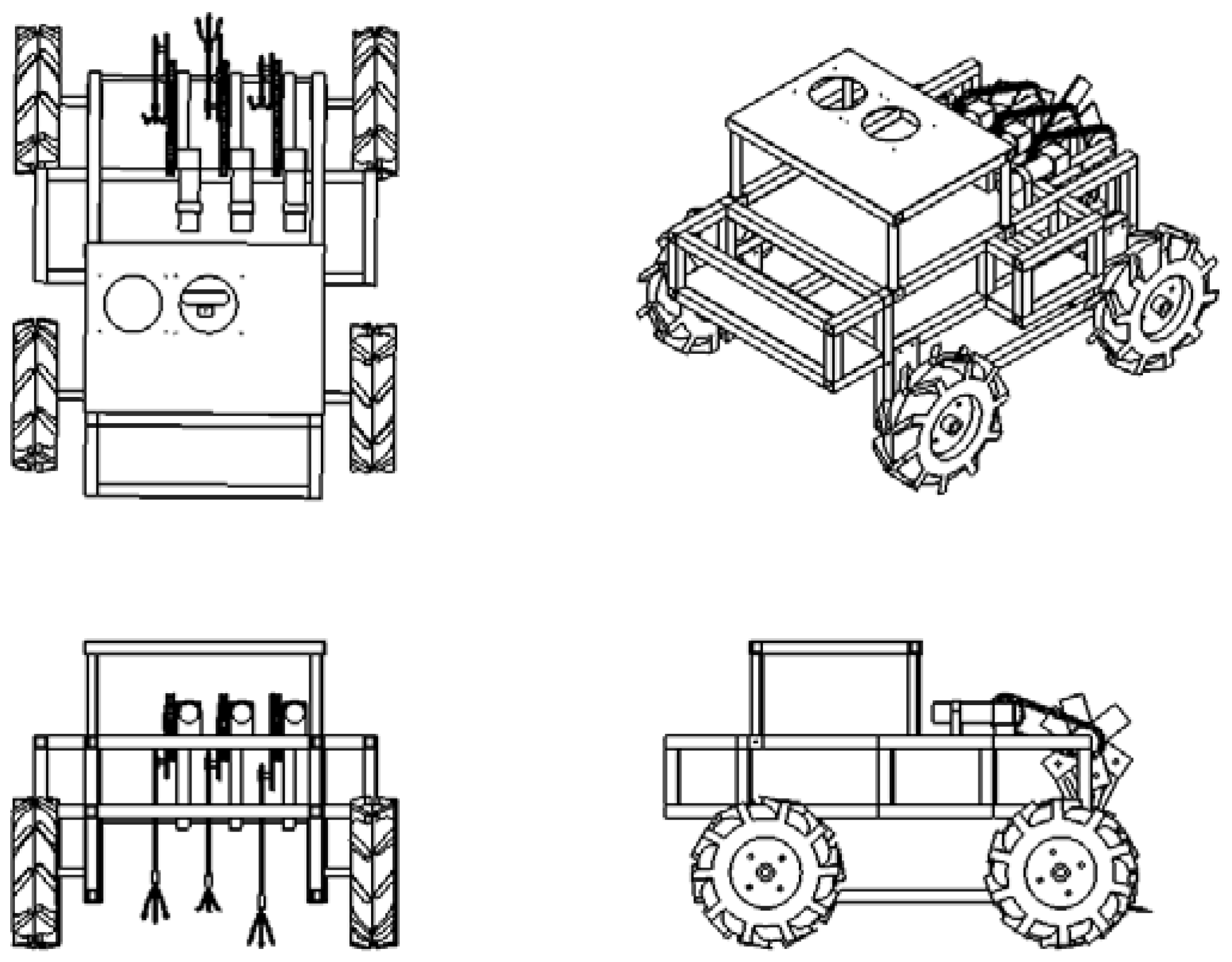

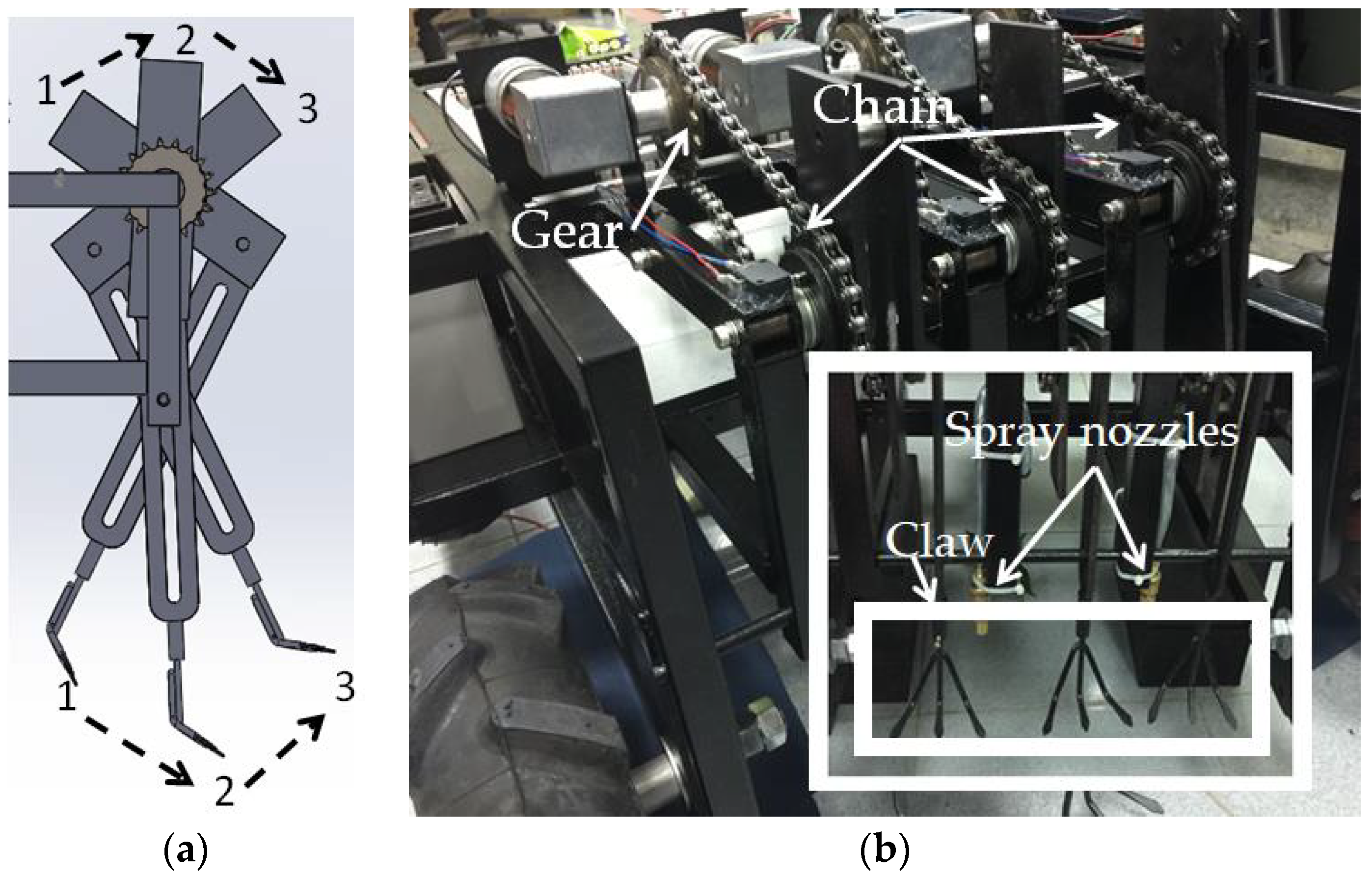

3. Description of the Mechatronics System

3.1. Mechanism Design

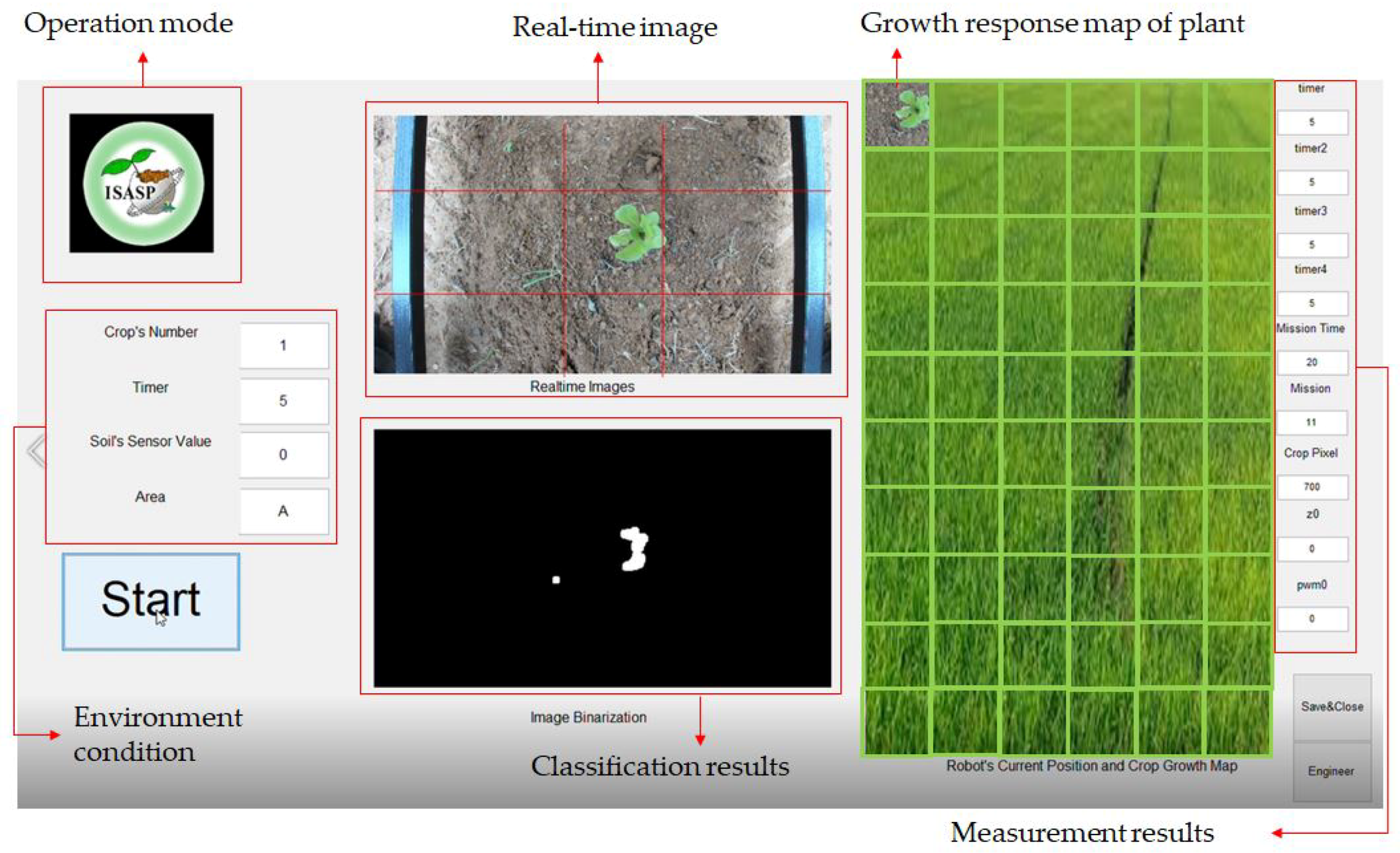

3.2. Hardware/Software System Implementation

4. Results and Discussion

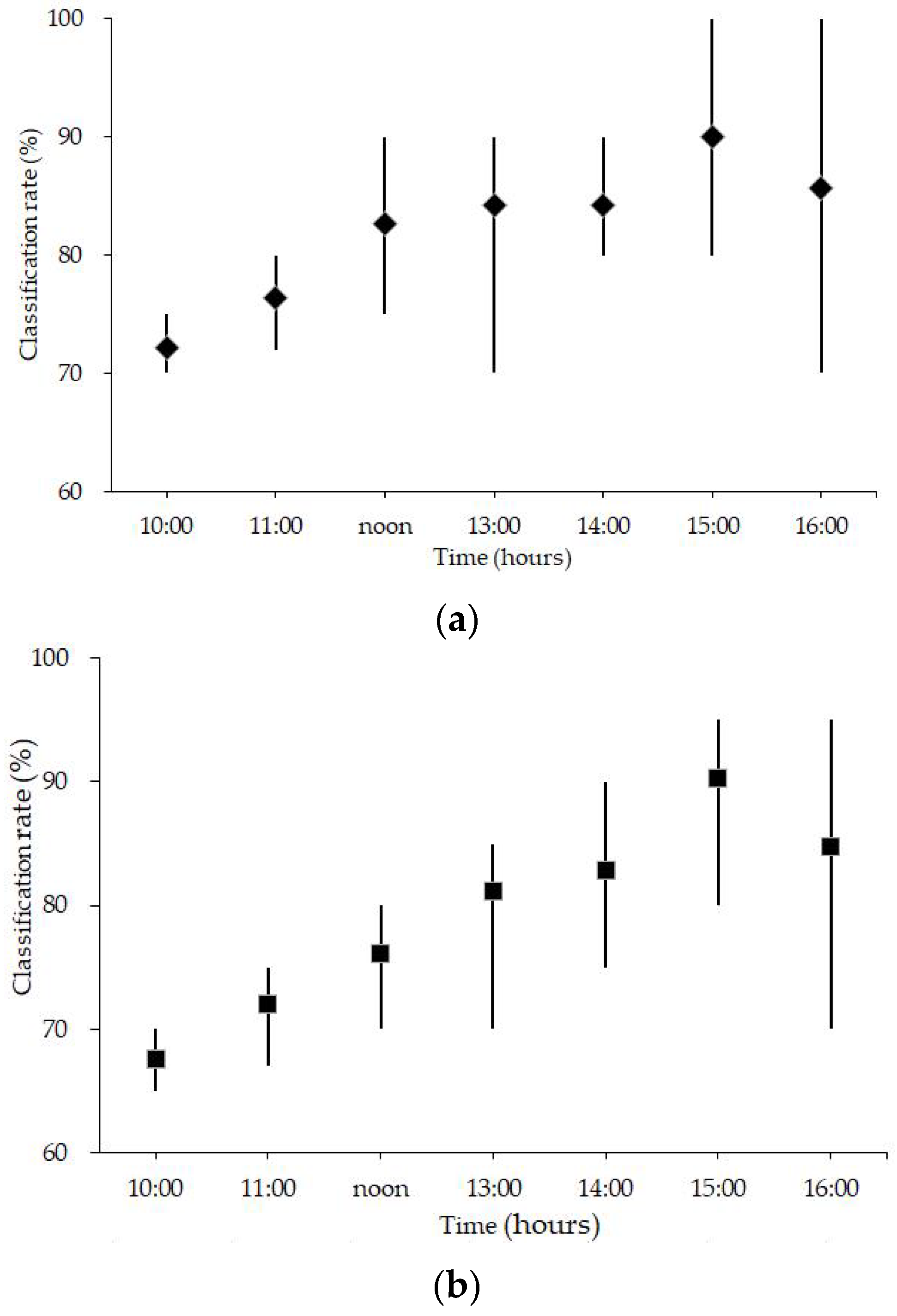

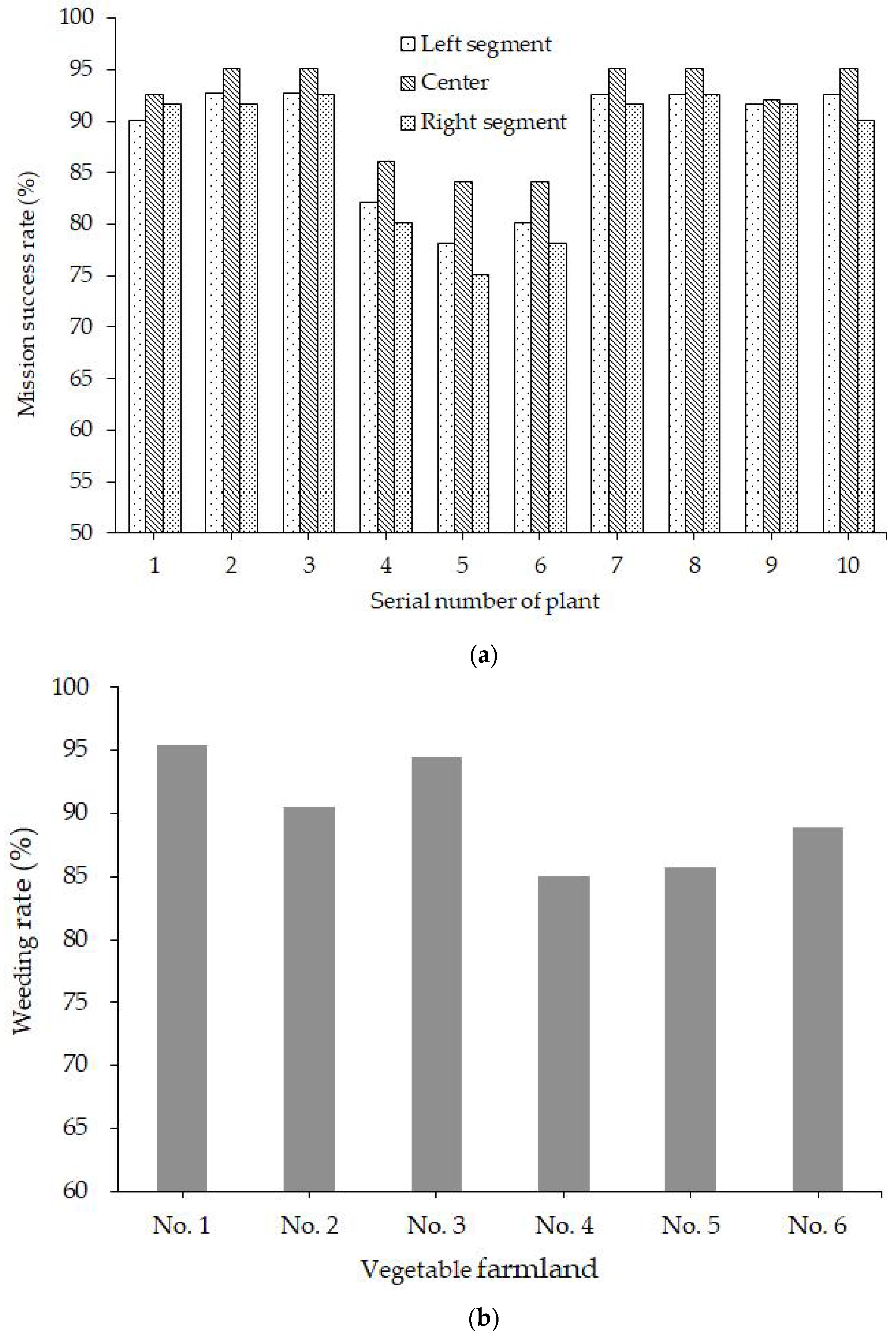

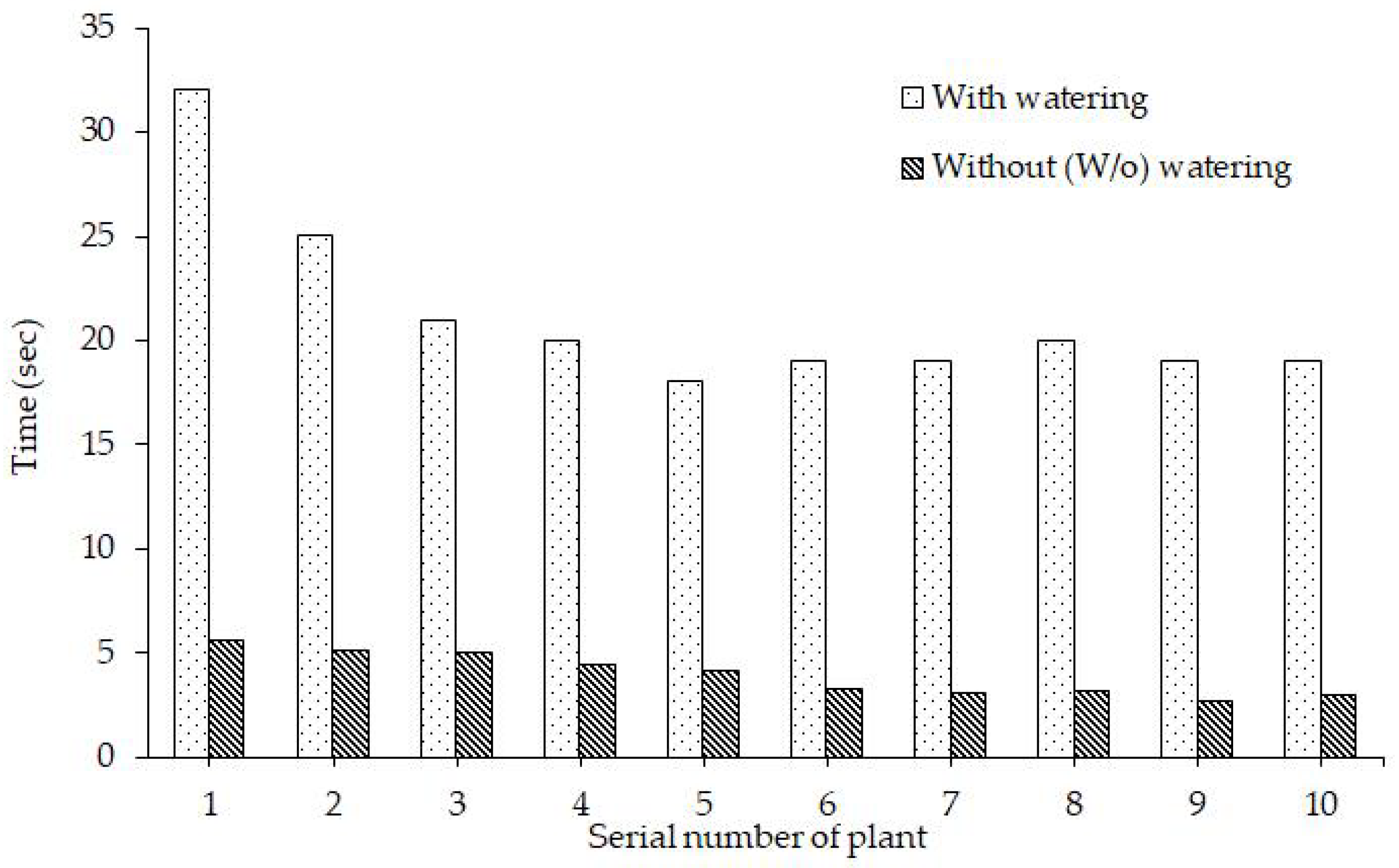

4.1. Scenario 1

4.2. Scenario 2

4.3. Scenario 3

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameters | Linguistic Labels | Parameters | Linguistic Labels | Parameters | Linguistic Labels |
|---|---|---|---|---|---|
| [0, 0, 91, 109] | W | [0, 0, 40] | H | [0, 0, 145] | L |
| [97, 105, 113, 0] | N | [30, 50, 70] | M | [135, 170, 200] | M |
| [100, 118, 255, 255] | D | [60, 100, 100] | L | [185, 255, 255] | H |

| (SMC) | W | N | D |
|---|---|---|---|
| L | H | H | H |
| M | L | M | H |
| H | L | L | L |
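
As a rough illustration of how a rule table of this kind can be evaluated, the sketch below reads the table as rows L/M/H (the variable marked SMC) against columns W/N/D, with each cell giving an output label. The max-min inference scheme, the function name `fire_rules`, and the membership degrees in the example are assumptions made for illustration; they are not taken from the article.

```python
# Rule base transcribed from the rule table:
# RULES[row_label][column_label] -> output label.
RULES = {
    "L": {"W": "H", "N": "H", "D": "H"},
    "M": {"W": "L", "N": "M", "D": "H"},
    "H": {"W": "L", "N": "L", "D": "L"},
}


def fire_rules(row_degrees, col_degrees):
    """Evaluate every rule with max-min inference (an assumed scheme).

    row_degrees: membership degree per row label (L, M, H).
    col_degrees: membership degree per column label (W, N, D).
    Returns the aggregated degree for each output label.
    """
    out = {"L": 0.0, "M": 0.0, "H": 0.0}
    for row_label, row in RULES.items():
        for col_label, out_label in row.items():
            # Rule strength: the weaker of the two antecedent degrees.
            strength = min(row_degrees.get(row_label, 0.0),
                           col_degrees.get(col_label, 0.0))
            # Aggregation: keep the strongest rule for each output label.
            out[out_label] = max(out[out_label], strength)
    return out


# Hypothetical membership degrees, chosen only to exercise the rules.
example = fire_rules({"L": 0.0, "M": 0.7, "H": 0.3},
                     {"W": 0.2, "N": 0.8, "D": 0.0})
print(example)
```

The aggregated degrees would still need a defuzzification step (for example, a centroid over the output membership functions) to produce a single control value; that step is omitted here because the output variable and its units are not recoverable from the tables alone.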

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chang, C.-L.; Lin, K.-M. Smart Agricultural Machine with a Computer Vision-Based Weeding and Variable-Rate Irrigation Scheme. Robotics 2018, 7, 38. https://doi.org/10.3390/robotics7030038