A Review of Human Intention Recognition Frameworks in Industrial Collaborative Robotics

Abstract

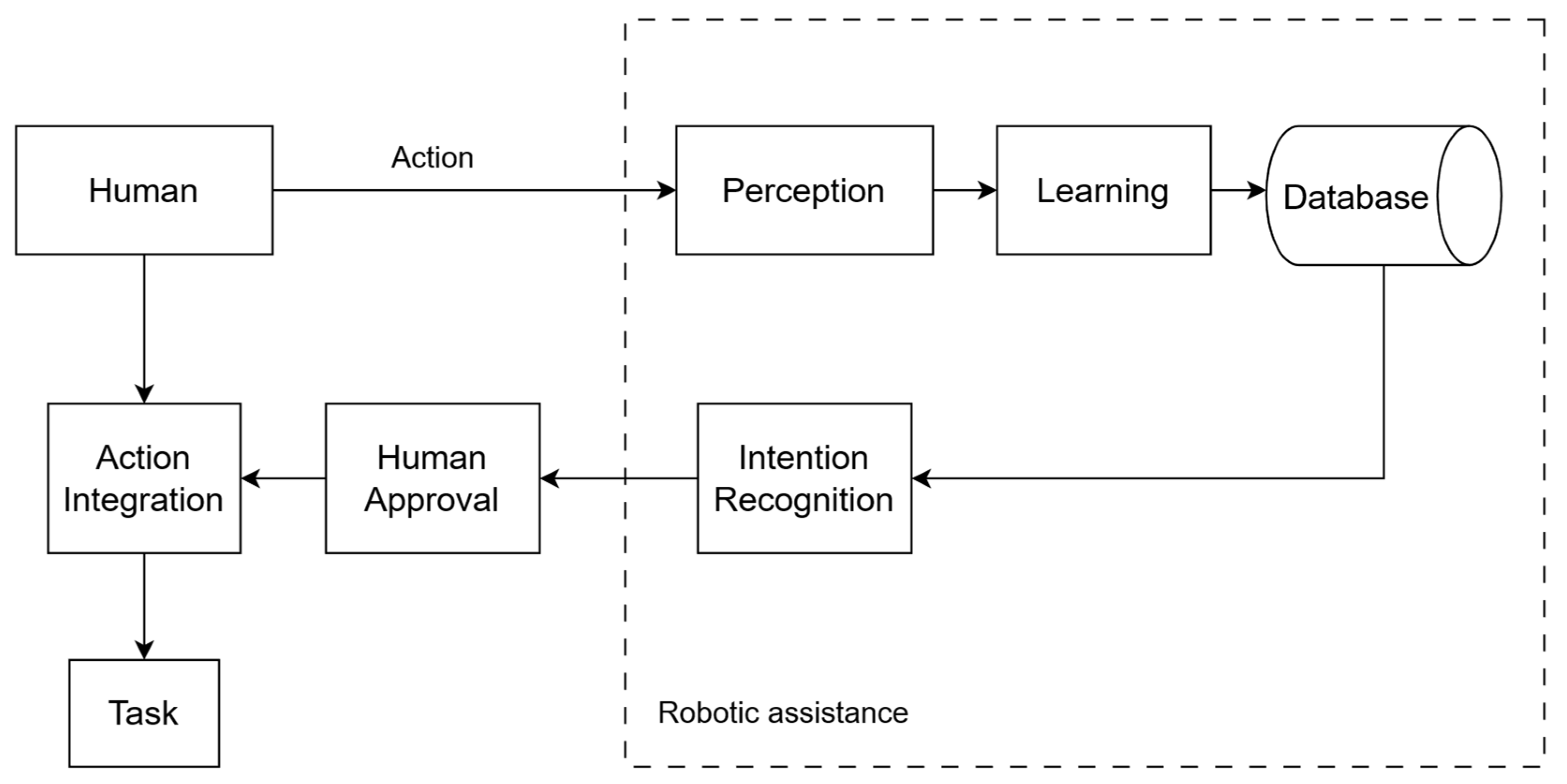

1. Introduction

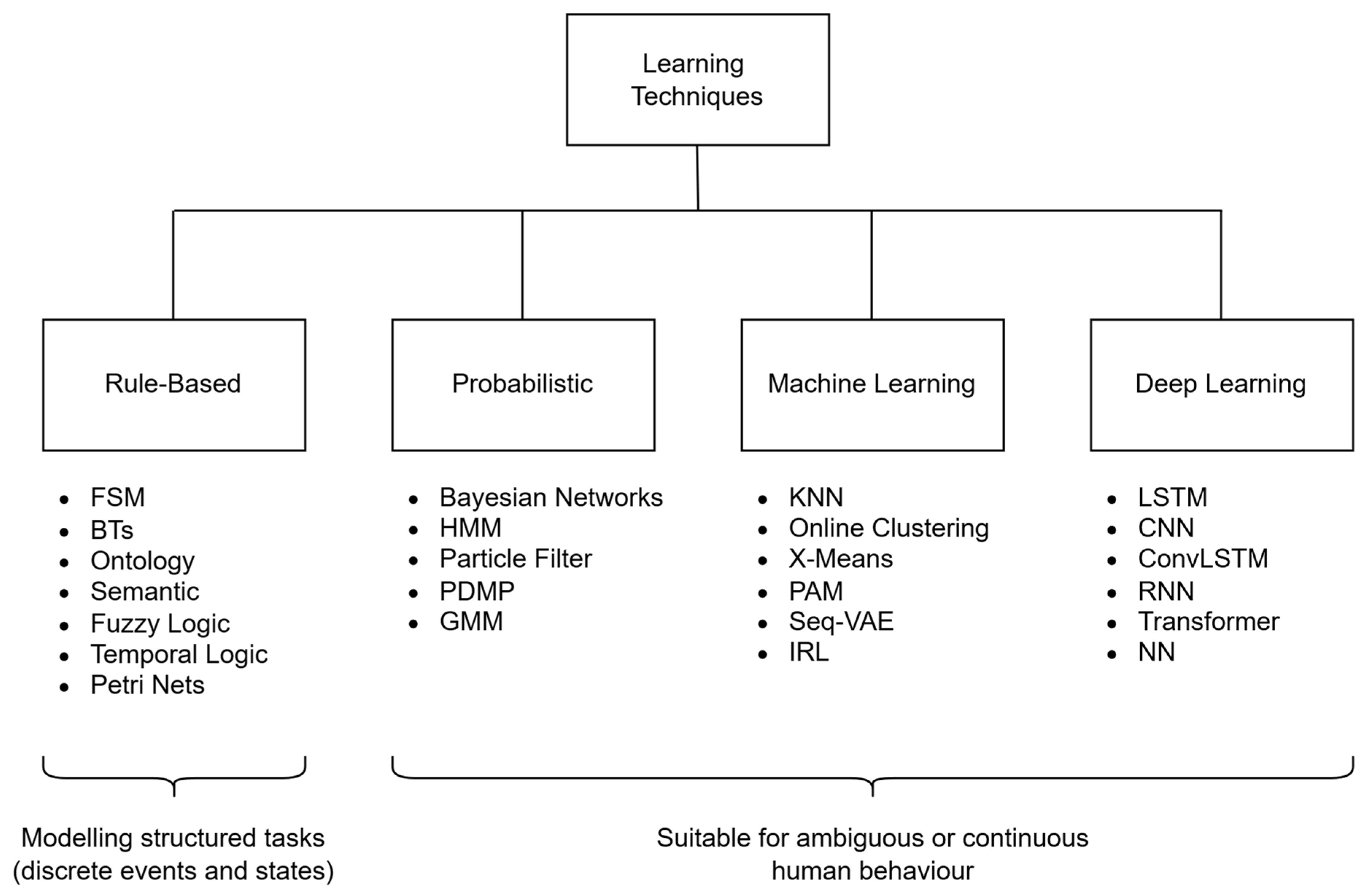

2. Learning Techniques for Intention Detection Towards Human-like Adaptability

2.1. Rule-Based Approaches

2.2. Probabilistic Models

2.3. Machine Learning Models

2.4. Deep Learning Models

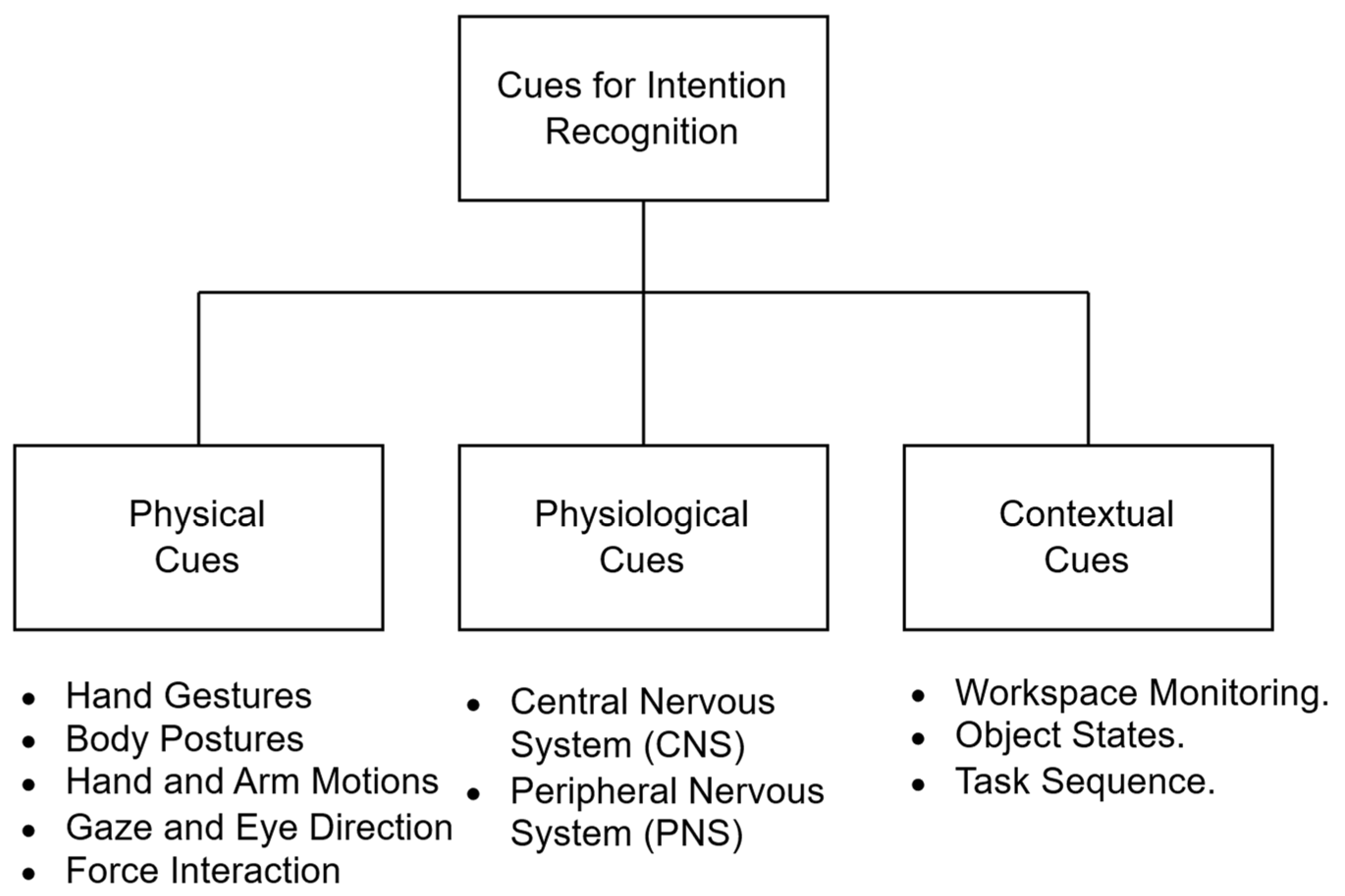

3. Cues for Human Intention Recognition

3.1. Physical Cues

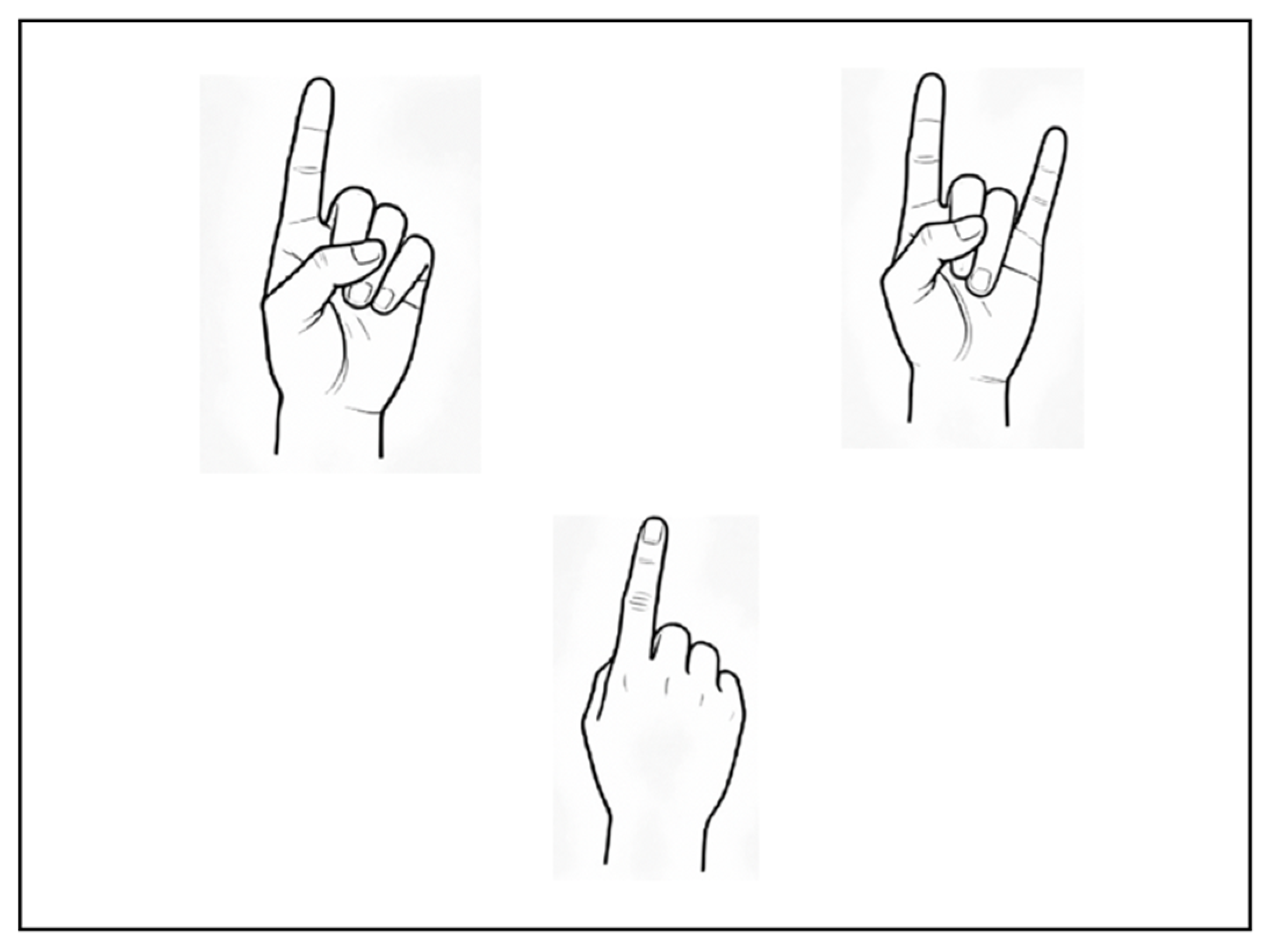

3.1.1. Hand Gestures

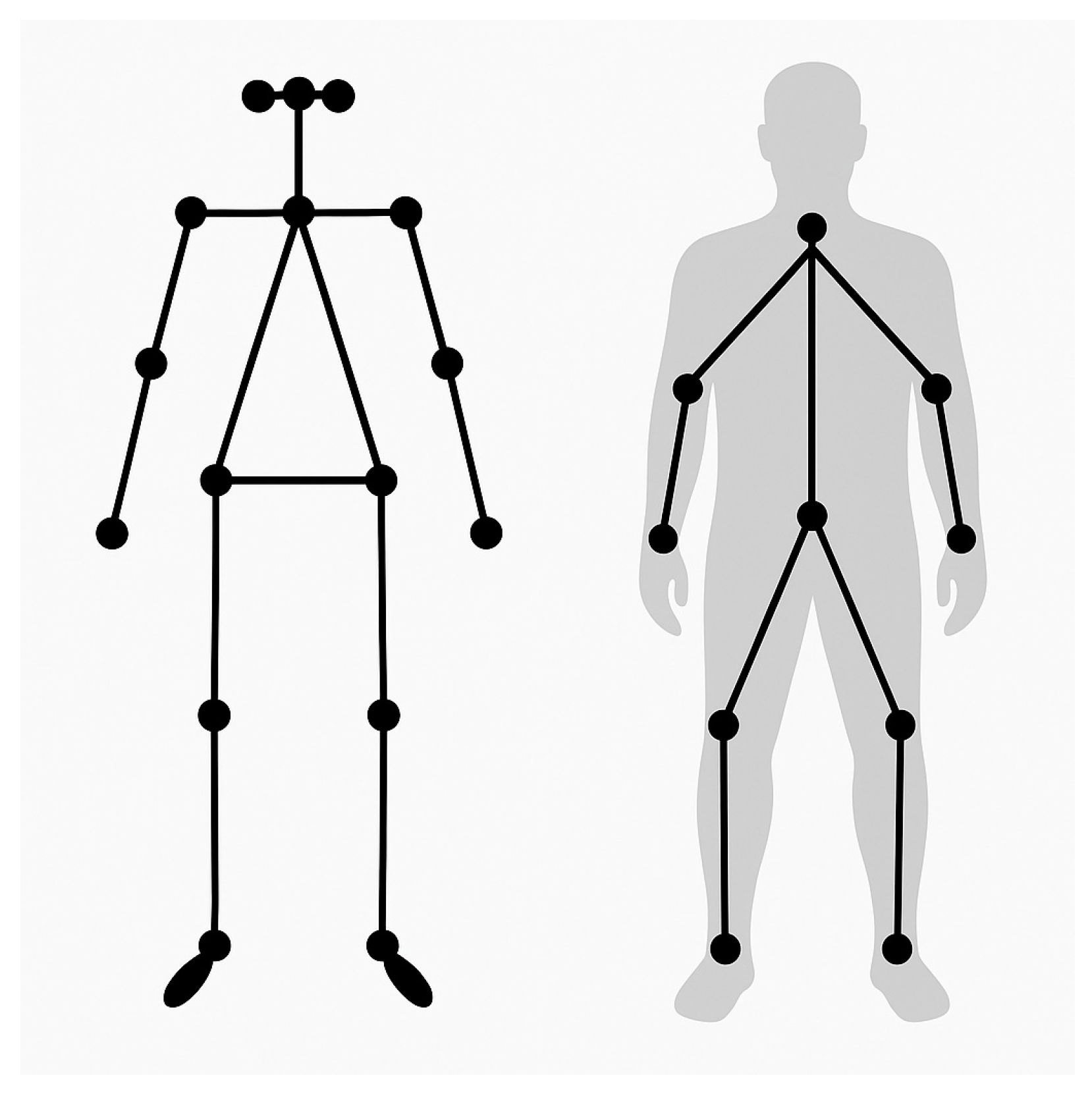

3.1.2. Body Postures

3.1.3. Hand and Arm Motions

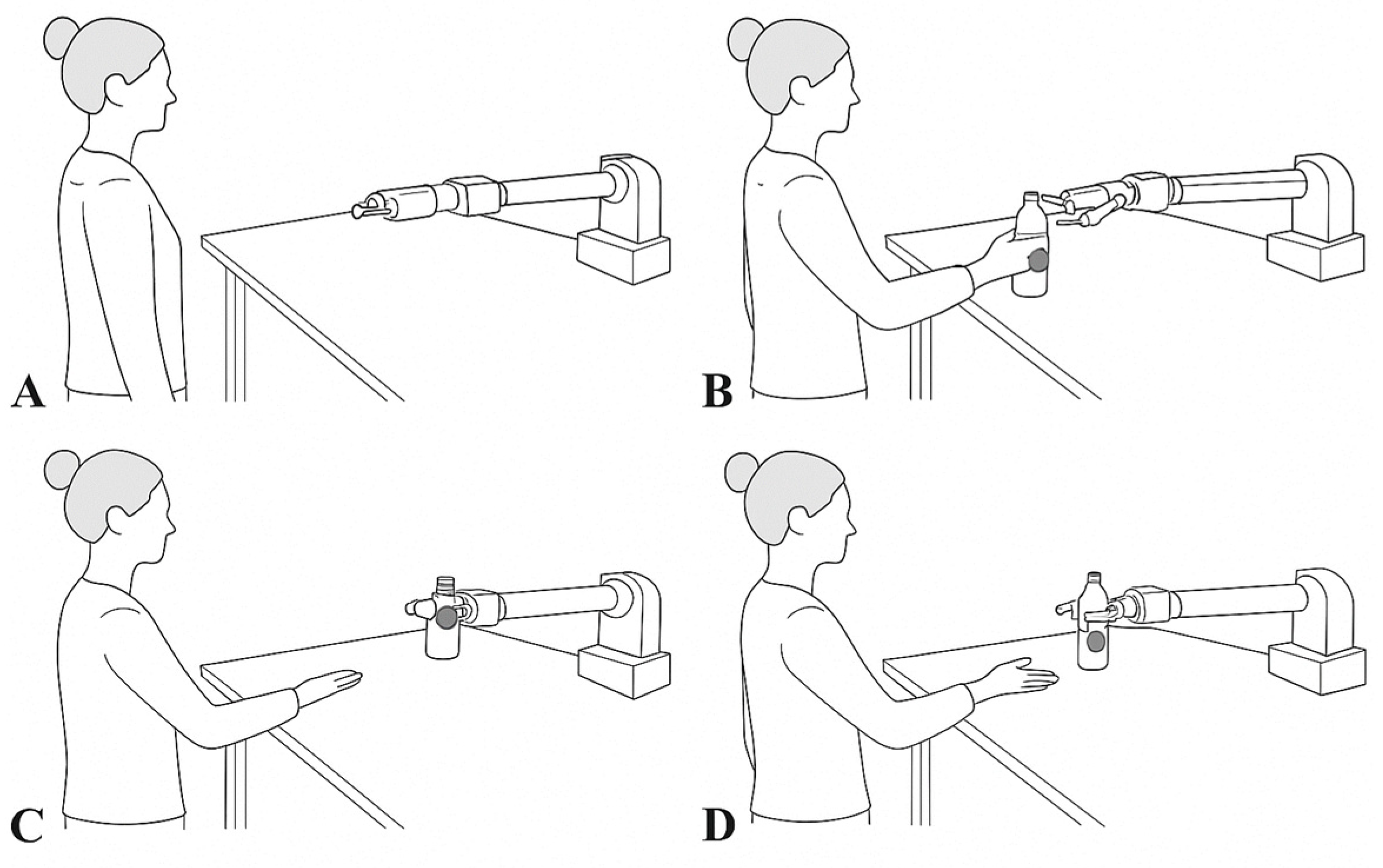

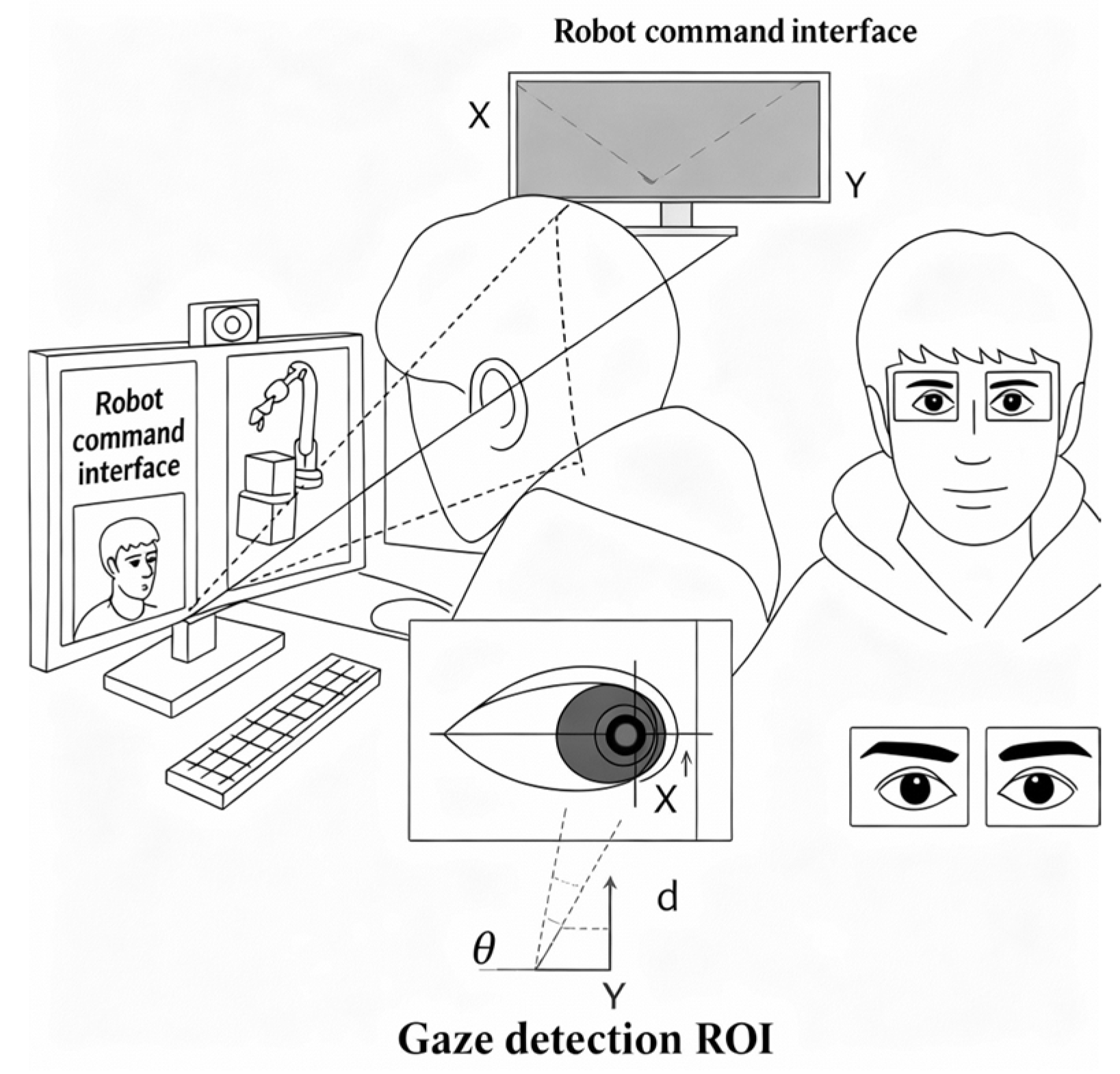

3.1.4. Gaze and Eye Direction

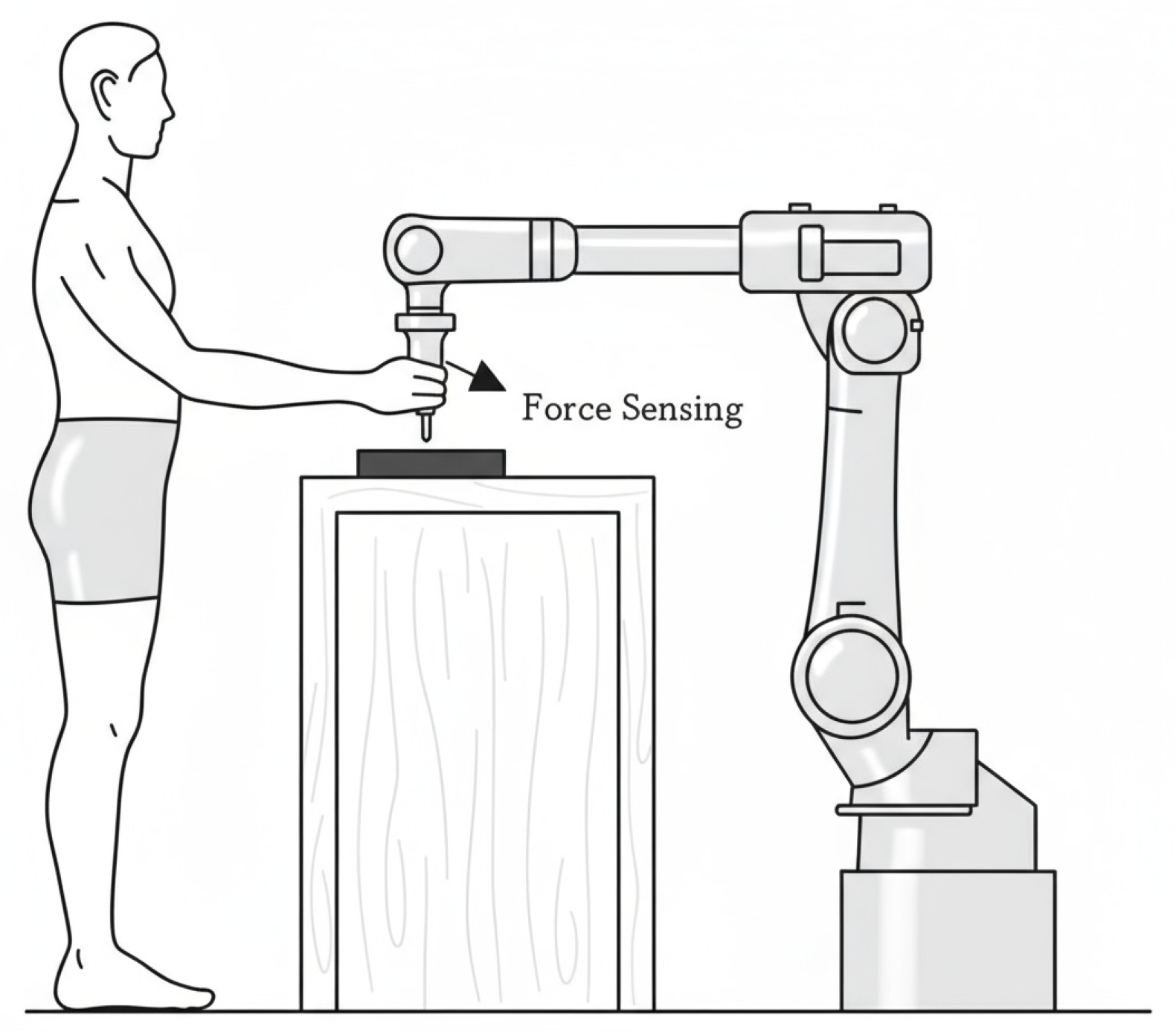

3.1.5. Force Interaction

3.2. Physiological Cues

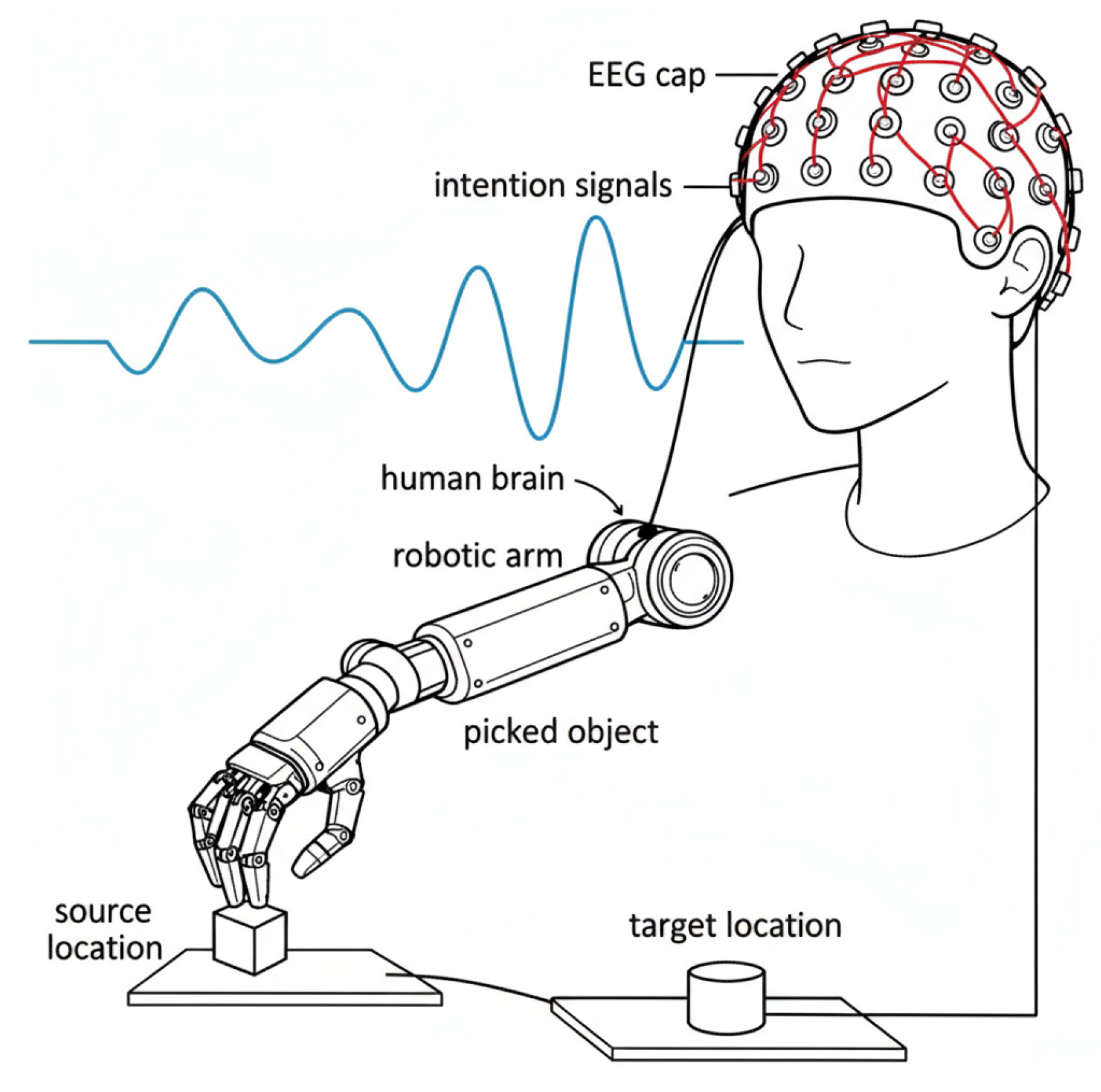

3.2.1. Central Nervous System Cues

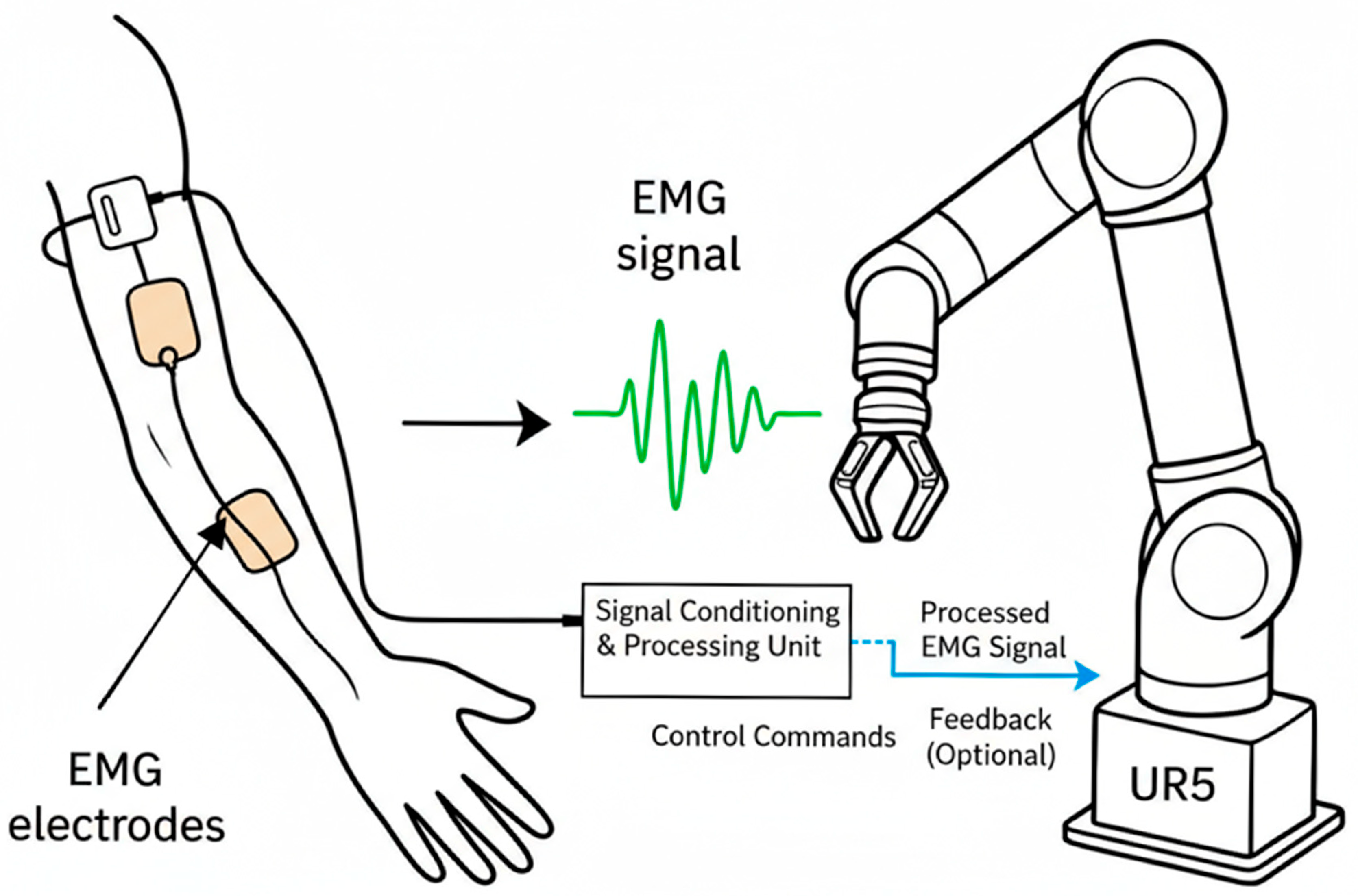

3.2.2. Peripheral Nervous System Cues

3.3. Contextual Cues

4. Discussion

4.1. Progression of Learning Models and Sensory Approaches

4.2. Interaction Types and Application Cases

4.3. Key Limitations

- Scalability and Complexity: Rule-based models are interpretable but often inflexible. Their complexity can grow rapidly as tasks become more elaborate, which makes them difficult to manage in changing environments and limits their ability to adapt to new interactions. They also rely on simple, linearly classified gestures, which restricts their applicability to more complex human actions [35,36,38,39,40,41,97].

- Accuracy and Robustness: Probabilistic models can produce temporary mispredictions when inputs are ambiguous or when different intentions share similar initial trajectories [51,53,54,55,99]. Machine learning [8,10,57,58,59,60] and deep learning [66,67,68,69,70,71] models are data-dependent, so their accuracy and robustness suffer when training data do not reflect the diversity of industrial scenarios.

- Latency in Prediction: Although the goal is to provide proactive assistance, several methods introduce delays caused by extensive data collection or heavy processing. These delays hinder real-time responsiveness and reduce the robot’s ability to act in advance [14].

- Multimodal Integration Challenges: Although multimodal input is important, effectively combining different types of sensory data remains a challenge, because each cue has its own limitations. For example, gaze signals can lag and be less stable [86], force interaction signals can be ambiguous [88], physiological signals vary widely between users [89], and contextual cues from overhead vision can be occluded [98].

- Cognitive Understanding and Implicit Cues: Current systems often focus on explicit actions and treat human intention mainly as a sub-problem of task planning. There is a significant gap in understanding subtle, implicit human signals and internal cognitive states. Many models still rely on predictions made at a single point in time instead of capturing the complete, evolving intent behind human behaviour [70].

- Data Requirements: Machine learning [8,10,57,58,59,60] and deep learning [66,67,68,69,70,71] approaches depend on extensive, meticulously labelled datasets, which are difficult to obtain for varied industrial scenarios. Studies are often conducted with small datasets and therefore frequently fail to capture the full variability of real-world conditions.

- Interpretability: Machine learning and deep learning models often function as “black boxes” whose decisions lack transparency [101].

- Lack of Standardised Evaluation: Frameworks are often tested in very specific, constrained, or virtual environments. This makes it difficult to assess how well they perform in real industrial conditions.

- Usability: Several systems require complicated setup, careful tuning, or manual parameter selection by users, which makes them difficult to deploy and adapt.

- Industrial Readiness: Many proposed frameworks have high computational demands and latency, which hinders the real-time processing that dynamic industrial applications require.
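The transient mispredictions that probabilistic models show when intentions share similar initial trajectories can be illustrated with a minimal recursive Bayesian goal-inference sketch. It is not the method of any cited work: it assumes a hypothetical 2D workspace with known candidate goal positions and an assumed likelihood in which the hand's displacement direction is concentrated around the direction to the intended goal (a von Mises-style term with an assumed concentration `kappa`). The function name `goal_posterior` and the example scene are illustrative only.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def goal_posterior(trajectory: List[Point],
                   goals: Dict[str, Point],
                   kappa: float = 4.0,
                   prior: Optional[Dict[str, float]] = None) -> List[Dict[str, float]]:
    """Recursively update P(goal | hand positions observed so far).

    Assumed likelihood: p(step | goal) ~ exp(kappa * cos(angle between the
    hand displacement and the direction to the goal)). The von Mises
    normaliser depends only on kappa, so it cancels in the posterior.
    """
    names = list(goals)
    belief = dict(prior) if prior else {g: 1.0 / len(names) for g in names}
    history = [dict(belief)]
    for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:]):
        vx, vy = x1 - x0, y1 - y0
        speed = math.hypot(vx, vy)
        if speed > 0:  # a stationary hand carries no directional evidence
            for g in names:
                dx, dy = goals[g][0] - x0, goals[g][1] - y0
                dist = math.hypot(dx, dy)
                cos_angle = (vx * dx + vy * dy) / (speed * dist) if dist > 0 else 1.0
                belief[g] *= math.exp(kappa * cos_angle)
            total = sum(belief.values())
            belief = {g: p / total for g, p in belief.items()}
        history.append(dict(belief))
    return history


# Hypothetical scene: goals A and B lie in almost the same direction, C does not.
goals = {"A": (10.0, 1.0), "B": (10.0, -1.0), "C": (0.0, 10.0)}
# The hand first moves straight ahead, then veers towards A.
traj = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (5.0, 0.3), (7.0, 0.6), (9.0, 0.9)]

for step, belief in enumerate(goal_posterior(traj, goals)):
    print(step, {g: round(p, 3) for g, p in belief.items()})
```

While the hand moves straight ahead, goals A and B receive identical evidence and the posterior stays split between them; only once the trajectory bends does A dominate. Any decision taken during the ambiguous phase is effectively a coin toss, which is one way the temporary mispredictions arise. A larger `kappa` makes the belief react faster but also amplifies measurement noise.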

4.4. Recommendations

- Sophisticated sensor fusion: Combining different sensory inputs to overcome the limitations of individual sensors.

- Generalisable learning models: Focus on creating models that can perform well across a variety of tasks and for different users.

- Operation in complex environments: Ensuring that systems operate reliably in real time in dynamic, unstructured industrial environments.

- Address prediction latency: Reducing the time needed to predict human intentions, enabling more proactive and seamless collaboration.

- Deeper cognitive understanding: Developing models that move beyond basic action recognition towards a deeper understanding of human intent, including ambiguous and implicit signals.

- Account for Human and Environmental Variability: Human intentions are not static. Humans continually adapt their actions in response to the robot’s behaviour and to changing environmental conditions, which makes intention prediction highly context-dependent and variable over time. Models should therefore track how intent evolves rather than treat it as fixed.

- Extensive exploration of hybrid models: Developing next-generation hybrid models that combine the strengths of different algorithmic approaches.

- Ethical aspects: Investigating ethical issues such as potential algorithmic bias in models and the wider effects of deploying these technologies in the workplace.

- Integration of Complementary Control Approaches: Future research could incorporate complementary control strategies, for example learning the desired motion profile to satisfy a force objective [102], or learning environmental dynamics so that the robot can follow the user’s motion intent with high manoeuvrability [103].

- Standardisation efforts: Establishing standardised benchmark datasets and evaluation protocols would address the current limitation that systems are often tested in very specific or limited scenarios. Standardisation would allow fairer comparisons and accelerate the development of robust, industry-ready solutions.

- Moving Beyond Prescriptive Safety: Current safety standards for collaborative robotics, such as ISO/TS 15066, offer important but often rigid guidelines. Given the rapid development of technology in collaborative workspaces, a shift from reactive to proactive safety measures would be beneficial [98].

- Addressing Handedness Bias in Collaborative Robot Gesture Recognition: Future research should develop balanced datasets and hand-agnostic gesture recognition models so that gesture-based systems provide equal safety and efficiency for users regardless of handedness.

- Prioritising Elicitation Studies for Intent-Driven Human–Robot Collaboration: Future frameworks require a user-centred design that integrates comprehensive elicitation studies early in the development process. These studies collect rich behavioural data that capture human intent more effectively than kinematic information alone. Such human-centred data help build robust predictive models, enabling robots to act proactively rather than reactively.
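The sensor-fusion recommendation, together with the cue-specific weaknesses noted under multimodal integration (lagging gaze, ambiguous force signals, occluded overhead vision), can be made concrete with a simple late-fusion sketch. It assumes that each modality already outputs a probability distribution over the same set of candidate intentions; the distributions are combined as a reliability-weighted product (a log-linear pool, also called a weighted product of experts), and a modality that is currently unavailable, such as an occluded camera, is skipped. The modality names, intention labels and reliability weights are hypothetical; in practice the weights would need to be learned or calibrated.

```python
import math
from typing import Dict, Optional

Dist = Dict[str, float]


def fuse_intentions(per_modality: Dict[str, Optional[Dist]],
                    reliability: Dict[str, float],
                    eps: float = 1e-6) -> Dist:
    """Log-linear (weighted product-of-experts) late fusion.

    per_modality: modality -> distribution over intentions, or None if the
                  modality is unavailable (e.g. an occluded camera).
    reliability:  modality -> non-negative weight (assumed, to be calibrated).
    """
    available = {m: d for m, d in per_modality.items() if d is not None}
    if not available:
        raise ValueError("no modality available")
    labels = set().union(*available.values())  # union of intention labels
    log_scores = {}
    for label in labels:
        score = 0.0
        for m, dist in available.items():
            # eps avoids log(0) when one modality rules a label out entirely
            score += reliability.get(m, 1.0) * math.log(dist.get(label, 0.0) + eps)
        log_scores[label] = score
    # Normalise in log space (subtract the maximum) for numerical stability
    top = max(log_scores.values())
    unnorm = {k: math.exp(v - top) for k, v in log_scores.items()}
    z = sum(unnorm.values())
    return {k: v / z for k, v in unnorm.items()}


# Hypothetical frame: gaze is down-weighted, the overhead camera is occluded.
observations = {
    "gaze": {"pick_bolt": 0.5, "pick_bracket": 0.4, "handover": 0.1},
    "hand_motion": {"pick_bolt": 0.7, "pick_bracket": 0.2, "handover": 0.1},
    "overhead_camera": None,
}
reliability = {"gaze": 0.5, "hand_motion": 1.0, "overhead_camera": 1.0}
fused = fuse_intentions(observations, reliability)
print({k: round(v, 3) for k, v in sorted(fused.items())})
```

A product-style pool sharpens the estimate when modalities agree, while weights below one temper cues known to be less reliable. Dropping a missing modality, rather than imputing it, keeps the estimate usable under occlusion, at the price of relying more heavily on the remaining cues.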
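One inexpensive step towards the balanced, hand-agnostic gesture datasets recommended above is mirror augmentation: each 2D hand-keypoint sample is reflected about the vertical centre line of the image and its handedness label swapped, so that left- and right-handed examples are equally represented. This is a common augmentation technique, not one taken from the reviewed works. The sketch assumes keypoints normalised to [0, 1] image coordinates, a hypothetical `GestureSample` format, and a hypothetical `DIRECTIONAL_SWAP` mapping for gestures whose meaning depends on direction. For full-body skeletons, left/right joint indices would also have to be swapped, which this sketch omits.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class GestureSample:
    keypoints: Tuple[Tuple[float, float], ...]  # (x, y) in normalised [0, 1] image coordinates
    handedness: str                              # "left" or "right"
    gesture: str


# Hypothetical mapping for direction-dependent gestures; others keep their label.
DIRECTIONAL_SWAP = {"point_left": "point_right", "point_right": "point_left"}


def mirror(sample: GestureSample) -> GestureSample:
    """Reflect keypoints about x = 0.5 and swap handedness (and directional labels)."""
    flipped = tuple((1.0 - x, y) for x, y in sample.keypoints)
    other_hand = "left" if sample.handedness == "right" else "right"
    gesture = DIRECTIONAL_SWAP.get(sample.gesture, sample.gesture)
    return GestureSample(flipped, other_hand, gesture)


def balance_handedness(samples: List[GestureSample]) -> List[GestureSample]:
    """Originals plus one mirrored copy each, so both hands appear equally often."""
    return samples + [mirror(s) for s in samples]


def handedness_counts(samples: List[GestureSample]) -> Dict[str, int]:
    counts = {"left": 0, "right": 0}
    for s in samples:
        counts[s.handedness] += 1
    return counts


# Hypothetical, fully right-handed recording session.
data = [
    GestureSample(((0.20, 0.50), (0.25, 0.40)), "right", "point_left"),
    GestureSample(((0.30, 0.60), (0.35, 0.55)), "right", "stop"),
    GestureSample(((0.40, 0.45), (0.42, 0.30)), "right", "grab"),
]
balanced = balance_handedness(data)
print(handedness_counts(data), handedness_counts(balanced))
```

Mirroring only balances the label distribution; it cannot reproduce genuine differences in how left-handed users perform gestures, so elicitation studies with left-handed participants remain necessary.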

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AE | Autoencoder |
| AI | Artificial Intelligence |
| AJCP | Arm Joint Centre Positions |
| BCI | Brain–Computer Interface |
| BCI + VCV | Brain–Computer Interface Vigilance-Controlled Velocity |
| BETR-XP-LLM | Behaviour Tree Expansion with Large Language Models |
| BN | Bayesian Network |
| BTs | Behaviour Trees |
| CBAM | Convolutional Block Attention Module |
| CC | Creative Commons |
| CNN | Convolutional Neural Network |
| CNS | Central Nervous System |
| ConvLSTM | Convolutional Long Short-Term Memory |
| CPNs | Coloured Petri Nets |
| DOF | Degree of Freedom |
| DTW | Dynamic Time Warping |
| EEG | Electroencephalography |
| EM | Expectation–Estimation/Expectation–Maximisation |
| EMG | Electromyography |
| FDE | Final Displacement Error |
| fps | Frames per Second |
| F’SATI | French South African Institute of Technology |
| FSM | Finite State Machines |
| GMM | Gaussian Mixture Model |
| GMR | Gaussian Mixture Regression |
| HAR | Human Activity Recognition |
| HDD | Hard Disk Drive |
| HHIP | Human Handover Intention Prediction |
| HMDs | Head-Mounted Displays |
| HMM | Hidden Markov Model |
| HRCA | Human–Robot Collaboration Assembly |
| HSMM | Hidden Semi-Markov Model |
| I-DT | Identification using Dispersion Threshold |
| IK | Inverse Kinematics |
| ILM | Intention-Aware Linear Model |
| IMUs | Inertial Measurement Units |
| IRL | Inverse Reinforcement Learning |
| KNN | K-Nearest Neighbours |
| LLM | Large Language Model |
| LSTM | Long Short-Term Memory |
| mAP | Mean Average Precision |
| MDPs | Markov Decision Processes |
| MIF | Mutable Intention Filter |
| ML | Machine Learning |
| MPJPE | Mean Per Joint Position Error |
| ms | Milliseconds |
| NN | Neural Network |
| OCRA | Ontology for Collaborative Robotics and Adaptation |
| OWL | Web Ontology Language |
| PAM | Partitioning Around Medoids |
| PCA | Principal Component Analysis |
| PCCR | Pupil Centre-Corneal Reflection |
| PDMP | Probabilistic Dynamic Movement Primitive |
| PNS | Peripheral Nervous System |
| PP | Palm Position |
| RCC8 | Region Connection Calculus 8 |
| RL | Reinforcement Learning |
| RNN | Recurrent Neural Network |
| Seq-VAE | Sequential Variational Autoencoder |
| SNR | Signal-to-Noise Ratio |
| SRLs | Supernumerary Robotic Limbs |
| SSVEP | Steady-State Visual Evoked Potential |
| ST-GCN | Spatial–Temporal Graph Convolutional Networks |
| SVM | Support Vector Machine |
| TOH | Tower of Hanoi |
| UOLA | Unsupervised Online Learning Algorithm |
| VR | Virtual Reality |

References

- Matheson, E.; Minto, R.; Zampieri, E.G.; Faccio, M.; Rosati, G. Human–robot collaboration in manufacturing applications: A review. Robotics 2019, 8, 100.

- Semeraro, F.; Griffiths, A.; Cangelosi, A. Human–robot collaboration and machine learning: A systematic review of recent research. Robot. Comput.-Integr. Manuf. 2023, 79, 102432.

- Arents, J.; Abolins, V.; Judvaitis, J.; Vismanis, O.; Oraby, A.; Ozols, K. Human-Robot Collaboration Trends and Safety Aspects: A Systematic Review. J. Sens. Actuator Netw. 2021, 10, 48.

- Zhang, Y.; Doyle, T. Integrating intention-based systems in human-robot interaction: A scoping review of sensors, algorithms, and trust. Front. Robot. AI 2023, 10, 1233328.

- Schmid, A.J.; Weede, O.; Worn, H. Proactive robot task selection given a human intention estimate. In Proceedings of the RO-MAN 2007-The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Republic of Korea, 26–29 August 2007; pp. 726–731.

- Khan, F.; Asif, S.; Webb, P. Communication components for human intention prediction–a survey. In Proceedings of the 14th International Conference on Applied Human Factors and Ergonomics (AHFE 2023), San Francisco, CA, USA, 20–24 July 2023.

- Hoffman, G.; Bhattacharjee, T.; Nikolaidis, S. Inferring human intent and predicting human action in human–robot collaboration. Annu. Rev. Control Robot. Auton. Syst. 2024, 7, 73–95.

- Lin, H.-I.; Nguyen, X.-A.; Chen, W.-K. Active intention inference for robot-human collaboration. Int. J. Comput. Methods Exp. Meas. 2018, 6, 772–784.

- Liu, C.; Hamrick, J.; Fisac, J.; Dragan, A.; Hedrick, J.; Sastry, S.; Griffiths, T. Goal Inference Improves Objective and Perceived Performance in Human-Robot Collaboration. arXiv 2018, arXiv:1802.01780.

- Olivares-Alarcos, A.; Foix, S.; Alenya, G. On inferring intentions in shared tasks for industrial collaborative robots. Electronics 2019, 8, 1306.

- Zhang, Y.; Ding, K.; Hui, J.; Lv, J.; Zhou, X.; Zheng, P. Human-object integrated assembly intention recognition for context-aware human-robot collaborative assembly. Adv. Eng. Inform. 2022, 54, 101792.

- Johannsmeier, L.; Haddadin, S. A hierarchical human-robot interaction-planning framework for task allocation in collaborative industrial assembly processes. IEEE Robot. Autom. Lett. 2016, 2, 41–48.

- Mohammadi Amin, F.; Rezayati, M.; van de Venn, H.W.; Karimpour, H. A mixed-perception approach for safe human–robot collaboration in industrial automation. Sensors 2020, 20, 6347.

- Zhang, X.; Tian, S.; Liang, X.; Zheng, M.; Behdad, S. Early Prediction of Human Intention for Human–Robot Collaboration Using Transformer Network. J. Comput. Inf. Sci. Eng. 2024, 24, 051003.

- Gomez Cubero, C.; Rehm, M. Intention recognition in human robot interaction based on eye tracking. In Proceedings of the IFIP Conference on Human-Computer Interaction, Bari, Italy, 30 August–3 September 2021; pp. 428–437.

- Nicora, M.L.; Ambrosetti, R.; Wiens, G.J.; Fassi, I. Human–robot collaboration in smart manufacturing: Robot reactive behavior intelligence. J. Manuf. Sci. Eng. 2021, 143, 031009.

- Van Den Broek, M.K.; Moeslund, T.B. Ergonomic adaptation of robotic movements in human-robot collaboration. In Proceedings of the Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 499–501.

- Gildert, N.; Millard, A.G.; Pomfret, A.; Timmis, J. The Need for Combining Implicit and Explicit Communication in Cooperative Robotic Systems. Front. Robot. AI 2018, 5, 65.

- Che, Y.; Okamura, A.M.; Sadigh, D. Efficient and trustworthy social navigation via explicit and implicit robot–human communication. IEEE Trans. Robot. 2020, 36, 692–707.

- Baptista, J.; Castro, A.; Gomes, M.; Amaral, P.; Santos, V.; Silva, F.; Oliveira, M. Human–Robot Collaborative Manufacturing Cell with Learning-Based Interaction Abilities. Robotics 2024, 13, 107.

- Knepper, R.A.; Mavrogiannis, C.I.; Proft, J.; Liang, C. Implicit communication in a joint action. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 283–292.

- Bogue, R. Sensors for robotic perception. Part one: Human interaction and intentions. Ind. Robot 2015, 42, 386–391.

- Safeea, M.; Neto, P.; Béarée, R. A quest towards safe human robot collaboration. In Proceedings of the Towards Autonomous Robotic Systems: 20th Annual Conference, TAROS 2019, London, UK, 3–5 July 2019; pp. 493–495.

- Hoskins, G.O.; Padayachee, J.; Bright, G. Human-robot interaction: The safety challenge (an integrated framework for human safety). In Proceedings of the 2019 Southern African Universities Power Engineering Conference/Robotics and Mechatronics/Pattern Recognition Association of South Africa (SAUPEC/RobMech/PRASA), Bloemfontein, South Africa, 28–30 January 2019; pp. 74–79.

- Anand, G.; Rahul, E.; Bhavani, R.R. A sensor framework for human-robot collaboration in industrial robot work-cell. In Proceedings of the 2017 International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kerala, India, 6–7 July 2017; pp. 715–720.

- Mia, M.R.; Shuford, J. Exploring the Synergy of Artificial Intelligence and Robotics in Industry 4.0 Applications. J. Artif. Intell. Gen. Sci. 2024, 1.

- Pereira, L.M. State-of-the-art of intention recognition and its use in decision making. AI Commun. 2013, 26, 237–246.

- Rozo, L.; Silvério, J.; Calinon, S.; Caldwell, D.G. Exploiting interaction dynamics for learning collaborative robot behaviors. In Proceedings of the 2016 AAAI International Joint Conference on Artificial Intelligence: Interactive Machine Learning Workshop (IJCAI), New York, NY, USA, 9–11 July 2016.

- Görür, O.C.; Rosman, B.; Sivrikaya, F.; Albayrak, S. Social cobots: Anticipatory decision-making for collaborative robots incorporating unexpected human behaviors. In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 398–406.

- Görür, O.C.; Rosman, B.; Sivrikaya, F.; Albayrak, S. FABRIC: A framework for the design and evaluation of collaborative robots with extended human adaptation. ACM Trans. Hum.-Robot Interact. 2023, 12, 1–54.

- Cipriani, G.; Bottin, M.; Rosati, G. Applications of learning algorithms to industrial robotics. In Proceedings of the International Conference of IFToMM ITALY, Online, 9–11 September 2020; pp. 260–268.

- Ding, H.; Schipper, M.; Matthias, B. Collaborative behavior design of industrial robots for multiple human-robot collaboration. In Proceedings of the IEEE ISR 2013, Seoul, Republic of Korea, 24–26 October 2013; pp. 1–6.

- Abraham, A. Rule-Based expert systems. In Handbook of Measuring System Design; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2005.

- Wang, W.; Li, R.; Chen, Y.; Jia, Y. Human intention prediction in human-robot collaborative tasks. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 279–280.

- Zhao, X.; He, Y.; Chen, X.; Liu, Z. Human–robot collaborative assembly based on eye-hand and a finite state machine in a virtual environment. Appl. Sci. 2021, 11, 5754.

- Styrud, J.; Iovino, M.; Norrlöf, M.; Björkman, M.; Smith, C. Automatic Behavior Tree Expansion with LLMs for Robotic Manipulation. arXiv 2024, arXiv:2409.13356.

- Olivares-Alarcos, A.; Foix, S.; Borgo, S.; Alenyà, G. OCRA–An ontology for collaborative robotics and adaptation. Comput. Ind. 2022, 138, 103627.

- Akkaladevi, S.C.; Plasch, M.; Hofmann, M.; Pichler, A. Semantic knowledge based reasoning framework for human robot collaboration. Procedia CIRP 2021, 97, 373–378.

- Zou, R.; Liu, Y.; Li, Y.; Chu, G.; Zhao, J.; Cai, H. A Novel Human Intention Prediction Approach Based on Fuzzy Rules through Wearable Sensing in Human–Robot Handover. Biomimetics 2023, 8, 358.

- Cao, C.; Yang, C.; Zhang, R.; Li, S. Discovering intrinsic spatial-temporal logic rules to explain human actions. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2023; Volume 36, pp. 67948–67959.

- Llorens-Bonilla, B.; Asada, H.H. Control and coordination of supernumerary robotic limbs based on human motion detection and task petri net model. In Proceedings of the Dynamic Systems and Control Conference, Palo Alto, CA, USA, 21–23 October 2013; p. V002T027A006.

- Bdiwi, M.; Al Naser, I.; Halim, J.; Bauer, S.; Eichler, P.; Ihlenfeldt, S. Towards Safety 4.0: A novel approach for flexible human-robot-interaction based on safety-related dynamic finite-state machine with multilayer operation modes. Front. Robot. AI 2022, 9, 1002226.

- Awais, M.; Henrich, D. Human-robot collaboration by intention recognition using probabilistic state machines. In Proceedings of the 19th International Workshop on Robotics in Alpe-Adria-Danube Region (RAAD 2010), Budapest, Hungary, 24–26 June 2010; pp. 75–80.

- Iodice, F.; De Momi, E.; Ajoudani, A. Intelligent Framework for Human-Robot Collaboration: Safety, Dynamic Ergonomics, and Adaptive Decision-Making. arXiv 2025, arXiv:2503.07901.

- Colledanchise, M.; Ögren, P. Behavior Trees in Robotics and AI: An Introduction; CRC Press: Boca Raton, FL, USA, 2018.

- Palm, R.; Chadalavada, R.T.; Lilienthal, A. Fuzzy Modeling and Control for Intention Recognition in Human-robot Systems. In Proceedings of the 8th International Joint Conference on Computational Intelligence, Porto, Portugal, 9–11 November 2016.

- Nawaz, F.; Peng, S.; Lindemann, L.; Figueroa, N.; Matni, N. Reactive temporal logic-based planning and control for interactive robotic tasks. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14–18 October 2024; pp. 12108–12115.

- Wu, B.; Hu, B.; Lin, H. A learning based optimal human robot collaboration with linear temporal logic constraints. arXiv 2017, arXiv:1706.00007.

- Zurawski, R.; Zhou, M. Petri nets and industrial applications: A tutorial. IEEE Trans. Ind. Electron. 1994, 41, 567–583.

- Ebert, S. A model-driven approach for cobotic cells based on Petri nets. In Proceedings of the 23rd ACM/IEEE International Conference on Model Driven Engineering Languages and Systems: Companion Proceedings, Virtual, 16–23 October 2020; pp. 1–6.

- Hernandez-Cruz, V.; Zhang, X.; Youcef-Toumi, K. Bayesian intention for enhanced human robot collaboration. arXiv 2024, arXiv:2410.00302.

- Huang, Z.; Mun, Y.-J.; Li, X.; Xie, Y.; Zhong, N.; Liang, W.; Geng, J.; Chen, T.; Driggs-Campbell, K. Hierarchical intention tracking for robust human-robot collaboration in industrial assembly tasks. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 9821–9828.

- Qu, J.; Li, Y.; Liu, C.; Wang, W.; Fu, W. Prediction of Assembly Intent for Human-Robot Collaboration Based on Video Analytics and Hidden Markov Model. Comput. Mater. Contin. 2025, 84, 3787–3810.

- Luo, R.C.; Mai, L. Human intention inference and on-line human hand motion prediction for human-robot collaboration. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 5958–5964.

- Lyu, J.; Ruppel, P.; Hendrich, N.; Li, S.; Görner, M.; Zhang, J. Efficient and collision-free human–robot collaboration based on intention and trajectory prediction. IEEE Trans. Cogn. Dev. Syst. 2022, 15, 1853–1863.

- Liu, T.; Wang, J.; Meng, M.Q.H. Evolving hidden Markov model based human intention learning and inference. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 206–211.

- Luo, R.; Hayne, R.; Berenson, D. Unsupervised early prediction of human reaching for human–robot collaboration in shared workspaces. Auton. Robot. 2018, 42, 631–648.

- Vinanzi, S.; Goerick, C.; Cangelosi, A. Mindreading for robots: Predicting intentions via dynamical clustering of human postures. In Proceedings of the 2019 Joint IEEE 9th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), Oslo, Norway, 19–22 August 2019; pp. 272–277.

- Xiao, S.; Wang, Z.; Folkesson, J. Unsupervised robot learning to predict person motion. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 691–696.

- Zhang, X.; Yi, D.; Behdad, S.; Saxena, S. Unsupervised Human Activity Recognition Learning for Disassembly Tasks. IEEE Trans. Ind. Inform. 2023, 20, 785–794.

- Fruggiero, F.; Lambiase, A.; Panagou, S.; Sabattini, L. Cognitive human modeling in collaborative robotics. Procedia Manuf. 2020, 51, 584–591.

- Ogenyi, U.E.; Liu, J.; Yang, C.; Ju, Z.; Liu, H. Physical human–robot collaboration: Robotic systems, learning methods, collaborative strategies, sensors, and actuators. IEEE Trans. Cybern. 2019, 51, 1888–1901.

- Obidat, O.; Parron, J.; Li, R.; Rodano, J.; Wang, W. Development of a teaching-learning-prediction-collaboration model for human-robot collaborative tasks. In Proceedings of the 2023 IEEE 13th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Qinhuangdao, China, 11–14 July 2023; pp. 728–733.

- Vinanzi, S.; Cangelosi, A.; Goerick, C. The collaborative mind: Intention reading and trust in human-robot interaction. iScience 2021, 24, 102130.

- Liu, R.; Chen, R.; Abuduweili, A.; Liu, C. Proactive human-robot co-assembly: Leveraging human intention prediction and robust safe control. In Proceedings of the 2023 IEEE Conference on Control Technology and Applications (CCTA), Bridgetown, Barbados, 16–18 August 2023; pp. 339–345.

- Rekik, K.; Gajjar, N.; Da Silva, J.; Müller, R. Predictive intention recognition using deep learning for collaborative assembly. In Proceedings of the 2024 10th International Conference on Control, Decision and Information Technologies (CoDIT), Valletta, Malta, 1–4 July 2024; pp. 1153–1158.

- Kamali Mohammadzadeh, A.; Alinezhad, E.; Masoud, S. Neural-Network-Driven Intention Recognition for Enhanced Human–Robot Interaction: A Virtual-Reality-Driven Approach. Machines 2025, 13, 414.

- Keshinro, B.; Seong, Y.; Yi, S. Deep Learning-based human activity recognition using RGB images in Human-robot collaboration. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2022, 66, 1548–1553.

- Maceira, M.; Olivares-Alarcos, A.; Alenya, G. Recurrent neural networks for inferring intentions in shared tasks for industrial collaborative robots. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 665–670.

- Kedia, K.; Bhardwaj, A.; Dan, P.; Choudhury, S. Interact: Transformer models for human intent prediction conditioned on robot actions. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 621–628.

- Dell’Oca, S.; Matteri, D.; Montini, E.; Cutrona, V.; Barut, Z.M.; Bettoni, A. Improving collaborative robotics: Insights on the impact of human intention prediction. In Proceedings of the International Workshop on Human-Friendly Robotics, Lugano, Switzerland, 30 September–1 October 2024; pp. 1–15.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Miyazawa, K.; Nagai, T. Survey on multimodal transformers for robots. TechRxiv 2023.

- Ding, P.; Zhang, J.; Zhang, P.; Lyu, Y. Large Language Model-powered Operator Intention Recognition for Human-Robot Collaboration. IFAC-PapersOnLine 2025, 59, 2280–2285.

- Liu, H.; Gamboa, H.; Schultz, T. Sensor-Based Human Activity and Behavior Research: Where Advanced Sensing and Recognition Technologies Meet. Sensors 2023, 23, 125.

- Xu, B.; Li, J.; Wong, Y.; Zhao, Q.; Kankanhalli, M.S. Interact as you intend: Intention-driven human-object interaction detection. IEEE Trans. Multimed. 2019, 22, 1423–1432.

- Schreiter, T.; Rudenko, A.; Rüppel, J.V.; Magnusson, M.; Lilienthal, A.J. Multimodal Interaction and Intention Communication for Industrial Robots. arXiv 2025, arXiv:2502.17971.

- Lei, Q.; Zhang, H.; Yang, Y.; He, Y.; Bai, Y.; Liu, S. An investigation of applications of hand gestures recognition in industrial robots. Int. J. Mech. Eng. Robot. Res. 2019, 8, 729–741.

- Darmawan, I.; Rusydiansyah, M.H.; Purnama, I.; Fatichah, C.; Purnomo, M.H. Hand Gesture Recognition for Collaborative Robots Using Lightweight Deep Learning in Real-Time Robotic Systems. arXiv 2025, arXiv:2507.10055.

- Shrinah, A.; Bahraini, M.S.; Khan, F.; Asif, S.; Lohse, N.; Eder, K. On the design of human-robot collaboration gestures. arXiv 2024, arXiv:2402.19058.

- Abdulghafor, R.; Turaev, S.; Ali, M.A. Body language analysis in healthcare: An overview. Healthcare 2022, 10, 1251.

- Kuo, C.-T.; Lin, J.-J.; Jen, K.-K.; Hsu, W.-L.; Wang, F.-C.; Tsao, T.-C.; Yen, J.-Y. Human posture transition-time detection based upon inertial measurement unit and long short-term memory neural networks. Biomimetics 2023, 8, 471.

- Kurniawan, W.C.; Liang, Y.W.; Okumura, H.; Fukuda, O. Design of human motion detection for non-verbal collaborative robot communication cue. Artif. Life Robot. 2025, 30, 12–20.

- Laplaza, J.; Moreno, F.; Sanfeliu, A. Enhancing robotic collaborative tasks through contextual human motion prediction and intention inference. Int. J. Soc. Robot. 2024, 17, 2077–2096.

- Belcamino, V.; Takase, M.; Kilina, M.; Carfí, A.; Mastrogiovanni, F.; Shimada, A.; Shimizu, S. Gaze-Based Intention Recognition for Human-Robot Collaboration. In Proceedings of the 2024 International Conference on Advanced Visual Interfaces, Genoa, Italy, 3–7 June 2024.

- Ban, S.; Lee, Y.J.; Yu, K.J.; Chang, J.W.; Kim, J.-H.; Yeo, W.-H. Persistent human–machine interfaces for robotic arm control via gaze and eye direction tracking. Adv. Intell. Syst. 2023, 5, 2200408.

- Losey, D.P.; McDonald, C.G.; Battaglia, E.; O’Malley, M.K. A review of intent detection, arbitration, and communication aspects of shared control for physical human–robot interaction. Appl. Mech. Rev. 2018, 70, 010804.

- Zhou, Y.; Tang, N.; Li, Z.; Sun, H. Methodology for Human–Robot Collaborative Assembly Based on Human Skill Imitation and Learning. Machines 2025, 13, 431.

- Savur, C.; Sahin, F. Survey on Physiological Computing in Human–Robot Collaboration. Machines 2023, 11, 536.

- Fazli, B.; Sajadi, S.S.; Jafari, A.H.; Garosi, E.; Hosseinzadeh, S.; Zakerian, S.A.; Azam, K. EEG-Based Evaluation of Mental Workload in a Simulated Industrial Human-Robot Interaction Task. Health Scope 2025, 14, e158096.

- Richter, B.; Putze, F.; Ivucic, G.; Brandt, M.; Schütze, C.; Reisenhofer, R.; Wrede, B.; Schultz, T. EEG correlates of distractions and hesitations in human–robot interaction: A LabLinking pilot study. Multimodal Technol. Interact. 2023, 7, 37.

- Lyu, J.; Maye, A.; Görner, M.; Ruppel, P.; Engel, A.K.; Zhang, J. Coordinating human-robot collaboration by EEG-based human intention prediction and vigilance control. Front. Neurorobot. 2022, 16, 1068274.

- Rani, P.; Sarkar, N.; Smith, C.A.; Kirby, L.D. Anxiety detecting robotic system–towards implicit human-robot collaboration. Robotica 2004, 22, 85–95.

- Peternel, L.; Tsagarakis, N.; Ajoudani, A. A human–robot co-manipulation approach based on human sensorimotor information. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 811–822.

- Marić, J.; Petrović, L.; Marković, I. Human Intention Recognition in Collaborative Environments using RGB-D Camera. In Proceedings of the 2023 46th MIPRO ICT and Electronics Convention (MIPRO), Opatija, Croatia, 22–26 May 2023; pp. 350–355.

- Khan, S.U.; Sultana, M.; Danish, S.; Gupta, D.; Alghamdi, N.S.; Woo, S.; Lee, D.-G.; Ahn, S. Multimodal feature fusion for human activity recognition using human centric temporal transformer. Eng. Appl. Artif. Intell. 2025, 160, 111844.

- Schlenoff, C.; Pietromartire, A.; Kootbally, Z.; Balakirsky, S.; Foufou, S. Ontology-based state representations for intention recognition in human–robot collaborative environments. Robot. Auton. Syst. 2013, 61, 1224–1234.

- Zhang, Z.; Peng, G.; Wang, W.; Chen, Y.; Jia, Y.; Liu, S. Prediction-Based Human-Robot Collaboration in Assembly Tasks Using a Learning from Demonstration Model. Sensors 2022, 22, 4279.

- Huang, Z.; Mun, Y.-J.; Li, X.; Xie, Y.; Zhong, N.; Liang, W.; Geng, J.; Chen, T.; Driggs-Campbell, K. Hierarchical intention tracking for robust human-robot collaboration in industrial assembly tasks. arXiv 2022, arXiv:2203.09063.

- Nie, Y.; Ma, X. Gaze Based Implicit Intention Inference with Historical Information of Visual Attention for Human-Robot Interaction. In Intelligent Robotics and Applications; Springer: Cham, Switzerland, 2021; pp. 293–303.

- Zhou, J.; Su, X.; Fu, W.; Lv, Y.; Liu, B. Enhancing intention prediction and interpretability in service robots with LLM and KG. Sci. Rep. 2024, 14, 26999.

- Xing, X.; Maqsood, K.; Zeng, C.; Yang, C.; Yuan, S.; Li, Y. Dynamic motion primitives-based trajectory learning for physical human–robot interaction force control. IEEE Trans. Ind. Inform. 2023, 20, 1675–1686.

- Xing, X.; Burdet, E.; Si, W.; Yang, C.; Li, Y. Impedance learning for human-guided robots in contact with unknown environments. IEEE Trans. Robot. 2023, 39, 3705–3721.

| Model | Input Data | Sensing Device | Scenario | Key Features/Architecture | Performance | Limitations | Key Findings | Ref. |
|---|---|---|---|---|---|---|---|---|
| Finite State Machines (FSM) | Hand and eye gaze data. | Leap Motion sensor and Tobii Eye Tracker 4C. | 6-DOF robot assembling building blocks in a virtual setting. | FSM as a state transfer module. | 100% accuracy. Assembly time reduced by 57.6%. | Delays and stability issues. | Efficient eye–hand coordination and FSM. | [35] |
| Behaviour Trees (BTs) | Natural language instructions. | Azure Kinect camera (object detection). | ABB YuMi robot picking and placing cubes. | BT with a Large Language Model (LLM), GPT-4-1106. | 100% goal interpretation. | Scalability; uncertainty. | High goal interpretation accuracy. | [36] |
| Ontology-Based Rules | Hand pose and velocity measurements. | HTC Vive Tracker. | A human and a robot collaboratively fill a tray during an industrial kitting task. | Web Ontology Language 2 (OWL 2) reasoner for intention inference. | Maintains robustness in incongruent limit cases. | Decreased expressiveness of the computational formalisation. | Enhanced robotic assistance and safety via intention inference. | [37] |
| Semantic Knowledge-Based Rules | Perception data. | Camera. | Human–robot collaborative teaching in an industrial assembly task. | Grakn.AI for semantic knowledge representation. | Reduced training data requirements. | Event rules must be modelled manually. | Better management of novel situations. | [38] |
| Fuzzy Rules | Hand gestures. | Wearable data glove. | Human–robot handover. | Fuzzy-rule-based Fast Human Handover Intention Prediction (HHIP). | Average prediction accuracy of 99.6%. | Prediction accuracy is not yet 100%. | The method avoids visual occlusion and the associated safety risks. | [39] |
| Temporal Logic Rules | Skeletal data. | Azure Kinect DK camera. | Human–robot decelerator assembly. | Spatial-Temporal Graph Convolutional Networks (ST-GCN). | Top-1 accuracy of 40%; mAP of 96.89% at 54.37 fps. | Lower accuracy when assembly actions are recognised in isolation. | The framework reliably deduces agent roles with high probability. | [40] |
| Petri Nets | Human gestures. | Wearable Inertial Measurement Units (IMUs). | Aircraft assembly task using Supernumerary Robotic Limbs (SRLs). | Coloured Petri Nets (CPNs) with graphical notation and high-level programming capabilities. | Successful detection of 90% of nods. | Limited to simple, linearly classified gestures. | Minimal states and perfect resource management. | [41] |
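
The FSM entry describes the model in [35] only as a state transfer module driven by hand and gaze data. As a minimal sketch under stated assumptions, the Python below shows how such a module can map discrete gaze and hand events to interaction states. The state names, event names, and transition table are hypothetical and are not taken from [35].

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical interaction states for a gaze-and-hand assembly task (not from [35])."""
    IDLE = auto()
    OBJECT_SELECTED = auto()  # operator has fixated on a part
    GRASP_INTENDED = auto()   # hand is moving toward the fixated part
    ROBOT_ASSISTING = auto()  # robot executes the inferred action


# Hypothetical transition table: (current state, observed event) -> next state.
# Pairs that are not listed leave the state unchanged, so unexpected events are ignored.
TRANSITIONS = {
    (State.IDLE, "gaze_fixation"): State.OBJECT_SELECTED,
    (State.OBJECT_SELECTED, "gaze_lost"): State.IDLE,
    (State.OBJECT_SELECTED, "hand_approach"): State.GRASP_INTENDED,
    (State.GRASP_INTENDED, "hand_retreat"): State.OBJECT_SELECTED,
    (State.GRASP_INTENDED, "grasp_confirmed"): State.ROBOT_ASSISTING,
    (State.ROBOT_ASSISTING, "task_done"): State.IDLE,
}


def run_fsm(events, start=State.IDLE):
    """Feed discrete sensor events through the FSM and return every visited state."""
    state = start
    trace = [state]
    for event in events:
        state = TRANSITIONS.get((state, event), state)
        trace.append(state)
    return trace


trace = run_fsm(["gaze_fixation", "hand_approach", "grasp_confirmed", "task_done"])
print([s.name for s in trace])
```

An event that arrives out of order, such as `hand_approach` while the FSM is `IDLE`, leaves the state unchanged. This determinism makes the model easy to verify, but it is also why behaviour outside the table cannot be handled without adding states and transitions by hand.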

| Computational Model | Advantages | Disadvantages | Representative Application Scenarios | Potential Improvements | Ref. |
|---|---|---|---|---|---|
| FSM | Effective for discrete state control. Clear stages for optimisation. Reduces user burden. | Lacks direct incorporation of sensory feedback. Potential for delay/stability issues. | Collaborative assembly tasks. Virtual prototyping. Semi-automatic operation management. | Optimisation of interaction states. Incorporation of a feedback mechanism. | [35] |
| BTs | Transparent and readable. Enhanced reactivity. Strong structure and modularity. Amenable to formal verification. | Limited scalability. Difficulty with long-horizon planning. Challenges in handling missing information. Requires substantial engineering effort. | Collaborative object manipulation tasks (pick-and-place operations). | Automated and continuous policy updates. Eliminating reflective feedback. Simplified parameter selection. Robust resolution of ambiguous instructions. | [36] |
| Ontology-Based Rules | Higher state recognition accuracy. Key enabler for human–robot safety. | Challenge of non-convex objects. High ontology update overhead in dynamic environments. | Cooperative human–robot environments. Industrial collaborative assembly tasks. | Model more complex spatial relationships. Implement a system for failure determination and replanning. | [37] |
| Semantic Knowledge-Based Rules | Effective handling of novel situations. Knowledge portability across robots. | Requires manual rule modelling and user interaction. | Human–robot collaborative teaching. User-guided systems. | Support for episodic definition of novel events. Development of an action generator for task execution. | [38] |
| Fuzzy Rules | Faster intention prediction. Mitigates sensor-related safety threats. | Challenges with sensor fusion and prediction. | Human–robot handover. Object transfer. Robot motion adjustments. | Integrate multimodal sensor information to improve intention detection accuracy. | [39] |
| Temporal Logic Rules | Effective reactive behaviour specification. Superior long-term future action prediction. | Performance degrades with sub-optimal agents. Complex and exhaustive prediction search. | Human movement prediction in a collaborative task. | Introduce constraints on robot motions. Extensions for non-holonomic robots. | [40] |
| Petri Nets | Effective for modelling concurrent and deterministic processes. Excellent for managing parallel tasks. | Basic structure unsuitable for complex tasks. Incompatible with complex human uncertainties. | Specialised complex collaborative assembly tasks. | Increase sensor variety and use more sophisticated models. | [41] |
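
The fuzzy-rule approach in [39] predicts handover intention from data-glove readings. Its rule base is not reproduced here; instead, a minimal sketch illustrates the general mechanism, assuming two hypothetical inputs (hand-to-robot distance in metres and a normalised grip aperture), hand-picked membership breakpoints, and zero-order Sugeno (weighted-average) inference in place of whatever inference scheme HHIP actually uses.

```python
def ramp_down(x, a, b):
    """Membership that is 1 below a, 0 above b, and linear in between."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def ramp_up(x, a, b):
    """Complement of ramp_down: 0 below a, 1 above b."""
    return 1.0 - ramp_down(x, a, b)


def handover_intent(distance_m, grip_aperture):
    """Zero-order Sugeno inference returning a handover-intent degree in [0, 1].

    distance_m: hand-to-robot distance in metres (hypothetical input).
    grip_aperture: 0 = fully closed, 1 = fully open (hypothetical glove reading).
    The 0.2/0.6 m and 0.3/0.7 breakpoints are illustrative assumptions.
    """
    near = ramp_down(distance_m, 0.2, 0.6)
    far = 1.0 - near
    opened = ramp_up(grip_aperture, 0.3, 0.7)
    closed = 1.0 - opened

    # Each rule: (firing strength, crisp consequent). Fuzzy AND is modelled by min.
    rules = [
        (min(near, opened), 1.0),  # near AND open hand -> handover intended
        (far, 0.0),                # far hand -> no handover
        (closed, 0.0),             # closed grip -> still holding, no handover
    ]
    total = sum(w for w, _ in rules)
    if total == 0.0:
        return 0.0
    return sum(w * z for w, z in rules) / total


for d, g in [(0.1, 0.9), (0.4, 0.9), (0.8, 0.9), (0.1, 0.1)]:
    print(f"distance={d} m, aperture={g}: intent={handover_intent(d, g):.2f}")
```

Because the output varies smoothly between the rule regions, a downstream controller can act on a threshold of its choice rather than on a hard class boundary.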

| Model | Input Data | Sensing Device | Scenario | Key Features/Architecture | Performance | Limitations | Key Findings | Ref. |
|---|---|---|---|---|---|---|---|---|
| Bayesian Network (BN) | Head and hand orientation. Hand velocities. | Intel RealSense D455 depth camera. | Tabletop pick-and-place task involving a UR5 robot. | BN for modelling causal relationships between variables. | 85% accuracy; 36% precision; F1 score = 60%. | Exhibits temporary mispredictions. | Achieves real-time, smooth collision avoidance. | [51] |
| Particle Filter | 3D human wrist trajectories. | Intel RealSense RGB-D camera. | Assembly of Misumi waterproof E-Model crimp wire connectors. | Mutable Intention Filter (MIF), a particle filter variant. | Frame-wise accuracy of 90.4%. | Single-layer filter. | Zero assembly failures. Fast completion time. Shorter guided path. | [52] |
| Hidden Markov Model (HMM) | Video data of assembly action sequences. | Xiaomi 11 camera. | Reducer assembly process. | Integration with assembly task constraints. | Prediction accuracy of 90.6%. | Needs further optimisation. | Efficient feature extraction. | [53] |
| Probabilistic Dynamic Movement Primitive (PDMP) | 3D right-hand motion trajectories. | Microsoft Kinect V1. | Tabletop manipulation task. | Offline stage of PDMP construction. | Good adaptation and generalisation. | Temporal mismatches. | High similarity between predicted and observed hand motion trajectories. | [54] |
| Gaussian Mixture Model (GMM) | Human palm trajectory data. | PhaseSpace Impulse X2 motion-capture system. | Shared-workspace pick-and-place and assembly tasks. | GMM for estimating the human's intended target. | Accurate and robust estimates. | False classifications for closely spaced targets. | Generates shorter robot trajectories and faster task execution. | [55] |
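
GMM-based target estimation, as listed for [55], can be illustrated by treating each candidate target as one Gaussian component and computing posterior responsibilities for the hand state. The sketch below assumes a fixed isotropic GMM with one component per known target (not a model fitted to recorded palm data as in [55]), a shared standard deviation, and a constant-velocity extrapolation of the hand position; the target coordinates and parameter values are hypothetical.

```python
import math


def target_posterior(position, velocity, targets, sigma=0.1, horizon=0.5, priors=None):
    """Posterior P(target k | hand state) under an isotropic GMM centred on the targets.

    The hand position is extrapolated `horizon` seconds ahead with a constant-velocity
    model (an assumption). Each target is one Gaussian component with a shared standard
    deviation `sigma` in metres, so the normalising constants cancel. Priors must be > 0.
    """
    k = len(targets)
    if priors is None:
        priors = [1.0 / k] * k
    predicted = [p + horizon * v for p, v in zip(position, velocity)]

    # Log-domain component weights avoid underflow for distant targets.
    log_w = []
    for prior, mean in zip(priors, targets):
        sq_dist = sum((x - m) ** 2 for x, m in zip(predicted, mean))
        log_w.append(math.log(prior) - sq_dist / (2.0 * sigma ** 2))

    top = max(log_w)
    weights = [math.exp(lw - top) for lw in log_w]
    total = sum(weights)
    return [w / total for w in weights]


targets = [(0.4, 0.0), (0.0, 0.4)]  # hypothetical part locations (m)
print(target_posterior((0.0, 0.0), (0.6, 0.0), targets))  # moving toward target 0
print(target_posterior((0.0, 0.0), (0.0, 0.0), targets))  # stationary: ambiguous
```

The stationary case returns equal probabilities because both targets are equidistant from the observed position; only the extrapolated trajectory separates them. This mirrors the trade-off listed for GMMs, where estimates based on the observed trajectory alone are poor early on, and it also shows why closely spaced targets are easily confused.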

| Computational Model | Advantages | Disadvantages | Representative Application Scenarios | Potential Improvements | Ref. |
|---|---|---|---|---|---|
| BN | High accuracy and speed. Clear causality between variables. Good interpretability. | Single-modality baselines take less time to predict. | Collaborative pick-and-place operations. | Automate obstacle sizing. Offline LLM integration for BN structure. | [51] |
| Particle Filter | Effective for tracking continuously changing intentions. | The base MIF supports only single-layer intention tracking. | Low-level intention tracking in collaborative assembly tasks. | Generalise from rule-based intentions to latent states. Explore time-varying intention transition settings. | [52] |
| HMM | Requires a smaller sample size for prediction. Effectively mitigates action recognition errors. | Cannot predict untrained sequences. | Collaborative assembly: predicting the operator’s next assembly intention. | Integration of assembly task constraints. Improve generalisation and real-time performance. | [53] |
| PDMP | Easy adaptation and generalisation. Better flexibility in unstructured environments. Low noise sensitivity. | High prediction error in very early movement stages. Temporal mismatches due to the use of an average duration. | Online inference of human intention in collaborative tasks (e.g., object manipulation). | Reduce path attractor differences. Reduce temporal mismatch. Generalise the framework to arm or whole-body motion prediction. | [54] |
| GMM | Estimates the target accurately early on using the predicted trajectory. Low computational load for few targets. | Poor initial target accuracy when based only on the observed trajectory. Not suited to trajectory shape regression. | Estimating human reaching goals in collaborative assembly tasks. | Fuse GMM input with additional information. | [55] |
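
The HMM row credits [53] with predicting the operator's next assembly intention while mitigating action-recognition errors. A minimal sketch of the underlying mechanism, normalised forward filtering followed by a one-step prediction, is shown below. The three-step task, the transition matrix, and the 80%-accurate recogniser are hypothetical, and the zeros in the transition matrix only loosely imitate the task constraints that [53] integrates.

```python
def normalise(vec):
    """Scale a non-negative vector so that it sums to 1."""
    total = sum(vec)
    if total == 0.0:
        raise ValueError("observation sequence has zero probability under the model")
    return [v / total for v in vec]


def forward_filter(observations, start, trans, emit):
    """Normalised HMM forward pass returning P(current step | observations so far)."""
    n = len(start)
    belief = normalise([start[j] * emit[j][observations[0]] for j in range(n)])
    for obs in observations[1:]:
        belief = normalise([
            emit[j][obs] * sum(belief[i] * trans[i][j] for i in range(n))
            for j in range(n)
        ])
    return belief


def predict_next(belief, trans):
    """One-step-ahead distribution over the operator's next assembly step."""
    n = len(belief)
    return [sum(belief[i] * trans[i][j] for i in range(n)) for j in range(n)]


# Hypothetical 3-step task: 0 = fit housing, 1 = insert gear, 2 = fasten cover.
# Zeros in TRANS encode the (assumed) constraint that steps cannot be skipped.
START = [1.0, 0.0, 0.0]
TRANS = [
    [0.6, 0.4, 0.0],
    [0.0, 0.6, 0.4],
    [0.0, 0.0, 1.0],
]
# Hypothetical action recogniser that reports the true step 80% of the time.
EMIT = [[0.8 if o == s else 0.1 for o in range(3)] for s in range(3)]

belief = forward_filter([0, 0, 1], START, TRANS, EMIT)
print("current step:", [round(b, 3) for b in belief])
print("next step:   ", [round(p, 3) for p in predict_next(belief, TRANS)])
```

A spurious "fasten cover" label immediately after the first step (observations `[0, 2]`) receives zero posterior mass, because the transition matrix makes that jump impossible. This is one way an HMM can absorb recognition errors; the same structure also explains the listed drawback that sequences never seen in training cannot be predicted.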

| Model | Input Data | Sensing Device | Scenario | Key Features/Architecture | Performance | Limitations | Key Findings | Ref. |
|---|---|---|---|---|---|---|---|---|
| K-Nearest Neighbours (KNN) | Labelled force/torque signals. | ATI Multi-Axis Force/Torque sensor Mini40-Si-20-1. | Car emblem polishing: the robot holds the object while the human polishes it. | KNN with Dynamic Time Warping (DTW). | F1 score = 98.14%; inference time of 0.85 s. | Bias towards the “move” intent. | Validation showed evidence of user adaptation. | [10] |
| Unsupervised Learning (online clustering) | Unlabelled human reaching trajectories. | VICON system. | Predicting reaching motions for robot avoidance. | Two-layer Gaussian Mixture Models (GMMs) with online unsupervised learning. | 99.0% (simple tasks); 93.0% (realistic scenarios). | Reduced early prediction accuracy in complex settings. | Faster decision-making for human motion inference. | [57] |
| X-means Clustering | Human skeletal data. | iCub robot eye cameras. | Block-building game with the iCub robot. | X-means clustering + Hidden Semi-Markov Model (HSMM). | 100% accuracy at ~57.5% task completion; 4.49 s latency. | Relies solely on skeletal input data. | The approach eliminates the need for handcrafted plan libraries. | [58] |
| Partitioning Around Medoids (PAM) | Short trajectories of moving people. | LIDAR or RGB-D camera. | Predicting human motion for interference avoidance. | Pre-trained SVM + PAM clustering. | 70% prediction accuracy. | Manual selection of the number of prototypes. Small dataset. | Prediction accuracy was demonstrated in a lab environment. | [59] |
| Sequential Variational Autoencoder (Seq-VAE) | Unlabelled video frames of human poses. | Digital camera. | Hard disk drive disassembly. | Seq-VAE + Hidden Markov Model (HMM). | 91.52% recognition accuracy; reduced annotation effort. | Limited robustness to real-world product variability. | Successfully identifies continuous complex activities. | [60] |
| Inverse Reinforcement Learning (IRL) | Images of human gestures and object attributes. | Kinect camera. | Coffee-making and pick-and-place tasks. | IRL in Markov Decision Processes. | Derived globally optimal policies. Outperformed frequency-based optimisation. | Insufficient demonstration data. | The robot predicts successive actions and suggests support. | [8] |
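
The KNN entry for [10] pairs nearest-neighbour voting with Dynamic Time Warping so that force profiles of different lengths and speeds can be compared. The sketch below shows that combination in its simplest form. It assumes univariate force-magnitude windows (whereas [10] uses multi-axis force/torque signals), an absolute-difference local cost, no warping-window constraint, k = 3, and hypothetical "move"/"hold" training data.

```python
from collections import Counter


def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sequences (absolute-difference cost)."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible predecessor cells.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def knn_dtw_predict(query, training_set, k=3):
    """Majority vote among the k labelled series closest to `query` under DTW."""
    ranked = sorted(training_set, key=lambda item: dtw_distance(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical force-magnitude windows (N) of different lengths with intent labels.
TRAIN = [
    ([0.0, 1.0, 2.0, 3.0, 4.0], "move"),
    ([0.0, 0.0, 1.0, 2.0, 3.0, 4.0], "move"),
    ([0.0, 1.0, 1.0, 2.0, 3.0, 4.0, 4.0], "move"),
    ([0.1, 0.0, 0.1, 0.0], "hold"),
    ([0.0, 0.1, 0.0, 0.1, 0.0], "hold"),
    ([0.05, 0.05, 0.0], "hold"),
]

print(knn_dtw_predict([0.0, 0.5, 1.5, 2.5, 3.5, 4.0], TRAIN))
print(knn_dtw_predict([0.0, 0.1, 0.05, 0.0], TRAIN))
```

Each DTW comparison costs O(n·m), and every query is compared against the entire training set. This is consistent with the slow, computationally intensive inference listed for KNN.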

| Computational Model | Advantages | Disadvantages | Representative Application Scenarios | Potential Improvements | Ref. |
|---|---|---|---|---|---|
| KNN | Conceptual and algorithmic simplicity. Good performance in time series classification. | Slow inference. Computationally intensive. Cannot handle non-sequential data. | Inferring intentions during physical collaborative tasks via time series classification. | Incorporate diverse contextual data. | [10] |
| Online Clustering | No manual labelling required. Models are built on the fly. Reduced influence of noisy motions. | Prediction performance degrades in challenging setups. | Industrial manipulation tasks for early prediction of human intent. | Explore ways to generate smoother predicted trajectories. Explore fast motion planning algorithms for the robot. | [57] |
| X-means Clustering | Autonomously determines the optimal number of clusters. | Cannot predict untrained sequences. | Low-level clustering of human postures in collaborative tasks. | Integration with new data sources. | [58] |
| PAM | Minimises the sum of dissimilarities between items and their medoids. | Prototypes are selected manually through trial and error. | Unsupervised learning of prototypical motion patterns in industrial settings. | Automate the selection of the number of prototypes. Incorporate cluster statistics. | [59] |
| Seq-VAE | Reduces manual annotation needs. Latent space clearly separates actions. Captures detailed sub-actions effectively. Distinguishes new actions without retraining. | Requires hyperparameter tuning. Higher Mean Square Error (MSE) for frame reconstruction. | Unsupervised human activity recognition in disassembly tasks. | Integrate advanced self-supervised learning methods. | [60] |
| IRL | Effectively discovers the optimal reward function. Significantly reduces learning time. | Ineffective when there are gesture recognition errors or insufficient demonstration data. | Pick-and-place collaborative tasks. | Consider more actions to make the collaboration more versatile. | [8] |
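
[59] clusters short human trajectories with Partitioning Around Medoids and notes that the number of prototypes had to be chosen by hand; the sketch below likewise fixes k manually. It implements the classic PAM BUILD and SWAP phases in plain Python. The trajectory dissimilarity (mean point-wise Euclidean distance between equal-length, already resampled 2-D trajectories) and the toy data are assumptions, not details of [59].

```python
import math


def traj_distance(a, b):
    """Mean point-wise Euclidean distance between two equal-length 2-D trajectories."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def total_cost(dist, medoids):
    """Sum of each item's dissimilarity to its closest medoid."""
    return sum(min(dist[i][m] for m in medoids) for i in range(len(dist)))


def pam(dist, k):
    """Partitioning Around Medoids: greedy BUILD, then SWAP until no exchange helps."""
    n = len(dist)
    medoids = []
    for _ in range(k):  # BUILD: add the item that lowers the total cost the most
        best = min((c for c in range(n) if c not in medoids),
                   key=lambda c: total_cost(dist, medoids + [c]))
        medoids.append(best)

    current = total_cost(dist, medoids)
    while True:  # SWAP: apply the best improving (medoid, non-medoid) exchange
        best_set, best_cost = None, current
        for slot in range(k):
            for cand in range(n):
                if cand in medoids:
                    continue
                trial = medoids[:slot] + [cand] + medoids[slot + 1:]
                cost = total_cost(dist, trial)
                if cost < best_cost:
                    best_set, best_cost = trial, cost
        if best_set is None:
            break
        medoids, current = best_set, best_cost

    labels = [min(range(k), key=lambda j: dist[i][medoids[j]]) for i in range(n)]
    return medoids, labels


# Hypothetical trajectories: three heading right, two heading up.
TRAJS = [
    [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
    [(0.0, 0.0), (1.0, 0.1), (2.0, 0.1)],
    [(0.0, 0.0), (1.0, -0.1), (2.0, 0.0)],
    [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0)],
    [(0.0, 0.0), (0.1, 1.0), (0.1, 2.0)],
]
DIST = [[traj_distance(a, b) for b in TRAJS] for a in TRAJS]
medoids, labels = pam(DIST, k=2)
print(medoids, labels)
```

Because the medoids are actual observed trajectories, each cluster prototype can be replayed directly as a representative motion, which is one reason medoid-based clustering suits trajectory prototypes.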

| Model | Input Data | Sensing Device | Scenario | Key Features/Architecture | Performance | Limitations | Key Findings | Ref. |
|---|---|---|---|---|---|---|---|---|
| Long Short-Term Memory (LSTM) | Time series 3D tensor of human keypoint coordinates + depth values. | 3D Azure Kinect cameras. | Human hand destination prediction in microcontroller housing assembly. | Captures long-term dependencies. | ~89% validation accuracy. | Limited to predefined locations. | Faster and stable convergence. | [66] |
| Convolutional Neural Network (CNN) | High-resolution, multimodal data of human motion and gestures. | HTC Vive Trackers and Pro Eye Arena system. | Completing a series of predefined activities in a virtual manufacturing environment. | Extracts local spatial patterns and fine-grained motion details. | F1 score = 0.95; response time = 0.81 s. | Difficulty capturing complex gait patterns. | Achieved near-perfect overall accuracy. | [67] |
| Convolutional LSTM (ConvLSTM) | RGB images resized to 64 × 64 pixels and normalised. | Stationary Kinect camera. | Predicting human intentions in HRC scenarios such as furniture assembly or table tennis. | Combines a CNN for spatial feature extraction with an LSTM for temporal modelling. | 74.11% accuracy. | Accuracy too low for practical deployment. | ConvLSTM clearly outperformed alternative methods. | [68] |
| Recurrent Neural Network (RNN) | 6D force/torque sensor data from shared object manipulation. | ATI Multi-Axis Force/Torque sensor. | Classifying intentions in industrial polishing/collaborative object handling. | Processes sequential force sensor measurements; softmax classification. | F1 = 0.937; response time = 0.103 s. | Specific to force-based tasks; may not generalise to vision-based scenarios. | Faster than window-based methods. | [69] |
| Transformer | Human poses. | OptiTrack motion capture system. | Close-proximity human–robot tasks such as cabinet pick, cart place, and tabletop manipulation. | Two-stage training: human–human and human–robot. | Achieved lower final displacement error (FDE) and better pose prediction at a 1 s horizon. | Limited environments per task. | Outperformed marginal models in human–robot interactions. | [70] |
| Neural Network (NN) | Contextual information and visual features. | Work-cell camera setup. | Turn-based Tower of Hanoi (TOH) game. | Multi-input NN. | Test accuracy above 95%. | Single use case. | Efficient, consistent, and predictable performance. | [71] |
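
The RNN row for [69] reports sequential processing of force/torque measurements with softmax classification and a response time shorter than window-based methods. A minimal sketch of why such a model can answer at every sample follows: the hidden state carries the history, so no window has to fill before a prediction is available. It is an untrained Elman RNN in plain Python with random placeholder weights, no biases, and three hypothetical intention classes; it is not the network of [69], which would be trained (e.g., by backpropagation through time) in a deep learning framework.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ElmanRNNClassifier:
    """Single-layer Elman RNN with a softmax read-out, updated one sensor sample at a time.

    Weights are random placeholders (no training loop is shown) and biases are omitted.
    """

    def __init__(self, n_in, n_hidden, n_classes, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.w_xh = init(n_hidden, n_in)       # input -> hidden
        self.w_hh = init(n_hidden, n_hidden)   # hidden -> hidden (recurrence)
        self.w_hy = init(n_classes, n_hidden)  # hidden -> class logits
        self.h = [0.0] * n_hidden

    def reset(self):
        """Clear the hidden state at the start of a new interaction."""
        self.h = [0.0] * len(self.h)

    def step(self, x):
        """Consume one sample and return class probabilities for the sequence so far."""
        self.h = [
            math.tanh(sum(w * xi for w, xi in zip(row_x, x))
                      + sum(w * hj for w, hj in zip(row_h, self.h)))
            for row_x, row_h in zip(self.w_xh, self.w_hh)
        ]
        logits = [sum(w * hj for w, hj in zip(row, self.h)) for row in self.w_hy]
        return softmax(logits)


model = ElmanRNNClassifier(n_in=6, n_hidden=8, n_classes=3)
# Hypothetical 6-D force/torque stream (Fx, Fy, Fz, Tx, Ty, Tz).
stream = [[0.1 * t, 0.0, 0.02 * t, 0.0, 0.0, 0.01 * t] for t in range(10)]
for sample in stream:
    probs = model.step(sample)
print("class probabilities after last sample:", [round(p, 3) for p in probs])
```

In a deployed system, a decision rule (for example, a confidence threshold on `probs`) would be evaluated after every `step` call, which is where the latency advantage over fixed windows comes from.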

| Computational Model | Advantages | Disadvantages | Representative Application Scenarios | Potential Improvements | Ref. |
|---|---|---|---|---|---|
| LSTM | Faster response time. Effectively captures long-term dependencies in sequential data. | Mispredictions due to partial hand occlusions. Prediction instability due to human hesitation. | Real-time human intention prediction in collaborative assembly tasks. | Incorporate a wider range of situations. Develop a task planning module. | [66] |
| CNN | Excellent for extracting local spatial features. Robust for multi-dimensional input data. | On its own, struggles with tasks that require an understanding of temporal dynamics. | Classification of seven core human activities in collaborative tasks. | Combine with other architectures to better capture long-range temporal dependencies. | [67] |
| ConvLSTM | High prediction accuracy. Effectively captures spatial and temporal relationships in video frames. | Longer training time. Limited to a few actions. | Industrial collaborative tasks. | Expand applications to more complex problems. Increase the number of action types. | [68] |
| RNN | Reduces decision latency. Flexible, reacting dynamically to changes in user intention. | Performance saturation. | Inferring operator intentions in a shared task from force sensor data. | Expand input modalities. | [69] |
| Transformer | Effectively tackles the dependency between human intent and robot actions. | Training requires large-scale paired human–robot interaction data. | Collaborative human–robot object manipulation. | Expand the dataset to cover a wider distribution of motions. | [70] |
| NN | Outperforms optimal logic in predicting human actions. Provides more stable performance. | Makes only single-point-in-time predictions. | Collaborative tasks for anticipating human moves. | Test in use cases with higher task variability. | [71] |
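
The Transformer listed for [70] is built around scaled dot-product attention, softmax(QKᵀ/√d_k)V, which lets each element of a pose sequence weigh every other element. The plain-Python sketch below shows only that core operation with an optional causal mask. It does not reproduce the two-stage human–human and human–robot training of [70]; the pose embeddings are hypothetical, and the learned query/key/value projections of a real Transformer are omitted (the raw inputs are used directly).

```python
import math


def softmax(scores):
    """Numerically stable softmax; entries of -inf receive zero weight."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v, causal=False):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors.

    With causal=True, query i may attend only to keys 0..i, so the pose at time i
    is encoded from past poses only (assumes len(q) == len(k)).
    """
    d_k = len(k[0])
    out = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        if causal:
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


# Three hypothetical pose embeddings (d_k = 2) used as queries, keys, and values.
poses = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
print(scaled_dot_product_attention(poses, poses, poses, causal=True))
```

Every query is scored against every key, so the cost grows quadratically with sequence length. Together with the many parameters in the omitted projections, this helps explain the listed need for large-scale paired human–robot training data.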

| Aspect | Rule-Based Models | Probabilistic Models | Machine Learning and Deep Learning Models |
|---|---|---|---|
| Core Principle | Explicit logic using predefined rules and symbolic reasoning. | Uncertainty modelling through probability distributions. | Data-driven learning through pattern extraction, including neural architectures. |
| Interpretability | Highly interpretable due to explicit rules and decision logic. | Moderately interpretable, though probabilistic dependencies may obscure reasoning. | Low, often considered a “black box”. |
| Adaptability | Low—limited to programmed rules; poor scalability to novel scenarios. | Moderate—adapts to uncertainty but relies on predefined probability structures. | High—learns dynamically from large datasets and adapts to changing human behaviours. |
| Data Requirement | Low—operates effectively with small or no datasets. | Medium—requires data to estimate probability distributions. | High—needs large, diverse datasets for training and generalisation. |
| Robustness to Uncertainty | Poor—fails when faced with unexpected or noisy human behaviour. | Strong—models behavioural variability effectively. | Very strong—handles noisy, multimodal sensory inputs robustly. |
| Computational Cost | Low—simple logical processing. | Medium—depends on the complexity of the algorithmic structures. | High—training and intention inference require intensive computation. |
| Suitability for Industrial Environments | Ideal for safety-critical systems requiring transparency. | Suitable where probabilistic uncertainty must be managed. | Suitable for adaptive, context-rich environments requiring predictive intelligence. |
| Weaknesses | Rigid; cannot generalise beyond predefined rules. | Requires accurate probabilistic modelling and assumptions. | Low explainability; potential for algorithmic bias. |

| Interaction Types | Description | Application Cases | Key Cues for Intention Recognition |
|---|---|---|---|
| Collaborative Assembly Tasks | Sequenced, interdependent tasks performed jointly by a human and a robot. | Part placement and alignment [52]. Multi-component assembly [40,53,66,68]. Complex and specialised assembly [41]. | Physical and contextual cues (hand trajectory, component type, and sequence of operations). |
| Object Manipulation and Handover | Transferring or collaboratively handling objects in a workspace. | Pick-and-place operations [8,36,51,55,69]. Handover prediction [39,57]. | Physical and contextual cues (hand position, gesture, and object location). |
| Shared Physical Tasks and Co-Manipulation | Humans and robots apply force to a shared object. | Polishing and inspection [10]. Co-manipulation [54,69,71]. | Physical cues (force/torque signals, motion dynamics). |
| Teaching and Guidance | A human instructs the robot and demonstrates the task. | Collaborative teaching [38]. Learning from demonstration [98]. | Physical cues (movement patterns, demonstration trajectories). |
| Cognitive and Simulation Tasks | Highly structured tasks that test cognitive frameworks. | Virtual prototyping [35,67]. | Physical, physiological, and contextual cues (gaze direction, cognitive state, and task states). |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kekana, M.; Du, S.; Steyn, N.; Benali, A.; Djerroud, H. A Review of Human Intention Recognition Frameworks in Industrial Collaborative Robotics. Robotics 2025, 14, 174. https://doi.org/10.3390/robotics14120174

Kekana M, Du S, Steyn N, Benali A, Djerroud H. A Review of Human Intention Recognition Frameworks in Industrial Collaborative Robotics. Robotics. 2025; 14(12):174. https://doi.org/10.3390/robotics14120174

Chicago/Turabian Style: Kekana, Mokone, Shengzhi Du, Nico Steyn, Abderraouf Benali, and Halim Djerroud. 2025. "A Review of Human Intention Recognition Frameworks in Industrial Collaborative Robotics" Robotics 14, no. 12: 174. https://doi.org/10.3390/robotics14120174

APA Style: Kekana, M., Du, S., Steyn, N., Benali, A., & Djerroud, H. (2025). A Review of Human Intention Recognition Frameworks in Industrial Collaborative Robotics. Robotics, 14(12), 174. https://doi.org/10.3390/robotics14120174