Enhancing Emergency Response: The Critical Role of Interface Design in Mining Emergency Robots

Abstract

1. Introduction

- RQ1: Which human factors most critically affect the design and operation of robotic interfaces in underground mine SAR missions?

- RQ2: How can interface design mitigate these challenges to improve operator performance and mission safety?

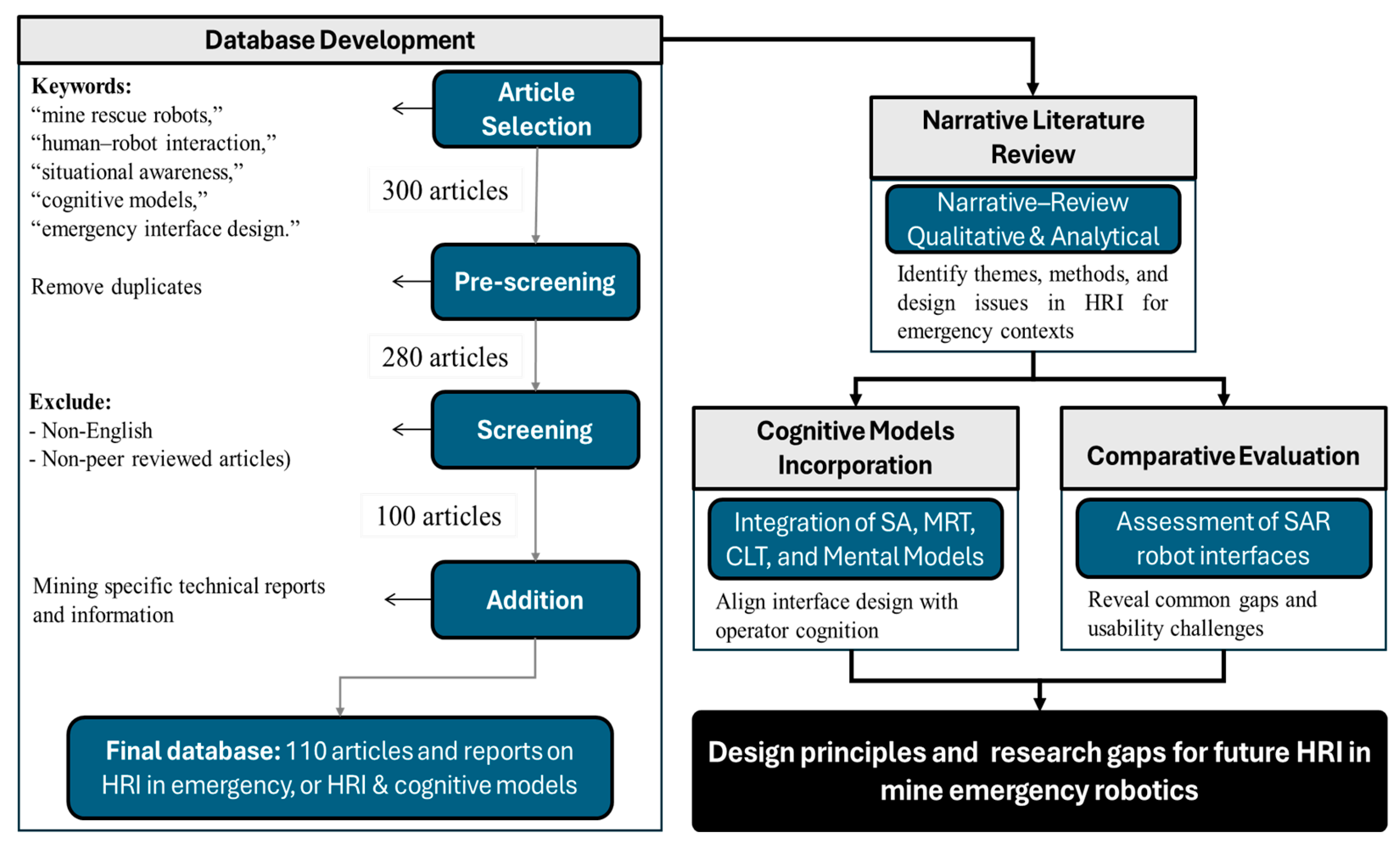

2. Methodology

2.1. Literature Search and Selection

- Records identified through database searching: ~300

- Duplicates removed and irrelevant records excluded: ~190

- Studies assessed for eligibility: ~110

- Studies included in final synthesis: 110 peer-reviewed journal and conference papers
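As a quick arithmetic check of the selection flow, the rounded counts can be run through a few lines of Python. The helper `prisma_flow` is hypothetical. The inputs are the approximate figures listed above, so the result is only as precise as those figures.

```python
def prisma_flow(identified: int, excluded: int) -> int:
    """Return how many records remain for eligibility assessment after screening."""
    if excluded > identified:
        raise ValueError("cannot exclude more records than were identified")
    return identified - excluded


# Rounded figures from Section 2.1 (reported with "~" in the text).
IDENTIFIED = 300
EXCLUDED = 190
INCLUDED = 110

assessed = prisma_flow(IDENTIFIED, EXCLUDED)
excluded_at_full_text = assessed - INCLUDED

print(f"assessed for eligibility: {assessed}")
print(f"excluded at full-text stage: {excluded_at_full_text}")
```

With these figures, 300 minus 190 leaves 110 records for eligibility assessment. Because 110 studies are included, the rounded numbers imply that no studies were excluded at the full-text stage.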

2.2. Data Analysis

2.3. Comparative Evaluation

3. Robotic Technologies in Mining: An Overview

Search and Rescue Robots in Mining Emergencies

4. HRI in Underground Mine Emergencies

5. Human Factors Challenges in SAR Interface Design

5.1. Situational Awareness (SA)

5.2. Cognitive Load

5.3. Trust and Transparency

5.4. Attention and Individual Differences

5.5. Stress and Fragility

6. Cognitive Models in Interface Design

6.1. Endsley’s Situational Awareness Model

6.2. Wickens’ Multiple Resource Theory

6.3. Cognitive Load Theory

6.4. Mental Models

6.5. Technology Acceptance Model

6.6. Ecological Interface Design

| Cognitive Model | Description | Importance in SAR | HRI Relevance |
|---|---|---|---|
| Endsley’s Situational Awareness (SA) | Defines SA as a three-tiered process: perception of environmental elements, comprehension of their meaning, and projection of their future status. | Operators need to monitor robot location, gas levels, victim status, and structural hazards, all in real time. | Improves operator awareness during interaction; essential for decision-making in dynamic environments. |
| Wickens’ Multiple Resource Theory (MRT) | Proposes that attention draws on separate pools of cognitive and sensory resources, such as visual-spatial, auditory-verbal, and manual-motor channels, so tasks using different resources interfere less than tasks competing for the same one. | Distributing information across different resources reduces overload and optimizes attention during multitasking, especially when the operator must control, monitor, and communicate simultaneously. | Helps avoid overload by using different sensory channels for information delivery. |
| Cognitive Load Theory (CLT) | Distinguishes intrinsic load (related to the task’s complexity), extraneous load (caused by poor design or presentation), and germane load (mental effort directed toward learning or schema construction). | Stress and complexity can impair memory and performance; simplicity boosts decision speed and accuracy. | Prevents performance degradation by reducing unnecessary cognitive effort in complex systems. |
| Mental Models (Norman) | The internal representations that users develop to understand and predict how a system behaves. | Users must quickly grasp system behavior, often under stress; mismatched expectations lead to errors. | Ensures intuitive interaction by aligning system behavior with user expectations. |
| Technology Acceptance Model (TAM) | Explains technology adoption through perceived usefulness (PU) and perceived ease of use (PEOU), which shape the behavioral intention to use a system; later extensions add factors such as trust. | Acceptance hinges on effectiveness, reliability, and ease of operation, especially under stress. | Promotes adoption by maximizing perceived usefulness and ease of use. |
| Ecological Interface Design (EID) | Embeds system constraints and affordances into the interface using abstraction hierarchies, enabling operators to directly perceive limits and possibilities rather than compute them mentally. | Under degraded sensing and time pressure, EID makes limits (gas, energy, communications, terrain) visible, speeding action and improving resilience. | Accelerates decisions by making constraints and affordances directly visible [83,84]. |
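The sketch below makes Endsley’s three levels concrete for the SAR setting described in the table. It maps a short window of methane readings onto three steps:

- perception: the latest value
- comprehension: classification against alarm thresholds
- projection: a least-squares trend extrapolated to the alarm threshold

The thresholds `WARN_PCT` and `ALARM_PCT`, the linear-trend assumption, and all names are illustrative assumptions. They are not regulatory limits or the authors’ method.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Illustrative methane thresholds in % by volume (assumptions, not regulatory limits).
WARN_PCT = 1.0
ALARM_PCT = 2.0


@dataclass
class SAState:
    perceived: float                   # Level 1 (perception): latest reading
    status: str                        # Level 2 (comprehension): what the reading means
    seconds_to_alarm: Optional[float]  # Level 3 (projection): time until ALARM_PCT, if rising


def linear_slope(times: Sequence[float], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against times."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    den = sum((t - mean_t) ** 2 for t in times)
    return num / den if den else 0.0


def assess(times: Sequence[float], ch4_pct: Sequence[float]) -> SAState:
    """Map a window of methane readings onto Endsley's three SA levels."""
    current = ch4_pct[-1]                  # Level 1: perceive the latest value
    if current >= ALARM_PCT:               # Level 2: interpret it against thresholds
        status = "ALARM"
    elif current >= WARN_PCT:
        status = "WARNING"
    else:
        status = "NORMAL"

    slope = linear_slope(times, ch4_pct)   # Level 3: project the trend forward
    eta: Optional[float]
    if current >= ALARM_PCT:
        eta = 0.0
    elif slope > 0:
        eta = (ALARM_PCT - current) / slope
    else:
        eta = None                         # flat or falling: no projected alarm
    return SAState(current, status, eta)


if __name__ == "__main__":
    t = [0, 10, 20, 30, 40]             # seconds
    ch4 = [0.6, 0.7, 0.8, 0.9, 1.0]     # rising about 0.01 % per second
    print(assess(t, ch4))
```

A linear trend is the simplest projection model. Under noisy sensing, a filtered estimate or a confidence band around the projected time would be a more defensible basis for an operator-facing cue.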

7. Interface Design Modalities and Technologies

8. Design Recommendations for Underground HRI Interfaces

- Layered “SA-first” Displays with Ecological Structure: Situational awareness is best supported by a central “mission pane” that fuses live video, map overlays, and hazard indicators, surrounded by compact widgets for system status (battery, communication, tether). Applying ecological interface design principles, such as making constraint boundaries visually legible, lets operators perceive safe vs. unsafe states without exhaustive scanning. This approach reduces extraneous cognitive load and supports higher-level projection [83].

- Multimodal Feedback to Reduce Visual Bottlenecks: Relying solely on visual channels in underground, low-visibility settings risks overloading the operator. Incorporating audio cues (e.g., rising tones for gas trends) and haptic signals (e.g., joystick vibration near obstacles) helps distribute key information across modalities. In shared-autonomy teleoperation, haptic feedback has been shown to improve both task performance and user satisfaction, enhancing situational awareness [115].

- Camera Strategy for Mitigating the “Soda-Straw” Effect: To avoid narrow, tunnel-vision views, interfaces should provide multiple simultaneous camera/LiDAR feeds (forward, rear, side) with stitched or panoramic views and optional immersive VR/AR modes for planning. Work in immersive teleoperation has shown improved spatial awareness and control in challenging environments [116].

- Latency-Aware Control with Predictive Aids: Under underground communication constraints, latency can degrade control and raise operator stress. Adding predictive displays, such as a “ghost” robot trajectory preview, enables the operator to anticipate the robot’s motion despite delays. A low-cost predictive display improved operator performance by ~20% under latency in teleoperation experiments [117]. More advanced approaches combine XR-based intention overlays and shared control to maintain operability under variable delays [118].

- Shared Autonomy with Transparent Handover: Autonomy modes offered to operators (e.g., obstacle-avoid, path-following, safe-stop) must be accompanied by clear, real-time explanations of the robot’s decisions to maintain trust and situational alignment. Learning-based shared autonomy methods dynamically adjust assistance based on operator intent and constraints [119], and reviews of mobile manipulators emphasize variable autonomy for reducing workload in hazardous tasks [120].

- Context-Sensitive, Workload-Aware Interfaces: Interfaces should default to minimal, essential displays and dynamically surface detailed panels or alarms when critical events occur (e.g., gas rise, fault). This adaptive design prevents information overload and helps operators focus. It aligns with calls for renewed user-centric teleoperation design to manage cognitive load [121].

- Sensor Fusion for Hazard Projection: Integrating gas sensors, thermal imagery, LiDAR, and inertial measurements into a unified dashboard with predictive cues enables operators to anticipate hazardous conditions. This aligns interface behavior with operator mental models and supports proactive planning. In teleoperation survey work, fused perception-control frameworks are recommended for complex environments [122].

- Audio & Communication as Core Interface Features: In subterranean contexts, video may degrade or fail, so robust audio and voice channels become primary information conduits. Interfaces should maintain duplex audio with noise suppression, map distinct tones to hazard classes, and provide short spoken alerts (“Low O2: Stop”). Teleoperation latency studies confirm that audio and haptic feedback mitigate cognitive load and stabilize performance under delay [123].
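The ecological-structure recommendation can be illustrated by classifying each constrained quantity by its distance to its constraint boundary. The display can then show a safe, marginal, or unsafe band instead of a raw number the operator must compare mentally. This is a simplified reading of EID and omits any abstraction-hierarchy analysis. The `Constraint` type, the margin widths, and every numeric limit below are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Band(Enum):
    SAFE = "safe"
    MARGINAL = "marginal"
    UNSAFE = "unsafe"


@dataclass(frozen=True)
class Constraint:
    name: str
    limit: float           # constraint boundary
    margin: float          # width of the marginal band on the safe side of the limit
    higher_is_worse: bool  # True for gas or tension, False for battery charge


def band(c: Constraint, value: float) -> Band:
    """Classify a reading by its headroom to the constraint boundary."""
    # Signed headroom: positive while the value is on the safe side of the limit.
    headroom = (c.limit - value) if c.higher_is_worse else (value - c.limit)
    if headroom <= 0:
        return Band.UNSAFE
    if headroom <= c.margin:
        return Band.MARGINAL
    return Band.SAFE


# Hypothetical limits, for illustration only.
CONSTRAINTS = [
    Constraint("battery_pct", limit=20.0, margin=15.0, higher_is_worse=False),
    Constraint("ch4_pct", limit=2.0, margin=1.0, higher_is_worse=True),
    Constraint("tether_tension_n", limit=400.0, margin=100.0, higher_is_worse=True),
]

if __name__ == "__main__":
    readings = {"battery_pct": 30.0, "ch4_pct": 0.4, "tether_tension_n": 420.0}
    for c in CONSTRAINTS:
        print(f"{c.name:>18}: {band(c, readings[c.name]).value}")
```

Each band would then drive a visual encoding such as a colored region on a gauge with the boundary drawn explicitly, so the operator perceives proximity to the limit rather than reading a number.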
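The multimodal recommendation can be sketched as two mapping functions:

- one converts the methane trend into a tone frequency, with higher pitch for a faster rise
- the other converts obstacle distance into a joystick vibration amplitude

The frequency range, distance range, saturation slope, and linear mappings are assumptions chosen for illustration. Driving an actual audio device or haptic actuator is hardware-specific and omitted.

```python
def clamp(x: float, lo: float, hi: float) -> float:
    """Limit x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def gas_trend_to_tone_hz(slope_pct_per_min: float,
                         base_hz: float = 440.0,
                         max_hz: float = 1760.0,
                         max_slope: float = 0.5) -> float:
    """Map a rising gas trend to pitch; flat or falling trends stay at base pitch.

    max_slope (% per minute) is an assumed saturation point, not a standard value.
    """
    frac = clamp(slope_pct_per_min / max_slope, 0.0, 1.0)
    return base_hz + frac * (max_hz - base_hz)


def obstacle_to_vibration(distance_m: float,
                          near_m: float = 0.3,
                          far_m: float = 1.5) -> float:
    """Return vibration amplitude in [0, 1]: 1 at or inside near_m, 0 at or beyond far_m."""
    frac = (far_m - distance_m) / (far_m - near_m)
    return clamp(frac, 0.0, 1.0)


if __name__ == "__main__":
    for slope in (-0.1, 0.0, 0.25, 1.0):
        print(f"slope {slope:+.2f} %/min -> {gas_trend_to_tone_hz(slope):.0f} Hz")
    for d in (2.0, 0.9, 0.2):
        print(f"obstacle {d:.1f} m -> vibration {obstacle_to_vibration(d):.2f}")
```

Routing the gas trend to the auditory channel and obstacle proximity to the manual-haptic channel follows the MRT idea of spreading concurrent information across resources instead of adding more visual widgets.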
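The “ghost” trajectory can be sketched by forward-simulating the robot from its last reported pose. The simulation uses the commands sent since that pose, which is the motion the delayed video has not yet shown. This sketch rests on four assumptions:

- a unicycle (differential-drive) kinematic model
- Euler integration
- one command per time step
- a known delay

It illustrates the predictive-display principle and is not the system evaluated in [117].

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Pose:
    x: float      # metres
    y: float      # metres
    theta: float  # heading, radians


def predict_ghost(last_pose: Pose,
                  pending_cmds: List[Tuple[float, float]],
                  dt: float) -> List[Pose]:
    """Euler-integrate unicycle kinematics over commands issued during the delay.

    last_pose: most recent pose reported by the robot (already stale by the delay).
    pending_cmds: (v [m/s], omega [rad/s]) commands sent since that pose, one per dt.
    Returns the predicted poses; the last one is the 'ghost' drawn on the display.
    """
    x, y, th = last_pose.x, last_pose.y, last_pose.theta
    path: List[Pose] = []
    for v, omega in pending_cmds:
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th += omega * dt
        path.append(Pose(x, y, th))
    return path


if __name__ == "__main__":
    # Assumed 0.5 s delay with commands at 10 Hz -> 5 pending commands.
    cmds = [(0.4, 0.0)] * 5
    ghost = predict_ghost(Pose(0.0, 0.0, 0.0), cmds, dt=0.1)
    print(f"ghost at x={ghost[-1].x:.2f} m, y={ghost[-1].y:.2f} m")
```

Drawing the last predicted pose as a translucent overlay on the video gives the operator a delay-compensated preview. Wheel slip on rubble would make the prediction drift, so the ghost would be re-anchored each time a fresh pose arrives.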
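Shared autonomy is often formalized as arbitration between the operator’s command and the autonomy’s command. The sketch below uses linear blending, u = α·u_robot + (1 − α)·u_human, with α fixed per mode. It returns a short human-readable reason with every command so the interface can show why authority shifted.

Linear blending is one common arbitration scheme chosen here for illustration, not the method of [119] or [120]. The mode names follow the examples in the text. The α values, the 0.5 m stop distance, and all function names are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Cmd = Tuple[float, float]  # (linear velocity m/s, angular velocity rad/s)

# Assumed share of authority given to the autonomy in each mode.
MODE_ALPHA: Dict[str, float] = {"manual": 0.0, "obstacle_avoid": 0.5, "path_follow": 0.8}
STOP_DISTANCE_M = 0.5  # assumed safe-stop threshold


@dataclass
class Arbitration:
    command: Cmd
    alpha: float
    reason: str  # displayed to the operator for transparency


def blend(u_human: Cmd, u_robot: Cmd, alpha: float) -> Cmd:
    """Linear blend: alpha * robot + (1 - alpha) * human, per component."""
    v = alpha * u_robot[0] + (1.0 - alpha) * u_human[0]
    w = alpha * u_robot[1] + (1.0 - alpha) * u_human[1]
    return (v, w)


def arbitrate(mode: str, u_human: Cmd, u_robot: Cmd,
              min_obstacle_m: float) -> Arbitration:
    if min_obstacle_m < STOP_DISTANCE_M:
        # Safe-stop overrides every mode, and the interface is told why.
        return Arbitration((0.0, 0.0), 1.0,
                           f"SAFE-STOP: obstacle at {min_obstacle_m:.2f} m")
    alpha = MODE_ALPHA[mode]
    return Arbitration(blend(u_human, u_robot, alpha), alpha,
                       f"{mode}: robot authority {alpha:.0%}")


if __name__ == "__main__":
    for mode in ("manual", "obstacle_avoid", "path_follow"):
        result = arbitrate(mode, (1.0, 0.0), (0.5, 0.4), min_obstacle_m=2.0)
        print(result.reason, result.command)
    print(arbitrate("path_follow", (1.0, 0.0), (0.5, 0.0), min_obstacle_m=0.3).reason)
```

Returning the reason alongside the command keeps the explanation tied to the exact decision that produced it. This supports the transparent handover the recommendation calls for.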
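A minimal sketch of the context-sensitive policy works as follows:

- a core set of panels is always visible
- detail panels appear only while a triggering event is active
- a cap limits how many extra panels are surfaced at once

The panel names, event names, priorities, and the cap `MAX_EXTRA_PANELS` are hypothetical.

```python
from typing import Dict, List, Set, Tuple

CORE_PANELS = ["mission_view", "battery", "comms"]  # always visible

# Hypothetical mapping: event -> (priority, detail panel); lower priority = more urgent.
EVENT_PANELS: Dict[str, Tuple[int, str]] = {
    "gas_rise": (0, "gas_trend"),
    "comms_degraded": (1, "link_quality"),
    "motor_fault": (1, "drive_diagnostics"),
    "tether_strain": (2, "tether_tension"),
}

MAX_EXTRA_PANELS = 2  # assumed cap on simultaneously surfaced detail panels


def visible_panels(active_events: Set[str]) -> List[str]:
    """Return core panels plus the most urgent detail panels for active events.

    Unknown events are ignored; ties in priority are broken by panel name.
    """
    triggered = sorted(EVENT_PANELS[e] for e in active_events if e in EVENT_PANELS)
    extras = [panel for _, panel in triggered[:MAX_EXTRA_PANELS]]
    return CORE_PANELS + extras


if __name__ == "__main__":
    print(visible_panels(set()))
    print(visible_panels({"tether_strain", "gas_rise", "motor_fault"}))
```

Capping surfaced panels trades completeness for focus. A real interface would still keep lower-priority events reachable, for example through a counter or a collapsed list, rather than hiding them outright.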

9. Illustration of the Current State of HRI in Mine Rescue and Areas for Improvement

- Limited support for projection: Few interfaces offer trend-based cues or predictive overlays that help operators anticipate future states, which limits projection, the third level of Endsley’s SA model [126].

| Name | Interface Details | Challenges | Human Factor Implications |
|---|---|---|---|
| RATLER [8,25] | Console-based teleoperation with stereo/video cameras via RF/microwave links | Rough terrain navigation, latency, communication reliability | Interface delays and poor feedback impair perception and projection (SA). Enhancing real-time feedback and predictive cues would support SA and reduce visual/manual load (MRT). Delayed or low-fidelity feedback depresses PU; simplifying controls and visuals raises PEOU; status rationale and confidence cues strengthen trust. |
| Numbat [8] | Fiber-optic tethered GUI + joystick station with multiple camera feeds | High cognitive workload due to poor lighting and terrain; interface complexity | Overload illustrates high intrinsic cognitive load (CLT). Simplified visual channels and adaptive feedback are needed. Mapping views to user mental models improves response efficiency. Interface complexity under poor lighting lowers PEOU; showing mission benefit (gas/map fusion) raises PU; transparent fault reporting builds trust. |
| ANDROS Wolverine (V2) [7] | Rugged laptop GUI, joystick/gamepad, touchscreen diagnostics; multiple cameras | Operator overload during tracking and control in mission-critical scenarios | The interface must integrate state and control feedback to support comprehension (SA). The overload points to a multitasking design that violates MRT principles; automation could offload operator demand (CLT). Overload and split attention reduce PEOU; integrating tracking and control raises PU; clear autonomy/override cues calibrate trust. |
| Cave Crawler [133] | PC GUI with SLAM-enabled mapping, laser and other sensors; wired/wireless control | Low bandwidth, underground delay, darkness | Communication loss degrades perception and projection (SA). Interfaces should use buffered visuals and simplified overlays that match user mental models under degraded feedback. Bandwidth dropouts undermine trust and PU; buffered video and progressive disclosure improve PEOU; signal-quality badges restore trust. |
| Souryu V [134] | Handheld controller for a serially linked crawler, video feedback | Narrow, cluttered environments; limited visibility | Tight spaces increase visual-spatial complexity (CLT). Ergonomic feedback and spatial mapping must reinforce mental models and reduce split attention (MRT). Control mapping in tight spaces raises effort, lowering PEOU; 3D/path hints raise PU; obstacle-detection reliability indicators support trust. |
| Inuktun VGTV [110] | Tethered video monitor + gamepad; video feeds from color/BW cameras | Navigation in confined spaces, cable drag | Physical drag and split displays raise extraneous load (CLT). Feedback delay undermines comprehension (SA). Control-response mapping must be predictable to match operator mental models. Cable drag and split displays hurt PEOU; a unified view and tether-tension widgets improve PU; link-quality and tension health indicators boost trust. |
| Western Australia Water Co. Robot [34] | Fiber-optic tethered inspection robot with cameras and gas sensors | Tether navigation in debris-filled passages; heavy design hampered retrieval | Tether strain adds physical and cognitive workload (CLT). An intuitive layout and tether-tension indicators improve mental model stability and reduce operator frustration. Tether handling burden lowers PEOU; retrieval aids and route previews raise PU; stable communications and clear failure modes increase trust. |
| Sub-terranean Robot [135] | Semi-autonomous amphibious robot with GUI/joystick control | Water-filled shafts, zero visibility, sensor dropout, slippage | Sensor loss breaks the perception chain (SA). The interface must anticipate and respond to sensor gaps while maintaining operator trust through consistent logic (mental models, CLT). Sensor loss erodes trust and harms PU; graceful degradation and confidence bands aid PEOU; data provenance restores trust. |
| Leader [34] | Remotely operated robot with multiple cameras and gas sensors | Limited communication, unstructured post-explosion environment | Dynamic terrain affects projection and comprehension (SA). Modular views and signal-confidence indicators help the operator maintain situational awareness and the control loop. Communication limits reduce perceived benefit (PU); fused camera/gas overlays raise PU and PEOU; latency/signal badges calibrate trust. |
| Gemini Scout [23] | Rugged PC + joystick; onboard gas sensor, thermal and pan-tilt cameras | Hazardous mines, poor visibility | High visual demand stresses the visual resource channel (MRT). Sensor fusion and heat-map overlays support comprehension (SA) and reduce cognitive switching. EID can further reduce workload by visually encoding hazard thresholds (e.g., gas explosion limits, tether strain) so operators instantly perceive safe vs. unsafe states. High visual demand lowers PEOU; heat maps and waypoint assistance raise PU; route-choice rationale (why this path) strengthens trust. |
| MINBOT II [136] | Remote console + GUI; teleoperated skid-steer platform with sensors | Rugged terrain, visibility issues | Predictive control aids reduce intrinsic cognitive load (CLT). Aligning the visual layout with terrain types supports projection (SA) and operator mental models. The control cost of rugged terrain lowers PEOU; predictive assists and terrain-aware UI raise PU; stability/fail-safe feedback builds trust. |
| CUMT V [8] | GUI console with joystick; fiber + wireless relay; semi-autonomous drive | Operator burden in terrain switching; signal reliability | Frequent mode switching disrupts situational continuity (SA). Adaptive displays and automation handover can offload demand (CLT) and reduce confusion across visual/manual channels (MRT). Frequent mode switches harm PEOU; consistent handover UX and unified controls raise PU; explicit mode/authority cues improve trust. |
| KRZ I | Teleoperated system with IR camera, posture and obstacle alarms | Navigation under zero illumination, explosion-proof constraints | Poor lighting reduces perception (SA), so IR imagery must be presented with integrated overlays. Alarm overload risks channel interference (MRT), making prioritization and pre-attentive cues key. Alarm overload hurts PEOU; prioritized, grouped alerts raise PU; IR calibration/health indicators support trust. |
| Mobile Inspection Platform [137] | Remote GUI with video and gas-sensor logging interface | Mapping gas in explosive zones; communication reliability | Mission-critical data must support comprehension under time stress (SA). A clear alert hierarchy and sensor syncing align with mental models and reduce user error. Unsynced gas/video lowers PU; timestamped sync and actionable thresholds raise PEOU and PU; sensor health bars bolster trust. |
| Tele Rescuer [138] | VR/AR immersive GUI with gamepad, 3D mapping, and gas sensors | VR latency, data overload, remote communication limitations | Overload reflects split-channel conflict (MRT). Excess sensory input burdens working memory (CLT). The interface should support selective attention and layered information access. VR latency and data overload reduce PEOU; limiting channels and adding prediction panels raise PU; stable frame-rate and lag indicators calibrate trust. |
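Several rows above (e.g., KRZ I) point to alarm overload and recommend prioritized, grouped alerts. Section 8 recommends mapping hazard classes to distinct tones with short spoken alerts. A minimal sketch combining both merges alerts of the same hazard class and voices only the most urgent group. The hazard classes, priorities, tone identifiers, and message wording are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical hazard classes: (priority, tone id); priority 0 is most urgent.
HAZARD_CLASSES: Dict[str, Tuple[int, str]] = {
    "atmosphere": (0, "tone_high_pulse"),
    "structural": (1, "tone_low_pulse"),
    "comms": (2, "tone_chirp"),
    "battery": (3, "tone_double"),
}


@dataclass
class Alert:
    hazard: str   # must be a key of HAZARD_CLASSES
    message: str  # short spoken text


@dataclass
class Announcement:
    tone: str
    speech: str
    suppressed: int  # alerts grouped or deferred rather than voiced individually


def next_announcement(alerts: List[Alert]) -> Optional[Announcement]:
    """Group alerts by hazard class and voice only the most urgent group."""
    groups: Dict[str, List[Alert]] = {}
    for alert in alerts:
        groups.setdefault(alert.hazard, []).append(alert)
    if not groups:
        return None
    top = min(groups, key=lambda hazard: HAZARD_CLASSES[hazard][0])
    _, tone = HAZARD_CLASSES[top]
    first = groups[top][0].message
    extra = len(groups[top]) - 1
    speech = first if extra == 0 else f"{first} (+{extra} more {top})"
    return Announcement(tone=tone, speech=speech, suppressed=len(alerts) - 1)


if __name__ == "__main__":
    pending = [
        Alert("battery", "Battery 25 percent"),
        Alert("atmosphere", "Low O2. Stop."),
        Alert("atmosphere", "Methane rising"),
        Alert("comms", "Link weak"),
    ]
    print(next_announcement(pending))
```

Voicing one group at a time keeps the auditory channel from becoming a second bottleneck. Deferred alerts remain available for the next announcement once the top hazard is acknowledged.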
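The Mobile Inspection Platform row recommends timestamped synchronization of gas and video data. A minimal sketch assumes both streams are stamped by a common clock. Each video frame is paired with the latest gas sample taken at or before it. Readings older than a staleness limit are flagged so the overlay can mark them as stale. The 2 s limit and the function name are assumptions.

```python
import bisect
from typing import List, Optional, Tuple


def sync_gas_to_frames(frame_ts: List[float],
                       gas_ts: List[float],
                       gas_vals: List[float],
                       max_age_s: float = 2.0) -> List[Tuple[float, Optional[float], bool]]:
    """Pair each video frame with the latest gas sample taken at or before it.

    Assumes both timestamp lists come from a common clock and gas_ts is sorted.
    Returns (frame_time, gas_value or None, is_stale) for every frame.
    """
    paired: List[Tuple[float, Optional[float], bool]] = []
    for t in frame_ts:
        i = bisect.bisect_right(gas_ts, t) - 1  # index of last sample with time <= t
        if i < 0:
            paired.append((t, None, True))      # no gas reading yet: show as unavailable
            continue
        age = t - gas_ts[i]
        paired.append((t, gas_vals[i], age > max_age_s))
    return paired


if __name__ == "__main__":
    frames = [0.5, 1.0, 1.5, 5.0]
    for row in sync_gas_to_frames(frames, gas_ts=[1.0, 2.0], gas_vals=[0.3, 0.4]):
        print(row)
```

Flagging stale readings keeps the overlay honest during the bandwidth dropouts noted for several platforms in the table. Silently reusing the last value would hide those gaps.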

10. Conclusions

- Identified HRI limitations in current SAR robot systems through cognitive and operational analysis.

- Developed a cognitive model–based interface design framework tailored for underground emergency response.

- Mapped specific design strategies to human factor needs with examples from real-world SAR robots.

- Supported a shift from technology-centered to human-centered design thinking in mining robotics.

11. Limitations and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CLT | Cognitive Load Theory |
| EID | Ecological Interface Design |
| HRC | Human–Robot Collaboration |
| HRCp | Human–Robot Cooperation |
| HRCx | Human–Robot Coexistence |
| HRI | Human–Robot Interaction |
| MM | Mental Models |
| MRT | Multiple Resource Theory |
| MSHA | Mine Safety and Health Administration |
| NIOSH | National Institute for Occupational Safety and Health |
| PEOU | Perceived Ease of Use |
| PU | Perceived Usefulness |
| SA | Situational Awareness |
| SAR | Search and Rescue |
| TAM | Technology Acceptance Model |

References

- Lee, W.; Alkouz, B.; Shahzaad, B.; Bouguettaya, A. Package Delivery Using Autonomous Drones in Skyways. In Proceedings of the Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers; Virtual, 21–26 September 2021, pp. 48–50.

- Asadi, E.; Li, B.; Chen, I.M. Pictobot: A Cooperative Painting Robot for Interior Finishing of Industrial Developments. IEEE Robot. Autom. Mag. 2018, 25, 82–94. [Google Scholar] [CrossRef]

- Belanger, F.; Resor, J.; Crossler, R.E.; Finch, T.A.; Allen, K.R. Smart Home Speakers and Family Information Disclosure Decisions. In Proceedings of the AMCIS 2021 Proceedings, Montreal, QC, Canada, 9–13 August 2021. [Google Scholar]

- Dahn, N.; Fuchs, S.; Gross, H.-M. Situation Awareness for Autonomous Agents. In Proceedings of the 27th IEEE International Symposium on Robot and Human Interactive Communication, Nanjing, China, 27–31 August 2018. [Google Scholar]

- Davids, A. Urban search and rescue robots: From tragedy to technology. IEEE Intell. Syst. 2002, 17, 81–83. [Google Scholar] [CrossRef]

- Bin Motaleb, A.K.; Hoque, M.B.; Hoque, M.A. Bomb disposal robot. In Proceedings of the 2016 International Conference on Innovations in Science, Engineering and Technology (ICISET), Dhaka, Bangladesh, 28–29 October 2016; pp. 1–5. [Google Scholar]

- Murphy, R.; Kravitz, J.; Stover, S.; Shoureshi, R. Mobile robots in mine rescue and recovery. IEEE Robot. Autom. Mag. 2009, 16, 91–103. [Google Scholar] [CrossRef]

- Zhai, G.; Zhang, W.; Hu, W.; Ji, Z. Coal Mine Rescue Robots Based on Binocular Vision: A Review of the State of the Art. IEEE Access 2020, 8, 130561–130575. [Google Scholar] [CrossRef]

- Caiazzo, C.; Savković, M.; Pusica, M.; Milojevic, D.; Leva, M.; Djapan, M. Development of a Neuroergonomic Assessment for the Evaluation of Mental Workload in an Industrial Human-Robot Interaction Assembly Task: A Comparative Case Study. Machines 2023, 11, 995. [Google Scholar] [CrossRef]

- Senaratne, H.; Tian, L.; Sikka, P.; Williams, J.; Howard, G.; Kulic, D.; Paris, C. A Framework for Dynamic Situational Awareness in Human Robot Teams: An Interview Study. ACM Trans. Hum.-Robot. Interact. 2025, 15, 5. [Google Scholar] [CrossRef]

- Endsley, M. Toward a Theory of Situation Awareness in Dynamic Systems. Human. Factors 1995, 37, 32–64. [Google Scholar] [CrossRef]

- Wickens, C.D. Multiple resources and mental workload. Hum. Factors 2008, 50, 449–455. [Google Scholar] [CrossRef]

- Norman, D.A. The Design of Everyday Things; Basic Books, Inc.: New York, NY, USA, 2002. [Google Scholar]

- Sweller, J. Cognitive Load During Problem Solving: Effects on Learning. Cogn. Sci. 1988, 12, 257–285. [Google Scholar] [CrossRef]

- Sweller, J.; Ayres, P.; Kalyuga, S. Cognitive Load Theory. In Psychology of Learning and Motivation; Academic Press: Cambridge, MA, USA, 2011. [Google Scholar] [CrossRef]

- Ren, H.; Zhao, Y.L.; Xiao, W.; Hu, Z.Q. A review of UAV monitoring in mining areas: Current status and future perspectives. Int. J. Coal Sci. Technol. 2019, 6, 320–333. [Google Scholar] [CrossRef]

- Xiao, W.; Chen, J.; Da, H.; Ren, H.; Zhang, J.; Zhang, L. Inversion and Analysis of Maize Biomass in Coal Mining Subsidence Area Based on UAV Images. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2018, 49, 169–180. [Google Scholar] [CrossRef]

- Jackisch, R.; Lorenz, S.; Zimmermann, R.; Möckel, R.; Gloaguen, R. Drone-Borne Hyperspectral Monitoring of Acid Mine Drainage: An Example from the Sokolov Lignite District. Remote Sens. 2018, 10, 385. [Google Scholar] [CrossRef]

- Martinez Rocamora, B.; Lima, R.R.; Samarakoon, K.; Rathjen, J.; Gross, J.N.; Pereira, G.A.S. Oxpecker: A Tethered UAV for Inspection of Stone-Mine Pillars. Drones 2023, 7, 73. [Google Scholar] [CrossRef]

- Yucel, M.A.; Turan, R.Y. Areal Change Detection and 3D Modeling of Mine Lakes Using High-Resolution Unmanned Aerial Vehicle Images. Arab. J. Sci. Eng. 2016, 41, 4867–4878. [Google Scholar] [CrossRef]

- Pathak, S. UAV based Topographical mapping and accuracy assessment of Orthophoto using GCP. Mersin Photogramm. J. 2023, 6, 1–8. [Google Scholar] [CrossRef]

- Szrek, J.; Zimroz, R.; Wodecki, J.; Michalak, A.; Góralczyk, M.; Worsa-Kozak, M. Application of the Infrared Thermography and Unmanned Ground Vehicle for Rescue Action Support in Underground Mine-The AMICOS Project. Remote Sens. 2021, 13, 69. [Google Scholar] [CrossRef]

- Reddy, A.H.; Kalyan, B.; Murthy, C.S.N. Mine Rescue Robot System–A Review. Procedia Earth Planet. Sci. 2015, 11, 457–462. [Google Scholar] [CrossRef]

- Sandia National Laboratories. Gemini-Scout Mine Rescue Vehicle. Available online: https://www.sandia.gov/research/gemini-scout-mine-rescue-vehicle/ (accessed on 15 October 2025).

- Krotkov, E.; Bares, J.; Katragadda, L.; Simmons, R.; Whittaker, R. Lunar rover technology demonstrations with Dante and Ratler. In Proceedings of the JPL, Third International Symposium on Artificial Intelligence, Robotics, and Automation for Space 1994, Pasadena, CA, USA, 18–20 October 1994. [Google Scholar]

- Purvis, J.W.; Klarer, P.R. RATLER: Robotic All-Terrain Lunar Exploration Rover. In Proceedings of the The Sixth Annual Workshop on Space Operations Applications and Research (SOAR 1992), Houston, TX, USA, 4–6 August 1992. [Google Scholar]

- Klarer, P. Space Robotics Programs at Sandia National Laboratories; Sandia National Labs.: Albuquerque, NM, USA, 1993. [Google Scholar]

- Ralston, J.C.; Hainsworth, D.W.; Reid, D.C.; Anderson, D.L.; McPhee, R.J. Recent advances in remote coal mining machine sensing, guidance, and teleoperation. Robotica 2001, 19, 513–526. [Google Scholar] [CrossRef]

- Hainsworth, D.W. Teleoperation user interfaces for mining robotics. Auton. Robot. 2001, 11, 19–28. [Google Scholar] [CrossRef]

- Ralston, J.; Hainsworth, D. The Numbat: A Remotely Controlled Mine Emergency Response Vehicle; Springer: London, UK, 1998; pp. 53–59. [Google Scholar] [CrossRef]

- Li, Y.; Li, M.; Zhu, H.; Hu, E.; Tang, C.; Li, P.; You, S. Development and applications of rescue robots for explosion accidents in coal mines. J. Field Robot. 2020, 37, 466–489. [Google Scholar] [CrossRef]

- Green, J. Mine Rescue Robots Requirements. In Proceedings of the 6th Robotics and Mechatronics Conference (RobMech), Durban, South Africa, 30–31 October 2013. [Google Scholar]

- Chung, T.; Orekhov, V.; Maio, A. Into the Robotic Depths: Analysis and Insights from the DARPA Subterranean Challenge. Annu. Rev. Control Robot. Auton. Syst. 2022, 6, 477–502. [Google Scholar] [CrossRef]

- Wang, Y.; Tian, P.; Zhou, Y.; Chen, Q. The Encountered Problems and Solutions in the Development of Coal Mine Rescue Robot. J. Robot. 2018, 2018, 8471503. [Google Scholar] [CrossRef]

- Li, M.; Zhu, H.; Tang, C.; You, S.; Li, Y. Coal Mine Rescue Robots: Development, Applications and Lessons Learned. In Proceedings of the 2021 International Conference on Autonomous Unmanned Systems (ICAUS 2021), Changsha, China, 24–26 September 2022; pp. 2127–2138. [Google Scholar] [CrossRef]

- Kadous, M.W.; Sheh, R.K.-M.; Sammut, C. Effective user interface design for rescue robotics. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 250–257. [Google Scholar]

- Bakzadeh, R.; Androulakis, V.; Khaniani, H.; Shao, S.; Hassanalian, M.; Roghanchi, P. Robots in Mine Search and Rescue Operations: A Review of Platforms and Design Requirements. Preprints 2023. [Google Scholar] [CrossRef]

- Hidalgo, M.; Reinerman-Jones, L.; Barber, D. Spatial Ability in Military Human-Robot Interaction: A State-of-the-Art Assessment. In International Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2019. [Google Scholar]

- Caccavale, R.; Finzi, A. Flexible Task Execution and Attentional Regulations in Human-Robot Interaction. IEEE Trans. Cogn. Dev. Syst. 2017, 9, 68–79. [Google Scholar] [CrossRef]

- Goodrich, M.A.; Schultz, A.C. Human-Robot Interaction: A Survey. Found. Trends® Hum.-Comput. Interact. 2007, 1, 203–275. [Google Scholar] [CrossRef]

- Perzanowski, D.; Schultz, A.C.; Adams, W.; Marsh, E.; Bugajska, M. Building a multimodal human-robot interface. IEEE Intell. Syst. 2001, 16, 16–21. [Google Scholar] [CrossRef]

- Haddadin, S.; Haddadin, S.; Khoury, A.; Rokahr, T.; Parusel, S.; Burgkart, R.; Bicchi, A.; Albu-Schäffer, A. On making robots understand safety: Embedding injury knowledge into control. Int. J. Robot. Res. 2012, 31, 1578–1602. [Google Scholar] [CrossRef]

- Haddadin, S.; Albu-Schäffer, A.; Hirzinger, G. Requirements for Safe Robots: Measurements, Analysis and New Insights. Int. J. Robot. Res. 2009, 28, 1507–1527. [Google Scholar] [CrossRef]

- Sanchez Restrepo, S.; Raiola, G.; Chevalier, P.; Lamy, X.; Sidobre, D. Iterative virtual guides programming for human-robot comanipulation. In Proceedings of the 2017 IEEE International Conference on Advanced Intelligent Mechatronics (AIM), Munich, Germany, 3–7 July 2017; pp. 219–226. [Google Scholar]

- Andrisano, A.O.; Leali, F.; Pellicciari, M.; Pini, F.; Vergnano, A. Hybrid Reconfigurable System design and optimization through virtual prototyping and digital manufacturing tools. Int. J. Interact. Des. Manuf. 2012, 6, 17–27. [Google Scholar] [CrossRef]

- Schiavi, R.; Bicchi, A.; Flacco, F. Integration of Active and Passive Compliance Control for Safe Human-Robot Coexistence. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009. [Google Scholar]

- Shen, Y.; Reinhart, G.; Tseng, M.M. A Design Approach for Incorporating Task Coordination for Human-Robot-Coexistence within Assembly Systems. In Proceedings of the Annual IEEE Systems Conference (SysCon) Proceedings, Vancouver, BC, Canada, 13–16 April 2015; IEEE: New York, NY, USA, 2012. [Google Scholar]

- Gaz, C.; Magrini, E.; De Luca, A. A model-based residual approach for human-robot collaboration during manual polishing operations. Mechatronics 2018, 55, 234–247. [Google Scholar] [CrossRef]

- Luca, A.D.; Flacco, F. Integrated control for pHRI: Collision avoidance, detection, reaction and collaboration. In Proceedings of the International Conference on Biomedical Robotics and Biomechatronics, Rome, Italy, 24–27 June 2012. [Google Scholar]

- Mörtl, A.; Lawitzky, M.; Kucukyilmaz, A.; Sezgin, M.; Basdogan, C.; Hirche, S. The role of roles: Physical cooperation between humans and robots. Int. J. Robot. Res. 2012, 31, 1656–1674. [Google Scholar] [CrossRef]

- Krüger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann. 2009, 58, 628–646. [Google Scholar] [CrossRef]

- Chandrasekaran, B.; Conrad, J.M. Human-Robot Collaboration: A Survey. In Proceedings of the IEEE SoutheastCon 2015, Fort Lauderdale, FL, USA, 9–12 April 2015. [Google Scholar]

- Flacco, F.; Kroeger, T.; De Luca, A.; Khatib, O. A Depth Space Approach for Evaluating Distance to Objects. J. Intell. Robot. Syst. 2015, 80, 7–22. [Google Scholar] [CrossRef]

- Mainprice, J.; Alami, E.A.S.T.S.R. Planning Safe and Legible Hand-over Motions for Human-Robot Interaction. In Proceedings of the IARP/IEEE-RAS/EURON Workshop on Technical Challenges for Dependable Robots in Human Environments, Toulouse, France, January 2010. [Google Scholar]

- Pak, H.; Mostafavi, A. Situational Awareness as the Imperative Capability for Disaster Resilience in the Era of Complex Hazards and Artificial Intelligence. arXiv 2025, arXiv:2508.16669. [Google Scholar] [CrossRef]

- Szafir, D.; Szafir, D.A. Connecting Human-Robot Interaction and Data Visualization. In Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 09–11 March 2021; pp. 281–292. [Google Scholar]

- Kok, B.C.; Soh, H. Trust in Robots: Challenges and Opportunities. Curr. Robot. Rep. 2020, 1, 297–309. [Google Scholar] [CrossRef]

- Wagner, A.R.; Robinette, P. Chapter 9-An explanation is not an excuse: Trust calibration in an age of transparent robots. In Trust in Human-Robot Interaction; Nam, C.S., Lyons, J.B., Eds.; Academic Press: Cambridge, MA, USA, 2021; pp. 197–208. [Google Scholar] [CrossRef]

- Alonso, V.; de la Puente, P. System Transparency in Shared Autonomy: A Mini Review. Front. Neurorobot 2018, 12, 83. [Google Scholar] [CrossRef]

- Wit, J.d.; Vogt, P.; Krahmer, E. The Design and Observed Effects of Robot-performed Manual Gestures: A Systematic Review. J. Hum.-Robot. Interact. 2023, 12, 1–62. [Google Scholar] [CrossRef]

- de Barros, P.G.; Lindeman, R.W. Multi-Sensory Urban Search-and-Rescue Robotics: Improving the Operator’s Omni-Directional Perception. Front. Robot. AI 2014, 1, 14. [Google Scholar] [CrossRef]

- Triantafyllidis, E.; McGreavy, C.; Gu, J.; Li, Z. Study of Multimodal Interfaces and the Improvements on Teleoperation. IEEE Access 2020, 7, 78213–78227. [Google Scholar] [CrossRef]

- Sosnowski, M.J.; Brosnan, S.F. Under pressure: The interaction between high-stakes contexts and individual differences in decision-making in humans and non-human species. Anim. Cogn. 2023, 26, 1103–1117. [Google Scholar] [CrossRef]

- NASA-STD-3001; NASA Space Flight Human-System Standard, Volume 2: Human Factors, Habitability, and Environmental Health. NASA Technical Standard: Washington, DC, USA, 2022; Volume 2, Revision C.

- Khaliq, M.; Munawar, H.; Ali, Q. Haptic Situational Awareness Using Continuous Vibrotactile Sensations. arXiv 2021, arXiv:2108.04611. [Google Scholar] [CrossRef]

- Chitikena, H.; Sanfilippo, F.; Ma, S. Robotics in Search and Rescue (SAR) Operations: An Ethical and Design Perspective Framework for Response Phase. Appl. Sci. 2023, 13, 1800. [Google Scholar] [CrossRef]

- Sarter, N. Multiple-Resource Theory as a Basis for Multimodal Interface Design: Success Stories, Qualifications, and Research Needs. In Attention: From Theory to Practice; Oxford University Press: Oxford, UK, 2006; pp. 187–195. [Google Scholar]

- Tabrez, A.; Luebbers, M.; Hayes, B. A Survey of Mental Modeling Techniques in Human–Robot Teaming. Curr. Robot. Rep. 2020, 1, 259–267. [Google Scholar] [CrossRef]

- Endsley, M.R. Situation Awareness Misconceptions and Misunderstandings. J. Cogn. Eng. Decis. Mak. 2015, 9, 4–32. [Google Scholar] [CrossRef]

- Rey-Becerra, E.; Wischniewski, S. Mastering a robot workforce: Review of single human multiple robots systems and their impact on occupational safety and health and system performance. Ergonomics 2025, 1–25. [Google Scholar] [CrossRef]

- Han, Z.; Norton, A.; McCann, E.; Baraniecki, L.; Ober, W.; Shane, D.; Skinner, A.; Yanco, H.A. Investigation of Multiple Resource Theory Design Principles on Robot Teleoperation and Workload Management. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021. [Google Scholar]

- National Aeronautics Space Administration. Risk of Inadequate Human-System Integration Architecture. In Human Research Program, Human Factors and Behavioral Performance; NASA Lyndon B. Johnson Space Center: Houston, TX, USA, 2021. [Google Scholar]

- Cavicchi, S.; Abubshait, A.; Siri, G.; Mustile, M.; Ciardo, F. Can humanoid robots be used as a cognitive offloading tool? Cogn. Res. Princ. Implic. 2025, 10, 17.

- Richter, P.; Wersing, H.; Vollmer, A.-L. Improving Human-Robot Teaching by Quantifying and Reducing Mental Model Mismatch. arXiv 2025, arXiv:2501.04755.

- Javaid, M.; Estivill-Castro, V. Explanations from a Robotic Partner Build Trust on the Robot’s Decisions for Collaborative Human-Humanoid Interaction. Robotics 2021, 10, 51.

- Zheng, C.; Wang, K.; Gao, S.; Yu, Y.; Wang, Z.; Tang, Y. Design of multi-modal feedback channel of human–robot cognitive interface for teleoperation in manufacturing. J. Intell. Manuf. 2025, 36, 4283–4303.

- Lee, Y.; Kozar, K.; Larsen, K. The Technology Acceptance Model: Past, Present, and Future. Commun. Assoc. Inf. Syst. 2003, 12, 50.

- Cimperman, M.; Makovec Brenčič, M.; Trkman, P. Analyzing older users’ home telehealth services acceptance behavior-applying an Extended UTAUT model. Int. J. Med. Inform. 2016, 90, 22–31.

- Al-Emran, M.; Shaalan, K. Recent Advances in Technology Acceptance Models and Theories; Springer: Cham, Switzerland, 2021.

- Wang, Z.; Wang, Y.; Zeng, Y.; Su, J.; Li, Z. An investigation into the acceptance of intelligent care systems: An extended technology acceptance model (TAM). Sci. Rep. 2025, 15, 17912.

- Vicente, K.J.; Rasmussen, J. A Theoretical Framework for Ecological Interface Design; Risø National Laboratory: Roskilde, Denmark, 1988.

- Ma, J.; Li, Y.; Zuo, Y. Design and Evaluation of Ecological Interface of Driving Warning System Based on AR-HUD. Sensors 2024, 24, 8010.

- Schewe, F.; Vollrath, M. Ecological interface design effectively reduces cognitive workload–The example of HMIs for speed control. Transp. Res. Part F Traffic Psychol. Behav. 2020, 72, 155–170.

- Zohrevandi, E.; Westin, C.; Vrotsou, K.; Lundberg, J. Exploring Effects of Ecological Visual Analytics Interfaces on Experts’ and Novices’ Decision-Making Processes: A Case Study in Air Traffic Control. Comput. Graph. Forum 2022, 41, 453–464.

- Neisser, U. Cognition and Reality: Principles and Implications of Cognitive Psychology; W. H. Freeman: San Francisco, CA, USA, 1976.

- Vicente, K.J.; Rasmussen, J. Ecological interface design: Theoretical foundations. IEEE Trans. Syst. Man Cybern. 1992, 22, 589–606.

- Zhao, J.; Gao, J.; Zhao, F.; Liu, Y. A Search-and-Rescue Robot System for Remotely Sensing the Underground Coal Mine Environment. Sensors 2017, 17, 2426.

- Seo, S.H. Novel Egocentric Robot Teleoperation Interfaces for Search and Rescue. Department of Computer Science, University of Manitoba: Winnipeg, MB, Canada, 2021.

- Roldán, J.J.; Peña-Tapia, E.; Martín-Barrio, A.; Olivares-Méndez, M.A.; Del Cerro, J.; Barrientos, A. Multi-Robot Interfaces and Operator Situational Awareness: Study of the Impact of Immersion and Prediction. Sensors 2017, 17, 1720.

- Tabrez, A.; Luebbers, M.B.; Hayes, B. Descriptive and Prescriptive Visual Guidance to Improve Shared Situational Awareness in Human-Robot Teaming. In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, Virtual Event, 9–13 May 2022; International Foundation for Autonomous Agents and Multiagent Systems: Auckland, New Zealand, 2022; pp. 1256–1264.

- Walker, M.; Gramopadhye, M.; Ikeda, B.; Burns, J.; Szafir, D. The Cyber-Physical Control Room: A Mixed Reality Interface for Mobile Robot Teleoperation and Human-Robot Teaming. In Proceedings of the HRI ’24: 2024 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 11–15 March 2024; pp. 762–771.

- Moniruzzaman, M.D.; Rassau, A.; Chai, D.; Islam, S. Teleoperation methods and enhancement techniques for mobile robots: A comprehensive survey. Robot. Auton. Syst. 2021, 150, 103973.

- Louca, J.; Eder, K.; Vrublevskis, J.; Tzemanaki, A. Impact of Haptic Feedback in High Latency Teleoperation for Space Applications. J. Hum.-Robot Interact. 2024, 13, 16.

- Lee, Y.J.; Park, M.W. 3D tracking of multiple onsite workers based on stereo vision. Autom. Constr. 2019, 98, 146–159.

- Dennler, N.; Delgado, D.; Zeng, D.; Nikolaidis, S.; Matarić, M. The RoSiD Tool: Empowering Users to Design Multimodal Signals for Human-Robot Collaboration. In International Symposium on Experimental Robotics; Springer Nature: Cham, Switzerland, 2024; pp. 3–10.

- Ranganeni, V.; Dhat, V.; Ponto, N.; Cakmak, M. AccessTeleopKit: A Toolkit for Creating Accessible Web-Based Interfaces for Tele-Operating an Assistive Robot. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, Pittsburgh, PA, USA, 13–16 October 2024; Association for Computing Machinery: Pittsburgh, PA, USA, 2024; p. 87.

- Draheim, C.; Pak, R.; Draheim, A.; Engle, R. The role of attention control in complex real-world tasks. Psychon. Bull. Rev. 2022, 29, 1143–1197.

- Lee, J.D.; See, K.A. Trust in Automation: Designing for Appropriate Reliance. Hum. Factors 2004, 46, 50–80.

- Yang, X.J.; Schemanske, C.; Searle, C. Toward Quantifying Trust Dynamics: How People Adjust Their Trust After Moment-to-Moment Interaction With Automation. Hum. Factors 2021, 65, 001872082110347.

- Guo, X.; Liu, Y.; Ma, X.; Fu, H. Human trust effect in remote human–robot collaboration construction task for different level of automation. Adv. Eng. Inform. 2025, 68, 103647.

- Wickens, C. Multiple resources and performance prediction. Theor. Issues Ergon. Sci. 2002, 3, 159–177.

- Marvel, J.A.; Bagchi, S.; Zimmerman, M.; Antonishek, B. Towards Effective Interface Designs for Collaborative HRI in Manufacturing: Metrics and Measures. J. Hum.-Robot Interact. 2020, 9, 25.

- Murphy, R.R.; Tadokoro, S.; Kleiner, A. Disaster Robotics. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 1577–1604.

- Bouzón, I.; Pascual, J.; Costales, C.; Crespo, A.; Cima, C.; Melendi, D. Design, Implementation and Evaluation of an Immersive Teleoperation Interface for Human-Centered Autonomous Driving. Sensors 2025, 25, 4679.

- Nourbakhsh, I.; Sycara, K.; Koes, M.; Yong, M.; Lewis, M.; Burion, S. Human-Robot Teaming for Search and Rescue. IEEE Pervasive Comput. 2005, 4, 72–79.

- Diab, M.; Demiris, Y. A framework for trust-related knowledge transfer in human–robot interaction. Auton. Agents Multi-Agent Syst. 2024, 38, 24.

- Hancock, P.A.; Parasuraman, R. Human factors and safety in the design of intelligent vehicle-highway systems (IVHS). J. Saf. Res. 1992, 23, 181–198.

- Panakaduwa, C.; Coates, S.; Munir, M.; De Silva, O. Optimising Visual User Interfaces to Reduce Cognitive Fatigue and Enhance Mental Well-being. In Proceedings of the 35th Annual Conference of the International Information Management Association (IIMA), Manchester, UK, 2–4 September 2024; University of Salford: Media City, Manchester, UK, 2024.

- Murphy, R.; Tadokoro, S. Disaster Robotics: Results from the ImPACT Tough Robotics Challenge; Springer: Berlin/Heidelberg, Germany, 2019; pp. 507–528.

- Casper, J.; Murphy, R. Human-robot interactions during the robot-assisted urban search and rescue response at the World Trade Center. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2003, 33, 367–385.

- Drury, J.; Scholtz, J.; Yanco, H.A. Awareness in human-robot interactions. In Proceedings of the SMC’03—2003 IEEE International Conference on Systems, Man and Cybernetics, Conference Theme—System Security and Assurance (Cat. No.03CH37483), Washington, DC, USA, 8 October 2003; Volume 1, pp. 912–918.

- Ali, R.; Islam, T.; Prato, B.; Chowdhury, S.; Al Rakib, A. Human-Centered Design in Human-Robot Interaction Evaluating User Experience and Usability. Bull. Bus. Econ. 2023, 12, 454–459.

- Kujala, S. User involvement: A review of the benefits and challenges. Behav. Inf. Technol. 2003, 22, 1–16.

- Wheeler, S.; Engelbrecht, H.; Hoermann, S. Human Factors Research in Immersive Virtual Reality Firefighter Training: A Systematic Review. Front. Virtual Real. 2021, 2, 671664.

- Zhang, D.; Tron, R.; Khurshid, R. Haptic Feedback Improves Human-Robot Agreement and User Satisfaction in Shared-Autonomy Teleoperation. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021.

- Kocer, B.; Stedman, H.; Kulik, P.; Caves, I.; van Zalk, N.; Pawar, V.; Kovac, M. Immersive View and Interface Design for Teleoperated Aerial Manipulation. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 4919–4926.

- Dybvik, H.; Løland, M.; Gerstenberg, A.; Slåttsveen, K.B.; Steinert, M. A low-cost predictive display for teleoperation: Investigating effects on human performance and workload. Int. J. Hum.-Comput. Stud. 2021, 145, 102536.

- Zhu, Y.; Fusano, K.; Aoyama, T.; Hasegawa, Y. Intention-reflected predictive display for operability improvement of time-delayed teleoperation system. ROBOMECH J. 2023, 10, 17.

- Manschitz, S.; Güler, B.; Ma, W.; Ruiken, D. Sampling-Based Grasp and Collision Prediction for Assisted Teleoperation. arXiv 2025, arXiv:2504.18186.

- Contreras, C.A.; Rastegarpanah, A.; Chiou, M.; Stolkin, R. A mini-review on mobile manipulators with Variable Autonomy. Front. Robot. AI 2025, 12, 1540476.

- Rea, D.J.; Seo, S.H. Still Not Solved: A Call for Renewed Focus on User-Centered Teleoperation Interfaces. Front. Robot. AI 2022, 9, 704225.

- Luo, J.; He, W.; Yang, C. Combined Perception, Control, and Learning for Teleoperation: Key Technologies, Applications, and Challenges. Cogn. Comput. Syst. 2020, 2, 33–43.

- Kamtam, S.B.; Lu, Q.; Bouali, F.; Haas, O.C.L.; Birrell, S. Network Latency in Teleoperation of Connected and Autonomous Vehicles: A Review of Trends, Challenges, and Mitigation Strategies. Sensors 2024, 24, 3957.

- Pollak, A.; Biolik, E.; Chudzicka-Czupała, A. New Luddites? Counterproductive Work Behavior and Its Correlates, Including Work Characteristics, Stress at Work, and Job Satisfaction Among Employees Working With Industrial and Collaborative Robots. Hum. Factors Ergon. Manuf. Serv. Ind. 2025, 35, e70016.

- Van Dijk, W.; Baltrusch, S.J.; Dessers, E.; De Looze, M.P. The Effect of Human Autonomy and Robot Work Pace on Perceived Workload in Human-Robot Collaborative Assembly Work. Front. Robot. AI 2023, 10, 1244656.

- Mutzenich, C.; Durant, S.; Helman, S.; Dalton, P. Situation Awareness in Remote Operators of Autonomous Vehicles: Developing a Taxonomy of Situation Awareness in Video-Relays of Driving Scenes. Front. Psychol. 2021, 12, 727500.

- Xie, B. Visual Simulation of Interactive Information of Robot Operation Interface. Autom. Mach. Learn. 2023, 4, 61–67.

- Chang, Y.L.; Luo, D.H.; Huang, T.R.; Goh, J.O.; Yeh, S.L.; Fu, L.C. Identifying Mild Cognitive Impairment by Using Human–Robot Interactions. J. Alzheimer’s Dis. 2022, 85, 1129–1142.

- Lu, C.L.; Huang, J.T.; Huang, C.I.; Liu, Z.Y.; Hsu, C.C.; Huang, Y.Y.; Chang, P.K.; Ewe, Z.L.; Huang, P.J.; Li, P.L.; et al. A Heterogeneous Unmanned Ground Vehicle and Blimp Robot Team for Search and Rescue Using Data-Driven Autonomy and Communication-Aware Navigation. Field Robot. 2022, 2, 557–594.

- Belkaid, M.; Kompatsiari, K.; De Tommaso, D.; Zablith, I.; Wykowska, A. Mutual Gaze With a Robot Affects Human Neural Activity and Delays Decision-Making Processes. Sci. Robot. 2021, 6, eabc5044.

- Ciardo, F.; Wykowska, A. Robot’s Social Gaze Affects Conflict Resolution but Not Conflict Adaptations. J. Cogn. 2022, 5, 2.

- Suh, H.T.; Xiong, X.; Singletary, A.; Ames, A.D.; Burdick, J.W. Energy-Efficient Motion Planning for Multi-Modal Hybrid Locomotion. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 7027–7033.

- Morris, A.; Ferguson, D.; Omohundro, Z.; Bradley, D.; Silver, D.; Baker, C.; Thayer, S.; Whittaker, C.; Whittaker, W. Recent developments in subterranean robotics. J. Field Robot. 2006, 23, 35–57.

- Arai, M.; Tanaka, Y.; Hirose, S.; Kuwahara, H.; Tsukui, S. Development of “Souryu-IV” and “Souryu-V:” serially connected crawler vehicles for in-rubble searching operations. J. Field Robot. 2008, 25, 31–65.

- Ray, D.N.; Dalui, R.; Maity, A.; Majumder, S. Sub-terranean robot: A challenge for the Indian coal mines. Online J. Electron. Electr. Eng. 2009, 2, 217–222.

- Wang, W.; Dong, W.; Su, Y.; Wu, D.; Du, Z. Development of Search-and-rescue Robots for Underground Coal Mine Applications. J. Field Robot. 2014, 31, 386–407.

- Bołoz, Ł.; Biały, W. Automation and robotization of underground mining in Poland. Appl. Sci. 2020, 10, 7221.

- Moczulski, W.; Cyran, K.; Novak, P.; Rodriguez, A.; Januszka, M. TeleRescuer: A concept of a system for teleimmersion of a rescuer to areas of coal mines affected by catastrophes. Proc. Inst. Veh. 2015, 2, 57–62.

| HRI Category | Description | Emergency Scenario |
|---|---|---|
| Human–robot coexistence (HRCx) | Robots and humans operate in a shared environment on separate tasks, with minimal interaction | Robots (UGVs and UAVs) navigate a collapsed area before search-and-rescue teams enter |
| Human–robot cooperation (HRCp) | Robots and humans pursue a shared goal with actions coordinated in time and space | Rescuers and robots inspect the same area simultaneously; robots scan for structural integrity while rescuers perform triage |
| Human–robot collaboration (HRC) | Direct or indirect communication and intentional cooperation between human and robot | A robot assists responders by interpreting hand gestures or spoken instructions to deliver tools, provide lighting, or stabilize equipment |
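The three categories in the table differ mainly in two attributes: whether humans and robots share a goal, and whether they exchange intent through direct or indirect communication. The sketch below encodes that distinction as a decision rule. It is an illustrative assumption, not a classification procedure from the cited literature; the function `classify_interaction` and its two boolean inputs are hypothetical simplifications of the table's descriptions.

```python
from enum import Enum


class HRICategory(Enum):
    """The three HRI categories listed in the table above."""
    COEXISTENCE = "HRCx"
    COOPERATION = "HRCp"
    COLLABORATION = "HRC"


def classify_interaction(shared_goal: bool, communicates: bool) -> HRICategory:
    """Hypothetical decision rule (an assumption, not the article's method).

    shared_goal:  humans and robot pursue the same goal with coordinated actions.
    communicates: direct or indirect human-robot communication takes place
                  (e.g., hand gestures or spoken instructions).
    """
    if not shared_goal:
        # Separate tasks in a shared space with minimal interaction.
        return HRICategory.COEXISTENCE
    if not communicates:
        # Shared goal coordinated in time and space, without exchanging intent.
        return HRICategory.COOPERATION
    # Shared goal plus communication: intentional collaboration.
    return HRICategory.COLLABORATION


# Scenario from the table: rescuers and robots inspect the same area at once.
print(classify_interaction(shared_goal=True, communicates=False).value)  # HRCp
```

Real deployments are rarely this binary (a single mission can move from coexistence to collaboration as rescuers arrive), so a rule like this is better read as a labeling aid than a strict taxonomy.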

| Challenge | Description | Design Implication |
|---|---|---|
| Situational Awareness | Difficulty maintaining an accurate mental model of the robot’s environment due to limited visual feedback and sensor data | Use multi-camera views and panoramic stitching; integrate map overlays and spatial cues; provide visual or auditory alerts for out-of-view hazards |
| Cognitive Load | Working-memory overload caused by simultaneous tasks such as navigation, communication, and sensor monitoring | Layer information hierarchies (critical vs. secondary); automate routine or repetitive tasks; apply visual grouping and hierarchy to reduce search time |
| Trust and Transparency | Misalignment between perceived and actual system reliability, leading to under-trust or over-trust of automation | Incorporate explainable AI modules; display confidence levels and system rationale; provide manual override with clear feedback on its consequences |
| Attention and Individual Differences | Cognitive strain from modality switching and from diverse operator preferences for visual, auditory, or haptic cues | Support multimodal (visual, auditory, haptic) feedback; allow user customization based on role or preference; ensure redundant communication across channels |
| Stress and Fragility | Decline in cognitive and motor performance under high-pressure emergency conditions | Use large, forgiving interface elements; minimize menu depth and complex interactions; build in redundant cues (e.g., visual plus audio alerts) |
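Several design implications in this table (layered critical vs. secondary information, redundant cues across channels, and per-operator customization) can be combined in one alert-routing policy. The following is a minimal sketch under stated assumptions: the two-level `Priority` scale, the `OperatorProfile` fields, and the rule "critical alerts go to every channel, secondary ones only to the preferred channel" are all hypothetical choices for illustration, not a specification from the article.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Tuple


class Priority(IntEnum):
    # Hypothetical two-level information hierarchy.
    SECONDARY = 0
    CRITICAL = 1


@dataclass
class Alert:
    message: str
    priority: Priority


@dataclass
class OperatorProfile:
    # Hypothetical per-operator setting: channel used for non-critical information.
    preferred_channel: str = "visual"
    available_channels: Tuple[str, ...] = ("visual", "auditory", "haptic")


def route_alert(alert: Alert, profile: OperatorProfile) -> List[str]:
    """Return the output channels on which an alert is presented."""
    if alert.priority is Priority.CRITICAL:
        # Redundant cues: critical alerts go out on every available channel.
        return list(profile.available_channels)
    if profile.preferred_channel in profile.available_channels:
        # Secondary information respects the operator's customization.
        return [profile.preferred_channel]
    # Fall back to the first available channel if the preferred one is absent.
    return [profile.available_channels[0]]


def order_for_display(alerts: List[Alert]) -> List[Alert]:
    """Critical alerts first; sorted() is stable, so arrival order is kept within a tier."""
    return sorted(alerts, key=lambda a: a.priority, reverse=True)


profile = OperatorProfile(preferred_channel="auditory")
print(route_alert(Alert("CH4 above alarm threshold", Priority.CRITICAL), profile))
# ['visual', 'auditory', 'haptic']
print(route_alert(Alert("Battery at 60%", Priority.SECONDARY), profile))
# ['auditory']
```

Sending only critical alerts redundantly keeps secondary telemetry from competing for attention on every channel, which is the trade-off the Cognitive Load and Stress rows point toward; where the boundary between the two tiers lies would have to come from mine-specific hazard thresholds.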

| Interface Type | Key Features | Advantages | Limitations | Mining SAR Suitability |
|---|---|---|---|---|
| Graphical User Interface (GUI) | 2D/3D displays with video, maps, and telemetry; standard input devices | Familiar and easy to implement; supports multiple data streams | High visual load; requires fine motor control; vulnerable to clutter | Widely used; needs optimization for low-light and noisy data environments |
| Multimodal Interface | Combines visual, auditory, and haptic feedback | Distributes cognitive load across modalities; improves redundancy and accessibility | Risk of sensory conflict; requires synchronization and user customization | Highly suitable if well calibrated; reduces modality overload |
| Haptic/Tactile Interface | Physical cues such as vibration or resistance to simulate contact or force | Enhances feedback in low-visibility conditions; supports intuitive sensing | Limited precision; requires calibration; may be difficult to use with PPE or remotely | Useful in niche contexts or advanced systems; not yet widely deployed |
| Immersive Interface (AR/VR) | Simulates the environment using 3D visualization through headsets or projections | Enhances spatial awareness and planning; ideal for training or mission rehearsal | Susceptible to motion sickness and fatigue; hardware and reliability constraints in field use | Promising for planning and training; less practical for real-time control in rescue scenarios |
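The multimodal row credits such interfaces with distributing cognitive load across modalities. One simple way to operationalize that idea, loosely inspired by the resource-separation argument of multiple resource theory, is to place each new cue on the least-loaded modality that can still absorb it. The sketch below is a greedy heuristic under stated assumptions: the normalized per-modality `demand` values, the `cue_cost` estimate, and the capacity of 1.0 are all hypothetical, and the function is not a validated workload model.

```python
from typing import Dict, Optional


def allocate_cue(demand: Dict[str, float], cue_cost: float,
                 capacity: float = 1.0) -> Optional[str]:
    """Greedy, hypothetical channel allocation for a new interface cue.

    demand:   current normalized load per modality (0 = idle, 1 = saturated).
    cue_cost: estimated extra load the cue would add (an assumed value).
    Returns the least-loaded modality that stays within capacity, or None.
    """
    feasible = [ch for ch, load in demand.items() if load + cue_cost <= capacity]
    if not feasible:
        return None  # every modality would overload: defer or suppress the cue
    # min() returns the first minimum, so ties follow the dict's insertion order.
    chosen = min(feasible, key=lambda ch: demand[ch])
    demand[chosen] += cue_cost  # book the load so later cues see it
    return chosen


# Visual channel nearly saturated by video feeds and maps (assumed values).
demand = {"visual": 0.9, "auditory": 0.25, "haptic": 0.4}
print(allocate_cue(demand, 0.25))  # auditory
print(allocate_cue(demand, 0.25))  # haptic
```

Note that the sketch ignores the sensory-conflict and synchronization limitations listed in the same row; a fuller design would also check that a cue's content suits the chosen modality (spatial warnings, for example, map more naturally to haptic or visual channels than to speech).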

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bakzadeh, R.; Joao, K.M.; Androulakis, V.; Khaniani, H.; Shao, S.; Hassanalian, M.; Roghanchi, P. Enhancing Emergency Response: The Critical Role of Interface Design in Mining Emergency Robots. Robotics 2025, 14, 148. https://doi.org/10.3390/robotics14110148
