Automated On-Tree Detection and Size Estimation of Pomegranates by a Farmer Robot

Abstract

1. Introduction

1.1. Related Work

1.2. Aim of the Study

- A novel pomegranate fruit segmentation and modeling approach that combines 2D and 3D information acquired by an Intel RealSense D435 camera. Two-dimensional segmentation relies on multi-stage transfer learning and semi-supervised image annotation, which relieve the burden of manual labeling. Three-dimensional information is directly available from the sensing device, thus avoiding the need for calibration targets. These characteristics make the overall system viable for real-world implementation;

- An automated fruit sizing method based on an elliptical model of the pomegranate that measures polar and equatorial diameters for precise estimation of fruit shape in 3D space. Polar and equatorial diameters are fundamental morphological parameters for assessing the ripening stage and quality of pomegranates, as well as for estimating fruit mass [34];

- An integrated robotic platform for automated in-field data gathering. A farmer robot is essential to automate image acquisition and to ensure continuous monitoring of crop growth directly in the field.
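Combining the 2D segmentation with the depth channel amounts to back-projecting the fruit pixels into 3D. The following is a minimal sketch, assuming an ideal pinhole model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (in pixels) and a depth map in metres that is already aligned to the color image; lens distortion is ignored, and the function name `deproject_mask` is illustrative rather than taken from the paper. In practice, librealsense provides its own routine, `rs2_deproject_pixel_to_point`, which can also apply the camera's distortion model.

```python
import numpy as np


def deproject_mask(mask, depth_m, fx, fy, cx, cy):
    """Back-project the pixels of a binary fruit mask into 3D camera coordinates.

    Assumes an ideal pinhole camera (no lens distortion) and a depth map in
    metres that is pixel-aligned with the color image used for segmentation.
    """
    mask = np.asarray(mask, dtype=bool)
    depth_m = np.asarray(depth_m, dtype=float)
    # Keep only fruit pixels with a valid (non-zero) depth reading.
    v, u = np.nonzero(mask & (depth_m > 0))  # v = row index, u = column index
    z = depth_m[v, u]
    # Pinhole back-projection: X = (u - cx) Z / fx, Y = (v - cy) Z / fy.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack((x, y, z))  # N x 3 points in metres


# Toy example: two fruit pixels, one of which has no depth reading.
mask = np.zeros((4, 5), dtype=bool)
mask[1, 1] = True
mask[2, 3] = True
depth = np.full((4, 5), 2.0)
depth[1, 1] = 0.0  # missing depth, e.g. a stereo matching failure
points = deproject_mask(mask, depth, fx=100.0, fy=100.0, cx=3.0, cy=2.0)
print(points)  # a single point at the principal point, 2 m away
```

Discarding zero-depth pixels matters because RealSense depth frames report 0 wherever no depth could be computed; keeping them would place spurious points at the camera origin.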

1.3. Outline of the Paper

2. Materials and Methods

2.1. Robotic Platform

2.2. Datasets

2.3. Multi-Stage Image Segmentation

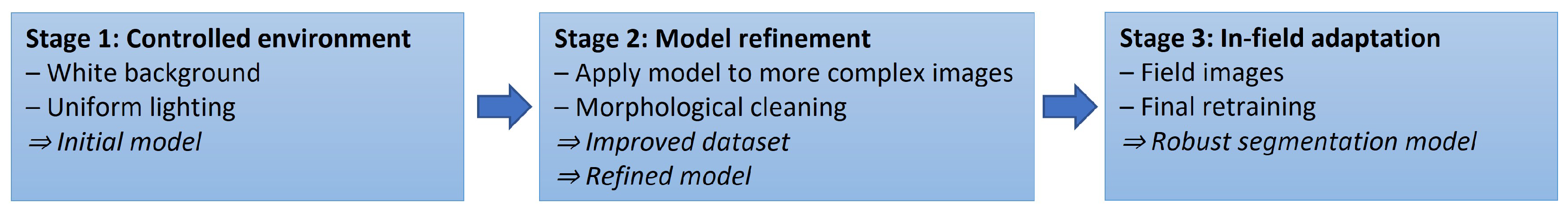

- Stage 1—Controlled environment: The initial training set consists of images of picked fruits placed against a white monochrome background, which allows straightforward mask extraction via color thresholding (see Figure 4). In this phase, lighting conditions are carefully controlled, providing diffuse and uniform illumination. This minimizes shadows and prevents dark regions from blending into the background, ensuring well-defined fruit contours.

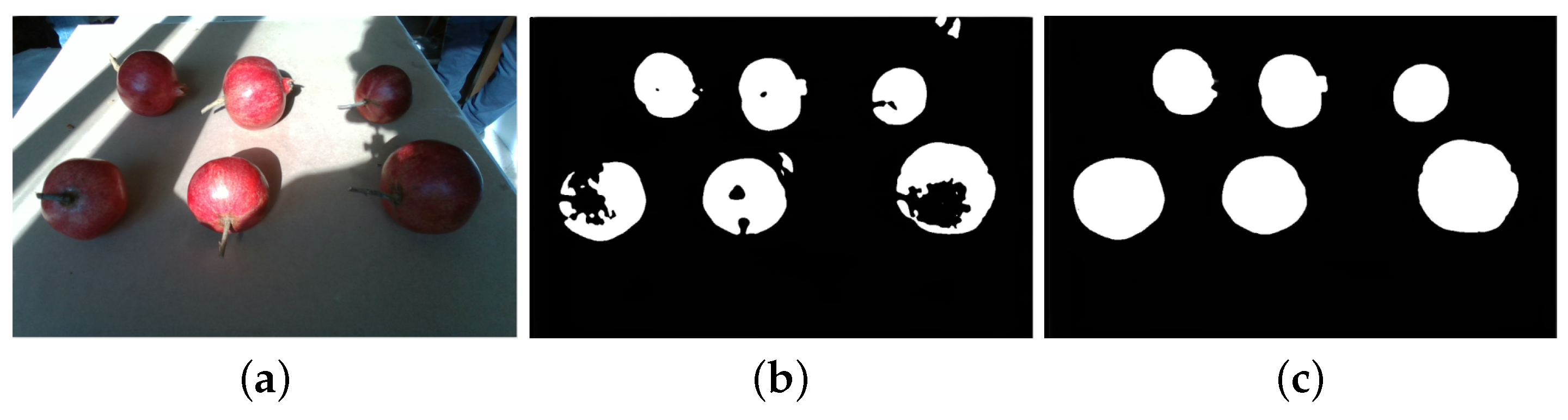

- Stage 2—Model refinement: The network trained under controlled conditions is then applied to images of pomegranates acquired in the same environment but under intense, non-uniform lighting, which produces sharp shadows and strong contrasts. Although color thresholding fails in these cases, the initial model still produces coarse labels that capture the fruit’s shape. These labels are refined using morphological operations and merged with the original dataset before the model is retrained (see Figure 5).

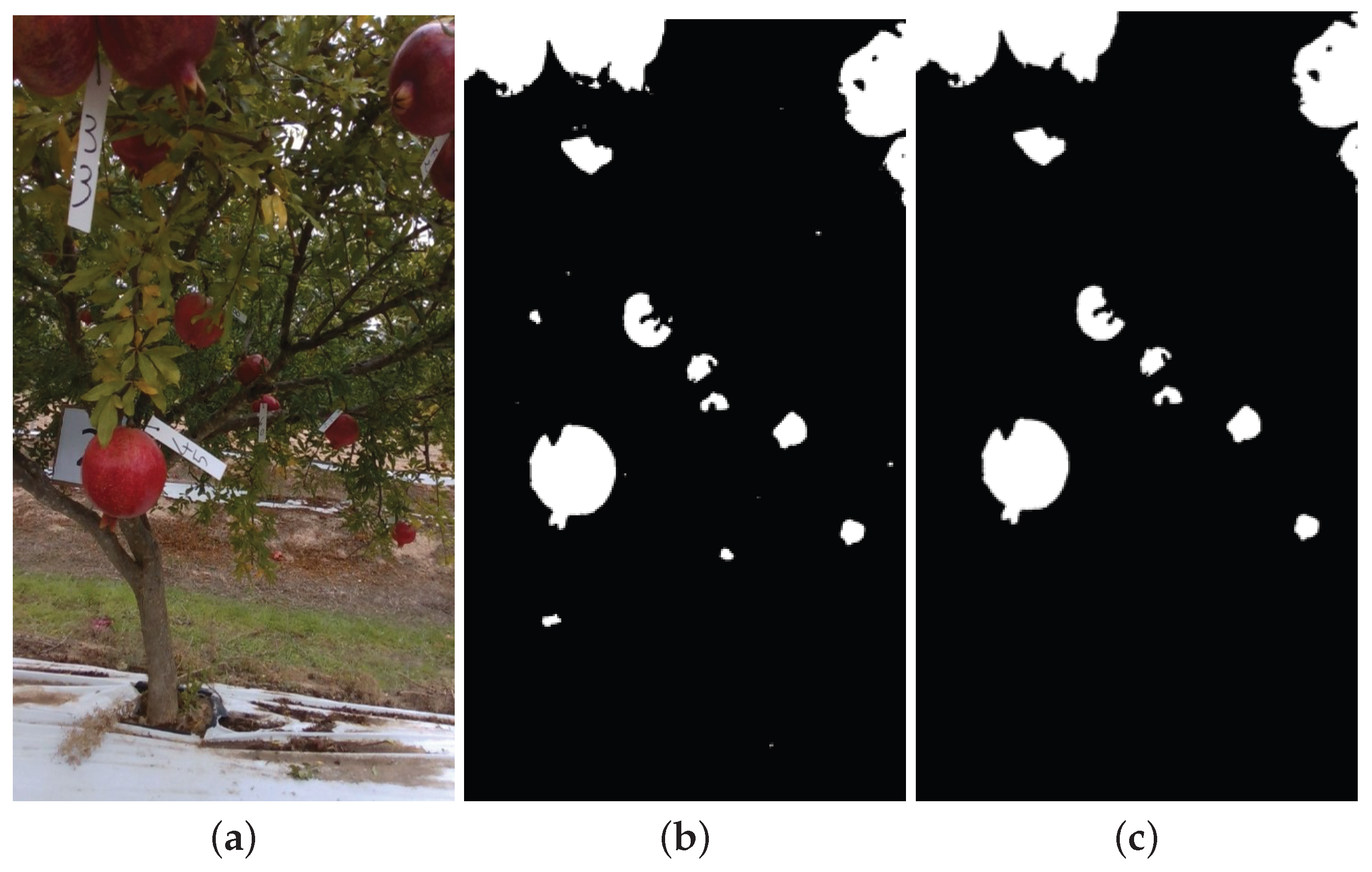

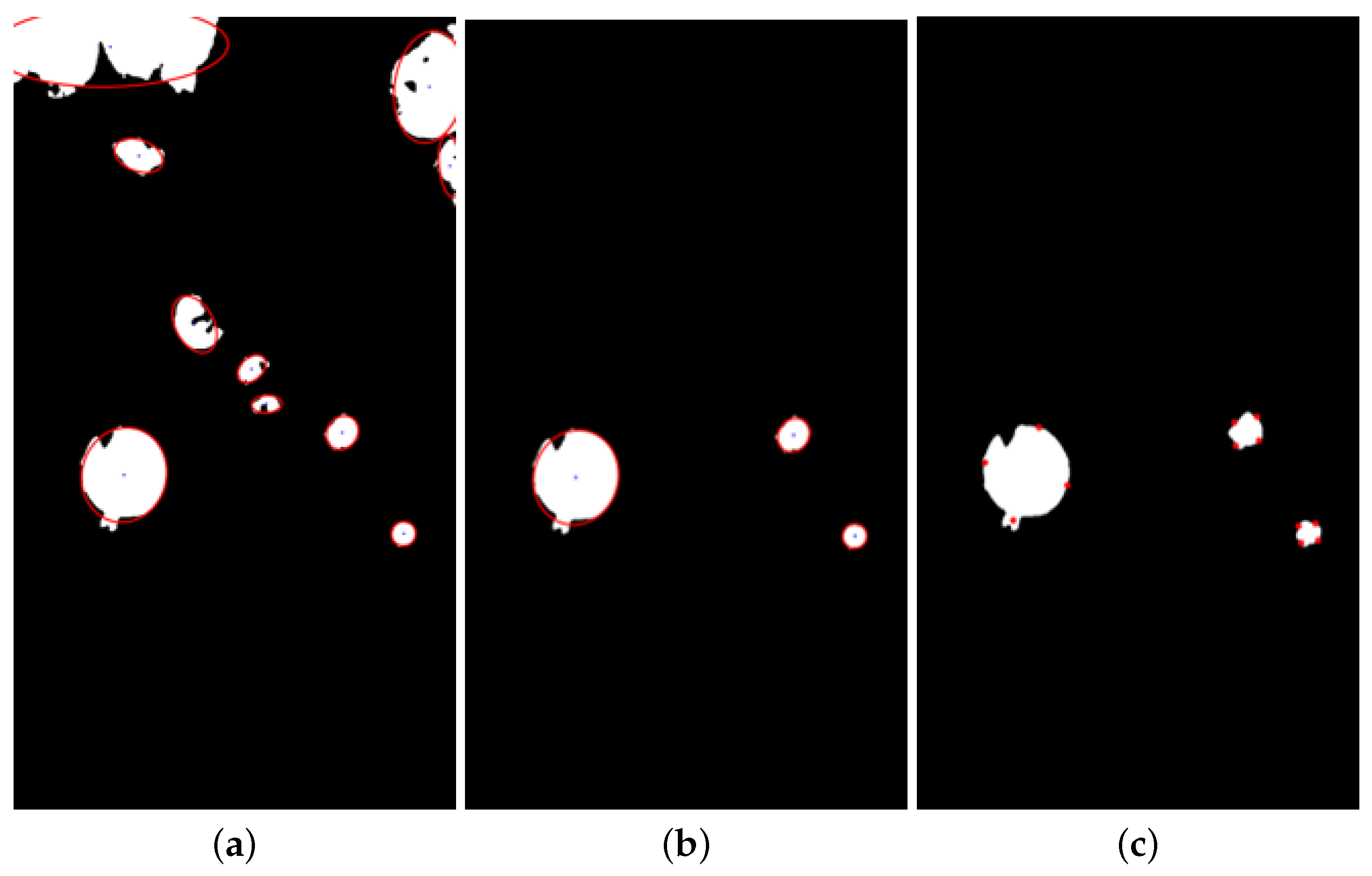

- Stage 3—In-field adaptation: Finally, the same procedure is repeated using a limited number of in-field images, enabling the model to adapt to real-world conditions. Segmentation results from the final network are presented in Figure 6 for a representative test case. Specifically, the original image is shown in Figure 6a, while the semantic segmentation output is displayed in Figure 6b, where cyan pixels indicate the background and blue pixels denote the fruits.
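The label-generation side of the three stages above can be sketched with two helpers: a Stage 1 color threshold that separates fruit from a bright, unsaturated (white) background, and a morphological opening followed by a closing that cleans the coarse masks predicted by the network in Stages 2 and 3. This is a minimal NumPy-only sketch under stated assumptions: the thresholds `sat_thr`, `val_thr` and `thr`, the 3x3 structuring element, and the number of iterations are hypothetical choices, not values reported in the paper, and network training and inference are omitted (`prob` stands for any per-pixel fruit probability map).

```python
import numpy as np


def threshold_white_background(rgb, sat_thr=0.25, val_thr=0.85):
    """Stage 1: label as fruit every pixel that is not bright, unsaturated background.

    Shadows cast on the white background are dark and unsaturated, so they
    would be wrongly labeled as fruit; hence the need for diffuse lighting.
    """
    rgb = np.asarray(rgb, dtype=float) / 255.0
    value = rgb.max(axis=-1)
    saturation = (value - rgb.min(axis=-1)) / np.maximum(value, 1e-12)
    background = (saturation < sat_thr) & (value > val_thr)
    return ~background


def _neighbourhoods(mask, pad_value):
    """Stack the 9 shifted copies of `mask` covered by a 3x3 structuring element."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=pad_value)
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def dilate(mask):
    return _neighbourhoods(mask, False).any(axis=0)


def erode(mask):
    # Padding with True keeps fruits touching the image border from being eroded away.
    return _neighbourhoods(mask, True).all(axis=0)


def refine_coarse_label(prob, thr=0.5, iterations=1):
    """Stages 2-3: binarize a predicted probability map, then open and close it."""
    mask = np.asarray(prob) >= thr
    for _ in range(iterations):  # opening, part 1: erosion removes isolated specks
        mask = erode(mask)
    for _ in range(iterations):  # opening, part 2: dilation restores fruit size
        mask = dilate(mask)
    for _ in range(iterations):  # closing, part 1: dilation bridges small holes
        mask = dilate(mask)
    for _ in range(iterations):  # closing, part 2: erosion restores fruit size
        mask = erode(mask)
    return mask
```

With the 3x3 element and `iterations=1`, an isolated false-positive pixel is removed by the opening and a one-pixel hole inside a fruit is filled by the closing. The refined masks would then be merged with the existing training set before retraining, as described for Stage 2.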

2.4. Fruit Clustering and Modeling

2.5. Fruit Sizing

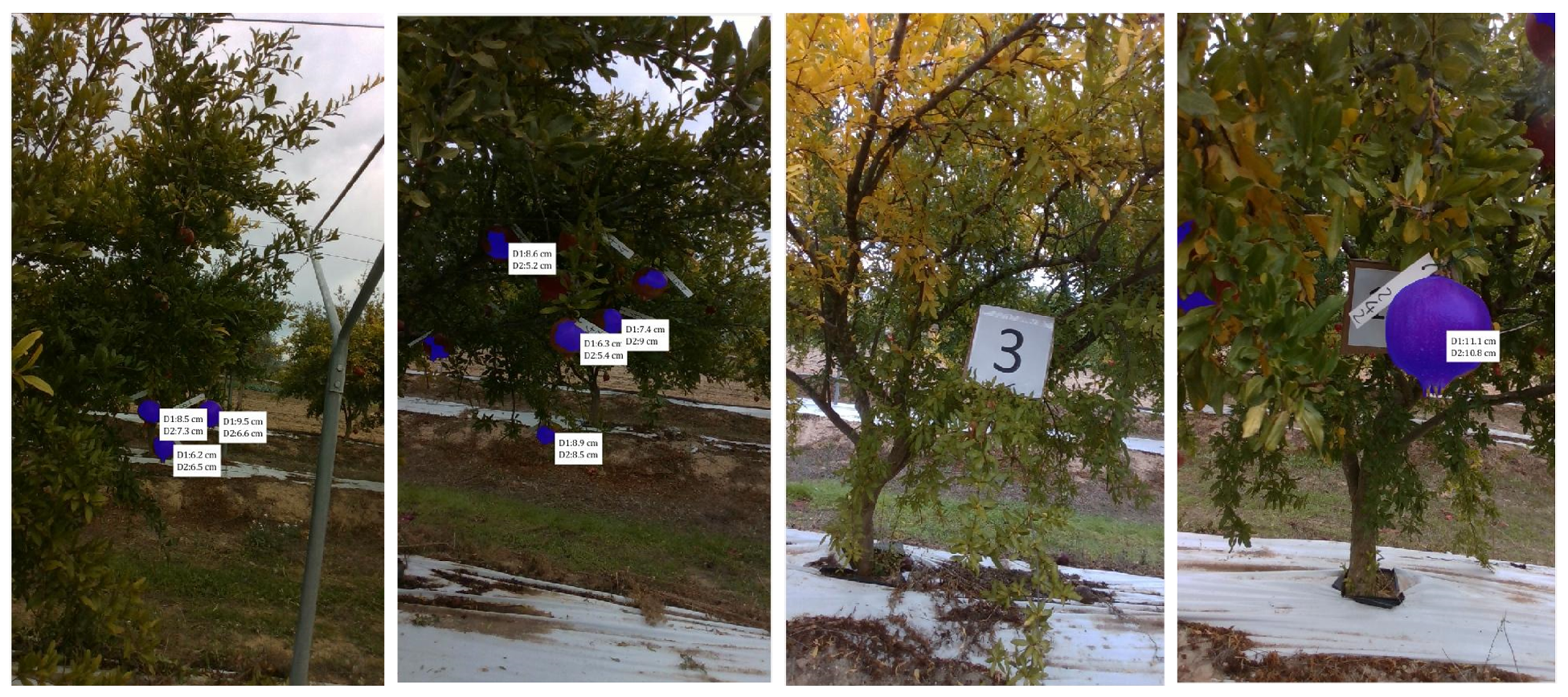

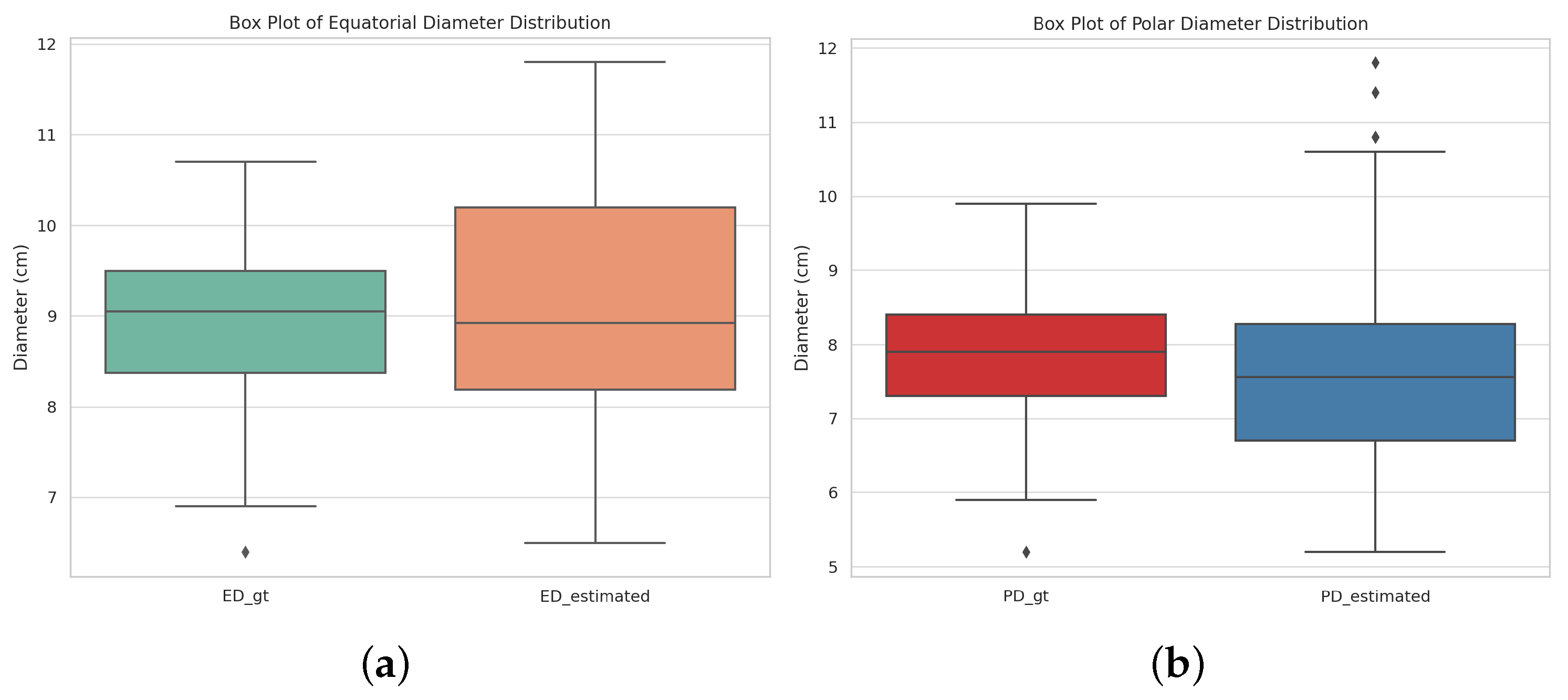
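The elliptical sizing model mentioned among the aims (Section 1.2) can be illustrated with a simple, hypothetical procedure: fit an ellipse to a single fruit's mask through its second-order image moments, then convert the axis lengths from pixels to metres with the pinhole relation D = d_px · Z / f_x, where Z is the median depth of the fruit pixels. This is a sketch under stated assumptions (one unoccluded fruit per mask, square pixels, depth in metres aligned with the mask), not the authors' implementation; the function names are illustrative, and which axis corresponds to the polar or the equatorial diameter is not determined by the moments alone.

```python
import numpy as np


def ellipse_axes_px(mask):
    """Major and minor axis lengths (pixels) of the moment-equivalent ellipse of a mask.

    For a uniformly filled ellipse with semi-axes a >= b, the eigenvalues of the
    pixel-coordinate covariance are a**2 / 4 and b**2 / 4, so each full axis
    length is 4 * sqrt(eigenvalue).
    """
    v, u = np.nonzero(np.asarray(mask, dtype=bool))
    coords = np.column_stack((u, v)).astype(float)
    cov = np.cov(coords, rowvar=False, bias=True)
    eigvals = np.clip(np.linalg.eigvalsh(cov), 0.0, None)  # ascending order
    minor, major = 4.0 * np.sqrt(eigvals)
    return major, minor


def fruit_axes_m(mask, depth_m, fx):
    """Convert the ellipse axes to metres using the median depth of the fruit pixels."""
    mask = np.asarray(mask, dtype=bool)
    depth_m = np.asarray(depth_m, dtype=float)
    valid = mask & (depth_m > 0)
    if not valid.any():
        raise ValueError("no valid depth inside the fruit mask")
    z = float(np.median(depth_m[valid]))
    major_px, minor_px = ellipse_axes_px(mask)
    # Pinhole scaling: metric length = pixel length * depth / focal length (pixels).
    return major_px * z / fx, minor_px * z / fx


# Synthetic fruit: a 60 x 30 px ellipse seen at 0.5 m with fx = 600 px.
vv, uu = np.mgrid[0:100, 0:100]
mask = ((uu - 50) / 30.0) ** 2 + ((vv - 50) / 15.0) ** 2 <= 1.0
depth = np.full(mask.shape, 0.5)
major_m, minor_m = fruit_axes_m(mask, depth, fx=600.0)
print(f"major = {100 * major_m:.1f} cm, minor = {100 * minor_m:.1f} cm")  # close to 5.0 and 2.5 cm
```

Because the depth camera sees the fruit's front surface, Z is slightly smaller than the distance to the fruit's silhouette, so this scaling tends to underestimate size a little; partial occlusions would additionally bias the moments.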

3. Results

4. Discussion

- Segmentation issues: Fruits may be missed or incorrectly segmented when they are partially occluded or when non-fruit regions are mistaken for fruit, situations that are common in real-world scenes of high complexity.

- Uncontrolled acquisition conditions: All tests are conducted in fully natural settings, without control over lighting and without removing major occlusions (e.g., through defoliation), which introduces variability in the data.

- Acquisition platform limitations: Data are collected with a consumer-grade depth sensor costing a few hundred euros mounted on a mobile robotic platform, which occasionally leads to motion artifacts and reduced image quality.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Montefusco, A.; Durante, M.; Migoni, D.; De Caroli, M.; Ilahy, R.; Pék, Z.; Helyes, L.; Fanizzi, F.P.; Mita, G.; Piro, G.; et al. Analysis of the Phytochemical Composition of Pomegranate Fruit Juices, Peels and Kernels: A Comparative Study on Four Cultivars Grown in Southern Italy. Plants 2021, 10, 2521.

- Available online: https://esploradati.istat.it/databrowser/#/it/dw/categories/IT1,Z1000AGR,1.0/AGR_CRP/DCSP_COLTIVAZIONI/IT1,101_1015_DF_DCSP_COLTIVAZIONI_1,1.0 (accessed on 9 September 2025).

- Miranda, J.C.; Gené-Mola, J.; Zude-Sasse, M.; Tsoulias, N.; Escolà, A.; Arnó, J.; Rosell-Polo, J.R.; Sanz-Cortiella, R.; Martínez-Casasnovas, J.A.; Gregorio, E. Fruit sizing using AI: A review of methods and challenges. Postharvest Biol. Technol. 2023, 206, 112587.

- Kaleem, A.; Hussain, S.; Aqib, M.; Cheema, M.J.M.; Saleem, S.R.; Farooq, U. Development Challenges of Fruit-Harvesting Robotic Arms: A Critical Review. AgriEngineering 2023, 5, 2216–2237.

- Massaglia, S.; Borra, D.; Peano, C.; Sottile, F.; Merlino, V.M. Consumer Preference Heterogeneity Evaluation in Fruit and Vegetable Purchasing Decisions Using the Best–Worst Approach. Foods 2019, 8, 266.

- Neupane, C.; Pereira, M.; Koirala, A.; Walsh, K.B. Fruit Sizing in Orchard: A Review from Caliper to Machine Vision with Deep Learning. Sensors 2023, 23, 3868.

- Walsh, B.B. Advances in Agricultural Machinery and Technologies, 1st ed.; Chapter Fruit and Vegetable Packhouse Technologies for Assessing Fruit Quantity and Quality; CRC Press: Boca Raton, FL, USA, 2018.

- Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.

- Bargoti, S.; Underwood, J. Deep fruit detection in orchards. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3626–3633.

- Xiao, F.; Wang, H.; Xu, Y.; Zhang, R. Fruit Detection and Recognition Based on Deep Learning for Automatic Harvesting: An Overview and Review. Agronomy 2023, 13, 1625.

- Milella, A.; Marani, R.; Petitti, A.; Reina, G. In-field high throughput grapevine phenotyping with a consumer-grade depth camera. Comput. Electron. Agric. 2019, 156, 293–306.

- Santos, T.T.; de Souza, L.L.; dos Santos, A.A.; Avila, S. Grape detection, segmentation, and tracking using deep neural networks and three-dimensional association. Comput. Electron. Agric. 2020, 170, 105247.

- Mane, S.; Bartakke, P.; Bastewad, T. DetSSeg: A Selective On-Field Pomegranate Segmentation Approach. In Proceedings of the 2023 IEEE International Conference on Computer Vision and Machine Intelligence (CVMI), Gwalior, India, 10–11 December 2023; pp. 1–6.

- Wang, J.; Liu, M.; Du, Y.; Zhao, M.; Jia, H.; Guo, Z.; Su, Y.; Lu, D.; Liu, Y. PG-YOLO: An efficient detection algorithm for pomegranate before fruit thinning. Eng. Appl. Artif. Intell. 2024, 134, 108700.

- Zhao, J.; Du, C.; Li, Y.; Mudhsh, M.; Guo, D.; Fan, Y.; Wu, X.; Wang, X.; Almodfer, R. YOLO-Granada: A lightweight attentioned Yolo for pomegranates fruit detection. Sci. Rep. 2024, 14, 16848.

- Zhang, C.; Zhang, K.; Ge, L.; Zou, K.; Wang, S.; Zhang, J.; Li, W. A method for organs classification and fruit counting on pomegranate trees based on multi-features fusion and support vector machine by 3D point cloud. Sci. Hortic. 2021, 278, 109791.

- Stajnko, D.; Rakun, J.; Blanke, M.M. Modelling apple fruit yield using image analysis for fruit colour, shape and texture. Eur. J. Hortic. Sci. 2009, 74, 260–267.

- Stajnko, D.; Lakota, M.; Hočevar, M. Estimation of number and diameter of apple fruits in an orchard during the growing season by thermal imaging. Comput. Electron. Agric. 2004, 42, 31–42.

- Apolo-Apolo, O.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

- Lu, S.; Chen, W.; Zhang, X.; Karkee, M. Canopy-attention-YOLOv4-based immature/mature apple fruit detection on dense-foliage tree architectures for early crop load estimation. Comput. Electron. Agric. 2022, 193, 106696.

- Giménez-Gallego, J.; Martínez-del Rincon, J.; Blaya-Ros, P.J.; Navarro-Hellín, H.; Navarro, P.J.; Torres-Sánchez, R. Fruit Monitoring and Harvest Date Prediction Using On-Tree Automatic Image Tracking. IEEE Trans. Agrifood Electron. 2025, 3, 56–68.

- Méndez, V.; Pérez-Romero, A.; Sola-Guirado, R.; Miranda-Fuentes, A.; Manzano-Agugliaro, F.; Zapata-Sierra, A.; Rodríguez-Lizana, A. In-Field Estimation of Orange Number and Size by 3D Laser Scanning. Agronomy 2019, 9, 885.

- Ferrer-Ferrer, M.; Ruiz-Hidalgo, J.; Gregorio, E.; Vilaplana, V.; Morros, J.R.; Gené-Mola, J. Simultaneous fruit detection and size estimation using multitask deep neural networks. Biosyst. Eng. 2023, 233, 63–75.

- Gené-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Escolà, A.; Gregorio, E. In-field apple size estimation using photogrammetry-derived 3D point clouds: Comparison of 4 different methods considering fruit occlusions. Comput. Electron. Agric. 2021, 188, 106343.

- Wang, Z.; Walsh, K.B.; Verma, B. On-Tree Mango Fruit Size Estimation Using RGB-D Images. Sensors 2017, 17, 2738.

- Aerobotics. Available online: https://aerobotics.com/ (accessed on 5 September 2025).

- Green Atlas. Available online: https://greenatlas.com/ (accessed on 5 September 2025).

- Tevel. Available online: https://www.tevel-tech.com/ (accessed on 5 September 2025).

- Kootstra, G.; Wang, X.; Blok, P.M.; Hemming, J.; van Henten, E. Selective Harvesting Robotics: Current Research, Trends, and Future Directions. Curr. Robot. Rep. 2021, 2, 95–104.

- Benos, L.; Moysiadis, V.; Kateris, D.; Tagarakis, A.C.; Busato, P.; Pearson, S.; Bochtis, D. Human–Robot Interaction in Agriculture: A Systematic Review. Sensors 2023, 23, 6776.

- Benos, L.; Bechar, A.; Bochtis, D. Safety and ergonomics in human-robot interactive agricultural operations. Biosyst. Eng. 2020, 200, 55–72.

- Devanna, R.P.; Milella, A.; Marani, R.; Garofalo, S.P.; Vivaldi, G.A.; Pascuzzi, S.; Galati, R.; Reina, G. In-Field Automatic Identification of Pomegranates Using a Farmer Robot. Sensors 2022, 22, 5821.

- Yu, T.; Hu, C.; Xie, Y.; Liu, J.; Li, P. Mature pomegranate fruit detection and location combining improved F-PointNet with 3D point cloud clustering in orchard. Comput. Electron. Agric. 2022, 200, 107233.

- Khoshnam, F.; Tabatabaeefar, A.; Varnamkhasti, M.G.; Borghei, A. Mass modeling of pomegranate (Punica granatum L.) fruit with some physical characteristics. Sci. Hortic. 2007, 114, 21–26.

- Reina, G.; Mantriota, G. On the Climbing Ability of Passively Suspended Tracked Robots. J. Mech. Robot. 2025, 17, 075001.

- Keselman, L.; Woodfill, J.I.; Grunnet-Jepsen, A.; Bhowmik, A. Intel(R) RealSense(TM) Stereoscopic Depth Cameras. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1267–1276.

- Haralick, R.M.; Shapiro, L.G. Computer and Robot Vision; Addison–Wesley: Reading, MA, USA, 1992; Volume 1.

- Milella, A.; Rilling, S.; Rana, A.; Galati, R.; Petitti, A.; Hoffmann, M.; Stanly, J.L.; Reina, G. Robot-as-a-Service as a New Paradigm in Precision Farming. IEEE Access 2024, 12, 47942–47949.

| Diameter | MAE [cm] | St. Dev. [cm] | RMSE [cm] | MAPE [%] |
|---|---|---|---|---|
| Equatorial | 1.10 | 0.78 | 1.35 | 12.4 |
| Polar | 1.05 | 0.79 | 1.31 | 13.3 |
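The error statistics in the table above can be computed from paired ground-truth and estimated diameters as in the following minimal sketch, which assumes that St. Dev. denotes the population standard deviation of the absolute errors (the section does not state the definition). Under that assumption RMSE² = MAE² + σ² holds exactly, and the tabulated values agree with it within rounding: √(1.10² + 0.78²) ≈ 1.35 cm and √(1.05² + 0.79²) ≈ 1.31 cm. The function name `sizing_errors` and the toy numbers are illustrative only.

```python
import numpy as np


def sizing_errors(reference_cm, estimated_cm):
    """Error statistics between reference and estimated diameters (both in cm)."""
    ref = np.asarray(reference_cm, dtype=float)
    est = np.asarray(estimated_cm, dtype=float)
    abs_err = np.abs(est - ref)
    return {
        "MAE": abs_err.mean(),
        "StDev": abs_err.std(),  # population SD (ddof=0) of the absolute errors
        "RMSE": np.sqrt(np.mean((est - ref) ** 2)),
        "MAPE": 100.0 * np.mean(abs_err / ref),  # percent, relative to the reference
    }


# Toy data (not from the paper): four fruits, errors of +1, -1, +1 and +2 cm.
stats = sizing_errors([10.0, 12.0, 8.0, 9.0], [11.0, 11.0, 9.0, 11.0])
# With ddof=0, RMSE**2 == MAE**2 + StDev**2 up to floating-point error.
assert np.isclose(stats["RMSE"] ** 2, stats["MAE"] ** 2 + stats["StDev"] ** 2)
print({k: round(float(v), 3) for k, v in stats.items()})
# MAE 1.25, StDev 0.433, RMSE 1.323, MAPE 13.264
```

The identity follows from mean(|e|²) = mean(|e|)² + var(|e|), which is why RMSE is never smaller than MAE; a large gap between the two signals a few large errors rather than uniformly moderate ones.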

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Devanna, R.P.; Vicino, F.; Garofalo, S.P.; Vivaldi, G.A.; Pascuzzi, S.; Reina, G.; Milella, A. Automated On-Tree Detection and Size Estimation of Pomegranates by a Farmer Robot. Robotics 2025, 14, 131. https://doi.org/10.3390/robotics14100131
