Adaptive Path Planning for Subsurface Plume Tracing with an Autonomous Underwater Vehicle

Abstract

1. Introduction

- Illustration of the feasibility of applying adaptive path planning using the DDQN algorithm to plume tracing problems by numerical simulations.

- Analysis of the superiority of the adaptive survey approach over the conventional lawnmower approach in terms of survey efficiency for large-scale exploration.

2. Problem Formulation

3. Methods

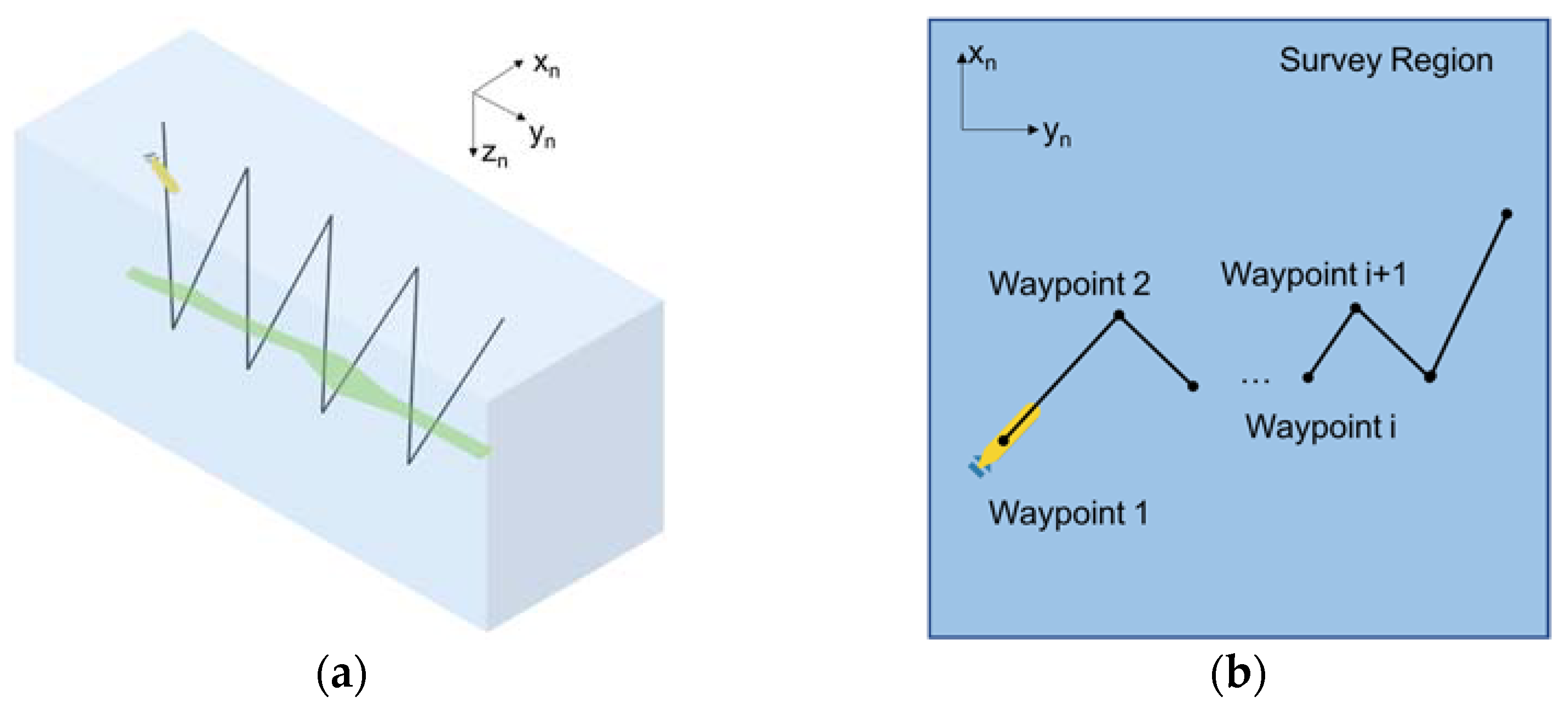

3.1. AUV Kinematic Model

3.2. Markov Decision Process

- State space S: the state space is defined as a set of data pairs that are composed of the position of the AUV and the sensor measurement of the hydrocarbon concentration at different locations.

- Action space A: the action space is the set of all possible controls that the AUV can execute to reach the next waypoint. In this work, it is assumed that the AUV travels at a constant speed during the plume tracing process and decides the next waypoint at constant time intervals. The action is therefore associated with the AUV’s heading. The action space is discretized for computational simplicity, although the actual maneuvers are continuous.

- Reward function R: the reward function describes the feedback the AUV obtains from the environment. The immediate reward, which the AUV receives as soon as it completes one action, is closely related to the measurements, and the reward function is defined as:

- State transition function P: the state transition function describes the dynamics of the environment. In accordance with the fact that in real-world applications, no prior information is available on the distribution of plume concentrations, the state transition function is unknown in this case. AUVs need to learn from raw experience through iterative interaction with the environment.

- Discount rate γ: the discount rate is a parameter whose value lies between 0 and 1. It indicates how “myopic” the AUV is, i.e., whether it is concerned only with maximizing the immediate reward or pays more attention to the overall future benefit in the process of plume tracing.
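
The components listed above can be made concrete with a minimal sketch. It is an illustration under stated assumptions, not the authors' implementation: the speed and time step are taken from the survey parameter table (0.5 m/s and 2000 s), while the number of discrete headings (`N_HEADINGS = 8`), the `State` class, and the `measure` callback are hypothetical names introduced here. The reward is left out because its definition is not given in this section.

```python
import math
from dataclasses import dataclass

SPEED_M_S = 0.5   # AUV speed, from the survey parameter table
STEP_S = 2000.0   # time step length, from the survey parameter table
N_HEADINGS = 8    # ASSUMPTION: the number of discrete headings is not stated


@dataclass(frozen=True)
class State:
    """One state: AUV position plus the concentration measured there."""
    x: float              # metres
    y: float              # metres
    concentration: float  # sensor reading at (x, y)


def heading_for(action: int) -> float:
    """Map a discrete action index to a heading angle in radians."""
    return 2.0 * math.pi * action / N_HEADINGS


def step(state: State, action: int, measure) -> State:
    """Travel one time step at constant speed along the chosen heading,
    then take a new measurement with the (hypothetical) `measure` callback."""
    psi = heading_for(action)
    distance = SPEED_M_S * STEP_S
    x = state.x + distance * math.cos(psi)
    y = state.y + distance * math.sin(psi)
    return State(x, y, measure(x, y))


def discounted_return(rewards, gamma: float) -> float:
    """Sum of gamma**k * r_k over a reward sequence.
    gamma near 0 is myopic; gamma near 1 weights future rewards heavily."""
    return sum(gamma ** k * r for k, r in enumerate(rewards))
```

With `gamma = 0` only the first reward counts, which is the fully myopic case described in the discount rate item; with `gamma = 0.5` a reward sequence of `[1, 1, 1]` is worth 1.75.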

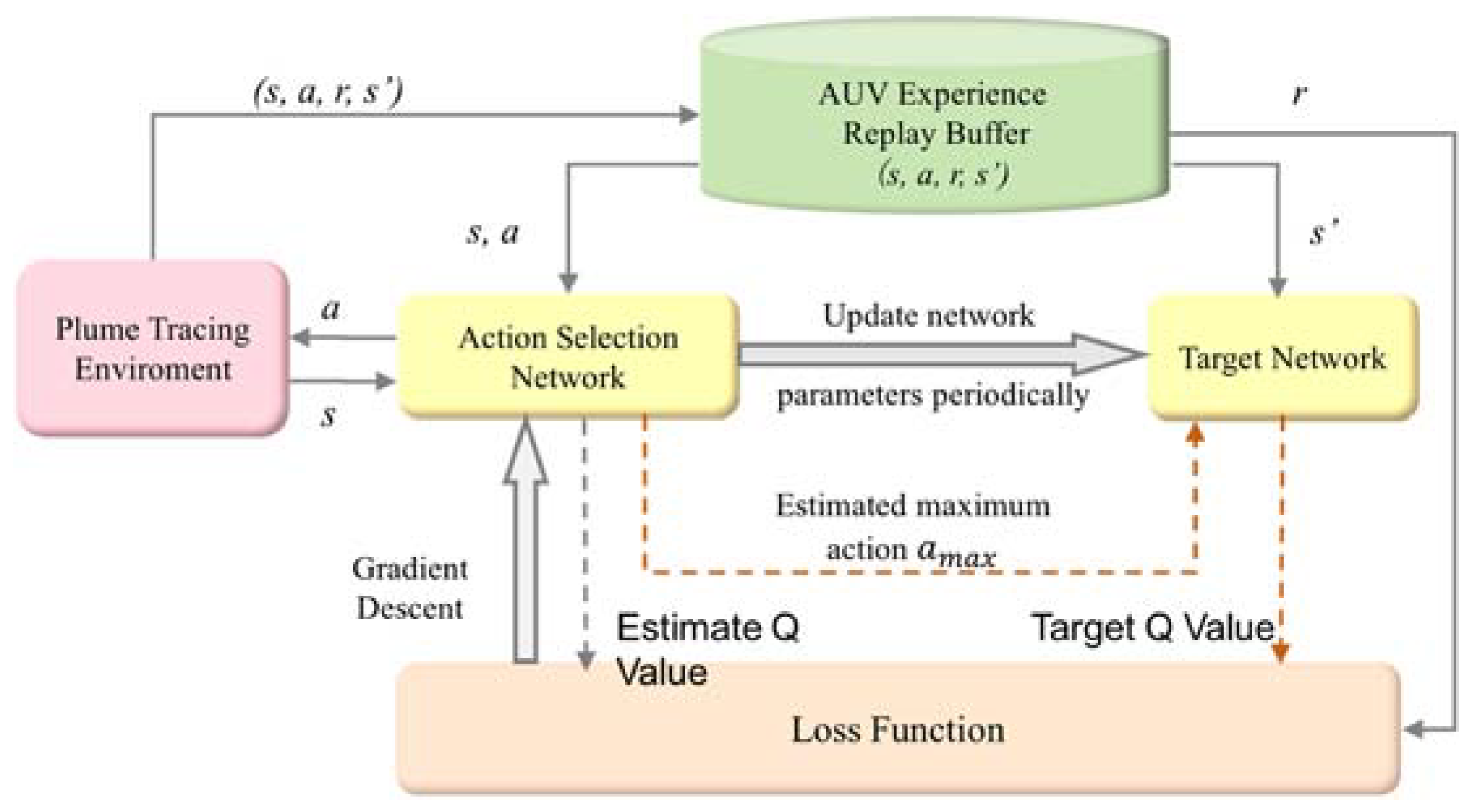

3.3. DDQN Algorithm
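
For orientation, the sketch below shows the textbook Double DQN target computation that the name DDQN refers to: the online network selects the next action and the target network evaluates it. It is a generic form, not the authors' implementation; the function name and arguments are hypothetical, and the Q-values are passed in as plain lists rather than produced by a neural network.

```python
def ddqn_target(reward, done, q_online_next, q_target_next, gamma):
    """Double DQN target for a single transition (s, a, r, s').

    q_online_next: Q-values of the online network at s', one per action
    q_target_next: Q-values of the target network at s', one per action
    """
    if done:
        # No bootstrapping from a terminal state.
        return float(reward)
    # Action selection uses the online network ...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ... while action evaluation uses the target network.
    return reward + gamma * q_target_next[best_action]
```

Decoupling selection from evaluation in this way is what distinguishes Double DQN from plain DQN, where the target network does both.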

4. Numerical Simulation and Results

4.1. Numerical Simulation Setup

4.2. Plume Models

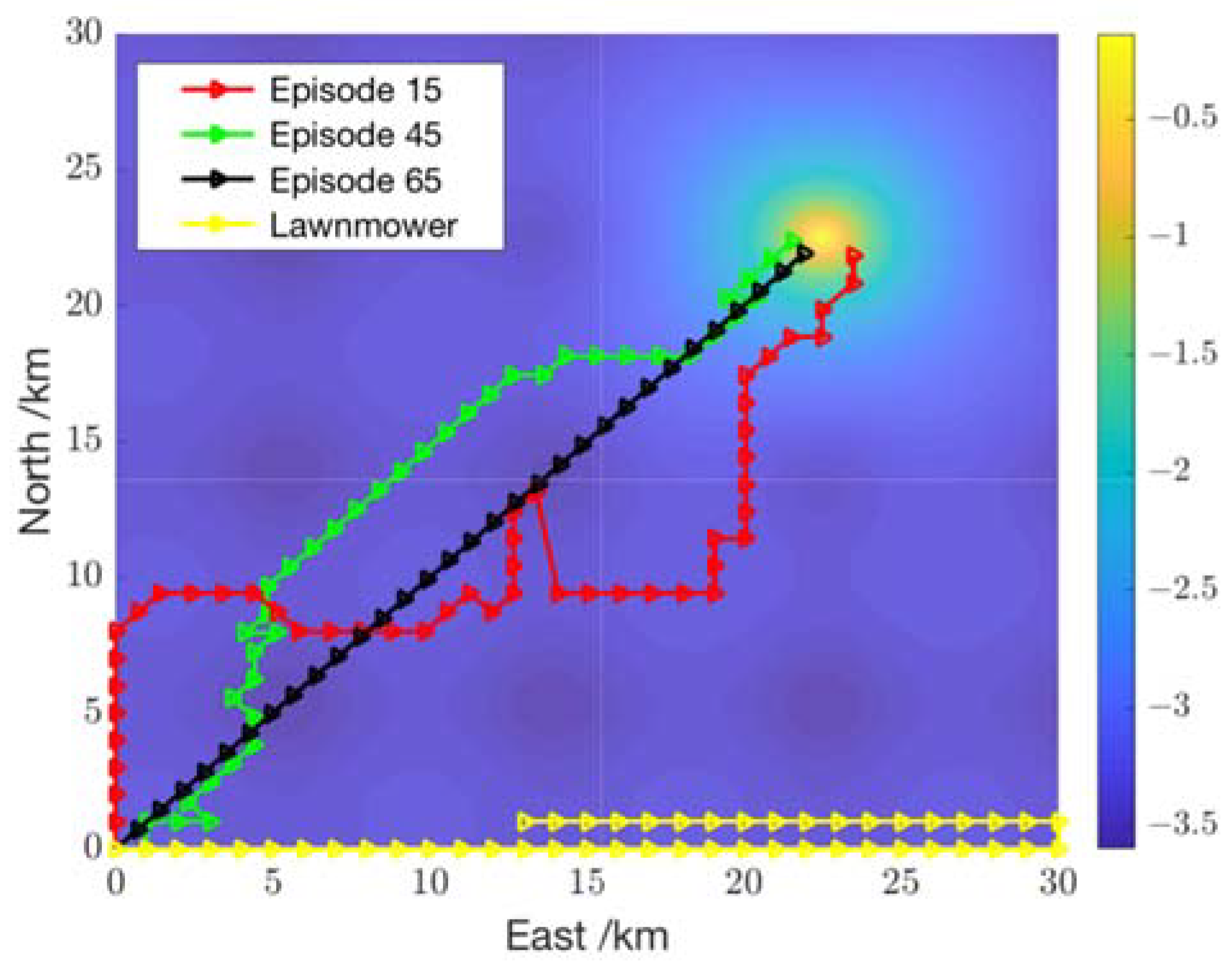

4.3. Steady Plume Tracing

4.4. Transient Plume Tracing

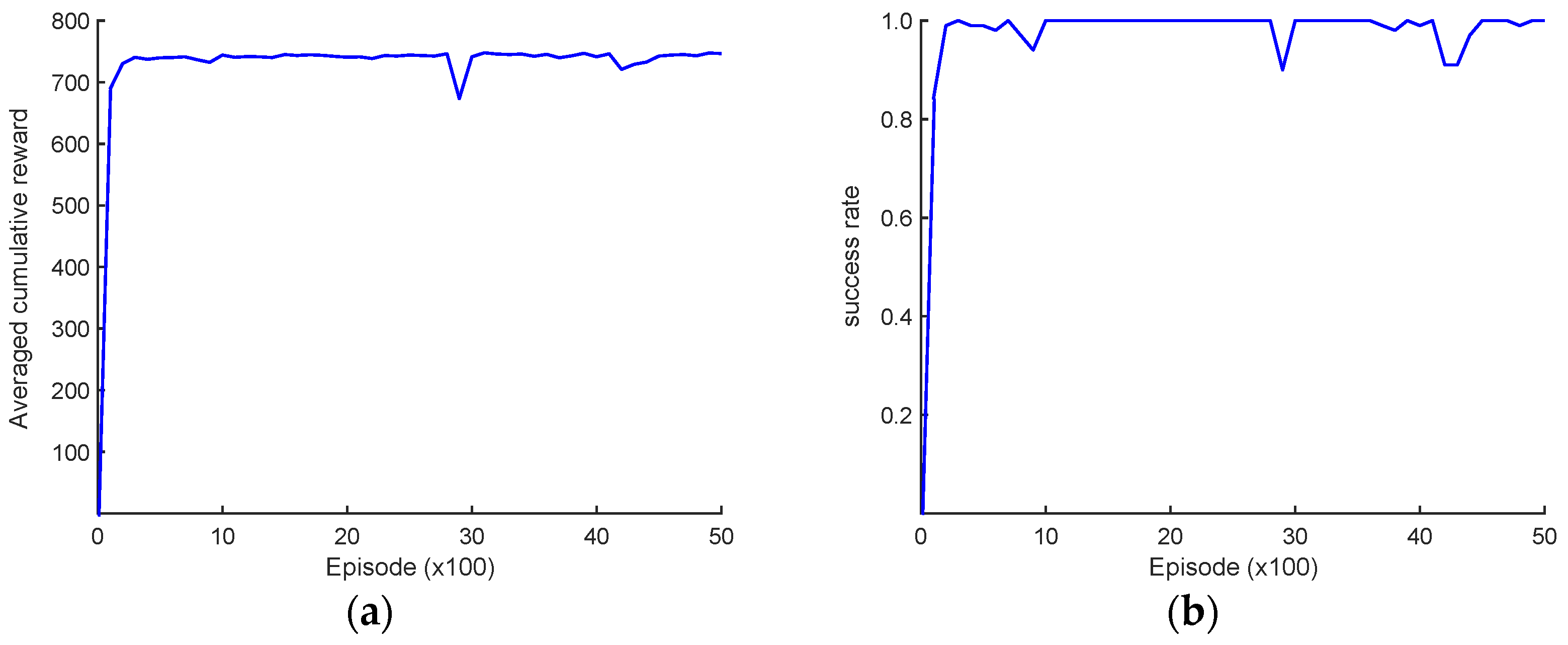

4.5. Learning Performance

4.6. Comparison with Lawnmower Approach

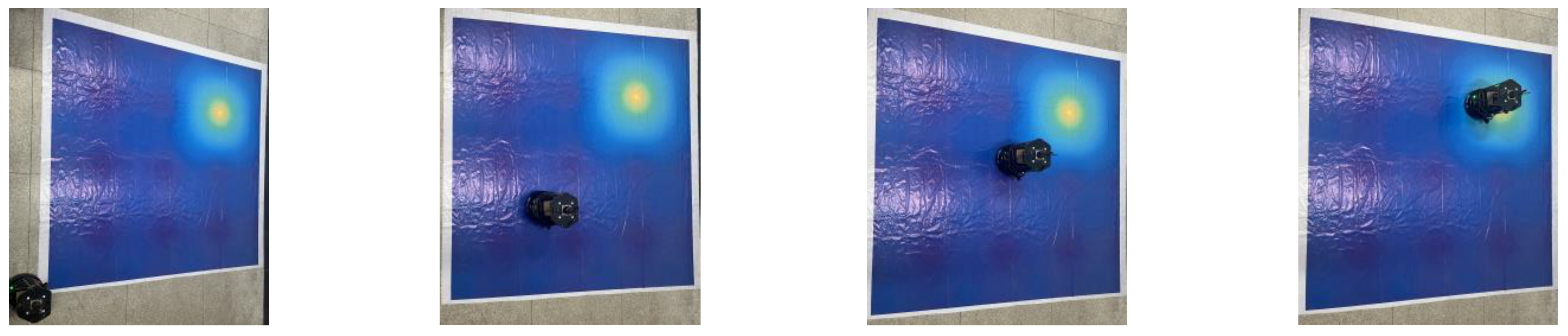

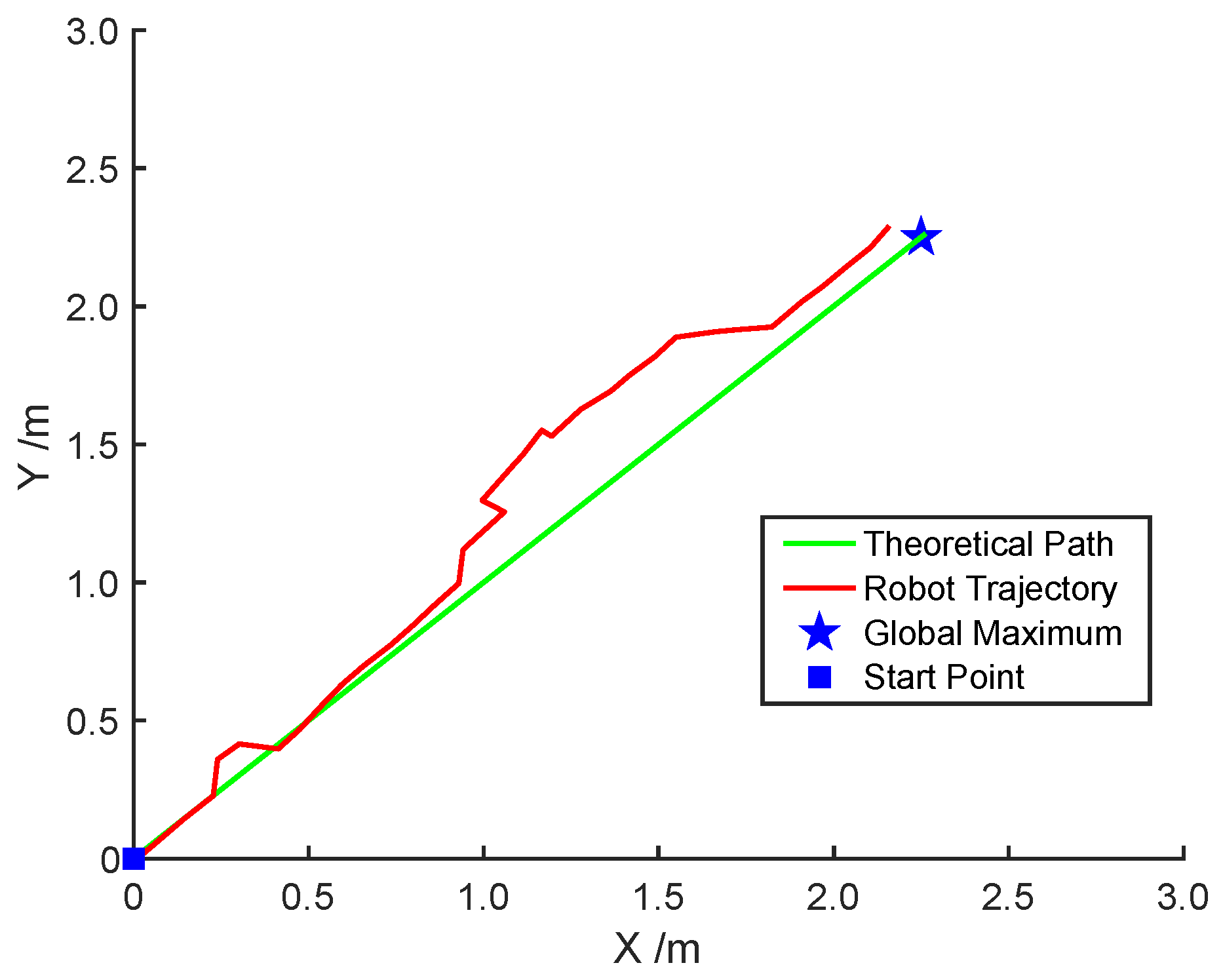

5. Experiment with AGV

6. Conclusions

7. Future Work

- Dynamics of ocean currents. Ocean currents are the major environmental factor affecting AUVs’ motion and path planning. In this work, we consider the simplified case of a uniform flow field. The dynamics of ocean currents will be incorporated into the algorithm, through more sophisticated modeling or oceanographic data, for more accurate estimation.

- Obstacle avoidance. Underwater obstacles such as subsea terrain features and man-made structures can pose navigation hazards to AUVs. Strategies will be designed for collision avoidance in the reinforcement learning framework.

- Sensor and actuation errors. Variations in water density, temperature, and salinity can affect acoustic communication and degrade the performance of navigation sensors. In this work, we model the AUV decision-making process as an MDP. The MDP model will be replaced by a Partially Observable Markov Decision Process (POMDP) to represent the influence of these uncertainties.

- AUV field trials. Field trials play an important role in testing path planning algorithms. The algorithm’s performance needs to be evaluated in underwater experiments, and the models it relies on can then be improved using the resulting measurements and data.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Value |
|---|---|
| Survey region | 30 km × 30 km |
| AUV survey endurance | 36 h |
| Total time step limit per survey | 60 |
| AUV speed | 0.5 m/s |
| Time step length | 2000 s |
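
The survey parameters above are mutually consistent, as a quick check shows. The symbols $v$ (speed), $\Delta t$ (time step length), and $N$ (step limit) are introduced here only for this illustration.

```latex
\begin{align*}
d_{\text{step}} &= v\,\Delta t = 0.5\ \text{m/s} \times 2000\ \text{s} = 1000\ \text{m} = 1\ \text{km},\\
T_{\max} &= N\,\Delta t = 60 \times 2000\ \text{s} = 1.2 \times 10^{5}\ \text{s} \approx 33.3\ \text{h} < 36\ \text{h},\\
L_{\max} &= N\,d_{\text{step}} = 60\ \text{km}.
\end{align*}
```

A full-length survey therefore fits within the stated endurance with roughly 2.7 h to spare, and the longest possible track is twice the 30 km side of the survey region.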

| Initial Sampling Strategy | Earliest Successful Episode | Number of Successful Episodes within the First 100 Attempts |
|---|---|---|
| Random | 55th | 21 |
| Uniform | 59th | 19 |
| None (w/o) | 53rd | 23 |

| Parameter | Value | Note |
|---|---|---|
| Ocean current velocity | 0.1 m/s | in the positive horizontal direction |
| Diffusion coefficient | 1 m²/s | horizontal |
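
To give a feel for what these two parameters imply, the sketch below evaluates the textbook solution of the two-dimensional advection-diffusion equation for an instantaneous point release in a uniform flow. This is an assumption made for illustration only: the plume model actually used in the simulations is not specified in this section, and `puff_concentration` and its `mass` argument are hypothetical names.

```python
import math

U = 0.1  # ocean current velocity in m/s, positive x direction (from the table)
D = 1.0  # horizontal diffusion coefficient in m^2/s (from the table)


def puff_concentration(x, y, t, mass=1.0):
    """Concentration at (x, y) in metres, t seconds after an instantaneous
    release of `mass` at the origin, for uniform flow U and isotropic
    horizontal diffusion D (2D Gaussian puff)."""
    if t <= 0:
        raise ValueError("t must be positive")
    # Squared distance from the puff centre, which drifts downstream at speed U.
    r2 = (x - U * t) ** 2 + y ** 2
    return mass / (4.0 * math.pi * D * t) * math.exp(-r2 / (4.0 * D * t))
```

Under this assumed model, after one 2000 s time step the puff centre has drifted U·t = 200 m downstream, while its standard deviation sqrt(2·D·t) is only about 63 m, so advection dominates diffusion at that time scale.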

| Metric | Steady Plume, DDQN | Steady Plume, PPO | Transient Plume, DDQN | Transient Plume, PPO |
|---|---|---|---|---|
| Success rate | 100% | 100% | 100% | 100% |
| First successful episode | ~50th | 3000th | ~20th | 1800th |
| Convergence speed | ~300 episodes | ~2.8 × 10⁴ episodes | ~400 episodes | ~3.7 × 10⁴ episodes |

| Parameter | Preset Value | Experimental Result | Qualified (Yes/No) |
|---|---|---|---|
| Number of steps | 60 | 36 | Yes |
| Distance to the goal (mm) | 100 | 94 | Yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Z.; Wang, S.; Shao, X.; Liu, F.; Bao, Z. Adaptive Path Planning for Subsurface Plume Tracing with an Autonomous Underwater Vehicle. Robotics 2024, 13, 132. https://doi.org/10.3390/robotics13090132
