Image-to-Image Translation-Based Deep Learning Application for Object Identification in Industrial Robot Systems

Abstract

1. Introduction

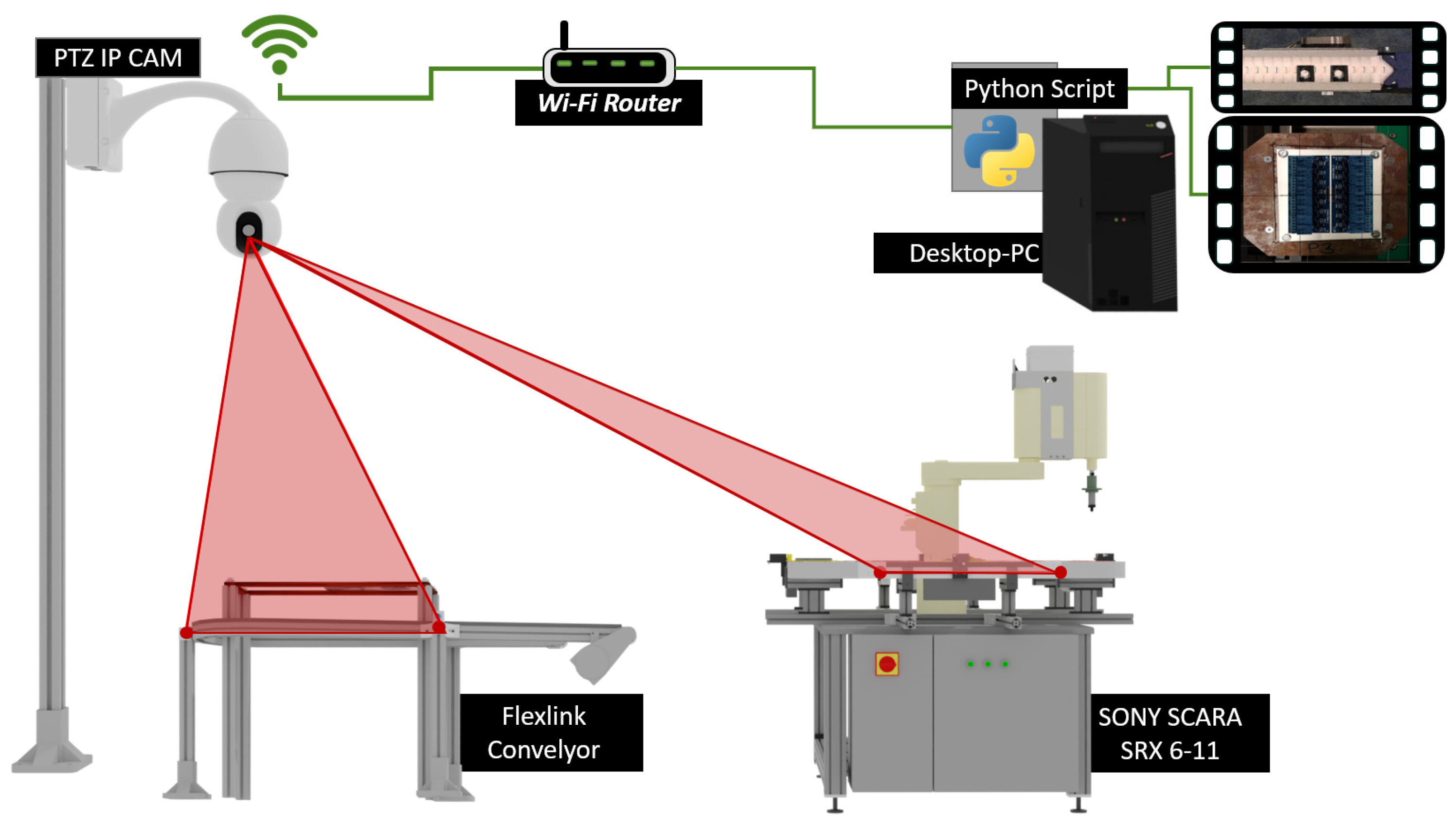

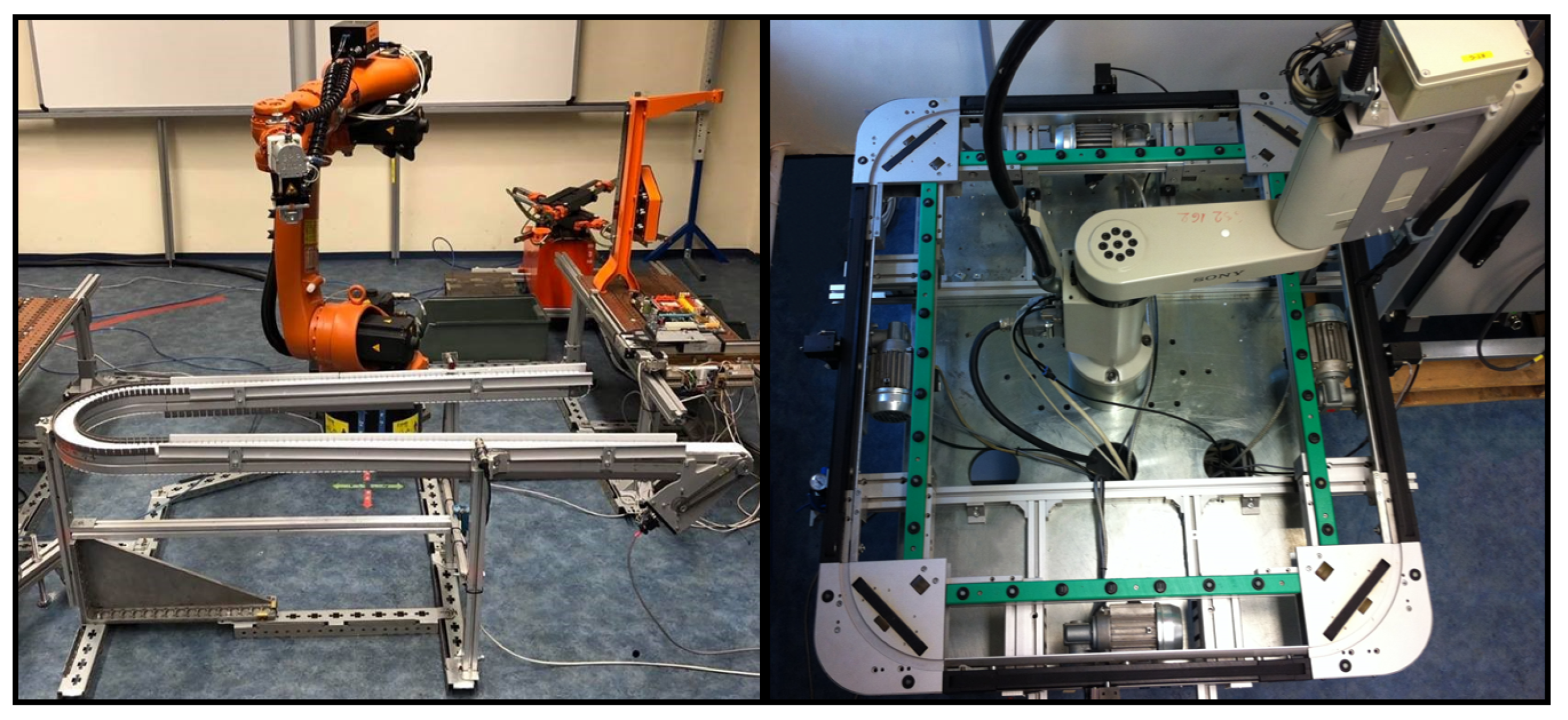

2. Multi-Axis Robot Units and Conveyor Belts

3. Dataset Translation Method

3.1. Related Works

3.2. Theoretical Model of Scene

3.3. Architecture of Our Proposed Deep Learning-Based Image Domain Translation Method

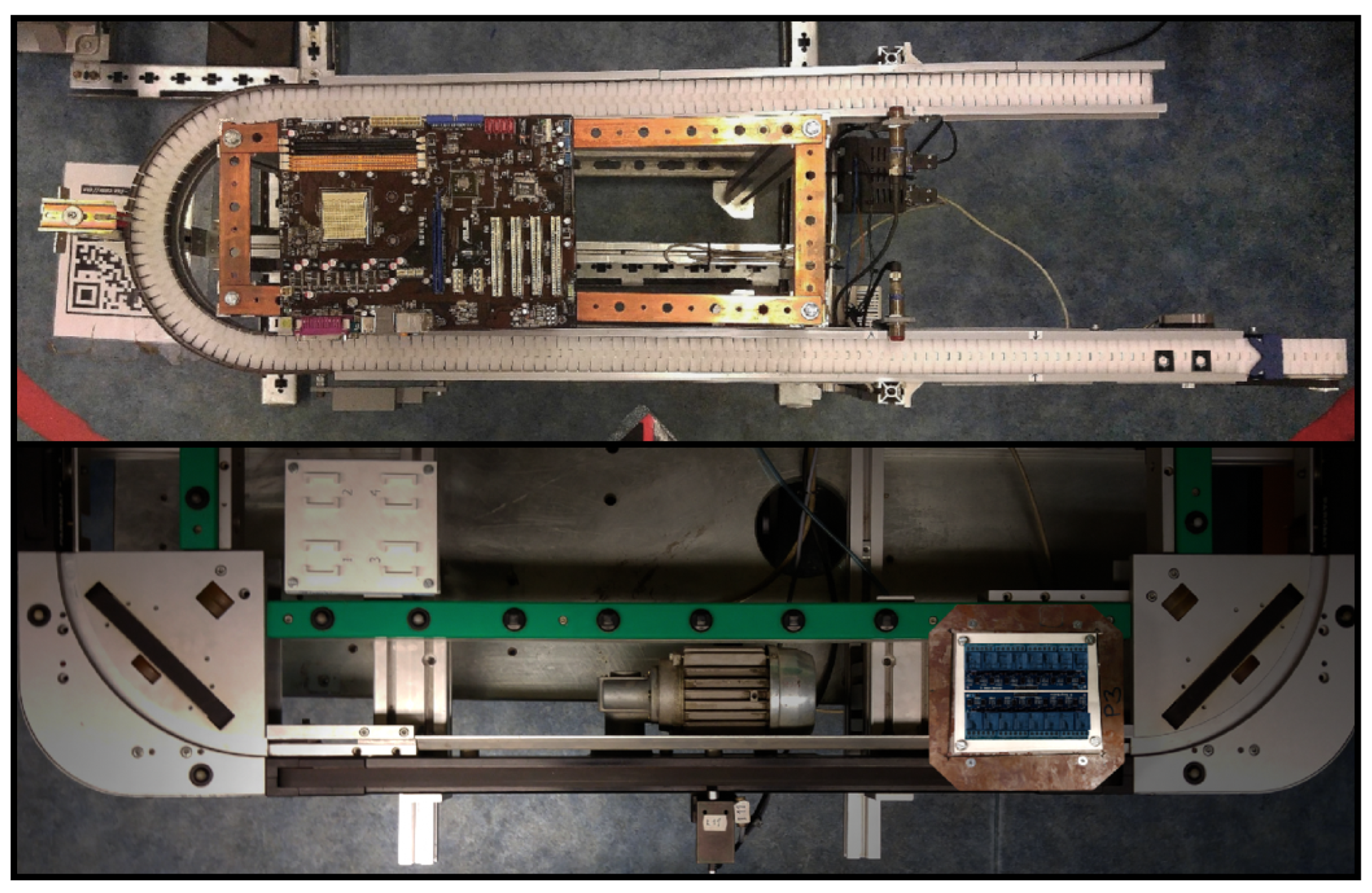

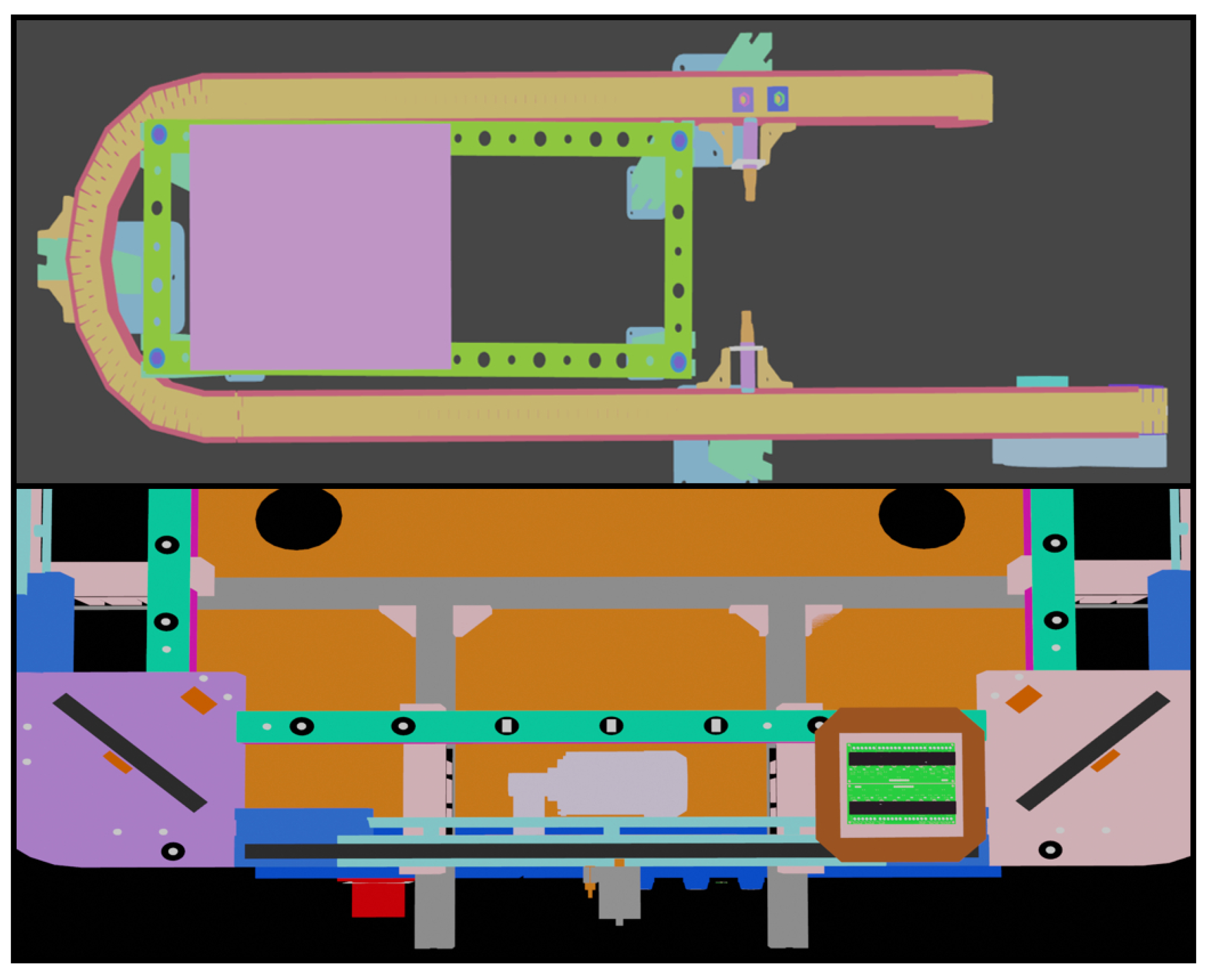

4. Creating Datasets to Train the Dataset Translation Method with Real and Rendered Images

4.1. In-House-Developed FDM 3D Printer and Printing of Test Objects

- Height: 32 cm;

- Width: 15 cm;

- Length: 20 cm.

4.2. Rendered Scene Datasets with Real Captured Images

5. Architecture Description of the Deep Learning-Based Detector Algorithms

6. Experiments

6.1. Training Details of the Selected Deep Learning-Based Algorithms

6.1.1. pix2pixHD

6.1.2. YOLO-Based Detectors

6.2. Results

- A.

- pix2pixHD

- B.

- YOLO-based detectors

- C.

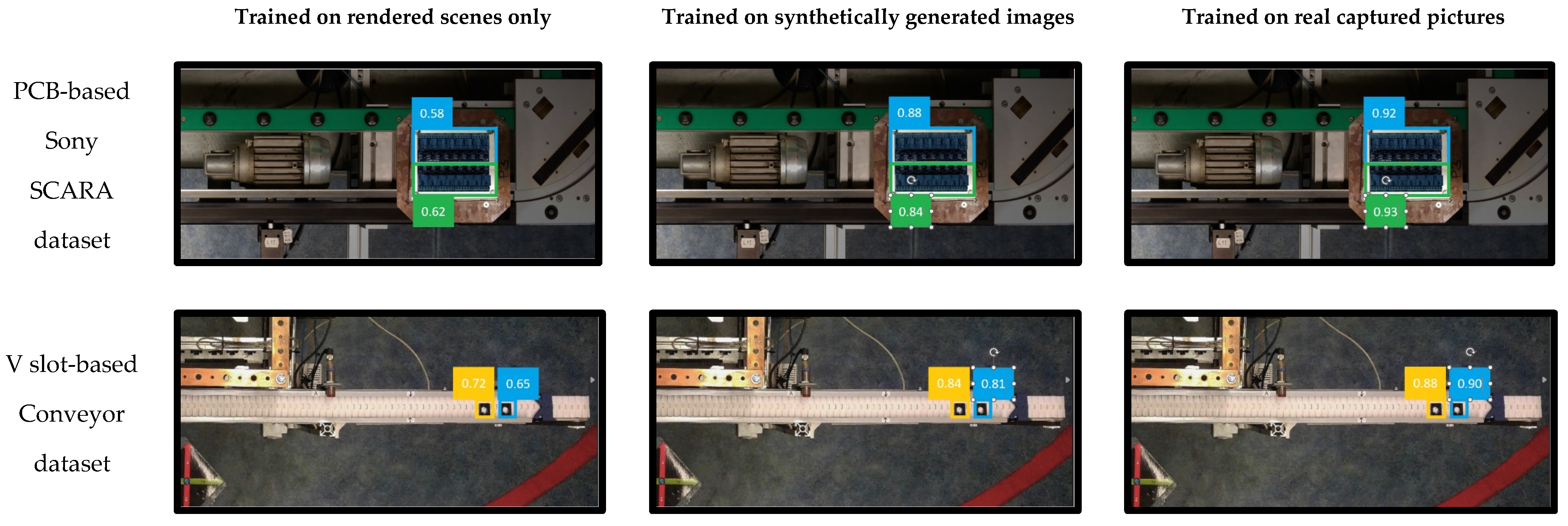

- Real application

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Property | Acrylonitrile Butadiene Styrene (ABS) |
|---|---|
| Density ρ (Mg/m³) | 1.00–1.22 |
| Young’s Modulus E (GPa) | 1.12–2.87 |
| Elongation at break (%) | 3–75 |
| Melting (softening) Temperature (°C) | 88–128 |
| Glass Transition Temperature (°C) | 100 |
| Ultimate Tensile Strength (MPa) | 33–110 |

| Anchor | YOLOv3-Tiny | YOLOv3-Tiny-3l | YOLOv3-SPP | YOLOv3-5l |
|---|---|---|---|---|
| 0 | 10 × 9 | 12 × 7 | 12 × 7 | 10 × 6 |
| 1 | 13 × 8 | 9 × 10 | 9 × 10 | 10 × 10 |
| 2 | 10 × 10 | 10 × 10 | 10 × 10 | 9 × 10 |
| 3 | 11 × 10 | 11 × 10 | 11 × 10 | 10 × 10 |
| 4 | 12 × 10 | 11 × 10 | 11 × 10 | 10 × 10 |
| 5 | 14 × 10 | 11 × 10 | 11 × 10 | 11 × 10 |
| 6 | - | 13 × 10 | 13 × 10 | 11 × 10 |
| 7 | - | 14 × 9 | 14 × 9 | 11 × 10 |
| 8 | - | 15 × 11 | 15 × 11 | 13 × 8 |
| 9 | - | - | - | 12 × 10 |
| 10 | - | - | - | 11 × 10 |
| 11 | - | - | - | 12 × 10 |
| 12 | - | - | - | 13 × 10 |
| 13 | - | - | - | 14 × 10 |
| 14 | - | - | - | 15 × 12 |

| Algorithm Name | Parameter Value |
|---|---|
| Saturation | 1.5 |
| Exposure | 1.5 |
| Resizing | 1.5 |
| Hue shifting | 0.3 |
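
As a rough illustration of how jitter parameters like those in the table above are commonly applied, the sketch below perturbs a single RGB pixel in HSV space. It assumes a Darknet-style convention, which the source does not confirm: saturation and exposure are treated as maximum multiplicative factors, and hue as a maximum additive shift on a 0–1 hue scale. The helper names `rand_scale` and `jitter_pixel` are hypothetical, and resizing is left out.

```python
import colorsys
import random

# Values taken from the augmentation table; their interpretation is an assumption.
AUG = {"saturation": 1.5, "exposure": 1.5, "hue": 0.3}


def rand_scale(s, rng):
    """Return a random factor in [1/s, s]; scaling up or down is equally likely."""
    scale = rng.uniform(1.0, s)
    return scale if rng.random() < 0.5 else 1.0 / scale


def jitter_pixel(rgb, rng, aug=AUG):
    """Jitter one RGB pixel (channels in [0, 1]) in HSV space."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    # Hue is shifted additively and wrapped around the colour circle.
    h = (h + rng.uniform(-aug["hue"], aug["hue"])) % 1.0
    # Saturation and value (exposure) are scaled, then clipped to the valid range.
    s = min(1.0, s * rand_scale(aug["saturation"], rng))
    v = min(1.0, v * rand_scale(aug["exposure"], rng))
    return colorsys.hsv_to_rgb(h, s, v)


if __name__ == "__main__":
    rng = random.Random(0)
    print(jitter_pixel((0.2, 0.4, 0.6), rng))
```

In practice the same random factors would be drawn once per image and applied to every pixel, so the whole image shifts consistently.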

| Detector Type | Learning Rate | Momentum | Decay |
|---|---|---|---|
| YOLOv3-Tiny | 0.005 | 0.9 | 0.0005 |
| YOLOv3-Tiny-3l | 0.0005 | 0.9 | 0.0005 |
| YOLOv3-SPP | 0.0003 | 0.9 | 0.0005 |

| Parameter Name | Value |
|---|---|
| initial learning rate | 0.01 |
| final learning rate | 0.001 |
| momentum | 0.937 |
| weight_decay | 0.0005 |
| warmup_epochs | 3.0 |
| warmup_momentum | 0.8 |
| warmup_bias_learning rate | 0.1 |
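
To make the role of these hyperparameters more concrete, the following sketch collects the table values in a dictionary and derives a per-epoch learning rate and warmup momentum. The shape of both schedules (linear interpolation) and the number of epochs are assumptions made for illustration. The source lists only the values, and the dictionary keys and function names are hypothetical.

```python
# Values copied from the hyperparameter table; key names are illustrative.
HYP = {
    "lr_initial": 0.01,
    "lr_final": 0.001,
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "warmup_epochs": 3.0,
    "warmup_momentum": 0.8,
    "warmup_bias_lr": 0.1,  # listed for completeness; not used in this sketch
}


def learning_rate(epoch, total_epochs, hyp=HYP):
    """Linearly interpolate from lr_initial to lr_final (assumed schedule shape)."""
    if total_epochs < 2:
        return hyp["lr_initial"]
    frac = epoch / (total_epochs - 1)
    return hyp["lr_initial"] + frac * (hyp["lr_final"] - hyp["lr_initial"])


def warmup_momentum(epoch, hyp=HYP):
    """Ramp momentum linearly from warmup_momentum to momentum during warmup."""
    frac = min(epoch / hyp["warmup_epochs"], 1.0)
    return hyp["warmup_momentum"] + frac * (hyp["momentum"] - hyp["warmup_momentum"])


if __name__ == "__main__":
    total = 100  # hypothetical epoch count, not taken from the source
    for epoch in (0, 1, 3, 50, 99):
        print(epoch, learning_rate(epoch, total), warmup_momentum(epoch))
```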

| Epochs | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.6134 | 9.5687 × 10⁻⁵ | 0.6111 | 0.6156 | 0.3845 | 0.0347 | 0.1245 | 0.6289 |
| 2 | 0.6826 | 9.2365 × 10⁻⁴ | 0.6806 | 0.6843 | 0.4005 | 0.0548 | 0.1869 | 0.6902 |
| 3 | 0.7254 | 8.9854 × 10⁻⁴ | 0.7224 | 0.7279 | 0.4254 | 0.0654 | 0.2143 | 0.7216 |
| 4 | 0.7389 | 9.7564 × 10⁻⁴ | 0.7368 | 0.7397 | 0.4493 | 0.0458 | 0.2110 | 0.7304 |
| 5 | 0.7826 | 8.7052 × 10⁻⁴ | 0.7814 | 0.7843 | 0.4647 | 0.0599 | 0.2404 | 0.7477 |
| 6 | 0.8053 | 8.9652 × 10⁻⁴ | 0.8034 | 0.8064 | 0.4886 | 0.0321 | 0.2312 | 0.7507 |
| 7 | 0.8115 | 7.4652 × 10⁻⁴ | 0.8103 | 0.8131 | 0.4931 | 0.0245 | 0.2408 | 0.7633 |
| 8 | 0.8149 | 7.3254 × 10⁻⁴ | 0.8121 | 0.8157 | 0.5026 | 0.0458 | 0.2655 | 0.7707 |
| 9 | 0.8204 | 6.7478 × 10⁻⁴ | 0.8194 | 0.8212 | 0.4916 | 0.0501 | 0.2887 | 0.7798 |
| 10 | 0.8349 | 7.6548 × 10⁻⁴ | 0.8324 | 0.8361 | 0.5134 | 0.0546 | 0.3247 | 0.7925 |
| 20 | 0.8353 | 6.2248 × 10⁻⁴ | 0.8331 | 0.8369 | 0.5495 | 0.0496 | 0.3335 | 0.7811 |
| 30 | 0.8469 | 6.0654 × 10⁻⁴ | 0.8447 | 0.8483 | 0.5766 | 0.0512 | 0.3469 | 0.7761 |
| 40 | 0.8579 | 5.6335 × 10⁻⁴ | 0.8560 | 0.8591 | 0.6024 | 0.0564 | 0.3504 | 0.7848 |
| 50 | 0.8622 | 4.4256 × 10⁻⁴ | 0.8603 | 0.8634 | 0.5889 | 0.0524 | 0.3475 | 0.7991 |
| 60 | 0.8676 | 5.2145 × 10⁻⁴ | 0.8658 | 0.8689 | 0.6124 | 0.0530 | 0.3664 | 0.7948 |
| 70 | 0.8706 | 5.0125 × 10⁻⁴ | 0.8692 | 0.8714 | 0.6065 | 0.0535 | 0.3586 | 0.7890 |
| 80 | 0.8765 | 4.7879 × 10⁻⁴ | 0.8751 | 0.8783 | 0.6248 | 0.0509 | 0.3496 | 0.8122 |
| 90 | 0.8789 | 4.4578 × 10⁻⁴ | 0.8762 | 0.8798 | 0.6424 | 0.0496 | 0.3789 | 0.8046 |
| 100 | 0.8795 | 4.1254 × 10⁻⁴ | 0.8774 | 0.8909 | 0.6349 | 0.0524 | 0.3864 | 0.7899 |
| 140 | 0.8802 | 3.8878 × 10⁻⁴ | 0.8789 | 0.8814 | 0.6401 | 0.0596 | 0.3941 | 0.7943 |
| 200 | 0.8815 | 3.7998 × 10⁻⁴ | 0.8801 | 0.8832 | 0.6578 | 0.0552 | 0.4229 | 0.7873 |

| Epochs | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.7542 | 4.2548 × 10⁻³ | 0.7514 | 0.7586 | 0.4348 | 0.0601 | 0.3621 | 0.5408 |
| 2 | 0.7945 | 3.9547 × 10⁻³ | 0.7926 | 0.7914 | 0.4963 | 0.0569 | 0.4090 | 0.6140 |
| 3 | 0.8002 | 3.6985 × 10⁻³ | 0.7975 | 0.8042 | 0.5269 | 0.0354 | 0.4356 | 0.6432 |
| 4 | 0.8239 | 2.9645 × 10⁻³ | 0.8210 | 0.8251 | 0.5048 | 0.0487 | 0.4541 | 0.6892 |
| 5 | 0.8477 | 2.4123 × 10⁻⁴ | 0.8453 | 0.8490 | 0.5369 | 0.0369 | 0.4802 | 0.7013 |
| 6 | 0.8914 | 2.2658 × 10⁻⁴ | 0.8902 | 0.8932 | 0.6087 | 0.0578 | 0.5402 | 0.7274 |
| 7 | 0.9001 | 1.5478 × 10⁻³ | 0.8982 | 0.9024 | 0.6396 | 0.0469 | 0.5504 | 0.7364 |
| 8 | 0.9057 | 1.9625 × 10⁻⁴ | 0.9034 | 0.9076 | 0.6896 | 0.0398 | 0.5666 | 0.7559 |
| 9 | 0.9111 | 1.4785 × 10⁻⁴ | 0.9099 | 0.9127 | 0.7069 | 0.0352 | 0.5869 | 0.7624 |
| 10 | 0.9159 | 9.6582 × 10⁻⁴ | 0.9135 | 0.9167 | 0.6874 | 0.0245 | 0.5764 | 0.7318 |
| 20 | 0.9209 | 7.6584 × 10⁻⁴ | 0.9188 | 0.9213 | 0.7369 | 0.0269 | 0.6145 | 0.8236 |
| 30 | 0.9238 | 6.1458 × 10⁻⁴ | 0.9220 | 0.9251 | 0.7846 | 0.0210 | 0.6952 | 0.8735 |
| 40 | 0.9293 | 6.6548 × 10⁻⁴ | 0.9287 | 0.9310 | 0.7569 | 0.0289 | 0.6840 | 0.8833 |
| 50 | 0.9359 | 5.2145 × 10⁻⁴ | 0.9340 | 0.9372 | 0.8247 | 0.0369 | 0.7248 | 0.9103 |
| 60 | 0.9448 | 5.9658 × 10⁻⁴ | 0.9423 | 0.9465 | 0.8569 | 0.0203 | 0.7865 | 0.9245 |
| 70 | 0.9506 | 5.4568 × 10⁻⁴ | 0.9485 | 0.9516 | 0.8740 | 0.0326 | 0.8249 | 0.9143 |
| 80 | 0.9548 | 5.1254 × 10⁻⁴ | 0.9534 | 0.9562 | 0.8674 | 0.0354 | 0.8341 | 0.9354 |
| 90 | 0.9627 | 4.9854 × 10⁻⁴ | 0.9604 | 0.9645 | 0.8990 | 0.0498 | 0.8517 | 0.9366 |
| 100 | 0.9681 | 4.2458 × 10⁻⁴ | 0.9669 | 0.9698 | 0.9069 | 0.0283 | 0.8724 | 0.9449 |
| 140 | 0.9706 | 5.3200 × 10⁻⁴ | 0.9692 | 0.9710 | 0.9187 | 0.0323 | 0.8844 | 0.9504 |
| 200 | 0.9727 | 6.1205 × 10⁻⁴ | 0.9709 | 0.9744 | 0.9269 | 0.0295 | 0.8997 | 0.9569 |

| | YOLOv3-Tiny | | | | YOLOv3-Tiny-3l | | | | YOLOv3-SPP | | | | YOLOv3-5l | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | mAP | Prec. | Recall | F1-Score | mAP | Prec. | Recall | F1-Score | mAP | Prec. | Recall | F1-Score | mAP | Prec. | Recall | F1-Score |
| 1.000 | 0.9065 | 0.91 | 0.90 | 0.91 | 0.8468 | 0.85 | 0.87 | 0.86 | 0.8778 | 0.89 | 0.85 | 0.87 | 0.9296 | 1.00 | 0.94 | 0.96 |
| 0.3845 | 0.3145 | 0.44 | 0.25 | 0.29 | 0.1221 | 0.03 | 0.00 | 0.00 | 0.3949 | 0.42 | 0.40 | 0.48 | 0.4501 | 0.55 | 0.20 | 0.33 |
| 0.4005 | 0.3698 | 0.49 | 0.26 | 0.35 | 0.1469 | 0.26 | 0.08 | 0.15 | 0.4211 | 0.49 | 0.45 | 0.50 | 0.5068 | 0.67 | 0.29 | 0.46 |
| 0.4254 | 0.3846 | 0.52 | 0.22 | 0.34 | 0.3986 | 0.41 | 0.24 | 0.34 | 0.4801 | 0.52 | 0.47 | 0.49 | 0.5698 | 0.75 | 0.38 | 0.54 |
| 0.4931 | 0.4865 | 0.58 | 0.35 | 0.45 | 0.4425 | 0.56 | 0.35 | 0.41 | 0.5259 | 0.56 | 0.54 | 0.55 | 0.6421 | 0.81 | 0.51 | 0.67 |
| 0.5026 | 0.5469 | 0.62 | 0.56 | 0.60 | 0.5685 | 0.60 | 0.48 | 0.52 | 0.5458 | 0.63 | 0.51 | 0.61 | 0.7248 | 0.86 | 0.69 | 0.71 |
| 0.5134 | 0.5694 | 0.76 | 0.62 | 0.69 | 0.6048 | 0.65 | 0.54 | 0.58 | 0.6846 | 0.71 | 0.68 | 0.69 | 0.8694 | 0.89 | 0.72 | 0.79 |
| 0.5495 | 0.6485 | 0.78 | 0.73 | 0.75 | 0.6381 | 0.67 | 0.62 | 0.63 | 0.7954 | 0.81 | 0.78 | 0.79 | 0.8896 | 0.92 | 0.76 | 0.83 |
| 0.6401 | 0.8069 | 0.84 | 0.81 | 0.82 | 0.7954 | 0.81 | 0.77 | 0.78 | 0.8305 | 0.85 | 0.82 | 0.84 | 0.91 | 0.96 | 0.84 | 0.91 |
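
For readers relating the precision, recall, and F1-score columns to one another, the sketch below computes the standard F1-score as the harmonic mean of precision and recall. The function name `f1_score` is illustrative. Because the table entries are rounded, recomputing F1 from the tabulated precision and recall will not necessarily reproduce the tabulated F1 exactly.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Precision and recall taken from one YOLOv3-Tiny row of the table.
    print(round(f1_score(0.91, 0.90), 3))
```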

| | YOLOv8-Nano | | | | YOLOv8-Small | | | | YOLOv8-Medium | | | | YOLOv8-Large | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Prec. | Recall | mAP50 | mAP50–95 | Prec. | Recall | mAP50 | mAP50–95 | Prec. | Recall | mAP50 | mAP50–95 | Prec. | Recall | mAP50 | mAP50–95 |
| 1.00 | 0.999 | 1.000 | 0.995 | 0.835 | 0.999 | 1.000 | 0.995 | 0.843 | 0.999 | 1.000 | 0.995 | 0.836 | 0.998 | 1.000 | 0.995 | 0.845 |
| 0.4348 | 0.8970 | 0.720 | 0.723 | 0.543 | 0.893 | 0.651 | 0.705 | 0.551 | 0.734 | 0.710 | 0.728 | 0.455 | 0.871 | 0.443 | 0.691 | 0.433 |
| 0.4963 | 0.8800 | 0.860 | 0.884 | 0.613 | 0.896 | 0.775 | 0.731 | 0.667 | 0.778 | 0.759 | 0.796 | 0.653 | 0.779 | 0.525 | 0.710 | 0.607 |
| 0.5269 | 0.8860 | 0.902 | 0.846 | 0.658 | 0.896 | 0.895 | 0.764 | 0.699 | 0.848 | 0.793 | 0.836 | 0.735 | 0.860 | 0.746 | 0.740 | 0.681 |
| 0.6396 | 0.8980 | 1.000 | 0.896 | 0.712 | 0.928 | 0.990 | 0.825 | 0.746 | 0.889 | 0.890 | 0.882 | 0.745 | 0.927 | 0.847 | 0.884 | 0.703 |
| 0.6896 | 0.9260 | 1.000 | 0.912 | 0.736 | 0.951 | 1.000 | 0.895 | 0.766 | 0.948 | 0.940 | 0.905 | 0.786 | 0.968 | 0.955 | 0.895 | 0.743 |
| 0.6874 | 0.9180 | 1.000 | 0.923 | 0.742 | 0.998 | 1.000 | 0.901 | 0.812 | 0.998 | 0.980 | 0.944 | 0.797 | 0.989 | 0.979 | 0.925 | 0.784 |
| 0.7369 | 0.9880 | 1.000 | 0.979 | 0.786 | 0.997 | 1.000 | 0.995 | 0.829 | 0.998 | 0.990 | 0.989 | 0.819 | 0.992 | 1.000 | 0.985 | 0.802 |
| 0.9187 | 0.9980 | 1.000 | 0.991 | 0.802 | 0.998 | 1.000 | 0.995 | 0.835 | 0.998 | 1.000 | 0.995 | 0.827 | 0.998 | 1.000 | 0.995 | 0.814 |

| Training Dataset | Conveyor Dataset | | | Sony SCARA Dataset | | |
|---|---|---|---|---|---|---|
| | Train | Val | Test | Train | Val | Test |
| Rendered only | 0.624 | 0.612 | 0.511 | 0.689 | 0.674 | 0.655 |
| Synthetic image translation generated | 0.795 | 0.784 | 0.778 | 0.802 | 0.782 | 0.775 |
| Real images | 0.847 | 0.823 | 0.814 | 0.835 | 0.822 | 0.802 |
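
One way to read the test-split scores in the table above is to ask how much of the difference between rendered-only and real-image training is recovered by the translated images. The sketch below computes that ratio from the tabulated values. The ratio itself is an illustrative derived quantity, not a metric reported in the source, and the names `SCORES` and `gap_closed` are hypothetical.

```python
# Test-split scores copied from the table (rows: rendered only,
# synthetic image translation generated, real images).
SCORES = {
    "conveyor": {"rendered": 0.511, "translated": 0.778, "real": 0.814},
    "scara": {"rendered": 0.655, "translated": 0.775, "real": 0.802},
}


def gap_closed(rendered, translated, real):
    """Fraction of the rendered-to-real score gap recovered by translation."""
    return (translated - rendered) / (real - rendered)


if __name__ == "__main__":
    for name, s in SCORES.items():
        ratio = gap_closed(s["rendered"], s["translated"], s["real"])
        print(f"{name}: {ratio:.3f}")
```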

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Erdei, T.I.; Kapusi, T.P.; Hajdu, A.; Husi, G. Image-to-Image Translation-Based Deep Learning Application for Object Identification in Industrial Robot Systems. Robotics 2024, 13, 88. https://doi.org/10.3390/robotics13060088
