A Broad View on Robot Self-Defense: Rapid Scoping Review and Cultural Comparison

Abstract

1. Introduction

- Academic theory. We explore the concept of RSD in depth through a rapid scoping review of the literature, covering the intersection of robots, crime, and violence, as well as other “dark” topics in Human-Robot Interaction (HRI).

- Public opinion. We also report the results of an online survey examining how people in two countries (Japan and the U.S.) perceive the effects of two factors that we expected to be important: a robot’s embodiment and its use of force.

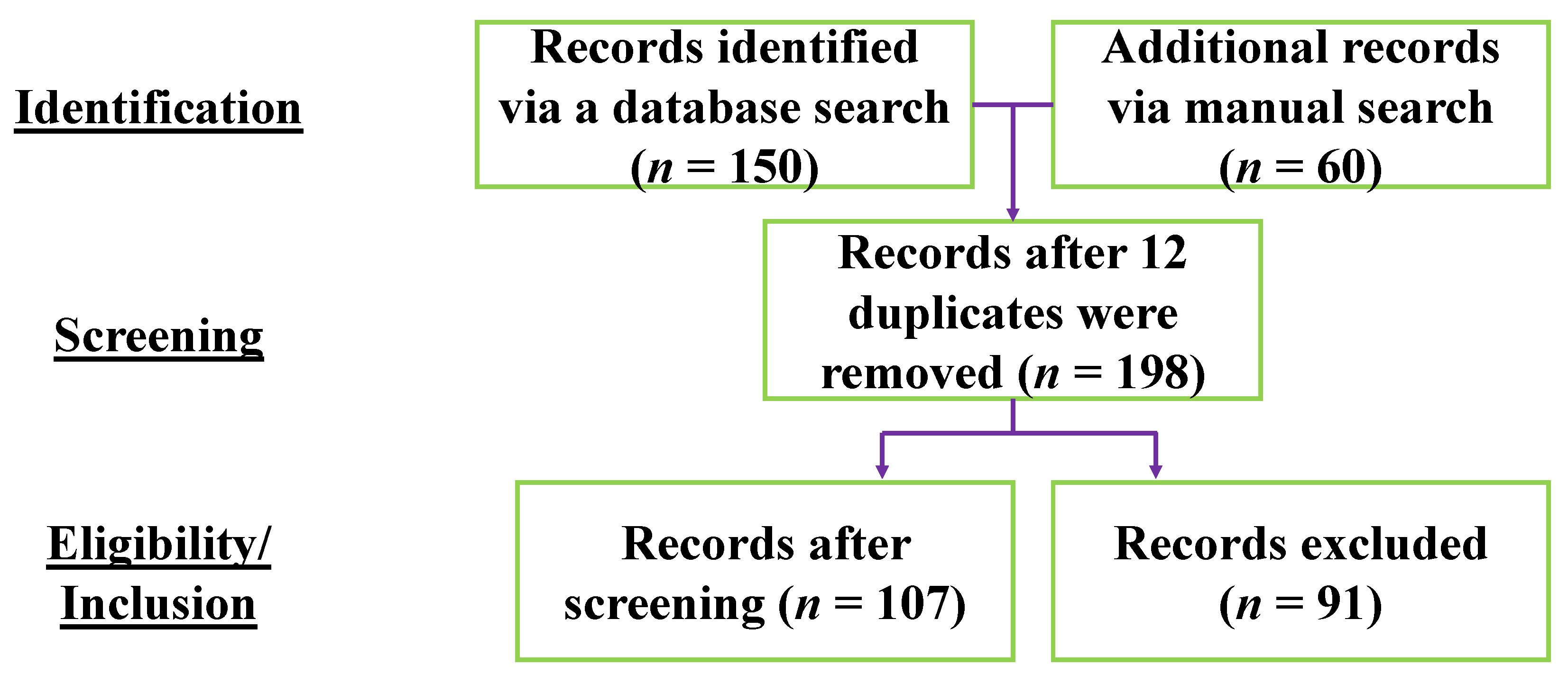

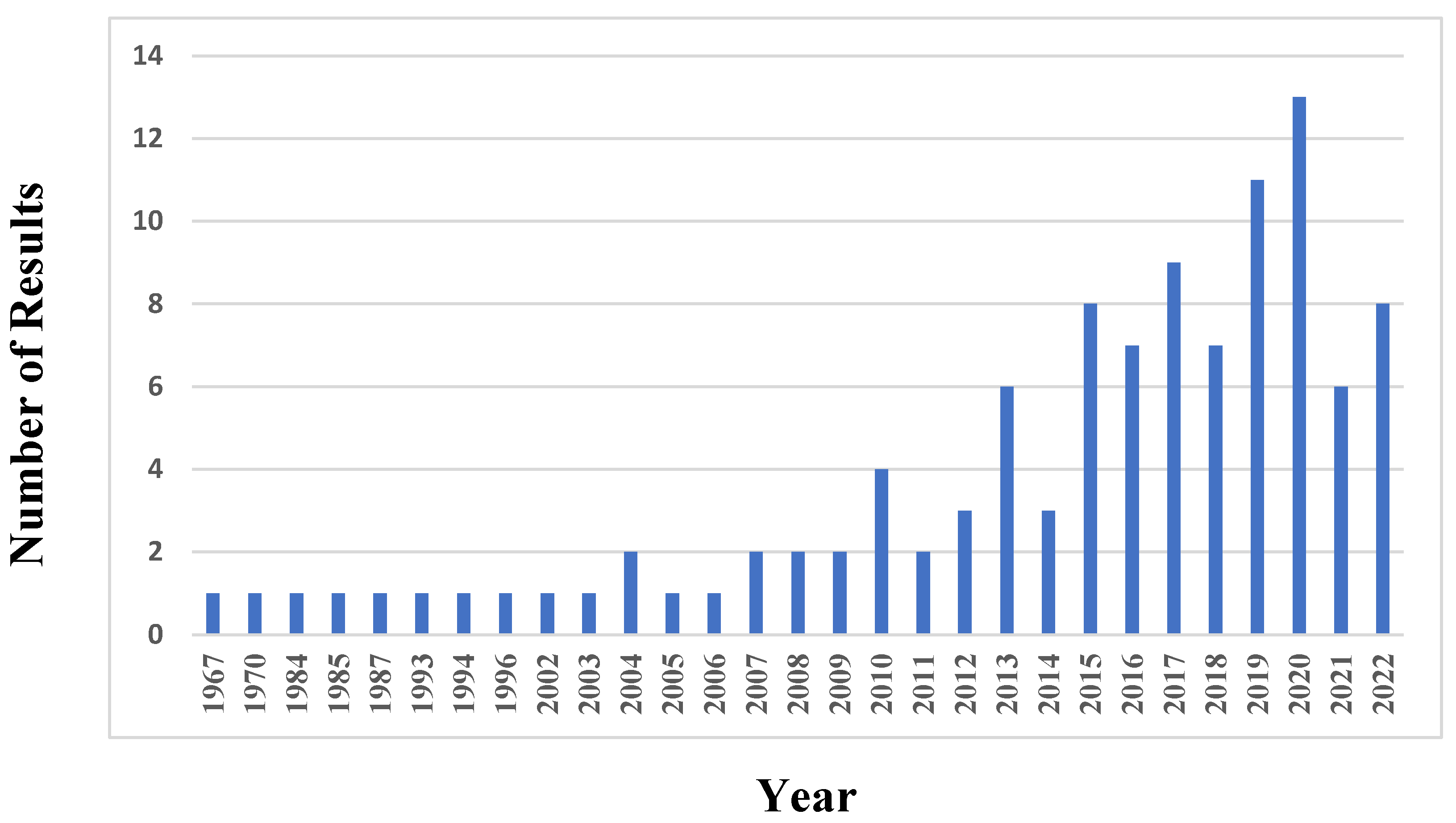

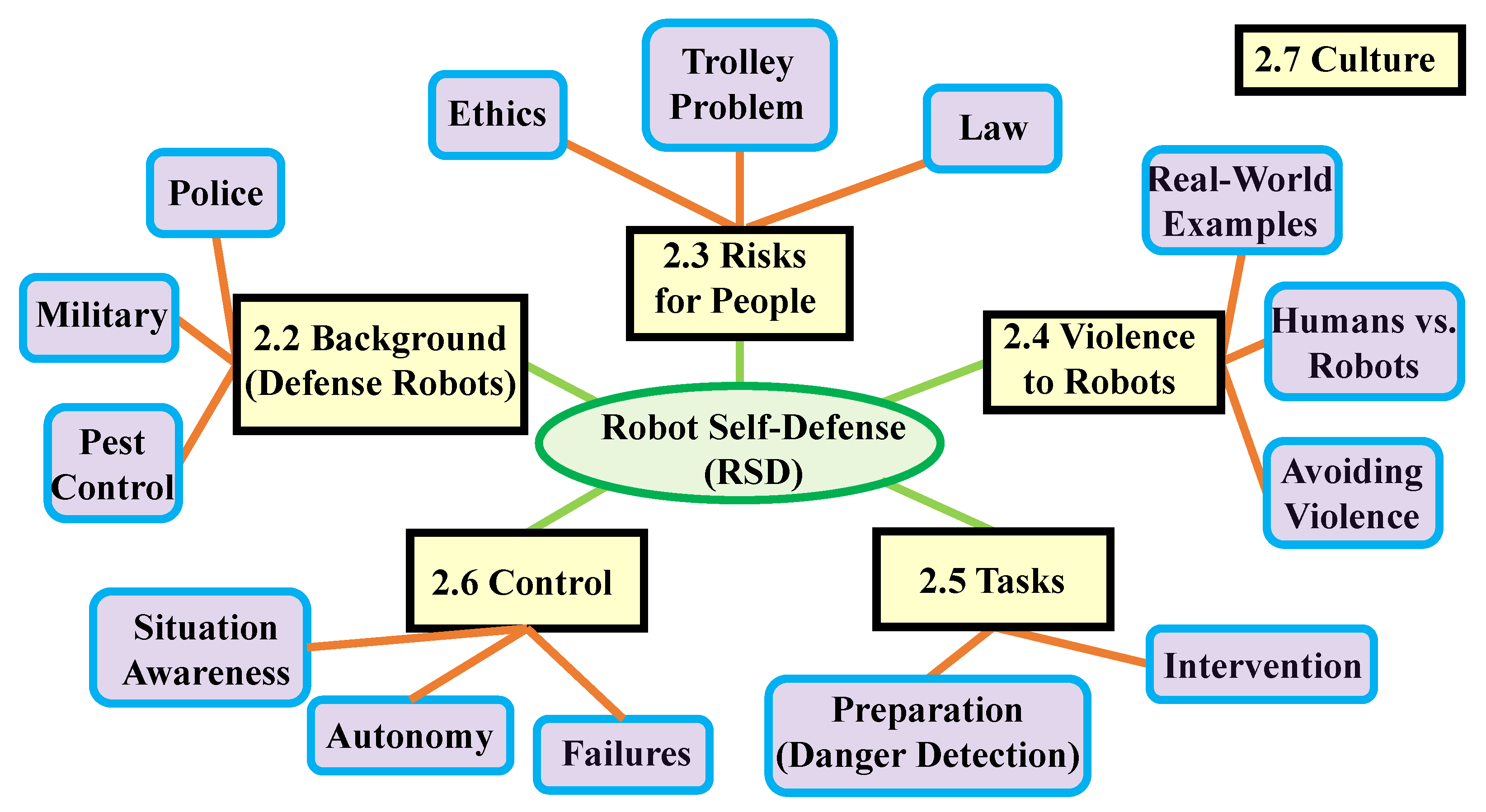

2. Scoping Review

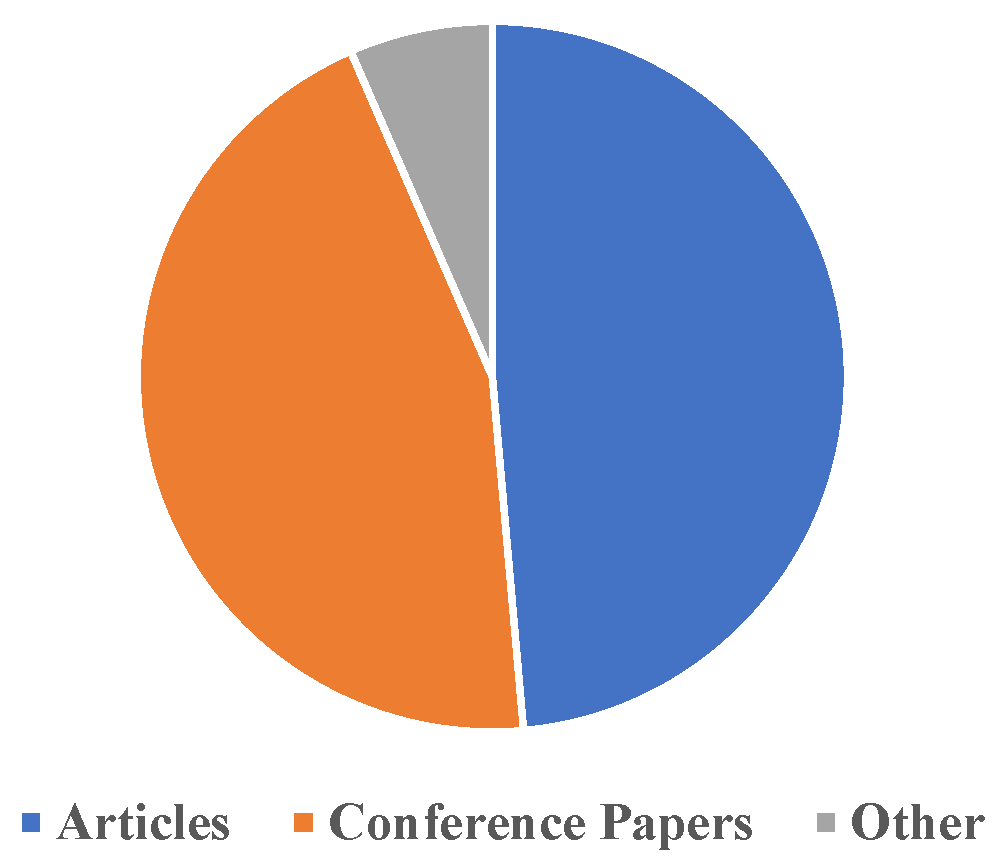

2.1. Review Process

2.2. Background: Defense Robots

2.2.1. Police Robots

2.2.2. Military Robots

2.2.3. Pest Control Robots

2.3. Risks for People

2.3.1. Ethics of Self-Defense Robots

2.3.2. Comparison to the Trolley Problem

2.3.3. Laws Related to Self-Defense Robots

2.4. Violence to Robots

2.4.1. Real-World Examples of Violence toward Robots

- Complications due to people. Could some people’s desire to mistreat robots extend to obstructing a defender robot in its work, or to faking attacks or crimes in front of the robot to provoke a response? For example, bystanders and victims might not always help a robot to defend a victim. This could be unintentional (due to a misunderstanding) or intentional, as in hybristophilia, Bonnie and Clyde syndrome, or Stockholm syndrome (in which people feel attracted to those who commit crimes) [97]; a lack of trust in robots; or domestic violence, in which fear of later reprisal could lead a victim to reject needed help. (Relatedly, could RSD raise concerns about entrapment, e.g., through decisions on where to place robots? Could robot abuse also indirectly lead to violence against humans, for example, if a child gets into a fight while trying to protect their robot from a bully?)

- Physical design for self-defense. Should self-defense be restricted to highly robust robots that would be difficult to topple (e.g., with a wide base, high weight, and short height)?

- Causes for robot abuse. Why were the robots above attacked? Were the delivery robots too slow or in the way, or too oblivious, passing through areas where an attack would be easy to carry out? Do children attack robots because the consequences of being caught would be less severe for them, and because they are less fettered by social norms than adults?

- Defense against specific groups. How should a self-defense robot deal with drunk people or children? For example, if one child attacks another, should the attacking child be treated the same as an adult attacker? If not, a means of assessing potential harm (both the threat and the consequences of intervention) might be required.

2.4.2. Comparing Perceptions of Humans vs. Robots

2.4.3. Strategies for Robots to Avoid Violence

2.5. Tasks

2.5.1. Preparation: Danger Detection

2.5.2. Intervention

2.6. Control

2.6.1. Situation Awareness

2.6.2. Perception of Robot Autonomy

2.6.3. Effects of Control Failures

2.7. Culture

2.8. Own Work

3. Study Comparing Cultures

3.1. Hypotheses

3.2. Participants

3.3. Measurements

3.4. Procedure

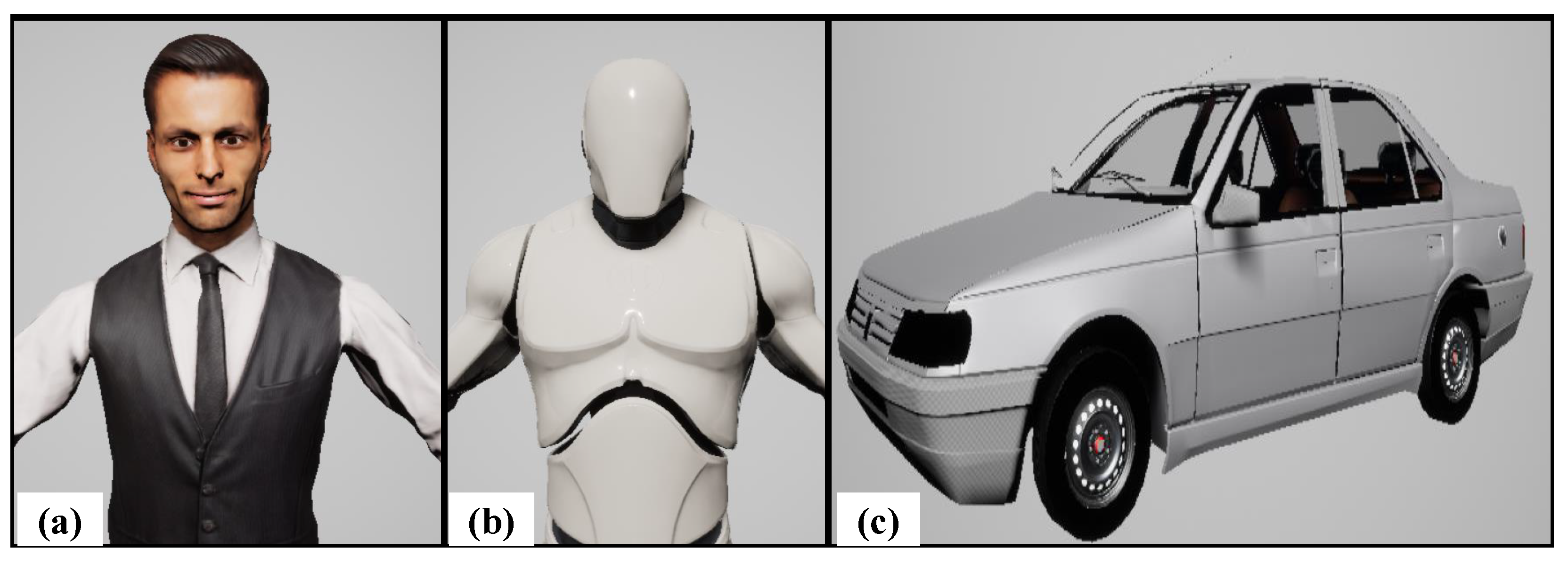

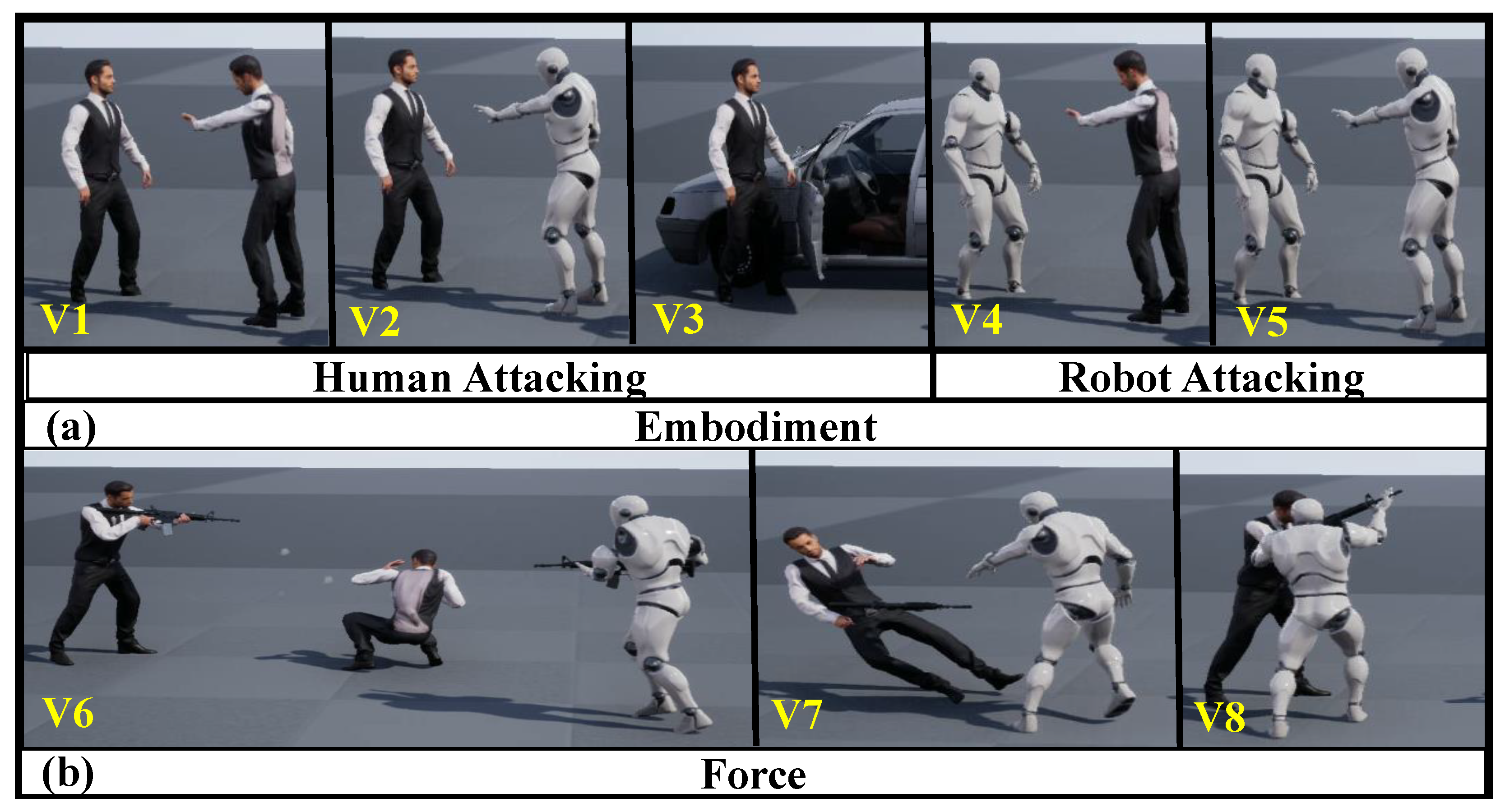

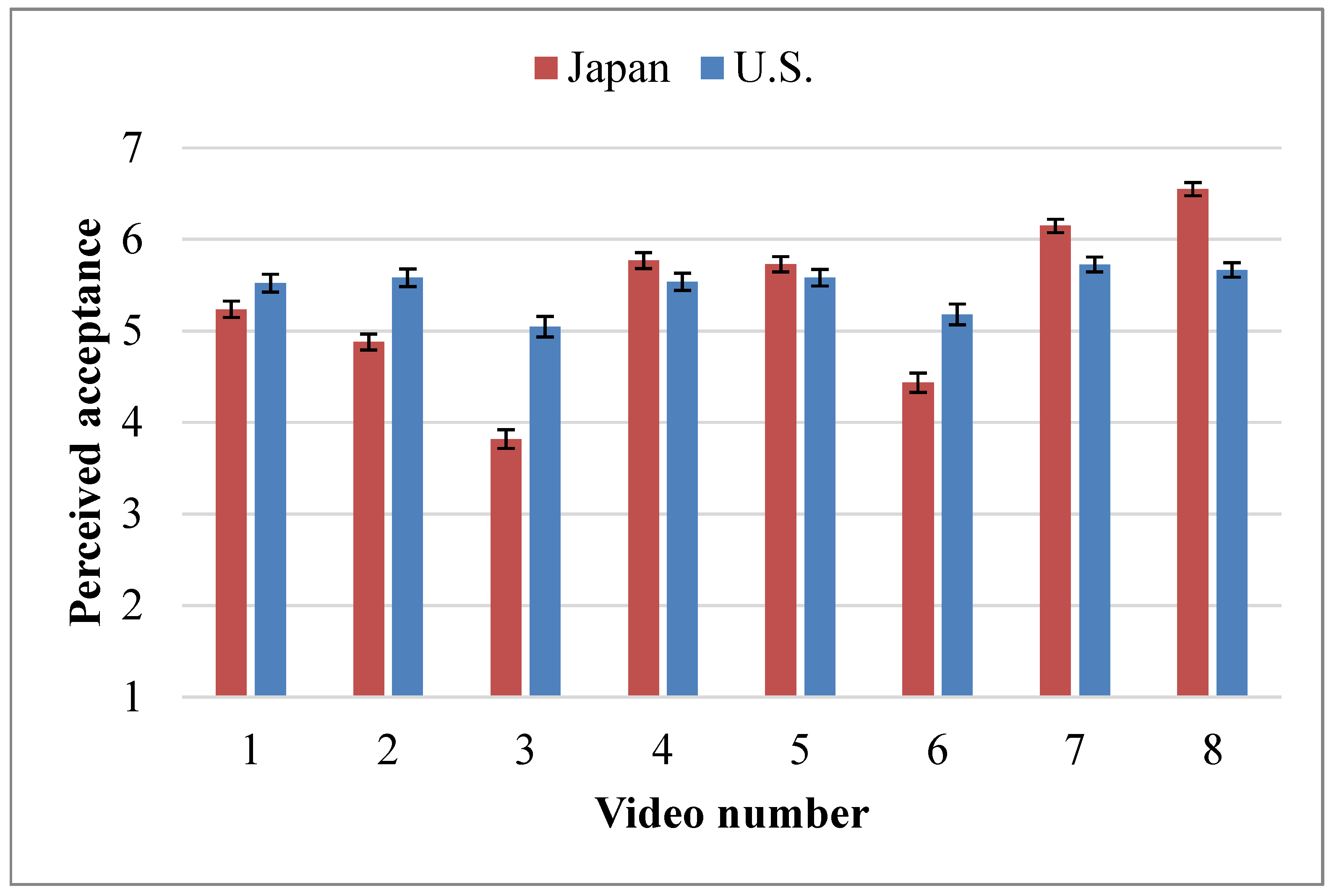

3.5. Videos

- V1. A Human Defender stops a Human Attacker, both using Non-lethal force.

- V2. A Humanoid Robot Defender stops a Human Attacker, both using Non-lethal force.

- V3. An AV Defender stops a Human Attacker, both using Non-lethal force.

- V4. A Human Defender stops a Humanoid Robot Attacker, both using Non-lethal force.

- V5. A Humanoid Robot Defender stops a Humanoid Robot Attacker, both using Non-lethal force.

- V6. A Humanoid Robot Defender stops a Human Attacker, both using Lethal force.

- V7. A Humanoid Robot Defender stops a Human Attacker, using Non-lethal force against Lethal force.

- V8. A Humanoid Robot Defender stops a Human Attacker, using Disarming against Lethal force.
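The eight video conditions above vary three things: the defender's embodiment, the attacker's embodiment, and the force used by each side. As a minimal sketch of how these conditions could be encoded for analysis, the table below is written as Python data. The class name `VideoCondition`, the field names, the lowercase category strings, and the `select` helper are hypothetical and chosen only for illustration; they are not taken from the study materials.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoCondition:
    """One survey video, described by who defends, who attacks, and the force used."""
    label: str
    defender: str        # "human", "humanoid", or "av"
    attacker: str        # "human" or "humanoid"
    defender_force: str  # "non-lethal", "lethal", or "disarming"
    attacker_force: str  # "non-lethal" or "lethal"


# Transcribed from the list V1-V8 above.
CONDITIONS = [
    VideoCondition("V1", "human", "human", "non-lethal", "non-lethal"),
    VideoCondition("V2", "humanoid", "human", "non-lethal", "non-lethal"),
    VideoCondition("V3", "av", "human", "non-lethal", "non-lethal"),
    VideoCondition("V4", "human", "humanoid", "non-lethal", "non-lethal"),
    VideoCondition("V5", "humanoid", "humanoid", "non-lethal", "non-lethal"),
    VideoCondition("V6", "humanoid", "human", "lethal", "lethal"),
    VideoCondition("V7", "humanoid", "human", "non-lethal", "lethal"),
    VideoCondition("V8", "humanoid", "human", "disarming", "lethal"),
]


def select(**criteria):
    """Return the conditions whose fields match every keyword given."""
    return [
        c for c in CONDITIONS
        if all(getattr(c, field) == value for field, value in criteria.items())
    ]


if __name__ == "__main__":
    # Videos that differ only in defender embodiment (human attacker, non-lethal force).
    for c in select(attacker="human", defender_force="non-lethal",
                    attacker_force="non-lethal"):
        print(c.label, c.defender)
```

Filtering in this way makes it easy to see which videos differ in a single factor, for instance V1 to V3 for defender embodiment, or V6 to V8 for the defender's response to lethal force. Which contrasts the study actually tested is not stated in this list, so any grouping derived from the sketch is an assumption.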

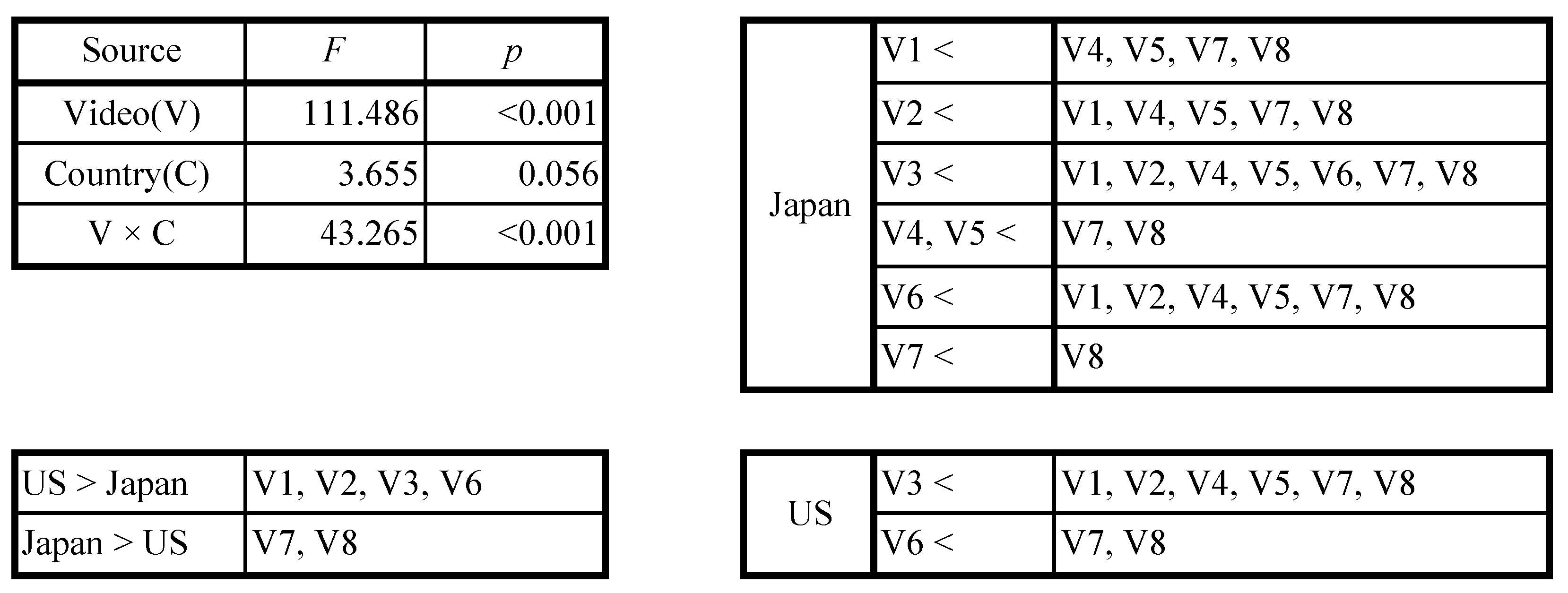

3.6. Statistical Analysis

3.7. Summary of Results

4. Discussion

- Background. We gathered recent information on robots used by law enforcement, military, and pest control groups in various countries, which could potentially be developed and adapted for self-defense.

- Risks for people. We discussed potential merits and demerits of RSD from three perspectives: historical attitudes toward earlier force-wielding robots, comparison with humans, and the unique qualities of RSD. Furthermore, we contrasted the fundamental dilemma of RSD with that of the well-known trolley problem, pointing out some similarities and three differences (regarding utilitarian clarity, the opposite emphasis on “letting die”, and the prevalence of the scenario and problem).

- Negative perceptions. We put forth questions and proposals about how self-defense robots might be designed to put people at ease and avoid violence (e.g., a stable, neutral embodiment with adept communication abilities).

- Tasks. We made various proposals, such as exploring how military ideas like ISTAR and rules of engagement could be translated to RSD, raising the problem of “Byzantine” intention recognition, and describing objects that might also be important to defend.

- Control. We proposed extending AV standards to self-defense robots, such as SAE’s J3016 standard for levels of autonomy, and the SOTIF (ISO/PAS 21448) standard for dealing with recognition failures.

- Culture. Because few studies appear to have examined cultural influences on RSD, we conducted a study, which revealed some cultural differences. A small preference for human defenders found in Japan was not observed in the U.S. In addition, the idea of lethal force by a robot was more acceptable in the U.S.

4.1. Limitations and Future Work

4.2. The Genovese Case—Revisited

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| AMT | Amazon Mechanical Turk |
| AV | Autonomous vehicle |
| HRI | Human–robot interaction |
| ISO | International Organization for Standardization |
| ISR | Intelligence, surveillance, and reconnaissance |
| ISTAR | Intelligence, surveillance, target acquisition, and reconnaissance |
| PAS | Publicly available specification |
| RSD | Robot self-defense |
| SAE | Society of Automotive Engineers |
| SOTIF | Safety of the intended functionality |
| STD | Sexually transmitted disease |
| TNT | 2,4,6-trinitrotoluene |
| UN | United Nations |
| U.S. | United States |
| USD | United States Dollars |

References

- Takooshian, H. Not Just a Bystander: The 1964 Kitty Genovese Tragedy: What Have We Learned. Psychology Today, 24 May 2014. [Google Scholar]

- Active Self Protection. Available online: https://www.youtube.com/@ActiveSelfProtection (accessed on 4 March 2023).

- The Economic Value of Peace 2016: Measuring the Global Impact of Violence and Conflict. 2016. Available online: https://reliefweb.int/report/world/economic-value-peace-2016-measuring-global-economic-impact-violence-and-conflict (accessed on 4 March 2023).

- Krug, E.G.; Mercy, J.A.; Dahlberg, L.L.; Zwi, A.B. The world report on violence and health. Lancet 2002, 360, 1083–1088. [Google Scholar] [CrossRef] [PubMed]

- DeLisi, M. Measuring the cost of crime. In The Handbook of Measurement Issues in Criminology and Criminal Justice; Wiley: Hoboken, NJ, USA, 2016; pp. 416–433. [Google Scholar]

- Hobbes, T. Leviathan or the Matter Form and Power of a Commonwealth, Ecclesiastical and Civil; Simon and Schuster: London, UK, 1886; Volume 21. [Google Scholar]

- Tung, W.F.; Jara Santiago Campos, J. User experience research on social robot application. Libr. Hi Tech 2022, 40, 914–928. [Google Scholar] [CrossRef]

- Zarifhonarvar, A. Economics of ChatGPT: A Labor Market View on the Occupational Impact of Artificial Intelligence. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4350925 (accessed on 15 March 2023).

- Grieco, L. ChatGPT Excitement Sends Investors Flocking to AI Stocks Like Microsoft and Google. 2023. Available online: https://www.proactiveinvestors.co.uk/companies/news/1004479/chatgpt-excitement-sends-investors-flocking-to-ai-stocks-like-microsoft-and-google-1004479.html (accessed on 4 March 2023).

- The Britannica Dictionary: Robot. Available online: https://www.britannica.com/dictionary/robot (accessed on 4 March 2023).

- The Britannica Dictionary: Self-Defense. Available online: https://www.britannica.com/dictionary/self%E2%80%93defense (accessed on 4 March 2023).

- McCormack, W. Targeted Killing at a Distance: Robotics and Self-Defense. Pac. McGeorge Global Bus. Dev. Law J. 2012, 25, 361. [Google Scholar]

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32. [Google Scholar] [CrossRef]

- Peters, M.; Godfrey, C.; McInerney, P.; Soares, C.B.; Khalil, H.; Parker, D. The Joanna Briggs Institute Reviewers’ Manual 2015: Methodology for JBI Scoping Reviews; The Joanna Briggs Institute: Adelaide, Australia, 2015; pp. 6–22. [Google Scholar]

- Haring, K.S.; Novitzky, M.M.; Robinette, P.; De Visser, E.J.; Wagner, A.; Williams, T. The dark side of human–robot interaction: Ethical considerations and community guidelines for the field of HRI. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 689–690. [Google Scholar]

- Martland, C.D. Analysis of the potential impacts of automation and robotics on locomotive rebuilding. IEEE Trans. Eng. Manag. 1987, 34, 92–100. [Google Scholar] [CrossRef]

- Cha, S.S. Customers’ intention to use robot-serviced restaurants in Korea: Relationship of coolness and MCI factors. Int. J. Contemp. Hosp. Manag. 2020, 32, 2947–2968. [Google Scholar] [CrossRef]

- Andersen, M.M.; Schjoedt, U.; Price, H.; Rosas, F.E.; Scrivner, C.; Clasen, M. Playing with fear: A field study in recreational horror. Psychol. Sci. 2020, 31, 1497–1510. [Google Scholar] [CrossRef]

- Zillmann, D. Excitation transfer theory. In The International Encyclopedia of Communication; Wiley: Hoboken, NJ, USA, 2008. [Google Scholar]

- Wood, J.T. Gendered media: The influence of media on views of gender. Gendered Lives Commun. Gender Cult. 1994, 9, 231–244. [Google Scholar]

- Duarte, E.K.; Shiomi, M.; Vinel, A.; Cooney, M. Robot Self-defense: Robots Can Use Force on Human Attackers to Defend Victims. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Napoli, Italy, 29 August–2 September 2022; pp. 1606–1613. [Google Scholar]

- Reid, M. Rethinking the fourth amendment in the age of supercomputers, artificial intelligence, and robots. West Va. Law Rev. 2016, 119, 863. [Google Scholar]

- Gwozdz, J.; Morin, N.; Mowris, R.P. Enabling Semi-Autonomous Manipulation on iRobot’s Packbot. Bachelor’s Thesis, Worcester Polytechnic Institute, Worcester, MA, USA, 2014. [Google Scholar]

- Carruth, D.W.; Bethel, C.L. Challenges with the integration of robotics into tactical team operations. In Proceedings of the 2017 IEEE 15th International Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 26–28 January 2017. [Google Scholar]

- Hayes, M. The Creepy Robot Dog Botched a Test Run With a Bomb Squad. 2020. Available online: https://onezero.medium.com/boston-dynamics-robot-dog-got-stuck-in-sit-mode-during-police-test-emails-reveal-4c8592c7fc2 (accessed on 4 March 2023).

- Farivar, C. Security Robots Expand Across U.S., with Few Tangible Results. 2021. Available online: https://www.nbcnews.com/business/business-news/security-robots-expand-across-u-s-few-tangible-results-n1272421 (accessed on 4 March 2023).

- Jeffrey-Wilensky, J.; Freeman, D. This Police Robot Could Make Traffic Stops Safer. 2019. Available online: https://www.nbcnews.com/mach/science/police-robot-could-make-traffic-stops-safer-ncna1002501 (accessed on 4 March 2023).

- Jackson, R.D. “I Approved It…And I’ll Do It Again”: Robotic Policing and Its Potential for Increasing Excessive Force. In Societal Challenges in the Smart Society; Universidad de La Rioja: La Rioja, Spain, 2020; pp. 511–522. [Google Scholar]

- Prabakar, M.; Kim, J.H. TeleBot: Design concept of telepresence robot for law enforcement. In Proceedings of the 2013 World Congress on Advances in Nano, Biomechanics, Robotics, and Energy Research (ANBRE 2013), Seoul, Republic of Korea, 25–28 August 2013. [Google Scholar]

- Simmons, R. Terry in the Age of Automated Police Officers. Seton Hall Law Rev. 2019, 50, 909. [Google Scholar] [CrossRef]

- Terzian, D. The right to bear (robotic) arms. Penn. State Law Rev. 2012, 117, 755. [Google Scholar] [CrossRef]

- Donlon, M. Automation at the Airport. 2022. Available online: https://electronics360.globalspec.com/article/18513/automation-at-the-airport (accessed on 5 March 2023).

- Lufkin, B. What the World Can Learn from Japan’s Robots. 2020. Available online: https://www.bbc.com/worklife/article/20200205-what-the-world-can-learn-from-japans-robots (accessed on 4 March 2023).

- Glaser, A. 11 Police Robots Patrolling Around the World. 2019. Available online: https://www.wired.com/2016/07/11-police-robots-patrolling-around-world/ (accessed on 5 March 2023).

- Maliphol, S.; Hamilton, C. Smart Policing: Ethical Issues & Technology Management of Robocops. In Proceedings of the 2022 Portland International Conference on Management of Engineering and Technology (PICMET), Portland, OR, USA, 7–11 August 2022; pp. 1–15. [Google Scholar]

- Chia, O. Keeping Watch from the Skies: Police Unveil Two New Drones for Crowd Management, Search and Rescue. 2023. Available online: https://www.straitstimes.com/singapore/courts-crime/keeping-watch-from-the-skies-police-unveil-two-new-drones-for-crowd-management-search-and-rescue (accessed on 4 March 2023).

- Jain, R.; Nagrath, P.; Thakur, N.; Saini, D.; Sharma, N.; Hemanth, D.J. Towards a smarter surveillance solution: The convergence of smart city and energy efficient unmanned aerial vehicle technologies. In Development and Future of Internet of Drones (IoD): Insights, Trends and Road Ahead; Springer: Berlin/Heidelberg, Germany, 2021; pp. 109–140. [Google Scholar]

- Williams, O. China Now Has Robot Police with Facial Recognition. 2017. Available online: https://www.huffingtonpost.co.uk/entry/china-now-has-robot-police-with-facial-recognition_uk_58ac54d2e4b0f077b3ee0dcb (accessed on 5 March 2023).

- Joh, E.E. Policing Police Robots. 2016. Available online: https://www.uclalawreview.org/policing-police-robots (accessed on 4 March 2023).

- Kim, L. Meet South Korea’s New Robotic Prison Guards. 2012. Available online: https://www.digitaltrends.com/cool-tech/meet-south-koreas-new-robotic-prison-guards (accessed on 4 March 2023).

- McKay, C. The carceral automaton: Digital prisons and technologies of detention. Int. J. Crime Justice Soc. Democr. 2022, 11, 100–119. [Google Scholar] [CrossRef]

- White, L. “Iron Man” Power Armour and Robot Dogs Coming to Korean Police. 2022. Available online: https://stealthoptional.com/robotics/iron-man-power-armour-and-robot-dogs-coming-to-korean-police (accessed on 4 March 2023).

- Carbonaro, G. Robot Paw Patrol: The US Space Force Begins Deploying Robots as Guard Dogs. 2022. Available online: https://www.euronews.com/next/2022/08/10/robot-dogs-report-for-duty-the-us-space-force-enlists-robotic-dogs-to-guard-spaceport (accessed on 4 March 2023).

- Kemper, C.; Kolain, M. K9 Police Robots-Strolling Drones, RoboDogs, or Lethal Weapons? In Proceedings of the WeRobot 2022 Conference, Washington, DC, USA, 14–16 September 2022. [Google Scholar]

- Dent, S. Boston Dynamics’ Spot Robot Tested in Combat Training with the French Army. 2021. Available online: https://www.engadget.com/boston-dynamics-spot-robot-combat-training-101732374.html (accessed on 4 March 2023).

- Engineering, I. Unique Military and Police Robots. 2022. Available online: https://youtu.be/Ox6A0hOYL5g (accessed on 4 March 2023).

- Lee, W.; Bang, Y.B.; Lee, K.M.; Shin, B.H.; Paik, J.K.; Kim, I.S. Motion teaching method for complex robot links using motor current. Int. J. Control. Autom. Syst. 2010, 8, 1072–1081. [Google Scholar] [CrossRef]

- Cao, Y.; Yamakawa, Y. Marker-less Kendo Motion Prediction Using High-speed Dual-camera System and LSTM Method. In Proceedings of the 2022 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Hokkaido, Japan, 11–15 July 2022; pp. 159–164. [Google Scholar]

- Jamiepaik. MUSA: Kendo Robot Collective. 2009. Available online: https://www.youtube.com/watch?v=Mh3muo2V818 (accessed on 5 March 2023).

- Yaskawa Electric. Yaskawa Bushido Project/Industrial Robot vs. Sword Master. 2015. Available online: https://www.youtube.com/watch?v=O3XyDLbaUmU (accessed on 5 March 2023).

- Werrell, K.P. The Evolution of the Cruise Missile; Technical Report; Air University Maxwell AFB: Montgomery, AL, USA, 1985. [Google Scholar]

- Brollowski, H. Military Robots and the Principle of Humanity: Distorting the Human Face of the Law? In Armed Conflict and International Law: In Search of the Human Face: Liber Amicorum in Memory of Avril McDonald; Springer: Berlin/Heidelberg, Germany, 2013; pp. 53–96. [Google Scholar]

- Soffar, H. Modular Advanced Armed Robotic System (MAARS Robot) Features, Uses & Design. 2019. Available online: https://www.online-sciences.com/robotics/modular-advanced-armed-robotic-system-maars-robot-features-uses-design/ (accessed on 5 March 2023).

- Chayka, K. Watch This Drone Taser a Guy Until He Collapses. 2014. Available online: https://time.com/19929/watch-this-drone-taser-a-guy-until-he-collapses/ (accessed on 5 March 2023).

- Asaro, P. “Hands up, don’t shoot!” HRI and the automation of police use of force. J. Hum.-Robot. Interact. 2016, 5, 55–69. [Google Scholar] [CrossRef]

- Holt, K. Ghost Robotics Strapped a Gun to Its Robot Dog. 2021. Available online: https://www.engadget.com/robot-dog-gun-ghost-robotics-sword-international-175529912.html (accessed on 5 March 2023).

- Lee, M. Terrifying Video Shows Chinese Robot Attack Dog with Machine Gun Dropped by Drone. 2022. Available online: https://nypost.com/2022/10/26/terrifying-video-shows-chinese-robot-attack-dog-with-machine-gun-dropped-by-drone/ (accessed on 5 March 2023).

- White, L. Russian Army’s RPG-Equipped Robot Dog Can Be Easily Purchased Online. 2022. Available online: https://stealthoptional.com/robotics/russian-armys-rpg-equipped-robot-dog-easily-purchased-online/ (accessed on 5 March 2023).

- Fish, T. Loitering with Intent. 2022. Available online: https://www.asianmilitaryreview.com/2022/12/loitering-with-intent/ (accessed on 5 March 2023).

- Kirschgens, L.A.; Ugarte, I.Z.; Uriarte, E.G.; Rosas, A.M.; Vilches, V.M. Robot hazards: From safety to security. arXiv 2018, arXiv:1806.06681. [Google Scholar]

- Sharkey, N.; Goodman, M.; Ross, N. The coming robot crime wave. Computer 2010, 43, 115–116. [Google Scholar] [CrossRef]

- Thomas, M. Are Police Robots the Future of Law Enforcement? 2022. Available online: https://builtin.com/robotics/police-robot-law-enforcement (accessed on 5 March 2023).

- Bergman, R.; Fassihi, F. The Scientist and the A.I.-Assisted, Remote-Control Killing Machine. The New York Times, 18 September 2021. [Google Scholar]

- Kallenborn, Z. Was a Flying Killer Robot Used in Libya? Quite Possibly. 2021. Available online: https://thebulletin.org/2021/05/was-a-flying-killer-robot-used-in-libya-quite-possibly/ (accessed on 5 March 2023).

- Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned aerial vehicles (UAVs) and artificial intelligence revolutionizing wildlife monitoring and conservation. Sensors 2016, 16, 97. [Google Scholar] [CrossRef]

- Slezak, M. Robots, Lasers, Poison: The High-Tech Bid to Cull Wild Cats in the Outback. 2016. Available online: https://www.theguardian.com/environment/2016/apr/17/robots-lasers-poison-the-high-tech-bid-to-cull-wild-cats-in-the-outback (accessed on 5 March 2023).

- Crumley, B. AeroPest Positions Wasp-Culling Drone for Broader Applications. 2022. Available online: https://dronedj.com/2022/05/23/aeropest-positions-wasp-culling-drone-for-broader-applications/ (accessed on 5 March 2023).

- Hecht, E. Drones in the Nagorno-Karabakh War: Analyzing the Data. Mil. Strateg. Mag. 2022, 7, 31–37. [Google Scholar]

- Lin, P.; Bekey, G.; Abney, K. Autonomous Military Robotics: Risk, Ethics, and Design; Technical Report; California Polytechnic State University San Luis Obispo: San Luis Obispo, CA, USA, 2008. [Google Scholar]

- Dawes, J. UN Fails to Agree on ‘Killer Robot’ Ban as Nations Pour Billions Into Autonomous Weapons Research. 2021. Available online: https://theconversation.com/un-fails-to-agree-on-killer-robot-ban-as-nations-pour-billions-into-autonomous-weapons-research-173616 (accessed on 5 March 2023).

- Froomkin, A.M.; Colangelo, P.Z. Self-defense against robots and drones. Conn. Law Rev. 2015, 48, 1. [Google Scholar]

- King, T.C.; Aggarwal, N.; Taddeo, M.; Floridi, L. Artificial intelligence crime: An interdisciplinary analysis of foreseeable threats and solutions. Sci. Eng. Ethics 2020, 26, 89–120. [Google Scholar] [CrossRef]

- White, L. Killer Robot Cops Are Bad Actually, Decides San Francisco Supervisory Board. 2022. Available online: https://stealthoptional.com/news/killer-robot-cops-bad-actually-decides-san-francisco-supervisory-board/ (accessed on 5 March 2023).

- Sharkey, N.E. The evitability of autonomous robot warfare. Int. Rev. Red Cross 2012, 94, 787–799. [Google Scholar] [CrossRef]

- Corridor Digital. New Robot Makes Soldiers Obsolete (Corridor Digital). 2019. Available online: https://www.youtube.com/watch?v=y3RIHnK0_NE (accessed on 5 March 2023).

- Gambino, A.; Fox, J.; Ratan, R.A. Building a stronger CASA: Extending the computers are social actors paradigm. Hum.-Mach. Commun. 2020, 1, 5. [Google Scholar] [CrossRef]

- Nedim, U. Is It a Crime Not to Help Someone in Danger? 2015. Available online: https://www.sydneycriminallawyers.com.au/blog/is-it-a-crime-not-to-help-someone-in-danger/ (accessed on 5 March 2023).

- Foot, P. The problem of abortion and the doctrine of the double effect. Oxf. Rev. 1967, 5, 5–15. [Google Scholar]

- Thomson, J.J. The trolley problem. Yale Law J. 1984, 94, 1395. [Google Scholar] [CrossRef]

- Lim, H.S.M.; Taeihagh, A. Algorithmic decision-making in AVs: Understanding ethical and technical concerns for smart cities. Sustainability 2019, 11, 5791. [Google Scholar] [CrossRef]

- Goodall, N.J. Ethical decision making during automated vehicle crashes. Transp. Res. Rec. 2014, 2424, 58–65. [Google Scholar] [CrossRef]

- Lin, P. Robot cars and fake ethical dilemmas. Forbes Magazine, 3 April 2017. [Google Scholar]

- Brooks, R. Unexpected Consequences of Self Driving Cars. 2017. Available online: http://rodneybrooks.com/unexpected-consequences-of-self-driving-cars (accessed on 5 March 2023).

- Stein, B.D.; Jaycox, L.H.; Kataoka, S.; Rhodes, H.J.; Vestal, K.D. Prevalence of child and adolescent exposure to community violence. Clin. Child Fam. Psychol. Rev. 2003, 6, 247–264. [Google Scholar] [CrossRef]

- Joh, E.E. Private security robots, artificial intelligence, and deadly force. UC Davis Law Rev. 2017, 51, 569. [Google Scholar]

- Calo, R. Robotics and the Lessons of Cyberlaw. Calif. Law Rev. 2015, 103, 513. [Google Scholar]

- Robinette, P.; Li, W.; Allen, R.; Howard, A.M.; Wagner, A.R. Overtrust of robots in emergency evacuation scenarios. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 101–108. [Google Scholar]

- Lacey, C.; Caudwell, C. Cuteness as a ‘dark pattern’ in home robots. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 374–381. [Google Scholar]

- Carr, N.K. Programmed to protect and serve: The dawn of drones and robots in law enforcement. J. Air Law Commer. 2021, 86, 183. [Google Scholar]

- Mamak, K. Whether to save a robot or a human: On the ethical and legal limits of protections for robots. Front. Robot. AI 2021, 8, 712427. [Google Scholar] [CrossRef] [PubMed]

- Vadymovych, S.Y. Artificial personal autonomy and concept of robot rights. Eur. J. Law Political Sci. 2017, 1, 17–21. [Google Scholar]

- Garber, M. Funerals for Fallen Robots. 2013. Available online: https://www.theatlantic.com/technology/archive/2013/09/funerals-for-fallen-robots/279861 (accessed on 4 March 2023).

- Darling, K. Extending legal protection to social robots: The effects of anthropomorphism, empathy, and violent behavior towards robotic objects. In Robot Law; Edward Elgar Publishing: Cheltenham, UK, 2016; pp. 213–232. [Google Scholar]

- Rehm, M.; Krogsager, A. Negative affect in human robot interaction—Impoliteness in unexpected encounters with robots. In Proceedings of the 2013 IEEE RO-MAN, Gyeongju, Republic of Korea, 26–29 April 2013; pp. 45–50. [Google Scholar]

- Connolly, J.; Mocz, V.; Salomons, N.; Valdez, J.; Tsoi, N.; Scassellati, B.; Vázquez, M. Prompting prosocial human interventions in response to robot mistreatment. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 211–220. [Google Scholar]

- Bartneck, C.; Keijsers, M. The morality of abusing a robot. Paladyn J. Behav. Robot. 2020, 11, 271–283. [Google Scholar] [CrossRef]

- Takas, K.L. Exploring How and Why Women Become Involved in Relationships with Incarcerated Men. Bachelor’s Thesis, University of South Florida, Tampa, FL, USA, 2004. [Google Scholar]

- Tan, X.Z.; Vázquez, M.; Carter, E.J.; Morales, C.G.; Steinfeld, A. Inducing bystander interventions during robot abuse with social mechanisms. In Proceedings of the 2018 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Chicago, IL, USA, 5–8 March 2018; pp. 169–177. [Google Scholar]

- Bartneck, C.; Rosalia, C.; Menges, R.; Deckers, I. Robot abuse-a limitation of the media equation. In Proceedings of the Interact 2005 Workshop on Abuse, Rome, Italy, 12 September 2005. [Google Scholar]

- Eyssel, F.; Kuchenbrandt, D.; Bobinger, S. Effects of anticipated human–robot interaction and predictability of robot behavior on perceptions of anthropomorphism. In Proceedings of the 6th International Conference on Human-Robot Interaction, Lausanne, Switzerland, 6–9 March 2011; pp. 61–68. [Google Scholar]

- Natarajan, M.; Gombolay, M. Effects of anthropomorphism and accountability on trust in human robot interaction. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; pp. 33–42. [Google Scholar]

- Garcia Goo, H.; Winkle, K.; Williams, T.; Strait, M.K. Robots Need the Ability to Navigate Abusive Interactions. In Proceedings of the 2022 ACM/IEEE International Conference on Human-Robot Interaction, Online, 7–10 March 2022. [Google Scholar]

- Luria, M.; Sheriff, O.; Boo, M.; Forlizzi, J.; Zoran, A. Destruction, catharsis, and emotional release in human–robot interaction. ACM Trans. Hum.-Robot. Interact. THRI 2020, 9, 22. [Google Scholar] [CrossRef]

- Brščić, D.; Kidokoro, H.; Suehiro, Y.; Kanda, T. Escaping from children’s abuse of social robots. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction, Portland, OR, USA, 2–5 March 2015; pp. 59–66. [Google Scholar]

- Lucas, H.; Poston, J.; Yocum, N.; Carlson, Z.; Feil-Seifer, D. Too big to be mistreated? Examining the role of robot size on perceptions of mistreatment. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 1071–1076. [Google Scholar]

- Keijsers, M.; Bartneck, C. Mindless robots get bullied. In Proceedings of the 2018 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Chicago, IL, USA, 5–8 March 2018; pp. 205–214. [Google Scholar]

- Złotowski, J.; Sumioka, H.; Bartneck, C.; Nishio, S.; Ishiguro, H. Understanding anthropomorphism: Anthropomorphism is not a reverse process of dehumanization. In Proceedings of the International Conference on Social Robotics, Tsukuba, Japan, 22–24 November 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 618–627. [Google Scholar]

- Yamada, S.; Kanda, T.; Tomita, K. An escalating model of children’s robot abuse. In Proceedings of the 2020 15th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Cambridge, UK, 23–26 March 2020; pp. 191–199. [Google Scholar]

- Fogg, B.J. A behavior model for persuasive design. In Proceedings of the 4th international Conference on Persuasive Technology, Claremont, CA, USA, 26–29 April 2009; pp. 1–7. [Google Scholar]

- Davidson, R.; Sommer, K.; Nielsen, M. Children’s judgments of anti-social behaviour towards a robot: Liking and learning. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 709–711. [Google Scholar]

- Nomura, T.; Kanda, T.; Yamada, S. Measurement of moral concern for robots. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 540–541. [Google Scholar]

- Zhang, Q.; Zhao, W.; Chu, S.; Wang, L.; Fu, J.; Yang, J.; Gao, B. Research progress of nuclear emergency response robot. IOP Conf. Ser. Mater. Sci. Eng. 2018, 452, 042102. [Google Scholar] [CrossRef]

- Sakai, Y. Japan’s Decline as a Robotics Superpower: Lessons From Fukushima. Asia Pac. J. 2011, 9, 3546. [Google Scholar]

- Titiriga, R. Autonomy of Military Robots: Assessing the Technical and Legal (Jus In Bello) Thresholds. J. Marshall J. Inf. Technol. Privacy Law 2015, 32, 57. [Google Scholar]

- Hayes, G. Balancing Dangers: An Interview with John Farnam. 2012. Available online: https://armedcitizensnetwork.org/archives/253-february-2012 (accessed on 5 March 2023).

- Coffee-Johnson, L.; Perouli, D. Detecting anomalous behavior of socially assistive robots in geriatric care facilities. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 582–583. [Google Scholar]

- Bambauer, J.R. Dr. Robot. UC Davis Law Rev. 2017, 51, 383. [Google Scholar]

- Cox-George, C.; Bewley, S. I, Sex Robot: The health implications of the sex robot industry. BMJ Sex. Reprod. Health 2018, 44, 161–164. [Google Scholar] [CrossRef]

- Xilun, D.; Cristina, P.; Alberto, R.; Zhiying, W. Novel robot for safety protection identification & detect. In Proceedings of the 2007 IEEE International Workshop on Safety, Security and Rescue Robotics, Rome, Italy, 17–19 September 2007; pp. 1–3. [Google Scholar]

- Matsumoto, R.; Nakayama, H.; Harada, T.; Kuniyoshi, Y. Journalist robot: Robot system making news articles from real world. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 1234–1241. [Google Scholar]

- Latar, N.L. The robot journalist in the age of social physics: The end of human journalism? In The New World of Transitioned Media: Digital Realignment and Industry Transformation; Springer: Berlin/Heidelberg, Germany, 2015; pp. 65–80. [Google Scholar]

- Desk, N. Manchester Arena’s AI Weapon-Scanning Technology—Does It Work?—BBC Newsnight. 2022. Available online: https://theglobalherald.com/news/manchester-arenas-ai-weapon-scanning-technology-does-it-work-bbc-newsnight/ (accessed on 5 March 2023).

- Faife, C. After Uvalde, Social Media Monitoring Apps Struggle to Justify Surveillance. 2022. Available online: https://www.theverge.com/2022/5/31/23148541/digital-surveillance-school-shootings-social-sentinel-uvalde (accessed on 5 March 2023).

- Rotaru, V.; Huang, Y.; Li, T.; Evans, J.; Chattopadhyay, I. Event-level prediction of urban crime reveals a signature of enforcement bias in US cities. Nat. Hum. Behav. 2022, 6, 1056–1068.

- Gerke, H.C.; Hinton, T.G.; Takase, T.; Anderson, D.; Nanba, K.; Beasley, J.C. Radiocesium concentrations and GPS-coupled dosimetry in Fukushima snakes. Sci. Total Environ. 2020, 734, 139389.

- Saha, D.; Mehta, D.; Altan, E.; Chandak, R.; Traner, M.; Lo, R.; Gupta, P.; Singamaneni, S.; Chakrabartty, S.; Raman, B. Explosive sensing with insect-based biorobots. Biosens. Bioelectron. X 2020, 6, 100050.

- Yaacoub, J.P.A.; Noura, H.N.; Salman, O.; Chehab, A. Robotics cyber security: Vulnerabilities, attacks, countermeasures, and recommendations. Int. J. Inf. Secur. 2022, 21, 115–158.

- Clark, G.W.; Doran, M.V.; Andel, T.R. Cybersecurity issues in robotics. In Proceedings of the 2017 IEEE Conference on Cognitive and Computational Aspects of Situation Management (CogSIMA), Savannah, GA, USA, 27–31 March 2017; pp. 1–5.

- Cerrudo, C.; Apa, L. Hacking Robots before Skynet. IOActive Website. 2017. Available online: www.ioactive.com/pdfs/Hacking-Robots-Before-Skynet.pdf (accessed on 15 February 2023).

- Hayashi, Y.; Wakabayashi, K.; Shimojyo, S.; Kida, Y. Using decision support systems for juries in court: Comparing the use of real and CG robots. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Republic of Korea, 11–14 March 2019; pp. 556–557.

- Koay, K.L.; Dautenhahn, K.; Woods, S.; Walters, M.L. Empirical results from using a comfort level device in human–robot interaction studies. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 194–201.

- Guiochet, J.; Do Hoang, Q.A.; Kaaniche, M.; Powell, D. Model-based safety analysis of human–robot interactions: The MIRAS walking assistance robot. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–7.

- Song, S.; Yamada, S. Bioluminescence-inspired human–robot interaction: Designing expressive lights that affect human’s willingness to interact with a robot. In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 224–232.

- Flaherty, C. Micro-UAV Augmented 3D Tactics. Small Wars Journal. 2018. Available online: https://smallwarsjournal.com/jrnl/art/micro-uav-augmented-3d-tactics (accessed on 5 March 2023).

- Nakamura, T.; Tomioka, T. Seashore robot for environmental protection and inspection. In Proceedings of the 1993 IEEE/Tsukuba International Workshop on Advanced Robotics, Tsukuba, Japan, 8–9 November 1993; pp. 69–74.

- Zhang, W.; Ai, C.S.; Zhang, Y.Z.; Li, W.X. Intelligent path tracking control for plant protection robot based on fuzzy pd. In Proceedings of the 2017 2nd International Conference on Advanced Robotics and Mechatronics (ICARM), Hefei and Tai’an, China, 27–31 August 2017; pp. 88–93.

- Weng, Y.H.; Gulyaeva, S.; Winter, J.; Slavescu, A.; Hirata, Y. HRI for legal validation: On embodiment and data protection. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 1387–1394.

- Bethel, C.L.; Eakin, D.; Anreddy, S.; Stuart, J.K.; Carruth, D. Eyewitnesses are misled by human but not robot interviewers. In Proceedings of the 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3–6 March 2013; pp. 25–32.

- Singh, A.K.; Baranwal, N.; Nandi, G.C. Human perception based criminal identification through human robot interaction. In Proceedings of the 2015 Eighth International Conference on Contemporary Computing (IC3), Noida, India, 20–22 August 2015; pp. 196–201.

- Rueben, M.; Bernieri, F.J.; Grimm, C.M.; Smart, W.D. User feedback on physical marker interfaces for protecting visual privacy from mobile robots. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 507–508.

- Xia, L.; Gori, I.; Aggarwal, J.K.; Ryoo, M.S. Robot-centric Activity Recognition from First-person RGB-D Videos. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 357–364.

- Garg, N.; Roy, N. Enabling self-defense in small drones. In Proceedings of the 21st International Workshop on Mobile Computing Systems and Applications, Austin, TX, USA, 3 March 2020; pp. 15–20.

- Meng, C.; Wang, T.; Chou, W.; Luan, S.; Zhang, Y.; Tian, Z. Remote surgery case: Robot-assisted teleneurosurgery. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA’04, New Orleans, LA, USA, 26 April–1 May 2004; Volume 1, pp. 819–823.

- Trost, M.J.; Chrysilla, G.; Gold, J.I.; Matarić, M. Socially-Assistive robots using empathy to reduce pain and distress during peripheral IV placement in children. Pain Res. Manag. 2020, 2020, 7935215.

- Wanebo, T. Remote killing and the Fourth Amendment: Updating Constitutional law to address expanded police lethality in the robotic age. UCLA Law Rev. 2018, 65, 976.

- Casper, J.L.; Murphy, R.R. Workflow study on human–robot interaction in USAR. In Proceedings of the 2002 IEEE International Conference on Robotics and Automation (Cat. No. 02CH37292), Washington, DC, USA, 11–15 May 2002; Volume 2, pp. 1997–2003.

- Ventura, R.; Lima, P.U. Search and rescue robots: The civil protection teams of the future. In Proceedings of the 2012 Third International Conference on Emerging Security Technologies, Lisbon, Portugal, 5–7 September 2012; pp. 12–19.

- Harriott, C.E.; Adams, J.A. Human performance moderator functions for human–robot peer-based teams. In Proceedings of the 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Osaka, Japan, 2–5 March 2010; pp. 151–152.

- Weiss, A.; Wurhofer, D.; Lankes, M.; Tscheligi, M. Autonomous vs. tele-operated: How people perceive human–robot collaboration with HRP-2. In Proceedings of the 2009 4th ACM/IEEE International Conference on Human-Robot Interaction (HRI), La Jolla, CA, USA, 9–13 March 2009; pp. 257–258.

- Nasir, J.; Oppliger, P.; Bruno, B.; Dillenbourg, P. Questioning Wizard of Oz: Effects of Revealing the Wizard behind the Robot. In Proceedings of the 31st IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Napoli, Italy, 29 August–2 September 2022.

- Tozadore, D.; Pinto, A.; Romero, R.; Trovato, G. Wizard of Oz vs. autonomous: Children’s perception changes according to robot’s operation condition. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 664–669.

- Bennett, M.; Williams, T.; Thames, D.; Scheutz, M. Differences in interaction patterns and perception for teleoperated and autonomous humanoid robots. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 6589–6594.

- Zheng, K.; Glas, D.F.; Kanda, T.; Ishiguro, H.; Hagita, N. How many social robots can one operator control? In Proceedings of the 2011 6th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Lausanne, Switzerland, 6–9 March 2011; pp. 379–386.

- Hopkins, D.; Schwanen, T. Talking about automated vehicles: What do levels of automation do? Technol. Soc. 2021, 64, 101488.

- Tian, L.; Oviatt, S. A taxonomy of social errors in human–robot interaction. ACM Trans. Hum.-Robot. Interact. THRI 2021, 10, 13.

- Halbach, T.; Schulz, T.; Leister, W.; Solheim, I. Robot-Enhanced Language Learning for Children in Norwegian Day-Care Centers. Multimodal Technol. Interact. 2021, 5, 74.

- Baeth, M.J.; Aktas, M.S. Detecting misinformation in social networks using provenance data. Concurr. Comput. Pract. Exp. 2019, 31, e4793.

- Friedman, N. Word of the Week: Cunningham’s Law. 2010. Available online: https://nancyfriedman.typepad.com/away_with_words/2010/05/word-of-the-week-cunninghams-law.html (accessed on 5 March 2023).

- Alemi, M.; Meghdari, A.; Ghazisaedy, M. Employing humanoid robots for teaching English language in Iranian junior high-schools. Int. J. Humanoid Robot. 2014, 11, 1450022.

- Vincent, J. The US Is Testing Robot Patrol Dogs on Its Borders. 2022. Available online: https://www.theverge.com/2022/2/3/22915760/us-robot-dogs-border-patrol-dhs-tests-ghost-robotics (accessed on 5 March 2023).

- Olsen, T.; Stiffler, N.M.; O’Kane, J.M. Rapid Recovery from Robot Failures in Multi-Robot Visibility-Based Pursuit-Evasion. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 9734–9741.

- ISO 21448:2022; Road Vehicles—Safety of the Intended Functionality. ISO: Geneva, Switzerland, 2022. Available online: https://www.iso.org/standard/77490.html (accessed on 5 March 2023).

- Seok, S.; Hwang, E.; Choi, J.; Lim, Y. Cultural differences in indirect speech act use and politeness in human–robot interaction. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Sapporo, Japan, 7–10 March 2022; pp. 1–8.

- Persson, A.; Laaksoharju, M.; Koga, H. We Mostly Think Alike: Individual Differences in Attitude Towards AI in Sweden and Japan. Rev. Socionetw. Strateg. 2021, 15, 123–142.

- Bröhl, C.; Nelles, J.; Brandl, C.; Mertens, A.; Nitsch, V. Human–robot collaboration acceptance model: Development and comparison for Germany, Japan, China and the USA. Int. J. Soc. Robot. 2019, 11, 709–726.

- Heine, S.J. Cultural Psychology; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2010.

- West, D.M. What Happens if Robots Take the Jobs? The Impact of Emerging Technologies on Employment and Public Policy; Centre for Technology Innovation at Brookings: Washington, DC, USA, 2015.

- Violent Crime Rates by Country. 2018. Available online: https://wisevoter.com/country-rankings/violent-crime-rates-by-country/ (accessed on 5 March 2023).

- Grinshteyn, E.; Hemenway, D. Violent death rates: The US compared with other high-income OECD countries, 2010. Am. J. Med. 2016, 129, 266–273.

- Lies, E. Abe Assassination Stuns Japan, a Country Where Gun Violence Is Rare. 2022. Available online: https://www.reuters.com/world/asia-pacific/mostly-gun-free-nation-japanese-stunned-by-abe-killing-2022-07-08/ (accessed on 5 March 2023).

- Stack, S. The effect of the media on suicide: Evidence from Japan, 1955–1985. Suicide Life-Threat. Behav. 1996, 26, 132–142.

- Penal Code. 2017. Available online: https://www.japaneselawtranslation.go.jp/en/laws/view/3581/en (accessed on 5 March 2023).

- Cheng, C.; Hoekstra, M. Does strengthening self-defense law deter crime or escalate violence? Evidence from expansions to castle doctrine. J. Hum. Resour. 2013, 48, 821–854.

- Self Defense and “Stand Your Ground”. 2022. Available online: https://www.ncsl.org/civil-and-criminal-justice/self-defense-and-stand-your-ground (accessed on 5 March 2023).

- Schoettle, B.; Sivak, M. Public Opinion about Self-Driving Vehicles in China, India, Japan, the US, the UK, and Australia; Technical Report; University of Michigan, Ann Arbor, Transportation Research Institute: Ann Arbor, MI, USA, 2014.

- Yakubovich, A.R.; Esposti, M.D.; Lange, B.C.; Melendez-Torres, G.; Parmar, A.; Wiebe, D.J.; Humphreys, D.K. Effects of laws expanding civilian rights to use deadly force in self-defense on violence and crime: A systematic review. Am. J. Public Health 2021, 111, e1–e14.

- Gallimore, D.; Lyons, J.B.; Vo, T.; Mahoney, S.; Wynne, K.T. Trusting robocop: Gender-based effects on trust of an autonomous robot. Front. Psychol. 2019, 10, 482.

- Duarte, E.K.; Shiomi, M.; Vinel, A.; Cooney, M. Robot Self-defense: Robot, don’t hurt me, no more. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Sapporo, Japan, 7–10 March 2022; pp. 742–745.

- Collier, R. Masculinities, Crime and Criminology; Sage: San Mateo, CA, USA, 1998.

- Robot Self-Defense Playlist. 2022. Available online: youtube.com/watch?v=F5y7dPy41p0&list=PLtGv2XOitdkQsEsPX528cmat5cnVgd15V (accessed on 5 March 2023).

- Two-Way Mixed ANOVA. Available online: https://guides.library.lincoln.ac.uk/mash/statstest/ANOVA_mixed (accessed on 5 March 2023).

- Fitter, N.T.; Mohan, M.; Kuchenbecker, K.J.; Johnson, M.J. Exercising with Baxter: Preliminary support for assistive social-physical human–robot interaction. J. Neuroeng. Rehabil. 2020, 17, 19.

- Meola, A. Generation Z News: Latest Characteristics, Research, and Facts. 2023. Available online: https://www.insiderintelligence.com/insights/generation-z-facts/ (accessed on 4 March 2023).

- Smith, T.W. The polls: Gender and attitudes toward violence. Public Opin. Q. 1984, 48, 384–396.

- Walters, K.; Christakis, D.A.; Wright, D.R. Are Mechanical Turk worker samples representative of health status and health behaviors in the US? PLoS ONE 2018, 13, e0198835.

- Florida, R. What Is It Exactly That Makes Big Cities Vote Democratic? 2013. Available online: https://www.bloomberg.com/news/articles/2013-02-19/what-is-it-exactly-that-makes-big-cities-vote-democratic (accessed on 5 March 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cooney, M.; Shiomi, M.; Duarte, E.K.; Vinel, A. A Broad View on Robot Self-Defense: Rapid Scoping Review and Cultural Comparison. Robotics 2023, 12, 43. https://doi.org/10.3390/robotics12020043
