1. Introduction

Dementia affects nearly 55 million people worldwide and, driven mainly by the aging of the population, its prevalence is expected to increase to 70 million by 2030 [1]. People with dementia (PwD) experience a decline in mental ability that interferes with their activities of daily life, reducing their quality of life and forcing many of them to require assistance from caregivers. Most of this care is provided by family members, sometimes referred to as the invisible second patient, who often experience significant burden, stress and economic hardship as a result of the demands of the care [2].

There is no known cure for some types of dementia, such as Alzheimer’s disease, which accounts for 60–70% of all dementia cases. Thus, treatments mostly rely on pharmacological and non-pharmacological interventions that address the symptoms rather than the causes of the disease [3].

PwD can experience a variety of behavioral and psychological symptoms of dementia (BPSD), such as anxiety, depression, aggression and wandering. Each individual with dementia experiences the disease differently, influenced by their initial predisposing factors, lifelong events, personality and current living conditions [4]. Moreover, PwD often experience social disengagement and apathy, disinhibition and loss of social tact and propriety, and reduced empathy or sympathy for others [5]. Altogether, these challenging dementia-related behaviors affect their relationships with their caregivers and close family members and friends, reduce their quality of life, and may lead to isolation and social withdrawal.

These symptoms are problematic not only for PwD, but also for their caregivers. In addition, the effects of challenging behaviors might be felt differently by people caring for the same PwD. For instance, pacing is a type of wandering behavior, experienced by some PwD. If provoked by anxiety or lack of physical activity, pacing can help alleviate these causes and be helpful for the individual with dementia. Yet, a caregiver might find this behavior disturbing and disruptive. Thus, strategies to deal with BPSD need to address the needs of both PwD and their caregivers.

Disruptive eating behaviors (DEBs) are one of the most frequent concerns raised by informal caregivers [6]. These behaviors include becoming agitated and angry at mealtimes or refusing to eat the food offered [7,8]. As with other behavioral and psychological symptoms of dementia, DEBs change over time and manifest differently in each individual.

Increasingly, non-pharmacological interventions for dementia make use of assistive technologies to alleviate some of these caring, behavioral and social challenges. For example, a smartwatch may detect that the individual with dementia is wandering outdoors and notify the caregiver, or play relaxing music on a smart speaker to help reduce their anxiety or apathy. Socially assistive robots (SARs) have also been explored in dementia care for several years [9], showing promising results in helping PwD who exhibit challenging behaviors, but still generally lacking strong evidence of their effectiveness [10]. SARs have been developed to act as companions [11], exercise coaches [12], daily living assistants [13,14] and facilitators in recreational activities [15].

An Ambient-assisted Intervention System (AaIS) leverages pervasive technologies to detect problematic behaviors in PwD in order to enact interventions in an overly disruptive environment, suggest interventions to the caregiver or prompt the PwD to carry out comforting activities [16]. By detecting problematic behaviors and inferring probable causes, behavior-aware applications could provide tailored and more opportune interventions, notifying caregivers, offering assurances to the patient or directly modifying the physical environment. These interventions aim at reducing the incidence of problematic behaviors and ultimately stimulating changes in PwD behaviors. Socially assistive robots, which can detect abnormal behaviors through their sensors and interact with the user appropriately, seem a promising means to enact ambient-assisted interventions.

While some of these advances are possible due to the availability of increasingly powerful and versatile hardware and software components, the design and evaluation of SARs for dementia care involve numerous challenges and face a myriad of tensions and tradeoffs for human–robot interaction (HRI) design. For example, PwD exhibit visual, cognitive, speech or hearing deficits and other diverse abilities in communication that tend to deteriorate over time [17]. These challenges influence how PwD engage with the SAR to provide the desired user experience, becoming the central focus of interaction design. Technology adoption also needs to be considered during the evaluation of SARs, in this case, by both people with dementia and caregivers. This issue is increasingly being explored, with perceived barriers related to the complexity and robustness of the technology, and to organizational and contextual factors such as settings and regulations [18].

In this paper, we specifically address challenging eating behaviors, which are experienced by many people with dementia and place a considerable burden on informal caregivers. This is approached through the design of a SAR that acts as an eating companion for PwD. We describe the different phases of the user-centered design process in which formal and informal caregivers were actively involved. The implementation of the SAR makes use of the EVA robotic platform, which was extended with trained machine learning algorithms to visually recognize relevant user behaviors and adapt the robot’s behavior accordingly. The design was assessed by formal and informal caregivers for the engagement of the robot and their perception of user adoption, while the implementation was evaluated in terms of the accuracy with which relevant behaviors are detected and thus the capacity of the robot to act autonomously to address the task at hand.

2. Related Work

Research in HRI for dementia care usually focuses on either human factors, evaluating the potential acceptance, utility and usability of robots, or how to resolve technical challenges including the interaction between hardware (specifically sensors and actuators) and software [19].

The study of interactions between humans and robots is the main focus of HRI research and practice. It involves understanding users’ needs and their expectations, as well as designing, developing and evaluating the affordances of the robots aimed at satisfying the needs of PwD. Since SARs are meant to interact and communicate with people, their success depends on the proper design and evaluation of the behaviors that will become the basis of their interaction. As social agents, these robots should be designed for successful interactions and their behaviors should respect social norms and user expectations [20]. When the user is an individual with dementia there are additional challenges related to interaction design that need to be taken into account.

Interactions between robots and humans could involve verbal or non-verbal communication. Recent advances in speech recognition and synthesis have facilitated the development of conversational robots, that is, robots that rely mostly on speech as a means of interaction. Conversing with a person with dementia has been identified as a challenging task, prompting authors and organizations to propose conversational strategies [21,22]. Examples of such strategies include the use of short and simple sentences and giving the PwD sufficient time to respond. While strategies such as these can be incorporated in a conversational robot with relative ease, others, such as rephrasing repetitive messages or conveying interest in the conversation, can be more challenging. Importantly, verbal communication has been recognized as one of the most important strategies in the treatment of behavioral disorders associated with dementia [3].

Along these lines, simple strategies like using the name of the individual in the dialog of the interaction can help establish and maintain a relationship in HRI [12] and more broadly in HCI [23]. Additional strategies that have been recognized as useful to promote engagement in HCI and HRI include the use of humor and references to mutual knowledge [23], or the use of positive feedback [12,21]. For example, a long-term study of the interactions with a receptionist robot produced some design recommendations to facilitate a more natural interaction [24]: (1) the use of greetings to make the robot engaging and shape expectations of the interaction; (2) the use of turn-taking to engage the user and allow for short interactions; (3) allowing for common ground to be established between the robot and the user; and (4) providing mechanisms to end the interaction using social norms. While the robot, placed at the entrance of a university building, favored brief interactions, these findings largely apply to PwD and are consistent with recommendations for conversing with PwD [22].

On the other hand, significant research has been conducted in the use of facial expressions and movement, mostly of head, arms and hands, to convey attention or emotion in support of the interaction. People at advanced stages of dementia might have difficulties understanding speech and often experience a loss of peripheral vision as well as difficulties recognizing emotions [25]. The Paro robot, for instance, which simulates a baby seal, has successfully used both sound and movement for interaction, and has a furry cover that makes it particularly pleasant to touch and hug [26].

An additional relevant finding from the HRI literature is that the robot should avoid acting repetitively, which might cause the user to perceive the robot as having reduced intelligence and being less trustworthy [12]. However, since PwD tend to experience a loss of short-term memory, repetitiveness might be less of an issue in PwD–robot interaction with users who experience moderate and advanced stages of dementia.

SARs are specifically intended to assist in a therapeutic way by providing physical assistance, especially when conducting activities of daily living, acting as companions and for therapy delivery. However, there is also early evidence of the positive effect of SARs in addressing symptoms of dementia such as agitation and depression [27].

Most research in this area has explored the role of SARs as companions or guides, placing a special focus on measuring efficacy. For example, [28] used the Paro robot to investigate its social effects in both a daycare center and a home setting with PwD. A pilot randomized controlled trial showed that Paro improved social expressions that might indicate improvement in affection and increased communication utterances with staff. Similarly, the Paro robot has been used to investigate how emotions might signal affection during a reading group. A pilot randomized controlled trial showed that using Paro in the reading group had a positive impact on quality of life and reduced anxiety and apathy [29]. Following the notions of the COACH system, originally proposed to help PwD when conducting activities of daily living (ADLs), others have explored the development of robots to provide similar guidance. In particular, the robot “Ed” was designed to provide step-by-step guidance to support PwD when making tea [30]. A pilot study with five individuals with dementia showed that Ed is feasible and usable in this context. Overall, this body of work shows that, guided by knowledge of factors which affect acceptability [31], and only when moving beyond feasibility and usability, researchers are able to observe effects from the use of SARs with health-related measurable outcomes. Although using commercially available prototypes has shown positive engagement, it paradoxically limits the way the intervention is being conducted with respect to how such measurements could be collected. Therefore, there is an untapped potential to understand how we can integrate user-centered techniques to develop context-specific prototypes that are more appropriate for therapeutic interventions while maintaining a level of engagement that will enable testing for efficacy.

3. Methods and Tools

3.1. The EVA Robotics Platform

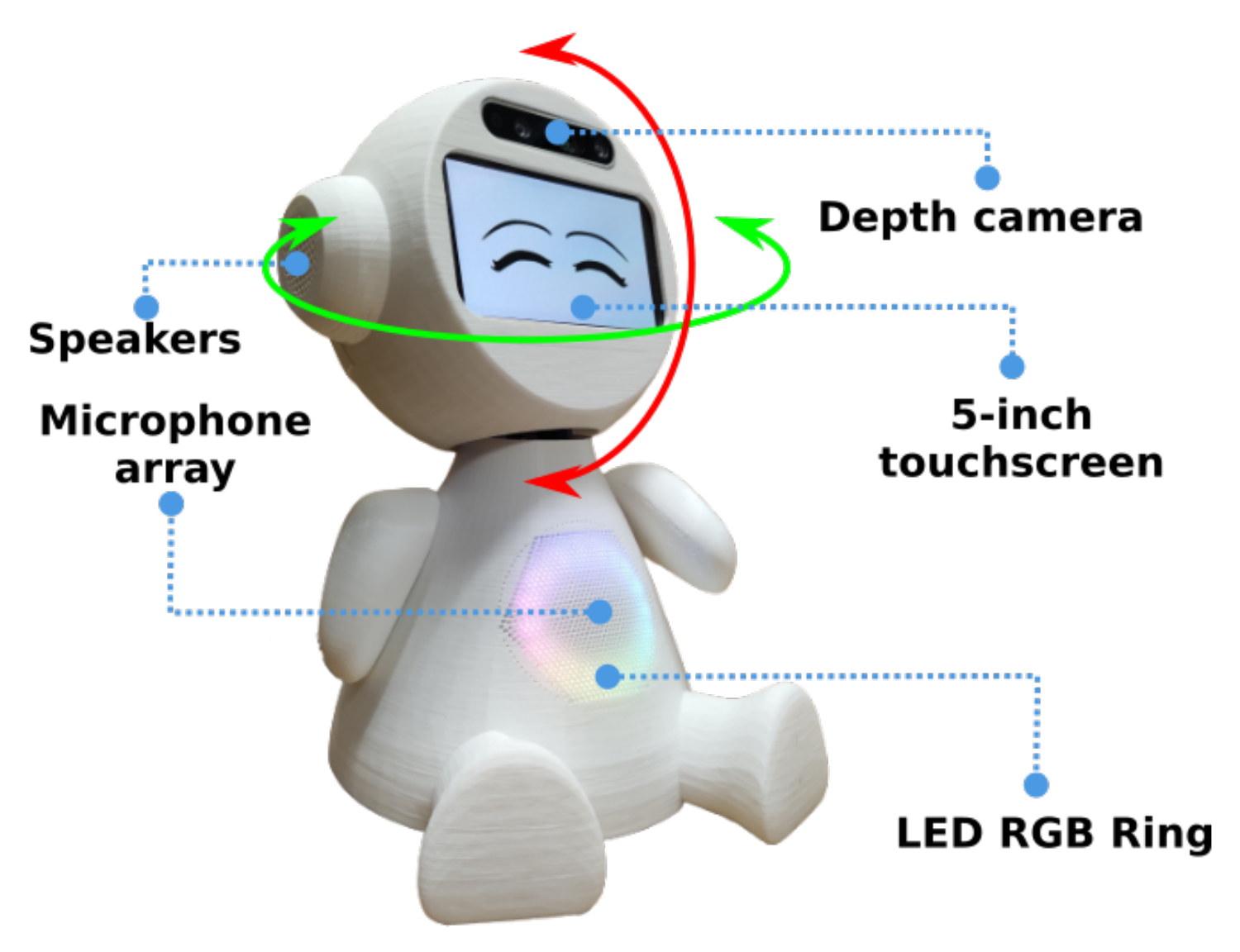

The EVA (Embodied Voice Assistant) robot (https://eva-social-robot.github.io/, accessed on 10 January 2023) is an open-source platform initially designed and developed to conduct cognitive stimulation therapies for people with dementia guided by a conversational robot [32,33]. It has since been extended as a research tool to support studies in human–robot interaction with different populations. The platform includes specifications and resources to build four versions of fully functional social robots at an affordable cost, including 3D models, hardware schematics, assembly manuals, and a software framework to design, implement and test interactions. The standard version includes a microphone array and a speaker to support the voice interaction, and a 5-inch touchscreen display used to show facial expressions, such as attention or emotions, and occasionally animations or short videos. Furthermore, an RGB LED ring fixed on the chest can display light animations to indicate the robot’s status. The intermediate version (see Figure 1) adds two servo-motors providing 2 degrees of freedom (DOF) in the robot’s head, and a depth camera to enable computer vision components such as user detection and activity recognition. The mobile version adds a platform based on TurtleBot to explore more complex body gestures and mobility features. Finally, a fourth version, about half the size of the standard version, is aimed at a lower budget (no camera, no mobility), with components costing about half the price of the standard version.

The platform’s software architecture includes front-end modules to enable and configure the social robot’s features, attributes and abilities (see Figure 2). The core modules control the logic and hardware components of the social robot, and include the back-end components: local server, behavior controller, interaction controller, interaction generator and behaviors editor. The platform was developed using the Node.js run-time environment and deployed on a Raspberry Pi board.

EVA includes speech, natural language processing and a synthesis of emotions. It can work in two modes, autonomous and operated. In the autonomous mode, EVA processes the utterances from users to generate a verbal response, actions (e.g., play music, enact an emotion) or both. In the operated mode, a remote web app is used to operate the robot’s behavior and trigger predefined skills (e.g., telling jokes, completing popular sayings) and can be used to support interventions based on a Wizard-of-Oz approach.

3.2. Design and Evaluation Methods

Designing appropriate HRI interactions for an eating companion requires identifying common tasks, including problematic behaviors that could be exhibited by the PwD, and defining appropriate strategies to mitigate these behaviors. We followed a user-centered approach to design and evaluate the robot.

Data gathering centered on interviews with caregivers to better understand which disruptive eating behaviors are most common and how caregivers usually deal with them. We conducted interviews with six (6) professional caregivers, four of whom work at a daycare center for people with dementia and the other two at a nursing home. They are all professionals specialized in nursing, psychology, nutrition or physical rehabilitation, with an average of more than eight years of experience working in dementia care. We applied rapid contextual design to these interviews and created affinity diagrams to uncover the interactions to be supported by the robot. Design workshops with stakeholders and domain experts were also conducted to refine the design.

To implement the interactions we used the tools provided by the EVA platform, such as the visual programming language. In addition, to detect user behaviors of relevance, such as when the user stops eating or stands up and walks away from the table, we used the camera in the robot, and developed and tested computer vision algorithms.

4. Design of a SAR as an Eating Companion for People with Dementia

The design process included an engagement cycle focused on technology acceptance. During this cycle an initial prototype is developed and evaluated with the participation of stakeholders in order to gather evidence that the robot will be useful and accepted by the target users. Four stages are included in the engagement cycle: an initial understanding of the domain; the co-design of interactions with stakeholders; the implementation of the interactions in the robot; and an assessment of the adoption by the target users.

Once there is evidence that the robot with the interactions designed for the task can be adopted and that it benefits the users, a second development cycle, focused on automating the behaviors of the robot, takes place. Four additional phases are included in this cycle: an analysis of the human–robot interaction from the engagement cycle; the design of autonomous behaviors; the implementation of these behaviors in the hardware platform; and the assessment of engagement with the autonomous version of the robot.

We next describe these cycles for the design and evaluation of human–robot interactions with the EVA platform to address the disruptive eating behaviors of people with dementia.

4.1. Engagement Cycle

4.1.1. Initial Understanding of the Domain

We focused our study on people who attend a daycare center. Most of them require some kind of assistance or supervision and many have been diagnosed with dementia. They also have the dual experience of eating some meals at the daycare center with support from professional caregivers and others at home where family members provide most of the care.

The interviews focused on the behavioral problems that PwD exhibit when eating and the techniques that caregivers use to mitigate them. All interviews were transcribed and were analyzed in interpretation sessions using rapid contextual design.

A recurrent issue in the interviews was the diverse way in which individuals experience the disease and the need to personalize the interventions for each person, stage of the disease and context. Caregivers described strategies, developed through training and experience, to successfully deal with problematic eating behaviors, most of which are based on verbal communication. However, they recognized that family members often lack the time, patience or resources to apply these strategies and often experience a significant burden feeding the people they care for.

In fact, caregivers at the daycare center commented that when they reopened after the COVID lockdown they noticed a deterioration in the behavior of older adults when eating. In the daycare center older adults are encouraged to eat independently, giving them enough time to eat and being patient when minor accidents occur. In contrast, informal caregivers often do not have enough time or patience and opt instead to assist them by, for instance, feeding them directly. These practices however tend to decrease the autonomy of the person with dementia when eating.

From the findings of the study we decided to focus our attention on moderate dementia, when eating disorders are often exhibited but the individual is still fairly independent and is not required to be fed by someone else. In addition, we decided to focus our design efforts on transferring knowledge from formal to informal caregivers by assisting meals taken at home and lessening the burden on informal caregivers.

4.1.2. Interaction Co-Design

The themes that emerged from the interpretation of the interviews were grouped in an affinity diagram [34]. This information helped guide the design sessions.

We conducted two design workshops with an interdisciplinary group of four participants with expertise in HCI, HRI and psychology, to derive design ideas, scenarios and personas. Due to the COVID-19 pandemic these workshops were conducted via videoconference and using shared documents.

At each session the following dynamic was followed: first, we walked through the affinity diagram so that all participants had a shared context. Next, we brainstormed in order to generate design ideas. Then, we cataloged the ideas and asked participants to rank them by priority.

The design ideas that emerged were grouped into three categories. The first category, ‘Personalization’, groups ideas related to the need for the robot to tailor its interaction with each PwD. The second category, ‘Monitoring’, groups ideas related to the automatic detection of disruptive behaviors by the robot. The last category, ‘Intervention’, groups ideas related to the robot’s interventions aimed at mitigating disruptive eating behaviors from the PwD.

The most highly ranked design ideas were: (1) verbal stimulation by the robot to help the PwD eat independently, (2) the robot detecting if the PwD stops eating and then motivating them to continue, and (3) playing music to engage the PwD without distracting them from eating.

We next present a persona derived from the design sessions:

Isabel is a 90-year-old woman who was diagnosed with dementia 4 years ago. She lives with one of her daughters and during the day she attends a daycare center for people with dementia. She enjoys participating in the activities organized at the center, which help her stay physically, socially and cognitively active. In particular, she enjoys eating there, the music and the after-meal conversations. However, she experiences some difficulties eating at home. She sometimes gets distracted or anxious when her daughter pressures her to finish her meal. This makes her clumsy and she often drops food, which frequently results in her daughter deciding to feed her directly.

The following scenario was inspired by the type of problems that PwD, such as Isabel, can exhibit when eating and by the design ideas that were proposed and ranked with high priority.

A caregiver at the daycare center notices on Monday that, after a couple of days without going to the daycare center, Isabel seems more insecure and less independent at mealtimes, but he encourages her to eat by herself. After a couple of weeks the caregiver gives Isabel’s daughter an EVA robot tailored to assist her mother. The robot can verbally stimulate the older adult with phrases such as “Isabel, look at the delicious chicken broth they prepared for you, would you like to taste it?” or “Isabel, this meal looks great. Why don’t you sit down and finish it?”. These are phrases commonly used by caregivers in the daycare center to motivate older adults to feed themselves and focus on their food.

4.1.3. Implement Support for Interaction

We used the visual programming language (VPL) provided by the EVA platform to implement eight vignettes of short interactions based on the scenarios derived during the design sessions. These interactions show challenging eating situations and how the robot can help address them. We recorded short video clips depicting these vignettes. The use of videos is a convenient approach to include more subjects and have more control over the intervention [35]. In addition, lockdown restrictions due to the pandemic did not allow us to conduct in-person evaluations with PwD or caregivers.

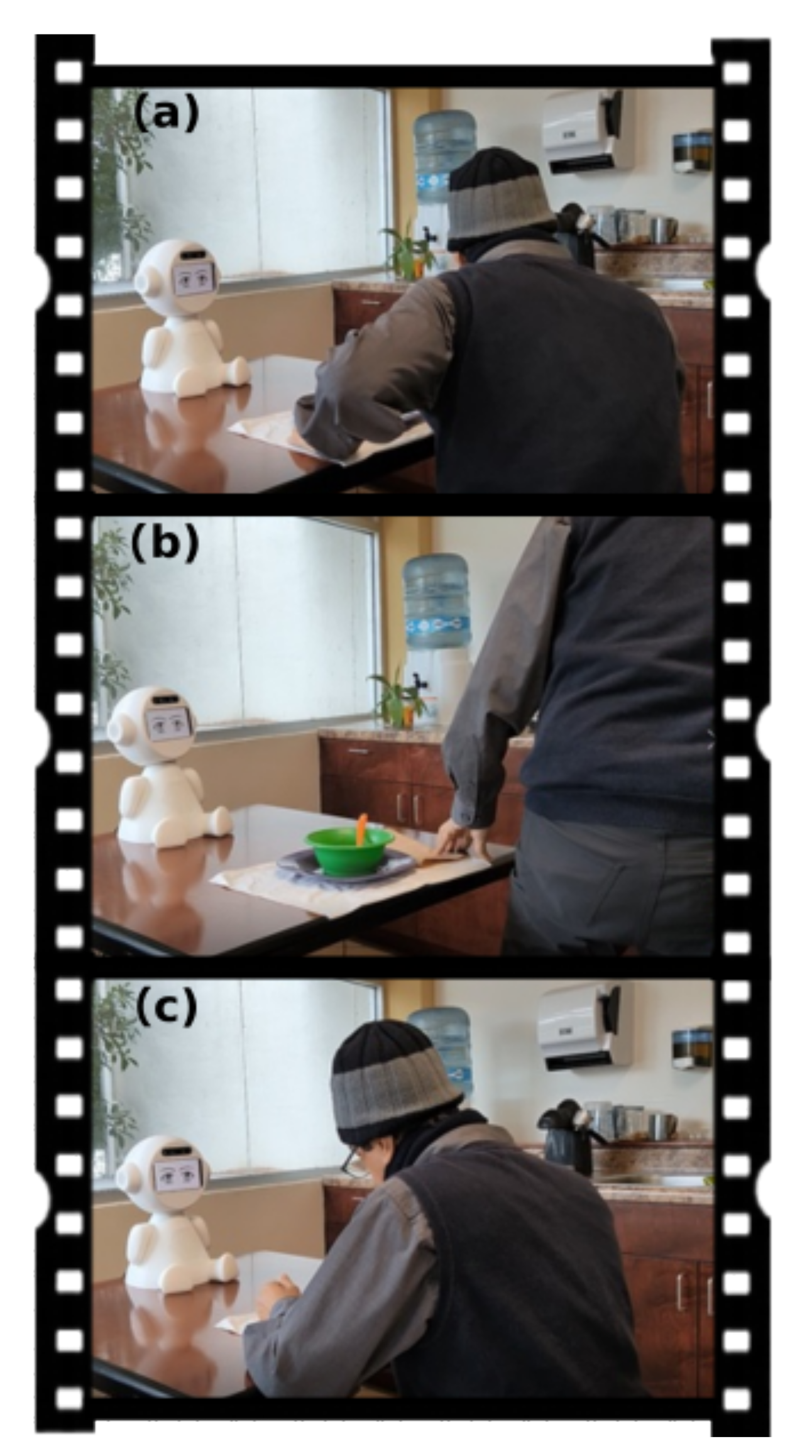

Some of the situations shown in the videos include: entertaining the PwD while the meal is being prepared; conversing with the PwD to stimulate their appetite; notifying a family member if the PwD needs assistance; and convincing the PwD to continue eating after they get distracted (see Figure 3).

4.1.4. Assessment of Adoption

To validate the situations shown in the videos and the strategy used by the robot we created an on-line survey that included the eight videos recorded. The survey asks participants to rate the veracity of the problem shown and the appropriateness of the strategy used by the robot. In addition, it includes 14 questions from the Almere model, developed to assess adoption of socially assistive agents by older adults [36]. These questions focus on the issues of Perceived Enjoyment (PENJ), Perceived Ease of Use (PEOU), Perceived Usefulness (PU), Perceived Sociability (PS) and Intention to Use (ITU).

We posted a message in a Facebook group for family members of people with dementia asking informal caregivers to complete the survey and additionally we asked some of the caregivers who participated in the interview to complete it as well.

A total of 14 caregivers (12 informal, 2 formal) completed the survey. Results show that formal and informal caregivers in general agreed that the scenarios depicted in the videos were realistic and the strategy used by the robot appropriate. Several participants included recommendations for the scenarios. For instance, in one of the videos the robot asks the PwD which song he wants to hear while eating, and a caregiver indicated that PwD often have difficulties answering such open questions and that it would be better to suggest a song or let the person choose between a couple of alternatives, simplifying the interaction.

With regard to the questions related to intention of adoption using the Almere model, we asked the informants to answer the questions related to Perceived Enjoyment and Perceived Sociability from the perspective of the PwD (acting as a proxy) and the others from their own perspective as caregivers.

The results from the survey corresponding to each construct are shown in Table 1. They indicate that the informants found the interactions with the robot to be enjoyable (PENJ) and easy to use (PEOU). Perceived Sociability (PS) was ranked slightly lower mostly due to low scores given to the question “I feel the robot understands me”, which might have been interpreted in a deeper sense than intended. A couple of informants added comments indicating that the robot might not be appropriate for people with advanced dementia such as their relatives, which might have influenced the scores for Perceived Usefulness (PU) and Intention to Use (ITU). Nevertheless, the opinions are generally positive and the approach is worth pursuing with a few amendments.

4.2. Automation Cycle

The engagement cycle provided sufficient evidence of the feasibility and usefulness of the initial design of a robot to support PwD and caregivers during mealtimes to move to the next cycle focused on automating the interaction. It also provided specific recommendations to improve the interactions as well as the behaviors that could trigger interventions from the robot.

4.2.1. Analysis of Human–Robot Interactions

We designed a template for an eating session that incorporates support for most of the short interactions proposed and evaluated in the engagement cycle. The eating session initiates with a personalized welcoming message from the robot, introducing itself and indicating that it will provide company during mealtime. Once it recognizes that the PwD has sat at the table, if no food is detected the robot initiates a verbal interaction aimed at stimulating the appetite of the user if the meal is scheduled within a few minutes. Alternatively, if there are more than 5 min left before the scheduled mealtime, it plays music to entertain and relax the user.
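The pre-meal branch of this template can be sketched as a small decision function. This is only an illustrative sketch, not the platform's implementation: the names `PreMealAction` and `pre_meal_action` are hypothetical, and we assume that "within a few minutes" means at most the 5 min threshold mentioned above.

```python
from enum import Enum


class PreMealAction(Enum):
    """Hypothetical action names for the pre-meal phase of the session."""
    WAIT_FOR_USER = "wait_for_user"
    START_MEAL_LOOP = "start_meal_loop"
    STIMULATE_APPETITE = "stimulate_appetite"
    PLAY_MUSIC = "play_music"


# Threshold taken from the text: more than 5 min before the meal -> music.
MUSIC_THRESHOLD_MIN = 5


def pre_meal_action(user_seated: bool, food_detected: bool,
                    minutes_to_meal: float) -> PreMealAction:
    """Choose what the robot does before the meal loop starts."""
    if not user_seated:
        # The template only continues once the person has sat at the table.
        return PreMealAction.WAIT_FOR_USER
    if food_detected:
        # Food is on the table: move on to the monitoring/entertainment loop.
        return PreMealAction.START_MEAL_LOOP
    if minutes_to_meal > MUSIC_THRESHOLD_MIN:
        # Meal is still some time away: entertain and relax the user.
        return PreMealAction.PLAY_MUSIC
    # Meal is due shortly (assumed: 5 min or less): stimulate appetite.
    return PreMealAction.STIMULATE_APPETITE
```

For example, `pre_meal_action(True, False, 12)` selects music, while the same call with 3 min remaining selects the appetite-stimulating dialogue.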

Once the food arrives and it is detected by the robot, the session enters into a loop in which the robot combines the strategies of verbal stimulation and entertainment with the monitoring of behaviors of interest. Entertainment interactions include playing music personalized for the individual, telling light jokes and triggering dialogues related to mindful eating with utterances such as “your meal looks delicious, have you smelled it?”.

The user behaviors that are monitored include determining whether the person is eating and the approximate speed at which they eat; if the person stands up; and when they finish the meal. Each of these behaviors triggers specific actions from the robot. For instance, if the robot detects that the person stood up it encourages her to sit down and continue eating. If the behavior persists it will notify the caregiver.
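The escalation from encouragement to caregiver notification can be illustrated with a small stateful monitor. This is a sketch under stated assumptions: the class `StandingMonitor`, the action strings and the limit of two prompts before notifying are all hypothetical, since the text does not specify how persistence is measured.

```python
from dataclasses import dataclass


@dataclass
class StandingMonitor:
    """Tracks repeated 'stood up' detections and escalates when they persist."""
    max_prompts: int = 2   # assumed number of verbal prompts before escalating
    prompts_given: int = 0

    def update(self, person_standing: bool) -> str:
        """Return the (hypothetical) action name for the latest observation."""
        if not person_standing:
            # Person is seated again: reset the escalation counter.
            self.prompts_given = 0
            return "continue_session"
        if self.prompts_given < self.max_prompts:
            # First detections: encourage the person to sit and keep eating.
            self.prompts_given += 1
            return "encourage_to_sit"
        # The behavior persisted despite the prompts: involve the caregiver.
        return "notify_caregiver"
```

Resetting the counter once the person sits down again keeps a single brief interruption from counting toward a later escalation.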

4.2.2. Design of Autonomous Behaviors

We conducted an additional design workshop with eight researchers and graduate students with expertise in the design and evaluation of technology for dementia, but who had not participated in the initial phases of this work.

To provide context, the participants watched the interaction videos developed in the engagement cycle and completed the survey.

The design session was organized as a focus group and lasted approximately 35 min. Initially, participants received an explanation about the eating session supported by the robot described above. The focus group was conducted on-line. Participants were encouraged to share their answers either by speaking or by writing on a shared document where the questions were also listed.

Table 2 lists the six questions given to the participants as triggers and some of their responses.

The design workshop provided useful feedback to refine the proposed interactions. We modified the dialogues to be more indirect, using suggestions instead of sentences that might be perceived as orders or commands. We also changed the interactions to avoid open questions to the PwD, such as what song they want to hear. These questions are also personalized to encourage engagement: while some PwD might have no problem choosing between two proposed songs of their liking, for others the robot will simply announce the name of the song it will play next.

4.2.3. Implementation of Autonomous Behaviors

To implement the SAR as an eating companion we created a new version of the robot EVA. Its main difference is that it incorporates a depth camera (Intel RealSense D435i) on top of the head. The camera is used to infer user behaviors and states of the environment relevant to the task at hand.

For detecting food on the table we used the Vision API, which is part of the Vision package of the Google Cloud Platform. Every 30 s a photo from the robot’s perspective is sent to the Vision API to determine the presence of the ‘Food’ or ‘Dishware’ classes. If either of these two classes appears with a probability greater than or equal to 85%, the robot infers that the food is served and it initiates an interaction to start eating.
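The thresholding step can be sketched as follows. The sketch deliberately leaves out the network call and only shows the decision rule; it assumes each returned label has been converted to a dictionary with `description` and `score` keys, and the function name `food_is_served` is hypothetical.

```python
# Classes and threshold taken from the text above.
FOOD_CLASSES = {"Food", "Dishware"}
MIN_SCORE = 0.85


def food_is_served(label_annotations):
    """Return True if any target class is present with a score >= 85%.

    `label_annotations` is assumed to be a list of dicts such as
    {"description": "Food", "score": 0.93}, one per label returned for
    the photo taken from the robot's perspective.
    """
    return any(
        label.get("description") in FOOD_CLASSES
        and label.get("score", 0.0) >= MIN_SCORE
        for label in label_annotations
    )


# Illustrative (made-up) labels for a single photo.
example_labels = [
    {"description": "Table", "score": 0.97},
    {"description": "Dishware", "score": 0.88},
]
print(food_is_served(example_labels))
```

Calling this check once per photo, every 30 s, would match the polling interval described above.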

In order to detect if the person is in front of the robot, a facial recognition system was implemented. We used OpenCV to train a classification model with 30 examples of the person’s face, based on Haar cascades, to detect if the person assigned to the meal session is in front of the robot.

In addition, we trained a model to detect if the person is eating, using images from recorded meals of several subjects. We included images of the person bringing food to their mouth as positive examples (the ‘eating’ class) and all others as examples of the ‘no_eating’ class.

The detection model was trained with 467 images, of which 231 were labeled with the class ‘eating’ and 236 were labeled as ‘no_eating’. We used the variant of the Google Cloud Platform vision API called Cloud Vision AutoML to train the model.

4.2.4. Assessment of Autonomy

Since the verbal interactions designed are relatively simple and do not require the interpretation of complex user utterances, the main challenge to assess the autonomy of the robot is its capacity to recognize behaviors and events of interest that trigger timely and appropriate responses.

A critical behavior to monitor is whether the person is actually eating. Since the hand movement to bring food to the mouth takes just a few seconds, capturing images at a low temporal frequency might miss many eating actions. We recorded four individuals eating at a normal pace and found that capturing one image every 2 s registers only 51% of the eating actions. Shortening the interval to 1 s (one frame per second) increases the percentage of eating actions captured to 88%. Thus, we decided to use this rate to capture and analyze images as an adequate compromise between registering most eating actions and the computing workload on the robot.
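One way to estimate the effect of the capture interval from annotated recordings is sketched below. It assumes each eating action has been annotated with the start and end time (in seconds) during which the gesture is visible; the function name and the example intervals are hypothetical and are not the data behind the percentages reported above.

```python
import math


def captured_fraction(actions, period_s, offset_s=0.0):
    """Fraction of actions that contain at least one sampling instant.

    `actions` is a list of (start, end) times in seconds; frames are
    assumed to be captured at offset_s, offset_s + period_s, and so on.
    """
    if not actions:
        raise ValueError("at least one annotated action is required")
    captured = 0
    for start, end in actions:
        # Index of the first frame captured at or after the action starts.
        k = math.ceil((start - offset_s) / period_s)
        first_sample = offset_s + k * period_s
        if first_sample <= end:
            captured += 1
    return captured / len(actions)


# Made-up annotations for illustration only.
example_actions = [(0.2, 0.9), (3.1, 4.3), (6.5, 7.4), (9.0, 9.8)]
for period in (2.0, 1.0):
    print(period, captured_fraction(example_actions, period))
```

Running the same function over several capture intervals makes the trade-off between coverage and processing load explicit before a rate is fixed.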

Using the model trained as described in the previous section to detect feeding events resulted in an accuracy of 92%, a sensitivity of 83% and a specificity of 96%. Thus, the model misses some eating actions (false negatives), but rarely generates a false positive. With this in mind, we chose to analyze windows of 30 s (30 frames); if 0 or 1 feeding events are detected, the robot concludes that the person is not eating and triggers a verbal encouragement with phrases such as: “Your food looks really tasty, you should try some”. These messages are worded so that, even when the robot wrongly concludes that the person is not eating (for instance, because the person is eating at a slow pace), the suggestion still applies.
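
The windowed rule can be sketched as follows. This is an illustrative sketch under two assumptions: the classifier output is available as one boolean per frame, and each positive frame counts as one feeding event. The identifiers are hypothetical.

```python
from typing import Sequence

WINDOW_FRAMES = 30         # 30 s at 1 frame per second (from the text)
MAX_EVENTS_NOT_EATING = 1  # 0 or 1 detections means "not eating" (from the text)


def should_encourage(frame_is_eating: Sequence[bool]) -> bool:
    """Return True if the window contains too few feeding events."""
    if len(frame_is_eating) != WINDOW_FRAMES:
        raise ValueError(f"expected {WINDOW_FRAMES} frames, got {len(frame_is_eating)}")
    # Assumption: every frame classified as 'eating' is one feeding event.
    return sum(frame_is_eating) <= MAX_EVENTS_NOT_EATING
```

Tolerating a single detection per window makes the rule robust to the occasional false positive, which is consistent with the high specificity reported above.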

We evaluated the accuracy of each activity being monitored by the robot independently and made adjustments to the algorithm to achieve adequate performance. Afterwards, we conducted a couple of complete sessions with one of the authors eating and performing activities such as standing up and stopping and resuming eating. The robot accurately detected the behaviors of interest and provided adequate feedback.

Due to COVID-19 we have not been able to test any of the prototypes with PwD, but caregivers have actively participated in the design and evaluation sessions.

5. Discussion

Research in socially assistive robots has increased significantly in recent years. One of its main areas of application centers on assisting older adults, and more specifically people with dementia [27]. While promising, this is still a relatively novel area in which much research focuses on engineering and development aspects, with high-quality design experiments still lagging [10].

Most of the research on SARs for dementia has focused on getting the technology to work and on preliminary or feasibility assessments of its acceptance and efficacy. One area that is often not specifically addressed is interaction design, which is particularly challenging for people with dementia, who might experience reduced perceptive and cognitive skills. Through a user-centered design, the work described in this article places special importance on this issue, which is key for the technology to be adopted [37]. Our work included the participation of formal and informal caregivers, acting as proxies for people with dementia, to assess intention to adopt, and an earlier study with the EVA robot found it to be engaging to users [38]. However, people experience the disease in different forms, and their background, personality and trajectory in the disease matter; hence the need to personalize the interaction and adapt it to concrete circumstances.

Interventions with SARs meant to address health outcomes most often use commercially available robots. Perhaps the best-known robot for dementia is Paro, which is used extensively in geriatric residences [26]. While fairly successful, Paro is mostly used as a black box and cannot be programmed or adapted to various scenarios. More recently, the parrot-shaped PIO robot has shown promising results in addressing symptoms of dementia. Like EVA, it can be programmed with structured conversations and can perform specific movements to convey affective states. In contrast, EVA, being an open platform, can be modified more significantly in its behavior and form, although with more effort. Lovot is also a commercially available social robot and has been used in an intervention with people with dementia [39]. Lovot has affordances similar to EVA in terms of movement and expressive capacity, but a much more limited capacity to program or adapt its behavior.

Within dementia care, social robots have been proposed and used in a variety of scenarios, but one particularly promising one is that of mitigating psychological symptoms of dementia. In this area, early evidence suggests that SARs can be effective at decreasing agitation and increasing social interaction [40]. In a previous study with an early version of the robot EVA, we found positive short-term and long-term effects of the robot guiding a cognitive stimulation therapy [33].

Disruptive eating behaviors are a frequent type of dementia symptom that causes considerable burden for caregivers. To our knowledge, no SARs have been designed specifically to address this type of problem.

6. Conclusions

Through a user-centered design approach, we have developed a prototype of a socially assistive robot aimed at addressing disruptive eating behaviors in people with dementia. The approach includes two design/development/evaluation cycles, the first focused on assessing user adoption and the second on providing the robot with affordances to act autonomously. Caregivers, both formal and informal, as well as domain experts were involved in several stages of the process, and their observations resulted in changes to the interaction design.

The resulting robot is able to identify some of the most common disruptive eating behaviors experienced by people with moderate dementia, who can still feed themselves but can easily become distracted. Yet, the ability to personalize the interaction is a key element of the design. Personalization affects not only the way in which the robot refers to the user or the type of music it plays, but also which problematic behaviors it monitors, according to those the user normally exhibits. For instance, for a user who cannot stand up without help, the module that detects this behavior will not be executed.

This work provides evidence of the feasibility of designing socially assistive robots that are able to infer disruptive behaviors and enact interactions to mitigate their negative impact, thus implementing ambient-assisted interventions for dementia. We plan to evaluate the socially assistive robot with actual users to assess its efficacy.

As the field matures and SARs prove increasingly effective in dementia care, it becomes necessary to address their insertion in organizational care practices [18,41].