A Simple Controller for Omnidirectional Trotting of Quadrupedal Robots: Command Following and Waypoint Tracking

Abstract

1. Introduction

2. Background and Related Work

3. Low-Level Control

3.1. Hardware Platform

3.2. Overview

3.3. Cartesian Kinematics

3.4. Cartesian Reference Trajectory

3.5. Inverse Kinematics

3.6. Gravity Torque

3.7. Ground Reaction Forces

3.8. Ground Reaction Torques

3.9. Feedback Torque

3.10. Single Leg Implementation

4. High-Level Control

4.1. Overview

4.2. High-Level Model

4.3. High-Level Control

5. Results

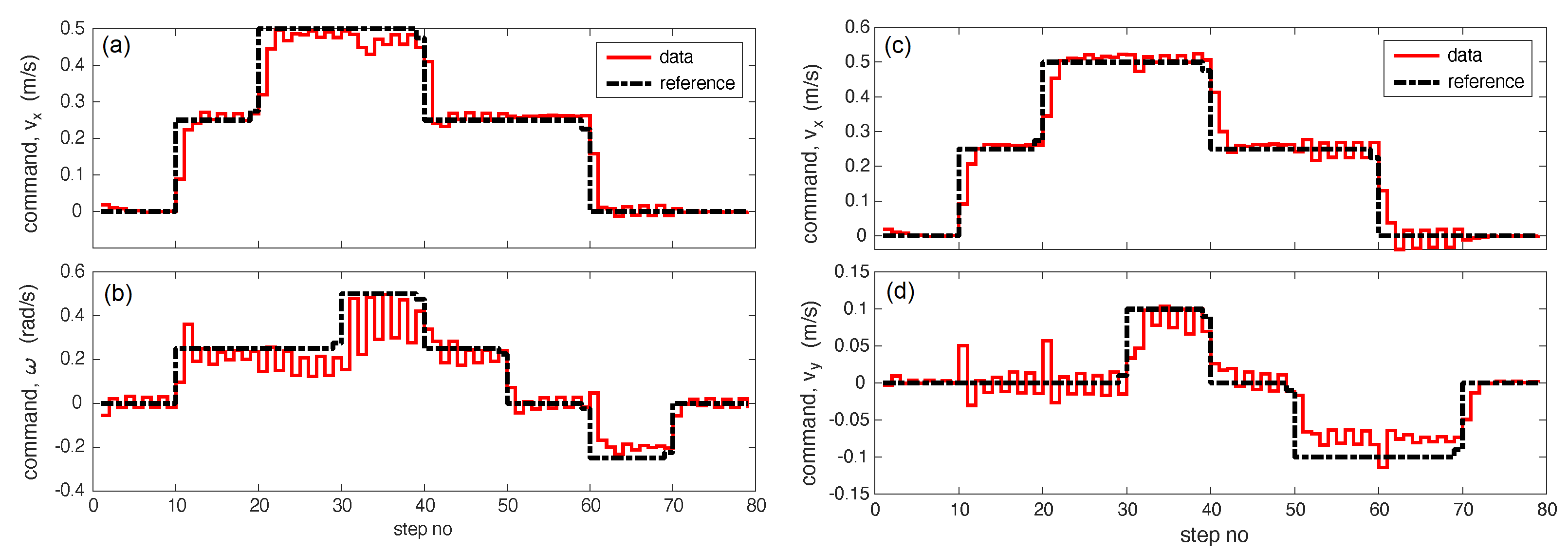

5.1. Command Following

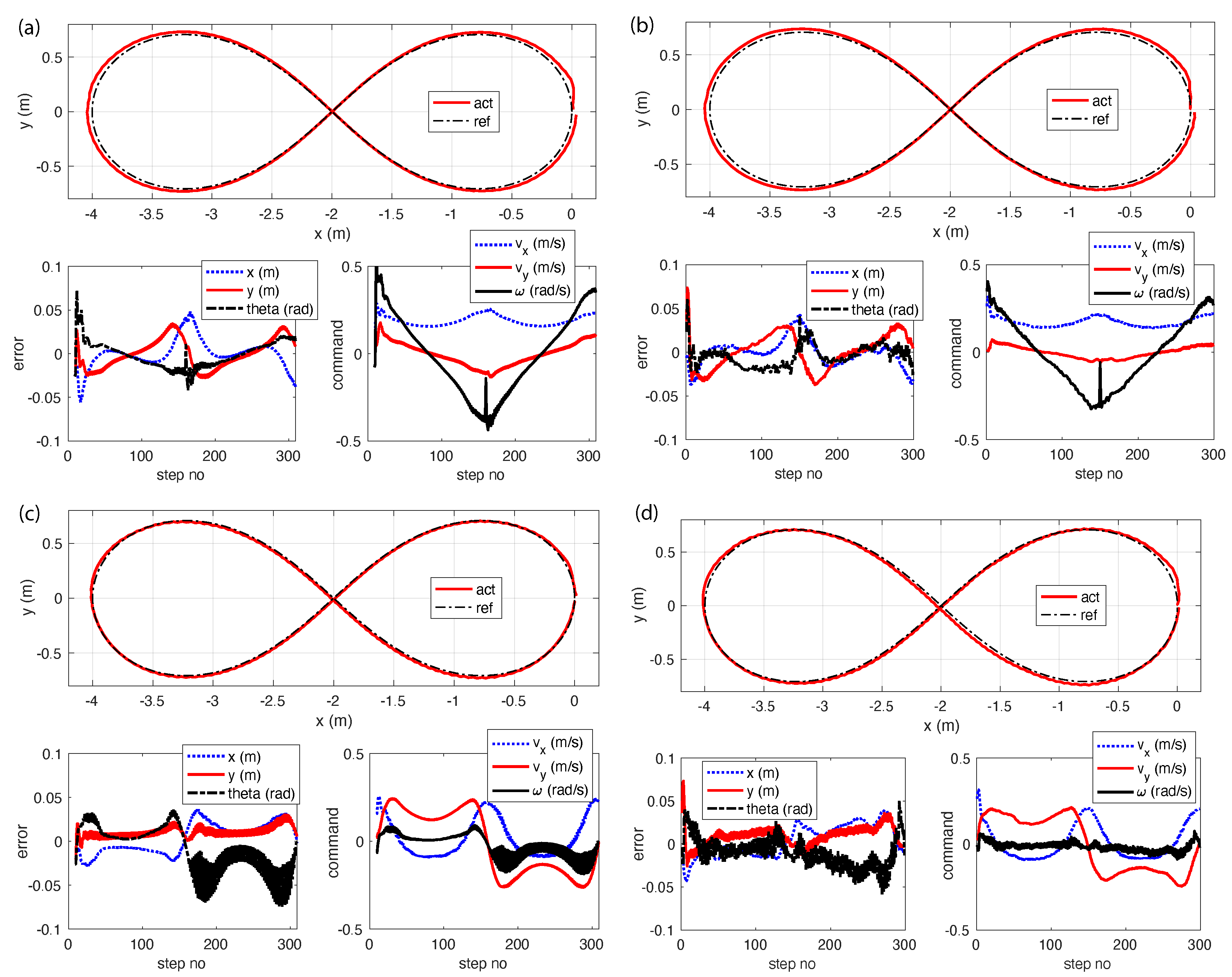

5.2. Waypoint Tracking

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bhounsule, P.A.; Yang, C.-M. A Simple Controller for Omnidirectional Trotting of Quadrupedal Robots: Command Following and Waypoint Tracking. Robotics 2023, 12, 35. https://doi.org/10.3390/robotics12020035