A Framework to Study and Design Communication with Social Robots

Abstract

1. Introduction

“A Social Robot Cannot Not Communicate.” (L. Kunold & L. Onnasch)

2. Definitions

2.1. Social Robots

2.2. Social Robot’s Communication

3. Peculiarities of Communication with Social Robots

4. Views on Communication

4.1. Communication Models in HRI

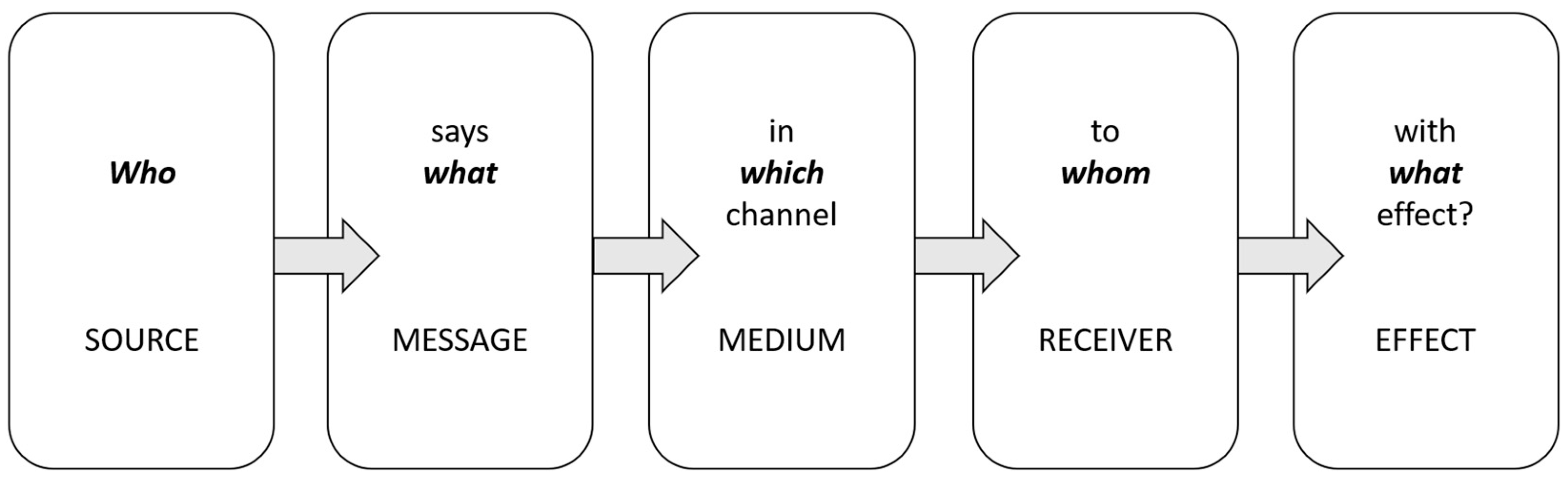

4.2. Transmission Models of Communication

5. Our Application of Lasswell’s Theory to HRI

5.1. The Source or the “Who” in HRI

5.2. The Message or the “What” in HRI

5.3. The “Channel” in HRI

5.4. The Receiver or the “to Whom” in HRI

5.5. The Communication “Effect” in HRI

5.6. Additional Important Variables

6. Example Application of the Framework to Existing Communication Studies

7. Summary

8. Limitations

9. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Type of Communication | Reciprocity | Spatial Proximity | Temporal Proximity | Bodily Contact | Channel: Verbal | Channel: Nonverbal |
|---|---|---|---|---|---|---|
| Social/Interpersonal | X | X | X | X | X | X |
| Parasocial (e.g., on TV) | | | Sometimes | | X | X |
| Computer mediated (e.g., chat, social media) | X | | X | | X | Partial |
| Artificial (e.g., speech assistants) | X | | X | | X | Partial (reduced to e.g., prosody) |
| Artificial (e.g., embodied virtual agents) | X | X | X | | X | Partial (reduced to visual and acoustic) |
| Artificial (e.g., physically embodied, co-located social robots) | X | X | X | X | X | X |

| Example | Communication Goal | Act of Communication | Effect |
|---|---|---|---|
| “Hi, my name is Pepper” | Interactional (display robot identity), Relational (bonding) | Self-disclosure (Name) | Social treatment, Anthropomorphization |
| “What’s your name?” | Interactional (start conversation), Relational (signal interest in other) | (Ask) Question | Response/answer, Inviting communication |
| “I like your name” | Relational (signal warmth) | Compliment | Liking of robot, Positive affect |
| “Error” | Instrumental (information) | Verbal warning | Awareness, Attention, Understanding |
| “Sorry” | Interactional (reputation management), Relational (relationship maintenance) | Verbal excuse | Trust repair, Positive affect, Cooperation |
| “Pass me the salt” | Instrumental (task fulfillment) | Request, Command | Elicit action → hand over object |
| “You are in my way” | Instrumental (task fulfillment) | Annotation/Complaint | Elicit action → move to let robot pass by |
| “Take the green box and put it into the shelf” | Instrumental (task fulfillment) | Instruction | Elicit action → move object to said position |
| Nonverbal examples | | | |
| Robot blinking | Instrumental (attention) | Issue, Error, Problem | Awareness, Elicit action → coping |
| Gaze at object | Instrumental (guide attention) | Affordance | Elicit action → look to object |
| Point to object | Instrumental (guide attention) | Affordance | Elicit action → look to object |
| Battery sign | Instrumental (transparency) | Affordance | Awareness, Understanding → elicit action: charge battery |
| Smiling | Relational (liking) | Affect display | Liking, Acceptance |
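To illustrate how the table above might be operationalized when coding or designing robot messages, the sketch below stores a few of its rows as records organized around Lasswell's questions (who says what, in which channel, to whom, with what effect). It is a minimal sketch under stated assumptions, not the authors' method: the names `Goal`, `CommunicationAct`, `EXAMPLES`, and `acts_with_goal` are hypothetical, the fixed `robot`/`user` roles are placeholders, and mapping the table's columns onto Lasswell's components is an assumption made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Goal(Enum):
    # Communication goals as named in the table (Instrumental, Interactional, Relational).
    INSTRUMENTAL = "instrumental"
    INTERACTIONAL = "interactional"
    RELATIONAL = "relational"


@dataclass(frozen=True)
class CommunicationAct:
    """One robot message described along Lasswell's questions.

    Hypothetical record type for illustration only.
    """
    who: str                  # source (here always the robot)
    what: str                 # message content: an utterance or a nonverbal cue
    channel: str              # "verbal" or "nonverbal"
    to_whom: str              # receiver (placeholder role)
    goals: frozenset[Goal]    # communication goal(s) from the table
    act: str                  # act of communication
    effects: tuple[str, ...]  # intended effects from the table


ROBOT, USER = "robot", "user"  # placeholder roles (assumption)

# A subset of rows transcribed from the table.
EXAMPLES = [
    CommunicationAct(ROBOT, "Hi, my name is Pepper", "verbal", USER,
                     frozenset({Goal.INTERACTIONAL, Goal.RELATIONAL}),
                     "Self-disclosure (name)",
                     ("Social treatment", "Anthropomorphization")),
    CommunicationAct(ROBOT, "Sorry", "verbal", USER,
                     frozenset({Goal.INTERACTIONAL, Goal.RELATIONAL}),
                     "Verbal excuse",
                     ("Trust repair", "Positive affect", "Cooperation")),
    CommunicationAct(ROBOT, "Pass me the salt", "verbal", USER,
                     frozenset({Goal.INSTRUMENTAL}),
                     "Request/command",
                     ("Elicit action → hand over object",)),
    CommunicationAct(ROBOT, "gaze at object", "nonverbal", USER,
                     frozenset({Goal.INSTRUMENTAL}),
                     "Affordance",
                     ("Elicit action → look to object",)),
    CommunicationAct(ROBOT, "smiling", "nonverbal", USER,
                     frozenset({Goal.RELATIONAL}),
                     "Affect display",
                     ("Liking", "Acceptance")),
]


def acts_with_goal(acts, goal):
    """Return the message content of every act that pursues the given goal."""
    return [a.what for a in acts if goal in a.goals]
```

For example, `acts_with_goal(EXAMPLES, Goal.RELATIONAL)` returns `['Hi, my name is Pepper', 'Sorry', 'smiling']`. Keeping goals as a set reflects that several rows in the table pursue more than one goal at once.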

| Communication Form | Output Modality: Auditive | Output Modality: Visual | Output Modality: Tactile |
|---|---|---|---|
| Verbal | Speech (“I am a robot”) | Text on screen (“Push the button”) | Braille (dots on reader) |
| Nonverbal | Sounds: laughing, yawning | Facial expression (☺) | Affective touch, handshake |
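The two-by-three grid of communication form and output modality can also serve as a checklist when designing a robot's output repertoire. The following minimal sketch, assuming a robot's capabilities can be listed as (form, modality) pairs, reports which cells of the grid a given design leaves empty. The names `Form`, `Modality`, `CUE_GRID`, and `uncovered_cells` are hypothetical and only illustrate the idea; they are not part of the framework itself.

```python
from enum import Enum


class Form(Enum):
    # Communication form (rows of the grid).
    VERBAL = "verbal"
    NONVERBAL = "nonverbal"


class Modality(Enum):
    # Output modality (columns of the grid).
    AUDITIVE = "auditive"
    VISUAL = "visual"
    TACTILE = "tactile"


# Example cues per cell, transcribed from the grid (hypothetical encoding).
CUE_GRID = {
    (Form.VERBAL, Modality.AUDITIVE): ["speech"],
    (Form.VERBAL, Modality.VISUAL): ["text on screen"],
    (Form.VERBAL, Modality.TACTILE): ["braille"],
    (Form.NONVERBAL, Modality.AUDITIVE): ["laughing", "yawning"],
    (Form.NONVERBAL, Modality.VISUAL): ["facial expression"],
    (Form.NONVERBAL, Modality.TACTILE): ["affective touch", "handshake"],
}


def uncovered_cells(robot_cues: set[tuple[Form, Modality]]) -> list[tuple[Form, Modality]]:
    """Return the grid cells (in grid order) for which a design offers no cue.

    `robot_cues` is an assumed description of a robot: the set of
    (form, modality) pairs it is able to produce.
    """
    return [cell for cell in CUE_GRID if cell not in robot_cues]
```

For a robot that only speaks and plays nonverbal sounds, `uncovered_cells({(Form.VERBAL, Modality.AUDITIVE), (Form.NONVERBAL, Modality.AUDITIVE)})` returns the four visual and tactile cells, which makes gaps in the design's repertoire explicit.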


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kunold, L.; Onnasch, L. A Framework to Study and Design Communication with Social Robots. Robotics 2022, 11, 129. https://doi.org/10.3390/robotics11060129